Chapter 4. Data Governance for Master Data Management

4.1. Introduction

Both external and internal pressures introduce constraints on the use of enterprise information. Financial reporting, regulatory compliance, and privacy policies are just a few examples of the kinds of pressures that prompt data management professionals and senior business managers to institute a framework for ensuring the quality and integrity of the data asset for all of its purposes. These challenges are magnified as data sets from different applications are consolidated into a master data environment. Numerous aspects of ensuring this integrity deal with the interactions between the applications, data, and individuals in the enterprise, and therefore it is worthwhile to explore the mechanics, virtues, and ongoing operations of instituting a data governance program within an organization.

At its core, the objective of data governance is predicated on the desire to assess and manage the many kinds of risks that lurk within the enterprise information portfolio and to reduce the impacts incurred by the absence of oversight. Although many data governance activities might be triggered by a concern about regulatory compliance, the controls introduced by data governance processes and protocols provide a means for quantitative metrics for assessing risk reduction as well as measuring business performance improvements. By introducing a program for defining information policies that relate to the constraints of the business and adding in management and technical oversight, the organization can realign itself around performance measures that include adherence to business policies and the information policies that support the business.

One of the major values of a master data management program is that, because it is an enterprise initiative, it facilitates the growth of an enterprise data governance program. As more lines of business integrate with core master data object repositories, there must be some assurance that the lines of business are adhering to the rules that govern participation. Although MDM success relies on data governance, a governance program can be applied across different operational domains, providing economies of scale for enterprise-wide deployment. In this chapter, we will look at the driving factors for instituting a data governance program in conjunction with master data management, setting the stage for deploying governance, critical data elements and their relation to key corporate data entities, stages for implementation, and roles and responsibilities.

One special note, though: the directive to establish data governance is a double-edged sword. An organization cannot expect to dictate into existence any type of governance framework without the perception of business value, which prevents the creation of a full-scale oversight infrastructure overnight. On the other hand, the absence of oversight will prevent success for any enterprise information initiative. This chapter, to some extent, presents an idealized view of how data governance is structured in the best of cases. However, the value of governance is linked to the value of what is being governed; incremental steps may be necessary to build on successes as a way of achieving “collateral.” Continuous reassessment of the data governance program is a good way to ensure that the appropriate amount of effort is spent.

4.2. What Is Data Governance?

There are different perceptions of what is meant by the term “data governance.” Data governance is expected to ensure that the data meets the expectations of all the business purposes, in the context of data stewardship, ownership, compliance, privacy, security, data risks, data sensitivity, metadata management, and MDM. What is the common denominator? Each of these issues centers on ways that information management is integrated with controls for management oversight along with verification of organizational observance of information policies. In other words, each aspect of data governance relates to the specification of a set of information policies that reflect business needs and expectations, along with the processes for monitoring conformance to those information policies.

Whether we are discussing data sensitivity, financial reporting, or the way that team members execute against a sales system, each aspect of the business could be boiled down to a set of business policy requirements. These business policies rely on the accessibility and usability of enterprise data, and the way each business policy uses data defines a set of information usage policies. Each information policy embodies a set of data rules or constraints associated with the definitions, formats, and uses of the underlying data elements.

Qualitative assertions about the quality of the data values, records, and the consistency across multiple data elements are the most granular level of governance. Together, these provide a layer of business metadata that will be employed in automating the collection and reporting of conformance to the business policies. Particularly in an age where noncompliance with external reporting requirements (e.g., Sarbanes-Oxley in the United States) can result in fines and prison sentences, the level of sensitivity to governance of information management will only continue to grow.

4.3. Setting the Stage: Aligning Information Objectives with the Business Strategy

Every organization has a business strategy that should reflect the business management objectives, the risk management objectives, and the compliance management objectives. Ultimately, the success of the organization depends on its ability to manage how all operations conform to that business strategy. This is true for information technology, but because of the centrality of data in the application infrastructure, it is particularly true in the area of data management.

The challenge lies in management's ability to effectively communicate the business strategy, to explain how nonconformance to the strategy impacts the business, and to engineer the oversight of information in a way that aligns the business strategy with the information architecture. This alignment is two-fold: it must demonstrate that the individuals within the organization understand both how information assets are used and how those assets are managed over time.

These concepts must be kept in mind when developing the policies and procedures associated with the different aspects of the MDM program. As a representation of the replicated data sets is integrated into a unified view, there is also a need to consolidate the associated information policies. The union of those policies creates a more stringent set of constraints associated with the quality of master data, requiring more comprehensive oversight and coordination among the application owners. To prepare for instituting the proper level of governance, these steps may be taken:

4.3.1. Clarifying the Information Architecture

Many organizational applications are created in a virtual vacuum, engineered to support functional requirements for a specific line of business without considering whether there is any overlap with other business applications. Although this tactical approach may be sufficient to support ongoing operations, it limits an enterprise's analytical capability and hampers any attempt at organizational oversight.

Before a data governance framework can be put into place, management must assess, understand, and document the de facto information architecture. A prelude to governance involves taking inventory to understand what data assets exist, how they are managed and used, and how they support the existing application architecture, and then evaluating where existing inefficiencies or redundancies create roadblocks to proper oversight.

The inventory will grow organically. Initially the process involves identifying the data sets used by each application and enumerating the data attributes within each data set. As one would imagine, this process can consume a huge amount of resources. To be prudent, it is wise to focus on those data elements that have widespread, specific business relevance; the concepts of the key data entity and the critical data element are treated in Sections 4.8 and 4.9.

Each data element must have a name, a structural format, and a definition, all of which must be registered within a metadata repository. Each data set models a relevant business concept, and each data element provides insight into that business concept within the context of the “owning” application. In turn, each definition must be reviewed to ensure that it is correct, defensible, and grounded in an authoritative source.
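As a minimal sketch of what such a registration might look like, the following Python fragment models a registry entry carrying the three required properties, plus a field recording the authoritative source. The class and field names (`DataElement`, `MetadataRepository`, `authoritative_source`) and the sample entries are hypothetical; they illustrate the idea and do not correspond to any particular metadata product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataElement:
    """One registry entry; all field names are illustrative assumptions."""
    name: str
    structural_format: str          # e.g. "DATE (YYYY-MM-DD)"
    definition: str
    owning_application: str
    authoritative_source: str = ""  # empty until a reviewer supplies one


class MetadataRepository:
    """A toy in-memory registry keyed by (application, element name)."""

    def __init__(self):
        self._elements = {}

    def register(self, element: DataElement) -> None:
        # Refuse entries that lack any of the three required properties.
        if not (element.name and element.structural_format and element.definition):
            raise ValueError("name, structural format, and definition are all required")
        self._elements[(element.owning_application, element.name)] = element

    def needing_review(self):
        # Elements whose definitions are not yet tied to an authoritative source.
        return [e for e in self._elements.values() if not e.authoritative_source]


repo = MetadataRepository()
repo.register(DataElement("birth_date", "DATE (YYYY-MM-DD)",
                          "Date on which the party was born", "billing",
                          authoritative_source="customer onboarding form"))
repo.register(DataElement("cust_nm", "VARCHAR(80)",
                          "Full legal name of the customer", "sales"))
unreviewed = [e.name for e in repo.needing_review()]
```

Keying the registry by application as well as by name reflects the point that each element is defined within the context of its owning application; two applications may register elements with the same name and different definitions.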

This collection of data elements does not constitute a final architecture. A team of subject matter experts and data analysts must look at the physical components and recast them into a logical view that is consistent across the enterprise. A process called “harmonization” examines the data elements for similarity (or distinction) in meaning. Although this activity overlaps with the technical aspects of master data object analysis, its importance to governance lies in the identification and registration of the organization's critical data elements, their composed information structures, and the application functions associated with the data element life cycle.

4.3.2. Mapping Information Functions to Business Objectives

Every activity that creates, modifies, or retires a data element must somehow contribute to the organization's overall business objectives. In turn, the success or failure of any business activity is related to the appropriate and correct execution of all information functions that support that activity. For example, many website privacy policies specify that data about children below a specified age will not be shared without permission of the child's parent. A data element may be used to document each party's birth date and parent's permission, and there will also be functions to verify the party's age and parental permission before the information is shared with another organization.
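The privacy example can be sketched as a pair of small functions. This is only an illustration under stated assumptions: the age threshold of 13, the `Party` record, and the decision to treat an unknown birth date as requiring parental permission are all hypothetical choices, not requirements drawn from any specific policy.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

MINIMUM_AGE = 13  # assumed threshold; the real value comes from the privacy policy


@dataclass
class Party:
    birth_date: Optional[date]
    parental_permission: bool = False


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one if this year's birthday has not yet occurred.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def may_share(party: Party, today: date) -> bool:
    """Return True only if sharing the party's data conforms to the policy."""
    if party.birth_date is None:
        # Age cannot be verified, so fall back to requiring permission.
        return party.parental_permission
    if age_on(party.birth_date, today) >= MINIMUM_AGE:
        return True
    return party.parental_permission
```

Here the two data elements (birth date and parental permission) and the two information functions (age verification and the sharing check) map directly onto the single business objective of honoring the privacy policy.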

When assessing the information architecture, one must document each information function and how it maps to achieving business objectives. In an environment where there are many instances of similar data, there will also be many instances of similar functionality, and as the data are absorbed into a master data hierarchy, the functional capabilities may likewise be consolidated into a service layer supporting the enterprise.

A standardized approach for functional description will help in assessing functional overlap, which may be subject to review as the core master data objects are identified and consolidated. However, in all situations, the application functionality essentially represents the ways that information policies are implemented across the enterprise.

4.3.3. Instituting a Process Framework for Information Policy

The goal of the bottom-up assessment is to understand how the information architecture and its associated functionality support the implementation of information policy. But in reality, the process should be reversed—information policy should be defined first, and then the data objects and their associated services should be designed to both implement and document compliance with that policy.

This process framework is supported by and supports a master data management environment. As key data entities and their associated attributes are absorbed under centralized management, the ability to map the functional service layer to the deployment of information policy also facilitates the collection and articulation of the data quality expectations associated with each data attribute, record, and data set, whether they are reviewed statically within persistent storage or in transit between two different processing stages. Clearly specified data quality expectations can be deployed as validation rules for data inspection along its “lineage,” allowing for the embedding of monitoring probes (invoking enterprise services) that collectively (and reliably) report on compliance.
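To make the idea of a monitoring probe concrete, the sketch below expresses two data quality expectations as validation rules and applies them to a batch of records at one processing stage. The rule names, the record layout, and the `probe` function are assumptions made for illustration; a production probe would more likely invoke a shared enterprise service than run inline.

```python
from collections import Counter
from typing import Callable, Dict, Iterable

# Each rule maps a record to True (conforms) or False (violates).
Rule = Callable[[dict], bool]


def customer_id_present(record: dict) -> bool:
    return bool(record.get("customer_id"))


def country_is_two_letters(record: dict) -> bool:
    country = record.get("country")
    return isinstance(country, str) and len(country) == 2


# Hypothetical expectations for a customer record.
RULES: Dict[str, Rule] = {
    "customer_id_present": customer_id_present,
    "country_is_two_letters": country_is_two_letters,
}


def probe(stage: str, records: Iterable[dict], rules: Dict[str, Rule]) -> dict:
    """Inspect records at one processing stage and summarize conformance."""
    violations = Counter()
    inspected = 0
    for record in records:
        inspected += 1
        for name, rule in rules.items():
            if not rule(record):
                violations[name] += 1
    return {"stage": stage, "inspected": inspected, "violations": dict(violations)}


batch = [
    {"customer_id": "C001", "country": "US"},
    {"customer_id": "", "country": "USA"},
    {"customer_id": "C003", "country": "DE"},
]
report = probe("after_extract", batch, RULES)
```

Because the rules are plain data, the same set can be applied at several points along the lineage, and the per-stage reports can be collected to show where nonconforming values first appear.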

4.4. Data Quality and Data Governance

A data quality and data governance assessment clarifies how the information architecture is used to support compliance with defined information policies. It suggests that data quality and data standards management are part of a much larger picture with respect to oversight of enterprise information.

In the siloed environment, the responsibility, and ultimately the accountability, for ensuring that the data meets the quality expectations of the client applications lies within the management of the corresponding line of business. This also implies that for MDM, the concept of data ownership (which is frequently treated in a cavalier manner) must be aligned within the line of business so that ultimate accountability for the quality of data can be properly identified. But looking at the organization's need for information oversight provides a conduit for reviewing the dimensions of data quality associated with the data elements, determining their criticality to the business operations, expressing the data rules that impact compliance, defining quantitative measurements for conformance to information policies, and determining ways to integrate these all into a data governance framework.

4.5. Areas of Risk

What truly drives the need for governance? Although there are many drivers, a large component boils down to risk. Both business and compliance risks drive governance, and it is worthwhile to look at just a few of the areas of risk associated with master data that require information management and governance scrutiny.

4.5.1. Business and Financial

If the objective of the MDM program is to enhance productivity and thereby improve the organization's bottom line, then the first area of risk involves understanding how nonconformance with information policies puts the business's financial objectives at risk. For example, identifying errors within financial reports that have a material impact requiring restatement of results not only demonstrates instability and lack of control, it also is likely to have a negative impact on the company (and its shareholders) as a whole, often reflected in a decrease in the company's value.

Absence of oversight for the quality of financial data impacts operational aspects as well. The inability to oversee a unified master view of accounts, customers, and suppliers may lead to accounting anomalies, including underbilling of customers, duplicate payments or overpayments to vendors, payments to former employees, and so on.

4.5.2. Reporting

Certain types of regulations (e.g., Sarbanes-Oxley for financial reporting, 21 CFR Part 11 for electronic documentation in the pharmaceutical industry, Basel II for assessing capital risk in the banking industry) require that the organization prepare documents and reports that demonstrate compliance, which establishes accurate and auditable reporting as an area of risk. Accuracy demands the existence of established practices for data validation, but the ability to conduct thorough audits requires comprehensive oversight of the processes that implement the information policies. Consequently, ensuring report consistency and accuracy requires stewardship and governance of the data sets that are used to populate (or materialize data elements for) those reports.

For example, consider that in financial reporting, determining that flawed data was used in assembling a financial statement may result in material impacts that necessitate a restatement of the financial report. Restatements may negatively affect the organization's stock price, leading to loss of shareholder value, lawsuits, significant “spin control” costs, and potentially jail time for senior executives. A governance program can help to identify opportunities for instituting data controls to reduce the risk of these kinds of situations.

4.5.3. Entity Knowledge

Maintaining knowledge of the parties with whom the organization does business is critical for understanding and reducing both business risks (e.g., credit rating to ensure that customers can pay their bills) and regulatory risks. Many different industries are governed by regulations that insist on customer awareness, such as the USA PATRIOT Act, the Bank Secrecy Act, and Gramm-Leach-Bliley, all of which require the ability to distinguish between unique individual identities. Ensuring that the tools used to resolve identities are matching within expected levels of trust and that processes exist for remediating identity errors falls under the realm of governance and stewardship.

4.5.4. Protection

The flip side of entity knowledge is protection of individuals' potentially private information. Compliance directives that originate in regulations such as HIPAA and Gramm-Leach-Bliley require that organizations protect each individual's data to limit data breaches and protect personal information. As with entity knowledge, confidence in the management of protected information depends on conformance to defined privacy and data protection constraints.

4.5.5. Limitation of Use

Regulations and business arrangements (as codified within contractual agreements) both establish governance policies for limiting how data sets are used, how they are shared, what components may be shared, and the number of times they can be copied, as well as overseeing the determination of access rights for the data. Data lineage, provenance, and access management are all aspects of the types of information policies whose oversight is incorporated within the governance program.

4.6. Risks of Master Data Management

If these types of risks were not enough, the deployment of a master data management program introduces organizational risks of its own. As a platform for integrating and consolidating information from across vertical lines of business into a single source of truth, MDM implies that independent corporate divisions (with their own divisional performance objectives) yield to the needs of the enterprise.

As an enterprise initiative, MDM requires agreement from the participants to ensure program success. This leads to a unique set of challenges for companies undertaking an MDM program.

4.6.1. Establishing Consensus for Coordination and Collaboration

The value of the master data environment is the agreement (across divisions and business units) that it represents the highest quality identifying information for enterprise master data objects. The notion of agreement implies that all application participants share their data, provide positive input into its improvement, and trust the resulting consolidated versions. The transition to using the master data asset suggests that all application groups will work in a coordinated manner to share information and resources and to make sure that the result meets the quality requirements of each participant.

4.6.2. Data Ownership

As an enterprise resource, master data objects should be owned by the organization, and therefore the responsibilities of ownership are assigned to an enterprise resource. There are some benefits to this approach. For example, instead of numerous agents responsible for different versions of the same objects, each object is assigned to a single individual. In general, management and oversight are simplified because the number of data objects is reduced, with a corresponding reduction in resource needs (e.g., storage, backups, metadata). Centralizing ownership has two major drawbacks. One is political, because the reassignment of ownership, by definition, removes responsibilities from individuals, some of whom are bound to feel threatened by the transition. The other is logistic, focusing on the process of migrating the responsibilities for data sets from a collection of individuals to a central authority.

But as any enterprise initiative like MDM or enterprise resource planning (ERP) is based on strategic drivers with the intention of adjusting the way the organization works, master data consolidation is meant to provide a high-quality, synchronized asset that can streamline application sharing of important data objects. ERP implementations reduce business complexity because the system is engineered to support the interactions between business applications, instead of setting up the barriers common in siloed operations. In essence, the goals of these strategic activities are to change the way that people operate, increase collaboration and transparency, and transition from being tactically driven by short-term goals into a knowledge-directed organization working toward continuous performance improvement objectives.

Therefore, any implementation or operational decisions made in deploying a strategic enterprise solution should increase collaboration and decrease distributed management. This suggests that centralized ownership of master data entities, held by agents acting for the entire organization, is more aligned with the strategic nature of an MDM or ERP program.

However, each line of business may have its own perception of data ownership, ranging from an information architecture owned by the business line management, to data that is effectively captured and embedded within an application (leading to a high level of data distribution), to undocumented perceptions of ownership on behalf of individual staff members managing the underlying data sets. As diffused application development likely occurs when there is no official data ownership policy, each business application manager may view the data as owned by the group or attached to the application.

When centralizing shared master data, though, the consolidation of information into a single point of truth implies that the traditional implied data ownership model has been dissolved. This requires the definition and transference of accountability and responsibility from the line of business to the enterprise, along with its accompanying policies for governance.

4.6.3. Semantics: Form, Function, and Meaning

Distributed application systems designed and implemented in isolation are likely to have similar, yet perhaps slightly variant definitions, semantics, formats, and representations. The variation across applications introduces numerous risks associated with the consolidation of any business data object into a single view. For example, different understandings of what is meant by the term “customer” may be tolerated within the context of each application, but in a consolidated environment, there is much less tolerance for different counts and sums. Often these different customer counts occur because of subtleties in the definitions. For example, in the sales organization, any prospect is a “customer,” but in the accounting department, only parties that have agreed to pay money in exchange for products are “customers.”
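A small illustration shows how the two definitions of “customer” produce different counts over the same set of parties. The party records and the `stage` field are invented for this sketch, as is the assumption that the sales organization also counts parties that have already signed.

```python
# Hypothetical parties known to the enterprise.
parties = [
    {"name": "Acme Corp", "stage": "prospect"},
    {"name": "Birch LLC", "stage": "signed_contract"},
    {"name": "Cedar Inc", "stage": "prospect"},
    {"name": "Dune Ltd", "stage": "signed_contract"},
    {"name": "Elm & Sons", "stage": "signed_contract"},
]


def is_customer_for_sales(party: dict) -> bool:
    # Sales treats any prospect as a customer (and, we assume, signed parties too).
    return party["stage"] in {"prospect", "signed_contract"}


def is_customer_for_accounting(party: dict) -> bool:
    # Accounting counts only parties that have agreed to pay.
    return party["stage"] == "signed_contract"


sales_count = sum(1 for p in parties if is_customer_for_sales(p))
accounting_count = sum(1 for p in parties if is_customer_for_accounting(p))
```

With these five records the sales definition yields 5 customers and the accounting definition yields 3. Neither count is wrong within its own application, but a consolidated master view can report only one number under the name “customer.”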

An MDM migration requires establishing processes for resolving those subtle (and presumed meaningless) distinctions in meaning that can become magnified during consolidation, especially in relation to data element names, definitions, formats, and uses. This can be addressed by providing guidelines for the capture of data element metadata and its subsequent syntactic and semantic harmonization. Organizations can also establish guidelines for collaboration among the numerous stakeholders so that each is confident that using the master version of data will not impact the appropriate application. Processes for collecting enterprise metadata and for harmonizing data element names, definitions, and representations are overseen by the governance program.

4.7. Managing Risk through Measured Conformance to Information Policies

Preventing exposure to risk requires creating policies that establish the boundaries of the risk, along with an oversight process to ensure compliance with those policies. Although each set of policies may differ depending on the different related risks, all of these issues share some commonalities:

Federation. In each situation, the level of risk varies according to the way that information is captured, stored, managed, and shared among applications and individuals across multiple management or administrative boundaries. In essence, because of the diffused application architecture, to effectively address risks, all of the administrative groups must form a federation, slightly blurring their line-of-business boundaries for the sake of enterprise compliance management.

Defined policy. To overcome the challenges associated with risk exposure, policies must be defined (either externally or internally) that delineate the guidelines for risk mitigation. Although these policies reflect operational activities for compliance, they can be translated into rules that map against data elements managed within the enterprise.

Need for controls. The definition of policy is one thing, but the ability to measure conformance to that policy is characterized as a set of controls that inspect data and monitor conformance to expectations along the process information flow.

Transparent oversight. One aspect of each of the areas of risk described here is the expectation that there is some person or governing body to which policy conformance must be reported. Whether that body is a government agency overseeing regulatory compliance, an industry body that watches over industry guidelines, or public corporate shareholders, there is a need for transparency in the reporting framework.

Auditability. The need for transparency creates a need for all policy compliance to be auditable. Not only does the organization need to demonstrate compliance to the defined policies, it must be able to both show an audit trail that can be reviewed independently and show that the processes for managing compliance are transparent as well.

The upshot is that whether the objective is regulatory compliance, managing financial exposure, or overseeing organizational collaboration, centralized management will spread data governance throughout the organization. This generally happens through two techniques. First, data governance will be defined through a collection of information policies, each of which is mapped to a set of rules imposed over the life cycle of critical data elements. Second, data stewards will be assigned responsibility and accountability for both the quality of the critical data elements and the assurance of conformance to the information policies. Together these two aspects provide the technical means for monitoring data governance and provide the context to effectively manage data governance.

4.8. Key Data Entities

Key data entities are the fundamental information objects that are employed by the business applications to execute the operations of the business while simultaneously providing the basis for analyzing the performance of the lines of business. Although the description casts the concept of a key data entity (or KDE) in terms of its business use, a typical explanation will characterize the KDE in its technical sense. For example, in a dimensional database such as those used by a data warehouse, the key data entities are reflected as dimensions. In a master data management environment, data objects that are designated as candidates for mastering are likely to be the key data entities. Managing uniquely accessible instances of key data entities is a core driver for MDM, especially because of their inadvertent replication across multiple business applications.

Governing the quality and acceptability of a key data entity will emerge as a critical issue within the organization, mostly because of the political aspects of data ownership. In fact, data ownership itself is always a challenge, but when data sets are associated with a specific application, there is a reasonable expectation that someone associated with the line of business using the application will (when push comes to shove) at least take on the responsibilities of ownership. But as key data entities are merged together into an enterprise resource, it is important to have policies dictating how ownership responsibilities are allocated and how accountability is assigned, and the management framework for ensuring that these policies are not just paper tigers.

4.9. Critical Data Elements

Critical data elements are those that are determined to be vital to the successful operation of the organization. For example, an organization may define its critical data elements as those that represent protected personal information, those that are used in financial reports (both internal and external) or regulatory reports, those that represent identifying information of master data objects (e.g., customer, vendor, or employee data), those that are critical for a decision-making process, or those that are used for measuring organizational performance.

Part of the governance process involves a collaborative effort to identify critical data elements, research their authoritative sources, and then agree on their definitions. As opposed to what our comment in Section 4.3.1 might imply, a mass assessment of all existing enterprise data elements is not the most efficient approach to identifying critical data elements.

Rather, the best process is to start at the end and focus on the information artifacts that end clients are using—consider all reports, analyses, or operations that are critical to the business, and identify every data element that impacts or contributes to each report element or analytical dimension. At the same time, begin to map out the sequence of processes from the original point of entry until the end of each critical operation. The next step is to assemble a target to source mapping: look at each of the identified data elements and then look for data elements on which the selected data element depends. For example, if a report tallies the total sales for the entire company, that total sales number is a critical data element. However, that data element's value depends on a number of divisional sales totals, which in turn are composed of business-line or product-line detailed sales. Each step points to more elements in the dependence chain, each of which is also declared to be a critical data element.
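The target-to-source mapping amounts to a traversal of a dependency graph, starting from the elements that appear in critical reports. The sketch below assumes the mapping has already been captured as a dictionary; the element names are hypothetical and mirror the total sales example.

```python
from collections import deque

# Target-to-source mapping: each element lists the elements it is derived from.
# All element names are hypothetical.
DEPENDS_ON = {
    "company_total_sales": ["division_east_sales", "division_west_sales"],
    "division_east_sales": ["product_line_a_sales", "product_line_b_sales"],
    "division_west_sales": ["product_line_b_sales", "product_line_c_sales"],
}


def critical_data_elements(report_elements, depends_on):
    """Return every element reachable from the report elements, inclusive."""
    critical = set()
    queue = deque(report_elements)
    while queue:
        element = queue.popleft()
        if element in critical:
            continue  # already visited; this also guards against cycles
        critical.add(element)
        queue.extend(depends_on.get(element, []))
    return critical


cdes = critical_data_elements(["company_total_sales"], DEPENDS_ON)
```

Starting from the single report element, the traversal declares six critical data elements: the company total, the two divisional totals, and the three product-line figures. An element shared by two divisions is visited once.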

Each data element's criticality is proportional to its contribution to the ultimate end values that are used to run or monitor the business. The quality of each critical data element is related to the impact that would be incurred should the value be outside of acceptable ranges. In other words, the quality characteristics are based on business impact, which means that controls can be introduced to monitor data values against those quality criteria.

4.10. Defining Information Policies

Information policies embody the specification of management objectives associated with data governance, whether they are related to management of risk or general data oversight. Information policies relate specified business assertions to their related data sets and articulate how the business policy is integrated with the information asset. In essence, it is the information policy that bridges the gap between the business policy and the characterization of critical data element quality.

For example, consider the many regulations requiring customer knowledge, such as the anti-money laundering (AML) aspects required by the USA PATRIOT Act. The protocols of AML imply a few operational perspectives:

▪ Establishing policies and procedures to detect and report suspicious transactions

▪ Ensuring compliance with the Bank Secrecy Act

▪ Providing for independent testing for compliance to be conducted by outside parties

But in essence, AML compliance revolves around a relatively straightforward concept: know your customer. Because all monitoring centers on how individuals are conducting business, any organization that wants to comply with these objectives must have processes in place to identify and verify customer identity.

Addressing the regulatory policy of compliance with AML necessarily involves defining information policies for managing customer data, such as those suggested in the list that follows. These assertions ultimately boil down to specific data directives, each of which is measurable and reportable; that measurability is the cornerstone of the stewardship process.

▪ Identity of any individual involved in establishing an account must be verified.

▪ Records of the data used to verify a customer's identity must be measurably clean and consistent.

▪ Customers may not appear on government lists of known or suspected terrorists or belong to known or suspected terrorist organizations.

▪ A track record of all customer activity must be maintained.

▪ Managers must be notified of any behavior categorized as “suspicious.”
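To show what "measurable and reportable" might look like, the sketch below expresses two of the directives above as predicates over customer records and reports the fraction of records that satisfy each one. The record layout, the field names, and the exact-match watch list are all assumptions; real watch-list screening involves far more careful identity matching than a string comparison.

```python
# Hypothetical watch list and customer records; field names are assumptions.
WATCH_LIST = {"J. DOE"}

customers = [
    {"id": 1, "name": "A. SMITH", "identity_verified": True},
    {"id": 2, "name": "J. DOE", "identity_verified": True},
    {"id": 3, "name": "B. JONES", "identity_verified": False},
]

# Each directive is a named predicate that a single record passes or fails.
DIRECTIVES = {
    "identity_verified": lambda c: c["identity_verified"],
    "not_on_watch_list": lambda c: c["name"] not in WATCH_LIST,
}


def conformance_report(records, directives):
    """Map each directive to the fraction of records that satisfy it."""
    return {
        name: sum(1 for r in records if rule(r)) / len(records)
        for name, rule in directives.items()
    }


report = conformance_report(customers, DIRECTIVES)
```

In this toy data set, two of the three records satisfy each directive, so both measures come out at two thirds. A steward would track such figures over time rather than read them once.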

4.11. Metrics and Measurement

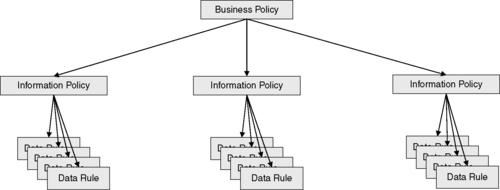

Any business policy will, by virtue of its implementation within an application system, require conformance to a set of information policies. In turn, each information policy should be described as a set of assertions involving one or more key data entities, examining the values of critical data elements, as can be seen in Figure 4.1. In other words, the information policy might be further clarified into specific rules that apply to both the master data set and the participating applications. In the example that required the tracking of customer activity, one might define a rule prescribing that each application that manages transactions must log critical data elements associated with the customer identity and transaction in a master transaction repository. Conformance to the rule can be assessed by verifying that all records of the master transaction repository are consistent with the application systems, where consistency is defined as a function of comparing the critical data values with the original transaction.

Figure 4.1 A hierarchy of data rules is derived from each business policy.
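The transaction-logging rule from the example can be sketched as a comparison between an application's transactions and the master transaction repository. The dictionary layout and the choice of critical fields below are assumptions for illustration; the check flags a transaction if it was never logged or if any critical value differs from the original.

```python
# Critical data elements compared between the two stores (assumed names).
CRITICAL_FIELDS = ("customer_id", "amount", "timestamp")


def inconsistent_transactions(application_txns, master_txns):
    """Return IDs of application transactions that are missing from, or
    differ in a critical field from, the master transaction repository."""
    problems = []
    for txn_id, original in application_txns.items():
        logged = master_txns.get(txn_id)
        if logged is None or any(
            logged.get(field) != original.get(field) for field in CRITICAL_FIELDS
        ):
            problems.append(txn_id)
    return problems


application = {
    "t1": {"customer_id": "c9", "amount": 100.0, "timestamp": "2024-01-05T10:00:00"},
    "t2": {"customer_id": "c4", "amount": 250.0, "timestamp": "2024-01-05T11:30:00"},
    "t3": {"customer_id": "c7", "amount": 75.0, "timestamp": "2024-01-06T09:15:00"},
}
master = {
    "t1": {"customer_id": "c9", "amount": 100.0, "timestamp": "2024-01-05T10:00:00"},
    "t2": {"customer_id": "c4", "amount": 205.0, "timestamp": "2024-01-05T11:30:00"},
}

problems = inconsistent_transactions(application, master)
```

In this sample, `t2` is flagged because the logged amount differs from the original, and `t3` is flagged because it was never logged. The share of transactions that pass gives one measurable data rule under the information policy.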

Metrics reflecting conformance with an information policy can be viewed as a rollup of the various data rules into which the policy was decomposed. As long as each rule is measurable, we can create a hierarchy of metrics that ultimately can be combined into key performance indicators for the purposes of data governance.
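One way to picture the rollup is as a tree in which data rules are leaves and each information policy combines the scores of its rules. The source does not prescribe how scores are combined; the weighted average used here, along with the names, scores, and weights, is an assumption chosen to keep the sketch simple.

```python
def rollup(node):
    """Return a node's conformance score: a leaf's own score, or the
    weighted average of its children's rolled-up scores."""
    if "score" in node:
        return node["score"]
    children = node["children"]
    total_weight = sum(child.get("weight", 1.0) for child in children)
    weighted_sum = sum(child.get("weight", 1.0) * rollup(child) for child in children)
    return weighted_sum / total_weight


# Hypothetical information policy decomposed into two measurable data rules.
policy = {
    "name": "customer activity tracking",
    "children": [
        {"name": "transactions logged", "score": 0.98, "weight": 2.0},
        {"name": "logged values match source", "score": 0.92, "weight": 1.0},
    ],
}

kpi = rollup(policy)
```

With these illustrative weights the policy-level indicator works out to (2 × 0.98 + 0.92) / 3 = 0.96. Because `rollup` is recursive, policies can themselves be grouped under a higher-level node without changing the function.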

4.12. Monitoring and Evaluation

Essentially, measurements of conformance to business policies define the key performance indicators for the business itself. This collection of key performance indicators provides a high-level view of the organization's performance with respect to its conformance to defined information policies. In fact, we can have each indicator reflect the rolled-up measurements associated with the set of data rules for each information policy. Thresholds may be set that characterize levels of acceptability, and the metrics can be drilled through to isolate specific issues that are preventing conformance to the defined policy, enabling both transparency and auditability.
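Thresholds and drill-through can be sketched in a few lines. The threshold values, rule names, and scores below are assumptions; an organization would set its own acceptability levels for each policy.

```python
def status(score, acceptable=0.95, warning=0.90):
    """Classify a conformance score against assumed threshold levels."""
    if score >= acceptable:
        return "acceptable"
    if score >= warning:
        return "warning"
    return "unacceptable"


def drill_through(rule_scores, acceptable=0.95):
    """Return the rules scoring below the acceptable level, worst first."""
    failing = [(name, score) for name, score in rule_scores.items() if score < acceptable]
    return sorted(failing, key=lambda item: item[1])


# Hypothetical rule-level measurements behind one key performance indicator.
rule_scores = {
    "transactions logged": 0.98,
    "logged values match source": 0.92,
    "identity verified": 0.88,
}

issues = drill_through(rule_scores)
```

The indicator gives the high-level view, while `drill_through` isolates the specific rules that are holding conformance down, ordered so that the worst performer is examined first.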

But for the monitoring to be effective, those measurements must be presented directly to the individual who is assigned responsibility for oversight of that information policy. It is then up to that individual to continuously monitor conformance to the policy and, if there are issues, to use the drill-through process to determine the points of failure and to initiate the processes for remediation.

4.13. Framework for Responsibility and Accountability

One of the biggest historical problems with data governance is the absence of follow-through; although some organizations may have well-defined governance policies, they may not have established the underlying organizational structure to make those policies actionable. Follow-through requires two things: a management structure that oversees the execution of the governance framework and a compensation model that rewards that execution.

A data governance framework must support the needs of all the participants across the enterprise, from the top down and from the bottom up. With executive sponsorship secured, a reasonable framework can benefit from enterprise-wide participation within a data governance oversight board, while all interested parties can participate in the role of data stewards. A technical coordination council can be convened to establish best practices and to coordinate technical approaches to ensure economies of scale. The specific roles include the following:

▪ Data governance director

▪ Data governance oversight board

▪ Data coordination council

▪ Data stewards

These roles are dovetailed with an organizational structure that oversees conformance to the business and information policies, as shown in Figure 4.2. Enterprise data management is integrated within the data coordination council, which reports directly to an enterprise data governance oversight board.

Figure 4.2 A framework for data governance management.

As mentioned at the beginning of the chapter, be aware that pragmatically the initial stages of governance are not going to benefit from a highly structured hierarchy; rather they are likely to roll the functions of an oversight board and a coordination council into a single working group. Over time, as the benefits of data governance are recognized, the organization can evolve the management infrastructure to segregate the oversight roles from the coordination and stewardship roles.

4.14. Data Governance Director

The data governance director is responsible for the day-to-day management of enterprise data governance. The director provides guidance to all the participants and oversees adherence to the information policies as they reflect the business policies and necessary regulatory constraints. The data governance director plans and chairs the data governance oversight board. The director identifies the need for governance initiatives and provides periodic reports on data governance performance.

4.15. Data Governance Oversight Board

The data governance oversight board (DGOB) guides and oversees data governance activities. The DGOB is composed of representatives chosen from across the community. The main responsibilities of the DGOB are as follows:

▪ Review corporate information policies and designate workgroups to transform business policies into information policies and then into data rules

▪ Approve data governance policies and procedures

▪ Manage the reward framework for compliance with governance policies

▪ Review proposals for data governance practices and processes

▪ Endorse data certification and audit processes

4.16. Data Coordination Council

The actual governance activities are directed and managed by the data coordination council, which operates under the direction of the data governance oversight board. The data coordination council is a group composed of interested individual stakeholders from across the enterprise. It is responsible for adjusting the processes of the enterprise as appropriate to ensure that the data quality and governance expectations are continually met. As part of this responsibility, the data coordination council recommends the names for and appoints representatives to committees and advisory groups.

The data coordination council is responsible for overseeing the work of data stewards. The coordination council also does the following:

▪ Provides direction and guidance to all committees tasked with developing data governance practices

▪ Oversees the tasks of the committees and advisory groups related to data governance

▪ Recommends that the data governance oversight board endorse the output of the various governance activities for publication and distribution

▪ Recommends data governance processes to the data governance oversight board for final endorsement

▪ Advocates for enterprise data governance by leading, promoting, and facilitating the governance practices and processes developed

▪ Attends periodic meetings to provide progress reports, review statuses, and discuss and review the general direction of the enterprise data governance program

4.17. Data Stewardship

The data steward's role essentially is to support the user community. This individual is responsible for collecting, collating, and evaluating issues and problems with data. Typically, data stewards are assigned either based on subject areas or within line-of-business responsibilities. However, in the case of MDM, because the use of key data entities may span multiple lines of business, the stewardship roles are more likely to be aligned along KDE boundaries. Prioritized issues must be communicated to the individuals who may be impacted. The steward must also communicate issues and other relevant information (e.g., root causes) to staff members who are in a position to influence remediation.

As the person accountable for the quality of the data, the data steward must also manage standard business definitions and metadata for critical data elements associated with each KDE. This person also oversees the enterprise data quality standards, including the data rules associated with the data sets. This may require using technology to assess and maintain a high level of conformance to defined information policies within each line of business, especially with respect to the accuracy, completeness, and consistency of the data. Essentially, the data steward is the conduit for communicating issues associated with the data life cycle: the creation, modification, sharing, reuse, retention, and backup of data. If any issues emerge regarding the conformance of data to the defined policies over the lifetime of the data, it is the steward's responsibility to resolve them.

Data stewardship is not necessarily an information technology function, nor should it necessarily be considered to be a full-time position, although its proper execution deserves an appropriate reward. Data stewardship is a role that has a set of responsibilities along with accountability to the line-of-business management. In other words, even though the data steward's activities are overseen within the scope of the MDM program, the steward is accountable to his or her own line management to ensure that the quality of the data meets the needs of both the line of business and of the organization as a whole.

4.18. Summary

For companies undertaking MDM, a hallmark of successful implementations will be the reliance on, and integration of, data governance throughout the initiative. The three important aspects of data governance for MDM are managing key data entities and critical data elements, ensuring the observance of information policies, and documenting and ensuring accountability for maintaining high-quality master data.

Keeping these ideas in mind during the development of the MDM program will ensure that the master data management program does not become relegated to the scrap heap of misfired enterprise information management initiatives. Rather, developing a strong enterprise data governance program will benefit the MDM program as well as strengthen the ability to manage all enterprise information activities.
