Chapter 13. Management Guidance for MDM

The approach taken in this book to introduce and provide details about the development of a master data management program is to develop an awareness of the critical concepts of master data management before committing time, resources, or money to technology acquisition or consulting engagements. That being said, readers who have reached this point in the book show a readiness to take on the challenges of MDM, in expectation of the benefits in terms of business productivity improvement, risk management, and cost reduction—all facilitated via the conceptual master repository.

Therefore, this chapter reviews the insights discussed throughout the book and provides a high-level, end-to-end overview of the activities associated with developing the master data management program. This process begins with the initial activities of determining the business justifications for introducing MDM, developing a road map, identifying roles and responsibilities, planning the stages of the MDM development projects, and so on. The process continues through the middle stages of documenting the business process models, developing a metadata management facility, instituting data governance, and analyzing and developing master data models. Finally, it extends to the later stages of development, such as selecting and instantiating MDM architectures, developing master data services, deploying a transition strategy for existing applications, and then maintaining the MDM environment.

In this chapter, we review the key activities covered earlier in the book and provide guidance on the milestones and deliverables from each activity. An experienced project manager will cherry-pick from the list of activities depending on how critical each stage is to achieving the organization's business objectives. However, understanding the scale and scope of justifying, designing, planning, implementing, and maintaining an MDM system can be a large process in its own right. One of the hardest parts of the entire process is knowing where and how to start, and to that end, it is often more cost effective to engage external experts or consultants during the initial stages of the project to expedite producing the early deliverables.

13.1. Establishing a Business Justification for Master Data Integration and Management

As we have seen, master data integration and master data management are not truly the end objectives; rather, they are the means by which other objectives are successfully accomplished, such as those promised by customer relationship management systems, product information management systems, Enterprise Resource Planning (ERP) systems, and so on. And while the principles that drive and support MDM reflect sound data management practices, it would be unusual (although not unheard of) for senior management to embrace and fund the MDM effort solely for the sake of establishing good practices.

That being said, the business justification for MDM should be coupled with those programs that will benefit from the availability of the unified view of master data. That means that MDM can be justified in support of a business initiative that relies on any of the following MDM benefits:

Comprehensive customer knowledge. A master data repository for customer data will provide the virtual single source for consolidating all customer activity, which can then be used to support both operational and analytical applications in a consistent manner.

Consistent reporting. Reliance on end-user applications to digest intermittent system data extracts leads to questions regarding inconsistency from one report to another. Reliance on the reports generated from governed processes using master data reduces the inconsistencies experienced.

Improved risk management. Master data management improves the quality of enterprise information; more trustworthy and consistent financial information in turn improves the ability to manage enterprise risk.

Improved decision making. The information consistency that MDM provides across applications reduces data variability, which in turn minimizes organizational data mistrust and allows for faster and more reliable business decisions.

Regulatory compliance. As one of the major enterprise areas of risk, compliance drives the need for quality data and data governance, and MDM addresses both of these needs. Information auditing is simplified across a consistent master view of enterprise data, enabling more effective information controls that facilitate compliance with regulations.

Increased information quality. Collecting metadata comprising standardized models, value domains, and business rules enables more effective monitoring of conformance to information quality expectations across vertical applications, which reduces information scrap and rework.

Quicker results. A standardized view of the information asset reduces the delays associated with extraction and transformation of data, speeding the implementation of application migrations, modernization projects, and data warehouse/data mart construction.

Improved business productivity. Master data help organizations understand how the same data objects are represented, manipulated, or exchanged across applications within the enterprise and how those objects relate to business process workflows. This understanding allows enterprise architects the opportunity to explore how effective the organization is in automating its business processes by exploiting the information asset.

Simplified application development. The consolidation activities of MDM are not limited to the data; when the multiple master data objects are consolidated into a master repository, there is a corresponding opportunity to consolidate the application functionality associated with the data life cycle. Consolidating many product systems into a single resource allows us to provide a single functional service for the creation of a new product entry to which the different applications can subscribe, thereby supporting the development of a services-oriented architecture (SOA).

Reduction in cross-system reconciliation. The unified view of data reduces the occurrence of inconsistencies across systems, which in turn reduces the effort spent on reconciling data between multiple source systems.

In this context, enterprise applications such as Customer Relationship Management (CRM) and ERP maintain components that apply to instances of the same object type across processing streams (e.g., “Order to Cash”), and maintaining a unified version of each master data object directly supports the enterprise initiative. Therefore, it is reasonable to justify incorporating an MDM initiative as part of these comprehensive projects.

The key deliverables of this stage are the materials for documenting the business case for the stakeholders in the organization, including (not exclusively) the following:

▪ Identification of key business drivers for master data integration

▪ Business impacts and risks of not employing an MDM environment

▪ Cost estimates for implementation

▪ Success criteria

▪ Metrics for measuring success

▪ Expected time to deploy

▪ Break-even point

▪ Education, training, and/or messaging material
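As a rough illustration of how the break-even point in the business case might be estimated, the following sketch accumulates monthly net benefits until they cover the implementation cost. The function name and all figures are hypothetical assumptions chosen for illustration; a real business case would draw these numbers from the cost estimates and success metrics listed above.

```python
from typing import Optional, Sequence


def break_even_month(implementation_cost: float,
                     monthly_net_benefits: Sequence[float]) -> Optional[int]:
    """Return the 1-based month in which cumulative benefit first covers the cost.

    Returns None if the cost is not recovered within the supplied horizon.
    """
    cumulative = 0.0
    for month, benefit in enumerate(monthly_net_benefits, start=1):
        cumulative += benefit
        if cumulative >= implementation_cost:
            return month
    return None


# Hypothetical figures: benefits ramp up as applications migrate to master data.
benefits = [0, 0, 10_000, 20_000, 30_000, 40_000, 50_000, 50_000]
month = break_even_month(100_000, benefits)
print(month)
```

A result of None signals that the planning horizon is too short to show a return, which is itself useful information for the stakeholders.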

13.2. Developing an MDM Road Map and Rollout Plan

The implementation of a complex program, such as MDM, requires strategic planning along with details for its staged deployment. Specifying a road map for the design and development of the infrastructure and processes supporting MDM guides management and staff expectations.

13.2.1. MDM Road Map

Road maps highlight a plan for development and deployment, indicating the activities to be performed, milestones, and a general schedule for when those activities should be performed and when the milestones should be reached. In deference to the planning processes, activity scheduling, resource allocation, and project management strategies that differ at every organization, it would be a challenge to define a road map for MDM that is suitable to everyone. However, there are general stages of development that could be incorporated into a high-level MDM road map.

Evaluate the business goals. This task is to develop an organizational understanding of the benefits of MDM as they reflect the current and future business objectives. This may involve reviewing the lines of business and their related business processes, evaluating where the absence of a synchronized master view introduces critical inefficiencies or prevents business processes from completing at all, and identifying key business areas that would benefit from moving to an MDM environment.

Evaluate the business needs. This step is to prioritize the business goals and determine which are most critical, then determine which of those depend on MDM for their success.

Assess the current state. Here, staff members can use the maturity model to assess the current landscape of available tools, techniques, methods, and organizational readiness for MDM.

Assess the initial gap. By comparing the business needs against the evaluation of the current state, the analysts can determine the degree to which the current state is satisfactory to support business needs, or alternatively determine where there are gaps that can be addressed by implementing additional MDM capabilities.

Envision the desired state. If the current capabilities are not sufficient to support the business needs, the analysts must determine the maturity level that would be satisfactory and set that as the target for the implementation.

Analyze the capability gap. This step is where the analysts determine the gaps between current state and the desired level of maturity and identify which components and capabilities are necessary to fill the gaps.

Perform capability mapping. To achieve interim benefits, the analysts seek ways to map the MDM capabilities to existing application needs to demonstrate tactical returns.

Plan the project. Having identified the capabilities, tools, and methods that need to be implemented, the “bundle” can be handed off to the project management team to assemble a project plan for the appropriate level of requirements analysis, design, development, and implementation.
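The gap analysis steps in this road map can be sketched as a simple comparison of current and desired maturity per capability. This is a minimal sketch, assuming maturity is recorded as an integer level per capability; the capability names and levels below are hypothetical and do not come from the maturity model itself.

```python
from typing import Dict


def capability_gaps(current: Dict[str, int],
                    desired: Dict[str, int]) -> Dict[str, int]:
    """Return each capability whose current maturity is below the desired level.

    The value is the size of the shortfall. Capabilities missing from
    `current` are treated as level 0 (an assumption of this sketch).
    """
    gaps = {}
    for capability, target in desired.items():
        shortfall = target - current.get(capability, 0)
        if shortfall > 0:
            gaps[capability] = shortfall
    return gaps


# Hypothetical assessment results on an assumed integer maturity scale.
current_state = {"metadata management": 2, "data governance": 1,
                 "identity resolution": 3}
desired_state = {"metadata management": 3, "data governance": 3,
                 "identity resolution": 3}

gaps = capability_gaps(current_state, desired_state)
# List the largest shortfalls first, as candidates for early project phases.
for capability, shortfall in sorted(gaps.items(), key=lambda item: -item[1]):
    print(f"{capability}: {shortfall} level(s) below target")
```

The resulting list is one possible input to the "bundle" of capabilities handed to the project management team.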

13.2.2. Rollout Plan

Once the road map has been developed, a rollout plan can be designed to execute against the road map; here is an example of a staged plan.

Preparation and adoption. During this initial stage, the information and application environments are reviewed to determine whether there is value in instituting an MDM program. The stage also covers developing the business justification, compiling marketing and educational materials, and other tasks in preparation for initiating a project. Tasks may include metadata assessment, data profiling, identification of selected data sets for consolidation (“mastering”), vendor outreach, training sessions, and the evaluation of solution approaches.

Proof of concept. As a way of demonstrating feasibility, a specific master data object type is selected as an initial target for a consolidation project. There may be an expectation that the result is a throw-away, and the process is intended to identify issues in the overall development process. This may be taken as an assessment opportunity for vendor products, essentially creating a bake-off that allows each vendor to showcase a solution. At the same time, more comprehensive enterprise-wide requirements are determined, documented, and associated within a metadata framework to capture the correlation between business expectations, business rules, and policy management directed by the MDM environment. By the end of this stage, the target MDM architecture will have been determined and the core set of necessary services enumerated.

Initial deployment. Subsequent to the determination of a solution approach and any associated vendor product selection, the lessons learned from the proof of concept stage are incorporated into this development and deployment activity. Master data models are refined to appropriately reflect the organization's needs; data quality expectations are defined in terms of specific dimensions; and identity searching and matching is in place for both batch and inline record linkage and consolidation. A master registry will be produced for each master data object, and both core services and basic application function services will be made available. The master view of data is more likely to be used as reference data. Data governance processes are identified and codified.

Release 1.0. As the developed registries/repositories and services mature, the program reaches a point where it is recognized as suitable for general release. At this point, there are protocols for the migration of business applications to the use of the master data environment, and there are defined policies for all aspects of master data management, including auditing data integration and consolidation, compliance with defined data expectations, and SLAs for ensuring data quality. The roles and responsibilities for data governance are activated. Modifications to the master data resources are propagated to application clients. New application development can directly use the master data resource.

Transition. At this point, the application migration processes transition legacy applications to the master data resource. As different types of usage scenarios come into play, the directives for synchronization and coherence are employed. Additional value-added services are implemented and integrated into the service layer.

Figure 13.1 shows a sample template with project milestones that can be used to model an organization's high-level road map. The detailed road map enumerates the specific tasks to be performed in order to reach the defined milestones.

Figure 13.1 A sample template for developing an MDM development road map.

The key deliverable for this stage is a development road map that does the following:

13.3. Roles and Responsibilities

Managing the participants and stakeholders associated with an MDM program means clearly articulating each specific role, each participant's needs, the tasks associated with each role, and the accountability associated with the success of the program. A sampling of roles is shown in Figure 13.2.

Figure 13.2 Roles associated with master data management programs.

To ensure that each participant's needs are addressed and that their associated tasks are appropriately performed, there must be some delineation of specific roles, responsibilities, and accountabilities assigned to each person; in Chapter 2 we reviewed using a RACI model, with the acronym RACI referring to the identification of who is responsible, accountable, consulted, and informed of each activity.

Within the model, the participation types are allocated by role to each task based on whether the role is responsible for deliverables related to completing the task, accountable for the task's deliverables, consulted for advice on completing the task, or is informed of progress or issues associated with the task.

The key deliverables of this stage include the following:

▪ An enumeration and description of each of the stakeholder or participant roles

▪ A RACI matrix detailing the degrees of responsibility for each of the participants
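One way to picture the RACI matrix deliverable is as a mapping from each task to the participation type of each role. The sketch below is illustrative only: the task and role names are hypothetical, and the check that every task has exactly one accountable role is a common RACI convention assumed here rather than a rule stated in this chapter.

```python
from enum import Enum
from typing import Dict, List


class Raci(Enum):
    RESPONSIBLE = "R"
    ACCOUNTABLE = "A"
    CONSULTED = "C"
    INFORMED = "I"


# task -> role -> participation type (hypothetical tasks and roles)
raci_matrix: Dict[str, Dict[str, Raci]] = {
    "Develop business case": {
        "Business sponsor": Raci.ACCOUNTABLE,
        "MDM program manager": Raci.RESPONSIBLE,
        "Data steward": Raci.CONSULTED,
        "Application owner": Raci.INFORMED,
    },
    "Define data quality rules": {
        "Data governance board": Raci.ACCOUNTABLE,
        "Data steward": Raci.RESPONSIBLE,
        "Application owner": Raci.CONSULTED,
    },
}


def tasks_without_single_accountable(
        matrix: Dict[str, Dict[str, Raci]]) -> List[str]:
    """Return tasks that do not have exactly one accountable role."""
    flagged = []
    for task, roles in matrix.items():
        accountable = [r for r, p in roles.items() if p is Raci.ACCOUNTABLE]
        if len(accountable) != 1:
            flagged.append(task)
    return flagged


flagged_tasks = tasks_without_single_accountable(raci_matrix)
print(flagged_tasks)
```

Keeping the matrix in a structured form like this makes it straightforward to review accountability gaps before the project plan is finalized.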

13.4. Project Planning

As the road map is refined and the specific roles and responsibilities identified, a detailed project plan can be produced with specific tasks, milestones, and deliverables. For each of the phases specified in the road map, a more detailed list of milestones and deliverables will be listed, as reflected by the responsibilities in the RACI matrix. The list of tasks to accomplish each milestone or deliverable will be detailed, along with resource/staff allocation, estimated timeframes, dependencies, and so on. The project plan will incorporate the activities in the following sections of this chapter. The key deliverable for this stage is a detailed project plan with specific milestones and deliverables.

13.5. Business Process Models and Usage Scenarios

Business operations are guided by defined performance management goals and the approaches to be taken to reach those goals, and applications are developed to support those operations. Applications are driven by business policies, which should be aligned with the organization's strategic direction, and therefore the applications essentially implement business processes that execute those policies.

Although many application architectures emerge organically, they are always intended to implement those business policies through the interaction with persistent data. By documenting the business processes at a relatively granular level, analysts can understand which data entities are touched, determine if those entities reflect master object types, and map those data entities to their corresponding master type. Ultimately, looking at the ways that these applications access apparent master data objects embedded within each data repository helps drive determination of the initial targets for integration into the master environment.

Finally, and most important, one must consider both the existing applications that will be migrated to the new environment and how their operations must evolve, as well as any new business processes for which new applications will be developed targeting the master data environment. Evaluating the usage scenarios shows the way that the participants within each business process will access master data, which will contribute later to the decisions regarding master data models, master data services, and the underlying architectures.

The key deliverables for this activity are as follows:

▪ A set of business process models for each of the end-client activities that are targeted for inclusion in the master data management program

▪ A set of usage scenarios for the business applications that will be the clients of the master data asset

13.6. Identifying Initial Data Sets for Master Integration

During this activity, the business process models are reviewed to identify applications and their corresponding data sets that are suitable as initial targets for mastering. The business process models reflect the data interchanges across multiple processing streams. Observing the use of similar abstract types of shared data instances across different processing streams indicates potential master data objects.

Because the business justification will associate master data requirements with achieving business objectives, those requirements should identify the conceptual master data objects that are under consideration, such as customers, products, or employees. In turn, analysts can gauge the suitability of any data set for integration as a function of the business value to be derived (e.g., in reducing cross-system reconciliation or as a by-product of improving customer profiling) and the complexity in “unwinding” the existing data access points into the master view. Therefore, this stage incorporates the development of guidelines for determining the suitability of data sets for master integration and applying those criteria to select the initial data sets to be mastered.

The key deliverable for this phase is a list of the applications that will participate in the MDM program (at least the initial set) and the master data object types that are candidates for mastering. The actual collection of metadata and determination of extraction and transformation processes are fleshed out in later stages.

13.7. Data Governance

As discussed in Chapter 4, data governance essentially focuses on ways that information management is integrated with controls for management oversight along with the verification of organizational observance of information policies. In this context, the aspects of data governance relate to specifying the information policies that reflect business needs and expectations and the processes for monitoring conformance to those information policies. This means introducing both the management processes for data governance and the operational tools to make governance actionable.

The key deliverables of this stage include the following:

▪ Management guidance for data governance

▪ Hierarchical management structures for overseeing standards and protocols

▪ Processes for defining data expectations

▪ Processes for defining, implementing, and collecting quantifiable measurements

▪ Definitions of acceptability thresholds for monitored metrics

▪ Processes for defining and conforming to directives in SLAs

▪ Processes for operational data governance and stewardship

▪ Identification and acquisition of tools for monitoring and reporting compliance with governance directives
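To make the idea of acceptability thresholds for monitored metrics concrete, here is a minimal sketch that compares collected measurements against minimum acceptable values and reports violations for stewardship follow-up. The metric names, threshold values, and measurements are all hypothetical assumptions; an actual program would take them from its own data expectations and SLAs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class MetricThreshold:
    name: str
    minimum_acceptable: float  # expressed as a fraction between 0 and 1


def find_violations(thresholds: List[MetricThreshold],
                    measured: Dict[str, float]) -> List[str]:
    """Return a message for every metric that is missing or below threshold."""
    violations = []
    for threshold in thresholds:
        value = measured.get(threshold.name)
        if value is None:
            violations.append(f"{threshold.name}: no measurement collected")
        elif value < threshold.minimum_acceptable:
            violations.append(
                f"{threshold.name}: {value:.2%} is below threshold "
                f"{threshold.minimum_acceptable:.2%}")
    return violations


# Hypothetical thresholds and measurements.
thresholds = [
    MetricThreshold("customer_name_completeness", 0.98),
    MetricThreshold("postal_code_validity", 0.95),
]
measured = {"customer_name_completeness": 0.991, "postal_code_validity": 0.91}

violations = find_violations(thresholds, measured)
for message in violations:
    print(message)
```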

13.8. Metadata

In Chapter 6, we looked at how the conceptual view of master metadata starts with basic building blocks and grows to maintain comprehensive views of the information that is used to support the achievement of business objectives. Metadata management provides an anchor for all MDM activities, and the metadata repository is used to “control” the development and maintenance of the master data environment by capturing information as necessary to drive the following:

▪ The analysis of enterprise data for the purpose of structural and semantic discovery

▪ The correspondence of meanings to data element types

▪ The determination of master data element types

▪ The models for master data object types

▪ The interaction models for applications touching master data

▪ The information usage scenarios for master data

▪ The data quality directives

▪ Access control and management

▪ The determination of core master services

▪ The determination of application-level master services

▪ Business policy capture and correspondence to information policies

Specifying the business requirements for capturing metadata relevant to MDM is the prelude to the evaluation and acquisition of tools that support resolving business definitions; classifying reference data assets; documenting data element structures, table layouts, and general information architecture; and developing higher-order concepts such as data quality business rules, service level agreements, services metadata, and business data such as the business policies driving application design and implementation.

The following are the milestones and deliverables for this stage:

▪ Business requirements for metadata management tools

▪ Evaluation and selection of metadata tools

▪ Processes for gaining consensus on business data definitions

▪ Processes for documenting data element usage and service usage

13.9. Master Object Analysis

In Chapter 7, we looked at how the challenges of master data consolidation are not limited to determining what master objects are used but rather incorporate the need to find where master objects are used in preparation for standardizing the consolidated view of the data. This ultimately requires a strategy for standardizing, harmonizing, and consolidating the different representations in their source formats into a master repository or registry. It is important to provide the means for both the consolidation and the integration of master data as well as to facilitate the most effective and appropriate sharing of that master data. This stage takes place simultaneously with the development of the master data models for exchange and persistence. Actual data analytics must be applied to address some fundamental issues regarding the enterprise assets themselves, such as the following:

▪ Determining which specific data objects accessed within the business process models are master data objects

▪ Mapping application data elements to master data elements

▪ Selecting the data sets that are the best candidates to populate the organization's master data repository

▪ Locating and isolating master data objects that may be hidden across various applications

▪ Applying processes for collecting master metadata and developing data standards for sharing and exchange

▪ Analyzing the variance between the different representations in preparation for developing master data models

The expected results of this stage will be used to configure the data extraction and consolidation processes for migrating data from existing resources into the master environment; they include the following:

13.10. Master Object Modeling

In Chapter 8, we looked at the issues associated with developing models for master data, from extraction through consolidation and persistence to delivery. This is the stage for developing and refining the models used for extraction, consolidation, persistence, and sharing. It is important to realize that becoming a skilled practitioner of data modeling requires a significant amount of training and experience, so it is critical to engage individuals with the proper skill sets to successfully complete this task.

Every master data modeling exercise must encompass the set of identifying attributes, that is, the data attribute values that are combined to provide a candidate key that is used to distinguish each record from every other. This will rely on the cross-system data analysis and data profiling performed during the master data object analysis stage, and the outputs of that process iteratively inform the outputs of the modeling processes. It also relies on the documented metadata that have been accumulated from analysis, interviews, and existing documentation.
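A simple profiling check can help test whether a proposed set of identifying attributes actually distinguishes records. The following sketch counts combined attribute values and reports any combination shared by more than one record. The record layout and sample values are hypothetical, and uniqueness within a sample is only evidence, not proof, that the attributes form a candidate key across the enterprise.

```python
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Tuple

Record = Dict[str, str]


def duplicate_keys(records: Iterable[Record],
                   attributes: Sequence[str]) -> List[Tuple[str, ...]]:
    """Return combined attribute values that occur in more than one record."""
    counts = Counter(
        tuple(record.get(attribute, "") for attribute in attributes)
        for record in records
    )
    return [key for key, count in counts.items() if count > 1]


# Hypothetical customer records.
customers = [
    {"name": "Ann Lee", "birth_date": "1980-02-01", "postal_code": "10001"},
    {"name": "Ann Lee", "birth_date": "1975-07-15", "postal_code": "10001"},
    {"name": "Bo Chen", "birth_date": "1980-02-01", "postal_code": "94105"},
]

# Name plus postal code collides for two different people in this sample.
weak_key_collisions = duplicate_keys(customers, ["name", "postal_code"])
# Name plus birth date distinguishes every record in this sample.
strong_key_collisions = duplicate_keys(customers, ["name", "birth_date"])
print(weak_key_collisions, strong_key_collisions)
```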

The following are the expected deliverables from this stage:

▪ A model for linearized representations of data extracted from original sources in preparation for sharing and exchange

▪ A model used during the data consolidation process

▪ A model for persistent storage of selected master data attributes

13.11. Data Quality Management

One of the key value statements related to application use of master data is an increased level of trust in the representative unified view. This implies a level of trustworthiness of the data as it is extracted from the numerous sources and consolidated into the master data asset. At the operational level, business expectations for data quality will have been associated with clearly defined business rules related to ensuring the accuracy of data values and monitoring the completeness and consistency of master data elements. This relies on what has been accumulated within the master metadata repository, including assertions regarding the core definitions, perceptions, formats, and representations of the data elements that compose the model for each master data object.

The data governance guidance developed during earlier phases of the program will be operationalized using metadata management and data quality techniques. Essentially, monitoring and ensuring data quality within the MDM environment is associated with identifying critical data elements, determining which data elements constitute master data, locating and isolating master data objects that exist within the enterprise, and reviewing and resolving the variances between the different representations in order to consolidate instances into a single view. Even after the initial migration of data into a master repository, there will still be a need to instantiate data inspection, monitoring, and controls to identify any potential data quality issues and prevent any material business impacts from occurring.

Tools supporting parsing, standardization, identity resolution, and enterprise integration contribute to aspects of master data consolidation. During this stage, business requirements for tools necessary for assessing, monitoring, and maintaining the correctness of data within the master environment will be used as part of the technology-acquisition process.

The key deliverables for this stage are as follows:

▪ The determination of business requirements for data quality tools

▪ The formalization and implementation of operational data quality assurance techniques

▪ The formalization and implementation of operational data governance procedures

▪ The acquisition and deployment of data quality tools

13.12. Data Extraction, Sharing, Consolidation, and Population

At this point in the program, there should be enough information gathered as a result of the master object analysis and the creation of the data models for exchange and for persistence in order to formulate the processes for data consolidation. The collected information, carefully documented as master metadata, can now be used to create the middleware for extracting data from original sources and marshaling data within a linearized format suitable for data consolidation.

Data integration services provide the ability to extract the critical information from different sources and to ensure that a consolidated view is engineered into the target architecture. This stage is the time for developing the data extraction services that will access data in a variety of source applications and that can select and extract data instance sets into a format that is suitable for exchange. Data integration products are designed to seamlessly incorporate the following functions:

▪ Data extraction and transformation. After extraction from the sources, data rules may be triggered to transform the data into a format that is acceptable to the target architecture. These rules may be engineered directly within data integration services or may be embedded within supplemental acquired technologies.

▪ Data monitoring. Business rules may be applied at the time of transformation to monitor the conformance of the data to business expectations (such as data quality) as it moves from one location to another. Monitoring enables oversight of business rules discovered and defined during the data profiling phase to validate data as well as single out records that do not conform to defined data quality, feeding the data governance activity.

▪ Data consolidation. As different sources' data instances are brought together, the integration tools use the parsing, standardization, harmonization, and matching capabilities of the data quality technologies to consolidate data into unique records in the master data model.
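The three functions above can be sketched end to end on a toy scale: standardize extracted records, group them by identifying attributes, and apply a survivorship rule to produce one master record per group. This is a deliberately simplified sketch under stated assumptions: matching is exact on a hypothetical (name, postal code) key, whereas real identity resolution typically uses approximate matching, and the survivorship rule (the most recently updated non-empty value wins) is only one of many possible policies. All field names and data are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Dict[str, str]


def standardize(record: Record) -> Record:
    """Collapse whitespace and uppercase values so equivalents compare equal."""
    return {key: " ".join(value.split()).upper()
            for key, value in record.items()}


def match_key(record: Record) -> Tuple[str, str]:
    """Hypothetical identifying attributes used for exact-match linkage."""
    return (record["name"], record["postal_code"])


def survive(cluster: List[Record]) -> Record:
    """Survivorship: the newest non-empty value of each attribute wins."""
    merged: Record = {}
    # Process oldest first so that newer non-empty values overwrite older ones.
    for record in sorted(cluster, key=lambda r: r["updated"]):
        for attribute, value in record.items():
            if value:
                merged[attribute] = value
    return merged


def consolidate(records: List[Record]) -> List[Record]:
    """Standardize, group matching records, and merge each group."""
    clusters: Dict[Tuple[str, str], List[Record]] = defaultdict(list)
    for record in records:
        clean = standardize(record)
        clusters[match_key(clean)].append(clean)
    return [survive(cluster) for cluster in clusters.values()]


# Hypothetical extracts from two source systems (ISO dates sort as text).
sources = [
    {"name": "ann  lee", "postal_code": "10001", "phone": "",
     "updated": "2023-01-10"},
    {"name": "Ann Lee", "postal_code": "10001", "phone": "555-0100",
     "updated": "2022-06-01"},
    {"name": "Bo Chen", "postal_code": "94105", "phone": "555-0199",
     "updated": "2023-03-05"},
]
masters = consolidate(sources)
print(masters)
```

In this sample the two Ann Lee records collapse into one master record that keeps the older phone number, because the newer record has no phone value to supersede it.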

Developing these capabilities is part of the data consolidation and integration phase. For MDM programs put in place either as a single source of truth for new applications or as a master index for preventing data instance duplication, the extraction, transformation and consolidation can be applied at both an acute level (i.e., at the point that each data source is introduced into the environment) and on an operational level (i.e., on a continuous basis, applied to a feed from the data source).

This stage's key deliverables and milestones include the following:

▪ Identification of business requirements for data integration tools

▪ Acquisition of data integration tools

▪ Identification of business requirements for data consolidation technology, including identity resolution, entity search and match, and data survivorship

▪ Development of data extraction “connectors” for the candidate source data systems

▪ Development of rules for consolidation

▪ Implementation of consolidation services (batch, inline)

▪ Development of rules for survivorship

▪ Implementation of rules for survivorship

▪ Formalization of the components within the master data services layer

13.13. MDM Architecture

There are different architectural styles along an implementation spectrum, and these styles are aligned in a way that depends on three dimensions:

1. The number of attributes maintained within the master data system

2. The degree of consolidation applied as data is brought into the master repository

3. How tightly coupled applications are to the data persisted in the master data environment

One end of the spectrum employs a registry, limited to maintaining identifying attributes, which is suited to loosely coupled application environments and where the drivers for MDM are based more on the harmonization of unique representation of master objects on demand. The other end of the spectrum is the transaction hub, best suited to environments requiring tight coupling of application interaction and a high degree of data currency and synchronization. Hybrid approaches can lie anywhere between these two ends, and the architecture can be adjusted in relation to the business requirements as necessary.

Other dimensions also contribute to the decision: service layer complexity, access roles and integration needs, security, and performance. Considering the implementation spectrum (as opposed to static designs) within the context of the dependent factors simplifies selection of an underlying architecture. Employing a template like the one shown in Chapter 9 can help score the requirements associated with defined selection criteria. The scores can be weighted based on identified key aspects, and the resulting assessment can be gauged to determine a reasonable point along the implementation spectrum that will support the MDM requirements.
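The weighted scoring described above can be sketched as follows. This is an illustration only and does not reproduce the Chapter 9 template: the 0-to-10 scale (0 favoring a registry, 10 favoring a transaction hub), the criterion names, the weights, and the cutoff values in `suggest_style` are all assumptions.

```python
def spectrum_position(scores, weights):
    """Return the weighted average of the criterion scores.

    Each score is on an assumed 0-to-10 scale, where 0 favors a
    registry and 10 favors a transaction hub.
    """
    missing = set(scores) - set(weights)
    if missing:
        raise ValueError(f"no weight for criteria: {sorted(missing)}")
    total_weight = sum(weights[c] for c in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[c] * weights[c] for c in scores) / total_weight


def suggest_style(position):
    """Map a position to a style using hypothetical cutoffs."""
    if position < 3.5:
        return "registry"
    if position > 6.5:
        return "transaction hub"
    return "hybrid"


# Hypothetical assessment against the three dimensions listed earlier.
scores = {"attributes": 2, "consolidation": 4, "coupling": 3}
weights = {"attributes": 1, "consolidation": 2, "coupling": 2}

position = spectrum_position(scores, weights)
style = suggest_style(position)
```

With these sample values the position works out to (2 + 8 + 6) / 5 = 3.2, which the assumed cutoffs place toward the registry end. The result is a starting point for discussion, not a decision rule.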

The following are some of the key deliverables and milestones of this phase:

▪ Evaluation of master data usage scenarios

▪ Assessment of synchronization requirements

▪ Evaluation of vendor data models

▪ Review of the architectural styles along the implementation spectrum

▪ Selection of a vendor solution

13.14. Master Data Services

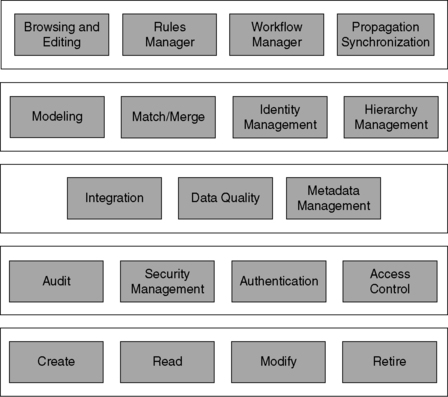

Master data service implementations may vary, but as discussed in Chapter 12, the services can be characterized within three layers:

▪ Core services, which focus on data object life cycle actions, along with the capabilities necessary for supporting general functions applied to master data

▪ Object services, which focus on actions related to the master data object type or classification (such as customer or product)

▪ Business application services, which focus on the functionality necessary at the business level

Figure 13.3 shows some example core services, such as the object create, update, and retire services, among others; access control; data consolidation; integration; and the components supporting consolidation, business process workflows, rule-directed operations, and so on. Although the differences inherent across many organizations will reflect slight differences in the business's catalog of services, this framework provides a reasonable starting point for evaluation and design.

Figure 13.3 Core master data services.

There is clearly a mutual dependence between the success of applications relying on master data management and those reliant on a services-oriented architecture. A services-oriented approach to application development relies on the existence of a master data repository, and the success of an MDM program depends on the ability to deploy and use master object access and manipulation services. A flexible SOA enables the organizational changes necessary to use the data most efficiently. Driving application development from the business perspective forces a decision regarding harmonizing both the data objects and the ways those objects are used, and a services-based approach allows the organization to exploit enterprise data in relation to the ways that using enterprise data objects drives business performance.

Access to master data is best achieved when it is made transparent to the application layer, and employing a services-based approach actually serves multiple purposes within the MDM environment. During the initial phases of deploying the master environment, the use of a service layer simplifies the implementation of the master object life cycle and provides a transition path from a distributed application architecture into the consolidated master application infrastructure.

Key milestones and deliverables include the following:

▪ Qualification of master service layers

▪ Design of master data services

▪ Implementation and deployment of master data services

▪ Application testing

13.15. Transition Plan

Exposing master data for use across existing legacy applications poses a challenge for a number of reasons, including the need for customized data extraction and transformation, the need to reduce replicated functionality, and the need to adjust the legacy applications' data access patterns. Providing the component service layer addresses some of these issues; although the traditional extraction, transformation, and loading (ETL) method is insufficient for continuous synchronization, master data services support the data exchange that will use data integration tools in the appropriate manner. In essence, the transaction semantics necessary to maintain consistency can be embedded within the service components that support master data object creation and access, eliminating the concern that using ETL impedes consistency. The consolidation of application capabilities within a component service catalog through the resolution of functionality eliminates functional redundancy, thereby reducing the risks of inconsistency as a result of replication.

Yet the challenge of applications transitioning to using the master repository still exists, requiring a migration strategy that minimizes invasiveness. To transition to using the master repository, applications must be adjusted to target the master repository instead of their own local data store. Planning this transition involves developing an interface to the master repository that mimics each application's own existing interface, and the method discussed in Chapter 12 for this transition is developing a conceptual service layer as a stepping-stone in preparation for the migration using a design pattern known as “wrapper façades.”
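The idea of an interface that mimics each application's existing one can be sketched as follows. This is a minimal illustration of the wrapper façade approach as it is described here, not the design from Chapter 12: every class, method, field name, and identifier is hypothetical, and in-memory dictionaries stand in for real data stores.

```python
class LegacyCustomerStore:
    """Stand-in for an application's existing local data access layer."""

    def __init__(self):
        self._rows = {"C-17": {"cust_nm": "Jane Smith", "tel": "555-0100"}}

    def get_customer(self, cust_id):
        return self._rows[cust_id]


class MasterCustomerService:
    """Stand-in for a master data service (hypothetical interface)."""

    def __init__(self):
        self._xref = {"C-17": "M-0001"}  # legacy ID -> master ID
        self._master = {
            "M-0001": {"full_name": "Jane Smith", "phone": "555-0100"}
        }

    def resolve(self, legacy_id):
        return self._xref[legacy_id]

    def fetch(self, master_id):
        return self._master[master_id]


class CustomerStoreFacade:
    """Offers the legacy interface but reads from the master service."""

    def __init__(self, master):
        self._master = master

    def get_customer(self, cust_id):
        record = self._master.fetch(self._master.resolve(cust_id))
        # Translate master attribute names back to the legacy layout.
        return {"cust_nm": record["full_name"], "tel": record["phone"]}


def format_label(store, cust_id):
    """Application code: unchanged whichever store it is given."""
    customer = store.get_customer(cust_id)
    return f"{customer['cust_nm']} ({customer['tel']})"


before = format_label(LegacyCustomerStore(), "C-17")
after = format_label(CustomerStoreFacade(MasterCustomerService()), "C-17")
```

Because the façade keeps the legacy method name and record layout, the application code in `format_label` does not change at cutover; only the object passed to it does. That is what keeps the migration minimally invasive.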

During the transition stage, the data consolidation processes are iterated and tested to ensure that the right information is migrated from the source data systems into the master data asset. At the same time, any adjustments or changes to the master service layer are made to enable a cutover from dependence on the original source to dependence on the services layer (even if that services layer still accesses the original data). The application transition should be staged to minimize confusion with respect to identifying the source of migration problems. Therefore, instituting a migration and transition process and piloting that process with one application is a prelude to instituting that process on an ongoing basis.

13.16. Ongoing Maintenance

At this point, the fundamental architecture has been established, along with the policies, procedures, and processes for master data management, including the following:

▪ Identifying master data objects

▪ Creating a master data model

▪ Assessing candidate data sources for suitability

▪ Assessing requirements for synchronization and consistency

▪ Assessing requirements for continuous data quality monitoring

▪ Overseeing the governance of master data

▪ Developing master data services

▪ Transitioning and migrating legacy applications to the master environment

Having the major components of the master data strategy in place enables two maintenance activities: the continued migration of existing applications to the master environment and ensuring the proper performance and suitability of the existing master data system. The former is a by-product of the pieces put together so far; the latter involves ongoing analysis of performance against agreed-to service levels.

At the same time, the system should be reviewed to ensure the quality of record linkage and identity resolution. In addition, MDM program managers should oversee the continued refinement of master data services to support new parameterized capabilities or to support newly migrated applications.

The following are the expectations of this phase:

▪ Establishing minimum levels of service for data quality

▪ Establishing minimum levels of service for application performance

▪ Establishing minimum levels of service for synchronization

▪ Implementing service-level monitoring and reporting

▪ Enhancing governance programs to support MDM issue reporting, tracking, and resolution

▪ Reviewing ongoing vendor product performance
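As a rough illustration of service-level monitoring and reporting, the sketch below compares observed metrics against agreed minimum levels for data quality, application performance, and synchronization. The metric names and threshold values are hypothetical; real values would come from the service-level agreements negotiated with the business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLevel:
    name: str
    threshold: float
    higher_is_better: bool

    def is_met(self, observed):
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


# Hypothetical agreed-to service levels, one per category.
SERVICE_LEVELS = [
    ServiceLevel("match_precision", 0.98, True),     # data quality
    ServiceLevel("p95_lookup_ms", 200.0, False),     # application performance
    ServiceLevel("sync_lag_seconds", 60.0, False),   # synchronization
]


def find_violations(observed):
    """Return (name, observed value, threshold) for each unmet level.

    A metric that was not reported at all is treated as a violation.
    """
    violations = []
    for level in SERVICE_LEVELS:
        value = observed.get(level.name)
        if value is None or not level.is_met(value):
            violations.append((level.name, value, level.threshold))
    return violations


sample = {
    "match_precision": 0.99,
    "p95_lookup_ms": 250.0,
    "sync_lag_seconds": 30.0,
}
violations = find_violations(sample)
```

In this sample only the lookup latency misses its target. Violations found this way would feed the issue reporting, tracking, and resolution process maintained by the governance program.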

13.17. Summary: Excelsior!

Master data management can provide significant value to growing enterprise information management initiatives, especially when MDM improves interapplication information sharing, simplifies application development, reduces complexity in the enterprise information infrastructure, and lowers overall information management costs. In this chapter, we have provided a high-level road map, yet your mileage may vary, depending on your organization's readiness to adopt this emerging technology.

As this technology continues to mature, there will be, by necessity, an evolution in the way we consider the topics discussed here. Therefore, to maintain that view, consult this book's associated website (www.mdmbook.com) for updates, new information, and further insights.

That being said, there is great value in engaging others who have the experience of assessing readiness, developing a business case, developing a road map, developing a strategy, designing, and implementing a master data management program. Please do not hesitate to contact us directly ([email protected]) for advice. We are always happy to provide recommendations and suggestions to move your MDM program forward!
