Chapter 5. Knowledge of risk as an element of cybersecurity argument

Abstract

Ad hoc methods for identifying security holes in cybersystems suit hackers; however, the risk assessment process underlying cyberdefense must be systematic.

This chapter describes how risk analysis can be made more systematic, repeatable, and objective to provide a solid foundation for system assurance. Accumulating and distributing cybersecurity knowledge in the form of accredited and up-to-date machine-readable catalogs of threat events can make risk assessment more repeatable. Integrating cybersecurity knowledge with system facts makes identification of threats and the corresponding risks systematic and produces evidence for the assurance case. Accumulation and distribution of cybersecurity knowledge from more experienced analysts all the way down to the defenders of individual systems require more attention to exchange standards. The OMG Software Assurance Ecosystem involves a common vocabulary for assets, threat events, threat agents, threats, threat scenarios, and risks, as part of the integrated system model.

Keywords

asset, asset category, injury, security requirements, threat agent, threat activity, threat event, threat, risk, security safeguard, threat identification, hazard, vulnerability, cybersecurity knowledge, information exchange

How infinitely good that Providence is, which has settled in its government of mankind such narrow bounds to his sight and knowledge of things; and though he walks in the midst of so many thousand dangers, the sight of which, if discovered to him, would distract his mind and sink his spirits, he is kept serene and calm, by having the events of things hid from his eyes, and knowing nothing of the dangers which surround him.

—Daniel Defoe, Robinson Crusoe

5.1. Introduction

Each new cybersystem creates new opportunities and introduces new risks. Knowledge of specific risks, including unique threats and undesired events related to the system of interest, is critical for the system assurance process, as described in Chapters 2 and 3. Risk assessment produces an estimate of the risk of security incidents involving the cybersystem of interest. It answers the following questions:

• What can go wrong?

• How bad could it be?

• How likely is it to occur?

Answers to these questions produce a measure of risk that is used to prioritize risks during the risk management process. Should the management of this risk be through reduction, then the safeguard selection process provides answers to the question:

• What could be done to reduce the exposure?

The key to justified cybersecurity is the connection between traditional risk assessment and assurance to create an end-to-end argument that the risk of security incidents during the operation of the system is made as low as reasonably practicable by implementing safeguards that are effective against the risks identified. Confidence in the security posture of the system largely depends on understanding the risks that are specific to the system. Systematic and repeatable identification of risks is therefore essential for justifiable cybersecurity. While it is true to say that risks arise from uncertainty, justifiable cybersecurity focuses on such components of risk that are deterministic and predictable.

The two fundamental questions of identifying risks are “What is the risk to?” and “From what sources does the risk originate?” Assets are the targets of risk. Threats are the sources of risk. Threats are different from both threat agents and attacks. An attack is a particular scenario of how a given threat materializes and turns into a security incident. Detailed understanding of the components of cyber threats is required for making comprehensive threat statements, selecting countermeasures, and formulating clear and comprehensive claims regarding the effectiveness of these countermeasures. Knowledge of threats determines the structure of the assurance argument as described earlier in Chapter 3.

It is commonly understood that development teams, although they collectively have detailed knowledge of the design and implementation of the system, are not well suited to identifying threats. Developers focus on features, on building and creating, not on breaking things apart and finding flaws. There is a conceptual gap between what a development team knows about the system and what needs to be known about the ways the system can be attacked. In order to adequately identify threats, the risk assessment team must have comprehensive knowledge of the shady landscape of cybercrime and experience with incidents and attacks. Consequently, the quality of the results varies from one risk analysis team to another. What matters is security experience, including knowledge of attacks that were successful. Are former hackers the best people to perform risk assessment? Several risk analysis textbooks, especially those aiming at improving the security skills of developers, recommend brainstorming as the method for identifying threats. Of course, in the end each team produces a set of risks to manage and provides some recommendations on additional countermeasures. Yet there is a need for justified confidence that no more threats of the same or larger magnitude exist.

Some organizations favor penetration testing as the method of assessing their security posture. The so-called ‘red team’ of ethical hackers may be contracted to identify security risks. However, in order to be comprehensive, penetration testing depends on the same knowledge that is required to identify threats by reviewing the design and implementation artifacts. As a result, penetration testing is plagued by the same subjectivity. A more experienced team may identify more problems but nevertheless leaves open the question of what problems may still remain unidentified.

Ad hoc methods for identifying security holes in cybersystems are sufficient for hackers; however, the risk assessment process underlying cyberdefense must be systematic.

So how can risk assessment be made more systematic, repeatable, and objective to provide a solid foundation for system assurance? One approach is to accumulate cybersecurity knowledge and distribute it to the defenders. Accumulating attack knowledge can make risk assessment more repeatable by applying accredited and up-to-date checklists, so that even an inexperienced risk analyst can be systematic in the process (for example, when a list of the key words describing the system can be used to query a comprehensive attack knowledge repository) and produce a credible list of threats. When such a resource is available early in the system's life cycle, many mistakes and inconsistencies in the downstream processes can be avoided. The OMG Software Assurance Ecosystem emphasizes development of the standard protocols for exchanging cybersecurity knowledge based on the system facts, which facilitate the transformation of cybersecurity knowledge into machine-readable content that can be accumulated, exchanged, and used as input into automated assurance tools. Accumulation and distribution of cybersecurity knowledge from more experienced analysts all the way down to the defenders of individual systems require more attention to the exchange standards. Cybersecurity knowledge should be systematically collected and accumulated, unlocked from the tools, and distributed from the few experts to the larger community. This means knowledge should be turned into a commodity, in very much the same way as electricity was unlocked from disconnected closed-circuit proprietary systems that included production, transmission, and consumption into a normalized, interconnected “grid” that led to the explosive growth of how electricity is utilized.
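The keyword query against an attack knowledge repository mentioned above can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the `ThreatEvent` fields, the `CATALOG` entries, and the `query_catalog` helper are hypothetical and do not reflect any accredited catalog or OMG exchange format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatEvent:
    """One catalog entry; the fields are illustrative, not a standard schema."""
    identifier: str
    description: str
    keywords: frozenset


# Hypothetical catalog content, invented for this example only.
CATALOG = [
    ThreatEvent("TE-001", "Unauthorized disclosure of stored records",
                frozenset({"database", "storage"})),
    ThreatEvent("TE-002", "Interception of traffic in transit",
                frozenset({"network", "wireless"})),
    ThreatEvent("TE-003", "Tampering with uploaded files",
                frozenset({"web", "upload", "storage"})),
]


def query_catalog(catalog, system_keywords):
    """Return entries that share at least one keyword with the system description."""
    wanted = {keyword.lower() for keyword in system_keywords}
    return [event for event in catalog if event.keywords & wanted]


# Key words describing a hypothetical system of interest.
matches = query_catalog(CATALOG, ["Database", "Web"])
print([event.identifier for event in matches])
```

The point of the sketch is repeatability: two analysts who describe the system with the same key words obtain the same candidate list, whatever their personal experience.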

Currently, there is a large knowledge gap between attackers and defenders. Collaborative cybersecurity and accumulation of cybersecurity knowledge are the starting point for closing this gap. Once collaboration in the area of cybersecurity picks up, risk assessments will become more repeatable, but will this also lead to more affordable cybersecurity? Many believe that it will. Once the cyberdefense community understands what knowledge has to be accumulated and exchanged, then standards and other protocols for communicating the knowledge will become progressively more efficient and automated tools will appear.

The complementary approach to more systematic risk assessment is through the development of an integrated assurance case that extends claims and arguments to the identification of threats. System assurance focuses on the justification aspect of both engineering and risk assessment. Justified identification of threats provides the foundation for selecting security countermeasures and contributes to justification of the countermeasures' effectiveness. In particular, during the identification of threats it is important that the risk assessment team provide clear and defendable justification that sufficient threats have been identified (commensurate with the selected security criteria).

Since it is prohibitively expensive—and probably impossible—to safeguard information and assets against all threats, modern security practice is based on assessing threats and vulnerabilities with regard to the risk each presents, and then selecting appropriate, cost-effective countermeasures. Systematic identification of threats is particularly important in justifying such a balance, which otherwise leads to unknowingly accepting high risks.

Assessing the level of threat is even more difficult than identifying the threat itself. The level of threat is the measure of the probability of the threat event and the measure of the associated impact. The threat and its level are often collectively referred to as an individual “risk”. Without access to reliable, consistent, and comprehensive data on previous incidents, little useful guidance can be provided on the probability of the threat materializing. Several authors have also noted that in the dynamic landscape of cybersecurity threats and new attack methods, observation of past events is not necessarily a good guide to future incidents. Another way to assess threats is to gather intelligence information related to identifying possible attackers and their capability, motivations, and plans from a network of agents, as is done in law enforcement and national security agencies. However, intelligence gathering only applies to certain types of threats and is unrealistic for most organizations. As a result, there is a tendency to base risk management on assessing vulnerabilities and their associated impact, or the level of damage that the organization would sustain if a threat event successfully exploited a vulnerability. This is believed to be much easier, since both factors are within the scope of the organization. Consequently, a certain “mythification” of the concept of vulnerability occurs, as some condition that can be systematically detected in the system. The vulnerability approach is explored in more detail in Chapters 6 and 7. The current chapter focuses on deterministic strategies for systematic identification of threats as a precursor for systematic detection of vulnerabilities.

5.2. Basic cybersecurity elements

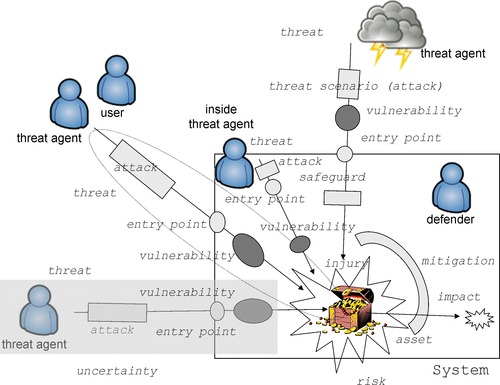

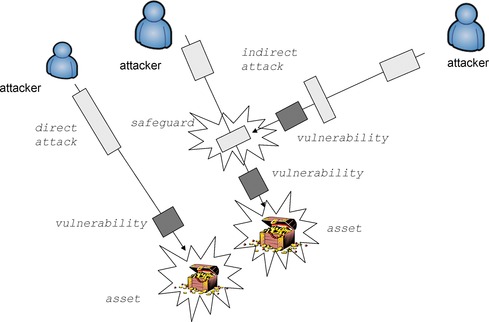

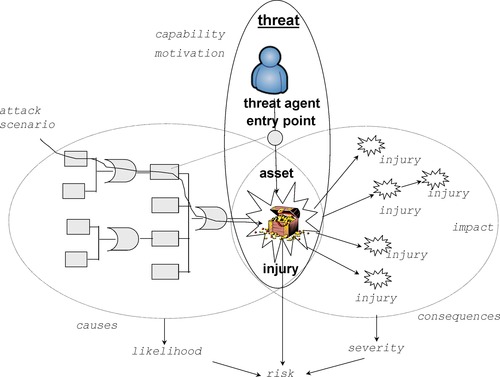

The framework for cybersecurity is defined in several international standards and recommendations such as ISO/IEC 13335 Guidelines for the Management of IT Security [ISO 13335], ISO/IEC 15443 A Framework for IT Security Assurance [ISO 15443], ISO/IEC 17799 A Code of Practice for Information Security Management [ISO 17779], ISO/IEC 27001 Information Security Management Systems [ISO 27001], ISO/IEC 15026 Systems and Software Assurance [ISO 15026], ISO/IEC 15408 Evaluation Criteria for IT Security [ISO 15408], and NIST SP800-30 Risk Management Guide [NIST SP800-30]. The following six terms (assets, impacts, threats, safeguards, vulnerabilities, and risks) describe at a high level the major elements involved in cybersecurity assurance. The terminology is based on ISO/IEC 13335. These elements are illustrated in Figures 1 and 2. A precise fact-oriented vocabulary of discernable noun and verb phrases is described in the next section.

Figure 1. Cyber attack components

Figure 2. Direct and indirect (multi-stage) attack

5.2.1. Assets

The proper management of assets is vital to the success of the organization and is a major responsibility of all management levels. The assets of an organization include the following categories:

• Physical assets (e.g., computer hardware, communications facilities, buildings);

• Information (e.g., documents, databases);

• Software;

• The ability to produce some product or provide a service;

• People;

• Intangibles (e.g., goodwill, image).

Most of these assets may be considered valuable enough to warrant some degree of protection. An assessment of the risks being accepted is necessary to determine whether the assets are adequately protected.

From a security perspective, it is not possible to implement and maintain a successful security program if the assets of the organization are not identified. In many situations, the process of identifying assets and assigning a value can be accomplished at a very high level and may not require a costly, detailed, and time-consuming analysis. The level of detail for this analysis must be measured in terms of time and cost versus the value of assets. In any case, the level of detail should be determined on the basis of the security objectives. In many cases, it is helpful to group assets.

Asset attributes relevant to security include their value and/or sensitivity.

Chapter 4 described the complex environment of the system life cycle, including the multiple support systems and supply chain, which must be considered when identifying assets related to the system of interest.

5.2.2. Impact

Impact is the consequence of an unwanted incident, caused either deliberately or accidentally, that affects the assets. The consequence could be the destruction of certain assets; damage to the information system; and loss of confidentiality, integrity, availability, nonrepudiation, accountability, authenticity, or reliability. Possible indirect consequences include financial losses and the loss of market share or company image. The measurement of impacts permits a balance to be reached between the results of an unwanted incident and the cost of the safeguards to protect against the unwanted incident. The frequency of occurrence of an unwanted incident needs to be taken into account. This is particularly important when the amount of harm caused by each occurrence is low but when the aggregate effect of many incidents over time may be harmful. Another example of impact is a multi-stage attack (see Figure 2). The assessment of impacts is an important element in the assessment of risks and the selection of safeguards.

Quantitative and qualitative measurements of impact can be achieved in a number of ways, such as:

• Establishing the financial cost;

• Assigning an empirical scale of severity (e.g., 1 through 10);

• Use of adjectives selected from a predefined list (e.g., low, medium, high).
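The three kinds of measurement listed above can be related to one another in a small sketch. The cut-off points between low, medium, and high, and the use of a simple product for the aggregate effect of frequent low-harm incidents, are assumptions chosen for illustration; the source does not prescribe them.

```python
def severity_to_adjective(severity):
    """Map a 1-10 severity score to an adjective from a predefined list.

    The cut-off points (3 and 7) are assumptions, not taken from a standard.
    """
    if not 1 <= severity <= 10:
        raise ValueError("severity must be between 1 and 10")
    if severity <= 3:
        return "low"
    if severity <= 7:
        return "medium"
    return "high"


def aggregate_cost(cost_per_incident, incidents_per_year):
    """Yearly financial effect of a recurring incident (simple product, an assumption)."""
    return cost_per_incident * incidents_per_year


print(severity_to_adjective(5))   # qualitative view of a mid-scale score
print(aggregate_cost(50, 400))    # many small incidents add up over a year
```

The second function reflects the remark that a low harm per occurrence can still be significant once frequency is taken into account.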

5.2.3. Threats

Assets are subject to many kinds of threats. A threat has the potential to cause an unwanted incident that may result in harm to a system or an organization and its assets. This harm can occur from either direct or indirect attack on the information being handled by a system or service, for example, its unauthorized destruction, disclosure, modification, corruption, and unavailability or loss. A threat needs to exploit an existing vulnerability of the asset in order to successfully cause harm to the asset. Threats may be of natural or human origin and may be accidental or deliberate. Both accidental and deliberate threats should be identified and their level and likelihood assessed.

Threats may impact specific parts of an organization, for example, the disruption to personal computers. Some threats may be general to the surrounding environment in the particular location in which a system or an organization exists, such as damage to buildings from hurricanes or lightning. A threat may arise from within the organization, as in sabotage by an employee, or from outside, as in malicious hacking or industrial espionage. The harm caused by the unwanted incident may be temporary, for example, a five-minute loss of service due to the restart of the server, or may be permanent, as in the case of destroying an asset.

The amount of harm caused by a threat can vary widely for each occurrence. For example, earthquakes in a particular location may have different strengths on each occasion.

Threats have characteristics that provide useful information about the threat itself. Examples of such information include:

• Source, that is, insider vs. outsider;

• Motivation of the threat agent, for example, financial gain, competitive advantage;

• Capability of the threat agent;

• Frequency of occurrence;

• Threat severity;

• Threats qualified in terms such as high, medium, and low, depending on the outcome of the threat assessment.
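The characteristics listed above can be recorded in a simple structure so that threats can be filtered and compared. This is a minimal sketch: the class and field names, the three-level scale, and the sample entries are hypothetical and are not part of any vocabulary defined in this chapter.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    INSIDER = "insider"
    OUTSIDER = "outsider"


class Level(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ThreatProfile:
    """Illustrative record of threat characteristics (field names are assumptions)."""
    name: str
    source: Source
    motivation: str
    capability: Level
    frequency_per_year: float
    severity: Level
    rating: Level  # overall outcome of the threat assessment


def rated_at_least(threats, minimum):
    """Keep the threats whose assessed rating is at or above the given level."""
    return [t for t in threats if t.rating.value >= minimum.value]


# Sample entries invented for this example.
threats = [
    ThreatProfile("sabotage by an employee", Source.INSIDER, "grievance",
                  Level.HIGH, 0.1, Level.HIGH, Level.MEDIUM),
    ThreatProfile("malicious hacking", Source.OUTSIDER, "financial gain",
                  Level.MEDIUM, 2.0, Level.MEDIUM, Level.HIGH),
    ThreatProfile("accidental deletion", Source.INSIDER, "none (accidental)",
                  Level.LOW, 12.0, Level.LOW, Level.LOW),
]

print([t.name for t in rated_at_least(threats, Level.MEDIUM)])
```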

Threats to the system of interest usually include threats to the support systems and supply chain, as described in Chapter 4.

5.2.4. Safeguards

Safeguards (countermeasures, controls) are practices, procedures, or mechanisms that may protect against a threat, reduce vulnerability, limit the impact of an unwanted incident, detect unwanted incidents, and facilitate recovery. Effective security usually requires a combination of different safeguards to provide layers of security for assets. For example, access control mechanisms applied to computers should be supported by audit controls, personnel procedures, training, and physical security. Some safeguards may already exist as part of the environment or as an inherent aspect of assets, or may be already in place in the system or organization. It is important to note that safeguards can come in different shapes and forms, such as technology choices (e.g., choosing Java over C++ as the programming language for implementation of system components), design decisions (e.g., no information flow from architecture component A to architecture component B), or designing and implementing protective mechanisms (e.g., an authentication safeguard or adding a firewall).

Safeguards may be considered to perform one or more of the following functions:

• Prevention;

• Deterrence;

• Detection;

• Limitation;

• Correction;

• Recovery;

• Monitoring;

• Awareness.

An appropriate selection of safeguards is essential for a properly implemented security program. Many safeguards can serve multiple functions. It is often more cost effective to select safeguards that will satisfy multiple functions. Some examples of areas where safeguards can be used include:

• Physical environment;

• Technical environment (hardware, software, and communications);

• Personnel;

• Administration;

• Security awareness, which is relevant to the personnel area.

Chapter 4 described the complex environment of the system life cycle, which involves multiple support systems and a supply chain that collectively determine the set of locations to which safeguards can be applied. Examples of specific safeguards are:

• Access control mechanisms;

• Antivirus software;

• Encryption for confidentiality;

• Digital signatures;

• Firewalls;

• Monitoring and analysis tools;

• Redundant power supplies;

• Backup copies of information;

• Personnel background checks.
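The remark that safeguards serving multiple functions are often more cost effective can be illustrated with a small selection sketch. It uses a greedy heuristic, which is an assumption of this example and is not guaranteed to find the smallest set; the mapping from safeguards to functions is also invented for illustration and is not taken from the source.

```python
def select_safeguards(candidates, required):
    """Greedy heuristic: repeatedly pick the safeguard that covers the most
    still-uncovered functions. Returns the chosen names and any functions
    that no candidate covers."""
    remaining = set(required)
    chosen = []
    while remaining:
        best = max(candidates, key=lambda name: len(candidates[name] & remaining))
        gained = candidates[best] & remaining
        if not gained:
            break  # no candidate covers what is left
        chosen.append(best)
        remaining -= gained
    return chosen, remaining


# Hypothetical mapping of safeguards to the functions they perform.
candidates = {
    "firewall": {"prevention", "monitoring"},
    "monitoring and analysis tools": {"detection", "monitoring"},
    "backup copies of information": {"recovery"},
    "access control mechanisms": {"prevention", "deterrence", "detection"},
}

chosen, uncovered = select_safeguards(candidates, {"prevention", "detection", "recovery"})
print(chosen, uncovered)
```

In practice the choice would also weigh cost and effectiveness against specific threats; the sketch only shows how coverage of functions can be made explicit.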

5.2.5. Vulnerabilities

Vulnerabilities associated with assets include weaknesses in physical layout, organization, procedures, personnel, management, administration, hardware, software, or information. They may be exploited by a threat agent and cause harm to the information system or business objectives. A vulnerability in itself does not cause harm; a vulnerability is merely a condition or set of conditions that may allow a threat to affect an asset. Vulnerabilities arising from different sources need to be considered, for example, those intrinsic to the asset. Vulnerabilities may remain unless the asset itself changes such that the vulnerability no longer applies. An example of a vulnerability is lack of an access control mechanism—a vulnerability that could allow the threat of an intrusion to occur and assets to be lost. Within a specific system or an organization not all vulnerabilities will be susceptible to a threat. Vulnerabilities that have a corresponding threat are of immediate concern. However, as the environment can change dynamically, all vulnerabilities should be monitored to identify those that have been exposed to old or new threats.

Vulnerability analysis is the examination of features that may be exploited by identified threats. This analysis must take into account the environment and existing safeguards. The measure of a vulnerability of a particular system or asset to a threat is a statement of the ease with which the system or asset may be harmed.

Vulnerabilities may be qualified in terms such as high, medium, and low, depending on the outcome of the vulnerability assessment.

5.2.6. Risks

Risk is the potential that a given threat will exploit vulnerabilities of an asset and thereby cause loss or damage to an organization. Single or multiple threats may exploit single or multiple vulnerabilities. We distinguish the threat as a certain multi-component state of affairs in the system, and risk, which is a certain measure associated with the threat (the level of threat).

A threat scenario describes how a particular threat or group of threats may exploit a particular vulnerability or group of vulnerabilities exposing assets to harm. The risk is characterized by a combination of two factors, the probability of the unwanted incident occurring and its impact. Any change to assets, threats, vulnerabilities, and safeguards may have significant effects on risks. Early detection or knowledge of changes in the environment or system increases the opportunity for appropriate actions to be taken to reduce the risk.
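A minimal sketch of how the two factors can be combined into a risk level used for prioritization follows. Taking the product of probability and impact is a common convention assumed here; the text only states that the two factors are combined. The sample risks and their numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    threat: str
    probability: float  # estimated chance of the unwanted incident, 0.0 to 1.0
    impact: float       # estimated loss if it occurs, in arbitrary units

    @property
    def level(self):
        """Risk level as probability times impact (an assumed combination rule)."""
        return self.probability * self.impact


def prioritize(risks):
    """Order risks from the highest level to the lowest."""
    return sorted(risks, key=lambda risk: risk.level, reverse=True)


# Sample figures invented for this example.
risks = [
    Risk("server theft", 0.05, 200),
    Risk("malware infection", 0.25, 100),
    Risk("data entry error", 0.5, 10),
]

for risk in prioritize(risks):
    print(risk.threat, risk.level)
```

Because any change to assets, threats, vulnerabilities, or safeguards alters the inputs, the ordering has to be recomputed whenever the environment changes.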

5.3. Common vocabulary for threat identification

In order to systematically build assurance cases and reason about the effectiveness of security countermeasures, it is necessary to have a conceptualization of threats as something against which we build countermeasures. Such a concept must provide that knowledge of threats be aligned with the system facts and can be turned into machine-readable content able to be exchanged using a standard protocol for the purposes of system assurance. The goal of this chapter is to describe a discernable conceptualization that can support repeatable and systematic assurance and automation.

How far is the community from normalizing knowledge of cyber threats and collaborating by exchanging machine-readable documents? In 2008, the NATO report titled “Improving Common Security Risk Analysis” [NATO 2008] mentioned that the different methods used by various NATO countries, such as EBIOS for France, NIST Risk Management SP800-30 for the United States, CRAMM for the UK, ITSG-04 for Canada, and MAGERIT for Spain, differ in their knowledge bases (assets, threats, vulnerabilities, etc.) and type of results (quantitative or qualitative), which makes it difficult or impossible to compare risk assessments when different methods have been used.

Our ability to identify cyber threats for a given system in a systematic and repeatable way depends on a common understanding of the threat theory and, in particular, understanding the locations that can be systematically covered during the search for threats. The cause of the incompatibilities between diverse risk assessment methodologies, noted in the NATO report, is that the definitions of threats and risks currently used in the cybersecurity community are rather high level and do not have the precision required to establish traceability links to the system facts. High-level non-discernable definitions are often responsible for higher levels of subjectivity in applying a particular risk assessment methodology and for the larger variation between the outcomes produced by different teams. The lack of discernable definitions was one of the challenges reported by the IDEAS Group during the Defense Enterprise Architecture Interoperability project [McDaniel 2008]. This issue is addressed by analyzing original vocabularies and applying a vocabulary disambiguation methodology, such as the BORO methodology (described in more detail in Chapter 9), to identify the basic discernable concepts for inclusion into the common vocabulary and to establish the mappings between the individual original vocabularies and the common vocabulary. In the rest of this chapter we use the so-called SBVR Structured English. You will recognize it by its distinctive typesetting. This notation is fully explained in Chapter 10. Chapter 9 explains the OMG Common Fact Model approach, which provides guidance on building common vocabularies as contracts for information exchange, including how to generate an XML schema for information exchange from this notation and how it defines fact-based repositories.

5.3.1. Defining discernable vocabulary for assets

There is little disagreement between different methodologies regarding asset identification and its role in the risk assessment.

Asset

Definition: tangible or intangible things that are within the scope of the system and that require protection because they are valuable to the owner of the system. Assets are also of interest to potential attackers. Assets include but are not limited to information in all forms and media, networks, systems, materiel, real property, financial resources, employee trust, public confidence and reputation

Concept type: noun concept

Asset category

Definition: group of assets with similar characteristics

Concept type: noun concept

Note: This is a useful abstraction, which allows knowledge exchange between different systems within the global cybersecurity ecosystem. Asset category creates a hierarchy of assets. Various lists of asset categories are available as the so-called risk assessment checklists

Note: Usually, a distinction is made between assets and capabilities (a demonstrable capacity or ability to perform a particular action). Service is defined as a mechanism to enable access to one or more capabilities, where the access is provided using a prescribed interface and is exercised consistent with constraints and policies as specified by the service description. Service provides access to a capability (which usually involves assets). A system delivers services.

Asset category includes asset category

Concept type: verb concept

Asset category includes asset

Concept type: verb concept

The two most often used top-level categories of assets are tangible and intangible assets:

• Tangible assets: concrete items, which include:

  ◦ Software asset: computer program, procedures, and possible associated documentation and data pertaining to the operation of a computer system;

  ◦ Information asset: any pattern of symbols or sounds to which meaning may be assigned;

  ◦ Interface asset: connection points relating to the systems and hardware that are necessary for the delivery of service but are not proprietary;

  ◦ Physical assets: any other concrete item;

• Intangible assets: matters of attitude arising from personal perceptions, both individual and collective.

Enumeration of asset categories is an example of generic cybersecurity content that is exchanged in the OMG Assurance Ecosystem. Chapter 9 provides more details on the transformation of well-defined vocabularies of noun and verb concepts into standard information exchange protocols.
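The two verb concepts "asset category includes asset category" and "asset category includes asset" describe a tree, which can be sketched as follows. The class and method names are hypothetical, the sample assets are invented, and placing the software and information categories under tangible assets is this example's reading of the list above rather than a normative hierarchy.

```python
class AssetCategory:
    """Illustrative asset category; the class and method names are assumptions."""

    def __init__(self, name):
        self.name = name
        self.subcategories = []  # "asset category includes asset category"
        self.assets = []         # "asset category includes asset"

    def include_category(self, category):
        self.subcategories.append(category)
        return category

    def include_asset(self, asset_name):
        self.assets.append(asset_name)

    def all_assets(self):
        """Collect assets of this category and, recursively, of its subcategories."""
        found = list(self.assets)
        for sub in self.subcategories:
            found.extend(sub.all_assets())
        return found


# Sample hierarchy with invented assets.
tangible = AssetCategory("tangible assets")
software = tangible.include_category(AssetCategory("software asset"))
information = tangible.include_category(AssetCategory("information asset"))
software.include_asset("payroll application")
information.include_asset("customer database")

print(tangible.all_assets())
```

A checklist of asset categories exchanged between organizations would carry the category tree only; each system of interest then attaches its own assets to the shared categories.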

5.3.1.1. Characteristics of assets

Assets have value. For some assets it is possible to provide the dollar amount, for example, the cost of replacing a server in case of physical damage; however, in most cases only a qualitative estimate can be provided. Often the value of the asset is the inverse of the injury to the asset.

value

Definition: estimated worth, monetary, cultural or other

Concept type: property

Note: from the vocabulary perspective this is a special noun concept

Note: the methodology for evaluating an asset may vary greatly between different risk analysis methodologies and different teams

cost

Definition: appreciated or depreciated worth of tangible assets; replacement cost

General concept: value

asset has value

Concept type: is-property-of fact type

Note: from the vocabulary perspective, this is a special verb concept

5.3.1.2. Security requirements (confidentiality, integrity, and availability)

The security requirements of confidentiality, integrity, and availability (CIA) enable analysts to express the importance of the affected system asset to a user's organization. That is, if an asset supports a business function for which availability is most important, the analyst can assign a greater value to availability, relative to confidentiality and integrity. According to the NIST CVSS score specification [Schiffman 2004], each security requirement has three possible values: low, medium, or high.

Some risk analysis methodologies use a more abstract measure of “criticality.” However, a separate consideration of the asset's value in terms of losing its CIA is necessary as different safeguards are required to protect the asset against losing each CIA facet. When more primitive measures are selected as the base concepts, it is easier to reach agreement regarding any derived measures.

confidentiality

Definition: the attribute that an asset must not be disclosed to unauthorized individuals because of the resulting impact on national or other interests

General concept: sensitivity

Note: incident related to confidentiality is unauthorized disclosure

integrity

Definition: the attribute that requires the accuracy and completeness of assets and the authenticity of transactions

General concept: sensitivity

Note: accuracy is related to the untampered form of the asset

Note: incident related to integrity is tampering

availability

Definition: the attribute that requires the condition of being usable on demand to support operations, programs, and services

General concept: sensitivity

Note: incident related to availability is interruption and loss

asset has sensitivity

Concept type: is-property-of fact type

asset evaluation

Definition: the process of estimating the value and the sensitivities of a particular asset

Concept type: noun concept

General concept: activity
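The result of asset evaluation, that is, a value plus a sensitivity for each CIA facet, can be recorded as in the sketch below. The class names, the `dominant` helper, and the sample asset are hypothetical; only the three-valued low/medium/high scale comes from the text.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Sensitivity:
    confidentiality: Rating
    integrity: Rating
    availability: Rating

    def dominant(self):
        """Names of the security requirement(s) with the highest rating."""
        ratings = {
            "confidentiality": self.confidentiality,
            "integrity": self.integrity,
            "availability": self.availability,
        }
        top = max(ratings.values())
        return [name for name, rating in ratings.items() if rating == top]


@dataclass
class Asset:
    name: str
    value: str  # qualitative estimate; a monetary amount is possible for some assets
    sensitivity: Sensitivity


# A hypothetical asset supporting a function for which availability matters most.
order_service = Asset(
    "order processing service",
    "high",
    Sensitivity(Rating.LOW, Rating.MEDIUM, Rating.HIGH),
)

print(order_service.sensitivity.dominant())
```

Keeping the three facets separate, rather than collapsing them into one "criticality" figure, preserves the information needed to choose safeguards for each facet.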

5.3.2. Threats and Hazards

Analysis of the definitions used throughout cybersecurity publications demonstrates a certain confusion in the definitions of threat and risk. In order to build a common vocabulary and provide guidance to the threat identification process in system assurance, we can draw some insights from a related body of knowledge developed within the safety community over the last five decades. The safety community has developed several systematic architecture-driven methods of identifying risks related to the safety of systems [Clifton 2005]. Architecture-driven methods focus on the concept of a location within one of the system views (as described in Chapter 4) as the basis for a systematic and repeatable system analysis.

System safety is concerned with the prevention of a safety accident, or mishap, which is defined as “an unplanned event or series of events resulting in death, injury, occupational illness, damage to or loss of equipment or property, or damage to the environment” [MIL-STD-882D]. System safety is built on the premise that mishaps are not random events; instead they are deterministic and controllable events that are the results of a unique set of conditions (i.e., hazards), which are predictable when properly analyzed. A hazard is a potential condition that can result in a mishap or an accident, given that the hazard occurs. A hazard is the precursor to a mishap; a hazard defines a potential event (i.e., a mishap), while a mishap is the occurred event.

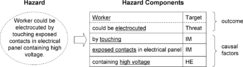

In order to build architecture-driven identification of hazards, system safety considers a hazard as an aggregate entity that involves several basic discernable components (see Figure 3). The components of a hazard define the necessary conditions for a mishap and the end outcome or effect of the mishap. In system safety, a hazard is comprised of the following three basic components [Clifton 2005]:

1. Hazardous element (HE). This is the basic hazardous resource creating the impetus for the hazard, such as a hazardous energy source (e.g., explosives) being used in the system.

2. Initiating mechanism (IM). This is the trigger or initiator event(s) causing the hazard to occur. The IM causes actualization or transformation of the hazard from a dormant state to an active mishap state.

3. Target and Threat (T/T). This is the person or thing that is vulnerable to injury and/or damage, and it describes the severity of the mishap event. This is the mishap outcome and the expected consequential damage and loss.

|

| Figure 3 Components of hazard |

The elements are necessary and sufficient to result in a mishap, which is useful in determining the hazard mitigation:

• When one of these components is removed, the hazard is eliminated.

• When the probability of the IM component is reduced, the mishap probability is reduced.

• When the element in the HE side or the T/T side of the triangle is reduced, the mishap severity is reduced.

Hazards can be described by the so-called hazard statements, based on the elements of the hazard triangle. Consider the following hazard statement: “Worker is electrocuted by touching exposed contacts in electrical panel containing high voltage.”

In this example all three hazard components are present and can be clearly identified as the elements of the system of interest (see Figure 4). In this particular example there are actually two IMs involved. The T/T defines the mishap outcome, while the combined HE and T/T define the mishap severity. The HE and IM are the hazard causal factors that define the mishap probability. If the high-voltage component can be removed from the system, the hazard is eliminated. If the voltage can be reduced to a lower, less harmful level, then the mishap severity is reduced.

|

| Figure 4 Hazard statement |

The causal factors of hazards are the specific items responsible for how a unique hazard exists in a system. Hazards in system safety are unavoidable, in part because hazardous elements must be used in the system, in the same way that security threats are unavoidable because attackers have access to the system through the same channels as used by the legitimate users. Hazards also result from inadequate safety and security considerations—either poor or insufficient design or incorrect implementation of a good design, resulting from the unmitigated effect of hardware failures, human errors, software glitches, or sneak paths.

Once a potentially harmful event is identified, risk is a fairly straightforward concept, defined as Risk = Probability × Severity.

The mishap probability factor is the probability of the hazard components occurring and transforming into the mishap. The mishap severity factor is the overall consequence of the mishap, usually in terms of loss resulting from the mishap (i.e., the undesired outcome). Both probability and severity can be defined and assessed in either qualitative or quantitative terms. Time is factored into the risk concept through the probability calculation of an undesired event, as the duration window of “exposure” during which one of the IMs exists. For example, the risk of an adversary obtaining sensitive information from a 1-minute unencrypted communication may be considered smaller than the risk of a 1-hour unencrypted communication.
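The effect of the exposure window on risk can be illustrated with a minimal sketch. It assumes, purely for illustration, that each minute of unencrypted communication carries the same small, independent chance of interception; the per-minute probability, the severity value, and the function names are hypothetical and do not come from any standard.

```python
def exposure_probability(per_minute_probability: float, minutes: int) -> float:
    """Probability that at least one initiating event occurs during the window,
    assuming independent, equally likely minutes (an illustrative assumption)."""
    return 1.0 - (1.0 - per_minute_probability) ** minutes


def risk(probability: float, severity: float) -> float:
    """Risk = Probability x Severity, as defined in the text."""
    return probability * severity


# Hypothetical figures, chosen only for illustration.
PER_MINUTE_INTERCEPTION = 0.001  # chance of interception in any one minute
SEVERITY = 100_000.0             # loss, in arbitrary units, if data is disclosed

risk_1_minute = risk(exposure_probability(PER_MINUTE_INTERCEPTION, 1), SEVERITY)
risk_1_hour = risk(exposure_probability(PER_MINUTE_INTERCEPTION, 60), SEVERITY)

print(f"1-minute exposure: {risk_1_minute:.1f}")
print(f"1-hour exposure:   {risk_1_hour:.1f}")
```

Under these assumptions the severity is the same in both cases; only the probability factor grows with the length of the exposure window, which is why the longer communication carries the larger risk.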

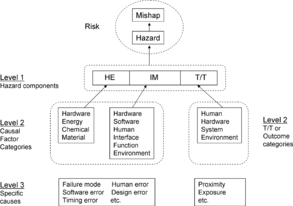

Hazards and mishaps are linked by risk. The three basic hazard components define both the hazard and the mishap. The three basic hazard components can be further decomposed into major hazard causal factor categories, which are: (1) hardware, (2) software, (3) humans, (4) interfaces, (5) functions, and (6) the environment. Finally, the causal factor categories are refined even further into the actual specific detailed causes, such as a hardware component failure mode (see Figure 5).

|

| Figure 5 Hazard causal factors |

In the area of system security, the mishaps are security incidents resulting in the loss of confidentiality, integrity, and/or availability of the assets. Some researchers explicitly add subversion of a system node as a separate incident type. The counterpart of the T/T component is Asset/Injury. A notable difference is that in the area of system security there is no explicit hazardous element. Instead, a typical source of security incidents is the malicious action of the threat agent. On the other hand, security assessment methodologies often consider natural hazards as one of the sources of threats, together with the action of intentional attackers. This demonstrates how close the two models are. Lightning is the source of high voltage (the HE component), which can cause loss of server equipment (the T/T component). Similarly, a hacker is the source of “attack capability” (hazardous element?) that can cause subversion of a system node running an unhardened version of Windows (asset and injury). The initiation mechanisms are practically identical between the safety and security areas, as they provide a cause-and-effect link from the hazardous element (or the threat agent) to the target and injury. The concept of an initiation mechanism is quite close to the concept of “vulnerability” that is used in system security, although there are some important differences that we will point out later. Several authors have already made arguments for combined safety and security assurance, and the term security hazard has been used in several publications.

Note that both a safety hazard and a security threat are deterministic entities (like a mini system, consisting of a unique set of identifiable components that are traceable to the elements of the system of interest). Hazard components either exist or they do not. A mishap, on the other hand, has a certain probability of occurring, based on the probability of the initiating mechanisms, such as human error, component failures, or timing errors. The HE component has a probability of 1 of occurring, since it must be present in order for the hazard to exist.

On the other hand, in system security it is more difficult to determine the probability of the malicious actions by the attacker, and there is less statistical correlation with past historic data because attacker actions are not random and keep evolving.

One of the potential causes of ambiguity in the definitions of “security threat” in cybersecurity is the complex nature of both the causes and consequences of an elementary injury to an asset. This makes “security threat” a complex collection of interrelated facts, and different authors focus on different parts of this phenomenon. The complexity of the security threat can be described using a small number of elementary discernable concepts. The key is to identify an elementary “undesired event” associated with an asset—an “injury” to a specific asset. An “event” is a discernable concept because it is traceable to one or more statements in the code. Then a “security threat” becomes a discernable assembly of a threat agent, an entry point, an asset, and an injury. (Application of the BORO methodology, outlined in Chapter 9, shows that a “threat” is a tuple—a relationship between several noun concepts.) Multiple “events” can be identified as the causes of the “injury.” Similarly, an injury event may cause further damage by causing additional injuries to other assets. Multiple attack scenarios can be associated with the same threat: An attack scenario can be described as a path through the causal graph. Finally, at least one of the causal events must be associated with the entry point of the threat.

This discernable interpretation is consistent with the terminology defined at the beginning of this chapter based on [ISO 13335]. Yet the more conservative definitions are discernable and can be traced to existing system facts, enabling the systematic recognition of threats and causal analysis of the security posture. The rest of this section provides the details of this discernable vocabulary. Figure 6 provides the necessary illustration.

|

| Figure 6 Causes and consequences of threats |
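The reading of a threat as a tuple, with attack scenarios as paths through the causal graph, can be sketched as follows. This is a minimal illustration, not part of the vocabulary itself: the event names, the graph, and the helper `attack_scenarios` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threat:
    """A threat as a tuple of the four discernable components named in the text."""
    threat_agent: str
    entry_point: str
    asset: str
    injury: str


# Hypothetical causal graph: each event maps to the events it can cause.
CAUSES: Dict[str, List[str]] = {
    "malformed login request": ["authentication check bypassed"],
    "authentication check bypassed": ["database query executed"],
    "report request": ["database query executed"],
    "database query executed": ["customer records disclosed"],
}

# Causal events located at entry points, and the elementary undesired event.
ENTRY_EVENTS = ["malformed login request", "report request"]
THREAT_EVENT = "customer records disclosed"


def attack_scenarios(causes, entry_events, threat_event):
    """Enumerate every simple path from an entry-point event to the threat event."""
    scenarios = []

    def walk(event, path):
        if event == threat_event:
            scenarios.append(path)
            return
        for next_event in causes.get(event, []):
            if next_event not in path:  # do not revisit events (avoids cycles)
                walk(next_event, path + [next_event])

    for entry in entry_events:
        walk(entry, [entry])
    return scenarios


threat = Threat("external hacker", "login form", "customer records", "disclosure")
scenarios = attack_scenarios(CAUSES, ENTRY_EVENTS, THREAT_EVENT)
for path in scenarios:
    print(" -> ".join(path))
```

Each printed path starts at a causal event associated with an entry point and ends at the threat event, so several scenarios can be attached to the same threat.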

5.3.3. Defining discernable vocabulary for injury and impact

Information security is about protecting information and information systems from unauthorized access, use, disclosure, disruption, modification, or destruction in order to provide:

1. Confidentiality, which means preserving authorized restrictions on access and disclosure, including means for protecting personal privacy and proprietary information;

2. Integrity, which means guarding against improper information modification or destruction, and includes ensuring information nonrepudiation and authenticity;

3. Availability, which means ensuring timely and reliable access to and use of information.

Certain events result in injury, such as “unauthorized use, disclosure, disruption, modification, or destruction of information and information systems.”

| injury | |

| Definition: | the damage that results from the compromise of assets |

| Note: | Injury is elementary damage that can be traced to system |

| Note: | in non cyber scenarios a physical access to the asset may be the prerequisite of injuries to the asset |

| Concept type: | noun concept |

| Synonym: | harm |

| Note: | impact is non elementary, cumulative damage |

| injury targets asset | |

| Concept type: | verb concept |

| injury targets asset category | |

| Concept type: | verb concept |

| Note: | This results in generic injury checklists |

| threat event | |

| Definition: | the event that results in compromise to assets |

| Synonym: | undesired event |

| Note: | threat event is an elementary event that can be traced to system |

| Note: | impact is a collection of threat events associated with a given initial threat event |

| threat event causes injury to asset | |

| Concept type: | verb concept |

| threat event causes threat event | |

| Concept type: | verb concept |

| threat event has impact | |

| Definition: | the state of affairs that injuries caused by threat event collectively comprise impact |

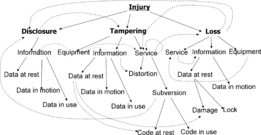

Enumeration of possible injuries is an example of generic cybersecurity content that is exchanged in the OMG Assurance Ecosystem. Chapter 9 provides more details on the transformation of well-defined vocabularies of noun and verb concepts into standard information exchange protocols. Here are some examples in which we describe pairs of injury/asset category.

Injuries related to confidentiality: disclosure of information assets, which can be further subdivided into disclosure of data at rest, disclosure of data in motion, disclosure of data in use, and disclosure of data in facilities (“dumpster diving”) and equipment (recovering sensitive information from a disposed hard drive or from a stolen laptop).

Injuries related to integrity:

• Tampering with equipment, facilities;

• Tampering with information assets;

• Tampering with service;

• Subversion of a system node.

Injuries related to availability: partial or full loss of equipment, service, information asset, facility, personnel. Figure 7 illustrates impact statements. It shows several exemplary injury/asset category pairs (solid lines) and then uses the dotted lines to show some impacts, portraying possible causal relationships between injuries.

The relationships shown in Figure 7 can be verbalized as follows:

• Disclosure of information causes tampering with information (e.g., when user login credentials are compromised).

• Tampering with equipment causes tampering with information (e.g., due to malfunction).

• Tampering with equipment causes disclosure of information (e.g., a telephone bug).

• Tampering with equipment causes tampering with service (both a distortion and subversion).

• Tampering with information causes distortion of service (a fancy way to say “garbage in–garbage out”).

• Subversion of service causes disclosure of information (e.g., a typical spybot scenario, when a trojan installs a keylogger and exports sensitive information, such as financial account information and credentials).

• Subversion of service causes subversion of service (i.e., further service, subverting other computers on the network).

• Subversion of service causes loss (of service, information).

• Loss of information causes tampering with service (e.g., when records are deleted).

• Loss of service causes tampering with service (e.g., when a protection mechanism is disabled).

• Loss of equipment causes disclosure of information (e.g., from a stolen USB stick).

• Loss of equipment causes loss of service.

|

| Figure 7 Injury and impact |
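Since impact is the cumulative damage that follows from an initial injury, it can be computed as the set of injuries reachable through the causal relationships verbalized above. The sketch below encodes those relationships as a graph. As a simplifying assumption, “distortion of service” is folded into “tampering with service”. The dictionary layout and the function name `impact` are illustrative only.

```python
from collections import deque

# Causal relationships between injuries, transcribed from the list above.
CAUSES = {
    "disclosure of information": ["tampering with information"],
    "tampering with equipment": [
        "tampering with information",
        "disclosure of information",
        "tampering with service",
    ],
    "tampering with information": ["tampering with service"],
    "subversion of service": [
        "disclosure of information",
        "subversion of service",  # subverting further services on the network
        "loss of service",
        "loss of information",
    ],
    "loss of information": ["tampering with service"],
    "loss of service": ["tampering with service"],
    "loss of equipment": ["disclosure of information", "loss of service"],
}


def impact(initial_injury):
    """Return every injury that can follow, directly or indirectly, from the initial one."""
    consequences = set()
    queue = deque([initial_injury])
    while queue:
        current = queue.popleft()
        for consequence in CAUSES.get(current, ()):
            if consequence != initial_injury and consequence not in consequences:
                consequences.add(consequence)
                queue.append(consequence)
    return consequences


for injury in sorted(impact("loss of equipment")):
    print(injury)
```

A breadth-first traversal is used here only because it is simple; any reachability computation over the same facts would serve.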

5.3.4. Defining discernable vocabulary for threats

| threat | |

| Definition: | a set of potential incidents in which a threat agent causes a threat event to an asset using a specific entry point into the system |

| Concept type: | noun concept |

| Note: | a threat event is the key concept. This is a specific incident customized for the given system. A threat event can belong to several abstract groups (threat activity and threat class) which provide means to manage knowledge about threats and build reusable libraries of threats |

| threat causes injury to asset | |

| threat activity | |

| Definition: | a generic group of threats with common consequences or outcomes |

| Example: | sabotage |

| Note: | threat activity is used to build checklists of threats |

| threat event belongs to threat activity | |

| Synonym: | threat activity includes threat event |

| Necessity: | threat event belongs to zero or more threat activities |

| threat class | |

| Definition: | a generic group of threat activities with common characteristics |

| Example: | deliberate threat |

| Note: | threat class captures reusable categories of threat activities |

| threat activity belongs to threat class | |

| Necessity: | threat activity belongs to zero or more threat classes |

| threat event is accidental | |

| Definition: | unplanned threats caused by human beings |

| threat event is deliberate | |

| Definition: | planned or premeditated threats caused by human beings. |

| threat event is a natural hazard | |

| Definition: | threats caused by forces of nature. |

| Example: | power failure |

| threat affects asset | |

| Necessity: | threat event affects one or more assets |

| threat agent category | |

| Definition: | a subdivision of threat activity, intended to focus on deliberate threats with common motivation or accidental threats and natural hazards with similar causal factors |

| threat agent | |

| Definition: | an identifiable organization, individual or type of individual posing deliberate threats, or a specific kind of accident or natural hazard |

| Synonym: | threat source, attacker, adversary |

| threat agent causes threat | |

| Necessity: | each threat agent causes at least one threat |

| threat agent belongs to threat agent category | |

| Synonym: | threat agent category includes threat agent |

| Necessity: | threat agent belongs to one or more threat agent categories |

| threat agent category engages in threat agent activity | |

| Necessity: | threat agent category engages in one or more threat agent activities |

5.3.5. Threat scenarios and attacks

| threat scenario | |

| Definition: | a detailed chronological and functional description of an actual or hypothetical threat intended to facilitate risk analysis by creating a confirmed relationship between an Asset of value and a threat agent having motivation toward that asset and having the capability to exploit a vulnerability found in the same asset |

| Note: | a threat scenario occurs when a threat agent takes action against an asset by exploiting vulnerabilities within the system |

| threat scenario describes threat | |

| Necessity: | threat scenario describes exactly one threat |

| attack | |

| Definition: | sequence of actions that involve interaction with system and that results in threat event |

| Note: | the system must allow injury; the attack forces system into producing injury |

| Note: | attack may involve physical access to an asset |

| Note: | attack involves malicious intent |

| Note: | attack involves particular entry point, which is an attribute of the interactions with the system; attack may involve more than one entry point |

| attack results in injury | |

| Definition: | state of affairs that the attack resulted in injury |

| Note: | the system must allow injury; the attack forces system into producing injury |

| Synonym: | attack produces injury |

| attack has impact | |

| Definition: | state of affairs that attack resulted in injuries that collectively comprise impact |

| attack targets asset | |

| Definition: | state of affairs that an attack produces injury to asset |

| Note: | there is some object that is valuable to the attacker; this is the attacker's viewpoint |

| Synonym: | attack injures asset |

| Note: | attack may impact additional asset as ‘collateral damage’; however in order to predict attacks, it is important to understand motivation of the attacker |

5.3.6. Defining discernable vocabulary for vulnerabilities

| vulnerability | |

| Definition: | an attribute of a system or the environment in which it is located that allows a threat event, or increases the severity of the impact |

| Note: | an inadequacy related to security that could permit an attacker to produce injury |

| Note: | an actual flaw or inadequacy related to a specific safeguard or a missing safeguard that could expose employees, assets, or service delivery to injury |

| Note: | a vulnerability is a characteristic, attribute, or weakness of any asset within a system or environment that increases the probability of a threat event occurring or the severity of its effects causing harm (in terms of confidentiality, availability, and/or integrity). The presence of vulnerability does not in itself produce injury; vulnerability is merely a condition or a set of conditions that could allow assets to be injured by a threat agent |

| system has vulnerability | |

| Definition: | state of affairs that the vulnerability is an attribute of the system or the environment of the system |

| Note: | the fact that vulnerability has ‘location’ in the system is vital to systematic detection and mitigation of vulnerabilities |

| vulnerability is exploited by threat agent | |

| Definition: | state of affairs that the attacker performs an attack that results in injury and that is enabled by the vulnerability |

| Note: | the presence of vulnerability in the system does not result in injury unless the vulnerability is exploited by the attacker in the course of interactions with the system |

| Note: | ‘exploitation’ means that injury is produced by the system, where the attacker forces the system into producing such injury |

| Note: | attacker is a simple case of a more general concept of a threat agent that may also include forces of nature and other unintentional events known as hazards |

| vulnerability enables attack | |

| Definition: | state of affairs that the vulnerability is involved in interactions with the system that constitute the attack |

| Synonym: | attack is enabled by vulnerability |

| Possibility: | attack is enabled by one or more vulnerabilities |

| vulnerability exposes asset | |

| Definition: | state of affairs that the vulnerability enables an attack that produces injury to the asset |

| Note: | injury to the asset is only done as the result of a successful attack; the presence of a vulnerability does not in itself result in any injury to the asset |

| vulnerability-1 exposes vulnerability-2 | |

| Definition: | state of affairs that the vulnerability-1 enables an attack that reduces the effectiveness of a safeguard to another vulnerability-2 |

| Note: | injury to the asset is only done as the result of a successful attack; the presence of the vulnerability-1 or vulnerability-2 does not in itself result in any injury to the asset |

| Note: | vulnerability-1 indirectly exposes asset; injury to asset cannot be produced by an attack on vulnerability-1 because it is prevented by safeguard; however, injury may be produced by a combination of attack on the safeguard that is enabled by the vulnerability-2, followed by attack on the asset itself |

| vulnerability has impact | |

| Definition: | state of affairs that the attack that is enabled by the vulnerability has impact |

| Note: | vulnerability is associated with impact only through an attack; this association may be quite complex, since a particular attack may be enabled by more than one vulnerability |

| vulnerability has severity | |

| Definition: | a metric of vulnerability that enables prioritization of vulnerabilities for the purpose of mitigation |

| Note: | the Common Vulnerability Scoring System (CVSS), one of the specifications used within NIST SCAP, defines a standard approach to evaluating the severity of a vulnerability, expressed as a CVSS ‘score’ |

| vulnerability class | |

| Definition: | a generic group of vulnerabilities based on the broad security policy requirements |

| Note: | vulnerability class is important for managing knowledge of vulnerabilities |

| vulnerability group | |

| Definition: | a subdivision of vulnerability class, intended to capture all vulnerabilities associated with a related group of safeguards |

| Note: | vulnerability group is important for managing knowledge of vulnerabilities |

| vulnerability belongs to vulnerability group | |

| vulnerability group belongs to vulnerability class | |

Since vulnerabilities are key to system assurance and risk management, Chapters 6 and 7 explore this subject in greater detail.

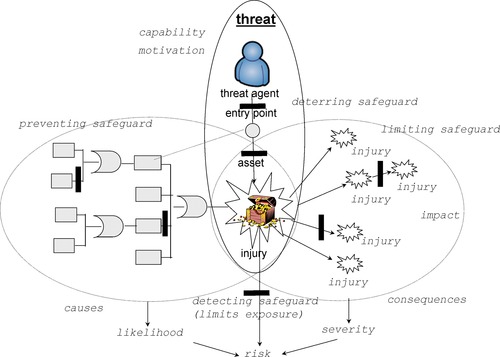

5.3.7. Defining discernable vocabulary for safeguards

| safeguard | |

| Definition: | practices, procedures or mechanisms that reduce the risk to personnel, assets, or service delivery by decreasing the likelihood of a threat event, reducing the probability of occurrence of the threat event, or mitigating the severity of the impact of the threat event |

| Synonym: | countermeasure |

| Synonym: | security control |

| Note: | there are eight categories of safeguards based on their interaction with the threat agent, vulnerability or asset |

safeguard mitigates vulnerability

Synonym: safeguard corrects vulnerability

safeguard protects asset

safeguard deters threat agent

safeguard detects attack

safeguard prevents threat event

safeguard limits impact of threat event

safeguard monitors threat event

safeguard recovers asset

safeguard is effective against threat event

The “bow-tie diagram” (showing causes of the threat to the left and consequences of the threat to the right) is a useful tool to analyze safeguards. Figure 9 shows preventing safeguards placed at particular branches of the causal tree; limiting safeguards placed at particular branches of the consequence tree; a deterring safeguard that lowers the motivation of the attacker; a preventive safeguard that hardens the system by eliminating an entry point (e.g., disconnecting an unauthorized modem or turning off an unused service to shrink the so-called attack surface); and a detecting safeguard (at the bottom), which reduces the risk by limiting exposure of an injury or exposure to a vulnerability. Corrective safeguards may also reduce the vulnerability exposure (e.g., a spring that forces an open door to close, reducing exposure) or undo the injury (e.g., by restarting the system or restoring the lost or corrupted information from backup). Finally, detecting and monitoring safeguards alert defenders to ongoing threat activities, which reduces risk by shrinking the window of exposure to the attack. Some safeguards raise security awareness and thus contribute to hardening secure operational procedures.

|

| Figure 9 Threats and Safeguards |

5.3.8. Risk

| risk | |

| Definition: | the measure of the probability of occurrence and the severity of impact of a specific threat |

| Synonym: | original risk |

| Note: | threat event causes specified injury resulting from natural hazards, accidental threats, or a deliberate threat agent, having motivation toward an asset and the capability to exploit a vulnerability of the asset (or the system containing an information asset), to successfully compromise the asset. Risk is the measure of the likelihood of the causes of the threat and severity of the impact of the threat |

| threat has risk | |

| Concept type: | property-of-fact type |

| impact | |

| Definition: | a description of the cumulative effects on the system resulting from injury to employees or assets arising from a given threat event |

| Synonym: | Consequence |

| threat event produces injury | |

| Concept type: | verb concept |

| Necessity: | threat event has zero or more injuries |

| Note: | threat event may produce injuries directly or indirectly; the combined injuries comprise impact |

| Causal events | |

| Definition: | set of events within the system culminating in a given threat event resulting in the injury to employees or assets |

| Note: | some causal events are at the boundary of the system, which makes them controllable by the threat agent; other causal events are internal to the system; some causal events are failures in system functions (that either occur naturally or are caused by other threats) |

| likelihood | |

| Definition: | a measure of the probability of a threat event determined by the probability of the causal events occurring within the given operational environment |

| threat has likelihood | |

| Concept type: | property-of-fact type |

| Necessity: | threat has exactly one likelihood |

| threat has impact | |

| Concept type: | property-of-fact type |

| Necessity: | threat has exactly one impact |

| risk assessment | |

| Definition: | the process of estimating the risk of a particular threat and the corresponding set of assets, and vulnerabilities with confirmed relationships to each other |

| risk assessment involves threat | |

| Necessity: | it is obligatory that risk assessment includes exactly one threat |

| Synonym: | threat event of the risk assessment |

| risk assessment involves asset | |

| Necessity: | it is obligatory that risk assessment includes one or more assets that are affected by the threat of the risk assessment |

| Synonym: | business object of the risk assessment |

| risk assessment involves vulnerability | |

| Possibility: | it is possible that risk assessment includes one or more vulnerabilities that are exploited by the threat of the risk assessment |

| Synonym: | vulnerability of the risk assessment |

| risk assessment involves threat agent | |

| Necessity: | it is obligatory that risk assessment includes exactly one threat agent that causes the threat of the risk assessment |

| Synonym: | threat agent of the risk assessment |

| risk assessment calculates risk | |

| system has risk | |

| Definition: | the risks of the system are the set of risks of all threats to the system |

| system has aggregated risk | |

| Definition: | the risk of the system is the cumulative risk of all threats to the system |

The fact-oriented discernable vocabulary for threat identification allows management of the information during the threat and risk analysis process in a fact-based repository instead of, for example, a spreadsheet. In particular, verb concepts related to “risk assessment” correspond to typical entries of a TRA spreadsheet [RCMP 2007], [Sherwood 2005]. This fact-oriented vocabulary allows the use of automated tools for risk analysis, and allows integration of the threat and risk facts into the integrated system model, as described in Chapter 3.
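As a rough illustration of how the risk vocabulary maps onto a fact-based record rather than a spreadsheet row, the sketch below models one risk assessment with its threat, threat agent, assets, and optional vulnerabilities. The class, its field names, the sample values, and the choice of a simple sum for the aggregated risk are all assumptions made for this example; the vocabulary itself says only that aggregated risk is cumulative.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RiskAssessment:
    """One risk assessment: exactly one threat and one threat agent,
    one or more assets, and zero or more vulnerabilities."""
    threat: str
    threat_agent: str
    assets: List[str]
    likelihood: float        # probability of the threat event, 0.0 to 1.0
    impact_severity: float   # severity of the impact, in arbitrary units
    vulnerabilities: List[str] = field(default_factory=list)

    def __post_init__(self):
        # Enforce the "one or more assets" necessity from the vocabulary.
        if not self.assets:
            raise ValueError("a risk assessment must involve one or more assets")
        if not 0.0 <= self.likelihood <= 1.0:
            raise ValueError("likelihood must be a probability between 0 and 1")

    def risk(self) -> float:
        """Risk as likelihood times severity of impact."""
        return self.likelihood * self.impact_severity


# Hypothetical assessments for a hypothetical system.
assessments = [
    RiskAssessment("disclosure of customer records", "external hacker",
                   ["customer records"], 0.5, 40.0, ["unfiltered query input"]),
    RiskAssessment("loss of order service", "power failure",
                   ["order service"], 0.25, 80.0),
]

system_risks = [assessment.risk() for assessment in assessments]
aggregated_risk = sum(system_risks)  # summation is an assumption of this sketch

print(system_risks, aggregated_risk)
```

Keeping each assessment as a typed record makes the necessities of the vocabulary checkable by a tool, which is harder to guarantee for free-form spreadsheet cells.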

5.4. Systematic threat identification

The systematic threat identification process is essential for producing stronger claims for the assurance case and building defendable assurance arguments and evidence. One of the characteristics of the threat identification process that is specific to its use in system assurance is the set of activities that lead to assurance of the process itself: performing verification and validation tasks, and collecting evidence that justifies the completeness of the list of identified threats, that is, that all relevant threats have been identified.

Threat identification is the cognitive process of matching the components of the threat against the multitude of system facts (available as the integrated system model, as described in Chapter 3).

Systematic, repeatable, and objective identification of threats involves the “security threat” patterns that utilize “security threat” components as follows:

• Use threat activity and threat agent category checklists;

• Evaluate trigger events and causal factors;

• Use asset category checklists;

• Use checklists to identify injuries to assets;

• Evaluate possible assets and injuries;

• Evaluate injury events for consequential impact;

• Evaluate system facts to systematically identify entry points.

In addition, security threat identification can use key failure state questions and evaluations of the threat-triggering mechanisms.

The discernable threat concept provides the best threat recognition resource by evaluating individually each of the four threat component categories (threat agent, entry point, asset, and injury) against the system facts. This means, for example, identifying and evaluating all of the unique entry point components in the system design as the first step [Swiderski 2004]. Subsequently, all system assets are evaluated for injuries and undesired events, then all causal factors.
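The component-by-component evaluation can be sketched as an enumeration of candidate threats from checklists and system facts. In this minimal example the checklists, the system facts, and the `reaches` relation (which entry point can reach which asset) are hypothetical stand-ins for content that would come from accredited catalogs and from the integrated system model.

```python
from itertools import product

# Hypothetical checklist content (normally imported from shared catalogs).
THREAT_AGENT_CHECKLIST = ["external hacker", "disgruntled insider"]
INJURY_CHECKLIST = {
    "information": ["disclosure", "tampering", "loss"],
    "service": ["tampering", "loss"],
}

# Hypothetical system facts (normally derived from the integrated system model).
SYSTEM_FACTS = {
    "entry_points": ["web login form", "admin console"],
    "assets": {"customer records": "information", "order service": "service"},
    "reaches": {
        ("web login form", "customer records"),
        ("admin console", "customer records"),
        ("admin console", "order service"),
    },
}


def candidate_threats(agents, injuries_by_category, facts):
    """Combine the four threat components, keeping only combinations the system facts support."""
    threats = []
    for agent, entry_point in product(agents, facts["entry_points"]):
        for asset, category in facts["assets"].items():
            if (entry_point, asset) not in facts["reaches"]:
                continue  # no path from this entry point to this asset
            for injury in injuries_by_category.get(category, []):
                threats.append((agent, entry_point, asset, injury))
    return threats


threats = candidate_threats(THREAT_AGENT_CHECKLIST, INJURY_CHECKLIST, SYSTEM_FACTS)
print(len(threats), "candidate threats")
```

Because the enumeration is driven entirely by the checklists and facts, rerunning it on the same inputs yields the same list, which is what makes the identification repeatable and lets the list itself serve as evidence of coverage.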

Threat agent category and threat activity checklists are examples of cybersecurity content that is key to the justifiable identification of the threat agents of the security threats and further evaluation of the likelihood of the threat based on the capabilities and motivations of the identified threat agent. This is similar to using the industry standard hazardous source checklists, such as explosives, fuel, batteries, electricity, acceleration, and chemicals to identify safety hazards. System components that match the elements of one of the hazard source checklists may lead to identification of a potential safety hazard in the system. In the area of cybersecurity, threat agents are external to the system, so this knowledge cannot be turned into patterns that can be recognized in the system facts, but they are nevertheless important for systematic threat identification. Once the four threat components have been identified, knowledge of attack patterns can be used to further investigate the possible attack scenarios. Accumulating and exchanging the certified machine-readable threat agent category and threat activity checklists is required for distributing cyberdefense knowledge across the defender community.

Threats can be recognized by focusing on known or preestablished undesired events (the injury to asset component of a security threat). This means considering and evaluating known undesired events within the system. By following these undesired events backward, certain threats can be more readily recognized. A similar systematic approach is used in system safety for systematic identification of safety hazards [Clifton 2005]. For example, a missile system has certain undesired events that are known right from the conceptual stage of system development. In the design of missile systems, it is well accepted that an inadvertent missile launch is an undesired mishap. Therefore any conditions contributing to this event would formulate a hazard, such as autoignition, switch failures, and human error.

Another method for recognizing threats is through the use of key state questions. This method involves a set of clue questions that must be answered, each of which can trigger the recognition of a threat. The key states are potential states or ways the subsystem could fail or operate incorrectly and thereby result in creating a threat. For example, when evaluating each subsystem, answering the question “What happens when the subsystem fails to operate?” may lead to recognition of a security threat. A similar approach is the foundation for the HAZOP technique for systematic safety hazard identification.

Certain threats can be recognized by focusing on known causal threat-triggering mechanisms. In cybersecurity, many threats involve the control- and data-flow path from some entry point to the “point of injury,” which is a particular location in the system capable of producing potential injury, together with the common safeguards along that path in the form of data filters. In particular, this approach focuses on known safeguards and their components, and on the possibilities of bypassing them. Similar techniques are applied in system safety. For example, in the design of aircraft it is common knowledge that fuel ignition sources and fuel leakage sources are initiating mechanisms for fire/explosion hazards. Therefore, systematic safety hazard recognition benefits from a detailed review of the design for ignition sources and leakage sources when fuel is involved. Component failure modes and human error are common triggering modes for both safety hazards and security threats, for example, when the system design indicates a human decision point used as a safeguard that mitigates a certain triggering mechanism.
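The search for safeguard bypass can be illustrated as path enumeration over data-flow facts. The sketch below is a simplified assumption of how such facts might be represented: the `FLOWS` graph, the `SAFEGUARDS` set, and all node names are hypothetical, and a real analysis would work on facts extracted from the system of interest.

```python
# Hypothetical data-flow facts: node -> nodes that receive its data.
FLOWS = {
    "web_form": ["input_filter", "legacy_handler"],
    "input_filter": ["query_builder"],
    "legacy_handler": ["query_builder"],
    "query_builder": ["database"],
}
# Hypothetical set of nodes known to act as data filters.
SAFEGUARDS = {"input_filter"}


def unfiltered_paths(entry, injury, flows=FLOWS, safeguards=SAFEGUARDS):
    """Enumerate simple paths from entry to injury that pass no safeguard."""
    results = []

    def walk(node, path):
        if node in safeguards:
            return  # this branch is filtered, so it is not a bypass
        if node == injury:
            results.append(path)
            return
        for nxt in flows.get(node, []):
            if nxt not in path:  # keep paths simple (no revisits)
                walk(nxt, path + [nxt])

    walk(entry, [entry])
    return results


if __name__ == "__main__":
    for path in unfiltered_paths("web_form", "database"):
        print(" -> ".join(path))
```

In this toy graph the only reported path runs through `legacy_handler`, which is exactly the kind of route around a data filter that the approach is meant to surface.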

Use of the integrated system model allows automation of several tasks of systematic threat identification, based on the central concept of a vertical traceability link, as follows. The integrated system model contains detailed system facts (automatically derived by knowledge discovery tools from system artifacts such as binary and source code) as well as high-level threat facts (identified and imported into the integrated system model, such as threat agents and asset classes). The high-level threat facts are linked to the low-level system facts through chains of vertical traceability links. Entry points, physical assets (especially the information assets and capability assets), and “points of injury” can be identified by automated pattern recognition against the detailed system facts, and then propagated to the high-level threat facts by using vertical traceability links. As with the checklists for identifying external components of threats, accumulating and exchanging certified machine-readable patterns is required for distributing cyberdefense knowledge across the defender community. The key enabler for the exchange of cybersecurity patterns is the availability of a standard protocol for exchanging system facts, supporting traceability links, and integrating multiple vocabularies, because both the facts about the system of interest (extracted by knowledge discovery tools) and the patterns must use the same protocol and the same conceptual commitment to the predefined common vocabulary. Cybersecurity patterns are further addressed in Chapter 7. The standard protocol for exchanging system facts is described in Chapter 11. The underlying mechanisms for fact-based integration of multiple vocabularies are described in Chapter 9. Finally, Chapter 10 describes the standard protocol for managing and exchanging vocabularies and defining new patterns. These components provide the foundation for the OMG Assurance Ecosystem described in more detail in Chapter 8.
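The propagation of low-level matches to high-level facts through vertical traceability links can be sketched as follows. This is an illustration only and does not reflect the standard protocols discussed in later chapters: the `TRACE_UP` table, the fact strings, and the use of a plain substring as the "pattern" are all simplifying assumptions.

```python
# Hypothetical vertical traceability links: fact -> next higher-level element.
TRACE_UP = {
    "recv() call in net.c": "NetworkListener module",
    "NetworkListener module": "Remote command interface",
    "fopen() call in cfg.c": "ConfigLoader module",
    "ConfigLoader module": "Configuration interface",
}


def lift(fact, trace_up=TRACE_UP):
    """Follow vertical traceability links upward to the top-level element."""
    visited = {fact}
    while fact in trace_up:
        fact = trace_up[fact]
        if fact in visited:
            raise ValueError("cyclic traceability links")
        visited.add(fact)
    return fact


def conceptual_entry_points(system_facts, pattern, trace_up=TRACE_UP):
    """Match a pattern against low-level facts and lift each match."""
    # A substring test stands in for real pattern recognition here.
    matched = [fact for fact in system_facts if pattern in fact]
    return sorted({lift(fact, trace_up) for fact in matched})


if __name__ == "__main__":
    print(conceptual_entry_points(list(TRACE_UP), "recv("))
```

The point of the sketch is the division of labor: recognition happens against detailed facts, while the result is reported in the vocabulary of the high-level model.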

5.5. Assurance strategies

Let's look at threat identification in the context of the system assurance strategy. First, we stress that assurance is a complex collection of activities throughout the life cycle of the system that contributes to governance of the system [ISO 15288]. This determines the overall system assurance strategy, including the integration of assurance activities with other system life cycle processes and the scope of individual system assurance projects. These considerations lead to the particular structure of the assurance case, which is tailored to the governance needs of the life cycle of the system of interest. However, within the complex mosaic of system life cycle processes, the assurance activities follow certain steps based on the logical dependencies between inputs and outputs of these steps, as outlined in Chapter 3. The central goal of the assurance case developed in Chapter 3 is the so-called safeguard effectiveness claim. This claim involves one or more threats. The assurance strategy provides guidance on how to manage multiple safeguard effectiveness claims within the assurance case. With this understanding, systematic threat identification involves several distinct approaches, based on the particular threat component that is identified first. The selected strategy determines the structure of the collection of threats and consequently determines the detailed structure of the assurance argument and the goal structure of the assurance activities. Although multiple approaches are possible, the following five strategies are noteworthy:

• Injury argument (structure assurance argument by injuries to assets);

• Entry point argument (structure assurance argument by various entry points into the system);

• Threat activity argument (structure assurance argument by known threat categories, threat activities, and threat agent categories);

• Vulnerability argument (structure assurance argument by known vulnerabilities and weaknesses);

• Security requirements argument (structure assurance argument by the security requirements).
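The way a selected strategy determines the structure of the collection of threats can be illustrated by grouping the same threat records by different components. The records, field names, and the `structure_by` function below are hypothetical and serve only as a minimal sketch.

```python
from collections import defaultdict

# Hypothetical threat records; identifiers and field names are assumptions.
THREATS = [
    {"id": "T1", "injury": "disclosure of customer data",
     "entry_point": "web form", "activity": "injection"},
    {"id": "T2", "injury": "disclosure of customer data",
     "entry_point": "admin console", "activity": "credential theft"},
    {"id": "T3", "injury": "loss of service",
     "entry_point": "web form", "activity": "flooding"},
]


def structure_by(threats, component):
    """Group threat identifiers by the chosen threat component."""
    groups = defaultdict(list)
    for threat in threats:
        groups[threat[component]].append(threat["id"])
    return dict(groups)


if __name__ == "__main__":
    # The same threats yield different argument structures per strategy.
    print(structure_by(THREATS, "injury"))
    print(structure_by(THREATS, "entry_point"))
```

Each resulting group would correspond to one branch of the assurance argument under the chosen strategy, so the injury and entry point strategies organize the same three threats into different branches.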

5.5.1. Injury argument

The injury argument considers various undesired events (threat events), namely failures of assets and loss of assets, and how they affect the mission of the system. Undesired events can, in turn, be structured by assets and asset types. Undesired events are used to identify the “points of injury” in the system, which are specific locations in the system views (including the structure views as well as functional views) that are capable of producing injury to identified assets and are therefore components of one or more individual security threats. The injury argument proceeds by identifying the safeguards in relation to how each undesired event is managed (deterred, prevented, detected, and reduced; see Figure 9). The injury argument justifies the selection of the safeguards. If the resulting justification is weak and cannot be supported by defendable evidence, recommendations for additional safeguards for the corresponding system locations can be provided as feedback to the system engineering process. The injury strategy was used in the assurance case in Chapter 3.

5.5.2. Entry point argument

Entry point argument starts with the system entry points. This argument is warranted by the claim that all entry points are correctly identified. Potential issues related to knowledge of the entry points include hidden entry points (through the platform); hidden behaviors (in the platform); and interaction with the platform (incomplete facts related to behavior). Accurate information about the entry points can be acquired using a bottom-up approach. This approach starts with the implementation-level system facts to identify the physical entry points based on the well-known patterns determined by the underlying runtime platform and then traces them back to the conceptual entry points using the vertical traceability links. This approach usually provides very reliable, accurate information and therefore generates defendable evidence. This claim is further supported by the side argument justifying the accuracy and completeness of the implementation-level system facts (using evidence related to the properties of the knowledge discovery tools, the activities and transparency of the knowledge discovery process used, as well as the qualifications of the personnel running the knowledge discovery tools).

The argument then addresses each entry point separately. It identifies the behaviors related to the particular entry point, builds the behavior graph, and then assesses how effective the safeguards are in mitigating the undesired behaviors. This argument does not systematically enumerate the assets and injuries. Therefore, further assurance can be produced by cross-correlating the resulting list of threats with the systematically produced list of injuries and/or the systematic inventory of assets to produce evidence that all high-impact behaviors have been addressed.
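The cross-correlation step amounts to checking that every item of a systematically produced inventory is referenced by at least one identified threat. The following sketch assumes hypothetical threat records and inventories; the `uncovered` function and all data values are illustrative.

```python
# Hypothetical threats produced by the entry point argument.
IDENTIFIED_THREATS = [
    {"id": "T1", "asset": "customer records", "injury": "disclosure"},
    {"id": "T2", "asset": "order service", "injury": "loss of service"},
]
# Hypothetical inventories produced independently and systematically.
ASSET_INVENTORY = ["customer records", "order service", "audit log"]
INJURY_LIST = ["disclosure", "loss of service", "tampering"]


def uncovered(inventory, threats, key):
    """Return inventory items that no identified threat refers to."""
    covered = {threat[key] for threat in threats}
    return sorted(set(inventory) - covered)


if __name__ == "__main__":
    print("assets without threats:",
          uncovered(ASSET_INVENTORY, IDENTIFIED_THREATS, "asset"))
    print("injuries without threats:",
          uncovered(INJURY_LIST, IDENTIFIED_THREATS, "injury"))
```

An empty result can be cited as evidence of coverage; a nonempty result points the analyst to assets or injuries that the entry point argument has not yet reached.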

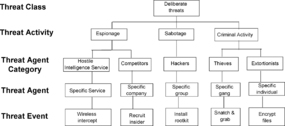

5.5.3. Threat argument

Threat argument starts with the preexisting catalog of threat categories from which threats to similar systems are selected. The initial list of threats is extended by system-specific threats based on the particular mission and the corresponding environment. The list of threats can be structured by the threat classes, threat activities, and threat agent categories (as illustrated in Figure 8). Then the safeguards are identified, and their effectiveness is evaluated to understand how well they mitigate the identified threats and whether any vulnerability can be identified by detecting a particular threat that is not adequately mitigated by safeguards.

Figure 8. Illustration of threat and threat agent grouping

The issue with this approach is the fundamental uncertainty of our knowledge of threats. One can be systematic, however, and construct a reasonable threat profile that can be further improved by additionally considering assets, impacts, and vulnerabilities. Accumulating cybersecurity knowledge in the form of machine-readable checklists created and updated by experts can significantly improve the rigor of security evaluations across the defender community. Completeness of the list of threats can be justified by the use of validated checklists, the use of qualified and experienced personnel, and cross-correlation of the identified threats with a systematic inventory of assets and injuries, including violations of system-specific security policy. This approach is not restricted to a catalog of known vulnerabilities, so it operates more from first principles and can identify violations of security policy specific to the system of interest.

5.5.4. Vulnerability argument

The vulnerability argument starts with known vulnerabilities and weaknesses and proceeds to identifying safeguards and justifying that all identified vulnerabilities are adequately mitigated by the safeguards, commensurate with the security criteria. This strategy is based on availability of the following machine-readable content:

• Known vulnerabilities in off-the-shelf system elements;

• Known patterns of the “points of injury”;

• Known patterns related to the potential causal factors of threats;

• Known safeguard inefficiency patterns.

The advantage of the vulnerability-centric strategy is that it aligns with follow-up risk mitigation activities that are driven by the identified vulnerabilities. The disadvantage of this approach is that a system vulnerability is a complex phenomenon, which makes it difficult to detect systematically and with sufficient assurance. Detection of security vulnerabilities generates counterevidence to the security claims for the system. However, the inability to detect further vulnerabilities represents only indirect evidence in support of the security claims. Therefore the vulnerability argument needs to be supported by other considerations outlined in this section, as well as by backing evidence related to the qualification and experience of the personnel performing vulnerability detection, the characteristics of the tools involved (static analysis tools, penetration testing tools, etc.), and the corresponding methodologies, including the coverage criteria and patterns involved. The vulnerability argument is described in more detail in Chapters 6 and 7.

5.5.5. Security requirement argument

When assurance activities are integrated into the technical processes of the system life cycle, as illustrated at the beginning of Chapter 3, the assurance argument is organized in a distinct three-phase collection of goals:

• Sufficient threats have been identified and security objectives have been set commensurate with the security criteria of the assurance project.

• Security requirements for system elements and system functions mitigate the threats and achieve the identified security objectives.

• The system adequately implements the identified security requirements and achieves security objectives.