Chapter 3. Secure Software Development Management and Organizational Models1

1. Many of the models presented in this chapter were initially discussed in Mead [2010b].

with Julia Allen and Dan Shoemaker

In This Chapter

• 3.1 The Management Dilemma

• 3.2 Process Models for Software Development and Acquisition

• 3.3 Software Security Frameworks, Models, and Roadmaps

• 3.4 Summary

3.1 The Management Dilemma

When managers and stakeholders start a software acquisition or development project, they face a dazzling array of models and frameworks to choose from. Some of those models are general software process models, and others are specific to security or software assurance. Very often the marketing hype that accompanies these models makes it difficult to select a model or set of practices.

In our study of the problem, we realized that there is no single, recognized framework to organize research and practice areas that focuses on building assured systems. Although we did not succeed in defining a single “best” framework, we were able to develop guidance to help managers and stakeholders address challenges such as the following:

• How do I decide which security methods fit into a specific lifecycle activity?

• How do I know if a specific security method is sufficiently mature for me to use on my projects?

• When should I take a chance on a security research approach that has not been widely used?

• What actions can I take when I have no approach or method for prioritizing and selecting new research or when promising research appears to be unrelated to other research in the field?

In this chapter, we present a variety of models and frameworks that managers and stakeholders can use to help address these challenges. We define a framework using the following definitions from the Babylon dictionary [Babylon 2009]:

3.1.1 Background on Assured Systems

The following topics exhibit varying levels of maturity and use differing terminology, but they all play a role in building assured systems:

• Engineering resilient systems encompasses secure software engineering; requirements engineering, architecture, and design of secure systems and large systems of systems; and service and system continuity of operations.

• Containment focuses on the problem of how to monitor and detect a component’s behavior to contain and isolate the effect of aberrant behavior while still being able to recover from a false assumption of bad behavior.

• Architecting secure systems defines the necessary design artifacts, quality attributes, and trade-off considerations that describe how security properties are positioned, how they relate to the overall system/IT architecture, and how security quality attributes are measured.

• Secure software engineering (secure coding, software engineering, and hardware design improvement) improves the way software and hardware are developed by reducing vulnerabilities from software and hardware flaws. This work includes technology lifecycle assurance mechanisms, advanced engineering disciplines, standards and certification regimes, and best practices. Research areas in secure software engineering include refining current assurance mechanisms and developing new ones where necessary, developing certification regimes, and exploring policy and incentive options.

Secure software engineering encompasses a range of activities targeting security. The book Software Security Engineering [Allen 2008] presents a valuable discussion of these topics, and further research continues.

Some organizations have begun to pay more attention to building assured systems, as the following efforts illustrate:

• Some organizations are participating in the Building Security In Maturity Model [McGraw 2015].

• Some organizations are using Microsoft’s Security Development Lifecycle (SDL) [Howard 2006].

• Some organizations are members of the Software Assurance Forum for Excellence in Code (SAFECode) consortium [SAFECode 2010].

• Some organizations are working with Oracle cyber security initiatives and security solutions [Oracle 2016].

• Members of the Open Web Application Security Project (OWASP) are using the Software Assurance Maturity Model (SAMM) [OWASP 2015].

• The Trustworthy Software Initiative in the UK, in conjunction with the British Standards Institution, has produced Publicly Available Specification 754 (PAS 754), “Software Trustworthiness—Governance and Management—Specification” [TSI 2014].

Software assurance efforts tend to be strongest in software product development organizations, which have provided the most significant contribution to the efforts listed above. However, software assurance efforts tend to be weaker in large organizations that develop systems for use in-house and integrate systems across multiple vendors. They also tend to be weaker in small- to medium-sized organizations developing software products for licensed use. It’s worth noting that there are many small- and medium-sized organizations that have good cyber security practices, and there are also large organizations that have poor ones. For a while, organizations producing industrial control systems lagged behind large software development firms, but this has changed over the past several years.

Furthermore, there are a variety of lifecycle models in practice. Even in the larger organizations that adopt secure software engineering practices, there is a tendency to select a subset of the total set of recommended or applicable practices. Such uneven adoption of practices for building assured systems makes it difficult to evaluate the results of using these practices.

Let’s take a look at existing frameworks and lifecycle models for building assured systems. In the literature, we typically see lifecycle models or approaches that serve as structured repositories of practices from which organizations select those that are meaningful for their development projects.

Summary descriptions of several software development and acquisition process models that are in active use appear in Section 3.2, “Process Models for Software Development and Acquisition,” and models for software security are summarized in Section 3.3, “Software Security Frameworks, Models, and Roadmaps.”

3.2 Process Models for Software Development and Acquisition

A framework for building assured systems needs to build on and reflect known, accepted, common practice for software development and acquisition. One commonly accepted way to codify effective software development and acquisition practices is a process model. Process models define a set of processes that, when implemented, demonstrably improve the quality of the software that is developed or acquired using such processes. The Software Engineering Institute (SEI) at Carnegie Mellon University has been a recognized thought leader for more than 25 years in developing capability and maturity models for defining and improving the process by which software is developed and acquired. This work includes building a community of practitioners and reflecting their experiences and feedback in successive versions of the models. These models reflect commonly known good practices that have been observed, measured, and assessed by hundreds of organizations. Such practices serve as the foundation for building assured systems; it makes no sense to attempt to integrate software security practices into a software development process or lifecycle if this development process is not defined, implemented, and regularly improved. Thus, these development and acquisition models serve as the basis against which models and practices for software security are considered, as well as the basis for considering the use of promising research results. The models described in this section apply to newly developed software, acquired software, and legacy software whose useful life is being extended.

The content in this section is excerpted from publicly available SEI reports and the CMMI Institute website. It summarizes the objectives of Capability Maturity Model Integration (CMMI) models in general, CMMI for Development, and CMMI for Acquisition. We recommend that you familiarize yourself with software development and acquisition process models in general (including CMMI-based models) to better understand how software security practices, necessary for building assured systems, are implemented and deployed.

3.2.1 CMMI Models in General

The following information about CMMI models is from the CMMI Institute [CMMI Institute 2015]:

3.2.2 CMMI for Development (CMMI-DEV)

The SEI’s CMMI for Development report states the following [CMMI Product Team 2010b]:

What Is a Process Area?

A process area is a cluster of related practices in an area that, when implemented collectively, satisfies a set of goals considered important for making improvement in that area.

CMMI-DEV includes the following 22 process areas, listed in alphabetical order by acronym [CMMI Product Team 2010b]:

• Causal Analysis and Resolution (CAR)

• Configuration Management (CM)

• Decision Analysis and Resolution (DAR)

• Integrated Project Management (IPM)

• Measurement and Analysis (MA)

• Organizational Process Definition (OPD)

• Organizational Process Focus (OPF)

• Organizational Performance Management (OPM)

• Organizational Process Performance (OPP)

• Organizational Training (OT)

• Product Integration (PI)

• Project Monitoring and Control (PMC)

• Project Planning (PP)

• Process and Product Quality Assurance (PPQA)

• Quantitative Project Management (QPM)

• Requirements Development (RD)

• Requirements Management (REQM)

• Risk Management (RSKM)

• Supplier Agreement Management (SAM)

• Technical Solution (TS)

• Validation (VAL)

• Verification (VER)

3.2.3 CMMI for Acquisition (CMMI-ACQ)

The SEI’s CMMI for Acquisition (CMMI-ACQ) report states the following [CMMI Product Team 2010a]:

CMMI-ACQ has 22 process areas, 6 of which are specific to acquisition practices, and 16 of which are shared with other CMMI models. These are the process areas specific to acquisition practices:

• Acquisition Requirements Development

• Solicitation and Supplier Agreement Development

• Agreement Management

• Acquisition Technical Management

• Acquisition Verification

• Acquisition Validation

In addition, the model includes guidance on the following:

• Acquisition strategy

• Typical supplier deliverables

• Transition to operations and support

• Integrated teams

The 16 shared process areas include practices for project management, organizational process management, and infrastructure and support.

3.2.4 CMMI for Services (CMMI-SVC)

The SEI’s CMMI for Services (CMMI-SVC) report states the following [CMMI Product Team 2010c]:

CMMI-SVC contains 24 process areas. Of those process areas, 16 are core process areas, 1 is a shared process area, and 7 are service-specific process areas. Detailed information on the process areas can be found in CMMI for Services, Version 1.3 [CMMI Product Team 2010c]. The 24 process areas appear in alphabetical order by acronym:

• Capacity and Availability Management (CAM)

• Causal Analysis and Resolution (CAR)

• Configuration Management (CM)

• Decision Analysis and Resolution (DAR)

• Incident Resolution and Prevention (IRP)

• Integrated Work Management (IWM)

• Measurement and Analysis (MA)

• Organizational Process Definition (OPD)

• Organizational Process Focus (OPF)

• Organizational Performance Management (OPM)

• Organizational Process Performance (OPP)

• Organizational Training (OT)

• Process and Product Quality Assurance (PPQA)

• Quantitative Work Management (QWM)

• Requirements Management (REQM)

• Risk Management (RSKM)

• Supplier Agreement Management (SAM)

• Service Continuity (SCON)

• Service Delivery (SD)

• Service System Development (SSD)

• Service System Transition (SST)

• Strategic Service Management (STSM)

• Work Monitoring and Control (WMC)

• Work Planning (WP)

3.2.5 CMMI Process Model Uses

CMMI models are one foundation for well-managed and well-defined software development, acquisition, and services processes. In practice, organizations have used them for many years to improve their processes, identify areas for improvement, and implement systematic improvement programs. Process models have been a valuable tool for executive managers and middle managers. There are many self-improvement programs as well as consultants in this area.

In academia, process models are routinely taught in software engineering degree programs and in some individual software engineering courses, so that graduates of these programs are familiar with them and know how to apply them. In capstone projects, students are frequently asked to select a development process from a range of models.

The next section describes leading models and frameworks that define processes and practices for software security. Such processes and practices are, in large part, in common use by a growing body of organizations that are developing software to be more secure.

3.3 Software Security Frameworks, Models, and Roadmaps

In addition to considering process models for software development and acquisition, a framework for building assured systems needs to build on and reflect known, accepted, common practice for software security. The number of promising frameworks and models for building more secure software is growing. For example, Microsoft has defined its SDL and made it publicly available. In the recently released version 6 of the Building Security In Maturity Model (BSIMM6) [McGraw 2015], the authors collected and analyzed software security practices in 78 organizations.

The following subsections summarize models, frameworks, and roadmaps and provide excerpts of descriptive information from publicly available websites and reports to provide an overview of the objectives and content of each effort. You should have a broad understanding of these models and their processes and practices to appreciate the current state of the practice in building secure software and to aid in identifying promising research opportunities to fill gaps.

3.3.1 Building Security In Maturity Model (BSIMM)

An introduction on the BSIMM website states the following [McGraw 2015]:

A maturity model is appropriate for building more secure software—a key component of building assured systems—because improving software security means changing the way an organization develops software over time.

The BSIMM is meant to be used by those who create and execute a software security initiative. Most successful initiatives are run by a senior executive who reports to the highest levels in the organization, such as the board of directors or the chief information officer. These executives lead an internal group that the BSIMM calls the software security group (SSG), charged with directly executing or facilitating the activities described in the BSIMM. The BSIMM is written with the SSG and SSG leadership in mind.

The BSIMM addresses the following roles:

• SSG (software security staff with deep coding, design, and architectural experience)

• Executives and middle management, including line-of-business owners and product managers

• Builders, testers, and operations staff

• Administrators

• Line-of-business owners

• Vendors

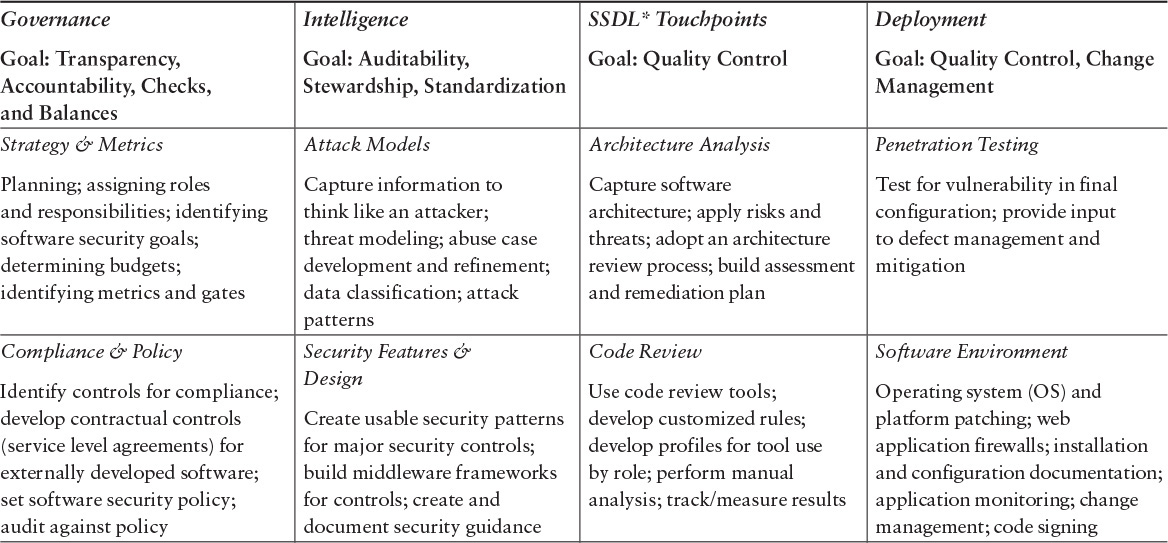

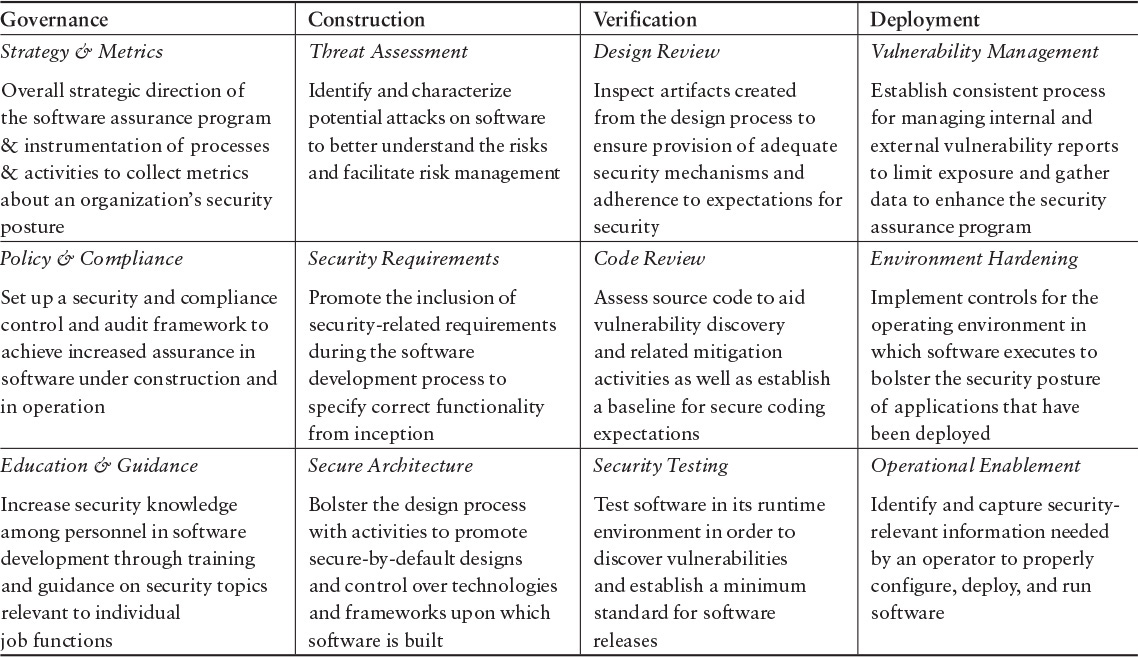

As an organizing structure for the body of observed practices, the BSIMM uses the software security framework (SSF) described in Table 3.1.

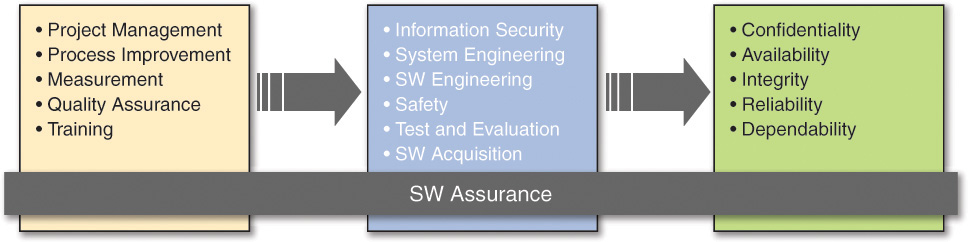

3.3.2 CMMI Assurance Process Reference Model

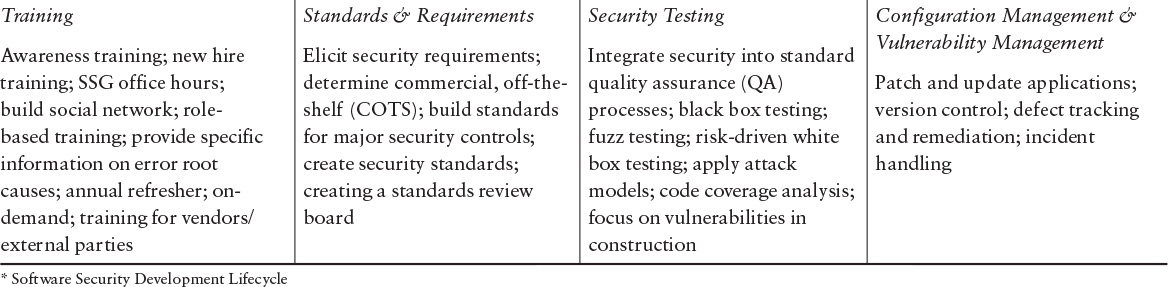

The Department of Homeland Security (DHS) Software Assurance (SwA) Processes and Practices Working Group developed a draft process reference model (PRM) for assurance in July 2008 [DHS 2008]. This PRM recommended additions to CMMI-DEV v1.2 to address software assurance. These also apply to CMMI-DEV v1.3. The “assurance thread” description2 includes Figure 3.1, which may be useful for addressing the lifecycle phase aspect of building assured systems.

2. https://buildsecurityin.us-cert.gov/swa/procwg.html

The DHS SwA Processes and Practices Working Group’s additions and updates to CMMI-DEV v1.2 and v1.3 are focused at the specific practices (SP) level for the following CMMI-DEV process areas (PAs), grouped by process area category:

• Process Management: Organizational Process Focus, Organizational Process Definition, Organizational Training

• Project Management: Project Planning, Project Monitoring and Control, Supplier Agreement Management, Integrated Project Management, Risk Management

• Engineering: Requirements Development, Technical Solution, Verification, Validation

• Support: Measurement & Analysis

More recently, the CMMI Institute published “Security by Design with CMMI for Development, Version 1.3,” a set of additional process areas that integrate with CMMI [CMMI 2013].

3.3.3 Open Web Application Security Project (OWASP) Software Assurance Maturity Model (SAMM)

The OWASP website provides the following information on the Software Assurance Maturity Model (SAMM) [OWASP 2015]:

The SAMM presents success metrics for all activities in all 12 practices for all 4 critical business functions. Each practice has 3 objectives, and each objective has 2 activities, for a total of 72 activities.
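The total of 72 activities follows directly from the structure just described. A minimal arithmetic check in Python, using only the counts stated above (the constant and function names are illustrative, not part of SAMM):

```python
# Counts taken from the SAMM description above; names are illustrative only.
PRACTICES = 12                 # practices across the 4 critical business functions
OBJECTIVES_PER_PRACTICE = 3
ACTIVITIES_PER_OBJECTIVE = 2


def total_activities(practices: int, objectives: int, activities: int) -> int:
    """Each practice contributes objectives * activities activities."""
    return practices * objectives * activities


total = total_activities(PRACTICES, OBJECTIVES_PER_PRACTICE, ACTIVITIES_PER_OBJECTIVE)
print(total)  # 72
```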

3.3.4 DHS SwA Measurement Work

Nadya Bartol and Michele Moss, both of whom played important roles in the DHS SwA Measurement Working Group, led the development of several important metrics documents. These documents predate the more recent SEI work in the measurement area, which we discuss in Chapter 6, “Metrics.”

According to the DHS SwA Measurement Working Group [DHS 2010]:

The following discussion is from the Practical Measurement Framework for Software Assurance and Information Security [Bartol 2008]:

The framework describes candidate goals and information needs for each stakeholder group. The framework then presents examples of supplier measures as a table, with columns for project activity, measures, information needs, and benefits. The framework includes supplier project activities—requirements management (five measures), design (three measures), development (six measures), test (nine measures)—and the entire software development lifecycle (SDLC) (three measures).

Examples of measures for acquirers are also presented and are intended to answer the following questions:

• Have SwA activities been adequately integrated into the organization’s acquisition process?

• Have SwA considerations been integrated into the SDLC and resulting product by the supplier?

The acquisition activities are planning (two measures), contracting (three measures), and implementation and acceptance (five measures).

Ten examples of measures for executives are presented. These are intended to answer the question “Is the risk generated by software acceptable to the organization?” The following are some of these examples of measures:

• Number and percentage of patches published on announced date

• Time elapsed for supplier to fix defects

• Number of known defects by type and impact

• Cost to correct vulnerabilities in operations

• Cost of fixing defects before system becomes operational

• Cost of individual data breaches

• Cost of SwA practices throughout the SDLC
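Two of the executive measures above reduce to simple calculations over supplier records. The following is a minimal sketch, assuming hypothetical `PatchRecord` and `DefectRecord` types with date fields; neither the field names nor the choice to summarize fix time as a mean comes from the framework.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class PatchRecord:          # hypothetical record type
    announced: date         # date the supplier announced for the patch
    published: date         # date the patch was actually published


@dataclass
class DefectRecord:         # hypothetical record type
    reported: date          # date the defect was reported to the supplier
    fixed: date             # date the supplier delivered the fix


def patches_on_announced_date(patches: list[PatchRecord]) -> tuple[int, float]:
    """Number and percentage of patches published on their announced date."""
    on_time = sum(1 for p in patches if p.published == p.announced)
    percentage = 100.0 * on_time / len(patches) if patches else 0.0
    return on_time, percentage


def mean_days_to_fix(defects: list[DefectRecord]) -> float:
    """Mean days between report and fix (raises StatisticsError if empty)."""
    return float(mean((d.fixed - d.reported).days for d in defects))


# Hypothetical sample data for illustration.
patches = [
    PatchRecord(date(2015, 3, 10), date(2015, 3, 10)),
    PatchRecord(date(2015, 4, 14), date(2015, 4, 21)),
    PatchRecord(date(2015, 5, 12), date(2015, 5, 12)),
    PatchRecord(date(2015, 6, 9), date(2015, 6, 9)),
]
defects = [
    DefectRecord(date(2015, 1, 1), date(2015, 1, 11)),
    DefectRecord(date(2015, 2, 1), date(2015, 2, 21)),
]
print(patches_on_announced_date(patches))  # (3, 75.0)
print(mean_days_to_fix(defects))           # 15.0
```

A median or a distribution of fix times may be more informative than a mean when a few defects take much longer than the rest.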

Fifteen examples of measures for practitioners are presented. They are intended to answer the question “How well are current SwA processes and techniques mitigating software-related risks?”

3.3.5 Microsoft Security Development Lifecycle (SDL)

The Microsoft Security Development Lifecycle (SDL)3 is an industry-leading software security process. A Microsoft-wide initiative and a mandatory policy since 2004, the SDL has played a critical role in enabling Microsoft to embed security and privacy in its software and culture. Combining a holistic and practical approach, the SDL introduces security and privacy early and throughout all phases of the development process.

3. More information is available in The Security Development Lifecycle [Howard 2006], at the Microsoft Security Development Lifecycle website [Microsoft 2010a], and in the document Microsoft Security Development Lifecycle Version 5.0 [Microsoft 2010b].

The reliable delivery of more secure software requires a comprehensive process, so Microsoft defined a collection of principles it calls Secure by Design, Secure by Default, Secure in Deployment, and Communications (SD3+C) to help determine where security efforts are needed [Microsoft 2010b]:

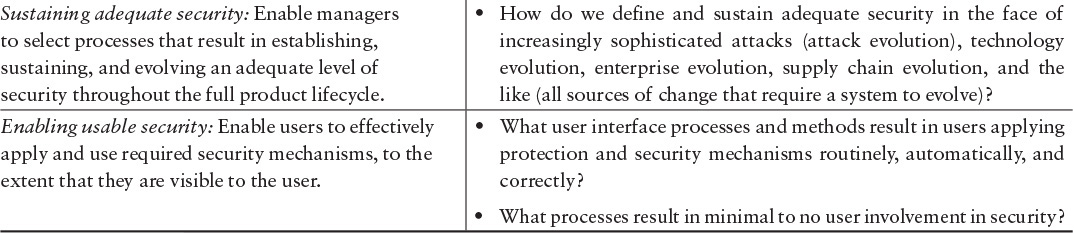

Figure 3.3 shows what the secure software development process model looks like.

Figure 3.3 Secure Software Development Process Model at Microsoft [Shunn 2013]

The Microsoft SDL documentation describes, in great detail, what architects, designers, developers, and testers are required to do during each lifecycle phase. The introduction states, “Secure software development has three elements—best practices, process improvements, and metrics. This document focuses primarily on the first two elements, and metrics are derived from measuring how they are applied” [Microsoft 2010b]. This description indicates that the document contains no concrete measurement-related information; measures would need to be derived from each of the lifecycle-phase practice areas.

3.3.6 SEI Framework for Building Assured Systems

In developing the Building Assured Systems Framework (BASF), we studied the available models, roadmaps, and frameworks. Given our deep knowledge of the MSwA2010 Body of Knowledge (BoK), the core body of knowledge for a master of software assurance degree from Carnegie Mellon University, we decided to use it as an initial foundation for the BASF.

Maturity Levels

We assigned the following maturity levels to each element of the MSwA2010 BoK:

• L1—The approach provides guidance for how to think about a topic for which there is no proven or widely accepted approach. The intent of the area is to raise awareness and aid in thinking about the problem and candidate solutions. The area may also describe promising research results that may have been demonstrated in a constrained setting.

• L2—The approach describes practices that are in early pilot use and are demonstrating some successful results.

• L3—The approach describes practices that have been successfully deployed (mature) but are in limited use in industry or government organizations. They may be more broadly deployed in a particular market sector.

• L4—The approach describes practices that have been successfully deployed and are in widespread use. You can start using these practices today with confidence. Experience reports and case studies are typically available.

We developed these maturity levels to support our work in software security engineering [Allen 2008]. We associated the BoK elements and maturity levels by evaluating the extent to which relevant sources, practices, curricula, and courseware exist for a particular BoK element and the extent to which we have observed the element in practice in organizations.
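Because the four levels form an ordered scale, the question “Is this method mature enough for my project?” becomes a simple comparison once a method has been rated. A minimal sketch, assuming hypothetical method names and ratings and a project-chosen minimum level (the `Maturity` enum and `sufficiently_mature` function are illustrative, not part of the BASF):

```python
from enum import IntEnum


class Maturity(IntEnum):
    """The four maturity levels described above (L1 is least mature)."""
    L1 = 1  # guidance for thinking about a problem; possibly promising research
    L2 = 2  # early pilot use, with some successful results
    L3 = 3  # successfully deployed, but in limited use
    L4 = 4  # successfully deployed and in widespread use


def sufficiently_mature(ratings: dict[str, Maturity], minimum: Maturity) -> list[str]:
    """Return, in sorted order, the methods rated at or above the minimum level."""
    return sorted(name for name, level in ratings.items() if level >= minimum)


# Hypothetical methods and ratings, for illustration only.
ratings = {"method A": Maturity.L4, "method B": Maturity.L2, "method C": Maturity.L3}

# A project that needs proven practices might require at least L3.
print(sufficiently_mature(ratings, Maturity.L3))  # ['method A', 'method C']
```

A project willing to take a chance on a research approach could lower the minimum to L1 or L2; the threshold is a management decision, not something the levels themselves dictate.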

MSwA2010 BoK with Outcomes and Maturity Levels

We found that the current maturity of the material being proposed for delivery in the MSwA2010 BoK varied. As a result, a student would be expected to learn material at all maturity levels. If a practice was not very mature, we would still expect the student to be able to master it and use it in an appropriate manner after completing an MSwA program. We reasoned that the MSwA curriculum body of knowledge could be used as a basis for assessing maturity of software assurance practices, but to our knowledge, it has not been used for this purpose on an actual project, so it remains a hypothetical model. The portion of the table addressing risk management is shown below. The full table is contained in Appendix B, “The MSwA Body of Knowledge with Maturity Levels Added.”

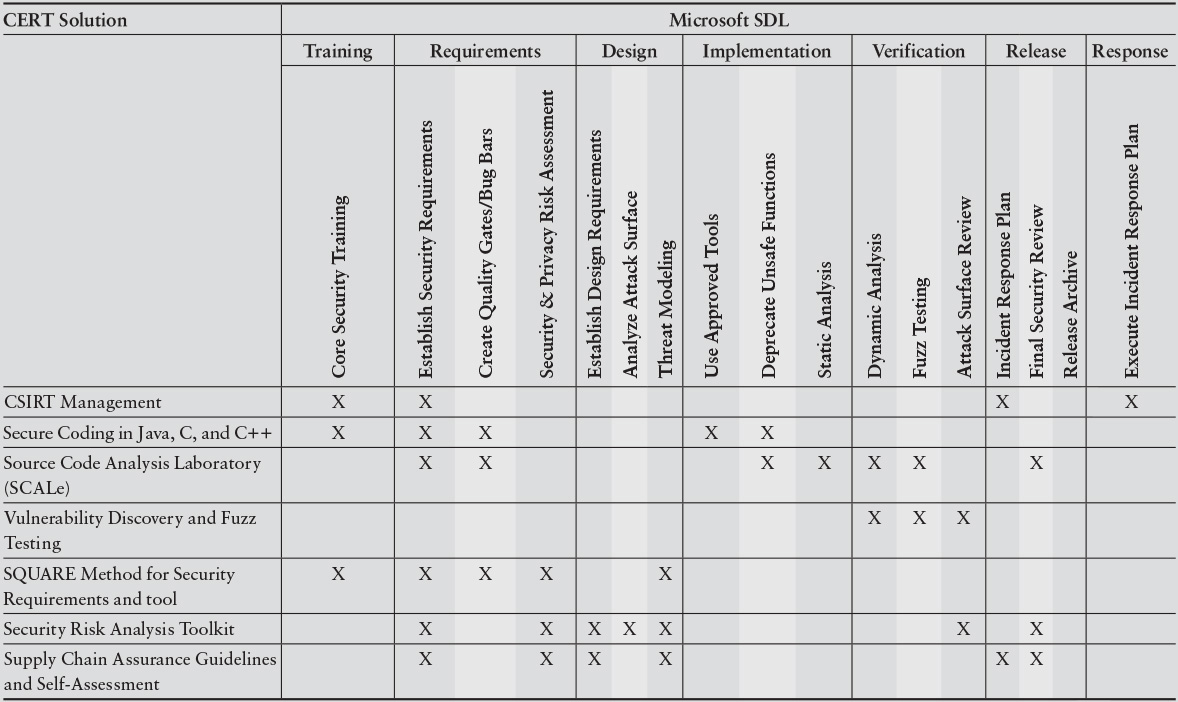

3.3.7 SEI Research in Relation to the Microsoft SDL

More recently, the SEI’s CERT Division examined the linkages between CERT research and the Microsoft SDL [Shunn 2013]. An excerpt from this report follows:

The report thus highlights a sample of CERT results with readily apparent connections to the SDL. Table 3.3 maps the CERT results to Microsoft SDL activities.

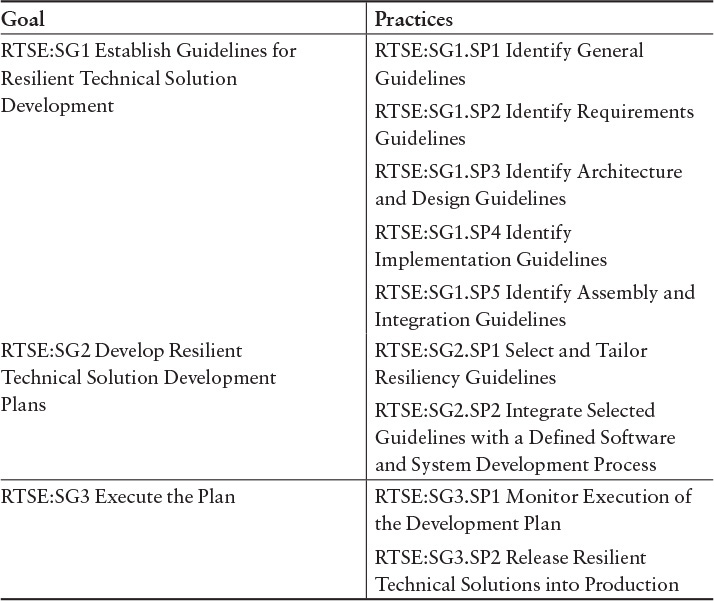

3.3.8 CERT Resilience Management Model Resilient Technical Solution Engineering Process Area

As is the case for software security and software assurance, resilience is a property of software and systems. Developing and acquiring resilient4 software and systems requires a dedicated process focused on this property that encompasses the software and system lifecycle. Version 1.1 of the CERT Resilience Management Model’s (CERT-RMM’s)5 Resilient Technical Solution Engineering (RTSE) process area defines what is required to develop resilient software and systems [Caralli 2011]. Version 1.2 is available as a free download, and the associated release notes describe its updated features.6

4. There is substantial overlap in the definitions of assured software (or software assurance) and resilient software (or software resilience). Resilient software is software that continues to operate as intended (including recovering to a known operational state) in the face of a disruptive event (satisfying business continuity requirements) so as to satisfy its confidentiality, availability, and integrity requirements (reflecting operational and security requirements) [Caralli 2011].

6. Version 1.2 of the Resilience Management Model document [Caralli 2016] can be downloaded from the CERT website (www.cert.org/resilience/products-services/cert-rmm/index.cfm).

Table 3.4 lists RTSE practices.

Organizations should consider the following goals—in addition to RTSE—when developing and acquiring software and systems that need to meet assurance and resiliency requirements [Caralli 2011]:

RTSE assumes that the organization has one or more existing, defined processes for software and system development into which resiliency controls and activities can be integrated. If this is not the case, the organization should not attempt to implement the goals and practices identified in RTSE or in other CERT-RMM process areas as described above.

3.3.9 International Process Research Consortium (IPRC) Roadmap

From August 2004 to December 2006, the SEI’s process program sponsored a research consortium of 28 international thought leaders to explore process needs for today, the foreseeable future, and the unforeseeable future. One of the emerging research themes was the relationships between processes and product qualities, defined as “understanding if and how particular process characteristics can affect desired product (and service) qualities such as security, usability, and maintainability” [IPRC 2006]. As an example, or instantiation, of this research theme, two of the participating members, Julia Allen and Barbara Kitchenham, developed research nodes and research questions for security as a product quality. This content helps identify research topics and gaps that could be explored within the context of the BASF.

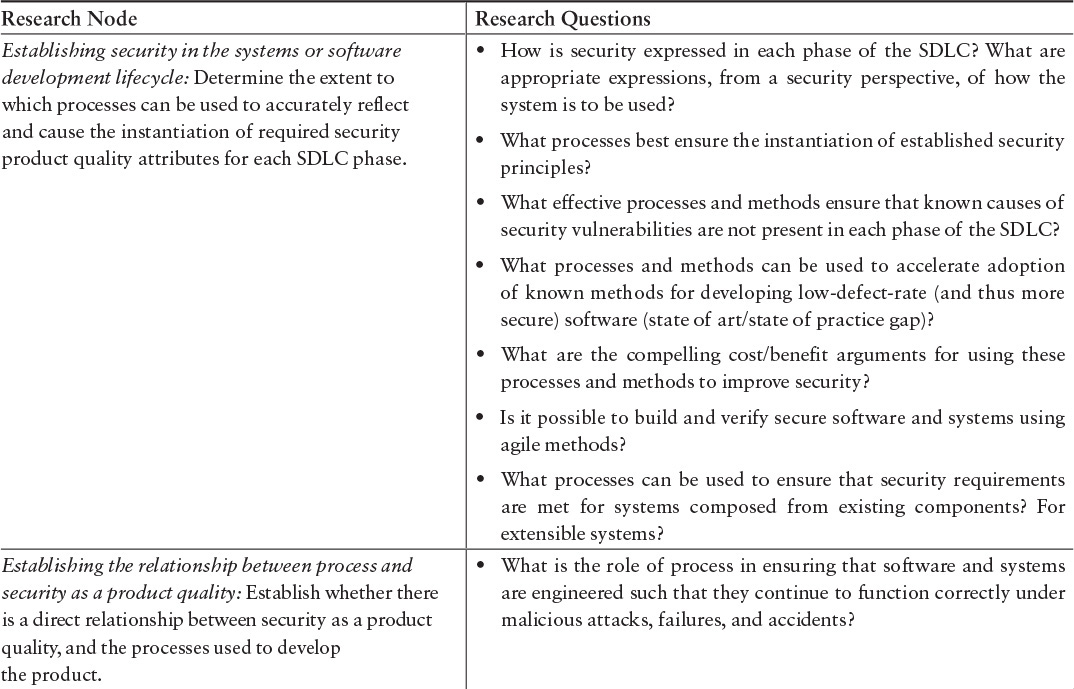

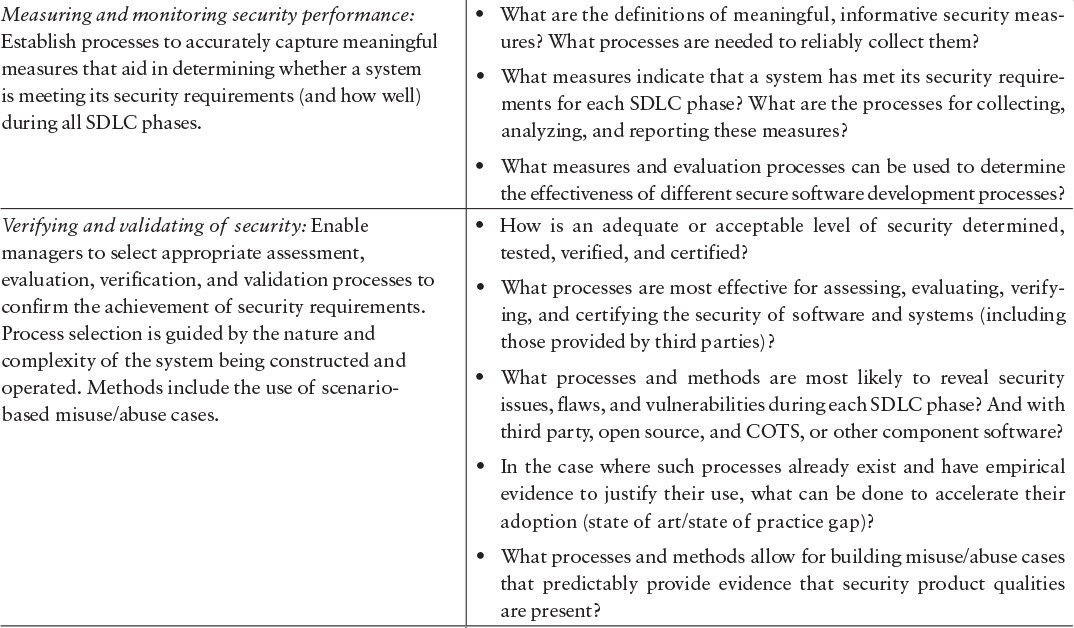

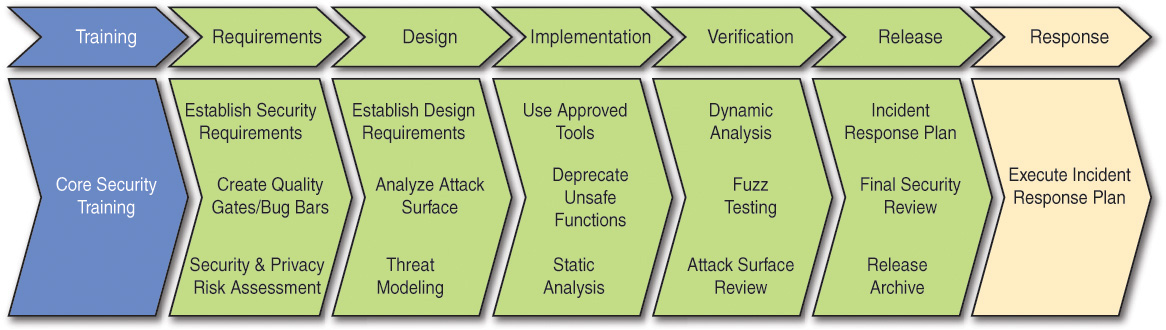

The descriptive material presented in Table 3.5 is excerpted from A Process Research Framework [IPRC 2006].

3.3.10 NIST Cyber Security Framework

The NIST Framework for Improving Critical Infrastructure Cybersecurity is the result of a February 2013 executive order from U.S. President Barack Obama titled Improving Critical Infrastructure Cybersecurity [White House 2013]. The order emphasized that “it is the Policy of the United States to enhance the security and resilience of the Nation’s critical infrastructure and to maintain a cyber-environment that encourages efficiency, innovation, and economic prosperity while promoting safety, security, business confidentiality, privacy, and civil liberties” [White House 2013].

The NIST framework provides an assessment mechanism that enables organizations to determine their current cyber security capabilities, set individual goals for a target state, and establish a plan for improving and maintaining cyber security programs [NIST 2014]. There are three components—Core, Profile, and Implementation tiers—as discussed in the following excerpt [NIST 2014]:

The Core presents the recommendations of industry standards, guidelines, and practices in a manner that allows for communication of cybersecurity activities and outcomes across the organization from the executive level to the implementation/operations level.

The Core is hierarchical and consists of five cyber security risk functions: Identify, Protect, Detect, Respond, and Recover. Each function is further broken down into categories and subcategories.

The categories include processes, procedures, and technologies such as the following:

• Asset management

• Alignment with business strategy

• Risk assessment

• Access control

• Employee training

• Data security

• Event logging and analysis

• Incident response plans

Each subcategory provides a set of cyber security risk management best practices that can help organizations align and improve their cyber security capability based on individual business needs, tolerance to risk, and resource availability [NIST 2014].

The Core criteria are used to determine the outcomes necessary to improve the overall security effort of an organization. The unique requirements of industry, customers, and partners are then factored into the target profile. Comparing the current and target profiles identifies the gaps that should be closed to enhance cyber security. Organizations must prioritize the gaps to establish the basis for a prioritized roadmap to help make improvements.
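Comparing current and target profiles can be sketched as a per-subcategory difference. This is a minimal sketch under stated assumptions: the labels are modeled on the framework’s subcategory identifiers, the 0-to-4 achievement scores are hypothetical (the framework itself does not define such scores), and ordering gaps by size is only one possible prioritization rule.

```python
# Hypothetical profiles: subcategory label -> achievement score on an assumed 0-4 scale.
# Labels are modeled on NIST subcategory identifiers; the scores are invented.
current_profile = {"ID.AM-1": 2, "PR.AC-1": 1, "DE.AE-3": 3, "RS.RP-1": 0}
target_profile = {"ID.AM-1": 3, "PR.AC-1": 3, "DE.AE-3": 3, "RS.RP-1": 2}


def profile_gaps(current: dict[str, int], target: dict[str, int]) -> dict[str, int]:
    """Return each subcategory whose current score falls short of its target."""
    gaps = {}
    for subcategory, goal in target.items():
        shortfall = goal - current.get(subcategory, 0)  # missing entries count as 0
        if shortfall > 0:
            gaps[subcategory] = shortfall
    return gaps


def prioritized_roadmap(gaps: dict[str, int]) -> list[str]:
    """Order gaps largest first (an assumed rule); ties are broken by label."""
    return sorted(gaps, key=lambda sub: (-gaps[sub], sub))


gaps = profile_gaps(current_profile, target_profile)
print(gaps)                       # {'ID.AM-1': 1, 'PR.AC-1': 2, 'RS.RP-1': 2}
print(prioritized_roadmap(gaps))  # ['PR.AC-1', 'RS.RP-1', 'ID.AM-1']
```

In practice, the prioritization would also weigh business needs, risk tolerance, and resource availability rather than gap size alone.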

Implementation tiers create a context that enables an organization to understand how its current cyber security risk management capabilities rate against the ideal characteristics described by the NIST Framework. Tiers range from Partial (Tier 1) to Adaptive (Tier 4). NIST recommends that organizations seeking to achieve an effective, defensible cyber security program progress to Tier 3 or 4.

3.3.11 Uses of Software Security Frameworks, Models, and Roadmaps

Because software security is a relatively new field, the frameworks, models, and roadmaps have not been in use, on average, for as long a time as the CMMI models, and their use is not as widespread. Nevertheless, there are important uses to consider.

Secure development process models are in use by organizations for which security is a priority:

• The Microsoft SDL and variants on it are in relatively wide use.

• BSIMM has strong participation, with 78 organizations represented in BSIMM6. Since its inception in 2008, the BSIMM has studied 104 organizations.

• Elements of CERT-RMM are also widely used.

There is less usage data on secure software process models than on the more general software process models, so the true extent of usage is hard to assess. As organizations and governments become more aware of the need for software security, we expect usage of these models to increase, and we expect to see additional research in this area, perhaps with the appearance of new models.

In academia, in addition to traditional software process models, software security courses often present one or more of the secure development models and processes. Such courses occur at all levels of education, but especially at the master’s level. Individual and team student projects frequently use the models or their individual components and provide a rich learning environment for students learning about secure software development.

3.4 Summary

This chapter presents a number of frameworks and models that can be used to help support cyber security decision making. These frameworks and models include process models, security frameworks and models in the literature, and the SEI efforts in this area. We explore some of these topics in more depth in Chapter 7, “Special Topics in Cyber Security Engineering,” which provides further discussion of governance considerations for cyber security engineering, security requirements engineering for acquisition, and standards.

As noted earlier in this chapter, we developed maturity levels to support our work in software security engineering [Allen 2008]. Since 2008, some of the practice areas have increased in maturity. Nevertheless, we believe you can still apply the maturity levels to assess whether a specific approach is sufficiently mature to help achieve your cyber security goals. Our earlier work [Allen 2008] also included a recommended strategy and suggested order of practice implementation. This work remains valid today, and we reiterate the maturity levels here. They also appear in Chapter 8, “Summary and Plan for Improvements in Cyber Security Engineering Performance,” where we rate the maturity levels of the methods presented throughout the book.