Chapter 1. Fundamentals of Networking Protocols and Networking Devices

This chapter covers the following topics:

![]() Introduction to TCP/IP and OSI models

Introduction to TCP/IP and OSI models

![]() Wired LAN and Ethernet

Wired LAN and Ethernet

![]() Frame switching

Frame switching

![]() Hub, switch, and router

Hub, switch, and router

![]() Wireless LAN and technologies

Wireless LAN and technologies

![]() Wireless LAN controller and access point

Wireless LAN controller and access point

![]() IPv4 and IPv6 addressing

IPv4 and IPv6 addressing

![]() IP routing

IP routing

![]() ARP, DHCP, ICMP, and DNS

ARP, DHCP, ICMP, and DNS

![]() Transport layer protocols

Transport layer protocols

Welcome to the first chapter of the CCNA Cyber Ops SECFND #210-250 Official Cert Guide. In this chapter, we go through the fundamentals of networking protocols and explore how devices such as switches and routers work to allow two hosts to communicate with each other, even if they are separated by many miles.

If you are already familiar with these topics—for example, if you already have a CCNA Routing and Switching certification—this chapter will serve as a refresher on protocols and device operations. If, on the other hand, you are approaching these topics for the first time, you’ll learn about the fundamental protocols and devices at the base of Internet communication and how they work.

This chapter begins with an introduction to the TCP/IP and OSI models and then explores link layer technologies and protocols—specifically the Ethernet and Wireless LAN technologies. We then discuss how the Internet Protocol (IP) works and how a router uses IP to move packets from one site to another. Finally, we look into the two most used transport layer protocols: Transmission Control Protocol (TCP) and User Datagram Protocol (UDP).

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz helps you identify your strengths and deficiencies in this chapter’s topics. The 13-question quiz, derived from the major sections in the “Foundation Topics” portion of the chapter, helps you determine how to spend your limited study time. You can find the answers in Appendix A Answers to the “Do I Know This Already?” Quizzes and Q&A Questions.

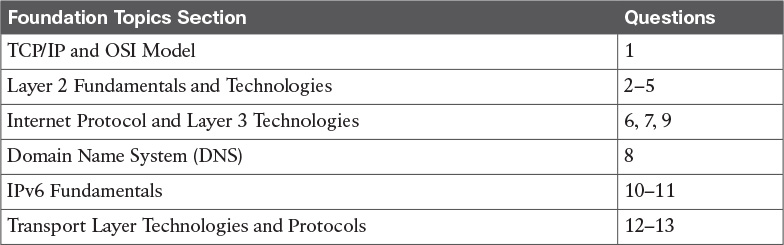

Table 1-1 outlines the major topics discussed in this chapter and the “Do I Know This Already?” quiz questions that correspond to those topics.

Table 1-1 “Do I Know This Already?” Section-to-Question Mapping

1. Which layer of the TCP/IP model is concerned with end-to-end communication and offers multiplexing service?

a. Transport

b. Internet

c. Link layer

d. Application

2. Which statement is true concerning a link working in Ethernet half-duplex mode?

a. A collision cannot happen.

b. When a collision happens, the two stations immediately retransmit.

c. When a collision happens, the two stations wait for a random time before retransmitting.

d. To avoid a collision, stations wait a random time before transmitting.

3. What is the main characteristic of a hub?

a. It regenerates the signal and retransmits on all ports.

b. It uses a MAC address table to switch frames.

c. When a packet arrives, the hub looks up the routing table before forwarding the packet.

d. It supports full-duplex mode of transmission.

4. Where is the information about ports and device Layer 2 addresses kept in a switch?

a. MAC address table

b. Routing table

c. L2 address table

d. Port table

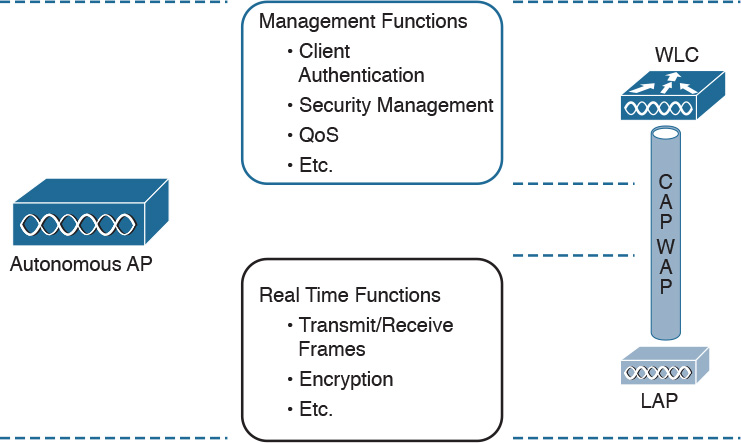

5. Which of the following features are implemented by a wireless LAN controller? (Select all that apply.)

a. Wireless station authentication

b. Quality of Service

c. Channel encryption

d. Transmission and reception of frames

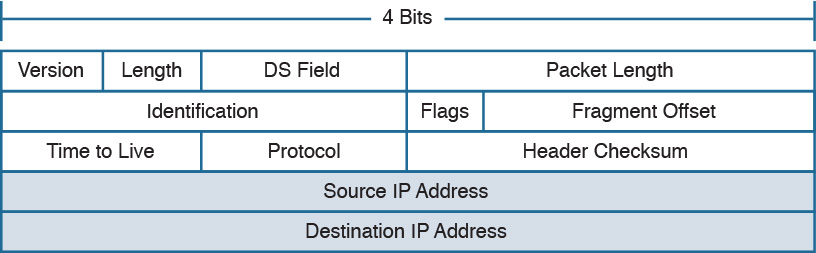

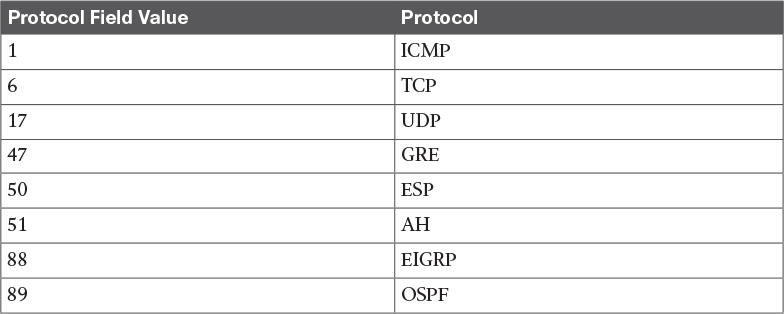

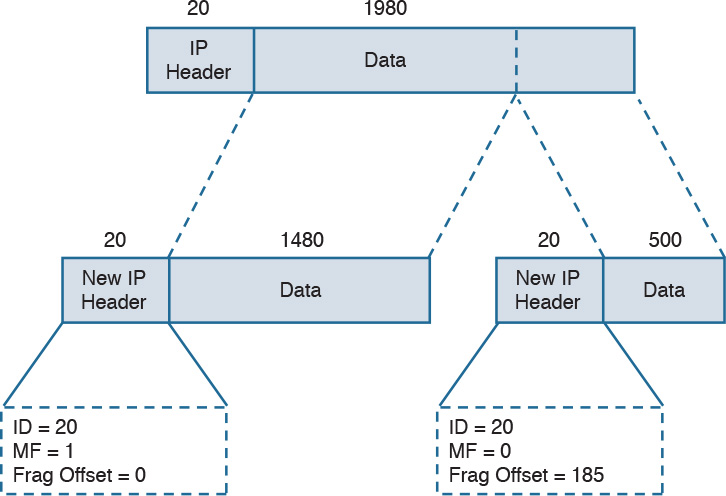

6. Which IP header field is used to recognize fragments from the same packet?

a. Identification

b. Fragment Offset

c. Flags

d. Destination Address

7. Which protocol is used to request a host MAC address given a known IP address?

a. ARP

b. DHCP

c. ARPv6

d. DNS

8. Which type of query is sent from a DNS resolver to a DNS server?

a. Recursive

b. Iterative

c. Simple

d. Type Q query

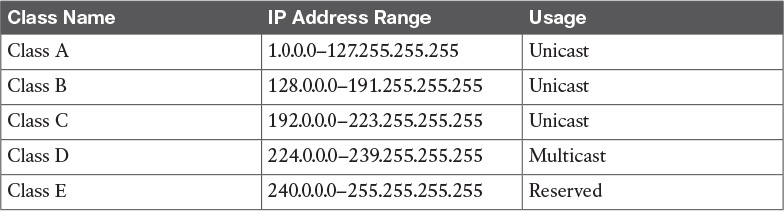

9. How many host IPv4 addresses are possible in a /25 network?

a. 126

b. 128

c. 254

d. 192

10. How many bits can be used for host IPv6 addresses assignment in the 2345::/64 network?

a. 48

b. 64

c. 16

d. 264

11. What is SLAAC used for?

a. To provide an IPv6 address to a client

b. To route IPv6 packets

c. To assign a DNS server

d. To provide a MAC address given an IP address

12. Which one of these protocols requires a connection to be established before transmitting data?

a. TCP

b. UDP

c. IP

d. OSPF

13. What is the TCP window field used for?

a. Error detection

b. Flow control

c. Fragmentation

d. Multiplexing

Foundation Topics

TCP/IP and OSI Model

Two main models are currently used to explain the operation of an IP-based network. These are the TCP/IP model and the Open System Interconnection (OSI) model. This section provides an overview of these two models.

TCP/IP Model

The TCP/IP model is the foundation for most of the modern communication networks. Every day, each of us uses some application based on the TCP/IP model to communicate. Think, for example, about a task we consider simple: browsing a web page. That simple action would not be possible without the TCP/IP model.

The TCP/IP model’s name includes the two main protocols we will discuss in the course of this chapter: Transmission Control Protocol (TCP) and Internet Protocol (IP). However, the model goes beyond these two protocols and defines a layered approach that can map nearly any protocol used in today’s communication.

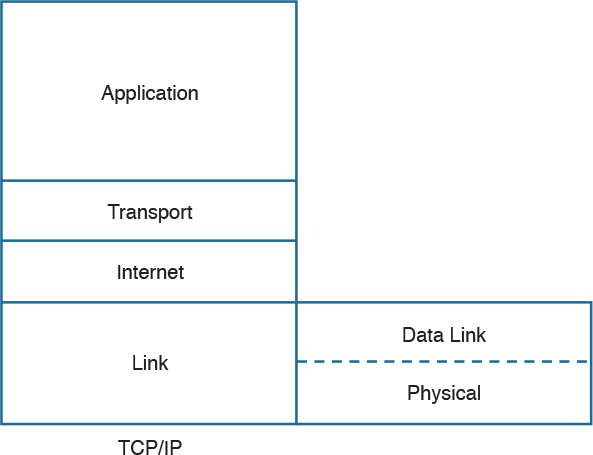

In its original definition, the TCP/IP model included four layers, where each of the layers would provide transmission and other services for the level above it. These are the link layer, internet layer, transport layer, and application layer.

In its most modern definition, the link layer is split into two additional layers to clearly demark the physical and data link type of services and protocols included in this layer. Internet layer is also sometimes called the networking layer, which is based on another very known model, the OSI model, which is described in the next section. Figure 1-1 shows the TCP/IP stack model.

The TCP/IP model works on two main concepts that define how the layers interact:

![]() On the same host, each layer works by providing services for the layer above it on the TCP/IP stack.

On the same host, each layer works by providing services for the layer above it on the TCP/IP stack.

![]() On different hosts, a same layer communication is established by using the same layer protocol.

On different hosts, a same layer communication is established by using the same layer protocol.

For example, on your personal computer, the TCP/IP stack is implemented to allow networking communication. The link layer provides services for the IP layer (for example, encapsulation of an IP packet in an Ethernet frame). The IP layer provides services to the transport layer (for example, IP routing and IP addressing), and so on. These are all examples of services provided to the layer above it within the host.

Now imagine that your personal computer wants to connect to a web server (for example, to browse a web page). The web server will also implement the TCP/IP stack. In this case, the IP layer of your personal computer and the IP layer of the web server will use a common protocol, IP, for the communication. The same thing will happen with the transport protocol, where the two devices will use TCP, and so on. These are examples of the same layer protocol used on different hosts to communicate.

Later in this chapter, the “Networking Communication with the TCP/IP Model,” section provides more detail about how the communication works between two hosts and how the TCP/IP stack is used on the same host.

The list that follows analyzes each layer in a bit more detail:

![]() Link layer: The link layer provides physical transmission support and includes the protocols used to transmit information over a link between two devices. In simple terms, the link layer includes the hardware and protocol necessary to send information between two hosts that are connected by a physical link (for example, a cable) or over the air (for example, via radio waves). It also includes the notion of and mechanisms for information being replicated and retransmitted over several ports or links by dedicated devices such as switches and bridges.

Link layer: The link layer provides physical transmission support and includes the protocols used to transmit information over a link between two devices. In simple terms, the link layer includes the hardware and protocol necessary to send information between two hosts that are connected by a physical link (for example, a cable) or over the air (for example, via radio waves). It also includes the notion of and mechanisms for information being replicated and retransmitted over several ports or links by dedicated devices such as switches and bridges.

Because different physical means are used to transmit information, there are several protocols that work at the link layer. One of the most popular is the Ethernet protocol. As mentioned earlier, nowadays the link layer is usually split further in the physical layer, which is concerned about physical bit transmission, and the data link layer, which provides encapsulation and addressing facilities as well as abstraction for the upper layers.

At link layer, the message unit is called a frame.

![]() Internet layer: Of course, not all devices can be directly connected to each other, so there is a need to transmit the information across multiple devices. The Internet layer provides networking services and includes protocols that allow for the transmission of information through multiple hops. To do that, each host is identified by an Internet Protocol (IP) address, or a different address if another Internet Protocol type is used. Each hop device between two hosts, called networking nodes, knows how to reach the destination IP address and transmit the information to the next best node to reach the destination. The nodes are said to perform the routing of the information, and the way each node, also called router, determines the best next node to the destination is called the routing protocol.

Internet layer: Of course, not all devices can be directly connected to each other, so there is a need to transmit the information across multiple devices. The Internet layer provides networking services and includes protocols that allow for the transmission of information through multiple hops. To do that, each host is identified by an Internet Protocol (IP) address, or a different address if another Internet Protocol type is used. Each hop device between two hosts, called networking nodes, knows how to reach the destination IP address and transmit the information to the next best node to reach the destination. The nodes are said to perform the routing of the information, and the way each node, also called router, determines the best next node to the destination is called the routing protocol.

At the Internet layer, the message unit is called a packet.

![]() Transport layer: When transmitting information, the sending host knows when the information is sent, but has no way to know whether it actually made it to the destination. The transport layer provides services to successfully transfer information between two end points. It abstracts the lower-level layer and is concerned about the end-to-end process. For example, it is used to detect whether any part of the information went missing. It also provides information about which type of information is being transmitted. For example, a host may want to request a web page and also start an FTP transaction. How do we distinguish between these two actions? The transport layer helps to separate the two requests by using the concept of a transport layer port. Each service is enabled on a different transport layer port—for example, port 80 for a web request or port 21 for an FTP transaction. So when the destination host receives a request on port 80, it knows that this needs to be passed to the application layer handling web requests. This type of service provided by the transport layer is called multiplexing.

Transport layer: When transmitting information, the sending host knows when the information is sent, but has no way to know whether it actually made it to the destination. The transport layer provides services to successfully transfer information between two end points. It abstracts the lower-level layer and is concerned about the end-to-end process. For example, it is used to detect whether any part of the information went missing. It also provides information about which type of information is being transmitted. For example, a host may want to request a web page and also start an FTP transaction. How do we distinguish between these two actions? The transport layer helps to separate the two requests by using the concept of a transport layer port. Each service is enabled on a different transport layer port—for example, port 80 for a web request or port 21 for an FTP transaction. So when the destination host receives a request on port 80, it knows that this needs to be passed to the application layer handling web requests. This type of service provided by the transport layer is called multiplexing.

At this layer, the message unit is called a segment.

![]() Application layer: The application layer is the top layer and is the one most familiar to end users. For example, at the application layer, a user may use the email client to send an email message or use a web browser to browse a website. Both of these actions map to a specific application, which uses a protocol to fulfill the service.

Application layer: The application layer is the top layer and is the one most familiar to end users. For example, at the application layer, a user may use the email client to send an email message or use a web browser to browse a website. Both of these actions map to a specific application, which uses a protocol to fulfill the service.

In this example, the Simple Message Transfer Protocol (SMTP) is used to handle the email transfer, whereas the Hypertext Transfer Protocol (HTTP) is used to request a web page within a browser. At this level, the protocols are not concerned with how the information will reach the destination, but only work on defining the content of the information being transmitted.

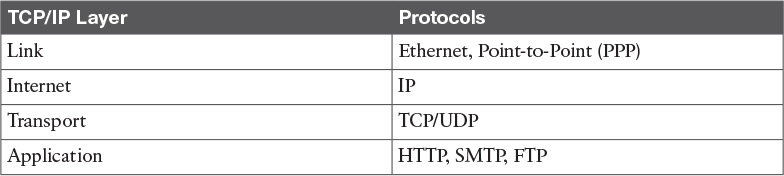

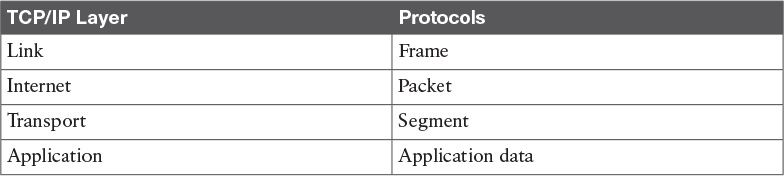

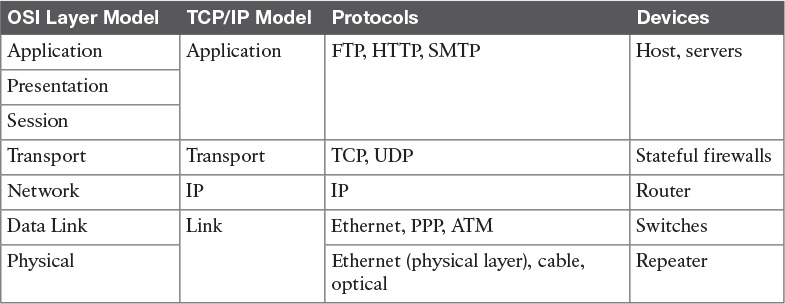

Table 1-2 shows examples of protocols working at each layer of the TCP/IP model.

Table 1-3 summarizes what message units are referred to as at each layer.

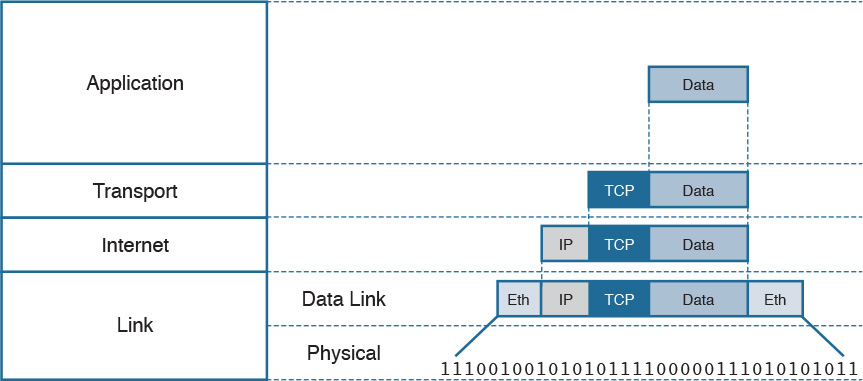

TCP/IP Model Encapsulation

In the TCP/IP model, each layer provides services for the level above it. Protocols at each layer include a protocol header and in some cases a trailer to the information provided by the upper layer. The protocol header includes enough information for the protocol to work toward the delivery of the information. This process is called encapsulation.

When the information arrives to the destination, the inverse process is used. Each layer reads the information present in the header of the protocol working at that specific layer, performs an action based on that information, and, if needed, passes the remaining information to the next layer in the stack. This process is called decapsulation.

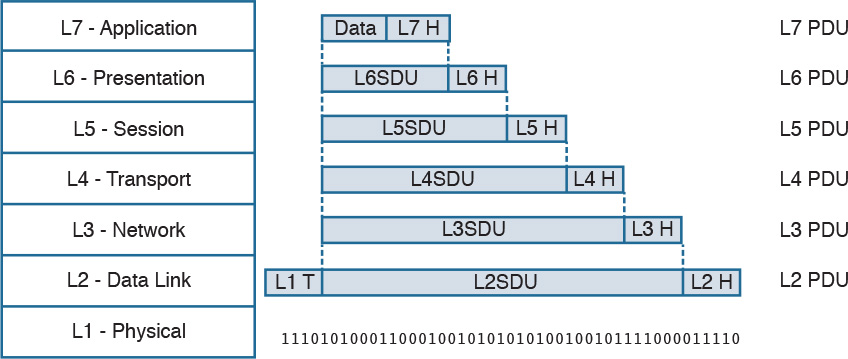

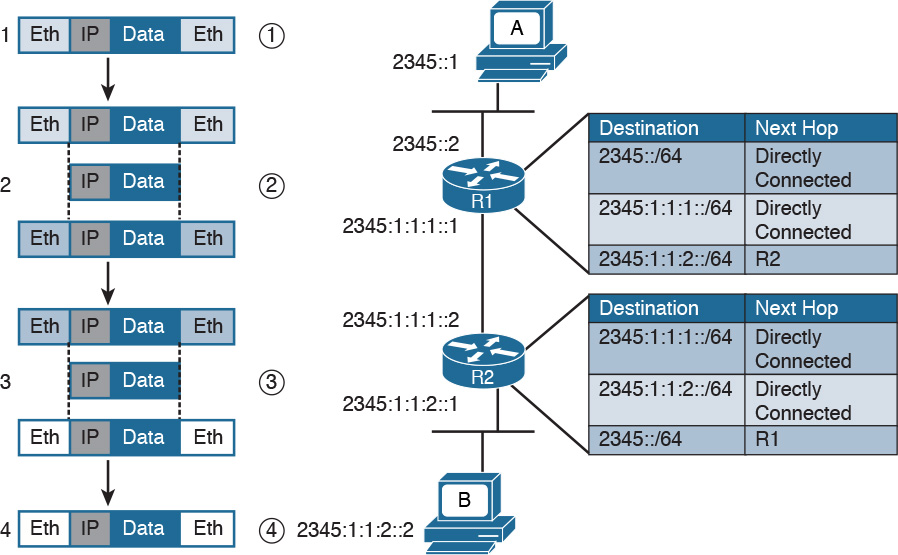

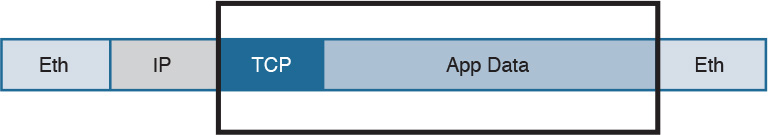

Figure 1-2 shows an example of encapsulation.

Referring to Figure 1-2, let’s assume that this represents the TCP/IP stack of a host, for example Host A, trying to request a web page using HTTP. Let’s see how the encapsulation works, step by step:

Step 1. In this example, the host has requested a web page using the HTTP application layer protocol. The HTTP application generates the information, represented as HTTP “data” in this example.

Step 2. On the host, the TCP/IP implementation would detect that HTTP uses TCP at the transport layer and will send the HTTP data to the transport layer for further handling. The protocol at the transport layer, TCP, will create a TCP header, which includes information such as the service port (TCP port 80 for a web page request), and will send it to the next layer, the Internet layer, for further processing. The TCP header plus the payload forms a TCP segment.

Step 3. The Internet layer receives the TCP information, attaches an IP header, and encapsulates it in an IP packet. The IP header will contain information to handle the packet at the Internet layer. This includes, for example, the IP addresses of the source and destination.

Step 4. The IP packet is then passed to the link layer for further processing. The TCP/IP stack detects that it needs to use Ethernet to transmit the frame to the next device. It will add an Ethernet header and trailer and transmit the frame to the physical network interface card (NIC), which will take care of the physical transmission of the frame.

When the information arrives to the destination, the receiving host will start from the bottom of the TCP/IP stack by receiving an Ethernet frame. The link layer of the destination host will read and process the header and trailer, and then pass the IP packet to the Internet layer for further processing.

The same process happens at the Internet layer, and the TCP segment is passed to the transport layer, which will again process the TCP header information and pass the HTTP data for final processing to the HTTP application.

Networking Communication with the TCP/IP Model

Let’s look back at the example of browsing a web page and see how the TCP/IP model is used to transmit and receive information through a networking connection path.

A networking device is a device that implements the TCP/IP model. The model may be fully implemented (for example, in the case of a user computer or a server) or partially implemented (for example, a router might implement the TCP/IP stack only up to the Internet layer).

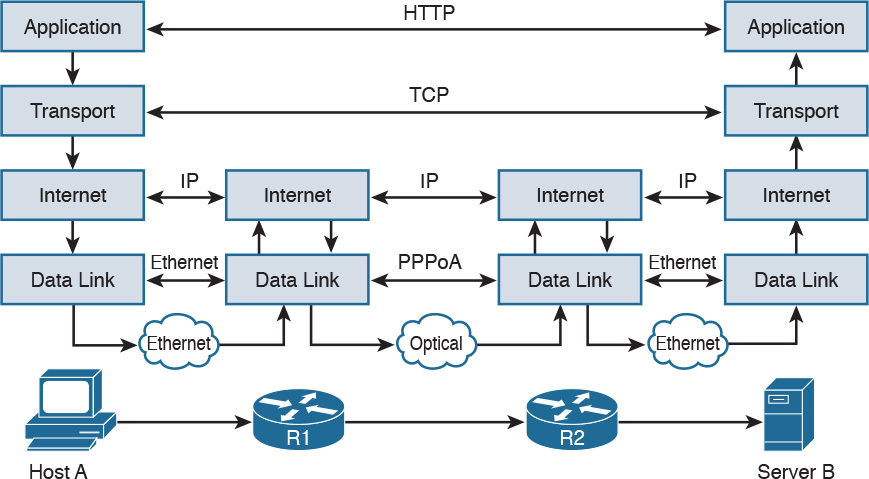

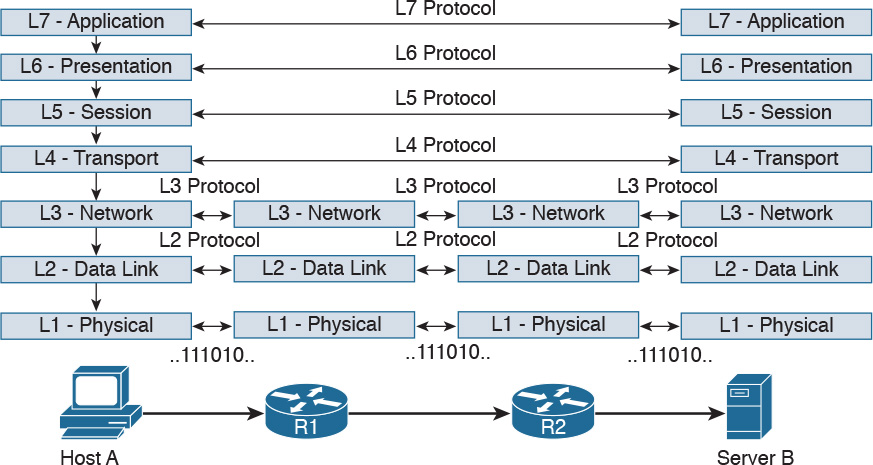

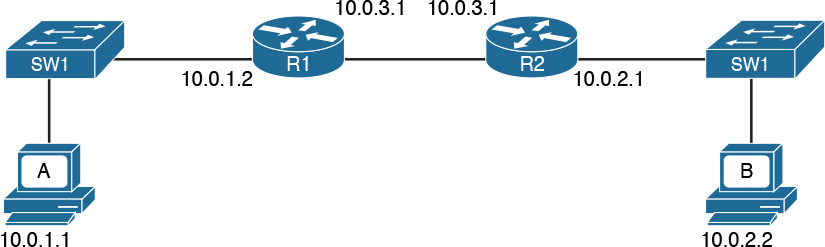

Figure 1-3 shows the logical topology. It includes two hosts: Host A, which is requesting a web page, and Server B, which is the destination of the request. The network connectivity is provided by two routers: R1 and R2, which are connected via an optical link. The host and server are directly connected to R1 and R2, respectively, with a physical cable.

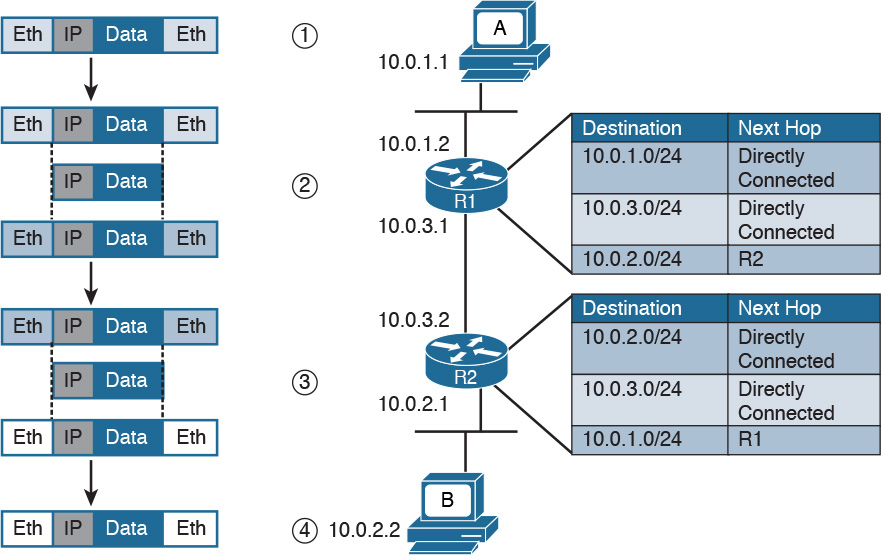

Figure 1-4 shows how each TCP/IP model layer interacts in this case.

Referring to Figure 1-4, let’s see how the steps are executed:

Step 1. The HTTP application on Host A will create an HTTP Application message that includes an HTTP header and the contents of the request in the payload. This will be encapsulated up to the link layer, as described in Figure 1-2, and transmitted over the cable to R1.

Step 2. The R1 link layer will receive the frame, extract the IP packet, and send it to the IP layer. Because the main function of the router is to forward the IP packet, it will not further decapsulate the packet. It will use the information in the IP header to forward the packet to the best next router, R2. To do that, it will encapsulate the IP packet in a new link layer frame—for example, Point-to-Point over ATM (PPPoA)—and send the frame on the physical link toward R2.

Step 3. R2 will follow the same process that R1 followed in step 2 and will send the IP packet encapsulated in a new Ethernet frame to Host B.

Step 4. Server B’s link layer will decapsulate the frame and send it to the Internet layer.

Step 5. The Internet layer detects that the packet is destined to Server B itself by looking into the IP header information (more specifically the value of the destination IP address). It strips the IP header and passes the TCP segment to the transport layer.

Step 6. The transport layer uses the port information included in the TCP header to determine to which application to pass the data (in this case, the web service application).

Step 7. The application layer, the web service, finally receives the request and may decide to respond (for example, by providing the web page to Host A). The process will start again, with the web service creating some data and passing it to the HTTP application layer protocol for handling.

The example in Figure 1-4 is very simplistic. For example, TCP requires a connection to be established before transmitting data. However, it is important that the main idea behind the TCP/IP model is clear as a basis for understanding how the various protocols work.

Open System Interconnection Model

The Open System Interconnection (OSI) reference model is another model that uses abstraction layers to represent the operation of communication systems. The idea behind the design of the OSI model is to be comprehensive enough to take into account advancement in network communications and to be general enough to allow several existing models for communication systems to transition to the OSI model.

The OSI model presents several similarities with the TCP/IP model described in the previous section. One of the most important similarities is the use of abstraction layers. As with TCP/IP, each layer provides service for the layer above it within the same computing device, while it interacts at the same layer with other computing devices.

The OSI model includes seven abstract layers, each representing a different function and service within a communication network:

![]() Physical layer—Layer 1 (L1): Provides services for the transmission of bits over the data link.

Physical layer—Layer 1 (L1): Provides services for the transmission of bits over the data link.

![]() Data link layer—Layer 2 (L2): Includes protocols and functions to transmit information over a link between two connected devices. For example, it provides flow control and L1 error detection.

Data link layer—Layer 2 (L2): Includes protocols and functions to transmit information over a link between two connected devices. For example, it provides flow control and L1 error detection.

![]() Network layer—Layer 3 (L3): This layer includes the function necessary to transmit information across a network and provides abstraction on the underlying means of connection. It defines L3 addressing, routing, and packet forwarding.

Network layer—Layer 3 (L3): This layer includes the function necessary to transmit information across a network and provides abstraction on the underlying means of connection. It defines L3 addressing, routing, and packet forwarding.

![]() Transport layer—Layer 4 (L4): This layer includes services for end-to-end connection establishment and information delivery. For example, it includes error detection, retransmission capabilities, and multiplexing.

Transport layer—Layer 4 (L4): This layer includes services for end-to-end connection establishment and information delivery. For example, it includes error detection, retransmission capabilities, and multiplexing.

![]() Session layer—Layer 5 (L5): This layer provides services to the presentation layer to establish a session and exchange presentation layer data.

Session layer—Layer 5 (L5): This layer provides services to the presentation layer to establish a session and exchange presentation layer data.

![]() Presentation layer—Layer 6 (L6): This layer provides services to the application layer to deal with specific syntax, which is how data is presented to the end user.

Presentation layer—Layer 6 (L6): This layer provides services to the application layer to deal with specific syntax, which is how data is presented to the end user.

![]() Application layer—Layer 7 (L7): This is the last (or first) layer of the OSI model (depending on how you see it). It includes all the services of a user application, including the interaction with the end user.

Application layer—Layer 7 (L7): This is the last (or first) layer of the OSI model (depending on how you see it). It includes all the services of a user application, including the interaction with the end user.

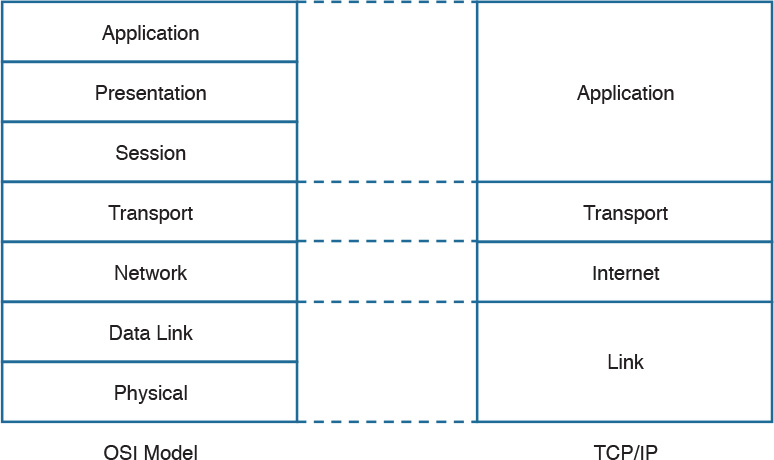

The functionalities of the OSI layers can be mapped to similar functionalities provided by the TCP/IP model. It is sometimes common to use OSI layer terminology to indicate a protocol operating at a specific layer, even if the communication device implements the TCP/IP model instead of the OSI model.

Figure 1-5 shows how each layer of the OSI model maps to the corresponding TCP/IP layer.

The physical and data link layers of the OSI model provide the same functions as the link layer in the TCP/IP model. The network layer can be mapped to the Internet layer, and the transport layer in OSI provides similar services as the transport layer in TCP/IP. The OSI session, presentation, and application layers map to the TCP/IP application layer.

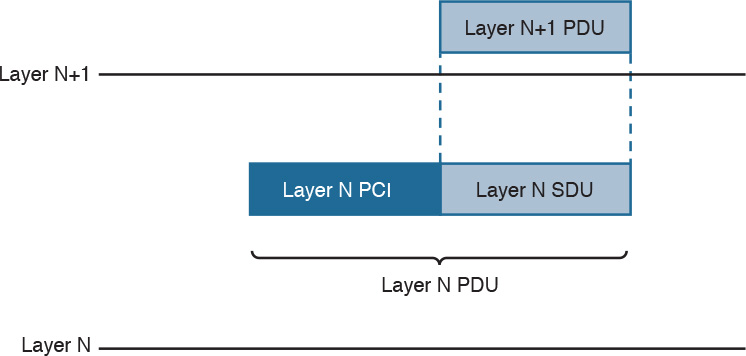

Within the same host, each layer interacts with the adjacent layer in a way that is similar to the encapsulation performed in the TCP/IP model. The encapsulation is formalized in the OSI model as follows:

![]() Protocol control information (PCI) for a layer (N) is the information added by the protocol.

Protocol control information (PCI) for a layer (N) is the information added by the protocol.

![]() A protocol data unit (PDU) for a layer (N) is composed by the data produced at that layer plus the PCI for that layer.

A protocol data unit (PDU) for a layer (N) is composed by the data produced at that layer plus the PCI for that layer.

![]() A service data unit (SDU) for a layer (N) is the (N+1) layer PDU.

A service data unit (SDU) for a layer (N) is the (N+1) layer PDU.

Figure 1-6 shows the relationship between PCI, PDU, and SDU.

For example, a TCP segment includes the TCP header, which maps to the L4PCI and a TCP payload, including the data to transmit. Together, they form a L4PDU. When the L4PDU is passed to the networking layer (for example, to be processed by IP), the L4PDU is the same as the L3SDU. IP will add an IP header, the L3PCI. The L3PCI plus the L3SDU will form the L3PDU, and so on.

The encapsulation process works in a similar way to the TCP/IP model. Each layer protocol adds its own protocol header and passes the information to the lower-layer protocol.

Figure 1-7 shows an example of encapsulation in the OSI model.

Table 1-4 shows examples of protocols and devices that work at a specific OSI layer. Note that each device is mapped to a level related to its main function capability. For example, a router’s main function is forwarding packets based on L3 information, so it is usually referred to as an L3 device; however, it also needs to incorporate L2 and L1 functionalities. Furthermore, a router may implement the full OSI model (for example, because it implements some additional features such as firewalling or VPN). The same rationale could be applied to firewalls. They are usually classified as L4 devices; however, most of the time they are able to inspect traffic up to the application layer.

The flow of information through a network in the OSI model is similar to what’s described in Figure 1-4 for the TCP/IP model. This is not by chance, because the OSI model has been designed to offer compatibility and enable the transition to the OSI model from multiple other communication models (for example, from TCP/IP).

Figure 1-8 shows a network implementing the OSI model.

In the rest of this book, we will use the OSI model and TCP/IP model layer names interchangeably.

Layer 2 Fundamentals and Technologies

This section goes through the fundamentals of the link layer (or Layer 2). Although it is not required to know specific implementations and configurations, the CCNA Cyber Ops SECFND exam requires candidates to understand the various link layer technologies, such as hubs, bridges, and switches, and their behavior. Candidates also need to understand the protocols that enable the link layer communication. Readers interested in learning more about Layer 2 technologies and protocols can refer to CCNA Routing and Switching materials for more comprehensive information on the topic.

Two very well-known concepts used to describe communication networks at Layer 2 are local area network (LAN) and wide area network (WAN). As the names suggest, a LAN is a collection of devices, protocols, and technologies operating nearby each other, whereas a WAN typically deals with devices, protocols, and technologies used to transmit information over a long distance.

The next sections introduce two of the most used LAN types: wired LANs (specifically Ethernet-based LANs) and wireless LANs.

Ethernet LAN Fundamentals and Technologies

Ethernet is a protocol used to provide transmission and services for the physical and data link layers, and it is described in the IEEE 802.3 standards collection. Ethernet is part of the larger IEEE 802 standards for LAN communication. Another example of the IEEE 802 standards is 802.11, which covers wireless LAN.

The Ethernet collection includes standards specifying the functionality at the physical layer and data link layer. The Ethernet physical layer includes several standards, depending on the physical means used to transmit the information. The data link layer functionality is provided by the Ethernet Medium Access Control (MAC) described in IEEE 802.3, together with the Logical Link Control (LLC) described in IEEE 802.2.

Note that MAC is sometimes referred to as Media Access Control instead of Medium Access Control. Both ways are correct according to the IEEE 802. In the rest of this document we will use Medium Access Control or simply MAC.

LLC was initially used to allow several types of Layer 3 protocols to work with the MAC. However, in most networks in use today, there is only one type of Layer 3 protocol, which is the Internet Protocol (IP), so LLC is seldom used because IP can be directly encapsulated using MAC.

The following sections provide an overview of the Ethernet physical layer and MAC layer standards.

Ethernet Physical Layer

The physical layer includes several standards to account for the various physical means possibly encountered in a LAN deployment. For example, the transmission can happen over an optical fiber, copper, and so on.

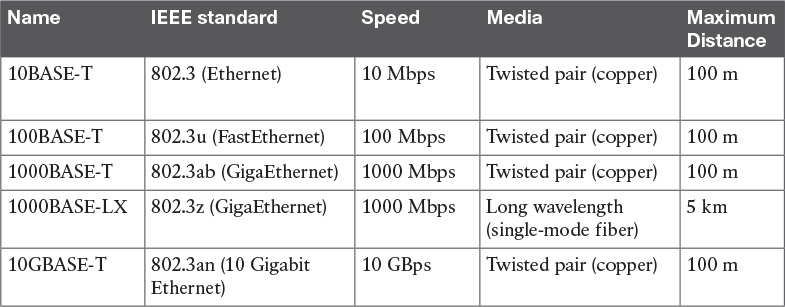

Examples of Ethernet standards are 10BASE-T and 1000BASE-LX. Each Ethernet standard is characterized by the maximum transmission speed and maximum distance between two connected stations. Specifically, the transmission speed has seen (and is currently seeing) the biggest evolution.

Table 1-5 shows examples of popular Ethernet physical layer standards.

The Ethernet nomenclature is easy to understand. Each standard name follows this format:

sTYPE-M

where:

![]() s: The speed (for example, 1000).

s: The speed (for example, 1000).

![]() TYPE: The modulation type (for example, baseband [BASE]).

TYPE: The modulation type (for example, baseband [BASE]).

![]() M: The information about the medium. Examples include T for twisted pair, F for fiber, L for long wavelength, and X for external sourced coding.

M: The information about the medium. Examples include T for twisted pair, F for fiber, L for long wavelength, and X for external sourced coding.

For example, with 1000BASE-T, the speed is 1000, the modulation is baseband, and the medium (T) is twisted-pair cable (copper).

An additional characteristic of a physical Ethernet standard is the type of cable and connector used to connect two stations. For example, 1000BASE-T would need a Category 6 (CAT 6) unshielded twisted-pair cable (UTP) and RJ-45 connectors.

Ethernet Medium Access Control

Ethernet MAC deals with the means used to transfer information between two Ethernet devices, also called stations, and it is independent from the physical means used for transmission.

The standard describes two modes of medium access:

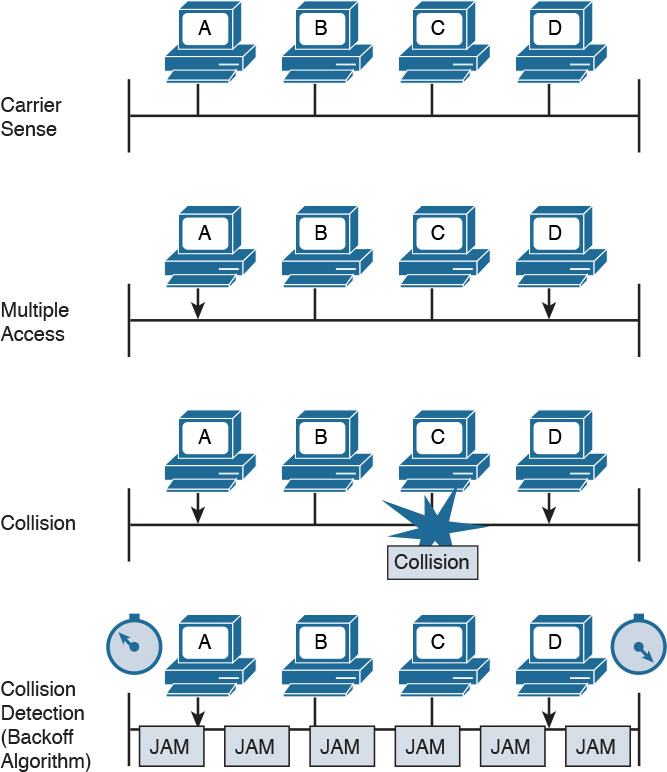

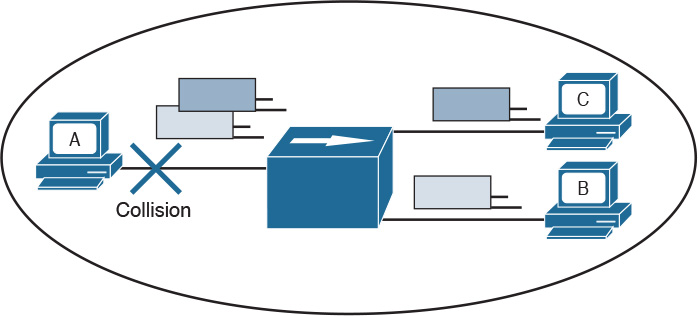

![]() Half duplex: In half-duplex mode, two Ethernet devices share a common transmission medium. The access is controlled by implementing Carrier Sense Multiple Access with Collision Detection (CSMA/CD). In CSMA/CD, a device has the ability to detect whether there is a transmission occurring over the shared medium. When there is no transmission, a device can start sending. It can happen that two devices send nearly at the same time. In that case, there is a message collision. When a collision occurs, it is detected by CSMA/CD-enabled devices, which will then stop transmitting and will delay the transmission for a certain amount of time, called the backoff time. The jam signal is used by the station to signal that a collision occurred. All stations that can sense a collision are said to be in the same collision domain.

Half duplex: In half-duplex mode, two Ethernet devices share a common transmission medium. The access is controlled by implementing Carrier Sense Multiple Access with Collision Detection (CSMA/CD). In CSMA/CD, a device has the ability to detect whether there is a transmission occurring over the shared medium. When there is no transmission, a device can start sending. It can happen that two devices send nearly at the same time. In that case, there is a message collision. When a collision occurs, it is detected by CSMA/CD-enabled devices, which will then stop transmitting and will delay the transmission for a certain amount of time, called the backoff time. The jam signal is used by the station to signal that a collision occurred. All stations that can sense a collision are said to be in the same collision domain.

Half-duplex mode was used in early implementations of Ethernet; however, due to several limitations, including transmission performance, it is rarely seen nowadays. A network hub is an example of a device that can be used to share a common transmission medium across multiple Ethernet stations. You’ll learn more about hubs later in this chapter in the “LAN Hubs and Bridges” section.

Figure 1-9 shows an example of CSMA/CD access.

![]() Full duplex: In full-duplex mode, two devices can transmit simultaneously because there is a dedicated channel allocated for the transmission. Because of that, there is no need to detect collisions or to wait before transmitting. Full duplex is called “collision free” because collisions cannot happen.

Full duplex: In full-duplex mode, two devices can transmit simultaneously because there is a dedicated channel allocated for the transmission. Because of that, there is no need to detect collisions or to wait before transmitting. Full duplex is called “collision free” because collisions cannot happen.

A switch is an example of a device that provides a collision-free domain and dedicated transmission channel. You’ll learn more about switches later in this chapter in the “LAN Switches” section.

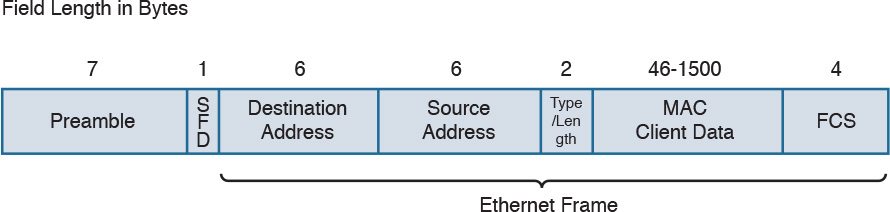

Ethernet Frame

Figure 1-10 shows an example of an Ethernet frame.

The Ethernet frame includes the following fields:

![]() Preamble: Used for the two stations for synchronization purposes.

Preamble: Used for the two stations for synchronization purposes.

![]() Start Frame Delimiter (SFD): Indicates the start of the Ethernet frame. This is always set to 10101011.

Start Frame Delimiter (SFD): Indicates the start of the Ethernet frame. This is always set to 10101011.

![]() Destination Address: Contains the recipient address of the frame.

Destination Address: Contains the recipient address of the frame.

![]() Source Address: Contains the source of the frame.

Source Address: Contains the source of the frame.

![]() Length/Type: This field can contain either the length of the MAC Client Data (length interpretation) or the type code of the Layer 3 protocol transported in the frame payload (type interpretation). The latter is the most common. For example, code 0800 indicates IPv4, and code 08DD indicates IPv6.

Length/Type: This field can contain either the length of the MAC Client Data (length interpretation) or the type code of the Layer 3 protocol transported in the frame payload (type interpretation). The latter is the most common. For example, code 0800 indicates IPv4, and code 08DD indicates IPv6.

![]() MAC Client Data and Pad: This field contains information being encapsulated at the Ethernet layer (for example, an LLC PDU or an IP packet). The minimum length is 46 bytes; the maximum length depends on the type of Ethernet frame:

MAC Client Data and Pad: This field contains information being encapsulated at the Ethernet layer (for example, an LLC PDU or an IP packet). The minimum length is 46 bytes; the maximum length depends on the type of Ethernet frame:

![]() 1500 bytes for basic frames. This is the most common Ethernet frame.

1500 bytes for basic frames. This is the most common Ethernet frame.

![]() 1504 bytes for Q-tagged frames.

1504 bytes for Q-tagged frames.

![]() 1982 bytes for envelope frames.

1982 bytes for envelope frames.

![]() Frame Check Sequence (FCS): This field is used by the receiving device to detect errors in transmission. This is usually called the Ethernet trailer. Optionally, an additional extension may be present.

Frame Check Sequence (FCS): This field is used by the receiving device to detect errors in transmission. This is usually called the Ethernet trailer. Optionally, an additional extension may be present.

Ethernet Addresses

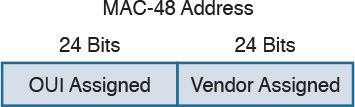

To transmit a frame, Ethernet uses source and destination addresses. The Ethernet addresses are called MAC addresses, or Extended Unique Identifier (EUI) in the new terminology, and they are either 48 bits (MAC-48 or EUI-48) or 64 bits (MAC-64 or EUI-64), if we consider all MAC addresses for the larger IEEE 802 standard.

The MAC address is usually expressed in hexadecimal. There are few ways it can be written for easier reading. The following two ways are the ones used the most:

![]() 01-23-45-67-89-ab (IEEE 802)

01-23-45-67-89-ab (IEEE 802)

![]() 0123.4567.89ab (Cisco notation)

0123.4567.89ab (Cisco notation)

There are three types of MAC addresses:

![]() Broadcast: A broadcast MAC address is obtained by setting all 1s in the MAC address field. This results in an address like FFFF.FFFF.FFFF. A frame with a broadcast destination address is transmitted to all the devices within a LAN.

Broadcast: A broadcast MAC address is obtained by setting all 1s in the MAC address field. This results in an address like FFFF.FFFF.FFFF. A frame with a broadcast destination address is transmitted to all the devices within a LAN.

![]() Multicast: A frame with a multicast destination MAC address is transmitted to all frames belonging to the specific group.

Multicast: A frame with a multicast destination MAC address is transmitted to all frames belonging to the specific group.

![]() Unicast: A unicast address is associated with a particular device’s NIC or port. It is composed of two sections. The first 24 bits contain the Organizational Unique Identifier (OUI) assigned to an organization. Although this is unique for an organization, the same organization can request several OUIs. For example, Cisco has multiple registered OUIs. The other portion of the MAC address (for example, the remaining 24 bits in the case of MAC-48) can be assigned by the vendor itself.

Unicast: A unicast address is associated with a particular device’s NIC or port. It is composed of two sections. The first 24 bits contain the Organizational Unique Identifier (OUI) assigned to an organization. Although this is unique for an organization, the same organization can request several OUIs. For example, Cisco has multiple registered OUIs. The other portion of the MAC address (for example, the remaining 24 bits in the case of MAC-48) can be assigned by the vendor itself.

Figure 1-11 shows the two portions of a MAC address.

Ethernet Devices and Frame-Forwarding Behavior

So far we have discussed the basic concepts of Ethernet, such as frame formats and addresses. It is now time to see how all this works in practice. We will start with the most basic case and progress toward a more complicated frame forwarding behavior and topology.

LAN Hubs and Bridges

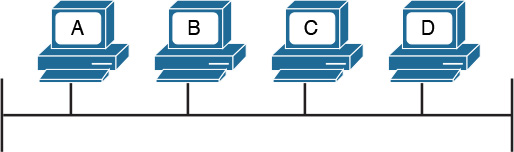

As discussed previously, a collision domain is defined as two or more stations needing to share the same medium. This setup requires some algorithm to avoid two frames being sent at nearly the same time and thus colliding. When a collision occurs, the information is lost. CSMA/CD has been used to resolve the collision problem by allowing an Ethernet station to detect a collision and avoid retransmitting at the same time.

The simplest example of a collision domain is an Ethernet bus where all the stations are connected as shown in Figure 1-12.

Because the Ethernet signal will degrade across the distance between the stations, the same topology could be obtained by using a central LAN hub where all the stations connect. The role of the LAN hub or repeater was to regenerate the signal uniquely and transmit this signal to all its ports. This topology is typically half-duplex transmission mode and, as in the case of an Ethernet bus, defines a single collision domain.

Figure 1-13 shows how the information sent by Host A is repeated over all the hub’s ports.

Figure 1-13 A Network Hub Where the Electrical Signal of a Frame Is Regenerated and the Information Sent Out to All the Device Ports

Before transmitting, a station senses the medium (also called carrier) to see if any frame is being transmitted. If the medium is empty, the station can start transmitting. If two stations start at nearly the same time, as is the case in this example, a collision occurs. All stations in the collision domain detect the collision and adopt a backoff algorithm to delay the transmission.

Figure 1-14 shows an example of a collision happening with a hub network. Note that B will also receive a copy of the frame sent from C, and C will receive a copy of the frame sent from B; although, this is not shown in the picture for simplicity.

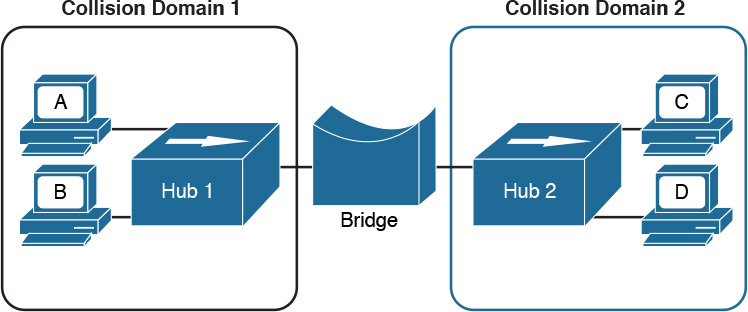

Collision domains are highly inefficient because two stations cannot transmit at the same time. The performance becomes even more impacted as the number of stations connected to the same hubs increases. To partially overcome that situation, networking bridges are used. A bridge is a device that allows the separation of collision domain.

Unlike a LAN hub, which will just regenerate the signal, a LAN bridge typically implements some frame-forwarding decision based on whether or not a frame needs to reach a device on the other side of the bridge.

Figure 1-15 shows an example of a network with hubs and bridges. The bridges partition the network into two collision domains, thus allowing the size of the network to scale.

LAN Switches

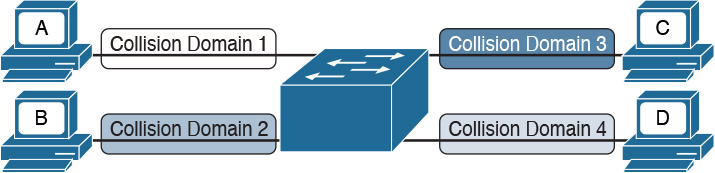

In modern networks, half-duplex mode has been replaced by full-duplex mode. Full-duplex mode allows two stations to transmit simultaneously because the transmission and receiver channels are separated. Because of that, in full duplex, CSMA/CD is not used because collisions cannot occur.

A LAN switch is a device that allows multiple stations to connect in full-duplex mode. This creates a separate collision domain for each of the ports, so collisions cannot happen. For example, Figure 1-16 shows four hosts connected to a switch. Each host has a separate channel to transmit and receive, so each port actually identifies a collision domain. Note that usually in this kind of scenario it does not make sense to refer to a port as collision domain, and it is usually more practical to assume that there is no collision domain—because no collision can occur.

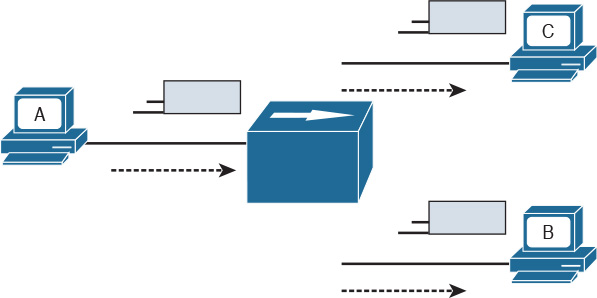

How does a switch forward a frame? Whereas a hub would just replicate the same information on all the ports, a switch tries to do something a bit more intelligent and use the destination MAC address to forward the frame to the right station.

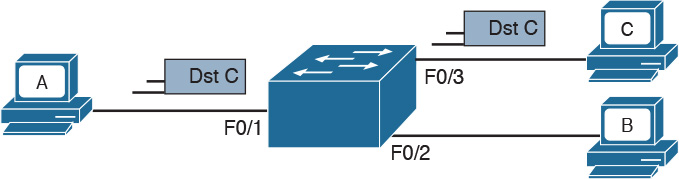

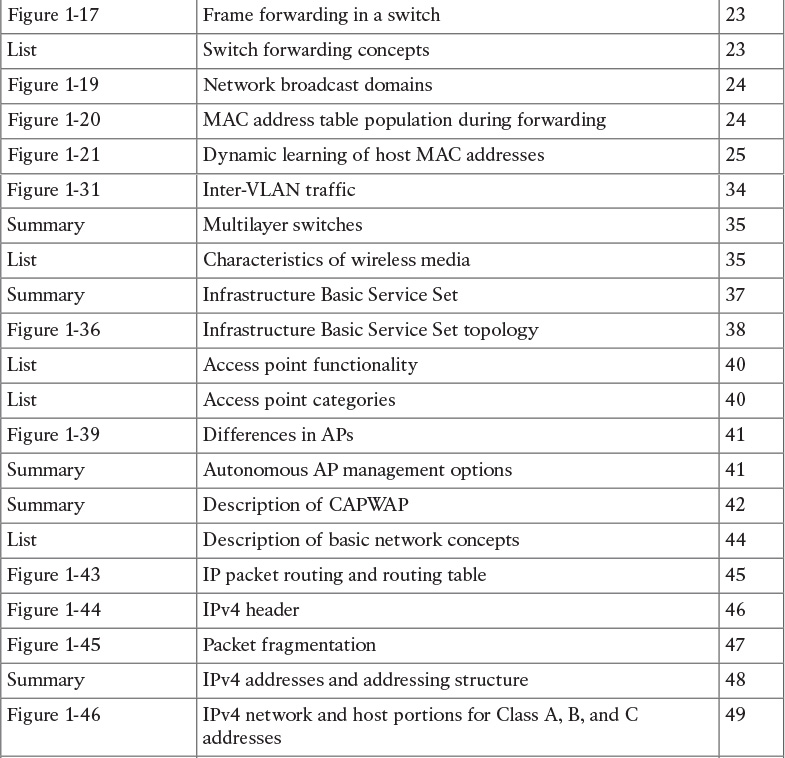

Figure 1-17 shows a simple example of frame forwarding.

How does a switch know to which port to forward a frame? Before this forwarding mechanism can be explained, we need to discuss three concepts:

![]() MAC address table: This table holds the link between a MAC address and the physical port of the switch where frames for that MAC address should be forwarded.

MAC address table: This table holds the link between a MAC address and the physical port of the switch where frames for that MAC address should be forwarded.

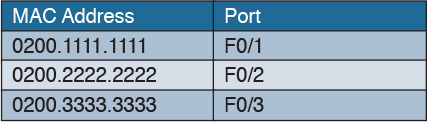

Figure 1-18 shows an example of a simplified MAC address table.

![]() Dynamic MAC address learning: It is possible to populate the MAC address table manually, but that is probably not the best use of anyone’s time. Dynamic learning is a mechanism that helps with populating the MAC address table. When a switch receives an Ethernet frame on a port, it notes the source MAC address and inserts an entry in the MAC address table, marking that MAC address as reachable from that port.

Dynamic MAC address learning: It is possible to populate the MAC address table manually, but that is probably not the best use of anyone’s time. Dynamic learning is a mechanism that helps with populating the MAC address table. When a switch receives an Ethernet frame on a port, it notes the source MAC address and inserts an entry in the MAC address table, marking that MAC address as reachable from that port.

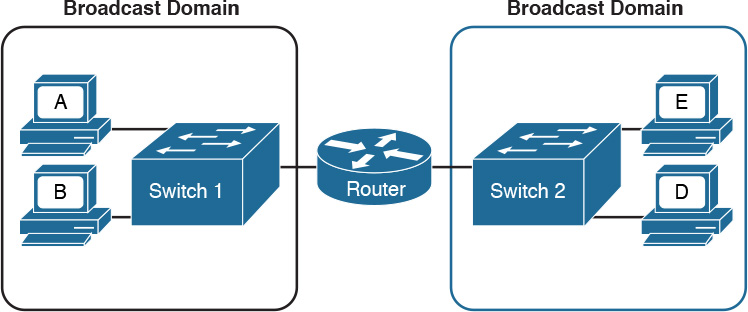

![]() Ethernet Broadcast domain: A broadcast domain is formed by all devices connected to the same LAN switches. Broadcast domains are separated by network layer devices such as routers. An Ethernet broadcast domain is sometimes also called a subnet.

Ethernet Broadcast domain: A broadcast domain is formed by all devices connected to the same LAN switches. Broadcast domains are separated by network layer devices such as routers. An Ethernet broadcast domain is sometimes also called a subnet.

Figure 1-19 shows an example of a network with two broadcast domains separated by a router.

Now that you have been introduced to the concepts of a MAC address table, dynamic MAC address learning, and broadcast domain, we can look at a few examples that explain how the forwarding is done.

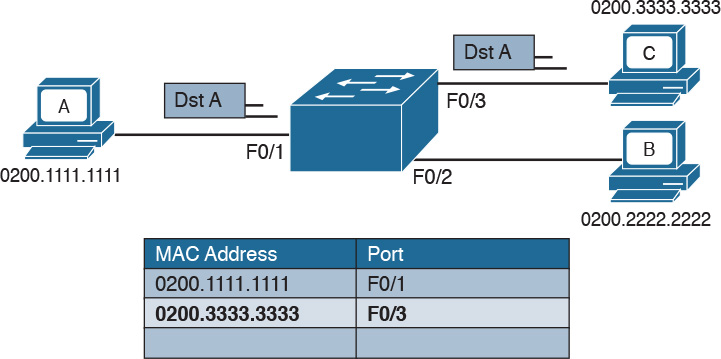

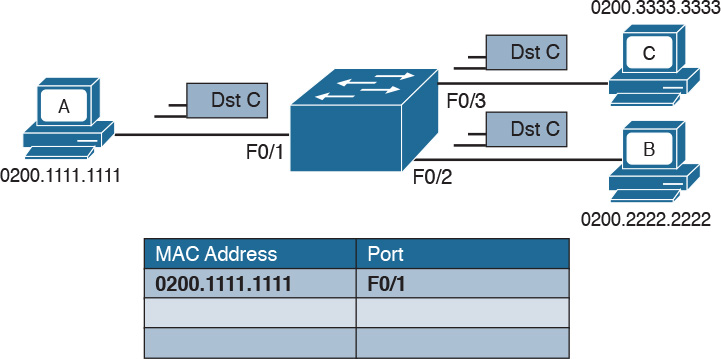

The forwarding decision is uniquely done based on the destination MAC address. In this example, Host A with MAC address 0200.1111.1111, connected to switch port F0/1, is sending traffic (Ethernet frames) to Host C with MAC address 0200.3333.3333, connected to port F0/3.

At the beginning, the MAC address table of the switch is empty. When the first frame is received on port F0/1, the switch does two things:

![]() It looks up the MAC address table. Because the table is empty, it forwards the frame to all its ports except the one where the frame was received. This is usually called flooding.

It looks up the MAC address table. Because the table is empty, it forwards the frame to all its ports except the one where the frame was received. This is usually called flooding.

![]() It uses dynamic MAC address learning to update the MAC address table with the information that 0200.1111.1111 is reachable through port F0/1.

It uses dynamic MAC address learning to update the MAC address table with the information that 0200.1111.1111 is reachable through port F0/1.

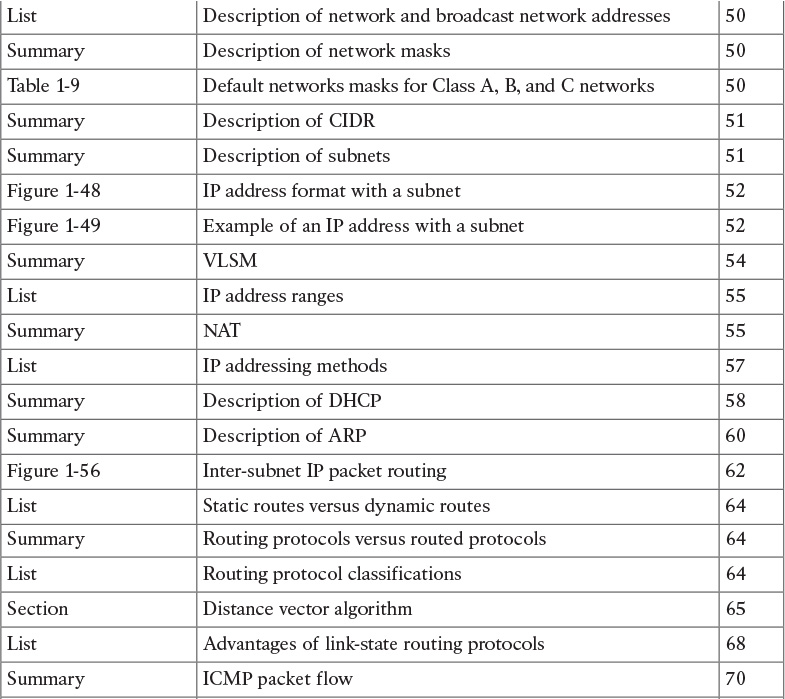

Figure 1-20 shows the frame flooding and the MAC address table updated with the information about Host A.

Figure 1-20 Example of a MAC Address Table Being Updated as the Frame Is Received and Forwarded by the Switch

Host B receives a copy of the frame; however, because the destination MAC address is not its own, it discards the frame. Host C receives the frame and may decide to respond. When Host C responds, the switch will look up the MAC address table. This time, it will find an entry for Host A and will just forward the frame on port F0/1 toward Host A. Like in the previous case, it will update the MAC address table to indicate that 0200.3333.3333 (Host C) is reachable through port F0/3, as shown in Figure 1-21.

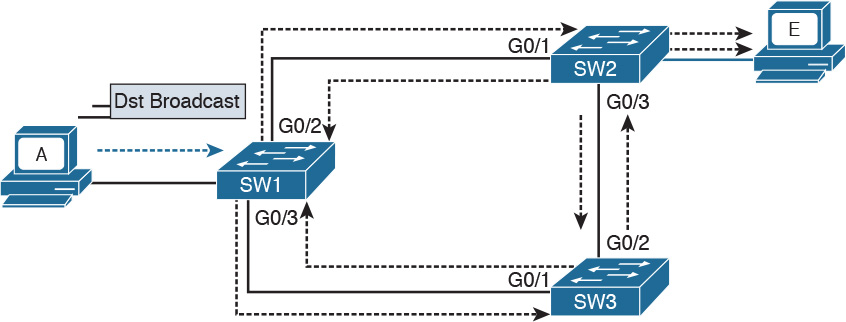

The flooding mechanism is also used when a frame has a broadcast destination MAC address. In that case, the frame will be forwarded to all ports in the Ethernet broadcast domain. In a more complex topology, switches may be connected to each other, sometimes with multiple ports to ensure redundancy; however, the basic forwarding principles do not change. All MAC addresses that are reachable via other switches will be marked in the MAC address table as reachable via the port where the switches are connected.

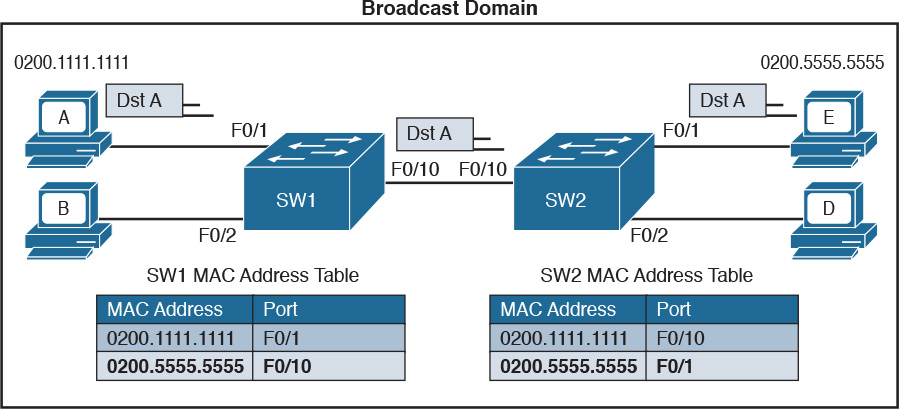

Figure 1-22 shows an example of Host A connected to port F0/1 of Switch 1 and sending traffic to Host E, connected to F0/1 of Switch 2. Switch 1 and Switch 2 are connected via port F0/10 on both sides.

Figure 1-22 Frame Forwarding and MAC Address Table Updates with Multiple Switches. Host A sends a frame for Host E.

When Host A sends the first frame, Switch 1 will flood it on all ports, including on port F0/10 toward Switch 2. Switch 2 will also flood on all its ports because it does not know where Host E is located. Both Switch 1 and Switch 2 will use dynamic learning to update their own MAC address tables. Switch 1 will mark Host A as reachable via F0/1, while Switch 2 will mark Host A as reachable via F0/10.

If Host E responds to Host A, the same steps will be repeated, as shown in Figure 1-23.

Figure 1-23 Frame Forwarding and MAC Address Table Updates with Multiple Switches. Host E replies to a frame sent by Host A.

Link Layer Loop and Spanning Tree Protocols

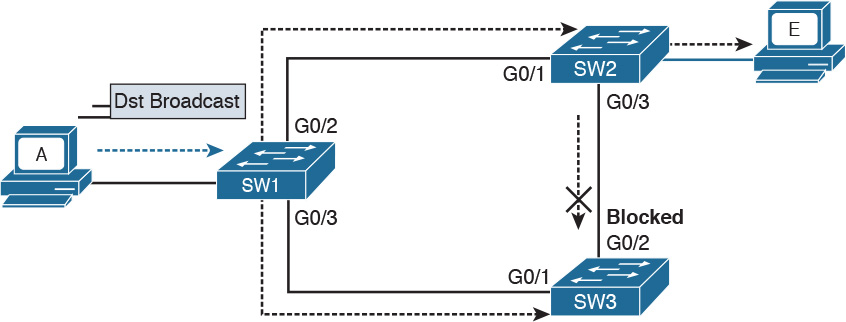

Let’s now consider another example, shown in Figure 1-24, where three switches (SW1, SW2, and SW3) are interconnected.

Assume that Host A, connected to SW1, sends a broadcast frame. SW1 will forward the frame to SW2 and SW3 on ports G0/2 and G0/3. SW2 will receive the frame and forward it to SW3 and Host E. SW3 will do the same and forward the frame to SW2. SW3 will again receive the frame from SW2 and will forward it to SW1, and so on.

As you can see, the frame will loop indefinitely within the LAN, thus causing degradation of the network performance due to the useless forwarding of frames. This is called a broadcast storm. Other types of loops can happen—for example, if Host A would have sent a frame to a host that never replies (hence, no switches know where the host is). In general, link layer (or Layer 2) loops can happen every time there is a redundant link within the Layer 2 topology.

The second undesirable effect of Layer 2 loops is MAC table instability. SW1 in the preceding example will keep (incorrectly) updating the MAC address table, marking Host A on port G0/2 and G0/3 as it receives the looping frames with the source address of Host A on these two ports. So, whenever SW1 receives frames for Host A, it will incorrectly send them to the wrong port, making the problem worse.

The third effect of a Layer 2 loop is that a host (for example, Host E) will keep receiving a copy of the same frame that’s circulating within the network. This can confuse the host and may result in higher-layer protocol failure.

Spanning Tree Protocols (STPs) are used to avoid Layer 2 loops. This section describes the fundamental concepts of STPs. Over the years, the concept has been enhanced to improve performance and to take into consideration the evolution of network complexity. In its basic function, the STP creates a logical Layer 2 topology that is loop free. This is done by allowing traffic on certain ports and blocking traffic on others. If the topology changes (for example, if a link fails), STP will recalculate the new logical topology (it is said to “reconverge”) and unblock certain ports to adapt to the new topology.

Figure 1-25 shows STP applied to the previous example. Port G0/2 on SW3 is marked as blocked, and it will not forward traffic. This avoids frames looping. If the link between SW1 and SW3 goes down, STP will unblock the link between SW3 and SW2 to allow traffic to pass and provide redundancy.

STP uses a spanning tree algorithm (STA) to create a tree-like, loop-free logical topology. To understand how a basic STP works, we need to explore a few concepts:

![]() Bridge ID (BID): An 8-byte ID that is independently calculated on each switch. The first 2 bytes of the BID contain the priority, while the remaining 6 bytes includes the MAC address of the switch (of one of its ports).

Bridge ID (BID): An 8-byte ID that is independently calculated on each switch. The first 2 bytes of the BID contain the priority, while the remaining 6 bytes includes the MAC address of the switch (of one of its ports).

![]() Bridge PDU (BPDU): Represents the STP protocol messages. The BPDU is sent to a multicast MAC address. The address may depend on the specific STP protocol in use.

Bridge PDU (BPDU): Represents the STP protocol messages. The BPDU is sent to a multicast MAC address. The address may depend on the specific STP protocol in use.

![]() Root switch: Represents the root of the spanning tree. The spanning tree root is identified through a process called root election. The root switch BID is called the root BID.

Root switch: Represents the root of the spanning tree. The spanning tree root is identified through a process called root election. The root switch BID is called the root BID.

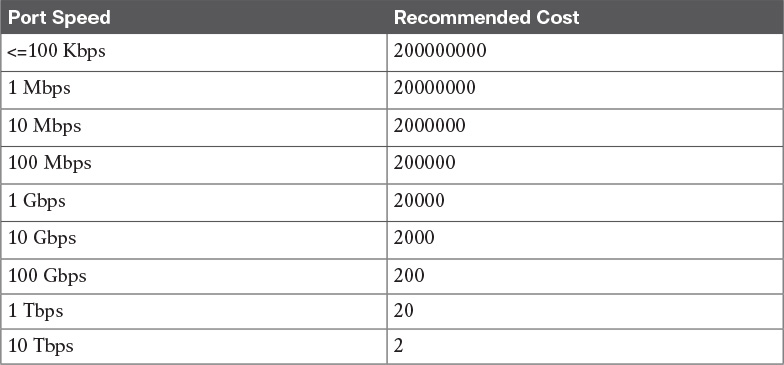

![]() Port cost: A numerical value associated to each spanning tree port. Usually this value depends on the speed of the port. The higher the speed, the lower the cost. Table 1-6 reports the recommended values from IEEE (in IEEE 802.1Q-2014).

Port cost: A numerical value associated to each spanning tree port. Usually this value depends on the speed of the port. The higher the speed, the lower the cost. Table 1-6 reports the recommended values from IEEE (in IEEE 802.1Q-2014).

![]() Root cost: Represents the cost to reach the root switch. The root cost is given by summing all the costs of the ports on the shortest path to the root switch. The root cost value of the root switch is 0.

Root cost: Represents the cost to reach the root switch. The root cost is given by summing all the costs of the ports on the shortest path to the root switch. The root cost value of the root switch is 0.

At initialization, an STP root switch needs to be identified. The root switch will be the switch with the lower BID. The BID priority field is used first to determine the lower BID; if two switches have the same priority, then the MAC address is used to determine the root.

The process to identify the switch with the lower BID is called root election. At the beginning, each switch tries to become the root and sends out a Hello BPDU to announce its presence in the network to the rest of the switches. The initial Hello BPDU includes its own switch BID as the root BID in the BPDU field.

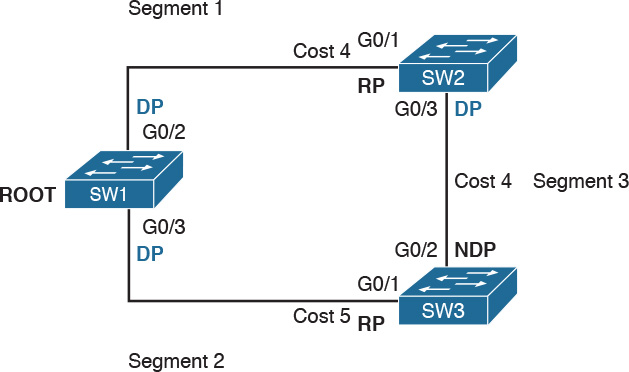

When a switch receives a Hello BPDU with a better root BID (lower BID), it will stop sending its own Hello BPDU and will forward the Hello BPDU generated from the root switch. It will also update the root cost and add the cost of the port where the BPDU was received. The process continues until the root election is over and a root switch is identified. At this point, all switches on the network know which switch is the root and what the root cost is to that switch. Figure 1-26 shows an example of root election in our sample topology.

SW1 will send a BPDU to SW2 and SW3. When SW2 receives the BPDU from SW1, it will see that the BID for SW1 is lower than its own BID, so it will update the Root BID entry to include the BID of SW1. SW2 will then forward the BPDU to SW3 with a root cost of 4.

SW3 has also received the BPDU from SW1 and already updated the Root BID entry with SW1’s BID because it is lower than its own BID. It will then forward the BPDU to SW2 with a root cost of 5. At the end, SW1 becomes the root within this topology.

As stated at the beginning of this section, the spanning tree is created by blocking a certain port. Once the root switch is elected, the tree can start to be built. At this point, we need to discuss the concepts of port role and port state:

![]() Port role: Depending on the STP-specific protocols, there are a few names and roles for ports; however, three main roles are important for understanding how STP works. Once that is clear, the nuances of the various STP protocols can be easily understood.

Port role: Depending on the STP-specific protocols, there are a few names and roles for ports; however, three main roles are important for understanding how STP works. Once that is clear, the nuances of the various STP protocols can be easily understood.

![]() Root port (RP) is the port that offers the lowest path cost (root cost) to the root on non-root switches.

Root port (RP) is the port that offers the lowest path cost (root cost) to the root on non-root switches.

![]() Designated port (DP) is the port that offers the lowest path to the root for a given LAN segment. For example, if a switch has a host attached to a port, that port becomes a DP because it’s the closest port to the root for that LAN segment. The switch is told to be the designated switch for that LAN segment. All ports on a root switch are DP.

Designated port (DP) is the port that offers the lowest path to the root for a given LAN segment. For example, if a switch has a host attached to a port, that port becomes a DP because it’s the closest port to the root for that LAN segment. The switch is told to be the designated switch for that LAN segment. All ports on a root switch are DP.

![]() Non-designated ports are all the other ports that are not either the RP or DP. Depending on the specific STP standards, they can assume various names, and the standard can define additional port categories.

Non-designated ports are all the other ports that are not either the RP or DP. Depending on the specific STP standards, they can assume various names, and the standard can define additional port categories.

Let’s look again at our topology, but in a bit different way. Referring to Figure 1-26, we can identify three segments. On the root switch, SW1, all ports are DPs because they offer the shortest path to the root for Segments 1 and 2. What is the DP for Segment 3? Port G0/3 on SW2 will become the DP because its cost to the root is 4, whereas Port G0/2 on SW3 would have a cost of 5.

The RP identification is a bit easier. For each port on a non-root switch, we select the port with the lower path to the root. In this case, G0/1 on SW2 and G0/1 on SW3 become the RP. All remaining ports will be non-designated ports.

![]() Port state: The port state is related to the specific action a port can take while in that state. As in the port role definition, the name of the state depends on the STP protocol being used. Here are some common examples of port states:

Port state: The port state is related to the specific action a port can take while in that state. As in the port role definition, the name of the state depends on the STP protocol being used. Here are some common examples of port states:

![]() Blocking: In this state, a port blocks all frames received except Layer 2 management frames (for example, BPDU).

Blocking: In this state, a port blocks all frames received except Layer 2 management frames (for example, BPDU).

![]() Listening: A port transitions to this state from the blocking state when the STP determines that the port needs to participate in the forwarding. At this stage, however, the port is not fully functional. It can process BPDU and respond to Layer 2 management messages, but it does not accept frames.

Listening: A port transitions to this state from the blocking state when the STP determines that the port needs to participate in the forwarding. At this stage, however, the port is not fully functional. It can process BPDU and respond to Layer 2 management messages, but it does not accept frames.

![]() Learning: The port transitions to learning after the listening phase. In this phase, the port still does not forward frames; however, it learns the MAC addresses via dynamic learning and fills in the MAC address table.

Learning: The port transitions to learning after the listening phase. In this phase, the port still does not forward frames; however, it learns the MAC addresses via dynamic learning and fills in the MAC address table.

![]() Forwarding: In this state, the port is fully operational and receives and forwards frames.

Forwarding: In this state, the port is fully operational and receives and forwards frames.

![]() Disabled: A port in disable state does not forward and receive frames and does not participate in the STP process, so it does not process BPDU.

Disabled: A port in disable state does not forward and receive frames and does not participate in the STP process, so it does not process BPDU.

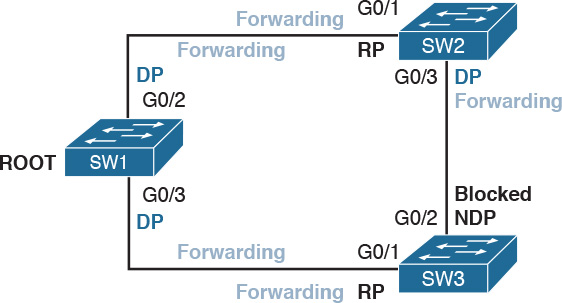

When the STP protocol has converged, which means the RPs and DPs are identified, each port transitions to a terminal state. Every RP and DP will be in the forwarding state, while all the other ports will be in the blocking state. Figure 1-27 shows the terminal state of the ports in our topology.

STP provides a critical function within communication networks, so a wrong design or implementation of the Spanning Tree Protocol (for example, an incorrect selection of the root switch) could lead to poor performance or even catastrophic failure in some cases.

Through the years, Spanning Tree Protocols have seen several updates and new standards have emerged. The most common versions of Spanning Tree Protocols in use today are Rapid STP, Per-VLAN STP+ (PVSTP+), and Multiple Spanning Tree (MST).

Virtual LAN (VLAN) and VLAN Trunking

So far, we have assumed that everything happens within a single LAN. In simple terms, a LAN can be identified as a part of the network within a single broadcast domain. LANs (and broadcast domains) are separated by Layer 3 devices such as routers.

As the network grows and becomes more complex, operating within a single broadcast domain degrades the network performance and adds complexity to management protocols, such as to the STP.

The concept of a virtual LAN (VLAN) has been introduced to overcome the issues created by a very large single LAN. A VLAN can exist within a switch, and each switch port can be assigned to a specific VLAN.

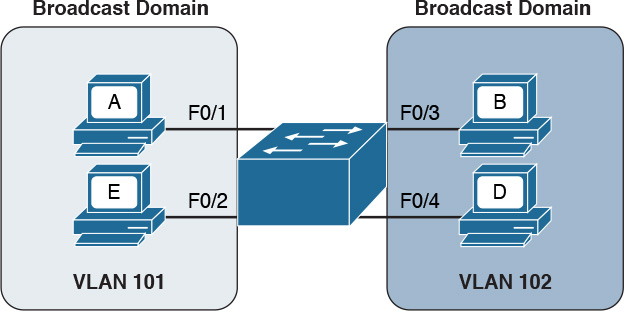

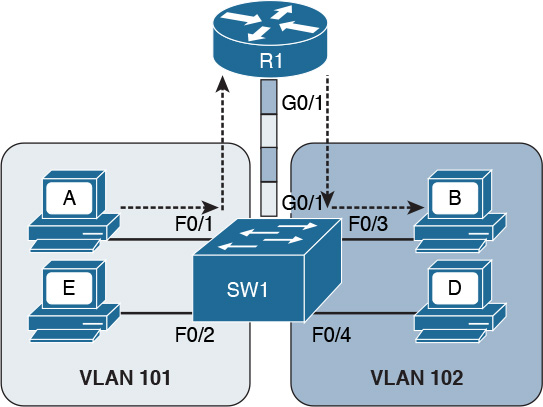

Figure 1-28 shows four hosts connected to the same switch. Host A and Host E are assigned to VLAN 101 whereas Host B and Host D are assigned to VLAN 102. The switch treats a host in one VLAN as being in a single broadcast domain. A packet from one VLAN cannot be forwarded to a different VLAN at Layer 2. As such, a VLAN provides Layer 2 network separation.

Here are some common benefits of using a VLAN:

![]() Reduces the number of devices receiving the broadcast frame and the related overhead

Reduces the number of devices receiving the broadcast frame and the related overhead

![]() Creates Layer 2 network separation

Creates Layer 2 network separation

![]() Reduces management protocols’ load and complexity

Reduces management protocols’ load and complexity

![]() Segments troubleshooting and failure areas, as failure in one VLAN will not be propagated to the rest of the network

Segments troubleshooting and failure areas, as failure in one VLAN will not be propagated to the rest of the network

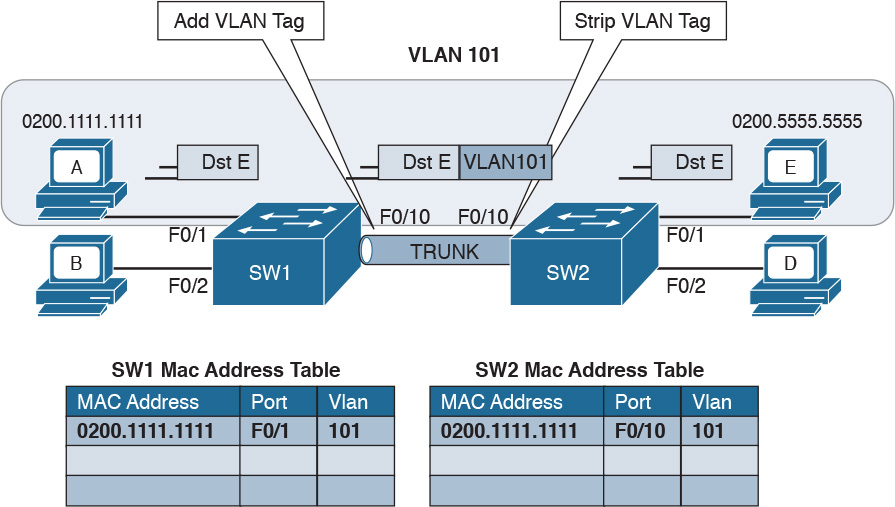

How does frame forwarding work in VLANs? The same process we described for a single LAN applies for each VLAN. The switch knows which port is linked to which VLAN and will forward the frame accordingly. In the case of multiple switches, the VLAN concept can still work. Figure 1-29 shows the VLAN concept across two switches.

In this case, Host A and Host E, although attached to two different switches, can still be configured within the same VLAN (for example, VLAN 101). The link between SW1 and SW2 is called a trunk, and it is a special link because it can transport frames belonging to several VLANs.

VLAN tagging is used to enable the forwarding between Host A and Host E within the same VLAN as well as across multiple switches. Referring to Figure 1-29, when Host A sends a frame to Host E, SW1 does not know where Host E is, so it will forward the frame to all ports in VLAN 101, including the trunk port to SW2.

As you can see, SW1 will not forward the frame to Host B because it is in a different VLAN. SW1, before sending the frame on the trunk link to SW2, will add a VLAN tag to the frame that carries the VLAN ID, VLAN 101. This tells SW2 that this frame should be forwarded to ports in VLAN 101 only.

SW2 receives the frame over the trunk link, strips the VLAN tagging, and forwards the frame to all its ports in VLAN 101 (in this case, only to F0/1). If Host E responds, the same process applies. SW2 will only send the packets over the trunk link (because SW2 now knows how to reach Host A) and will tag the packet with VLAN 101.

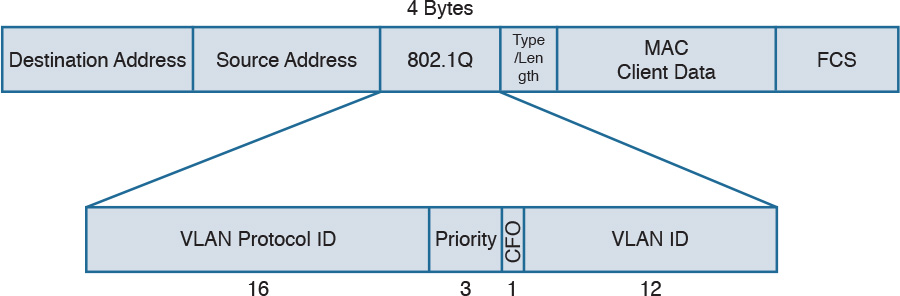

The VLAN information is added to the Ethernet frame. The way that it’s done depends on the protocol used for trunking. The most known and used trunking protocol nowadays is defined in IEEE 802.1Q (dot1q). Another protocol is Inter-Switch Link (ISL), which is a Cisco proprietary protocol that was used in the past.

In IEEE 802.1Q, the VLAN tagging is obtained by adding an IEEE 802.1Q tag between the source MAC address and the Type field in the Ethernet frame.

Figure 1-30 shows an example of an IEEE 802.1Q tag. The tag includes the VLAN ID.

IEEE 802.1Q introduces the concept of a native VLAN. The difference between a native and non-native VLAN is that a native VLAN goes without tag over the trunk link. When the trunk is configured for IEEE 802.1Q, if a switch receives a frame without a tag over a trunk link, it will interpret it as belonging to the native VLAN and forward accordingly.

Cisco VLAN Trunking Protocol

Cisco VLAN Trunking Protocol (VTP) is a Cisco proprietary protocol used to manage VLAN distribution across switches. VTP should not be confused with protocols that actually handle the tagging of frames with VLAN information when being sent over a trunk link. VTP is used to distribute information about existing VLANs to all switches in a VTP domain so that VLANs do not have to be manually configured, thus reducing the burden of the administrator.

For example, when a new VLAN is created on one switch, the same VLAN may need to be created on all switches to enable VLAN trunking and consistent use of VLAN IDs. VTP facilitates the process by sending automatic advertisements about the state of VLAN databases across the VTP domain. Switches that receive advertisements will maintain the VLAN database, synchronized based on the information found in the VTP message.

VTP relies on protocols such as 802.1Q to transmit information. VTP defines three modes of operation:

![]() Server mode: In VTP server mode, the administrator can configure or remove a VLAN. VTP will take care of distributing the information to other switches in the VTP domain.

Server mode: In VTP server mode, the administrator can configure or remove a VLAN. VTP will take care of distributing the information to other switches in the VTP domain.

![]() Client mode: In VTP client mode, a switch receives updates about a VLAN and advertises the VLAN configured already; however, a VLAN cannot be added or removed.

Client mode: In VTP client mode, a switch receives updates about a VLAN and advertises the VLAN configured already; however, a VLAN cannot be added or removed.

![]() Transparent mode: In transparent mode, the switch does not participate in VTP, so it does not perform a VLAN database update and does not generate VTP advertisement; however, it forwards VTP advertisements from other switches.

Transparent mode: In transparent mode, the switch does not participate in VTP, so it does not perform a VLAN database update and does not generate VTP advertisement; however, it forwards VTP advertisements from other switches.

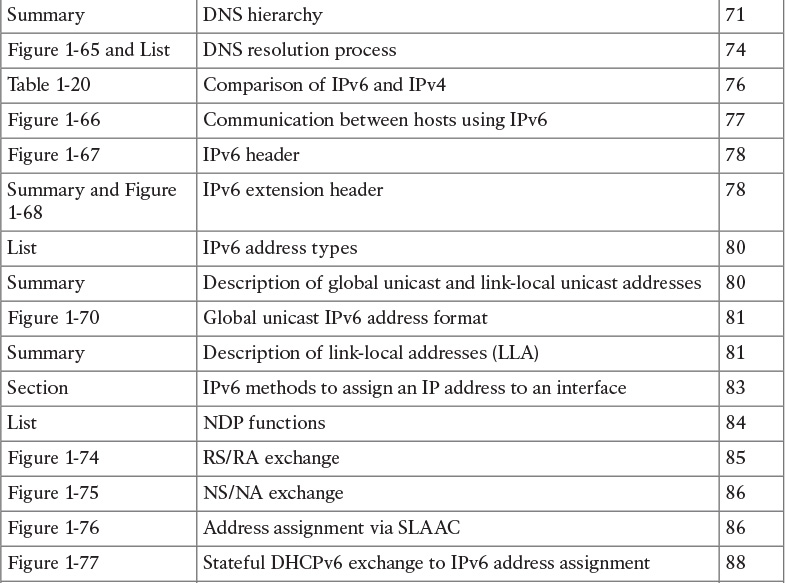

Inter-VLAN Traffic and Multilayer Switches

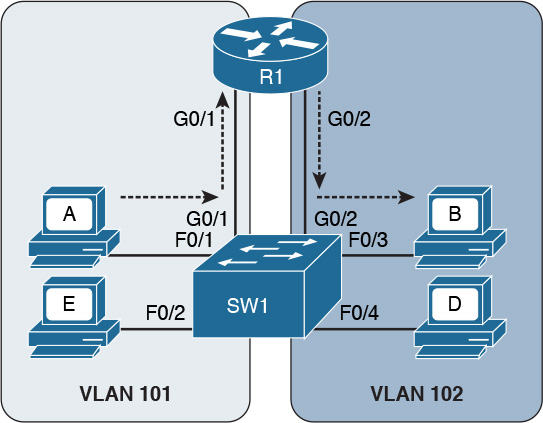

As described in the previous section, VLANs provide a convenient way to separate broadcast domains. This means, however, that a Layer 3 device is needed to forward traffic between two VLANs even if they are on the same switch. We have defined switches as Layer 2 devices, so a switch by itself would not be able to forward traffic from one VLAN to the other, even if the source and destination host reside physically on the same switch.

Figure 1-31 shows an example of inter-VLAN traffic. Host A in VLAN 101 is sending traffic to Host B in VLAN 102. Both hosts are connected to SW1. Because SW1 is a switch operating at Layer 2, a Layer 3 device (for example, a router, R1) is needed to forward the traffic. In the figure, the router uses two different interfaces connected to the switch, where G0/1 is in VLAN 101 and G0/2 is in VLAN 102.

Alternatively, R1 could have been configured with only one interface on the switch with trunking enabled. This alternative is sometimes defined as router on a stick (ROAS), as illustrated in Figure 1-32.

In both of the preceding examples, there is a waste of resources. For example, a packet needs to travel to the first router in the path, to then come back again to the same switch creating additional load on the links. Additionally, there is a loss in performance due to the encapsulation and upper-layer processing of the frame.

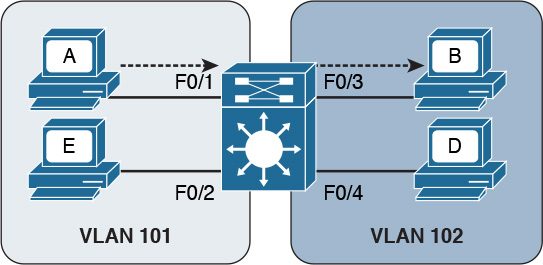

The solution is to integrate Layer 3 function within a classic Layer 2 switch. This type of switch is called a Layer 3 switch or sometimes a multilayer switch. Figure 1-33 shows an example of inter-VLAN flow with a multilayer switch.

Wireless LAN Fundamentals and Technologies

Together with Ethernet, which is defined as wired access to a LAN, wireless LAN (WLAN) is one of the most used technologies for LAN access. This book covers the basics of WLAN fundamentals and technologies. Interested readers can refer to the CCNA Wireless 200-355 Official Cert Guide book for additional information.

Wireless LAN is defined within the IEEE 802.11 standards. While in some aspects WLANs resemble classic Ethernet technology, there are several significant differences.

The first and most notable difference is the medium. Here are several other characteristics that distinguish a wireless medium from a wire medium:

![]() There is no defined boundary.

There is no defined boundary.

![]() It is more prone to interference by other signals on the same medium.

It is more prone to interference by other signals on the same medium.

![]() It is less reliable.

It is less reliable.

![]() The signal can propagate in asymmetric ways (for example, due to reflection).

The signal can propagate in asymmetric ways (for example, due to reflection).

The way stations access the medium is also different. In the previous section, you learned that Ethernet defines two operational modes: half duplex, where the stations can transmit one at time, and full-duplex, where stations can transmit simultaneously. In WLANs, network stations can only use half-duplex mode because they are not able to transmit and receive at the same time due to the limitation of the medium.

This means that two stations need to implement a way to detect if the medium (in this case, the radio frequency channel) is being used to avoid transmitting at the same time. This functionality is provided by a Carrier Sense Media Access with Collision Avoidance (CSMA/CA). Note that this is different from the CSMA/CD used in Ethernet. The main difference is in how a collision is handled. Wired devices can detect collisions over the medium, whereas wireless devices cannot.

Like we have seen for Ethernet, a wireless station senses the medium to determine whether is it possible to transmit. However, the way this is done is different for wired devices. In a wired technology, the device can sense an electrical signal on the wire and determine whether someone else is transmitting. This cannot happen in the case of wireless devices. There are mainly two methods for carrier sense:

![]() Physical carrier sense: When the station is not transmitting, it can sense the channel for the presence of other frames. This is sometimes referred to as Clear Channel Assessment (CCA).

Physical carrier sense: When the station is not transmitting, it can sense the channel for the presence of other frames. This is sometimes referred to as Clear Channel Assessment (CCA).

![]() Virtual carrier sense: Stations when transmitting a frame include an estimated time for the transmission of the frame in the frame header. This value can be used to estimate how long the channel will be busy.

Virtual carrier sense: Stations when transmitting a frame include an estimated time for the transmission of the frame in the frame header. This value can be used to estimate how long the channel will be busy.

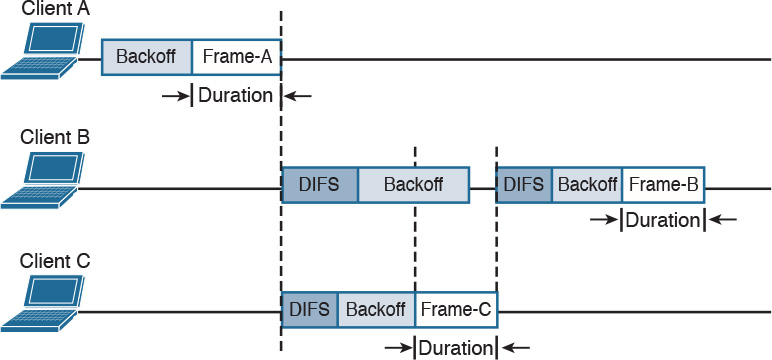

Collision detection is not possible for similar reasons. Wireless clients thus need to avoid collisions. To do that, they use a mechanism called Collision Avoidance. The mechanism works by using backoff timers. Each station waits a backoff period before transmitting. In addition to the backoff period, a station may need to wait for an additional time, called interframe space, which is used to reduce the likelihood of a collision and to allow an extra cushion of time between two frames.

802.11 defines several interframe space timers. The standard interframe timer is called Distributed Interframe Space (DIFS).

The basic process of transmitting frames includes three steps:

Step 1. Sense the channel to see whether it is busy.

Step 2. Select a delay based on the backoff timer. If, in the meantime, the channel gets busy, the backoff timer is stopped. When the channel is clear again, the backoff timer is restarted.

Step 3. Wait for an additional DIFS time.

Figure 1-34 illustrates the process of transmitting frames in a WLAN. Client A is ready to transmit, it senses the medium, selects a backoff time, and then transmits. The duration of the frame is included in the frame header. Client B and Client C wait until the frame from Client A has been transmitted plus the DIFS, and then start the backoff timer. Client C’s backoff timer expires before Client B’s, so Client C transmits before Client B. Client B finds the channel busy, so it stops the backoff timer. Client B waits for the new transmission time, the DIFS period and the remaining backoff timer, and then it transmits.

One particularity of WLANs compared to wired networks is that a WLAN requires the other party to send an acknowledgement so that the sender knows the frame has been received.

802.11 Architecture and Basic Concepts

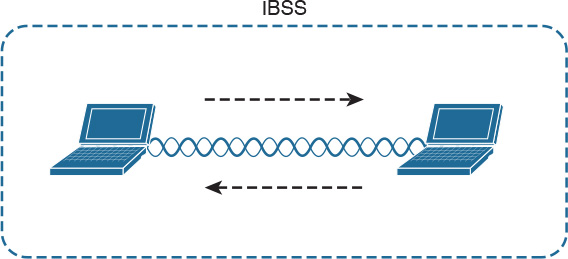

Unlike wired connections, where a station needs a physical connection to be able to transmit, the wireless medium is open, so any station can start transmitting. The IEEE 802.11 standards define the concept of Basic Service Set (BSS), which identifies a set of devices that share some common parameters and can communicate through a wireless connection. The most basic type of BSS is called Independent BSS (IBSS), and it is formed by two or more wireless stations communicating directly. IBSS is sometimes called ad-hoc wireless network.

Figure 1-35 shows an example of IBSS.

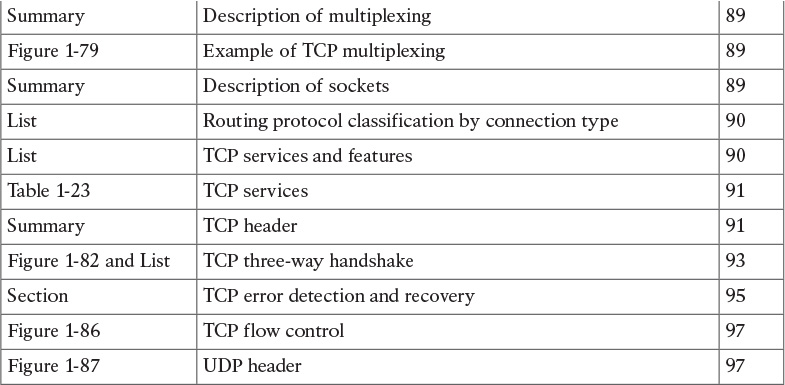

Another type of BSS is called infrastructure BSS. The core of an infrastructure BSS is a wireless access point, or simply an access point (AP). Each station will associate to the AP, and each frame is sent to the AP, which will then forward it to the receiving station. The access point advertises a Service Set Identifier (SSID), which is used by each station to recognize a particular network.

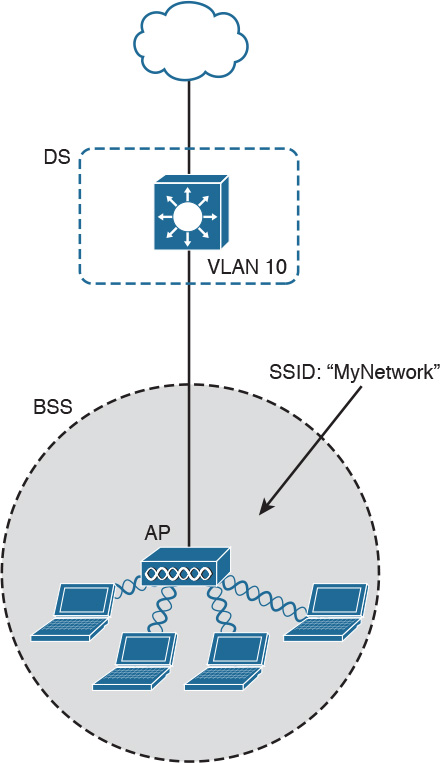

To communicate with other stations that are not in the same BSS (for example, a server station in the organization’s data center), access points can be connected in uplink with the rest of the organization’s network (for example, with a wired connection). The uplink wired network is called a Distribution System (DS). The AP creates a boundary point between the BSS and the DS.

Figure 1-36 shows an example of infrastructure BSS with four wireless stations and an access point connected upstream with a DS.

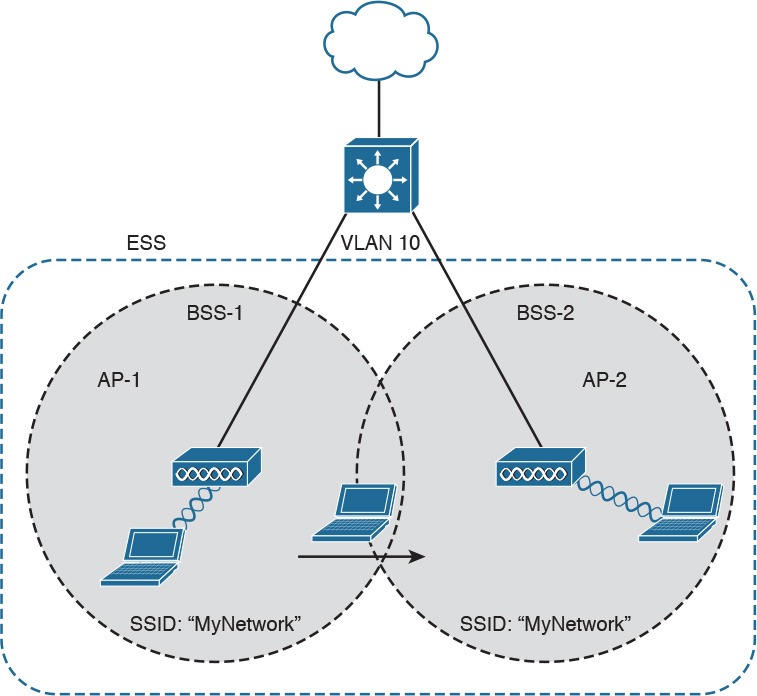

An access point has limited spatial coverage due to the wireless signal degradation. To extend the wireless coverage of a specific network (that is, a network identified by a single SSID), multiple BSSs can be linked together to form an Extended Service Set (ESS). A client can move from one AP to the other in a seamless way. The method to release a client from one AP and associate to the other AP is called roaming.

Figure 1-37 shows an example of an ESS with two APs connected to a DS and a user roaming between two BSSs.

802.11 Frame

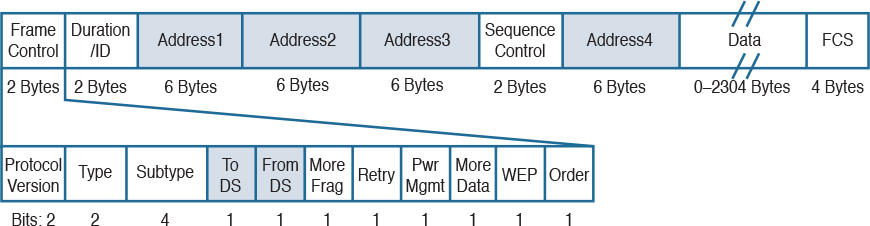

An 802.11 frame is a bit different from the Ethernet frame, although there are some commonalities. Figure 1-38 shows an example of 802.11 frame.

The 802.11 frame includes the following elements:

![]() Frame control: Includes some additional sub-elements, as indicated in Figure 1-37. It provides information on the frame type and whether this frame is directed toward the DS or is coming from the DS toward the wireless network.

Frame control: Includes some additional sub-elements, as indicated in Figure 1-37. It provides information on the frame type and whether this frame is directed toward the DS or is coming from the DS toward the wireless network.

![]() Duration field: Can have different meanings depending on the frame type. However, one common value is the expected time the frame will be traveling on the channel for the Virtual Carrier Sense functionality.

Duration field: Can have different meanings depending on the frame type. However, one common value is the expected time the frame will be traveling on the channel for the Virtual Carrier Sense functionality.

![]() Address fields: Contain addresses in 802 MAC format (for example, MAC-48). The following are the typical addresses included:

Address fields: Contain addresses in 802 MAC format (for example, MAC-48). The following are the typical addresses included:

![]() Transmitter address (TA) is the MAC address of the transmitter of the frame (for example, a wireless client).

Transmitter address (TA) is the MAC address of the transmitter of the frame (for example, a wireless client).

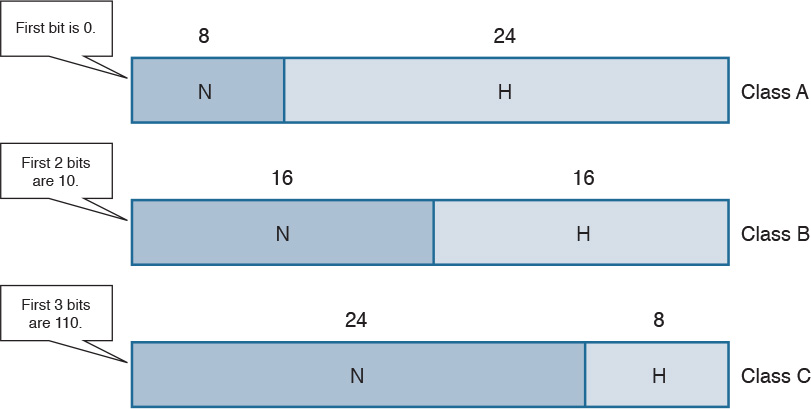

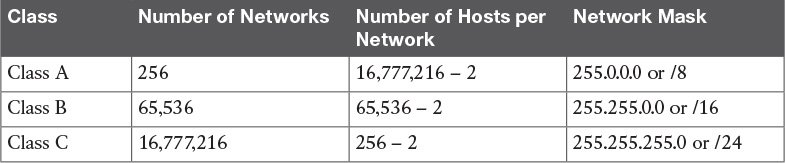

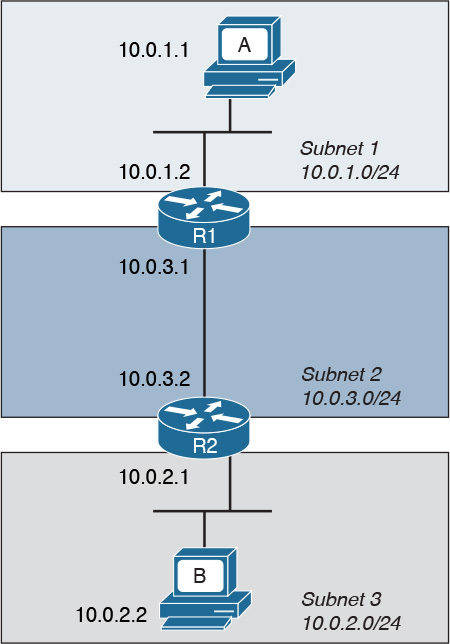

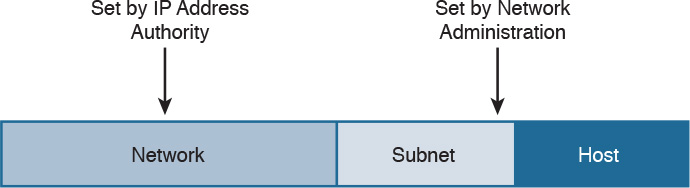

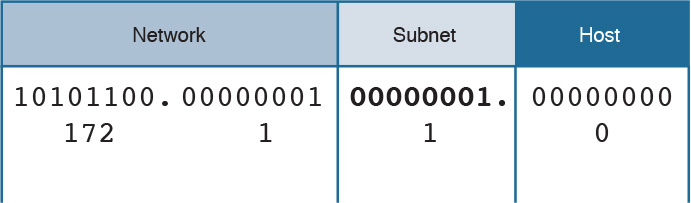

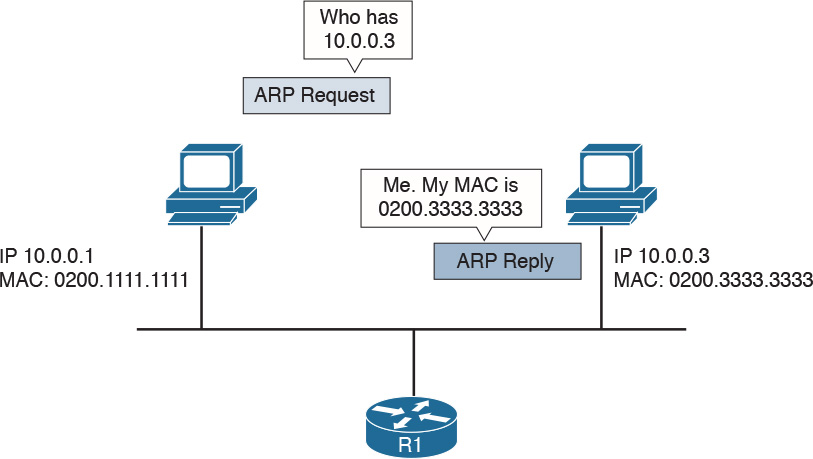

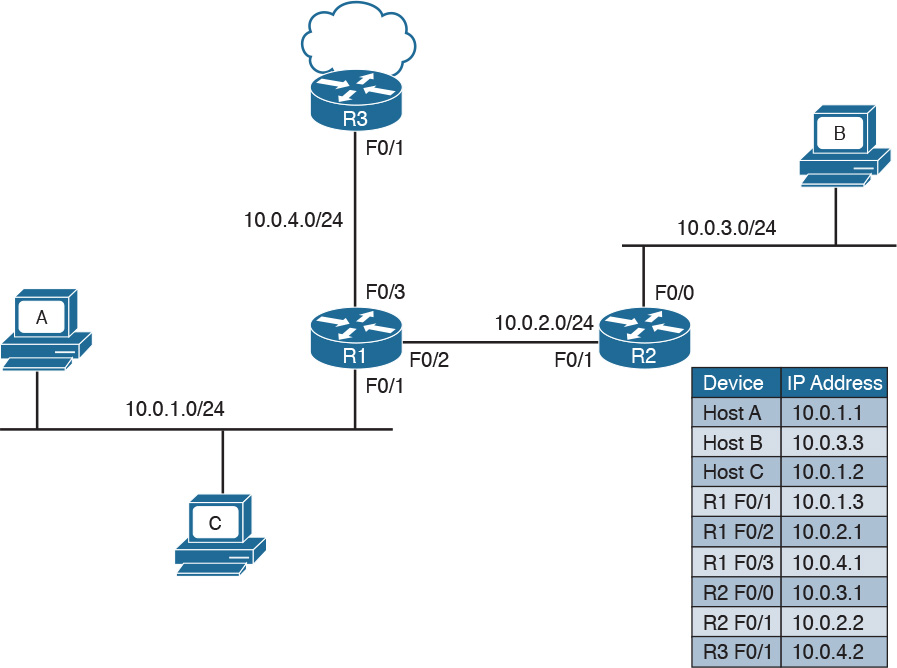

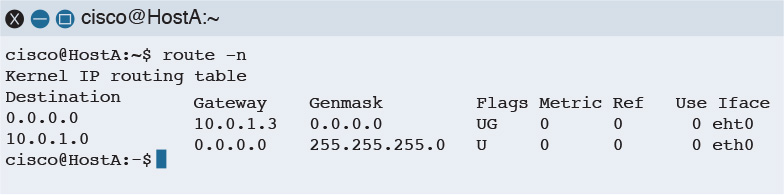

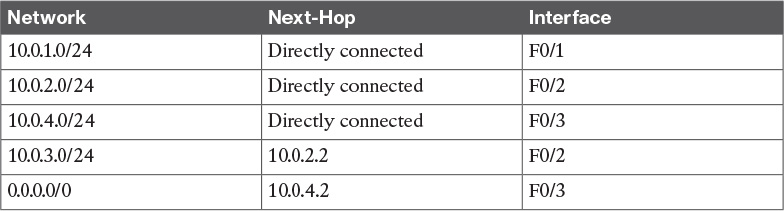

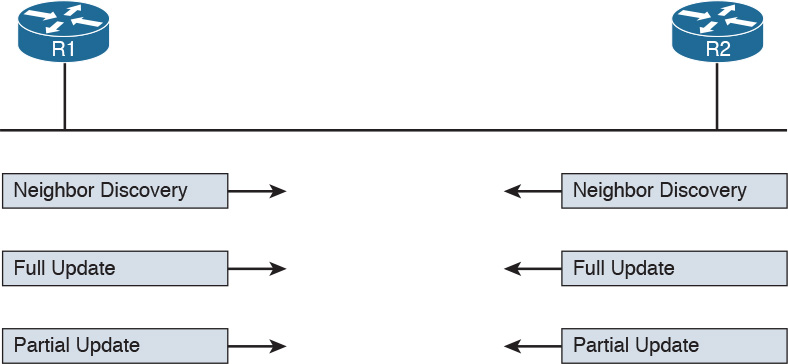

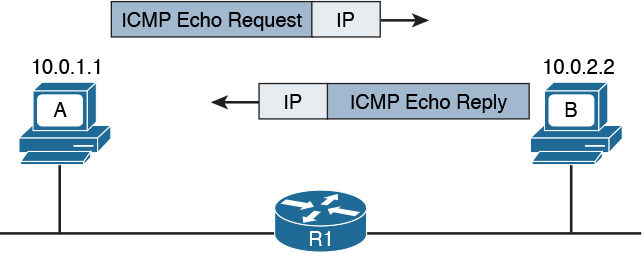

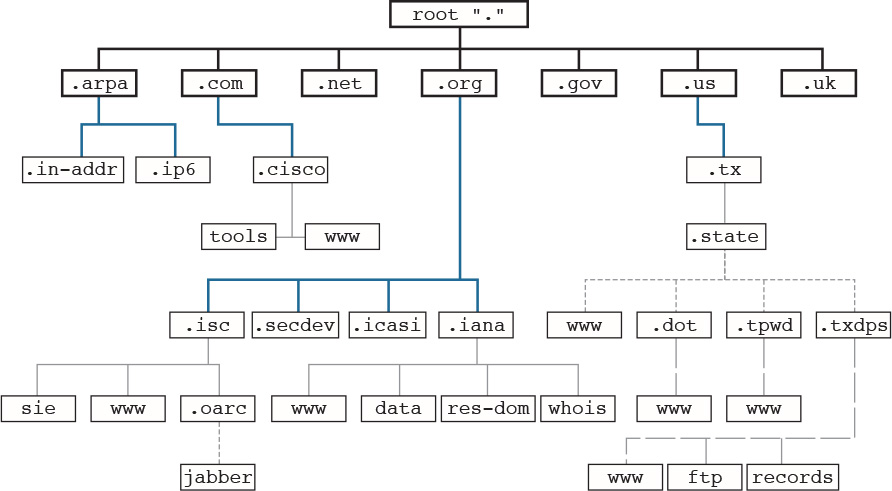

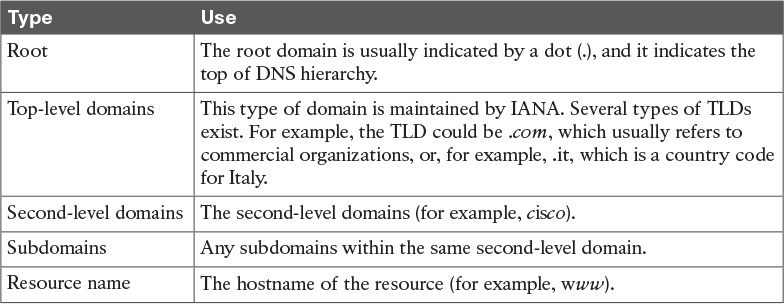

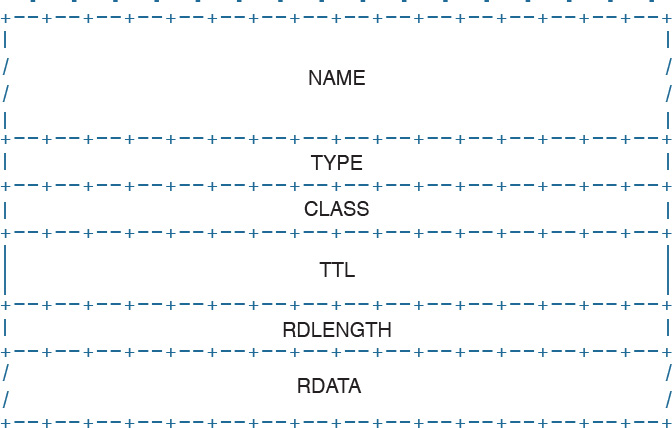

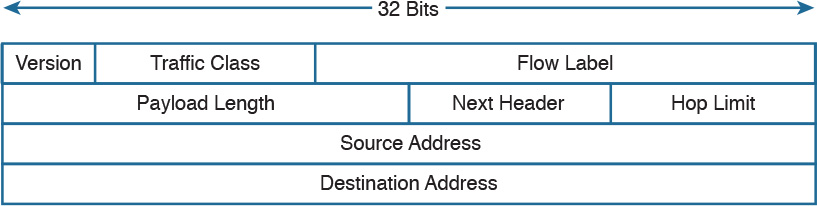

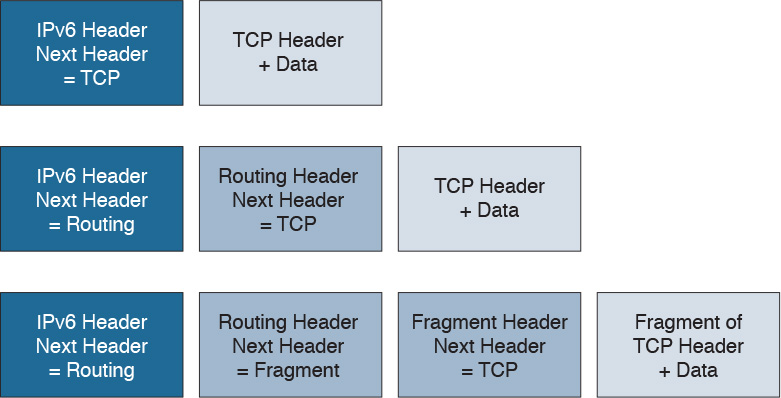

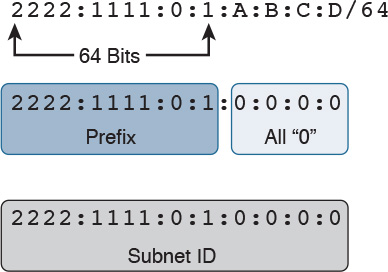

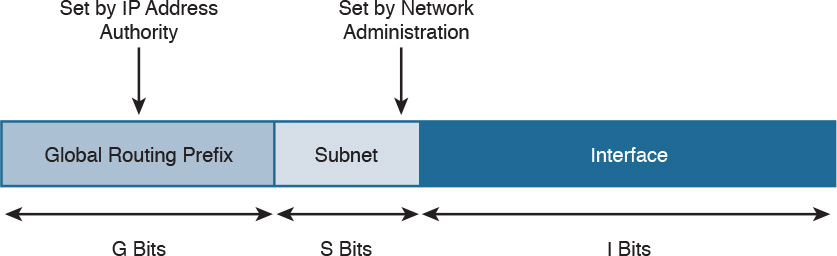

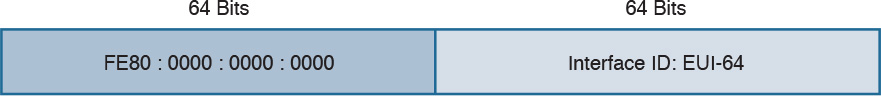

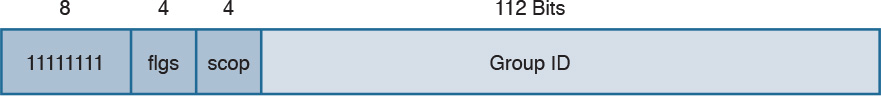

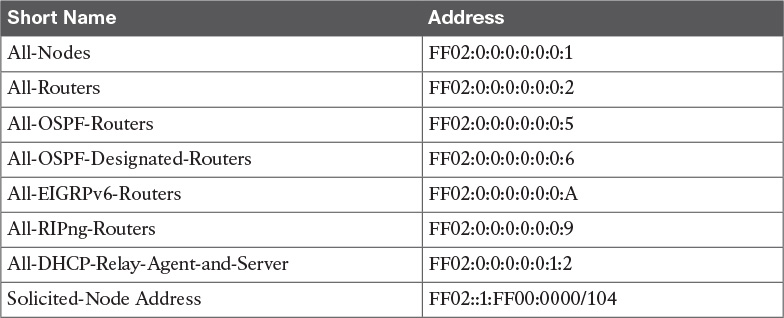

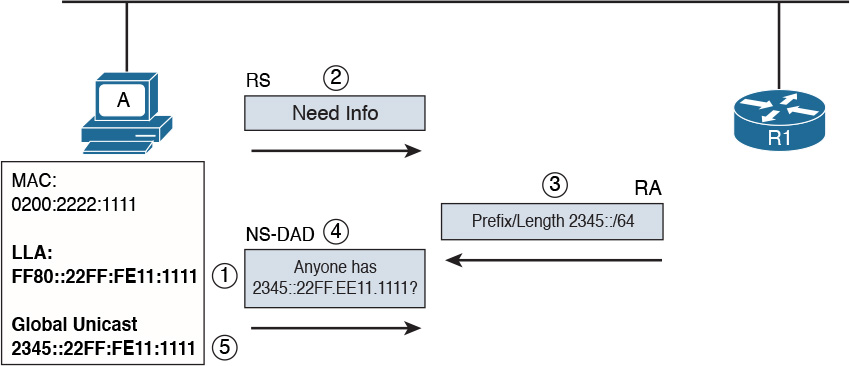

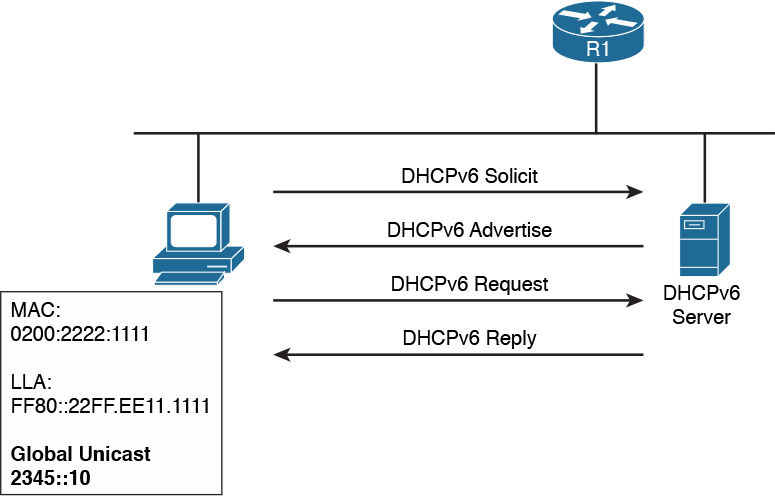

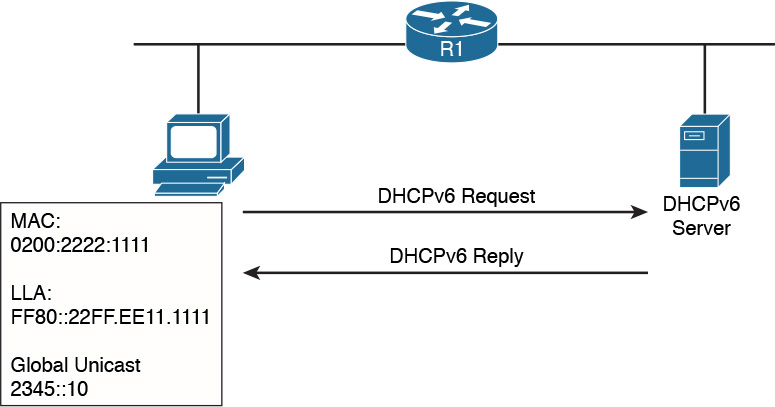

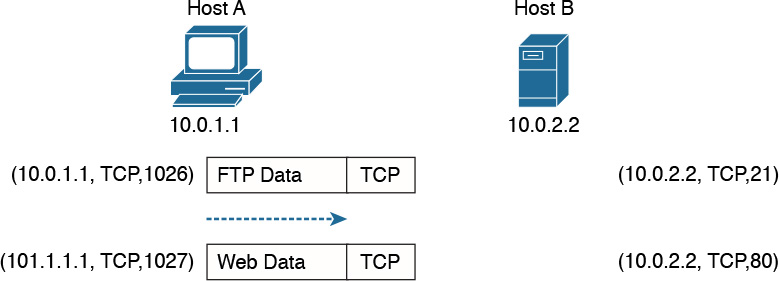

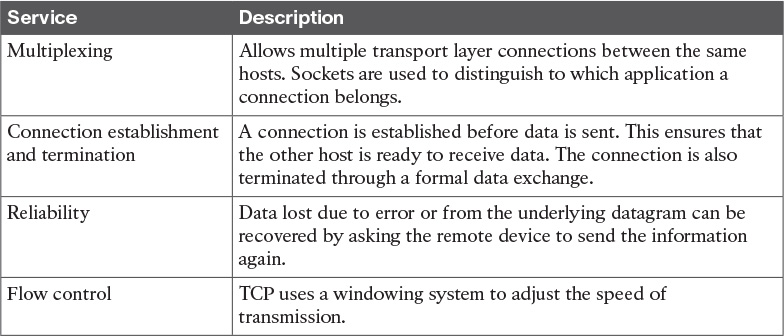

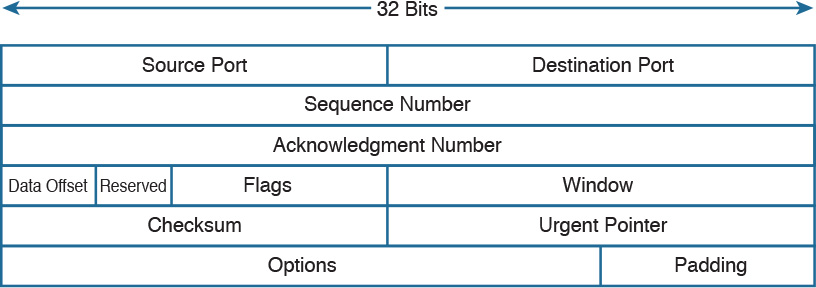

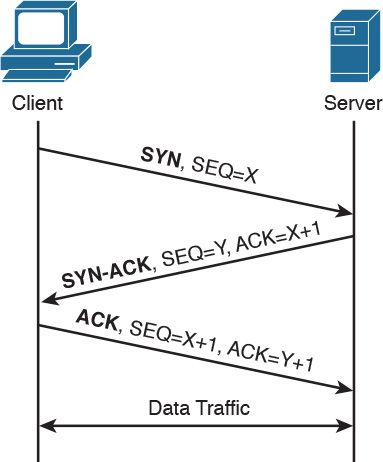

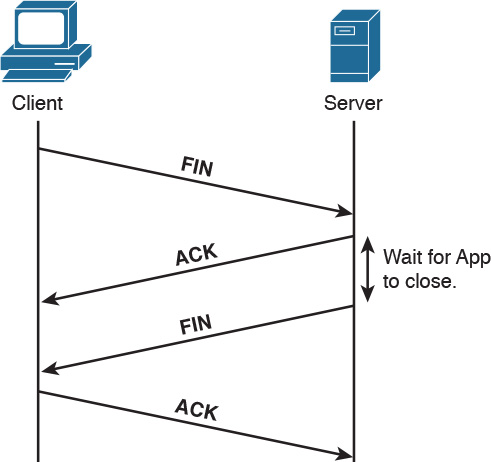

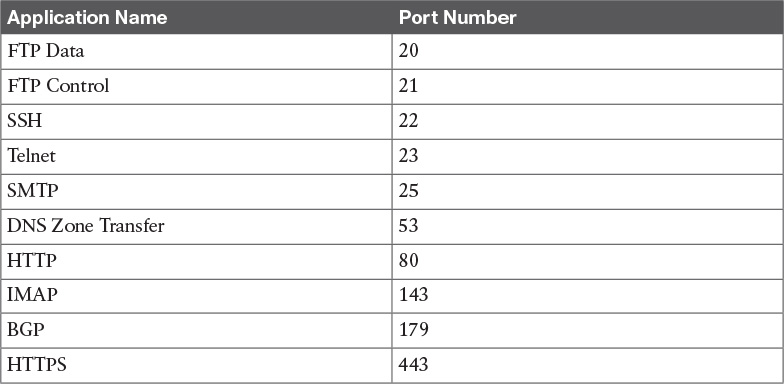

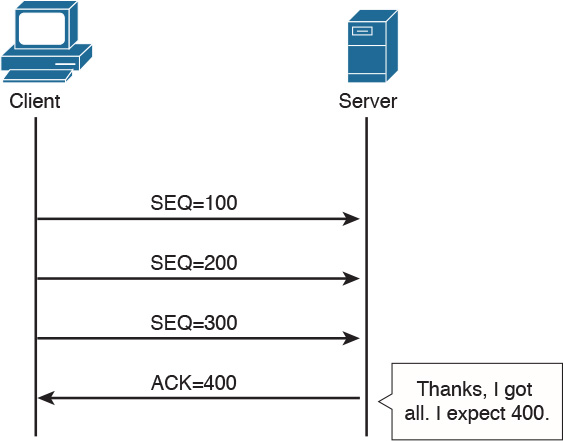

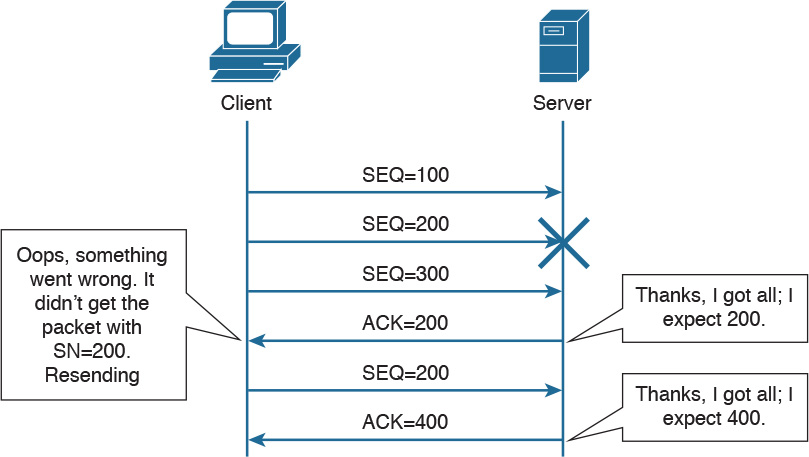

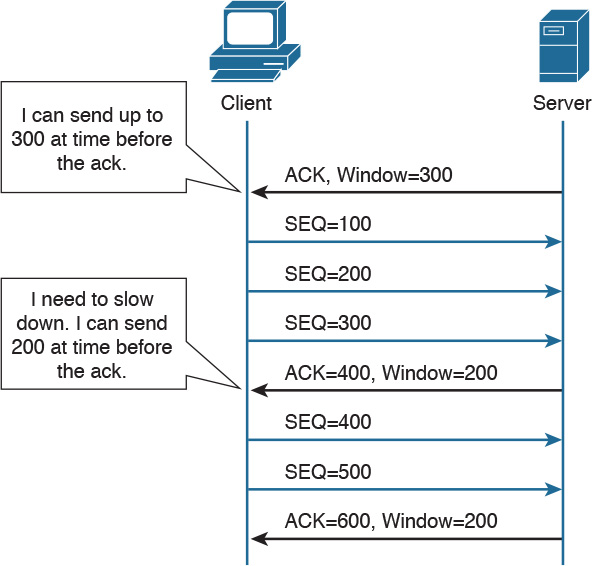

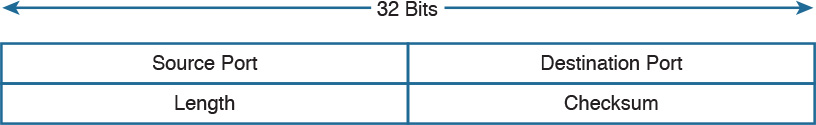

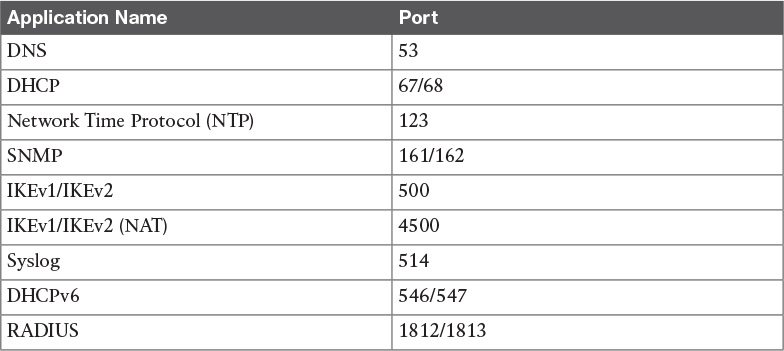

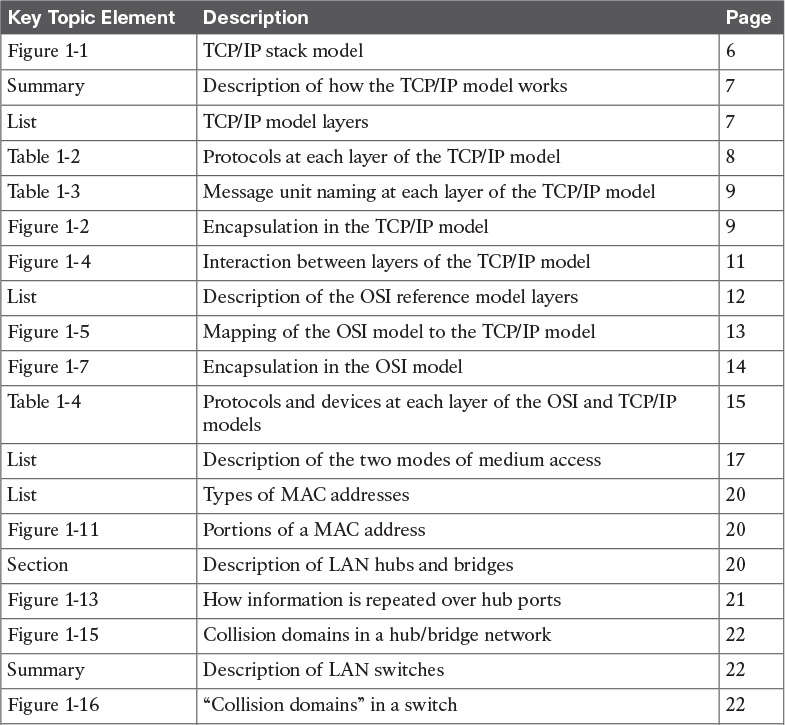

![]() Receiver address (RA) is the MAC address of the receiver of the frame (for example, the AP).