Chapter 3. Vulnerability Management Activities

This chapter covers the following topics related to Objective 1.3 (Given a scenario, perform vulnerability management activities) of the CompTIA Cybersecurity Analyst (CySA+) CS0-002 certification exam:

• Vulnerability identification: Explores asset criticality, active vs. passive scanning, and mapping/enumeration

• Validation: Covers true positive, false positive, true negative, and false negative alerts

• Remediation/mitigation: Describes configuration baseline, patching, hardening, compensating controls, risk acceptance, and verification of mitigation

• Scanning parameters and criteria: Explains risks associated with scanning activities, vulnerability feed, scope, credentialed vs. non-credentialed scans, server-based vs. agent-based scans, internal vs. external scans, and special considerations including types of data, technical constraints, workflow, sensitivity levels, regulatory requirements, segmentation, intrusion prevention system (IPS), intrusion detection system (IDS), and firewall settings

• Inhibitors to remediation: Covers memorandum of understanding (MOU), service-level agreement (SLA), organizational governance, business process interruption, degrading functionality, legacy systems, and proprietary systems

Managing vulnerabilities requires more than a casual approach. There are certain processes and activities that should occur to ensure that your management of vulnerabilities is as robust as it can be. This chapter describes the activities that should be performed to manage vulnerabilities.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz enables you to assess whether you should read the entire chapter. If you miss no more than one of these five self-assessment questions, you might want to move ahead to the “Exam Preparation Tasks” section. Table 3-1 lists the major headings in this chapter and the “Do I Know This Already?” quiz questions covering the material in those headings so that you can assess your knowledge of these specific areas. The answers to the “Do I Know This Already?” quiz appear in Appendix A.

Table 3-1 “Do I Know This Already?” Foundation Topics Section-to-Question Mapping

1. Which of the following helps to identify the number and type of resources that should be devoted to a security issue?

a. Specific threats that are applicable to the component

b. Mitigation strategies that could be used

c. The relative value of the information that could be discovered

d. The organizational culture

2. Which of the following occurs when the scanner correctly identifies a vulnerability?

a. True positive

b. False positive

c. False negative

d. True negative

3. Which of the following is the first step of the patch management process?

a. Determine the priority of the patches

b. Install the patches

c. Test the patches

d. Ensure that the patches work properly

4. Which of the following is not a risk associated with scanning activities?

a. False sense of security can be introduced

b. Does not itself reduce your risk

c. Only as valid as the latest scanner update

d. Distracts from day-to-day operations

5. Which of the following is a document that, while not legally binding, indicates a general agreement between the principals to do something together?

a. SLA

b. MOU

c. ICA

d. SCA

Foundation Topics

Vulnerability Identification

Vulnerabilities must be identified before they can be mitigated by applying security controls or countermeasures. Vulnerability identification is typically done through a formal process called a vulnerability assessment, which works hand in hand with another process called risk management. The vulnerability assessment identifies and assesses the vulnerabilities, and the risk management process goes a step further and identifies the assets at risk and assigns a risk value (derived from both the impact and likelihood) to each asset.

Regardless of the components under study (network, application, database, etc.), any vulnerability assessment’s goal is to highlight issues before someone either purposefully or inadvertently leverages the issue to compromise the component. The design of the assessment process has a great impact on its success. Before an assessment process is developed, the following goals of the assessment need to be identified:

• The relative value of the information that could be discovered through the compromise of the components under assessment: This helps to identify the number and type of resources that should be devoted to the issue.

• The specific threats that are applicable to the component: For example, a web application would not be exposed to the same issues as a firewall because their operation and positions in the network differ.

• The mitigation strategies that could be deployed to address issues that might be found: Identifying common strategies can suggest issues that weren’t anticipated initially. For example, if you were doing a vulnerability test of your standard network operating system image, you should anticipate issues you might find and identify what technique you will use to address each.

A security analyst who will be performing a vulnerability assessment needs to understand the systems and devices that are on the network and the jobs they perform. Having this knowledge will ensure that the analyst can assess the vulnerabilities of the systems and devices based on the known and potential threats to the systems and devices.

After gaining knowledge regarding the systems and devices, a security analyst should examine existing controls in place and identify any threats against those controls. The security analyst then uses all the information gathered to determine which automated tools to use to analyze for vulnerabilities. After the vulnerability analysis is complete, the security analyst should verify the results to ensure that they are accurate and then report the findings to management, with suggestions for remedial action. With this information in hand, the threat analyst should carry out threat modeling to identify the threats that could negatively affect systems and devices and the attack methods that could be used.

In some situations, a vulnerability management system may be indicated. A vulnerability management system is software that centralizes and, to a certain extent, automates the process of continually monitoring and testing the network for vulnerabilities. Such a system can scan the network for vulnerabilities, report them, and, in many cases, remediate the problem without human intervention. While a vulnerability management system is a valuable tool to have, these systems, regardless of how sophisticated they may be, cannot take the place of vulnerability and penetration testing performed by trained professionals.

Keep in mind that after a vulnerability assessment is complete, its findings are only a snapshot in time. Even if no vulnerabilities are found, the best statement to describe the situation is “there are no known vulnerabilities at this time.” It is impossible to say with certainty that a vulnerability will not be discovered in the near future.

Asset Criticality

Assets should be classified based on their value to the organization and their sensitivity to disclosure. Assigning a value to data and assets enables an organization to determine the resources that should be used to protect them. Resources that are used to protect data include personnel resources, monetary resources, access control resources, and so on. Classifying assets enables you to apply different protective measures. Asset classification is critical to protecting the confidentiality, integrity, and availability (CIA) of the assets on every system. After assets are classified, they can be segmented based on the level of protection needed. The classification levels ensure that assets are protected in the most cost-effective manner possible. The assets can then be configured so that they are isolated or protected according to these classification levels. An organization should determine the classification levels it uses based on its own needs. A number of private-sector classifications and military and government information classifications are commonly used.

The information life cycle should also be based on the classification of the assets. In the case of data assets, organizations are required to retain certain information, particularly financial data, based on local, state, or federal laws and regulations.

Sensitivity is a measure of how freely data can be handled. Data sensitivity is one factor in determining asset criticality. For example, a server that stores highly sensitive data should be identified as a high-criticality asset. Some data requires special care and handling, especially when inappropriate handling could result in penalties, identity theft, financial loss, invasion of privacy, or unauthorized access by an individual or many individuals. Some data is also subject to regulation by state or federal laws that require notification in the event of a disclosure. Data is assigned a level of sensitivity based on who should have access to it and how much harm would be done if it were disclosed. This assignment of sensitivity is called data classification. Criticality is a measure of the importance of the data. Data that is considered sensitive might not necessarily be considered critical. Assigning a level of criticality to a particular data set requires considering the answers to a few questions:

• Will you be able to recover the data in case of disaster?

• How long will it take to recover the data?

• What is the effect of this downtime, including loss of public standing?

Data is considered essential when it is critical to the organization’s business. When essential data is not available, even for a brief period of time, or when its integrity is questionable, the organization is unable to function. Data is considered required when it is important to the organization but organizational operations would continue for a predetermined period of time even if the data were not available. Data is nonessential if the organization can operate without it during extended periods of time.
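The three criticality levels can be expressed as a simple rule based on how long the organization can keep operating without a data set. The following is a minimal sketch, assuming that the tolerable outage has already been estimated (for example, from the recovery questions above); the function name and the 72-hour "predetermined period" are hypothetical values chosen for illustration, not a standard.

```python
from enum import Enum


class Criticality(Enum):
    ESSENTIAL = "essential"
    REQUIRED = "required"
    NONESSENTIAL = "nonessential"


def classify_data(tolerable_outage_hours: float,
                  required_threshold_hours: float = 72.0) -> Criticality:
    """Classify a data set by how long operations can continue without it.

    tolerable_outage_hours: estimated time the organization can keep
        functioning without the data (0 means it cannot function even briefly).
    required_threshold_hours: hypothetical "predetermined period"; data the
        organization can do without for longer than this is nonessential.
    """
    if tolerable_outage_hours <= 0:
        return Criticality.ESSENTIAL      # unavailable even briefly = cannot function
    if tolerable_outage_hours <= required_threshold_hours:
        return Criticality.REQUIRED       # operations continue for a limited period
    return Criticality.NONESSENTIAL       # operations continue for extended periods


# Hypothetical data sets and their estimated tolerable outages (hours).
for name, hours in [("order database", 0), ("HR records", 24), ("marketing archive", 720)]:
    print(name, classify_data(hours).value)
```

In practice, the threshold would come from the organization's own business impact analysis rather than from a fixed default.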

Active vs. Passive Scanning

Network vulnerability scans probe a targeted system or network to identify vulnerabilities. The tools used in this type of scan contain a database of known vulnerabilities and identify whether a specific vulnerability exists on each device. There are two types of vulnerability scanning:

• Passive vulnerability scanning: Passive vulnerability scanning collects information but doesn’t take any action to block an attack. A passive vulnerability scanner (PVS) monitors network traffic at the packet layer to determine topology, services, and vulnerabilities. It avoids the instability that can be introduced to a system by actively scanning for vulnerabilities. PVS tools analyze the packet stream and look for vulnerabilities through direct analysis. They are deployed in much the same way as intrusion detection systems (IDSs) or packet analyzers. A PVS can pick a network session that targets a protected server and monitor it as much as needed. The biggest benefit of a PVS is its capability to do its work without impacting the monitored network. Some examples of PVSs are the Nessus Network Monitor (formerly Tenable PVS) and NetScanTools Pro.

• Active vulnerability scanning: Active vulnerability scanning collects information and attempts to block the attack. Whereas passive scanners can only gather information, active vulnerability scanners (AVSs) can take action to block an attack, such as blocking a dangerous IP address. AVSs can also be used to simulate an attack to assess readiness. They operate by sending transmissions to nodes and examining the responses. Because of this, these scanners may disrupt network traffic. Examples include Nessus Professional and OpenVAS.

Regardless of whether it’s active or passive, a vulnerability scanner cannot replace the expertise of trained security personnel. Moreover, these scanners are only as effective as the signature databases on which they depend, so the databases must be updated regularly. Finally, because scanners require bandwidth, they potentially slow the network. For best performance, you can place a vulnerability scanner in a subnet that needs to be protected. You can also connect a scanner through a firewall to multiple subnets; this complicates the configuration and requires opening ports on the firewall, which could be problematic and could impact the performance of the firewall.

Mapping/Enumeration

Vulnerability mapping and enumeration is the process of identifying and listing vulnerabilities. In Chapter 2, “Utilizing Threat Intelligence to Support Organizational Security,” you were introduced to the Common Vulnerability Scoring System (CVSS). A closely related concept is the Common Weakness Enumeration (CWE), a category system for software weaknesses and vulnerabilities. CWE organizes vulnerabilities into over 600 categories, including classes for buffer overflows, path/directory tree traversal errors, race conditions, cross-site scripting, hard-coded passwords, and insecure random numbers. CWE is only one of a number of enumerations that are used by Security Content Automation Protocol (SCAP), a standard that the security community uses to enumerate software flaws and configuration issues. SCAP will be covered more fully in Chapter 14, “Automation Concepts and Technologies.”
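Enumerating findings by CWE category lets a team see which classes of weakness recur across hosts. The following sketch groups scanner findings by CWE identifier; it assumes the scanner output has already been reduced to (host, CWE ID) pairs, and the host names and findings are hypothetical. The CWE identifiers are real entries for the classes named above, with their names abbreviated.

```python
from collections import defaultdict

# Real CWE entries for the weakness classes mentioned above (names abbreviated).
CWE_NAMES = {
    "CWE-120": "Classic Buffer Overflow",
    "CWE-22": "Path Traversal",
    "CWE-362": "Race Condition",
    "CWE-79": "Cross-site Scripting",
    "CWE-259": "Use of Hard-coded Password",
    "CWE-330": "Use of Insufficiently Random Values",
}


def enumerate_by_cwe(findings):
    """Group (host, cwe_id) findings into a mapping of CWE ID -> sorted host list."""
    grouped = defaultdict(set)
    for host, cwe_id in findings:
        grouped[cwe_id].add(host)
    # Sort CWE IDs (as strings) and hosts so the report is stable between runs.
    return {cwe: sorted(hosts) for cwe, hosts in sorted(grouped.items())}


# Hypothetical findings already mapped to CWE IDs.
findings = [
    ("web01", "CWE-79"),
    ("web02", "CWE-79"),
    ("app01", "CWE-259"),
    ("web01", "CWE-22"),
]
for cwe_id, hosts in enumerate_by_cwe(findings).items():
    print(f"{cwe_id} ({CWE_NAMES.get(cwe_id, 'unknown')}): {', '.join(hosts)}")
```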

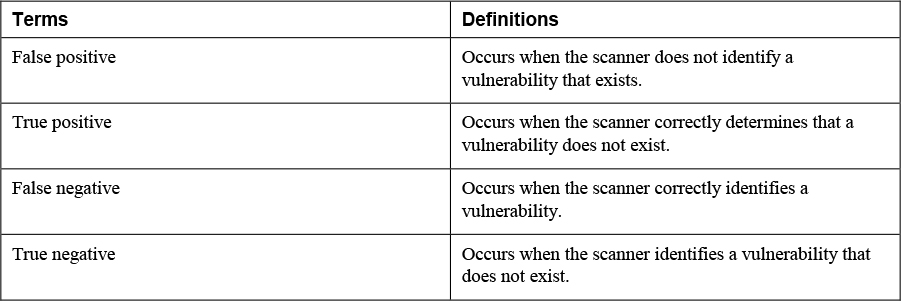

Validation

Scanning results are not always correct. Scanning tools can make mistakes identifying vulnerabilities. There are four types of results a scanner can deliver:

• True positive: Occurs when the scanner correctly identifies a vulnerability. True means the scanner is correct and positive means it identified a vulnerability.

• False positive: Occurs when the scanner identifies a vulnerability that does not exist. False means the scanner is incorrect and positive means it identified a vulnerability. A high number of false positives reduces confidence in scanning results.

• True negative: Occurs when the scanner correctly determines that a vulnerability does not exist. True means the scanner is correct and negative means it did not identify a vulnerability.

• False negative: Occurs when the scanner does not identify a vulnerability that actually exists. False means the scanner is wrong and negative means it did not find a vulnerability. This is worse than a false positive because it means that a vulnerability exists that you are unaware of.
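The four outcomes depend on two yes/no questions: did the scanner report the vulnerability, and does the vulnerability actually exist? The sketch below labels each result that way and tallies the outcomes. It assumes the "actually exists" answer comes from an analyst's manual verification; the sample results are hypothetical.

```python
from collections import Counter


def classify_result(reported: bool, actually_vulnerable: bool) -> str:
    """Label one scanner result against the verified ground truth."""
    if reported and actually_vulnerable:
        return "true positive"
    if reported and not actually_vulnerable:
        return "false positive"
    if not reported and not actually_vulnerable:
        return "true negative"
    return "false negative"  # a real vulnerability the scanner missed


def summarize(results):
    """Count each outcome for a list of (reported, actually_vulnerable) pairs."""
    return Counter(classify_result(r, a) for r, a in results)


# Hypothetical results after an analyst verified each check by hand.
results = [(True, True), (True, False), (False, False), (False, True), (True, True)]
counts = summarize(results)
print(counts)
```

Tracking these counts over time shows whether a scanner's false positives (wasted effort) or false negatives (hidden risk) are growing.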

Remediation/Mitigation

When vulnerabilities are identified, security professionals must take steps to address them. One of the outputs of a good risk management process is the prioritization of the vulnerabilities and an assessment of the impact and likelihood of each. Driven by those results, security measures (also called controls or countermeasures) can be put in place to reduce risk. Let’s look at some issues relevant to vulnerability mitigation.
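One common way to turn impact and likelihood into a priority order is to multiply them on small ordinal scales. The sketch below assumes a hypothetical 1-to-5 scale for each and ranks a backlog by the product; it is a simple qualitative model for illustration, not the only way to combine the two factors.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    name: str
    impact: int      # hypothetical scale: 1 (low) .. 5 (high)
    likelihood: int  # hypothetical scale: 1 (rare) .. 5 (almost certain)

    @property
    def risk(self) -> int:
        # Simple qualitative model: risk = impact x likelihood.
        return self.impact * self.likelihood


def prioritize(vulns):
    """Return vulnerabilities ordered from highest to lowest risk score."""
    return sorted(vulns, key=lambda v: v.risk, reverse=True)


# Hypothetical backlog of identified vulnerabilities.
backlog = [
    Vulnerability("Outdated TLS on mail relay", impact=3, likelihood=2),
    Vulnerability("Unpatched RCE on web server", impact=5, likelihood=4),
    Vulnerability("Default SNMP community string", impact=2, likelihood=4),
]
for v in prioritize(backlog):
    print(v.risk, v.name)
```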

Configuration Baseline

A baseline is a floor or minimum standard that is required. Configuration baselines are the security settings that are required on devices of various types. These settings should be driven by the results of the vulnerability and risk management processes.

One practice that can make maintaining security simpler is to create and deploy standard images that have been secured with security baselines. A security baseline is a set of configuration settings that provide a floor of minimum security in the image being deployed.

Security baselines can be controlled through the use of Group Policy in Windows. These policy settings can be made in the image and applied to both users and computers. These settings are refreshed periodically through a connection to a domain controller and cannot be altered by the user. It is also quite common for the deployment image to include all of the most current operating system updates and patches as well. This creates consistency across devices and helps prevent security issues caused by human error in configuration.

When a network makes use of these types of technologies, the administrators have created a standard operating environment. The advantages of such an environment are more consistent behavior of the network and simpler support issues. Systems should be scanned weekly to detect changes to the baseline.
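Detecting changes to the baseline comes down to comparing each host's current settings against the required values. The following minimal sketch assumes that both the baseline and the scanned settings are available as simple name/value mappings; the setting names and values are illustrative and are not taken from any particular Group Policy export format.

```python
def find_drift(baseline: dict, current: dict) -> dict:
    """Compare a host's current settings against the configuration baseline.

    Returns a mapping of setting -> (expected, actual) for every baseline
    setting that is missing from the host or has a different value.
    Settings on the host that the baseline does not mention are ignored.
    """
    drift = {}
    for setting, expected in baseline.items():
        actual = current.get(setting)  # None if the setting is absent
        if actual != expected:
            drift[setting] = (expected, actual)
    return drift


# Illustrative baseline and weekly scan result for one workstation.
baseline = {
    "MinimumPasswordLength": 14,
    "LockoutBadCount": 5,
    "SMBv1Enabled": False,
    "FirewallEnabled": True,
}
current = {
    "MinimumPasswordLength": 8,
    "LockoutBadCount": 5,
    "SMBv1Enabled": True,
}
for setting, (expected, actual) in find_drift(baseline, current).items():
    print(f"{setting}: expected {expected!r}, found {actual!r}")
```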

Security professionals should help guide their organization through the process of establishing the security baselines. If an organization implements very strict baselines, it will provide a higher level of security but can actually be too restrictive.

If an organization implements a very lax baseline, it will provide a lower level of security and will likely result in security breaches. Security professionals should understand the balance between protecting the organizational assets and allowing users access and should work to ensure that both ends of this spectrum are understood.

Patching

Patch management, or patching, is often seen as a subset of configuration management. Software patches are updates released by vendors that either fix functional issues with or close security loopholes in operating systems, applications, and versions of firmware that run on network devices.

To ensure that all devices have the latest patches installed, you should deploy a formal system to ensure that all systems receive the latest updates after thorough testing in a non-production environment. It is impossible for a vendor to anticipate every possible impact a change might have on business-critical systems in a network. The enterprise is responsible for ensuring that patches do not adversely impact operations.

The patch management life cycle includes the following steps:

Step 1. Determine the priority of the patches and schedule the patches for deployment.

Step 2. Test the patches prior to deployment to ensure that they work properly and do not cause system or security issues.

Step 3. Install the patches in the live environment.

Step 4. After the patches are deployed, ensure that they work properly.
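The life cycle only works if the steps happen in order; in particular, no patch should reach the live environment untested. The sketch below models the four steps as a small state machine that refuses to skip or repeat a step. The class and method names and the patch identifier are hypothetical; a real patch management system would track this state for you.

```python
from enum import IntEnum


class PatchStage(IntEnum):
    PRIORITIZED = 1  # Step 1: priority determined and deployment scheduled
    TESTED = 2       # Step 2: tested in a non-production environment
    INSTALLED = 3    # Step 3: installed in the live environment
    VERIFIED = 4     # Step 4: confirmed to work properly after deployment


class Patch:
    def __init__(self, patch_id: str):
        self.patch_id = patch_id
        self.stage = None  # the patch has not entered the life cycle yet

    def advance(self, next_stage: PatchStage) -> None:
        """Move to the next step, refusing to skip or repeat a step."""
        if self.stage is PatchStage.VERIFIED:
            raise ValueError(f"{self.patch_id}: life cycle already complete")
        expected = (PatchStage.PRIORITIZED if self.stage is None
                    else PatchStage(self.stage + 1))
        if next_stage is not expected:
            raise ValueError(f"{self.patch_id}: cannot move to {next_stage.name}; "
                             f"next step is {expected.name}")
        self.stage = next_stage


patch = Patch("PATCH-0001")  # hypothetical identifier
patch.advance(PatchStage.PRIORITIZED)
try:
    patch.advance(PatchStage.INSTALLED)  # skipping Step 2 (testing) is refused
except ValueError as err:
    print(err)
```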

Many organizations deploy a centralized patch management system to ensure that patching is deployed in a timely manner. With this system, administrators can test and review all patches before deploying them to the systems they affect. Administrators can schedule the updates to occur during non-peak hours.

Hardening

Another of the ongoing goals of operations security is to ensure that all systems have been hardened to the extent that is possible and still provide functionality. The hardening can be accomplished both on physical and logical bases. From a logical perspective:

• Remove unnecessary applications.

• Disable unnecessary services.

• Block unrequired ports.

• Tightly control the connecting of external storage devices and media (if it’s allowed at all).
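Each logical hardening step amounts to comparing what is actually on a host against what the hardened build allows. The following sketch reports the applications to remove, services to disable, and ports to block. It assumes the inventory (installed applications, running services, listening ports) has already been collected by some other tool; the function name and sample values are hypothetical.

```python
def hardening_report(installed_apps, running_services, listening_ports,
                     approved_apps, approved_services, approved_ports):
    """List items found on a host that fall outside the approved hardened build."""
    return {
        "remove_applications": sorted(set(installed_apps) - set(approved_apps)),
        "disable_services": sorted(set(running_services) - set(approved_services)),
        "block_ports": sorted(set(listening_ports) - set(approved_ports)),
    }


# Hypothetical inventory for a web server and its approved build.
report = hardening_report(
    installed_apps={"openssh-server", "telnetd", "nginx"},
    running_services={"sshd", "nginx", "cups"},
    listening_ports={22, 23, 80, 443, 631},
    approved_apps={"openssh-server", "nginx"},
    approved_services={"sshd", "nginx"},
    approved_ports={22, 80, 443},
)
print(report)
```

Using an allowlist (what is approved) rather than a blocklist means that anything unexpected shows up in the report by default.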

Compensating Controls

Not all vulnerabilities can be eliminated. In some cases, they can only be mitigated. This can be done by implementing compensating controls (also known as countermeasures or safeguards). A compensating control does not eliminate the vulnerability; instead, it reduces the risk that the vulnerability will be exploited. Three things must be considered when implementing a compensating control: vulnerability, threat, and risk. For example, a good compensating control might be to implement the appropriate access control list (ACL) and encrypt the data. The ACL protects the integrity of the data, and the encryption protects the confidentiality of the data.

Note

For more information on compensating controls, see http://pcidsscompliance.net/overview/what-are-compensating-controls/.

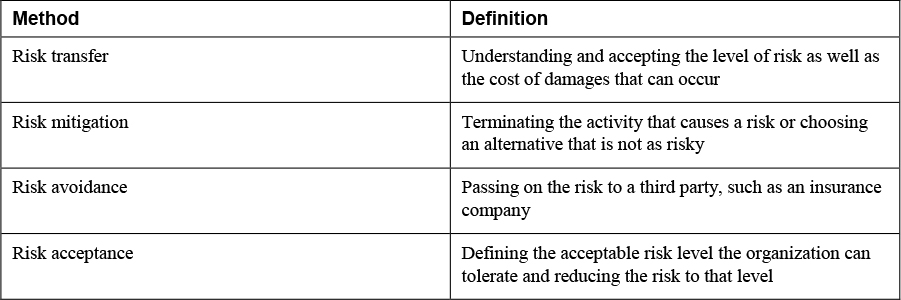

Risk Acceptance

You learned about risk management in Chapter 2. Part of the risk management process is deciding how to address a vulnerability. There are several ways to react. Risk reduction is the process of altering elements of the organization in response to risk analysis. After an organization understands its risk, it must determine how to handle the risk. The following four basic methods are used to handle risk:

• Risk avoidance: Terminating the activity that causes a risk or choosing an alternative that is not as risky

• Risk transfer: Passing on the risk to a third party, such as an insurance company

• Risk mitigation: Defining the acceptable risk level the organization can tolerate and reducing the risk to that level

• Risk acceptance: Understanding and accepting the level of risk as well as the cost of damages that can occur

Verification of Mitigation

Once a threat has been remediated, you should verify that the mitigation has solved the issue. You should also take steps to ensure that all is back to its normal secure state. These steps validate that you are finished and can move on to taking corrective actions with respect to the lessons learned.

• Patching: In many cases, a threat or an attack is made possible by missing security patches. You should update or at least check for updates for a variety of components. This includes all patches for the operating system, updates for any applications that are running, and updates to all anti-malware software that is installed. While you are at it, check for any firmware update the device may require. This is especially true of hardware security devices such as firewalls, IDSs, and IPSs. If any routers or switches are compromised, check for software and firmware updates.

• Permissions: Many times an attacker compromises a device by altering the permissions, either in the local database or in entries related to the device in the directory service server. All permissions should undergo a review to ensure that all are in the appropriate state. The appropriate state might not be the state they were in before the event. Sometimes you may discover that although permissions were not set in a dangerous way prior to an event, they are not correct. Make sure to check the configuration database to ensure that settings match prescribed settings. You should also make changes to the permissions based on lessons learned during an event. In that case, ensure that the new settings undergo a change control review and that any approved changes are reflected in the configuration database.

• Scanning: Even after you have taken all steps described thus far, consider using a vulnerability scanner to scan the devices or the network of devices that were affected. Make sure before you do so that you have updated the scanner so it can recognize the latest vulnerabilities and threats. This will help catch any lingering vulnerabilities that might still be present.

• Verify logging/communication to security monitoring: To ensure that you will have good security data going forward, you need to ensure that all logs related to security are collecting data. Pay special attention to the manner in which the logs react when full. With some settings, the log begins to overwrite older entries with new entries. With other settings, the service stops collecting events when the log is full. Security log entries need to be preserved. This may require manual archiving of the logs and subsequent clearing of the logs. Some logs make this possible automatically, whereas others require a script. If all else fails, check the log often to assess its state. Many organizations send all security logs to a central location. This could be a Syslog server, or it could be a security information and event management (SIEM) system. These systems not only collect all the logs but also use the information to make inferences about possible attacks. Having access to all logs enables the system to correlate all the data from all responding devices. Regardless of whether you are logging to a Syslog server or a SIEM system, you should verify that all communications between the devices and the central server are occurring without a hitch. This is especially true if you had to rebuild the system manually rather than restore from an image, as there would be more opportunity for human error in the rebuilding of the device.
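Where a log source cannot archive itself when full, a small script can preserve its entries before clearing it. The following is a minimal sketch, assuming a plain-text log file that the script is allowed to read and truncate; the function name, paths, and size threshold are hypothetical. Note that entries written between the copy and the truncation could be lost, which is why production systems usually rely on the logging service's own rotation or on forwarding to a Syslog server or SIEM.

```python
import gzip
import shutil
from datetime import datetime, timezone
from pathlib import Path


def archive_if_full(log_path: Path, archive_dir: Path, max_bytes: int):
    """Archive and clear a log file once it reaches max_bytes.

    The current contents are gzip-compressed into archive_dir under a
    timestamped name, and only then is the log truncated, so entries are
    preserved instead of overwritten. Returns the archive path, or None if
    the log has not reached the threshold.
    """
    if not log_path.exists() or log_path.stat().st_size < max_bytes:
        return None
    archive_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    archive_path = archive_dir / f"{log_path.name}.{stamp}.gz"
    with log_path.open("rb") as src, gzip.open(archive_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    # Truncate only after the archive has been written successfully.
    log_path.write_bytes(b"")
    return archive_path
```

A scheduler (such as cron or Windows Task Scheduler) could run a script like this at an interval short enough that the log never fills between runs.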

Scanning Parameters and Criteria

Scanning is the process of using scanning tools to identify security issues. Typical issues discovered include missing patches, weak passwords, and insecure configurations. While types of scanning are covered in Chapter 4, “Analyzing Assessment Output,” let’s look at some issues and considerations supporting the process.

Risks Associated with Scanning Activities

While vulnerability scanning is an advisable and valid process, there are some risks to note:

• A false sense of security can be introduced because scans are not error free.

• Many tools rely on a database of known vulnerabilities and are only as valid as the latest update.

• Identifying vulnerabilities does not in and of itself reduce your risk or improve your security.

Vulnerability Feed

Vulnerability feeds are RSS feeds dedicated to the sharing of information about the latest vulnerabilities. Subscribing to these feeds can enhance the knowledge of the scanning team and can keep the team abreast of the latest issues. For example, the National Vulnerability Database is the U.S. government repository of standards-based vulnerability management data represented using the Security Content Automation Protocol (SCAP) (covered in Chapter 14).
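Because vulnerability feeds are RSS documents, a team can filter them automatically for the products it actually runs. The sketch below parses RSS 2.0 items with Python's standard-library XML parser and keeps entries that mention given product names. The feed content, CVE-style identifiers, and URLs are placeholders; a real feed would be fetched over HTTPS, and its exact structure depends on the publisher.

```python
import xml.etree.ElementTree as ET


def parse_feed(rss_xml: str):
    """Return (title, link) pairs for each <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return [
        (item.findtext("title", default=""), item.findtext("link", default=""))
        for item in root.iter("item")
    ]


def relevant(entries, keywords):
    """Keep only entries whose title mentions one of the given product names."""
    lowered = [k.lower() for k in keywords]
    return [(t, l) for t, l in entries if any(k in t.lower() for k in lowered)]


# Placeholder feed content for illustration only.
SAMPLE = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Example vulnerability feed</title>
  <item><title>CVE-0000-0001: example flaw in ExampleServer</title>
        <link>https://feeds.example.com/vuln/1</link></item>
  <item><title>CVE-0000-0002: example flaw in ExampleClient</title>
        <link>https://feeds.example.com/vuln/2</link></item>
</channel></rss>"""

for title, link in relevant(parse_feed(SAMPLE), ["ExampleClient"]):
    print(title, link)
```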

Scope

The scope of a scan defines what will be scanned and what type of scan will be performed. It defines what areas of the infrastructure will be scanned, and this part of the scope should therefore be driven by where the assets of concern are located. Limiting the scan areas helps ensure that accidental scanning of assets and devices not under the direct control of the organization does not occur (because it could cause legal issues). Scope might also include times of day when scanning should not occur.
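One way to enforce the scope before a scan starts is to check every target against the address ranges the organization is authorized to scan, minus any explicit exclusions. The sketch below uses Python's standard `ipaddress` module; the ranges, the excluded partner-managed block, and the target list are hypothetical (203.0.113.5 is a documentation address standing in for a host the organization does not control).

```python
import ipaddress


def in_scope(target: str, authorized, excluded=()) -> bool:
    """True if target is inside an authorized range and not explicitly excluded."""
    addr = ipaddress.ip_address(target)
    if any(addr in ipaddress.ip_network(net) for net in excluded):
        return False
    return any(addr in ipaddress.ip_network(net) for net in authorized)


# Hypothetical scope: the organization's own subnets, minus a partner-managed block.
AUTHORIZED = ["10.10.0.0/16", "192.168.50.0/24"]
EXCLUDED = ["10.10.200.0/24"]

targets = ["10.10.1.15", "10.10.200.7", "203.0.113.5", "192.168.50.20"]
scan_list = [t for t in targets if in_scope(t, AUTHORIZED, EXCLUDED)]
print(scan_list)
```

Checking exclusions first means a host that falls in both an authorized range and an excluded block is never scanned.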

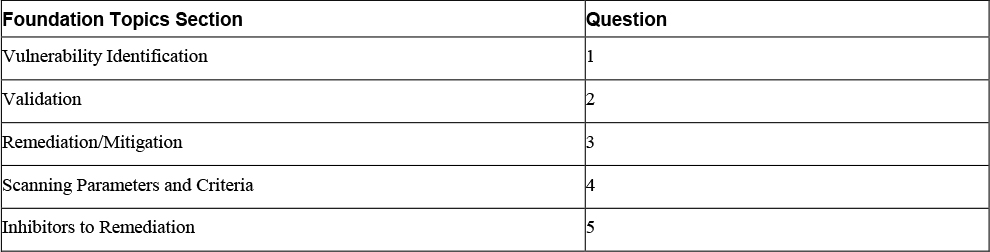

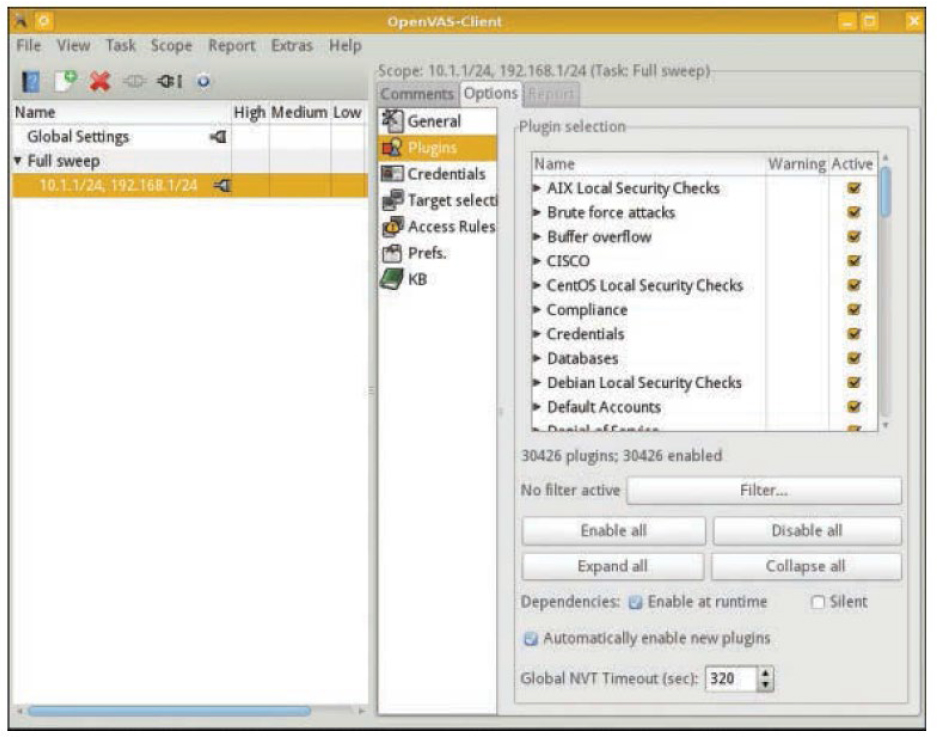

In the OpenVAS vulnerability scanner, you can set the scope by setting the plug-ins and the targets. Plug-ins define the scans to be performed, and targets specify the machines. Figure 3-1 shows where plug-ins are chosen, and Figure 3-2 shows where the targets are set.

Figure 3-1 Selecting Plug-ins in OpenVAS

Figure 3-2 Selecting Targets in OpenVAS

Credentialed vs. Non-credentialed

Another decision that needs to be made before performing a vulnerability scan is whether to perform a credentialed scan or a non-credentialed scan. A credentialed scan is performed using an account that has administrative rights to the host being scanned, while a non-credentialed scan is performed without such rights.

Non-credentialed scans generally run faster and require less setup but do not generate the same quality of information as a credentialed scan. This is because credentialed scans can enumerate information from the host itself, whereas non-credentialed scans can only look at ports and only enumerate software that will respond on a specific port. Credentialed scanning also has the following benefits:

• Operations are executed on the host itself rather than across the network.

• A more definitive list of missing patches is provided.

• Client-side software vulnerabilities are uncovered.

• A credentialed scan can read password policies, obtain a list of USB devices, check antivirus software configurations, and even enumerate Bluetooth devices attached to scanned hosts.
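To see why a non-credentialed scan yields less information, consider what it can observe: only what a service sends back over an open port. The sketch below performs a simple banner grab with Python's standard `socket` module. It is an illustration of the limitation, not how any particular scanner works, and it should only be run against hosts you are authorized to scan.

```python
import socket


def grab_banner(host: str, port: int, timeout: float = 3.0) -> str:
    """Connect to host:port and return whatever the service sends first.

    This is all a non-credentialed check can see: software that answers on
    an open port. Services that wait for the client to speak first (such as
    HTTP) produce an empty string here.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            data = sock.recv(1024)
        except socket.timeout:
            return ""
    return data.decode("utf-8", errors="replace").strip()
```

A banner such as an SSH version string suggests which software is listening, but it says nothing about installed patches, local password policies, or client-side applications, which is exactly the information a credentialed scan can read from the host itself.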

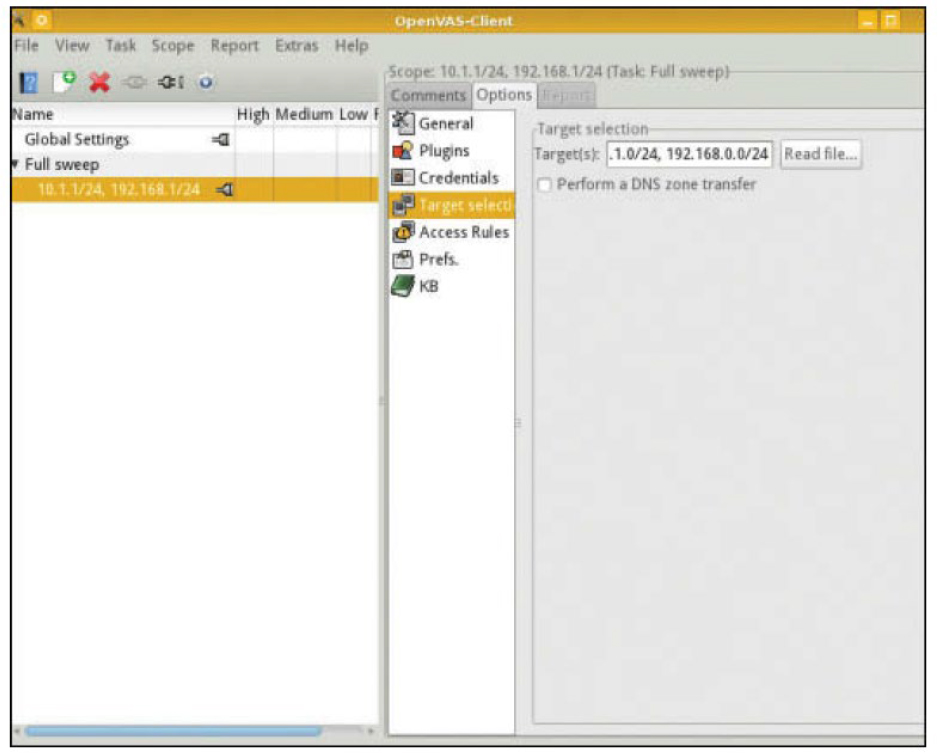

Figure 3-3 shows that when you create a new scan policy in Nessus, one of the available steps is to set credentials. Here you can see that Windows credentials are chosen as the type, and the SMB account and password are set.

Figure 3-3 Setting Credentials for a Scan in Nessus

Server-based vs. Agent-based

Vulnerability scanners can use agents that are installed on the devices, or they can be agentless. While many vendors argue that using agents is always best, there are advantages and disadvantages to both, as presented in Table 3-2.

Table 3-2 Server-Based vs. Agent-Based Scanning

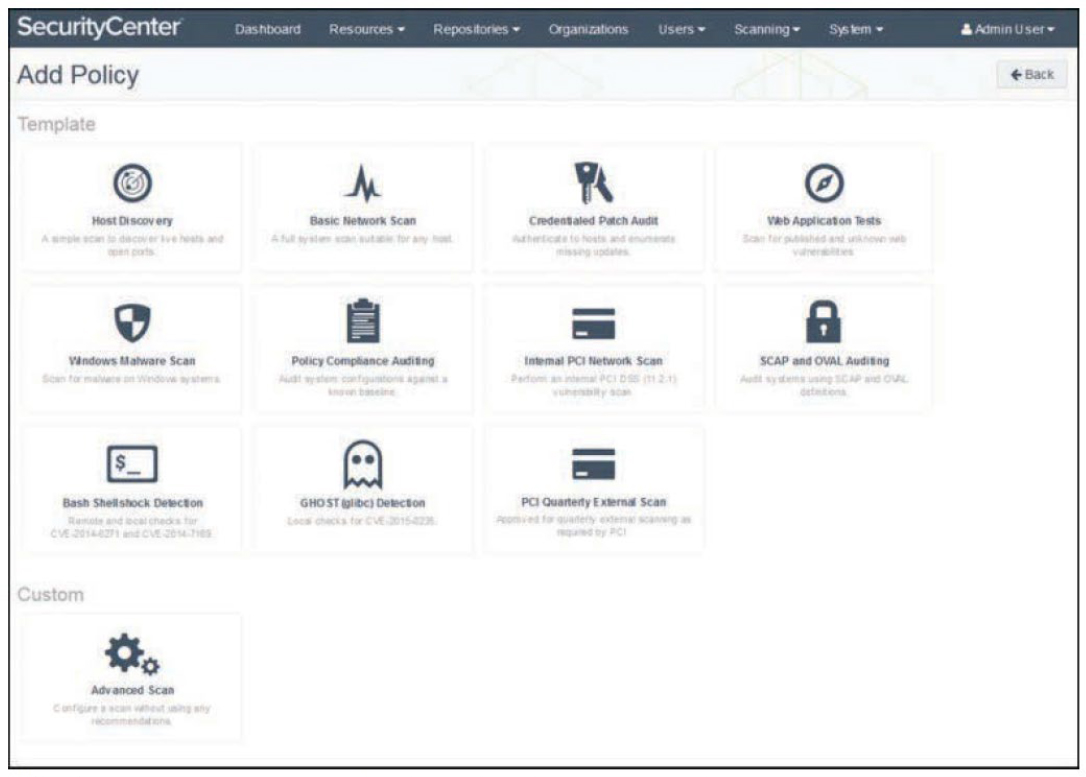

Some scanners can perform both agent-based scanning and server-based scanning (also called agentless or sensor-based scanning). For example, Figure 3-4 shows the Nessus templates library with both categories of templates available.

Figure 3-4 Nessus Template Library

Internal vs. External

Scans can be performed from within the network perimeter or from outside the perimeter. This choice has a big effect on the results and their interpretation. Typically the type of scan is driven by what the tester is looking for. If the tester’s area of interest is vulnerabilities that can be leveraged from outside the perimeter to penetrate the perimeter, then an external scan is in order. In this type of scan, either the sensors of the appliance are placed outside the perimeter or, in the case of software running on a device, the device itself is placed outside the perimeter.

On the other hand, if the tester’s area of interest is vulnerabilities that exist within the perimeter—that is, vulnerabilities that could be leveraged by outsiders who have penetrated the perimeter or by malicious insiders (your own people)—then an internal scan is indicated. In this case, either the sensors of the appliance are placed inside the perimeter or, in the case of software running on a device, the device itself is placed inside the perimeter.

Special Considerations

Just as the requirements of the vulnerability management program were defined in the beginning of the process, scanning criteria must be settled upon before scanning begins. This will ensure that the proper data is generated and that the conditions under which the data will be collected are well understood. This will result in a better understanding of the context in which the data was obtained and better analysis. Some of the criteria that might be considered are described in the following sections.

Types of Data

The types of data with which you are concerned should have an effect on how you run the scan. Many tools offer the capability to focus on certain types of vulnerabilities that relate specifically to certain data types.

Technical Constraints

In some cases the scan will be affected by technical constraints. For example, the way in which you have segmented the network might require you to run the scan multiple times from various locations in the network. You will also be limited by the technical capabilities of the scan tool you use.

Workflow

Workflow can also influence the scan. You might be limited to running scans at certain times because scanning at other times would disrupt workflow. While security is important, it isn't helpful if it detracts from the business processes that keep the organization in business.
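A scan scheduler can respect these workflow limits by checking a blackout window before launching a job. The following sketch assumes a single daily blackout window given as start and end times, which may wrap past midnight; the function name and the example windows are hypothetical, and real schedulers typically also account for weekdays, holidays, and time zones.

```python
from datetime import datetime, time


def scanning_allowed(now: datetime, blackout_start: time, blackout_end: time) -> bool:
    """Return False if `now` falls inside the daily blackout window.

    The window includes blackout_start and excludes blackout_end, and it may
    wrap past midnight (for example, 22:00 to 02:00 for a nightly batch run).
    """
    t = now.time()
    if blackout_start <= blackout_end:
        in_blackout = blackout_start <= t < blackout_end
    else:  # window crosses midnight
        in_blackout = t >= blackout_start or t < blackout_end
    return not in_blackout


# Hypothetical policy: no scanning during business hours (08:00 to 18:00).
print(scanning_allowed(datetime(2024, 3, 4, 10, 30), time(8), time(18)))
print(scanning_allowed(datetime(2024, 3, 4, 20, 0), time(8), time(18)))
```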

Sensitivity Levels

Scanning tools have sensitivity level settings that impact both the number of results and the tool’s judgment of the results. Most systems assign a default severity level to each vulnerability. In some cases, security analysts may find that certain events that the system is tagging as vulnerabilities are actually not vulnerabilities but that the system has mischaracterized them. In other cases, an event might be a vulnerability but the severity level assigned is too extreme or not extreme enough. In that case the analyst can either dismiss the vulnerability, which means the system stops reporting it, or manually define a severity level for the event that is more appropriate. Keep in mind that these systems are not perfect.
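The interplay between a tool's default severity and an analyst's judgment can be sketched as follows. The default severity here comes from the CVSS v3.x qualitative rating scale (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0); the override mechanism, function names, and finding IDs are hypothetical and do not reflect the API of any particular scanner.

```python
DISMISSED = "dismissed"


def default_severity(cvss: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= cvss <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if cvss == 0.0:
        return "None"
    if cvss < 4.0:
        return "Low"
    if cvss < 7.0:
        return "Medium"
    if cvss < 9.0:
        return "High"
    return "Critical"


def apply_overrides(findings, overrides):
    """Apply analyst decisions to scanner findings.

    findings: list of (finding_id, cvss) pairs reported by the scanner.
    overrides: finding_id -> replacement severity, or DISMISSED to stop
        reporting the finding.
    Returns finding_id -> final severity for findings that are still reported.
    """
    report = {}
    for finding_id, cvss in findings:
        decision = overrides.get(finding_id)
        if decision == DISMISSED:
            continue  # analyst judged this is not a real vulnerability
        report[finding_id] = decision or default_severity(cvss)
    return report


# Hypothetical findings and analyst decisions.
findings = [("F-1", 9.8), ("F-2", 5.3), ("F-3", 7.5)]
overrides = {"F-2": DISMISSED, "F-3": "Medium"}
print(apply_overrides(findings, overrides))
```

Keeping overrides separate from the scanner's raw results preserves an audit trail of which severities were changed by an analyst and why.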

Sensitivity also refers to how deeply a scan probes each host. Scanning tools have templates that can be used to perform certain types of scans. These are two of the most common templates in use:

• Discovery scans: These scans are typically used to create an asset inventory of all hosts and all available services.

• Assessment scans: These scans are more comprehensive than discovery scans and can identify misconfigurations, malware, application settings that are against policy, and weak passwords. These scans have a significant impact on the scanned device.
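At its simplest, a discovery scan determines which services are reachable on a host. The following Python sketch performs a basic TCP connect check using the standard `socket` module; the target address, port list, timeout, and the `discover_open_ports` function are assumptions for illustration. An assessment scan goes well beyond this, checking configurations, settings, and passwords on each host.

```python
import socket


def discover_open_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection.

    A completed TCP handshake means some service is listening on the port.
    This check does not identify the service, its version, or any weakness.
    """
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising.
                if s.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # timeout or other socket error: treat as not open
    return open_ports


if __name__ == "__main__":
    # Hypothetical target and port list for an inventory-style sweep.
    print(discover_open_ports("127.0.0.1", [22, 80, 443, 3389]))
```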

Figure 3-5 shows the All Templates page in Nessus, with scanning templates like the ones just discussed.

Figure 3-5 Scanning Templates in Nessus

Regulatory Requirements

Does the organization operate in an industry that is regulated? If so, all regulatory requirements must be recorded, and the vulnerability assessment must be designed to support all requirements. The following are some examples of industries in which security requirements exist:

• Finance (for example, banks and brokerages)

• Medical (for example, hospitals, clinics, and insurance companies)

• Retail (for example, organizations that process credit card and customer information)

Legislation such as the following can affect organizations operating in these industries:

• Sarbanes-Oxley Act (SOX): The Public Company Accounting Reform and Investor Protection Act of 2002, more commonly known as the Sarbanes-Oxley Act (SOX), affects any organization that is publicly traded in the United States. It controls the accounting methods and financial reporting for the organizations and stipulates penalties and even jail time for executive officers who fail to comply with its requirements.

• Health Insurance Portability and Accountability Act (HIPAA): HIPAA, also known as the Kennedy-Kassebaum Act, affects all healthcare facilities, health insurance companies, and healthcare clearinghouses. It is enforced by the Office for Civil Rights (OCR) of the Department of Health and Human Services (HHS). It provides standards and procedures for storing, using, and transmitting medical information and healthcare data. HIPAA overrides state laws unless the state laws are stricter. This act directly affects the security of protected health information (PHI).

• Gramm-Leach-Bliley Act (GLBA) of 1999: The Gramm-Leach-Bliley Act (GLBA) of 1999 affects all financial institutions, including banks, loan companies, insurance companies, investment companies, and credit card providers. It provides guidelines for securing all financial information and prohibits sharing financial information with third parties. This act directly affects the security of personally identifiable information (PII).

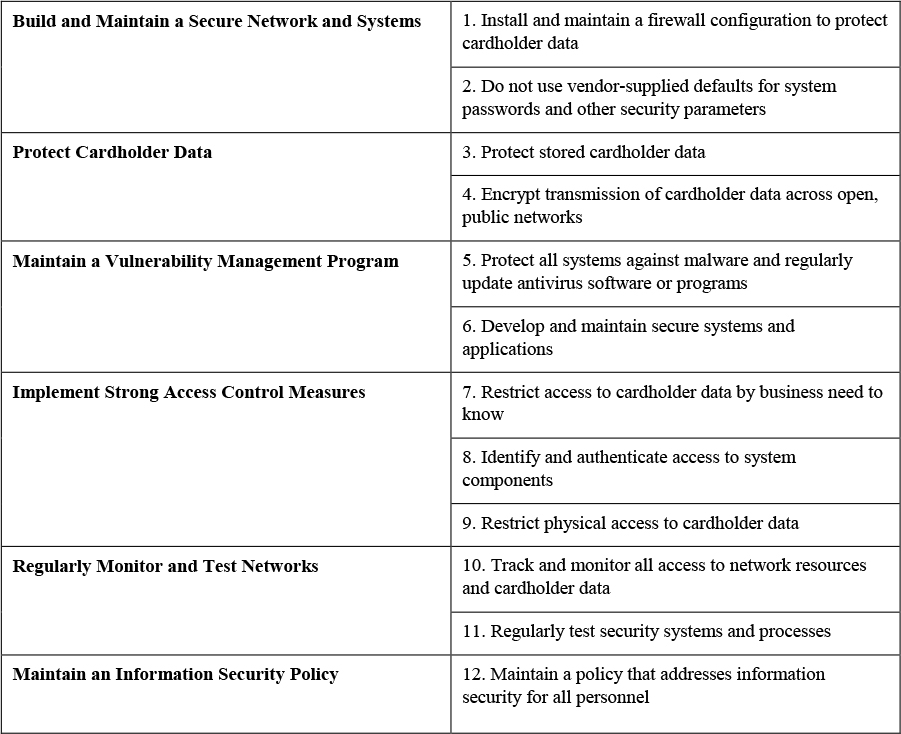

• Payment Card Industry Data Security Standard (PCI DSS): PCI DSS v3.2.1, released in 2018, is the latest version of the PCI DSS standard as of this writing. It encourages and enhances cardholder data security and facilitates the broad adoption of consistent data security measures globally. Table 3-3 shows a high-level overview of the PCI DSS standard.

Table 3-3 High-Level Overview of PCI DSS

Segmentation

Segmentation is the process of dividing a network at either Layer 2 or Layer 3. When VLANs are used, there is segmentation at both Layer 2 and Layer 3, and with IP subnets, there is segmentation at Layer 3. Segmentation is usually done for one or both of the following reasons:

• To create smaller, less congested subnets

• To create security borders

In either case, segmentation can affect how you conduct a vulnerability scan. By segmenting critical assets and resources from less critical systems, you can restrict the scan to the segments of interest, reducing the time to conduct a scan while reducing the amount of irrelevant data. This is not to suggest that you should not scan the less critical parts of the network; it’s just that you can adopt a less robust schedule for those scans.
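The following Python sketch illustrates scoping by segment using the standard `ipaddress` module. The segment names, subnets, scan intervals, and the `segment_of` and `targets_due` functions are hypothetical; the point is that critical segments are scanned on a tighter schedule while less critical ones are scanned less often, and hosts outside any defined segment are left out of scope.

```python
import ipaddress

# Hypothetical segments: critical assets are isolated from general user subnets.
SEGMENTS = {
    "cardholder-data": ipaddress.ip_network("10.10.0.0/24"),
    "servers": ipaddress.ip_network("10.20.0.0/24"),
    "user-workstations": ipaddress.ip_network("10.30.0.0/22"),
}

# Hypothetical scan frequency per segment, in days.
SCAN_INTERVAL_DAYS = {"cardholder-data": 7, "servers": 7, "user-workstations": 30}


def segment_of(addr):
    """Return the name of the segment containing `addr`, or None."""
    ip = ipaddress.ip_address(addr)
    for name, net in SEGMENTS.items():
        if ip in net:
            return name
    return None


def targets_due(inventory, days_since_last_scan):
    """Select hosts whose segment is due for a scan.

    days_since_last_scan maps segment name -> days since it was last scanned.
    """
    due = []
    for addr in inventory:
        seg = segment_of(addr)
        if seg is None:
            continue  # outside the defined scan scope
        if days_since_last_scan.get(seg, float("inf")) >= SCAN_INTERVAL_DAYS[seg]:
            due.append(addr)
    return due


inventory = ["10.10.0.15", "10.20.0.8", "10.30.1.44", "192.168.1.5"]
```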

Intrusion Prevention System (IPS), Intrusion Detection System (IDS), and Firewall Settings

The settings that exist on the security devices will impact the scan and in many cases are the source of a technical constraint, as mentioned earlier. Scans might be restricted by firewall settings, and the scan can cause alerts to be generated by your intrusion devices. Let’s talk a bit more about these devices.

Vulnerability scanners are not the only tools used to identify vulnerabilities. The following systems should also be implemented as a part of a comprehensive solution.

IDS/IPS

While you can use packet analyzers to manually monitor the network for issues during environmental reconnaissance, a less labor-intensive and more efficient way to detect issues is through the use of intrusion detection systems (IDSs) and intrusion prevention systems (IPSs). An IDS is responsible for detecting unauthorized access or attacks against systems and networks. It can verify, itemize, and characterize threats from outside and inside the network. Most IDSs are programmed to react in certain ways in specific situations. Event notification and alerts are crucial to an IDS. They inform administrators and security professionals when and where attacks are detected. IDS implementations are further divided into the following categories:

• Signature-based: This type of IDS analyzes traffic and compares it to attack or state patterns, called signatures, that reside within the IDS database. A signature-based IDS is also referred to as a misuse-detection system. Although this type of IDS is very popular, it can recognize only attacks that match its database and is only as effective as the signatures provided. Frequent database updates are necessary. There are two main types of signature-based IDSs:

• Pattern matching: The IDS compares traffic to a database of attack patterns. The IDS carries out specific steps when it detects traffic that matches an attack pattern.

• Stateful matching: The IDS records the initial operating system state. Any changes to the system state that specifically violate the defined rules result in an alert or notification being sent.

• Anomaly-based: This type of IDS analyzes traffic and compares it to normal traffic to determine whether that traffic is a threat. It is also referred to as a behavior-based, or profile-based, system. The problem with this type of system is that any traffic outside expected norms is reported, resulting in more false positives than you see with signature-based systems. There are three main types of anomaly-based IDSs:

• Statistical anomaly-based: The IDS samples the live environment to record activities. The longer the IDS is in operation, the more accurate the profile that is built. However, developing a profile that does not have a large number of false positives can be difficult and time-consuming. Thresholds for activity deviations are important in this IDS. Too low a threshold results in false positives, whereas too high a threshold results in false negatives.

• Protocol anomaly-based: The IDS has knowledge of the protocols it will monitor. A profile of normal usage is built and compared to activity.

• Traffic anomaly-based: The IDS tracks traffic pattern changes. A sample of normal traffic patterns is recorded, and all future traffic patterns are compared to that sample. Adjusting the threshold can reduce the number of false positives or false negatives. This type of IDS is excellent for detecting unknown attacks, but user activity might not be static enough to effectively implement this system.

• Rule or heuristic based: This type of IDS is an expert system that uses a knowledge base, an inference engine, and rule-based programming. The knowledge is configured as rules. The data and traffic are analyzed, and the rules are applied to the analyzed traffic. The inference engine uses its intelligent software to “learn.” When characteristics of an attack are met, they trigger alerts or notifications. This is often referred to as an IF/THEN, or expert, system.
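To make the contrast between signature-based pattern matching and statistical anomaly detection concrete, the following Python sketch implements a naive byte-pattern matcher and a mean/standard-deviation threshold check. The signature names and patterns, the baseline values, the default threshold of three standard deviations, and all function names are hypothetical; production IDSs use far richer rule languages and profiling methods.

```python
import statistics

# --- Signature-based (pattern matching) ---
# Hypothetical signature database: name -> byte pattern seen in known attacks.
SIGNATURES = {
    "directory-traversal": b"../../",
    "sql-injection-probe": b"' OR 1=1",
}


def match_signatures(payload: bytes):
    """Return the names of every signature whose pattern appears in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]


# --- Statistical anomaly-based ---
def build_profile(samples):
    """Learn a baseline (mean, standard deviation) from normal activity samples."""
    return statistics.mean(samples), statistics.stdev(samples)


def is_anomalous(value, profile, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean.

    A lower threshold flags more activity (more false positives);
    a higher threshold flags less (more false negatives).
    """
    mean, stdev = profile
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold


# Hypothetical baseline: requests per minute observed during normal operation.
baseline = [98, 102, 100, 97, 103, 101, 99, 100]
profile = build_profile(baseline)
```

With this baseline the profile has a mean of 100 and a standard deviation of 2, so a reading of 104 is flagged only if the threshold is lowered below 2. A signature matcher, by contrast, never flags an attack whose pattern is missing from its database, however unusual the traffic looks.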

An application-based IDS is a specialized IDS that analyzes transaction log files for a single application. This type of IDS is usually provided as part of an application or can be purchased as an add-on.

An IPS is a system responsible for preventing attacks. When an attack begins, an IPS takes actions to contain the attack. An IPS, like an IDS, can be network or host based. Although an IPS can be signature or anomaly based, it can also use a rate-based metric that analyzes the volume of traffic as well as the type of traffic. In most cases, implementing an IPS is more costly than implementing an IDS because of the added security needed to contain attacks compared to the security needed to simply detect attacks. In addition, an IPS imposes a greater overall performance load than an IDS.
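A rate-based metric can be sketched as a sliding-window counter per traffic source. In this Python example, the `RateLimiter` class, the limit of events per window, and the use of explicit timestamps (rather than reading a clock) are assumptions made to keep the sketch simple and testable; it is not modeled on any specific IPS product.

```python
from collections import deque


class RateLimiter:
    """Sliding-window rate check of the kind a rate-based IPS might apply.

    Tracks event timestamps per source and reports when a source exceeds
    `max_events` within `window_seconds`.
    """

    def __init__(self, max_events, window_seconds):
        self.max_events = max_events
        self.window = window_seconds
        self.events = {}  # source IP -> deque of event timestamps

    def allow(self, source, timestamp):
        """Record an event; return False if the source should be blocked."""
        q = self.events.setdefault(source, deque())
        # Discard timestamps that have aged out of the window.
        while q and timestamp - q[0] >= self.window:
            q.popleft()
        # Blocked attempts are still recorded, so a flooding source stays blocked.
        q.append(timestamp)
        return len(q) <= self.max_events
```

For example, with `RateLimiter(max_events=3, window_seconds=1.0)`, a fourth packet from the same source within one second is rejected, while other sources are unaffected.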

HIDS/NIDS

The most common way to classify an IDS is based on its information source: network based or host based. The most common IDS, the network-based IDS (NIDS), monitors network traffic on a local network segment. To monitor traffic on the network segment, the network interface card (NIC) must be operating in promiscuous mode—a mode in which the NIC processes all traffic and not just the traffic directed to the host. A NIDS can only monitor the network traffic. It cannot monitor any internal activity that occurs within a system, such as an attack against a system that is carried out by logging on to the system’s local terminal. A NIDS is affected by a switched network because generally a NIDS monitors only a single network segment. A host-based IDS (HIDS) is an IDS that is installed on a single host and protects only that host.

Firewall Rule-Based and Logs

The network device that perhaps is most connected with the idea of security is the firewall. Firewalls can be software programs that are installed on server operating systems, or they can be appliances that have their own operating system. In either case, the job of firewalls is to inspect and control the type of traffic allowed. Firewalls can be discussed on the basis of their type and their architecture. They can also be physical devices or exist in a virtualized environment. The following sections look at them from all angles.

Firewall Types

When we discuss types of firewalls, we are focusing on the differences in the way they operate. Some firewalls make a more thorough inspection of traffic than others. Usually there is a trade-off between the performance of the firewall and the type of inspection it performs. A deep inspection of the contents of each packet results in the firewall having a detrimental effect on throughput, whereas a more cursory look at each packet has somewhat less of an impact on performance. It is therefore important to carefully select what traffic to inspect, keeping this trade-off in mind.

Packet-filtering firewalls are the least detrimental to throughput because they inspect only the header of a packet for allowed IP addresses or port numbers. Although even performing this function slows traffic, it involves only looking at the beginning of the packet and making a quick allow or disallow decision. Although packet-filtering firewalls serve an important function, they cannot prevent many attack types. They cannot prevent IP spoofing, attacks that are specific to an application, attacks that depend on packet fragmentation, or attacks that take advantage of the TCP handshake. More advanced inspection firewall types are required to stop these attacks.
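The following Python sketch shows what “inspecting only the header” means in practice: each decision uses only the source address, destination port, and protocol. The `Rule` class, the `filter_packet` function, the sample rules, and the first-match-wins evaluation with a default deny are assumptions for illustration, although first-match ordering is common in real firewalls.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    action: str    # "allow" or "deny"
    src: str       # source network in CIDR notation
    dst_port: int  # destination port, or 0 to match any port
    protocol: str  # "tcp" or "udp"


def filter_packet(rules, src_ip, dst_port, protocol, default="deny"):
    """Return the action of the first rule matching the packet header fields.

    Only header values are examined; the payload is never inspected.
    """
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (addr in ipaddress.ip_network(rule.src)
                and rule.protocol == protocol
                and rule.dst_port in (0, dst_port)):
            return rule.action
    return default  # implicit deny when no rule matches


# Hypothetical rule set: web traffic from anywhere, SSH only from an admin subnet.
RULES = [
    Rule("allow", "0.0.0.0/0", 443, "tcp"),
    Rule("allow", "10.0.5.0/24", 22, "tcp"),
    Rule("deny", "0.0.0.0/0", 0, "tcp"),
]
```

Because nothing beyond the header is checked, a packet that forges a source address in 10.0.5.0/24 would be allowed to reach port 22, which illustrates why packet filtering alone cannot prevent IP spoofing.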

Stateful firewalls are aware of the proper functioning of the TCP handshake, keep track of the state of all connections with respect to this process, and can recognize when packets that are trying to enter the network don’t make sense in the context of the TCP handshake. For example, an inbound packet with both the SYN flag and the ACK flag set should arrive at the firewall only as part of an existing handshake, in response to a packet with the SYN flag set that was sent from inside the network. An unsolicited SYN/ACK packet is the type of packet that the stateful firewall would disallow. A stateful firewall also has the ability to recognize other attack types that attempt to misuse this process. It does this by maintaining a state table about all current connections and the status of each connection process. This allows it to recognize any traffic that doesn’t make sense with the current state of the connection. Of course, maintaining this table and referencing it causes this firewall type to have more effect on performance than does a packet-filtering firewall.
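A state table can be sketched in a few lines of Python. This minimal example, with a hypothetical `StatefulFirewall` class and simplified state labels, tracks only connections initiated from inside the network and rejects an inbound SYN/ACK that does not answer a SYN it has already seen. Real stateful firewalls also handle teardown, timeouts, sequence numbers, and UDP, none of which is modeled here.

```python
class StatefulFirewall:
    """Minimal state table for TCP connections opened from the internal network.

    Entries are keyed by (internal_ip, internal_port, external_ip, external_port).
    """

    def __init__(self):
        self.state = {}

    def outbound(self, src, sport, dst, dport, flags):
        """Record an outbound packet; this sketch permits all outbound traffic."""
        key = (src, sport, dst, dport)
        if flags == {"SYN"}:
            self.state[key] = "SYN_SENT"
        elif "ACK" in flags and self.state.get(key) == "SYNACK_SEEN":
            self.state[key] = "ESTABLISHED"  # final ACK of the handshake
        return True

    def inbound(self, src, sport, dst, dport, flags):
        """Return True if an inbound packet fits a tracked connection."""
        key = (dst, dport, src, sport)  # look up the entry from the inside view
        current = self.state.get(key)
        if flags == {"SYN", "ACK"}:
            # A SYN/ACK is legitimate only as the reply to a SYN sent from inside.
            if current == "SYN_SENT":
                self.state[key] = "SYNACK_SEEN"
                return True
            return False
        # Other inbound packets are allowed only on established connections.
        return current == "ESTABLISHED"
```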

Proxy firewalls actually stand between each connection from the outside to the inside and make the connection on behalf of the endpoints. Therefore, there is no direct connection. The proxy firewall acts as a relay between the two endpoints. Proxy firewalls can operate at two different layers of the OSI model.

Circuit-level proxies operate at the session layer (Layer 5) of the OSI model. They make decisions based on the protocol header and session layer information. Because they do not do deep packet inspection (at Layer 7, the application layer), they are considered application independent and can be used for wide ranges of Layer 7 protocol types. A SOCKS firewall is an example of a circuit-level proxy firewall. It requires a SOCKS client on the computers. Many vendors have integrated their software with SOCKS to make using this type of firewall easier.

Application-level proxies perform deep packet inspection. This type of firewall understands the details of the communication process at Layer 7 for the application of interest. An application-level firewall maintains a different proxy function for each protocol. For example, for HTTP, the proxy can read and filter traffic based on specific HTTP commands. Operating at this layer requires each packet to be completely opened and closed, so this type of firewall has the greatest impact on performance.

Dynamic packet filtering does not describe a type of firewall; rather, it describes functionality that a firewall might or might not possess. When an internal computer attempts to establish a session with a remote computer, it places both a source and destination port number in the packet. For example, if the computer is making a request of a web server, because HTTP uses port 80, the destination is port 80. The source computer selects the source port at random from the numbers available above the well-known port numbers (that is, above 1023). Because predicting what that random number will be is impossible, creating a firewall rule that anticipates and allows traffic back through the firewall on that random port is impossible.

A dynamic packet-filtering firewall keeps track of that source port and dynamically adds a rule to the list to allow return traffic to that port.
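The following Python sketch models this behavior. The `DynamicPacketFilter` class, the addresses, and the removal of the rule when a session closes are assumptions for illustration; the port range follows the description above (above 1023), although real operating systems use their own configurable ephemeral port ranges.

```python
import random


class DynamicPacketFilter:
    """Adds a temporary inbound rule for the return traffic of each outbound request."""

    def __init__(self):
        # Each rule is (remote_ip, remote_port, local_ip, local_port).
        self.dynamic_rules = set()

    def outbound(self, local_ip, local_port, remote_ip, remote_port):
        # Remember the randomly chosen source port so replies can come back to it.
        self.dynamic_rules.add((remote_ip, remote_port, local_ip, local_port))

    def inbound_allowed(self, src_ip, src_port, dst_ip, dst_port):
        """Allow inbound traffic only if it matches a dynamically added rule."""
        return (src_ip, src_port, dst_ip, dst_port) in self.dynamic_rules

    def close(self, local_ip, local_port, remote_ip, remote_port):
        # Remove the rule when the session ends so the port is not left open.
        self.dynamic_rules.discard((remote_ip, remote_port, local_ip, local_port))


def pick_source_port():
    # Random port above the well-known range (1024-65535), as described in the text.
    return random.randint(1024, 65535)


if __name__ == "__main__":
    fw = DynamicPacketFilter()
    sport = pick_source_port()
    fw.outbound("10.0.0.8", sport, "203.0.113.5", 80)  # request to a web server
    print(fw.inbound_allowed("203.0.113.5", 80, "10.0.0.8", sport))  # the reply
```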

A kernel proxy firewall is an example of a fifth-generation firewall. It inspects a packet at every layer of the OSI model but does not introduce the same performance hit as an application-level firewall because it does this at the kernel layer. It also follows the proxy model in that it stands between the two systems and creates connections on their behalf.

Firewall Architecture

Whereas the type of firewall speaks to the internal operation of the firewall, the architecture refers to the way in which the firewall or firewalls are deployed in the network to form a system of protection. This section looks at the various ways firewalls can be deployed and the names of these various configurations.

A bastion host might or might not be a firewall. The term actually refers to the position of any device. If it is exposed directly to the Internet or to any untrusted network, it is called a bastion host. Whether it is a firewall, a DNS server, or a web server, all standard hardening procedures become even more important for these exposed devices. Any unnecessary services should be stopped, all unneeded ports should be closed, and all security patches must be up to date. These procedures are referred to as “reducing the attack surface.”
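Reducing the attack surface of a bastion host can be partly checked by comparing what the host actually exposes against what its role requires. In this Python sketch, the port lists, the pending patch count, and the `attack_surface_findings` function are hypothetical inputs that would in practice come from a scan or an inventory tool.

```python
def attack_surface_findings(listening_ports, required_ports, patches_pending):
    """List hardening actions for a bastion host.

    listening_ports: ports the host currently exposes (for example, from a scan)
    required_ports: ports the host's role actually needs
    patches_pending: number of outstanding security patches
    """
    findings = []
    # Any exposed port the role does not need should be closed.
    for port in sorted(set(listening_ports) - set(required_ports)):
        findings.append(f"close unneeded port {port}")
    # Security patches on exposed devices must be kept up to date.
    if patches_pending:
        findings.append(f"apply {patches_pending} pending security patches")
    return findings


if __name__ == "__main__":
    # Hypothetical web-facing bastion host that should expose only 80 and 443.
    for item in attack_surface_findings([80, 443, 21, 3389], [80, 443], 2):
        print(item)
```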

A dual-homed firewall is a firewall that has two network interfaces: one pointing to the internal network and another connected to the untrusted network. In many cases, routing between these interfaces is turned off. The firewall software allows or denies traffic between the two interfaces, based on the firewall rules configured by the administrator. The danger of relying on a single dual-homed firewall is that it provides a single point of failure. If this device is compromised, the network is compromised also. If it suffers a denial-of-service (DoS) attack, no traffic can pass. Neither of these is a good situation. In some cases, a firewall may be multihomed.

One popular type is the three-legged firewall. This configuration has three interfaces: one connected to the untrusted network, one to the internal network, and the last one to a part of the network called a demilitarized zone (DMZ). A DMZ is a portion of the network where systems will be accessed regularly from an untrusted network. These might be web servers or an e-mail server, for example. The firewall can be configured to control the traffic that flows between the three networks, but it is important to be somewhat careful with traffic destined for the DMZ and to treat traffic to the internal network with much more suspicion.

Although the firewalls discussed thus far typically connect directly to an untrusted network (at least one interface does), a screened host is a firewall that is between the final router and the internal network. When traffic comes into the router and is forwarded to the firewall, it is inspected before going into the internal network.

A screened subnet takes this concept a step further. In this case, two firewalls are used, and traffic must be inspected at both firewalls to enter the internal network. It is called a screened subnet because there is a subnet between the two firewalls that can act as a DMZ for resources from the outside world. In the real world, these various firewall approaches are mixed and matched to meet requirements, so you might find elements of all these architectural concepts applied to a specific situation.
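The layered idea behind a screened subnet can be expressed as a path of policies that must all pass. In this Python sketch, each firewall is modeled as a function, and the source labels, ports (including 1433 as a hypothetical database port), and policy logic are assumptions chosen only to show that traffic from the Internet must clear both firewalls to reach the internal network.

```python
from typing import Callable, Dict, List

# A packet is modeled as a dict; a firewall policy returns True to pass it.
Packet = Dict[str, object]
Policy = Callable[[Packet], bool]


def passes_all(packet: Packet, firewalls: List[Policy]) -> bool:
    """Traffic reaches its destination only if every firewall on the path allows it."""
    return all(fw(packet) for fw in firewalls)


def outer_firewall(pkt: Packet) -> bool:
    # Hypothetical policy: admit only web traffic from the Internet into the DMZ.
    return pkt["dst_port"] in (80, 443)


def inner_firewall(pkt: Packet) -> bool:
    # Hypothetical policy: admit only database traffic from the DMZ web server.
    return pkt["src"] == "dmz-web" and pkt["dst_port"] == 1433


# Firewalls a packet must traverse on each path.
INTERNET_TO_DMZ = [outer_firewall]
INTERNET_TO_INTERNAL = [outer_firewall, inner_firewall]
DMZ_TO_INTERNAL = [inner_firewall]
```

A web request from the Internet clears the outer firewall and reaches the DMZ but is stopped by the inner firewall, so a compromise of the outer device alone does not expose the internal network.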

Inhibitors to Remediation

In some cases, there may be issues that make implementing a particular solution inadvisable or impossible. Some of these inhibitors to remediation are as follows:

• Memorandum of understanding (MOU): An MOU is a document that, while not legally binding, indicates a general agreement between the principals to do something together. An organization may have MOUs with multiple organizations, and MOUs may in some instances contain security requirements that inhibit or prevent the deployment of certain measures.

• Service-level agreement (SLA): An SLA is a document that specifies a service to be provided by a party, the costs of the service, and the expectations of performance. These contracts may exist with third parties from outside the organization and between departments within an organization. Sometimes these SLAs may include specifications that inhibit or prevent the deployment of certain measures.

• Organizational governance: Organizational governance refers to the process of controlling an organization’s activities, processes, and operations. When the process is unwieldy, as it is in some very large organizations, the application of countermeasures may be frustratingly slow. One of the reasons for including upper management in the entire process is to use the weight of authority to cut through the red tape.

• Business process interruption: The deployment of mitigations cannot be done in such a way that business operations and processes are interrupted. Therefore, the need to conduct these activities during off-hours can also be a factor that impedes the remediation of vulnerabilities.

• Degrading functionality: Some solutions create more issues than they resolve. In some cases, it may be impossible to implement mitigation because it would break mission-critical applications or processes. The organization may need to research an alternative solution.

• Legacy systems: Legacy systems are those that are older and may be less secure than newer systems. Some of these older systems are no longer supported and are not receiving updates. In many cases, organizations have legacy systems performing critical operations and the enterprise cannot upgrade those systems for one reason or another. It could be that the current system cannot be upgraded because it would be disruptive to sales or marketing. Sometimes politics prevents these upgrades. In some cases the money is just not there for the upgrade. For whatever reason, the inability to upgrade is an inhibitor to remediation.

• Proprietary systems: In some cases, the organization has developed solutions that do not follow standards and are proprietary in nature. In this case the organization is responsible for updating the systems to address security issues, and often this does not occur. For these types of systems, the upgrade path is even more difficult because performing the upgrade is not simply a matter of paying for the upgrade and applying it. The work must be done by the programmers in the organization that developed the solution (if they are still around). Obviously the inability to upgrade is an inhibitor to remediation.

Exam Preparation Tasks

As mentioned in the section “How to Use This Book” in the Introduction, you have several choices for exam preparation: the exercises here, Chapter 22, “Final Preparation,” and the exam simulation questions in the Pearson Test Prep practice test software.

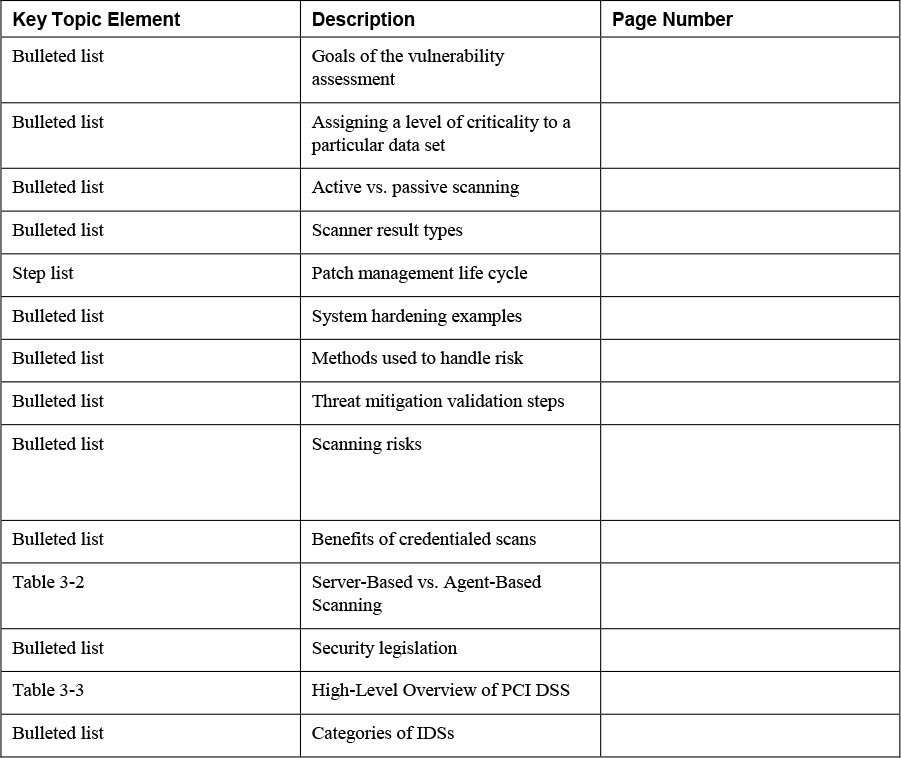

Review All Key Topics

Review the most important topics in this chapter, noted with the Key Topics icon in the outer margin of the page. Table 3-4 lists a reference of these key topics and the page numbers on which each is found.

Table 3-4 Key Topics

Define Key Terms

passive vulnerability scanning

memorandum of understanding (MOU)

Review Questions

1. ____________________ describes the relative value of an asset to the organization.

2. List at least one question that should be raised to determine asset criticality.

3. Nessus Network Monitor is an example of a(n) _____________ scanner.

4. Match the following terms with their definitions.

5. ____________________ are security settings that are required on devices of various types.

6. Place the following patch management life cycle steps in order.

• Install the patches in the live environment.

• Determine the priority of the patches and schedule the patches for deployment.

• Ensure that the patches work properly.

• Test the patches.

7. When you are encrypting sensitive data, you are implementing a(n) _________________.

8. List at least two logical hardening techniques.

9. Match the following risk-handling techniques with their definitions.

10. List at least one risk to scanning.