5

Sound

5.1 Introduction

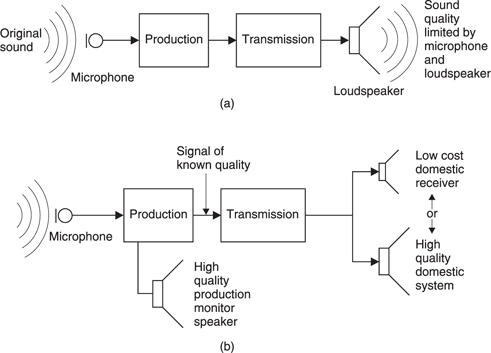

Sound is important in convergent systems both in its own right and as an accompaniment to some form of image. Traditionally the consumer could receive sound radio at a time decided by the broadcaster, or purchase prerecorded media. Digital technology allows a much greater variety of sound-delivery mechanisms. Sound radio is now broadcast digitally, with greater resistance to multipath reception than analog FM could offer, but still at a time decided by the broadcaster. Prerecorded media reached their pinnacle of sound quality with the Compact Disc, followed by the smaller but audibly inferior MiniDisc, but these are only digitized versions of earlier services.

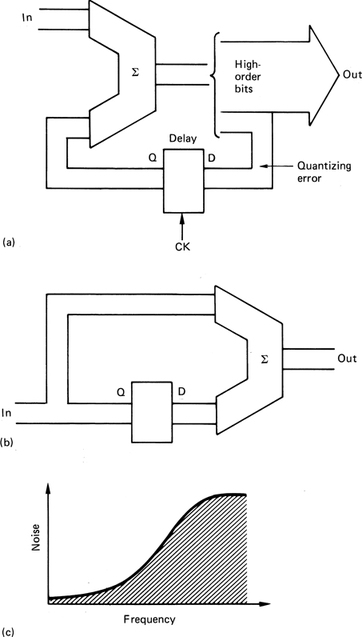

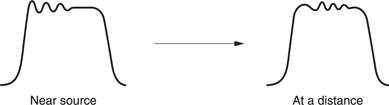

However, network technology allows digital audio to be delivered to any desired quality and at any time. Figure 5.1 shows one service which is already established. The service provider maintains file servers which contain music in a compressed digital format (typically MPEG Layer III also known as MP3). The consumer can download these files to a PC over the Internet, and can then transfer the files to a RAM-based portable player which contains an MPEG decoder. The sound quality is not outstanding, but the service has the advantage of immediacy and flexibility. The consumer can access audio files from anywhere and create a personalized album in a RAM player.

Figure 5.1 Using heavy compression, digital audio recordings can be transferred over the Internet to a PC and thence to a RAM-based player in non-real-time. The compression decoder in the player accesses the RAM to produce real-time audio.

At greater cost, audio data can be exchanged between recording studios using mild or no compression, giving a faster alternative to the delivery of tapes. Clearly a range of sound qualities will be required according to the service, ranging from voice-grade message services to downloadable classical music. This chapter takes the approach that if it shows how to make the finest possible sound reproduction system, the reader can always take an economic decision to provide lower quality. Consequently the information provided here represents as far as possible the state of the art.

Digital multiplexing makes it easy to deliver surround sound and this adds greater involvement to certain moving-image material both in the home and in electronic cinemas. It has been shown beyond doubt that realistic sound causes the viewer to rate the subjective picture quality higher. This is true not only of electronic cinema but also for Internet video where the picture quality is frequently mediocre due to bit rate limitations.

The electronic cinema has the advantage over the home theatre that a greater sum can be invested in the sound equipment, although a small proportion of home theatre systems will also be highly specified. The flat display screen using plasma technology is often hailed as the ultimate TV set to hang on the wall, but a moment’s thought will reveal that without flat loudspeaker technology of high quality, the flat screen is not a TV, but only a display. Most of today’s flat screens contain loudspeakers which are truly appalling and inconsistent with the current high cost of such displays.

It should not be assumed that audio is used entirely for entertainment. Some audio applications, such as aircaft noise monitoring, submarine detection and battlefield simulators, result in specifications which are extremely high. Emergency evacuation instructions in public places come from audio systems which must continue to work in the presence of cable damage, combustion products, water and power loss.

By definition, the sound quality of an audio system can only be assessed by the human auditory system (HAS). Quality means different things to different people and can range from a realistic reproduction of classical music to the intelligible delivery of speech in a difficult acoustic. Many items of audio equipment can only be designed well with a good knowledge of the human hearing mechanism. The understanding of the HAS and how it relates to the criteria for accurate sound reproduction has increased enormously in recent years and these findings will be given here. From this knowledge it becomes obvious what will and will not work and it becomes clear how to proceed and to what degree of accuracy.

The traditional hi-fi enthusiast will not find much comfort here. There are no suggestions to use rare or exotic materials based on pseudoscience. The obvious and audible mistakes made in the design of much of today’s hi-fi equipment are testimony to the fact that it comes from a heavily commoditized and somewhat disreputable industry where technical performance has hardly improved in twenty years. This chapter is not about hi-fi, it is about sound reproduction. All the criteria proposed here are backed with reputable research and all the technology described has been made and works as expected.

5.2 The deciBel

The first audio signals to be transmitted were on analog telephone lines. Where the wiring is long compared to the electrical wavelength (not to be confused with the acoustic wavelength) of the signal, a transmission line exists in which the distributed series inductance and the parallel capacitance interact to give the line a characteristic impedance. In telephones this turned out to be about 600O. In transmission lines the best power delivery occurs when the source and the load impedance are the same; this is the process of matching.

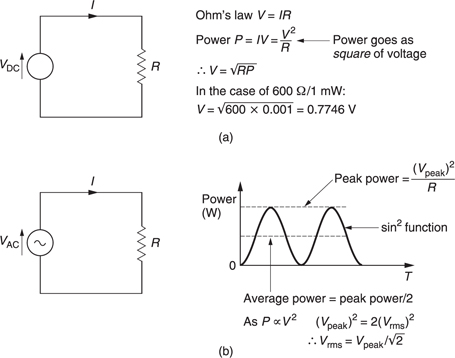

It was often required to measure the power in a telephone system, and one milliWatt was chosen as a suitable unit. Thus the reference against which signals could be compared was the dissipation of one milliWatt in 600O. Figure 5.2 shows that the dissipation of 1 mW in 600 O will be due to an applied voltage of 0.775 V rms. This voltage is the reference against which all audio levels are compared.

The deciBel is a logarithmic measuring system and has its origins in telephony1 where the loss in a cable is a logarithmic function of the length. Human hearing also has a logarithmic response with respect to sound pressure level (SPL). In order to relate to the subjective response audio signal level measurements have also to be logarithmic and so the deciBel was adopted for audio.

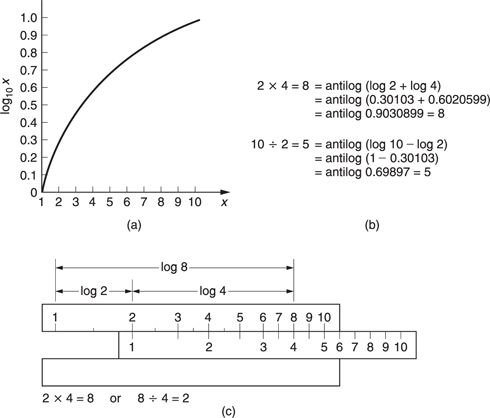

Figure 5.3 shows the principle of the logarithm. To give an example, if it is clear that 102 is 100 and 103 is 1000, then there must be a power between 2 and 3 to which 10 can be raised to give any value between 100 and 1000. That power is the logarithm to base 10 of the value. e.g. log10 300 = 2.5 approx. Note that 100 is 1.

Logarithms were developed by mathematicians before the availability of calculators or computers to ease calculations such as multiplication, squaring, division and extracting roots. The advantage is that armed with a set of log tables, multiplication can be performed by adding, division by subtracting. Figure 5.3 shows some examples. It will be clear that squaring a number is performed by adding two identical logs and the same result will be obtained by multiplying the log by 2.

Figure 5.2 (a) Ohm’s law: the power developed in a resistor is proportional to the square of the voltage. Consequently, 1 mW in 600Ω requires 0.775V. With a sinusoidal alternating input (b), the power is a sine square function which can be averaged over one cycle. A DC voltage which would deliver the same power has a value which is the square root of the mean of the square of the sinusoidal input.

The slide rule is an early calculator which consists of two logarithmically engraved scales in which the length along the scale is proportional to the log of the engraved number. By sliding the moving scale, two lengths can easily be added or subtracted and as a result multiplication and division is readily obtained.

The logarithmic unit of measurement in telephones was called the Bel after Alexander Graham Bell, the inventor. Figure 5.4(a) shows that the Bel was defined as the log of the power ratio between the power to be measured and some reference power. Clearly the reference power must have a level of 0 Bels since log10 1 is 0.

The Bel was found to be an excessively large unit for many purposes and so it was divided into 10 deciBels, abbreviated to dB with a small d and a large B and pronounced ‘deebee’. Consequently the number of dB is ten times the log of the power ratio. A device such as an amplifier can have a fixed power gain which is independent of signal level and this can be measured in dB. However, when measuring the power of a signal, it must be appreciated that the dB is a ratio and to quote the number of dBs without stating the reference is about as senseless as describing the height of a mountain as 2000 without specifying whether this is feet or metres. To show that the reference is one milliWatt into 600O, the units will be dB(m). In radio engineering, the dB(W) will be found which is power relative to one Watt.

Although the dB(m) is defined as a power ratio, level measurements in audio are often done by measuring the signal voltage using 0.775 V as a reference in a circuit whose impedance is not necessarily 600O. Figure 5.4(b) shows that as the power is proportional to the square of the voltage, the power ratio will be obtained by squaring the voltage ratio. As squaring in logs is performed by doubling, the squared term of the voltages can be replaced by multiplying the log by a factor of two. To give a result in deciBels, the log of the voltage ratio now has to be multiplied by 20.

Whilst 600 O matched-impedance working is essential for the long distances encountered with telephones, it is quite inappropriate for analog audio wiring in the studio or the home. The wavelength of audio in wires at 20 kHz is 15 km. Most studios are built on a smaller scale than this and clearly analog audio cables are not transmission lines and their characteristic impedance is swamped by the devices connected at each end. Consequently the reader is cautioned that anyone who attempts to sell exotic analog audio cables by stressing their transmission line characteristics is more of a salesman than a physicist.

Figure 5.4 (a) The Bel is the log of the ratio between two powers, that to be measured and the reference. The Bel is too large so the deciBel is used in practice. (b) As the dB is defined as a power ratio, voltage ratios have to be squared. This is conveniently done by doubling the logs so the ratio is now multiplied by 20.

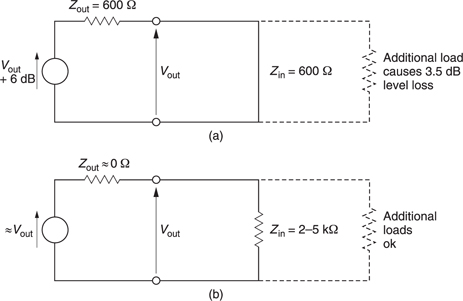

In professional analog audio systems impedance matching is not only unnecessary it is also undesirable. Figure 5.5(a) shows that when impedance matching is required the output impedance of a signal source must be artificially raised so that a potential divider is formed with the load. The actual drive voltage must be twice that needed on the cable as the potential divider effect wastes 6 dB of signal level and requires unnecessarily high power supply rail voltages in equipment. A further problem is that cable capacitance can cause an undesirable HF roll-off in conjunction with the high source impedance.

In modern professional analog audio equipment, shown in Figure 5.5(b) the source has the lowest output impedance practicable. This means that any ambient interference is attempting to drive what amounts to a short circuit and can only develop very small voltages. Furthermore, shunt capacitance in the cable has very little effect. The destination has a somewhat higher impedance (generally a few kO) to avoid excessive currents flowing and to allow several loads to be placed across one driver.

Figure 5.5 (a) Traditional impedance matched source wastes half the signal voltage in the potential divider due to the source impedance and the cable. (b) Modern practice is to use low-output impedance sources with high-impedance loads.(a) The Bel is the log of the ratio between two powers, that to be measured and the reference. The Bel is too large so the deciBel is used in practice. (b) As the dB is defined as a power ratio, voltage ratios have to be squared. This is conveniently done by doubling the logs so the ratio is now multiplied by 20.

In the absence of a fixed impedance it is now meaningless to consider power. Consequently only signal voltages are measured. The reference remains at 0.775V, but power and impedance are irrelevant. Voltages measured in this way are expressed in dB(u); the commonest unit of level in modern systems. Most installations boost the signals on interface cables by 4 dB. As the gain of receiving devices is reduced by 4 dB, the result is a useful noise advantage without risking distortion due to the drivers having to produce high voltages.

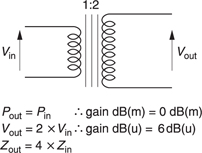

In order to make the difference between dB(m) and dB(u) clear, consider the lossless matching transformer shown in Figure 5.6. The turns ratio is 2:1 therefore the impedance matching ratio is 4:1. As there is no loss in the transformer, the power in is the same as the power out so that the transformer shows a gain of 0 dB(m). However, the turns ratio of 2:1 provides a voltage gain of 6 dB(u). The doubled output voltage will develop the same power into the quadrupled load impedance.

Figure 5.6 A lossless transformer has no power gain so the level in dB(m) on input and output is the same. However, there is a voltage gain when measurements are made in dB(u).

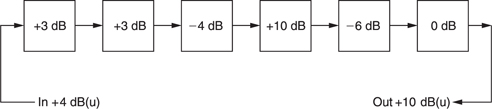

In a complex system signals may pass through a large number of processes, each of which may have a different gain. Figure 5.7 shows that if one stays in the linear domain and measures the input level in volts rms, the output level will be obtained by multiplying by the gains of all the stages involved. This is a complex calculation.

The difference between the signal level with and without the presence of a device in a chain is called the insertion loss measured in dB. However, if the input is measured in dB(u), the output level of the first stage can be obtained by adding the insertion loss in dB. The output level of the second stage can be obtained by further adding the loss of the second stage in dB and so on. The final result is obtained by adding together all of the insertion losses in dB and adding them to the input level in dB(u) to give the output level in dB(u). As the dB is a pure ratio it can multiply anything (by addition of logs) without changing the units. Thus dB(u) of level added to dB of gain are still dB(u).

In acoustic measurements, the sound pressure level (SPL) is measured in deciBels relative to a reference pressure of 2 × 10–5 Pascals (Pa) rms. In order to make the reference clear the units are dB(SPL). In measurements which are intended to convey an impression of subjective loudness, a weighting filter is used prior to the level measurement which reproduces the frequency response of human hearing which is most sensitive in the midrange. The most common standard frequency response is the so-called A-weighting filter, hence the term dB(A) used when a weighted level is being measured. At high or low frequencies, a lower reading will be obtained in dB(A) than in dB(SPL).

5.3 Audio level metering

There are two main reasons for having level meters in audio equipment: to line up or adjust the gain of equipment, and to assess the amplitude of the program material. Gain line-up is especially important in digital systems where an incorrect analog level can result in ADC clipping.

Line-up is often done using a 1 kHz sine wave generated at an agreed level such as 0 dB(u). If a receiving device does not display the same level, then its input sensitivity must be adjusted. Tape recorders and other devices which pass signals through are usually lined up so that their input and output levels are identical, i.e. their insertion loss is 0 dB. Lineup is important in large systems because it ensures that inadvertent level changes do not occur.

In measuring the level of a sine wave for the purposes of line-up, the dynamics of the meter are of no consequence, whereas on program material the dynamics matter a great deal. The simplest (and cheapest) level meter is essentially an AC voltmeter with a logarithmic response. As the ear is logarithmic, the deflection of the meter is roughly proportional to the perceived volume, hence the term Volume Unit (VU) meter.

In audio recording and broadcasting, the worst sin is to overmodulate the tape, the ADC or the transmitter by allowing a signal of excessive amplitude to pass. Real audio signals are rich in short transients which pass before the sluggish VU meter responds. Consequently the VU meter is also called the virtually useless meter in professional circles.

Broadcasters developed the peak program meter (PPM) which is also logarithmic, but which is designed to respond to peaks as quickly as the ear responds to distortion. Consequently the attack time of the PPM is carefully specified. If a peak is so short that the PPM fails to indicate its true level, the resulting overload will also be so brief that the HAS will not hear it. A further feature of the PPM is that the decay time of the meter is very slow, so that any peaks are visible for much longer and the meter is easier to read because the meter movement is less violent.

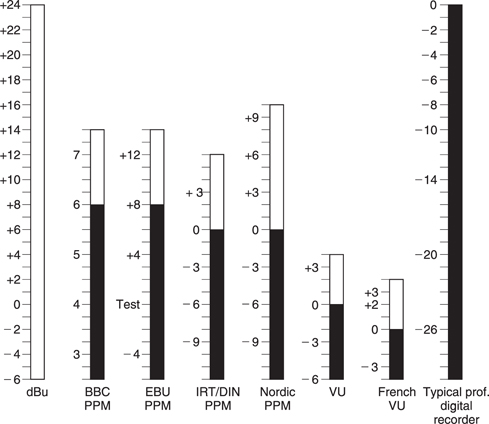

The original PPM as developed by the BBC was sparsely calibrated, but other users have adopted the same dynamics and added dB scales. Figure 5.8 shows some of the scales in use.

In broadcasting, the use of level metering and line-up procedures ensures that the level experienced by the viewer/listener does not change significantly from program to program. Consequently in a transmission suite, the goal would be to broadcast tapes at a level identical to that which was obtained during production. However, when making a recording prior to any production process, the goal would be to modulate the tape as fully as possible without clipping, as this would then give the best signal-to-noise ratio. The level would then be reduced if necessary in the production process.

Unlike analog recorders, digital systems do not have headroom, as there is no progressive onset of distortion until convertor clipping, the equivalent of saturation, occurs at 0 dBFs. Accordingly many digital recorders have level meters which read in dBFs. The scales are marked with 0 at the clipping level and all operating levels are below that. This causes no dificulty provided the user is aware of the consequences.

However, in the situation where a digital copy of an analog tape is to be made, it is very easy to set the input gain of the digital recorder so that line-up tone from the analog tape reads 0 dB. This lines up digital clipping with the analog operating level. When the tape is dubbed, all signals in the headroom suffer convertor clipping.

In order to prevent such problems, manufacturers and broadcasters have introduced artificial headroom on digital level meters, simply by calibrating the scale and changing the analog input sensitivity so that 0 dB analog is some way below clipping. Unfortunately there has been little agreement on how much artificial headroom should be provided, and machines which have it are seldom labelled with the amount. There is an argument which suggests that the amount of headroom should be a function of the sample wordlength, but this causes difficulties when transferring from one wordlength to another. In sixteen-bit working, 12 dB of headroom is a useful figure, but now that eighteen- and twentybit convertors are available, 18 dB may be more appropriate.

5.4 The ear

The human auditory system, the sense called hearing, is based on two obvious tranducers at the side of the head, and a number of less obvious mental processes which give us an impression of the world around us based on disturbances to the equilibrium of the air which we call sound. It is only possible briefly to introduce the subject here. The interested reader is referred to Moore2 for an excellent treatment.

The HAS can tell us, without aid from any other senses, where a sound source is, how big it is, whether we are in an enclosed space and how big that is. If the sound source is musical, we can further establish information such as pitch and timbre, attack, sustain and decay. In order to do this, the auditory system must work in the time, frequency and space domains. A sound reproduction system which is inadequate in one of these domains will be unrealistic however well the other two are satisfied. Chapter 3 introduced the concept of uncertainty between the time and frequency domains and the ear cannot analyse both at once. The HAS circumvents this by changing its characteristics dynamically so that it can concentrate on one domain or the other.

The acuity of the HAS is astonishing. It can detect tiny amounts of distortion, and will accept an enormous dynamic range over a wide number of octaves. If the ear detects a different degree of impairment between two audio systems and an original or ‘live’ sound in properly conducted tests, we can say that one of them is superior. Thus quality is completely subjective and can only be checked by listening tests. However, any characteristic of a signal which can be heard can, in principle, also be measured by a suitable instrument although in general the availability of such instruments lags the requirement and the use of such instruments lags the availability. The subjective tests will tell us how sensitive the instrument should be. Then the objective readings from the instrument give an indication of how acceptable a signal is in respect of that characteristic.

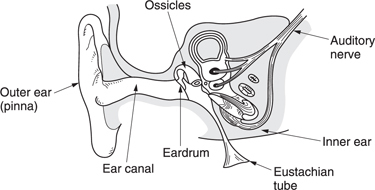

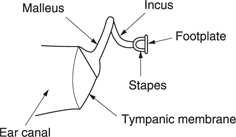

Figure 5.9 shows that the structure of the ear is traditionally divided into the outer, middle and inner ears. The outer ear works at low impedance, the inner ear works at high impedance, and the middle ear is an impedance-matching device. The visible part of the outer ear is called the pinna which plays a subtle role in determining the direction of arrival of sound at high frequencies. It is too small to have any effect at low frequencies. Incident sound enters the auditory canal or meatus. The pipe-like meatus causes a small resonance at around 4 kHz. Sound vibrates the eardrum or tympanic membrane which seals the outer ear from the middle ear. The inner ear or cochlea works by sound travelling though a fluid. Sound enters the cochlea via a membrane called the oval window. If airborne sound were to be incident on the oval window directly, the serious impedance mismatch would cause most of the sound to be reflected. The middle ear remedies that mismatch by providing a mechanical advantage.

The tympanic membrane is linked to the oval window by three bones known as ossicles which act as a lever system such that a large displacement of the tympanic membrane results in a smaller displacement of the oval window but with greater force. Figure 5.10 shows that the malleus applies a tension to the tympanic membrane rendering it conical in shape. The malleus and the incus are firmly joined together to form a lever. The incus acts upon the stapes through a spherical joint. As the area of the tympanic membrane is greater than that of the oval window, there is a further multiplication of the available force.

Consequently small pressures over the large area of the tympanic membrane are converted to high pressures over the small area of the oval window. The middle ear evolved to operate at natural sound levels and causes distortion at the high levels which can be generated with artificial amplification.

Figure 5.10 The malleus tensions the tympanic membrane into a conical shape. The ossicles provide an impedance-transforming lever system between the tympanic membrane and the oval.

The middle ear is normally sealed, but ambient pressure changes will cause static pressure on the tympanic membrane which is painful. The pressure is relieved by the Eustachian tube which opens involuntarily while swallowing. Some of the discomfort of the common cold is due to these tubes becoming blocked. The Eustachian tubes open into the cavities of the head and must normally be closed to avoid one’s own speech appearing deafeningly loud.

The ossicles are located by minute muscles which are normally relaxed. However, the middle ear reflex is an involuntary tightening of the tensor tympani and stapedius muscles which heavily damp the ability of the tympanic membrane and the stapes to transmit sound by about 12 dB at frequencies below 1 kHz. The main function of this reflex is to reduce the audibility of one’s own speech. However, loud sounds will also trigger this reflex which takes some 60–120 ms to operate; too late to protect against transients such as gunfire.

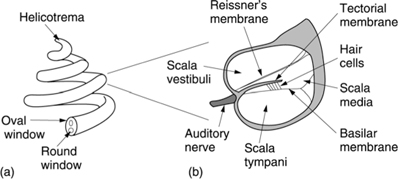

The cochlea is the transducer proper, converting pressure variations in the fluid into nerve impulses. However, unlike a microphone, the nerve impulses are not an analog of the incoming waveform. Instead the cochlea has some analysis capability which is combined with a number of mental processes to make a complete analysis. As shown in Figure 5.11(a), the cochlea is a fluid-filled tapering spiral cavity within bony walls. The widest part, near the oval window, is called the base and the distant end is the apex. Figure 5.11(b) shows that the cochlea is divided lengthwise into three volumes by Reissner’s membrane and the basilar membrane. The scala vestibuli and the scala tympani are connected by a small aperture at the apex of the cochlea known as the helicotrema. Vibrations from the stapes are transferred to the oval window and become fluid pressure variations which are relieved by the flexing of the round window.

Figure 5.11 (a) The cochlea is a tapering spiral cavity. (b) The cross-section of the cavity is divided by Reissner’s membrane and the basilar membrane.

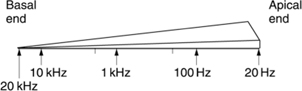

Figure 5.12 The basilar membrane tapers so its resonant frequency changes along its length.

Effectively the basilar membrane is in series with the fluid motion and is driven by it except at very low frequencies where the fluid flows through the helicotrema, decoupling the basilar membrane.

To assist in its frequency-domain operation, the basilar membrane is not uniform. Figure 5.12 shows that it tapers in width and varies in thickness in the opposite sense to the taper of the cochlea. The part of the basilar membrane which resonates as a result of an applied sound is a function of the frequency. High frequencies cause resonance near to the oval window, whereas low frequencies cause resonances further away. More precisely the distance from the apex where the maximum resonance occurs is a logarithmic function of the frequency. Consequently tones spaced apart in octave steps will excite evenly spaced resonances in the basilar membrane. The prediction of resonance at a particular location on the membrane is called place theory. Among other things, the basilar membrane is a mechanical frequency analyser. A knowledge of the way it operates is essential to an understanding of musical phenomena such as pitch discrimination, timbre, consonance and dissonance and to auditory phenomena such as critical bands, masking and the precedence effect.

The vibration of the basilar membrane is sensed by the organ of Corti which runs along the centre of the cochlea. The organ of Corti is active in that it contains elements which can generate vibration as well as sense it. These are connected in a regenerative fashion so that the Q factor, or frequency selectivity, of the ear is higher than it would otherwise be. The deflection of hair cells in the organ of Corti triggers nerve firings and these signals are conducted to the brain by the auditory nerve.

Nerve firings are not a perfect analog of the basilar membrane motion. A nerve firing appears to occur at a constant phase relationship to the basilar vibration; a phenomenon called phase locking, but firings do not necessarily occur on every cycle. At higher frequencies firings are intermittent, yet each is in the same phase relationship.

The resonant behaviour of the basilar membrane is not observed at the lowest audible frequencies below 50 Hz. The pattern of vibration does not appear to change with frequency and it is possible that the frequency is low enough to be measured directly from the rate of nerve firings.

5.5 Level and loudness

At its best, the HAS can detect a sound pressure variation of only 2 × 10–5 Pascals rms and so this figure is used as the reference against which sound pressure level (SPL) is measured. The sensation of loudness is a logarithmic function of SPL hence the use of the deciBel explained in section 5.2. The dynamic range of the HAS exceeds 130 dB, but at the extremes of this range, the ear is either straining to hear or is in pain.

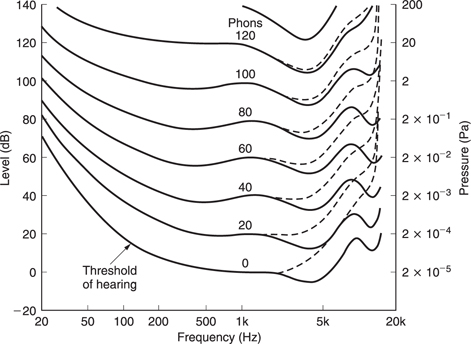

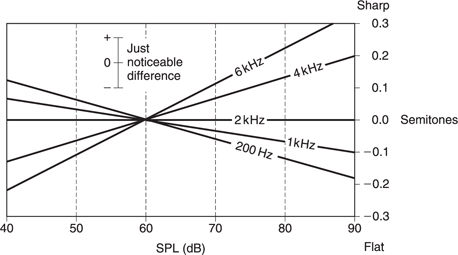

The frequency response of the HAS is not at all uniform and it also changes with SPL. The subjective response to level is called loudness and is measured in phons. The phon scale and the SPL scale coincide at 1 kHz, but at other frequencies the phon scale deviates because it displays the actual SPLs judged by a human subject to be equally loud as a given level at 1 kHz. Figure 5.13 shows the so-called equal loudness contours which were originally measured by Fletcher and Munson and subsequently by Robinson and Dadson. Note the irregularities caused by resonances in the meatus at about 4 kHz and 13 kHz.

Usually, people’s ears are at their most sensitive between about 2 kHz and 5 kHz, and although some people can detect 20 kHz at high level, there is much evidence to suggest that most listeners cannot tell if the upper frequency limit of sound is 20 kHz or 16 kHz.3,4 For a long time it was thought that frequencies below about 40 Hz were unimportant, but it is now clear that reproduction of frequencies down to 20 Hz improves reality and ambience.5 The generally accepted frequency range for highquality audio is 20–20 000 Hz, although for broadcasting an upper limit of 15 000 Hz is often applied.

Figure 5.13 Contours of equal loudness showing that the frequency response of the ear is highly level dependent (solid line. age 20; dashed line, age 60).

The most dramatic effect of the curves of Figure 5.13 is that the bass content of reproduced sound is disproportionately reduced as the level is turned down. This would suggest that if a powerful yet high-quality reproduction system is available the correct tonal balance when playing a good recording can be obtained simply by setting the volume control to the correct level. This is indeed the case. A further consideration is that many musical instruments and the human voice change timbre with level and there is only one level which sounds correct for the timbre.

Oddly, there is as yet no standard linking the signal level in a transmission or recording system with the SPL at the microphone, although with the advent of digital microphones this useful information could easily be sent as metadata.

Loudness is a subjective reaction and is almost impossible to measure. In addition to the level-dependent frequency response problem, the listener uses the sound not for its own sake but to draw some conclusion about the source. For example, most people hearing a distant motorcycle will describe it as being loud. Clearly at the source, it is loud, but the listener has compensated for the distance. Paradoxically the same listener may then use a motor mower without hearing protection.

The best that can be done is to make some compensation for the leveldependent response using weighting curves. Ideally there should be many, but in practice the A, B and C weightings were chosen where the A curve is based on the 40-phon response. The measured level after such a filter is in units of dBA. The A curve is almost always used because it most nearly relates to the annoyance factor of distant noise sources. The use of A-weighting at higher levels is highly questionable.

5.6 Frequency discrimination

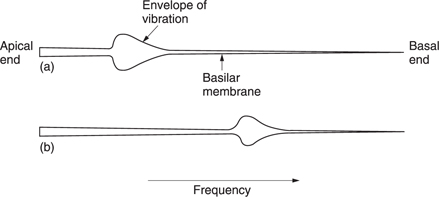

Figure 5.14 shows an uncoiled basilar membrane with the apex on the left so that the usual logarithmic frequency scale can be applied. The envelope of displacement of the basilar membrane is shown for a single frequency at (a). The vibration of the membrane in sympathy with a single frequency cannot be localized to an infinitely small area, and nearby areas are forced to vibrate at the same frequency with an amplitude that decreases with distance. Note that the envelope is asymmetrical because the membrane is tapering and due to frequencydependent losses in the propagation of vibrational energy down the cochlea. If the frequency is changed, as in (b), the position of maximum displacement will also change. As the basilar membrane is continuous, the position of maximum displacement is infinitely variable, allowing extremely good pitch discrimination of about one twelfth of a semitone which is determined by the spacing of hair cells.

In the presence of a complex spectrum, the finite width of the vibration envelope means that the ear fails to register energy in some bands when there is more energy in a nearby band. Within those areas, other frequencies are mechanically excluded because their amplitude is insufficient to dominate the local vibration of the membrane. Thus the Q factor of the membrane is responsible for the degree of auditory masking, defined as the decreased audibility of one sound in the presence of another.

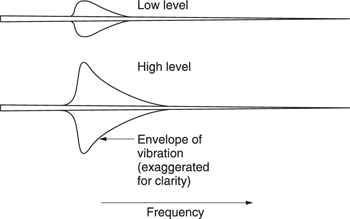

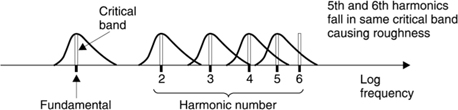

The term used in psychoacoustics to describe the finite width of the vibration envelope is critical bandwidth. Critical bands were first described by Fletcher.6 The envelope of basilar vibration is a complicated function. It is clear from the mechanism that the area of the membrane involved will increase as the sound level rises. Figure 5.15 shows the bandwidth as a function of level.

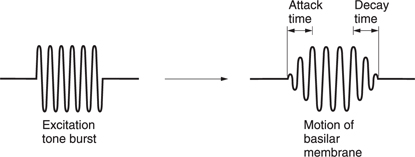

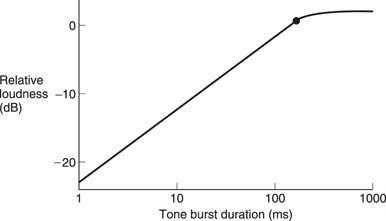

As was shown in Chapter 3, the Heisenberg inequality teaches that the higher the frequency resolution of a transform, the worse the time accuracy. As the basilar membrane has finite frequency resolution measured in the width of a critical band, it follows that it must have finite time resolution. This also follows from the fact that the membrane is resonant, taking time to start and stop vibrating in response to a stimulus. There are many examples of this. Figure 5.16 shows the impulse response and Figure 5.17 the perceived loudness of a tone burst increases with duration up to about 200 ms due to the finite response time.

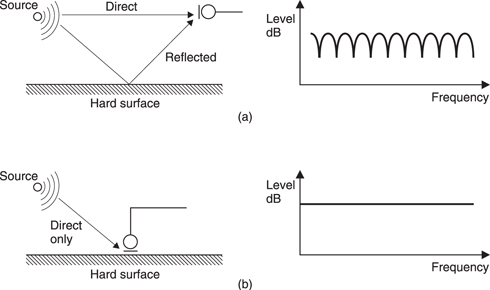

The HAS has evolved to offer intelligibility in reverberant environments which it does by averaging all received energy over a period of about 30 ms. Reflected sound which arrives within this time is integrated to produce a louder sensation, whereas reflected sound which arrives after that time can be temporally discriminated and is perceived as an echo. Microphones have no such ability which is why we often need to have acoustic treatment in areas where microphones are used.

Figure 5.16 Impulse response of the ear showing slow attack and decay due to resonantbehaviour.

Figure 5.17 Perceived level of tone burst rises with duration as resonance builds up.

A further example of the finite time discrimination of the HAS is the fact that short interruptions to a continuous tone are difficult to detect. Finite time resolution means that masking can take place even when the masking tone begins after and ceases before the masked sound. This is referred to as forward and backward masking.7

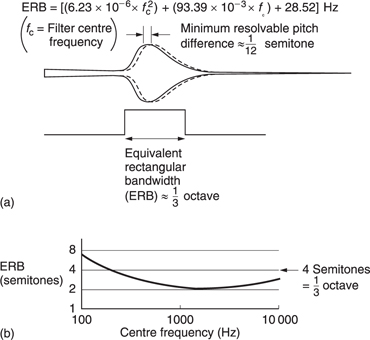

As the vibration envelope is such a complicated shape, Moore and Glasberg have proposed the concept of equivalent rectangular bandwidth to simplify matters. The ERB is the bandwidth of a rectangular filter which passes the same power as a critical band. Figure 5.18(a) shows the expression they have derived linking the ERB with frequency. This is plotted in (b) where it will be seen that one third of an octave is a good approximation. This is about thirty times broader than the pitch discrimination also shown in (b).

Figure 5.19 shows an electrical signal (a) in which two equal sine waves of nearly the same frequency have been linearly added together. Note that the envelope of the signal varies as the two waves move in and out of phase. Clearly the frequency transform calculated to infinite accuracy is that shown at (b). The two amplitudes are constant and there is no evidence of the envelope modulation. However, such a measurement requires an infinite time. When a shorter time is available, the frequency discrimination of the transform falls and the bands in which energy is detected become broader.

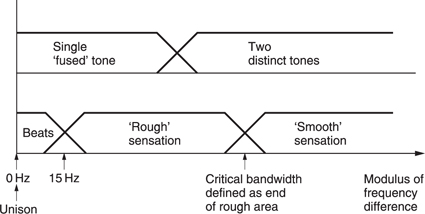

When the frequency discrimination is too wide to distinguish the two tones as in (c), the result is that they are registered as a single tone. The amplitude of the single tone will change from one measurement to the next because the envelope is being measured. The rate at which the envelope amplitude changes is called a beat frequency which is not actually present in the input signal. Beats are an artifact of finite frequency resolution transforms. The fact that the HVS produces beats from pairs of tones proves that it has finite resolution.

Measurement of when beats occur allows measurement of critical bandwidth. Figure 5.20 shows the results of human perception of a twotone signal as the frequency dF difference changes. When dF is zero, described musically as unison, only a single note is heard. As dF increases, beats are heard, yet only a single note is perceived. The limited frequency resolution of the basilar membrane has fused the two tones together. As dF increases further, the sensation of beats ceases at 12–15 Hz and is replaced by a sensation of roughness or dissonance. The roughness is due to parts of the basilar membrane being unable to decide the frequency at which to vibrate. The regenerative effect may well become confused under such conditions. The roughness which persists until dF has reached the critical bandwidth beyond which two separate tones will be heard because there are now two discrete basilar resonances. In fact this is the definition of critical bandwidth.

5.7 Music and the ear

The characteristics of the HVS, especially critical bandwidth, are responsible for the way music has evolved. Beats are used extensively in music. When tuning a pair of instruments together, a small tuning error will result in beats when both play the same nominal note. In certain pipe organs, pairs of pipes are sounded together with a carefully adjusted pitch error which results in a pleasing tremolo effect.

With certain exceptions, music is intended to be pleasing and so dissonance is avoided. Two notes which sound together in a pleasing manner are described as harmonious or consonant. Two sine waves appear consonant if they separated by a critical bandwidth because the roughness of Figure 5.20 is avoided, but real musical instruments produce a series of harmonics in addition to the fundamental.

Figure 5.21 shows the spectrum of a harmonically rich instrument. The fundamental and the first few harmonics are separated by more than a critical band, but from the seventh harmonic more than one harmonic will be in one band and it is possible for dissonance to occur. Musical instruments have evolved to avoid the production of seventh and higher harmonics. Violins and pianos are played or designed to excite the strings at a node of the seventh harmonic to suppress this dissonance.

Harmonic distortion in audio equipment is easily detected even in minute quantities because the first few harmonics fall in non-overlapping critical bands. The sensitivity of the HAS to third harmonic distortion probably deserves more attention in audio equipment than the fidelity of the dynamic range or frequency response.

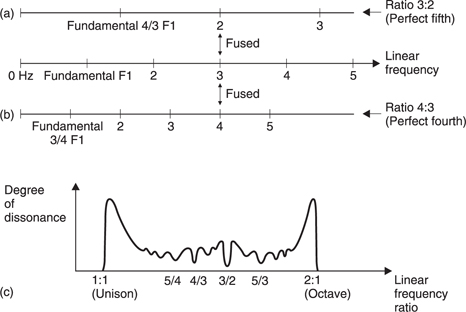

When two harmonically rich notes are sounded together, the harmonics will fall within the same critical band and cause dissonance unless the fundamentals have one of a limited number of simple relationships which makes the harmonics fuse. Clearly an octave relationship is perfect.

Figure 5.22 shows some examples. In (a) two notes with the ratio (interval) 3:2 are considered. The harmonics are either widely separated or fused and the combined result is highly consonant. The interval of 3:2 is known to musicians as a perfect fifth. In (b) the ratio is 4:3. All harmonics are either at least a third of an octave apart or are fused. This relationship is known as a perfect fourth. The degree of dissonance over the range from 1:1 to 2:1 (unison to octave) was investigated by Helmholtz and is shown in Figure 5.22(c). Note that the dissonance rises at both ends where the fundamentals are within a critical bandwidth of one another. Dissonances in the centre of the scale are where some harmonics lie in a within a critical bandwidth of one another. Troughs in the curve indicate areas of consonance. Many of the troughs are not very deep, indicating that the consonance is not perfect. This is because of the effect shown in Figure 5.21 in which high harmonics get closer together with respect to critical bandwidth. When the fundamentals are closer together, the harmonics will become dissonant at a lower frequency, reducing the consonance. Figure 5.22(c) also shows the musical terms used to describe the consonant intervals.

Figure 5.22 (a) Perfect fifth with a frequency ratio of 3:2 is consonant because harmonics are either in different critical bands or are fused. (b) Perfect fourth achieves the same result with 4:3 frequency ratio. (c) Degree of dissonance over range from 1:1 to 2:1.

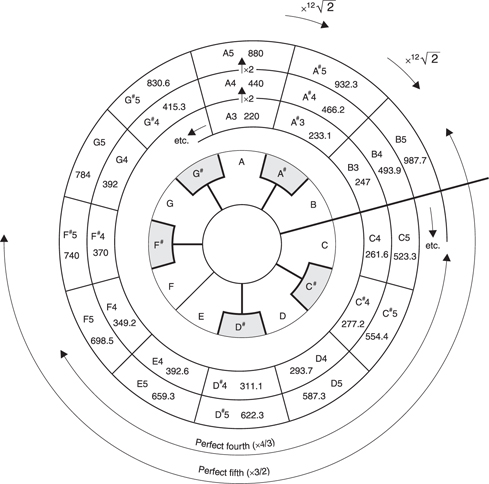

It is clear from Figure 5.22(c) that the notes of the musical scale have empirically been established to allow the maximum consonance with pairs of notes and chords. Early instruments were tuned to the just diatonic scale in exactly this way. Unfortunately the just diatonic scale does not allow changes of key because the notes are not evenly spaced. A key change is where the frequency of every note in a piece of music is multiplied by a constant, often to bring the accompaniment within the range of a singer. In continuously tuned instruments such as the violin and the trombone this is easy, but with fretted or keyboard instruments such as a piano there is a problem.

The equal-tempered scale is a compromise between consonance and key changing. The octave is divided into twelve equal intervals called tempered semitones. On a keyboard, seven of the keys are white and produce notes very close to those of the just diatonic scale, and five of the keys are black. Music can be transposed in semitone steps by using the black keys. Figure 5.23 shows an example of transposition where a scale is played in several keys.

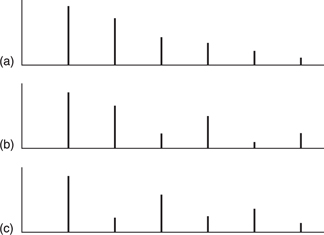

Frequency is an objective measure whereas pitch is the subjective near equivalent. Clearly frequency and level are independent, whereas pitch and level are not. Figure 5.24 shows the relationship between pitch and level. Place theory indicates that the hearing mechanism can sense a single frequency quite accurately as a function of the place or position of maximum basilar vibration. However, most periodic sounds and real musical instruments produce a series of harmonics in addition to the fundamental. When a harmonically rich sound is present the basilar membrane is excited at spaced locations. Figure 5.25(a) shows all harmonics, (b) shows even harmonics predominating and (c) shows odd harmonics predominating. It would appear that the HAS is accustomed to hearing harmonics in various amounts and the consequent regular pattern of excitation. It is the overall pattern which contributes to the sensation of pitch even if individual partials vary enormously in relative level.

Figure 5.24 Pitch sensation is a function of level.

Experimental signals in which the fundamental has been removed leaving only the harmonics result in unchanged pitch perception. The pattern in the remaining harmonics is enough uniquely to establish the missing fundamental. Imagine the fundamental in (b) to be absent. Neither the second harmonic nor the third can be mistaken for the fundamental because if they were fundamentals a different pattern of harmonics would result. A similar argument can be put forward in the time domain, where the timing of phase-locked nerve firings responding to a harmonic will periodically coincide with the nerve firings of the fundamental. The ear is used to such time patterns and will use them in conjunction with the place patterns to determine the right pitch. At very low frequencies the place of maximum vibration does not move with frequency yet the pitch sensation is still present because the nerve firing frequency is used.

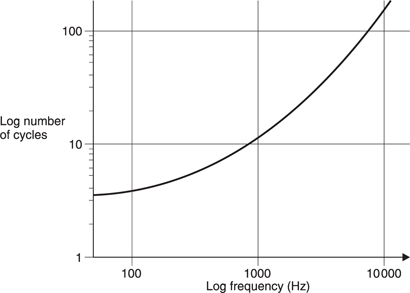

As the fundamental frequency rises it is difficult to obtain a full pattern of harmonics as most of them fall outside the range of hearing. The pitch discrimination ability is impaired and needs longer to operate. Figure 5.26 shows the number of cycles of excitation needed to discriminate pitch as a function of frequency. Clearly at around 5 kHz performance is failing because there are hardly any audible harmonics left. Phase locking also fails at about the same frequency. Musical instruments have evolved accordingly, with the highest notes of virtually all instruments found below 5 kHz.

5.8 The physics of sound

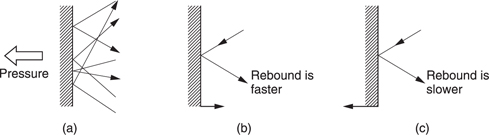

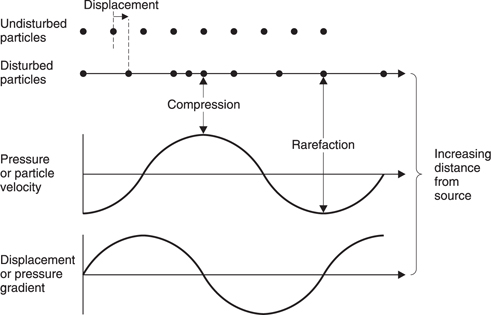

Sound is simply an airborne version of vibration which is why the two topics are inextricably linked. The air which carries sound is a mixture of gases, mostly nitrogen, some oxygen, a little carbon dioxide and so on. Gases are the highest energy state of matter, which is another way of saying that you have to heat ice to get water then heat it some more to get steam. The reason that a gas takes up so much more room than a liquid is that the molecules contain so much energy that they break free from their neighbours and rush around at high speed. As Figure 5.27(a) shows, the innumerable elastic collisions of these high-speed molecules produce pressure on the walls of any gas container. In fact the distance a molecule can go without a collision, the mean-free path, is quite short at atmospheric pressure. Consequently gas molecules also collide with each other elastically, so that if left undisturbed, in a container at a constant temperature, every molecule would end up with essentially the same energy and the pressure throughout would be constant and uniform.

Figure 5.27 (a) The pressure exerted by a gas is due to countless elastic collisions between gas molecules and the walls of the container. (b) If the wall moves against the gas pressure, the rebound velocity increases. (c) Motion with the gas pressure reduces the particle velocity.

Sound disturbs this simple picture. Figure 5.27(b) shows that a solid object which moves against gas pressure increases the velocity of the rebounding molecules, whereas in Figure 5.27(c) one moving with gas pressure reduces that velocity. The average velocity and the displacement of all the molecules in a layer of air near to a moving body is the same as the velocity and displacement of the body. Movement of the body results in a local increase or decrease in pressure of some kind. Thus sound is both a pressure and a velocity disturbance. Integration of the velocity disturbance gives the displacement.

Despite the fact that a gas contains endlessly rushing colliding molecules, a small mass or particle of gas can have stable characteristics because the molecules leaving are replaced by new ones with identical statistics. As a result, acoustics seldom considers the molecular structure of air and the constant motion is neglected. Thus when particle velocity and displacement is considered in acoustics, this refers to the average values of a large number of molecules. The undisturbed container of gas referred to earlier will have a particle velocity and displacement of zero at all points.

When the volume of a fixed mass of gas is reduced, the pressure rises. The gas acts like a spring. However, a gas also has mass. Sound travels through air by an interaction between the mass and the springiness. Imagine pushing a mass via a spring. It would not move immediately because the spring would have to be compressed in order to transmit a force. If a second mass is connected to the first by another spring, it would start to move even later. Thus the speed of a disturbance in a mass/spring system depends on the mass and the stiffness.

After the disturbance had propagated the masses would return to their rest position. The mass/spring analogy is helpful for an early understanding, but is too simple to account for commonly encountered acoustic phenomena such as spherically expanding waves. It must be remembered that the mass and stiffness are distributed throughout the gas in the same way that inductance and capacitance are distributed in a transmission line. Sound travels through air without a net movement of the air.

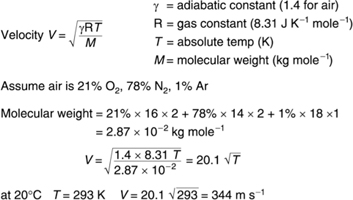

Unlike solids, the elasticity of gas is a complicated process. If a fixed mass of gas is compressed, work has to be done on it. This will create heat in the gas. If the heat is allowed to escape and the compression does not change the temperature, the process is said to be isothermal. However, if the heat cannot escape the temperature will rise and give a disproportionate increase in pressure. This process is said to be adiabatic and the Diesel engine depends upon it. In most audio cases there is insufficient time for much heat transfer and so air is considered to act adiabatically. Figure 5.28 shows how the speed of sound c in air can be derived by calculating its elasticity under adiabatic conditions.

If the volume allocated to a given mass of gas is reduced isothermally, the pressure and the density will rise by the same amount so that c does not change. If the temperature is raised at constant pressure, the density goes down and so the speed of sound goes up. Gases with lower density than air have a higher speed of sound. Divers who breathe a mixture of oxygen and helium to prevent ‘the bends’ must accept that the pitch of their voices rises remarkably. Digital pitch shifters can be used to facilitate communication. The speed of sound is proportional to the square root of the absolute temperature. Temperature changes with respect to absolute zero (–273°C) also amount to around 1 per cent except in extremely inhospitable places.

The speed of sound experienced by most of us is about 1000 feet per second or 344 metres per second. Temperature falls with altitude in the atmosphere and with it the speed of sound. The local speed of sound is defined as Mach 1. Consequently supersonic aircraft are fitted with Mach meters.

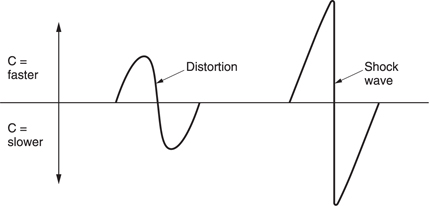

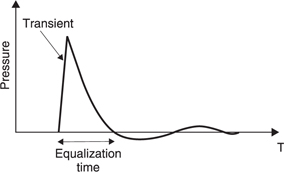

As air acts adiabatically, a propagating sound wave causes cyclic temperature changes. The speed of sound is a function of temperature, yet sound causes a temperature variation. One might expect some effects because of this. Fortunately, sounds which are below the threshold of pain have such a small pressure variation compared with atmospheric pressure that the effect is negligible and air can be assumed to be linear. However, on any occasion where the pressures are higher, this is not a valid assumption. In such cases the positive half-cycle significantly increases local temperature and the speed of sound, whereas the negative half-cycle reduces temperature and velocity. Figure 5.29 shows that this results in significant distortion of a sine wave, ultimately causing a shock wave which can travel faster than the speed of sound until the pressure has dissipated with distance. This effect is responsible for the sharp sound of a handclap.

This behaviour means that the speed of sound changes slightly with frequency. High frequencies travel slightly faster than low because there is less time for heat conduction to take place. Figure 5.30 shows that a complex sound source produces harmonics whose phase relationship with the fundamental advances with the distance the sound propagates. This allows one mechanism (there are others) by which the HAS can judge the distance from a known sound source. Clearly for realistic sound reproduction nothing in the audio chain must distort the phase relationship between frequencies. A system which accurately preserves such relationships is said to display linear phase.

Sound can be due to a one-off event known as percussion, or a periodic event such as the sinusoidal vibration of a tuning fork. The sound due to percussion is called transient whereas a periodic stimulus produces steady-state sound having a frequency f.

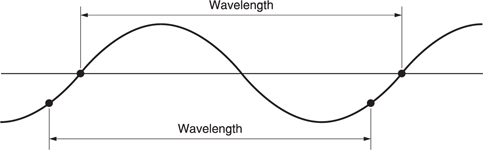

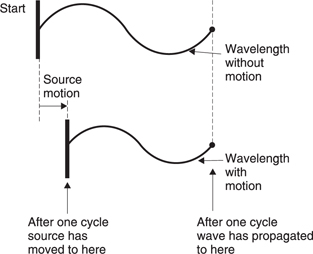

Because sound travels at a finite speed, the fixed observer at some distance from the source will experience the disturbance at some later time. In the case of a transient, the observer will detect a single replica of the original as it passes at the speed of sound. In the case of the tuning fork, a periodic sound, the pressure peaks and dips follow one another away from the source at the speed of sound. For a given rate of vibration of the source, a given peak will have propagated a constant distance before the next peak occurs. This distance is called the wavelength,λ. Figure 5.31 shows that wavelength is defined as the distance between any two identical points on the whole cycle. If the source vibrates faster, successive peaks get closer together and the wavelength gets shorter. Wavelength is inversely proportional to the frequency. It is easy to remember that the wavelength of 1000 Hz is a foot (about 30 cm).

Figure 5.30 In a complex waveform, high frequencies travel slightly faster producing a relative phase change with distance.

If there is relative motion between the source and the observer, the frequency of a periodic sound will be changed. Figure 5.32 shows a sound source moving towards the observer. At the end of a cycle, the source will be nearer the observer than at the beginning of the cycle. As a result the wavelength radiated in the direction of the observer will be shortened so that the pitch rises. The wavelength of sounds radiated away from the observer will be lengthened. The same effect will occur if the observer moves. This is the Doppler effect, which is most noticeable on passing motor vehicles whose engine notes appear to drop as they pass. Note that the effect always occurs, but it is only noticeable on a periodic sound. Where the sound is aperiodic, such as broadband noise, the Doppler shift will not be heard.

Figure 5.32 Periodic sounds are subject to Doppler shift if there is relative motion between the source and the observer.

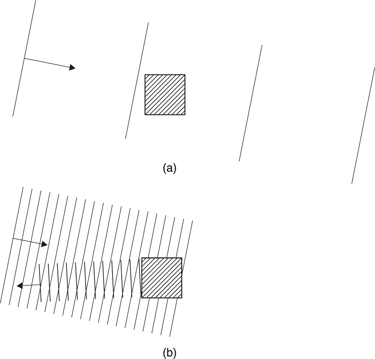

Sound is a wave motion, and the way a wave interacts with any object depends upon the relative size of that object and the wavelength. The audible range of wavelengths is from around 17 millimetres to 17 metres so dramatic changes in the behaviour of sound over the frequency range should be expected.

Figure 5.33(a) shows that when the wavelength of sound is large compared to the size of a solid body, the sound will pass around it almost as if it were not there. When the object is large compared to the wavelength, then simple reflection takes place as in Figure 5.33(b). However, when the size of the object and the wavelength are comparable, the result can only be explained by diffraction theory.

The parameter which is used to describe this change of behaviour with wavelength is known as the wave number k and is defined as:

![]()

where f = frequency, c = the speed of sound and λ = wavelength. In practice the size of any object or distance a in metres is multiplied by k.

Figure 5.33 (a) Sound waves whose spacing is large compared to an obstacle simply pass round it. (b) When the relative size is reversed, an obstacle becomes a reflector.

A good rule of thumb is that below ka = 1, sound tends to pass around as in Figure 5.33(a) whereas above ka = 1, sound tends to reflect as in (b).

5.9 How sound is radiated

When sound propagates, there are changes in velocity v, displacement x and pressure p. Figure 5.34 shows that the velocity and the displacement are always in quadrature. This is obvious because velocity is the differential of the displacement. When the displacement reaches its maximum value and is on the point of reversing direction, the velocity is zero. When the displacement is zero the velocity is maximum.

Figure 5.34 The pressure, velocity and displacement of particles as sound propagates.

The pressure and the velocity are linked by the acoustic impedance z which is given by p/v. Just like electrical impedances which can be reactive, the acoustic impedance is complex and varies with acoustic conditions. Consequently the phase relationship between velocity and pressure also varies. When any vibrating body is in contact with the air, a thin layer of air must have the same velocity as the surface of the body. The pressure which results from that velocity depends upon the acoustic impedance.

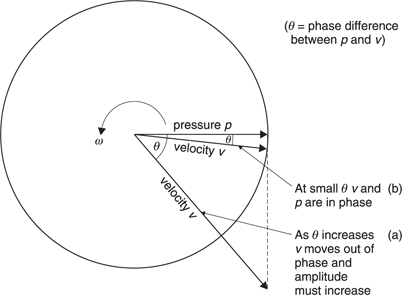

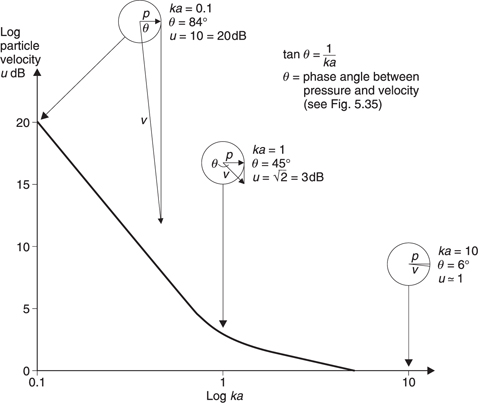

The wave number is useful to explain the way in which sound is radiated. Consider a hypothetical pulsating sphere as shown in Figure 5.35. The acoustic impedance changes with radius a. If the sphere pulsates very slowly, it will do work against air pressure as it expands and the air pressure will return the work as it contracts. There is negligible radiation because the impedance is reactive. Figure 5.35(a) shows that when ka is small there is a 90° phase shift between the pressure and the velocity. As the frequency or the radius rises, as in (b), the phase angle reduces from 90° and the pressure increases. When ka is large, the phase angle approaches zero and the pressure reaches its maximum value compared to the velocity. The impedance has become resistive.

When ka is very large, the spherical radiator is at a distance and the spherical waves will have become plane waves. Figure 5.34 showed the relationships between pressure, velocity and displacement for a plane wave. A small air mass may have kinetic energy due to its motion and kinetic energy due to its compression. The total energy is constant, but the distribution of energy between kinetic and potential varies throughout the wave. This relationship will not hold when ka is small. This can easily occur especially at low frequencies where the wavelengths can be several metres.

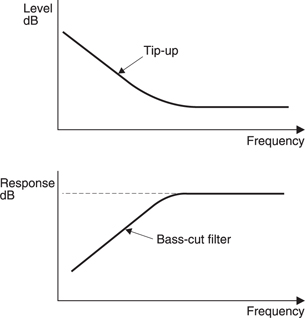

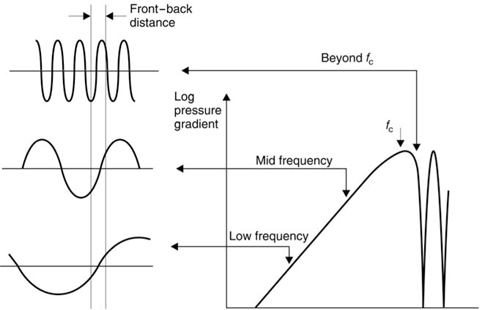

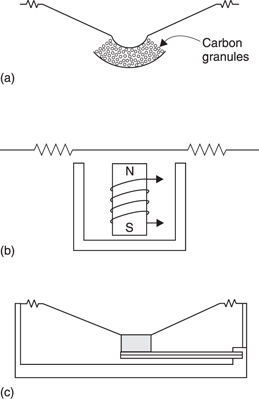

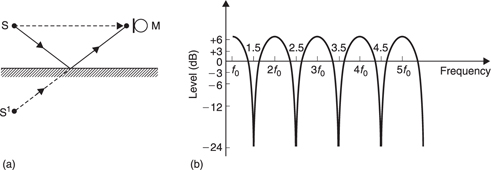

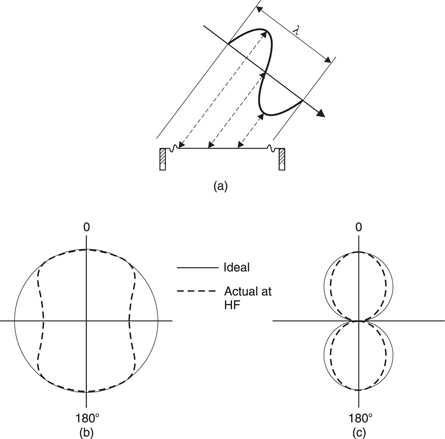

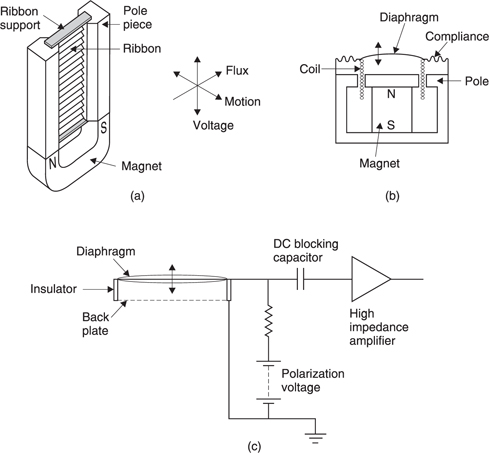

Microphones can transduce either the pressure or the velocity component of sound. When ka is large, the pressure and velocity waveforms in a spherical wave are identical. However it will be clear from Figure 5.35(a) and (b) that when ka is small the velocity exceeds the pressure component. This is the cause of the well-known proximity effect, also known as tip-up, which emphasizes low frequencies when velocitysensing microphones are used close to a sound source. Figure 5.36 shows the response of a velocity microphone relative to that of a pressure microphone for different values of ka. Various combinations of distance and frequency are given for illustration. Practical microphones often incorporate some form of bass-cut filter to offset the effect.

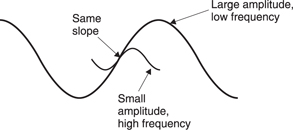

The sensation of sound is proportional to the average velocity. However, the displacement is the integral of the velocity. Figure 5.37 shows that to obtain an identical velocity or slope the amplitude must increase as the inverse of the frequency. Consequently for a given SPL low-frequency sounds result in much larger air movement than high frequency. The SPL is proportional to the volume velocity U of the source which is obtained by multiplying the vibrating area in m2 by the velocity in m/s. As SPL is proportional to volume velocity, as frequency falls the volume or displacement must rise. This means that low-frequency sound can only be radiated effectively by large objects, hence all the bass instruments in the orchestra are much larger than their treble equivalents. This is also the reason why a loudspeaker cone is only seen to move at low frequencies.

Figure 5.37 For a given velocity or slope, lower frequencies require greater amplitude.

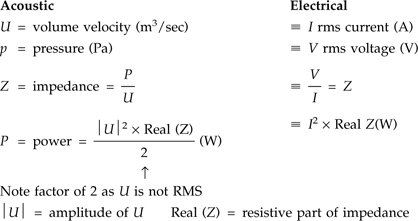

The units of volume velocity are cubic metres per second and so sound is literally an alternating current. The pressure p is linked to the current by the impedance just as it is in electrical theory. There are direct analogies between acoustic and electrical parameters and equations which are helpful. One small difficulty is that whereas alternating electrical parameters are measured in rms units, acoustic units are not. Thus when certain acoustic parameters are multiplied together the product has to be divided by two. This happens automatically with rms units. Figure 5.38 shows the analogous equations.

Figure 5.38 Electrical units are rms whereas many acoustic units are not, hence the factor of two difference in otherwise analogous equations.

The intensity of a sound is the sound power passing through unit area. In the far field it is given by the product of the volume velocity and the pressure. In the near field the relative phase angle will have to be considered. Intensity is a vector quantity as it has direction which is considered to be perpendicular to the area in question. The total sound power is obtained by multiplying the intensity by the cross-sectional area through which it passes. Power is a scalar quantity because it can be radiated in all directions.

When a spherical sound wave is considered, there is negligible loss as it advances outwards. Consequently the sound power passing through the surface of an imaginary sphere surrounding the source is independent of the radius of that sphere. As the area of a sphere is proportional to the square of the radius, it will be clear that the intensity falls according to an inverse square law.

The inverse square law should be used with caution. There are a number of exceptions. As was seen in Figure 5.36, the proximity effect causes a deviation from the inverse square law for small ka. The area in which there is deviation from inverse square behaviour is called the near field.

In reverberant conditions a sound field is set up by reflections. As the distance from the source increases at some point the level no longer falls.

It is also important to remember that the inverse square law applies only to near-point sources. A line source radiates cylindrically and intensity is then inversely proportional to radius. Noise from a busy road approximates to a cylindrical source.

5.10 Acoustics

A proper understanding of the behaviour of sound requires familiarity with the principles of wave acoustics. Wave theory is used in many different disciplines including radar, sonar, optics, antenna and filter design and the principles remain the same. Consequently the designer of a loudspeaker may obtain inspiration from studying a radar antenna or a CD pickup.

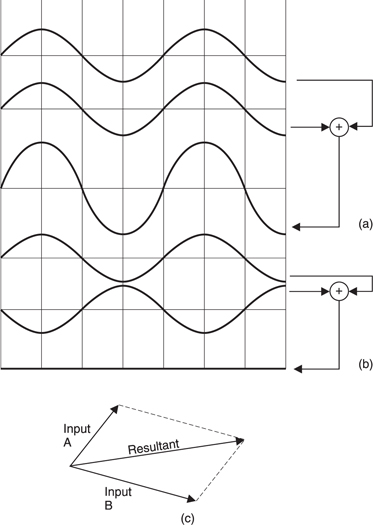

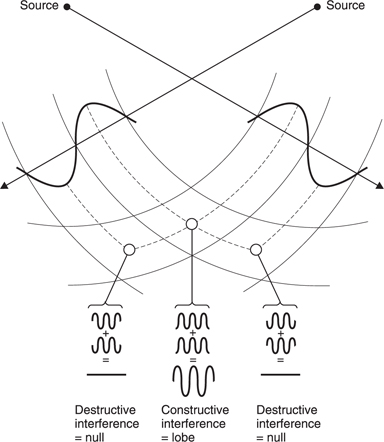

Figure 5.39 shows that when two sounds of equal amplitude and frequency add together, the result is completely dependent on the relative phase of the two. At (a) when the phases are identical, the result is the arithmetic sum. At (b) where there is a 180° relationship, the result is complete cancellation. This is constructive and destructive interference. At any other phase and/or amplitude relationship, the result can only be obtained by vector addition as shown in (c).

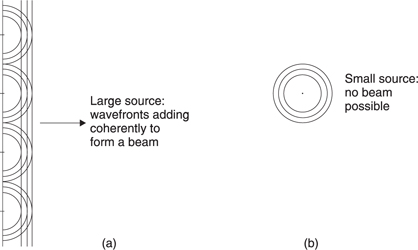

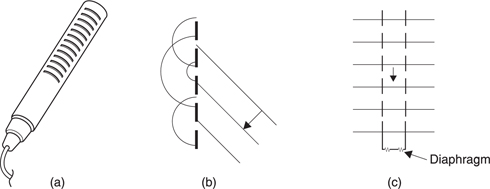

The wave theory of propagation of sound is based on interference and suggests that a wavefront advances because an infinite number of point sources can be considered to emit spherical waves which will only add when they are all in the same phase. This can only occur in the plane of the wavefront. Figure 5.40(a) shows that at all other angles, interference between spherical waves is destructive. For any radiating body, such as a vibrating object, it is easy to see from Figure 5.40(b) that when ka is small, only weak spherical radiation is possible, whereas when ka is large, a directional plane wave can be propagated or beamed. Consequently highfrequency sound behaves far more directionally than low-frequency sound.

When a wavefront arrives at a solid body, it can be considered that the surface of the body acts as an infinite number of points which reradiate the incident sound in all directions. It will be seen that when ka is large and the surface is flat, constructive interference only occurs when the wavefront is reflected such that the angle of reflection is the same as the angle of incidence. When ka is small, the amount of reradiation from the body compared to the radiation in the wavefront is very small. Constructive interference takes place beyond the body as if it were absent, thus it is correct to say that the sound diffracts around the body.

Figure 5.41 shows two identical sound sources which are spaced apart by a distance of several wavelengths and which vibrate in-phase. At all points equidistant from the sources the radiation adds constructively. The same is true where there are path length differences which are multiples of the wavelength. However, in certain directions the path length difference will result in relative phase reversal. Destructive interference means that sound cannot leave in those directions. The resultant diffraction pattern has a polar diagram which consists of repeating lobes with nulls between them.

Figure 5.41 Constructive and destructive interference between two identical sources.

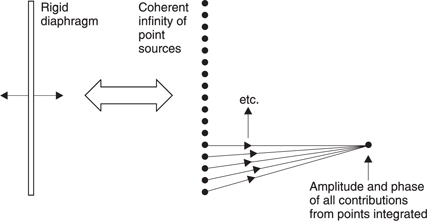

This chapter has so far considered only the radiation of a pulsating sphere; a situation which is too simple to model many real-life sound r adiators. The situation of Figure 5.41 can be extended to predict the results of vibrating bodies of arbitrary shape. Figure 5.42 shows a hypothetical rigid circular piston vibrating in an opening in a plane surface. This is apparently much more like a real loudspeaker. As it is rigid, all parts of it vibrate in the same phase. Following concepts advanced earlier, a rigid piston can be considered to be an infinite number of point sources. The result at an arbitrary point in space in front of the piston is obtained by integrating the waveform from every point source.

Figure 5.42 A rigid radiating surface can be considered as an infinity of coherent point sources. The result at a given location is obtained by integrating the radiation from each point.

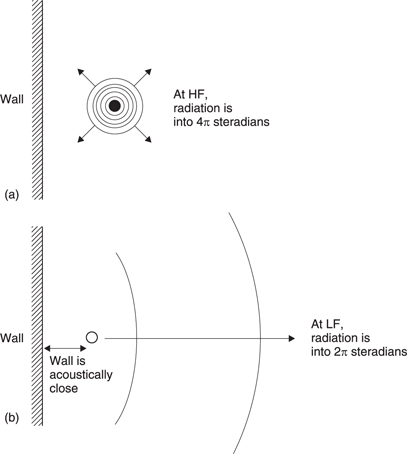

A transducer can be affected dramatically by the presence of other objects, but the effect is highly frequency dependent. In Figure 5.43(a) a high frequency is radiated, and this simply reflects from the nearby object because the wavelength is short and the object is acoustically distant or in the far field. However, if the wavelength is made longer than the distance between the source and the object as in Figure 5.43(b), the object is acoustically close or in the near field and becomes part of the source. The effect is that the object reduces the solid angle into which radiation can take place as well as raising the acoustic impedance the transducer sees.

The volume velocity of the source is confined into a smaller crosssectional area and consequently the velocity must rise in inverse proportion to the solid angle.

In Figure 5.44 the effect of positioning a loudspeaker is shown. In free space (a) the speaker might show a reduction in low frequencies which disappears when it is placed on the floor (b). In this case placing the speaker too close to a wall, or even worse, in a corner, (c), will emphasize the low-frequency output. High-quality loudspeakers will have an adjustment to compensate for positioning. The technique can be useful in the case of small cheap loudspeakers whose LF response is generally inadequate. Some improvement can be had by corner mounting.

It will be evident that at low frequencies the long wavelengths make it impossible for two close-spaced radiators acoustically to get out of phase. Consequently when two radiators are working within one another’s near field, they act like a single radiator. Each radiator will experience a doubled acoustic impedance because of the presence of the other. Thus the pressure for a given volume velocity will be doubled. As the intensity is proportional to the square of the pressure, it will be quadrupled.

The effect has to be taken into account when stereo loudspeakers are installed. At low frequencies the two speakers will be acoustically close and so will mutually raise their acoustic impedance causing a potential bass tip-up problem. When a pair of stereo speakers has been properly equalized, disconnecting one will result in the remaining speaker sounding bass light. In surround-sound systems there may be four or five speakers working in one another’s near field at low frequencies, making considerable SPL possible and calling into question the need for a separate subwoofer.

In Figure 5.45 the effect of positioning a microphone very close to a source is shown. The microphone body reduces the area through which sound can escape in the near field and raises the acoustic impedance, emphasizing the low frequencies. This effect will be observed even with pressure microphones as it is different in nature to and adds to the proximity effect described earlier. This is most noticeable in public address systems where the gain is limited to avoid howl-round. The microphone must then be held close to obtain sufficient level and the plosive parts of speech are emphasized. The high signal levels generated often cause amplifier clipping, cutting intelligibility.

When inexperienced microphone users experience howl-round they often misguidedly cover the microphone with a hand in order to prevent the sound from the speakers reaching it. This is quite the reverse of the correct action as the presence of the hand raises the local impedance and actually makes the howl-round worse. The correct action is to move the microphone away from the body and (assuming a directional mic) to point it away from the loudspeakers. In general this will mean pointing the microphone at the audience.

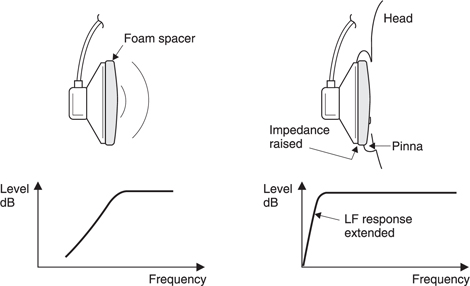

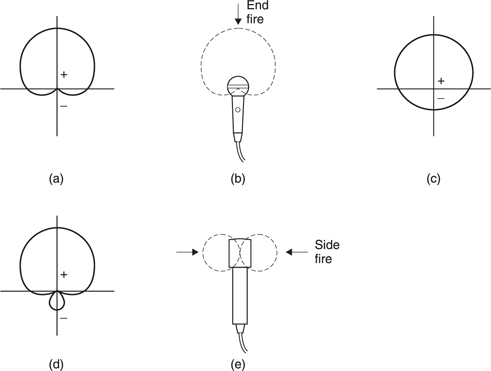

In Figure 5.46 a supra-aural headphone (one which sits above the ear rather than surrounding it) in free space has a very poor LF response because it is a dipole source and at low frequency air simply moves from front to back in a short circuit. However, the presence of the listener’s head obstructs the short circuit and the bass tip-up effect gives a beneficial extension of frequency response to the intended listener, whilst those not wearing the headphones only hear high frequencies. Many personal stereo players incorporate an LF boost to further equalize the losses. All practical headphones must be designed to take account of the presence of the user’s head since headphones work primarily in the near field.

A dramatic example of bass tip-up is obtained by bringing the ear close to the edge of a cymbal shortly after it has been struck. The fundamental note which may only be a few tens of Hz can clearly be heard. As the cymbal is such a poor radiator at this frequency there is very little damping of the fundamental which will continue for some time. At normal distances it is quite inaudible.

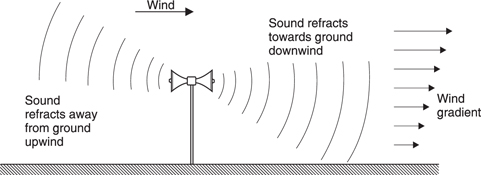

If sound enters a medium in which the speed is different, the wavelength will change causing the wavefront to leave the interface at a different angle. This is known as refraction. The ratio of velocity in air to velocity in the medium is known as the refractive index of that medium; it determines the relationship between the angles of the incident and refracted wavefronts. This doesn’t happen much in real life, it requires a thin membrane with different gases each side to demonstrate the effect. However, as was shown above in connection with the Doppler effect, wind has the ability to change the wavelength of sound. Figure 5.47 shows that when there is a wind blowing, friction with the earth’s surface causes a velocity gradient. Sound radiated upwind will have its wavelength shortened more away from the ground than near it, whereas the reverse occurs downwind.

Figure 5.47 When there is a wind, the velocity gradient refracts sound downwards downwind of the source and upwards upwind of the source.

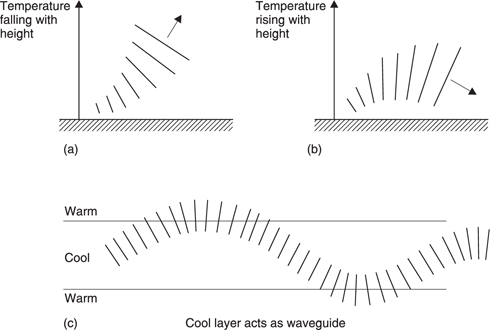

Upwind it is difficult to hear a sound source because the radiation has been refracted upwards whereas downwind the radiation will be refracted towards the ground making the sound ‘carry’ better. Temperature gradients can have the same effect. As Figure 5.48(a) shows, the reduction in the speed of sound due to the normal fall in temperature with altitude acts to refract sound away from the earth. In the case of a temperature inversion (b) the opposite effect happens. Sometimes a layer of air forms in the atmosphere which is cooler than the air above and below it. Figure 5.48(c) shows that this acts as a waveguide because sound attempting to leave the layer is gently curved back in giving the acoustic equivalent of a mirage. In this way sound can travel hundreds of kilometres. Sometimes what appears to be thunder is heard on a clear sunny day. In fact it is the sound from a supersonic aircraft which may be a very long way away indeed.

When two sounds of equal frequency and amplitude are travelling in opposite directions, the result is a standing wave where constructive interference occurs at fixed points one wavelength apart with nulls between. This effect can often be found between parallel hard walls, where the space will contain a whole number of wavelengths. As Figure 5.49 shows, a variety of different frequencies can excite standing waves at a given spacing. Wind instruments work on the principle of standing waves. The wind produces broadband noise, and the instrument resonates at the fundamental depending on the length of the pipe. The higher harmonics add to the richness or timbre of the sound produced.

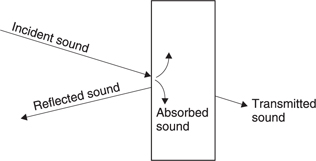

In practice, many real materials do not reflect sound perfectly. As Figure 5.50 shows, some sound is reflected, some is transmitted and the remainder is absorbed. The proportions of each will generally vary with frequency. Only porous materials are capable of being effective sound absorbers. The air movement is slowed by viscous friction among the fibres. Such materials include wood, foam, cloth and carpet. Non-porous materials either reflect or transmit according to their mass. Thin, hard materials such as glass, reflect high frequencies but transmit low frequencies. Substantial mass is required to prevent transmission of low frequencies, there being no substitute for masonry.

Figure 5.50 Incident sound is partially reflected, partially transmitted and partially absorbed. The proportions vary from one material to another and with frequency.

In real rooms with hard walls, standing waves can be set up in many dimensions, as Figure 5.51 shows. The frequencies at which the dominant standing waves occur are called eigentones. Any sound produced in such a room which coincides in frequency with an eigentone will be strongly emphasized as a resonance which might take some time to decay. Clearly a cube would be the worst possible shape for a studio as it would have a small number of very powerful resonances.

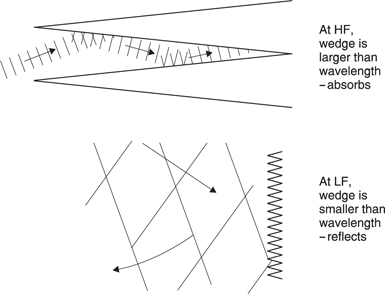

At the opposite extreme, an anechoic chamber is a room treated with efficent absorption on every surface. Figure 5.52 shows that long wedges of foam absorb sound by repeated reflection and absorption down to a frequency determined by the length of the wedges (our friend ka again). Some people become distressed in anechoic rooms and musical instruments sound quiet, lifeless and boring. Sound of this kind is described as dry.

Figure 5.52 Anechoic wedges are effective until wavelength becomes too large to see them.

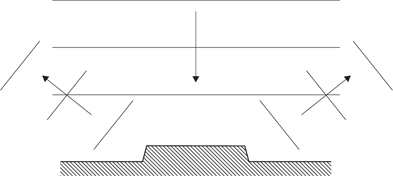

Reflected sound is needed in concert halls to amplify the instruments and add richness or reverberation to the sound. Since reflection cannot and should not be eliminated, practical studios, listening rooms and concert halls are designed so that resonances are made as numerous and close together as possible so that no single one appears dominant. Apart from choosing an irregular shape, this goal can be helped by the use of diffusers which are highly irregular reflectors. Figure 5.53 shows that if a two-plane stepped surface is made from a reflecting material, at some wavelengths there will be destructive interference between sound reflected from the upper surface and sound reflected from the lower. Consequently the sound cannot reflect back the way it came but must diffract off at any angle where constructive interference can occur. A diffuser made with steps of various dimensions will reflect sound in a complex manner. Diffusers are thus very good at preventing standing waves without the deadening effect that absorbing the sound would have.

Figure 5.53 A diffuser prevents simple reflection of an incident wavefront by destructive interference. The diffracted sound must leave by another path.

In a hall having highly reflective walls, any sound will continue to reflect around for some time after the source has ceased. Clearly as more absorbent is introduced, this time will fall. The time taken for the sound to decay by 60 dB is known as the reverberation time of the room. The optimum reverberation time depends upon the kind of use to which the hall is put. Long reverberation times make orchestral music sound rich and full, but would result in intelligibility loss on speech. Consequently theatres and cinemas have short reverberation times, opera houses have medium times and concert halls have the longest. In some multi-purpose halls the reverberation can be modified by rotating wall panelling, although more recently this is done with electronic artificial reverberation using microphones, signal processors and loudspeakers.

Only porous materials make effective absorbers at high frequency, but these cannot be used in areas which are prone to dampness or where frequent cleaning is required. This is why indoor swimming pools are so noisy.

5.11 Directionality in hearing

An understanding of the mechanisms of direction sensing is important for the successful implementation of spatial illusions such as stereophonic and surround sound. The nerve impulses from the ears are processed in specific areas of the brain which appear to have evolved at different times to provide different types of information. The time-domain response works quickly, primarily aiding the direction-sensing mechanism, and is older in evolutionary terms. The frequency-domain response works more slowly, aiding the determination of pitch and timbre and developed later, presumably after speech evolved.

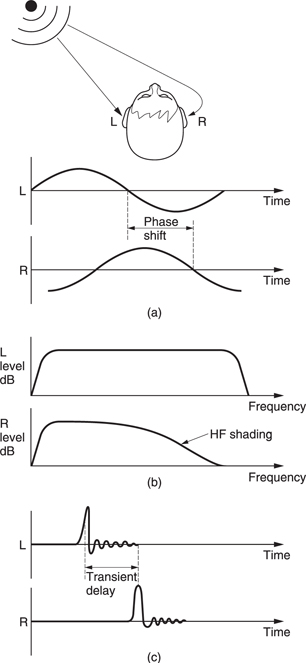

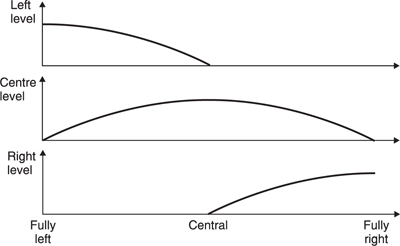

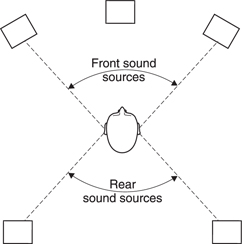

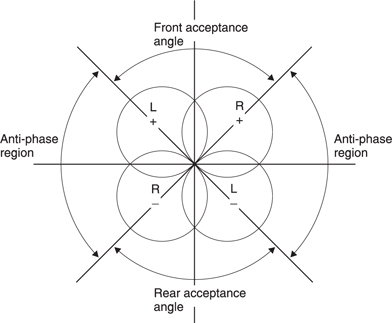

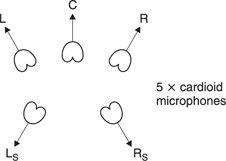

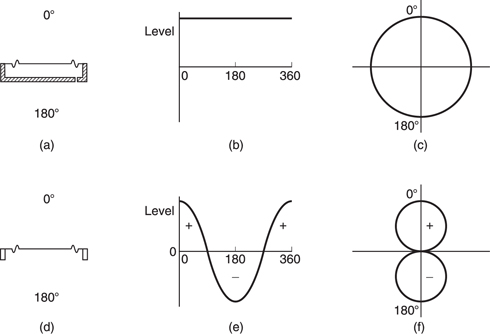

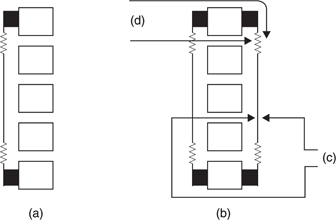

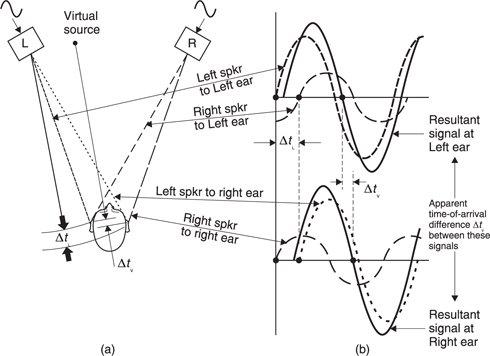

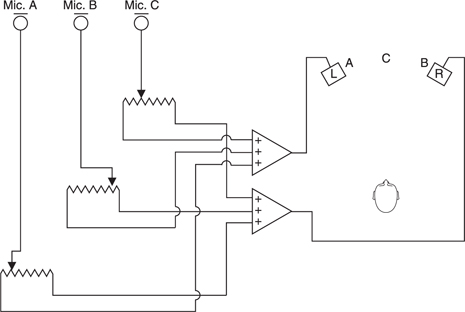

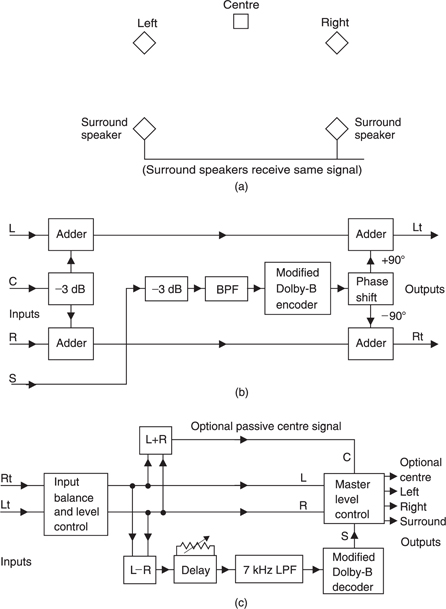

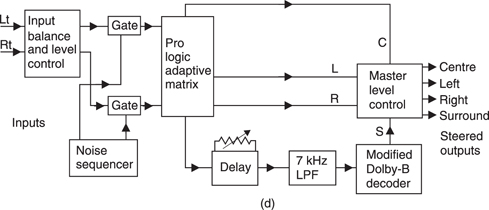

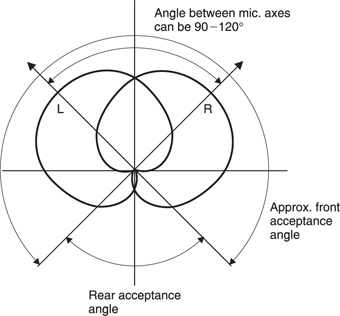

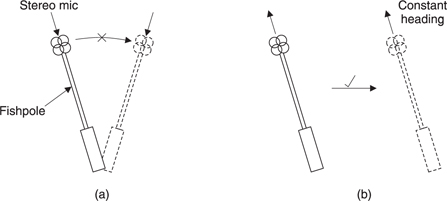

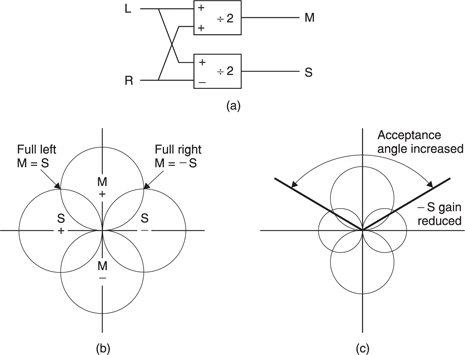

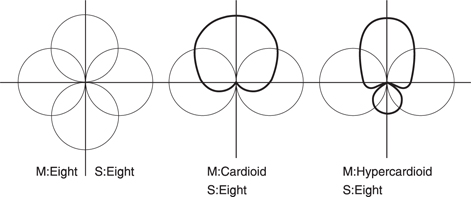

The earliest use of hearing was as a survival mechanism to augment vision. The most important aspect of the hearing mechanism was the ability to determine the location of the sound source. Figure 5.54 shows that the brain can examine several possible differences between the signals reaching the two ears. At (a) a phase shift will be apparent. At (b) the distant ear is shaded by the head resulting in a different frequency response compared to the nearer ear. At (c) a transient sound arrives later at the more distant ear. The inter-aural phase, delay and level mechanisms vary in their effectiveness depending on the nature of the sound to be located. At some point a fuzzy logic decision has to be made as to how the information from these different mechanisms will be weighted.