CHAPTER 14

USE CASES, TEST CASES

Ian Alexander

Scenario Plus, London, UK

Use Cases define the expected behaviours of systems in a branching structure consisting of the primary and alternative/exception scenarios. To generate deterministic, straight-line Test Cases, it is necessary to find a suitable set of paths (Test Scenarios) through each branched Use Case, at least covering all the branches and often also meeting more stringent criteria. Therefore, one Use Case can generate, and trace to, several Test Cases. In addition, test conditions may need to be varied to take account of different operating environments. So, each Test Scenario discovered from the Use Cases may need to be executed in not one but a whole Class of Test Cases, whose members differ according to the environmental conditions in force.

APPLICABILITY

In principle, Use Cases can guide the design of tests for any type of system. In practice, this is most important for complex novel systems whose patterns of use are not predictable from previous experience.

Scenario-based testing is most clearly appropriate for user-level acceptance testing, in which the test cases should resemble the scenarios originally elicited from system stakeholders. It may also be useful for testing sub-system behaviour, and for tests of those system qualities such as maintainability in which a scenario approach makes sense.

POSITION IN THE LIFE CYCLE

![]()

KEY FEATURES

- Use Case paths are traversed to generate Classes of Test Cases (abstract Scenarios)

- These are combined with environmental conditions to generate concrete Test Cases

- The approach can be automated, at least partially

- The approach is especially suitable for end-to-end, black-box testing

- It directly contributes to product quality as it demonstrates that systems are fit for the purposes for which they were designed.

STRENGTHS

Scenarios, especially when they also define the handling of exceptions—as is canonical for Use Cases—are ideal for defining functional tests, as there is a natural correspondence between saying what a system is expected to do, and testing that it does those things.

Use Cases define the goals of system use, and these correspond directly to Classes of related Test Cases.

Primary scenarios in Use Cases correspond directly to ‘happy day’ tests of normal operations.

Where stakeholders have personally contributed stories or scenarios describing desired system usage, user-level Acceptance Test Cases can be made to correspond directly to such stories. This gives confidence that the system is fit for purpose, at a level above detailed testing of system functions and qualities. Such stories can be encapsulated in either Normal or Exception sequences of Use Cases, depending on their nature.

Exception courses (branches) in Use Cases correspond to simple tests of exception-handling, though the exception-handling branches usually need to be combined with (parts of) primary scenarios to yield complete Test Case Scenarios.

WEAKNESSES

Domain knowledge is needed to identify the range of environmental conditions important to the tests, and in what combination. It is impractical to run separate tests for each combination of environmental conditions for each test scenario.

Use Cases do not always stand alone. Where a Use Case includes others, complete test scenarios may straddle several Use Cases. If the relationships between Use Cases are complex, it is better to make a complete behavioural model (e.g. in Flowchart/Swimlanes or Message Sequence Chart form) to define clearly the network of scenario paths to be tested.

Skill is needed to decide how many tests to run. It is impractical to run all possible test scenarios as there is generally a combinatorial explosion of different branches; when there is explicit or implicit looping the number of possible scenarios is of course infinite. However, simple rules can be applied to generate much more limited sets of test cases if assumptions are made, for example on branch coverage.

Testing (or more generally, verification) cannot be based solely on functional requirements, that is, what a system has to do. It must also consider qualities (often known as ‘ility’ requirements) such as reliability, scalability, performance, maintainability, safety, electromagnetic compatibility, security, manufacturability, and so on. Of course it is possible to define scenarios to cover many of these requirements, but this is not always done, and scenario definition is not necessarily appropriate for them anyway. In general, the verification of non-functional requirements requires as much attention as the verification of system behaviour, and is often difficult to achieve by testing. For example, reliability can be demonstrated by testing, but only after a long period of time (possibly years). In practice it is often predicted by analysis, and partially confirmed by building up a history of actually attained reliability of components and whole systems.

Scenario-based testing does not claim to do everything. It is a close match for behaviour desired by specific stakeholders, such as maintenance operators; it is less likely to be the ideal technique for white-box tests, such as whether the desired voltages are available at certain points in a circuit, or whether software objects (or procedures, functions, etc.) work correctly when supplied with a selection of correct and incorrect parameters.

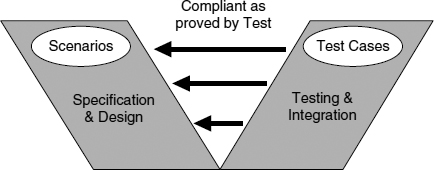

BACKGROUND: THE V-MODEL

A simple model of system development considers that project activities occur not so much in a straight-line story (like the ‘Position in the Life Cycle’ diagram that introduces each chapter of this book) as in two stories, with a relationship between them. These are often represented as the two limbs of a V (Figure 14.1). (For a more thorough look at system development life cycles, see Chapter 15 by Andrew Farncombe in this volume, which explains how the cycle of specification, design, and testing described here fits into a bigger picture.)

The traditional V-Model emphasises that the testing and integration story is rather different from the specification and design story, and indeed the two stories match like mirror images. If you create a system by specifying the whole, then sub-systems, then assemblies and finally components and modules, it follows that you verify it by testing first the lowest-level components, and if these integrate satisfactorily, then by integrating successively more parts of the system until a single working whole emerges.

FIGURE 14.1 The V-model

In this picture, it is at once evident that if you place scenarios at the start on the left-hand side, then you should get something equivalent to them on the right. One conclusion is that scenarios or Use Cases have considerable value as test cases: if you know what the stakeholders want the system to do, what better way of verifying the system than by making it do that?

TECHNIQUE

A Use Case model is constructed, with Alternative and Exception Scenarios documented to cover all the variant courses of action and Exception Events considered to require handling (Cockburn 2001).

Each Use Case is a unit of behaviour in the special sense that it is a complete story, possibly spanning contributions from all parts of the system. The availability and correct functioning of everything that contributes to that story can, therefore, be demonstrated by a test that runs through the whole story.

Obviously, this approach will not work if Use Cases are poorly constructed—for instance, if they are made from the point of view of the system and essentially document the separate behaviours of sub-systems or components (such as software modules). Models with this orientation are often called ‘inside-out’ for this reason.

For instance, in the hackneyed but frequently bungled customer-gets-cash-from-teller-machine example, the customer is not talking to the ATM device: he/she is talking to the bank. Ultimately the cash comes not from the hole in the wall but from the customer's account, however much the customer might wish otherwise. It is all too easy for analysts to see the world or ‘problem domain’ as a set of sub-systems, but this is as wrong for acceptance testing as it is for stating stakeholder requirements. We don't want to start with inside-out ATM cases; we want clean get-cash-from-bank cases.

Given a starting point of a well-founded set of Use Cases, test engineers already have the basis for first-cut test design: The functional requirements are segmented into more or less directly testable chunks of behaviour. The first task should naturally be to check that the Use Cases are indeed of this quality. If they are not, the alternatives are to improve and perhaps supplement them, or to find another basis for testing.

The second task is disarmingly but perhaps deceptively simple: to create a Test Case by copying (and linking back to, if you have tool support) each Scenario (Ahlowalia 2002, Alexander 2002, Heumann 2001). But there are quite a few complicating factors.

A One-to-Many Mapping

The core of a Test Case is a simple and therefore deterministic sequence of actions, generally given a name, to be carried out by a test engineer on the system, supported as necessary by test equipment. Tests should be deterministic to allow test engineers to know immediately whether a given test run has passed or failed; there should be no ifs and buts about it. A branching Use Case can generate many allowed sequences of actions (Scenarios), so there is a one-to-many relationship between Use Cases and Test Cases. Simple kinds of scenario such as User Stories (see Chapter 13, User Stories in Agile Software Development) often seem at first blush to map to precisely one Test Case, but closer analysis often reveals several further possible Test Cases; complex Stories may have to be split to make them tractable.

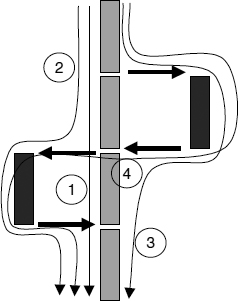

FIGURE 14.2 Multiple scenario paths in a use case with two recoverable exceptions

The generated Scenarios are likely to be composed of a part of the main or happy day sequence, followed by a branch (Alternative or Exception), followed probably by another part of the main sequence. Therefore, we have to be careful to distinguish between the component sequences of actions (or ‘courses of events’) of a Use Case, which are often called ‘Main Scenario’ and so on, and generated Scenarios which are complete paths that might happen in practice and might need to be tested. A Use Case with two recoverable Exception sequences that do not overlap can generate at least four Scenarios (see Figure 14.2), and more when cycles are considered.
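The count of four can be checked by enumerating the paths mechanically. The following is a minimal sketch, assuming a Use Case whose Normal sequence is divided into three parts by two non-overlapping recoverable Exceptions, each of which is either skipped or taken once. The function name `enumerate_scenarios` and the step labels are illustrative assumptions, not part of any published tool, and cycles are deliberately ignored.

```python
from itertools import product

def enumerate_scenarios(normal_parts, exceptions):
    """List every acyclic path through a Use Case.

    normal_parts: consecutive segments of the Normal sequence.
    exceptions:   one optional, recoverable Exception per gap between
                  segments, so len(exceptions) == len(normal_parts) - 1.
    Each Exception is either skipped or taken once; cycles are ignored.
    """
    if len(exceptions) != len(normal_parts) - 1:
        raise ValueError("need exactly one exception slot per gap")
    scenarios = []
    # Every True/False combination decides which Exceptions are taken.
    for taken in product([False, True], repeat=len(exceptions)):
        path = [normal_parts[0]]
        for exc, use_it, next_part in zip(exceptions, taken, normal_parts[1:]):
            if use_it:
                path.append(exc)
            path.append(next_part)
        scenarios.append(path)
    return scenarios

paths = enumerate_scenarios(
    ["Normal part 1", "Normal part 2", "Normal part 3"],
    ["Exception 1", "Exception 2"],
)
for p in paths:
    print(" -> ".join(p))
print(len(paths), "scenarios")
```

With n independent recoverable Exceptions the sketch yields 2 to the power n paths, which is the combinatorial growth that the rest of this section has to tame.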

Slaying the Hydra

Where there are many variations possible, the number of Scenarios that can be generated grows combinatorially. This is especially serious if you have to consider several Use Cases at once. As soon as you have thought of one Test Case, you realise there are three other similar ones that you might need to include, rather as Hercules in Greek mythology sliced off one of the heads of the Hydra with his sword, only to find that three more heads grew in its place (Figure 14.3).

One traditional solution is to select a set of Test Cases that cover each branch at least once (every block of activities is executed in at least one test). Unfortunately, this is by no means the same thing: for instance, you could run a test of the Main Scenario, and then a cut-down test that merely started each Exception off and ended when the exception-handling steps had been run. This covers all the activities in the Use Case, but it completely fails to answer pressing questions like ‘can normal activities resume successfully after this Exception?’ It is only too easy for software to reset rather too well after an error; the error is apparently cleared, but the software fails to run the next normal case. So, as test engineers are well aware, Branch Coverage is a weak approximation to ‘full’ testing.
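The gap between covering every block and exercising the resumption of normal activity can be made concrete. The sketch below assumes a hypothetical Use Case with three Normal blocks (`N1` to `N3`) and two recoverable Exceptions (`E1`, `E2`); the block names and the transition graph are invented for illustration. It compares the blocks executed by a Main Scenario plus two cut-down exception tests with the transitions those tests actually traverse.

```python
# Transitions of a hypothetical Use Case: three Normal parts, two Exceptions.
EDGES = {
    ("N1", "N2"), ("N2", "N3"),   # Normal sequence
    ("N1", "E1"), ("E1", "N2"),   # Exception 1 leaves after N1, resumes at N2
    ("N2", "E2"), ("E2", "N3"),   # Exception 2 leaves after N2, resumes at N3
}
BLOCKS = {block for edge in EDGES for block in edge}

tests = [
    ["N1", "N2", "N3"],   # Main Scenario
    ["N1", "E1"],         # cut-down test: stops once Exception 1 is handled
    ["N1", "N2", "E2"],   # cut-down test: stops once Exception 2 is handled
]

def blocks_covered(test_paths):
    """Every block executed by at least one test."""
    return {block for path in test_paths for block in path}

def edges_covered(test_paths):
    """Every transition between consecutive blocks in at least one test."""
    return {edge for path in test_paths for edge in zip(path, path[1:])}

missing_blocks = BLOCKS - blocks_covered(tests)
missing_edges = EDGES - edges_covered(tests)
print("blocks never executed:", sorted(missing_blocks))
print("transitions never exercised:", sorted(missing_edges))
```

Under these assumptions every block is executed, yet the two transitions that lead from an Exception back into the Normal sequence are never exercised, which is exactly the unanswered question about resuming normal activities.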

FIGURE 14.3 Hercules slaying the Hydra. Redrawn from a Greek Earthenware Vessel, about 525 BC

For simple Use Cases (those without too many combinations), the solution is to generate all possible combinations as Test Cases.

For larger Use Cases, we need to be more selective about which Test Cases to define. Starting from the principle that behaviour visible to users needs to be tested, and that the component sequences of Use Cases constitute such behaviours, we can state some rules for generating Test Cases:

- Every Normal (happy day) scenario should be run as a test from start to end. Since the goal of the Use Case cannot be achieved without the normal behaviour, this test is a precondition for all the other tests. If it fails, further testing may be nugatory until the normal scenario can be run successfully.

- Every Alternative and Exception should be run as part of a test, commencing at the start of the Normal sequence and ending as late as possible, that is:

  - at the end of the Normal sequence if this can be rejoined (recoverable Exception);

  - at the end of an irrecoverable Exception sequence.

- Every Exception that ends with rejoining the Normal sequence should also be run as part of a test that then executes the whole Normal sequence (again) from the start, to check that the error conditions have been correctly reset.

For a Use Case with two recoverable Exceptions as diagrammed above, this means that there will be at least five test cases, namely:

- the Normal Scenario

- Normal Start—Exception 1—Normal End

- Normal Start—Exception 2—Normal End

- Exception 1—Normal End—whole Normal sequence again

- Exception 2—Normal End—whole Normal sequence again
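The three rules are simple enough to apply mechanically. The sketch below is one possible reading of them for a Use Case whose Exceptions are all recoverable; the function name `generate_test_cases` is an assumption made for illustration, and the step labels follow the list above.

```python
def generate_test_cases(recoverable_exceptions):
    """Apply the three rules to a Use Case with recoverable Exceptions only."""
    # Rule 1: the Normal scenario from start to end.
    cases = [["Normal Scenario"]]
    # Rule 2: each Exception within a test that runs on to the Normal end.
    for exc in recoverable_exceptions:
        cases.append(["Normal Start", exc, "Normal End"])
    # Rule 3: each Exception followed by a complete rerun of the Normal
    # sequence, to check that the error conditions have been reset.
    for exc in recoverable_exceptions:
        cases.append([exc, "Normal End", "whole Normal sequence again"])
    return cases

cases = generate_test_cases(["Exception 1", "Exception 2"])
for number, case in enumerate(cases, start=1):
    print(number, " > ".join(case))
```

For n recoverable Exceptions this gives 1 + 2n test cases, so the set grows linearly rather than combinatorially.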

It is then a matter of judgement whether the additional Test Case containing both Exceptions is necessary. If there is any doubt whether handling the first exception could upset the handling of the other exception, then the test is necessary. It is not logically sufficient, as we would still not have tested whether running the second exception and then the first (on the next cycle) would work correctly. The Hydra has many heads and it is impossible to be sure that no possible faults remain in systems of any complexity. In practice, therefore, testers have to settle for some measure of reasonable coverage rather than anything close to complete coverage of all imaginable scenarios.

Prioritising the Tests

When there are many possible paths, it is sensible to prioritise them to help select which Test Cases to run. There are several possible selection criteria, such as

- paths most likely to fail in a test (most productive tests)

- paths most frequently followed by users (McGregor and Major 2000) (most needed tests)

- minimum number of paths to achieve branch coverage (most economical tests)

- paths asserted by stakeholders to be significant

- paths with high criticality to the mission

- paths that are highly related to safety.

Ahlowalia suggests computing a path factor by adding criticality and frequency, or possibly more complex metrics (Ahlowalia 2002). Different criteria may be right for different kinds of system.
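As a rough illustration of an additive path factor, the sketch below ranks a handful of paths and keeps the top few. The 1 to 10 scoring scales, the example scores, and the names `Path` and `prioritise` are all assumptions made for this example; they are not taken from Ahlowalia's paper.

```python
from dataclasses import dataclass

@dataclass
class Path:
    name: str
    criticality: int  # assumed scale: 1 (low) to 10 (high)
    frequency: int    # assumed scale: 1 (rare) to 10 (constant use)

    @property
    def path_factor(self) -> int:
        # Additive factor: criticality plus frequency.
        return self.criticality + self.frequency

def prioritise(paths, budget):
    """Return the `budget` paths with the highest path factor."""
    ranked = sorted(paths, key=lambda p: p.path_factor, reverse=True)
    return ranked[:budget]

# Invented scores, purely for illustration.
candidates = [
    Path("Normal scenario", criticality=6, frequency=9),
    Path("Exception 1 then resume", criticality=8, frequency=3),
    Path("Exception 2 then resume", criticality=4, frequency=2),
    Path("Both exceptions", criticality=9, frequency=1),
]
selected = prioritise(candidates, budget=3)
for p in selected:
    print(p.path_factor, p.name)
```

Note that with a purely additive factor a rare but highly critical path can fall well down the ranking, so a scheme of this kind needs an override for paths that must be tested regardless of frequency.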

The criteria for real-time, embedded, and safety-related systems are likely to differ from those for office software such as accounting and management information systems. For example, safety testing must comply with the verification plan set out in the system safety case. That will constitute an argument that the system is known to be acceptably safe because, amongst other evidence, the system has been tested against all major identified hazards. (See Chapter 7, Negative Scenarios and Misuse Cases for a discussion of hazard identification.) It would not be acceptable in a safety context to argue that because a path was rarely followed it need not be tested—on that basis, parachutes and fire extinguishers could be made cheap and unreliable.

McGregor & Major's focus is on business software, in which the number of possible paths is essentially infinite, and even achieving branch coverage is often difficult. In this context, a software system is imagined as a green field containing any number of untrodden paths. However, suppose the field has four gates and two stiles, and people usually walk between these points. Soon, paths appear in the grass, and the areas near the gates and stiles become trodden smooth, while areas far from these points remain lightly visited or unexplored. With this image in mind, McGregor & Major's approach is to make an operational profile of each class of user (‘actor’) and hence predict frequency of use, if not for each path then at least for each Use Case. Traditionally, operational profiles are created retrospectively; but Use Cases offer a way of predicting operational profiles in time for acceptance testing.
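One simple way to turn an operational profile into numbers is to weight each actor's likely choice of Use Case by that actor's share of overall system use. The sketch below does this for a few actors and Use Cases; the shares and probabilities are invented, and the calculation is a generic weighted sum rather than McGregor and Major's own formulation.

```python
from collections import defaultdict

# Assumed share of all system use attributable to each actor (sums to 1).
actor_share = {
    "Householder": 0.7,
    "Control Centre Operator": 0.2,
    "Maintenance Engineer": 0.1,
}

# Assumed probability that a given actor's interaction is a given Use Case.
usage = {
    "Householder": {"Protect House": 1.0},
    "Control Centre Operator": {
        "Handle Intrusion": 0.5,
        "Update Customer Account": 0.3,
        "Collect Subscription Payment": 0.2,
    },
    "Maintenance Engineer": {
        "Service the Alarm": 0.8,
        "Repair Failed Alarm": 0.2,
    },
}

def use_case_frequencies(shares, profile):
    """Predict the relative frequency of each Use Case across all actors."""
    freq = defaultdict(float)
    for actor, share in shares.items():
        for use_case, probability in profile[actor].items():
            freq[use_case] += share * probability
    return dict(freq)

frequencies = use_case_frequencies(actor_share, usage)
for use_case, f in sorted(frequencies.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{f:.2f}  {use_case}")
```

The resulting frequencies can then feed whatever selection criterion the project prefers, for example as the frequency term of a path factor.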

Tool Support

It is tempting to consider how the generation of Test Cases from Use Cases could be automated. A tool would require a representation of the Use Cases that could be traversed automatically, and a set of rules (such as those just given) for the traversal.

Any database that records the order of scenario steps is sufficient to allow a straight-line scenario to be traversed, and the task of copying and linking such a scenario to a Test Case is quite trivial.

Unfortunately, there are two major obstacles in the way of automating test generation:

- Naïve traversal generates enormous numbers of combinations, yielding an impractically large set of often very repetitive and unproductive Test Cases.

- Use Cases are structured for humans to read, not for test generators.

It is possible to make some reasonable assumptions to cut down on the number of pointless Test Cases, as we did in the rules given above. These do not guarantee perfect testing, but they do often give a sensible approximation to a workable set of tests.

The second obstacle is more challenging. Use Cases and scenarios in general are fundamentally stories told by people to people. They can be chatty, casual, allusive, and inconsistent. Branching can be indicated vaguely or not at all; branch positions can be indicated by numbering in ‘come from’ rather than ‘go to’ style, and so on. In short, people have ways that are infuriating to precise test engineers. The trouble is that engineers use scenarios in the first place precisely because they are informal enough and expressive enough to enable ordinary stakeholders to express and share their intentions. Formalising the Use Cases into something semantically tight and precise could make them much easier for engineering tools to traverse, but impossible for non-technical people to cope with.

Where Use Cases have to be shared with ordinary stakeholders, a list of steps is probably as much formalisation as can readily be tolerated. Happily, a simple tool that can copy and link such a sequence to a Test Case fragment, together with simple editing tools allowing such fragments to be spliced together, can dramatically cut down the amount of work needed to prepare user-level tests. Essentially this is the approach taken in my Scenario Plus toolkit, in which support for testing is extremely simple—the test engineer selects a scenario and pushes a button to create and link a matching test case; refinements are then made “by hand” using link-preserving cut and paste (Scenario Plus 2003). In practice, this reduces the mechanical task of creating a test case to a few seconds; most of the effort is where it should be, in defining the test conditions and pass criteria.

Where Use Cases are essentially owned by engineers, as when they document interactions between sub-systems, it is possible to make them more analytical. Some engineers (e.g. Binder 1995, Gelperin 2003) as well as a persistent group of researchers (see e.g. Frappier and Habrias 2001 for a range of approaches) argue that Use Case specifications must be made more formal. It is a moot point, even if you concede that formal specifications are needed at some stage of a project, whether Use Cases should be fully structured like program code (or pseudocode) with special syntax to express branching, looping, and so on, as Gelperin suggests. Binder proposes another approach, adding a decision table to the Use Case model to support systematic generation of test cases; otherwise ‘the typical OMT Specification is an untestable cartoon. The information is useful for human interpretation but cannot support automated test case generation.’ (Binder 1995)

Such a structure makes it easy for a Test Case generator to traverse and analyse the cases; but since we know that even expertly written code contains an error every 1000 lines or so, such analytic scenario structures would be subject to subtle ‘programming’ errors. Given that this structure would drive the structure of system testing, any such errors would be magnified. In addition, analysis takes more time than simply writing down stories, so analytic Use Cases would be available only later in a project. However, for engineer-facing Use Cases these are no insurmountable objections.

Another approach is to develop models, such as swimlanes diagrams with activity/flow charts, state charts, and message sequence diagrams, that in their various ways express scenarios in more analytic forms. It is worth observing that sequence diagram representations are often called ‘scenarios’ (e.g. Figure 14.5 in Amyot and Mussbacher 2002). This use of models is of course the conventional software and systems engineering approach, gradually translating abstract stakeholder needs into concrete system designs. This view is echoed in the UML and associated processes like the Rational Unified Process (RUP) (Kruchten 2000; see also Peter Haumer's account in Chapter 12).

Any analytic model that correctly expresses the temporal and logical relationships of required activities can (in principle) be traversed to generate Test Cases. The diversity of types of model—both within and between projects—is an inconvenience for test case generation, but since the models are used directly to define the system, it has the merit that both the system and tests are generated from the same models.

For software, the logical outcome is a formal or semi-formal specification in, for example, Z or SDL (see Frappier and Habrias 2001), from which the code can be generated mechanically (if not yet necessarily automatically), and from which a systematic battery of test cases can be generated by rules such as those we have discussed.

Numerous research and industrial tools have been created to help construct such specifications, and then to translate them more or less automatically to sets of test cases. For example, Bell Labs have a free Message Sequence Chart (MSC) editor and Test Case generator (working from the MSC ‘scenarios’), promising complete branch coverage and optimal performance, in the sense that the tests will be fewest in number and of the shortest length possible (UBET 2003).

Do Scenarios Yield Test Cases or Test Case Classes?

So far, we have effectively assumed that a (named) sequence of activities to be tested corresponds directly to a Test Case. However, this is not the whole story. Test conditions may need to be varied to take account of the range of possible operating environments. So, each Test Scenario generated from the Use Cases may need to be executed in not just a single Test Case but also a whole Class of Test Cases, whose members differ in the environmental conditions in force.

For example, to test whether a CD player works in a car, a basic test scenario is to insert a CD, start the player, and then drive the car. The test passes if the CD is heard satisfactorily, whether the car is moving or not. This could be called the ‘Journey Music’ test. However, on reflection you can see that it could be important whether the car is driven gently on a smooth road, violently on a rough forest track, up and down steep hills, or accelerated and braked sharply. It could also matter whether the temperature is −40°C in arctic Norway, 11°C in rainy England, or 45°C in the dust of the Arabian Desert, if the car and the CD player are to be used in those places. How many Test Cases is that? Do we need an Arctic Forest-Track Rally-Driving Music Test?

The unpalatable answer might be that we need many more tests than scenarios. However, test engineers are used to making assumptions about environmental conditions, and combining results from different, fragmentary tests to predict overall performance. For instance, equipment is routinely tested by being vibrated and shaken to simulate being driven and dropped, and so on; it is separately tested by being heated and cooled repeatedly to simulate being used in different climates and when the weather changes. Passing those two tests does not actually prove that the equipment will work if shaken and heated at once, but unless there are reasons for supposing that the two factors would interact, much test effort is saved by not checking the combination.
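The saving from testing environmental factors separately can be counted for the ‘Journey Music’ example. The sketch below takes the four driving styles and three climates mentioned in the text and compares a full cross-product of conditions with one test per condition; the variable names are illustrative, and treating the two factors as independent is itself the assumption under discussion.

```python
from itertools import product

driving = [
    "gentle, smooth road",
    "violent, rough forest track",
    "steep hills",
    "sharp acceleration and braking",
]
climate = ["-40 C (arctic Norway)", "11 C (rainy England)", "45 C (Arabian Desert)"]

# Every driving style under every climate: one Test Case per combination.
combined = list(product(driving, climate))

# Each factor varied on its own, assuming the factors do not interact.
separate = [("driving", d) for d in driving] + [("climate", c) for c in climate]

print(len(combined), "combined Test Cases")
print(len(separate), "separate Test Cases")
```

Two factors give 12 combined tests against 7 separate ones; each further environmental factor multiplies the first figure but only adds to the second.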

In general, then, a Scenario identified as being worth testing corresponds to a Test Case Class, not a single Test Case as software engineers may assume: all really good disputes are founded on the simple fact that the world looks different from different places. It is a matter of judgement how many individual Test Cases are then generated, given the immense range of environmental conditions (temperature, noise, humidity, wind, pressure, electromagnetic radiation, magnetic fields, vibration… ) that could apply. The default choice is just one—the ordinary ‘room temperature’ conditions of the test laboratory—but it is always possible that this is insufficient.

The possibilities for tool support in selecting appropriate combinations of environmental conditions are quite limited. The most useful guide is experience, perhaps encapsulated in a template derived from previous projects.

WORKED EXAMPLE

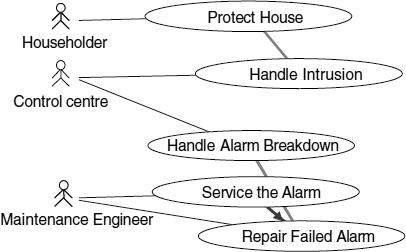

Let us continue with the simple example of Chapter 7, Negative Scenarios and Misuse Cases. Suppose that we have prepared Use Cases for a burglar alarm service, including installation, monitoring, and maintenance of household alarms (Figure 14.4).

Inputs

The principal inputs consist of the Use Cases. These tell the story of how the alarm service as a whole is to operate.

The householder protects the house with an alarm. This guards the house while the householder is out, and alerts the Control Centre if there seems to be an intrusion. The Control Centre handles the intrusion by trying to disconfirm it, and if that fails by calling out a guard and notifying the police. The Control Centre also handles alarm breakdown as well as regular servicing, calling out a maintenance engineer to repair or service the alarm as appropriate. A service may, exceptionally, lead to the repair of an alarm. The Control Centre is also responsible for other tasks (not shown) including opening and maintaining customer accounts, taking payment for accounts, and arranging for new alarms to be installed.

FIGURE 14.4 Use Case summary diagram for ‘Provide Burglar Alarm Service’

Here is a summary of the Use Cases and their principal Exceptions.

Protect House

Alternative: (Deluxe Model) Operate Alarm Remotely

Handle Intrusion

Exception: Mistake: Handle False Alarm

Exception: Alarm Failed: Repair Failed Alarm

Handle Alarm Breakdown

Service the Alarm

Exception: Alarm Failed: Repair Failed Alarm

Repair Failed Alarm

Exception: Alarm Irreparable: Install the Alarm

Install the Alarm

Open Customer Account

Update Customer Account

Collect Subscription Payment

Exception: Unpaid: Cancel Service, Chase Payment

Input Validation

Our first task is to validate these inputs: will these form good tests, and is the list complete?

It is important to test all aspects of the Use Cases. All of them will certainly be needed for the alarm service to work properly, and indeed for the company running the service to prosper. And they are reasonably discrete, end-to-end stories in their own right; of course we need to check the wording of the scenarios to see if they are adequately defined.

Notice, incidentally, that the cases are not all about exciting things at the sharp end of the stick, like activating the alarm or detecting intrusion. Many vital activities are about pedestrian details like recording that Mrs. Jones lives in 131 Acacia Avenue, that her phone number has just changed, and that she has paid her subscription for the year. Yet the address is critical to the burglar alarm service; if it is wrong, the guard and the police will be misdirected in the event of a burglary. Similarly, the phone number is needed—if it is wrong, the guard may be sent out to a false alarm, as the householder will not be reached to disconfirm intrusion. As for the subscription, the alarm service will work perfectly without it—until the alarm company goes bankrupt.

Completeness is inherently more difficult to validate. Stakeholders can tell, by playing through the stories in their minds, that these Use Cases would result in a working service. But what might be missing?

Thinking out what could go wrong, by analysing Exceptions and Misuse Cases (see Chapter 7), is one way to find out. A security breach, caused for instance by a corrupt employee in the Control Centre, could cause Handle Intrusion and perhaps Collect Subscription Payment to fail. This line of reasoning leads to the sort of requirements detailed in the Misuse Cases chapter, and suggests that there ought to be specific procedures (perhaps documented as Use Cases) to deal with security: for instance, monitoring of employee actions, analysis of patterns of burglaries, and false alarms. As test engineer, you might recommend Test Cases to cover such procedures even if the equivalent Use Cases were missing. You might also with some justification be a little suspicious about any cases with no documented Exceptions: is it really being claimed that nothing can go wrong? The ancient Greeks called that hubris, meaning ‘tempting fate’.

Identifying Classes of Test Cases

We can now work out the full list of classes of functional Test Cases—we do not expect to cover all the non-functional requirements such as reliability here.

Firstly, there must be a normal course of events (happy day) test for each Use Case:

- 1. Protect House (from existing Use Cases)

- 2. Handle Intrusion

- 3. Handle Alarm Breakdown

- 4. Service the Alarm

- 5. Repair Failed Alarm

- 6. Collect Subscription Payment

- 7. Install the Alarm

- 8. Open Customer Account

- 9. Update Customer Account

- 10. Monitor Employee Actions (case added by Test Engineer)

- 11. Analyse Alarm Patterns (case added by Test Engineer)

Next, there must be a test for each documented Alternative or Exception. To minimise the number of tests, we can restrict ourselves to defining only the ‘normal, Exception, repeat the normal’ test scenarios.

- 12. Protect House Alternative: (Deluxe Model) Operate Alarm Remotely

- 13. Handle Intrusion Exception: Mistake: Handle False Alarm, then Handle Intrusion normally

- 14. Handle Intrusion Exception: Alarm Failed: Repair Failed Alarm, then Handle Intrusion normally

- 15. Service the Alarm Exception: Alarm Failed: Repair Failed Alarm, then Handle Intrusion normally

- 16. Repair Failed Alarm Exception: Alarm Irreparable: Install the Alarm, then Handle Intrusion normally

- 17. Collect Subscription Payment Exception: Unpaid: Cancel Service, Chase Payment, then Collect Subscription Payment (“next year”) normally

Notice that we have chosen to run the ‘Handle Intrusion’ scenario after servicing or repairing the alarm, even though this is structured as a separate Use Case (and would likely not be identified as necessary to these particular tests by a naïve test case generator). Our domain knowledge tells us that the alarm ought to be able to do this after maintenance. We could equally have chosen to run ‘Protect House’, which is also a happy day scenario that could follow on from alarm maintenance. Another very economical choice would be to run ‘Handle Intrusion’ after one of the simulated repairs, and ‘Protect House’ after the other. That way we would at least have some evidence that both can work correctly after a repair. The more cautious but more costly test approach would be to run both follow-ons for both situations, creating two more test cases. That is not many in this small example, but in a large system it would represent a severe challenge: a combinatorial explosion in numbers of test cases, where many normal courses could be combined with many exception scenarios.
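The list of test classes built so far can be assembled from two small tables: the happy day cases, and the documented branches together with the follow-on scenario chosen for each. The sketch below is a hypothetical representation of the worked example; the names `HAPPY_DAY`, `BRANCHES`, and `list_test_classes` are assumptions, and the follow-on column is supplied by hand because it encodes the domain knowledge just discussed.

```python
HAPPY_DAY = [
    "Protect House", "Handle Intrusion", "Handle Alarm Breakdown",
    "Service the Alarm", "Repair Failed Alarm", "Collect Subscription Payment",
    "Install the Alarm", "Open Customer Account", "Update Customer Account",
    "Monitor Employee Actions", "Analyse Alarm Patterns",
]

# (use case, documented branch, follow-on chosen by the test engineer or None)
BRANCHES = [
    ("Protect House", "Alternative: (Deluxe Model) Operate Alarm Remotely", None),
    ("Handle Intrusion", "Exception: Mistake: Handle False Alarm", "Handle Intrusion"),
    ("Handle Intrusion", "Exception: Alarm Failed: Repair Failed Alarm", "Handle Intrusion"),
    ("Service the Alarm", "Exception: Alarm Failed: Repair Failed Alarm", "Handle Intrusion"),
    ("Repair Failed Alarm", "Exception: Alarm Irreparable: Install the Alarm", "Handle Intrusion"),
    ("Collect Subscription Payment", "Exception: Unpaid: Cancel Service, Chase Payment",
     "Collect Subscription Payment"),
]

def list_test_classes(happy_day, branches):
    """Number the happy day tests first, then one test per documented branch."""
    names = list(happy_day)
    for use_case, branch, follow_on in branches:
        name = f"{use_case} {branch}"
        if follow_on:
            name += f", then {follow_on} normally"
        names.append(name)
    return [f"{number}. {name}" for number, name in enumerate(names, start=1)]

classes = list_test_classes(HAPPY_DAY, BRANCHES)
print("\n".join(classes))
```

Swapping a follow-on entry from ‘Handle Intrusion’ to ‘Protect House’, or adding a second row per repair, reproduces the economical and cautious variants described above.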

Then we have to consider whether to run combination tests, first within Use Cases that have two or more Exceptions, and then across Use Cases in case they interact.

Here, in this simplified story, only Handle Intrusion has two Exceptions. We might as well run all combinations within that Use Case:

- 18. Handle Intrusion—Handle False Alarm—Repair Failed Alarm—Handle Intrusion again.

- 19. Handle Intrusion—Repair Failed Alarm—Handle False Alarm—Handle Intrusion again.
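Tests 18 and 19 are the two possible orderings of the two exception handlers. As a rough sketch (the function name and the arrow separator are assumptions, chosen only for illustration), the orderings can be enumerated with Python's standard `itertools.permutations`:

```python
from itertools import permutations


def combination_tests(use_case, exception_handlers):
    """List one test per ordering of the Use Case's exception handlers."""
    tests = []
    for order in permutations(exception_handlers):
        steps = [use_case, *order, f"{use_case} again"]
        tests.append(" -> ".join(steps))
    return tests


tests = combination_tests(
    "Handle Intrusion", ["Handle False Alarm", "Repair Failed Alarm"]
)
for number, test in enumerate(tests, start=18):
    print(f"{number}. {test}")
```

A Use Case with n Exceptions has n! such orderings, so this exhaustive treatment is only practical when n is small, as it is here.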

Is that all the tests? By no means. Questions like whether the Control Centre can deal with the stress of multiple simultaneous alarms must be answered with convincing evidence, or the company's reputation may be irredeemably damaged. If you are lucky enough to have stories or scenarios covering what experts in the field think are the critical issues, then these are definitely worth converting into test cases—even though they probably will not be made into Use Cases.

Generating Classes of Test Cases

Given the decisions already made, it ought to be possible to generate Test Cases essentially automatically. This is easy for happy day tests: you just copy the Normal course from the Use Case in question, and link each copied step to its source if you want traces from test steps back to requirements (which you should).

If the requirements engineers have done their stuff, every step will have its own Acceptance (or ‘Fit’) Criteria (Alexander and Stevens 2002, Robertson and Robertson 1999), which you should be able to use directly to enable the person running the test to tell whether the step has passed or failed. If not, you have a bit of extra work to do.
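The copy-and-link idea for happy day tests might look something like the following sketch. The class names, the step identifiers (`HI-1`, `HI-2`), and the sample fit criterion are all invented for illustration; a real requirements tool would supply its own.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class UseCaseStep:
    step_id: str                         # hypothetical requirement identifier
    text: str
    fit_criterion: Optional[str] = None  # Acceptance ('Fit') Criterion, if written


@dataclass
class ScriptStep:
    action: str
    traces_to: str                       # link back to the originating step
    pass_condition: Optional[str]


def happy_day_test(normal_course: List[UseCaseStep]) -> List[ScriptStep]:
    """Copy each Normal-course step into the test and keep a trace link."""
    return [ScriptStep(s.text, s.step_id, s.fit_criterion) for s in normal_course]


def missing_criteria(test_steps: List[ScriptStep]) -> List[str]:
    """Return the ids of steps that still lack a pass condition."""
    return [t.traces_to for t in test_steps if t.pass_condition is None]


# Identifiers and the fit criterion below are invented for illustration.
normal_course = [
    UseCaseStep("HI-1", "Call Centre Operator studies details of reported intrusion.",
                "Details are displayed to the Operator."),
    UseCaseStep("HI-2", "Call Centre Operator tries to disconfirm intrusion by calling Householder."),
]
test_steps = happy_day_test(normal_course)
print(missing_criteria(test_steps))
```

The second helper lists the steps where the "bit of extra work" remains: those copied across without a criterion that a tester could use to judge pass or fail.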

Tests involving Alternatives or Exceptions are a little more tricky. Unfortunately, understanding text isn't something that computers are currently much good at, and so if the steps in your Use Cases are plain text, you will probably have to identify where Alternatives and Exceptions should branch off from the Normal sequence, and where (if anywhere) they rejoin it. That done, generation of test scenarios is simply a matter of copying and linking.

For example,

- 13. Handle Intrusion Exception: Mistake: Handle False Alarm, then Handle Intrusion normally

unpacks to form the rough test scenario

- (Intrusion simulated)

- ---------- Normal: ----------

- Call Centre Operator studies details of reported intrusion.

- Call Centre Operator tries to disconfirm intrusion by calling Householder.

- Call Centre Operator authenticates Householder's identity.

- ---------- Exception: ----------

- Householder admits Mistake.

- Call Centre Operator logs event.

- (not including: Call Centre Operator Calls out Guard.)

- (Intrusion simulated)

- ---------- Normal: ----------

- Call Centre Operator studies details of reported intrusion.

- Call Centre Operator tries to disconfirm intrusion by calling Householder.

- Householder does not disconfirm.

- Call Centre Operator Calls out Guard.

This is only rough, because the person running the test does not want to know about people's job titles, but only which actions to take. So it may be necessary to tidy up the wording. It might be sensible to do this before generating test scenarios, if some Use Case sequences are going to be copied several times, as is likely.
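Once the branch points have been identified by hand, the unpacking shown above is mechanical. The sketch below assumes a very simple representation, with hypothetical class and field names: each Exception records how many Normal steps run before it branches off, and it is assumed never to rejoin the Normal course. For simplicity the Normal course here is taken to include the authentication step.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ExceptionCourse:
    branch_after: int   # how many Normal steps run before the branch
    steps: List[str]


@dataclass
class UseCase:
    trigger: str
    normal: List[str]
    exceptions: Dict[str, ExceptionCourse] = field(default_factory=dict)


def unpack(use_case: UseCase, exception_name: str) -> List[str]:
    """Build: Normal up to the branch, the Exception, then the full Normal course."""
    exc = use_case.exceptions[exception_name]
    scenario = [f"({use_case.trigger})"]
    scenario += use_case.normal[: exc.branch_after]  # Normal, up to the branch
    scenario += exc.steps                            # Exception course
    scenario += [f"({use_case.trigger})"]            # start the second pass
    scenario += use_case.normal                      # complete Normal course
    return scenario


handle_intrusion = UseCase(
    trigger="Intrusion simulated",
    normal=[
        "Call Centre Operator studies details of reported intrusion.",
        "Call Centre Operator tries to disconfirm intrusion by calling Householder.",
        "Call Centre Operator authenticates Householder's identity.",
        "Householder does not disconfirm.",
        "Call Centre Operator Calls out Guard.",
    ],
    exceptions={
        "Mistake": ExceptionCourse(
            branch_after=3,
            steps=["Householder admits Mistake.", "Call Centre Operator logs event."],
        )
    },
)

for step in unpack(handle_intrusion, "Mistake"):
    print("-", step)
```

Tidying the wording once, in the `normal` and `steps` lists, then benefits every test scenario generated from them.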

Selecting Environmental Conditions for Test Cases

The final step in generation is to select which environmental conditions to test for each class of Test Cases, to create a set of fully defined individual Test Cases. For example, we might be concerned that the household alarm would not Protect the House correctly in stormy conditions, when wind, rain, hail, lightning, and rapid changes in temperature and humidity might either trigger false alarms, or might prevent real intrusions from being detected or reported. We might, therefore, create additional Test Cases in the Protect the House class

- 20. Protect House in Electrical Storm

- 21. Protect House in Force 8 Wind

- 22. Protect House in Heavy Rain

It might be considered necessary to create Test Cases for combinations of such conditions, including all of them at once.
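To see how quickly such combinations multiply, here is a small sketch using Python's standard `itertools.combinations`. The function name and the `max_together` parameter are assumptions introduced for the illustration.

```python
from itertools import combinations


def environmental_test_cases(test_class, conditions, max_together=None):
    """Name one Test Case per combination of up to max_together conditions."""
    limit = len(conditions) if max_together is None else max_together
    cases = []
    for size in range(1, limit + 1):
        for combo in combinations(conditions, size):
            cases.append(f"{test_class} in " + " and ".join(combo))
    return cases


conditions = ["Electrical Storm", "Force 8 Wind", "Heavy Rain"]
singles = environmental_test_cases("Protect House", conditions, max_together=1)
everything = environmental_test_cases("Protect House", conditions)
print(len(singles), "single-condition cases;", len(everything), "with all combinations")
```

With n conditions there are 2^n − 1 non-empty combinations, so three conditions give seven Test Cases for a single class; capping the number of conditions applied together is one way to keep the set affordable.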

Some environmental conditions can be set up quite readily, but simulating a full storm around a test house might be expensive, while the alternative of waiting for a real one would be unacceptable in most commercial situations.

Specialised test facilities such as wind generators (see photograph, Figure 14.5), temperature and humidity chambers, and ‘shake, rattle, and roll’ vibration generators are available in most developed nations, but they typically need to be booked or ordered far in advance.

FIGURE 14.5 The Proteus cross-wind blower. This machine simulates strong side-winds for testing jet engines. Courtesy of Rolls-Royce PLC

The effort required for thorough environmental testing provides a strong argument for writing scenarios that cover the whole life cycle (itself a scenario) from development through to disposal, and basing tests on them early in development. Well thought out scenarios make it possible to identify and plan for ‘long poles in the tent’ such as having to procure the use of test facilities, perhaps years before they will be needed.

COMPARISONS

It is not obvious what scenario-based testing should be compared with: the unhappy truth is that without the visibility of a system's intended uses that scenarios naturally give, test design is always somewhat in the dark, at least for end-to-end tests of whole systems. The problem is much less acute for white-box testing, where knowledge of the structure of the system is often (but definitely not always) enough to carry out a range of tests.

Testers' Domain Knowledge

In the absence of scenarios, testers have always used their personal knowledge of the domain to try to create realistic end-to-end black-box tests: in other words, they have been forced to invent plausible scenarios.

To give a somewhat extreme example, when consulting with a telecommunications company on the testing of a new accounting system, I made the uncomfortable discovery that while they had identified approximately 1500 test cases, there were no available requirements. The system was critical to their mission: if they didn't charge for phone calls, they didn't get paid. The company had bought and customised a commercial-off-the-shelf (COTS) package, and ‘it did what it did’ (Haim Kilov's phrase). The test team were given the job of showing that the software was all right, and they settled down to identify ways in which the thing might possibly be used. Since none of them seemed at all surprised at this part of their job, either this was not the first time they had dealt with this kind of problem, or this was what usually happened on projects: a Buy-Design-Guess the Test Cases cycle.

This cycle might work well enough on small projects. After all, if you buy a set of COTS office automation software products, and develop a few small fragments of Visual Basic code to integrate a few office functions, you can to a large extent rely on the extensive testing done on the COTS products, and you only have to test the effect of the ‘gluing’ code you have added. This is attractive because specifying something that already exists feels like wasted effort. Unfortunately, the lazy approach isn't safe, because it is always possible that while things work locally, the system breaks down on end-to-end scenarios. So a scenario-based testing approach looks suitable for COTS integrations. The whole question of how to specify COTS integrations is, not surprisingly, becoming a hot topic for requirements researchers (see Chapter 25, The Future of Scenarios).

Operational Scenarios

However, Use Cases are not the only basis for Scenario-based testing. Traditional Operational Scenarios, such as those listed in military/aerospace Concept of Operations (ConOps) documents, also offer a workable approach. These are straightforward textual scenarios, sometimes classified into a hierarchy, for example

Plainly, these can be very useful for constructing end-to-end tests. Where they might not be as good as Use Cases for guiding test design is in their coverage of exceptions—which may be quite patchy.

User Stories

We have already mentioned the generation of Test Cases from User Stories, and it forms a central plank in agile methods (see Chapter 13, User Stories in Agile Software Development). Use Case and agile approaches share a strong emphasis on the importance of stakeholders, of story, and of verification (e.g. Cockburn 2001, Beck 2000). Agile methods are intended for software systems, though some of the practices—such as getting stakeholders to tell stories, and writing test cases early—are quite beneficial and could be applied to other types of system. Agile development seems to be most popular and is arguably most suitable for object-oriented, web-based and business software, where short development times and therefore repeated releases are favoured.

KEYWORDS

Use Case

Test Case

Test Case Class

Test Step

Test Script

Test Scenario

Black-Box Test

White-Box Test

REFERENCES

Alexander, I., Requirements and Testing: Two Sides of the Same Coin, Telelogic Innovate, September 2002. Available from http://easyweb.easynet.co.uk/~iany/consultancy/reqts_and_testing/reqts_and_testing.htm

Alexander, I. and Stevens, R., Writing Better Requirements, Addison-Wesley, 2002.

Ahlowalia, N., Testing from use cases using path analysis technique, International Conference on Software Testing Analysis & Review (STAR), Anaheim, CA, November 4–8, 2002. Available from http://www.StickyMinds.com

Amyot, D. and Mussbacher, G., URN: Towards a New Standard for the Visual Description of Requirements, 2002, http://www.usecasemaps.org/pub/abstracts.shtml/sam02-URN.pdf

Beck, K., Extreme Programming Explained, Embrace Change, Addison-Wesley, 2000.

Binder, R., TOOTSIE, A High-end OO Development Environment, 1995, http://www.rbsc.com/pages/tootsie.html (revised 2001).

Cockburn, A., Writing Effective Use Cases, Addison-Wesley, 2001.

Frappier, M. and Habrias, H., Software Specification Methods, An Overview Using a Case Study, Springer, 2001.

Gelperin, D., Precise Use Cases, 2003, http://www.livespecs.com/downloads/LiveSpecs06V01_PreciseUseCases.pdf

Heumann, J., Generating Test Cases From Use Cases, The Rational Edge, June 2001, www.therationaledge.com

Kruchten, P., The Rational Unified Process, An Introduction, Addison-Wesley, 2000.

McGregor, J.D. and Major, M.L., Selecting Test Cases Based on User Priorities, SD Magazine, March 2000, http://www.sdmagazine.com/documents/s=815/sdm0003c/

Robertson, S. and Robertson, J., Mastering the Requirements Process, Addison-Wesley, 1999.

Scenario Plus, website (free Use Case templates, and a free Use/Misuse Case toolkit with Test Case generator for DOORS), 2003, http://www.scenarioplus.org.uk

UBET, website (free Message Sequence Chart editor / Test Case generator), 2003, http://cm.bell-labs.com/cm/cs/what/ubet/index.html

RECOMMENDED READING

Naresh Ahlowalia's paper at STAR 2002 is a practical approach from a software point of view to creating tests from use cases. There is an alarming subheading ‘Determine all possible paths’ but this is qualified, and the paper is one of the few published accounts of use-case-based testing.

Jim Heumann's article in The Rational Edge is a readable account from a leading software company.

1 Automatic Teller Machine

2 Statistical testing of processes that necessarily include non-deterministic elements, as with traffic flows on a network, is possible but is inherently more difficult.

3 James Rumbaugh's OMT was a forerunner of UML. Rumbaugh was one of the ‘three amigos’ employed by Rational Software Corporation to develop their methods into a unified approach. Presumably, Binder would hold a similar view of UML Use Cases, though a full UML analysis would answer his objections.