CHAPTER 7

NEGATIVE SCENARIOS AND MISUSE CASES

Ian Alexander

Scenario Plus, London, UK

A Misuse Case is the negative form of a Use Case. It documents a negative scenario. Its Actor is a hostile agent, typically but not always a human with hostile intent towards the system under design. The relationships between Use and Misuse Cases document threats and their mitigations. Use/Misuse Case diagrams are therefore valuable in security threat and safety hazard analyses. Mitigation often involves new subsystems, so Misuse Cases also have a role in system design.

Misuse Cases can help elicit requirements for systems, especially where exception cases might otherwise be missed. Their immediate applications are to security and safety requirements, in that order, but they can also be useful for other types of requirement, for identifying missing functions, and for generating test cases.

APPLICABILITY

- Systems in which security is a major concern, for example, distributed and web-based systems, financial systems, government systems.

- Safety-related systems using new technologies, in which knowledge of hazards in earlier systems may be an insufficient guide to hazards introduced by new system functions and their interactions, for example, control systems in automotive, railway, and aerospace.

- Systems in which stakeholders may hold conflicting viewpoints that would threaten the project if not addressed, for example, multi-national government projects.

- More generally, any system in which threats and exceptions are not fully defined.

ROLES IN THE LIFE CYCLE


KEY FEATURES

Negative Actors are identified. The security and other threats they pose to the system are described as goals that they desire, drawn as Misuse Case bubbles on a Use Case diagram, and if need be analysed as Negative Scenarios. The threats are then mitigated by suitable Use Cases, often representing new subsystem functions.

STRENGTHS

The Negative Scenario is essentially a conceptual tool to help people apply their experience, intelligence, and social skill to identify the following:

- threats and mitigations

- security requirements

- safety requirements

- exceptions that could cause system failure

- test cases.

The strength of this kind of approach lies in combining a well-structured, system-facing starting point with human-facing open-endedness. This makes it almost uniquely suited to probing the new, unknown, and unexpected.

WEAKNESSES

The approach is essentially human and qualitative. As such, it cannot guarantee discovering all threats. It does not assure correct prioritisation of threats, though it can be combined with any suitable prioritisation mechanism, for example, card sorting, laddering, or voting. Nor does it guarantee that the response to threats will be effective in mitigating them, but then no method does that for new threats.

It does not replace quantitative methods used in Safety and Reliability analysis (e.g. Bayesian computation of risk), or Monte Carlo simulation of outcomes, though it may be a useful precursor to any of these. It helps to identify the threats, hazards, and candidate mitigations that these other techniques can explore.

It tends to focus attention on one threat at a time. However, major failures of well-engineered systems, especially when they are safety-critical, tend to be the result of multiple faults and errors. Misuse Cases help identify the individual causes but do not offer a calculus for combining them—though nor does any other technique in common use.

TECHNIQUE

| Nel mezzo del cammin di nostra vita mi ritrovai per una selva oscura ché la diritta via era smarrita. | In the middle of my life's journey I found myself in a dark forest, as I had lost the straight path… Dante Alighieri, Inferno, Canto I, 1-3. |

FIGURE 7.1 Misuse case

Dante begins his Inferno by telling his readers about a threatening situation he once found himself in, ‘in a dark forest’. The rest of the Canto expands on that dramatic theme—we know immediately that the story will be dark, the journey difficult and dangerous. The rest of this book looks at the value of telling stories—scenarios and use cases—to define what systems ought to do when things go well. This chapter looks at the other side, when things threaten to go wrong.

Guttorm Sindre and Andreas Opdahl extended the expressive power of Use Case modelling (Jacobson et al. 1992) by introducing the Misuse Case to document negative scenarios, Use Cases with hostile intent (Sindre and Opdahl 2000, 2001). They inverted the colours¹ of the Use Case to indicate the intentions of an opponent (Figure 7.1).

A Misuse Case documents conscious and active opposition in the form of a goal that a hostile agent intends to achieve, but which the organisation perceives as detrimental to some of its goals (Alexander 2003).

The first step in Use / Misuse Case analysis is to make a first-cut Use Case model (Kulak and Guiney 2000, Cockburn 2001, Gottesdiener 2002). This identifies the essential goals of the system, and documents what the system is to do in normal circumstances.

The next two steps can be seen as an adjunct to the hunt for Exceptions and the definition of Exception-handling scenarios (or separate Use Cases). These are to identify hostile agent roles, and the Misuse Case goals that such people may desire. These may be elicited in either order, or simultaneously.

Eliciting Hostile Roles

Hostile roles might want either

- to harm the system, its stakeholders, or their resources intentionally, or

- to achieve goals that are incompatible with the system's goals.

For example, if your business is fur farming, an animal rights protester might wish to damage you, your property, or your farms directly. On the other hand, a rival farmer might wish to corner the fur market, against your interests but not necessarily with any implication of criminality or malice.

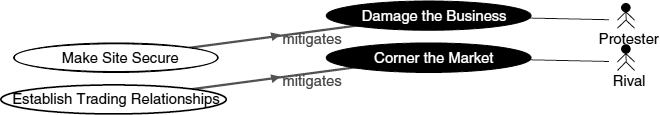

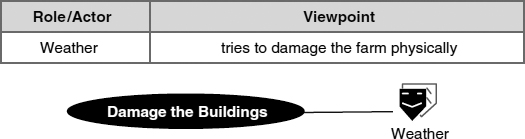

Hostile roles are documented as UML ‘actors’, with a role name and a brief statement of their Viewpoint, as in Figures 7.2 and 7.3.

FIGURE 7.2 Making a table of roles and viewpoints

FIGURE 7.3 Hostile roles documented as actors in Scenario Plus

Roles can readily be elicited in a workshop setting. The simplest way is to use a tabular list like this one on a flipchart (Figure 7.2). Alternatively, you can display and edit a table in a general-purpose tool such as a spreadsheet, or in a special-purpose tool like Scenario Plus (Scenario Plus 2003), projected onto a screen (Figure 7.3).

Eliciting Misuse Cases

A workshop is also a good place to ‘brainstorm’ a list of Misuse Cases. The facilitator can gather these straight onto a Use Case context diagram, sketched by hand or drawn with a tool, or simply list them on a flipchart. At this stage, the Misuse Cases consist simply of the names of their (hostile) goals. Later, it may be helpful to document some of their Negative Scenarios in at least outline detail. As with Use Cases, there is a helpful ambiguity between Misuse Case as goal-in-a-bubble and as scenario: sometimes a bit of detail is helpful; sometimes it really isn't needed.

The Misuse Cases can then be drawn out neatly on a Use Case context diagram—with, for instance, cases grouped under their roles.

At this point it makes sense to indicate which Use Cases are actually affected by the newly discovered Misuse Cases. I have suggested two new relationships, threatens and mitigates (Alexander 2002b, 2002c), to describe the effect of a Misuse Case on a Use Case, and vice versa.

It might also be worth following Sindre and Opdahl (2000, 2001) and defining prevents as a relationship, as prevention is important in safety-related domains such as air traffic control. However, they seem to have intended it to mean ‘mitigates’, as they suggest that the use case ‘Enforce password regime’ prevents a Crook from ‘obtaining (a) password’, which it doesn't: it just makes doing so more difficult. So, being a bit more careful, prevents should only be used when a use case goal actually makes a misuse case goal impossible to achieve, that is, certain to fail. They also use detects, as when a monitoring use case contributes to mitigating a misuse case by noticing when it is occurring.

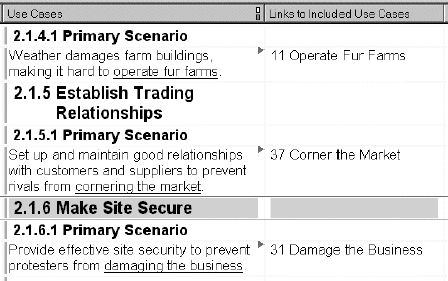

FIGURE 7.4 Documenting threats with use and misuse cases

If you are being really careful, you might want to distinguish between two shades of meaning of mitigates: makes less serious, and makes less likely. For example, making the workers in your gunpowder factory use copper instead of steel shovels prevents a spark from their shovels setting off an explosion, which makes explosion less likely. Conversely, ensuring that only one barrel of gunpowder is in the factory at a time makes any explosion less serious if it happens. If you are preparing a safety case, such distinctions are important.

Formally, these relationships are UML stereotypes that can lawfully be drawn on a Use Case diagram; in practice, they show visually which goals are threatened, and what might be done to mitigate the threats. With a tool like Scenario Plus, it is sufficient to indicate the logical links between cases, and the diagram will then show the direction and nature of the relationships (Figure 7.4). If drawn by hand, coloured arrows (I use black for threatens, green for mitigates) can be sketched in on whiteboard or flipchart.
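Outside a diagramming tool, the same information can be held as a small typed graph. The following is a minimal Python sketch, not a description of how Scenario Plus stores its models: the class and method names are hypothetical, and ‘Log On’ is an assumed name for the use case threatened by the password misuse case discussed above. It enforces the direction of each relationship (only misuse cases threaten; only use cases mitigate, prevent, or detect) and lists threats that nothing yet counters.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    USE = "use case"
    MISUSE = "misuse case"


class Rel(Enum):
    THREATENS = "threatens"   # misuse case -> use case
    MITIGATES = "mitigates"   # use case -> misuse case: less likely or less serious
    PREVENTS = "prevents"     # use case -> misuse case: misuse goal certain to fail
    DETECTS = "detects"       # use case -> misuse case: noticed while occurring


@dataclass
class UseMisuseModel:
    """Hypothetical in-memory model of a Use/Misuse Case diagram."""
    kinds: dict = field(default_factory=dict)   # case name -> Kind
    links: list = field(default_factory=list)   # (source, Rel, target) triples

    def add_case(self, name, kind):
        self.kinds[name] = kind

    def link(self, source, rel, target):
        # Threats run from misuse to use cases; every other relationship runs back.
        if rel is Rel.THREATENS:
            expected = (Kind.MISUSE, Kind.USE)
        else:
            expected = (Kind.USE, Kind.MISUSE)
        if (self.kinds[source], self.kinds[target]) != expected:
            raise ValueError(f"{source!r} cannot {rel.value} {target!r}")
        self.links.append((source, rel, target))

    def unmitigated_threats(self):
        """Return (misuse case, use case) threats that no use case counters."""
        countered = {t for _, r, t in self.links if r is not Rel.THREATENS}
        return [(s, t) for s, r, t in self.links
                if r is Rel.THREATENS and s not in countered]


model = UseMisuseModel()
model.add_case("Log On", Kind.USE)                      # assumed name
model.add_case("Enforce Password Regime", Kind.USE)
model.add_case("Obtain Password", Kind.MISUSE)
model.link("Obtain Password", Rel.THREATENS, "Log On")
print(model.unmitigated_threats())   # [('Obtain Password', 'Log On')]
model.link("Enforce Password Regime", Rel.MITIGATES, "Obtain Password")
print(model.unmitigated_threats())   # []
```

The sketch treats any non-threat link as countering a threat; whether a mere ‘mitigates’ should leave a residual risk on the list is a judgement for the analyst, and the kind of distinction a safety case would need to record.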

Eliciting Exception Scenarios, Requirements, and Further Use Cases

At this point, the workshop can start to ask what to do to neutralise the Misuse Cases. From this point of view, the Misuse Cases effectively describe (and help discover) Exception Events that the hostile agents possibly intend to bring about. The project must decide if indeed the Exception Events matter enough to be worth dealing with: like all requirements, when discovered they are only candidates; prioritisation must come later.

Not everyone likes to call undesired events ‘Exceptions’. A workshop participant once told me that it was too confrontational a term; he preferred something like ‘unexpected situation’ or ‘unplanned event’. It doesn't matter what you choose to call these things: the world is full of possibilities that your system—including you—will not like. You have the choice of discovering them in advance and planning for them—or not.

Logically you could try to define an elicitation method based on Misuse Cases: you would create a named Exception (in the threatened Use Case) for each threat, for example

You would then try to elicit a procedure for handling each exception. Sometimes this is the right approach—misuse cases can identify important exceptions. But since an ounce of prevention is worth a pound of cure, the better approach is first to see if requirements can be found to prevent the identified threats from materialising—many exceptions are irrecoverable.
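The mechanical part of that method, naming one Exception per threat while setting aside threats already covered by a preventing requirement, can be sketched as below. This is a minimal Python illustration under stated assumptions: the ‘Exception: …’ naming convention is hypothetical, and ‘Detect Intrusion’ is an assumed name for the detection use case in the Worked Example later in this chapter. Eliciting the handling procedure itself remains a human task.

```python
from collections import defaultdict


def derive_exceptions(threats, prevented=frozenset()):
    """Name one Exception in each threatened Use Case per Misuse Case.

    threats:   iterable of (misuse_case, threatened_use_case) pairs
    prevented: misuse cases already made impossible by a preventing
               requirement, so no exception-handling procedure is needed
    """
    exceptions = defaultdict(list)
    for misuse, use_case in threats:
        if misuse in prevented:
            continue  # prevention first: nothing left to handle
        exceptions[use_case].append(f"Exception: {misuse}")
    return dict(exceptions)


threats = [
    ("Impersonate Engineer", "Detect Intrusion"),   # "Detect Intrusion" is assumed
    ("Disable the Alarm", "Detect Intrusion"),
    ("Kidnap the Guard", "Handle Intrusion"),
]
for use_case, names in derive_exceptions(threats).items():
    print(use_case, names)
```

Each generated name is only a placeholder: the workshop still has to elicit a procedure for handling it, or decide that it does not matter enough to handle.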

There are essentially two options for documenting how you are going to deal with Exceptions:

- Single actions can be documented directly in the threatened Use Case as exception-handling or exception-preventing requirements. Such requirements often do not directly call for system functions, but specify desired qualities of the system, such as its safety, reliability, or security. These ‘non-functional requirements’ can be justified by reference to the Misuse Case that called them into being. In a requirements tool, such a reference is simply a traceability link. A non-functional requirement for security, for instance, may be implemented in the design by adding subsystems such as alarms, cameras, and locks, or in the socio-technical system that contains the designed equipment by appropriate security procedures and training to achieve the specified quality. These components (assets and people) of course perform various functions within the socio-technical system; those functions respond to the non-functional requirements discovered by Misuse Case and other forms of analysis.

- More complex situations can be described and gradually analysed by identifying exception-handling Use Cases that could possibly mitigate the threats posed by the Misuse Cases. The mitigating actions can logically be described in the Primary (not Exception) Scenarios of these Use Cases, and mitigates relationships can be drawn to the Misuse Cases that are being neutralised. To complete the picture, ‘has exception’ relationships can be drawn from the threatened (‘parent’) Use Cases to the exception-handling/threat-mitigating Use Cases (Figure 7.5).

Logically, every Use Case created to mitigate a threat ought to be considered an exception case: it wouldn't exist but for the undesired situation or event that it is meant to handle. However, we inevitably become familiar with old threats. With time, it seems entirely natural that we should have to lock our cars or install alarms. As these things become accepted as normal behaviour, the ‘has exception’ relationship tends to give way to an everyday ‘includes’. Does ‘Drive the Car’ have ‘Lock the Car’ as an exception or simply as an included case? Once the need for locking is accepted as a requirement, it doesn't matter much.

FIGURE 7.5 Documenting exceptions

FIGURE 7.6 Use case text implicitly specifying links to misuse cases

Driving Design

The resulting new requirements (or Use Cases) may well call for new subsystems. These may have to be designed from the ground up, or they may already be available as Commercial Off-The-Shelf (COTS) products, only requiring integration with other design elements. The nature of that integration can itself be defined in subsystem-level Use Cases, in which the different subsystems play most of the roles: hence, the Use Case interactions define the functions required across subsystem interfaces.

For example, security requirements may demand that new authentication, encryption, locking, surveillance, alarm, logging, and other mechanisms be incorporated into the design. Knowledge that these mechanisms exist may in turn cause would-be intruders to devise new and more complex attacks: the arms race between thieves and locksmiths is never-ending.

Automatic Analysis of Use/Misuse Case Relationships

With tool support, links from Use Cases to related Use or Misuse Cases can be created automatically by analysing the case texts, as shown in the Scenario Plus illustrations (Figures 7.6 and 7.7), ensuring that text and diagrams stay in sync.

When Use and Misuse Cases interplay, there are four combinations to consider, namely relationships to and from each kind of case (Table 7.1):

This table can be interpreted as a four-part rule governing the automatic creation of relationship types according to the sources and targets of relationships between Use and Misuse Cases.
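Expressed as code, such a rule is just a lookup from the kinds of the source and target cases to a default relationship type, which an explicit statement in the text can override. In this minimal Python sketch, the two mixed entries follow the text (a link from a Use Case to a Misuse Case is labelled mitigates; one from a Misuse Case to a Use Case, threatens); the two same-kind entries, ‘includes’, are an assumption for illustration, and the function name is hypothetical.

```python
# Default relationship type by (source kind, target kind).
# The two "includes" entries are assumed for illustration.
DEFAULT_RELATIONSHIP = {
    ("use", "misuse"): "mitigates",
    ("misuse", "use"): "threatens",
    ("use", "use"): "includes",
    ("misuse", "misuse"): "includes",
}


def relationship_type(source_kind, target_kind, explicit=None):
    """Return the type for a link found by text analysis.

    An explicitly written type such as 'conflicts with' or 'aggravates'
    overrides the default, since the rule cannot infer those.
    """
    if explicit is not None:
        return explicit
    return DEFAULT_RELATIONSHIP[(source_kind, target_kind)]


print(relationship_type("use", "misuse"))                 # mitigates
print(relationship_type("use", "misuse", "aggravates"))   # aggravates
```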

FIGURE 7.7 Diagram drawn automatically from the analysed use case model

TABLE 7.1 Rule governing creation of relationships between use and misuse cases

For example, a link from a Use Case to a Misuse Case can be assumed to be a mitigation, and be labelled mitigates. So, when a tool like the Scenario Plus analyser comes across a piece of scenario text like “… damaging the business.” in a Use Case, it searches for a match to the underlined text (‘damaging the business’) in the names of the Use and Misuse Cases. In this instance, it finds a Misuse Case named ‘Damage the Business’ (the matching mechanism tolerates slight inexactitude with names, which allows scenarios to be written in a more fluid style) and creates a link to it. The type of that link, mitigates, is determined from the rule defined in the table.
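Tolerant name matching of this kind can be approximated with Python's standard difflib module. The sketch below is an illustration only, not the Scenario Plus algorithm, which is not described here: it assumes that references in scenario text are marked with underscores (standing in for underlining), the function name is hypothetical, and the similarity cutoff of 0.8 is an arbitrary choice.

```python
import difflib
import re

# Hypothetical markup: a reference is wrapped in underscores, e.g. _damaging the business_.
REFERENCE = re.compile(r"_([^_]+)_")


def find_references(text, case_names, cutoff=0.8):
    """Return the case names that the marked phrases in `text` refer to.

    Matching is case-insensitive and tolerates small differences in wording,
    such as 'damaging the business' versus 'Damage the Business'.
    """
    by_lower = {name.lower(): name for name in case_names}
    found = []
    for phrase in REFERENCE.findall(text):
        best = difflib.get_close_matches(phrase.lower(), by_lower, n=1, cutoff=cutoff)
        if best:
            found.append(by_lower[best[0]])
    return found


# Hypothetical scenario text from a use case.
text = "Guards patrol the farm, preventing protesters from _damaging the business_."
print(find_references(text, ["Damage the Business", "Burgle the House"]))
# ['Damage the Business']
```

Too low a cutoff risks linking a phrase to the wrong case, so in practice an analyst would want to review doubtful matches rather than trust them blindly.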

The relationships created by the analyser are usually the correct ones, as the relationships shown in the rule are the basic ones for Misuse Case analysis. The rule breaks down in the rarer cases in which the relation between two Use Cases is in fact ‘conflicts with’, or in which a Use Case actually ‘aggravates’ a Misuse Case instead of mitigating it. These relationships are important in Trade-off and Conflict Analyses (see below); the Scenario Plus analyser requires them to be specified explicitly in the Use Case text.

Design Trade-off and Conflict Analyses

The Misuse Case approach is also suitable for reasoning in design space for trade-off and conflict analyses (Alexander 2002a). Conflicts can arise in many ways, but a design conflict is essentially the product of uncertainty about how best to meet requirements, combined sometimes with incompatibilities between some design elements (shown as ‘conflicts with’ relationships), and sometimes with negative effects (shown as ‘aggravates’ relationships) on problems identified in Misuse Cases.

An interesting example from my experience of a design trade-off analysis, which illustrates this approach, is described in Chapter 17, Use Cases in Railway Systems.

Generating Acceptance Test Cases

Since Misuse Cases (and responses to them) often form strong stories at user level, one technique for generating user-level acceptance test cases is to look through the Misuse Cases and write at least one test case to handle each threat. Of course, if a systematic list of prioritised Exception Scenarios has been prepared for each Use Case (possibly elicited partly with Misuse Cases) then that list subsumes the Misuse Case list.
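A minimal Python sketch of that technique drafts one acceptance test per threat and reports any misuse case that no test yet covers. The ‘AT-nnn’ numbering scheme and the wording of the objective are hypothetical; the misuse and use case names come from the Worked Example later in this chapter. The actual test steps would still have to be written from each negative scenario.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceTest:
    test_id: str
    misuse_case: str
    threatened_use_case: str
    objective: str


def acceptance_tests(threats):
    """Draft one acceptance test per (misuse case, threatened use case) pair."""
    return [
        AcceptanceTest(
            test_id=f"AT-{number:03d}",   # hypothetical numbering scheme
            misuse_case=misuse,
            threatened_use_case=use_case,
            objective=f"Enact '{misuse}' and confirm that '{use_case}' still succeeds",
        )
        for number, (misuse, use_case) in enumerate(threats, start=1)
    ]


def untested_misuse_cases(misuse_cases, tests):
    """Misuse cases that no drafted test yet exercises."""
    covered = {test.misuse_case for test in tests}
    return sorted(set(misuse_cases) - covered)


tests = acceptance_tests([
    ("Kidnap the Guard", "Handle Intrusion"),
    ("Set off Alarm Repeatedly", "Handle Intrusion"),
])
print(tests[0].test_id, tests[0].objective)
missing = untested_misuse_cases(
    ["Kidnap the Guard", "Set off Alarm Repeatedly", "Burgle Very Quickly"], tests)
print(missing)   # ['Burgle Very Quickly']
```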

Metaphorical Roles

Taken literally, the Misuse Case approach is ideal for exploring security threats, and perhaps also safety hazards (see Comparisons below).

Other challenges that might help elicit different kinds of non-functional requirement can be identified by deliberately treating inanimate things as hostile agents. In other words, we can make use of our ability to reason about people's intentions to help identify requirements by creating metaphors of intent (Potts 2001).

For example, we could say that the weather “intends” to damage the fur farming business (Figure 7.8):

FIGURE 7.8 Metaphorical hostile role

This example leads to requirements for weather resistance and lifetime of buildings; the same approach can yield reliability, maintainability, and other “-ility” (quality) requirements.

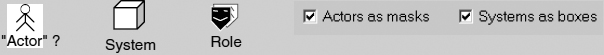

Simpler Diagrams with UML Stereotypes

Some practitioners only ever use UML's Use Case and other diagrams with exactly the symbols that come out of the shrink-wrapped box. This is fine, but needlessly restrictive. Tool designers and other UML users are perfectly allowed to define stereotypes such as new relationships and roles, and to define icons for them (e.g. Fowler 1999). The Misuse Case bubble with its inverted colours, and the Role icon that makes clear it is a generic thing rather than a particular human being (for instance), are examples of stereotype icons. They should be used when they make models easier to understand. Non-software people are quite likely to be misled by terms such as ‘actor’ and the use of a stick-man icon to denote a role, let alone a non-human role. It is possible and fortunately quite permissible to do better.

Experienced practitioners may argue that they already know about quality requirements, and have effective templates to discover them. However, novel systems are becoming increasingly complex, and it may well be worth applying a little creativity to help ensure that important requirements are not missed: quality requirements are very difficult to inject into a system after it has been designed.

Less experienced practitioners may instead welcome a simple technique that gives them an independent way of finding and filling gaps in their specifications.

WORKED EXAMPLE

Suppose that we are gathering requirements on behalf of a company that sells a burglar alarm service, including installation, monitoring, and maintenance.

The basic operational objective is to reduce the risk of burglary in households protected by the company.

The core of the design approach to achieve this is to install an alarm, consisting of a set of sensors connected to a control box, which in turn is connected to some actuators whose task is to notify the Control Centre when a possible intrusion is detected, and also when faults occur in the alarm itself.

Notice, incidentally, that Use and Misuse Cases often quite naturally operate in the world of design (the solution space) rather than in some academically pure ‘problem space’ free of all design considerations.

The Control Centre analyses messages received from household alarms, and follows a procedure to determine whether to send a guard.

It is at once clear in this case that there is one kind of hostile agent for which the system is in fact designed—the burglar. Indeed, you could argue (rather theoretically) that the alarm itself, indeed the entire security system, is a response to the discovery that burglars threaten unprotected properties. The top-level misuse case is thus the obvious ‘Burgle the House’, threatening the fanciful (excessively high-level) use case ‘Live in the House’, and the response to that is the more realistic ‘Protect the House’.

The analysis becomes more interesting, however, when we consider the next step in the arms race between the burglar and the alarm company. The alarm company's strategy is to detect intrusion, and to handle the detected intrusions. If the burglar can defeat either of these two steps, the property is unprotected.

Defeating Detection

How can the burglar hope to escape detection? This question is a specialised form of the more general search for exceptions: ‘What can go wrong here?’ It is specialised in two ways: it is specific to the problem, and there is a hostile intelligence that we must assume is actively searching for weaknesses in our system.

There are several possible answers, including strategies such as stealth, deception, and force (not to mention the application of inside knowledge). A stealthy strategy is to burgle a house when it is seen to be occupied (references to threatened Use Cases are underlined):

Burglar selects a house where activity is visible upstairs, and quietly breaks in while the alarm is off, so it does not detect the intrusion.

A deceptive strategy is to impersonate a maintenance engineer:

Burglar dresses as a Maintenance Engineer and arrives at the Protected House with forged identification.

Burglar convinces the Householder that he is a legitimate Maintenance Engineer and gains access to the house, thus defeating the alarm's ability to detect intrusion.

Burglar says he is testing the alarm and not to worry if it rings, he's just getting some spares from his van. He then steals some valuables and leaves.

Householder is convinced, and waits some time before contacting the Control Centre.

A forceful strategy is to disable the household alarm:

Burglar attacks the Alarm system to prevent it from detecting the intrusion.

This is a strikingly diverse set of strategies, and they suggest radically different kinds of candidate requirement, such as:

- The household alarm can be set up to protect unoccupied zones in the house. (mitigates Burgle Occupied House)

- Householders are instructed to check the identity cards of Maintenance Engineers. (mitigates Impersonate Engineer)

- Householders are instructed only to admit Maintenance Engineers by prior appointment. (mitigates Impersonate Engineer)

- The alarm notifies a possible intrusion when any sensor, actuator or power connection is lost. (mitigates Disable the Alarm)
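The last of these requirements implies that the alarm supervises its own connections. One way to do that, sketched minimally in Python below, is a heartbeat timeout per component. The component names, the 30-second timeout, and the assumption that every sensor, actuator, and the power supply reports a periodic heartbeat are all hypothetical, not part of the requirement as stated; the clock is injectable so the logic can be exercised without waiting.

```python
import time


class ConnectionSupervisor:
    """Flags a possible intrusion when any supervised connection goes quiet.

    Assumes each sensor, actuator and the power supply sends a periodic
    heartbeat; a component silent for longer than timeout_s counts as lost.
    """

    def __init__(self, components, timeout_s=30.0, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        start = clock()
        self.last_seen = {name: start for name in components}

    def heartbeat(self, component):
        self.last_seen[component] = self.clock()

    def lost_connections(self):
        now = self.clock()
        return sorted(name for name, seen in self.last_seen.items()
                      if now - seen > self.timeout_s)

    def possible_intrusion(self):
        # A lost connection is treated as a possible intrusion, not just a fault.
        return bool(self.lost_connections())


fake_time = [0.0]   # simulated clock, in seconds
supervisor = ConnectionSupervisor(
    ["door sensor", "siren", "mains power"], clock=lambda: fake_time[0])
fake_time[0] = 20.0
supervisor.heartbeat("door sensor")
supervisor.heartbeat("siren")
fake_time[0] = 40.0
print(supervisor.lost_connections())   # ['mains power']
```

Treating silence as a possible intrusion, rather than merely as a maintenance fault, is what turns the forceful strategy of disabling the alarm into just another way of setting it off.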

In general (and certainly on a more complex system than this), negative scenarios can help discover requirements that are both important and easy to miss.

Defeating the Handling of Intrusion

Similarly, there are many possible strategies for defeating the alarm company's intention to handle intrusion effectively.

A deceptive strategy is to set an alarm off repeatedly:

The burglar repeatedly sets off the alarm in the house he intends to burgle. The guard and the Control Centre decide that the alarm is faulty or is being set off by an inanimate agent, and ignore it, thus failing to handle the intrusion.

A forceful strategy is to kidnap the guard:

The burglar sets off the alarm without breaking in, and waits near the house for the guard to arrive. As soon as the guard gets out of his van, the burglar and his accomplice capture the guard, blindfold him, and tie him up, taking his radio and telephone. The accomplice drives the guard a few miles away in the guard's own van, and leaves him, still tied up. The burglar works undisturbed by any further attempt at handling the intrusion.

A strategy based on knowledge is to burgle quickly at a time when rapid response is unlikely:

The burglar knows that it takes 25 minutes for the guard to get through the traffic, so he burgles the house in the rush hour and is gone within a few minutes, long before the guard can handle the intrusion effectively.

Quite a different kind of strategy is to subvert the system. The Control Centre depends on human employees for much of its effectiveness. One strategy is:

The burglar and an associate conspire. The associate gets a job in the Control Centre and arranges to ignore or destroy alarm messages from the houses to be burgled, so the system fails to handle the intrusions.

If anything, these strategies are even more diverse than the threats to detection, and they lead to some quite complex security requirements on the human/procedural parts of the system, far removed from the obvious functionality of the electronics of the Control Centre (let alone of the household alarm, which is where people first start looking for security requirements). Indeed, one lesson is that systems never consist solely of engineered devices; another is that end-to-end scenarios are essential.

Here are some candidate requirements suggested by the misuse cases:

- Control Centre staff are vetted for criminal associations before starting employment. (mitigates Plant a Corrupt Employee)

- Messages to the Control Centre are logged securely. (mitigates Plant a Corrupt Employee)

- Control Centre logs are analysed regularly by a trusted employee. (mitigates Plant a Corrupt Employee)

- Patterns of repeated alarm activation are searched for continuously. (mitigates Set off Alarm Repeatedly)

- Identified patterns suggesting deception or fraud are reported immediately to the Control Centre Manager. (mitigates Set off Alarm Repeatedly)

- Patterns of burglar activity are analysed by both geographical area and time of day. (mitigates Set off Alarm Repeatedly)

- Identified patterns of burglar activity are reported daily to the Control Centre Manager. (mitigates Set off Alarm Repeatedly)

- Guards are pre-positioned at peak times close to zones of high burglar activity. (mitigates Set off Alarm Repeatedly and Burgle Very Quickly)

- The location of the guard is tracked continuously during call-out. (mitigates Kidnap the Guard)

- The Control Centre checks the status of the guard with an authentication challenge at 5-minute intervals during call-out. (mitigates Kidnap the Guard)
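The requirement that patterns of repeated alarm activation be searched for continuously could be met, in its simplest form, by counting activations per house within a sliding time window. The Python sketch below assumes that activations arrive in time order for each house; the class name, the house identifier, the threshold of three activations, and the one-hour window are all hypothetical.

```python
from collections import defaultdict, deque


class RepeatedActivationDetector:
    """Flags houses whose alarm goes off repeatedly within a time window.

    Assumes activation timestamps (in seconds) arrive in non-decreasing
    order for each house.
    """

    def __init__(self, threshold=3, window_s=3600.0):
        self.threshold = threshold
        self.window_s = window_s
        self.recent = defaultdict(deque)   # house id -> recent timestamps

    def record(self, house_id, timestamp):
        """Record one activation; return True if the pattern is suspicious."""
        times = self.recent[house_id]
        times.append(timestamp)
        # Drop activations that have fallen out of the window.
        while timestamp - times[0] > self.window_s:
            times.popleft()
        return len(times) >= self.threshold


detector = RepeatedActivationDetector()
for t in (0, 600, 1200):
    suspicious = detector.record("house-17", t)
print(suspicious)   # True
```

A flagged house is a reason to escalate to the Control Centre Manager, as the reporting requirement above asks, not a reason to ignore the alarm, which is exactly the reaction the deceptive strategy relies on.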

FIGURE 7.9 Misuse cases justifying requirements

As shown, all of these requirements trace directly (in a many-to-many pattern) to the misuse cases threatening the handling of intrusions (Figure 7.9). None of them, interestingly, traces directly to any step in ‘Handle Intrusion’ itself—in this sense they are non-obvious, and the misuse cases (or their equivalents in negative scenarios) are necessary to show why the requirements are needed.

The use case diagram (Figure 7.9) illustrates these misuse cases and their ‘threatens’ relationships to the two use cases discussed. All other relationships (such as ‘includes’ and ‘mitigates’) have been suppressed for clarity (Scenario Plus 2003). All the discussed misuse cases can be included in the top-level misuse case ‘Burgle the House’: they are effectively contributory strategies or mechanisms to help achieve that case's goal.
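Once the traces are recorded, the many-to-many pattern can be checked mechanically. This Python sketch encodes the requirements listed above (wording abbreviated) with the misuse cases they mitigate, inverts the traces to show which requirements each misuse case justifies, and lists any requirement that traces to nothing. The data layout and function names are illustrative assumptions, not the format of any particular requirements tool.

```python
from collections import defaultdict

# Requirement (abbreviated) -> misuse cases it mitigates, as listed above.
TRACES = {
    "Vet Control Centre staff for criminal associations": ["Plant a Corrupt Employee"],
    "Log messages to the Control Centre securely": ["Plant a Corrupt Employee"],
    "Have logs analysed regularly by a trusted employee": ["Plant a Corrupt Employee"],
    "Search continuously for repeated alarm activation": ["Set off Alarm Repeatedly"],
    "Report suspected deception or fraud immediately": ["Set off Alarm Repeatedly"],
    "Analyse burglar activity by area and time of day": ["Set off Alarm Repeatedly"],
    "Report burglar activity patterns daily": ["Set off Alarm Repeatedly"],
    "Pre-position guards at peak times": ["Set off Alarm Repeatedly", "Burgle Very Quickly"],
    "Track the guard's location during call-out": ["Kidnap the Guard"],
    "Challenge the guard every 5 minutes during call-out": ["Kidnap the Guard"],
}


def justifications(traces):
    """Invert requirement -> misuse case traces into misuse case -> requirements."""
    by_misuse = defaultdict(list)
    for requirement, misuse_cases in traces.items():
        for misuse in misuse_cases:
            by_misuse[misuse].append(requirement)
    return dict(by_misuse)


def unjustified(traces):
    """Requirements with no trace to any misuse case."""
    return [requirement for requirement, misuse_cases in traces.items()
            if not misuse_cases]


for misuse, requirements in justifications(TRACES).items():
    print(f"{misuse}: {len(requirements)} requirement(s)")
print(unjustified(TRACES))   # []
```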

COMPARISONS

Failure Cases

Related to Misuse Cases are Failure Cases—undesirable and possibly dangerous situations, events, or (perhaps) sequences of events that lead to system failure. Karen Allenby and Tim Kelly discuss ways of applying ‘Use Cases’ to model failure cases that threaten the safety of aircraft (Allenby and Kelly 2001). I'll call these ‘Failure Cases’ to avoid confusion.

Allenby and Kelly present a wonderful example that would certainly horrify the public if word got around. Jet engines not only power aircraft when they take off and fly; they are also used to decelerate aircraft once they have landed. This is achieved by a mechanism that basically consists of a pair of gates that shut off the backwards-directed flow of gas from the engine, and deflect the flow more or less forwards. The mechanism is called a thrust reverser, and it works splendidly—all passengers notice is that the plane slows down quickly, with slightly more noise than usual.

FIGURE 7.10 A failure case described as a misuse case

The situation would be very different if a thrust reverser were to be deployed in flight. That side of the plane would decelerate rapidly, while the other side continued at its previous speed: the plane would turn violently, and would either break up in the air or fly into the ground—as happened to a civil airliner over Thailand. Clearly ‘Deploy Thrust Reverser in Flight’ constitutes an important Failure Case (Figure 7.10), even if its negative agent (if anyone wants to identify it—Allenby and Kelly don't) is the uninteresting ‘Software Bug’ or ‘Mechanical Fault’.

What is more interesting is to think out mitigations. In the case of the accidentally deployed thrust reverser, the mitigating Use Case is ‘Restow Thrust Reverser’. This is remarkable as it is an existing function in commercial jet engine control software, whose only purpose is to correct a problem that should never occur if the engine, including its control software, is working correctly.

This particular threat / mitigation pair was thus discovered retrospectively by Failure Case analysis. Jet engines are complex machines, and are vulnerable to many less obvious functional hazards (those resulting from the way that the engine itself works). It will be interesting to see whether detailed Failure Case analysis discovers threats that engine makers are unaware of, and thus helps to predict failures before they occur. It has been well said that safety and security engineers always counter the previous generation of safety hazards and security threats², just as generals are said to plan how to win the last war that they fought. It would certainly be a major milestone in the progress of requirements engineering if it became a predictive discipline.

FMEA, FTA, Functional Hazard Analysis, HazOp, and so on

The work of Allenby and Kelly makes clear that Misuse Cases have a place in the armoury of safety engineering techniques. Perhaps there may be some value in taking an outsider's look at the relationship between misuse cases and safety engineering.

Traditionally, the list of hazards facing, say, a train or an aircraft was very well known and essentially static. New hazards were discovered from time to time, by investigating the wreckage after an accident, and literally piecing together the causes. The authorities then issued new standards to prevent the freshly understood hazard from causing any more accidents. For example, EN 50126 (1999) is the European Norm for Railway applications - The specification and demonstration of Reliability, Availability, Maintainability and Safety (RAMS), while Engineering Safety Management, better known as the Yellow Book (2000), defines best practice for the British rail industry. There are plenty of similar things in other industries.

Given a list of hazards that might be expected, safety engineers then applied a set of techniques to establish the safety of the system under design, documenting the resulting argument that the system was acceptably safe in a safety case presented to the industry regulator—such as Her Majesty's Railway Inspectorate (now subsumed in the Health & Safety Executive) or the relevant Aviation Authorities.

The techniques for establishing system safety traditionally included Failure Mode and Effects Analysis (FMEA, sometimes known as FMECA, with an extra ‘and Criticality’ for good measure) and Fault Tree Analysis (FTA). These are covered by international standards, among them IEC 60812 for FMEA and IEC 61025 for FTA. These techniques are quantitative and yield the best available predictions of reliability and safety. However, you can only build fault trees and calculate probabilities on the basis of the faults and failure modes that you have thought of. The fact that one crash after another has led to the discovery of new failure modes is sufficient proof of this.
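To make the point about quantitative analysis concrete, here is a minimal Python sketch of how a fault tree's top-event probability can be calculated. It assumes that the basic events are statistically independent and uses only AND and OR gates; the class names, event names, and probabilities are hypothetical illustrations, not taken from IEC 61025 or from any real system.

```python
from __future__ import annotations

from dataclasses import dataclass
from math import prod
from typing import Union


@dataclass
class Basic:
    """A basic event (leaf) with an assumed probability of occurring."""
    name: str
    p: float


@dataclass
class Gate:
    """An AND or OR gate combining child events."""
    kind: str  # "AND" or "OR"
    children: list[Node]


Node = Union[Basic, Gate]


def probability(node: Node) -> float:
    """Top-event probability, assuming all basic events are independent."""
    if isinstance(node, Basic):
        return node.p
    child_ps = [probability(child) for child in node.children]
    if node.kind == "AND":
        # Every child event must occur.
        return prod(child_ps)
    if node.kind == "OR":
        # At least one child occurs: 1 minus the chance that none occurs.
        return 1.0 - prod(1.0 - p for p in child_ps)
    raise ValueError(f"unknown gate kind: {node.kind!r}")


# Hypothetical tree: loss of control if both sensors fail OR the software faults.
tree = Gate("OR", [
    Gate("AND", [Basic("primary sensor fails", 1e-3),
                 Basic("backup sensor fails", 1e-3)]),
    Basic("controller software fault", 1e-5),
])

print(f"{probability(tree):.3e}")  # prints 1.100e-05
```

A failure mode that nobody thought of simply never appears as a leaf, so the calculation is silent about it; that is exactly the limitation described above.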

Misuse Case analysis is an entirely independent technique, so it at least offers the possibility of discovering ways in which systems might fail that those analyses have not anticipated. Allenby and Kelly elaborate the basic idea into a fully worked-out technique, Functional Hazard Analysis (FHA). This will certainly not predict every possible hazard, but it can look at the way a system (such as a jet engine) works and, from scenarios involving the known functions, ask what could go wrong. This is clearly the same concept as scenario-directed search for Exceptions (see the discussion of ‘Exceptions’ below). From there, safety engineers can analyse the expected effects and then work out mitigating actions.

Another established technique, Hazard and Operability analysis (HazOp), borrowed from chemical engineering (e.g. Gossman 1998), is traditionally applied to discover functional hazards. However, since it looks at functional requirements (or indeed at design elements such as valves and pumps), it does not benefit from the context around each activity, the story around each functional step, that a scenario inherently provides. Doing a HazOp is certainly better than not doing one at all, but scenario-based techniques such as Misuse Case and Functional Hazard Analysis offer a significantly different perspective that may sometimes catch hazards that HazOp would have missed.

What is new in Functional Hazard Analysis is that the stream of safety engineering activities is seen not merely as parallel to the systems engineering stream but as interacting with it. Traditional safety engineering was, to parody it gently, done almost in isolation: some domain knowledge was applied, some intensive analysis and mathematics was done, and out popped a safety case. Given the pace of change, for example the tremendous rise in complexity and in the role of software in control systems, this is no longer adequate. Hazards now arise not only from the world outside but also, crucially, from the design approach, and hence from the system and subsystem functions. The safety stream must therefore receive inputs not only at the start of the life cycle but also from each stage of system and subsystem specification.

No Crystal Ball

Misuse Case analysis cannot in itself predict complex failure modes in which multiple factors combine to cause an accident, or complex security threats devised by ingenious opponents to exploit a combination of apparently minor weaknesses.

Air traffic control authorities, for instance, are well aware of the kinds of hazards that might endanger air traffic, and they mitigate these carefully. The remaining risk comes from situations in which perhaps five or more causes combine to overcome all the carefully crafted safety nets built into modern air traffic systems. In a recent accident, some or all of the following events (in no particular order) reportedly³ occurred:

- A controller left his desk for a break during the night shift, so the single remaining controller was unsupervised and unable to obtain help if overloaded.

- The radar was imprecisely calibrated and reported aircraft positions wrongly.

- Maintenance work was under way on the main radar system to release new software, so the radar was in fallback mode on the standby system.

- The short-term conflict alert (STCA) system did not alert the controller (the standby radar did not have a visual conflict alert).

- Two planes flying in different directions were told to fly at the same height.

- The collision warning system (TCAS) onboard an aircraft was ignored.

- The controller noticed the impending collision and gave orders (‘Descend!’) that were contradicted by the collision warning system (‘Climb!’).

- The pilot of one of the planes was away from the cockpit (in the toilet).

- The co-pilot took ineffective action.

Nearly all of these hazards have been considered and mitigated by air traffic authorities. What nobody expected was that they might occur simultaneously: the a priori probability of that would have been considered unimaginably small. No current technique appears able to gaze that far into the crystal ball, and Misuse Cases do not claim to do so either. Nor do they claim to provide a calculus for detecting ways of combining numerous security weaknesses of, say, a software system into an ingenious but obscure attack route for hackers⁴.

Abuse Cases

John McDermott and Chris Fox invented the ‘Abuse Case’ as a way of eliciting security requirements (McDermott and Fox 1999), though the term may have been used informally by others before then. They say that an Abuse Case defines an interaction between an actor and a system that results in harm to the system, the stakeholders, or their resources. This is not quite the whole story: the interaction between the hostile actor and the system is not just something that happens, like bumping into a lamppost and getting a bruise as a result, but is intentional and often malicious, with the harm deliberately caused. In the same sort of contorted dictionary-speak style, we might say that a misuse case defines a hostile goal which, if achieved, would result in harm to the system, the stakeholders, or their resources.

However, there is no obvious need to restrict ‘Abuse Case’ to security requirements; and if we put McDermott and Fox's soft expressions such as ‘interaction’ and ‘results in harm’ (instead of ‘threatened attack’ and ‘intentionally harms’) down to academic caution, ‘Abuse Case’ is essentially a straight synonym for ‘Misuse Case’.

It is worth noting that the merging of misuse and failure cases suggests that safety, security, and incidentally also survivability requirements all follow a common pattern. Donald Firesmith argues that they, and the types of analysis used on them, should be unified under a heading such as ‘Defensibility’ (Firesmith 2004; see also Appendix 1.4, Non-Functional Requirements Template, in this volume). This brings out their essential similarities: systems are threatened intentionally by hostile agencies; threats can be analysed hierarchically and their probabilities combined mathematically; and mitigations can be identified and prioritised. A Google search revealed uses of ‘defensibility’ that include defence against lawsuits and wildfires, both of which seem entirely appropriate candidates for Misuse Case treatment.

Negative Scenarios

Misuse Cases, Abuse Cases, and Failure Cases are thus all more or less the same thing. They are forms of the centuries-old planning or game-playing technique of putting yourself in your enemy's shoes and thinking out what he would do if he did his worst: Negative Scenarios.

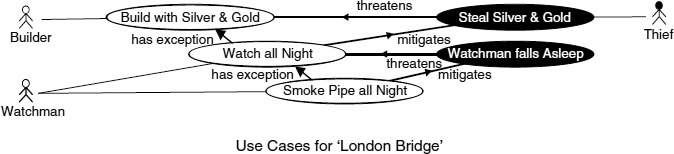

The traditional nursery rhyme ‘London Bridge is broken down’ has for centuries⁵ amused children and their parents in a game of ‘what if...’, exploring one Negative Scenario after another (Figure 7.11):

Build it up with silver and gold, my fair lady.

Silver and gold will be stolen away, my fair lady.

Set a man to watch all night, my fair lady.

Suppose the man should fall asleep, my fair lady.

Give him a pipe to smoke all night, my fair lady.

FIGURE 7.11 London Bridge misuse cases

The idea of the Negative Scenario is far from new: it is an essential concomitant of plan formation, namely looking for what rival intelligences, enemies, or prey would do if the plan were put into effect. Clearly this applies to any situation in which an agent who does not wish your plan to succeed may take actions specifically designed to defeat it.

Obstacles

This sense of active, intelligent, and creative opposition sharply distinguishes Negative Scenarios from passive Obstacles (van Lamsweerde and Letier 1998). An Obstacle is indeed, in a limited sense, something between you and your Goal(s), but it is not the opposite of a Goal. Your enemy's goal, by contrast, really is opposed to yours; it is not ‘the opposite’ only because there can be many hostile goals, and indeed many hostile agents.

Goal-Obstacle analysis is a simple and practical way of noting unfortunate things that might happen. It is not a way of thinking that encourages awareness of the likely behaviour of hostile agents, who may intelligently but perversely modify their actions in the light of what they know of your intentions and actions.

Anti-Scenarios

In Come, Let's Play, David Harel and Rami Marelly define the term ‘anti-scenarios’ to mean

‘ones that are forbidden, in the sense that if they occur there is something very wrong: either something in the specification is not as we wanted, or else the implementation does not correctly satisfy the specification’. (Harel and Marelly 2003, page 5)

This clearly covers a lot of ground, including mistakes in design and coding, failure to understand or follow the requirements, and things that ‘we’ (presumably stakeholders) do not want. Anti-scenario is thus much more general than negative scenario or misuse case. As a term it makes sense in the context of running a simulation—do you want to allow that sequence of output events or not?—as instanced by Harel's wonderfully innovative use of scenarios and Live Sequence Charts with his Play Engine. But since it covers issues both of verification (did we meet the requirements?) and of validation (were the requirements right?), it is too broad a term for use in other contexts.
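A much-simplified illustration of checking a simulation run against anti-scenarios follows. It treats each anti-scenario as a forbidden ordered sequence of events and reports a violation if those events occur in that order anywhere in a trace; it ignores everything else that Live Sequence Charts can express, such as conditions and timing. The function names and the cash-machine events are hypothetical, and this is neither the Play-Engine's API nor its semantics.

```python
from typing import Iterable, List, Sequence


def occurs_in(anti_scenario: Sequence[str], trace: Iterable[str]) -> bool:
    """True if the anti-scenario's events appear in the trace in the same
    order, possibly with other events interleaved between them."""
    remaining = iter(trace)
    # `event in remaining` consumes the iterator up to the first match, so
    # each event must be found after the one before it.
    return all(event in remaining for event in anti_scenario)


def violations(anti_scenarios: Iterable[Sequence[str]],
               trace: Sequence[str]) -> List[Sequence[str]]:
    """Return the anti-scenarios that the trace exhibits."""
    return [a for a in anti_scenarios if occurs_in(a, trace)]


# Hypothetical rule: no cash may be dispensed after the card is reported stolen.
STOLEN_CARD = ("card_reported_stolen", "cash_dispensed")

normal_run = ["card_inserted", "pin_ok", "cash_dispensed"]
bad_run = ["card_reported_stolen", "card_inserted", "pin_ok", "cash_dispensed"]

print(violations([STOLEN_CARD], normal_run))  # prints []
print(violations([STOLEN_CARD], bad_run))     # prints [('card_reported_stolen', 'cash_dispensed')]
```

Even this toy version shows the double role of the term: a reported violation may mean the specification forbade something stakeholders actually want, or that the implementation misbehaved.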

i* and GRL

A radically different take on goals and non-functional requirements is to model the intentions of system stakeholders and the expectations that they place on each other as various kinds of goals: we rely on you to perform this function, you rely on him to ensure safety, and so on. This kind of approach was pioneered by E. Yu and J. Mylopoulos at the University of Toronto in their work, first on the i* notation and then on GRL, the Goal-oriented Requirement Language (Chung et al. 2000). It is then possible to mark up both positive and negative interactions: this goal makes achieving that goal easier or more difficult. A model of this kind can certainly document some non-functional requirements, and it can indicate conflicts between goals. Much the same applies here as to Obstacles (see above): it does not really capture hostile intention, though it could in principle be a useful approach. That said, it still seems to be essentially an academic matter, and industry is taking it up very slowly. In contrast, Use Cases have popularised the application of scenarios, and the Misuse Case approach is essentially an easy further step towards the effective handling of a wider range of requirements.

Exceptions

Negative Scenarios or Misuse Cases are not an alternative to the basic search for Exceptions that should take place in any system (Cockburn 2001, Alexander and Zink 2002). If you have a scenario, you should analyse what can go wrong at each step; most of the exceptions will not be intentionally caused, and there is no need to invent or imagine hostile agents to find ordinary exceptions. But Misuse Cases do offer an additional, independent method for finding Exceptions.
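As a sketch of the step-by-step search for Exceptions, the Python below turns a scenario into a checklist of workshop prompts: one prompt per step per cue word, plus one asking what a hostile agent would do at that step. Borrowing HazOp's guide words as the cue list is an assumption made for illustration, not part of the method described above, and the scenario steps are hypothetical.

```python
from typing import List, Sequence, Tuple

# Cue words borrowed from HazOp guide words (an assumption for illustration).
GUIDE_WORDS = ("No", "More", "Less", "As well as", "Part of", "Reverse", "Other than")
# Extra cue that turns each step into a potential Misuse Case.
HOSTILE_CUE = "Hostile agent"


def exception_prompts(steps: Sequence[str],
                      cues: Sequence[str] = GUIDE_WORDS) -> List[Tuple[int, str, str]]:
    """Return (step number, step text, cue) tuples covering every step."""
    checklist = []
    for number, step in enumerate(steps, start=1):
        for cue in (*cues, HOSTILE_CUE):
            checklist.append((number, step, cue))
    return checklist


scenario = [
    "Customer inserts card",
    "Customer enters PIN",
    "System dispenses cash",
]

for number, step, cue in exception_prompts(scenario)[:3]:
    print(f"Step {number} ({step}) / {cue}: what could go wrong?")
```

The point of generating the full grid is only to make sure no step is skipped; most prompts will yield nothing, and the useful answers come from the people in the room.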

The Place of the Negative Scenario or Misuse Case

On the other hand, the metaphor of an enemy is a powerful one, and metaphors of intent are useful because humanity has evolved in a social context (Potts 2001, Pinker 1994). If thinking about hostile agents and threats helps people to find exceptions with their ‘soap opera brains’ (their highly specialised social interaction analysers), then the technique is worthwhile, even when the supposedly hostile agents are in fact mere passive obstacles. This may help elicit exceptions that would otherwise be missed.

Projects that are already being conducted participatively, as with Suzanne Robertson's use of scenarios for requirements discovery (Chapter 3 of this volume), Ellen Gottesdiener's workshop techniques (Chapter 5), Neil Maiden's structured scenario walk-throughs (Chapter 9), or Karen Holtzblatt's Contextual Design (Chapter 10), can consider spending a session on negative scenarios. Used informally, the technique might also be valuable in agile projects as described by Kent Beck and David West (Chapter 13), with the participation of what are usually very small groups of stakeholders.

Pinker argues convincingly that the ability to plan and evaluate ‘what-if’ scenarios is close to the root of language, and indeed of human reasoning itself (Pinker 1994). Sitting around the campfire with your fellow Stone Age hunters and thinking through what you would do if the woolly rhinoceros you are going to hunt tomorrow turns and charges you, instead of falling into the pit you have dug for it, might save your life. Having your crazy plans laughed at the night before is embarrassing, but a lot better than discovering the unhandled exceptions the hard way.

The Negative Scenario is clearly secondary to the ordinary Scenario as a requirement elicitation technique. It comes into its own where security is vital; and it may have an important role in safety, where it can contribute to functional hazard analysis (Allenby and Kelly 2001; see the discussion of ‘Failure Cases’ above). Scenarios typically elicit functional requirements; Negative Scenarios help elicit exceptions (which in turn call for the functions inherent in exception-handling scenarios) and some kinds of non-functional requirements.

The Negative Scenario also has a role in documenting and justifying functions put in place for security, safety, and sometimes other reasons. When a requirement has been created in response to a threat, then continuing knowledge of that threat (through traceability) protects that requirement from deletion when budgets and timescales come under pressure.
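A minimal Python sketch of that traceability check follows, assuming a trace store that records which requirements mitigate which threats. Before a requirement is deleted, it reports any threat that would be left with no remaining mitigation. The class, its method names, and the car-security examples are hypothetical and do not describe any particular requirements management tool.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class TraceModel:
    """Hypothetical trace store linking requirements to the threats they mitigate."""

    def __init__(self) -> None:
        self.mitigates: DefaultDict[str, Set[str]] = defaultdict(set)

    def link(self, requirement: str, threat: str) -> None:
        """Record that `requirement` mitigates `threat`."""
        self.mitigates[requirement].add(threat)

    def threats_exposed_by_deleting(self, requirement: str) -> Set[str]:
        """Threats that no other requirement would mitigate if `requirement` were deleted."""
        exposed = set()
        # .get() avoids creating an empty entry for an unknown requirement.
        for threat in self.mitigates.get(requirement, set()):
            still_covered = any(
                threat in threats
                for other, threats in self.mitigates.items()
                if other != requirement
            )
            if not still_covered:
                exposed.add(threat)
        return exposed


model = TraceModel()
model.link("Lock the car", "Steal the car")
model.link("Immobilise the engine", "Steal the car")
model.link("Immobilise the engine", "Short the ignition")

print(model.threats_exposed_by_deleting("Lock the car"))           # prints set()
print(model.threats_exposed_by_deleting("Immobilise the engine"))  # prints {'Short the ignition'}
```

A non-empty result does not forbid the deletion; it simply puts the threat back in front of whoever is trimming the budget.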

KEYWORDS

Negative Scenario

Misuse Case

Requirements Elicitation

Exception

Security Requirement

Threat Identification

Mitigation

“-ility” (Quality Requirement)

Function

Non-Functional Requirement

Failure Case

Trade-Offs

Conflict Identification

REFERENCES

Alexander, I., Initial industrial experience of misuse cases in trade-off analysis, Proceedings of IEEE Joint International Requirements Engineering Conference (RE '02), September 9–13 2002a, Essen, Germany, pp. 61–68

Alexander, I., Towards automatic traceability in industrial practice, Proceedings of the First International Workshop on Traceability, Edinburgh, UK, September 28, 2002b, pp. 26–31, in conjunction with the 17th IEEE International Conference on Automated Software Engineering.

Alexander, I., Modelling the interplay of conflicting goals with use and misuse cases, Proceedings of the Eighth International Workshop on Requirements Engineering: Foundation for Software Quality (REFSQ '02), Essen, Germany, September 9–10, 2002c, pp. 145–152.

Alexander, I., Use/misuse case analysis elicits nonfunctional requirements, Computing and Control Engineering, 14(1), 40–45, 2003.

Alexander, I. and Zink, T., Systems engineering with use cases, Computing and Control Engineering Journal, 13(6), 289–297, 2002.

Allenby, K. and Kelly, T., Deriving safety requirements using scenarios, Proceedings of the 5th International Symposium on Requirements Engineering (RE '01), Toronto, Canada, 2001, pp. 228–235.

Chung, L., Nixon, B.A., Yu, E., and Mylopoulos, J., Non-Functional Requirements in Software Engineering, Kluwer, 2000.

Cockburn, A., Writing Effective Use Cases, Addison-Wesley, 2001.

EN 50126:1999, Railway Applications: The Specification and Demonstration of Reliability, Availability, Maintainability and Safety (RAMS), CENELEC, Rue de Stassart, 36, B-1050 Brussels; English language version BS EN 50126:1999, British Standards Institution, 389 Chiswick High Road, London W4 4AL.

Firesmith, D., Common Concepts Underlying Safety, Security, and Survivability Engineering, Software Engineering Institute, Carnegie Mellon University, Technical Note CMU/SEI-2003-TN-033, January 2004, available from http://www.sei.cmu.edu

Fowler, M. with Scott, K., UML Distilled, A Brief Guide to the Standard Object Modeling Language, Addison-Wesley, 1999.

Gossman, D., HazOp Reviews, 4(8), 1998, http://gcisolutions.com/GCINOTES898.htm

Gottesdiener, E., Requirements by Collaboration, Workshops for Defining Needs, Addison-Wesley, 2002.

Harel, D. and Marelly, R., Come, Let's Play: Scenario-Based Programming Using LSCs and the Play-Engine, Springer-Verlag, 2003.

Jacobson, I., Christerson, M., Jonsson, P. and Overgaard, G., Object-Oriented Software Engineering: A Use Case Driven Approach, Addison-Wesley, 1992.

Kulak, D. and Guiney, E., Use Cases: Requirements in Context, Addison-Wesley, 2000.

McDermott, J. and Fox, C., Using abuse case models for security requirements analysis, 15th Annual Computer Security Applications Conference, IEEE, Phoenix, Arizona, 1999, pp. 55–66.

Pinker, S., The Language Instinct, Penguin Books, 1994 (and see discussion in Chapter 1: Introduction of this volume).

Potts, C., Metaphors of intent, Proceedings of the 5th International Symposium on Requirements Engineering (RE '01), 2001, pp. 31–38.

Scenario Plus, website (free Use/Misuse Case toolkit for the DOORS requirements management system), http://www.scenarioplus.org.uk, 2003.

Sindre, G. and Opdahl, A.L., Eliciting security requirements by misuse cases, Proceedings of TOOLS Pacific 2000, 20–23 November 2000, pp. 120–131.

Sindre, G. and Opdahl, A.L., Templates for misuse case description, Proceedings of the 7th International Workshop on Requirements Engineering, Foundation for Software Quality (REFSQ 2001), Interlaken, Switzerland, June 4–5, 2001.

van Lamsweerde, A. and Letier, E., Integrating obstacles in goal-driven requirements engineering, Proceedings of the ICSE '98—20th International Conference on Software Engineering, IEEE-ACM, 1998.

Yellow Book, Engineering safety management, distributed by Praxis Critical Systems Ltd, Railtrack PLC, 2000, http://www.praxis-cs.co.uk

Wiley, B., Essential System Requirements, Addison-Wesley, 2000.

RECOMMENDED READING

I wrote what I hope is an easily approachable account of Misuse Cases in an article: Misuse cases: use cases with hostile intent, IEEE Software, 20(1), 58–66, 2003.

1 In monochrome, this inevitably makes the background black. In colour presentations, it is suggested to use red as in ‘red team review’ for Misuse Cases. In any event, requirements engineers should not use the word black as a synonym for negative.

2 Anthony Hall, Praxis, personal communication, 2002.

3 http://news.bbc.co.uk/hi/english/world/europe/newsid2125000/2125838.stm; http://www.ifatca.org/locked/midair.ppt

4 Dr Jonathan Moffett, University of York: personal communication.

5 Mentioned in Namby Pamby or a Panegyric on the New Versification, Henry Carey, 1725.