CHAPTER 6

Motion Planning for Three-Dimensional Arm Manipulators

The robot is going to lose. Not by much. But when the final score is tallied, flesh and blood is going to beat the damn monster.

—Adam Smith, philosopher and economist, 1723–1790

6.1 INTRODUCTION

We continue to develop SIM (Sensing–Intelligence–Motion) algorithms for robot arm manipulators. The cases considered in Chapter 5 all deal with arm manipulators whose end effectors (hands) move along a two-dimensional (2D) surface. Although applications do exist that can make use of those algorithms—for example, assembly of microelectronics on a printed circuit board is largely limited to a 2D operation—most robot arm manipulators live and work in three-dimensional (3D) space. From this standpoint, our primary objective in Chapter 5 should be seen as preparing the necessary theoretical background and elucidating the relevant issues, before proceeding to the 3D case. Sensor-based motion planning algorithms should be able to handle 3D space and 3D arm manipulators. Developing such strategies is the objective of this chapter. As before, the arm manipulators that we consider are simple open kinematic chains.

Is there a fundamental difference between motion planning for two-dimensional (2D) and 3D arm manipulators? The short answer is yes, but the question is not that simple. Recall a similar discussion about mobile robots in Chapter 3. From the standpoint of motion planning, mobile robots differ from arm manipulators: They have more or less compact bodies, kinematics plays no decisive role in their motion planning, and their workspace is much larger compared to their dimensions. For mobile robots the difference between the 2D and 3D cases is absolute and dramatic: Unequivocally, if the 2D case has a definite and finite solution to the planning problem, the 3D case has no finite solution in general.

The argument goes as follows. Imagine a bug moving in the two-dimensional plane, and imagine that on its way the bug encounters an object (an obstacle). Assume the bug's target location is somewhere on the other side of the obstacle. One way for it to continue its motion is to first pass around the obstacle. The bug has only two options: It can pass the obstacle from the left or it can pass it from the right, clockwise or counterclockwise. If neither option leads to success—let us assume it is a smart bug, with reasonably good motion planning skills—the goal is not achievable. In this case, by slightly exaggerating the bug's stubbornness, we will note that eventually the bug will come back to the point on the obstacle where it started. It took only one simple going around, all in one direction, to explore the whole obstacle. That is the essence of the 2D case.

Imagine now a fly that is flying around a room—that is, in 3D space. Imagine that on its way the fly encounters a (3D) obstacle—say, a child's balloon hanging on a string. Now there is an infinite number of routes the fly can take to pass around the obstacle. The fly would need to make a great many loops around the obstacle in order to explore it completely. That's the fundamental difficulty of the 3D case; in theory it takes an infinitely long path to explore the whole obstacle, even if its dimensions and volume are finite and modest.1 The point is that while in the 2D case a mobile robot has a theoretically guaranteed finite solution, no such solution can be guaranteed for a 3D mobile robot. The 3D sensor-based motion planning problem is in general intractable.

The situation is more complex, but also not as hopeless, for 3D arm manipulators. Try this little experiment. Fix your shoulder and try to move your hand around a long vertical pole. Unlike a fly that can make as many circles around the pole as it wishes, your hand will make about one circle around the pole and stop. What holds it from continuing moving in the same direction is the arm's kinematics and also the fact that the arm's base is “nailed down.” The length of your arm links is finite, the links themselves are rigid, and the joints that connect the links allow only so much motion. These are natural constraints on your arm movement. The same is so for robot arm manipulators. In other words, the kinematic constraints of an arm manipulator impose strong limitations on its motion.

This fact makes the problem of sensor-based motion planning for 3D arm manipulators manageable. The hope is that the arm kinematics can be effectively exploited to make the problem tractable. Furthermore, those same constraints promise a constructive test of target reachability, similar to those we designed above for mobile robots and 2D arm manipulators.

As noted by Brooks [102], the motion planning problem for a manipulator with revolute joints is inherently difficult because (a) the problem is nondecomposable, (b) there may be difficulties associated with rotations, (c) the space representation and hence the time execution of the algorithm are exponential in the number of robot's degrees of freedom of the objects involved, and (d) humans are especially poor at the task when much reorientation is needed, which makes it difficult to develop efficient heuristics. This is all true, and indeed more true for arms with revolute joints—but these difficulties have been formulated for the motion planning problem with complete information. Notice that these difficulties did not prevent us from designing rather elegant sensor-based planning algorithms for 2D arms with revolute joints, even in the workspace with arbitrarily complex obstacles. The question now is how far we can go with the 3D case.

It was said before that this is a difficult area of motion control and algorithm design. As we will see in Chapter 7, human intuition is of little help in designing reasonable heuristics and even in assessing proposed algorithms. Doing research requires expertise from different areas, from topology to sensing technology. There are still many unclear issues. Much of the exciting research is still waiting to be done. Jumping to the end of this chapter, today there are still no provable algorithms for the 3D kinematics with solely revolute joints. While this type of kinematics is just one mechanism among others in today's robotics, it certainly rules in nature.

As outlined in Section 5.1.1, we use the notion of a separable arm [103], which is an arm naturally divided into the major linkage responsible for the arm's position planning (or gross motion), and the minor linkage (the hand) responsible for the orientation planning of the arm's end effector. As a rule, existing arm manipulators are separable. Owing to the fact that three degrees of freedom (DOF) is the minimum necessary for reaching an arbitrary point in 3D space, and another three DOF are needed to provide an arbitrary orientation for the tool—six DOF in total as a minimum—many 3D arm manipulators' major linkages include three links and three joints, and so do typical robot hands. Our motion planning algorithms operate on the major linkage—that is, on handling gross motion and making sure that the hand is brought into the vicinity of the target position. The remaining “fine tuning” for orientation is usually a simpler task and is assumed to be done outside of the planning algorithm. For all but very few unusual applications, this is a plausible assumption.

While studying topological characteristics of the robot configuration space for a few 3D kinematics types, we will show that obstacle images in these configuration spaces exhibit a distinct property that we call space monotonicity : For any point on the surface of the obstacle image, there exists a direction along which all the remaining points of the configuration space belong to the obstacle. Furthermore, the sequential connection of the arm links results in the property called space anisotropy of the configuration space, whereby the obstacle monotonicity presents itself differently along different space axes.

The space monotonicity property provides a basis for selecting directions of arm motion that are more promising than others for reaching the target position. By exploiting the properties of space monotonicity and anisotropy, we will produce motion planning algorithms with proven convergence. No explicit or implicit beforehand calculations of the workspace or configuration space will be ever needed. All the necessary calculations will be carried out in real time in the arm workspace, based on its sensing information. No exhaustive search will ever take place.

As before with 2D arms, motion planning algorithms that we will design for 3D arms will depend heavily on the underlying arm kinematics. Each kinematics type will require its own algorithm. The extent of algorithm specialization due to arm kinematics will be even more pronounced in the 3D case than in the 2D case. Let us emphasize again that this is not a problem of depth of algorithmic research but is instead a fundamental constraint in the relationship between kinematics and motion. The same is true, of course, in nature: The way a four-legged cat walks is very different from the way a two-legged human walks. Among four-legged animals, the gaits of cats and turtles differ markedly. One factor here is the optimization process carried out by evolution. Even if a “one fits all” motion control procedure is feasible, it will likely be cumbersome and inefficient compared to algorithms that exploit specific kinematic peculiarities. We observed this in the 2D case (Section 5.8.4): While we found a way to use the same sensor-based motion planning algorithm for different kinematics types, we also noted the price in inefficiency that this universality carried. Here we will attempt both approaches.

This is not to say that the general approach to motion planning will be changing from one arm to another; as we have already seen, the overall SIM approach is remarkably the same independent of the robot kinematics, from a sturdy mobile robot to a long-limbed arm manipulator.

As before, let letters P and R refer to prismatic and revolute joints, respectively. We will also use the letter X to represent either a P or an R joint, X = [P, R]. A three-joint robot arm manipulator (or the major linkage of an arm), XXX, can therefore be one of eight basic kinematic linkages: PPP, RPP, PRP, RRP, PPR, RPR, PRR, and RRR. As noted in Ref. 111, each basic linkage can be implemented with different geometries, which produces 36 linkages with joint axes that are either perpendicular or parallel to one another. Among these, nine degenerate into linkages with only one or two DOF; seven are planar. By also eliminating equivalent linkages, the remaining 20 possible spatial linkages are further reduced to 12, some of which are of only theoretical interest.

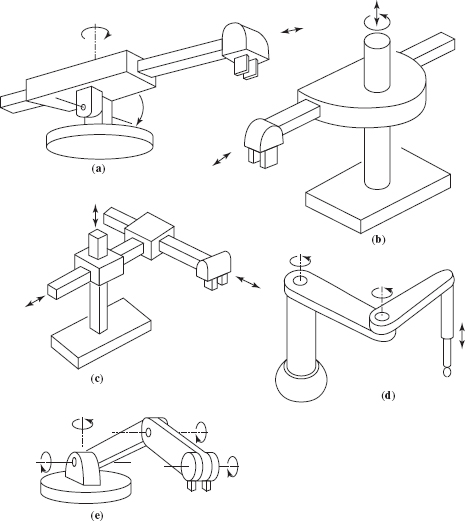

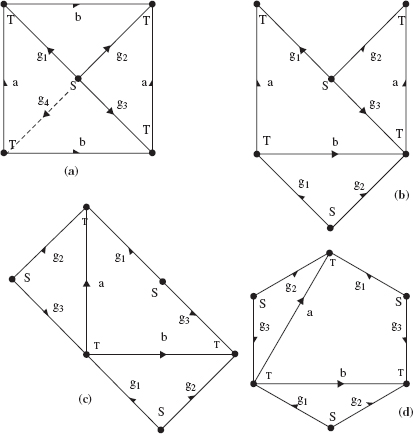

The above sequence XXX is written in such an order that the first four linkages in it are XXP arms; in each of them the outermost joint is a P joint. Those four are among the five major 3D linkages (see Figure 6.1) that are commonly seen in industry [111–113] and that together cover practically all of today's commercial and special-purpose robot arm manipulators. It turns out that these four XXP arms are more amenable to sensor-based motion planning than the fifth one (Figure 6.1e) and than the remaining four arms in the XXX sequence. It is XXP arms that will be studied in this chapter.
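As a small illustration of the notation, the eight XXX linkages and the XXP subgroup can be enumerated mechanically. The sketch below is ours and only mirrors the ordering used in the text; the function name `enumerate_linkages` is a hypothetical helper, not part of any library.

```python
from itertools import product


def enumerate_linkages():
    """Return the eight three-joint linkages in the order listed in the text.

    itertools.product varies the LAST position fastest, whereas the text's
    sequence (PPP, RPP, PRP, RRP, ...) varies the FIRST joint fastest,
    so each combination is reversed before joining.
    """
    return ["".join(reversed(combo)) for combo in product("PR", repeat=3)]


linkages = enumerate_linkages()

# XXP arms: linkages whose outermost (third) joint is prismatic.
xxp_arms = [name for name in linkages if name.endswith("P")]

print(linkages)
print(xxp_arms)
```

With this ordering, the XXP arms are exactly the first four entries of the sequence, which is the property the text relies on.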

While formally these four linkages—PPP, RPP, PRP, and RRP—cover half of the full group XXX, they represent four out of five, or 80%, of the linkages in Figure 6.1. Many, though not all, robot arm applications are based on XXP major linkages. Welding, assembly, and pick-and-place robot arms are especially common in this group, one reason being that a prismatic joint makes it easy to produce a straight-line motion and to expand the operating space. The so-called SCARA arm (Selective Compliance Assembly Robot Arm), whose major linkage is, in our notation, an RRP arm, is especially popular among assembly-oriented industrial arm manipulators.

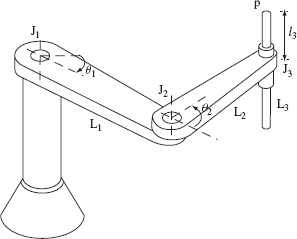

Figure 6.1 Five commonly used 3D robot arm linkages: (a) RRP, “polar coordinates” arm, with a spherical workspace; (b) PRP, “cylindrical coordinates” arm, with a cylindrical workspace; (c) PPP, Cartesian arm, with a “cubicle” workspace; (d) RRP, SCARA-type arm, with a cylindrical workspace; and (e) RRR, “articulate” arm, with a spherical workspace.

Recall our showing in Chapter 5 that RR, the arm with two revolute joints, is the most general among XX arms, X = [P, R]. We will similarly show that the arm RRP is the most general case among XXP arms. The algorithm that works for the RRP arm will work for other XXP arms.

While arm configurations shown in Figure 6.1 have nice straight-line links that are mutually perpendicular or parallel, our SIM approach does not require such properties unless specified explicitly.

We will first analyze the PPP arm (Section 6.2), an arm with three sliding (prismatic) joints (it is often called the Cartesian arm), and will develop a sensor-based motion planning strategy for it. Similar to the 2D Cartesian arm, the SIM algorithm for a 3D Cartesian arm turns out to be the easiest to visualize and to design. After mastering in this case the issues of 3D algorithmic machinery, in Section 6.3 we will turn our attention to the general case of an XXP linkage. Similar to the material in Section 5.8, some theory developed in Section 6.2.4 and Sections 6.3 to 6.3.6 is somewhat more complex than most other sections.

As before, we assume that the arm manipulator has enough sensing to sense nearby obstacles at any point of its body. A good model of such sensing mechanism is a sensitive skin that covers the whole arm body, similar to the skin on the human body. Any other sensing mechanism will do as long as it guarantees not missing potential obstacles. Similar to the algorithm development for the 2D case, we will assume tactile sensing: As was shown in prior chapters, the algorithmic clarity that this assumption brings is helpful in the algorithm design. We have seen in Sections 3.6 and 5.2.5 that extending motion planning algorithms to more information-rich sensing is usually relatively straightforward. Regarding the issues of practical realization of such sensing, see Chapter 8.

6.2 THE CASE OF THE PPP (CARTESIAN) ARM

The model, definitions, and terminology that we will need are introduced in Section 6.2.1. The general idea of the motion planning approach is tackled in Section 6.2.2. Relevant analysis appears in Sections 6.2.3 and 6.2.4. We formulate, in particular, an important necessary and sufficient condition that ties the question of existence of paths in the 3D space of this arm to existence of paths in the projection 2D space (Theorem 6.2.1). This condition helps to lay a foundation for “growing” 3D path planning algorithms from their 2D counterparts. The corresponding existential connection between 3D and 2D algorithms is formulated in Theorem 6.2.2. The resulting path planning algorithm is formulated in Section 6.2.5, and examples of its performance appear in Section 6.2.6.

6.2.1 Model, Definitions, and Terminology

For the sake of completeness, some of the material in this section may repeat the material from other chapters.

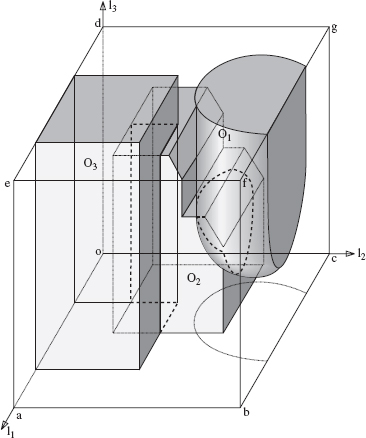

Robot Arm. The robot arm is an open kinematic chain consisting of three links, l1, l2, and l3, and three joints, J1, J2, and J3, of prismatic (sliding) type [8]. Joint axes are mutually perpendicular (Figure 6.2). For convenience, the arm endpoint P coincides with the upper end of link l3. Point Ji, i = 1, 2, 3, also denotes the center point of joint Ji, defined as the intersection point between the axes of link li and its predecessor. Joint J1 is attached to the robot base O and is the origin of the fixed reference system. Value li also denotes the joint variable for link li; it changes in the range li = [li min, li max]. Assume for simplicity zero minimum values for all li, li = [0, li max]; all li max are in general different.

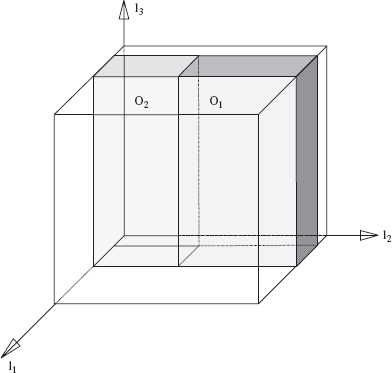

Figure 6.2 The workspace of a 3D Cartesian arm: l1, l2, and l3 are links; J1, J2, and J3 are prismatic joints; P is the arm endpoint. Each link has the front and rear end; for example, J3 is the front end of link l2. O1, O2, and O3 are three physical obstacles. Also shown in the plane (l1, l2) are obstacles' projections. The cube abcodefg indicates the volume any point of which can be reached by the arm endpoint.

Each link presents a generalized cylinder (briefly, a cylinder)—that is, a rigid body characterized by a straight-line axis coinciding with the corresponding joint axis, such that the link's cross section in the plane perpendicular to the axis does not change along the axis. A cross section of link li presents a simple closed curve; it may be, for example, a circle (then, the link is a common cylinder), a rectangle (as in Figure 6.2), an oval, or even a nonconvex curve. The link cross section may differ from link to link.2

The front ends of links l1 and l2 coincide with joints J2 and J3, respectively; the front end of link l3 coincides with the arm endpoint P (Figure 6.2). The opposite end of link li, i = 1, 2, 3, is its rear end. Similarly, the front (rear) part of link li is the part of variable length between joint Ji and the front (rear) end of the link. When joint Ji is in contact with an obstacle, the contact is considered to be with link li−1.

For the sensing mechanism, we assume that the robot arm is equipped with a kind of “sensitive skin” that covers the surfaces of arm links and allows any point of the arm surface to detect a contact with an approaching obstacle. Other sensing mechanisms are equally acceptable as long as they provide information about potential obstacles at every point of the robot body. Depending on the nature of the sensor system, the contact can be either physical—as is the case with tactile sensors—or proximal. As said above, solely for presentation purposes we assume that the arm sensory system is based on tactile sensing.3

The Task. Given the start and target positions, S and T, with coordinates S = (l1 S, l2 S, l3 S) and T = (l1 T, l2 T, l3 T), respectively, the robot is required to generate a continuous collision-free path from S to T if one exists. This may require the arm to maneuver around obstacles. The act of maneuvering around an obstacle refers to a motion during which the arm is in constant contact with the obstacle. Position T may or may not be reachable from S; in the latter case the arm is expected to make this conclusion in finite time. We assume that the arm knows its own position in space and those of positions S and T at all times.

Environment and Obstacles. The 3D volume in which the arm operates is the robot environment. The environment may include a finite number of obstacles. Obstacle positions are fixed. Each obstacle is a 3D rigid body whose volume and outer surface are finite, such that any straight line may have only a finite number of intersections with obstacles in the workspace. Otherwise obstacles can be of arbitrary shape. At any position of the arm, at least some motion is possible. To avoid degeneracies, the special case where a link can barely squeeze between two obstacles is treated as follows: We assume that the clearance between the obstacles is either too small for the link to squeeze in between, or wide enough so that the link can cling to one obstacle, thus forming a clearance with the other obstacle. The number, locations, and geometry of obstacles in the robot environment are not known.

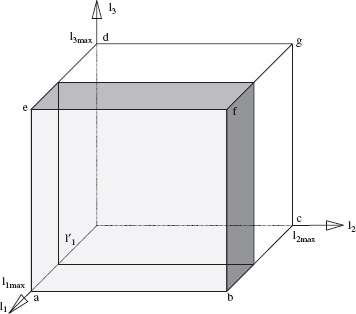

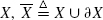

W-Space and W-Obstacles. The robot workspace (W-space) presents a subset of Cartesian space in which the robot arm operates. It includes the effective workspace, any point of which can be reached by the arm end effector (Figure 6.3a), and the outside volumes in which the rear ends of the links may also encounter obstacles and hence also need to be protected by the planning algorithm (Figure 6.3b). Therefore, W-space is the volume occupied by the robot arm when its joints take all possible values l = (l1, l2, l3), li = [0, li max], i = 1, 2, 3. Denote the following:

- υi is the set of points reachable by point Ji, i = 1, 2, 3;

- Vi is the set of points (the volume) reachable by any point of link li. Hence,

- υ1 is a single point, O;

- υ2 is a unit line segment, Oa;

- υ3 is a unit square, Oabc;

- V1 is a cylinder whose (link) cross section is s1 and whose length is 2l1 max;

- V2 is a slab of length 2l2 max formed by all possible motions of the front and rear ends of link l2 within the joint limits of l1 and l2;

- V3 is a “cubicle” of height 2l3 max formed by all possible motions of the front and rear ends of link l3 within the joint limits of l1, l2, and l3.

Figure 6.3 (a) The effective workspace of the 3D Cartesian arm—the volume that can be reached by the arm endpoint—is limited by the cubicle abcodefg. (b) Since the rear end of every link may also encounter obstacles, the workspace that has to be protected by the planning algorithm is larger than the effective workspace, as shown.

The total volume VW of W-space is hence VW = V1 ∪ V2 ∪ V3. Out of this, the set {l} = {l ∈ [0, lmax]}, where lmax = (l1 max, l2 max, l3 max), represents points reachable by the arm end effector; {l} is a closed set.
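The distinction between the effective workspace {l} and the larger protected volume can be illustrated with a minimal sketch. It assumes, as our reading of the 2li max lengths above, that each link is li max long, so that its rear end lies at li − li max along its axis; the class `CartesianArm` and its method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CartesianArm:
    """Joint limits of a PPP arm; joints are indexed 0, 1, 2 for l1, l2, l3."""
    l_max: Tuple[float, float, float]

    def in_effective_workspace(self, l):
        """True if joint vector l lies in the closed set {l}, 0 <= li <= li_max."""
        return all(0.0 <= li <= li_max for li, li_max in zip(l, self.l_max))

    def link_extent(self, i, l_i):
        """Interval along axis i occupied by link i at joint value l_i.

        ASSUMPTION: the link is l_max[i] long, so its rear end is at
        l_i - l_max[i] and its front end at l_i.
        """
        return (l_i - self.l_max[i], l_i)

    def swept_extent(self, i):
        """Interval along axis i swept by link i over its whole joint range."""
        rear_min, _ = self.link_extent(i, 0.0)
        _, front_max = self.link_extent(i, self.l_max[i])
        return (rear_min, front_max)


arm = CartesianArm(l_max=(2.0, 3.0, 4.0))
print(arm.in_effective_workspace((1.0, 3.0, 0.0)))  # point inside {l}
print(arm.swept_extent(0))                          # extent of V1 along l1
```

Under this assumption the swept extent of link l1 has length 2l1 max, which agrees with the description of V1; half of it lies outside the effective workspace, where only the rear end of the link can meet an obstacle.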

An obstacle in W-space, called W-obstacle, presents a set of points, none of which can be reached by any point of the robot body. This may include some areas of W-space which are actually free of obstacles but still not reachable by the arm because of interference with obstacles. Such areas are called the shadows of the corresponding obstacles. A W-obstacle is thus the sum of volumes of the corresponding physical obstacle and the shadows it produces. The word “interference” refers here only to the cases where the arm can apply a force to the obstacle at the point of contact. For example, if link l1 in Figure 6.2 happens to be sliding along an obstacle (which is not so in this example), it cannot apply any force onto the obstacle, the contact would not preclude the link from the intended motion, and so it would not constitute an interference. W-obstacles that correspond to the three physical obstacles—O1, O2, and O3—of Figure 6.2 are shown in Figure 6.4.

C-Space, C-Point, and C-Obstacle. The vector of joint variables l = (l1, l2, l3) forms the robot configuration space (C-space). In C-space, the arm is presented as a single point, called the C-point. The C-space of our Cartesian arm presents a parallelepiped, or generalized cubicle, and the mapping from W-space to C-space is unique.4 For the example of Figure 6.2, the corresponding C-space is shown in Figure 6.5. For brevity, we will refer to the sides of the C-space cubicle as its floor (in Figure 6.2 this is the side Oabc), its ceiling (side edgf), and its walls, the remaining four sides. C-obstacle is the mapping of a W-obstacle into C-space. In the algorithm, the planning decisions will be based solely on the fact of contact between the links and obstacles and will never require explicit computation of positions or geometry of W-obstacles or C-obstacles.

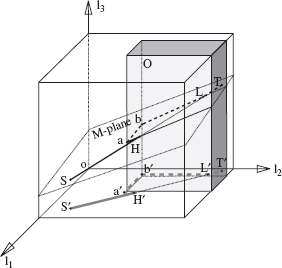

Figure 6.4 The W-obstacles produced by obstacles shown in Figure 6.2 consist of the parts of physical obstacles that intersect W-space plus their corresponding shadows.

M-Line, M-Plane, and V-Plane. As before, a desired path, called the main line (M-line), is introduced as a simple curve connecting points S and T (start and target) in W-space. The M-line presents the path that the arm end effector would follow if no obstacles interfered with the arm motion. Without loss of generality, we assume here that the M-line is a straight-line segment. We will also need two planes, M-plane and V-plane, that will be used in the motion planning algorithm when maneuvering around obstacles (see Figures 6.7 and 6.9):

- M-plane is a plane that contains an M-line and the straight line perpendicular to both the M-line and link l3 axis. M-plane is thus undetermined only if the M-line is collinear with l3 axis. This special case will present no difficulty: Here motion planning is trivial and amounts to changing only values l3; hence we will disregard this case.

- V-plane contains the M-line and is parallel to link l3 axis.

For our Cartesian arm, the M-line, M-plane, and V-plane map in C-space into a straight line and two planes, respectively.
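For a straight-line M-line, the two planes follow directly from the definitions above. The sketch below is ours: it assumes that the l3 axis is the third coordinate axis of C-space, and the helper names (`plane_normals`, `L3_AXIS`) are hypothetical. The signs of the returned normals are arbitrary; the text fixes no orientation at this point.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


L3_AXIS = (0.0, 0.0, 1.0)  # assumed direction of the l3 axis


def plane_normals(S, T, eps=1e-12):
    """Return (m_normal, v_normal) for the M-plane and V-plane through S and T.

    V-plane: contains the M-line and is parallel to the l3 axis.
    M-plane: contains the M-line and the line h perpendicular to both
             the M-line and the l3 axis.
    """
    d = sub(T, S)          # direction of the M-line
    h = cross(d, L3_AXIS)  # perpendicular to both the M-line and the l3 axis
    if dot(h, h) <= eps:
        # M-line collinear with the l3 axis: the M-plane is undetermined.
        raise ValueError("M-line is collinear with the l3 axis")
    v_normal = h             # V-plane is spanned by d and the l3 axis
    m_normal = cross(d, h)   # M-plane is spanned by d and h
    return m_normal, v_normal


m_normal, v_normal = plane_normals((0.0, 0.0, 0.0), (1.0, 2.0, 3.0))
print(m_normal, v_normal)
```

The degenerate case raises an error in this sketch only to make it visible; as noted above, planning in that case amounts to changing l3 alone.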

Figure 6.5 C-space and C-obstacles that correspond to W-space in Figures 6.2 and 6.4. Thicker dotted and solid lines show intersections between obstacles. Shown also are projections of the three obstacles on the plane l1, l2.

Local Directions. Similar to other algorithms in previous chapters, a common operation in the algorithm here will be the choice of a local direction for the next turn (say, left or right). This will be needed when, while moving along a curve, the C-point encounters a sort of T-intersection with another curve (which is here the horizontal part of “T”). Let us define the vector of current motion p and consider all possible cases.

- The C-point moves along the M-line or along an intersection curve between the M-plane and an obstacle and is about to leave M-plane at the cross-point. Define the normal vector m of the M-plane [97]. Then the local direction b is upward if b · m > 0 and downward if b · m ≤ 0.

- The C-point moves along the M-line or along an intersection curve between the V-plane and an obstacle, and it is about to leave V-plane at the cross-point. Let […] be the vector of l3 axis. Then, local direction b is left if […] and right if […].

- In a special case of motion along the M-line, the directions are […] = forward and […] = backward.
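The first rule in the list above, for leaving the M-plane, translates into a one-line test. The following is a minimal sketch with a hypothetical function name; the orientation of the normal m is taken as given, since the text defers its definition to [97].

```python
def leave_m_plane_direction(b, m):
    """Classify local direction b against the M-plane normal m.

    Follows the rule in the text: upward if b . m > 0, downward if b . m <= 0.
    Both arguments are 3-vectors given as sequences of numbers.
    """
    b_dot_m = sum(bi * mi for bi, mi in zip(b, m))
    return "upward" if b_dot_m > 0 else "downward"


print(leave_m_plane_direction((0.0, 0.0, 1.0), (0.0, 0.0, 2.0)))
print(leave_m_plane_direction((1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

Note that a direction lying in the M-plane itself (b · m = 0) is classified as downward, exactly as the non-strict inequality in the rule prescribes.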

Consider the motion of a C-point in the M-plane. When, while moving along the M-line, the C-point encounters an obstacle, it may define on it a hit point, H. Here it has two choices for following the intersection curve between the M-plane and the obstacle surface: Looking from S toward T, the direction of turn at H is either left or right. We will see that sometimes the algorithm may replace the current local direction by its opposite. When, while moving along the intersection curve in the M-plane, the C-point encounters the M-line again at a certain point, it defines here the leave point, L. Similarly, when the C-point moves along a V-plane, the local directions are defined as “upward” and “downward,” where “upward” is associated with the positive direction of l3 axis and “downward” with the negative direction.

6.2.2 The Approach

Similar to other cases of sensor-based motion planning considered so far, conceptually we will treat the problem at hand as one of moving a point automaton in the corresponding C-space. (This does not mean at all, as we will see, that C-space needs to be computed explicitly.) Essential in this process will be sensing information about interaction between the arm and obstacles, if any. This information—namely, what link and what part (front or rear) of the link is currently in contact with an obstacle—is obviously available only in the workspace.

Our motion planning algorithm exploits some special topological characteristics of obstacles in C-space that are a function of the arm kinematics. Note that because links l1, l2, and l3 are connected sequentially, the actual number of degrees of freedom available to them varies from link to link. For example, link l1 has only one degree of freedom: If it encounters an obstacle at some value of l1, it simply cannot proceed any further. This means that the corresponding C-obstacle occupies all the volume of C-space that lies between that value and one of the joint limits of joint J1. This C-obstacle thus has a simple structure: It allows the algorithm to make motion planning decisions based on the simple fact of a local contact and without resorting to any global information about the obstacle in question.
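The structure of a C-obstacle produced by link l1 can be made concrete with a short sketch. We assume here that the joint limit in question is the one lying in the direction in which l1 was moving at the moment of contact; the function names are hypothetical, and zero minimum joint values are used as in Section 6.2.1.

```python
def l1_obstacle_interval(contact_value, moving_positive, l1_max):
    """Interval of l1 values covered by a C-obstacle caused by link l1.

    ASSUMPTION: the obstacle extends from the contact value to the joint
    limit that lies in the direction of motion at the moment of contact.
    """
    if not 0.0 <= contact_value <= l1_max:
        raise ValueError("contact value must lie within the joint limits")
    if moving_positive:
        return (contact_value, l1_max)
    return (0.0, contact_value)


def inside_l1_obstacle(c_point, interval):
    """Membership test: only the l1 coordinate matters.

    The obstacle spans all values of l2 and l3; the boundary is counted
    as part of the obstacle for simplicity.
    """
    lo, hi = interval
    return lo <= c_point[0] <= hi


interval = l1_obstacle_interval(1.5, moving_positive=True, l1_max=4.0)
print(interval)
print(inside_l1_obstacle((2.0, 0.3, 3.9), interval))
```

The point of the sketch is that a single contact reading is enough to describe the whole obstacle: no values of l2 or l3 enter the membership test.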

A similar analysis will show that C-obstacles formed by interaction between link l2 and obstacles always extend in C-space in the direction of one semi-axis of link l2 and both semi-axes of link l3; it will also show that C-obstacles formed by interaction between link l3 and obstacles present generalized cylindrical holes in C-space whose axes are parallel to the axis l3. No such holes can appear, for example, along the axes l1 or l2. In other words, C-space exhibits an anisotropy property; some of its characteristics vary from one direction to the other. Furthermore, C-space possesses a certain property of monotonicity (see below), whose effect is that, no matter what the geometry of physical obstacles in W-space, no holes or cavities can appear in a C-obstacle.

From the standpoint of motion planning, the importance of these facts is that the local information from the arm's contacts with obstacles allows one to infer some global characteristics of the corresponding C-obstacle that help avoid directions of motion leading to dead ends and thus avoid an exhaustive search.

Whereas the resulting path planning algorithm is used in the workspace, without computations of C-space, it can be conveniently sketched in terms of C-space, as follows. If the C-point meets no obstacles on its way, it will move along the M-line, and with no complications the robot will happily arrive at the target position T. If the C-point does encounter an obstacle, it will start moving along the intersection curve between the obstacle and one of the planes, M-plane or V-plane. The on-line computation of points along the intersection curve is easy: It uses the plane's equation and local information from the arm sensors.
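One way to picture the on-line computation is as a sequence of small steps, each tangent to both the plane and the obstacle surface. The sketch below is only an illustration under a strong assumption that goes beyond the text: the sensors are taken to supply a local estimate of the obstacle surface normal at the contact point, expressed in the same coordinates as the plane. The names `intersection_step` and `surface_normal` are hypothetical.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersection_step(point, plane_normal, surface_normal, step, sign=1):
    """Take one first-order step along the plane/obstacle intersection curve.

    The curve's tangent is perpendicular to both normals, hence parallel to
    their cross product. `sign` (+1 or -1) selects the local direction.
    ASSUMPTION: surface_normal is a local estimate obtained from sensing.
    """
    t = cross(plane_normal, surface_normal)
    norm = math.sqrt(sum(c * c for c in t))
    if norm == 0.0:
        raise ValueError("normals are parallel; the tangent is undefined")
    return tuple(p + sign * step * c / norm for p, c in zip(point, t))


# Plane l3 = 0 and an obstacle surface whose local normal points along l1.
new_point = intersection_step(
    point=(1.0, 0.0, 0.0),
    plane_normal=(0.0, 0.0, 1.0),
    surface_normal=(1.0, 0.0, 0.0),
    step=0.1,
)
print(new_point)
```

A step of this kind stays in the plane exactly but follows the obstacle surface only to first order; in practice the contact information would be used after every step to bring the point back onto the surface.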

If during this motion the C-point meets the M-line again at a point that satisfies some additional condition, it will resume its motion along the M-line. Otherwise, the C-point may arrive at an intersection between two obstacles, a position that corresponds to two links or both front and rear parts of the same link contacting obstacles. Here the C-point can choose either to move along the intersection curve between the plane and one of the obstacles, or move along the intersection curve between the two obstacles. The latter intersection curve may lead the C-point to a wall, a position that corresponds to one or more joint limits. In this case, depending on the information accumulated so far, the C-point will conclude (correctly) either that the target is not reachable or that the direction it had chosen to follow the intersection curve would lead to a dead end, in which case it will take a corrective action.

At any moment of the arm motion, the path of the C-point will be constrained to one of three types of curves, thus reducing the problem of three-dimensional motion planning to the much simpler linear planning:

- The M-line

- An intersection curve between a specially chosen plane and the surface of a C-obstacle

- An intersection curve between the surfaces of two C-obstacles

To ensure convergence, we will have to show that a finite combination of such path segments is sufficient for reaching the target position or concluding that the target cannot be reached. The resulting path presents a three-dimensional curve in C-space. No attempt will be made to reconstruct the whole or part of the space before or during the motion.

Since the path planning procedure is claimed to converge in finite time, this means that never, not even in the worst case, will the generated path amount to an exhaustive search.

An integral part of the algorithm is the basic procedure from the Bug family that we considered in Section 3.3 for two-dimensional motion planning for a point automaton. We will use, in particular, the Bug2 procedure, but any other convergent procedure can be used as well.

6.2.3 Topology of W-Obstacles and C-Obstacles

Monotonicity Property. Obstacles that intersect the W-space volume may interact with the arm during its motion. As mentioned above, one result of such interaction is the formation of obstacle shadows. Consider the spherical obstacle O1 in Figure 6.2. Clearly, no points directly above O1 can be reached by any point of the arm body. Similarly, no point of W-space below the obstacle O2 or to the left of the cubical obstacle O3 can be reached. Subsequently, the corresponding W-obstacles become as shown in Figure 6.4, and their C-space representation becomes as in Figure 6.5. This effect, studied in detail below, is caused by the constraints imposed by the arm kinematics on its interaction with obstacles. Anisotropic characteristics of W-space and C-space present themselves in a special topology of W- and C-obstacles best described by the notion of the (W- and C-) obstacle monotonicity:

Obstacle Monotonicity. In all cases of the arm interference with an obstacle, there is at least one direction corresponding to one of the axes li, i = 1, 2, 3, such that if a value $l_i'$ of link li cannot be reached due to the interference with an obstacle, then no value $l_i'' > l_i'$ in case of contact with the link front part, or, inversely, $l_i'' < l_i'$ in case of contact with the link rear part, can be reached either.
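As a rough illustration of the monotonicity statement, the following Python sketch checks one discretized column of joint values along a single axis li. The boolean-list encoding and the function name `is_monotone_blocked` are assumptions made for this example only; they are not part of the algorithm developed in this chapter.

```python
from typing import Sequence


def is_monotone_blocked(column: Sequence[bool], part: str) -> bool:
    """Check obstacle monotonicity along one joint axis.

    column[k] is True when the k-th sampled joint value (in increasing
    order) cannot be reached. For a "front" contact every value above a
    blocked one must be blocked as well; for a "rear" contact every value
    below a blocked one must be blocked as well.
    """
    if part not in ("front", "rear"):
        raise ValueError("part must be 'front' or 'rear'")
    # A rear contact is the mirror image of a front contact.
    cells = list(column) if part == "front" else list(reversed(column))
    seen_blocked = False
    for blocked in cells:
        if seen_blocked and not blocked:
            return False  # a free value beyond a blocked one: a hole
        seen_blocked = seen_blocked or blocked
    return True
```

A column such as `[False, True, False, True]` fails the check for either part, because it would correspond to a hole in the C-obstacle along that axis.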

In what follows, most of the analysis of obstacle characteristics is done in terms of C-space, although it applies to W-space as well. Comparing Figures 6.2 and 6.5, note that although physical obstacles occupy a relatively small part of the arm's workspace, their interference with the arm motion can reduce, often dramatically, the volume of points reachable by the arm end effector. The kinematic constraints are due to the arm joints, acting differently for different joint types, and to the fact that arm links are connected in series. As a result, the arm effectively has only one degree of freedom for control of motion of link l1, two degrees of freedom for control of link l2, and three degrees of freedom for control of link l3. A simple example was mentioned above on how this can affect path planning: If during the arm motion along the M-line the link l1 hits an obstacle, then, clearly, the task cannot be accomplished.

The monotonicity property implies that C-obstacles, though not necessarily convex, have a very simple structure. This special topology of W- and C-obstacles will be factored into the algorithm; it allows us, based on given local information about the arm interaction with the obstacle, to predict important properties of the (otherwise unknown) obstacle beyond the contact point. The monotonicity property can be expressed in terms more amenable to the path planning problem, as follows:

Corollary 6.2.1. No holes or cavities are possible in a C-obstacle.

W-obstacle monotonicity affects different links differently, and even different parts (front or rear) of the same link. This brings about more specialized notions of li-front and li-rear monotonicity for every link, i = 1, 2, 3 (see more below). By treating links' interaction with obstacles individually and by making use of the information on what specific part (front or rear) of a given link is currently in contact with obstacles, the path planning algorithm takes advantage of the obstacle monotonicity property. Because this information is not available in C-space, the following holds:

Information Loss due to Space Transition. Information is lost in the space transition $\mathcal{W} \to \mathcal{C}$. Since some of this information (namely, the location of contact points between the robot arm and obstacles) is essential for the sensor-based planning algorithm, from time to time the algorithm may need to utilize some information specific to W-space only.

We will now consider some elemental planar interactions of arm links with obstacles, and we will show that if a path from start to target does exist, then a combination of elemental motions can produce such a path. Define the following:

- Type I obstacle corresponds to a W- or C-obstacle that results from the interaction of link l1 with a physical obstacle.

- Type II obstacle corresponds to a W- or C-obstacle that results from the interaction of link l2 with a physical obstacle.

- Type III obstacle corresponds to a W- or C-obstacle that results from the interaction of link l3 with a physical obstacle.

We will use subscripts “+” and “−” to further distinguish between obstacles that interact with the front and rear part of a link, respectively. For example, a Type III+ obstacle refers to a C-obstacle produced by interaction of the front part of link l3 with some physical obstacle.

In the next section we will analyze separately the interaction of each link with obstacles. Each time, three cases are considered: when an obstacle interacts with the front part, the rear part, or simultaneously with both parts of the link in question. We will also consider the interaction of a combination of links with obstacles, setting the foundation for the algorithm design.

Interaction of Link l1 with Obstacles – Type I Obstacles. Since, according to our model, sliding along an obstacle does not constitute an interference with the link l1 motion, we need to consider only those cases where the link meets an obstacle head-on. When only the front end of link l1 is in contact with an obstacle, say at the joint value $l_1'$, a Type I+ obstacle is produced. It extends from C-space floor to ceiling and side to side (see Figure 6.6) and effectively reduces the C-space cubicle by the volume $(l_{1\max} - l_1') \cdot l_{2\max} \cdot l_{3\max}$.

A similar effect appears when only the rear end of link l1 interacts with an obstacle, say at a joint value $l_1''$. Then the C-space is effectively decreased by the volume $l_1'' \cdot l_{2\max} \cdot l_{3\max}$. Finally, a simultaneous contact of both front and rear ends with obstacles at a value $l_1'''$ corresponds to a degenerate case where no motion of link l1 is possible; that is, the C-obstacle occupies the whole C-space. Formally the property of Type I obstacle monotonicity is expressed as follows:

Figure 6.6 C-space with a Type I obstacle.

Type I Monotonicity. For any obstacle interacting with link l1, there are three directions corresponding to the joint axes li, i = 1, 2, 3, respectively, along which the C-obstacle behaves monotonically, as follows: If a position $(l_1', l_2', l_3')$ cannot be reached by the arm due to an obstacle interference, then no position $(l_1, l_2, l_3)$, such that $l_1 > l_1'$ in case of the (obstacle's) contact with the link's front part, or $l_1 < l_1'$ in case of the contact with the link's rear part, and $l_2 \in [0, l_{2\max}]$, $l_3 \in [0, l_{3\max}]$, can be reached either.
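The volume bookkeeping for Type I obstacles can be written out as a small worked computation. The sketch below assumes that each joint value ranges over [0, li max]; the function name `type1_blocked_volume` and its argument names are hypothetical and serve only this illustration.

```python
from typing import Optional


def type1_blocked_volume(l1_max: float, l2_max: float, l3_max: float,
                         front_contact: Optional[float] = None,
                         rear_contact: Optional[float] = None) -> float:
    """Volume removed from the C-space cubicle by a Type I obstacle.

    front_contact / rear_contact are the l1 values at which the front or
    rear end of link l1 meets an obstacle (None means no contact).
    """
    for value in (front_contact, rear_contact):
        if value is not None and not 0.0 <= value <= l1_max:
            raise ValueError("contact value must lie in [0, l1_max]")
    if front_contact is not None and rear_contact is not None:
        # Degenerate case: link l1 cannot move, the whole cubicle is lost.
        return l1_max * l2_max * l3_max
    if front_contact is not None:
        # Everything beyond the front contact is unreachable.
        return (l1_max - front_contact) * l2_max * l3_max
    if rear_contact is not None:
        # Everything before the rear contact is unreachable.
        return rear_contact * l2_max * l3_max
    return 0.0
```

For instance, with a 10 × 4 × 5 cubicle and a front contact at l1 = 7, the blocked slab has volume (10 − 7) · 4 · 5 = 60.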

Interaction of Link l2 with Obstacles — Type II Obstacles

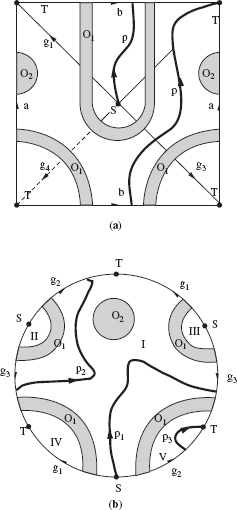

Front Part of Link l2 – Type II+ Obstacles. Consider the case when only the front part of link l2 interferes with an obstacle (Figure 6.2). Because link l2 effectively has two degrees of freedom, the corresponding Type II+ obstacle will look in C-space as shown in Figure 6.7. The monotonicity property in this case is as follows:

Type II+ Monotonicity. For any obstacle interacting with the front part of link l2, there are two axes (directions), namely l2 and l3, along which the C-obstacle behaves monotonically, as follows: If a position $(l_1', l_2', l_3')$ cannot be reached by the arm due to an obstacle interference, then no position $(l_1', l_2, l_3)$, such that $l_2 > l_2'$ and $l_3 \in [0, l_{3\max}]$, can be reached either.

As a result, a Type II+ collision, as at point H in Figure 6.7, indicates that any motion directly upward or downward from H along the obstacle will necessarily bring the C-point to one of the side walls of the C-space cubicle. This suggests that a plane can be chosen such that the exploration of the intersection curve between this plane and the Type II+ obstacle will produce a more promising outcome that will result either in a success or in the correct conclusion that the target cannot be reached. In the algorithm, the M-plane will be used, which offers some technical advantages. In general, all three arm joints will participate in the corresponding motion.

Figure 6.7 (a) W-space and (b) C-space with a Type II obstacle. (S, T) is the M-line; HabL is a part of the intersection curve between the obstacle O and M-plane.

For this case (front part of link l2 interacting with an obstacle), the decision on which local direction, right or left, is to be taken at a hit point H in order to follow the intersection curve between an M-plane and a Type II+ obstacle is made in the algorithm based on the following rule:

Rule 1:

If l1 H > l1 T, the current direction is “left.”

If l1 H < l1 T, the current direction is “right.”

If l1 H = l1 T, the target cannot be reached.

Rear Part of Link l2 – Type II− Obstacles. Now consider the case when only the rear part of link l2 (that is, the link's part to the left of joint J2) can interfere with obstacles (see obstacle O3, Figure 6.2). This situation produces a C-space very similar to that in Figure 6.7. The direction of obstacle monotonicity along the axis l2 will now reverse:

Type II− Monotonicity. For any obstacle interacting with the rear part of link l2, there are two axes (directions), namely l2 and l3, along which the C-obstacle behaves monotonically, as follows: If a position $(l_1', l_2', l_3')$ cannot be reached by the arm due to an obstacle interference, then no position $(l_1', l_2, l_3)$, such that $l_2 < l_2'$ and $l_3 \in [0, l_{3\max}]$, can be reached either.

In terms of decision-making, this case is similar to the one above, except that the direction of obstacle monotonicity along l2 axis reverses, and the choice of the current local direction at a hit point H obeys a slightly different rule:

Rule 2:

If l1 H > l1 T, the current direction is “right.”

If l1 H < l1 T, the current direction is “left.”

If l1 H = l1 T, the target cannot be reached.
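Rules 1 and 2 can be sketched together as one small decision function. The sketch reads Rule 1 as the mirror image of Rule 2 (larger l1 at the hit point means "left" for a front contact and "right" for a rear contact); the function name `local_direction`, the string labels, and the tolerance parameter `tol` are assumptions introduced for this example.

```python
def local_direction(l1_hit: float, l1_target: float, part: str,
                    tol: float = 0.0) -> str:
    """Choose the local direction at a hit point H on a Type II obstacle.

    part is "front" (Type II+, Rule 1) or "rear" (Type II-, Rule 2).
    Returns "left", "right", or "unreachable".
    """
    if part not in ("front", "rear"):
        raise ValueError("part must be 'front' or 'rear'")
    # With real sensor data an exact equality test is fragile, hence tol.
    if abs(l1_hit - l1_target) <= tol:
        return "unreachable"
    hit_is_larger = l1_hit > l1_target
    if part == "front":
        return "left" if hit_is_larger else "right"   # Rule 1
    return "right" if hit_is_larger else "left"        # Rule 2
```

The only inputs are the l1 coordinates of the hit point and of the target, plus the knowledge of which part of link l2 is in contact; the latter is exactly the W-space information that is lost in C-space.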

Interaction of Both Parts of Link l2 with Obstacles. Clearly, when both the front and the rear parts of link l2 interact simultaneously with obstacles, the resulting Type II+ and Type II− obstacles fuse into a single C-obstacle that divides C-space into two separate volumes unreachable one from another (see Figure 6.8). If going from S to T requires the arm to cross that obstacle, the algorithm will conclude that the target position cannot be reached.

Figure 6.8 C-space in the case when both front and rear parts of link l2 interact with obstacles, producing a single obstacle that is a combination of a Type II+ and Type II− obstacles.

Front Part of Link l3 – Type III+ Obstacles. Assume for a moment that only the front part of link l3 can interfere with an obstacle (see, e.g., obstacle O1, Figures 6.2 and 6.4). Consider the cross sections of the obstacle with two horizontal planes: one corresponding to the value $l_3'$ and the other corresponding to the value $l_3''$, with $l_3'' > l_3'$. Denote these cross sections a′ and a″, respectively. Each cross section is a closed set limited by a simple closed curve; it may or may not include points on the C-space boundary. Because link l3 is a generalized cylinder, the vertical projection of one cross section onto the other satisfies the relationship a′ ⊆ a″. This is a direct result of the Type III+ obstacle monotonicity property, which is formulated as follows:

Type III+ Monotonicity. For any obstacle interacting with the front part of link l3, there is one axis (direction), namely l3, along which the corresponding C-obstacle behaves monotonically, as follows: if a position $(l_1', l_2', l_3')$ cannot be reached by the arm due to an obstacle interference, then no position $(l_1', l_2', l_3)$ such that $l_3 > l_3'$ can be reached either.

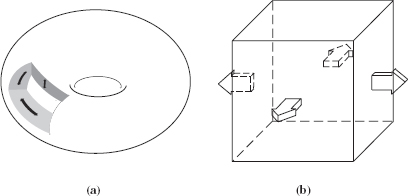

This property results in a special “stalactite” shape of Type III+ obstacles. A typical property of icicles and of beautiful natural stalactites that hang down from the ceilings of many caves is that their horizontal cross section is continuously reduced (in theory at least) from its top to its bottom. Each Type III+ obstacle behaves in a similar fashion. It forms a “stalactite” that hangs down from the ceiling of the C-space cubicle, and its horizontal cross section can only decrease, with its maximum horizontal cross section being at the ceiling level, $l_3 = l_{3\max}$ (see cubicle Oabcdefg and obstacle O1, Figure 6.4). For any two horizontal cross sections of a Type III+ obstacle, taken at levels $l_3'$ and $l_3''$ such that $l_3'' > l_3'$, the projection of the first cross section ($l_3'$ level) onto a horizontal plane contains no points that do not belong to the similar projection of the second cross section ($l_3''$ level). This behavior is the reflection of the monotonicity property.
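The nesting of cross sections can be checked directly on a discretized obstacle. In the sketch below, an obstacle is assumed to be stored as a dictionary that maps an l3 level index to the set of blocked (l1, l2) cells at that level; this encoding and the name `slices_nested_upward` are illustrative assumptions.

```python
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]


def slices_nested_upward(slices: Dict[int, Set[Cell]]) -> bool:
    """True if every cross section is contained in the one above it.

    This is the "stalactite" pattern: the cross section can only grow
    as l3 increases toward the ceiling of the C-space cubicle.
    """
    levels = sorted(slices)
    return all(slices[lower] <= slices[upper]
               for lower, upper in zip(levels, levels[1:]))


# A stalactite: empty at the floor, widest at the ceiling.
stalactite = {0: set(), 1: {(2, 2)}, 2: {(2, 2), (2, 3)}}
# A stalagmite has the opposite pattern; flipping the l3 axis
# (negating the level index) turns it into the stalactite pattern.
stalagmite = {0: {(2, 2), (2, 3)}, 1: {(2, 2)}, 2: set()}
flipped = {-level: cells for level, cells in stalagmite.items()}
```

Here `slices_nested_upward(stalactite)` and `slices_nested_upward(flipped)` both hold, whereas the unflipped stalagmite fails the test.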

Because of this topology of Type III+ obstacles, the sufficient motion for maneuvering around any such obstacle—that is, motion sufficient to guarantee convergence—turns out to be motion along the intersection curves between the corresponding C-obstacle and either the M-plane or the V-plane (specifically, its part below M-plane), plus possibly some motion in the floor of the C-space cubicle (Figure 6.9).

Rear Part of Link l3 – Type III− Obstacles. A similar argument can be made for the case when only the rear end of link l3 interacts with an obstacle (see, e.g., obstacle O2, Figures 6.2, 6.4, and 6.5). In C-space the corresponding Type III− obstacle becomes a “stalagmite” growing upward from the C-space floor. This shape is a direct result of the Type III− obstacle monotonicity property, which is reversed compared to the above situation with the front part of link l3, as follows:

Figure 6.9 C-space with a Type III obstacle. (a) Curve abc is the intersection curve between the obstacle and V-plane that would be followed by the algorithm. (b) Here the Type III obstacle intersects the floor of C-space. Curve aHbLc is the intersection curve between the obstacle and V-plane and C-space floor that would be followed by the algorithm.

Type III− Monotonicity. For any obstacle interacting with the rear part of link l3, there is one axis (direction), l3, along which the corresponding C-obstacle behaves monotonically, as follows: if a position $(l_1', l_2', l_3')$ cannot be reached by the arm due to an obstacle interference, then no position $(l_1', l_2', l_3)$ such that $l_3 < l_3'$ can be reached either.
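The monotonicity statements for the three obstacle types can be collected into a single predicate that answers the question "given one contact, which other positions are already known to be unreachable?" The reading used here (l1 held fixed for Type II contacts, l1 and l2 held fixed for Type III contacts) is an interpretation of the statements above, and the name `known_unreachable` and the contact labels are hypothetical.

```python
from typing import Tuple

Position = Tuple[float, float, float]


def known_unreachable(contact: str, hit: Position, q: Position) -> bool:
    """Is position q known to be blocked, given that hit is blocked?

    contact is one of "I+", "I-", "II+", "II-", "III+", "III-", where
    "+" means the front part of the link touches the obstacle and "-"
    means the rear part does.
    """
    h1, h2, h3 = hit
    q1, q2, q3 = q
    if contact == "I+":      # any l2, l3; larger l1
        return q1 >= h1
    if contact == "I-":      # any l2, l3; smaller l1
        return q1 <= h1
    if contact == "II+":     # same l1, any l3; larger l2
        return q1 == h1 and q2 >= h2
    if contact == "II-":     # same l1, any l3; smaller l2
        return q1 == h1 and q2 <= h2
    if contact == "III+":    # same l1, l2; larger l3
        return q1 == h1 and q2 == h2 and q3 >= h3
    if contact == "III-":    # same l1, l2; smaller l3
        return q1 == h1 and q2 == h2 and q3 <= h3
    raise ValueError("unknown contact type: " + contact)
```

The predicate shows how the amount of information gained from a single contact shrinks from Type I (a whole slab of C-space) to Type III (a single vertical segment).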

The motion sufficient for maneuvering around a Type III− obstacle and for guaranteeing convergence is motion along the curves of intersection between the corresponding C-obstacle and either the M-plane or the V-plane (its part above M-plane), or the ceiling of the C-space cubicle.

Interaction of Both Parts of Link l3 with Obstacles. This is the case when in C-space a “stalactite” obstacle meets a “stalagmite” obstacle and they form a single obstacle. (Again, similar shapes are found in some caves.) Then the best route around the obstacle is likely to be in the region of the “waist” of the new obstacle.

Let us consider this case in detail. For both parts of link l3 to interact with obstacles, or with different pieces of the same obstacle, the obstacles must be of both types, Type III+ and Type III−. Consider an example with two such obstacles shown in Figure 6.10. True, these C-space obstacles don't exactly look like the stalactites and stalagmites that one sees in a natural cave, but they do have their major properties: One “grows” from the floor and the other grows from the ceiling, and they both satisfy the monotonicity property, which is how we think of natural stalactites and stalagmites.

Without loss of generality, assume that at first only one part of link l3—say, the rear part—encounters an obstacle (see obstacle O2, Figure 6.10). Then the arm will start maneuvering around the obstacle following the intersection curve between the V-plane and the obstacle (path segment aH, Figure 6.10). During this motion the front part of link l3 contacts the other (or another part of the same) obstacle (here, obstacle O1, Figure 6.10).

At this moment the C-point is still in the V-plane, and also at the intersection curve between both obstacles, one of Type III+ and the other of Type III− (point H, Figure 6.10; see also the intersection curve H2cdL2fg, Figure 6.12). As with any curve, there are two possible local directions for following this intersection curve. If both of them lead to walls, then the target is not reachable. In this example the arm will follow the intersection curve—which will depart from V-plane, curve HbcL—until it meets V-plane at point L, then continue in the V-plane, and so on.

Since for the intersection between Type III+ and Type III− obstacles the monotonicity property works in the opposite directions—hence the minimum area “waist” that they form—the following statement holds (it will be used below explicitly in the algorithm):

Corollary 6.2.2. If there is a path around the union of a Type III+ and a Type III− obstacle, then there must be a path around them along their intersection curve.

Figure 6.10 C-space in the case when both front and rear parts of link l3 interact with obstacles, producing a single obstacle that is a combination of a Type III+ and Type III− obstacles.

Simultaneous Interaction of Combinations of Links with Obstacles. Since Type I obstacles are trivial from the standpoint of motion planning—they can be simply treated as walls parallel to the sides of the C-space cubicle—we focus now on the combinations of Type II and Type III obstacles. When both links l2 and l3 are simultaneously in contact with obstacles, the C-point is at the intersection curve between Type II and Type III obstacles, which presents a simple closed curve. (Refer, for example, to the intersection of obstacles O2 and O3, Figure 6.11.) Observe that the Type III monotonicity property is preserved in the union of Type II and Type III obstacles. Hence,

Corollary 6.2.3. If there is a path around the union of a Type II and a Type III obstacle, then there must be a path around them along their intersection curve.

As in the case of intersection between the Type III obstacle and the V-plane (see above), one of the two possible local directions is clearly preferable to the other. For example, when in Figure 6.11 the C-point reaches, at point b, the intersection curve between obstacles O2 and O3, it is clear from the monotonicity property that the C-point should choose the upward direction to follow the intersection curve. This is because the downward direction is known to lead to the base of the obstacle O2 “stalagmite” and is thus less promising (though not necessarily hopeless) than the upward direction (see path segments bc and cL, Figure 6.11).

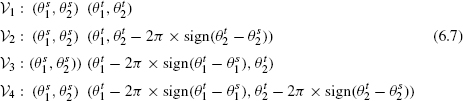

Figure 6.11 The path in C-space in the presence of obstacles O2 and O3 of Figure 6.2; SHabcLdeT is the actual path of the arm endpoint, and curve S′H′a′…T′ is its projection onto the plane (l1, l2).

Let us stress that in spite of the seeming multiplicity of cases and elemental strategies described, their logic is the same: All elemental strategies force the C-point to move either along the M-line, or along the intersection curves between a C-obstacle and a plane (M-plane, V-plane, or C-space side planes), or along the intersection curves between two Type III C-obstacles. Depending on the real workspace obstacles, various combinations of such path segments may occur. We will show in the next section that if in a given scene there exists a path to the target position, a combination of elemental strategies is sufficient to produce one.

6.2.4 Connectivity of $\mathcal{C}$

A space or manifold is connected relative to two points in it if there is a path that connects both points and that lies fully in the space (manifold). For a given path l, the corresponding trajectory l(t) defines this path as a function of a scalar variable t; for example, t may indicate time. Denote the 2D Cartesian space formed by joint values l1, l2 as $\mathcal{C}_p$.

We intend to show here that for the 3D Cartesian arm the connectivity in $\mathcal{C}$ can be deduced from the connectivity in $\mathcal{C}_p$. Such a relationship will mean that the problem of path planning for the 3D Cartesian arm can be reduced to that for a point automaton in the plane, and hence the planar strategies of Chapter 3 can be utilized here, likely with some modifications.

Define the conventional projection $P_c(E)$ of a set of points $E = \{(l_1, l_2, l_3)\} \subseteq \mathcal{C}$ onto space $\mathcal{C}_p$ as $P_c(E) = \{(l_1, l_2) \mid \exists\, l_3,\ (l_1, l_2, l_3) \in E\}$. Thus, $P_c(S)$, $P_c(T)$, $P_c(\text{M-line})$, and $P_c(\{O\})$ are, respectively, the projections of points S and T, the M-line, and C-obstacles onto $\mathcal{C}_p$. See, for example, projections $P_c$ of three obstacles, O1, O2, O3 (Figure 6.12). It is easy to see that $P_c(O_1 \cap O_2) = P_c(O_1) \cap P_c(O_2)$.

Define the minimal projection $P_m(E)$ of a set of points $E = \{(l_1, l_2, l_3)\} \subseteq \mathcal{C}$ onto space $\mathcal{C}_p$ as $P_m(E) = \{(l_1, l_2) \mid \forall\, l_3,\ (l_1, l_2, l_3) \in E\}$. Thus, if a C-obstacle O stretches over the whole range of $l_3 \in [0, l_{3\max}]$, and E contains all the points in O, then $P_m(E)$ is the intersection between the (l1, l2)-space and the maximum cylinder that can be inscribed into O and whose axis is parallel to l3. Note that if a set E is a cylinder whose axis is parallel to the l3 axis, then $P_c(E) = P_m(E)$. Type I and Type II obstacles present such cylinders. In general, $P_m(S) = P_m(T) = \emptyset$.
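On a discretized C-space the two projections reduce to an "exists" and a "for all" test over the l3 levels. The sketch below assumes integer grid indices for (l1, l2, l3); the function names and the explicit `l3_levels` argument are choices made for this illustration.

```python
from typing import Iterable, Set, Tuple

Point = Tuple[int, int, int]
Cell = Tuple[int, int]


def conventional_projection(points: Iterable[Point]) -> Set[Cell]:
    """Cells (l1, l2) that lie under at least one point of the set."""
    return {(l1, l2) for (l1, l2, _l3) in points}


def minimal_projection(points: Iterable[Point],
                       l3_levels: Iterable[int]) -> Set[Cell]:
    """Cells (l1, l2) whose whole l3 column belongs to the set."""
    point_set = set(points)
    levels = list(l3_levels)
    return {(l1, l2)
            for (l1, l2) in conventional_projection(point_set)
            if all((l1, l2, l3) in point_set for l3 in levels)}


levels = range(3)
# Column (0, 0) is fully occupied; column (1, 0) only at the top level.
E = {(0, 0, 0), (0, 0, 1), (0, 0, 2), (1, 0, 2)}
```

With this data, `conventional_projection(E)` contains both columns, while `minimal_projection(E, levels)` keeps only the fully occupied column (0, 0). A single point has an empty minimal projection as soon as there is more than one l3 level.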

Existence of Collision-Free Paths. We will now consider the relationship between a path in $\mathcal{C}$ and its projection in $\mathcal{C}_p$. The following statement comes directly from the definition of $P_c$ and $P_m$:

Lemma 6.2.1. For any C-obstacle O in $\mathcal{C}$ and any set $E_p$ in $\mathcal{C}_p$, if $E_p \cap P_c(O) = \emptyset$, then $(E_p \times [0, l_{3\max}]) \cap O = \emptyset$.

If the conclusion does not hold, then $(E_p \times [0, l_{3\max}]) \cap O \neq \emptyset$. We have $\emptyset \neq P_c\big((E_p \times [0, l_{3\max}]) \cap O\big) \subseteq E_p \cap P_c(O)$. Thus a contradiction.

The next statement provides a sufficient condition for the existence of a path in C-space:

Lemma 6.2.2. Given a set of obstacles {O} in $\mathcal{C}$ and the corresponding projections $P_c(\{O\})$, if there exists a path between $P_c(S)$ and $P_c(T)$ in $\mathcal{C}_p$, then there must exist a path between S and T in $\mathcal{C}$.

Let $l_p(t) = \{l_1(t), l_2(t)\}$, t ∈ [0, 1], be a trajectory of $P_c$(C-point) between $P_c(S)$ and $P_c(T)$ in $\mathcal{C}_p$. From Lemma 6.2.1, $(l_p(t) \times [0, l_{3\max}]) \cap \{O\} = \emptyset$ in $\mathcal{C}$. Hence, for example, the path $l(t) = \{l_p(t),\ (1 - t)\, l_{3S} + t\, l_{3T}\}$, where $l_{3S}$ and $l_{3T}$ are the l3 coordinates of S and T, connects S and T in $\mathcal{C}$.
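The construction behind Lemma 6.2.2 can be sketched as follows: once a planar path avoids the conventional projections of all obstacles, every point above it is free, so any l3 profile that starts at the l3 coordinate of S and ends at that of T yields a valid path. Linear interpolation of l3 is one simple choice, assumed here for illustration; the function name `lift_path` is hypothetical.

```python
from typing import List, Sequence, Tuple


def lift_path(planar_path: Sequence[Tuple[float, float]],
              l3_start: float,
              l3_target: float) -> List[Tuple[float, float, float]]:
    """Lift a planar path (l1, l2) into 3D by interpolating l3 linearly.

    The planar path is assumed to avoid the conventional projections of
    all obstacles, so no collision check is needed along the way.
    """
    n = len(planar_path)
    if n < 2:
        raise ValueError("need at least a start and a target point")
    lifted = []
    for k, (l1, l2) in enumerate(planar_path):
        t = k / (n - 1)  # runs from 0 at S to 1 at T
        lifted.append((l1, l2, (1 - t) * l3_start + t * l3_target))
    return lifted
```

For example, `lift_path([(0, 0), (1, 0), (2, 0)], 0.0, 4.0)` raises l3 from 0 to 4 in equal steps while the planar coordinates follow the given path.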

To find the necessary condition, we will use the notion of a minimal projection. The following statement asserts that a zero overlap between two sets in $\mathcal{C}$ implies a zero overlap between their minimal projections in $\mathcal{C}_p$:

Lemma 6.2.3. For any set E and any C-obstacle O in $\mathcal{C}$, if $O \cap E = \emptyset$, then $P_m(E) \cap P_m(O) = \emptyset$.

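By definition, $P_m(E) \times [0, l_{3\max}] \subseteq E$ and $P_m(O) \times [0, l_{3\max}] \subseteq O$. Thus, if $P_m(E) \cap P_m(O) \neq \emptyset$, then $O \cap E \supseteq (P_m(E) \cap P_m(O)) \times [0, l_{3\max}] \neq \emptyset$.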

To use this lemma in the algorithm design, we need to describe minimal projections for different obstacle types. For any Type I or Type II obstacle O, $P_c(O) = P_m(O)$. For a Type III obstacle we consider three cases, using, as an example, a Type III+ obstacle; denote it O+.

- O+ intersects the floor F of $\mathcal{C}$. Because of the monotonicity property, $P_m(O^+) = O^+ \cap F$. In other words, the minimal projection of O+ is exactly the intersection area of O+ with the floor F.

- O+ intersects with a Type III− obstacle, O−. Then, $B(P_m(O^+ \cup O^-)) = P_c(B(O^+) \cap B(O^-))$, where B(O) refers to the boundary of O. That is, the boundary curve of the combined minimal projection of O+ and O− is the conventional projection of the intersection curve between the boundary surfaces of O+ and O−.

- Neither of the above cases applies. Then $P_m(O^+) = \emptyset$.

A similar argument can be carried out for a Type III− obstacle.
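The first and third cases can be checked on a small discretized stalactite. In the sketch below a Type III+ obstacle is assumed to be described by the lowest blocked l3 index of each (l1, l2) column, with the floor at index 0; the helper names are hypothetical, and the second case (a stalactite meeting a stalagmite) is not covered.

```python
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[int, int, int]
Cell = Tuple[int, int]


def stalactite(bottom: Dict[Cell, int], top: int) -> Set[Point]:
    """Blocked cells of a Type III+ obstacle: each column is blocked
    from its lowest blocked index up to the ceiling index `top`."""
    return {(l1, l2, l3)
            for (l1, l2), lowest in bottom.items()
            for l3 in range(lowest, top + 1)}


def minimal_projection(points: Set[Point],
                       levels: Iterable[int]) -> Set[Cell]:
    """Columns that are blocked at every l3 level."""
    level_list = list(levels)
    return {(l1, l2) for (l1, l2, _l3) in points
            if all((l1, l2, l3) in points for l3 in level_list)}


def floor_slice(points: Set[Point]) -> Set[Cell]:
    """Intersection of the obstacle with the floor l3 = 0."""
    return {(l1, l2) for (l1, l2, l3) in points if l3 == 0}


top = 3
# Case 1: column (0, 0) reaches the floor, the others do not.
touching = stalactite({(0, 0): 0, (0, 1): 2, (1, 1): 3}, top)
# Case 3: no column reaches the floor.
hanging = stalactite({(0, 0): 1, (0, 1): 2}, top)
```

For `touching`, the minimal projection and the floor slice are the same single cell; for `hanging`, the minimal projection is empty, so the obstacle leaves no trace in the planar problem.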

We now turn to establishing a necessary and sufficient condition that ties the existence of paths in the plane $\mathcal{C}_p$ with that in $\mathcal{C}$. This condition will provide a base for generalizing, in the next section, a planar path planning algorithm to the 3D space. Assume that points S and T lie outside of obstacles.

Theorem 6.2.1. Given points S, T and a set of obstacles {O} in $\mathcal{C}$, a path exists between S and T in $\mathcal{C}$ if and only if there exists a path in $\mathcal{C}_p$ between points $P_c(S)$ and $P_c(T)$ among the obstacles $P_m(\{O\})$.

First, we prove the necessity. Let l(t), t ∈ [0, 1], be a trajectory in $\mathcal{C}$. From Lemma 6.2.3, $P_m(l(t)) \cap P_m(\{O\}) = \emptyset$. Hence, the path $P_m(l(t))$ connects $P_c(S)$ and $P_c(T)$ in $\mathcal{C}_p$.

To show the sufficiency, let $l_p(t)$, t ∈ [0, 1], be a trajectory in $\mathcal{C}_p$ and let $l_p(\cdot)$ be the corresponding path. Then $l_p(\cdot) \times [0, l_{3\max}]$ presents a manifold in $\mathcal{C}$. Define $E = (l_p(\cdot) \times [0, l_{3\max}]) \cap \{O\}$ and let $E_c$ be the complement of E in $l_p(\cdot) \times [0, l_{3\max}]$. We need to show that $E_c$ consists of one connected component. Assume that this is not true. For any t* ∈ [0, 1], since $l_p(t^*) \cap P_m(\{O\}) = \emptyset$, there exists $l_3^*$ such that point $(l_p(t^*), l_3^*) \in E_c$. The only possibility for $E_c$ to consist of two or more disconnected components is when there exists t* and a set $\{l_3', l_3^*, l_3''\}$, $l_3' < l_3^* < l_3''$, such that $(l_p(t^*), l_3^*) \in E$ while $(l_p(t^*), l_3') \in E_c$ and $(l_p(t^*), l_3'') \in E_c$. However, this cannot happen because of the monotonicity property of obstacles. Hence $E_c$ must be connected, and since points S and T lie outside of obstacles, then S, T ∈ $E_c$. Q.E.D.
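The statement of Theorem 6.2.1 can be tried out on a coarse grid. The sketch below is an illustration only, not a proof and not the algorithm of this chapter: it runs a breadth-first search in a 3D grid and in the 2D grid of minimal projections and compares the answers for two hand-made scenes. Grid connectivity only approximates the continuous case, so agreement is claimed only for scenes like these; all names (`reachable`, `paths_exist`) are hypothetical.

```python
from collections import deque


def reachable(start, goal, blocked, shape):
    """Breadth-first search on an axis-aligned grid of any dimension."""
    queue, seen = deque([start]), {start}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            return True
        for axis in range(len(shape)):
            for step in (-1, 1):
                nxt = cell[:axis] + (cell[axis] + step,) + cell[axis + 1:]
                if (0 <= nxt[axis] < shape[axis]
                        and nxt not in blocked and nxt not in seen):
                    seen.add(nxt)
                    queue.append(nxt)
    return False


def minimal_projection(blocked, n3):
    """Columns (l1, l2) blocked at every one of the n3 levels."""
    return {(l1, l2) for (l1, l2, _l3) in blocked
            if all((l1, l2, k) in blocked for k in range(n3))}


def paths_exist(start, goal, blocked, shape):
    """Return (path exists in 3D, path exists in 2D among P_m)."""
    n1, n2, n3 = shape
    in_3d = reachable(start, goal, blocked, shape)
    in_2d = reachable(start[:2], goal[:2],
                      minimal_projection(blocked, n3), (n1, n2))
    return in_3d, in_2d


shape = (5, 5, 4)
S, T = (0, 0, 2), (0, 4, 2)
# A Type II-like wall across l2 = 2 with a gap at l1 = 4.
wall = {(l1, 2, l3) for l1 in range(4) for l3 in range(4)}
# A stalactite in the gap that leaves only the floor level free.
partial = {(4, 2, l3) for l3 in range(1, 4)}
# The same stalactite extended down to the floor.
full = {(4, 2, l3) for l3 in range(4)}
```

In the first scene the stalactite does not reach the floor, its minimal projection is empty, and both searches find a path; in the second scene the gap is closed in both the 3D and the planar problem.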

Lifting 2D Algorithms into 3D Space. Theorem 6.2.1 establishes the relationship between collision-free paths in C and collision-free paths in Cp. We now want to develop a similar relationship between motion planning algorithms for C and those for Cp. We address, in particular, the following question: Given an algorithm Ap for Cp, can one construct an algorithm A for C, such that any trajectory (path) l(t) produced by A in C in the presence of obstacles {O} maps by Pm into the trajectory lp(t) produced by Ap in Cp in the presence of obstacles Pm({O})?

We first define the class of algorithms from which algorithms Ap are chosen. A planar algorithm Ap is said to belong to class ![]()p if and only if its operation is based on local information, such as from tactile sensors; the paths it produces are confined to the M-line, obstacle boundaries, and W-space boundaries; and it guarantees convergence. In other words, class ![]()p comprises only sensor-based motion planning algorithms that satisfy our usual model. In addition, we assume that all decisions about the direction to proceed along the M-line or along the obstacle boundary are made at the intersection points between M-line and obstacle boundaries.

Theorem 6.2.1 says that if there exists a path in Cp (between projections of points S and T), then there exists at least one path in C. Our goal is to dynamically construct the path in C while Ap, the given algorithm, generates its path in Cp. To this end, we will analyze five types of elemental motions that appear in C, called Motion I, Motion II, Motion III, Motion IV, and Motion V, each corresponding to the Pc(C-point) motion either along the Pc(M-line) or along the obstacle boundaries Pc({O}). Based on this analysis, we will augment the decision-making mechanism of Ap to produce the algorithm A for C.

Out of the three types of obstacle monotonicity property identified above, only Type III monotonicity is used in this section. One will see later that other monotonicity types can also be used, resulting in more efficient algorithms. Below, Type I and II obstacles are treated as C-space side walls; the C-space ceiling is treated as a Type III+ obstacle; and the C-space floor is treated as a Type III− obstacle. Note that when the arm comes in contact simultaneously with what it perceives as two Type III obstacles, only those of the opposite “signs” have to be distinguished, that is, a Type III+ and a Type III−. Obstacles of the same sign will be perceived as one. Below, encountering “another Type III obstacle” refers to an obstacle of the opposite sign. Then the projection Pm of the union of the obstacles is not empty, Pm(·) ≠ ∅.

Among the six local directions defined in Section 6.2.1 (forward, backward, left, right, upward, and downward), the first four can be used in a 2D motion planning algorithm. Our purpose is to design a general scheme such that, given any planar algorithm Ap, a 3D algorithm A can be developed that lifts the decision-making mechanism of Ap into 3D space by complementing the set of local directions with the elements upward and downward. We now turn to the study of five fundamental motions in 3D, which will later be incorporated into the 3D algorithm.

Motion I–Along the M-Line. Starting at point S, the C-point moves along the M-line, as in Figure 6.7, segment SH; this corresponds to Pc(C-point) moving along the Pc(M-line) (segment S′H′, Figure 6.7). Unless algorithm Ap calls for terminating the procedure, one of these two events can take place:

- A wall is met; this corresponds to Pc(C-point) encountering an obstacle. Algorithm Ap now has to decide whether Pc(C-point) will move along Pc(M-line), or turn left or right to go around the obstacle. Accordingly, the C-point will choose to reverse its local direction along the M-line, or to turn left or right to go around the wall. In the latter case we choose a path along the intersection curve between the wall and the M-plane, which combines two advantages: (i) While not true in the worst case, due to obstacle monotonicity the M-plane typically contains one of the shortest paths around the obstacle; and (ii) after passing around the obstacle, the C-point will meet the M-line exactly at the point of its intersection with the obstacle (point L, Figure 6.7), and so the path will be simpler. In general, all three joints participate in this motion.

- A Type III+ or III− obstacle is met. The C-point cannot proceed along the M-line any longer. The local objective of the arm here is to maneuver around the obstacle so as to meet the M-line again at a point that is closer to T than the encounter point. Among various ways to pass around the obstacle, we choose here motion in the V-plane. The intersection curve between the Type III obstacle and the V-plane is a simple planar curve. It follows from the monotonicity property of Type III obstacles that when the front (rear) part of link l3 hits an obstacle, any motion upward (accordingly, downward) along the obstacle will necessarily bring the C-point to the ceiling (floor) of the C-space. Therefore, local contact information is sufficient here for a global planning inference: the local direction downward (upward) along the intersection curve between the V-plane and the obstacle is a promising direction. In the example in Figure 6.9a, the resulting motion produces the curve abc.

Motion II–Along the Intersection Curve Between the M-Plane and a Wall. In Cp, this motion corresponds to Pc(C-point) moving around the obstacle boundary curve in the chosen direction (see Figure 6.12, segments H1aL1 and ![]()). One of these two events can take place:

- The M-line is encountered, as at point L1, Figure 6.12; in Cp this means Pc(M-line) is encountered. At this point, algorithm Ap will decide whether Pc(C-point) should start moving along the Pc(M-line) or continue moving along the intersection curve between the M-plane and the obstacle boundary. Accordingly, the C-point will either resume its motion along the M-line, as in Motion I, or will keep moving along the M-plane/obstacle intersection curve.

- A Type III+ or III− obstacle is met. In Cp, the Pc(C-point) keeps moving in the same local direction along the obstacle boundary (path segments bcL and b′L′, Figure 6.11). As for the C-point, there are two possible directions for it to follow the intersection curve between the Type III obstacle and the wall. Since the Type III+ (or III−) monotonicity property is preserved here, the only promising local direction for passing around the Type III+ (III−) obstacle is downward (upward). This possibility corresponds to Motion IV below.

Figure 6.12 The path in C-space in the presence of obstacles O1, O2, and O3 of Figure 6.2. Trajectory SH1aL1bH2cdL2eT is the actual path of the arm endpoint; ![]() is its projection onto the plane (l1, l2).

Motion III–Along the Intersection Curve Between the V-Plane and a Type III+ or III− Obstacle. This corresponds to moving along the Pc(M-line) in Cp. One of the following can happen:

- The M-line is met. The C-point resumes its motion along the M-line as in Motion I; see path segments bc, cT and b′c′, c′T′, Figure 6.9a.

- A wall is met. This corresponds to the Pm(C-point) encountering an obstacle. According to algorithm Ap, Pc(C-point) will either reverse its local direction to move along Pc(M-line) or will make a turn to follow the obstacle. Accordingly, the C-point will either (a) reverse its local direction to follow the intersection curve between the V-plane and the (Type III) obstacle or (b) try to go around the union of the wall and the obstacle. For the latter motion we choose a path along the intersection curve between the wall and the Type III obstacle.

- Another Type III+ or III− obstacle is met. Since the Pm projection of both Type III obstacles onto Cp is not empty, this corresponds to the Pc(C-point) encountering an obstacle, which presents the Pm projection of the intersection curve between both obstacles. According to algorithm Ap, the Pc(C-point) will either (a) reverse its local direction to move along Pc(M-line) or (b) make a turn to follow the obstacle. Accordingly, the C-point will either (a) reverse its local direction to follow the intersection curve between the V-plane and the Type III obstacle or (b) try to go around the union of two Type III obstacles. For the latter motion, we choose a path along the intersection curve between the two Type III obstacles in the local direction left or right decided by Ap.
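The case analysis for Motions I, II, and III can be summarized as a small transition table. The following is only a sketch under stated assumptions: the names `Motion`, `TRANSITIONS`, `next_motion`, and `vertical_direction`, the event labels, and the choice labels are hypothetical, and the mapping of the "turn at a wall" case of Motion III to the Type III obstacle/wall curve is our reading of the text. Where the text leaves the decision to the planar algorithm Ap, the table holds a dictionary keyed by Ap's choice; otherwise the next motion is fixed.

```python
from enum import Enum


class Motion(Enum):
    M_LINE = "I"              # along the M-line
    MPLANE_WALL = "II"        # along the M-plane / wall intersection curve
    VPLANE_TYPE3 = "III"      # along the V-plane / Type III intersection curve
    TYPE3_WALL = "IV"         # along the Type III obstacle / wall curve
    TYPE3_TYPE3 = "III-III"   # along the curve between two Type III obstacles


# Events: "wall", "type3" (a Type III+ or III- obstacle), "m_line".
# A Motion value means the outcome is fixed; a dict means Ap decides.
TRANSITIONS = {
    (Motion.M_LINE, "wall"): {"reverse": Motion.M_LINE,
                              "turn": Motion.MPLANE_WALL},
    (Motion.M_LINE, "type3"): Motion.VPLANE_TYPE3,
    (Motion.MPLANE_WALL, "m_line"): {"resume": Motion.M_LINE,
                                     "continue": Motion.MPLANE_WALL},
    (Motion.MPLANE_WALL, "type3"): Motion.TYPE3_WALL,
    (Motion.VPLANE_TYPE3, "m_line"): Motion.M_LINE,
    (Motion.VPLANE_TYPE3, "wall"): {"reverse": Motion.VPLANE_TYPE3,
                                    "turn": Motion.TYPE3_WALL},
    (Motion.VPLANE_TYPE3, "type3"): {"reverse": Motion.VPLANE_TYPE3,
                                     "turn": Motion.TYPE3_TYPE3},
}


def vertical_direction(sign):
    """Promising vertical direction around a Type III obstacle of this sign."""
    return {"+": "downward", "-": "upward"}[sign]


def next_motion(motion, event, ap_choice=None):
    """Return the next elemental motion, consulting Ap's choice when needed."""
    entry = TRANSITIONS[(motion, event)]
    if isinstance(entry, Motion):
        return entry
    if ap_choice not in entry:
        raise ValueError(f"Ap must choose one of {sorted(entry)}")
    return entry[ap_choice]


# Example: the C-point leaves S along the M-line, meets a Type III+ obstacle,
# passes it in the V-plane, and meets the M-line again.
state = Motion.M_LINE
state = next_motion(state, "type3")
print(state, vertical_direction("+"))
state = next_motion(state, "m_line")
print(state)
```

Keeping Ap's choices as explicit keys mirrors the idea that the 3D algorithm adds only the upward and downward decisions, while every left, right, or reverse decision still comes from the planar algorithm.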

Motion IV–Along the Intersection Curve Between a Type III Obstacle and a Wall. In Cp, this corresponds to the Pc(C-point) moving along the boundary of