CHAPTER 3

CHAPTER 3

Motion Planning for a Mobile Robot

Thou mayst not wander in that labyrinth; There Minotaurs and ugly treasons lurk.

— William Shakespeare, King Henry the Sixth

What is the difference between exploring and being lost?

— Dan Eldon, photojournalist

As discussed in Chapter 1, to plan a path for a mobile robot means to find a continuous trajectory leading from its initial position to its target position. In this chapter we consider a case where the robot is a point and where the scene in which the robot travels is the two-dimensional plane. The scene is populated with unknown obstacles of arbitrary shapes and dimensions. The robot knows its own position at all times, and it also knows the position of the target that it attempts to reach. Other than that, the only source of robot's information about the surroundings is its sensor. This means that the input information is of a local character and that it is always partial and incomplete. In fact, the sensor is a simple tactile sensor: It will detect an obstacle only when the robot touches it. “Finding a trajectory” is therefore a process that goes on in parallel with the journey: The robot will finish finding the path only when it arrives at the target location.

We will need this model simplicity and the assumption of a point robot only at the beginning, to develop the basic concepts and algorithms and to produce the upper and lower bound estimates on the robot performance. Later we will extend our algorithmic machinery to more complex and more practical cases, such as nonpoint (physical) mobile robots and robot arm manipulators, as well as to more complex sensing, such as vision or proximity sensing. To reflect the abstract nature of a point robot, we will interchangeably use for it the term moving automaton (MA, for brevity), following some literature cited in this chapter.

Other than those above, no further simplifications will be necessary. We will not need, for example, the simplifying assumptions typical of approaches that deal with complete input information such as approximation of obstacles with algebraic and semialgebraic sets; representation of the scene with intermediate structures such as connectivity graphs; reduction of the scene to a discrete space; and so on. Our robot will treat obstacles as they are, as they are sensed by its sensor. It will deal with the real continuous space—which means that all points of the scene are available to the robot for the purpose of motion planning.

The approach based on this model (which will be more carefully formalized later) forms the sensor-based motion planning paradigm, or, as we called it above, SIM (Sensing–Intelligence–Motion). Using algorithms that come out of this paradigm, the robot is continuously analyzing the incoming sensing information about its current surroundings and is continuously planning its path. The emphasis on strictly local input information is somewhat similar to the approach used by Abelson and diSessa [36] for treating geometric phenomena based on local information: They ask, for example, if a turtle walking along the sides of a triangle and seeing only a small area around it at every instant would have enough information to prove triangle-related theorems of Euclidean geometry. In general terms, the question being posed is, Can one make global inferences based solely on local information? Our question is very similar: Can one guarantee a global solution—that is, a path between the start and target locations of the robot—based solely on local sensing?

Algorithms that we will develop here are deterministic. That is, by running the same algorithm a few times in the same scene and with the same start and target points, the robot should produce identical paths. This point is crucial: One confusion in some works on robot motion planning comes from a view that the uncertainty that is inherent in the problem of motion planning with incomplete information necessarily calls for probabilistic approaches. This is not so.

As discussed in Chapter 1, the sensor-based motion planning paradigm is distinct from the paradigm where complete information about the scene is known to the robot beforehand—the so-called Piano Mover's model [16] or motion planning with complete information. The main question we ask in this and the following chapters is whether, under our model of sensor-based motion planning, provable (complete and convergent are equivalent terms) path planning algorithms can be designed. If the answer is yes, this will mean that no matter how complex the scene is, under our algorithms the robot will find a path from start to target, or else will conclude in a finite time that no such path exists if that is the case.

Sometimes, approaches that can be classified as sensor-based planning are referred to in literature as reactive planning. This term is somewhat unfortunate: While it acknowledges the local nature of robot sensing and control, it implicitly suggests that a sensor-based algorithm has no way of inferring any global characteristics of space from local sensing data (“the robot just reacts”), and hence cannot guarantee anything in global terms. As we will see, the sensor-based planning paradigm can very well account for space global properties and can guarantee algorithms' global convergence.

Recall that by judiciously using the limited information they managed to get about their surroundings, our ancestors were able to reach faraway lands while avoiding many obstacles, literally and figuratively, on their way. They had no maps. Sometimes along the way they created maps, and sometimes maps were created by those who followed them. This suggests that one does not have to know everything about the scene in order to solve the go-from-A-to-B motion planning problem. By always knowing one's position in space (recall the careful triangulation of stars the seaman have done), by keeping in mind where the target position is relative to one's position, and by remembering two or three key locations along the way, one should be able to infer some important properties of the space in which one travels, which will be sufficient for getting there. Our goal is to develop strategies that make this possible.

Note that the task we pose to the robot does not include producing a map of the scene in which it travels. All we ask the robot to do is go from point A to point B, from its current position to some target position. This is an important distinction. If all I need to do is find a specific room in an unfamiliar building, I have no reason to go into an expensive effort of creating a map of the building. If I start visiting the same room in that same building often enough, eventually I will likely work out a more or less optimal route to the room—though even then I will likely not know of many nooks and crannies of the building (which would have to appear in the map). In other words, map making is a different task that arises from a different objective. A map may perhaps appear as a by-product of some path planning algorithm; this would be a rather expensive way to do path planning, but this may happen. We thus emphasize that one should distinguish between path planning and map making.

Assuming for now that sensor-based planning algorithms are viable and computationally simple enough for real-time operation and also assuming that they can be extended to more complex cases—nonpoint (physical) robots, arm manipulators, and complex nontactile sensing—the SIM paradigm is clearly very attractive. It is attractive, first of all, from the practical standpoint:

- Sensors are a standard fare in engineering and robot technology.

- The SIM paradigm captures much of what we observe in nature. Humans and animals solve complex motion planning tasks all the time, day in and day out, while operating with local sensing information. It would be wonderful to teach robots to do the same.

- The paradigm does away with complex gathering of information about the robot's surroundings, replacing it with a continuous processing of incoming sensor information. This, in turn, allows one not to worry about the shapes and locations of obstacles in the scene, and perhaps even handle scenes with moving or shape-changing obstacles.

- From the control standpoint, sensor-based motion planning introduces the powerful notion of sensor feedback control, thus transforming path planning into a continuous on-line control process. The fact that local sensing information is sufficient to solve the global task (which we still need to prove) is good news: Local information is likely to be simple and easy to process.

These attractive points of sensor-based planning stands out when comparing it with the paradigm of motion planning with complete information (the Piano Mover's model). The latter requires the complete information about the scene, and it requires it up front. Except in very simple cases, it also requires formidable calculations; this rules out a real-time operation and, of course, handling moving or shape-changing obstacles.

From the standpoint of theory, the main attraction of sensor-based planning is the surprising fact that in spite of the local character of robot sensing and the high level of uncertainly—after all, practically nothing may be known about the environment at any given moment—SIM algorithms can guarantee reaching a global goal, even in the most complex environment.

As mentioned before, those positive sides of the SIM paradigm come at a price. Because of the dynamic character of incoming sensor information—namely, at any given moment of the planning process the future is not known, and every new step brings in new information—the path cannot be preplanned, and so its global optimality is ruled out. In contrast, the Piano Mover's approach can in principle produce an optimal solution, simply because it knows everything there is to know.1 In sensor-based planning, one looks for a “reasonable path,” a path that looks acceptable compared to what a human or other algorithms would produce under similar conditions. For a more formal assessment of performance of sensor-based algorithms, we will develop some bounds on the length of paths generated by the algorithms. In Chapter 7 we will try to assess human performance in motion planning.

Given our continuous model, we will not be able to use the discrete criteria typically used for evaluating algorithms of computational geometry—for example, assessing a task complexity as a function of the number of vertices of (polygonal or otherwise algebraically defined) obstacles. Instead, a new path-length performance criterion based on the length of generated paths as a function of obstacle perimeters will be developed.

To generalize performance assessment of our path planning algorithms, we will develop the lower bound on paths generated by any sensor-based planning algorithm, expressed as the length of path that the best algorithm would produce in the worst case. As known in complexity theory, the difficulty of this task lies in “fighting an unknown enemy”—we do not know how that best algorithm may look like.

This lower bound will give us a yardstick for assessing individual path planning algorithms. For each of those we will be interested in the upper bound on the algorithm performance—the worst-case scenario for a specific algorithm. Such results will allow us to compare different algorithms and to see how far are they from an “ideal” algorithm.

All sensor-based planning algorithms can be divided into these two nonoverlapping intuitively transparent classes:

Class 1. Algorithms in which the robot explores each obstacle that it encounters completely before it goes to the next obstacle or to the target.

Class 2. Algorithms where the robot can leave an obstacle that it encounters without exploring it completely.

The distinction is important. Algorithms of Class 1 are quite “thorough”—one may say, quite conservative. Often this irritating thoroughness carries the price: From the human standpoint, paths generated by a Class 1 algorithm may seem unnecessarily long and perhaps a bit silly. We will see, however, that this same thoroughness brings big benefits in more difficult cases. Class 2 algorithms, on the other hand, are more adventurous—they are “more human”, they “take risks.” When meeting an obstacle, the robot operating under a Class 2 algorithm will have no way of knowing if it has met it before. More often than not, a Class 2 algorithm will win in real-life scenes, though it may lose badly in an unlucky scene.

As we will see, the sensor-based motion planning paradigm exploits two essential topological properties of space and objects in it—the orientability and continuity of manifolds. These are expressed in topology by the Jordan Curve Theorem [57], which states:

Any closed curve homeomorphic to a circle drawn around and in the vicinity of a given point on an orientable surface divides the surface into two separate domains, for which the curve is their common boundary.

The threateningly sounding “orientable surface” clause is not a real constraint. For our two-dimensional case, the Moebius strip and Klein bottle are the only examples of nonorientable surfaces. Sensor-based planning algorithms would not work on these surfaces. Luckily, the world of real-life robotics never deals with such objects.

In physical terms, the Jordan Curve Theorem means the following: (a) If our mobile robot starts walking around an obstacle, it can safely assume that at some moment it will come back to the point where it started. (b) There is no way for the robot, while walking around an obstacle, to find itself “inside” the obstacle. (c) If a straight line—for example, the robot's intended path from start to target—crosses an obstacle, there is a point where the straight line enters the obstacle and a point where it comes out of it. If, because of the obstacle's complex shape, the line crosses it a number of times, there will be an equal number of entering and leaving points. (The special case where the straight line touches the obstacle without crossing it is easy to handle separately—the robot can simply ignore the obstacle.)

These are corollaries of the Jordan Curve Theorem. They will be very explicitly used in the sensor-based algorithms, and they are the basis of the algorithms' convergence. One positive side effect of our reliance on topology is that geometry of space is of little importance. An obstacle can be polygonal or circular, or of a shape that for all practical purposes is impossible to define in mathematical terms; for our algorithm it is only a closed curve, and so handling one is as easy as the other. In practice, reliance on space topology helps us tremendously in computational savings: There is no need to know objects' shapes and dimensions in advance, and there is no need to describe and store object descriptions once they have been visited.

In Section 3.1 below, the formal model for the sensor-based motion planning paradigm is introduced. The universal lower bound on paths generated by any algorithm operating under this model is then produced in Section 3.2. One can see the bound as the length of a path that the best algorithm in the world will generate in the most “uncooperating” scene. In Sections 3.3.1 and 3.3.2, two provably correct path planning algorithms are described, called Bug1 and Bug2, one from Class 1 and the other from Class 2, and their convergence properties and performance upper bounds are derived. Together the two are called basic algorithms, to indicate that they are the base for later strategies in more complex cases. They also seem to be the first and simplest provable sensor-based planning algorithms known. We will formulate tests for target reachability for both algorithms and will establish the (worst-case) upper bounds on the length of paths they generate.

Analysis of the two algorithms will demonstrate that a better upper bound on an algorithm's path length does not guarantee shorter paths. Depending on the scene, one algorithm can produce a shorter path than the other. In fact, though Bug2's upper bound is much worse than that of Bug1, Bug2 will be likely preferred in real-life tasks.

In Sections 3.4 and 3.5 we will look at further ways to obtain better algorithms and, importantly, to obtain tighter performance bounds. In Section 3.6 we will expand the basic algorithms—which, remember, deal with tactile sensing—to richer sensing, such as vision. Sections 3.7 to 3.10 deal with further extensions to real-world (nonpoint) robots, and compare different algorithms. Exercises for this chapter appear in Section 3.11.

3.1 THE MODEL

The model includes two parts: One is related to geometry of the robot (automaton) environment, and the other is related to characteristics and capabilities of the automaton. To save on multiple uses of words “robot” and “automaton,” we will call it MA, for “moving automaton.”

Environment. The scene in which MA operates is a plane. The scene may be populated with obstacles, and it has two given points in it: the MA starting location, S, and the target location, T. Each obstacle's boundary is a simple closed curve of finite length, such that a straight line will cross it in only finitely many points. The case when the straight line is tangential to an obstacle at a point or coincides with a finite segment of the obstacle is not a “crossing.” Obstacles do not touch each other; that is, a point on an obstacle belongs to one and only one obstacle (if two obstacles do touch, they will be considered one obstacle). The scene can contain only a locally finite number of obstacles. This means that any disc of finite radius intersects a finite set of obstacles. Note that the model does not require that the scene or the overall set of obstacles be finite.

Robot. MA is a point. This means that an opening of any size between two distinct obstacles can be passed by MA. MA's motion skills include three actions: It knows how to move toward point T on a straight line, how to move along the obstacle boundary, and how to start moving and how to stop. The only input information that MA is provided with is (1) coordinates of points S and T as well as MA's current locations and (2) the fact of contacting an obstacle. The latter means that MA has a tactile sensor. With this information, MA can thus calculate, for example, its direction toward point T and its distance from it. MA's memory for storing data or intermediate results is limited to a few computer words.

Definition 3.1.1. A local direction is a once-and-for-all decided direction for passing around an obstacle. For the two-dimensional problem, it can be either left or right.

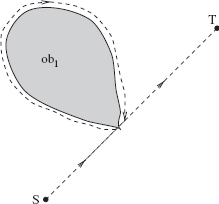

That is, if the robot encounters an obstacle and intends to pass it around, it will walk around the obstacle clockwise if the chosen local direction is “left,” and walk around it counterclockwise if the local direction is “right.” Because of the inherent uncertainty involved, every time MA meets an obstacle, there is no information or criteria it can use to decide whether it should turn left or right to go around the obstacle. For the sake of consistency and without losing generality, unless stated otherwise, let us assume that the local direction is always left, as in Figure 3.5.

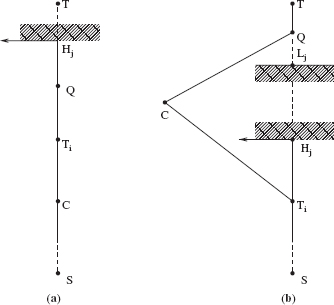

Definition 3.1.2. MA is said to define a hit point on the obstacle, denoted H, when, while moving along a straight line toward point T, it contacts the obstacle at the point H. It defines a leave point, L, on the obstacle when it leaves the obstacle at point L in order to continue its walk toward point T. (See Figure 3.5.)

In case MA moves along a straight line toward point T and the line touches some obstacle tangentially, there is no need to invoke the procedure for walking around the obstacle—MA will simply continue its straight-line walk toward point T. This means that no H or L points will be defined in this case. Consequently, no point of an obstacle can be defined as both an H and an L point. In order to define an H or an L point, the corresponding straight line has to produce a “real” crossing of the obstacle; that is, in the vicinity of the crossing, a finite segment of the line will lie inside the obstacle and a finite segment of the line will lie outside the obstacle.

Below we will need the following notation:

D is Euclidean distance between points S and T.

d(A, B) is Euclidean distance between points A and B in the scene; d(S, T) = D.

d(A) is used as a shorthand notation for d(A, T).

d(Ai) signifies the fact that point A is located on the boundary of the ith obstacle met by MA on its way to point T.

P is the total length of the path generated by MA on its way from S to T.

pi is the perimeter of i th obstacle encountered by MA.

Σi pi is the sum of perimeters of obstacles met by MA on its way to T, or of obstacles contained in a specific area of the scene; this quantity will be used to assess performance of a path planning algorithm or to compare path planning algorithms.

3.2 UNIVERSAL LOWER BOUND FOR THE PATH PLANNING PROBLEM

This lower bound, formulated in Theorem 3.2.1 below, informs us what performance can be expected in the worst case from any path planning algorithm operating within our model. The bound is formulated in terms of the length of paths generated by MA on its way from point S to point T. We will see later that the bound is a powerful means for measuring performance of different path planning procedures.

Theorem 3.2.1 ([58]). For any path planning algorithm satisfying the assumptions of our model, any (however large) P > 0, any (however small) D > 0, and any (however small) δ > 0, there exists a scene in which the algorithm will generate a path of length no less than P,

where D is the distance between points S and T, and pi are perimeters of obstacles intersecting the disk of radius D centered at point T.

Proof: We want to prove that for any known or unknown algorithm X a scene can be designed such that the length of the path generated by X in it will satisfy (3.1).2 Algorithm X can be of any type: It can be deterministic or random; its intermediate steps may or may not depend on intermediate results; and so on. The only thing we know about X is that it operates within the framework of our model above. The proof consists of designing a scene with a special set of obstacles and then proving that this scene will force X to generate a path not shorter than P in (3.1).

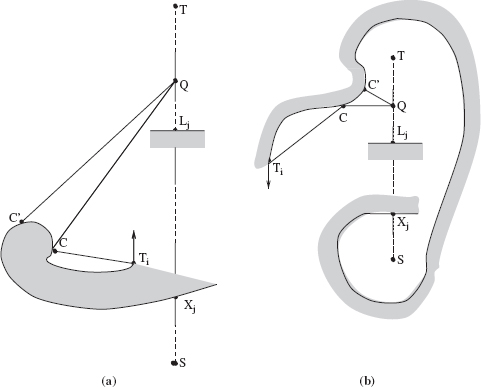

We will use the following scheme to design the required scene (called the resultant scene). The scene is built in two stages. At the first stage, a virtual obstacle is introduced. Parts of this obstacle or the whole of it, but not more, will eventually become, when the second stage is completed, the actual obstacle(s) of the resultant scene.

Consider a virtual obstacle shown in Figure 3.1a. It presents a corridor of finite width 2W > δ and of finite length L. The top end of the corridor is closed. The corridor is positioned such that the point S is located at the middle point of its closed end; the corridor opens in the direction opposite to the line (S, T). The thickness of the corridor walls is negligible compared to its other dimensions. Still in the first stage, MA is allowed to walk from S to T along the path prescribed by the algorithm X. Depending on the X's procedure, MA may or may not touch the virtual obstacle.

When the path is complete, the second stage starts. A segment of the virtual obstacle is said to be actualized if all points of the inside wall of the segment have been touched by MA. If MA has contiguously touched the inside wall of the virtual obstacle at some length l, then the actualized segment is exactly of length l. If MA touched the virtual obstacle at a point and then bounced back, the corresponding actualized area is considered to be a wall segment of length δ around the point of contact. If two segments of the MA's path along the virtual obstacle are separated by an area of the virtual obstacle that MA has not touched, then MA is said to have actualized two separate segments of the virtual obstacle.

We produce the resultant scene by designating as actual obstacles only those areas of the virtual obstacle that have been actualized. Thus, if an actualized segment is of length l, then the perimeter of the corresponding actual obstacle is equal to 2l; this takes into account the inside and outside walls of the segment and also the fact that the thickness of the wall is negligible (see Figure 3.1).

Figure 3.1 Illustration for Theorem 3.2.1. Actualized segments of the virtual obstacle are shown in solid black. S, start point; T, target point.

This method of producing the resultant scene is justified by the fact that, under the accepted model, the behavior of MA is affected only by those obstacles that it touches along its way. Indeed, under algorithm X the very same path would have been produced in two different scenes: in the scene with the virtual obstacle and in the resultant scene. One can therefore argue that the areas of the virtual obstacle that MA has not touched along its way might have never existed, and that algorithm X produced its path not in the scene with the virtual obstacle but in the resultant scene. This means the performance of MA in the resultant scene can be judged against (3.1). This completes the design of the scene. Note that depending on the MA's behavior under algorithm X, zero, one, or more actualized obstacles can appear in the scene (Figure 3.1b).

We now have to prove that the MA's path in the resultant scene satisfies inequality (3.1). Since MA starts at a distance D = d(S, T) from point T, it obviously cannot avoid the term D in (3.1). Hence we concentrate on the second term in (3.1). One can see by now that the main idea behind the described process of designing the resultant scene is to force MA to generate, for each actual obstacle, a segment of the path at least as long as the total length of that obstacle's boundary. Note that this characteristic of the path is independent of the algorithm X.

The MA's path in the scene can be divided into two parts, P1 and P2; P1 corresponds to the MA's traveling inside the corridor, and P2 corresponds to its traveling outside the corridor. We use the same notation to indicate the length of the corresponding part. Both parts can become intermixed since, after having left the corridor, MA can temporarily return into it. Since part P2 starts at the exit point of the corridor, then

where ![]() is the hypotenuse AT of the triangle ATS (Figure 3.1a). As for part P1 of the path inside the corridor, it will be, depending on the algorithm X, some curve. Observe that in order to defeat the bound—that is, produce a path shorter than the bound (3.1)—algorithm X has to decrease the “path per obstacle” ratio as much as possible. What is important for the proof is that, from the “path per obstacle” standpoint, every segment of P1 that does not result in creating an equivalent segment of the actualized obstacle makes the path worse. All possible alternatives for P1 can be clustered into three groups. We now consider these groups separately.

is the hypotenuse AT of the triangle ATS (Figure 3.1a). As for part P1 of the path inside the corridor, it will be, depending on the algorithm X, some curve. Observe that in order to defeat the bound—that is, produce a path shorter than the bound (3.1)—algorithm X has to decrease the “path per obstacle” ratio as much as possible. What is important for the proof is that, from the “path per obstacle” standpoint, every segment of P1 that does not result in creating an equivalent segment of the actualized obstacle makes the path worse. All possible alternatives for P1 can be clustered into three groups. We now consider these groups separately.

- Part P1 of the path never touches walls of the virtual obstacle (Figure 3.1a). As a result, no actual obstacles will be created in this case, Σi pi = 0. Then the resulting path is P > D, and so for an algorithm X that produces this kind of path the theorem holds. Moreover, at the final evaluation, where only actual obstacles count, the algorithm X will not be judged as efficient: It creates an additional path component at least equal to (2 · L + (C − D)), in a scene with no obstacles!

- MA touches more than once one or both inside walls of the virtual obstacle (Figure 3.1b). That is, between consecutive touches of walls, MA is temporarily “out of touch” with the virtual obstacle. As a result, part P1 of the path will produce a number of disconnected actual obstacles. The smallest of these, of length δ, corresponds to point touches. Observe that in terms of the “path per obstacle” assessment, this kind of strategy is not very wise either. First, for each actual obstacle, a segment of the path at least as long as the obstacle perimeter is created. Second, additional segments of P1, those due to traveling between the actual obstacles, are produced. Each of these additional segments is at least not smaller than 2W, if the two consecutive touches correspond to the opposite walls of the virtual obstacle, or at least not smaller than the distance between two sequentially visited disconnected actual obstacles on the same wall. Therefore, the length P of the path exceeds the right side in (3.1), and so the theorem holds.

- MA touches the inside walls of the virtual obstacle at most once. This case includes various possibilities, from a point touching, which creates a single actual obstacle of length δ, to the case when MA closely follows the inside wall of the virtual obstacle. As one can see in Figure 3.1c, this case contains interesting paths. The shortest possible path would be created if MA goes directly from point S to the furthest point of the virtual obstacle and then directly to point T (path Pa, Figure 3.1c). (Given the fact that MA knows nothing about the obstacles, a path that good can be generated only by an accident.) The total perimeter of the obstacle(s) here is 2δ, and the theorem clearly holds.

Finally, the most efficient path, from the “path per obstacle” standpoint, is produced if MA closely follows the inside wall of the virtual obstacle and then goes directly to point T (path Pb, Figure 3.1c). Here MA is doing its best in trying to compensate each segment of the path with an equivalent segment of the actual obstacle. In this case, the generated path P is equal to

(In the path Pb in Figure 3.1c, there is only one term in Σi pi.) Since no constraints have been imposed on the choice of lengths D and W, take them such that

which is always possible because the right side in (3.4) is nonnegative for any D and W. Reverse both the sign and the inequality in (3.4), and add (D + Σi pi) to its both sides. With a little manipulation, we obtain

This exhausts all possible cases of path generation by the algorithm X. Q.E.D.

We conclude this section with two remarks. First, by appropriately selecting multiple virtual obstacles, Theorem 3.2.1 can be extended to an arbitrary number of obstacles. Second, for the lower bound (3.1) to hold, the constraints on the information available to MA can be relaxed significantly. Namely, the only required constraint is that at any time moment MA does not have complete information about the scene.

We are now ready to consider specific sensor-based path planning algorithms. In the following sections we will introduce three algorithms, analyze their performance, and derive the upper bounds on the length of the paths they generate.

3.3 BASIC ALGORITHMS

3.3.1 First Basic Algorithm: Bug1

This procedure is executed at every point of the MA's (continuous) path [17, 58]. Before describing it formally, consider the behavior of MA when operating under this procedure (Figure 3.2). According to the definitions above, when on its way from point S (Start) to point T (Target), MA encounters an ith obstacle, it defines on it a hit point Hi,i = 1, 2,…. When leaving the ith obstacle in order to continue toward T, MA defines a leave point Li. Initially i = 1, L0 = S. The procedure will use three registers—R1, R2, and R3—to store intermediate information. All three are reset to zero when a new hit point is defined. The use of the registers is as follows:

- R1 is used to store coordinates of the latest point, Qm, of the minimum distance between the obstacle boundary and point T; this takes one comparison at each path point. (In case of many choices for Qm, any one of them can be taken.)

- R2 integrates the length of the ith obstacle boundary starting at Hi.

- R3 integrates the length of the ith obstacle boundary starting at Qm.

We are now ready to describe the algorithm's procedure. The test for target reachability mentioned in Step 3 of the procedure will be explained further in this section.

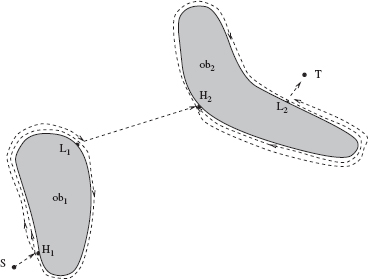

Figure 3.2 The path of the robot (dashed lines) under algorithm Bug1. ob1 and ob2 are obstacles, H1 and H2 are hit points, L1 and L2 are leave points.

Bug1 Procedure

- From point Li−1, move toward point T (Target) along the straight line until one of these occurs:

(a) Point T is reached. The procedure stops.

(b) An obstacle is encountered and a hit point, Hi, is defined. Go to Step 2.

- Using the local direction, follow the obstacle boundary. If point T is reached, stop. Otherwise, after having traversed the whole boundary and having returned to Hi, define a new leave point Li = Qm. Go to Step 3.

- Based on the contents of registers R2 and R3, determine the shorter way along the boundary to point Li, and use it to reach Li. Apply the test for target reachability. If point T is not reachable, the procedure stops. Otherwise, set i = i + 1 and go to Step 1.

Analysis of Algorithm Bug1

Lemma 3.3.1. Under Bug1 algorithm, when MA leaves a leave point of an obstacle in order to continue toward point T, it will never return to this obstacle again.

Proof: Assume that on its way from point S to point T, MA does meet some obstacles. We number those obstacles in the order in which MA encounters them. Then the following sequence of distances appears:

![]()

If point S happens to be on an obstacle boundary and the line (S, T) crosses that obstacle, then D = d(H1).

According to our model, if MA's path touches an obstacle tangentially, then MA needs not walk around it; it will simply continue its straight-line walk toward point T. In all other cases of meeting an ith obstacle, unless point T lies on an obstacle boundary, a relation d(Hi) > d(Li) holds. This is because, on the one hand, according to the model, any straight line (except a line that touches the obstacle tangentially) crosses the obstacle at least in two distinct points. This is simply a reflection of the finite “thickness” of obstacles. On the other hand, according to algorithm Bug1, point Li is the closest point from obstacle i to point T. Starting from Li, MA walks straight to point T until (if ever) it meets the (i + 1)th obstacle. Since, by the model, obstacles do not touch one another, then d(Li) > d(Hi+1). Our sequence of distances, therefore, satisfies the relation

where d(H1) is or is not equal to D. Since d(Li) is the shortest distance from the ith obstacle to point T, and since (3.6) guarantees that algorithm Bug1 monotonically decreases the distances d(Hi) and d(Li) to point T, Lemma 3.3.1 follows. Q.E.D.

The important conclusion from Lemma 3.3.1 is that algorithm Bug1 guarantees to never create cycles.

Corollary 3.3.1. Under Bug1, independent of the geometry of an obstacle, MA defines on it no more than one hit and no more than one leave point.

To assess the algorithm's performance—in particular, we will be interested in the upper bound on the length of paths that it generates—an assurance is needed that on its way to point T, MA can encounter only a finite number of obstacles. This is not obvious: While following the algorithm, MA may be “looking” at the target not only from different distances but also from different directions. That is, besides moving toward point T, it may also rotate around it (see Figure 3.3). Depending on the scene, this rotation may go first, say, clockwise, then counterclockwise, then again clockwise, and so on. Hence we have the following lemma.

Lemma 3.3.2. Under Bug1, on its way to the Target, MA can meet only a finite number of obstacles.

Proof: Although, while walking around an obstacle, MA may sometimes be at distances much larger than D from point T (see Figure 3.3), the straight-line segments of its path toward the point T are always within the same circle of radius D centered at point T. This is guaranteed by inequality (3.6). Since, according to our model, any disc of finite radius can intersect only a finite number of obstacles, the lemma follows. Q.E.D.

Figure 3.3 Algorithm Bug1. Arrows indicate straight-line segments of the robot's path. Path segments around obstacles are not shown; they are similar to those in Figure 3.2.

Corollary 3.3.2. The only obstacles that MA can be meet under algorithm Bug1 are those that intersect the disk of radius D centered at target T.

Together, Lemma 3.3.1, Lemma 3.3.2, and Corollary 3.3.2 guarantee convergence of the algorithm Bug1.

Theorem 3.3.1. Algorithm Bug1 is convergent.

We are now ready to tackle the performance of algorithm Bug1. As discussed, it will be established in terms of the length of paths that the algorithm generates. The following theorem gives an upper bound on the path lengths produced by Bug1.

Theorem 3.3.2. The length of paths produced by algorithm Bug1 obeys the limit,

where D is the distance (Start, Target), and Σi pi includes perimeters of obstacles intersecting the disk of radius D centered at the Target.

Proof: Any path generated by algorithm Bug1 can be looked at as consisting of two parts: (a) straight-line segments of the path while walking in free space between obstacles and (b) path segments when walking around obstacles. Due to inequality (3.6), the sum of the straight-line segments will never exceed D. As to path segments around obstacles, algorithm Bug1 requires that in order to define a leave point on the ith obstacle, MA has to first make a “full circle” along its boundary. This produces a path segment equal to one perimeter, pi, of the ith obstacle, with its end at the hit point. By the time MA has completed this circle and is ready to walk again around the ith obstacles from the hit to the leave point, in order to then depart for point T, the procedure prescribes it to go along the shortest path. By then, MA knows the direction (going left or going right) of the shorter path to the leave point. Therefore, its path segment between the hit and leave points along the ith obstacle boundary will not exceed 0.5 · pi. Summing up the estimates for straight-line segments of the path and segments around the obstacles met by MA on its way to point T, obtain (3.7). Q.E.D.

Further analysis of algorithm Bug1 shows that our model's requirement that MA knows its own coordinates at all times can be eased. It suffices if MA knows only its distance to and direction toward the target T. This information would allow it to position itself at the circle of a given radius centered at T. Assume that instead of coordinates of the current point Qm of minimum distance between the obstacle and T, we store in register R1 the minimum distance itself. Then in Step 3 of the algorithm, MA can reach point Qm by comparing its current distance to the target with the content of register R1. If more than one point of the current obstacle lie at the minimum distance from point T, any one of them can be used as the leave point, without affecting the algorithm's convergence.

In practice, this reformulated requirement may widen the variety of sensors the robot can use. For example, if the target sends out, equally in all directions, a low-frequency radio signal, a radio detector on the robot can (a) determine the direction on the target as one from which the signal is maximum and (b) determine the distance to it from the signal amplitude.

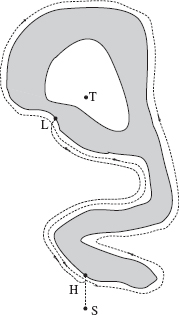

Test for Target Reachability. The test for target reachability used in algorithm Big1 is designed as follows. Every time MA completes its exploration of a new obstacle i, it defines on it a leave point Li. Then MA leaves the ith obstacle at Li and starts toward the target T along the straight line (Li, T). According to Lemma 3.3.1, MA will never return again to the ith obstacle. Since point Li is by definition the closest point of obstacle i to point T, there will be no parts of the obstacle i between points Li and T. Because, by the model, obstacles do not touch each other, point Li cannot belong to any other obstacle but i. Therefore, if, after having arrived at Li in Step 3 of the algorithm, MA discovers that the straight line (Li, T) crosses some obstacle at the leave point Li, this can only mean that this is the ith obstacle and hence target T is not reachable—either point S or point T is trapped inside the ith obstacle.

To show that this is true, let O be a simple closed curve; let X be some point in the scene that does not belong to O; let L be the point on O closest to X; and let (L, X) be the straight-line segment connecting L and X. All these are in the plane. Segment (L, X) is said to be directed outward if a finite part of it in the vicinity of point L is located outside of curve O. Otherwise, if segment (L, X) penetrates inside the curve O in the vicinity of L, it is said to be directed inward.

The following statement holds: If segment (L, X) is directed inward, then X is inside curve O. This condition is necessary because if X were outside curve O, then some other point of O that would be closer to X than to L would appear in the intersection of (L, X) and O. By definition of the point L, this is impossible. The condition is also sufficient because if segment (L, X) is directed inward and L is the point on curve O that is the closest to X, then segment (L, X) cannot cross any other point of O, and therefore X must lie inside O. This fact is used in the following test that appears as a part in Step 3 of algorithm Bug1:

Test for Target Reachability. If, while using algorithm Bug1, after having defined a point L on an obstacle, MA discovers that the straight line segment (L, Target) crosses the obstacle at point L, then the target is not reachable.

One can check the test on the example shown in Figure 3.4. Starting at point T, the robot encounters an obstacle and establishes on it a hit point H. Using the local direction “left,” it then does a full exploration of the (accessible) boundary of the obstacle. Once it arrives back at point H, its register R1 will contain the location of the point on the boundary that is the closest to T. This happens to be point L. The robot then walks to L by the shortest route (which it knows from the information it now has) and establishes on it the leave point L. At this point, algorithm Bug1 prescribes it to move toward T. While performing the test for target reachability, however, the robot will note that the line (L, T) enters the obstacle at L and hence will conclude that the target is not reachable.

Figure 3.4 Algorithm Bug1. An example with an unreachable target (a trap).

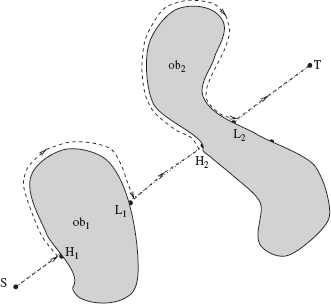

3.3.2 Second Basic Algorithm: Bug2

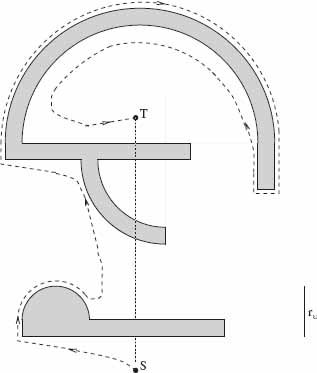

Similar to the algorithm Bug1, the procedure Bug2 is executed at every point of the robot's (continuous) path. As before, the goal is to generate a path from the start to the target position. As will be evident later, three important properties distinguish algorithm Bug2 from Bug1: Under Bug2, (a) MA can encounter the same obstacle more than once, (b) algorithm Bug2 has no way of distinguishing between different obstacles, and (c) the straight line (S, T) that connects the starting and target points plays a crucial role in the algorithm's workings. The latter line is called M-line (for Main line). In imprecise words, the reason M-line is so important is that the procedure uses it to index its progress toward the target and to ensure that the robot does not get lost.

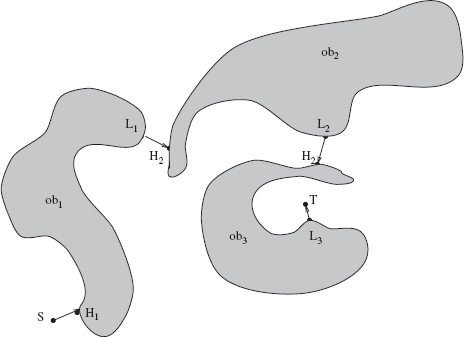

Because of these differences, we need to change the notation slightly: Subscript i will be used only when referring to more than one obstacle, and superscript j will be used to indicate the jth occurrence of a hit or leave points on the same or on a different obstacle. Initially, j = 1; L0 = Start. Similar to Bug1, the Bug2 procedure includes a test for target reachability, which is built into Steps 2b and 2c of the procedure. The test is explained later in this section. The reader may find it helpful to follow the procedure using an example in Figure 3.5.

Figure 3.5 Robot's path (dashed line) under Algorithm Bug2.

- From point Lj−1, move along the M-line (straight line (S, T)) until one of these occurs:

(a) Target T is reached. The procedure stops.

(b) An obstacle is encountered and a hit point, Hj, is defined. Go to Step 2.

- Using the accepted local direction, follow the obstacle boundary until one of these occurs:

(a) Target T is reached. The procedure stops.

(b) M-line is met at a point Q such that distance d(Q) < d(Hj), and straight line (Q, T) does not cross the current obstacle at point Q. Define the leave point Lj = Q. Set j = j + 1. Go to Step 1.

(c) MA returns to Hj and thus completes a closed curve along the obstacle boundary, without having defined the next hit point, Hj+1. Then, the target point T is trapped and cannot be reached. The procedure stops.

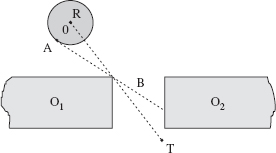

Unlike with algorithm Bug1, more than one hit and more than one leave point can be generated on a single obstacle under algorithm Bug2 (see the example in Figure 3.6). Note also that the relationship between perimeters of the obstacles and the length of paths generated by Bug2 is not as clear as in the case of algorithm Bug1. In Bug1, the perimeter of an obstacle met by MA is traversed at least once and never more than l.5 times. In Bug2, more options appear. A path segment around an obstacle generated by MA is sometimes shorter than the obstacle perimeter (Figure 3.5), which is good news: We finally see something “intelligent.” Or, when a straight-line path segment of the path meets an obstacle almost tangentially and MA happened to be walking around the obstacle in an “unfortunate” direction, the path can become equal to the obstacle's full perimeter (Figure 3.7). Finally, as Figure 3.6a demonstrates, the situation can get even worse: MA may have to pass along some segments of a maze-like obstacle more than once and more than twice. (We will return to this case later in this section.)

Analysis of Algorithm Bug2

Lemma 3.3.3. Under Bug2, on its way to the target, MA can meet only a finite number of obstacles.

Proof: Although, while walking around an obstacle, MA may at times find itself at distances much larger than D from point T (Target), its straight-line path segments toward T are always within the same circle of radius D centered at T. This is guaranteed by the algorithm's condition that d(Lj, T) > d(Hj, T) (see Step 2 of Bug2 procedure). Since, by the model, any disc of finite radius can intersect with only a finite number of obstacles, the lemma follows. Q.E.D.

Corollary 3.3.3. The only obstacles that MA can meet while operating under algorithm Bug2 are those that intersect the disc of radius D centered at the target.

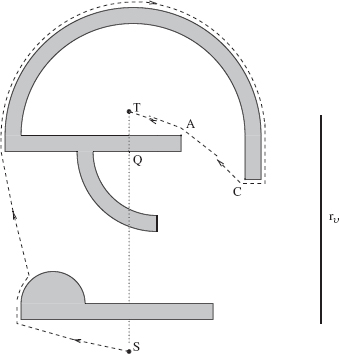

Figure 3.6 (a, b) Robot's path around a maze-like obstacle under Algorithm Bug2 (in-position case). Both obstacles (a) and (b) are similar, except in (a) the M-line (straight line (S, T)) crosses the obstacle 10 times, and in (b) it crosses 14 times. MA passes through the same path segment(s) at most three times (here, through segment (H1, L1)). Thus, at most two local cycles are created in this examples.

Figure 3.7 In this example, under Algorithm Bug2 the robot will make almost a full circle around this convex obstacle.

Figure 3.8 Robot's path in an in-position case; here point S is outside of the obstacle, and T is inside.

Moreover, the only obstacles that can be met by MA are those that intersect the M-line (straight line (Start, Target)).

Definition 3.3.1. For the given local direction, a local cycle is created when MA has to pass some point of its path more than once.

In the example in Figure 3.5, no local cycles are created; in Figures 3.6 and 3.8 there are local cycles.

Definition 3.3.2. The term in-position refers to a mutual position of points (Start, Target) and a given obstacle, such that (1) the M-line crosses the obstacle boundary at least once, and (2) either Start or Target lie inside the convex hull of the obstacle. The term out-position refers to a mutual position of points (Start, Target) and a given obstacle, such that both points Start and Target lie outside the convex hull of the obstacle. A given scene is referred to as an in-position case if at least one obstacle in the scene creates an in-position situation; otherwise, the scene presents an out-position case.

For example, the scene in Figure 3.3 is an in-position case. Without obstacle ob3, the scene would have been an out-position case.

We denote ni to be the number of intersections between the M-line (straight line (S, T)) and the ith obstacle; ni is thus a characteristic of the set (scene, Start, Target) and not of the algorithm. Obviously, for any convex obstacle, ni = 2.

If an obstacle is not convex but still ni = 2, the path generated by Bug2 can be as simple as that for a convex obstacle (see, e.g., Figure 3.5, obstacle ob2). Even with more complex obstacles, such as that in Figure 3.6, the situation and the resulting path can be quite simple. For example, in this same scene, if the M-line happened to be horizontal, we would have ni = 2 and a very simple path.

The path can become more complicated if ni > 2 and we are dealing with an in-position case. In Figure 3.6a, the segment of the boundary from point H1 to point L1, (H1, L1), will be traversed three times: segments (L1, L2) and (H2, H1), twice each; and segments (L2, L3) and (H3, H2), once each. On the other hand, if in this example the M-line line extends below, so that point S is under the whole obstacle (that is, this becomes an out-position case), the path will again become very simple, with no local cycles (in spite of high ni number).

Lemma 3.3.4. Under Bug2, MA will pass any point of the ith obstacle boundary at most ni/2 times.

Proof: As one can see, procedure Bug2 does not distinguish whether two consecutive obstacle crossings by the M-line (straight line (S, T)) correspond to the same or to different obstacles. Without loss of generality, assume that only one obstacle is present; then we can drop the index i. For each hit point Hj, the procedure will make MA walk around the obstacle until it reaches the corresponding leave point, Lj. Therefore, all H and L points appear in pairs, (Hj, Lj). Because, by the model, all obstacles are of finite “thickness,” for each pair (Hj, Lj) an inequality holds, d(Hj) > d(Lj). After leaving Lj, MA walks along a straight line to the next hit point, Hj+1. Since, according to the model, the distance between two crossings of the obstacle by a straight line is finite, we have d(Lj) > d(Hj+1). This produces a chain of inequalities for all H and L points,

Therefore, although any H or L point may be passed more than once, it will be defined as an H(correspondingly, L) point only once. That point can hence generate only one new passing of the same segment of the obstacle perimeter. In other words, each pair (Hj, Lj) can give rise to only one passing of a segment of the obstacle boundary. This means that ni crossings will produce at most ni/2 passings of the same path segment. Q.E.D.

The lemma guarantees that the procedure terminates, and it gives a limit on the number of generated local cycles. Using the lemma, we can now produce an upper bound on the length of paths generated by algorithm Bug2.

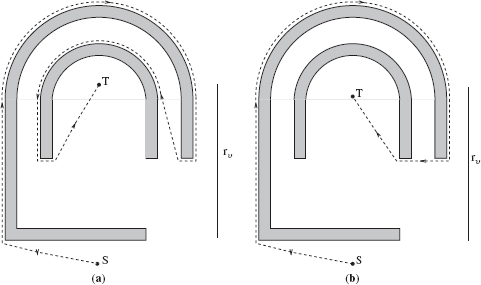

Theorem 3.3.3. The length of a path generated by algorithm Bug2 will never exceed the limit

where D is the distance (Start, Target), and pi refers to perimeters of obstacles that intersect the M-line (straight line segment (Start, Target)). This means Bug2 is convergent.

Proof: Any path can be looked at as consisting of two parts: (a) straight-line segments of the M-line between the obstacles that intersect it and (b) path segments that relate to walking around obstacle boundaries. Because of inequality (3.8), the sum of the straight line segments will never exceed D. As to path segments around obstacles, there is an upper bound guaranteed by Lemma 3.3.4 for each obstacle met by MA on its path: No more than ni/2 passings along the same segment of the obstacle boundary will take place. Because of Lemma 3.3.3 (see its proof), only those obstacles that intersect the M-line should be counted. Summing up the straight-line segments and segments that correspond to walking around obstacles, obtain (3.9). Q.E.D.

Theorem 3.3.3 suggests that in some “bad” scenes, under algorithm Bug2, MA may be forced to go around obstacles any large, albeit finite, number of times. An important question, therefore, is how typical such “bad” scenes are.

In particular, other things being equal, what characteristics of the scene influence the length of the path? Theorem 3.3.4 and its corollary below address this question. They suggest that the mutual position of point S, point T, and obstacles in the scene can affect the path length rather dramatically. Together, they significantly improve the upper bound on the length of paths generated by Bug2—in out-position scenes in general and in scenes with convex obstacles in particular.

Theorem 3.3.4. Under algorithm Bug2, in the case of an out-position scene, MA will pass any point of an obstacle boundary at most once.

In other words, if the mutual position of the obstacle and of points S and T satisfies the out-position definition, the estimate on the length of paths generated by Bug2 reaches the universal lower bound (3.1). That is a very good news indeed. Out-position situations are rather common for mobile robots.3 We know already that in some situations, algorithm Bug2 is extremely efficient and traverses only a fraction of obstacle boundaries. Now the theorem tells us that as long as the robot deals with an out-position situation, even in the most unlucky case it will not traverse more than 1.5 times the obstacle boundaries involved.

Proof: Figure 3.9 is used to illustrate the proof. Shaded areas in the figure correspond to one or many obstacles. Dashed boundaries indicate that obstacle boundaries in these areas can be of any shape.

Consider an obstacle met by MA on its way to the Target, and consider an arbitrary point Q on the obstacle boundary (not shown in the figure). Assume that Q is not a hit point. Because the obstacle boundary is a simple closed curve, the only way that MA can reach point Q is to come to it from a previously defined hit point. Now, move from Q along the already generated path segment in the direction opposite to the accepted local direction, until the closest hit point on the path is encountered; say, that point is Hj. We are interested only in those cases where Q is involved in at least one local cycle—that is, when MA passes point Q more than once. For this event to occur, MA has to pass point Hj at least as many times. In other words, if MA does not pass Hj more than once, it cannot pass Q more than once.

Figure 3.9 Illustration for Theorem 3.3.4.

According to the Bug2 procedure, the first time MA reaches point Hj it approaches it along the M-line (straight line (Start, Target))—or, more precisely, along the straight line segment (Lj−1, T). MA then turns left and starts walking around the obstacle. To form a local cycle on this path segment, MA has to return to point Hj again. Since a point can become a hit point only once (see the proof for Lemma 3.3.4), the next time MA returns to point Hj it must approach it from the right (see Figure 3.9), along the obstacle boundary. Therefore, after having defined Hj, in order to reach it again, this time from the right, MA must somehow cross the M-line and enter its right semiplane. This can take place in one of only two ways: outside or inside the interval (S, T). Consider both cases.

- The crossing occurs outside the interval (S, T). This case can correspond only to an in-position configuration (see Definition 3.3.2). Theorem 3.3.4, therefore, does not apply.

- The crossing occurs inside the interval (S, T). We want to prove now that such a crossing of the path with the interval (S, T) cannot produce local cycles. Notice that the crossing cannot occur anywhere within the interval (S, H j) because otherwise at least a part of the straight-line segment (Lj−1, Hj) would be included inside the obstacle. This is impossible because MA is known to have walked along the whole segment (Lj−1, Hj). If the crossing occurs within the interval (Hj, T), then at the crossing point MA would define the corresponding leave point, Lj, and start moving along the line (S, T) toward the target T until it defined the next hit point, Hj+1, or reached the target. Therefore, between points Hj and Lj, MA could not have reached into the right semiplane of the M-line (see Figure 3.9).

Since the above argument holds for any Q and the corresponding Hj, we conclude that in an out-position case MA will never cross the interval (Start, Target) into the right semiplane, which prevents it from producing local cycles. Q.E.D.

So far, no constraints on the shape of the obstacles have been imposed. In a special case when all the obstacles in the scene are convex, no in-position configurations can appear, and the upper bound on the length of paths generated by Bug2 can be improved:

Corollary 3.3.4. If all obstacles in the scene are convex, then in the worst case the length of the path produced by algorithm Bug2 is

and, on the average,

where D is distance (Start, Target), and pi refer to perimeters of the obstacles that intersect the straight line segment (Start, Target).

Consider a statistically representative number of scenes with a random distribution of convex obstacles in each scene, a random distribution of points Start and Target over the set of scenes, and a fixed local direction as defined above. The M-line will cross obstacles that it intersects in many different ways. Then, for some obstacles, MA will be forced to cover the bigger part of their perimeters (as in the case of obstacle ob1, Figure 3.5); for some other obstacles, MA will cover only a smaller part of their perimeters (as with obstacle ob2, Figure 3.5).

On the average, one would expect a path that satisfies (3.11). As for (3.10), Figure 3.7 presents an example of such a “noncooperating” obstacle. Corollary 3.3.4 thus ensures that for a wide range of scenes the length of paths generated by algorithm Bug2 will not exceed the universal lower bound (3.1).

Test for Target Reachability. As suggested by Lemma 3.3.4, under Bug2 MA may pass the same point Hj of a given obstacle more than once, producing a finite number p of local cycles, p = 0, 1, 2,…. The proof of the lemma indicates that after having defined a point Hj, MA will never define this point again as an H or an L point. Therefore, on each of the subsequent local cycles (if any), point Hj will be passed not along the M-line but along the obstacle boundary. After having left point Hj, MA can expect one of the following to occur:

MA will never return again to Hj; this happens, for example, if it leaves the current obstacle altogether or reaches the Target T.

MA will define at least the first pair of points (Lj, Hj+1), … , and will then return to point Hj, to start a new local cycle.

MA will come back to point Hj without having defined a point Lj on the previous cycle. This means that MA could find no other intersection point Q of the line (Hj, T) with the current obstacle such that Q would be closer to the point T than Hj, and the line (Q, T) would not cross the current obstacle at Q. This can happen only if either MA or point T are trapped inside the current obstacle (see Figure 3.10). The condition is both necessary and sufficient, which can be shown similar to the proof in the target reachability test for algorithm Bug1 (Section 3.3.1).

Based on this observation, we now formulate the test for target reachability for algorithm Bug2.

Figure 3.10 Examples where no path between points S and T is possible (traps), algorithm Bug2. The path is the dashed line. After having defined the hit point H2, the robot returns to it before it defines any new leave point. Therefore, the target is not reachable.

Test for Target Reachability. If, on the pth local cycle, p = 0, 1, … , after having defined a hit point Hj, MA returns to this point before it defines at least the first two out of the possible set of points Lj, Hj+1, … , Hk, this means that MA has been trapped and hence the target is not reachable.

We have learned that in in-position situations algorithm Bug2 may become inefficient and create local cycles, visiting some areas of its path more than once. How can we characterize those situations? Does starting or ending “inside” the obstacle—that is, having an in-position situation—necessarily lead to such inefficiency? This is clearly not so, as one can see from the following example of Bug2 operating in a maze (labyrinth). Consider a version of the labyrinth problem where the robot, starting at one point inside the labyrinth, must reach some other point inside the labyrinth. The well-known mice-in-the-labyrinth problem is sometimes formulated this way. Consider an example4 shown in Figure 3.11.

Figure 3.11 Example of a walk (dashed line) in a maze under algorithm Bug2. S, Start; T, Target.

Given the fact that no bird's-eye view of the maze is available to MA (at each moment it can see only the small cell that it is passing), the MA's path looks remarkably efficient and purposeful. (It would look even better if MA's sensing was something better than simple tactile sensing; see Figure 3.20 and more on this topic in Section 3.6.) One reason for this is, of course, that no local cycles are produced here. In spite of its seeming complexity, this maze is actually an easy scene for the Bug2 algorithm.

Let's return to our question, How can we classify in-position situations, so as to recognize which one would cause troubles to the algorithm Bug2? This question is not clear at the present time. The answer, likely tied to the topological properties of the combination (scene, Start, Target), is still awaiting a probing researcher.

3.4 COMBINING GOOD FEATURES OF BASIC ALGORITHMS

Each of the algorithms Bug1 and Bug2 has a clear and simple, and quite distinct, underlying idea: Bug1 “sticks” to every obstacle it meets until it explores it fully; Bug2 sticks to the M-line (line (Start, Target)). Each has its pluses and minuses. Algorithm Bug1 never creates local cycles; its worse-case performance looks remarkably good, but it tends to be “overcautious” and will never cover less than the full perimeter of an obstacle on its way. Algorithm Bug2, on the other hand, is more “human” in that it can “take a risk.” It takes advantage of simpler situations; it can do quite well even in complex scenes in spite of its frighteningly high worst-case performance—but it may become quite inefficient, much more so than Bug1, in some “unlucky” cases.

The difficulties that algorithm Bug2 may face are tied to local cycles— situations when the robot must make circles, visiting the same points of the obstacle boundaries more than once. The source of these difficulties lies in what we called in-position situations (see the Bug2 analysis above). The problem is of topological nature. As the above estimates of Bug2 “average” behavior show, its performance in out-positions situations may be remarkably good; these are situations that mobile robots will likely encounter in real-life scenes.

On the other hand, fixing the procedure so as to handle in-position situations well would be an important improvement. One simple idea for doing this is to attempt a procedure that combines the better features of both basic algorithms. (As always, when attempting to combine very distinct ideas, the punishment will be the loss of simplicity and elegance of both algorithms.) We will call this procedure BugM1 (for “modified”) [59]. The procedure combines the efficiency of algorithm Bug2 in simpler scenes (where MA will pass only portions, instead of full perimeters, of obstacles, as in Figure 3.5) with the more conservative, but in the limit the more economical, strategy of algorithm Bug1 (see the bound (3.7)). The idea is simple: Since Bug2 is quite good except in cases with local cycles, let us try to switch to Bug1 whenever MA concludes that it is in a local cycle. As a result, for a given point on a BugM1 path, the number of local cycles containing this point will never be larger than two; in other words, MA will never pass the same point of the obstacle boundary more than three times, producing the upper bound

Algorithm BugM1 is executed at every point of the continuous path. Instead of using the fixed M-line (straight line (S, T)), as in Bug2, BugM1 uses a straight-line segment ![]() with a changing point

with a changing point ![]() here,

here, ![]() indicates the jth leave point on obstacle i. The procedure uses three registers, R1, R2, and R3, to store intermediate information. All three are reset to zero when a new hit point

indicates the jth leave point on obstacle i. The procedure uses three registers, R1, R2, and R3, to store intermediate information. All three are reset to zero when a new hit point ![]() is defined:

is defined:

- Register R1 stores coordinates of the current point, Qm, of minimum distance between the obstacle boundary and the Target.

- R2 integrates the length of the obstacle boundary starting at

.

. - R3 integrates the length of the obstacle boundary starting at Qm. (In case of many choices for Qm, any one of them can be taken.)

The test for target reachability that appears in Step 2d of the procedure is explained lower in this section. Initially, i = 1, j = 1; ![]() = Start. The BugM1 procedure includes these steps:

= Start. The BugM1 procedure includes these steps:

- From point

, move along the line (

, move along the line ( , Target) toward Target until one of these occurs:

, Target) toward Target until one of these occurs:

(a) Target is reached. The procedure stops.

(b) An ith obstacle is encountered and a hit point,

, is defined. Go to Step 2.

, is defined. Go to Step 2. - Using the accepted local direction, follow the obstacle boundary until one of these occurs:

(a) Target is reached. The procedure stops.

(b) Line (

, Target) is met inside the interval (

, Target) is met inside the interval ( , Target), at a point Q such that distance d(Q) < d(Hj), and the line (Q, Target) does not cross the current obstacle at point Q. Define the leave point

, Target), at a point Q such that distance d(Q) < d(Hj), and the line (Q, Target) does not cross the current obstacle at point Q. Define the leave point  = Q. Set j = j + 1. Go to Step 1.

= Q. Set j = j + 1. Go to Step 1.(c) Line (

, Target) is met outside the interval (

, Target) is met outside the interval ( , Target). Go to Step 3.

, Target). Go to Step 3.(d) The robot returns to

and thus completes a closed curve (of the obstacle boundary) without having defined the next hit point. The target cannot be reached. The procedure stops.

and thus completes a closed curve (of the obstacle boundary) without having defined the next hit point. The target cannot be reached. The procedure stops. - Continue following the obstacle boundary. If the target is reached, stop. Otherwise, after having traversed the whole boundary and having returned to point

, define a new leave point

, define a new leave point  . Go to Step 4.

. Go to Step 4. - Using the contents of registers R2 and R3, determine the shorter way along the obstacle boundary to point

, and use it to get to

, and use it to get to  . Apply the test for Target reachability (see below). If the target is not reachable, the procedure stops. Otherwise, designate

. Apply the test for Target reachability (see below). If the target is not reachable, the procedure stops. Otherwise, designate  , set i = i + 1, j = 1, and go to Step 1.

, set i = i + 1, j = 1, and go to Step 1.

As mentioned above, the procedure itself BugM1 is obviously longer and “messier” compared to the elegantly simple procedures Bug1 and Bug2. That is the price for combining two algorithms governed by very different principles. Note also that since at times BugM1 may leave an obstacle before it fully explores it, according to our classification above it falls into the Class 2.

What is the mechanism of algorithm BugM1 convergence? Depending on the scene, the algorithm's flow fits one of the following two cases.

Case 1. If the condition in Step 2c of the procedure is never satisfied, then the algorithm flow follows that of Bug2—for which convergence has been already established. In this case, the straight lines (![]() , Target) always coincide with the M-line (straight line (Start, Target)), and no local cycles appear.

, Target) always coincide with the M-line (straight line (Start, Target)), and no local cycles appear.

Case 2. If, on the other hand, the scene presents an in-position case, then the condition in Step 2c is satisfied at least once; that is, MA crosses the straight line (![]() , Target) outside the interval (

, Target) outside the interval (![]() , Target). This indicates that there is a danger of multiple local cycles, and so MA switches to a more conservative procedure Bug1, instead of risking an uncertain number of local cycles it might now expect from the procedure Bug2 (see Lemma 3.3.4). MA does this by executing Steps 3 and 4 of BugM1, which are identical to Steps 2 and 3 of Bug1.

, Target). This indicates that there is a danger of multiple local cycles, and so MA switches to a more conservative procedure Bug1, instead of risking an uncertain number of local cycles it might now expect from the procedure Bug2 (see Lemma 3.3.4). MA does this by executing Steps 3 and 4 of BugM1, which are identical to Steps 2 and 3 of Bug1.

After one execution of Steps 3 and 4 of the BugM1 procedure, the last leave point on the ith obstacle is defined, ![]() , which is guaranteed to be closer to point T than the corresponding hit point,

, which is guaranteed to be closer to point T than the corresponding hit point, ![]() [see inequality (3.7), Lemma 3.3.1]. Then MA leaves the ith obstacle, never to return to it again (Lemma 3.3.1). From now on, the algorithm (in its Steps 1 and 2) will be using the straight line (

[see inequality (3.7), Lemma 3.3.1]. Then MA leaves the ith obstacle, never to return to it again (Lemma 3.3.1). From now on, the algorithm (in its Steps 1 and 2) will be using the straight line (![]() , Target) as the “leading thread.” [Note that, in general, the line (

, Target) as the “leading thread.” [Note that, in general, the line (![]() , Target) does not coincide with the straight lines (

, Target) does not coincide with the straight lines (![]() , T) or (S, T)]. One execution of the sequence of Steps 3 and 4 of BugM1 is equivalent to one execution of Steps 2 and 3 of Bug1, which guarantees the reduction by one of the number of obstacles that MA will meet on its way. Therefore, as in Bug1, the convergence of this case is guaranteed by Lemma 3.3.1, Lemma 3.3.2, and Corollary 3.3.2. Since Case 1 and Case 2 above are independent and together exhaust all possible cases, the procedure BugM1 converges.

, T) or (S, T)]. One execution of the sequence of Steps 3 and 4 of BugM1 is equivalent to one execution of Steps 2 and 3 of Bug1, which guarantees the reduction by one of the number of obstacles that MA will meet on its way. Therefore, as in Bug1, the convergence of this case is guaranteed by Lemma 3.3.1, Lemma 3.3.2, and Corollary 3.3.2. Since Case 1 and Case 2 above are independent and together exhaust all possible cases, the procedure BugM1 converges.

3.5 GOING AFTER TIGHTER BOUNDS

The above analysis raises two questions:

- There is a gap between the bound given by (3.1), P > D + Σi pi − δ (the universal lower bound for the planning problem), and the bound given by (3.7), P < D + 1.5 · Σi pi (the upper bound for Bug1 algorithm).

What is there in the gap? Can the lower bound (3.1) be tightened upwards—or, inversely, are there algorithms that can reach it?

- How big and diverse are Classes 1 and 2?

To remind the reader, Class 1 combines algorithms in which the robot never leaves an obstacle unless and until it explores it completely. Class 2 combines algorithms that are complementary to those in Class 1: In them the robot can leave an obstacle and walk further, and even return to this obstacle again at some future time, without exploring it in full.

A decisive step toward answering the above questions was made in 1991 by A. Sankaranarayanan and M. Vidyasagar [60]. They proposed to (a) analyze the complexity of Classes 1 and 2 of sensor-based planning algorithms separately and (b) obtain the lower bounds on the lengths of generated paths for each of them. This promised tighter bounds compared to (3.1). Then, since together both classes cover all possible algorithms, the lower of the obtained bounds would become the universal lower bound. Proceeding in this direction, Sankaranarayanan and Vidyasagar obtained the lower bound for Class 1 algorithms as

and the lower bound for Class 2 algorithms as

As before, P is the length of a generated path, D is the distance (Start, Target), and pi refers to perimeters of obstacles met by the robot on its way to the target.

There are three important conclusions from these results:

- It is the bound (3.13), and not (3.1), that is today the universal lower bound: in the worst case no sensor-based motion planning algorithm can produce a path shorter than P in (3.13).

- According to the bound (3.13), algorithm Bug1 reaches the universal lower bound. That is, no algorithm in Class 1 will be able to do better than Bug1 in the worst case.

- According to bounds (3.13) and (3.14), in the worst case no algorithm from either of the two classes can do better than Bug1.

How much variety and how many algorithms are there in Classes 1 and 2? For Class 1, the answer is simple: At this time, algorithm Bug1 is the only representative of Class 1. The future will tell whether this represents just the lack of interest in the research community to such algorithms or something else. One can surmise that it is both: The underlying mechanism of this class of algorithms does not promise much richness or unusual algorithms, and this gives little incentive for active research.

In contrast, a lively innovation and variety has characterized the development in Class 2 algorithms. At least a dozen or so algorithms have appeared in literature since the problem was first formulated and the basic algorithms were reported. Since some such algorithms make use of the types of sensing that are more elaborate than basic tactile sensing used in this section, we defer a survey in this area until Section 3.8, after we discuss in the next section the effect of more complex sensing on sensor-based motion planning.

3.6 VISION AND MOTION PLANNING

In the previous section we developed the framework for designing sensor-based path planning algorithms with proven convergence. We designed some algorithms and studied their properties and performance. For clarity, we limited the sensing that the robot possesses to (the most simple) tactile sensing. While tactile sensing plays an important role in real-world robotics—in particular in short-range motion planning for object manipulation and for escaping from tight places—for general collision avoidance, richer remote sensing such as computer vision or range sensing present more promising options.

The term “range” here refers to devices that directly provide distance information, such as a laser ranger. A stereo vision device would be another option. In order to successfully negotiate a scene with obstacles, a mobile robot can make a good use of distance information to objects it is passing.

Here we are interested in exploring how path planning algorithms would be affected by the sensing input that is richer and more complex than tactile sensing. In particular, can algorithms that operate with richer sensory data take advantage of additional sensor information and deliver better path length performance—to put it simply, shorter paths—than when using tactile sensing? Does proximal or distant sensing really help in motion planning compared to tactile sensing, and, if so, in what way and under what conditions? Although this question is far from trivial and is important for both theory and practice (this is manifested by a recent continuous flow of experimental works with “seeing” robots), there have been little attempts to address this question on the algorithmic level.

We are thus interested in algorithms that can make use of a range finder or stereo vision and that, on the one hand, are provably correct and, on the other hand, would let, say, a mobile robot deliver a reasonable performance in nontrivial scenes. It turns out that the answers to the above question are not trivial as well. First, yes, algorithms can be modified so as to take advantage of better sensing. Second, extensive modifications of “tactile” motion planning algorithms are needed in order to fully utilize additional sensing capabilities. We will consider in detail two principles for provably correct motion planning with vision. As we will see, the resulting algorithms exhibit different “styles” of behavior and are not, in general, superior to each other. Third and very interestingly, while one can expect great improvements in real-world tasks, in general richer sensing has no effect on algorithm path length performance bounds.