Chapter 17. Search Engine Spam

People use search engines to find relevant content, and search engines try to deliver links to relevant content. Unethical SEO, referred to in the industry as spam, attempts to boost page rank by abusing the idea of relevant content: that is, by improving page rank through something other than delivering quality, relevant pages. For example, getting a page about Viagra to come up in a search for sports results would be considered search engine spam.

If you are an established brand or company, your image is everything and you will do everything to protect that image. If you are struggling to get to the top of the SERPs, you might be tempted to use artificial, or black hat, SEO. Artificial SEO strives for immediate benefits, usually to make a quick profit. Its sole focus is on tricking search engines by finding loopholes in their algorithms. It is this “tricking” component that makes it unethical SEO, and black hat SEO practitioners are always looking for new ways to manipulate search engine results.

What exactly are such SEO practitioners trying to do? Simply put, they are trying to use search engines to get much more traffic. The goal is as simple as the law of large numbers. Suppose a site gets 50 search engine referrals per day. On average, it takes about 200 referrals to make a sale. This means it takes four days to make only one sale. Some people need better sales results than that, and they are easily tempted by unethical techniques to attain the results they desire.
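The arithmetic in this example is simple enough to sketch in a few lines of Python. The function names are illustrative, and the 200-referrals-per-sale conversion rate is only the example's assumption, not an industry figure.

```python
def days_per_sale(referrals_per_day: float, referrals_per_sale: float) -> float:
    """Average number of days it takes search referrals to produce one sale."""
    if referrals_per_day <= 0:
        raise ValueError("referrals_per_day must be positive")
    return referrals_per_sale / referrals_per_day


def referrals_needed(sales_per_day: float, referrals_per_sale: float) -> float:
    """Daily search referrals required to reach a target number of daily sales."""
    return sales_per_day * referrals_per_sale


# The example above: 50 referrals per day, about 200 referrals per sale.
print(days_per_sale(50, 200))    # 4.0
print(referrals_needed(1, 200))  # 200
```

At that conversion rate, one sale per day would require four times the site's current search traffic, which is exactly the gap that tempts people toward shortcuts.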

You may even hire SEO practitioners who will employ unethical methods without your prior knowledge or consent. Many companies have found this out the hard way (usually after being blacklisted on Google and others). It is therefore imperative that you understand both ethical and unethical SEO methods. This chapter focuses on unethical methods.

Before going down the unethical SEO path, consider this: if your goal is simply to obtain immediate website traffic, there are ethical methods that will not hurt you in the long run, including PPC campaigns.

Understanding Search Engine Spam

Many different search engine spam techniques have sprung up over the years. Some techniques are more effective than others. Techniques that target related traffic typically have higher returns (number of sales) if undetected. They include keyword stuffing, hidden or small text, and cloaking. Techniques targeting any traffic include blog and forum spam, domain misspellings, and site hacking.

Low-end spam techniques are typically broadly targeted. For example, many people want to be number one on Google for the keywords viagra and sex. This is the case even when their website has nothing to do with those two keywords. The premise is that because the two keywords are so popular, enough of the referred traffic will end up buying the product that the spam site is selling.

Similarly, how many times have you seen adult SERP spam when searching for seemingly innocent keywords? The bottom line is that spammers seek traffic for an immediate benefit. Spammers will sometimes use any means necessary to achieve their goals—often at the expense of legitimate website owners.

What Constitutes Search Engine Spam?

Search engine spam is also known as web spam or spamdexing. Using any deceptive method to trick search engines into granting better rankings constitutes search engine spam. According to http://www.wordspy.com/words/spamdexing.asp:

There’s also “spamdexing,” which involves repeatedly using certain keywords—registered trademarks, brand names or famous names—in one’s Web page. Doing this can make a Web site move to the top of a search engine list, drawing higher traffic to that site—even if the site has nothing to do with the search request.

All of us have seen search result spam. After searching for a particular term, you click on one of the results, believing it to be relevant to your query, only to get something completely different, usually annoying ads.

Google guidelines

Google is very vocal about search engine spam and is not shy about taking action against spammy sites; websites big and small can be removed from Google’s index if they are found guilty of search engine spam. In its Quality Guidelines, Google spells out some of the most common spam techniques (summarized in Table 17-1). Google also states the following:

These quality guidelines cover the most common forms of deceptive or manipulative behavior, but Google may respond negatively to other misleading practices not listed here (e.g. tricking users by registering misspellings of well-known websites). It’s not safe to assume that just because a specific deceptive technique isn’t included on this page, Google approves of it.

Yahoo! guidelines

Yahoo! sees unique content as paramount. It wants to see pages designed for humans with links intended to help users find quality information quickly. Yahoo! also wants to see accurate meta information, including title and description meta tags.

Yahoo! does not want to see inaccurate, misleading pages or data, duplicate content, automatically generated pages, and other deceptive practices (many of which are in line with the Google Quality Guidelines). For more information on Yahoo!’s guidelines, visit http://help.yahoo.com/l/us/yahoo/search/basics/basics-18.html.

Bing guidelines

Bing wants to see well-formed pages, no broken links, sites using robots.txt as well as Sitemaps, simple (and static-looking) URLs, and no malware. Microsoft is vocal about shady tactics as well.

The company also advises against the use of artificial keyword density (irrelevant words, including the stuffing of ALT attributes), hidden text or links, and link farms. For more information on Bing search guidelines, visit http://bit.ly/8bJZp.

Search Engine Spam in Detail

It is possible to violate search engine guidelines without even knowing it! There are a lot of gray areas, and lots of clever ideas that sound good but are easily abused. You could be blacklisted for doing something that would be considered ethical by any philosopher, but that uses techniques associated with unethical behavior.

Search engines know about these techniques, but they don’t know about your intentions. This section explores many of the common scenarios that might be considered search engine spam. These are the kinds of things to avoid to ensure a steady flow of free visitors.

Keyword stuffing

Keyword stuffing is the mother of all spam. The idea is to acquire higher search engine rankings by using an artificially high keyword count in the page copy or in the HTML meta tags. In the early days of the Internet, all you had to do was stuff the keywords meta tag with enough of the same or similar keywords to get to the top of the SERPs on some search engines.

Keyword stuffing increases page keyword density, thereby increasing perceived page relevance. For example, suppose you have an online store selling basketball apparel. Here is how reasonable SEO copy might look:

MJ Sports is your number one provider for all your basketball needs. We are your one stop location for a great selection of basketball apparel. At MJ sports we know basketball. Basketball experts are at your fingertips, please call us at 1-800-mjsports.

In this example, we have a keyword density of about 10% for the keyword basketball. The keyword-stuffed version might look as follows:

Basketball at MJ Sports. Basketball apparel, basketball shoes, basketball shorts, basketball. We play basketball and we know your basketball needs. Best basketball shoes in town. MJ Sports basketball apparels. Why go anywhere else for basketball? Shop basketball now!

A keyword density of 29% for the keyword basketball hardly looks “natural.” Remember to write your page copy for the web visitor first. The page copy should be readable.
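To see where the 10% and 29% figures come from, here is a minimal keyword density calculation in Python. It assumes density is simply keyword occurrences divided by total words, using a simple regular-expression tokenizer of my own choosing; search engines do not publish how they tokenize or weight keywords, so treat this as an illustration only.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in `text` that are exactly `keyword` (case-insensitive).

    Words are runs of letters, digits, underscores, apostrophes, or hyphens, so
    "Basketball." and "basketball?" both count and "1-800-mjsports" is one word.
    """
    words = re.findall(r"[\w'-]+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


NATURAL = ("MJ Sports is your number one provider for all your basketball needs. "
           "We are your one stop location for a great selection of basketball apparel. "
           "At MJ sports we know basketball. Basketball experts are at your fingertips, "
           "please call us at 1-800-mjsports.")

STUFFED = ("Basketball at MJ Sports. Basketball apparel, basketball shoes, basketball "
           "shorts, basketball. We play basketball and we know your basketball needs. "
           "Best basketball shoes in town. MJ Sports basketball apparels. Why go anywhere "
           "else for basketball? Shop basketball now!")

print(f"{keyword_density(NATURAL, 'basketball'):.1%}")  # 9.5%
print(f"{keyword_density(STUFFED, 'basketball'):.1%}")  # 28.9%
```

The whole difference is 4 occurrences in 42 words versus 11 in 38; the second version trades readability for a number that modern search engines do not reward.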

Keyword stuffing no longer works for most search engines. This has been the case for some time. Furthermore, the use of the keywords meta tag has lost its meaning in SEO. Google and others do not pay attention to keyword meta tags for rankings. They do use meta (description) information for search result display text.

Hidden or small text

The purpose of hidden text is to be perfectly visible to web crawlers but invisible to web users. Search engines do not like this and consider it spam or deception. The goal of using hidden text is the same as in keyword stuffing: to artificially increase page rank by stuffing targeted keywords into the page via hidden text.

Hidden text can be deployed in several different ways, including via the <noscript> element, the text font color scheme, and CSS.

The <noscript> element

The <noscript> element is meant to provide alternative content in cases where scripting is not enabled in the web browser or where the browser does not support the scripting language used in the <script> tag.

According to W3C (http://www.w3.org/TR/REC-html40/interact/scripts.html#h-18.3.1):

The NOSCRIPT element allows authors to provide alternate content when a script is not executed. The content of a NOSCRIPT element should only be rendered by a script-aware user agent...

The overwhelming majority of people use browsers that have client scripting enabled. The <noscript> element is used to display alternative text in a browser where scripting is disabled. Since very few users disable scripting, very few, if any, will see the text. But the search engines will still discover it. Here is an example:

<SCRIPT>
// some dynamic script (JavaScript or VBScript)
function doSomething() {
  // do something
}
</SCRIPT>
<NOSCRIPT>
sildenafil tadalafil sildenafil citrate uprima impotence caverta
apcalis vardenafil generic softtabs generic tadalafil vagra zenegra
generic sildenafil citrate pillwatch generic sildenafil buy sildenafil
buy sildenafil citrate little blue pill clialis lavitra silagra
regalis provigrax kamagra generic pills online pharmacy
</NOSCRIPT>

The text found within the <noscript> element would appear only to a very small number of people (a number that is negligible for all intents and purposes).
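If you want to audit your own pages for this pattern (for example, pages a previous SEO vendor touched), a small script can pull out whatever text sits inside <noscript> so you can judge whether it is a genuine fallback or a keyword list. This is a sketch using Python's standard html.parser module; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class NoscriptTextCollector(HTMLParser):
    """Collects the text that appears inside <noscript> elements."""

    def __init__(self):
        super().__init__()
        self.depth = 0      # greater than 0 while inside a <noscript> element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "noscript":   # html.parser reports tag names in lowercase
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "noscript" and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0 and data.strip():
            self.chunks.append(data.strip())


def noscript_words(html: str) -> list[str]:
    """Return the words that a script-enabled browser would never display."""
    parser = NoscriptTextCollector()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks).split()


page = """<SCRIPT>function doSomething() { }</SCRIPT>
<NOSCRIPT>sildenafil tadalafil generic pills</NOSCRIPT>"""
print(noscript_words(page))  # ['sildenafil', 'tadalafil', 'generic', 'pills']
```

A legitimate <noscript> block usually reads like a sentence addressed to the visitor; a long run of unrelated keywords is the warning sign.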

The text font color scheme

This technique involves strategically formatting text to be the same color as the page background. The rationale behind this technique is that the hidden text is still part of the page, so it “shows up” for both the search engine crawler and the web user.

The problem, of course, is that the text appears invisible to the web user, unless it is highlighted by a mouse selection. This is clearly deception. Here is an example:

<html>
<head>
<meta http-equiv="Content-Language" content="en-us">
<title>Page X</title>
</head>
<body bgcolor="white">
<div>
<font color="white">
sildenafil tadalafil sildenafil citrate uprima impotence caverta
apcalis vardenafil generic softtabs generic tadalafil vagra zenegra
generic sildenafil citrate pillwatch generic sildenafil buy sildenafil
buy sildenafil citrate little blue pill clialis lavitra silagra
regalis provigrax kamagra generic pills online pharmacy
</font>
</div>
<!--Regular Text Goes Here -->
<p>Discount Satellite Dishes... Buy Viewsonic for $199...</p>
...
</body>
</html>

The text contained within the <div> tags is enclosed within a <font> tag whose color attribute is set to white. Because the page background is also white (the <body> tag sets bgcolor="white", and an unstyled page usually renders white anyway), the text blends in with the background perfectly, effectively becoming invisible.
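To catch this mistake (or this trick) in your own markup, you can compare each <font color> against the <body bgcolor>. The sketch below is hypothetical and deliberately narrow: it only handles the <font color>/<body bgcolor> pattern shown above, assumes a page with no bgcolor is white, normalizes just two color names, and ignores CSS entirely.

```python
from html.parser import HTMLParser

# Assumption: only a few color names are normalized; extend as needed.
NAMED_COLORS = {"white": "#ffffff", "black": "#000000"}


def normalize_color(value) -> str:
    value = (value or "").strip().lower()
    return NAMED_COLORS.get(value, value)


class SameColorFontFinder(HTMLParser):
    """Flags text inside <font color=X> when X matches the <body bgcolor>."""

    def __init__(self):
        super().__init__()
        self.bgcolor = "#ffffff"  # assumption: no bgcolor means a white page
        self.stack = []           # one flag per open <font>: does it hide its text?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "body" and attrs.get("bgcolor"):
            self.bgcolor = normalize_color(attrs["bgcolor"])
        elif tag == "font":
            self.stack.append(normalize_color(attrs.get("color")) == self.bgcolor)

    def handle_endtag(self, tag):
        if tag == "font" and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        if self.stack and self.stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


finder = SameColorFontFinder()
finder.feed('<body bgcolor="white"><div><font color="#FFFFFF">cheap pills</font></div>'
            "<p>Discount Satellite Dishes...</p></body>")
print(finder.hidden_text)  # ['cheap pills']
```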

Even ethical SEO practitioners can fall victim to this form of spam. Use care when picking font colors and using CSS. It is relatively easy to make a mistake and make your text invisible.

CSS

A more discreet way to hide text is with Cascading Style Sheets (CSS). Here is the example from the preceding section, but rewritten using CSS:

<html>
<head>
<meta http-equiv="Content-Language" content="en-us">
<title>Page X</title>
<style>
.special {
  color:#ffffff;
}
</style>
</head>
<body>
<div class="special">
sildenafil tadalafil sildenafil citrate uprima impotence caverta
apcalis vardenafil generic softtabs generic tadalafil vagra zenegra
generic sildenafil citrate pillwatch generic sildenafil buy sildenafil
buy sildenafil citrate little blue pill clialis lavitra silagra
regalis provigrax kamagra generic pills online pharmacy
</div>
<!--Regular Text Goes Here -->
<p>Best deals on Hockey Cards...</p>
...
</body>
</html>

Another way to do the same thing is to place all CSS content in an external file, or to use absolute positioning coordinates, effectively placing the optimized text outside the viewable screen area.

Don’t be foolish enough to attempt these hiding techniques. If your site employs CSS, be careful not to make mistakes that might appear as search engine spam. Consider the following example:

<style>
.special {
  color:#000000;
  padding:2px 2px 2px 2px;
  margin:0px;
  font-size:10px;
  background:#ffffff;
  font-weight:bold;
  line-height:1px;
  color:#ffffff;
}
</style>

Note that the color property is defined twice, the second time as #ffffff (white). The second definition overrides the first, so any text rendered inside a tag with this special CSS class will be rendered in white. Since the same class also sets a white background (background:#ffffff), the text will be invisible. Some people will attempt to augment this approach by placing all of the CSS in external files blocked by robots.txt.
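A quick way to catch the duplicate-color mistake is to apply the "last declaration wins" rule yourself and compare the final text color with the background. The following Python sketch is hypothetical and is not a real CSS parser: it ignores specificity, inheritance, comments, media queries, external stylesheets, and equivalent notations such as #fff versus #ffffff, and it assumes a white page when a rule sets no background.

```python
import re

# A CSS rule: selector text, then declarations between braces.
RULE = re.compile(r"([^{}]+)\{([^}]*)\}")


def effective_declarations(css: str) -> dict[str, dict[str, str]]:
    """Map each selector to its declarations; a later duplicate overrides an earlier one."""
    rules = {}
    for selector, body in RULE.findall(css):
        decls = rules.setdefault(selector.strip(), {})
        for declaration in body.split(";"):
            if ":" in declaration:
                prop, value = declaration.split(":", 1)
                decls[prop.strip().lower()] = value.strip().lower()
    return rules


def invisible_text_selectors(css: str, page_background: str = "#ffffff") -> list[str]:
    """Selectors whose final text color equals their own (or the page's) background."""
    flagged = []
    for selector, decls in effective_declarations(css).items():
        color = decls.get("color")
        background = decls.get("background-color", decls.get("background", page_background))
        if color is not None and color == background:
            flagged.append(selector)
    return flagged


css = """
.special {
  color:#000000;
  padding:2px 2px 2px 2px;
  margin:0px;
  font-size:10px;
  background:#ffffff;
  font-weight:bold;
  line-height:1px;
  color:#ffffff;
}
"""
print(invisible_text_selectors(css))  # ['.special']
```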

Tiny text

Tiny text strives for the same end goals as the preceding methods: higher search engine rankings via a higher keyword density. Tiny text is visible to both search engine spiders and web users. The only catch is that it is (for all practical purposes) unreadable by web users due to its size. Here is an example:

<p><font style="font-size:1px;">keyword keyword</font></p>

Rendering this text in a modern browser produces output that is only 1 pixel high, which would be impossible to read.
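Tiny text is easy to spot mechanically because the size is written right into the markup. The sketch below scans for pixel font sizes below a threshold; the 6-pixel cutoff is an arbitrary assumption, and sizes given in other units (pt, em, %) or set in external stylesheets are not detected.

```python
import re

# Matches pixel font sizes such as "font-size:1px" or "font-size: 0.5px".
FONT_SIZE_PX = re.compile(r"font-size\s*:\s*(\d+(?:\.\d+)?)px", re.IGNORECASE)


def tiny_font_sizes(markup: str, min_px: float = 6.0) -> list[float]:
    """Return every pixel font size in the markup that is smaller than min_px."""
    sizes = (float(size) for size in FONT_SIZE_PX.findall(markup))
    return [size for size in sizes if size < min_px]


print(tiny_font_sizes('<p><font style="font-size:1px;">keyword keyword</font></p>'))  # [1.0]
print(tiny_font_sizes(".special { font-size:10px; }"))  # []
```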

Cloaking

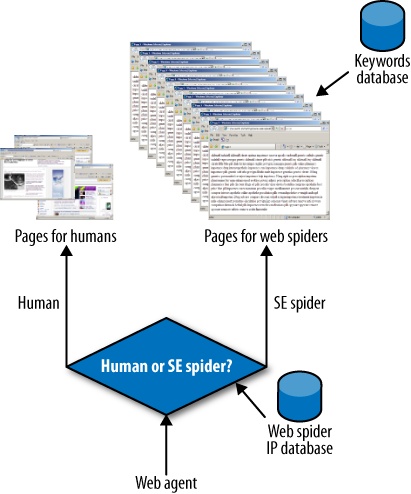

You can think of cloaking as the bait and switch technique on steroids! Typically, smaller sites employ this method in an attempt to be perceived as big sites by search engine spiders. Figure 17-1 shows how cloaking works.

On the arrival of any web visitor (agent), a website that employs cloaking checks the originating IP address to determine whether the visitor is a human or a web spider. Once this is determined, the page is served according to the type of visitor. Pages prepared for web spiders are usually nonsensical collections of targeted keywords and keyword phrases.

There are some legitimate reasons to serve different content to different IP addresses. One involves the use of geotargeting. For example, if you are running an international website, you may want to serve your images from the server node that is closest to the requesting IP address to improve your website performance. Akamai is one company that does this.

Sometimes you may want to show a page in French as opposed to English if the originating IP is from France. Other times, you may want to show pages specifically optimized for particular regions of the United States. Google advises that you treat search engine spiders the same way you would treat regular (human) users. In other words, serve the same content to all visitors.
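Because cloaking depends on who is asking, one rough self-check for your own site is to request the same URL as an ordinary browser and again with a crawler's user-agent string, then compare the responses. The Python sketch below covers only the comparison step, so it runs offline; the crude tag stripping, the word-level difflib ratio, and any threshold you choose are assumptions. It cannot reveal cloaking keyed on IP address alone, and legitimate variation such as geotargeted content or rotating ads will also lower the score.

```python
import difflib
import re


def visible_words(html: str) -> list[str]:
    """Very rough text extraction: replace tags with spaces, lowercase, split into words."""
    text = re.sub(r"<[^>]+>", " ", html)
    return re.findall(r"[\w'-]+", text.lower())


def content_similarity(browser_html: str, crawler_html: str) -> float:
    """Similarity in [0, 1] between the words served to a browser and to a crawler."""
    matcher = difflib.SequenceMatcher(None, visible_words(browser_html), visible_words(crawler_html))
    return matcher.ratio()


human = "<p>Welcome to our hockey card shop. Browse our latest arrivals.</p>"
bot = "<p>hockey cards hockey cards cheap hockey cards best hockey cards</p>"
print(content_similarity(human, human))      # 1.0
print(content_similarity(human, bot) < 0.5)  # True
```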

Doorway pages

A doorway page is a page completely optimized for web spiders. Sites usually employ hundreds or even many thousands of doorway pages, each optimized for a specific term or phrase.

Doorway pages are usually just a conduit to one or several target pages. This is accomplished by a redirect. The most common redirects are implemented via JavaScript or meta refresh tags. The following fragment shows the meta refresh version:

<!-- META refresh example-->
<html><head><title>Some Page</title>
<meta http-equiv="refresh" content="0;url=http://www.somedomain.com">
</head>
<body>
<!-- SE spider optimized text -->
</body>
</html>

The key part of the meta refresh redirect is the numerical parameter at the start of the content attribute, which specifies the number of seconds to wait before executing the redirect. In this case, the parameter is 0 seconds, so visitors are redirected immediately. The next fragment uses JavaScript to accomplish the same thing:

<!-- JavaScript redirect example-->
<html>
<head>
<title>Some Page</title>
<script type="text/javascript">
function performSpecial(){
  window.location = "http://www.somedomain.com/";
}
</script>
</head>
<body onLoad="performSpecial();">
<!-- SE spider optimized text -->
</body>
</html>

In this example, after the HTML page loads, the onLoad event handler calls the performSpecial JavaScript function, which then sets window.location to issue the redirect.

One of the most famous cases of doorway page spam involved BMW, which was allegedly using this technique on its German-language site. The site was eventually reindexed after removal of the spammy content.
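If you inherit a site, or suspect a vendor has added doorway pages, you can scan your own HTML for zero-delay meta refresh redirects like the meta refresh fragment in this section. The sketch below is a hypothetical helper built on Python's html.parser; it does not execute JavaScript, so redirects that set window.location in an onLoad handler need a separate check (a plain text search for window.location is a reasonable start).

```python
from html.parser import HTMLParser


class MetaRefreshFinder(HTMLParser):
    """Records (delay_seconds, target_url) for every <meta http-equiv="refresh"> tag."""

    def __init__(self):
        super().__init__()
        self.refreshes = []

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag != "meta" or attrs.get("http-equiv", "").lower() != "refresh":
            return
        delay, _, rest = attrs.get("content", "").partition(";")
        target = rest.strip()
        if target.lower().startswith("url="):
            target = target[4:].strip().strip("'\"")
        try:
            seconds = float(delay.strip())
        except ValueError:
            return  # malformed content attribute; ignore this tag
        self.refreshes.append((seconds, target))


def instant_redirects(html: str, max_delay: float = 0.0) -> list[str]:
    """Targets of meta refresh redirects that fire within max_delay seconds."""
    finder = MetaRefreshFinder()
    finder.feed(html)
    finder.close()
    return [url for delay, url in finder.refreshes if delay <= max_delay]


page = ('<html><head><title>Some Page</title>'
        '<meta http-equiv="refresh" content="0;url=http://www.somedomain.com">'
        '</head><body></body></html>')
print(instant_redirects(page))  # ['http://www.somedomain.com']
```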

Scraper sites

Many malicious sites are lurking on the Internet. Often, these sites will crawl your site and copy its entire contents. Usually, they will post your content on their sites in an attempt to get the ranking benefits of your site.

With the use of offline browsers, anyone can copy your site. After slurping all of the contents, some black hat SEO practitioners could convert all of these pages to act as doorway pages to their target site, simply by inserting a few lines of JavaScript code or the meta refresh equivalent.

Link farms, reciprocal links, and web rings

The concept of link farms is fairly old. Free For All (FFA) sites are a thing of the past. In their heyday, a site could get thousands of useless backlinks with the click of a button. The concept was based on the idea that link farms would boost the popularity of the submitted URL. If you are caught using an FFA site, it can harm your rankings.

Reciprocal links fall in the category of link exchange schemes. Sometimes reciprocal links can occur naturally for topically related websites. For sites with high authority (trust), this is not a big concern. For smaller (newer) sites, be careful to not overdo it. Google has penalized sites using reciprocal links in the past.

A web ring is a circular structure composed of many member sites—approved by the web ring administrator site. Each member site of the ring shares the same navigation bars, usually containing “Next” and “Previous” links.

The web ring member sites share a common theme or topic that would be something desirable in terms of SEO. Having relevant inbound and outbound links is a good thing. However, most web rings (at best) are just a collection of personal sites. Using web rings can be perceived as an endorsement of all associated sites in the web ring. Web rings can also fall into the trap of using too many reciprocal links, which are likely to be penalized.
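Because every ring member carries Next and Previous links, each site ends up linking to its neighbors and being linked back, which is the reciprocal pattern described earlier. Assuming you already have a map of which sites link to which (for example, compiled from your own backlink reports), a few lines of Python can list the reciprocal pairs; the function name and domains below are placeholders.

```python
def reciprocal_pairs(links: dict[str, set[str]]) -> set[tuple[str, str]]:
    """Return each pair of distinct sites that link to each other, sorted alphabetically."""
    pairs = set()
    for source, targets in links.items():
        for target in targets:
            if source != target and source in links.get(target, set()):
                pairs.add(tuple(sorted((source, target))))
    return pairs


# A three-site ring: each member's Next/Previous links point at the other two.
ring = {
    "a.example": {"b.example", "c.example"},
    "b.example": {"c.example", "a.example"},
    "c.example": {"a.example", "b.example"},
}
print(sorted(reciprocal_pairs(ring)))
# [('a.example', 'b.example'), ('a.example', 'c.example'), ('b.example', 'c.example')]
```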

Hidden links

There are many scenarios for placing hidden links. Say a black hat SEO consultant is working on two new sites, A and B, while also having access to an existing (popular) site, X. All three sites are completely unrelated, but to improve the new sites’ rankings, he creates hidden links from site X to sites A and B, so both sites are credited with having more links, even though there’s no reason for the relationship.

Hidden links are typically used to boost the link popularity of the recipient site. The assumption is that the victim site is compromised by an unethical SEO practitioner (or hacker) working on the site. The following example uses a 0-pixel image to employ a hidden link:

To protect your PC, <a href="http://www.domaintobeboosted.com"><img src="1pixel.gif" width="0" height="0" hspace="0" alt="deals on jeans" border="0"></a>use antivirus programs.

Because the image is 0 pixels wide and 0 pixels high, the link renders as nothing visible on the page, but search engine crawlers still pick it up. The next example uses the <noscript> element to accomplish the same thing:

<script>
// do something
</script>
<noscript>
<a href="http://www.domaintobeboosted.com">For a great deal on jeans visit www.domaintobeboosted.com</a>
</noscript>

In this example, we are using the <noscript> element (as we discussed in “Hidden or small text”). Unethical SEO practitioners can easily place these hidden links in their clients’ web pages without the clients noticing anything.
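To check your own pages for the zero-pixel image pattern, you can look for links whose only content is a 0- or 1-pixel image. This is a hypothetical sketch under simple assumptions (sizes given as width/height attributes, no CSS sizing); it does not look inside <noscript> or at links hidden with CSS.

```python
from html.parser import HTMLParser


class HiddenLinkFinder(HTMLParser):
    """Collects hrefs of links whose only content is a 0- or 1-pixel image."""

    TINY = {"0", "1"}  # width/height attribute values treated as invisible

    def __init__(self):
        super().__init__()
        self.href = None          # href of the <a> currently open, if any
        self.has_text = False
        self.has_tiny_image = False
        self.suspicious = []

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "a" and "href" in attrs:
            self.href, self.has_text, self.has_tiny_image = attrs["href"], False, False
        elif tag == "img" and self.href is not None:
            if attrs.get("width") in self.TINY or attrs.get("height") in self.TINY:
                self.has_tiny_image = True

    def handle_data(self, data):
        if self.href is not None and data.strip():
            self.has_text = True

    def handle_endtag(self, tag):
        if tag == "a" and self.href is not None:
            if self.has_tiny_image and not self.has_text:
                self.suspicious.append(self.href)
            self.href = None


finder = HiddenLinkFinder()
finder.feed('To protect your PC, <a href="http://www.domaintobeboosted.com">'
            '<img src="1pixel.gif" width="0" height="0" hspace="0" alt="deals on jeans" border="0">'
            '</a>use antivirus programs.')
print(finder.suspicious)  # ['http://www.domaintobeboosted.com']
```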

Paid links

Search engines know that paid links are essentially one of the biggest noise factors in their ranking algorithms. To minimize this noise, Google actively encourages webmasters to report paid links. Google is interested in knowing about sites that either sell or buy paid links.

Using paid links will not necessarily get your site penalized. Google cares about paid links that distribute PageRank. So, if you are worried about being penalized, you can do one of two things: use the nofollow link attribute or use an intermediary page that is blocked by robots.txt. To obtain further information, visit http://www.google.com/support/webmasters/bin/answer.py?hl=en&answer=66736.
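If you go the intermediary-page route, it is worth confirming that your robots.txt rules really do block that page. Python's standard urllib.robotparser module can evaluate rules you pass in as text; the /out/ directory and the example.com URLs below are hypothetical stand-ins for your own redirect page, and the helper name is illustrative.

```python
from urllib.robotparser import RobotFileParser


def blocked_for(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if the given robots.txt rules forbid user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


robots = """User-agent: *
Disallow: /out/
"""
print(blocked_for(robots, "https://www.example.com/out/sponsor-1"))  # True
print(blocked_for(robots, "https://www.example.com/articles/"))     # False
```

Note that robots.txt only asks well-behaved crawlers not to fetch the page; it is a convention, not an access control.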

Some sites sell links in more discreet ways. You can “rent” an entire page that points to your site, or buy custom ad space that does not follow any recognizable ad pattern. Nonetheless, as they say, the truth gets out eventually. For some, paid links may provide just enough traction early on, after which they can clean up their act by employing strictly white hat practices.

Regardless of what search engines think, using paid links could be a legitimate strategy (if you use them in the ethical ways discussed earlier) and a viable alternative to organic SEO. Whether it makes sense for you depends on many factors, including budget, reputation, and brand; use caution and make sure you do it correctly.

Blog, forum, and wiki spam

How many times have you seen comment spam? This could range from legitimate users posting comments to automated nonsensical comments submitted by black hat SEO practitioners. Legitimate comments can also include URLs. The ultimate goal in this case is much the same as in spam comments: passing link juice to the site of choice. The only difference is that legitimate comments add some value to the page.

With the introduction of the nofollow link attribute, the big search engines have united in the fight against comment spam. The idea of the nofollow link attribute is to disallow the passing of link juice from the blog or forum site to the destination URLs found in the web user comments.

By adding the nofollow link attribute to all of the link tags found in the comments, you are simply telling search engines that you cannot vouch with confidence for the value, accuracy, and quality of the outbound links. The intent was to discourage SEO black hats from utilizing comment spam.
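Most blog and forum software handles this for you, but if you render comments yourself, the idea is simply to emit every user-supplied link with rel="nofollow". A minimal Python sketch; the function name is hypothetical, and the HTML escaping and the http/https scheme check are ordinary safety measures added here, not something the nofollow convention requires.

```python
import html
from urllib.parse import urlparse


def comment_link(url: str, text: str) -> str:
    """Render a link from a user comment with rel="nofollow".

    Non-HTTP(S) URLs (for example, javascript:) are dropped and only the escaped text is kept.
    """
    if urlparse(url).scheme not in ("http", "https"):
        return html.escape(text)
    return '<a href="{}" rel="nofollow">{}</a>'.format(html.escape(url, quote=True), html.escape(text))


print(comment_link("http://example.com/?a=1&b=2", "my site"))
# <a href="http://example.com/?a=1&amp;b=2" rel="nofollow">my site</a>
```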

This method can never be a catchall solution. The problem is that a majority of sites are not using this attribute; in addition, most sites are not even using SEO. Furthermore, not all search engines treat the nofollow link attribute equally: Google is the most conservative, followed by Yahoo!, while Ask.com completely ignores the attribute.

In the case of wikis, it is relatively easy to see how abuse can unfold. Unethical editors can deliberately pass link juice to sites of their own interest or, worse yet, be paid to do so.

Acquiring expired domains

People acquire expired domains in an attempt to acquire the domains’ link juice. Although Google has said it would devalue all backlink references to expired domains, it may still be helpful to buy expired domains, especially if they contain keywords that are pertinent to your website.

Buying expired domains has other benefits, even if the domain is completely removed from the search engine index. Valuable backlinks may still be pointing to expired domains. In addition, some people may have bookmarked the domain. These residual references may also merit the effort to acquire these domains.

In another sense, knowing that search engines will erase all of the acquired benefits of an expired domain means it is important to stay on top of your domain expiry administration. Domain registrars allow for a certain grace period, typically up to 60 days, to renew a domain. Depending on your hosting provider, your site may or may not be visible during this grace period. Technically speaking, it should be visible. Some hosting providers might block your site entirely to remind you that you need to renew your domain.
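Staying on top of expiry dates is easy to automate. The sketch below classifies a domain given its expiry date; the 30-day warning window is an arbitrary assumption, and the 60-day grace period simply mirrors the typical figure mentioned above, so check your own registrar's terms. The function name is hypothetical.

```python
from datetime import date, timedelta


def renewal_status(expires_on: date, today: date, warn_days: int = 30,
                   grace_days: int = 60) -> str:
    """Classify a domain as 'ok', 'renew soon', 'in grace period', or 'lapsed'."""
    if today < expires_on - timedelta(days=warn_days):
        return "ok"
    if today < expires_on:
        return "renew soon"
    if today <= expires_on + timedelta(days=grace_days):
        return "in grace period"
    return "lapsed"


expiry = date(2024, 6, 1)
for day in (date(2024, 4, 1), date(2024, 5, 20), date(2024, 7, 1), date(2024, 9, 1)):
    print(day, renewal_status(expiry, day))
# 2024-04-01 ok
# 2024-05-20 renew soon
# 2024-07-01 in grace period
# 2024-09-01 lapsed
```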

Acquiring misspelled domain names

Many times web users typing in your domain name will make mistakes. Webmasters or content publishers may also make typing mistakes in your backlinks. These situations can be viewed as opportunities to gather additional traffic.

There is nothing wrong with buying misspelled domain names—when it comes to your own domain name misspellings. They can become a part of your SEO strategy. In these cases, using the permanent HTTP 301 redirect from the misspelled domain to the main domain is the correct approach.
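The redirect logic itself is tiny. The sketch below uses Python's standard http.server module only to illustrate it; in practice you would usually configure the 301 in your web server or at your registrar. All domain names, and the helper and class names, are hypothetical placeholders.

```python
from http.server import BaseHTTPRequestHandler

CANONICAL = "https://www.example.com"  # hypothetical main domain
MISSPELLINGS = {"exmaple.com", "www.exmaple.com", "www.examp1e.com"}  # hypothetical typos


def redirect_target(host: str, path: str):
    """Return the 301 Location for a misspelled host, or None if no redirect applies."""
    hostname = host.split(":", 1)[0].lower()  # drop any port number
    if hostname in MISSPELLINGS:
        return CANONICAL + path
    return None


class TypoRedirectHandler(BaseHTTPRequestHandler):
    """Answers requests for misspelled domains with a permanent (301) redirect."""

    def do_GET(self):
        target = redirect_target(self.headers.get("Host", ""), self.path)
        if target is None:
            self.send_error(404)  # this server only handles the typo domains
            return
        self.send_response(301)
        self.send_header("Location", target)
        self.end_headers()


print(redirect_target("www.exmaple.com", "/shop?id=7"))  # https://www.example.com/shop?id=7
```

To try the handler locally, pass it to http.server.HTTPServer and call serve_forever(). The path, including any query string, is preserved so deep links on the misspelled domain land on the matching page of the main domain.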

When you buy a misspelling of a known (brand name) domain name, you could get into legal trouble. This black hat technique is troublesome, as it is trying to capitalize on the known brand while also confusing the web searcher.

Site hacking

Many sites get hacked every day. Once a vulnerability is discovered within a particular (usually popular) piece of software, hackers can have a field day wreaking havoc on all sites running that software. Staying up-to-date with software patches and performing regular database and content backups is extremely important.

Depending on the severity of the damage, it could take quite some time to get your site up and running again once hackers have compromised it. Chances are, web crawlers will already have visited your site and are likely passing link juice to any hacker site referenced on the compromised pages. Sometimes your site can be hacked without your knowledge, and hackers can continue exploiting it by installing hidden links or other sorts of spam.

You can detect hacker activities at the file or system level in many ways. If your website contains mostly static files and content, you can use version control software (such as CVS). If you have a dynamic website, you can use an offline browser to periodically download your site and then perform a comparison at the file level. You can also compare the contents of your database to past versions in order to detect any differences or anomalies.
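The file-level comparison can be as simple as hashing every file in a known-good copy (for example, the directory your offline browser downloaded to) and diffing against a later copy. A minimal Python sketch; the function names are hypothetical, and it only tells you what changed, not whether a change was malicious.

```python
import hashlib
import os


def snapshot(root: str) -> dict[str, str]:
    """Map each file path (relative to root) to the SHA-256 digest of its contents."""
    digests = {}
    for dirpath, _, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                digests[os.path.relpath(path, root)] = hashlib.sha256(f.read()).hexdigest()
    return digests


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """List files added, removed, or changed between two snapshots."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(p for p in old.keys() & new.keys() if old[p] != new[p]),
    }


# Digests shortened for readability; real ones come from snapshot().
before = {"index.html": "1a2b", "about.html": "3c4d"}
after = {"index.html": "1a2b", "about.html": "9f9f", "wp-x.php": "5e6f"}
print(diff_snapshots(before, after))
# {'added': ['wp-x.php'], 'removed': [], 'changed': ['about.html']}
```

Store the known-good snapshot somewhere the web server cannot write to; otherwise an intruder could update it along with your files.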

What If My Site Is Penalized for Spam?

Sometimes search engines can make a mistake and mark your site as spam. Other times you are knowingly or unknowingly being deceptive. If you are caught conducting search engine spam, any other sites that you own might come under scrutiny and be considered part of a bad neighborhood. Whatever the case, if you want to “come clean,” you can submit your site for reconsideration.

Requesting Google site reevaluation

You can request that Google reconsider your site in Google Webmaster Tools. Before you submit your site for reconsideration, remove any shady pages or practices, submit a truthful report, and then wait for your site to reappear in the SERPs. This can take several weeks or more, so patience is required.

Here is what Google says when you are submitting your website’s reconsideration request (https://www.google.com/webmasters/tools/reconsideration?hl=en):

Tell us more about what happened: what actions might have led to any penalties, and what corrective actions have been taken. If you used a search engine optimization (SEO) company, please note that. Describing the SEO firm and their actions is a helpful indication of good faith that may assist in evaluation of reconsideration requests. If you recently acquired this domain and think it may have violated the guidelines before you owned it, let us know that below. In general, sites that directly profit from traffic (e.g., search engine optimizers, affiliate programs, etc.) may need to provide more evidence of good faith before a site will be reconsidered.

Use caution before you submit your site for reconsideration. Keep your request straight to the point. In the worst case, creating a new domain may be your only option, provided you are sure that none of your pages are employing search engine spam.

Requesting Yahoo! site reevaluation

Yahoo! provides a web form you can use to initiate a reevaluation request. You should fill out and submit this form after you have cleaned up your site. What I said regarding Google reconsideration requests also applies to Yahoo!. Simply be honest, fix any spam, and then contact Yahoo! using the form.

Requesting Bing site reevaluation

Bing is no different from Google and Yahoo!. It also provides an online submission method for submitting site reevaluations. You can find the online form at http://bit.ly/1NgzUo. Use the same advice I gave regarding Google and Yahoo! when submitting Bing reevaluation requests.

Summary

All search engines regularly post and update their webmaster guidelines. Google’s webmaster guidelines talk about “illicit practices” and possible penalties (http://bit.ly/PFen):

Following these guidelines will help Google find, index, and rank your site. Even if you choose not to implement any of these suggestions, we strongly encourage you to pay very close attention to the “Quality Guidelines,” which outline some of the illicit practices that may lead to a site being removed entirely from the Google index or otherwise penalized. If a site has been penalized, it may no longer show up in results on Google.com or on any of Google’s partner sites.

Basically, if you break the rules and use any sneaky tactics, your site will eventually be penalized. Often, it does not take more than a few days before you are caught. Even if search engines cannot detect a particular deception through their underlying algorithms, your site (or sites) is still likely to be caught, thanks to web users reporting spam results. All major search engines provide easy ways for the public or (often angry) webmasters to report search engine spam. Here are links to forms for reporting SERP spam:

As we discussed in this chapter, understanding search engine spam is important. It is a road map of all the things you should avoid. Although certain black hat SEO practitioners in the industry boast of their black-hat-style successes, this is not the way to go.

The question then becomes: is it worth it to explore the use of shady tactics for short-term benefit? Black hat techniques are focused only on short-term benefits and are increasingly easy for the big search engines to spot. Once you are caught in a deceptive tactic, it is very hard to be trusted again. It is best to stay clear of any and all deceptions. Invest your time in building legitimate content that inspires people to link to your site.