Chapter 14. Content Considerations

One of the most basic obstacles that any site can face is lack of unique, compelling, quality content. Good content is planned, researched, copyedited, relevant, and long-lasting. Good content is also current, unique, interesting, and memorable.

Producing lots of content does not guarantee the success of your website. Producing little or no new content is not the answer either. Striking the right balance between short- and long-term content will help make your site stand out from the crowd.

Content duplication is one of the most common SEO challenges. With the introduction of the canonical link element, webmasters now have another tool to combat content duplication.

Optimizing your sites for search engine verticals can help bring in additional traffic besides traffic originating from standard organic results. In this chapter, we will discuss all of these topics in detail.

Becoming a Resource

If you had to pick one thing that would make or break a site, it would almost always be content. This does not automatically mean that having the most content will get you the rankings or traffic you desire. Many factors play a role. The best sites produce all kinds of content. Website content can be classified into two broad areas: short-term content and long-term content. You should also be aware of the concept of predictive SEO.

Predictive SEO

Depending on your niche, you can anticipate future events in your keyword strategy. Some events occur every day, every week, once a year, once every few years, or once in a lifetime. You can use this knowledge to plan content development around these future events.

Future events, buying cycles, and buzz information

You can use Google Trends to see what’s happening in the world of search, as well as to identify past trends or keyword patterns that you can leverage when planning for future events.

Future events

Many sites are being built today that will see the light of day only when certain events occur in the future. For example, the Winter Olympic Games occur only every four years. If you are running a sports site, you could start creating a site (or a subsite) specifically for the next Winter Games. This way, you can be ready when the time comes. In the process, you will be acquiring domain age trust from Google and others as you slowly build up your content and audience in time for the Winter Games.

Buying cycles

In the Western world, there are certain known buying cycles throughout the year. For example, search volume for the money modifier gift starts to spike in early November. It peaks a few days before Christmas and returns to its regular level by the end of December. Figure 14-1 shows this concept.

Online retailers know this sort of information and are always looking to capitalize on these trends. From an SEO perspective, site owners are typically advised to optimize their sites well before their anticipated shopping surge event, as it takes some time for search engines to index their content.
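Planning around a buying cycle mostly comes down to date arithmetic: find the week in which the seasonal ramp begins, then work backward by however long you expect crawling and indexing to take. The following Python sketch assumes you have exported weekly search-index values as (date, value) pairs; the series, the 50%-of-peak threshold, the six-week lead time, and the helper names are all hypothetical.

```python
from datetime import date, timedelta


def ramp_start(weekly_index, threshold=0.5):
    """Return the first week whose value reaches `threshold` times the peak.

    `weekly_index` is a list of (week_start, value) pairs (hypothetical export).
    """
    peak = max(value for _, value in weekly_index)
    return next(week for week, value in weekly_index if value >= threshold * peak)


def publish_deadline(weekly_index, lead_time_weeks=6, threshold=0.5):
    """Latest publish date that leaves `lead_time_weeks` for crawling and indexing."""
    return ramp_start(weekly_index, threshold) - timedelta(weeks=lead_time_weeks)


# Hypothetical weekly index for a seasonal "gift" keyword.
first_week = date(2024, 10, 7)
values = [20, 22, 25, 40, 55, 70, 85, 100, 60, 25]
series = [(first_week + timedelta(weeks=i), v) for i, v in enumerate(values)]

print(ramp_start(series))        # 2024-11-04
print(publish_deadline(series))  # 2024-09-23
```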

Buzz information

Search engines are in a race to come up with better live search algorithms. Many sites effectively act as information repeaters. This includes many well-respected (big player) media news sites. They repackage news that someone else has put forth. Some clever sites employ various mashups to look more unique.

The idea of content mashups is to create content that is effectively better than the original by providing additional quality information or compelling functions. In this competitive news frenzy, sites that can achieve additional (value add) functionality benefit from a perceived freshness relevance.

Content mashups are everywhere. Some sites employ commonly available (popular) keyword listings, including those at the following URLs:

These types of content mashup schemes employ scraped links to build new (mixed) content by using these popular keywords.

Short-Term Content

Short-term content is the current Internet buzz. Sites producing such content include blogs, forums, and news sites. Short-term content is important, as it aids webmasters by providing a freshness and relevancy boost to their websites. Many legitimate and unethical sites use this to their advantage.

Unexpected buzz

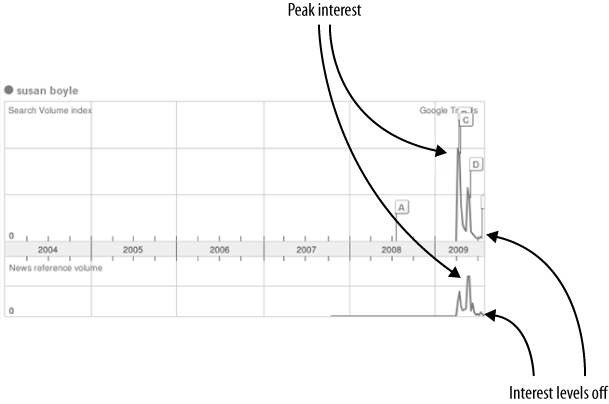

The trick when creating short-term content is to do it while the specific topic is still “hot.” Better yet, if you can anticipate the future buzz the content will generate, you will be a step ahead of your competition. Figure 14-2 illustrates search and news article volume trends for the keyword susan boyle (the unexpected frontrunner on the televised singing competition, “Britain’s Got Talent”).

From Figure 14-2, it should be easy to see what transpired over the past few years. From the beginning of 2004 to about April 2009, this keyword was not even on the map. Everything changed after the show was televised. News sites and blogs alike were in a frenzy to cover this emerging story.

Just a few months later, search volumes tumbled. And when you look closely at the graph in Figure 14-2, you can see another pattern. Figure 14-3 shows the same graph, but on a 12-month scale. You can see that in terms of search volume, interest in susan boyle was highest at the start of the graph (point A in the figure). When you look at the news reference volume graph, you can see how the news and blog sites scrambled to cover the news, as indicated by the first peak in the graph.

Shortly thereafter, the search volume plummeted (between points B and C in the figure), which is also reflected in the number of news articles generated during that time. By the time the second search volume peak appeared around mid-May (point C), many more news sites were competing for essentially the same story (and the same content). By July 2009 (point D), the search volume index and news reference volume were nearly identical.

Although I am not going to go into detail on TV headlines that produce search volume peaks, this discussion should reinforce the importance of being ready to produce content so that you are one of the first sites to capture any unexpected buzz. You want to catch the first peak, as in this case, so that your content will be unique and fresh and you won’t have to deal with a large number of competitor sites in the same news space. You want to capture the search frenzy at its earliest.
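One way to be ready for the first peak is to watch your keywords for sudden jumps. The Python sketch below flags a day whose value exceeds a multiple of the trailing average; the window, the multiplier, the helper name, and the sample counts are assumptions for illustration, not a method Google Trends itself uses.

```python
from statistics import mean


def detect_spikes(daily_values, window=7, factor=3.0):
    """Return indices of days whose value exceeds `factor` times the mean of
    the preceding `window` days. Both parameters are tuning assumptions."""
    spikes = []
    for i in range(window, len(daily_values)):
        baseline = mean(daily_values[i - window:i])
        if baseline > 0 and daily_values[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Hypothetical daily search counts for a keyword that suddenly goes viral.
counts = [5, 4, 6, 5, 5, 4, 6, 5, 80, 300, 250]
print(detect_spikes(counts))  # [8, 9, 10]
```

The first flagged index (here, day 8) marks the point at which the story becomes worth covering.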

Expected buzz

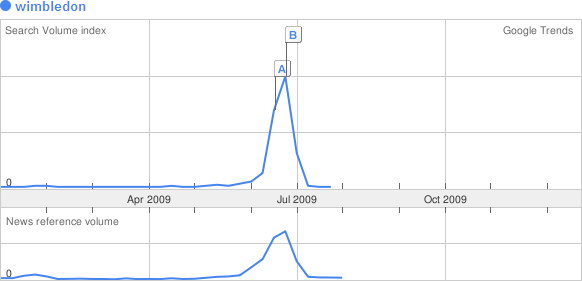

Now let’s examine what happens when you can anticipate a certain event. For example, Wimbledon, one of the most popular tennis tournaments in the world, takes place every year. Figure 14-4 shows a Google Trends graph for the keyword wimbledon.

Several things should be clear when you look at Figure 14-4. The two peaks, indicating search volume index and news reference volume, are very much in sync. This reflects how thoroughly content producers prepare for the tournament and produce content that mirrors the buzz coming out of it.

The high peak occurs around the tournament finals at the end of June, which is also when news coverage is highest. Creating short-term content for expected events is much easier than doing the same for unexpected events. However, you will face a lot more competition for the same storylines.

Long-Term Content

Long-term content is content that sticks. It is the type of content that people bookmark and come back to many times. This type of content has a greater lifespan than short-term content. Its relevancy does not diminish much over time.

Long-term content can be anything from online software tools to websites acting as reference resources. Let’s take a look at some examples.

If you use Google to search for any word that appears in the English dictionary, chances are Google will provide among its search results a result from Wikipedia. The English dictionary does not change much over time. Sites containing English language dictionary definitions, such as Wikipedia, are well positioned to receive steady traffic over time.

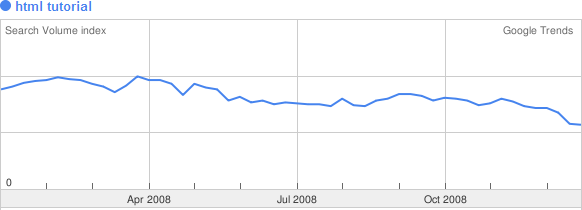

Most computer programmers use Google to find answers to their development problems. Sites providing answers to such problems can also benefit from this, as they will receive a steady flow of traffic. Figure 14-5 illustrates search volume based on the keyword html tutorial.

You can see that the overall search volume is more or less the same month after month. If your site hosts the best “HTML Tutorial” around and is currently getting good traffic, you can be pretty certain that your site will be getting steady traffic in the future (assuming no other variables are introduced). When people are looking for reference information, they will continue searching until they find exactly what they are looking for. Searching several SERPs is not uncommon.
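A rough way to tell long-term keywords from short-term ones is to measure how much their monthly volume varies. This Python sketch uses the coefficient of variation (standard deviation divided by mean); the monthly values, the 0.25 cutoff, and the function names are hypothetical choices.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Population standard deviation divided by the mean (lower = steadier)."""
    return pstdev(values) / mean(values)


def classify_keyword(monthly_index, steady_threshold=0.25):
    """Label a keyword 'long-term' if its monthly volume is steady, else 'short-term'.
    The 0.25 cutoff is an arbitrary assumption, not an industry standard."""
    cv = coefficient_of_variation(monthly_index)
    return "long-term" if cv <= steady_threshold else "short-term"


html_tutorial = [70, 72, 68, 71, 69, 73, 70, 68, 72, 71, 69, 70]  # hypothetical
buzz_keyword = [0, 0, 0, 100, 45, 20, 12, 8, 6, 5, 4, 4]          # hypothetical

print(classify_keyword(html_tutorial))  # long-term
print(classify_keyword(buzz_keyword))   # short-term
```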

Content Balance

Both short- and long-term content have their advantages. How much of your content is short term or long term depends on your line of business. If you are in the news or blog business, you will do well by continuously producing new content. If you are providing technical information, you may wish to offer a mix of new articles and technical reference articles, which you can package into a different article category that is easy to access from anywhere on your site.

Organic content

If you are producing lots of semantically similar content, you should consider making it easy for people to add comments to that content. Each comment helps make your associated content page more unique. Content uniqueness is something to strive for, especially if you are in a competitive niche.

Content Creation Motives

At the most basic level, your content is your sales pitch. You create content to make a statement. Compelling content is content that provides newsworthy information, answers to questions, reference information, and solutions to searchers’ problems.

The ultimate motive of any website content is to provide information to visitors so that they can accomplish a particular business goal.

Engaging your visitors

Modern sites try to make it easy for their visitors to comment on and share their content. Engaging visitors adds an element of trust when people can say what they wish to say about your products, services, ideas, and so forth. This is mutually beneficial, as you can use your visitors’ feedback to improve your products and services.

Fortifying your web authority

Staying ahead of the competition requires continual work. You cannot just design your site and leave. Creating a continuous buzz and interest is necessary to foster and cultivate your brand or business.

Updating and supplementing existing information

All things change. You need to ensure that your site is on top of the latest happenings in your particular niche. Using old information to attract visitors will not work.

Catching additional traffic

Creating additional content to get more traffic is one of the oldest tricks in the book. Search engines are getting smarter by the day, so to make this work, your content will need to be unique.

Make no mistake: the fact that content is unique does not automatically say anything about its quality. However, search engines can get a pretty good idea about the quality of your content based on many different factors, such as site trust, social bookmarking, user click-throughs, and so on.

Content Duplication

Content duplication is among the most talked about subjects in SEO. With the advances we have seen in search engine algorithms, the challenge of content duplication is no longer as big as it used to be. Duplicate content can occur for many different reasons. Some common causes include affiliate sites, printer-friendly pages, multiple site URLs to the same content, and session and other URL variables. This section talks about different ways to deal with content duplication.

Canonical Link Element

Content duplication is one of the most common challenges webmasters face. Although you can deal with content duplication in many different ways, the introduction of the canonical link element is being touted as one of the resolutions to this issue. As of early 2009, the canonical link element was officially endorsed by Google, Yahoo!, and Microsoft. Microsoft’s blog site (http://bit.ly/lIVY0) stated that:

Live Search (Bing) has partnered with Google and Yahoo to support a new tag attribute that will help webmasters identify the single authoritative (or canonical) URL for a given page.

Ask.com has also endorsed the canonical link element. Needless to say, many popular CMSs and blogging software packages scrambled to produce updated versions of their code or plug-ins to support canonical URLs. These include WordPress, Joomla, Drupal, and many others.

What is a canonical link?

A canonical link is like a preferred link. This preference comes to light when we are dealing with duplicate content and when search engines choose which link should be favored over all others when referring to identical page content.

Matt Cutts, Google search quality engineer, defines canonicalization on his blog site as follows:

Canonicalization is the process of picking the best url when there are several choices, and it usually refers to home pages.

Canonical link element format

You place the canonical link element in the header part of the HTML file, within the <head> tag.

Its format is simply:

```html
<link rel="canonical" href="http://www.mydomain.com/keyword.html" />
```
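To confirm that your pages actually emit this element, you can parse them with Python’s standard html.parser module. This is a minimal sketch; CanonicalFinder and find_canonical are hypothetical helper names, and a result with zero entries or more than one entry suggests the page needs attention.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element it sees."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> tags also arrive here, because the default
        # handle_startendtag() calls handle_starttag().
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_tokens = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_tokens and href:
            self.canonicals.append(href)


def find_canonical(html):
    """Return all canonical URLs declared in an HTML document."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = ('<html><head><link rel="canonical" '
        'href="http://www.mydomain.com/pants.html" /></head><body></body></html>')
print(find_canonical(page))  # ['http://www.mydomain.com/pants.html']
```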

Let’s say you have a page, http://www.mydomain.com/pants.html, which you want to be the preferred page, as it points to the original (main) document. But you also have three other pages that refer to the same content page, as in the following:

http://www.mydomain.com/catalog.jsp?part=pants&category=clothes&

http://www.mydomain.com/catalog.jsp;jsessionid=B8C2341GE57FAF195DE34027A95DA3FC?part=pants&category=clothes

http://www.mydomain.com/catalog.jsp?pageid=78234

To apply the newly supported canonical link element, you would place the following in the HTML header section of each of the three pages served at the preceding URLs:

```html
<html>
<head>
<!-- other header tags/elements -->
<link rel="canonical" href="http://www.mydomain.com/pants.html" />
</head>
<body>
<!-- page copy text and elements -->
</body>
</html>
```

The Catch-22

Although the introduction of the canonical link element certainly solves some duplication problems, it does not solve them all. In its current implementation, you can use it only for pages on the same domain or a related subdomain.

Here are examples in which the canonical link element can be applied:

http://games.mydomain.com/tetris.php

http://mydomain.com/shop.do?gameid=824

http://retrogames.mydomain.com/tetris.com

Here are examples in which the canonical link element cannot be applied:

http://games.mydomain.net/tetris.php

http://myotherdomain.com/shop.do?gameid=824

The reason the big search engines do not support cross-domain canonical linking is likely related to spam. Imagine a domain being hijacked, and then hackers using the canonical link element to link the hijacked domain to one of their own in an attempt to pass link juice from the hijacked domain. Although the canonical link element is not a perfect solution, it should be a part of your standard SEO toolkit when dealing with content duplication.
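If you generate canonical links programmatically, a simple guard can reject targets outside your own domain. The Python sketch below treats the last two labels of the host name as the domain, an assumption that breaks for suffixes such as .co.uk; the function names are hypothetical.

```python
from urllib.parse import urlsplit


def base_domain(host):
    """Naive registrable domain: the last two labels of the host name.
    Assumption: this is wrong for multi-part suffixes such as .co.uk."""
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def canonical_allowed(page_url, canonical_url):
    """True if the canonical target is on the same domain or a subdomain of it,
    matching the same-domain restriction described above."""
    page_host = urlsplit(page_url).hostname or ""
    target_host = urlsplit(canonical_url).hostname or ""
    return base_domain(page_host) == base_domain(target_host)


print(canonical_allowed("http://games.mydomain.com/tetris.php",
                        "http://mydomain.com/shop.do?gameid=824"))   # True
print(canonical_allowed("http://games.mydomain.net/tetris.php",
                        "http://mydomain.com/shop.do?gameid=824"))   # False
```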

Possibility of an infinite loop

Take care when using canonical link elements, as you could run into a situation such as this:

```html
<!-- Page A -->
<html>
<head>
<link rel="canonical" href="http://www.mydomain.com/b.html" />
</head>
<body><!-- ... page A text ... --></body>
</html>

<!-- Page B -->
<html>
<head>
<link rel="canonical" href="http://www.mydomain.com/a.html" />
</head>
<body><!-- ... page B text ... --></body>
</html>
```

In this example, Page A indicates a preferred page, Page B. Similarly, Page B indicates a preferred page, Page A. This scenario constitutes an infinite loop. In this situation, search engines will pick the page they think is the preferred page, or they might even ignore the element, leaving the possibility of a duplicate content penalty.
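A site audit can catch such loops before search engines do. Assuming you have already collected each page’s canonical target into a dictionary (for example, by parsing every page’s <head>), the following hypothetical Python helper follows the chain and reports a loop.

```python
def resolve_canonical(start, canonical_of):
    """Follow canonical links from `start` until reaching a page that is its own
    canonical (or declares none). Raises ValueError on a loop such as A -> B -> A.

    `canonical_of` maps each URL to the URL in its rel="canonical" element.
    """
    seen = [start]
    current = start
    while True:
        target = canonical_of.get(current, current)
        if target == current:
            return current
        if target in seen:
            chain = " -> ".join(seen + [target])
            raise ValueError(f"canonical loop: {chain}")
        seen.append(target)
        current = target


A = "http://www.mydomain.com/a.html"
B = "http://www.mydomain.com/b.html"
looping_site = {A: B, B: A}

try:
    resolve_canonical(A, looping_site)
except ValueError as err:
    print(err)
```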

Multiple URLs

The canonical link element is only one of the tools you should have in your arsenal. There are other methods for dealing with content duplication.

Trailing slash

There is certainly a debate as to the use of the trailing slash (/) in URLs. Traditionally, a trailing slash implies a directory, and a URL with no trailing slash implies a specific file. URL rewriting has blurred this distinction: URLs that you see in web browsers may or may not be their true (unaltered) representations when interpreted at the web server.

From an SEO perspective, URL consistency is the only thing that matters. Google Webmaster Tools does report duplicate content URLs. For example, Google will tell you whether you have two URLs, as in the following fragment:

http://www.mysite.com/ultimate-perl-tutorial

http://www.mysite.com/ultimate-perl-tutorial/

You can handle this scenario by using the following URL rewriting (.htaccess) fragment:

```apache
RewriteEngine on
RewriteCond %{REQUEST_FILENAME} !-f
RewriteCond %{REQUEST_URI} !(.*)/$
RewriteRule ^(.*)$ http://www.mysite.com/$1/ [R=301,L]
```

All requests for anything other than an existing file will now be redirected (using the HTTP 301 permanent redirect) to the URL version with the trailing slash. If you prefer not to use a trailing slash, you can use the following:

```apache
RewriteEngine on
RewriteCond %{REQUEST_FILENAME} !-d
RewriteCond %{REQUEST_URI} ^(.+)/$
RewriteRule ^(.+)/$ /$1 [R=301,L]
```

The notable difference in this example is in the second line of code, which checks to make sure the resource is not a directory.
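Before choosing a policy, it can help to find which URLs on your site differ only by a trailing slash, for example from server logs or a crawl export. The helper below is a hypothetical Python sketch; it ignores fragments and treats host names case-insensitively.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def slash_variants(urls):
    """Group URLs that differ only by a trailing slash on the path.
    Returns a list of sorted groups that contain more than one URL."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        path = parts.path.rstrip("/") or "/"  # "/tutorial/" and "/tutorial" share a key
        key = urlunsplit((parts.scheme, parts.netloc.lower(), path, parts.query, ""))
        groups[key].add(url)
    return [sorted(group) for group in groups.values() if len(group) > 1]


crawled = [
    "http://www.mysite.com/ultimate-perl-tutorial",
    "http://www.mysite.com/ultimate-perl-tutorial/",
    "http://www.mysite.com/contact",
]
print(slash_variants(crawled))
```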

Multiple slashes

Sometimes people mistakenly add multiple slashes to your page URLs in internal or external inbound links. To eliminate this situation, you can use the following URL rewriting segment:

```apache
RewriteEngine on
RewriteCond %{REQUEST_URI} ^(.*)//(.*)$
RewriteRule . %1/%2 [R=301,L]
```

WWW prefix

You do not always have the time or control to dictate how people link to your site. Suppose you own a domain called SiteA.com that can be reached at either http://siteA.com or http://www.siteA.com. You’ve decided to use the http://www.siteA.com version. You can easily enforce this with the following URL rewriting (.htaccess) fragment:

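```apache
RewriteEngine On
# Match the bare host in any letter case; the dot is escaped to match literally
RewriteCond %{HTTP_HOST} ^sitea\.com$ [NC]
RewriteRule (.*) http://www.siteA.com/$1 [R=301,L]
```

Domain misspellings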

If you purchased a few misspelled domains, you can use a similar method to redirect those misspelled domains. The following is the URL rewriting (.htaccess) fragment to use:

```apache
RewriteEngine On
# In .htaccess context, $1 carries no leading slash, so the target supplies one
RewriteCond %{HTTP_HOST} ^www\.typodomain\.com$ [NC]
RewriteRule ^(.*)$ http://www.principaldomain.com/$1 [R=301,L]
RewriteCond %{HTTP_HOST} ^typodomain\.com$ [NC]
RewriteRule ^(.*)$ http://www.principaldomain.com/$1 [R=301,L]
```

In this example, we are redirecting both http://typodomain.com and http://www.typodomain.com to http://www.principaldomain.com.
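Because mistakes in redirect rules are easy to miss, it can help to model the intended host mapping in code and test it before deploying. The following Python sketch mirrors the www-prefix and misspelled-domain redirects; the host names, the alias set, and redirect_target are hypothetical, and ports are ignored.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.principaldomain.com"  # hypothetical preferred host
ALIAS_HOSTS = {                             # hypothetical hosts to 301-redirect
    "principaldomain.com",
    "typodomain.com",
    "www.typodomain.com",
}


def redirect_target(url):
    """Return the 301 target for a URL on an alias host, or None if the URL
    is already on the canonical host (or on a host we do not manage)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # ignores any port in the URL
    if host not in ALIAS_HOSTS:
        return None
    # Keep the path (with its leading slash) and the query string intact.
    return urlunsplit((parts.scheme, CANONICAL_HOST, parts.path or "/", parts.query, ""))


print(redirect_target("http://typodomain.com/shop/item.html?id=3"))
# http://www.principaldomain.com/shop/item.html?id=3
```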

HTTP to HTTPS and vice versa

Sometimes you may want to use secure web browser–to–web server communications. In this case, you will want to redirect all of the pertinent HTTP requests to their HTTPS counterparts. You can do this by using the following (.htaccess) fragment:

```apache
RewriteEngine On
RewriteCond %{HTTPS} off
RewriteRule (.*) https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L,NC]
```

If you have an e-commerce store, you may want to redirect users back to HTTP for all pages except those containing a specific URL part. If all of your billing (payment) pages need to be run over HTTPS and all of the billing content is under the https://mywebstore.com/payments path, you can use the following (.htaccess) fragment:

```apache
RewriteEngine On
RewriteCond %{HTTPS} on
RewriteRule !^payments(/|$) http://%{HTTP_HOST}%{REQUEST_URI} [R=301,L,NC]
```

Fine-Grained Content Indexing

Sometimes (apart from the case of content duplication) you may want to exclude certain pages from getting indexed on your site. This applies to any generic pages with no semantic relevancy and can include ad campaign landing pages, error message pages, confirmation pages, feedback pages, contact pages, and pages with little or no text.

We already discussed ways to prevent search engines from indexing your content pages, including the robots.txt file, the noindex meta tag, the X-Robots-Tag, and the nofollow attribute. These methods work at the site level or at the individual page level.
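Python’s standard urllib.robotparser module can confirm that a robots.txt file blocks the pages you intend to exclude, and only those. The robots.txt content and URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps generic pages out of the crawl.
ROBOTS_TXT = """\
User-agent: *
Disallow: /confirmation/
Disallow: /error/
Disallow: /landing/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("http://www.mysite.com/articles/seo-basics.html",
            "http://www.mysite.com/confirmation/thank-you.html"):
    # Prints True for the article and False for the confirmation page.
    print(url, parser.can_fetch("Googlebot", url))
```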

You can also apply some filtering to each page. You can make generic (semantically unrelated) parts of your page invisible to web spiders. There are several ways to do this, including JavaScript, iframes, and dynamic DIVs.

External Content Duplication

External content duplication can be caused by a few different things, including mirror sites and content syndication.

Mirror sites

There are two types of site mirroring: legitimate and unethical. We will discuss the legitimate case. For whatever reason, website owners might need to create multiple mirror sites of essentially the same content. If one site were to go down, they could quickly switch the traffic to the other site (with the same or a different URL).

If this is the case with your site arrangement, you want to make sure you block search engines from crawling your mirror sites. For starters, you can disallow crawling in robots.txt. You can also apply the noindex meta tag or the X-Robots-Tag equivalent.
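If a mirror happens to be served by a Python WSGI application (an assumption; the same header can be set in your web server configuration), a small middleware can attach the X-Robots-Tag header to every response. The function and app names below are hypothetical.

```python
def noindex_middleware(app):
    """Wrap a WSGI application so every response carries
    'X-Robots-Tag: noindex, nofollow' (intended for a mirror site)."""

    def wrapped(environ, start_response):
        def start_response_with_header(status, headers, exc_info=None):
            # Drop any existing X-Robots-Tag so the mirror's policy wins.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex, nofollow"))
            return start_response(status, headers, exc_info)

        return app(environ, start_response_with_header)

    return wrapped


def mirror_app(environ, start_response):
    """Hypothetical stand-in for the mirrored site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"mirror copy"]


application = noindex_middleware(mirror_app)
```

To try it locally, you could pass application to make_server from the standard wsgiref.simple_server module.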

Content syndication

If you happen to syndicate your content on other sites, Google advises the following:

If you syndicate your content on other sites, Google will always show the version we think is most appropriate for users in each given search, which may or may not be the version you’d prefer. However, it is helpful to ensure that each site on which your content is syndicated includes a link back to your original article. You can also ask those who use your syndicated material to block the version on their sites with robots.txt.

You may also want to consider syndicating just the article teasers instead of the articles in their entirety.

Similar Pages

Similar pages can also be viewed as duplicates. Google explains this concept as follows:

If you have many pages that are similar, consider expanding each page or consolidating the pages into one. For instance, if you have a travel site with separate pages for two cities, but the same information on both pages, you could either merge the pages into one page about both cities or you could expand each page to contain unique content about each city.
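To find candidate pages for merging or expanding, you can compare the text of pages pairwise. The Python sketch below uses word shingles and Jaccard similarity, a common textbook technique and not necessarily how Google measures similarity; the sample texts are hypothetical, and any cutoff you choose is a judgment call.

```python
import re


def shingles(text, size=3):
    """Set of `size`-word sequences (shingles) from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


paris = "Hotels in Paris: book cheap hotels and flights with our travel deals and compare prices today."
rome = "Hotels in Rome: book cheap hotels and flights with our travel deals and compare prices today."
print(round(jaccard_similarity(paris, rome), 2))  # 0.65
```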

Deep-Linked Content

Blogs, forums, and article-driven sites will often experience indexing problems due to pagination. Pagination does not play a significant role if you have only a few articles on your site. If you run a busy site, however, pagination becomes an indexing and duplication issue if you do not handle it properly.

Some sites try to deal with this scenario by increasing the number of articles shown per page. This is not the optimal solution. Let’s look at a typical blog site. At first, each new blog entry is found in multiple places, including the home page, the website archive, and the recent posts section, all of which have the potential for duplicate content URLs.

Once the blog entry starts to age, it moves farther down the page stack. At some point, it is no longer visible from the home page. As time passes, it takes more and more clicks to reach, and at some point it may drop out of the search engine index entirely. You can deal with this so-called deep-linked content in several ways.
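To see how deep your older entries have sunk, you can compute each page’s click depth from the home page with a breadth-first search over your internal links. The link graph below is hypothetical, as is the choice of "more than two clicks" as too deep.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search over internal links: the minimum number of clicks
    from `home` to each reachable page. `links` maps a page to the pages it links to."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS order is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


site = {
    "/": ["/page/2", "/recent/new-post"],
    "/page/2": ["/page/3", "/older-post-2"],
    "/page/3": ["/oldest-post"],
}
depths = click_depths(site)
print(sorted(page for page, d in depths.items() if d > 2))  # ['/oldest-post']
```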

Sitemaps

You can list all of your important pages in your Sitemaps, as we discussed in Chapter 10. Although search engines do not provide guarantees when it comes to what they index, you can try this approach. If you have many similar pages, you might want to consolidate them as per Google’s advice.
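For archives too large to maintain by hand, a Sitemap can be generated from your list of important URLs. This Python sketch uses the standard xml.etree.ElementTree module and the Sitemaps protocol namespace; the URLs and dates are hypothetical, and it emits only the loc and lastmod fields.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize as the default namespace


def build_sitemap(entries):
    """Return sitemap XML for (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


archive = [
    ("http://www.mysite.com/archive/2009/old-post.html", "2009-03-14"),
    ("http://www.mysite.com/archive/2008/older-post.html", "2008-11-02"),
]
print(build_sitemap(archive))
```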

Resurfacing

Another way to bring your important pages back to the forefront is to link to them from your other internal pages. You can do the same with any external backlinks.

In addition, you could bundle important long-term content pages in another hotspot reference area for easy finding. One example of this concept is the sticky post in forums, used for content such as forum guidelines and rules. For other types of sites, you might have a top-level page that links to these important pages.

Navigation structure

Many newer CMSs also provide an article subcategory view. This approach takes advantage of the semantic relationships between different articles and gives the site a better semantic structure.

If you’re writing your own custom code, ensure that you block duplicate paths to your content. Even if you are using a free or paid CMS, chances are you will need to make some SEO tweaks to minimize or eliminate content duplication.

Protecting Your Content

Any content you create becomes your intellectual property as soon as it is created. With so many people on the Internet today, someone will want to take advantage of your content.

On October 28, 1998, then-President Clinton signed the Digital Millennium Copyright Act (DMCA) into law, after its approval by Congress. This act contains provisions under which Internet service providers can remove infringing copyrighted content from users’ websites. You can view the entire document at http://www.copyright.gov/legislation/dmca.pdf. For more information on copyrights, visit http://www.copyright.gov.

Google takes action when it finds copyright violations. You can read more about how Google handles DMCA at http://www.google.com/dmca.html. If you find that your content is being copied on other sites, you can file a complaint to let Google know. The process comprises several steps, including mailing the completed form to Google.

You can also use the service provided by Copyscape. Copyscape provides free and premium copyright violation detection services. The free service allows you to enter your URLs in a simple web form that then returns any offending sites. The premium service provides many more detection features.

If you do find people using your content, you can try to contact them directly through either their website contact information or their domain Whois information. If that does not work, you can contact their IP block owner (provider) by visiting http://ws.arin.net/whois/. If that doesn’t work, you should consult a local lawyer who is knowledgeable in Internet copyright laws.

Preventing hot links

Hot link prevention is one of the oldest ways webmasters can prevent other sites from using their bandwidth. You can implement hot link prevention with a few lines of code in your .htaccess file:

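```apache
RewriteEngine On
# Allow requests that send no Referer header (direct visits, some proxies)
RewriteCond %{HTTP_REFERER} !^$
# Allow requests referred from your own pages
RewriteCond %{HTTP_REFERER} !^https?://(www\.)?mycoolsite\.com/ [NC]
# Everything else asking for these file types gets 403 Forbidden
RewriteRule \.(gif|jpg|png|vsd|doc|xls|js|css)$ - [F]
```

When you add this code to your site, other sites will no longer be able to embed or link to files of the types listed in the rewrite rule; those requests receive a 403 Forbidden response. Requests with an empty Referer header, such as a visitor typing a file URL directly, are still served.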
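The logic of these rules can be expressed in a few lines of Python, which may help when reasoning about which requests get a 403 response. This is a hypothetical model, not a replacement for the server configuration; it accepts both http:// and https:// referers from mycoolsite.com.

```python
import re

# Referers from mycoolsite.com (with or without www) are allowed, case-insensitively.
ALLOWED_REFERER = re.compile(r"^https?://(www\.)?mycoolsite\.com/", re.IGNORECASE)
# File types protected by the RewriteRule (matched case-sensitively, as in the rule).
PROTECTED_FILE = re.compile(r"\.(gif|jpg|png|vsd|doc|xls|js|css)$")


def is_blocked(path, referer):
    """True if the request would receive 403 Forbidden under the hotlink rules."""
    if not PROTECTED_FILE.search(path):
        return False           # RewriteRule pattern does not match
    if not referer:
        return False           # empty Referer is allowed
    return not ALLOWED_REFERER.match(referer)


print(is_blocked("/images/logo.png", "http://other-site.example/page.html"))  # True
```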

Content Verticals

Your website traffic does not always come from the main search engines. All major search engines provide vertical search links (or tabs) found at the top of the search box. People can find your site by searching for images, news, products, maps, and so forth.

Vertical Search

Each search engine vertical is run by specialized search engine algorithms. In this section, we’ll examine Google Images, Google News, and Google Product Search. Bing provides similar, albeit smaller, functionality.

Google Images

The psychology of image search is different from regular searches. Image searchers are usually more patient. At the same time, they are pickier. Therefore, optimizing your images makes sense. The added benefit of image optimization is that your images will show up in the main search results blended with text results. They also appear on Google Maps.

Images need to be accessible. Ensure that your robots.txt file is not blocking your image folder, and make sure your server is not sending an X-Robots-Tag HTTP header with a noindex value for your images. You can see whether any of your existing images are indexed by Google by issuing the following commands (when in the Images tab):

site:mysite.com

site:mysite.com filetype:png

You can do the same thing in Bing by issuing the following command:

site:mysite.com

The image filename should contain keywords separated by hyphens. Ideally, the full path to the image will contain related keywords. All images should have ALT text as well as explicit width and height attributes.
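A quick audit can list images that are missing these attributes. This Python sketch uses the standard html.parser module; the class, function, and sample markup are hypothetical. It also flags an intentionally empty alt attribute, which you may want to allow for purely decorative images.

```python
from html.parser import HTMLParser


class ImageAuditor(HTMLParser):
    """Records <img> tags that lack alt text, width, or height."""

    REQUIRED = ("alt", "width", "height")

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # An attribute counts as missing if absent or empty.
        missing = [name for name in self.REQUIRED if not attributes.get(name)]
        if missing:
            self.problems.append((attributes.get("src", "?"), missing))


def audit_images(html):
    auditor = ImageAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.problems


markup = ('<img src="/images/red-wool-pants.jpg" alt="Red wool pants" width="300" height="400">'
          '<img src="/images/IMG_0042.JPG">')
print(audit_images(markup))  # [('/images/IMG_0042.JPG', ['alt', 'width', 'height'])]
```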

Consider making images clickable, surrounded by relevant descriptive copy. If an image is clickable, the destination page should be on the same topic and should include the same or a larger version of the image. When linking to images, use descriptive anchor text with pertinent keywords.

Google News

To include your site’s articles in Google News, you will need to submit your link to Google for approval, as described at http://bit.ly/1UeZHp. Google will review the link to ensure that it meets its guidelines. There is no guarantee of inclusion.

When approved, utilize the Google News Sitemaps when submitting your news content. We already talked about creating news Sitemaps in Chapter 10. In early 2009, Google published several tips for optimizing articles for Google News. Here are the eight optimization tips Google recommends:

Keep the article body clean.

Make sure article URLs are permanent and unique.

Take advantage of stock tickers in Sitemaps.

Check your encoding.

Make your article publication dates explicit.

Keep original content separate from press releases.

Format your images properly.

Make sure the title you want appears in both the title tag and as the headline on the article page.

With the Google News platform, the more clicks your articles produce, the better. Google is tracking all of the clicks on its Google News portal. As of this writing, the following is the HTTP header fragment when clicking on one of the articles:

```
GET /news/url?sa=t&ct2=ca%2F0_0_s_0_0_t&usg=AFQjCNFjVmWpHfAZj-UVnmQA6HjxXyuYg&cid=1292192065&ei=cqR7SsD5Fqjm9ASm_p-OAw&rt=HOMEPAGE&vm=STANDARD&url=http%3A%2F%2Fwww.cbc.ca%2Fhealth%2Fstory%2F2009%2F08%2F06%2Fswine-flu-vaccine.html HTTP/1.1
```

This request is then followed by a 302 (temporary) HTTP redirect to the (news) source site:

```
HTTP/1.x 302 Found
Cache-Control: private
Content-Type: text/html; charset=UTF-8
Location: http://www.cbc.ca/health/story/2009/08/06/swine-flu-vaccine.html
Date: Fri, 07 Aug 2009 03:50:21 GMT
Server: NFE/1.0
Content-Length: 261
```

In this way, Google can easily test and promote articles that are getting the most clicks.
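Because the destination travels in the url query parameter, it can be recovered with Python’s urllib.parse. The request below is a shortened version of the one shown above (other tracking parameters removed), and the parameter layout reflects what was observed at the time of writing, not a documented API.

```python
from urllib.parse import parse_qs, urlsplit


def click_destination(tracking_url):
    """Extract the destination article URL from a Google News click-tracking
    request of the form /news/url?...&url=<percent-encoded target>."""
    query = parse_qs(urlsplit(tracking_url).query)  # parse_qs also percent-decodes
    return query["url"][0]


request_path = ("/news/url?sa=t&rt=HOMEPAGE&vm=STANDARD"
                "&url=http%3A%2F%2Fwww.cbc.ca%2Fhealth%2Fstory%2F2009%2F08%2F06%2Fswine-flu-vaccine.html")
print(click_destination(request_path))
# http://www.cbc.ca/health/story/2009/08/06/swine-flu-vaccine.html
```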

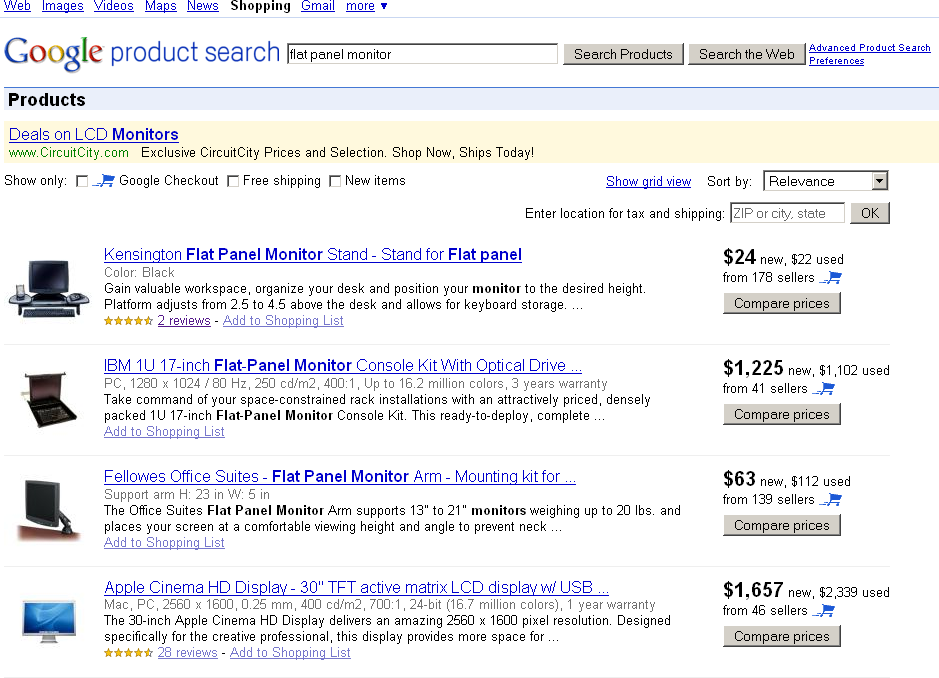

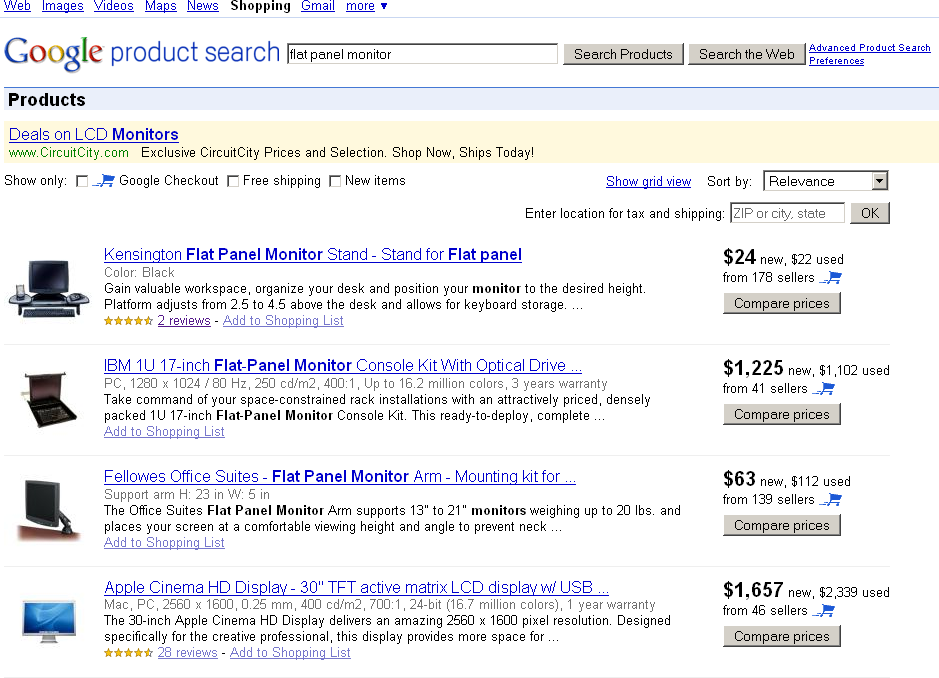

Google Product Search

The Google Product Search platform allows sellers to create product feeds, which can help increase their website traffic and sales. Setting up the platform is free of charge; you start by registering for an account with Google Base. According to Google:

Google Base is a place where you can easily submit all types of online and offline content, which we’ll make searchable on Google (if your content isn’t online yet, we’ll put it there). You can describe any item you post with attributes, which will help people find it when they do related searches. In fact, based on your items’ relevance, users may find them in their results for searches on Google Product Search and even our main Google web search.

Currently, Google Base supports the following product feed formats: tab delimited, RSS 1.0, RSS 2.0, Atom 0.3, Atom 1.0, and API. When constructing your feeds, pay special attention to the product title and description fields. These are the fields that will appear in the Google Product Search results. Make use of your targeted keywords with a call to action.
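A tab-delimited feed can be produced with Python’s standard csv module. The column names below are assumptions for illustration; check the feed specification for the exact attribute names your account expects. The sample product is hypothetical.

```python
import csv
import io

# Hypothetical column names; verify them against the current feed specification.
FIELDS = ["id", "title", "description", "link", "price", "condition"]


def build_feed(products):
    """Serialize product dicts into a tab-delimited feed with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, delimiter="\t", lineterminator="\n")
    writer.writeheader()
    for product in products:
        writer.writerow(product)
    return buffer.getvalue()


products = [{
    "id": "P-100",
    "title": "Red Wool Pants - Free Shipping",
    "description": "Warm red wool pants for winter. Order today.",
    "link": "http://www.mydomain.com/pants.html",
    "price": "49.99 USD",
    "condition": "new",
}]
print(build_feed(products))
```

Keeping keyword-bearing wording and a call to action in the title and description columns follows the advice above, since those are the fields shown in the results.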

It is believed that Google favors older feeds just like it favors older domains. Also note that Google Product Search lists seller ratings as well as product reviews in its search results. Products with better reviews are favored in the search results. Figure 14-6 shows a sample Google Product Search results page.

Summary

As we discussed in this chapter, content is king. Depending on your site, you will want to have both short- and long-term content. Short-term content helps you capture traffic associated with current Internet buzz. Long-term content helps bring more consistent traffic volumes over time.

Content duplication is one of the most talked about issues in SEO. Using the canonical link element along with existing content duplication elimination methods is part of sound SEO.

Utilizing search engine verticals helps bring in additional website traffic. Companies selling products should explore using Google Base and its Google Product Search platform.