2. Managing Software Security Risk

“The need for privacy, alas, creates a tradeoff between the need for security and ease of use. In the ideal world, it would be possible to go to an information appliance, turn it on and instantly use it for its intended purpose, with no delay. . . . Because of privacy issues, this simplicity is denied us whenever confidential or otherwise restricted information is involved.”

—DONALD NORMAN

THE INVISIBLE COMPUTER

The security goals we covered in Chapter 1 include prevention, traceability and auditing, monitoring, privacy and confidentiality, multilevel security, anonymity, authentication, and integrity. Software project goals include functionality, usability, efficiency, time-to-market, and simplicity. With the exception of simplicity, designing a system that meets both security goals and software project goals is not always easy.

In most cases, the two sets of goals trade off against one another badly. So when it comes to a question of usability versus authentication (for example), what should win out? And when it comes to multilevel security versus efficiency, what’s more important?

The answer to these and other similar questions is to think of software security as risk management. Only when we understand the context within which a tradeoff is being made can we make intelligent decisions. These decisions will differ greatly according to the situation, and will be deeply influenced by business objectives and other central concerns.

Software risk management encompasses security, reliability, and safety. It is not just a security thing. The good news is that a mature approach to software security will help in many other areas as well. The bad news is that software risk management is a relative newcomer to the scene, and many people don’t understand it. We hope to fix that in this chapter.

We begin with a 50,000-foot overview of software risk management and how it relates to security. Then we talk about who should be charged with software risk management, how they fit into a more complete security picture, and how they should think about development and security. We also take a short diversion to cover the Common Criteria—an attempt to provide an international, standardized approach to security that may impact the way software security is practiced.

An Overview of Software Risk Management for Security

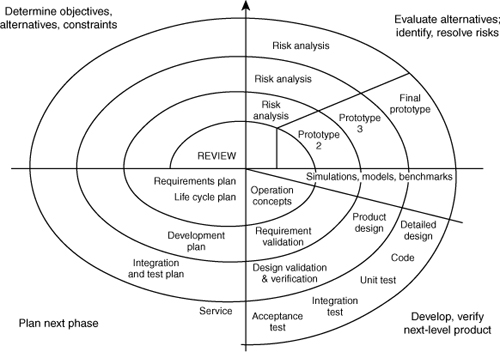

The most important prerequisite to software risk management is adopting a high-quality software engineering methodology that enables good risk management practices. The premier methodology is the spiral model, shown in Figure 2-1 (see footnote 1). The spiral model posits that development is best performed by doing the same kinds of things over and over again, instead of running around in circles. However, the idea is to spiral in toward the final goal, which is usually a complete, robust product. Much of the repetition in the spiral model boils down to refining your product based on new experiences.

1. Although Figure 2-1 shows the spiral model as an outward spiral, we like to see it more as an inward spiral to a platonic ideal that you can never reach.

Figure 2-1 The spiral model of software development.

In the spiral model, the first activity is to derive a set of requirements. Requirements are essential. From the point of view of security, you need to be sure to consider security when requirements are derived. We recommend spending some time talking about security as its own topic, to make sure it gets its due.

The second activity is to identify your largest risks, and evaluate strategies for resolving those risks. Identifying risks is accomplished through risk analysis (see Chapter 6). Clearly, practitioners will do a lot better in terms of security if they keep security requirements in mind when performing a risk analysis. At this stage, as well as during the requirements phase, we find it good to perform a complete security analysis to make sure that security is addressed.

A risk management exercise may begin anytime during the overall software life cycle, although it is most effectively applied as early as possible. For any given project, there is likely some point in the life cycle beyond which software risk management gets very expensive. However, it’s never too late.

At the point in the life cycle when software risk management begins, all existing artifacts and involved stakeholders can be used as resources in identifying software risks. Requirements documents, architecture and design documents, models, any existing code, and even test cases all make useful artifacts.

Next, risks are addressed, often through prototyping, and some validation may be performed to make sure that a possible solution manages risks appropriately, given the business context. Any such solution is then integrated into the current state of affairs. Perhaps the code will be modified. Perhaps the design and the requirements will be updated as well. Finally, the next phase is planned.

The key to the spiral model is that it is applied in local iterations at each milestone during software life cycle phases. Each local software risk management cycle involves application of the same series of steps. Local iterations of the cycle manage risk at a more refined level, and apply specifically to the life cycle phase during which they are performed. As a result, projects become more resilient to any problems that crop up along the way. This model is in sharp contrast to the older waterfall model, which has all development happening in order, with little looking back or cycling back to address newly identified concerns.

Software engineering is based on an attempt to formalize software development, injecting some sanity into what is often perceived as too creative an endeavor. Building secure software leans heavily on the discipline of software engineering, liberally borrowing methods that work and making use of critical engineering artifacts. Be cautioned, however, that some practitioners of software engineering overemphasize process to the detriment of understanding and adapting software behavior. We think process is a good thing, but analysis of the end product (the software itself) is an essential practice [Voas, 1998].

This book is not intended to teach you about software engineering principles per se. In fact, we don’t really care which process you apply. The main thing is to work explicitly to manage software risk, especially from a security perspective. In the end, note that we consider sound software engineering a prerequisite to sound software security.

The Role of Security Personnel

Approaching security as risk management may not come easily to some organizations. One of the problems encountered in the real world involves the disconnect between what we call “roving bands of developers” and the security staff of the information technology (IT) department. Although we’ve said that security integrates in a straightforward manner into software engineering methodologies, it still requires time and expertise. One workable approach to bridging the gap is to make software security somebody’s job. The trick is to find the right somebody.

Two major qualifications are required for a software security lead: (1) a deep understanding of software development and (2) an understanding of security. Of these two, the first qualification is probably the most important.

The previous statement may sound strange coming from a couple of security people. Suffice it to say that we were both developers (and programming language connoisseurs) before we got into security. Developers have highly attuned radar. Nothing bothers a developer more than useless meetings with people who seemingly don’t “get it.” Developers want to get things done, not worry about checking boxes and passing reviews. Unfortunately, most developers look at security people as obstacles to be overcome, mostly because of the late life cycle “review”-based approach that many organizations seem to use.

This factor can be turned into an advantage for software security. Developers like to listen to people who can help them get their job done better. This is one reason why real development experience is a critical requirement for a successful software security person. A software security specialist should act as a resource to the development staff instead of an obstacle. This means that developers and architects must have cause to respect the body of knowledge the person brings to the job. A useful resource is more likely to be consulted early in a project (during the design and architecture phases), when the most good can be accomplished.

Too often, security personnel assigned to review application security are not developers. This causes them to ask questions that nobody with development experience would ever ask. And it makes developers and architects roll their eyes and snicker. That’s why it is critical that the person put in charge of software security really knows about development.

Knowing everything there is to know about software development is, by itself, not sufficient. A software security practitioner needs to know about security as well. Right now, there is no substitute for direct experience. Security risk analysis today is a fundamentally creative activity. In fact, following any software engineering methodology tends to be that way. Identifying security risks (and other software risks for that matter) requires experience with real risks in the real world. As more software security resources become available, and as software security becomes better understood by developers, more people will be able to practice it with confidence.

Once a software security lead has been identified, he or she should be the primary security resource for developers and architects or, preferably, should build a first-tier resource for other developers. For example, it’s good practice to provide a set of implementation guidelines for developers to keep in mind when the security lead isn’t staring over their shoulder (this being generally a bad idea, of course). Building such a resource that developers and architects can turn to for help and advice throughout the software life cycle takes some doing. One critical factor is understanding and building knowledge of advanced development concepts and their impact on security. For example, how do middleware application servers of different sorts impact security? What impact do wireless devices have on security? And so on.

On many projects, having a group of security engineers may be cost-effective. Developers and architects want to make solid products. Any time security staff help the people on a project meet this objective without being seen as a tax or a hurdle, the process can work wonders.

Software Security Personnel in the Life Cycle

Another job for the software security lead is making sure security is fairly represented during each phase of the software engineering life cycle. In this section we talk about how such expertise can effectively manifest itself during each development phase, framed as the role of a security engineer. This engineer could be the lead, or it could be another individual. During each phase, you should use the best available person (or people) for the job.

Deriving Requirements

In developing a set of security requirements, the engineer should focus on identifying what needs to be protected, from whom those things need to be protected, and for how long protection is needed. Also, one should identify how much it is worth to keep important data protected, and one should look at how people concerned are likely to feel over time.

After this exercise, the engineer will most likely have identified a large number of the most important security requirements. However, there are usually more such requirements that must be identified to do a thorough job. For example, a common requirement that might be added is, “This product must be at least as secure as the average competitor.” This requirement is perfectly acceptable in many situations, as long as you make sure the requirement is well validated. All along, the security engineer should make sure to document everything relevant, including any assumptions made.

The security engineer should be sure to craft requirements well. For example, the requirement “This application should use cryptography wherever necessary” is a poor one, because it prescribes a solution without even diagnosing the problem. The goal of the requirements document is not only to communicate what the system must do and must not do, but also to communicate why the system should behave in the manner described. A much better requirement in this example would be, “Credit card numbers should be protected against potential eavesdropping because they are sensitive information.” The choice of how to protect the information can safely be deferred until the system is specified.

Software security risks to be managed take on different levels of urgency and importance in different situations. For example, denial of service may not be of major concern for a client machine, but denial of service on a commercial Web server could be disastrous. Given the context-sensitive nature of risks, how can we compare and contrast different systems in terms of security?

Unfortunately, there is no “golden” metric for assessing the relative security of systems. But we have found in practice that the use of a standardized set of security analysis guidelines is very useful. Visa International makes excellent use of security guidelines in their risk management and security group [Visa, 1997].

The most important feature of any set of guidelines is that they create a framework for consistency of analysis. Such a framework allows any number of systems to be compared and contrasted in interesting ways.

Guidelines consist of both an explanation of how to execute a security analysis in general, and what kinds of risks to consider. No such list can be absolute or complete, but common criteria for analysis—such as those produced when using the Common Criteria (described later)—can sometimes be of help.

The system specification is generally created from a set of requirements. The importance of solid system specification cannot be overemphasized. After all, without a specification, the behavior of a system can’t be wrong, it can only be surprising! Especially when it comes to running a business, security surprises are not something anyone wants to encounter.

A solid specification draws a coherent big-picture view of what the system does and why the system does it. Specifications should be as formal as possible without becoming overly arcane. Remember that the essential raison d’être for a specification is system understanding. In general, the clearer and easier a specification, the better the resulting system. Unfortunately, extreme programming (XP) seems to run counter to this old-fashioned view! In our opinion, XP will probably have a negative impact on software security.

Risk Assessment

As we have said, there is a fundamental tension inherent in today’s technology between functionality (an essential property of any working system) and security (also essential in many cases). A common joke goes that the most secure computer in the world is one that has its disk wiped, is turned off, and is buried in a 10-foot hole filled with concrete. Of course, a machine that secure also turns out to be useless. In the end, the security question boils down to how much risk a given enterprise is willing to take to solve the problem at hand effectively.

An effective risk-based approach requires an expert knowledge of security. The risk manager needs to be able to identify situations in which known attacks could potentially be applied to the system, because few totally unique attacks ever rear their head. Unfortunately, such expert knowledge is hard to come by.

Risk identification works best when you have a detailed specification of a system to work from. It is invaluable to have a definitive resource to answer questions about how the system is expected to act under particular circumstances. When the specification is in the developer’s head, and not on paper, the whole process becomes much more fuzzy. It is all too easy to consult your mental requirements twice and get contradictory information without realizing it.

Note that new risks tend to surface during development. For experimental applications, many risks may not be obvious at requirements time. This is one reason why an iterative software engineering life cycle can be quite useful.

Once risks have been identified, the next step is to rank the risks in order of severity. Any such ranking is a context-sensitive undertaking that depends on the needs and goals of the system. Some risks may not be worth mitigating (depending, for example, on how expensive carrying out a successful attack might be). Ranking risks is essential to allocating testing and analysis resources further down the line. Because resource allocation is a business problem, making good business decisions regarding such allocations requires sound data.
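The ranking step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Risk` record, the 0-to-1 likelihood estimate, the dollar impact figure, and the likelihood-times-impact score are hypothetical choices rather than a metric this chapter prescribes, and the sample entries are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One identified risk. All fields are illustrative assumptions."""
    name: str
    likelihood: float  # estimated chance of a successful attack per year, 0.0 to 1.0
    impact: float      # estimated business loss if the attack succeeds, in dollars

    @property
    def exposure(self) -> float:
        # Hypothetical severity score: expected yearly loss.
        return self.likelihood * self.impact


def rank_risks(risks):
    """Return the risks ordered from most to least severe by exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Made-up entries for a commercial Web server.
risks = [
    Risk("defaced home page", likelihood=0.20, impact=50_000),
    Risk("denial of service", likelihood=0.30, impact=500_000),
    Risk("credit card eavesdropping", likelihood=0.05, impact=2_000_000),
]

for risk in rank_risks(risks):
    print(f"{risk.name}: {risk.exposure:,.0f}")
```

Because the ranking is context sensitive, the same risks scored for a client machine would likely come out in a different order; the value of the exercise is that the estimates are written down where the business can challenge them.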

Given a ranked set of potential risks in a system, testing for security is possible. Testing requires a live system and makes sometimes esoteric security risks much more concrete. There is nothing quite as powerful as showing someone an attack to get them to realize the magnitude of the problem.

When a risk analysis is performed on a system, the security engineer should make sure that a high-quality security risk analysis takes place. It is often best to get a consultant to look at a system, because even a security engineer has some personal stake in the product he or she is auditing. This book’s companion Web site lists companies that offer security engineering services, including risk analysis and code audits. Nonetheless, we still think it’s a good idea to have the security engineer perform risk analyses, if only to duplicate such analyses whenever possible. This goes for the rest of your risk analyses. An impartial person is often better at such things than people who are emotionally attached to the project. Additionally, you may be able to bring more expertise to the project that way, especially considering that many security engineers are internally grown people who happened to be motivated and interested in the topic. An extra pair of eyes almost always turns up more issues. However, if you are strapped for resources, then relying on your security engineer has to be good enough.

Design for Security

The ad hoc security development techniques most people use tend not to work very well. “Design for security” is the notion that security should be considered during all phases of the development cycle and should deeply influence a system’s design.

There are many examples of systems that were not originally designed with security in mind, but later had security functionality added. Many of these systems are security nightmares. A good example is the Windows 95/98/ME platform (which we collectively refer to as Windows 9X; see footnote 2), which is notorious for its numerous security vulnerabilities. It is generally believed that any Windows 9X machine on a network can be crashed or hacked by a knowledgeable attacker. For example, authentication mechanisms in Windows 9X are prone to being defeated. The biggest problem is that anyone can sit down at a Windows 9X machine, shut off the machine, turn it back on, log in without a password, and have full control over the computer. In short, the Windows 9X operating system was not designed for today’s networking environment; it was designed when PCs were stand-alone machines. Microsoft’s attempts to retrofit its operating system to provide security for the new, networked type of computer usage have not been very successful.

2. Even in the twenty-first century, Windows 9X is a technology that is better left in the 90s, from a security point of view. We’re much more fond of the NT/2000 line.

UNIX, which was developed by and for scientific researchers and computer scientists, was not designed with security in mind either. It was meant as a platform for sharing research results among groups of team players. Because of this, it has also suffered from enormous amounts of patching and security retrofitting. As with Microsoft, these efforts have not been entirely successful.

When there are product design meetings, the security engineer should participate, focusing on the security implications of any decisions and adding design that addresses any potential problems. Instead of proposing a new design, the security engineer should focus significant attention on analysis. Good analysis skills are a critical prerequisite for good design. We recommend that security engineers focus on identifying

• How data flow between components

• Any users, roles, and rights that are either explicitly stated or implicitly included in the design

• The trust relationships of each component

• Any potentially applicable solution to a recognized problem
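One way to make the first three items in this list concrete is to write the design down as data and query it. The sketch below is a hypothetical model, not a method from this chapter: the component names, the numeric trust levels, and the rule "flag any flow from a less trusted component into a more trusted one" are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A single data flow between two components in the design."""
    source: str
    target: str
    data: str


# Hypothetical trust levels: a higher number means a more trusted component.
TRUST = {"browser": 0, "web_server": 1, "app_server": 2, "database": 3}

# Hypothetical flows taken from an imagined design document.
FLOWS = [
    Flow("browser", "web_server", "login form"),
    Flow("web_server", "app_server", "session request"),
    Flow("browser", "database", "report query"),
    Flow("database", "app_server", "customer record"),
]


def flows_needing_review(flows, trust):
    """Return flows where data moves from a less trusted component into a more trusted one."""
    return [f for f in flows if trust[f.source] < trust[f.target]]


for flow in flows_needing_review(FLOWS, TRUST):
    print(f"{flow.source} -> {flow.target}: {flow.data}")
```

Even a toy model like this tends to surface questions for the design meeting, such as why a browser would be talking to the database directly.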

Implementation

Clearly, we believe that good software engineering practice is crucial to building secure software. We also think the most important time to practice good software engineering technique is at design time, but we can’t overlook the impact of the implementation.

The second part of this book is dedicated to securing the implementation. Most of the initial work is in the hands of the people writing code. Certainly, the security engineer can participate in coding if he or she is skilled. Even if not a world-class programmer, a good security engineer can understand code well enough to be able to read it, and can understand security problems in code well enough to be able to communicate them to other developers. The security engineer should be capable of serving as an effective resource to the general development staff, and should spend some time keeping current with the security community.

The other place a security engineer can be effective during the implementation phase of development is the code audit. When it’s time for a code review, the security engineer should be sure to look for possible security bugs (again, outside auditing should also be used, if possible). And, whenever it is appropriate, the security engineer should document anything about the system that is security relevant. We discuss code auditing in much more detail in Chapter 6. Note that we’re asking quite a lot from our security engineer here. In some cases, more than one person may be required to address the range of decisions related to architecture through implementation. Finding a software security practitioner who is equally good at all levels is hard.

Security Testing

Another job that can fall to a security engineer is security testing, which is possible with a ranked set of potential risks in hand, although it remains difficult. Testing requires a live system and is an empirical activity, requiring close observation of the system under test. Security tests usually will not produce clear-cut results like obvious system penetrations, although sometimes they may. More often a system will behave in a strange or curious fashion that tips off an analyst that something interesting is afoot. These sorts of hunches can be further explored via manual inspection of the code. For example, if an analyst can get a program to crash by feeding it really long inputs, there is likely a buffer overflow that could potentially be leveraged into a security breach. The next step is to find out where and why the program crashed by looking at the source code.
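The long-input probe mentioned above can be sketched as a small harness. This is a minimal illustration, assuming the program under test reads from standard input and signals trouble through its exit status; the function name `probe_with_long_inputs`, the chosen lengths, and the stand-in target script are hypothetical. On POSIX systems, Python's `subprocess` reports a negative return code when the child was killed by a signal, which is what a real memory-corruption crash usually looks like.

```python
import subprocess
import sys


def probe_with_long_inputs(command, lengths=(16, 256, 4096, 65536), timeout=10.0):
    """Feed inputs of growing length on stdin; return (length, reason) for each abnormal run."""
    suspicious = []
    for n in lengths:
        payload = b"A" * n
        try:
            result = subprocess.run(
                command, input=payload, capture_output=True, timeout=timeout
            )
        except subprocess.TimeoutExpired:
            suspicious.append((n, "timeout"))
            continue
        if result.returncode != 0:
            # A negative code on POSIX means the process died from a signal.
            suspicious.append((n, f"exit code {result.returncode}"))
    return suspicious


# Stand-in target for demonstration only: a tiny script that fails on long input.
FRAGILE_TARGET = [
    sys.executable,
    "-c",
    "import sys; data = sys.stdin.buffer.read(); sys.exit(1 if len(data) > 100 else 0)",
]

findings = probe_with_long_inputs(FRAGILE_TARGET, lengths=(16, 256))
print(findings)
```

A finding from a harness like this is only a hunch. It says where to start reading the source, not whether the flaw is exploitable.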

Functional testing involves dynamically probing a system to determine whether the system does what it is supposed to do under normal circumstances. Security testing, when done well, is different. Security testing involves probing a system in ways that an attacker might probe it, looking for weaknesses in the software that can be exploited.

Security testing is most effective when it is directed by system risks that are unearthed during an architectural-level risk analysis. This implies that security testing is a fundamentally creative form of testing that is only as strong as the risk analysis it is based on. Security testing is by its nature bounded by identified risks (as well as the security expertise of the tester).

Code coverage has been shown to be a good metric for understanding how good a particular set of tests is at uncovering faults. It is always a good idea to use code coverage as a metric for measuring the effectiveness of functional testing. In terms of security testing, code coverage plays an even more critical role. Simply put, if there are areas of a program that have never been exercised during testing (either functional or security), these areas should be immediately suspect in terms of security. One obvious risk is that unexercised code will include Trojan horse functionality, whereby seemingly innocuous code carries out an attack. Less obvious (but more pervasive) is the risk that unexercised code has serious bugs that can be leveraged into a successful attack.
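To show what "never exercised" means in practice, here is a rough line-coverage sketch built on Python's standard `sys.settrace` hook and `dis.findlinestarts`. It is an illustration only: the helper names and the `check_access` example with its hard-coded "debug" password are hypothetical, and a real project would more likely rely on a dedicated coverage tool.

```python
import dis
import sys


def executed_lines(func, *args, **kwargs):
    """Run func under a trace hook and return the line numbers of func that executed."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace into other functions
        if event == "line":
            hit.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(None)
    return hit


def all_lines(func):
    """Return every line number in func's body that has bytecode attached."""
    code = func.__code__
    lines = {line for _, line in dis.findlinestarts(code) if line is not None}
    lines.discard(code.co_firstlineno)  # the def line itself is not a body line
    return lines


def check_access(password):
    if password == "debug":
        return "backdoor"
    return "denied"


# A test suite that only ever tries a wrong guess never reaches the backdoor branch.
never_run = all_lines(check_access) - executed_lines(check_access, "guess")
print(sorted(never_run))
```

The line left over is exactly the kind of code the paragraph above says should be immediately suspect: it ships with the product, yet no test has ever watched it run.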

A Dose of Reality

For the organizational solution we propose to work, you need to make sure the security lead knows his or her place. The security lead needs to recognize that security trades off against other requirements.

The goal of a security lead is to bring problems to the attention of a team, and to participate in the decision-making process. However, this person should avoid trying to scare people into doing the “secure” thing without regard to the other needs of the project. Sometimes the “best” security is too slow or too unwieldy to be workable for a real-world system, and the security lead should be aware of this fact. The security lead should want to do what’s in the best interest of the entire project. Generally, a good security lead looks for the tradeoffs, points them out, and suggests an approach that appears to be best for the project. On top of that, a good security lead is as unobtrusive as possible. Too many security people end up being annoying because they spend too much time talking about security. Observing is an important skill for such a position.

Suffice it to say, we have no qualms with people who make decisions based on the bottom line, even when they trade off security. It’s the right thing to do, and good security people recognize this fact. Consider credit card companies, the masters of risk management. Even though they could provide you with much better security for your credit cards, it is not in their financial interests (or yours, as a consumer of their product) to do so. They protect you from loss, and eat an astounding amount in fraud each year, because the cost of the fraud is far less than the cost to deploy a more secure solution. In short, why should they do better with security when no one ultimately gets hurt, especially when they would inconvenience you and make themselves less money to boot?
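The credit card reasoning is simple arithmetic, sketched below. Every figure is made up for illustration and the function name is hypothetical; the point is only the shape of the comparison between the losses avoided and the cost of the more secure solution.

```python
def net_benefit_of_mitigation(annual_loss_now, annual_loss_after, annual_mitigation_cost):
    """Yearly loss avoided by a security measure, minus what the measure costs per year."""
    return (annual_loss_now - annual_loss_after) - annual_mitigation_cost


# Made-up numbers: fraud drops from 10M to 2M a year, but the fix costs 25M a year.
benefit = net_benefit_of_mitigation(
    annual_loss_now=10_000_000,
    annual_loss_after=2_000_000,
    annual_mitigation_cost=25_000_000,
)
print(benefit)  # a negative result means absorbing the fraud is cheaper than the fix
```

With these assumed numbers the mitigation loses money, which is the situation the credit card companies are described as being in. Change the estimates and the same calculation can just as easily argue for deploying the stronger solution.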

Getting People to Think about Security

Most developers are interested in learning about security. Security is actually a great field to be working in these days, and software security is right at the cutting edge.

The world is coming to realize that a majority of security problems are actually caused by bad software. This means that to really get to the heart of the matter, security people need to pay more attention to software development. Obviously, the people best suited to think hard about development are developers themselves.

Sometimes developers and architects are less prone to worry about security. If you find yourself a developer on a project for which the people holding the reins aren’t paying enough attention to security, what should you do? One of our suggestions is to have such people read Chapter 1 of this book. It should open their eyes. Another good book to give them is Bruce Schneier’s Secrets and Lies, which provides a great high-level overview of basic security risks [Schneier, 2000].

Software Risk Management in Practice

Balancing security goals against software project goals is nontrivial. The key to success is understanding the tradeoffs that must be made from a business perspective. Software risk management can help balance security and functionality, bridging the all-too-common gap between IT department security and development staff.

In this section we describe the security gap found in many traditional organizations, and our approach to bridging it.

When Development Goes Astray

Technology is currently in a rapid state of change. The emergence of the Web, the new economy, and e-business have caused a majority of businesses (both old and new) to reevaluate their technology strategy and to dive headlong into the future.

Caught off guard in the maelstrom of rapid change, traditional IT structures have struggled to keep up. One of the upshots is a large (but hopefully shrinking) gap between traditional CIO-driven, management information-based IT organizations and CTO-driven, advanced technology, rapid development teams charged with inventing new Internet-based software applications. Because security was traditionally assigned to the IT staff, there is often little or no security knowledge among what we have called the “roving bands of developers.”

Left to their own devices, roving bands of developers often produce high-quality, well-designed products based on sometimes too-new technologies. The problem is, hotshot coders generally don’t think about security until very late in the development process (if at all). At this point, it is often too late.

There is an unfortunate reliance on the “not-my-job” attitude among good developers. They want to produce something that works as quickly as possible, and they see security as a stodgy, slow, painful exercise.

When Security Analysis Goes Astray

Traditional network security departments in most IT shops understand security from a network perspective. They concern themselves with firewalls, antivirus products, intrusion detection, and policy. The problem is that almost no attention is paid to software being developed by the roving bands of developers, much less to the process that development follows.

When the two groups do interact, the result is often not pretty. IT departments sometimes hold “security reviews,” often at the end of a project, to determine whether an application is secure. This causes resentment among developers because they come to see the reviews as a hurdle in the way of producing code. Security slows things down (they rightfully claim).

One answer to this problem is to invest in software security expertise within the security group. Because software security expertise is an all-too-rare commodity these days, this approach is not always easy to implement. Having a person on the security staff devoted to software security and acting as a resource to developers is a great way to get the two camps interacting.

Traditional IT security shops often rely on two approaches we consider largely ineffective when they do pay attention to software security. These are black box testing and red teaming.

Black Box Testing

Simply put, black box testing for security is not very effective. In fact, even without security in the picture, black box testing is nowhere near as effective as white box testing (making use of the architecture and code to understand how to write effective tests). Testing is incredibly hard. Just making sure every line of code is executed is often a nearly impossible task, never mind following every possible execution path, or even the far simpler goal of executing every branch in every possible truth state.
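The gap between executing every line, executing every branch in both truth states, and following every path can be seen with a toy model. The sketch below assumes a hypothetical routine made of `n` independent `if` statements in sequence; real programs have loops and dependent conditions, so this only shows how quickly the number of paths grows.

```python
from itertools import product


def run(flags):
    """Simulate a routine with one independent `if` per flag; return the guarded steps executed."""
    return [i for i, taken in enumerate(flags) if taken]


def coverage_summary(n):
    """Compare what one run, two runs, and all runs achieve for n independent conditions."""
    every_path = list(product((False, True), repeat=n))
    all_true, all_false = (True,) * n, (False,) * n
    # One run with every condition true executes every guarded statement.
    statements_covered = set(run(all_true)) == set(range(n))
    # Two runs (all true, all false) see each condition in both truth states.
    outcomes = {(i, flag) for flags in (all_true, all_false) for i, flag in enumerate(flags)}
    branches_covered = len(outcomes) == 2 * n
    return {
        "paths": len(every_path),
        "statements_covered_by_1_run": statements_covered,
        "branches_covered_by_2_runs": branches_covered,
    }


print(coverage_summary(10))
```

In this model ten conditions already give 1,024 distinct paths, even though one well-chosen run touches every statement and two runs exercise every branch outcome. A black box tester does not even know which inputs drive which conditions, which is why white box knowledge of the code helps so much.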

Security tests should always be driven by a risk analysis. To function, a system is forced to make security tradeoffs. Security testing is a useful tool for probing the edges and boundaries of a tradeoff. Testing the risks can help determine how much risk is involved in the final implementation.

Security testing should answer the question, “Is the system secure enough?” not the question, “Does this system have reliability problems?” The latter question can always be answered Yes.

Black box testing can sometimes find implementation errors. And these errors can be security problems. But although most black box tests look for glaring errors, not subtle errors, real malicious hackers know that subtle errors are much more interesting in practice. It takes a practiced eye to see a test result and devise a way to break a system.
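A small sketch shows the difference between a glaring error and a subtle one. The filter `naive_sanitize` is hypothetical, written only for illustration. It handles the obvious black box probe correctly, yet a tester who has read the code can see that a single-pass removal can be tricked into rebuilding the very sequence it strips.

```python
def naive_sanitize(path: str) -> str:
    """Hypothetical filter: removes "../" in a single left-to-right pass."""
    return path.replace("../", "")


def escapes_root(path: str) -> bool:
    """True if the sanitized path still contains a parent-directory step."""
    return ".." in naive_sanitize(path).split("/")


# The obvious probe a black box test would try first: it is neutralized.
obvious_probe = "../secret.txt"

# The subtle probe: removing the inner "../" leaves a fresh "../" behind.
subtle_probe = "....//secret.txt"

print(escapes_root(obvious_probe))
print(escapes_root(subtle_probe))
```

The second probe is easy to construct once the single-pass `replace` is visible, and hard to stumble on without it.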

Red Teaming

The idea behind red teaming is to simulate what hackers do to your program in the wild. The idea is to pay for a group of skilled people to try to break into your systems without giving them any information a probable attacker wouldn’t have. If they don’t find any problems, success is declared. If they do find problems, they tell you what they did. You fix the problems, and success is declared.

This idea can certainly turn up real problems, but we find it misguided overall. If a red team finds no problems, does that mean there are no problems? Of course not. Experience shows that it often takes a lot of time and diverse experience to uncover security holes. Red teams sometimes have diverse experience, but the time spent is usually not all that significant. Also, there is little incentive for red teams to look for more than a couple of problems. Red teams tend to look for problems that are as obvious as possible. A real attacker may be motivated by things you don’t understand. He or she may be willing to spend amazing amounts of effort to pick apart your system. Red teams generally only have time to scratch the surface, because they start with so little information. Whether a skilled red team actually finds something is largely based on luck. You get far more bang for your buck by opening up the details of your system to these kinds of people, instead of forcing them to work with no information.

The Common Criteria

In this chapter we’ve doled out advice on integrating security into your engineering process. You may wish to look at other methodologies for building secure systems. For example, over the last handful of years, several governments around the world, including the US government in concert with major user groups such as the Smart Card Security User’s Group, have been working on a standardized system for design and evaluation of security-critical systems. They have produced a system known as the Common Criteria. Theoretically, the Common Criteria can be applied just as easily to software systems as to any other computing system. There are many different sets of protection profiles for the Common Criteria, including a European set and a US set, but they appear to be slowly converging. The compelling idea behind the Common Criteria is to create a security assurance system that can be systematically applied to diverse security-critical systems.

The Common Criteria has its roots in work initiated by the US Department of Defense (DoD), which was carried out throughout the 1970s. With the help of the National Security Agency (NSA), the DoD created a system for technical evaluation of high-security systems. The Department of Defense Trusted Computer System Evaluation Criteria [Orange, 1995] codified the approach and became known as the “Orange Book” because it had an orange cover. The Orange Book was first published in 1985, making it a serious antique in Internet years. Most people consider the Orange Book obsolete. Given that the Orange Book is widely regarded as poorly suited to networked machines, this opinion is basically correct.

Several more efforts to standardize security assurance practices have emerged and fallen out of favor between the debut of the Orange Book and the Common Criteria. They include

• Canada’s Canadian Trusted Computer Product Evaluation Criteria

• The European Union’s Information Technology Security Evaluation Criteria (ITSEC)

• The US Federal Criteria

The Common Criteria is designed to standardize an evaluation approach across nations and technologies. It is an ISO (International Organization for Standardization) standard (15408, version 2.1).

Version 2 of the Common Criteria is composed of three sections that total more than 600 pages. The Common Criteria defines a set of security classes, families, and components designed to be appropriately combined to define a protection profile for any type of IT product, including hardware, firmware, or software. The goal is for these building blocks to be used to create packages that have a high reuse rate among different protection profiles.

The Common Evaluation Methodology is composed of two sections that total just over 400 pages. The Common Evaluation Methodology defines how targets of evaluation (each a particular instance of a product to be evaluated) are to be assessed according to the criteria laid out in the Common Criteria. The National Voluntary Laboratory Accreditation Program (NVLAP) is a part of the National Institute of Standards and Technology (NIST) that audits the application of the Common Criteria and helps to ensure consistency.

The smart card industry, under the guidance of the Smart Card Security User’s Group, has made great use of the Common Criteria to standardize approaches to security in their worldwide market.

An example can help clarify how the Common Criteria works. Suppose that Wall Street gets together and decides to produce a protection profile for the firewalls used to protect the machines holding customers’ financial information. Wall Street produces the protection profile, and the protection profile is then evaluated according to the Common Evaluation Methodology to make sure that it is consistent and complete. Once this effort is complete, the protection profile is published widely. A vendor comes along and produces its version of a firewall that is designed to meet Wall Street’s protection profile. Once the product is ready to go, it becomes a target of evaluation and is sent to an accredited lab for evaluation.

The protection profile defines the security target for which the target of evaluation was designed. The lab applies the Common Evaluation Methodology using the Common Criteria to determine whether the target of evaluation meets the security target. If the target of evaluation meets the security target, every bit of the lab testing documentation is sent to NVLAP for validation.3 Depending on the relationship between NVLAP and the lab, this could be a rubber stamp (although this is not supposed to happen), or it could involve a careful audit of the evaluation performed by the lab.

3. At least this is the case in the United States. Similar bodies are used elsewhere.

If the target of evaluation is validated by NVLAP, then the product gets a certificate and is listed alongside other evaluated products. It is then up to the individual investment houses on Wall Street to decide which brand of firewall they want to purchase from the list of certified targets of evaluation that meet the security target defined by the protection profile.
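The firewall example can be reduced to a toy model. The sketch below is an assumption-laden illustration, not how a lab actually works: a real evaluation assesses evidence about a product rather than comparing sets. The class names, vendors, and products are hypothetical, and the component identifiers merely follow the class, family, and component naming pattern used by the Common Criteria and serve as illustrative labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionProfile:
    name: str
    required_components: frozenset  # components the profile demands


@dataclass(frozen=True)
class TargetOfEvaluation:
    vendor: str
    product: str
    claimed_components: frozenset  # components the product is built to meet


def unmet_requirements(profile, toe):
    """Return the profile's required components the product does not claim."""
    return sorted(profile.required_components - toe.claimed_components)


def certified_list(profile, candidates):
    """Return the products that meet every requirement of the profile."""
    return [t.product for t in candidates if not unmet_requirements(profile, t)]


# Hypothetical profile for the Wall Street firewall scenario.
profile = ProtectionProfile(
    name="Financial Firewall Profile",
    required_components=frozenset({"FAU_GEN.1", "FIA_UAU.2", "FDP_IFF.1"}),
)

# Hypothetical vendor products submitted for evaluation.
candidates = [
    TargetOfEvaluation("VendorA", "WallGuard",
                       frozenset({"FAU_GEN.1", "FIA_UAU.2", "FDP_IFF.1"})),
    TargetOfEvaluation("VendorB", "PacketFence",
                       frozenset({"FAU_GEN.1", "FDP_IFF.1"})),
]

print(certified_list(profile, candidates))
print(unmet_requirements(profile, candidates[1]))
```

Buyers then choose among the products on the resulting list. The model also makes the limitation plain: a product passes by matching the profile, whether or not the profile captures the risks that matter to a particular buyer.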

Although the Common Criteria is certainly a good idea whose time has come, security evaluation is unfortunately not as simple as applying a standard protection profile to a given target of evaluation. The problem with the Common Criteria is evident right in its name. That is, “common” is often not good enough when it comes to security.

We’ve written at length about security being a process and not a product. One of our main concerns is that software risk management decisions, including security decisions, are sensitive to the business context in which they are made. There is no such thing as “secure”; yet the Common Criteria sets out to create a target of evaluation based on a standardized protection profile. The very real danger is that the protection profile will amount to a least-common-denominator approach to security.

As of the year 2001, the smart card community is still grappling with this problem. The Smart Card Security User’s Group is spearheading the effort to make the Common Criteria work for the smart card industry, but the dust has yet to settle. There are two main problems: First, two distinct protection profiles have emerged—a European version that focuses on security by obscurity and physical security (to the detriment of design analysis and testing), and a Visa-developed US version that leans more heavily on applying known attacks and working with a mature threat model. Which of these approaches will finally hold sway is yet to be decided. Second, a majority of the technology assessment labs that do the best work on smart card security are not interested in entering the Common Criteria business. As a result, it appears that Common Criteria-accredited labs (large consulting shops with less expert-driven know-how) will not be applying protection profiles to targets of evaluation with enough expertise. This calls into question just what a Common Criteria accreditation of a target of evaluation will mean. As it stands, such a certification may amount to an exercise in box checking with little bearing on real security. This will be especially problematic when it comes to unknown attacks.

All standards appear to suffer from a similar least-common-denominator problem, and the Common Criteria is no exception. Standards focus on the “what” while underspecifying the “how.” The problem is that the “how” tends to require deep expertise, which is a rare commodity. Standards tend to provide a rather low bar (one that some vendors have trouble hurdling nonetheless). An important question to ponder when thinking about standards is how much real security is demanded of the product. If jumping the lowest hurdle works in terms of the business proposition, then applying something like the Common Criteria makes perfect sense. In the real world, with brand exposure and revenue risks on the line, the Common Criteria may be a good starting point but will probably not provide enough security.

The US government is pushing very hard for adoption of the Common Criteria, as are France, Germany, the United Kingdom, and Canada. It is thus reasonable to expect these governments to start requiring a number of shrink-wrapped products to be certified using the Common Criteria/Common Evaluation Methodology. Private industry will likely follow.

All in all, the move toward the Common Criteria is probably a good thing. Any tool that can be used to separate the wheat from the chaff in today’s overpromising/underdelivering security world is likely to be useful. Just don’t count on the Common Criteria to manage all security risks in a product. Often, much more expertise and insight are required than what have been captured in commonly adopted protection profiles. Risk management is about more than hurdling the lowest bar.

Conclusion

This chapter explains why all is not lost when it comes to software security. But it also shows why there is no magic bullet. There is no substitute for working software security as deeply into the software development process as possible, taking advantage of the engineering lessons software practitioners have learned over the years. The particular process that you follow is probably not as important as the act of thinking about security as you design and build software.

Software engineering and security standards such as the Common Criteria both provide many useful tools that good software security can leverage. The key to building secure software is to treat software security as risk management and to apply the tools in a manner that is consistent with the purpose of the software itself.

Identify security risks early, understand the implications in light of experience, create an architecture that addresses the risks, and rigorously test a system for security.