Hope is a good breakfast, but it is a bad supper.

Caching indicates the system’s, or the application’s, ability to save frequently used data to an intermediate storage medium. An intermediate storage medium is any support placed in between the application and its primary data source that lets you persist and retrieve data more quickly than with the primary data source. In a typical Web scenario, the canonical intermediate storage medium is the Web server’s memory, whereas the data source is the back-end data management system. Obviously, you can design caching around the requirements and characteristics of each application, thus using as many layers of caching as needed to reach your performance goals. In ASP.NET, caching comes in two independent but not exclusive flavors: caching application data, and caching the output of served pages.

To build an application-specific caching subsystem, you use the caching application programming interface (API) that lets you store data in a global, system-managed object—the Cache object. This approach gives you the greatest flexibility, but you need to learn a few usage patterns to stay on the safe side.

Page-output caching, by contrast, is a very quick way to take advantage of cache capabilities. You don’t need to write code; you just configure it at design time and go. The ASP.NET system takes care of caching the output of the page to serve it back to clients for the specified time. For pages that don’t get stale quickly, page-output caching is a kind of free performance booster.

In this chapter, I’ll cover the aspects of caching in a single server as well as caching in a distributed scenario.

Centered on the Cache object, the ASP.NET caching API is much more than simply a container of global data shared by all sessions, such as the Application object that I briefly discussed in the previous chapter. The Application object, by the way, is preserved only for backward compatibility with legacy applications. The Cache object is a smarter and thread-safe container that can automatically remove unused items, support various forms of dependencies, and optionally provide removal callbacks and priorities. New ASP.NET applications should use the Cache object and seriously consider the AppFabric Caching services if strong scalability is needed.

The Cache class is defined in the System.Web.Caching namespace. The current instance of the application’s ASP.NET cache is returned by the Cache property of the HttpContext object or the Cache property of the Page object.

The Cache object is unique in its capability to automatically scavenge the memory and get rid of unused items. Cached items can be prioritized and associated with various types of dependencies, such as disk files, other cached items, and database tables. When any of these items change, the cached item is automatically invalidated and removed. Aside from that, the Cache object provides the same dictionary-based and familiar programming interface as Session. Unlike Session, however, the Cache object does not store data on a per-user basis. Furthermore, when the session state is managed in-process, all currently running sessions are stored as distinct items in the ASP.NET Cache.

Keep in mind that an instance of the Cache class is created on a per-AppDomain basis and remains valid as long as that AppDomain is up and running. If you’re looking for a global repository object that, like Session, works across a Web farm or Web garden architecture, the Cache object is not for you. You have to resort to AppFabric Caching services or to some commercial frameworks (for example, ScaleOut or NCache) or open-source frameworks (for example, Memcached or SharedCache).

The Cache class provides a few properties and public fields. Table 18-1 lists and describes them all.

Table 18-1. Cache Class Properties and Public Fields

| Property | Description |
|---|---|
| Count | Gets the number of items stored in the cache. |
| EffectivePercentagePhysicalMemoryLimit | Gets the maximum percentage of memory that can be used before the scavenging process starts. The default value is 97. |
| EffectivePrivateBytesLimit | Returns the bytes of memory available to the cache. |
| Item | An indexer property that provides access to the cache item identified by the specified key. |
| NoAbsoluteExpiration | A static constant that indicates a given item will never expire. |
| NoSlidingExpiration | A static constant that indicates sliding expiration is disabled for a given item. |

The NoAbsoluteExpiration field is of the DateTime type and is set to the DateTime.MaxValue date—that is, the largest possible date defined in the Microsoft .NET Framework. The NoSlidingExpiration field is of the TimeSpan type and is set to TimeSpan.Zero, meaning that sliding expiration is disabled. I’ll say more about sliding expiration shortly.

The Item property is a read/write property that can also be used to add new items to the cache. If the key specified as the argument of the Item property does not exist, a new entry is created. Otherwise, the existing entry is overwritten.

```csharp
Cache["MyItem"] = value;
```

The data stored in the cache is generically considered to be of type object, whereas the key must be a case-sensitive string. When you insert a new item in the cache using the Item property, a number of default attributes are assumed. In particular, the item is given no expiration policy, no remove callback, and a normal priority. As a result, the item stays in the cache until it is programmatically removed, until the cache scavenges it to free memory, or until the application terminates. To specify any extra arguments and exercise closer control over the item, use the Insert method of the Cache class instead.
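The difference between the indexer and Insert, and the case sensitivity of keys, can be checked with a few lines. This is a minimal sketch, assuming a .NET Framework 4.x project that references System.Web. It uses HttpRuntime.Cache, which returns the same Cache instance that Page.Cache exposes and typically also works outside a page (for example, in a test). The class name, keys, and values are hypothetical.

```csharp
using System;
using System.Web;
using System.Web.Caching;

public static class CacheKeyDemo
{
    // Stores one item through the indexer (default attributes) and one
    // through Insert with an explicit absolute expiration.
    public static void StoreItems()
    {
        Cache cache = HttpRuntime.Cache;

        // Indexer: no dependency, no expiration, Normal priority, no callback
        cache["MyItem"] = "stored via the indexer";

        // Insert: five-minute absolute expiration, sliding expiration off.
        // UtcNow avoids surprises around daylight saving time changes.
        cache.Insert("MyTimedItem", "stored via Insert", null,
            DateTime.UtcNow.AddMinutes(5), Cache.NoSlidingExpiration);
    }

    // Values come back as Object and need a cast; "myitem" and "MyItem"
    // are different keys because keys are case-sensitive.
    public static string Read(string key)
    {
        return HttpRuntime.Cache[key] as string;
    }
}
```

After calling StoreItems, Read("MyItem") returns the stored string, while Read("myitem") returns null.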

The methods of the Cache class let you add, remove, and enumerate the items stored. Methods of the Cache class are listed and described in Table 18-2.

Table 18-2. Cache Class Methods

Neither the Add nor the Insert method accepts null as the key or the value of an item to cache; if you pass null, an exception is thrown. The sliding expiration of an item cannot exceed one year; otherwise, an exception is raised. Finally, bear in mind that you cannot set both sliding and absolute expirations on the same cached item.
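The three argument rules can be observed directly. A minimal sketch, assuming a .NET Framework 4.x project that references System.Web; the class, the helper ExceptionFrom, and the key are hypothetical. The documentation lists ArgumentNullException, ArgumentOutOfRangeException, and ArgumentException for these cases; all three derive from ArgumentException, which is what the helper catches.

```csharp
using System;
using System.Web;
using System.Web.Caching;

public static class InsertValidationDemo
{
    // Runs an action and returns the name of the ArgumentException-derived
    // exception it throws, or null if it completes normally.
    public static string ExceptionFrom(Action action)
    {
        try { action(); return null; }
        catch (ArgumentException ex) { return ex.GetType().Name; }
    }

    // A null value is rejected
    public static string NullValue()
    {
        return ExceptionFrom(() => HttpRuntime.Cache.Insert("Key", null));
    }

    // Sliding expiration longer than one year is rejected
    public static string SlidingLongerThanOneYear()
    {
        return ExceptionFrom(() => HttpRuntime.Cache.Insert("Key", "value", null,
            Cache.NoAbsoluteExpiration, TimeSpan.FromDays(400)));
    }

    // Absolute and sliding expiration together are rejected
    public static string BothExpirations()
    {
        return ExceptionFrom(() => HttpRuntime.Cache.Insert("Key", "value", null,
            DateTime.UtcNow.AddMinutes(10), TimeSpan.FromMinutes(10)));
    }
}
```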

Note

Add and Insert work in much the same way, but a couple of differences make it worthwhile to have both on board. Add fails (but no exception is raised) if the item already exists, whereas Insert overwrites the existing item. In addition, Add has just one signature, while Insert provides several overloads.
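The following sketch contrasts the two methods on the same key. It assumes a .NET Framework 4.x project that references System.Web; the class, key, and values are hypothetical. According to the documentation, Add returns the object already stored under the key if there is one, and null otherwise.

```csharp
using System;
using System.Web;
using System.Web.Caching;

public static class AddVersusInsertDemo
{
    // Add has a single signature: key, value, dependency, absolute
    // expiration, sliding expiration, priority, removal callback.
    static object AddItem(string key, object value)
    {
        return HttpRuntime.Cache.Add(key, value, null, Cache.NoAbsoluteExpiration,
            Cache.NoSlidingExpiration, CacheItemPriority.Normal, null);
    }

    public static object[] Run()
    {
        Cache cache = HttpRuntime.Cache;
        cache.Remove("Greeting");                      // start from a clean slate

        object first = AddItem("Greeting", "Hello");   // new key: item stored
        object second = AddItem("Greeting", "Ciao");   // existing key: nothing stored
        object afterAdd = cache["Greeting"];           // still "Hello"

        cache.Insert("Greeting", "Hola");              // Insert overwrites
        object afterInsert = cache["Greeting"];        // now "Hola"

        return new[] { first, second, afterAdd, afterInsert };
    }
}
```

Neither failed Add call raises an exception, so code that relies on Add to "win" must check either the return value or the cache content afterward.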

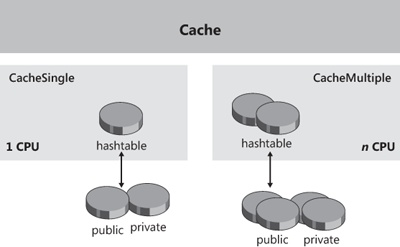

The Cache class inherits from Object and implements the IEnumerable interface. It is a wrapper around an internal class that acts as the true container of the stored data. The real class used to implement the ASP.NET cache varies depending on the number of affinitized CPUs. If only one CPU is available, the class is CacheSingle; otherwise, it is CacheMultiple. In both cases, items are stored in a hashtable and there will be a distinct hashtable for each CPU. It turns out that CacheMultiple manages an array of hashtables. Figure 18-1 illustrates the architecture of the Cache object.

The hashtable is divided into two parts: public elements and private elements. The public portion holds all items visible to user applications, whereas system-level data goes in the private section. The cache is a resource extensively used by the ASP.NET runtime itself; system items, though, are neatly separated from application data, and there’s no way an application can access a private element in the cache.

The Cache object is mostly a way to restrict applications to read from, and write to, the public segment of the data store. Get and set methods on internal cache classes accept a flag to denote the public attribute of the item. When called from the Cache class, these internal methods always default to the flag that selects public items.

The hashtable containing data is then enhanced and surrounded by other internal components to provide a rich set of programming features. The list includes dependencies, removal callbacks, and the implementation of a least recently used (LRU) algorithm to ensure that items can be removed if the system runs short of memory.

Note

On a multiprocessor machine with more than one CPU affinitized with the ASP.NET worker process, each processor ends up getting its own Cache object. The various cache objects are not synchronized. In a Web garden configuration, you can’t assume that users will return to the same CPU (and worker process) on subsequent requests. So the status of the ASP.NET cache is not guaranteed to be aligned with what the same page did last time. Later in the chapter, we’ll discuss a variation of the Cache object that addresses exactly this scenario.

An instance of the Cache object is associated with each running application and shares the associated application’s lifetime. Each item when stored in the cache can be given special attributes that determine a priority and an expiration policy. All these are system-provided tools to help programmers control the scavenging mechanism of the ASP.NET cache.

A cache item is characterized by a handful of attributes that can be specified as input arguments of both Add and Insert. In particular, an item stored in the ASP.NET Cache object can have the following properties:

- Key: A case-sensitive string that is used as the key to store the item in the internal hashtable the ASP.NET cache relies upon. If this value is null, an exception is thrown. If the key already exists, what happens depends on the particular method you’re using: Add fails, while Insert just overwrites the existing item.

- Value: A non-null value of type Object that references the information stored in the cache. The value is managed and returned as an Object and needs casting to become useful in the application context.

- Dependencies: An object of type CacheDependency that tracks a physical dependency between the item being added to the cache and files, directories, database tables, or other objects in the application’s cache. Whenever any of the monitored sources is modified, the newly added item is marked obsolete and automatically removed.

- Absolute Expiration Date: A DateTime object that represents the absolute expiration date for the item being added. When this time arrives, the object is automatically removed from the cache. Items not subject to absolute expiration must use the NoAbsoluteExpiration constant, which represents the farthest allowable date. The absolute expiration date doesn’t change when the item is accessed.

- Sliding Expiration: A TimeSpan object that represents a relative expiration period for the item being added. When you set the parameter to a value other than NoSlidingExpiration (that is, TimeSpan.Zero), the expiration date is automatically set to the current time plus the sliding period. If you explicitly set the sliding expiration, you cannot set the absolute expiration date too. From the user’s perspective, these are mutually exclusive parameters. If the item is accessed before its natural expiration time, the sliding period is automatically renewed.

- Priority: A value picked from the CacheItemPriority enumeration that denotes the priority of the item. It ranges from Low to NotRemovable, and the default level is Normal. The priority level determines the importance of the item; items with a lower priority are removed first.

- Update Callback: If specified, indicates the function that the ASP.NET Cache object calls just before the item is removed from the cache because it expired or because the associated dependency changed. The function isn’t called if the item is programmatically removed from the cache or scavenged by the cache itself. The delegate type used for this callback is CacheItemUpdateCallback.

- Removal Callback: If specified, indicates the function that the ASP.NET Cache object calls back when the item is removed from the cache. In this way, applications can be notified when their own items are removed from the cache, no matter what the reason is. As mentioned in Chapter 17, when the session state works in InProc mode, a removal callback function is used to fire the Session_End event. The delegate type used for this callback is CacheItemRemovedCallback.

There are basically three ways to add new items to the ASP.NET Cache object: the set accessor of the Item property, the Add method, and the Insert method. The Item property allows you to indicate only the key and the value. The Add method has only one signature, which includes all the aforementioned arguments except the update callback. The Insert method is the most flexible of all options and provides the following overloads:

```csharp
public void Insert(String, Object);
public void Insert(String, Object, CacheDependency);
public void Insert(String, Object, CacheDependency, DateTime, TimeSpan);
public void Insert(String, Object, CacheDependency, DateTime, TimeSpan,
                   CacheItemUpdateCallback);
public void Insert(String, Object, CacheDependency, DateTime, TimeSpan,
                   CacheItemPriority, CacheItemRemovedCallback);
```

The following code snippet shows the typical call that is performed under the hood when the Item set accessor is used:

```csharp
Insert(key, value, null, Cache.NoAbsoluteExpiration, Cache.NoSlidingExpiration,
       CacheItemPriority.Normal, null);
```

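If you use the Add method to insert an item whose key matches that of an existing item, no exception is raised and the cached item is left unchanged; the method returns the item already stored under that key. When the key is new, Add stores the item and returns null.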

All items marked with an expiration policy, or a dependency, are automatically removed from the cache when something happens in the system to invalidate them. To programmatically remove an item, on the other hand, you resort to the Remove method. Note that this method removes any item, including those marked with the highest level of priority (NotRemovable). The following code snippet shows how to call the Remove method:

```csharp
var oldValue = Cache.Remove("MyItem");
```

Normally, the method returns the value just removed from the cache. However, if the specified key is not found, the method fails and null is returned, but no exception is ever raised.

When items with an associated callback function are removed from the cache, a value from the CacheItemRemovedReason enumeration is passed on to the function to justify the operation. The enumeration includes the values listed in Table 18-3.

Table 18-3. The CacheItemRemovedReason Enumeration

| Reason | Description |
|---|---|
| DependencyChanged | Removed because the associated dependency changed. |
| Expired | Removed because it expired. |
| Removed | Programmatically removed from the cache using Remove. Notice that a Removed event might also be fired if an existing item is replaced either through Insert or the Item property. |
| Underused | Removed by the system to free memory. |

If the item being removed is associated with a callback, the function is executed immediately after having removed the item.

Note that the CacheItemUpdateReason enumeration contains only the first two items of Table 18-3. Curiously, however, the actual numeric values behind the members in the enumeration don’t match.
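The reason argument is easy to observe with a programmatic removal. This is a minimal sketch, assuming a .NET Framework 4.x project that references System.Web; the class and key names are hypothetical. It deliberately doesn't assume that the callback runs on the calling thread, so it waits on an event for up to five seconds.

```csharp
using System;
using System.Threading;
using System.Web;
using System.Web.Caching;

public static class RemovalCallbackDemo
{
    // Inserts an item with a removal callback, removes it with Remove, and
    // returns the reason passed to the callback (null if it never ran).
    public static CacheItemRemovedReason? RemoveAndGetReason()
    {
        CacheItemRemovedReason? observed = null;
        var signal = new ManualResetEventSlim(false);

        CacheItemRemovedCallback onRemoved = (key, value, reason) =>
        {
            observed = reason;      // record why the item left the cache
            signal.Set();
        };

        HttpRuntime.Cache.Insert("Tracked", "some data", null,
            Cache.NoAbsoluteExpiration, Cache.NoSlidingExpiration,
            CacheItemPriority.Normal, onRemoved);

        HttpRuntime.Cache.Remove("Tracked");

        // Wait for the callback instead of assuming it has already run
        signal.Wait(TimeSpan.FromSeconds(5));
        return observed;
    }
}
```

A programmatic Remove is expected to report CacheItemRemovedReason.Removed; items that expire or lose a dependency report Expired or DependencyChanged instead.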

Items added to the cache through the Add or Insert method can be linked to an array of files and directories as well as to an array of existing cache items, database tables, or external events. The link between the new item and its cache dependency is maintained using an instance of the CacheDependency class. The CacheDependency object can represent a single file or directory or an array of files and directories. In addition, it can also represent an array of cache keys—that is, keys of other items stored in the Cache—and other custom dependency objects to monitor—for example, database tables or external events.

The CacheDependency class has quite a long list of constructors that provide for the possibilities listed in Table 18-4.

Table 18-4. The CacheDependency Constructor List

| Constructor | Description |
|---|---|
| String | A file path, that is, the absolute path of a file or a directory |
| String[] | An array of file paths |
| String, DateTime | A file path monitored starting at the specified time |
| String[], DateTime | An array of file paths monitored starting at the specified time |
| String[], String[] | An array of file paths and an array of cache keys |
| String[], String[], CacheDependency | An array of file paths, an array of cache keys, and a separate CacheDependency object |
| String[], String[], DateTime | An array of file paths and an array of cache keys monitored starting at the specified time |
| String[], String[], CacheDependency, DateTime | An array of file paths, an array of cache keys, and a separate instance of the CacheDependency class monitored starting at the specified time |

Any change in any of the monitored objects invalidates the current item. Note that you can set a time to start monitoring for changes. By default, monitoring begins right after the item is stored in the cache. A CacheDependency object can be made dependent on another instance of the same class. In this case, any change detected on the items controlled by the separate object results in a broken dependency and the subsequent invalidation of the present item.
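The cache-key form of dependency from Table 18-4 can be tried without touching the file system. This is a minimal sketch, assuming a .NET Framework 4.x project that references System.Web; the keys "Master" and "Detail" and the class name are hypothetical. It polls briefly rather than assuming that invalidation happens synchronously inside Remove.

```csharp
using System;
using System.Threading;
using System.Web;
using System.Web.Caching;

public static class KeyDependencyDemo
{
    // Makes "Detail" depend on "Master", then removes "Master".
    // Returns true if "Detail" was invalidated as a consequence.
    public static bool DetailInvalidatedWhenMasterGoes()
    {
        Cache cache = HttpRuntime.Cache;
        cache.Insert("Master", "version 1");

        // No files to monitor (null), one cache key to monitor
        var dependency = new CacheDependency(null, new[] { "Master" });
        cache.Insert("Detail", "derived from version 1", dependency);

        cache.Remove("Master");   // breaks the dependency

        // Give the cache up to five seconds to invalidate the dependent item
        for (int i = 0; i < 50 && cache["Detail"] != null; i++)
            Thread.Sleep(100);

        return cache["Detail"] == null;
    }
}
```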

In the following code snippet, the item is associated with the timestamp of a file. The net effect is that any change made to the file that affects the timestamp invalidates the item, which will then be removed from the cache.

```csharp
var dependency = new CacheDependency(filename);
Cache.Insert(key, value, dependency);
```

Bear in mind that the CacheDependency object needs to take file and directory names expressed through absolute file system paths.

Item removal is an event independent from the application’s behavior and control. The difficulty with item removal is that because the application is oblivious to what has happened, it attempts to access the removed item later and gets only a null value back. To work around this issue, you can either check for the item’s existence before access is attempted or, if you think you need to know about removal in a timely manner, register a callback and reload the item if it’s invalidated. This approach makes particularly good sense if the cached item just represents the content of a tracked file or query.

The following code demonstrates how to read the contents of a Web server’s file and cache it with a key named MyData. The item is inserted with a removal callback. The callback simply re-reads and reloads the file if the removal reason is DependencyChanged.

```csharp
// Requires: System, System.IO, System.Web, System.Web.Caching

void Load_Click(Object sender, EventArgs e)
{
    // Map the virtual path while the request context is still available
    var file = Server.MapPath("data.xml");
    var data = AddFileContentsToCache(file);

    // Display the contents through the UI
    contents.Text = data;
}

static String AddFileContentsToCache(String file)
{
    // ReadAllText opens, reads, and closes the file in a single call
    var data = File.ReadAllText(file);
    CreateAndCacheItem(data, file);
    return data;
}

static void CreateAndCacheItem(Object data, String file)
{
    // The lambda captures the physical path so the callback can reload the file
    CacheItemRemovedCallback removal =
        (key, value, reason) => ReloadItemRemoved(key, reason, file);
    var dependency = new CacheDependency(file);

    // HttpRuntime.Cache is the same Cache instance exposed by Page.Cache
    HttpRuntime.Cache.Insert("MyData", data, dependency, Cache.NoAbsoluteExpiration,
        Cache.NoSlidingExpiration, CacheItemPriority.Normal, removal);
}

static void ReloadItemRemoved(String key, CacheItemRemovedReason reason, String file)
{
    if (reason == CacheItemRemovedReason.DependencyChanged && key == "MyData")
    {
        // At this time, the item has been removed. We get fresh data and
        // re-insert the item. This code runs asynchronously with respect to
        // the application, as soon as the dependency gets broken, so it must
        // not use Server, Request, or page controls. To test it, add some
        // code here to trace the event.
        try
        {
            AddFileContentsToCache(file);
        }
        catch (IOException)
        {
            // The file may still be locked by whoever changed it; leave the
            // item out of the cache so that the next reader finds null
        }
    }
}
```

If the underlying file changes, the callback is notified of the broken dependency and loads the new contents automatically, so the next time you read from the cache, you get fresh data. If the cached item is removed, any successive attempt to read returns null. Here’s some code that shows you how to read from the cache and remove a given item:

```csharp
void Read_Click(Object sender, EventArgs e)
{
    var data = Cache["MyData"];
    if (data == null)
    {
        contents.Text = "[No data available]";
        return;
    }

    // Update the UI
    contents.Text = (String) data;
}

void Remove_Click(Object sender, EventArgs e)
{
    Cache.Remove("MyData");
}
```

Note that the item removal callback is a piece of code defined by a user page but automatically run by the Cache object as soon as the removal event is fired. The code contained in the removal callback runs asynchronously with respect to the page. If the removal event is related to a broken dependency, the Cache object executes the callback as soon as the notification is detected.

If you add an object to the Cache and make it dependent on a file, directory, or key that doesn’t exist, the item is cached normally and marked with a dependency as usual. If the file, directory, or key is created later, the dependency is broken and the cached item is invalidated. In other words, if the dependency item doesn’t exist, it’s virtually created with a null timestamp or empty content.

Each item in the cache is given a priority—that is, a value picked up from the CacheItemPriority enumeration. A priority is a value ranging from Low (lowest) to NotRemovable (highest), with the default set to Normal. The priority is supposed to determine the importance of the item for the Cache object. The higher the priority is, the more chances the item has to stay in memory even when the system resources are going dangerously down.

If you want to give a particular priority level to an item being added to the cache, you have to use either the Add or Insert method. The priority can be any value listed in Table 18-5.

Table 18-5. Priority Levels in the Cache Object

The Cache object is designed with two goals in mind. First, it has to be efficient and built for easy programmatic access to the global repository of application data. Second, it has to be smart enough to detect when the system is running low on memory resources and to clear elements to free memory. This trait clearly differentiates the Cache object from HttpApplicationState, which maintains its objects until the end of the application (unless the application itself frees those items). The technique used to eliminate low-priority and seldom-used objects is known as scavenging.

Priority level and changed dependencies are two of the factors that can lead to a cached item being automatically removed from the Cache. Another possible cause for a premature removal from the Cache is infrequent use associated with an expiration policy. By default, all items added to the cache have no expiration date, neither absolute nor relative. If you add items by using either the Add or Insert method, you can choose between two mutually exclusive expiration policies: absolute expiration and sliding expiration.

Absolute expiration is when a cached item is associated with a DateTime value and is removed from the cache as the specified time is reached. The DateTime.MaxValue field, and its more general alias NoAbsoluteExpiration, can be used to indicate the last date value supported by the .NET Framework and to subsequently indicate that the item will never expire.

Sliding expiration implements a sort of relative expiration policy. The idea is that the object expires after a certain interval. In this case, though, the interval is automatically renewed after each access to the item. Sliding expiration is rendered through a TimeSpan object—a type that in the .NET Framework represents an interval of time. The TimeSpan.Zero field represents the empty interval and is also the value associated with the NoSlidingExpiration static field on the Cache class. When you cache an item with a sliding expiration of 10 minutes, you use the following code:

```csharp
Cache.Insert(key, value, null, Cache.NoAbsoluteExpiration,
    TimeSpan.FromMinutes(10), CacheItemPriority.Normal, null);
```

Internally, the item is cached with an absolute expiration date given by the current time plus the specified TimeSpan value. In light of this, the preceding code could have been rewritten as follows:

```csharp
// UtcNow avoids problems with local-time changes such as daylight saving time
Cache.Insert(key, value, null, DateTime.UtcNow.AddMinutes(10),
    Cache.NoSlidingExpiration, CacheItemPriority.Normal, null);
```

However, a subtle difference still exists between the two code snippets. In the former case, that is, when sliding expiration is explicitly turned on, each access to the item resets the absolute expiration date to the time of the last access plus the time span. In the latter case, because sliding expiration is explicitly turned off, any access to the item doesn’t change the absolute expiration time.
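To make the difference concrete, the following is a hypothetical, simplified model of the two policies. It is not the ASP.NET implementation: it has no scavenging and no timer, and the current time is passed in explicitly so that the behavior is deterministic.

```csharp
using System;

// Hypothetical model of absolute vs. sliding expiration (not ASP.NET code)
public sealed class ExpirationModel
{
    private readonly TimeSpan sliding;   // TimeSpan.Zero means "no sliding"
    private DateTime expiresAt;          // effective absolute expiration

    public ExpirationModel(DateTime now, DateTime absolute, TimeSpan sliding)
    {
        this.sliding = sliding;
        // With sliding expiration on, the expiration date is now + period
        expiresAt = sliding > TimeSpan.Zero ? now + sliding : absolute;
    }

    // Returns true if the item is still alive at 'now'. A successful access
    // pushes the expiration date forward only when sliding expiration is on.
    public bool Access(DateTime now)
    {
        if (now >= expiresAt) return false;
        if (sliding > TimeSpan.Zero) expiresAt = now + sliding;
        return true;
    }
}
```

With a 10-minute period, an item accessed at minutes 8 and 16 survives under sliding expiration (the second access falls before 8 + 10 = 18), whereas an item with an absolute expiration at minute 10 is gone by minute 16.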

Note

Immediately after initialization, the Cache collects statistical information about the memory in the system and the current status of the system resources. Next, it registers a timer to invoke a callback function at one-second intervals. The callback function periodically updates and reviews the memory statistics and, if needed, activates the scavenging module. Memory statistics are collected using a bunch of Win32 API functions to obtain information about the system’s current usage of both physical and virtual memory. The Cache object classifies the status of the system resources in terms of low and high pressure. When the memory pressure exceeds the guard level, seldom-used objects are the first to be removed according to their priority.

Caching is a critical factor for the success of a Web application. Caching mostly relates to getting quick access to prefetched data that saves you roundtrips, queries, and any other sort of heavy operations. Caching is important also for writing, especially in systems with a high volume of data to be written. By posting requests for writing to a kind of intermediate memory structure, you decouple the main body of the application from the service in charge of writing. Some people call this a batch update, but in the end it is nothing more than a form of caching for data to write.

The caching API provides you with the necessary tools to build a bullet-proof caching strategy. When it comes to this, though, a few practical issues arise.

How much should you cache, and for how long? There’s just one possible answer to this question: it depends. It depends on the characteristics of the application and the expected goals. For an application that must optimize throughput and serve requests in the shortest possible amount of time, caching is essential. The quantity of data you cache and the amount of time you cache it for are the two parameters you need to play with to arrive at a good solution.

Caching is about reusing data, so data that is not often used in the lifetime of the application is not a good candidate for the cache. In addition to being frequently used, cacheable data is also general-purpose data rather than data that is specific to a request or a session. If your application manages data with these characteristics, cache it with no fear.

Caching is about memory, and memory is relatively cheap. However, a bad application design can easily drive the application to unpleasant out-of-memory errors regardless of the cost of a memory chip. On the other hand, caching can boost the performance just enough to ease your pain and give you more time to devise a serious refactoring.

Sometimes you face users who claim to have an absolute need for live data. Sure, data parked in the cache is static, unaffected by concurrent events, and not fully participating in the life of the application. Can your users afford data that has not been updated for a few seconds? With a few exceptions, the answer is, “Sure, they can.” In a canonical Web application, there’s virtually no data that can’t be cached at least for a second or two. No matter what end users claim, caching can realistically be applied to the vast majority of scenarios. Real-time systems and systems with a high degree of concurrency (for example, a booking application) are certainly an exception, but most of the time a slight delay of one or two seconds can make the application run faster under stress conditions without affecting the quality of the service.

In the end, you should consider caching all the time and set it aside in favor of direct data access only in special situations. As a practical rule, when users claim they need live data, try to provide a counterexample that shows them that a few seconds of delay are still acceptable and that the delay can maximize hardware and software investments.

Fetching the real data is an option, but it might be the most expensive one. If you choose that option, make sure you really need it. Accessing cached data is usually faster if the data you get in this way makes sense to the application. On the other hand, be aware that caching requires memory. If abused, it can lead to out-of-memory errors and performance hits. Having said that, don’t be too surprised if you find out that sometimes fetching data is actually faster than accessing items in a busy cache, because data access in database servers such as SQL Server is highly optimized these days.

As mentioned, no data stored in the ASP.NET cache is guaranteed to stay there when a piece of code attempts to read it. For the safety of the application, you should never assume that the Get method or the Item property returns a non-null value. The following pattern keeps you on the safe side:

```csharp
var data = Cache["MyData"];
if (data != null)
{
    // The data is here, process it
    ...
}
```

The code snippet deliberately omits the else branch. What should you do if the requested item is null? You can abort the ongoing operation and display a friendly message to the user, or you can reload the data with a new fetch. Whichever approach you choose, no single one will fit every piece of data you might keep in the cache.
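When reloading is the right answer, the check-then-fetch logic can live in one helper. This is a minimal sketch, assuming a .NET Framework 4.x project that references System.Web; GetOrLoad, its loader delegate, and the fixed absolute-expiration duration are hypothetical choices, not part of the ASP.NET API. Note that the item is read once into a local variable, so it can’t disappear between the null check and its use.

```csharp
using System;
using System.Web;
using System.Web.Caching;

public static class CacheReader
{
    // Returns the cached item if present; otherwise calls the loader and
    // caches the result for 'duration' (absolute expiration).
    public static T GetOrLoad<T>(string key, Func<T> loader, TimeSpan duration)
        where T : class
    {
        var data = HttpRuntime.Cache[key] as T;   // single read into a local
        if (data != null)
            return data;                          // the data is here, use it

        data = loader();                          // reload with a new fetch
        if (data != null)                         // the cache rejects null values
        {
            HttpRuntime.Cache.Insert(key, data, null,
                DateTime.UtcNow.Add(duration), Cache.NoSlidingExpiration);
        }
        return data;
    }
}
```

Two concurrent requests that both miss may each call the loader; that is usually acceptable for read-mostly data, and the last Insert simply wins.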

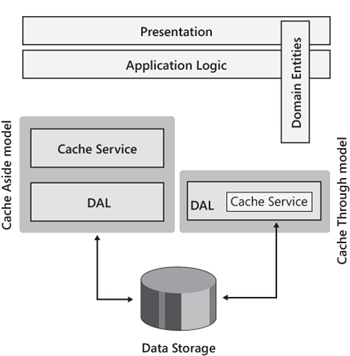

If you need the cache as a structural part of the application (rather than just for caching a few individual pieces of data), it has to be strictly related to the data access layer (DAL) and the repository interfaces you have on top of that. (See Chapter 14.) Depending on the pattern you prefer, you can have caching implemented as a service in the business tier (Cache-Aside pattern) or integrated in the DAL and transparent to the rest of the application (Cache Through pattern). Figure 18-2 shows the resulting architecture in both cases.

In addition, you need to consider the pluggability of the caching layer. Whether you design it as an application service or as an integral part of the DAL, the caching service must be abstracted to an interface so that it can be injected in the application or in the DAL. At a minimum, the abstraction will offer the following:

```csharp
public interface ICacheService
{
    Object Get(String key);
    void Set(String key, Object data);
    ...
}
```

You are responsible for adding dependencies and priorities as appropriate. Here’s the skeleton of a class that implements the interface using the native ASP.NET Cache object:

```csharp
public class AspNetCacheService : ICacheService
{
    public Object Get(String key)
    {
        return HttpContext.Current.Cache[key];
    }

    public void Set(String key, Object data)
    {
        HttpContext.Current.Cache[key] = data;
    }
    ...
}
```

As emphatic as it might sound, you should never use the Cache object directly from code-behind classes in a well-designed, ASP.NET-based layered solution.
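One payoff of the abstraction is that code depending on it can be tested without ASP.NET. The following sketch is hypothetical throughout: it repeats a minimal two-member ICacheService so that it compiles on its own, adds an in-memory implementation (no expiration, no scavenging), and shows a repository that receives the service through its constructor and applies the cache-aside logic. CountryRepository and its fake data source are illustrative stand-ins.

```csharp
using System;
using System.Collections.Concurrent;

// Minimal version of the ICacheService abstraction
public interface ICacheService
{
    Object Get(String key);
    void Set(String key, Object data);
}

// Hypothetical in-memory implementation, handy for unit tests
public class InMemoryCacheService : ICacheService
{
    private readonly ConcurrentDictionary<String, Object> items =
        new ConcurrentDictionary<String, Object>(StringComparer.Ordinal);

    public Object Get(String key)
    {
        Object value;
        return items.TryGetValue(key, out value) ? value : null;
    }

    public void Set(String key, Object data)
    {
        items[key] = data;
    }
}

// Hypothetical repository that only knows about the abstraction
public class CountryRepository
{
    private readonly ICacheService cache;
    public int DatabaseHits { get; private set; }

    public CountryRepository(ICacheService cache)
    {
        this.cache = cache;
    }

    public String[] GetCountries()
    {
        var countries = cache.Get("Countries") as String[];
        if (countries == null)
        {
            countries = LoadFromDatabase();     // cache miss: fetch and store
            cache.Set("Countries", countries);
        }
        return countries;
    }

    // Stand-in for a real query
    private String[] LoadFromDatabase()
    {
        DatabaseHits++;
        return new[] { "Italy", "Spain" };
    }
}
```

In production, the same repository would receive an AspNetCacheService instead, with no change to its code.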

The .NET Framework provides no method on the Cache class to programmatically clear all the content. The following code snippet shows how to build one:

```csharp
public void Clear()
{
    foreach (DictionaryEntry elem in Cache)
    {
        string s = elem.Key.ToString();
        Cache.Remove(s);
    }
}
```

Even though the ASP.NET cache is implemented to maintain a neat separation between the application’s items and the system’s items, it is preferable that you delete items in the cache individually. If you have several items to maintain, you might want to build your own wrapper class and expose one single method to clear all the cached data.
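Here is one possible shape for such a wrapper. It is a hypothetical sketch, assuming a .NET Framework 4.x project that references System.Web: PrefixedCache namespaces its keys with a prefix so that Clear removes only the entries it created. It collects the matching keys first and removes them afterward, so nothing is removed while the cache is being enumerated.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Web;

// Hypothetical wrapper that owns all keys starting with its prefix
public class PrefixedCache
{
    private readonly string prefix;

    public PrefixedCache(string prefix)
    {
        this.prefix = prefix + ":";
    }

    // Same semantics as the Cache indexer (null values are rejected)
    public object this[string key]
    {
        get { return HttpRuntime.Cache[prefix + key]; }
        set { HttpRuntime.Cache[prefix + key] = value; }
    }

    // Removes only the entries stored through this wrapper
    public void Clear()
    {
        var keys = new List<string>();
        foreach (DictionaryEntry entry in HttpRuntime.Cache)
        {
            var key = (string) entry.Key;
            if (key.StartsWith(prefix, StringComparison.Ordinal))
                keys.Add(key);
        }

        foreach (var key in keys)
            HttpRuntime.Cache.Remove(key);
    }
}
```

Two wrappers with different prefixes can share the same Cache without clearing each other’s data.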

Whenever you read or write an individual cache item, from a threading perspective you’re absolutely safe. The ASP.NET Cache object guarantees that no other concurrently running threads can ever interfere with what you’re doing. If you need to ensure that multiple operations on the Cache object occur atomically, that’s a different story. Consider the following code snippet:

```csharp
var counter = -1;
object o = Cache["Counter"];
if (o == null)
{
    // Retrieve the last good known value from a database
    // or return a default value
    counter = RetrieveLastKnownValue();
}
else
{
    // Reuse o: reading Cache["Counter"] again could return null
    // if the item was removed in the meantime
    counter = (Int32) o;
    counter++;
    Cache["Counter"] = counter;
}
```

The Cache object is accessed repeatedly in the context of what should be an atomic operation: incrementing a counter. Although individual accesses to Cache are thread-safe, there’s no guarantee that other threads won’t kick in between the various calls. If there’s potential contention on the cached value, you should consider using additional locking constructs, such as the C# lock statement (SyncLock in Microsoft Visual Basic .NET).

Important

Where should you put the lock? If you directly lock the Cache object, you might run into trouble. ASP.NET uses the Cache object extensively, and directly locking the Cache object might have a serious impact on the overall performance of the application. However, most of the time ASP.NET doesn’t access the cache via the Cache object; rather, it accesses the direct data container—that is, the CacheSingle or CacheMultiple class. In this regard, a lock on the Cache object probably won’t affect many ASP.NET components; regardless, it’s a risk that I wouldn’t like to take.

By locking the Cache object, you also risk blocking HTTP modules and handlers active in the pipeline, as well as other pages and sessions in the application that need to use cache entries different from the ones you want to serialize access to.

The best way out seems to be using a synchronizer: an intermediate but global object that you lock before entering a piece of code sensitive to concurrency:

```csharp
lock (yourSynchronizer)
{
    // Access the Cache here. This pattern must be replicated for
    // each access to the cache that requires serialization.
}
```

The synchronizer object must be global to the application. For example, it can be a static member defined in the global.asax file.
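The following sketch applies the synchronizer to the counter example. CacheSync and CounterService are hypothetical names, and a ConcurrentDictionary stands in for the Cache object: individual reads and writes are thread-safe, but the read-increment-write sequence is serialized by locking a static object rather than the cache itself.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for a static member in global.asax:
// one application-wide synchronizer object.
public static class CacheSync
{
    public static readonly Object Synchronizer = new Object();
}

public static class CounterService
{
    // Stands in for the Cache object: each single access is thread-safe
    public static readonly ConcurrentDictionary<String, Object> Cache =
        new ConcurrentDictionary<String, Object>();

    // Read-increment-write is a compound operation, so it runs under
    // the synchronizer instead of under a lock on the cache itself
    public static Int32 Increment(String key)
    {
        lock (CacheSync.Synchronizer)
        {
            Object o;
            var counter = Cache.TryGetValue(key, out o) ? (Int32) o : 0;
            counter++;
            Cache[key] = counter;
            return counter;
        }
    }
}
```

Running `Parallel.For(0, 1000, i => CounterService.Increment("Counter"))` leaves the counter at exactly 1000; without the lock, concurrent increments could overwrite one another.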

Although you normally tend to cache only global data and data of general interest, to squeeze out every little bit of performance you can also cache per-request data that is long-lived even though it’s used only by a particular page. You place this information in the Cache object.

Another form of per-request caching is possible to improve performance. Working information shared by all controls and components participating in the processing of a request can be stored in a global container for the duration of the request. In this case, though, you might want to use the Items collection on the HttpContext class (discussed in Chapter 16) to park the data because it is automatically freed up at the end of the request and doesn’t involve implicit or explicit locking like Cache.

Let’s say it up front: writing a custom cache dependency object is no picnic. You should have a very good reason to do so, and you should carefully design the new functionality before proceeding. The CacheDependency class is inheritable—you can derive your own class from it to implement an external source of events to invalidate cached items.

The base CacheDependency class handles all the wiring of the new dependency object to the ASP.NET cache and all the issues surrounding synchronization and disposal. It also saves you from implementing a start-time feature from scratch—you inherit that capability from the base class constructors. (The start-time feature allows you to start tracking dependencies at a particular time.)

Let’s start by reviewing the original limitations of CacheDependency that led to removing the sealed modifier from the class, making it fully inheritable.

To fully support derived classes and to facilitate their integration into the ASP.NET caching infrastructure, a bunch of new public and protected members have been added to the CacheDependency class. They are summarized in Table 18-6.

Table 18-6. Public and Protected Members of CacheDependency

| Member | Description |
|---|---|
| DependencyDispose | Protected method. It releases the resources used by the class. |
| GetUniqueID | Public method. It retrieves a unique string identifier for the object. |
| NotifyDependencyChanged | Protected method. It notifies the base class that the dependency represented by this object has changed. |
| SetUtcLastModified | Protected method. It marks the time when a dependency last changed. |
| UtcLastModified | Public read-only property. It gets the time when the dependency was last changed. |

As mentioned, a custom dependency class relies on its parent for any interaction with the Cache object. The NotifyDependencyChanged method is called by classes that inherit CacheDependency to tell the base class that the dependent item has changed. In response, the base class updates the values of the HasChanged and UtcLastModified properties. Any cleanup code needed when the custom cache dependency object is dismissed should go into the DependencyDispose method.

As you might have noticed, nothing in the public interface of the base CacheDependency class allows you to insert code to check whether a given condition—the heart of the dependency—is met. Why is this? The CacheDependency class was designed to support only a limited set of well-known dependencies—against files, time, and other cached items.

To detect file changes, the CacheDependency object internally sets up a file monitor object and receives a call from it whenever the monitored file or directory changes. The CacheDependency class creates a FileSystemWatcher object and passes it an event handler. A similar approach is used to establish a programmatic link between the CacheDependency object and the Cache object and its items. The Cache object invokes a CacheDependency internal method when one of the monitored items changes. What does this all mean to the developer?

A custom dependency object must be able to receive notifications from the external data source it is monitoring. In most cases, this is really complicated if you can’t bind to existing notification mechanisms (such as file system monitor or SQL Server notifications). When the notification of a change in the source is detected, the dependency uses the parent’s infrastructure to notify the cache of the event. We’ll consider a practical example in a moment.

Not only can you create a single dependency on an entry, you can also aggregate dependencies. For example, you can make a cache entry dependent on both a disk file and a SQL Server table. The following code snippet shows how to create a cache entry, named MyData, that is dependent on two different files:

```csharp
// Creates an array of CacheDependency objects
CacheDependency dep1 = new CacheDependency(fileName1);
CacheDependency dep2 = new CacheDependency(fileName2);
CacheDependency[] deps = { dep1, dep2 };

// Creates an aggregate object
AggregateCacheDependency aggDep = new AggregateCacheDependency();
aggDep.Add(deps);
Cache.Insert("MyData", data, aggDep);
```

Any custom cache dependency object (including SqlCacheDependency) inherits CacheDependency, so the array of dependencies can contain virtually any type of dependency.

The AggregateCacheDependency class is built as a custom cache dependency object and inherits the base CacheDependency class.
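To illustrate the notification flow described above, including how an aggregate can itself be a dependency, here is a deliberately simplified model. MiniDependency, ManualDependency, MiniAggregateDependency, and MiniCache are hypothetical classes that only mimic the roles of CacheDependency, a custom dependency, AggregateCacheDependency, and the Cache object; the real ASP.NET classes are wired differently internally.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base class playing the role of CacheDependency
public class MiniDependency
{
    public event EventHandler Changed;
    public Boolean HasChanged { get; private set; }

    // Counterpart of NotifyDependencyChanged: derived classes call it
    // when the monitored resource changes
    protected void NotifyDependencyChanged()
    {
        if (HasChanged) return;              // notify only once
        HasChanged = true;
        var handler = Changed;
        if (handler != null) handler(this, EventArgs.Empty);
    }
}

// A dependency triggered from outside (for example, by a file monitor)
public class ManualDependency : MiniDependency
{
    public void Trigger() { NotifyDependencyChanged(); }
}

// Like AggregateCacheDependency, the aggregate is itself a dependency:
// it fires as soon as any of its children fires
public class MiniAggregateDependency : MiniDependency
{
    public void Add(params MiniDependency[] dependencies)
    {
        foreach (var dep in dependencies)
        {
            dep.Changed += (s, e) => NotifyDependencyChanged();
            if (dep.HasChanged) NotifyDependencyChanged();
        }
    }
}

public class MiniCache
{
    private readonly Object _sync = new Object();
    private readonly Dictionary<String, Object> _items = new Dictionary<String, Object>();

    public void Insert(String key, Object value, MiniDependency dependency)
    {
        lock (_sync) { _items[key] = value; }
        // When the dependency reports a change, the linked entry is removed
        dependency.Changed += (s, e) => { lock (_sync) { _items.Remove(key); } };
        if (dependency.HasChanged) lock (_sync) { _items.Remove(key); }
    }

    public Object this[String key]
    {
        get
        {
            lock (_sync)
            {
                Object value;
                return _items.TryGetValue(key, out value) ? value : null;
            }
        }
    }
}
```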

Suppose your application gets some key data from a custom XML file and you don’t want to access the file on disk for every request. So you decide to cache the contents of the XML file, but still you’d love to detect changes to the file that occur while the application is up and running. Is this possible? You bet. You arrange a file dependency and you’re done.

In this case, though, any update to the file that modifies the timestamp is perceived as a critical change. As a result, the related entry in the cache is invalidated and you’re left with no choice other than re-reading the XML data from the disk. The rub here is that you are forced to re-read everything even if the change is limited to a comment or to a node that is not relevant to your application.

Because you want the cached data to be invalidated only when certain nodes change, you create a made-to-measure cache dependency class to monitor the return value of a given XPath expression on an XML file.

Note

If the target data source provides you with a built-in and totally asynchronous notification mechanism (such as the command notification mechanism of SQL Server), you just use it. Otherwise, to detect changes in the monitored data source, you can only poll the resource at a reasonable rate.

To better understand the concept of custom dependencies, think of the following example. You need to cache the inner text of a particular node in an XML file. You can define a custom dependency class that caches the current value upon instantiation and reads the file periodically to detect changes. When a change is detected, the cached item bound to the dependency is invalidated.

Note

Admittedly, polling might not be the right approach for this particular problem. Later on, in fact, I’ll briefly discuss a more effective implementation. Be aware, though, that polling is a valid and common technique for custom cache dependencies.

A good way to poll a local or remote resource is through a timer callback. Let’s break the procedure into a few steps:

1. The custom XmlDataCacheDependency class initializes some internal properties and caches the polling rate, the file name, and the XPath expression that selects the subtree to monitor.
2. After initialization, the dependency object sets up a timer callback to access the file periodically and check its contents.
3. In the callback, the return value of the XPath expression is compared to the previously stored value. If the two values differ, the linked cache item is promptly invalidated.

There’s no need for the developer to specify details about how the cache dependency is broken or set up. The CacheDependency class takes care of it entirely.

Note

If you’re curious to know how the Cache detects when a dependency is broken, read on. When an item bound to a custom dependency object is added to the Cache, an additional entry is created and linked to the initial item. NotifyDependencyChanged simply invalidates this additional element which, in turn, invalidates the original cache item.

The following source code shows the core implementation of the custom XmlDataCacheDependency class:

```csharp
using System;
using System.IO;
using System.Threading;
using System.Web.Caching;
using System.Xml;

public class XmlDataCacheDependency : CacheDependency
{
    // Internal members (one timer per dependency instance; a static
    // timer would poll for the first instance only)
    Timer _timer;
    Int32 _pollSecs = 10;
    String _fileName;
    String _xpathExpression;
    String _currentValue;

    public XmlDataCacheDependency(String file, String xpath, Int32 pollTime)
    {
        // Set internal members
        _fileName = file;
        _xpathExpression = xpath;
        _pollSecs = pollTime;

        // Get the current value
        _currentValue = CheckFile();

        // Set the timer
        var ms = _pollSecs * 1000;
        var cb = new TimerCallback(XmlDataCallback);
        _timer = new Timer(cb, this, ms, ms);
    }

    public String CurrentValue
    {
        get { return _currentValue; }
    }

    public void XmlDataCallback(Object sender)
    {
        // Get a reference to THIS dependency object
        var dep = sender as XmlDataCacheDependency;

        // Check for changes and notify the base class if any are found.
        // An exception escaping a timer callback would end the process,
        // so a busy or half-written file is simply retried at the next tick.
        String value;
        try { value = dep.CheckFile(); }
        catch (IOException) { return; }
        catch (XmlException) { return; }

        if (!String.Equals(dep.CurrentValue, value))
            dep.NotifyDependencyChanged(dep, EventArgs.Empty);
    }

    public String CheckFile()
    {
        // Evaluates the XPath expression in the file
        var doc = new XmlDocument();
        doc.Load(_fileName);
        var node = doc.SelectSingleNode(_xpathExpression);
        return node == null ? null : node.InnerText;
    }

    protected override void DependencyDispose()
    {
        // Kill the timer and then proceed as usual
        if (_timer != null)
        {
            _timer.Dispose();
            _timer = null;
        }
        base.DependencyDispose();
    }
}
```

When the cache dependency is created, the file is parsed and the value of the XPath expression is stored in an internal member. At the same time, a timer is started to repeat the operation at regular intervals. The return value is compared to the value stored in the constructor code. If the two are different, the NotifyDependencyChanged method is invoked on the base CacheDependency class to invalidate the linked content in the ASP.NET Cache.

How can you use this dependency class in a Web application? It’s as easy as it seems—you just use it in any scenario where a CacheDependency object is acceptable. For example, you create an instance of the class in the Page_Load event and pass it to the Cache.Insert method:

```csharp
protected const String CacheKeyName = "MyData";

protected void Page_Load(Object sender, EventArgs e)
{
    if (!IsPostBack)
    {
        // Create a new entry with a custom dependency (and poll every 10 seconds)
        var dependency = new XmlDataCacheDependency(
            Server.MapPath("employees.xml"),
            "MyDataSet/NorthwindEmployees/Employee[employeeid=3]/lastname",
            10);
        Cache.Insert(CacheKeyName, dependency.CurrentValue, dependency);
    }

    // Refresh the UI
    Msg.Text = Display();
}
```

You write the rest of the page as usual, paying close attention to how you access the specified Cache key: because of the dependency, the item might have been removed, so reading the key could return null. Here’s an example:

```csharp
protected String Display()
{
    // The item is gone if the dependency has been broken
    var o = Cache[CacheKeyName] as String;
    return o ?? "[No data available--dependency broken]";
}
```

The XmlDataCacheDependency object allows you to control changes that occur on a file and decide which are relevant and might require you to invalidate the cache. The sample dependency uses XPath expressions to identify a subset of nodes to monitor for changes.

Note

I decided to implement polling in this sample custom dependency because polling is a pretty common, often mandatory, approach for custom dependencies. However, in this particular case polling is not the best option. You could set a FileSystemWatcher object and watch for changes to the XML file. When a change is detected, you execute the XPath expression to see whether the change is relevant for the dependency. Using an asynchronous notifier, if one is available, results in much better performance.
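A sketch of that FileSystemWatcher-based alternative follows. To keep it runnable outside ASP.NET, XPathFileWatcher (a hypothetical class) raises a plain .NET Changed event where a real CacheDependency subclass would call NotifyDependencyChanged. Watching starts only when Start is called, and CheckNow can also be invoked directly. Because CheckNow compares the XPath result with the last known value, edits to irrelevant nodes, as well as the repeated change events an editor often raises for a single save, do not produce notifications.

```csharp
using System;
using System.IO;
using System.Xml;

// Hypothetical class: watches an XML file, notifies only on relevant changes
public sealed class XPathFileWatcher : IDisposable
{
    private readonly String _fileName;
    private readonly String _xpath;
    private readonly Object _sync = new Object();
    private FileSystemWatcher _watcher;
    private String _currentValue;

    // Raised only when the XPath result actually changes
    public event EventHandler Changed;

    public XPathFileWatcher(String fileName, String xpath)
    {
        _fileName = Path.GetFullPath(fileName);
        _xpath = xpath;
        _currentValue = Evaluate();
    }

    public String CurrentValue
    {
        get { lock (_sync) { return _currentValue; } }
    }

    // Starts receiving file system notifications for the monitored file
    public void Start()
    {
        if (_watcher != null) return;
        _watcher = new FileSystemWatcher(Path.GetDirectoryName(_fileName), Path.GetFileName(_fileName));
        _watcher.NotifyFilter = NotifyFilters.LastWrite | NotifyFilters.Size;
        _watcher.Changed += (sender, e) => CheckNow();
        _watcher.EnableRaisingEvents = true;
    }

    // Re-evaluates the XPath expression; returns true (and raises Changed)
    // only if the value differs from the last known one
    public Boolean CheckNow()
    {
        String value;
        try { value = Evaluate(); }
        catch (IOException) { return false; }    // file still locked by the writer
        catch (XmlException) { return false; }   // file only partially written

        Boolean changed;
        lock (_sync)
        {
            changed = !String.Equals(_currentValue, value);
            if (changed) _currentValue = value;
        }

        var handler = Changed;
        if (changed && handler != null) handler(this, EventArgs.Empty);
        return changed;
    }

    private String Evaluate()
    {
        var doc = new XmlDocument();
        doc.Load(_fileName);
        var node = doc.SelectSingleNode(_xpath);
        return node == null ? null : node.InnerText;
    }

    public void Dispose()
    {
        if (_watcher != null) _watcher.Dispose();
    }
}
```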

Many ASP.NET applications query some data out of a database, cache it, and then manage to serve a report to the user. Binding the report to the data in the cache will both reduce the time required to load each report and minimize traffic to and from the database. What’s the problem, then? With a report built from the cache, if the data displayed is modified in the database, users will get an out-of-date report. If updates occur at a known or predictable rate, you can set an appropriate duration for the cached data so that the report gets automatically refreshed at regular intervals. However, this contrivance just doesn’t work if serving live data is critical for the application or if changes occur rarely and, worse yet, randomly. In the latter case, whatever duration you set might hit the application in one way or the other. A too-long duration creates the risk of serving outdated reports to end users which, in some cases, could undermine the business; a too-short duration burdens the application with unnecessary queries.

A database dependency is a special case of custom dependency that consists of the automatic invalidation of some cached data when the contents of the source database table change. In ASP.NET, you find an ad hoc class, SqlCacheDependency, that inherits CacheDependency and supports dependencies on SQL Server tables. More precisely, the class is compatible with SQL Server 2005 and later.

The SqlCacheDependency class has two constructors. The first takes a SqlCommand object, and the second accepts two strings: the name of a database entry defined in the sqlCacheDependency section of the web.config file, and the table name. The following code creates a SQL Server dependency and binds it to a cache key:

```csharp
protected void AddToCache(Object data)
{
    var database = "Northwind";
    var table = "Customers";
    var dependency = new SqlCacheDependency(database, table);
    Cache.Insert("MyData", data, dependency);
}

protected void Page_Load(Object sender, EventArgs e)
{
    if (!IsPostBack)
    {
        // Get some data to cache
        var data = Customers.LoadByCountry("USA");

        // Cache with a dependency on Customers
        AddToCache(data);
    }
}
```

The data in the cache can be linked to any data-bound control, as follows:

```csharp
var data = Cache["MyData"] as IList<Customer>;
if (data == null)
    Trace.Warn("Null data");

CustomerList.DataTextField = "CompanyName";
CustomerList.DataSource = data;
CustomerList.DataBind();
```

When the database is updated, the MyData entry is invalidated and, in the sample implementation provided here, the list box is displayed empty.

Important

You get notification based on changes in the table as a whole. In the preceding code, we’re displaying a data set that results from the following:

```sql
SELECT * FROM customers WHERE country='USA'
```

If, say, a new record is added to the Customers table, you get a notification no matter what the value in the country column is. The same happens if a record is modified or deleted where the country column is not USA.

By using a SqlCommand object in the constructor of the dependency class, you gain a finer level of control and can notify applications only of changes to the database that modify the output of that specific command.

Scalability is an aspect of software that came to the forefront with the advent of successful Web applications. It is strictly related to the stateless nature of the HTTP protocol: because the protocol keeps no state, any new request from the same user in the same session must be bound to the same “state” left by the last request.

The need to “re-create” the last known good state results in an additional workload that saturates Web and data servers quite soon, growing roughly linearly with the number of users. Caching is a way to mitigate the issue by providing a data store that sits nearer to the user and doesn’t require frequent roundtrips to central servers.

The ASP.NET Cache object has a number of powerful and relevant capabilities. Unfortunately, today’s business needs have raised the bar a little higher. The Cache object is limited because it is bound to a worker process and a single machine: it doesn’t span multiple machines as in a Web farm, and the memory it can use is that of a single machine, so it can’t be scaled out horizontally. Enter distributed caching.

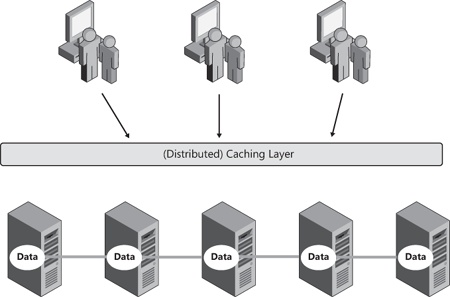

The power of a distributed cache is in its design, which distributes load and data on multiple and largely independent machines. Implemented across multiple servers, a distributed cache is scalable by nature but still gives the logical view of a single cache. Moreover, you don’t need high-end machines to serve as cache servers. Add this to cheaper storage and faster network cards and you get the big picture—distributed caching these days is much more affordable than only a few years ago. Figure 18-3 shows the abstraction that a distributed caching layer provides to applications.

Note

In a previous edition of this book, in the same chapter about caching, I argued against the widespread use of a distributed cache. Only a few years ago, the perception of scalability was different. It was recognized as a problem, but most of the time it could be resolved in the scope of a single Web server.

Today, it is different—not only do you welcome the possibility of caching on multiple machines, but you also demand an ad hoc layer to do the hard work of data synchronization and retrieval. This is referred to as a distributed caching system.

As mentioned, lack of scalability is the fundamental problem addressed by a distributed cache. Compared to a classic database, a distributed cache is much easier and cheaper to scale and replicate. It is not coincidental that there is a growing interest in NoSQL solutions, which are essentially distributed stores that can be easily and effectively scaled horizontally. Ultimately, a distributed cache and most NoSQL frameworks offer nearly the same set of features.

Commonly based on a cluster of cache servers, a distributed cache gains scalability through the addition of new servers and high availability (H/A) through replication of the content across servers. If one cache server goes down, no data is lost because another copy on another server is immediately available to the application.

Although high availability remains a natural attribute of a distributed caching system, the real effectiveness of replication has to be measured against the real behavior of the application. Replication is great for applications that do a lot of reads. As you add more servers, you add more read capacity to your cache cluster and improve the responsiveness and availability of the application.

At the same time, a heavily replicated cache is not desirable for write-intensive applications. If the application updates the cache frequently, maintaining multiple synchronized copies of the data becomes ineffective.

The topology of the distributed cache plays an important role in determining its success. There are two main topologies: the replicated cache and the partitioned cache.

In a replicated cache topology, the various servers in the cluster hold a private copy of the data. In this way, the reliability is high and users never experience loss of data, even when a server goes down. This cache topology is excellent for read-intensive apps, but it turns into overhead for write-intensive applications.

In a partitioned cache topology, the entire content of the cache is partitioned among the various servers. This design represents a good compromise between availability and performance. This is the first option to consider in scenarios where read/write operations are balanced.

A popular variation of this topology privileges high availability and is often referred to as partitioned cache with H/A. In this case, each partition is also replicated and servers contain their regular data partition plus a copy of another partition.
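As a rough illustration of how a partitioned cache with H/A might decide where a key lives, the sketch below hashes each key to a primary server and places the copy on the next server in the list. PartitionMap is a hypothetical class, not the placement logic of any specific product. FNV-1a is used because String.GetHashCode is not guaranteed to be stable across processes, and all clients must agree on placement.

```csharp
using System;

// Hypothetical placement helper for a partitioned cache with H/A
public class PartitionMap
{
    private readonly String[] _servers;

    public PartitionMap(params String[] servers)
    {
        if (servers == null || servers.Length == 0)
            throw new ArgumentException("At least one server is required.");
        _servers = servers;
    }

    // FNV-1a over the UTF-16 chars of the key: same value in every process
    private static UInt32 StableHash(String key)
    {
        unchecked
        {
            UInt32 hash = 2166136261;
            foreach (var c in key)
            {
                hash ^= c;
                hash *= 16777619;
            }
            return hash;
        }
    }

    public Int32 PrimaryIndex(String key)
    {
        return (Int32) (StableHash(key) % (UInt32) _servers.Length);
    }

    // The server holding the regular partition for the key
    public String PrimaryFor(String key)
    {
        return _servers[PrimaryIndex(key)];
    }

    // With H/A, each partition is also copied to the following server
    public String ReplicaFor(String key)
    {
        return _servers[(PrimaryIndex(key) + 1) % _servers.Length];
    }
}
```

Simple modulo placement moves most keys whenever a server is added or removed; production caches typically rely on consistent hashing or on a partition table maintained by the cluster instead.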

A distributed caching system is not necessarily limited to just one tier. It can be complemented with a client cache that lives close to the user and keeps in-process a copy of frequently used data from the cache. When used, such a client cache is usually read-only and not kept in sync with the rest of the caching system.

By design, the cache is a place for temporary information that needs to be replaced periodically with up-to-date data. Of high importance in the feature list of a distributed cache is the ability to specify how long data should stay in the cache. Common expiration policies are based on an absolute time (for example, “Remove items at noon or in one hour”) or a sliding usage time (for example, “Remove items if not used for a given period”).
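The two expiration policies boil down to a check against the current time. The sketch below assumes a hypothetical ExpiringEntry class with an injected clock so that the behavior is easy to verify: reading an entry renews its sliding window, whereas the absolute deadline never moves.

```csharp
using System;

// Hypothetical cache entry supporting absolute and sliding expiration
public class ExpiringEntry
{
    private readonly Func<DateTime> _clock;
    private readonly DateTime? _absoluteExpiration;
    private readonly TimeSpan? _slidingExpiration;
    private DateTime _lastAccess;

    public Object Value { get; private set; }

    public ExpiringEntry(Object value, Func<DateTime> clock,
        DateTime? absoluteExpiration = null, TimeSpan? slidingExpiration = null)
    {
        Value = value;
        _clock = clock;
        _absoluteExpiration = absoluteExpiration;
        _slidingExpiration = slidingExpiration;
        _lastAccess = clock();
    }

    public Boolean IsExpired()
    {
        var now = _clock();
        // Absolute: "remove items at noon"
        if (_absoluteExpiration.HasValue && now >= _absoluteExpiration.Value)
            return true;
        // Sliding: "remove items if not used for a given period"
        if (_slidingExpiration.HasValue && now - _lastAccess >= _slidingExpiration.Value)
            return true;
        return false;
    }

    // A successful read renews the sliding window
    public Boolean TryGet(out Object value)
    {
        if (IsExpired()) { value = null; return false; }
        _lastAccess = _clock();
        value = Value;
        return true;
    }
}
```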

Most distributed caches are in-memory and do not persist their content to disk. So in most situations, memory is limited and the cache size cannot grow beyond a certain fixed limit. When the cache reaches this size, it should start removing cached items to make room for new ones, a process usually referred to as performing evictions. Least recently used (LRU) and least frequently used (LFU) are the two most popular algorithms for data eviction.
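A least recently used policy is commonly implemented with a dictionary for lookups plus a linked list that keeps items in order of use. The generic LruCache class below is a minimal, single-threaded sketch of that technique, not the eviction code of any particular product; it limits the number of items, whereas real caches usually measure memory.

```csharp
using System;
using System.Collections.Generic;

// Minimal LRU cache: O(1) lookup plus a list ordered from most to
// least recently used. Not thread-safe.
public class LruCache<TKey, TValue>
{
    private readonly Int32 _capacity;
    private readonly Dictionary<TKey, LinkedListNode<KeyValuePair<TKey, TValue>>> _map;
    private readonly LinkedList<KeyValuePair<TKey, TValue>> _order =
        new LinkedList<KeyValuePair<TKey, TValue>>();

    public LruCache(Int32 capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException("capacity");
        _capacity = capacity;
        _map = new Dictionary<TKey, LinkedListNode<KeyValuePair<TKey, TValue>>>(capacity);
    }

    public Int32 Count { get { return _map.Count; } }

    public Boolean TryGet(TKey key, out TValue value)
    {
        LinkedListNode<KeyValuePair<TKey, TValue>> node;
        if (!_map.TryGetValue(key, out node))
        {
            value = default(TValue);
            return false;
        }
        // A hit makes the item the most recently used
        _order.Remove(node);
        _order.AddFirst(node);
        value = node.Value.Value;
        return true;
    }

    public void Put(TKey key, TValue value)
    {
        LinkedListNode<KeyValuePair<TKey, TValue>> node;
        if (_map.TryGetValue(key, out node))
        {
            // Replacing an existing item: drop the old node
            _order.Remove(node);
            _map.Remove(key);
        }
        else if (_map.Count >= _capacity)
        {
            // Evict the least recently used item (the tail of the list)
            var lru = _order.Last;
            _order.RemoveLast();
            _map.Remove(lru.Value.Key);
        }
        _map[key] = _order.AddFirst(new KeyValuePair<TKey, TValue>(key, value));
    }
}
```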

Cache dependencies, both on other cached items and external resources, are also desirable features to have in a distributed cache. Especially when you have domain data in the cache, you might want to search for data using a more flexible approach than using a simple key. Ideally, a LINQ-based query language for cached items is a big plus.

Finally, read-through and write-through (or write-behind) capabilities qualify a caching solution as a top-quality solution. Read-through capabilities refer to the cache’s ability to automatically read data from a configured data store in case of a cache miss.

Write-through capabilities, instead, enable the cache to automatically write data to the configured data store whenever you update the cache. In other words, the cache, not your application, holds the key to the data access layer. The difference between write-through and write-behind capabilities is that in the former case the application waits until both the cache and data store are updated. In write-behind (or write-back) mode, the application updates the cache synchronously but then the cache ripples changes to the database in an asynchronous manner.
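The three behaviors differ only in who talks to the data store and when. In the sketch below, ThroughCache is a hypothetical class whose read and write delegates stand in for the data access layer. FlushPending drains the write-behind queue synchronously, whereas a real product would do that on a background worker.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical cache front-end that owns access to the data store
public class ThroughCache
{
    private readonly ConcurrentDictionary<String, Object> _cache =
        new ConcurrentDictionary<String, Object>();
    private readonly ConcurrentQueue<KeyValuePair<String, Object>> _pending =
        new ConcurrentQueue<KeyValuePair<String, Object>>();
    private readonly Func<String, Object> _read;     // loads from the data store
    private readonly Action<String, Object> _write;  // saves to the data store

    public ThroughCache(Func<String, Object> read, Action<String, Object> write)
    {
        _read = read;
        _write = write;
    }

    // Read-through: on a miss, the cache itself loads the data.
    // (Under contention, GetOrAdd may invoke the loader more than once.)
    public Object Get(String key)
    {
        return _cache.GetOrAdd(key, k => _read(k));
    }

    // Write-through: the caller returns only after store and cache are updated
    public void WriteThrough(String key, Object value)
    {
        _write(key, value);
        _cache[key] = value;
    }

    // Write-behind: the cache is updated now; the store write is queued
    public void WriteBehind(String key, Object value)
    {
        _cache[key] = value;
        _pending.Enqueue(new KeyValuePair<String, Object>(key, value));
    }

    // Propagates queued writes to the store; returns how many were written
    public Int32 FlushPending()
    {
        var count = 0;
        KeyValuePair<String, Object> item;
        while (_pending.TryDequeue(out item))
        {
            _write(item.Key, item.Value);
            count++;
        }
        return count;
    }
}
```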

This is a list of the features one would reasonably expect from a distributed cache. Not all products available today, however, offer these features to the same extent. Let’s see what the Microsoft distributed caching service currently offers.

Windows Server AppFabric consists of a few extensions to Microsoft Windows Server to improve the application infrastructure and make it possible to use it to run applications that are easier to scale and manage.

Currently, Windows Server AppFabric has two extensions: AppFabric Caching Services and AppFabric Hosting Services. The former is Microsoft’s long-awaited distributed cache solution; the latter is the centralized host environment for services, and specifically services created using Windows Workflow Foundation. Let’s focus on caching services.

AppFabric Caching Services (ACS) is an out-of-process cache that combines a simple programming interface with a clustered architecture. ACS should not be viewed as a mere (distributed) replacement for the native ASP.NET Cache object. When you have a very slow data access layer that assembles data from various sources (for example, relational databases, SAP, mainframes, and documents) and when you have an application deployed on a Web farm, you definitely need a caching layer to mediate access to data and distribute the load of data retrieval on an array of servers.

The plain old Cache object of ASP.NET is simply inadequate and, in similar scenarios, you are encouraged to use ACS in lieu of Cache. Keep in mind, however, that although endowed with a similar programming interface, ACS currently lacks some of the more advanced capabilities of the ASP.NET Cache, such as dependencies, sliding and absolute expiration, and removal callbacks. At the same time, it remains as simple to use as a hashtable, offers a scalable and reliable infrastructure, is configurable via Windows PowerShell, and presents some developer-oriented features that are not in ASP.NET, such as programmatic access to cache-related properties of cached items (such as priority or expiration) and event propagation (such as notifying client apps of changed items).

ACS is articulated in two levels: the client cache and distributed cache. The client cache is a component to install on the Web server machine and represents the gateway used by ASP.NET applications to read and write through ACS. The distributed cache includes some cache server machines that are each running an instance of the AppFabric Caching Services and storing data according to the configured topology. In addition, the client cache can optionally implement a local, server-specific cache that makes access to selected data even faster. The data found in this local cache is not kept in sync with the data in the cluster. Figure 18-4 provides an overall vision of AppFabric Caching Services.

The configuration script for the servers in the cache cluster contains the name of the cluster and general settings such as topology and data eviction policies. In addition, the configuration contains the list of servers with their names and ports. Each service is configured to use two main ports: one to communicate with clients (cachePort) and one to communicate its availability to neighboring hosts (clusterPort). Configuration information for the cluster is saved in one location, which can be an XML file on a shared network folder, a SQL Server database, or a custom store. Here’s an example excerpted from an XML file (clusterconfig.xml):

```xml
<configuration>
  <configSections>
    <section name="dataCache"
             type="Microsoft.ApplicationServer.Caching.DataCacheSection,
                   Microsoft.ApplicationServer.Caching.Core, ..." />
  </configSections>
  <dataCache size="Small">
    <hosts>
      <host replicationPort="22236"
            arbitrationPort="22235"
            clusterPort="22234"
            hostId="879796007"
            size="1007"
            leadHost="true"
            account="My-Laptop\DinoE"
            cacheHostName="AppFabricCachingService"
            name="My-Laptop"
            cachePort="22233" />
    </hosts>
  </dataCache>
</configuration>
```

Each server can have one or multiple caches of data. A data cache is simply a logical way of grouping data. Each enabled server has one default data cache (which is unnamed), but you are allowed to create your own. Data caches can be individually configured.

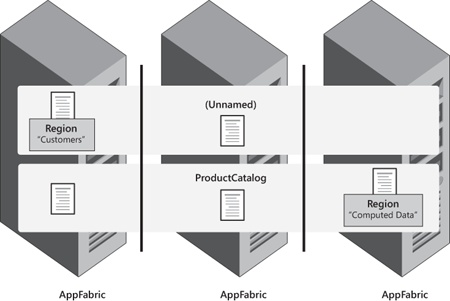

Each data cache is optionally made of regions. A region is a logical way of grouping data within a given data cache. Each region is defined programmatically on a given server and, unlike data caches, regions do not span multiple servers. Finally, you have cached objects. A cached object is simply the data you store either directly in the data cache or in a region. Figure 18-5 provides an illustration.

In Figure 18-5, you see that named and unnamed caches can span multiple servers, whereas optional regions are specific to just one server and are created programmatically. As a developer, you are not interested in the location of a region; you only need to know whether it exists and what data it contains. In this way, you can provide more information to ACS and make it return data more quickly.

Note

To be precise, in ACS an unnamed cache is not really unnamed. It is just named in a default way and must still be explicitly created via the administration console of Windows PowerShell. The “unnamed” cache gets the name of “default.” The only difference between this and a regular named cache is that you can gain access to it using an additional shortcut method on the cache factory object—GetDefaultCache.

To use ACS in your ASP.NET pages, you need to configure the Web server environment first. This requires adding a section to the application’s web.config file. Here’s a snippet:

```xml
<dataCacheClient>
  <localCache isEnabled="false" />
  <hosts>
    <host name="Server01" cachePort="22233" />
    ...
  </hosts>
</dataCacheClient>
```

Obviously, you must first make a bunch of AppFabric assemblies available on the Web server so that you can safely declare the new dataCacheClient section in the configuration. For some reason, the ACS assemblies don’t show up in the Microsoft Visual Studio list of available assemblies, and you have to find them yourself in the %System%\AppFabric directory. You need to pick up two assemblies: Microsoft.ApplicationServer.Caching.Core and Microsoft.ApplicationServer.Caching.Client. With those assemblies referenced, you declare the section in the configSections block of web.config:

```xml
<configuration>
  <configSections>
    <section name="dataCacheClient"
             type="Microsoft.ApplicationServer.Caching.DataCacheClientSection,
                   Microsoft.ApplicationServer.Caching.Core"
             allowLocation="true"
             allowDefinition="Everywhere" />
  </configSections>
  ...
</configuration>
```

The dataCacheClient section specifies the list of hosts that provide cache data and any optional settings regarding the local cache.

An ACS client can connect to all listed hosts. The ACS infrastructure tracks the placement of cached objects across all hosts and routes your client straight to the right host when a request for a particular cached object is made.

Important

Note that some old documentation and literature still refer to this feature as one of two possible deployment strategies: routing and simple. In the final version of ACS, the simple deployment mode is no longer supported. The drawback is that the same stale documentation and literature suggest you add a deployment attribute to the dataCacheClient section, which will cause a runtime configuration exception as the code attempts to gain access to the cache factory.

If you enable the local cache, any retrieved objects are saved in a local cache within the ACS client, thus forming an additional layer of virtual memory. The local cache takes precedence over data in the cluster. Note that ACS performs no automatic checks on data in the cluster to detect recent changes to the objects held in the local cache. In other words, by using the local cache you trade data accuracy for speed of data retrieval.
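To make the speed-versus-accuracy trade-off concrete, here is a minimal C# sketch built on a toy in-memory model. ClusterStore and LocalCachingClient are hypothetical classes written for illustration, not ACS types. Once a client holds a local copy, it keeps returning that copy even after another client has updated the cluster.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the cache cluster: one shared dictionary.
public sealed class ClusterStore
{
    private readonly Dictionary<string, object> _data = new Dictionary<string, object>();

    public object Get(string key) => _data.TryGetValue(key, out var value) ? value : null;

    public void Put(string key, object value) => _data[key] = value;
}

// Hypothetical client that can keep its own local copies of retrieved objects.
public sealed class LocalCachingClient
{
    private readonly ClusterStore _cluster;
    private readonly bool _localCacheEnabled;
    private readonly Dictionary<string, object> _local = new Dictionary<string, object>();

    public LocalCachingClient(ClusterStore cluster, bool localCacheEnabled)
    {
        _cluster = cluster;
        _localCacheEnabled = localCacheEnabled;
    }

    public object Get(string key)
    {
        // A local hit wins and the cluster is never consulted, so the
        // returned object may be older than what the cluster now holds.
        if (_localCacheEnabled && _local.TryGetValue(key, out var local))
            return local;

        var value = _cluster.Get(key);
        if (_localCacheEnabled && value != null)
            _local[key] = value;   // remember it for the next read
        return value;
    }

    // Writes go straight to the cluster; local copies held by other clients are not updated.
    public void Put(string key, object value) => _cluster.Put(key, value);
}
```

Production local caches typically bound staleness with an expiration timeout; the sketch omits that to keep the trade-off visible.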

Let’s look at some sample code. The entry point in ACS is the cache factory object. The factory, in turn, gives you access to any data cache available in the system. The simplest way to get a factory is shown here:

var factory = new DataCacheFactory();

The factory needs to consume some information to be successfully instantiated. You can provide configuration settings as a constructor parameter or let the class figure it out itself from the configuration file. Here’s the fluent code you can use to initialize the factory without using the web.config file:

using System.Collections.Generic;

using Microsoft.ApplicationServer.Caching;

var servers = new List<DataCacheServerEndpoint>(1)

{

new DataCacheServerEndpoint("Server01", 22233)

};

var configuration = new DataCacheFactoryConfiguration

{

Servers = servers,

LocalCacheProperties = new DataCacheLocalCacheProperties()

};

var factory = new DataCacheFactory(configuration);

The next steps are straightforward. First, you get a data cache, and then you start reading and writing data to it. A data cache must be created administratively using the Windows PowerShell console. Here’s the command you need to create a named cache. (As mentioned, you use the name default to create the default unnamed cache.)

New-Cache -cachename yourCache

In this way, you get a named cache with the same default settings as the default unnamed cache. Of course, you can express additional parameters on the command line. For example, the following line creates a cache with no eviction policy enabled:

New-Cache -cachename yourCache -eviction:none

To get command line information, you can type the following:

New-Cache -?

Once the cache exists, you retrieve it from the factory. If the requested cache is not available, you just get an exception:

var factory = new DataCacheFactory();

var dinoCache = factory.GetCache("Dino");

var defaultCache = factory.GetDefaultCache();

From this point on, you can use the objects returned by GetCache and GetDefaultCache in much the same way you would use the native Cache object of ASP.NET. The only difference is that you now access information stored across an expandable cluster of servers:

dinoCache[key] = value;

Of course, you might want to create the factory and get cache objects only once in the application, preferably at startup. Unlike the ASP.NET Cache, ACS doesn’t offer dependencies, but it does offer regions, search capabilities, and a rich event model (through which you can simulate some of the ASP.NET cache dependencies).
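One common way to create an expensive object only once is a lazily initialized static field. The sketch below uses a hypothetical ExpensiveFactory class as a stand-in for DataCacheFactory so that it runs without the AppFabric assemblies. The Lazy class itself is the standard .NET type.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for an expensive object such as a cache factory.
public sealed class ExpensiveFactory
{
    private static int _instancesCreated;

    public static int InstancesCreated => Volatile.Read(ref _instancesCreated);

    public ExpensiveFactory() => Interlocked.Increment(ref _instancesCreated);

    public string GetCache(string name) => "cache:" + name;   // placeholder behavior
}

public static class CacheBootstrap
{
    // Lazy<T> uses LazyThreadSafetyMode.ExecutionAndPublication by default,
    // so even concurrent first requests end up sharing a single instance.
    private static readonly Lazy<ExpensiveFactory> _factory =
        new Lazy<ExpensiveFactory>(() => new ExpensiveFactory());

    public static ExpensiveFactory Factory => _factory.Value;
}
```

In an ASP.NET application, you could equally create the factory in Application_Start in global.asax and keep it in a static field. The lazy approach simply defers creation to the first request that needs it.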

As a matter of fact, most distributed applications today need distributed caching and just can’t rely on the native ASP.NET Cache object, which is local to each Web server. AppFabric Caching Services is a solution that addresses many scenarios, but overall it is not yet feature-complete as a realistic distributed cache. What other solutions are available?

Memcached (http://memcached.org) is a popular, open-source distributed cache widely used by some popular social networks. Facebook is the most illustrious example. Technically speaking, Memcached can be hosted on a number of platforms, including Windows. Memcached, however, was originally created for Unix-based machines. For this reason, it’s often associated with PHP and, in general, with the LAMP stack (where LAMP stands for Linux, Apache, MySQL, and PHP/Python/Perl).

Memcached runs as a background service on a variety of servers and communicates with the outside world through a configured port (usually port 11211). Client applications employ ad hoc libraries to contact a Memcached-equipped server and read or write data. A client can connect to any available server; servers, on the other hand, are isolated from one another. The server stores data in memory and applies an eviction policy when it runs short of RAM.
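Because Memcached servers don’t talk to each other, it is the client that decides which server holds a given key. Here is a minimal sketch of that idea, assuming simple modulo hashing over a fixed server list. Many real Memcached clients use consistent hashing instead, so that adding a server remaps fewer keys. MemcachedServerPicker and the host names in the test are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class MemcachedServerPicker
{
    // 32-bit FNV-1a hash. Unlike string.GetHashCode on .NET Core, it returns
    // the same value in every process, which all clients must agree on.
    public static uint Fnv1a(string key)
    {
        uint hash = 2166136261u;
        foreach (byte b in Encoding.UTF8.GetBytes(key))
        {
            hash ^= b;
            hash = unchecked(hash * 16777619u);
        }
        return hash;
    }

    // Maps a key to one of the configured servers. Every client that uses the
    // same server list picks the same server, so the servers never need to talk.
    public static string PickServer(string key, IReadOnlyList<string> servers)
    {
        if (servers == null || servers.Count == 0)
            throw new ArgumentException("At least one server is required.", nameof(servers));
        return servers[(int)(Fnv1a(key) % (uint)servers.Count)];
    }
}
```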

A .NET library that can be used to talk to a Memcached installation (regardless of the host environment) can be found at http://sourceforge.net/projects/memcacheddotnet.

Note

Is it Memcache or Memcached? Both names seem to be frequently used to mean the same product. The product name is Memcache, but because on Unix it runs as a daemon, the program file has been named with a trailing “d” just to stay consistent with Unix naming conventions for daemons. It soon became common to use Memcached to refer to either the product or the executable. A subtler question is, how should you pronounce that? Should it be like the past form of “to cache” or like it would be in a Unix environment—that is, memcache-dee? In the end, it’s up to you!

Written in C# and entirely based on .NET managed code, SharedCache (sharedcache.com) is another open-source distributed and replicated cache for use in server farms. SharedCache supports three deployment scenarios: a partitioned cache, a replicated cache, and a single instance.

With a partitioned cache, the entire data set is split across the active servers that form the cluster of cache server machines. With a replicated cache, each cache server contains the entire data set. In this case, the runtime infrastructure is responsible for keeping data on the servers in sync. Finally, the single instance mode entails having a single cache server hosted out of process with respect to the client Web application. Moving from one configuration to another doesn’t require code refactoring; it’s simply a matter of configuration.
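The difference between the partitioned and replicated layouts comes down to where a write lands. The sketch below is a hypothetical in-memory model written for illustration, not the SharedCache API, and its key-to-node mapping is deliberately simplistic.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of the two multi-server topologies; not SharedCache's API.
public sealed class CacheTopology
{
    private readonly List<Dictionary<string, string>> _nodes;
    private readonly bool _replicated;

    public CacheTopology(int nodeCount, bool replicated)
    {
        _nodes = Enumerable.Range(0, nodeCount)
                           .Select(_ => new Dictionary<string, string>())
                           .ToList();
        _replicated = replicated;
    }

    // Illustrative, deterministic key-to-node mapping (sum of character codes).
    private int OwnerOf(string key) => key.Sum(c => (int)c) % _nodes.Count;

    public void Put(string key, string value)
    {
        if (_replicated)
        {
            // Replicated: every node receives a copy, so each holds the full data set.
            foreach (var node in _nodes) node[key] = value;
        }
        else
        {
            // Partitioned: exactly one node owns the key.
            _nodes[OwnerOf(key)][key] = value;
        }
    }

    public string Get(string key)
    {
        // With replication any node can answer; node 0 is used here for simplicity.
        var node = _replicated ? _nodes[0] : _nodes[OwnerOf(key)];
        return node.TryGetValue(key, out var value) ? value : null;
    }

    public int CopiesOf(string key) => _nodes.Count(n => n.ContainsKey(key));
}
```

Partitioning makes the total capacity grow with the number of servers, while replication spends memory on copies so that any server can answer any read.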

With good products available for free or under open-source licenses, why should you ever consider commercial solutions, then? The answer is simple: commercial solutions offer more advanced features, capabilities and, especially, support. At their core, both commercial and free solutions provide a fast and scalable caching layer; the difference is in the extras that are provided. If you’re OK with the core features you get from ACS or the community edition of Memcached, by all means stick to that. Otherwise, be ready to spend some good money for the extras. Table 18-7 lists a few products currently available.

Table 18-7. A Quick List of Commercial Distributed Cache Products

| Product | Description |
|---|---|
| Memcached (Membase distribution) | It’s the leading commercial distribution of Memcached as worked out by some of the key contributors to the original open-source project. Enhancements include security, dynamic scaling, packaged setup, and Web-based management capabilities. From the same group, you can also get Membase Server, which is a NoSQL database backed by Memcached. For more information, visit http://www.membase.com/products-and-services/memcached. |
| ScaleOut StateServer | Part of a suite of products aimed at making enterprise applications more scalable, StateServer runs as a service on every machine in your server farm and stores data objects in memory while making them globally accessible across the farm within its distributed data grid. Its top selling points are the patented technology for high availability (H/A), its comprehensive API, and the outstanding StateServer console for administrative and management tasks. For more information, visit http://www.scaleoutsoftware.com/products/scaleout-stateserver. |
| NCache | It presents itself as the distributed version of the ASP.NET native Cache object with a ton of extra features, including a LINQ-based query language, rich eviction functionality, event notification, and dynamic clustering. As a plus, it also has an Express version that is free for up to two cache servers running .NET 3.5. The NCache engine is also exposed as a second-level caching layer for both NHibernate and Entity Framework 4 and as an extension to the Enterprise Library Caching Block and the Cache provider mechanism of ASP.NET 4. For more information, visit http://www.alachisoft.com/ncache. |

The concept of a Web cache is probably as old as the Web itself. Don’t worry if you’re not familiar with this term; you certainly know very well at least one kind of Web cache—the browser’s local cache.

Web sites and applications rely on the services provided by the Web server, which essentially receives requests and sends out responses. Generally speaking, a Web cache is something that sits in between a Web server and a client browser and gently takes the liberty of serving some requests without disturbing the Web server at the back end. Fundamental reasons for installing a Web cache are to reduce network traffic and to reduce latency.

The most popular type of Web cache is the browser cache. Another example of a Web cache is a proxy server that, deployed on the network, has requests and responses routed to it to cache on a larger scale than the local browser. A proxy cache is recommended for large organizations where many users might be requesting the same pages from a bunch of sites. The beneficial effects of a proxy cache expand, then, to the entire organization.

A Web cache stores representations of requested resources (such as script files, images, and style sheets), and it applies a few simple rules to determine whether it can serve the request right away or whether the resource has to be requested from the origin server. In general, all requests are subject to Web caching. At the same time, each response that serves a given resource can contain instructions for the Web cache regarding if and how to cache the resource.

Let’s see how ASP.NET pages interact with the browser cache.

All Web browsers look into their cache before making a request for a given URL. ASP.NET requests are no exception. This means that if the content of the requested ASP.NET page is available on the client (and valid), often no request is made to the server. However, every time you make a change to the source ASPX file on the server, the next request for that page will get the update. If you make a change to a JavaScript file, instead, you likely will have to wait a few hours or just manually clean the local browser cache to get the update. Let’s try to understand how things work under the hood.

First and foremost, browsers won’t save any responses that explicitly prohibit the use of the cache. Furthermore, browsers won’t save any responses that come from a secure channel (HTTPS) or that require authentication.

If the requested URL doesn’t have a match in the local cache, the browser just sends the request on to the server. Otherwise, if a match is found, the browser checks whether the cached representation of the requested resource is still valid. A valid representation is a representation that has not expired. Valid representations (also referred to as fresh representations) are served directly from the cache without any contact with the server.

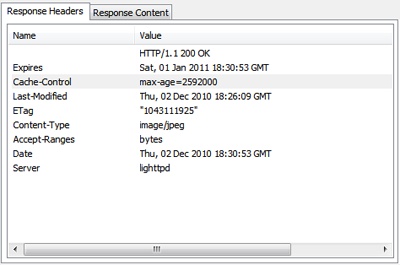

The browser uses HTTP headers to determine whether the representation is fresh or stale. The Expires HTTP header indicates the absolute expiration date of the resource. The max-age directive of the Cache-Control header indicates for how long the representation is fresh. If the resource is stale, the browser asks the origin server to validate the representation. If the resource has changed, the server returns the newer representation; otherwise, it replies with a 304 (Not Modified) status and the saved representation is served.
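The freshness rule fits in a few lines. This is a simplified C# sketch that takes the response’s Date header as the reference point and ignores the Age header and heuristic freshness. When both are present, HTTP/1.1 gives max-age precedence over Expires. HttpFreshness is a hypothetical name.

```csharp
using System;

public static class HttpFreshness
{
    // Simplified freshness test: max-age wins over Expires; with neither,
    // the representation is treated as stale (no heuristic freshness).
    public static bool IsFresh(DateTimeOffset responseDate, TimeSpan? maxAge,
                               DateTimeOffset? expires, DateTimeOffset now)
    {
        TimeSpan lifetime;
        if (maxAge.HasValue)
            lifetime = maxAge.Value;                  // Cache-Control: max-age=N
        else if (expires.HasValue)
            lifetime = expires.Value - responseDate;  // Expires relative to Date
        else
            lifetime = TimeSpan.Zero;                 // no expiration information

        // Age since the response was generated; the Age header is ignored here.
        TimeSpan age = now - responseDate;
        return age < lifetime;
    }
}
```

With neither max-age nor Expires set, the function reports the representation as stale at once, which is the situation of a default ASP.NET page discussed below.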

These simple rules express the behavior of Web caches—both browser cache and proxy cache. If ASP.NET pages and JavaScript files behave differently, the difference is all in the HTTP headers that accompany them.

An ASP.NET page is a dynamic resource, meaning that its content might be different even when the requesting URL is the same. This structural attribute makes an ASP.NET page a noncacheable resource. An image that represents a company’s logo or a script file, on the other hand, is a much more static type of resource and is inherently more cacheable.

By default, an ASP.NET page is cacheable by browsers but not by proxy servers. However, an ASP.NET page has no expiration set and consequently is always stale. For this reason, any request you make for an ASPX resource will always result in an immediate refetch from the server, as if the page had never been cached. Figure 18-6 shows this.

The screen shot shows that default.aspx is requested as usual, whereas cascading style sheets (CSS), images, and scripts are checked and served from the local cache because they are not modified.
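Resources that are “checked” like this are most likely validated with a conditional request: the browser sends the date of its copy in an If-Modified-Since header, and the server answers 304 Not Modified if nothing has changed. Here is a simplified server-side sketch that assumes date-based validation only (ETag and If-None-Match are ignored). ConditionalGet is a hypothetical helper.

```csharp
using System;

public static class ConditionalGet
{
    // Returns the HTTP status a server would send for a GET that may carry
    // If-Modified-Since (simplified: ETag/If-None-Match are not considered).
    public static int StatusFor(DateTimeOffset lastModified, DateTimeOffset? ifModifiedSince)
    {
        // HTTP dates have one-second resolution, so compare whole seconds.
        if (ifModifiedSince.HasValue &&
            Truncate(lastModified) <= Truncate(ifModifiedSince.Value))
            return 304;   // Not Modified: the browser reuses its cached copy
        return 200;       // OK: the full, current representation is sent
    }

    private static DateTimeOffset Truncate(DateTimeOffset t) =>
        new DateTimeOffset(t.Ticks - t.Ticks % TimeSpan.TicksPerSecond, t.Offset);
}
```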

The default behavior of the ASP.NET page results from response headers like the following and, especially, from the Cache-Control header:

Cache-Control: private

Content-Type: text/html; charset=utf-8

Server: Microsoft-IIS/7.5

X-AspNet-Version: 4.0.30319

X-Powered-By: ASP.NET

Date: Thu, 02 Dec 2010 17:56:06 GMT

Content-Length: 12315
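The private directive in the Cache-Control header above is what lets browsers, but not proxies, store the page. Here is a hypothetical helper that captures just that rule, plus no-store. It assumes comma-separated directives without arguments, and real HTTP caches apply more conditions than these.

```csharp
using System.Linq;

public static class CacheStorability
{
    // Decides whether a cache may store a response based only on its
    // Cache-Control directives (simplified; other HTTP storage rules are ignored).
    public static bool MayStore(string cacheControl, bool isSharedCache)
    {
        var directives = (cacheControl ?? string.Empty)
            .Split(',')
            .Select(d => d.Trim().ToLowerInvariant())
            .ToList();

        if (directives.Contains("no-store"))
            return false;   // no cache at all may keep it
        if (isSharedCache && directives.Contains("private"))
            return false;   // proxies may not keep it; the browser may
        return true;
    }
}
```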

You can change at will the caching settings for any ASPX page. One of the tools you can use for doing so is the @OutputCache directive that I’ll cover in just a moment.

Typically, static resources are served with a relatively long lifetime, with the goal of staying in the cache as long as possible. Obviously, developers as well as Webmasters are ultimately responsible for deciding the maximum age allowed for a given set of resources. For static resources, you can set HTTP headers from the Web server (for example, IIS) management console. (See Figure 18-7.)
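Whatever tool sets them, the headers for a long-lived static resource end up looking like the ones this sketch builds. StaticResourceHeaders is a hypothetical helper, and the one-week lifetime in the check is only an example value. Cache-Control max-age is what HTTP/1.1 caches honor, and Expires is included for older HTTP/1.0 caches.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class StaticResourceHeaders
{
    // Builds the caching headers a server might attach to a static file that
    // should stay fresh for 'lifetime', starting from the moment 'now'.
    public static IDictionary<string, string> For(TimeSpan lifetime, DateTimeOffset now)
    {
        long seconds = (long)lifetime.TotalSeconds;
        return new Dictionary<string, string>
        {
            // "public" also allows shared (proxy) caches to store the file.
            ["Cache-Control"] = "public, max-age=" + seconds.ToString(CultureInfo.InvariantCulture),
            // RFC 1123 date in GMT, the format HTTP expects for Expires.
            ["Expires"] = now.Add(lifetime).ToUniversalTime().ToString("r", CultureInfo.InvariantCulture)
        };
    }
}
```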