19 Stage 1: Problem

Life is just too complicated to be smart all the time.

—Scott Adams

In this chapter, I describe the practices that characterize the problem stage of risk management evolution. People are too busy solving problems to think about the future. They do not address risks and, in fact, act as if what they do not know will not hurt them. Management will not support communication regarding risks. Reality is viewed as pessimism, where it is unacceptable to estimate past the dictated time to market. The team works very hard, yet product quality is low. Quality assurance is performed by the product buyers, which tarnishes the company’s reputation. Management has unrealistic expectations of what is achievable, which causes the team to feel stress. When significant problems of cost and schedule can no longer be ignored, there is a reorganization. This is what we observe in the problem stage project. (The project name is withheld, but the people and their experiences are genuine.)

This chapter answers the following questions:

What are the practices that characterize the problem stage?

What is the primary project activity at the problem stage?

What can we observe at the problem stage?

19.1 Problem Project Overview

The project is developing a state-of-the-art call-processing system for wireless communications. The project infrastructure is a commercial corporation. The project has a 20-month iterative development schedule. The team consists of over 40 engineers, approximately half of them subcontractors. The estimated software size is 300K lines of code plus adapted COTS (commercial off-the-shelf software). Software languages used are C, C++, Assembly, and a fourth-generation language.

19.2 The Process Improvement Initiative

The corporation is laying the foundation for the implementation of a quality management system (QMS), based on the requirements of the ANSI/ASQC Q9001 quality standard [ASQC94], the Six Sigma method of metrics and quality improvement [Motorola93],¹ and the SEI CMM for Software [Paulk93]. To help with the initiative, a process consultant was brought on board. She prepared a statement of work (SOW) that describes the effort required to achieve SEI CMM Level 2.² The goal of SEI Level 2, the repeatable level, is to institutionalize effective management processes. Effective processes are defined, documented, trained, practiced, enforced, measured, and able to improve. The scope of the SEI Level 2 key process areas (KPAs) has been expanded to include systems engineering and hardware.

¹ As a corporation, Motorola’s goal is to achieve Six Sigma Quality for all operations: 99.99966 percent of output must be defect free. In other words, for every million opportunities to create a defect, only 3.4 mistakes occur.

² This chapter describes the risks of process improvement, not the risks of product development.
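The two Six Sigma figures in the first footnote are the same number stated two ways, which a few lines of arithmetic can confirm. This is only an illustrative sketch; the function name `defects_per_million` is an assumption made here and is not a term from the Motorola method.

```python
def defects_per_million(defect_free_percent: float) -> float:
    """Convert a defect-free percentage into defects per million opportunities."""
    # Fraction of opportunities that end in a defect.
    defect_rate = 1.0 - defect_free_percent / 100.0
    return defect_rate * 1_000_000


# 99.99966 percent defect free, as quoted in the footnote.
six_sigma_dpmo = defects_per_million(99.99966)
print(round(six_sigma_dpmo, 1))  # prints 3.4
```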

19.2.1 Vision and Mission

Through discussions with the employees, the process consultant understood the quality vision and mission as follows:

The quality vision is to lead the wireless communications industry by becoming a world-class organization and achieving a reputation for innovative, high-quality products. The quality mission is to commit to customer satisfaction and continuous improvement through total quality management and adherence to international (i.e., ISO 9001) and industry (i.e., SEI CMM) benchmarks for quality.

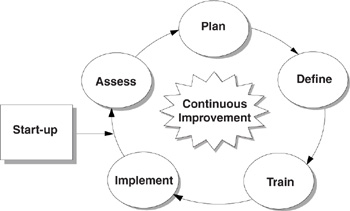

Figure 19.1 Proposed improvement strategy. Continuous improvement is an evolutionary approach based on incremental improvement. The proposed improvement strategy has six phases: start-up, assess, plan, define, train, and implement.

Continuous improvement is iterative in nature. The continuous improvement model shown in Figure 19.1 can be used to understand the major activities of the QMS. The corporation is currently in the start-up phase.

19.2.2 Roles and Responsibilities

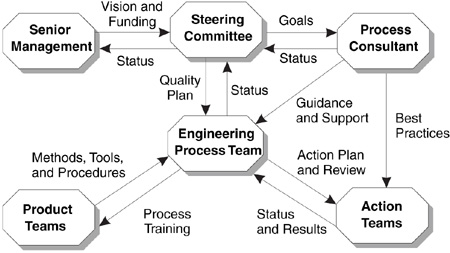

The corporation began the initiative by establishing a steering committee to guide the objectives associated with continuous improvement. As shown in Figure 19.2, the planned improvement organization has several components that work together to achieve the business objectives.

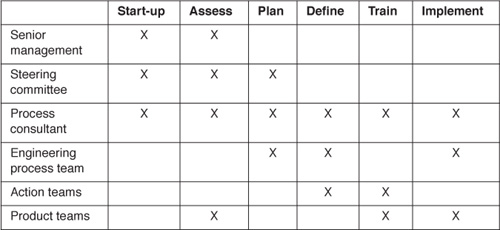

To define roles and responsibilities clearly, the process consultant mapped the phases of continuous improvement to the improvement organization shown in Table 19.1. Detailed descriptions of the roles and responsibilities follow to describe completely how the work is accomplished.

Senior management, consisting of the chief executive officer (CEO) and the vice-presidents, has primary responsibility for establishing the quality vision for the organization by understanding the existing practice and allocating resources for continuous improvement. Senior management reviews findings from audits and assessments, approves the QMS plan, provides motivation to the organization through execution of the reward and recognition program, and communicates commitment to quality through written and spoken words and through actions.

The steering committee members are the directors from each business area. This committee has primary responsibility for designing the foundation of the QMS, commissioning audits and assessments, and developing the QMS plan to achieve ISO 9001, SEI maturity levels, and Six Sigma goals. The steering committee provides incentives for sustained improvement by establishing an employee reward and recognition program and ensures communications within the organization by reviewing status and reporting results to senior management, creating posters, and promoting a quality culture.

Figure 19.2 Proposed improvement organization.

The process consultant is an experienced process champion from an ISO-certified and SEI Level 3 organization. This person is responsible for understanding the corporation’s goals and priorities and facilitating process development. This is accomplished by performing a process assessment, establishing the baseline, and assisting in process improvement plan development. Other activities include defining the top-level process from SEI CMM requirements, creating action team notebooks, training action teams, facilitating action team meetings, reviewing action team products, reporting status, and helping to train the process to the product teams.

Table 19.1 Organization Roles And Responsibilities, By Phase

Engineers from various levels in systems, software, and hardware are on the engineering process team. This team has responsibility for characterizing the existing process, establishing a metrics program, developing the process improvement plan, and maintaining the process asset library. Other activities performed include establishing the priority of action teams, reviewing action team process status and products, approving the defined process, and handling change requests.

The practitioners from the organization are the action team members. These teams are responsible for executing the action plan to analyze and define the CMM key process areas. Cross-functional volunteers meet periodically and coordinate with existing process definitions to complete their objectives. They maintain a team notebook, distribute meeting minutes, and report status and recommended solutions to the process team. Then they document and update products based on review comments, as well as define training modules and instruct training sessions.

The product team members are the practitioners with product responsibilities. These teams are responsible for implementing the defined process. They help to describe existing methods, tools, and procedures; measure the process; collect the metrics; and evaluate the process for improvement. Product teams also feed back comments and improvement recommendations to the process team.

19.2.3 Statement of Work

The process consultant’s SOW assumes that the corporation has taken a holistic view of process improvement and wants to expand the scope of the SEI model to include systems and hardware. Software is not developed in isolation, and the engineering process is assumed to be inclusive. Two process models for systems engineering were reviewed by the managers for their utility: the System Engineering Capability Maturity Model (SE-CMM) version 1.0 [Bate94] and the Systems Engineering Capability Assessment Model (SECAM) version 1.41 [CAWG96].

Resource constraints of time, budget, and staff were factored into the SOW. The assessment process was streamlined and focused on providing the inputs required for the process improvement plan. Staffing issues were addressed by scheduling a minimum number of action teams in parallel and allowing for incremental training.

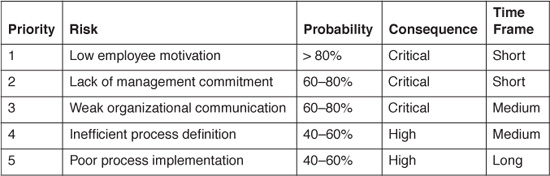

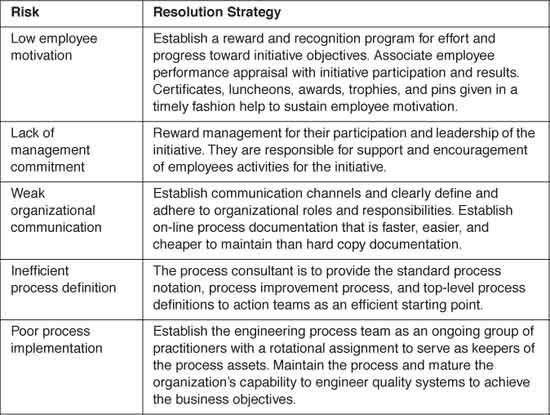

Risks to institutionalizing effective engineering management processes were identified by the process consultant. These risks, described in Table 19.2, are typical of improvement initiatives. They have been evaluated and prioritized by their risk severity. Risk severity accounts for the probability of an unsatisfactory outcome, the consequence if the unsatisfactory outcome occurs, and the time frame for action.
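One way to picture how the three factors combine into a priority order is sketched below. The text gives no formula, so the scoring is an assumption: exposure is taken as probability times consequence, and a nearer time frame for action breaks ties. The `Risk` class, the 1-to-5 consequence scale, and the sample entries are hypothetical and are not taken from Table 19.2.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # chance of an unsatisfactory outcome, 0.0 to 1.0
    consequence: int    # assumed scale: 1 (minor) to 5 (severe)
    months_to_act: int  # time frame for action; smaller means more urgent

    @property
    def exposure(self) -> float:
        # Assumed scoring: probability weighted by consequence.
        return self.probability * self.consequence


def prioritize(risks):
    """Order risks by highest exposure first, then by nearest time frame."""
    return sorted(risks, key=lambda r: (-r.exposure, r.months_to_act))


# Hypothetical entries for illustration only.
risks = [
    Risk("hypothetical risk A", 0.5, 4, 6),
    Risk("hypothetical risk B", 0.8, 5, 1),
    Risk("hypothetical risk C", 0.5, 4, 2),
]

for risk in prioritize(risks):
    print(f"{risk.name}: exposure={risk.exposure:.1f}, act within {risk.months_to_act} months")
```

With these made-up values, risk B ranks first on exposure, and risk C ranks ahead of risk A because the two have equal exposure but C must be acted on sooner.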

A risk resolution strategy has been incorporated into the SOW for each of the risks identified in Table 19.2. As shown in Table 19.3, these strategies are necessary but not sufficient for the success of the initiative. When executed, they will go a long way toward ensuring that the initiative achieves its goal of a repeatable process.

Table 19.2 Known Process Improvement Initiative Risks

Table 19.3 Risk Resolution Strategies

19.3 Process Assessment

A process assessment would be necessary to understand the current engineering practices and identify areas for improvement. Prior to the process assessment, the process consultant sent a letter to the CEO with the following counsel: “The road to Level 2 should not be underestimated. Industry data from September 1995 reported the percentage of assessed companies at SEI Level 1 was 70%. False starts due to lack of long-term commitment only make subsequent efforts more difficult to initiate.”

The CEO approved a process assessment of the project. Assessment objectives included the initiation of process improvement with the completion of the following activities:

Provide a framework for action plans.

Help obtain sponsorship and support for action.

Baseline the process to evaluate future progress.

19.3.1 Assessment Process

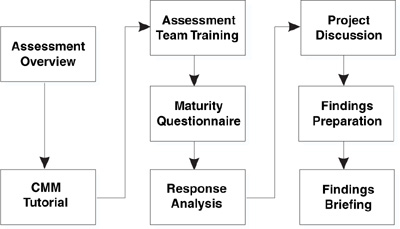

The assessment process was streamlined to reduce the cost, effort, and time to achieve the stated objectives. As shown in Figure 19.3, the process is a tailored version of the mini-assessment process used by a subset of Kodak product development organizations [Hill95].³ The streamlined assessment included a one-hour assessment overview presentation to the entire project team that described the assessment goals and process. The process improvement organization and the roles and responsibilities of both the assessment team and the project practitioners were also discussed. A one-hour CMM tutorial followed to explain the vision of process improvement with an emphasis on the key process areas of SEI Level 2. Six hours were spent in assessment team training identifying assessment risks and learning the details of the assessment process. The SEI maturity questionnaire was given to each assessment team member, and a week later the results were reviewed at the response analysis meeting. During this meeting, discussion scripts were prepared to validate the maturity questionnaire responses. By dividing assessment team members into three teams that worked in parallel, the project discussion was accomplished in one day. Findings preparation lasted eight hours to condense the assessment results down to 20 viewgraphs for the one-hour findings briefing.

³ Kodak developed the mini-assessment in 1993 to reduce the cost, effort, and time frame associated with traditional SEI Software Process Assessments (SPAs). After performing 12 mini-assessments, Kodak reported the assessment cost at 10 percent to 13 percent of the cost of a traditional SPA.

Figure 19.3 Streamlined assessment process.
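The steps of the streamlined assessment can be laid out as a simple list to see how much of the schedule the text actually quantifies. The sketch below records only the durations stated in hours above; steps whose length is not given in hours (including the project discussion, described only as "one day") are marked `None` rather than guessed. The names `ASSESSMENT_STEPS`, `stated_hours`, and `unstated_steps` are assumptions introduced for this illustration.

```python
# (step, hours) pairs; None marks a duration the text does not state in hours.
ASSESSMENT_STEPS = [
    ("Assessment overview presentation", 1),
    ("CMM tutorial", 1),
    ("Assessment team training", 6),
    ("Maturity questionnaire", None),
    ("Response analysis meeting", None),
    ("Project discussion", None),  # described only as "one day"
    ("Findings preparation", 8),
    ("Findings briefing", 1),
]


def stated_hours(steps):
    """Sum the durations that the text gives explicitly in hours."""
    return sum(hours for _, hours in steps if hours is not None)


def unstated_steps(steps):
    """List the steps whose duration in hours is not given."""
    return [name for name, hours in steps if hours is None]


print(stated_hours(ASSESSMENT_STEPS))    # prints 17
print(unstated_steps(ASSESSMENT_STEPS))
```

Keeping the unknown durations explicit avoids reading the 17-hour subtotal as the full cost of the assessment.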

19.3.2 Reasons for Improvement

As part of the assessment overview briefing, the process consultant devised an exercise to document the organization’s rationale for process improvement. The people attending the briefing were given ten minutes to complete the following directions:

1. Think about the benefit of process improvement.

2. Think about how the organization would benefit from improving its processes.

3. Write one reason that you think the organization should improve.

As part of its training, the assessment team was asked to sort the reasons, which had been written on index cards. Using an affinity diagram process to group similar reasons for improvement [Brassard94], the following six categories emerged:

Gain the competitive advantage. To produce a better product and be able to identify what changes are incorporated into a release. Time to market is very critical to the bottom line.

Produce a quality product. So we can effectively delight our customers and improve stakeholder value. Process needs improvement to improve future products’ quality. Improved process can benefit in producing a better-quality product in less time. We are in the market with world-class companies. For us to survive, we must improve the quality of our products NOW. There is no tomorrow for small companies. Improve the coding cycle of multiple reviews of documentation and prelim-code. Get away from coding on the fly. Lower risk of meeting deadlines.

Develop efficiency. More efficient use of resources (people’s time, lab equipment, development workstations, licenses for various development software). Gain process efficiency and shorten cycle time. Efficiency in how we operate—we get a lot done in a short time only because of the extra hours and commitment by employees. We do not work efficiently or do the same thing consistently. Process improvement is required so that we can reduce the time wasted in correcting problems caused by lack of a good process (e.g., in design, in test, in code). Allow the company to spend money on new development versus maintenance! To improve our concept of time and not publish unrealistic schedules. Need to reduce “fire drills.” No person or organization or product can ever be better than the process it followed.

Repeat quality. Capability to recreate exactly pieces for maintenance support (e.g., find library items despite changes in personnel). The company needs this to provide a means to develop quality products consistently! To be competitive and succeed in the marketplace, we must consistently produce high-quality product and services in a predictable and timely manner. We should improve how we maintain software to incorporate enhancements or corrections into our product more easily.

Reduce learning curve. Clearly documented and trained process reduces the learning curve for new team members and maintains process focus as team players change. This facilitates team flexibility.

Clarify expectations. The organization’s processes are not well defined, written down, and available to the engineering staff. Employees will have a clear definition of process for their individual task, and understand what is required to work effectively, instead of individuals working within vacuums. Expectations of individual processes are not clear. Intermediate work products do not always meet expectations or easily fit together. Process improvement is necessary to ensure the company’s long-term viability, because it first defines and documents the process, and second works the process toward more profitability.

19.3.3 Process Assessment Risks

The assessment team was asked to identify their top three risks to a successful assessment. The assessment team had 30 minutes to complete the following directions:

1. Divide into three teams.

2. Brainstorm risks to a successful assessment.

3. Come to a consensus on the top three risks.

4. Spokesperson presents and leads discussion including mitigation strategies.

The exercise helped the assessment team focus on what they needed to do. The assessment team listed the following risks to a successful assessment:

1. Clear definition of the assessment outcome.

2. Time management.

3. Interpersonal conflicts.

4. Is there any value? Will this be taken seriously? Will anything change? Mitigation: Management support through actions.

5. Proper time allocation to task with everything else on our plate! Mitigation: Plan consistent with workload demands.

6. Truthful feedback. Concern of negative ramification to person or group. Mitigation: Anonymous results.

7. Consistent management support for action plans.

8. Inaccurate and inappropriate measurements. Mitigation: Take time up front to identify measurements to be made, and clearly define the measurement procedures.

9. Failure to communicate findings correctly. Mitigation: Tailor findings to the audience. Communicate plan for correcting shortcomings.

10. Lack of cooperation from staff. Mitigation: Provide free lunches. Get buy-in from all concerned.

The process consultant anticipated several additional assessment risks and reviewed them with the assessment team:

Assessment overview: Employees have seen similar, unsuccessful initiatives. The assessment team should be sensitive to this.

Response analysis: Because the team is too large [it had ten members], consensus will likely be laborious. Conclusions will be strong before discussion begins.

Project discussion: Assessment team familiar with project can lose objectivity. Direct reporting relationships can cause subordinates to hold back their inputs. Single project focus may miss different aspects of software projects. Software-intensive project may lack hardware and systems input.

Findings briefing: Employees fear that nothing will be done with the inputs. Team feeling that they are finished when they have only just begun. Reactionary response by management could have negative impact on employee willingness to be involved.

19.3.4 Assessment Team Discussion

After the team completed the maturity questionnaire response analysis, they were prepared for the interview sessions. The assessment team was asked to summarize their thoughts on what was needed. The process consultant added her concern to the list.

Everyone brings old quality baggage. It does not have to fail.

We have a chance to change this. It is not a bitch session.

Focus on key issues. Start small to achieve success.

Look for success. Everything we do is a step forward.

Maintain momentum. Do not let them wonder what happened to the initiative.

Plan for beyond SEI Level 2. Need individuals to push.

Listen to gather good information.

Communicate to the lowest level.

Support from management and time to accomplish goals.

Need reward and recognition for action teams.

19.4 Process Assessment Results

An important part of the assessment was that the people discover the results for themselves. The answers to the following two questions sum up the distance between “as is” and “should be”:

1. Where are we? There is no organization-wide definition or documentation of current processes.

2. Where should we be? The organization should describe all processes in a standard notation. The organization should train processes to the management and staff, and follow them as standard procedure. The organization should measure and monitor processes for status and improvement.

19.4.1 Engineering Process Baseline

The final findings summarized the engineering process baseline as follows:

Strengths

The organization is rich in the diversity of the staff.

Productivity is very high.

Everyone wants to do a good job.

Open to ideas and suggestions for improvement.

Weaknesses

Lack of communication with management.

Schedule estimates tend not to be realistic.

Lack of well-defined engineering processes and procedures.

Lack of clear roles and responsibilities.

19.4.2 Participants’ Wish List

At the close of each interview session, the facilitator asked the following question: “If you could change one thing, other than your boss or your paycheck, what would it be?” Project leaders said:

“Reality is viewed as pessimism.”

“Vendors and customers serve as quality assurance.”

“The development process is iterative code, design, test.”⁴

⁴ This order implies that the development process was broken.

“Do not sacrifice quality.”

“Focus on company priorities, process.”

“Senior management is not looking for quality; it is schedule driven.”

Software functional area representatives said:

“Change requests should be tracked for system test.”

“Appoint a software watchdog to oversee project to facilitate consistency and reuse.”

“Software CM tool to be changed.”

“Senior management has unrealistic expectations of people who are working on several projects with priorities changing daily.”

“This assessment will not be of benefit, as senior management will ignore it.”

“Integration and system test should be started much earlier.”

“Mixing C and C++ is a mistake, as we do not have staff skilled in both.”

“Senior management is committed to deadlines, not quality; hence this assessment is meaningless.”

Test and configuration management functional area representatives said:

“More planning for demos. Put hooks in the schedule.”

“More hours in the lab. During the day, there is no schedule. It is first come, first served. Typically, a management person owns the system. Get bumped regularly by demos. Easily lose half the morning by the demo.”

“More flexibility in adjusting schedules. Worked overtime every week, 24 hours around the clock for three demos.”

“More feedback from upper management. More of a large-company mentality. Feel like more of a small company. The CEO told us what he told the shareholders. We do not get that feedback anymore. Closed-door attitude.”

Hardware functional area representatives said:

“I would like to see a more unified top-level structure and more focus at the company level.”

“More information from the top on company direction and progress. Need status on projects.”

“More procedures established. More consistency in purpose and operation. Each department needs charters so that senior management will know who is responsible for what job. Basically it should define what the job is and what results are required.”

“I am looking for more direction. I need distinct goals. What are my personal goals? What are the company’s strategic goals? All I am doing right now is fire fighting.”

19.4.3 Findings and Recommendations

The findings and recommendations were briefed by the assessment team leader, who was the project manager. The findings were summarized according to the SEI CMM Level 2 KPAs:

Requirements management. Requirements do not drive the development (e.g., system requirements were approved one year after the project started). There is no policy for managing requirements, and no method for tracing requirements through the life cycle. Recommendations: Ensure that requirements are entry criteria for design and provide for buy-in from developers.

Project planning. Developers are not adequately involved in the planning process (e.g., the schedule was inherited, and estimates are allowed only if they fit). There is no methodology used for estimating. There is no schedule relief for change in scope and resources. Schedule is king—the project is driven by time to market. Recommendations: Adopt scheduling methodology to involve all affected parties.

Project tracking. Project status is not clearly communicated in either direction (i.e., up or down). There is “no room for reality” with a “sanitized view of status reported.” Milestones are replanned only after the date slips. Schedules are not adjusted by the missed dependencies, so the history is not visible. Recommendations: Improve data and increase circulation of project review meeting minutes. Also, communicate reality and take responsible action.

Subcontract management. Validation of milestone deliverables is ineffective because milestones are completed without proper verification. Transfer of technology is not consistently occurring since it is not on the schedule and inadequate staff are assigned. Recommendations: Establish product acceptance criteria and a plan for transfer of technology.

Quality assurance. There are no defined quality assurance engineering activities. There is no consistent inspection of intermediate products. Product quality is ensured by final inspection and, in some cases, by our vendors and customers. The project depends on final testing to prove product quality. There is no quality assurance training for engineering. Recommendations: Define quality assurance checkpoints as part of the engineering process.

Configuration management. There is no companywide configuration management (CM) plan. For example, systems, hardware, and software do not use the same CM tool. There is a lack of advanced CM tool training. The CM tool does not satisfy the project’s needs, as shown by developers going around the tool to do their job. Recommendations: Fix or replace the tool, and provide more in-depth tool training.

19.5 Initiative Hindsight

There is much to learn from the experience of others.

19.5.1 Assessment Team Perspective

The assessment team was asked to think about the engineering process assessment from their own perspective. They were given 15 minutes to answer the following questions:

1. What worked?

2. What did not work?

3. What could be improved?

For each question, the team members shared their views with the other assessment team members. At the executive briefing, their lessons learned were presented to senior management.⁵

⁵ The executive briefing was not on the assessment process diagram but was necessary because several senior managers were unable to attend the findings briefing.

What Worked

In retrospect, preparation, organization, and timing were key to several successes identified by the assessment team members. Training helped each assessment team member develop the skills needed for the project discussion. Organizing the assessment team into three subteams helped the discussion groups feel more comfortable and understand that this was not an inquisition. Holding the findings briefing the morning after the findings preparation enabled the assessment team to easily recall specific examples to support the findings. These successes are further described below.

Assessment team training. The organization of training material, the group interaction, and the involvement through exercises and rotation of groups of people were good. The team practiced sharing results, and the people wanted to be there. The team built momentum, enthusiasm, spirit, and camaraderie by using the consensus process. The consensus process worked well to get more buy-in and much less dissatisfaction after a decision was made.

Project discussion. Candid discussions with honest thoughts and feelings were possible due to the emphasis on confidentiality in the introduction script. The assessment team preparation of potential interview pitfalls helped. The listening skills of the assessment team were good; there was genuine caring by the assessment team. Grouping assessment team members so their skill mix more closely matched the peer group led to cohesive discussions. Dividing the assessment team into three subteams enabled five interview discussions to finish in one day.

Findings briefing. The briefing was held immediately after the assessment, when thoughts were fresh. Questions were answered quickly, because the examples were easily recalled by the team. Asking for participation on the action teams worked well because everyone was present and could sign up for specific teams.

What Did Not Work

Informing the staff about the SEI CMM in the one-hour tutorial did not work because not everyone was aware of the SEI maturity model. Only those who participated in key functional areas were aware of what the assessment team was doing. In general, there was no relief from the assessment team’s assigned work. Phone calls in the conference room were an interruption to other team members. There was no encouragement from management to focus on the assessment. There was too much pressure on the assessment team to get assessment training and conduct the assessment in one week without dropping any project tasks.

Project discussion. The focus on the assessment was disrupted by people wanting to get organizational issues off their chest.

Response analysis. The meeting was too long because ten people is too many for an assessment team. By the end of the analysis meeting, many people had lost focus and were suffering from burnout. There was a feeling of “hurry up and finish.”

Findings briefing. Management was surprised by the results and took the results personally. This defensive posture on the findings added a negative aspect to the assessment results. The assessment was meant to help people own the results, but management made a speech that essentially said that management was responsible and management would fix the problems.

Informal hall talk. Interviewees thought the presentation was watered down. Interviewees were certain that nothing would change.

Executive briefing. The executive briefing was held over a month after the assessment results briefing, which was not timely.

What Could Be Improved

The assessment team also noted areas that could be improved as follows:

Assessment process. The assessment team and participants can be more effective by planning meetings with enough time so the process is not rushed. Taking notes on PCs would use time more efficiently.

Findings briefing. The presentation should not be a surprise to senior management. Perhaps involving them in a dry run would allow them to better prepare a response. Emphasize that no names are associated with the results, and explain that the assessment team notes are shredded. If key managers cannot attend the results briefing, then reschedule the briefing.

Follow-through. There needs to be immediate follow-up and ongoing demonstration of the progress being made to define the engineering process standards. Upper management must do more to support process improvement than just talk about it.

19.5.2 Process Improvement Risks

The process consultant attempted to move the initiative forward into the planning phase of continuous improvement. There was a baseline process and a framework for action plans. Twenty-five people signed up as action team volunteers. At the executive briefing, the steering committee asked the CEO, “Where do we go from here?” The CEO replied, “SEI Level 5.” But when the subject of resources, specifically time and people, came up, the CEO said, “Quality is free.” The number two risk—lack of management commitment—had just become the number one problem. This risk was predicted in the SOW with a high probability and critical consequence.

Six months after the assessment, a reorganization removed a layer of senior management. Within a year after the assessment, the CEO was ousted by the board of directors. The rationale for the reorganization was not a failed process improvement initiative but rather a lack of product results. Perhaps the lack of product results was caused by the lack of process results. If this were true, management commitment to process improvement might have prevented the reorganization. Hindsight is, as they say, 20/20.

19.6 Summary and Conclusions

In this chapter, I described the practices that characterize the problem stage of risk management evolution. These practices include managing problems, not risks. Management is driven by time to market, and as a result, products are shipped with problems. Risks identified through the efforts of a process consultant are not taken seriously by the organization. Attempts to improve ad hoc procedures fail because management expects the team to improve in its spare time.

The primary project activity in the problem stage is hard work. Long and frustrating hours spent in the work environment make even the most intelligent people work inefficiently. Blame for this situation is assigned to management, who must suffer the consequences.

At the problem stage, we observed the following:

Smart people. The project had many intelligent individuals working on state-of-the-art technology.

Burnout. Many people worked 50 hours or more each week. The stress of the work environment was evident in the frustration level of the people.

Reorganization. Management took the blame when the company did not meet its goals. Six months after the assessment, a reorganization removed a layer of senior management. Within a year after the assessment, the CEO was ousted by the board of directors.

19.7 Questions for Discussion

1. How does improving a process improve the quality of future products? Do you think process improvement is a risk reduction strategy? Explain your answer.

2. Do you agree that false starts due to lack of long-term commitment make subsequent improvement efforts more difficult to initiate? Discuss why you do or do not agree.

3. Write your top three reasons for improving an ad hoc process. Describe your reasons in terms of their effect on quality, productivity, cost, and risk of software development.

4. Given an SEI Level 1 organization, discuss the likelihood of high productivity and low quality.

5. Explain the consequence of the statement, “Reality is viewed as pessimism.”

6. You are a hardware engineer with an invitation to be a member of a process assessment team. Your supervisor gives you permission to participate in the assessment as long as it does not affect your regular work. Describe your feelings toward your supervisor.

7. You are a senior manager responsible for purchasing a configuration management tool costing $100,000. At the process assessment results briefing, the recommendation was made to fix or replace the tool. Discuss how you will or will not support this recommendation.

8. You are the chief executive officer of a commercial company. At an executive briefing, a project manager informs you that your organization is SEI Level 1. Discuss how you will respond.

9. Do you think it is easier to replace people or to change the way they work? Explain your answer.

10. Why do people become stressed? How could you help people reduce their stress at work? Do you think people work better under stress? Discuss why you do or do not think so.

19.8 References

[Adams96] Adams S. The Dilbert Principle. New York: HarperCollins, 1996.

[ASQC94] American National Standard. Quality Systems—Model for Quality Assurance in Design, Development, Production, Installation, and Servicing. Milwaukee, WI: ASQC. August 1994.

[Bate94] Bate R, et al. System Engineering Capability Maturity Model (SE-CMM) Version 1.0. Handbook CMU/SEI-94-HB-04. Pittsburgh, PA: Software Engineering Institute, Carnegie Mellon University, December 1994.

[Brassard94] Brassard M, Ritter D. The Memory Jogger II. Methuen, MA: GOAL/QPC, 1994.

[CAWG96] Capability Assessment Working Group. Systems Engineering Capability Assessment Model (SECAM) Version 1.41. Document CAWG-1996-01-1.41. Seattle, WA: International Council on Systems Engineering, January 1996.

[Hill95] Hill L, Willer N. A method of assessing projects. Proc. 7th Software Engineering Process Group Conference, Boston, May 1995.

[Motorola93] Motorola University. Utilizing the Six Steps to Six Sigma. SSG 102, Issue No. 4. June 1993.

[Paulk93] Paulk M, et al. Capability Maturity Model for Software. Version 1.1. Technical report CMU/SEI-93-TR-24. Pittsburgh, PA: Software Engineering Institute, Carnegie Mellon University, 1993.