17 Assess Risk

cog•ni•tion (kog-nish’en) 1. The mental process or faculty by which knowledge is acquired. 2. Something that comes to be known, as through perception, reasoning, or intuition.

—American Heritage Dictionary

The purpose of assessing risk is to understand the risk component of decisions. When we identify and analyze risk, we assess the probability and consequence of unsatisfactory outcomes. Risk assessment is a method for discovery that informs us about the risk. We are better able to decide based on knowing the risk rather than not knowing it. We invest our experience in the attempt to understand the future. By measuring uncertainty, we are developing an awareness of the future. Future awareness is the result of a cognitive thinking process. When we are cognizant, we are fully informed and aware.

In this chapter, I describe how to identify and analyze the possibilities in the project plan and the remaining work. I discuss how you can discover unknown risk and how to assess risk in a team environment. You will need to draw on the process described in Chapters 4 and 5, the risk management plan described in Chapter 15, and the common sense of this chapter to assess risk.

This chapter answers the following questions:

What are the activities to assess risk?

What software risks are frequently reported in risk assessments?

What is the major challenge that remains in the software community?

17.1 Conduct a Risk Assessment

The project manager is responsible for delegating the task of conducting a risk assessment, which provides a baseline of assessed risks to the project. A risk baseline should be determined for a project as soon as possible so that risk is associated with the work, not with the people. By understanding the worst-case outcome, we bound our uncertainty and contain our fears. Risk assessment takes some of the emotion out so that we can deal with the issues more logically. The risk assessment should involve all levels of the project because individual knowledge is diverse. Conducting a risk assessment also helps to train the team in the risk assessment methods that will be used throughout the project.

There are three main objectives of a risk assessment:

1. Identify and assess risks. You can identify and assess risk using different risk assessment methods. Project requirements may call for a less formal or more analytical approach.

2. Train methods. Throughout the project, you can use the techniques that you learned during risk assessment training.

3. Provide a baseline. As the result of a risk assessment, you have a risk-aware project team and a risk baseline for continued risk management.

There are many opportunities to assess risk—for example:

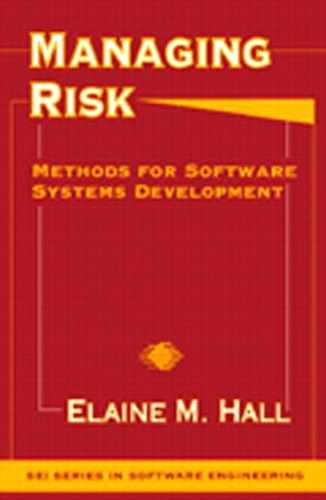

![]() Phase readiness review. Have the team identify the top five risks on a riskappraisal form. As shown in Figure 17.1, the risk appraisal gathers individual perspectives without requiring collaboration or consensus. (The risk statements in this appraisal are concise and readable because they follow the standard notation described in Chapter 4.) Time: 15 minutes.

Phase readiness review. Have the team identify the top five risks on a riskappraisal form. As shown in Figure 17.1, the risk appraisal gathers individual perspectives without requiring collaboration or consensus. (The risk statements in this appraisal are concise and readable because they follow the standard notation described in Chapter 4.) Time: 15 minutes.

![]() Team-building session. Ask the team to identify the top three risks to their success. This exercise is a way to experience collaboration and consensus on risks, and it provides focus for team goals. Time: 30 minutes.

Team-building session. Ask the team to identify the top three risks to their success. This exercise is a way to experience collaboration and consensus on risks, and it provides focus for team goals. Time: 30 minutes.

![]() Technical proposal. A proposal must respond to technical design risk. You can describe technical risk using a risk management form (see Chapter 15 for an example of a completed form), which organizes risk information to help determine the risk action plan. Time: 1–2 hours per risk.

Technical proposal. A proposal must respond to technical design risk. You can describe technical risk using a risk management form (see Chapter 15 for an example of a completed form), which organizes risk information to help determine the risk action plan. Time: 1–2 hours per risk.

Figure 17.1 Individual risk appraisal. The sample risk appraisal requires no prior preparation and is completed without consulting with other personnel. This risk appraisal captures a test engineer’s perceptions about five risks in the project.

![]() Project start-up. A rigorous method of formal risk assessment gathers information by interviewing people in peer groups. This method uses a risk taxonomy checklist and a taxonomy-based questionnaire developed at the SEI [Sisti94]. Although there is no limitation as to when to conduct a formal risk assessment, it is most useful at project start-up or for major project changes, such as an engineering change proposal or change in project management. I describe the activities and cost of a typical formal risk assessment below. Time: 2–4 days.

Project start-up. A rigorous method of formal risk assessment gathers information by interviewing people in peer groups. This method uses a risk taxonomy checklist and a taxonomy-based questionnaire developed at the SEI [Sisti94]. Although there is no limitation as to when to conduct a formal risk assessment, it is most useful at project start-up or for major project changes, such as an engineering change proposal or change in project management. I describe the activities and cost of a typical formal risk assessment below. Time: 2–4 days.

17.1.1 Activities of a Formal Risk Assessment

The activities of an interview-based risk assessment transform project knowledge into risk assessment results: assessed risks, lessons learned, and a risk database. A formal risk assessment has five major activities:

1. Train team

Identify and train assessment team.

Select interview participants.

Prepare risk assessment schedule.

Coordinate meeting logistics.

2. Identify risk

Interview participants.

Record perceived risks.

Clarify risk statements.

Observe process and record results.

3. Analyze risk

Evaluate identified risks.

Categorize the risks.

4. Abstract findings

Sort risks in risk database.

Prepare findings.

5. Report results

Debrief project manager.

Brief project team.

Evaluate risk assessment.

17.1.2 Cost of a Formal Risk Assessment

The cost of a formal risk assessment varies with the number of people involved. I recommend three individuals on the risk assessment team and a minimum of three peer group interview sessions: the first for project managers, the second for technical leaders, and the third for the engineering staff. You should invite from two to five representatives to participate in each interview session. This rule of thumb works for project teams of 50 people or fewer, when 15 representatives constitute 30 percent of the total team. For project teams over 50 people, you will need more interview sessions to maintain a minimum of one-third team involvement. Team representatives should bring input from the other two-thirds to the interview session. Because interviews with a single person do not develop the group dynamics for discovering unknowns, they are not recommended. Individual interviews are analogous to performing a peer review with the author of the source code under review without peers. Yes, there is value in the review—but it is limited.

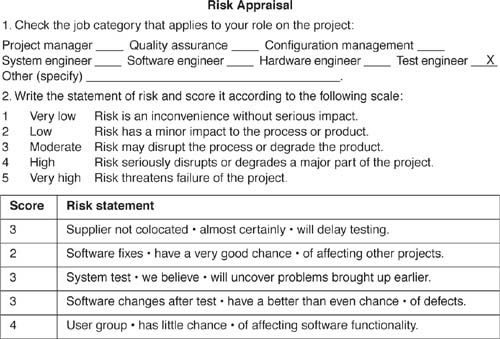

Figure 17.2 Formal risk assessment schedule.

The formal risk assessment is more expensive than other assessment methods because it provides the most time for communication between project members. The assessment team contributes about half of the cost of the assessment. The value of the risk assessment team is the members’ expertise and independence. The assessment team also helps minimize the time the project team spends away from their assigned work. Figure 17.2 shows the schedule of a typical formal risk assessment. Using the above recommendations, the cost for the assessment team is 72 total labor hours. The cost for the project team is 52 total labor hours plus 1 hour for each team member who attends the results briefing.
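The labor-hour figures above can be combined into a quick estimate. The following is a minimal sketch, assuming the 72-hour and 52-hour figures from the text hold for your project; the function name and the attendee count in the usage line are hypothetical.

```python
# Figures taken from the text; they assume the recommended team and session sizes.
ASSESSMENT_TEAM_HOURS = 72
PROJECT_TEAM_HOURS = 52  # excludes the results briefing


def formal_assessment_hours(briefing_attendees: int) -> int:
    """Total labor hours, adding 1 hour per attendee of the results briefing."""
    if briefing_attendees < 0:
        raise ValueError("briefing_attendees must be non-negative")
    return ASSESSMENT_TEAM_HOURS + PROJECT_TEAM_HOURS + briefing_attendees


# Hypothetical example: a 50-person team where everyone attends the briefing.
print(formal_assessment_hours(50))
```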

17.2 Develop a Candidate Risk List

You can routinely develop a candidate list of risks by reviewing a risk checklist, work breakdown structure, or a previously developed top ten risk list. These structured lists help you to identify your own set of risks from known risk areas. In addition, you can use techniques to discover unknown risks. When developing a candidate risk list, the focus is on identification of risk and source of risk in both management and technical areas.

17.2.1 List Known Risks

Known risks are those that you are aware of. Communication helps you to understand known risk in the following ways:

Separate individuals from issues. Sometimes the right person knows about a risk but is afraid to communicate it to the team, fearing that the team will perceive the individual, not the issue, at risk. In one case, the person responsible for configuration management had no tool training but during the interview session did not raise this as a risk. Fortunately, someone else raised this issue as a risk, so that it could be resolved.

Report risks. The most frequently reported software development risks are issues often found on software projects. It is likely that other software projects report risks similar to those found on your project. Section 17.7 lists the top ten risks of government and industry, prioritized by how frequently they were reported in risk assessments.

Report problems. Everyone knows about issues that are pervasive within the organization, such as the lack of a software process. When there are problems without a focus for improvement, these problems are known future risks. If you have problems that are likely to worsen without proper attention, you should add them to the risk list.

17.2.2 Discover Unknown Risks

Unknown risks are those that exist without anyone’s awareness of them. Communication helps to discover unknown risk in the following ways:

Share knowledge. A team can discover risks by individuals’ contributing what they know. The collective information forms a more complete picture of the risk in a situation. Individuals contribute pieces of a puzzle that they could not resolve on their own.

Recognize importance. We sometimes identify risks without an awareness of their significance. Discovering the magnitude of the risk helps in prioritizing it correctly. The passing of time helps us to discover risk importance, but only if we are paying attention. When we are not paying attention, risks turn into problems, when it may be too late to repair the damage.

Cumulate indicators. A status indicator that is 10 percent off target is generally perceived as low risk. The summation of a small variance in several indicators can add up to a critical risk. Count the number of indicators exceeding threshold and discover the effect of aggregate risk.
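Counting indicators that exceed a threshold is easy to automate. The sketch below is one way to do it, assuming each indicator is recorded as a fractional variance from its target; the indicator names, the values, and the 10 percent default threshold are illustrative assumptions, not project data.

```python
def indicators_over_threshold(variances, threshold=0.10):
    """Return the names of indicators whose absolute variance exceeds the threshold.

    variances maps an indicator name to its fractional deviation from target
    (0.12 means 12 percent off target).
    """
    return sorted(name for name, v in variances.items() if abs(v) > threshold)


# Hypothetical status indicators for one reporting period.
status = {"schedule": 0.12, "cost": 0.08, "staffing": -0.15, "defects": 0.11}
flagged = indicators_over_threshold(status)
print(len(flagged), flagged)
```

No single indicator in this example looks alarming, but three of the four exceed the threshold, which is the kind of aggregate signal worth adding to the candidate risk list.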

17.3 Define Risk Attributes

For each issue listed in the candidate risk list, you should qualify the issue as a risk. If the probability is zero or one, there is no uncertainty, and the issue is not a risk. If there is no consequence of the risk’s occurring in the future, the issue is not a risk. Once you believe that you are dealing with a risk, however, you will want to bound the primary risk attributes of probability and consequence.
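The qualification test in this paragraph can be written as a simple predicate. This is a minimal sketch, assuming probability is expressed as a number between 0 and 1 and consequence as a numeric loss in any consistent unit; both representations are assumptions made for illustration.

```python
def is_risk(probability: float, consequence: float) -> bool:
    """An issue qualifies as a risk only if it is uncertain and has a consequence."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    uncertain = 0.0 < probability < 1.0   # probability of 0 or 1 means no uncertainty
    has_consequence = consequence != 0    # no consequence means no risk
    return uncertain and has_consequence


print(is_risk(0.5, 10))   # uncertain, with a loss
print(is_risk(1.0, 10))   # certain to occur: a problem, not a risk
print(is_risk(0.5, 0))    # no consequence
```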

17.3.1 Bound the Primary Attributes

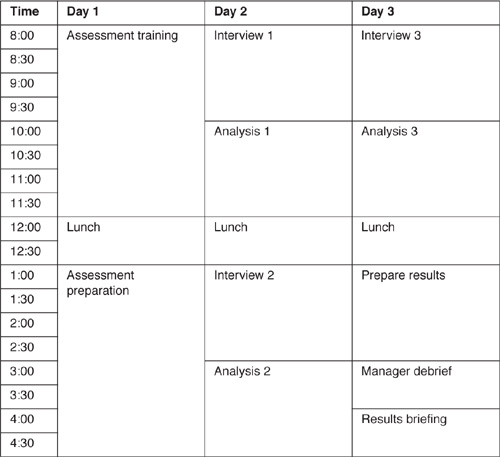

To bound the primary risk attribute of probability, you can use words or numbers. Table 17.1 provides the mapping from subjective phrases to quantitative numbers. When you are unsure about the probability, you can provide a range for the probability. The range can be broad to provide room for error (e.g., 40 to 60 percent). A five-point risk score can be used in conjunction with a numerical percentage or a perceived likelihood to help group risks.

To bound the primary risk attribute of consequence, you can list the effect on specific objectives. If the risk were realized, what would happen to your objective? For example, if a virus is downloaded from the Internet, system security would be compromised.

Table 17.1 Risk Probability Map

17.3.2 Categorize the Risk

Risk assessment results are often categorized to communicate the nature of a risk. There are five steps to categorize risk:

1. Clarify. Write the risk statement according to the standard notation. Describe the risk in its simplest terms to refine the understanding of the issue.

2. Consense. Agree on the meaning of the risk statement. Once everyone understands the issue, you should agree on the scope of the issue as written. Agreement is not about the validity of the issue as a risk but about the meaning and intent of the issue as documented.

3. Classify. Select the risk category from a risk classification scheme, such as the SEI software risk taxonomy [Carr93]. If two or more categories describe the risk, the risk may be a compound one. Decompose risks to their lowest level. Note the dependencies that link related risks together.

4. Combine. Group risk statements by risk categories. Roll up all the issues in a category and review them to gain a general understanding of this category by the different perspectives documented in the risks.

5. Condense. Remove duplicates and summarize related risks. After you summarize the issues in a logical area, you can easily locate and remove duplicates. Then group related risks with other risks by risk category.
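The Combine and Condense steps lend themselves to a small script once risks have been classified. The sketch below assumes each risk is a (category, statement) pair and treats two statements as duplicates when they match after normalizing case and whitespace. That duplicate rule is a simplification introduced here; summarizing related risks still takes human judgment. The sample statements are hypothetical.

```python
from collections import defaultdict


def normalize(statement):
    """Lowercase and collapse whitespace so trivially different copies match."""
    return " ".join(statement.lower().split())


def combine_and_condense(classified_risks):
    """Group (category, statement) pairs by category and drop duplicate statements."""
    grouped = defaultdict(list)
    seen = set()
    for category, statement in classified_risks:
        key = (category, normalize(statement))
        if key in seen:
            continue  # duplicate within the same category
        seen.add(key)
        grouped[category].append(statement)
    return dict(grouped)


# Hypothetical classified risks; category names follow the Risk.<area>.<element> style.
risks = [
    ("Risk.Technical.Process", "Testing is compressed by schedule pressure."),
    ("Risk.Management.Project", "There is no financial reserve."),
    ("Risk.Technical.Process", "testing is  compressed by schedule pressure."),
    ("Risk.Technical.Process", "Change control is not always followed."),
]
result = combine_and_condense(risks)
for category, statements in result.items():
    print(category, len(statements))
```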

17.3.3 Specify the Risk Drivers

Risk drivers are the variables that cause either the probability or consequence to increase significantly—for example, constraints, resources, technology, and tools. The process and environment can also be risk drivers. These forces cause risk to change over time. For example, your SEI Level 3 process can be reduced to an ad hoc SEI Level 1 process over time due to the risk driver of schedule. You would want to specify the schedule constraint as the risk driver that causes the process to be at risk. Because risks are dynamic, it is important to specify the risk drivers to describe the factors that support risk occurrence. Understanding the risk drivers will help you know where to begin to resolve risk. Use the analysis techniques from Chapter 5 to help you determine the risk drivers and the source of risk.

17.4 Document Identified Risk

A risk management form for documenting the risk provides a familiar mechanism that structures how we think about risk. The completed risk management form in Chapter 15 contains all the essential information to understand the risk.

17.5 Communicate Identified Risk

Communicate identified risk to appropriate project personnel to increase awareness of project issues in a timely manner. Examples of good risk statements are contained in Figure 17.1 and in Section 17.7. You can communicate identified risk by submitting the risk management form. Logging risks in a risk database is one mechanism to facilitate communication of identified risks. Risks that are logged into the system can be periodically reviewed. The “Risk Category” and “WBS Element” fields of the risk management form help to identify the appropriate project personnel who should review the risk.

17.6 Estimate and Evaluate Risk

Once the risk is clearly communicated, an estimate of risk exposure—the product of risk probability and consequence—can be made. The estimate of risk exposure and the time frame for action help to establish a category of risk severity, which determines the relative risk priority by mapping categories of risk exposure against the time frame for action. An evaluation of risk severity in relation to other logged risks determines the actual risk priority. Criteria for risk evaluation should be established.
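Risk exposure is simple arithmetic, so a short example may help. The sketch below computes exposure as probability times consequence and ranks risks by exposure, breaking ties in favor of the shorter time frame for action. The tie-break rule, the field names, the units, and the sample values are all assumptions for illustration; the actual severity mapping and evaluation criteria must come from your own risk management plan.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float   # between 0 and 1
    consequence: float   # loss in any consistent unit (assumed here: cost in $K)
    days_to_act: int     # time frame for action (assumed unit: days)

    @property
    def exposure(self) -> float:
        """Risk exposure: the product of probability and consequence."""
        return self.probability * self.consequence


def prioritize(risks):
    """Highest exposure first; among equal exposures, the most urgent first."""
    return sorted(risks, key=lambda r: (-r.exposure, r.days_to_act))


# Hypothetical logged risks.
logged = [
    Risk("A", 0.8, 100, 30),
    Risk("B", 0.5, 200, 90),
    Risk("C", 0.5, 200, 14),
]
ranked = prioritize(logged)
for r in ranked:
    print(r.name, r.exposure, r.days_to_act)
```

Risks B and C have the same exposure, yet C ranks first because less time remains to act on it, which mirrors the idea of weighing exposure against the time frame for action.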

17.7 Prioritize Risk

Prioritizing risk provides a focus for what is really important. Your prioritized risk list will be unique to your role on a specific project. In general, the challenges of managing software development can be understood by reviewing the current list of prioritized risks reported by the software community. Government and industry sectors of the software community have different yet interrelated risks. The government role is primarily that of acquirer (i.e., consumer), while industry is the primary developer (i.e., producer).1 By understanding the risks of each sector, we can work better together as a software community. Through a partnership in helping each other, we ultimately help ourselves. The remainder of this section reflects on the risks most often reported from risk assessments in the 1990s and how the list has changed since the 1980s.

1 These roles may be reversed in the future as the general population becomes a significant software consumer and the government helps to develop the information highway.

17.7.1 Government Top Ten

The current issues facing the government sector of the software community depend on whom you ask and when you ask. If you ask a program executive officer (PEO) from a program in the demonstration/validation phase, he or she might say, “Meeting the specified technical performance requirements within cost and schedule.”2 If you ask a PEO from a program in the development/integration phase, the reply might be, “Funding is a major issue due to the lack of sweep-up dollars in the Pentagon.”3 In this case, the difference is a matter of life cycle phase: one software acquisition manager is beginning a five-year program, while the other is maintaining an existing system and developing upgrades simultaneously. Because each life cycle phase has a different focus, issues and risks will vary accordingly.

2 Conversation with direct reporting program manager (DRPM) Colonel James Feigley, USMC.

3 From a classified conversation.

Formal risk assessments in the government software sector provide insight into risks of a software consumer. The project participants and the independent risk assessment team both rated the issues used to develop the prioritized Government Top Ten Risk List. I added the risk category and summarized the risk statement (according to my standard notation); the project participants provided the risk context.

1. Funding (Risk.Management.Project). Constrained funding • almost certainly • limits the total scope of the project and affects the ability to deliver. There is no financial reserve. Funding sources face similar constraints. The process used to get additional funding is slow and cumbersome. Inputs to this process are not based on complete or accurate estimates. The project is budgeted at the minimum sustainable level, and budget cuts are a fact of life. Begging for money takes time.

2. Roles and Responsibilities (Risk.Management.Project). Poorly defined roles and responsibilities • almost certainly cause • uncoordinated activities, an uneven workload, and a loss of focus. Project roles and responsibilities are misunderstood. Lack of communication is caused by not knowing where to go for information. Individuals perform multiple roles, resulting in “context switching.” Without a clear understanding of responsibilities, it is difficult to hold people accountable.

3. Staff Expertise (Risk.Management.Project). Shortfalls in staff expertise • are highly likely to continue, causing • lower morale, burnout, and staff turnover. Shortfalls in expertise are due to a lack of training and difficulties hiring someone with the right skills through the government hiring system. Replacing personnel is very time-consuming. Turnover from retirement and promotion, combined with turnover expected due to morale and burnout, would cause a further decrease in staff expertise.

4. Development Process (Risk.Technical.Process). Development process definition is inadequate. • It is highly likely that procedures followed inconsistently will cause • schedule slip and cost overrun. Existing processes are not well understood or documented. Requirements elicitation process is poor. No method exists for estimating the cost of changing requirements. The change control process is not always followed. Process steps such as testing and configuration management are bypassed due to schedule pressure.

5. Project Planning (Risk.Management.Process). The planning process is not well defined. • There is a very good chance that the software estimates are inaccurate, causing • difficulty in long-range planning. There is no integrated project master plan. Work breakdown structure is in bits and pieces, and not communicated. Plans are not based systematically on documented estimates. Contingency planning is informal or nonexistent. Multiple external dependencies drive replanning that is done without understanding the ripple effect of changes to the plan.

6. Project Interfaces (Risk.Management.Project). Multiple project interfaces • will likely cause • conflicting requirements, technical dependencies, difficulty coordinating schedules, and a loss of project control. The project interfaces with a number of systems, many of which are under development. Customers have conflicting requirements, both technical and programmatic. Data and communications dependencies exist with other government systems. There are technical dependencies on hardware platform availability and logistic dependencies on hardware delivery and training availability. There are difficult-to-coordinate schedules with a large number of project interfaces and no political clout to control the project.

7. System Engineering (Risk.Technical.Process). Informal and distributed systems engineering • will likely cause • problems during hardware and software integration. There is no single point of contact for systems engineering. Systems engineering functions are informally distributed across the project. Interface control documents are lacking with some critical external systems. The impact of system changes is not always assessed. Several areas such as system architecture, human-computer interface design, and security are not assessed efficiently. Extensive reuse and COTS creates a “shopping cart” architecture that causes uncertainty as to whether the software can be upgraded to meet future needs.

8. Requirements (Risk.Technical.Product). Multiple generators of requirements • will probably cause • requirements creep, conflicting requirements, and schedule slip. There are growth of existing requirements, changes to requirements, and new requirements. Requirements grow because they are not fully understood. Derived requirements arise from refining higher-level requirements. Requirements come from a large, diverse customer base, and there is potential for conflict between requirements. Unstable and incomplete requirements are major sources of schedule risk.

9. Schedule (Risk.Management.Project). Constrained schedule • we believe may cause • late system delivery, customer dissatisfaction, erosion of sponsorship, and loss of funding. The development schedule is driven by operational needs of different user groups whose schedules are not synchronized. Once release dates are set and made public, it sets customer expectations. If the system is not delivered on time for any reason, customer dissatisfaction can lead to erosion of sponsorship and loss of funding.

10. Testing (Risk.Technical.Process). Inadequate testing • has a better than even chance of causing • concern for system safety. Testing is the primary method for defect detection. The test phase is compressed due to budget and schedule constraints. Test steps are bypassed for low-priority trouble reports. Testing processes are not well defined. Testing is not automated, and existing test and analysis tools are not used effectively. Inadequate regression testing is a safety issue.

17.7.2 Industry Top Ten

What are the current issues facing the industry sector of the software community? It depends on whom you ask and when you ask. Management, if asked, might mention project funding as a particularly high-risk area. Technical staff might say that requirements are changing. In this case, the difference is a matter of conflicting goals. Management is focused on the project profit equation; technical staff has primary responsibility for the product. Each employee has a different assigned task, and the risks will be relative to the task success criteria.

Formal risk assessments in the industry software sector provide insight into the risks of a software producer. The project participants and the independent risk assessment team both rated the issues that were used to develop the prioritized Industry Top Ten Risk List. The risks are described below with their risk category, risk statement, and risk context.

1. Resources (Risk.Management.Project). Aggressive schedules on fixed budgets • almost certainly will cause • a schedule slip and a cost overrun. Appropriate staffing is incomplete early in project. No time for needed training. Productivity rates needed to meet schedule are not likely to occur. Overtime perceived as a standard procedure to overcome schedule deficiencies. Lack of analysis time may result in incomplete understanding of product functional requirements.

2. Requirements (Risk.Technical.Product). Poorly defined user requirements • almost certainly will cause • existing system requirements to be incomplete. Documentation does not adequately describe the system components. Interface document is not approved. Domain experts are inaccessible and unreliable. Detailed requirements must be derived from existing code. Some requirements are unclear, such as the software reliability analysis and the acceptance criteria. Requirements may change due to customer turnover.

3. Development Process (Risk.Technical.Process). Poorly conceived development process • is highly likely to cause • implementation problems. There is introduction of new methodology from company software process improvement initiative. Internally imposed development process is new and unfamiliar. The Software Development Plan is inappropriately tailored for the size of the project. Development tools are not fully integrated. Customer file formats and maintenance capabilities are incompatible with the existing development environment.

4. Project Interfaces (Risk.Management.Project). Dependence on external software delivery • has a very good chance of causing • a schedule slip. Subcontractor technical performance is below expectations. There is unproven hardware with poor vendor track record. Subcontractor commercial methodology conflicts with customer MIL spec methodology. Customer action item response time is slow. Having difficulty keeping up with changing/increasing demands of customers.

5. Management Process (Risk.Management.Process). Poor planning • is highly likely to cause • an increase in development risk. Management does not have a picture of how to manage object-oriented (i.e., iterative) development. Project sizing is inaccurate. Roles and responsibilities are not well understood. Assignment of system engineers is arbitrary. There is a lack of time and staff for adequate internal review of products. No true reporting moves up through upper management. Information appears to be filtered.

6. Development System (Risk.Technical.Process). Inexperience with the development system • will probably cause • lower productivity in the short term. Nearly all aspects of the development system are new to the project team. The level of experience with the selected tool suite will place the entire team on the learning curve. There is no integrated development environment for software, quality assurance, configuration management, systems engineering, test, and the program management office. System administration support in tools, operating system, networking, recovery and backups is lacking.

7. Design (Risk.Technical.Product). Unproven design • will likely cause • system performance problems and inability to meet performance commitments. The protocol suite has not been analyzed for performance. Delayed inquiry and global query are potential performance problems. As the design evolves, database response time may be hard to meet. Object-oriented runtime libraries are assumed to be perfect. Building state and local backbones of sufficient bandwidth to support image data is questionable. The number of internal interfaces in the proposed design generates complexity that must be managed. Progress toward meeting technical performance for the subsystem has not been demonstrated.

8. Management Methods (Risk.Management.Process). Lack of management controls • will probably cause • an increase in project risk and a decrease in customer satisfaction. Management controls of requirements are not in place. Content and organization of monthly reports does not provide insight into the status of project issues. Risks are poorly addressed and not mitigated. Quality control is a big factor in project but has not been given high priority by the company [customer perspective]. SQA roles and responsibilities have expanded beyond original scope [company perspective].

9. Work Environment (Risk.Technical.Process). Remote location of project team • we believe will • make organizational support difficult and cause downtime. Information given to technical and management people does not reach the project team. Information has to be repeated many times. Project status is not available through team meetings or distribution of status reports. Issues forwarded to managers via the weekly status report are not consistently acted on. Lack of communication between software development teams could cause integration problems.

10. Integration and Test (Risk.Technical.Product). Optimistic integration schedule • has a better than even chance of • accepting an unreliable system. The integration schedule does not allow for the complexity of the system. Efforts to develop tests have been underestimated. The source of data needed to test has not been identified. Some requirements are not testable. Formal testing below the system level is not required. There is limited time to conduct reliability testing.
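Each risk above follows the same notation: a ranked title, a category in parentheses, and a risk statement made of an issue, a probability phrase, and a consequence separated by bullets. As a minimal sketch, the notation can be split into its parts as shown here. The `RiskStatement` type, the `parse_risk_statement` function, and the regular expression are hypothetical names and choices made for illustration, not part of the assessment method itself.

```python
import re
from dataclasses import dataclass


@dataclass
class RiskStatement:
    rank: int
    title: str
    category: str      # e.g. "Risk.Technical.Product"
    issue: str         # the condition that creates the risk
    probability: str   # the likelihood phrase, kept as text
    consequence: str   # the unsatisfactory outcome


# Hypothetical pattern for "N. Title (Category). issue • probability • consequence."
_PATTERN = re.compile(
    r"^(?P<rank>\d+)\.\s+(?P<title>[^(]+?)\s+\((?P<category>Risk\.[\w.]+)\)\.\s+"
    r"(?P<issue>[^•]+?)\s*•\s*(?P<probability>[^•]+?)\s*•\s*(?P<consequence>[^.]+)\."
)


def parse_risk_statement(text: str) -> RiskStatement:
    """Split one risk entry into its fields; supporting sentences are ignored."""
    match = _PATTERN.match(text)
    if match is None:
        raise ValueError("text is not in the standard risk notation")
    fields = match.groupdict()
    return RiskStatement(
        rank=int(fields["rank"]),
        title=fields["title"],
        category=fields["category"],
        issue=fields["issue"],
        probability=fields["probability"],
        consequence=fields["consequence"].strip(),
    )


risk = parse_risk_statement(
    "7. Design (Risk.Technical.Product). Unproven design • will likely cause • "
    "system performance problems and inability to meet performance commitments. "
    "The protocol suite has not been analyzed for performance."
)
print(risk.category, "|", risk.probability)
```

Keeping the probability phrase as text, rather than mapping it to a number, avoids inventing a numeric scale that the assessments themselves do not state.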

17.7.3 Top Ten Progress and Challenges

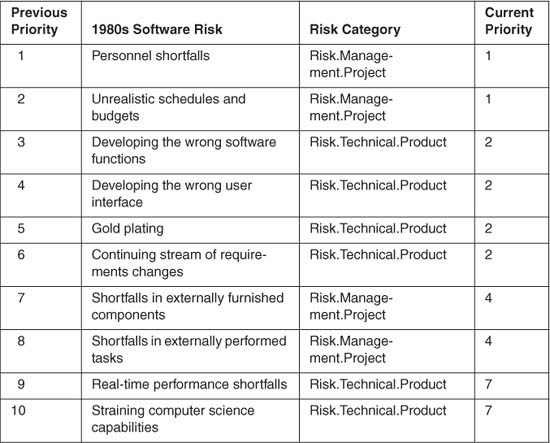

In the 1980s, experienced project managers identified the risks that were likely to compromise a software project’s success [Boehm89]. Table 17.2 describes the top ten risk list of the 1980s and shows how the risk priority has changed over time. A review of software risk from the past decade shows both progress and challenges that remain in the industry.

Risk category in the table shows the lack of process focus that typified software development in the 1980s. Progress has been made here. Today we recognize process risk and know how to assess software process. Half of the industry-reported risks are related to process.

Current priority in the table shows that risk has increased in priority since the 1980s, which suggests a lack of effective software risk management. Resources, requirements, external interfaces, and design continue as major risk areas. These risk areas should be considered as opportunities for improvement.

Table 17.2 Top Ten Risk List of the 1980s

17.8 Summary

In this chapter, I described how to identify and analyze the possibilities in the project plan and remaining work. The following checklist can serve as a reminder of the activities to assess risk:

1. Conduct a risk assessment.

2. Develop a candidate risk list.

3. Define risk attributes.

4. Document identified risk.

5. Communicate identified risk.

6. Estimate and evaluate risk.

7. Prioritize risk.
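Steps 6 and 7 of the checklist can be illustrated with a small sketch. It assumes the common definition of risk exposure as probability multiplied by consequence, and it breaks ties using the time frame for action. The `AssessedRisk` type, the `prioritize` function, the staff-day unit, and all of the numbers are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class AssessedRisk:
    name: str
    probability: float       # estimated likelihood, 0.0 to 1.0
    consequence: float       # estimated loss, here in staff-days (assumed unit)
    weeks_until_action: int  # time frame for action

    @property
    def exposure(self) -> float:
        # Assumed definition: risk exposure = probability x consequence.
        return self.probability * self.consequence


def prioritize(risks):
    # Highest exposure first; among equal exposures, the shorter time frame first.
    return sorted(risks, key=lambda r: (-r.exposure, r.weeks_until_action))


# Hypothetical estimates, for illustration only.
risks = [
    AssessedRisk("Unproven design", 0.5, 40, 8),
    AssessedRisk("Optimistic integration schedule", 0.5, 60, 12),
    AssessedRisk("Inexperience with tools", 0.25, 80, 2),
]
for risk in prioritize(risks):
    print(f"{risk.exposure:5.1f}  {risk.name}")
```

In this example two risks share the same exposure, so the one that must be acted on sooner is listed first.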

I presented results from risk assessments performed in the 1990s to use as a list of known risks. The following government and industry top ten risk areas are prioritized by their frequency as reported in the risk assessments.

Government Top Ten Reported Risks

1. Funding.

2. Roles and responsibilities.

3. Staff expertise.

4. Development process.

5. Project planning.

6. Project interfaces.

7. System engineering.

8. Requirements.

9. Schedule.

10. Testing.

Industry Top Ten Reported Risks

1. Resources.

2. Requirements.

3. Development process.

4. Project interfaces.

5. Management process.

6. Development system.

7. Design.

8. Management methods.

9. Work environment.

10. Integration and test.
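Ranking risk areas by reporting frequency, as in the two lists above, amounts to counting how often each area appears across assessment findings. A minimal sketch follows. The findings and the `rank_by_frequency` function are hypothetical and do not reproduce the assessment data behind the lists.

```python
from collections import Counter

# Hypothetical findings: one risk area recorded per issue raised in an assessment.
findings = [
    "Resources", "Requirements", "Resources", "Design",
    "Requirements", "Resources", "Development process",
]


def rank_by_frequency(areas, top=10):
    # most_common sorts by count, highest first; equal counts keep first-seen order.
    return Counter(areas).most_common(top)


for position, (area, count) in enumerate(rank_by_frequency(findings), start=1):
    print(f"{position}. {area} ({count})")
```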

The 1990s top ten list has changed from the 1980s. These changes indicate where progress has been made and the challenges that remain. For example, progress has been made by identifying process as a new risk. Further comparison reveals that risk priorities have increased in the past decade. The risks of the 1980s have not been resolved. This fact underscores that the major challenge remaining in the software community is to follow through with risk control.

17.9 Questions for Discussion

1. Do you think it is important to articulate what we do not know? Discuss the significance of asking questions to become aware of the unknown.

2. Explain why the project manager is responsible for delegating the task of conducting a risk assessment.

3. List three types of risk assessment methods and describe a situation when each would be appropriate.

4. Discuss the extent to which sharing information can discover unknown risks.

5. List the five steps to categorize risk. What is the value of each step?

6. Explain the risk drivers that might cause an SEI Level 3 process to degrade.

7. Do you think it is important to communicate risk in a standard format? Discuss how a standard format might make people more receptive to dealing with uncertainty.

8. Explain how you could measure risk exposure over time and predict risk occurrence.

9. Discuss how you can use time frame for action to determine risk priority.

10. Do you trust your intuition? Give an example of a time when you did and a time when you did not trust your intuition.

17.10 References

[American85] The American Heritage Dictionary. 2nd College Ed., Boston: Houghton Mifflin, 1985.

[Boehm89] Boehm, B. IEEE Tutorial on Software Risk Management. New York: IEEE Computer Society Press, 1989.

[Carr93] Carr, M., Konda, S., Monarch, I., Ulrich, F., Walker, C. Taxonomy-Based Risk Identification. Technical report CMU/SEI-93-TR-6. Pittsburgh, PA: Software Engineering Institute, Carnegie Mellon University, 1993.

[Sisti94] Sisti, F., Joseph, S. Software Risk Evaluation Method. Technical report CMU/SEI-94-TR-19. Pittsburgh, PA: Software Engineering Institute, Carnegie Mellon University, 1994.