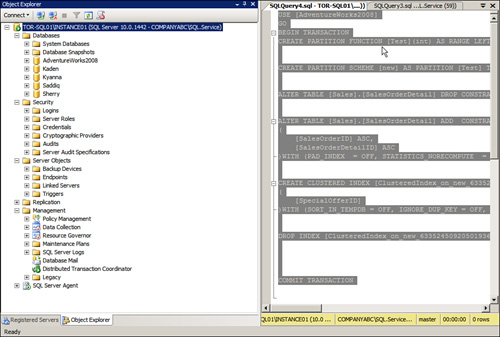

Chapter 2 Administering the SQL Server 2008 Database Engine

Although SQL Server 2008 is composed of numerous components, one component is often considered the foundation of the product. The Database Engine is the core service for storing, processing, and securing data for the most challenging data systems. Note that the Database Engine is also known as Database Engine Services in SQL Server 2008. Likewise, it provides the foundation and fundamentals for the majority of the core database administration tasks. Given its central role in SQL Server 2008, the Database Engine is designed to provide a scalable, fast, secure, and highly available platform for data access and other components.

This chapter focuses on administering the Database Engine component, also referred to as a feature in SQL Server 2008. Administration tasks include managing SQL Server properties, database properties, folders within SQL Server Management Studio, and the SQL Server Agent based on SQL Server 2008. Database Engine management tasks are also covered.

Even though the chapter introduces and explains all the management areas within the Database Engine, you are directed to other chapters for additional information. This is a result of the Database Engine feature being so large and intricately connected to other features.

SQL Server 2008 introduces a tremendous number of new features, in addition to new functionality, that DBAs need to be aware of. The following are some of the important Database Engine enhancements:

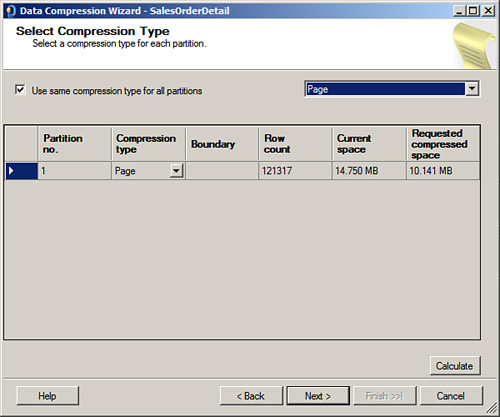

Built-in on-disk storage compression in several different areas. DBAs can compress database files, transaction logs, backups, and also database table elements at the row level and the page level.

A new storage methodology for storing unstructured data. SQL Server FILESTREAM allows large binary data to be stored in the file system yet remain an integral part of the database with transactional consistency.

Improved performance on retrieving vector-based data by creating indexes on spatial data types.

A new data-tracking feature, Change Data Capture (CDC), allows DBAs to track and capture changes made to databases with minimal performance impact.

A new database management approach to standardizing, evaluating, and enforcing SQL Server configurations with Policy-Based Management. This improves DBA productivity by simplifying management. In addition, the policies can scale to hundreds or thousands of servers within the enterprise.

A DBA can leverage standard administrative reports included with the Database Engine to increase the ability to view the health of a SQL Server system.

Performance Studio can be leveraged to troubleshoot, tune, and monitor SQL Server 2008 instances.

New encryption technologies such as Transparent Data Encryption (TDE) can be leveraged to encrypt sensitive data natively without making any application changes.

New auditing mechanisms have been introduced; therefore, accountability and compliance can be achieved for a database.

A DBA can add processors to the SQL Server system on the fly. This minimizes server outages; however, the hardware must support Hot CPU Add functionality.

Resource Governor is a new management node found in SQL Server Management Studio. Resource Governor will give DBAs the potential to manage SQL Server workload and system resource consumption.

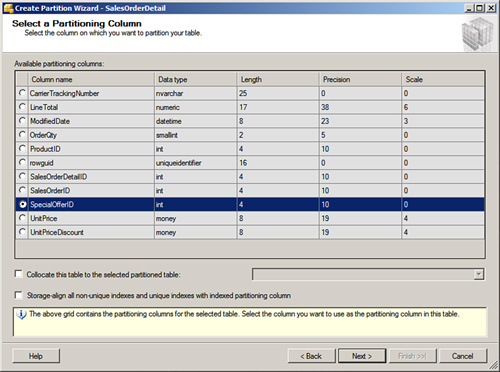

SQL Server 2008 enhances table and index partitioning by introducing an intuitive Create Partition Wizard tool.

SQL Server 2008 and PowerShell are integrated; therefore, DBAs can use Microsoft’s extensible command-line shell to perform administrative SQL Server tasks by execution of cmdlets.

The Database Engine Query Editor now supports IntelliSense. IntelliSense is an autocomplete function that speeds up programming and helps reduce typing errors.

The SQL Server Properties dialog box is the main place where you, as database administrator (DBA), configure server settings specifically tailored toward a SQL Server 2008 Database Engine installation.

You can invoke the Server Properties dialog box for the Database Engine by following these steps:

1. Choose Start, All Programs, Microsoft SQL Server 2008, SQL Server Management Studio.

2. Connect to the Database Engine in Object Explorer.

3. Right-click SQL Server and then select Properties.

The Server Properties dialog box includes eight pages of Database Engine settings that you can view, manage, and configure. The eight Server Properties pages are similar to what was found in SQL Server 2005 and include

General

Memory

Processors

Security

Connections

Database Settings

Advanced

Permissions

Note

Each SQL Server Properties setting can be easily scripted by clicking the Script button. The Script button is available on each Server Properties page. The Script output options available include Script Action to New Query Window, Script Action to a File, Script Action to Clipboard, and Script Action to a Job.

Note

In addition, it is possible to obtain a listing of all the SQL Server configuration settings associated with a Database Engine installation by executing the following query in Query Editor:

SELECT * FROM sys.configurations

ORDER BY name;

GO
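As a complement to the full listing, the following sketch narrows the output to settings whose configured value has not yet taken effect, for example because RECONFIGURE has not been run or a restart is pending. It relies only on columns of the sys.configurations catalog view; treat it as an illustration rather than a required step.

```sql
-- Show settings where the configured value differs from the value in use.
-- is_dynamic = 1 means RECONFIGURE is enough; 0 means a restart is needed.
SELECT name, value, value_in_use, is_dynamic, is_advanced
FROM sys.configurations
WHERE value <> value_in_use
ORDER BY name;
GO
```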

The following sections provide examples and explanations for each page in the SQL Server Properties dialog box.

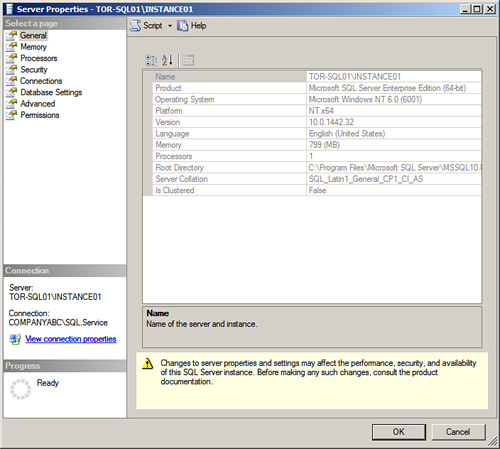

The first Server Properties page, General, includes mostly information pertaining to the SQL Server 2008 installation, as illustrated in Figure 2.1. Here, you can view the following items: SQL Server name; product edition, such as Standard or Enterprise, including whether it is 64-bit; Windows platform, such as Windows Server 2008 or Windows Server 2003; SQL Server version number; language settings; total memory in the server; number of processors; Root Directory; Server Collation; and whether the installation is clustered.
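Much of the information on the General page can also be retrieved with a query. The following is a minimal sketch that uses the built-in SERVERPROPERTY function; the column aliases are chosen here for readability and are not part of the dialog box.

```sql
-- Retrieve installation details similar to those on the General page.
SELECT
    SERVERPROPERTY('ServerName')     AS ServerName,
    SERVERPROPERTY('Edition')        AS Edition,
    SERVERPROPERTY('ProductVersion') AS ProductVersion,
    SERVERPROPERTY('ProductLevel')   AS ProductLevel,
    SERVERPROPERTY('Collation')      AS ServerCollation,
    SERVERPROPERTY('IsClustered')    AS IsClustered;  -- 1 = clustered, 0 = not
GO
```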

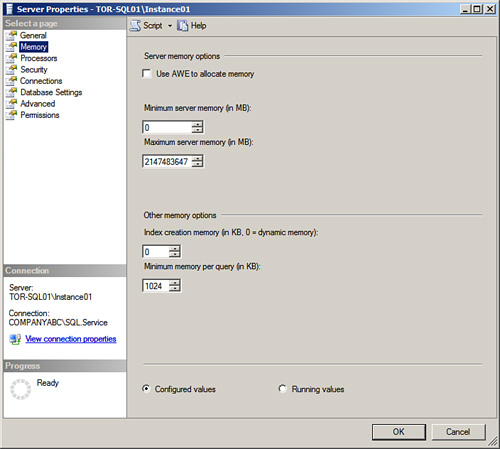

Memory is the second page within the Server Properties dialog box. As shown in Figure 2.2, this page is broken into two sections: Server Memory Options and Other Memory Options. Each section has additional items to configure to manage memory; they are described in the following sections.

The Server Memory options are as follows:

Use AWE to Allocate Memory— If this setting is selected, the SQL Server installation leverages Address Windowing Extensions (AWE) memory. Typically, this setting is no longer needed when running 64-bit systems because they can natively handle more than 4GB of RAM. Therefore, the setting applies only to 32-bit systems.

Minimum Server Memory and Maximum Server Memory— The next items within Memory Options set the minimum and maximum amount of memory allocated to a SQL Server instance. The values are specified in megabytes.

The following Transact-SQL (TSQL) code can be used to configure Server Memory Options. These are advanced options, so show advanced options must be enabled first:

sp_configure 'show advanced options', 1

RECONFIGURE

GO

sp_configure 'awe enabled', 1

RECONFIGURE

GO

sp_configure 'min server memory', <MIN AMOUNT IN MB>

RECONFIGURE

GO

sp_configure 'max server memory', <MAX AMOUNT IN MB>

RECONFIGURE

GO

The second section, Other Memory Options, has two additional memory settings tailored toward index creation and minimum memory per query:

Index Creation Memory— This setting specifies the amount of memory, in kilobytes, that should be used during index creation operations. The default value is 0, which represents dynamic allocation by SQL Server.

Minimum Memory Per Query— This setting specifies the minimum amount of memory, in kilobytes, that should be allocated to a query. The default value is 1024KB.

Note

It is best to let SQL Server dynamically manage both the memory associated with index creation and the memory for queries. However, you can specify values for index creation if you’re creating many indexes in parallel. You should tweak the minimum memory setting per query if many queries are occurring over multiple connections in a busy environment.

Use the following TSQL statements to configure Other Memory Options:
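A minimal sketch of such statements follows, assuming the standard sp_configure option names for these two settings. The values shown are the defaults described above and serve only as placeholders, not as tuning recommendations.

```sql
-- Both settings are advanced options, so expose them first.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- 0 leaves index creation memory under dynamic management by SQL Server.
EXEC sp_configure 'index create memory', 0;
-- Minimum memory per query, in kilobytes (1024 is the default).
EXEC sp_configure 'min memory per query', 1024;
RECONFIGURE;
GO
```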

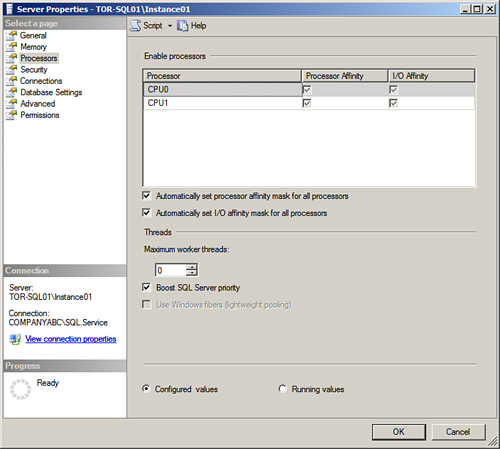

The Processors page, shown in Figure 2.3, should be used to administer or manage any processor-related options for the SQL Server 2008 Database Engine. Options include threads, processor performance, affinity, and parallel or symmetric processing.

Similar to a database administrator, the operating system is constantly multitasking. Therefore, the operating system moves threads between different processors to maximize processing efficiency. The processor options include:

Enable Processors— The two processor options in this section are Processor Affinity and I/O Affinity. Processor Affinity binds SQL Server threads to specific processors during execution. Similarly, the I/O Affinity setting informs SQL Server about which processors can manage disk I/O operations.

Tip

SQL Server 2008 does a great job of dynamically managing and optimizing processor and I/O affinity settings. If you need to manage these settings manually, you should reserve some processors for threading and others for I/O operations. A processor should not be configured to do both.

Automatically Set Processor Affinity Mask for All Processors— If this option is enabled, SQL Server dynamically manages the Processor Affinity Mask and overwrites the existing Affinity Mask settings.

Automatically Set I/O Affinity Mask for All Processors— As with the preceding option, if this option is enabled, SQL Server dynamically manages the I/O Affinity Mask and overwrites the existing Affinity Mask settings.

The following Threads items can be individually managed to assist processor performance:

Maximum Worker Threads— The Maximum Worker Threads setting helps optimize SQL Server performance by controlling thread pooling. Typically, this setting is adjusted for a server hosting many client connections. By default, this value is set to 0. The 0 value represents dynamic configuration because SQL Server determines the number of worker threads to utilize. If this setting will be statically managed, a higher value is recommended for a busy server with a high number of connections. Conversely, a lower number is recommended for a server that is not being heavily utilized and has a small number of user connections. The values to be entered range from 10 to 32,767.

Tip

Microsoft recommends maintaining the Maximum Worker Threads setting at 0 to avoid thread starvation. Thread starvation occurs when incoming client requests are not served in a timely manner due to a small value for this setting. Conversely, a large value can waste address space because each active thread consumes 512KB.

Boost SQL Server Priority— Preferably, SQL Server should be the only application running on the server; therefore, it is recommended to enable this check box. This setting tags the SQL Server threads with a higher priority value of 13 instead of the default 7 for better performance. If other applications are running on the server, performance of those applications could degrade if this option is enabled because those threads have a lower priority. If enabled, it is also possible that resources from essential operating system and network functions may be drained.

Use Windows Fibers (Lightweight Pooling)— This setting offers a means of decreasing the system overhead associated with extreme context switching seen in symmetric multiprocessing environments. Enabling this option provides better throughput by executing the context switching inline.

Note

Enabling fibers is tricky because it has both advantages and disadvantages for performance. The outcome depends on how many processors are running on the server. Typically, performance gains occur if the system is running many CPUs, such as more than 16, whereas performance may decrease if there are only 1 or 2 processors. To ensure the new settings are optimized, it is a best practice to monitor performance counters after changes are made. In addition, this setting is only available when using Windows Server 2003 Enterprise Edition.

These TSQL statements should be used to set processor settings:
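A minimal sketch of such statements follows, assuming the standard sp_configure option names that correspond to the settings on this page. Every value shown is 0, which leaves the setting at its dynamic or disabled state; these are placeholders, not recommendations. Some of these options take effect only after the instance is restarted, which can be checked through the is_dynamic column of sys.configurations.

```sql
-- The processor settings are advanced options, so expose them first.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- 0 lets SQL Server manage processor and I/O affinity dynamically.
EXEC sp_configure 'affinity mask', 0;
EXEC sp_configure 'affinity I/O mask', 0;
-- 0 lets SQL Server determine the number of worker threads.
EXEC sp_configure 'max worker threads', 0;
-- 0 = normal priority; 1 = boost SQL Server priority.
EXEC sp_configure 'priority boost', 0;
-- 0 = thread mode; 1 = Windows fibers (lightweight pooling).
EXEC sp_configure 'lightweight pooling', 0;
RECONFIGURE;
GO
```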

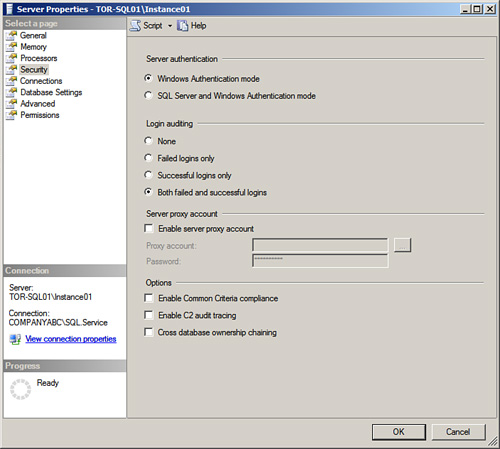

The Security page, shown in Figure 2.4, maintains server-wide security configuration settings. These SQL Server settings include Server Authentication, Login Auditing, Server Proxy Account, and Options.

The first section in the Security page focuses on server authentication. At present, SQL Server 2008 continues to support two modes for validating connections and authenticating access to database resources: Windows Authentication Mode and SQL Server and Windows Authentication Mode. Both of these authentication methods provide access to SQL Server and its resources. SQL Server and Windows Authentication Mode is regularly referred to as mixed mode authentication.

Note

During installation, the default authentication mode is Windows Authentication. The authentication mode can be changed after the installation.

The Windows Authentication Mode setting is the default Authentication setting and is the recommended authentication mode. It leverages Active Directory user accounts or groups when granting access to SQL Server. In this mode, you are given the opportunity to grant domain or local server users access to the database server without creating and managing a separate SQL Server account. It’s worth mentioning that when Windows Authentication Mode is active, user accounts are subject to enterprise-wide policies enforced by the Active Directory domain, such as complex passwords, password history, account lockouts, minimum password length, maximum password length, and the Kerberos protocol. These enhanced and well-defined policies are always a plus to have in place.

The second authentication option is SQL Server and Windows Authentication (Mixed) Mode. This setting uses either Active Directory user accounts or SQL Server accounts when validating access to SQL Server. Starting with SQL Server 2005, Microsoft introduced a means to enforce password and lockout policies for SQL Server login accounts when using SQL Server Authentication.
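The following sketch illustrates both ideas: checking which authentication mode is active, and creating a SQL Server login that is subject to the Windows password and lockout policies. The login name and password are hypothetical placeholders introduced for this example.

```sql
-- 1 = Windows Authentication Mode only; 0 = mixed mode.
SELECT SERVERPROPERTY('IsIntegratedSecurityOnly') AS WindowsAuthOnly;
GO

-- Hypothetical login; CHECK_POLICY and CHECK_EXPIRATION apply the
-- Windows password policy and password expiration to this login.
CREATE LOGIN AppLogin
    WITH PASSWORD = 'Use-A-Str0ng-Passphrase!',
    CHECK_POLICY = ON,
    CHECK_EXPIRATION = ON;
GO
```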

Note

Review the authentication sections in Chapter 8, “Hardening a SQL Server 2008 Implementation,” for more information on authentication modes and which mode should be used as a best practice.

Login Auditing is the focal point of the second section on the Security page. You can choose one of the four Login Auditing options available: None, Failed Logins Only, Successful Logins Only, and Both Failed and Successful Logins.

Tip

When you’re configuring auditing, it is a best practice to capture both failed and successful logins. That way, in the case of a system breach or an audit, you have all the logins captured in an audit file. The drawback to this option is that the log file will grow quickly and will require adequate disk space. If this is not possible, failed logins should be captured as the bare minimum.

You can enable a server proxy account in the Server Proxy section of the Security page. The proxy account provides the security context in which operating system commands are executed, through the impersonation of logins, server roles, and database roles. If you’re using a proxy account, you should configure the account with the fewest privileges needed to perform the task. This bolsters security and reduces the amount of damage if the account is compromised.

Additional security options available in the Options section of the Security page are as follows:

Enable Common Criteria Compliance— When this setting is enabled, SQL Server enforces additional security behavior. Specifically, it enables Residual Information Protection (RIP), makes login statistics available for viewing, and enforces restrictions where, for example, a column-level GRANT cannot override a table-level DENY.

Enable C2 Audit Tracing— When this setting is enabled, SQL Server audits both successful and failed attempts to access statements and objects. The drawback of capturing this audit data is that it can degrade performance and take up disk space. The files are stored in the Data directory associated with the instance of the SQL Server installation.

Cross-Database Ownership Chaining— Enabling this setting allows cross-database ownership chaining at a global level for all databases. Cross-database ownership chaining governs whether objects in one database can be reached through objects in another database without a separate permission check. As a result, this setting should be enabled only when the situation is closely managed because serious security holes could be opened.
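The options above can also be set with sp_configure. The following is a minimal sketch, assuming the standard option names; the values are illustrative only. The two audit-related options are advanced, may depend on the installed edition, and take effect only after a restart, so verify them against sys.configurations before relying on this example.

```sql
-- Expose advanced options so the audit-related settings are visible.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- 1 = enable Common Criteria compliance behavior.
EXEC sp_configure 'common criteria compliance enabled', 1;
-- 1 = enable C2 audit tracing.
EXEC sp_configure 'c2 audit mode', 1;
-- 0 = keep cross-database ownership chaining off server-wide.
EXEC sp_configure 'cross db ownership chaining', 0;
RECONFIGURE;
GO
```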

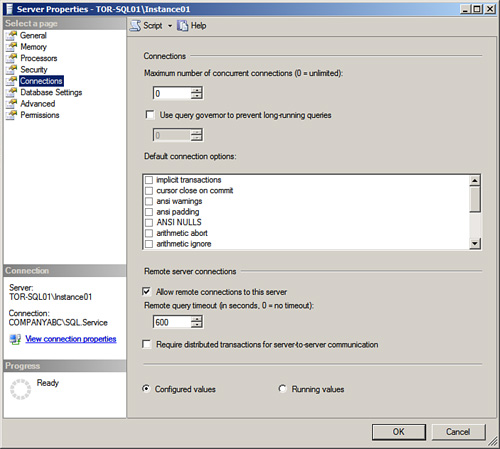

The Connections page, as shown in Figure 2.5, is the place where you examine and configure any SQL Server settings relevant to connections. The Connections page is broken up into two sections: Connections and Remote Server Connections.

The Connections section includes the following settings:

Maximum Number of Concurrent Connections— The first setting determines the maximum number of concurrent connections allowed to the SQL Server Database Engine. The default value is 0, which represents an unlimited number of connections. The value used when configuring this setting is really dictated by the SQL Server hardware such as the processor, RAM, and disk speed.

Use Query Governor to Prevent Long-Running Queries— This setting specifies an upper limit on the time period in which a query can run. SQL Server compares the limit with the estimated cost of each query and does not run queries that are estimated to exceed it.

Default Connection Options— For the final setting, you can choose from approximately 16 advanced connection options that can be either enabled or disabled, as shown in Figure 2.5.

The second section located on the Connections page focuses on Remote Server settings:

Allow Remote Connections to This Server— If enabled, the first option allows remote connections to the specified SQL Server. With SQL Server 2008, this option is enabled by default.

Remote Query Timeout— The second setting is available only if Allow Remote Connections is enabled. This setting governs how long, in seconds, a remote query can run before it times out. The default value is 600; however, the values that can be configured range from 0 to 2,147,483,647. A value of 0 means the query never times out.

Require Distributed Transactions for Server-to-Server Communication— The final setting controls the behavior and protects the transactions between systems by using the Microsoft Distributed Transaction Coordinator (MS DTC).
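The connection settings described above map to sp_configure options. The following is a minimal sketch, assuming the standard option names; each value shown is the default mentioned in the text or the disabled state, and is a placeholder rather than a recommendation.

```sql
-- Some of these options are advanced, so expose them first.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

EXEC sp_configure 'user connections', 0;          -- 0 = unlimited connections
EXEC sp_configure 'query governor cost limit', 0; -- 0 = query governor off
EXEC sp_configure 'remote access', 1;             -- 1 = allow remote connections
EXEC sp_configure 'remote query timeout', 600;    -- seconds; 0 = no timeout
EXEC sp_configure 'remote proc trans', 0;         -- 1 = require MS DTC transactions
RECONFIGURE;
GO
```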

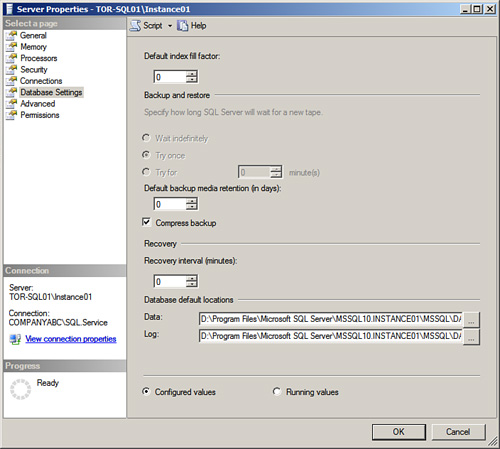

The Database Settings page, shown in Figure 2.6, contains configuration settings that each database within the SQL Server instance will inherit. The choices available on this page are broken out by Fill Factor, Backup and Restore, Recovery, and Database Default Locations.

The Default Index Fill Factor setting specifies how full SQL Server should make each page when a new index is created. The default setting is 0, and the valid range is 0 to 100. The values 0 and 100 are equivalent: both fill the pages completely, leaving no space for subsequent insertions without requiring page splits. Lower values leave free space on each page for growth. A table with all reads typically has a higher fill factor, and a table that is meant for heavy inserts typically has a lower fill factor. A value near 50 can be a reasonable starting point when a table has plenty of reads and writes. This setting is the server-wide default for all indexes within the Database Engine.

For more information on fill factors, refer to Chapter 4, “Managing and Optimizing SQL Server 2008 Indexes” and Chapter 6, “SQL Server 2008 Maintenance Practices.”
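As an illustration, the server-wide default can be set with sp_configure, and an individual index can override it with the FILLFACTOR option. This is a sketch only: the table, column, and index names are hypothetical, and the percentages are arbitrary example values.

```sql
-- The server-wide fill factor is an advanced option.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- Server-wide default, as a percentage (example value).
EXEC sp_configure 'fill factor', 90;
RECONFIGURE;
GO

-- Hypothetical table and index; FILLFACTOR overrides the server default.
CREATE INDEX IX_Orders_CustomerID
    ON dbo.Orders (CustomerID)
    WITH (FILLFACTOR = 80);
GO
```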

The Backup and Restore section of the Database Settings page includes the following settings:

Specify How Long SQL Server Will Wait for a New Tape— The first setting governs the time interval SQL Server will wait for a new tape during a database backup process. The options available are Wait Indefinitely, Try Once, or Try for a specific number of minutes.

Default Backup Media Retention (In Days)— This setting is a system-wide configuration that affects all database backups, including transaction log backups. You enter values for this setting in days, and it dictates how long each backup medium is retained.

Compress Backup— Backup compression is one of the most promising and highly anticipated features of SQL Server 2008 for the DBA. If the Compress Backup system-wide setting is enabled, all new backups associated with the SQL Server instance will be compressed. Keep in mind there is a tradeoff when compressing backups. Space associated with the backup on disk is significantly reduced; however, processor usage increases during the backup compression process. For more information on compressed backups, refer to Chapter 7, “Backing Up and Restoring the SQL Server 2008 Database Engine.”

Note

It is possible to leverage Resource Governor in order to manage the amount of workload associated with the processor when conducting compressed backups. This will ensure that the server does not suffer from excessive processor resource consumption, which eventually leads to performance degradation of the server. For more information on Resource Governor, refer to Chapter 16, “Managing Workloads and Consumption with Resource Governor.”
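The backup settings above can be sketched in TSQL as follows, assuming the standard sp_configure option names. The retention value, database name, and backup path are hypothetical placeholders introduced for this example.

```sql
-- Media retention is an advanced option.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

EXEC sp_configure 'media retention', 14;           -- days (example value)
EXEC sp_configure 'backup compression default', 1; -- 1 = compress new backups
RECONFIGURE;
GO

-- Hypothetical database and path; WITH COMPRESSION requests compression
-- for this backup regardless of the server-wide default.
BACKUP DATABASE SalesDB
    TO DISK = 'D:\Backups\SalesDB.bak'
    WITH COMPRESSION;
GO
```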

The Recovery section of the Database Settings page consists of one setting:

Recovery Interval (Minutes)— This setting influences the amount of time, in minutes, SQL Server will take to recover a database. Recovering a database takes place every time SQL Server is started. During recovery, committed transactions that were not yet written to the data files are rolled forward, and uncommitted transactions are rolled back.
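A minimal sketch of setting the recovery interval with sp_configure follows, assuming the standard option name. The value of 5 minutes is an arbitrary example, not a recommendation.

```sql
-- The recovery interval is an advanced option.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- Target recovery time per database, in minutes (example value).
EXEC sp_configure 'recovery interval', 5;
RECONFIGURE;
GO
```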

Options available in the Database Default Locations section are as follows:

Data and Log— The two folder paths for Data and Log placement specify the default location for all database data and log files. Click the ellipsis button on the right side to change the default folder location.

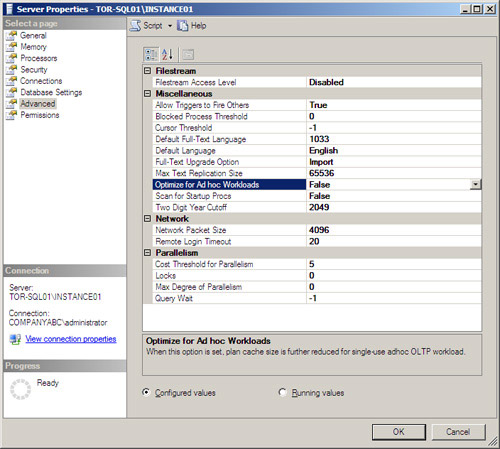

The Advanced Page, shown in Figure 2.7, contains the SQL Server general settings that can be configured.

FILESTREAM is a new storage methodology introduced with SQL Server 2008. Only one item can be configured in the Filestream section of the Advanced page.

Filestream Access Level— This setting determines how the SQL Server instance supports FILESTREAM, which allows unstructured data to be stored in the file system. The global server options associated with FILESTREAM configuration include:

Disabled— The Disabled setting does not allow Binary Large Object (BLOB) data to be stored in the file system.

Transact-SQL Access Enabled— FILESTREAM data is accessed only by Transact-SQL and not by the file system.

Full Access Enabled— FILESTREAM data is accessed by both Transact-SQL and the file system.
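The three access levels map to the values 0, 1, and 2 of the `filestream access level` configuration option. As a rough sketch, the following TSQL requests full access and then checks the level that is actually in effect. It assumes FILESTREAM has already been enabled for the service in SQL Server Configuration Manager; otherwise the effective level may be lower than the configured one.

```sql
-- 0 = Disabled, 1 = Transact-SQL access, 2 = Full access
EXEC sp_configure 'filestream access level', 2;
RECONFIGURE;
GO

-- Compare the configured level with the level currently in effect
SELECT SERVERPROPERTY('FilestreamConfiguredLevel') AS ConfiguredLevel,
       SERVERPROPERTY('FilestreamEffectiveLevel')  AS EffectiveLevel;
```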

Options available in the Miscellaneous section of the Advanced page are as follows:

Allow Triggers to Fire Others— If this setting is configured to True, triggers can execute other triggers. In addition, the nesting level can be up to 32 levels. The values are either True or False.

Blocked Process Threshold— The threshold, in seconds, at which blocked process reports are generated. Valid values range from 0 to 86,400.

Cursor Threshold— This setting specifies the number of rows in the cursor set at which cursor keysets are generated asynchronously. A value of -1, the default, generates all keysets synchronously, and a value of 0 generates all cursor keysets asynchronously.

Default Full-Text Language— This setting specifies the language to be used for full-text columns. The default language is based on the language specified during the SQL Server instance installation.

Default Language— This setting is also inherited from the language used during the installation of SQL Server. It controls the default language for new logins.

Full-Text Upgrade Option— Controls how full-text indexes are migrated when upgrading a database. The options are Import, Rebuild, and Reset.

Max Text Replication Size— This global setting dictates the maximum size of text and image data that can be added to a replicated column in a single statement. The measurement is in bytes.

Optimize for Ad Hoc Workloads— This setting is set to False by default. If set to True, this setting will improve the efficiency of the plan cache for ad hoc workloads.

Scan for Startup Procs— The configuration values are either True or False. If the setting is configured to True, SQL Server allows stored procedures that are configured to run at startup to fire.

Two Digit Year Cutoff— This setting indicates the uppermost year that can be specified as a two-digit year. Additional years must be entered as a four-digit number.
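The Miscellaneous settings can also be scripted. The following sketch changes two of them with `sp_configure` and then confirms the running values; the threshold of 20 seconds is an illustrative assumption, not a recommendation.

```sql
-- Both options below are advanced options
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- Cache only a small plan stub the first time an ad hoc batch is compiled
EXEC sp_configure 'optimize for ad hoc workloads', 1;
-- Generate a blocked process report after 20 seconds of blocking (illustrative)
EXEC sp_configure 'blocked process threshold', 20;
RECONFIGURE;
GO

-- Verify the configured and running values
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = 'optimize for ad hoc workloads'
   OR name LIKE 'blocked process threshold%';
```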

Options available in the Network section of the Advanced page are as follows:

Network Packet Size— This setting dictates the size of packets being transmitted over the network. The default size is 4096 bytes and is sufficient for most SQL Server network operations.

Remote Login Timeout— This setting determines the amount of time, in seconds, SQL Server will wait before timing out a remote login. The default is 20 seconds, and a value of 0 represents an infinite wait.

Options available in the Parallelism section of the Advanced page are as follows:

Cost Threshold for Parallelism— This setting specifies the threshold above which SQL Server creates and runs parallel plans for queries. The cost refers to an estimated elapsed time in seconds required to run the serial plan on a specific hardware configuration. Set this option only on symmetric multiprocessors. For more information, search for “cost threshold for parallelism option” in SQL Server Books Online.

Locks— The default for this setting is 0, which indicates that SQL Server is dynamically managing locking. Otherwise, you can enter a numeric value that sets the maximum number of locks available.

Max Degree of Parallelism— This setting limits the number of processors (up to a maximum of 64) that can be used in a parallel plan execution. The default value of 0 uses all available processors, whereas a value of 1 suppresses parallel plan generation altogether. A number greater than 1 restricts the maximum number of processors that a single query execution can use. If a value greater than the number of available processors is specified, however, the actual number of available processors is used. For more information, search for “max degree of parallelism option” in SQL Server Books Online.

Query Wait— This setting indicates the time in seconds a query will wait for resources before timing out.
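As a brief sketch, the parallelism settings can be adjusted for the whole instance with `sp_configure` and overridden for a single statement with a query hint. The values 25 and 4 are illustrative assumptions; appropriate values depend on the hardware and workload.

```sql
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- Consider parallel plans only for queries with an estimated cost above 25
EXEC sp_configure 'cost threshold for parallelism', 25;
-- Allow at most 4 processors per parallel plan
EXEC sp_configure 'max degree of parallelism', 4;
RECONFIGURE;
GO

-- Override the instance setting for one statement: force a serial plan
SELECT COUNT(*) AS ObjectCount
FROM sys.objects
OPTION (MAXDOP 1);
```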

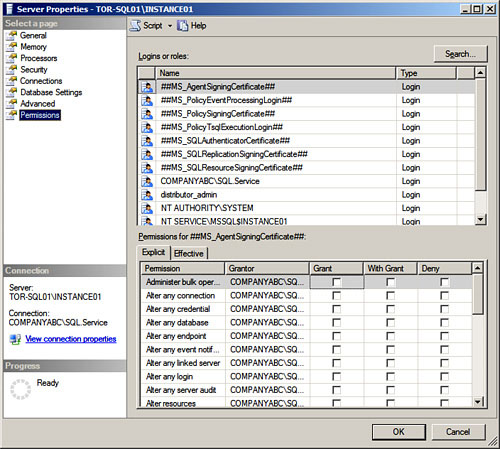

The Permissions Page, as shown in Figure 2.8, includes all the authorization logins and permissions for the SQL Server instance. You can create and manage logins and/or roles within the first section. The second portion of this page displays the Explicit and Effective permissions based on the login or role.

For more information on permissions and authorization to the SQL Server 2008 Database Engine, refer to Chapter 9, “Administering SQL Server Security and Authorization.”
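The information on the Permissions page can also be approximated with the security catalog views. The following sketch lists explicit server-level permissions per login or role, and then the effective server-level permissions of the current connection.

```sql
-- Explicit server-level permissions, by grantee
SELECT pr.name AS grantee,
       pr.type_desc,
       pe.permission_name,
       pe.state_desc
FROM sys.server_permissions AS pe
JOIN sys.server_principals  AS pr
     ON pe.grantee_principal_id = pr.principal_id
ORDER BY pr.name, pe.permission_name;

-- Effective server-level permissions of the current login
SELECT permission_name
FROM fn_my_permissions(NULL, 'SERVER');
```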

After you configure the SQL Server properties, you must manage the SQL Server Database Engine folders and understand how the settings should be configured. The SQL Server folders contain an abundant number of configuration settings that need to be managed on an ongoing basis. The main SQL Server Database Engine top-level folders, as shown in Figure 2.9, are as follows:

Databases

Security

Server Objects

Replication

Management

Each folder can be expanded, which leads to more subfolders and thus more management of settings. The following sections discuss the folders within the SQL Server tree, starting with the Databases folder.

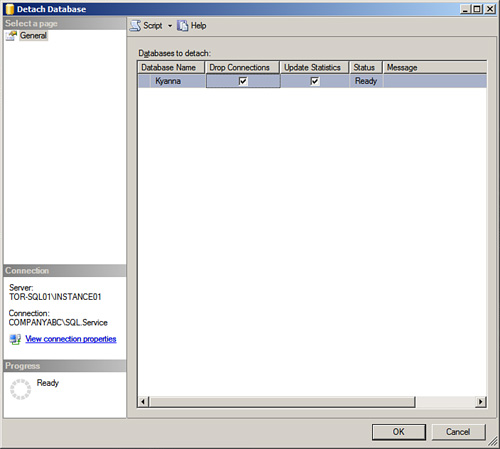

The Databases folder is the main location for administering system and user databases. Management tasks that can be conducted by right-clicking the Databases folder consist of creating new databases, attaching databases, restoring databases, and creating custom reports.

The Databases folder contains subfolders as a repository for items such as system databases, database snapshots, and user databases. When a database is expanded, it has a predefined subfolder structure that includes configuration settings for that specific database. The database structure is as follows: Database Diagrams, Tables, Views, Synonyms, Programmability, Service Broker, Storage, and Security.

Let’s start by examining the top-level folders and then the subfolders in subsequent sections.

The System Databases subfolder is the first folder within the Database tree. It consists of all the system databases that make up SQL Server 2008. The system databases consist of

Master Database— The master database is an important system database in SQL Server 2008. It houses all system-level data, including system configuration settings, login information, disk space, stored procedures, linked servers, and the existence of other databases, along with other crucial information.

Model Database— The model database serves as a template for creating new databases in SQL Server 2008. The data residing in the model database is commonly applied to a new database with the Create Database command. In addition, the tempdb database is re-created with the help of the model database every time SQL Server 2008 is started.

Msdb Database— Used mostly by the SQL Server Agent, the msdb database stores alerts, scheduled jobs, and operators. In addition, it stores historical information on backups and restores, SQL Mail, and Service Broker.

Tempdb— The tempdb database holds temporary information, including tables, stored procedures, objects, and intermediate result sets. Each time SQL Server is started, the tempdb database starts with a clean copy.

TIP

It is a best practice to conduct regular backups on the system databases. In addition, if you want to increase performance and response times, it is recommended to place the tempdb data and transaction log files on different volumes from the operating system drive. Finally, if you don’t need to restore the system databases to the point of failure, you can set all recovery models for the system databases to Simple.
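As a small sketch of the recovery model advice in this tip, the following TSQL reports the current recovery model of each system database and switches model and msdb to Simple. Keep in mind that the model database is the template for new databases, so new databases would inherit the Simple recovery model after this change.

```sql
-- Review the current recovery model of the system databases
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name IN (N'master', N'model', N'msdb', N'tempdb');

-- Switch to the Simple recovery model where point-of-failure restores are not needed
ALTER DATABASE model SET RECOVERY SIMPLE;
ALTER DATABASE msdb  SET RECOVERY SIMPLE;
```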

The second top-level folder under Databases is Database Snapshots. A snapshot allows you to create a point-in-time, read-only, static view of a database. Typical scenarios for which organizations use snapshots consist of running reporting queries, reverting a database to its state when the snapshot was created in the event of an error, and safeguarding data by creating a snapshot before large bulk inserts occur. All database snapshots are created with TSQL syntax and not through Management Studio.

For more information on creating and restoring a database snapshot, view the database snapshot sections in Chapter 7.
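Because snapshots cannot be created through Management Studio, a short TSQL sketch may help. The logical file name `AdventureWorks2008_Data`, the snapshot name, and the file path below are assumptions for illustration; the logical name must match the data file of the source database, which can be looked up in `sys.database_files`.

```sql
-- Look up the logical data file name(s) of the source database
SELECT name
FROM AdventureWorks2008.sys.database_files
WHERE type_desc = 'ROWS';
GO

-- Create the snapshot (logical name and path are assumed placeholders)
CREATE DATABASE AdventureWorks2008_Snapshot
ON ( NAME = AdventureWorks2008_Data,
     FILENAME = N'C:\Snapshots\AdventureWorks2008_Data.ss' )
AS SNAPSHOT OF AdventureWorks2008;
GO

-- Revert the source database to the state captured by the snapshot
USE master;
GO
RESTORE DATABASE AdventureWorks2008
FROM DATABASE_SNAPSHOT = 'AdventureWorks2008_Snapshot';
GO
```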

The rest of the subfolders under the top-level Database folder are all the user databases. The user database is a repository for all aspects of a database, including administration, management, and programming. Each user database running within the Database Engine shows up as a separate subfolder. From within the User Database folder, you can conduct the following tasks: backup, restore, take offline, manage database storage, manage properties, manage database authorization, encryption, shrink, and configure log shipping or database mirroring. In addition, from within this folder, programmers can create the database schema, including tables, views, constraints, and stored procedures.

The second top-level folder in the SQL Server instance tree, Security, is a repository for all the Database Engine securable items meant for managing authorization. The sublevel Security Folders consist of

Logins— This subfolder is used for creating and managing access to the SQL Server Database Engine. A login can be created based on a Windows or SQL Server account. In addition, it is possible to configure password policies, server role and user mapping access, and permission settings.

Server Roles— SQL Server 2008 leverages the role-based model for granting authorization to the SQL Server 2008 Database Engine. Predefined SQL Server Roles already exist when SQL Server is deployed. These predefined roles should be leveraged when granting access to SQL Server and databases.

Credentials— Credentials are used when there is a need to provide SQL Server authentication users an identity outside SQL Server. The principal reasons for creating credentials are to execute code in assemblies and to provide SQL Server access to a domain resource.

Cryptographic Providers— The Cryptographic Providers subfolder is used for managing encryption keys associated with encrypting elements within SQL Server 2008. For more information on Cryptographic Providers and SQL Server 2008 encryption, reference Chapter 11, “Encrypting SQL Server Data and Communications.”

Audits and Server Audit Specifications— SQL Server 2008 introduces enhanced auditing mechanisms, which make it possible to create customized audits of events residing in the database engine. These subfolders are used for creating, managing, storing, and viewing audits in SQL Server 2008. For more information on creating and managing audits including server audit specifications, refer to Chapter 17, “Monitoring SQL Server 2008 with Native Tools.”
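The Logins and Server Roles tasks can be scripted as well. The following is a minimal sketch that creates a SQL Server login subject to the Windows password policy and adds it to a predefined server role. The login name and password are hypothetical placeholders.

```sql
-- Create a SQL Server login that enforces the password policy and expiration
CREATE LOGIN SampleAppLogin
WITH PASSWORD = N'Repl@ce-This-Passw0rd',   -- placeholder; choose your own
     CHECK_POLICY = ON,
     CHECK_EXPIRATION = ON;
GO

-- Add the login to the predefined dbcreator server role
EXEC sp_addsrvrolemember @loginame = N'SampleAppLogin',
                         @rolename = N'dbcreator';
GO
```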

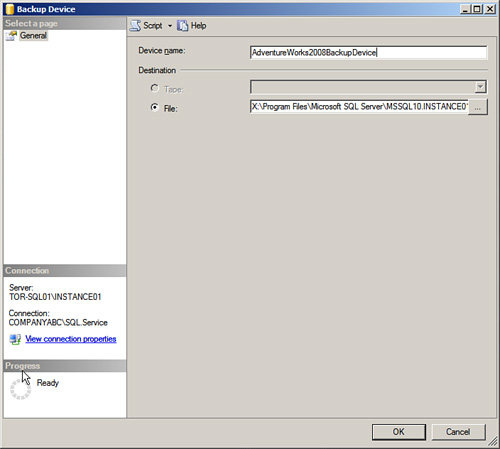

The third top-level folder located in Object Explorer is called Server Objects. Here, you create backup devices, endpoints, linked servers, and triggers.

Backup devices are a component of the backup and restore process when working with user databases. Unlike the earlier versions of SQL Server, backup devices are not needed; however, they provide a great way to manage all the backup data and transaction log files for a database under one file and location.

To create a backup device, follow these steps:

1. Choose Start, All Programs, Microsoft SQL Server 2008, SQL Server Management Studio.

2. In Object Explorer, connect to the Database Engine, expand the desired server, and then expand the Server Objects folder.

3. Right-click the Backup Devices folder and select New Backup Device.

4. In the Backup Device dialog box, specify a Device Name and enter the destination file path, as shown in Figure 2.10. Click OK to complete this task.

This TSQL syntax can also be used to create the backup device:

```sql
USE [master]
GO

-- Register a disk-based backup device under a logical name
EXEC master.dbo.sp_addumpdevice @devtype = N'disk',
    @logicalname = N'Rustom''s Backup Device',
    @physicalname = N'C:\Rustom''s Backup Device.bak'
GO
```

For more information on using backup devices and step-by-step instructions on backing up and restoring the Database Engine, refer to Chapter 7.

To connect to a SQL Server instance, applications must use a specific port that SQL Server has been configured to listen on. In the past, the authentication process and handshake agreement were challenged by the security industry as not being robust or secure. Therefore, SQL Server uses a concept called endpoints to strengthen the communication security process.

The Endpoints folder residing under the Server Objects folder is a repository for all the endpoints created within a SQL Server instance. The endpoints are grouped into system endpoints, database mirroring, service broker, Simple Object Access Protocol (SOAP), and TSQL.

The endpoint creation and specified security options for Database Mirroring endpoints are covered in Chapter 13.
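The endpoints shown in the Endpoints folder can also be listed with the `sys.endpoints` catalog view. This simple query is offered as a sketch for reviewing the protocol, type, and state of each endpoint on an instance.

```sql
-- List every endpoint defined on the instance
SELECT name,
       protocol_desc,   -- for example TCP, HTTP, or SHARED_MEMORY
       type_desc,       -- for example TSQL, SOAP, SERVICE_BROKER, or DATABASE_MIRRORING
       state_desc       -- STARTED, STOPPED, or DISABLED
FROM sys.endpoints
ORDER BY type_desc, name;
```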

As the enterprise scales, more and more SQL Server 2008 servers are introduced into an organization’s infrastructure. As this occurs, you are challenged to provide a means to allow distributed transactions and queries between different SQL Server instances. Linked servers provide a way for organizations to overcome these hurdles by providing the means of distributed transactions, remote queries, and remote stored procedure calls between separate SQL Server instances or non–SQL Server sources such as Microsoft Access.

Follow these steps to create a linked server with SQL Server Management Studio (SSMS):

1. In Object Explorer, first connect to the Database Engine, expand the desired server, and then expand the Server Objects Folder.

2. Right-click the Linked Servers folder and select New Linked Server.

3. The New Linked Server dialog box contains three pages of configuration settings: General, Security, and Server Options. On the General Page, specify a linked server name, and select the type of server to connect to. For example, the remote server could be a SQL Server or another data source. For this example, select SQL Server.

4. The next page focuses on security and includes configuration settings for the security context mapping between the local and remote SQL Server instances. On the Security page, first click Add and enter the local login user account to be used. Second, either impersonate the local account, which will pass the username and password to the remote server, or enter a remote user and password.

5. Still within the Security page, enter an option for a security context pertaining to the external login that is not defined in the previous list. The following options are available:

Not Be Made— Indicates that a connection will not be made for logins that are not already listed.

Be Made Without a User’s Security Context— Indicates that a connection will be made without using a user’s security context for connections.

Be Made Using the Login’s Current Security Context— Indicates that a connection will be made by using the current security context of the login.

Be Made Using This Security Context— Indicates that a connection will be made by providing the login and password security context.

6. On the Server Options page, you can configure additional connection settings. Make any desired server option changes and click OK.
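The steps above can be approximated in TSQL with the `sp_addlinkedserver` and `sp_addlinkedsrvlogin` system stored procedures. In this sketch, `REMOTESQL01` is a hypothetical remote SQL Server name, and the login mapping corresponds to the Be Made Using the Login’s Current Security Context option.

```sql
-- Register the remote SQL Server instance as a linked server
EXEC master.dbo.sp_addlinkedserver
     @server     = N'REMOTESQL01',   -- hypothetical server name
     @srvproduct = N'SQL Server';
GO

-- Map all local logins to connect with their own security context
EXEC master.dbo.sp_addlinkedsrvlogin
     @rmtsrvname = N'REMOTESQL01',
     @useself    = N'True',
     @locallogin = NULL;
GO

-- Test the linked server with a four-part name query
SELECT name
FROM [REMOTESQL01].master.sys.databases;
```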

Replication is a means of distributing data among SQL Server instances. Peer-to-peer replication can also be used as a form of high availability and for offloading reporting queries from a production server to a second instance of SQL Server. When administering and managing replication, you conduct all the replication tasks from within this Replication folder. Tasks include configuring the distributor, creating publications, creating local subscriptions, and launching the Replication Monitor for troubleshooting and monitoring.

Administering, managing, and monitoring replication can be reviewed in Chapter 15, “Implementing and Managing SQL Server Replication.”

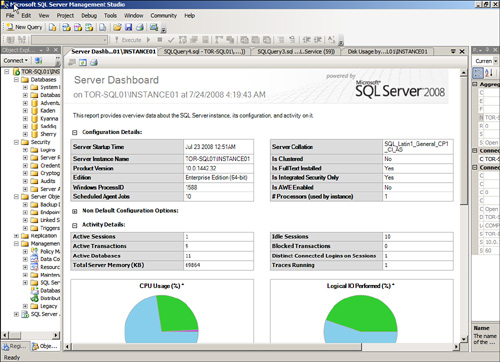

The Management folder contains a plethora of old and new elements used to administer SQL Server management tasks. The majority of the topics in the upcoming bullets are covered in dedicated chapters, as the topics and content are very large. The subfolders found in the Management folder consist of the following:

Policy Management— A new feature in SQL Server 2008, Policy Management allows DBAs to create policies in order to control and manage the behavior and settings associated with one or more SQL Server instances. Policy Based Management ensures that a system conforms to usage and security practices of an organization and its industry by constantly monitoring the surface area of a SQL Server system, database, and/or objects. To effectively establish and monitor policies for a SQL Server environment, review Chapter 10, “Administering Policy Based Management.”

Data Collection— Data Collection is the second element in the Management folder. It is the main place for DBAs to manage all aspects associated with the new SQL Server 2008 feature, Performance Studio. Performance Studio is an integrated framework that allows database administrators the opportunity for end-to-end collection of data from one or more SQL Server systems into a centralized data warehouse. The collected data can be used to analyze, troubleshoot, and store SQL Server diagnostic information. To further understand how to administer Performance Studio, data collections, and the central repository and management reports, review Chapter 17.

Resource Governor— Resource Governor is the paramount new feature included with the release of SQL Server 2008. It can be used in a variety of ways to monitor resource consumption and manage the workloads of a SQL Server system. By leveraging Resource Governor and defining the amount of resources a workload can use, it is possible to establish a SQL Server environment that allows many workloads to run on a server, without the fear of one specific workload cannibalizing the system. For more information on managing Resource Governor, refer to Chapter 16.

Maintenance Plans— The Maintenance Plan subfolder includes an arsenal of tools tailored toward automatically sustaining a SQL Server implementation. DBAs can conduct routine maintenance on one or more databases by creating a maintenance plan either manually or by using a wizard. Some of these routine database tasks involve rebuilding indexes, checking database integrity, updating index statistics, and performing internal consistency checks and backups. For more information on conducting routine maintenance, review Chapter 6.

SQL Server Logs— The SQL Server Logs subfolder is typically the first line of defense when analyzing issues associated with a SQL Server instance. From within this subfolder, it is possible to configure logs, view SQL Server logs, and view Windows Logs. By right-clicking the SQL Server Logs folder, you have the option to limit the number of error logs before they are recycled. The default value is 6; however, a value from 6 to 99 can be selected. The logs are displayed in a hierarchical fashion with the Current log listed first.

Database Mail— The Database Mail folder should be leveraged to configure SQL Server email messages using the SMTP protocol. Management tasks include configuring mail system parameters, creating mail accounts, administering profiles, and mail security. For more information on managing Database Mail, see Chapter 17.

Distributed Transaction Coordinator— There isn’t much to manage; however, the Distributed Transaction Coordinator (DTC) provides status on the DTC service from within SSMS. Although status is presented, such as running or stopped, the DTC service must be managed with the Services snap-in included with Windows Server 2008.

Legacy— The Legacy subfolder provides a means of managing legacy elements that SQL Server 2008 still supports and has not yet decommissioned. Typically, these elements are pre–SQL Server 2005 and include Database Maintenance Plans, Data Transformation Services, and SQL Mail.

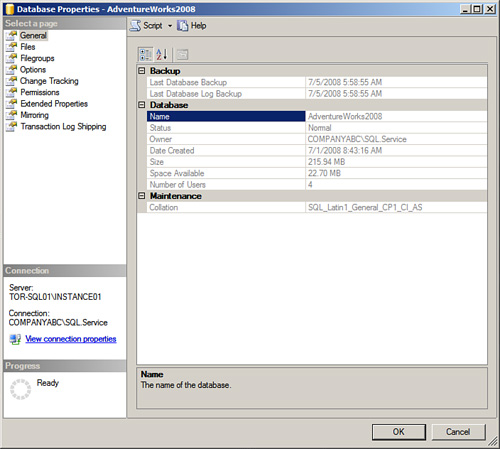

The Database Properties dialog box is the place where you manage the configuration options and values of a user or system database. You can execute additional tasks from within these pages, such as database mirroring and transaction log shipping. The configuration pages in the Database Properties dialog box include

General

Files

Filegroups

Options

Change Tracking

Permissions

Extended Properties

Mirroring

Transaction Log Shipping

The upcoming sections describe each page and setting in its entirety. To invoke the Database Properties dialog box, perform the following steps:

1. Choose Start, All Programs, Microsoft SQL Server 2008, SQL Server Management Studio.

2. In Object Explorer, first connect to the Database Engine, expand the desired server, and then expand the Databases folder.

3. Select a desired database such as AdventureWorks2008, right-click, and select Properties. The Database Properties dialog box is displayed, with all the pages listed in the left pane.

General, the first page in the Database Properties dialog box, displays information exclusive to backups, database settings, and collation settings. Specific information displayed includes

Last Database Backup

Last Database Log Backup

Database Name

State of the Database Status

Database Owner

Date Database Was Created

Size of the Database

Space Available

Number of Users Currently Connected to the Database

Collation Settings

You should use this page for obtaining information about a database, as displayed in Figure 2.11.
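Much of the same information can also be retrieved with a query. The following is a minimal sketch, assuming the AdventureWorks2008 sample database is installed and that backup history is still present in msdb; the column aliases are illustrative only:

```sql
USE [AdventureWorks2008]
GO
-- Name, status, owner, creation date, and collation of the database
SELECT name,
       state_desc,
       SUSER_SNAME(owner_sid) AS database_owner,
       create_date,
       collation_name
FROM sys.databases
WHERE name = N'AdventureWorks2008'
GO
-- Most recent full ('D') and transaction log ('L') backups recorded in msdb
SELECT type, MAX(backup_finish_date) AS last_backup
FROM msdb.dbo.backupset
WHERE database_name = N'AdventureWorks2008'
  AND type IN ('D', 'L')
GROUP BY type
GO
-- Database size and unallocated space for the current database
EXEC sp_spaceused
GO
```

If no rows come back from the backup query, the database has probably not been backed up on this instance or the backup history has been purged.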

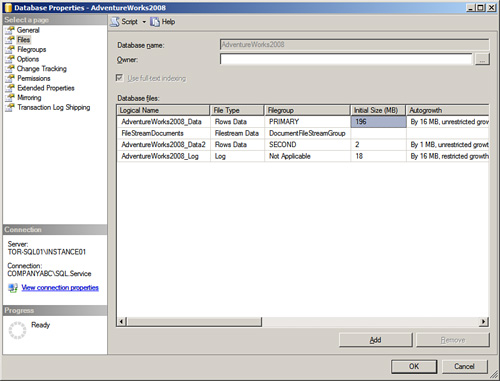

The second Database Properties page is called Files. Here you can change the owner of the database, enable full-text indexing, and manage the database files, as shown in Figure 2.12.

The Files page is used to configure settings pertaining to database files and transaction logs. You will spend time working in the Files page when initially rolling out a database and conducting capacity planning. Following are the settings you’ll see:

Data and Log File Types— A SQL Server 2008 database is composed of two types of files: data and log. Each database has at least one data file and one log file. When you’re scaling a database, it is possible to create more than one data and one log file. If multiple data files exist, the first data file in the database has the extension *.mdf and subsequent data files maintain the extension *.ndf. In addition, all log files use the extension *.ldf.

Tip

To reduce disk contention, many database experts recommend creating multiple data files. The database catalog and system tables should be stored in the primary data file, and all other data, objects, and indexes should be stored in secondary files. In addition, the data files should be spread across multiple disk systems or Logical Unit Numbers (LUNs) to increase I/O performance.

Filegroups— When you’re working with multiple data files, it is possible to create filegroups. A filegroup allows you to logically group database objects and files together. The default filegroup, known as the Primary Filegroup, maintains all the system tables and data files not assigned to other filegroups. Subsequent filegroups need to be created and named explicitly.

Initial Size in MB— This setting indicates the preliminary size of a database or transaction log file. You can increase the size of a file by modifying this value to a higher number in megabytes.

Autogrowth— This feature enables you to manage the file growth of both the data and transaction log files. When you click the ellipsis button, a Change Autogrowth dialog box appears. The configurable settings include whether to enable autogrowth, and if autogrowth is selected, whether autogrowth should occur based on a percentage or in a specified number of megabytes. The final setting is whether to choose a maximum file size for each file. The two options available are Restricted File Growth (MB) or Unrestricted File Growth.

Tip

When you’re allocating space for the first time to both data files and transaction log files, it is a best practice to conduct capacity planning, estimate the amount of space required for the operation, and allocate a specific amount of disk space from the beginning. It is not a recommended practice to rely on the autogrowth feature because constantly growing and shrinking the files typically leads to excessive fragmentation and, in turn, performance degradation.

Note

Database Files and RAID Sets—Database files should reside only on RAID sets to provide fault tolerance and availability, while at the same time increasing performance. If cost is not an issue, data files and transaction logs should be placed on RAID 1+0 volumes. RAID 1+0 provides the best availability and performance because it combines mirroring with striping. However, if this is not a possibility due to budget, data files should be placed on RAID 5 and transaction logs on RAID 1.
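The file settings described above can also be reviewed with a query rather than through the Files page. This is a minimal sketch, assuming the AdventureWorks2008 sample database; the column aliases are illustrative only:

```sql
USE [AdventureWorks2008]
GO
-- One row per data or log file in the current database
SELECT df.name          AS logical_name,
       df.type_desc     AS file_type,       -- ROWS (data) or LOG
       fg.name          AS filegroup_name,  -- NULL for log files
       df.physical_name,
       df.size * 8 / 1024 AS size_mb,       -- size is stored in 8KB pages
       df.growth,                           -- percent or 8KB pages, see next column
       df.is_percent_growth,
       df.max_size                          -- -1 means unrestricted growth
FROM sys.database_files AS df
LEFT JOIN sys.filegroups AS fg
       ON df.data_space_id = fg.data_space_id
GO
```

The LEFT JOIN is used because transaction log files do not belong to any filegroup.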

Perform the following steps to increase the data file for the AdventureWorks2008 database using SSMS:
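The same change can also be scripted. The following is a minimal sketch, assuming the logical name of the primary data file is AdventureWorks2008_Data and using a hypothetical target size of 250MB; the new size must be larger than the current size of the file:

```sql
USE [master]
GO
-- Logical file name and target size are assumptions for illustration;
-- check sys.database_files for the actual logical name and current size.
ALTER DATABASE [AdventureWorks2008]
MODIFY FILE (NAME = N'AdventureWorks2008_Data', SIZE = 250MB)
GO
```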

Perform the following steps to create a new filegroup and files using the AdventureWorks2008 database with both SSMS and TSQL:

1. In Object Explorer, right-click the AdventureWorks2008 database and select Properties.

2. Select the Filegroups page in the Database Properties dialog box.

3. Click the Add button to create a new filegroup.

4. When a new row appears, enter the name of the new filegroup and enable the option Default.

Alternatively, you can use the following TSQL script to create the new filegroup for the AdventureWorks2008 database:

USE [master]

GO

ALTER DATABASE [AdventureWorks2008] ADD FILEGROUP [SecondFileGroup]

GO

Now that you’ve created a new filegroup, you can create two additional data files for the AdventureWorks2008 database and place them in the newly created filegroup:

1. In Object Explorer, right-click the AdventureWorks2008 database and select Properties.

2. Select the Files page in the Database Properties dialog box.

3. Click the Add button to create new data files.

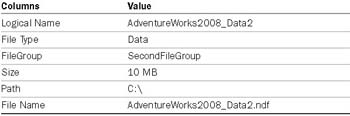

4. In the Database Files section, enter the following information in the appropriate columns:

5. Click OK.

Note

For simplicity, the file path for the new database file is located in the root of the C: drive for this example. In production environments, however, you should place additional database files on separate volumes to maximize performance.

It is possible to conduct the same steps by executing the following TSQL syntax to create a new data file:

USE [master]

GO

ALTER DATABASE [AdventureWorks2008]

ADD FILE (NAME = N'AdventureWorks2008_Data2',

FILENAME = N'C:\AdventureWorks2008_Data2.ndf',

SIZE = 10240KB , FILEGROWTH = 1024KB )

TO FILEGROUP [SecondFileGroup]

GO

Next, to configure autogrowth on the database file, follow these steps:

1. From within the File page on the Database Properties dialog box, click the ellipsis button located in the Autogrowth column on a desired database file to configure it.

2. In the Change Autogrowth dialog box, configure the File Growth and Maximum File Size settings and click OK.

3. Click OK in the Database Properties dialog box to complete the task.

You can use the following TSQL syntax to modify the Autogrowth settings for a database file based on a growth rate at 50% and a maximum file size of 1000MB:
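A minimal sketch of such a script follows, assuming the logical file name AdventureWorks2008_Data2 from the earlier ADD FILE example. Each property is changed in its own statement, which is a conservative choice rather than a requirement taken from this chapter:

```sql
USE [master]
GO
-- Grow the file by 50 percent of its current size each time it fills
ALTER DATABASE [AdventureWorks2008]
MODIFY FILE (NAME = N'AdventureWorks2008_Data2', FILEGROWTH = 50%)
GO
-- Cap the file at a maximum size of 1000MB
ALTER DATABASE [AdventureWorks2008]
MODIFY FILE (NAME = N'AdventureWorks2008_Data2', MAXSIZE = 1000MB)
GO
```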

Before SQL Server 2008, organizations had to invent their own mechanisms for storing unstructured data. SQL Server 2008 introduces FILESTREAM, a new file type that allows organizations to store unstructured data such as bitmap images, music files, text files, videos, and audio files in a single data type, which is more secure and manageable.

From an internal perspective, FILESTREAM creates a bridge between the Database Engine and the NTFS filesystem included with Windows Server. It stores varbinary(max) binary large object (BLOB) data as files on the filesystem, and Transact-SQL can be leveraged to interact with the filesystem by supporting inserts, updates, queries, search, and backup of FILESTREAM data. FILESTREAM will be covered in upcoming sections of this chapter.

As stated previously, filegroups are a great way to organize data objects, address performance issues, and minimize backup times. The Filegroup page is best used for viewing existing filegroups, creating new ones, marking filegroups as read-only, and configuring which filegroup will be the default.

To improve performance, you can create subsequent filegroups and place data files, FILESTREAM data, and indexes onto them. Transaction log files are managed separately and never belong to a filegroup. In addition, if there isn’t enough physical storage available on a volume, you can create a new filegroup and physically place its files on a different volume, or on a different LUN if a Storage Area Network (SAN) is being used.

Finally, if a database has static data, it is possible to move this data to a specified filegroup and mark this filegroup as read-only. This can minimize backup times: because the data does not change, the read-only filegroup can be backed up once and then left out of routine backups, for example by using partial backups.
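The default and read-only settings can also be applied with TSQL. This is a minimal sketch: SecondFileGroup is the filegroup created earlier in this chapter, while ArchiveFileGroup is a hypothetical name standing in for a filegroup that holds static data:

```sql
USE [master]
GO
-- Make SecondFileGroup the default for newly created objects.
-- The filegroup must already contain at least one data file.
ALTER DATABASE [AdventureWorks2008]
MODIFY FILEGROUP [SecondFileGroup] DEFAULT
GO
-- Mark a filegroup holding static data as read-only.
-- [ArchiveFileGroup] is a hypothetical name; this change needs
-- exclusive access to the database while it runs.
ALTER DATABASE [AdventureWorks2008]
MODIFY FILEGROUP [ArchiveFileGroup] READ_ONLY
GO
```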

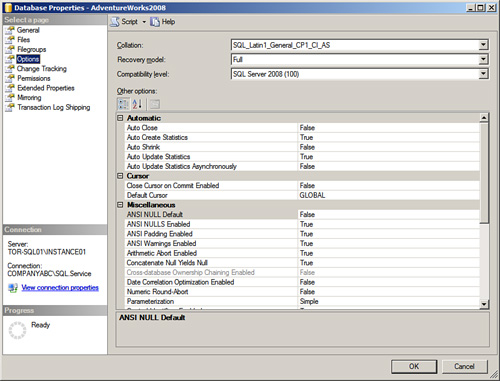

The Options page, shown in Figure 2.13, includes configuration settings on Collation, Recovery Model, and other options such as Automatic, Cursor, and Miscellaneous. The following sections explain these settings.

The Collation setting located on the Database Properties Options page specifies the policies for how strings of character data are sorted and compared, for a specific database, based on the industry standards of particular languages and locales. Unlike SQL Server collation, the database collation setting can be changed by selecting the appropriate setting from the Collation drop-down box.
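The database collation can also be inspected and changed with TSQL. The following is a minimal sketch; the collation name shown is only an example value, and the change requires exclusive access to the database:

```sql
USE [master]
GO
-- Show the current collation of the database
SELECT DATABASEPROPERTYEX(N'AdventureWorks2008', 'Collation') AS current_collation
GO
-- Change the database collation (example value only)
ALTER DATABASE [AdventureWorks2008]
COLLATE SQL_Latin1_General_CP1_CI_AS
GO
```

Changing the database collation sets the default for new columns; existing columns keep the collation they were created with.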

Each recovery model handles recovery differently. Specifically, each model differs in how it manages logging, which results in whether an organization’s database can be recovered to the point of failure. The three recovery models associated with a database in the Database Engine are as follows:

Full— This recovery model captures and logs all transactions, making it possible to restore a database to a specific point in time or up to the minute. With this model, you must maintain the transaction log to prevent it from growing too large and filling the disk. When you back up the transaction log, the space used by the backed-up log records becomes available for reuse until the next planned backup. Organizations may notice that maintaining a transaction log slightly degrades SQL Server performance because all transactions to the database are logged. Organizations that insist on preserving critical data usually accept this overhead because this model offers the highest level of recovery capabilities.

![]() Simple— This model provides organizations with the least number of options for recovering data. The Simple recovery model truncates the transaction log after each backup. This means a database can be recovered only up to the last successful full or differential database backup. This recovery model also provides the least amount of administration because transaction log backups are not permitted. In addition, data entered into the database after a successful full or differential database backup is unrecoverable. Organizations that store data they do not consider mission-critical may choose to use this model.