CHAPTER FOUR

GETTING THERE THROUGH SUCCESSIVE APPROXIMATION

- “With adequate funding we will have the cure for cancer 6 months from Thursday.”

- “In my administration, taxes will drop 50 percent while lifelong full-coverage health benefits will be extended to all citizens.”

- “We will deliver a strongly motivating, high-impact e-learning application as soon as you need it within whatever budget you happen to have set aside.”

Some challenges are truly tough challenges. Although it might get a program launched, setting expectations unrealistically high doesn’t help solve the real challenges at hand.

Designing effective e-learning applications is one of those truly tough challenges if you don’t have the benefit of an effective process. This chapter talks about the design process and how to involve stakeholders so that expectations can be an ally rather than an additional burden.

A Multifaceted Challenge

Developing learning experiences that change behavior is a tough, multifaceted challenge, no matter how few constraints are in place and how generous the funding. Just as in designing modern buildings, setting up a new business operation, or manufacturing an aircraft, in e-learning design there are multifarious complexities requiring a tremendous breadth of knowledge and skill. It’s easy for newcomers to be unaware of this until they see their dreams fade in projects that struggle for completion and fail to achieve the needed results.

Constraints

As if the inherent challenges of good training weren’t enough, constraints always exist. Typical constraints include one or more of the following:

- Too little budget for the magnitude of behavioral changes needed

- Conflicting opinions of what outcome behaviors are important

- Unrealistically high expectations of solving deeply rooted organizational problems through training

- Preconceptions about what constitutes good instruction or good interactivity

- Short deadlines

- Inadequate content resources and sporadic availability of subject-matter experts

- Unavailability of typical learners when needed

- Convictions about applicability of specific media

- Undocumented variances in delivery platforms

- Restrictions on distribution or installation of workstation software

Further, regardless of how forward thinking and flexible an organization may be, typical challenges are always exacerbated by additional constraints en route, whether they are foreseeable through experienced analysis or are quite unexpected “pop-ups.”

Who expects to find that the critical subject-matter expert has changed midstream into a person with very different views? Who expects the procedures being taught to be changed just as simulation software is receiving its final touches? Who expects information systems policies to change, no longer allowing access to essential databases for training purposes?

No one succeeds in e-learning design and development who hates a challenge. Complexity abounds from the primary task of structuring effective learning tasks and from the constantly changing tool sets and technologies. Challenges emanate from within the content, the learners, the client, the learning environment and culture, the technology, and so on. Each project is unique, and if one fully engages the challenge, construction of an effective solution cannot be simple and routine.

Dealing with Design Challenges

The question is how to approach the challenges in a manner that ensures success, or at least an acceptable probability of success. Many approaches have been tried.

Can We Learn from the Past?

I convey this history here in my déjà vu anxiety. The world of e-learning today looks much like that of the early days of PLATO, where so much energy, excitement, and money resulted in a glut of poor instructional software. Don’t get me wrong—PLATO efforts also resulted in some of the best learning applications yet. PLATO offered learning management systems (LMSs), e-mail, and instant messaging systems in the 1970s that are still unequaled. Even the mistakes are to be appreciated, because many alternative directions were explored. It is probably the most definitive effort in support of technology in learning on record. It demonstrated both the good that is possible and the waste that can be generated by inexperienced, unguided hands.

I convey this history with a plea—a plea that responsible individuals will insist on knowing what has already been learned, before capriciously and wastefully darting into application development as if there were no hazards and no vital expertise. In other words: There is a body of helpful knowledge to build on, and we won’t ruin the fun inherent in developing today’s e-learning by taking advantage of it as we go forward.

Some questions have to be answered before e-learning development costs can be estimated. Answering questions does carry a cost, but it is far cheaper than wasting a whole project budget through invalid assumptions. It would be wonderful if there were formulas, percentages, or some other metrics to estimate costs, but it just doesn’t work that way. Sadly, many people involved in developing e-learning are trapped in this myth. The good news is that there is a way to answer these questions (more on that later).

Of Camels, Horses, and Committees

You know the old joke that a camel is a horse designed by committee? No doubt the horse committee was given an excellent design document, perfectly detailing the specifications and description of the horse. But that’s what happens with committees, due to bureaucratic and political problems, compromises, and efforts to maintain harmony—the result is never better than a camel. While there may be big functional differences between a committee and a team, some of the same “camel” difficulties often arise with e-learning teams.

What do you think Professor Sherwood observed when he looked at the origins of exemplary e-learning applications?

These findings, unfortunately, give us little solution to the problems of generating high-quality e-learning design in the quantities needed. It’s possible that the top, most inventive solutions will be designed and developed by individual geniuses, but with the ever-widening array of technology and tools to be harnessed, it seems less and less likely. We need an effective alternative.

Persistence versus Genius

What else has been tried to address the challenges of good e-learning development? Persistence seems like a possible substitute for rare genius. There’s nothing wrong with persistence as a path to excellence, except that it can take a long time—a very long time, if talent, knowledge, and skills aren’t very strong. The opportunities to err are pervasive. Unguided dedication can waste a lot of resources and frustrate everyone involved.

It’s a lot of work when you know what you’re doing, and even more when you don’t. Many are so passionate about their e-learning projects that they make the necessary sacrifices to work until they are satisfied or, more often, until they can’t do any more. Results are most often disappointing, but sometimes are astounding and inspiring. They sometimes add to the proof that it’s possible to use learning technology in meaningful ways. Passion is good, possibly even a prerequisite for producing excellent e-learning designs, but clearly passion and persistence alone aren’t guarantees of success. Through such means, the actual cost in work hours is prohibitive anyway. We need a better way.

Genius versus Persistence

Genius, often born of insatiable curiosity, incites and demands dedication—serious dedication, requiring innumerable sacrifices over an extended period. We may think genius makes notable accomplishments easy, but geniuses often struggle for a lifetime to achieve that deed we applaud and mistakenly attribute more to rare ability than to prolonged effort. Genius is composed of talent (or predisposition toward talent), capability, focus, and being in the right place at the right time.

Sometimes genius is mostly persistence. If you work at something hard enough and long enough, your chances of success increase greatly—you improve your chances of doing the right thing at the right time and being rewarded for it. There’s nothing wrong with applying genius as a means to excellence, except that there isn’t enough of it handy.

I’ve had and continue to have opportunities to work with some incredibly talented people—people who have extraordinary insight and a range of talents that permit them to do what their unique vision challenges them to do. Unfortunately, there aren’t enough of these geniuses ready to lead the design and development of all needed e-learning applications.

By the way, the task of excellent e-learning development has frequently been a challenge even for the gifted. They also have to work hard and explore many ideas to create great learning experiences. They too must persuade clients that there’s a better, more interactive way. They meet with technology entanglements and organizational confusions just as do the rest of us.

There are many easier ways to fame and fortune than building valuable learning experiences. Exceptionally gifted designers who remain in the field of teaching and technology-based learning are too few, very busy, and often exhausted.

Is There a Viable Solution to e-Learning Development?

If teams have problems producing effective solutions, if geniuses are too few, and if both persistence and genius are too slow, where does that leave us? Are regular folk doomed to produce the typical e-learning that doesn’t work, that everybody hates, and that costs a fortune? Is the task of inventing effective interactive learning experiences so difficult that success will be the exception? Given that there are so few genius-level designers, is it just being pragmatic to consider e-learning an unrealistic solution for most performance problems and learning needs?

e-Learning might well be discounted as a potential mainstream tool if the answer to all these questions proves to be yes. Under such conditions, corporations shouldn’t even consider e-learning as a solution to performance problems, or as a means for achieving business goals.

Some companies have clearly had great disappointments in e-learning, although they are often loath to publicize it. Undaunted, many expect that later efforts will lead to a positive return on investment (ROI), even if their initial efforts have not been successful. They just keep going, hoping somehow things will get better. “It’s a learning curve issue,” say some. “We just have to get the hang of it. One can’t expect extraordinary successes in the first round.”

e-Learning investments are growing to unprecedented, even colossal levels. Optimism remains strong. Either organizations are unaware of how ineffective their e-learning design is, or they have the same confidence I have that good e-learning design can happen within realistic bounds. Some organizations, however, are anxiously looking for easier paths and quick fixes.

We have arrived at a critical question. What can we turn to for reliable and cost-effective production of timely, first-rate e-learning applications? Is there a viable solution?

Yes.

An Issue of Process

Again, the lessons of early e-learning development offer some hope, and that hope lies in an effective process.

What Should Have Worked, But Didn’t: The ISD Tradition

Instructional systems design (ISD) is sometimes referred to as the ADDIE method for its five phases of analysis, design, development, implementation, and evaluation. (ISD actually has its roots in engineering design approaches, and some may recognize its similarity to the information system development life cycle. One can only hope that this methodology works better in that context!)

ISD is classified as a waterfall methodology, so called because there is no backing up through its five sequential phases or steps (Figure 4.1). Each phase elaborates on the output of the previous phase, without going back upstream to reconsider decisions.

FIGURE 4.1 The ADDIE method.

Checks are made within each phase to be sure it has been completed thoroughly and accurately. If errors are found, work must not move on to the next phase, because it would be based on faulty information and would amplify errors and waste precious resources.

In many ways, this approach seems quite rational. It has been articulated to great depth by many organizations. But the results have been far from satisfactory. A few of the problems we experienced with it in the development of thousands of hours of instructional software included:

- Considerable rework and overruns because subject-matter experts couldn’t see the instructional application in any meaningful way until after implementation (i.e., programming) had been completed. Only then were errors and omissions found, and correcting them brought additional costs and schedule delays.

- Contention among team members because each would be visualizing something different as the process moved along, only to discover this fact when it was too late to make any changes without blame.

- Boring applications arising from a number of factors. One was that we couldn’t explore alternative designs, because that requires execution of all five ADDIE steps to some degree without finalization of any. Another was that we focused on content and documentation rather than on learners and their experiences. Finally, we lacked helpful learner involvement (because learners had no prototypes to evaluate, and they couldn’t react meaningfully to a sea of highly structured documentation that made no sense to them).

A Fatally Flawed Process

By attempting to be fully complete and totally accurate in the output of each phase, the ISD or ADDIE waterfall method assumes that perfection, or near perfection, is possible. It requires a high degree of perfection as a safeguard against building on false premises promoted in previous phases. Many attempts to refine the process have tried to tighten it up through clearer definitions, more detailed documentation, more sign-offs, and the like. They have tried to make sure analyses were deep and accurate, content was clarified in full, and screen designs were sketched out in detail almost to the pixel: no errors of omission, no vagary—and no success.

Efforts to complete each phase with precise, unequivocal analyses, specifications, or programming, in our case at CDC and perhaps typically in similar projects elsewhere, increased the administrative burden, increased the focus on documentation, and, even worse, decreased the likelihood of creative, engaging learning activities. Costs increased and throughput decreased. Applying the process became the job, mediocre learning experiences the output.

Iterations Make Geniuses

Fortunately, there is a viable alternative to ISD. Imagine a process that:

- Helps teams work together more effectively and with enthusiasm

- Is more natural and involves more players in meaningful participation from beginning to end

- Focuses on learning experiences rather than on documentation

- Produces better products

- Is based on iterations rather than phenomenal performance

- Can be a lot of fun

There is such a process. It’s called successive approximation. Successive approximation is a term I borrowed from psychology. In operant conditioning, it means that the gap between the current behavior and the desired behavior gets smaller as you give rewards for behaviors that are getting closer to the desired behavior. Its primary power in the world of e-learning comes from making repeated small steps rather than perfectly executed giant steps, which very few can do successfully.

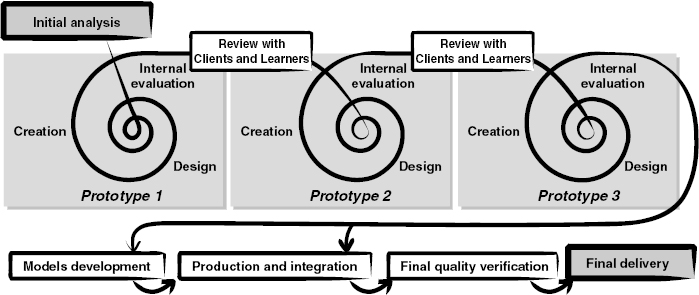

In exploratory work with PLATO, “successive approximation” seemed to describe a software development process we devised for the design and development of complex applications that involved significant learner-interface invention. It actually incorporates most ISD notions and all ADDIE activities, but they are applied repetitively at the micro level rather than linearly at the macro level. Most important, successive approximation rejects the waterfall approach. It is instead an iterative approach. It not only allows, but prescribes, backing up. That is, it prescribes redoing evaluation, design, and development work as insight and vision build (Figure 4.2).

FIGURE 4.2 The successive approximation method.
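For readers who think in code, the loop described above can be sketched in a few lines. This is only an illustration of the idea, not the process as it was implemented on PLATO or anywhere else. Every name here (`successive_approximation`, `toy_evaluate`, `toy_revise`, `WANTED`) is hypothetical, and a "design" is reduced to nothing more than the set of qualities it currently has.

```python
from typing import Callable, FrozenSet, List

# Hypothetical simplification: a design is just the set of qualities it has so far.
Design = FrozenSet[str]


def successive_approximation(
    design: Design,
    evaluate: Callable[[Design], List[str]],
    revise: Callable[[Design, List[str]], Design],
    max_cycles: int = 3,
) -> List[Design]:
    """Repeat small evaluate -> revise cycles and return every version produced.

    Unlike a waterfall pass, each cycle is allowed (in fact expected) to back up
    and redo earlier design work in light of what evaluation revealed.
    """
    history = [design]
    for _ in range(max_cycles):
        feedback = evaluate(design)        # reviewers try the current rough prototype
        if not feedback:                   # nothing left worth fixing within budget
            break
        design = revise(design, feedback)  # redo design and development work
        history.append(design)
    return history


# Toy stand-ins (assumptions for illustration only).
WANTED: Design = frozenset({"feedback", "timing", "branching"})


def toy_evaluate(design: Design) -> List[str]:
    """Pretend reviewers notice one missing quality per review session."""
    return sorted(WANTED - design)[:1]


def toy_revise(design: Design, feedback: List[str]) -> Design:
    """Pretend each revision fixes exactly what the reviewers reported."""
    return design | frozenset(feedback)
```

Calling `successive_approximation(frozenset(), toy_evaluate, toy_revise, max_cycles=5)` returns the whole series of versions, each one a little closer to what is wanted. The `max_cycles` cap stands in for the reality that iteration stops when time or budget runs out, not when perfection arrives.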

Some ISD or ADDIE defenders suggest that these differences are minor, but I feel that successive approximation is nearly an antithesis of those terribly burdened processes. Let’s look at successive approximation in closer detail.

The Gospel of Successive Approximation

There is an approach, a set of values, a way of thinking that is truly the essence and strength of successive approximation. Three primary tenets are enough to set you on your way to seeing the light. Once you get into it, all the critical nuances become natural and self-evident, rather than things to be memorized and applied dogmatically. Here is what the successive approximation flock believes:

- No e-learning application is perfect.

- Functional prototypes are better than storyboards and design specs.

- Quick and dirty is beautiful.

No e-Learning Application Is Perfect

First and foremost is the recognition that no software application—or any product, for that matter—is perfect or ever will be. All applications can be improved, and as we make each attempt to improve them, we work to move successively closer to, or approximate, the theoretical ideal. We must be content to know that perfection is not achievable. Thankfully, it’s not a prerequisite to success. We will never get to perfection, but a desirable process will ensure that each step we take will get us significantly closer.

Quite pragmatically, with successive approximation we are looking for the most cost-effective means we can find to enable learners to do what we want them to do. We want to spend neither more nor less than is necessary to achieve our goal. Both overspending and underspending are expensive mistakes. Without conducting some research and gathering critical information beforehand, there’s no way of knowing what is the right amount to spend on a project, although everyone wishes there were. So it’s important that the process be sensitive to the costs and diligent in determining appropriate investments at the earliest possible moment.

Functional Prototypes Are Better than Storyboards and Design Specs

Next is the observation that storyboards and design specifications are unacceptably weak in comparison to functional prototypes. A storyboard is a hard-copy mock-up of the series of screens a learner will see in the completed e-learning piece, including feedback for each choice shown on the screen (Figure 4.3). These detailed screen images are presented in the order in which the learner is likely to encounter them, in the vain hope of avoiding errors and omissions in the finished e-learning application. Similarly, design specifications (or specs for short) include written descriptions and rough drawings of the interface, navigation, instructional objectives, content text, and so on. Again, the hope is that subject-matter experts and others on the design team will be able to fully grasp the nature of the envisioned interactions and successfully appraise the impact they will have on learners, and somehow will be able to spot errors and omissions in the design specs and so prevent them from becoming part of the final e-learning application.

FIGURE 4.3 Storyboards typically include screen layout sketches and detailed written descriptions of how interactions should work.

By using functional prototypes, successive approximation sidesteps the risks inherent in storyboards and design specs, and reaps many advantages from using the actual delivery medium as a sounding board.

Storyboards and Design Specs

Storyboards have been valued tools to help clients, subject-matter experts, instructors, managers, programmers, and others understand and approve proposed designs. The logic is clear enough. Look at the sketches of screen designs, read the annotations of what is to happen, look at the interactions proposed and the contingent feedback messages students are to receive, and determine whether the event is likely to achieve the defined learning outcomes.

Storyboards are far better than nothing and much better than design specs that attempt to describe interactions verbally. Unfortunately, no matter how detailed storyboards may be, experience indicates that they fall short in their ability to convey designs adequately to all the concerned individuals. They are inferior as props for testing design effectiveness with learners. The sad truth is that storyboards almost invariably lead to problematic surprises when their contents have been programmed and their prescribed interactivity can be witnessed and tested.

I know that significant numbers of my much-appreciated readers are very much attached, both intellectually and emotionally, to storyboards. They find storyboards so valuable they wouldn’t know any way of proceeding without them. Some, if not many, will feel that their storyboards have been the keys to many successes. Just between you and me, I’m actually thinking that these successes probably weren’t such great successes anyway and weren’t, in any case, all they might easily have been. How much more might have been achieved had a more effective process been used? What opportunities were lost by not being able to present and discuss aspects of the actual interactions to be experienced?

True instructional interactivity (see Chapter 7) cannot be storyboarded or effectively communicated through design specs. Yes, this may seem a radical, even rash, assertion. But consider the nature of a storyboard, and the nature of true instructional interactivity, and try to reach any other conclusion. Storyboards, by their nature, are static documents. Just displaying a static document changes its nature. What looks good on paper rarely looks good on the screen and vice versa. Even bigger differences become apparent between interactions described in documents and operational interactions created through a developer’s interpretation of the documents. Even with sketches and detailed descriptions, the original vision will mutate in the most unexpected ways. Further, the original vision often doesn’t seem so good when illuminated on the screen, even if no mutations have occurred.

Prototypes

Functional prototypes have an enormous advantage over storyboards. With functional prototypes, everyone can get a sense of the interactive nature of the application, its timing, the conditional nature of feedback, and its dependency on learner input. With functional prototypes, everyone’s attention turns to the most critical aspect of the design, the interactivity, as opposed to simply reviewing content presentation and talking about whether all content points have been presented. Design specs aren’t even in the running. Unlike reviewers of storyboards or design specs, users of prototypes have to personally decide which responses to make, and actually make them, in order to see how the software will respond.

I can hear the objection: Developing prototypes takes too long! My answer is that, for a skilled prototype developer, a useful prototype can usually be turned out in about two hours.

Teams can produce excellent products if members have effective ways to communicate with each other. They need to see—literally see—the same design rather than imagine different designs and assume a common vision that really doesn’t exist. If projects could be built by only a single person, it would usually take far too long. Teamwork is necessary, but it must be effective. Teams need a way to share a common vision, lest they all start on individual journeys leading to very different places. Without help from each other, no one arrives anywhere desirable.

Ability to Evaluate

Think of the difference between watching Who Wants to Be a Millionaire and actually being in the hot seat. With nothing at risk, we casually judge the challenge to be much easier than it actually is. Imagine if, in storyboard fashion, Millionaire’s correct answers were immediately posted for us on the screen as each question appeared, just as we’d see in storyboards. We’d easily think, “Oh, I know that. What an easy question!” But we wouldn’t actually be facing the dreaded question: “Is that your final answer?” In the hot seat, we might actually have quite a struggle determining the answer. Obviously, many contestants do. Quite possibly, our learners would have much more trouble than we would anticipate from storyboards; you just can’t tell very easily without the real tests that prototypes allow.

Comprehension of displayed text is inferior to comprehension of printed text. We also know that many designs that are approved in a storyboard presentation are soundly and immediately rejected when they are first seen on the screen, and they are even more likely to be rejected when they become interactive. Prototypes simply provide an invaluable means of evaluating designs. Otherwise, with the storyboards approved and the programming completed, changes are disruptive at best. More likely, they cause budget overruns and the work environment becomes contentious.

Two-Hour Prototyping

We built Authorware specifically to address prototyping needs. Authorware is an icon-based authoring system for developing media-rich e-learning applications, marketed by Macromedia, Inc. To provide the most value, prototypes must be generated very quickly (we’re talking minutes to hours here, not weeks) so they can be reviewed as early as possible and discarded without reservation. Prototypes must have interactive functionality so that the key characteristics of the events can be evaluated. Many teams today use Authorware primarily for this purpose even if the final product will be developed using tools more specifically optimized for Internet delivery, but any tool that allows very rapid prototyping and very rapid changes to prototypes can serve well.

Quick and Dirty Is Beautiful

Finally, quick iterations allow exploration of multiple design ideas. Successive approximation advocates believe attempts to perfect a design before any software is built are futile and wasteful. Alternative designs need to be developed with just enough functionality, content, and media development for everyone to understand and evaluate the proposed approaches.

I hasten to point out that there are problems with this approach if there are unindoctrinated heathens among the believers. Skeptics may lose confidence when asked to look at screens with primitive stick figures, unlabeled buttons, no media, incomplete sentences, and dysfunctional buttons. Although these are the characteristics of valuable rapidly produced prototypes, some may be unable to see through the roughness to the inner beauty. Indeed, this is a radical departure. I plead with you, however, to keep the faith. Converts are numerous, even among previously devout antagonists. To my knowledge, none of my clients would ever return to other processes; they have all become evangelists.

Converting disbelievers, however, can take time and patience. (“Most of our so-called reasoning consists in finding arguments for going on as we already do.” —James Robinson.) It is often worthwhile, therefore, to produce a few polished screens to show what can be expected later in the process. Also, communicating your production standards for the project in advance will help curb the tendency to nitpick designs that are ready only for high-level evaluation.

Brainstorming

The first version of a new application will typically be furthest from perfection, the furthest from what we really want, no matter how much effort is applied. To optimize chances of identifying the best plan possible, alternative approaches need to be explored. In this brainstorming mode, divergent ideas aren’t judged before they’ve been developed a bit.

Brainstorming sounds like fun and can be. But the process isn’t always obvious to everyone, and it can be difficult to keep on track. Check Table 4.1 for some approaches my teams have found effective.

TABLE 4.1 Brainstorming Techniques

| Technique | Description |
| --- | --- |
| Using word reduction | Take the whole problem and reduce it to two sentences. Then one sentence. Then five words. Then one word. The idea is to really get at the conceptual heart of the process. As people go around the room and share their one word, you may find that everyone focuses on a different word! This ridiculous exercise reveals the direction for interactivity and also the potential conflicts. |
| Acting it out | Role-play the situation: the problem, how it’s working now, how it’s not working. |
| Playing the opposite game | What’s the opposite of what’s desired? For example, the opposite of what’s needed is to slam the phone down on a customer after screaming “Don’t call back!” This backhanded example reveals a goal: End conversations with the customer eager to call back. This approach can be a very goofy, funny brainstorming approach, but don’t lead with this one first—there has to be some group trust before you start handling the client’s content with humor. |
| Oversimplifying | Have the subject-matter experts explain the problem as they might to a class of 8-year-old kids. This forces them to use easy words, explain jargon, and reduce the problem to its most basic elements. It sheds unnecessary complications. |
| Drawing it | Draw the problem statement instead of using words. |
| Finding analogies | “Our problem is like the problem furniture salespeople have when a customer doesn’t want to buy a bed that day. . . .” “Our problem is like when you go to the dentist and you haven’t flossed and you feel guilty. . . .” Find creative ways of expressing the problem other than directly—this will suggest paths for interactivity and reduce the problem to basic elements. |
| Setting a 10-minute limit | Pretend that your users only have 10 minutes to experience all of the training. What would you expose them to? What would you have them learn? This activity can help users prioritize what’s important in the interactivity or the problem analysis. |

Alternatives at No Extra Cost

The total cost of a set of smaller creative design efforts (that is, prototypes) is easily affordable, because you don’t spend much on any given one. A quick review of alternatives incurs far less risk than single-pass or phased approaches that bet everything on one proposal. Further, with the iterative approach, course correction is performed routinely and early enough to prevent full development of ultimately unacceptable (and therefore extremely costly) designs.

With a prototyping approach, expenses are kept to a minimum while the search for the best solution is being conducted. Each prototyping effort means minimal analysis, leading to minimal design work, which leads to minimal development (prototyping) for evaluation. Note that this is just the opposite of ISD, which works for perfection at each point and intends to perform each process only once. Instead, following a small amount of effort, ideas are available for evaluation by everyone—including students, who can verify what makes sense and what doesn’t, what is interesting and what isn’t, what’s funny and what isn’t, what’s meaningful and what’s confusing, and so on. No more money needs to be spent on anything that seems unlikely to work if there are more promising alternatives to explore.
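The cost argument can be made concrete with a little arithmetic. The sketch below is a deliberately crude model of my own framing, not a costing formula (as noted earlier, no reliable formula exists). It assumes total effort is prototyping time, plus one full build, plus the chance of rework multiplied by the size of that rework. The function name `expected_hours` and every number fed into it are hypothetical. Only the two-hour prototype figure echoes the estimate given earlier in this chapter.

```python
def expected_hours(
    build_hours: float,
    rework_hours: float,
    p_rework: float,
    prototypes: int = 0,
    hours_per_prototype: float = 2.0,
) -> float:
    """Expected effort = prototyping + one full build + probability-weighted rework.

    All inputs are illustrative assumptions; plug in your own estimates.
    """
    if not 0.0 <= p_rework <= 1.0:
        raise ValueError("p_rework must be a probability between 0 and 1")
    prototyping = prototypes * hours_per_prototype
    return prototyping + build_hours + p_rework * rework_hours


# Hypothetical single-pass project: no prototypes, even odds of major rework.
single_pass = expected_hours(build_hours=400, rework_hours=200, p_rework=0.5)

# Hypothetical iterative project: ten quick prototypes that (we assume) halve the risk.
iterative = expected_hours(build_hours=400, rework_hours=200, p_rework=0.25, prototypes=10)
```

Under these made-up inputs, the ten prototypes add only 20 hours, while the assumed drop in rework risk removes 50 expected hours. The point is not the particular numbers but the shape of the trade: a small, known prototyping cost is exchanged for a reduction in a large, uncertain one.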

Please put your savings in the offering tray as we pass it around!

Unsuccessful Designs Provide Insight

Early evaluation is input that either confirms or refutes the correctness of the analysis or design. In either case, additional analysis will lead to new design ideas that can be programmed and evaluated. Again, it’s critical that early prototyping be regarded as disposable. In fact, it’s healthy for organizations to work with the expectation of discarding early prototypes and a commitment to do so. There’s no pressure for this early work to be done with foresight for maintenance, standards compliance, or anything else that would detract from the challenge of identifying the most effective learning event possible.

Again, prototyping requires the availability of flexible tools and the experience to use them, because it is often valuable to perform prototype development live—in front of the brainstorming team. Choose a tool that allows you to build your prototypes very quickly, concentrate on the learner experience instead of implementation details, and make modifications almost as quickly as ideas arise. Each correction will be a step forward.

It’s Catching On

Successive approximation is catching on in many organizations largely because alternative processes are not producing the quality of software and instruction expected and required. Even organizations that create general computer software are turning to similar approaches. (See the work of the Dynamic Systems Development Method Consortium at http://www.dsdm.org/.) Costs for development of even rather primitive interactive software remain high even with our best approaches. It is therefore important that projects return value, because they otherwise risk substantial losses.

Successive approximation requires neither the intellect of Albert Einstein nor the imagination of Walt Disney. Yet, by putting teams in the best possible position to be inventive—to see and explore opportunities while they still have resources to pursue them—successive approximation can reliably produce outstanding, creative learning experiences that are meaningful and memorable.

It’s Not Catching On

The successive approximation approach is a radical departure from the development processes entrenched in many organizations. I don’t think this is unfortunate in itself. What’s unfortunate is the inability to change.

Change Requires Leadership

I have worked with many organizations that have rigid software development processes, defined in detail—painful, unending detail. These processes not only are blessed by top management, but also are rigorously enforced. Unfortunately, the underlying methodology is typically a waterfall method, and corporate standards are implemented to enforce completion of each phase, irreversible sign-offs, and systematic rejection of good ideas that come later than desired. Although I’ve invariably found that individuals within these organizations ingeniously find ways to employ some of the processes of successive approximation, they frequently have to do it surreptitiously.

The successive approximation approach also conflicts with many widespread notions of project management, so managers not fully versed in its principles may be uncomfortable and edgy, if not actively resistant. They may try to impose constraints that seem both logical and harmless but handicap the process enough to call its effectiveness into question.

It’s an interesting situation—those who have had success with successive approximation cannot comprehend how any organization would stick to older methods, and those who are working to perfect traditional methods are unable to see the innumerable practical advantages of successive approximation. It appears that you have to get wet in order to swim, but some just aren’t willing to put even a toe in the water.

They need a shove! Go ahead and push!

Savvy—A Successful Program of Successive Approximation

Unfortunately, just understanding and appreciating the three key principles of successive approximation doesn’t lead an organization easily to fluent application. To see how the principles can translate into some critical details, let’s look at my own organization’s application of successive approximations as a case study.

Savvy is the name Allen Interactions has given to an articulated application of successive approximation. Savvy, by the way, is not an acronym—because, frankly, we couldn’t come up with anything that wasn’t hokey, especially with two Vs in the middle of the name.

Because of its continuing success from almost every point of view, we hold dearly to the postulates of successive approximation and apply them in a program that involves:

- A Savvy Start

- Recent learners as designers

- Typical learner testing

- Breadth-over-depth sequencing of design and development efforts

- Team consistency and ownership

- Production with models

Let’s explore them individually:

A Savvy Start

Savvy begins with attempts to collect some essential information that is critical to making a succession of good decisions. The information doesn’t have to be totally correct or complete. It’s just a place to start.

Just as it’s too expensive and ultimately impossible to make a perfect product, it’s too expensive and ultimately impossible to get perfect information at the outset of a project (see Rossett 1999 for practical tips)—or any other time, for that matter. Waiting for attempts to extract this information from discussion, analysis, synthesis, report generation, report review, comment, modification, and approval is also expensive.

Ready, Fire, Aim

Radically, and perhaps audaciously, Savvy begins with the Savvy Start, and the Savvy Start begins with a short discussion and some on-the-spot rapid prototyping. Design, development, and evaluation of off-the-cuff prototypes stimulate information discovery. While this may seem akin to a trial-and-error process, many strongly held tenets, including such cornerstones as what should be taught to whom and when, which values are appropriate, and how technology can be used to improve performance, may actually be seen in a new light. Staunchly held preconceptions are quite often modified, if not totally discarded, in the process.

Unchangeable Things Change

Experience warns us about taking initially stated requirements to be absolute. No matter how strongly they may be supported at first, assuming that requirements can’t be changed is precarious and probably unnecessarily restrictive. Things do change. In fact, learning is all about change. As people learn more about training and e-learning in particular, their preconceptions and premature plans can and often do change.

In corporate training arenas where custom courseware is developed under contract with external vendors, it’s hard for contractors to know quickly what the real anchors are, what is actually negotiating posture versus a truly immutable constraint, and where hidden flexibility lies or can be created. If you’re trying to help clients (whether internal or external) achieve significant success rather than simply trying to please them by delivering good-looking courseware, you will be focused on creating events that get people to change their behavior. Simultaneously, you will probably have to change your clients’ minds about a lot of things, including what are and aren’t effective uses of technology.

The earlier you can learn the lay of the land, the more credibility you will have to shape the training into what’s really needed. But you need to get a foothold in order to investigate.

Who’s on First?

Prototypes help get discussions focused on solutions, not on who is in control, whose opinion counts the most, who knows the content best, who has the most teaching experience, or who can make the best-sounding arguments for certain design decisions. Prototypes stimulate brainstorming and creative problem solving. Prototypes get projects started and help clients determine what really is and isn’t important to them. Prototypes help align clients’ values for success rather than for maintenance of the status quo.

The excerpts from the Allen Interactions Savvy documentation presented in Figure 4.4 suggest the importance of client education. The process is paradoxically both similar to many things we do in life and surprisingly unexpected and foreign as a commercial process for creative product development. This document is provided to Allen Interactions’ clients to set expectations and convey a clear picture of what happens just before and during a Savvy Start.

FIGURE 4.4 Excerpt from Allen Interactions Savvy documentation.

(Copyright © 2002 Allen Interactions Inc., Minneapolis, MN, www.alleninteractions.com.)

Recent Learners as Designers

There is a fascinating paradox about expertise. The more expert we become, the more difficulty we have explaining how we think and how we do what we do, especially to those with very little knowledge of the particular field. Experts often experience difficulty and frustration as they try to help or teach novices.

Consider this: Who knows the most about driving, a person who has been driving safely for 20 years or a 16-year-old? The 20-year veteran, right?

Who can best help you prepare for a driver’s license exam? Probably someone who recently took the test, such as a 16-year-old. Not the more expert driver!

The mental gymnastics of experts are quite incomprehensible to novice learners. It appears experts come to their conclusions mystically. The rationale eludes learners. With mounting anxiety, feelings of inadequacy, and doubts about ever succeeding, novices working with experts begin to turn their focus on their emotions and their lack of progress. In the process, their learning abilities wane, and their expert instructors become even more frustrated.

Experts Know Too Much

Experts become able to think in patterns and probabilities. They look for telltale signs that rule out specific possibilities or suggest other signs to investigate. While their rationale could conceivably be mapped out for novice learners, the skills needed to identify and evaluate each sign would have to be developed. Further, these skills would have to be refined and perhaps reshaped and combined with others to be effective for specific contexts. Although much of this would be fascinating for experts, almost none of it would be meaningful to the beginning learner. Attempts to fashion training based on a model of expert behavior are unlikely to succeed.

What does all this mean for the design of effective learning events and for successive approximations?

- Although their input can be invaluable, seasoned experts are not likely to be good designers of instruction on topics and behaviors in their field of expertise.

- The most advanced experts may have difficulty providing the services of subject-matter experts.

Recent Learners Know More

We have to be careful about relying on subject-matter experts to articulate content to be learned, the most appropriate sequence of events, or the needed learning events themselves. While their review of content accuracy is obviously important to make sure learners aren’t misled, there is a frequently overlooked source of valuable expertise: recent learners. The paradox continues.

Perhaps surprisingly, recently successful learners can provide an important balance and valuable insights. While the behavior of experts may indeed define the target outcome, experts often have trouble remembering not knowing what they now know so well and do without thinking.

Recent learners, however, can often remember:

- Not knowing what they have recently come to know

- What facts, concepts, procedures, or skills were challenging at first

- Why those facts, concepts, procedures, or skills were challenging

- What happened that got them past the challenge

- What brought on that coveted “A-ha!” experience

In an iterative process that seeks to verify frequently that assumptions are correct, that designs are working, and that there are no better ideas to build upon, recent learners are invaluable contributors. Guessing what will work for learners is an unnecessary risk. You can’t speak for learners as well as they can speak for themselves, and waiting until the software is fully programmed is waiting too long. Once recent learners get in on the vision they can:

- Review even the earliest prototypes and assess the likely value to learners.

- Propose learning events.

- Propose resources helpful in learning, such as libraries of examples, exercises, glossaries, and demonstrations.

- Help determine whether blended media solutions might be vital and which topics might be taught through each medium, including instructor-led activities.

- Review learner-interface designs for intelligibility.

Finally, although recent learners can be expected to make valuable contributions to the project from start to finish, it can be valuable both to continue working with the same individuals and to bring in others who will be seeing the project for the first time after some iterative work has been accomplished. Fresh eyes will make another layer of contribution, perhaps by seeing opportunities or problems that no one who has been on the team from the beginning is noticing.

Of course, the practicality and cost of working with an expanded review team must be considered. A small number of recent learners might be enough. Perhaps only three will be sufficient, particularly if you can find articulate, insightful, introspective, and interested individuals. Consider also the cost of not providing a learning event that really works for learners and reliably engenders the behaviors you need.

Don’t dismiss the value recent learners can provide without seriously considering the advantages.

Typical Learner Testing

Again we turn not to the usual sources of expertise, but to a nevertheless invaluable one: typical learners. Just as it is risky to think you know what a teenager would want to wear, it’s really dangerous to make assumptions, without testing, about what will appeal to, engage, and be meaningful to learners. Experience certainly helps increase the probability that proposed designs will work, but the confluence of factors determining the effectiveness of learning events is complex. Just as with television commercials and retail products, many good ideas don’t turn out so well in practice, even when conceived by experts.

Thankfully, e-learning development tools allow easy and rapid modification of learning events. There won’t be recalls, trashed products, or even filled wastebaskets, but mistakes can certainly be expensive if they aren’t caught soon. The iterative process of successive approximation seeks to catch mistakes as soon as possible, well before the first version of the product is distributed. Thus, typical learner testing is a critical component of the process.

The timing of learner testing in the successive approximation approach is what differentiates it from other processes. Typical learners are brought in after each cycle of design. They are even brought in to look at prototypes resulting from the Savvy Start or prototypes that will be used to help schedule and budget the project. While everyone knows the prototypes will undergo major revision, perhaps several times, the reaction of typical learners can have a profound impact on the project. Learners may report that too much effort is being put into facts that are quite obvious and easy to understand. They may report the opposite. They may find that your enthusiastically endorsed ideas are quite boring, or that your simple game is way too complicated.

Surprises in the reactions of typical learners should be expected. It is difficult to put yourself into someone else’s mind-set, but easy to be convinced that you have done so. The reality of the situation will surface, bluntly and frustratingly at times, if typical learners are truly comfortable expressing their opinions. While it may be deflating and hard to accept their input, it is invaluable to get it early and often. And, of course, it is necessary to accept it and work with it to garner the process advantages.

It takes discipline.

Just as with the participation of recent learners, participation of typical learners requires effort, cost, and coordination. For example, arranging for a steady stream of typical learners who haven’t yet seen the evolving application can be difficult. Providing computers at the times and places needed, arranging for responsibilities to be covered while people are away from their workstations, providing orientation, and gathering feedback in useful forms is a lot of work. Perhaps none of these is as difficult to come by, however, as the discipline required just to make it happen. It’s so easy to assume you’re on the right track and that there’s little guidance a typical learner can provide.

Designers readily fall into the trap of designing for themselves, assuming much more similarity of their needs, likes and dislikes, abilities, readiness, comfort with technology, and so on than actually exists with targeted learners. They conclude that there’s little to be gained by stopping for discussion and feedback. It’s a comforting and desirable conclusion, because there’s always a push to keep the project moving. It seems that evaluation takes time away from development, although in the long run it should reduce total development time if it catches design errors early enough.

No one likes to show their work in progress. We especially don’t like to hear about deficiencies we know are there, as if we didn’t recognize them and intend to address them. We don’t like to expose mistakes. So, our natural tendency, if not our immutable rule, is to hide our work from view until it is finished and polished. But the longer a project goes on without verifying its course, the more costly it will be to make changes should they be required.

It cannot be said strongly enough: You must involve learners early and frequently. It’s really quite irresponsible to do otherwise. It has to fit within the constraints of the overall project, as do all activities, but frequent learner testing is an essential component of successive approximation.

Breadth-over-Depth Sequencing of Design and Development Efforts

The successive approximation approach has a built-in risk. Although many instructional designers are instantly attracted to the process, managers quickly see a red flag. The concern is that because it is an iterative process, projects will never be completed but will continue in perpetual revision as new ideas inevitably surface. Managers are concerned that attempts to refine the first components will consume all the project time and that insufficient resources will remain to complete the work. In the jargon of successive approximation, this would be a depth-over-breadth approach. And it’s a cardinal sin.

The managers’ fears might be valid if successive approximation were not a breadth-over-depth or breadth-before-depth approach. The process depends on proposing design solutions for all performance needs as quickly as possible and implementing functional prototypes to verify the soundness and affordability of each approach. Like layers of a cake, each iteration covers the entire surface, then builds on the previous layer until a satisfactory depth is achieved.
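The difference between the two orderings can be made concrete with a small sketch. Everything in it is hypothetical and for illustration only: the content areas, the three planned passes, and the idea of counting one pass over one area as a single unit of work are assumptions, not part of the Savvy process itself. The sketch simply shows what has been covered if resources run out after four units of work.

```python
from typing import List, Tuple

Schedule = List[Tuple[int, str]]  # (pass number, content area)


def breadth_over_depth(areas: List[str], passes: int) -> Schedule:
    """Every area receives pass 1 before any area receives pass 2."""
    return [(p, area) for p in range(1, passes + 1) for area in areas]


def depth_over_breadth(areas: List[str], passes: int) -> Schedule:
    """Each area is refined through all passes before the next one is started."""
    return [(p, area) for area in areas for p in range(1, passes + 1)]


def covered_at_cutoff(schedule: Schedule, areas: List[str], units: int) -> List[str]:
    """Areas that have received at least one pass when work stops after `units`."""
    touched = {area for _, area in schedule[:units]}
    return [area for area in areas if area in touched]


# Hypothetical content areas for an imagined customer-service course.
AREAS = ["greeting customers", "taking orders", "handling complaints", "closing out"]

broad = covered_at_cutoff(breadth_over_depth(AREAS, 3), AREAS, units=4)
deep = covered_at_cutoff(depth_over_breadth(AREAS, 3), AREAS, units=4)
print("breadth first:", broad)
print("depth first:  ", deep)
```

With the same four units of effort, the breadth-first schedule has a first layer over all four areas, while the depth-first schedule has polished one area, barely started a second, and left two untouched.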

Let’s see why it’s so important.

Everything Is Related

Although there may be facts, concepts, procedures, and skills which seem very much unrelated, design work in all corners of an e-learning application affects work in the other corners. Like making a bed, you can’t complete the process by perfecting each corner one at a time. You first loosely position sheets and blankets, then revisit each corner to tuck and tighten. If you need to adjust one corner, it will be easier to do so if the other corners haven’t been completed. If they have been completed, there will be some unmaking and remaking—an effort that wouldn’t have been necessary had you waited to check all four corners before perfecting them.

The analogy speaks strongly to notions of breadth over depth: Get through all the content before finalizing designs. Learner-interface notions, context development, testing and scoring structures, feedback and progress protocols, and many other design decisions may have to be adjusted several times until all of the content has been accommodated. You must not dig deeply into one area, assuming that all others have similar needs. If you perfect one “corner,” the odds are high that you will have to undo a lot of work.

Setting the Budget

We’ve already asserted that no application will reach perfection. In a way, that’s good news for the budgeting process. It doesn’t make good business sense to set an unreachable goal, because failure would be certain. Because perfection can’t be reached, it’s not the goal. Pragmatically, the goal is to spend exactly the right amount, no more or less than is necessary to achieve the desired outcome behaviors efficiently.

The successive approximation approach is capable of two very important outcomes:

- Successive approximation can produce the best learning application possible within the constraints of a preestablished schedule and budget.

- Successive approximation can help determine an optimal budget through iterative improvement and evaluation.

Within constraints, as many alternatives as possible are considered. Because of the breadth-over-depth rule, however, instruction for all targeted behaviors will be developed before refinement is applied to any of them. Minimal treatment of all content is therefore ensured.

When budgets are inadequate to meet needs, and sometimes they are, opportunities for later improvement are identified in the normal iterative process. Everything is set and ready for further refinement should funding become available.

Iteratively comparing the impact of each successive revision yields data relevant to the appropriateness of further spending. Through early revisions, gains are likely to be very high, but as the quality of the application continues to improve, successive gains will begin to decrease. Taking specific value factors into consideration—such as the cost of errors, the opportunities for expanded business, improved productivity, and so on—one can then make relatively objective decisions about whether additional cycles would yield an acceptable return on the investment.
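As a rough illustration of that decision, the sketch below uses the gain measured in the most recent revision as an optimistic estimate of what the next one might deliver, on the assumption stated above that gains shrink from cycle to cycle. The function name, the figures, and the idea of reducing the value factors to a single dollar value per point of improvement are all hypothetical simplifications.

```python
from typing import Sequence


def fund_another_cycle(
    observed_gains: Sequence[float],
    cost_per_cycle: float,
    value_per_point: float,
) -> bool:
    """Decide whether one more revision cycle looks worth its cost.

    observed_gains  -- improvement measured after each completed revision,
                       in points on whatever performance measure is in use
    cost_per_cycle  -- estimated cost of one more design/build/evaluate cycle
    value_per_point -- estimated business value of one point of improvement
    """
    if not observed_gains:
        # Nothing has been measured yet, so there is no basis for stopping.
        return True
    # Assumption: gains diminish, so the latest gain is an optimistic
    # estimate of the next one.
    expected_gain = observed_gains[-1]
    return expected_gain * value_per_point > cost_per_cycle


# Hypothetical figures: each cycle costs 15,000; each point is worth 2,000.
after_two = fund_another_cycle([30, 12], cost_per_cycle=15_000, value_per_point=2_000)
after_three = fund_another_cycle([30, 12, 4], cost_per_cycle=15_000, value_per_point=2_000)
print(after_two, after_three)
```

In this made-up case a third cycle still looks worthwhile after two revisions, but a fourth does not once the third revision yields only a small gain. A real decision would weigh several value factors rather than a single number.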

In the meantime, and most important, the best possible application, within allocated resources and constraints, will be available for use.

Three Is the Magic Number

A plan to revisit all areas of design three times proves quite effective in practice. Sometimes only one revision is necessary, especially when a previously validated model is being used.

Figure 4.5 shows a practical application of successive approximations with three prototyping iterations. Three iterations of the design, creation, and evaluation process are applied. The first prototyping is done during the Savvy Start and is based on initial analysis information that is always modified and extended during Savvy Start sessions.

FIGURE 4.5 A three-iteration application of successive approximation.

When the initial prototypes have been completed, they are tested with typical learners. This provides input for the second prototype and iterations of the design, creation, and evaluation process.

Sometimes the second prototype is sufficient, but it is important to reserve time and budget for a third prototyping effort, just in case. Better to come in under budget than over. Experience indicates that it is seldom necessary to go beyond three prototypes to finalize a design.

Creeping Elegance

It’s hard not to want to perfect media, interactions, and even text as they are initially developed. It is important, actually, to develop the look and feel enough that stakeholders can get a very clear picture of what is being proposed. It’s also valuable to develop style sheets (you know, those irritating documents you have to refer to that specify what branding colors to use, what fonts are allowed, what margins are necessary, and so on) and to fully render some interactions so that everyone is clear about the extent of refinement that is eventually intended for all interactions.

Refinement should be confined to representative components of the final product, rather than applied to all prototypes. In fact, some later prototypes may be even more primitive than the first ones, because everyone will already understand how media and interface standards will be applied.

Temptingly, after the development of standards, it will be as easy to fully apply some of them as to wait for late iterations. There’s no sense in doing things twice if standards can be applied with only about the same time and effort as placeholders. One must guard carefully, however, against creeping elegance. Unnecessarily refined prototypes work contrary to the effectiveness of successive approximation. Regardless of how much more satisfying it is to present refined prototypes, rewards should go to those who achieve consensus on the first iteration as quickly as possible.

Remember, breadth over depth. Cover all the content at modest levels of refinement, then go back over all the content iteratively to make successive layers of improvements.

Team Consistency and Ownership

Many organizations work to keep all members of their design and development staffs busy. It makes sense that idle time is an expense to be avoided. In fact, most organizations departmentalize their specialists, with designers, programmers, artists, and so on each in their respective departments. As projects call for various tasks to be done, available people are assigned from each department. Department managers are responsible for providing services as needed while minimizing excess staff.

In my experience, this is perhaps the worst conceivable organizational structure. (I’ve tried it, of course.)

One has to admit that there’s logic and simplicity to it. Unfortunately, it doesn’t seem to work. Why not?

Total Immersion

Crafting a multimedia experience to change behavior requires merging the talents of many individuals. As I’ve already asserted, documents describing interactive multimedia fall far short as tools for defining and communicating design vision. You have to feel the clay in your hands to know what’s happening. To contribute, you have to be there, thinking, contributing, listening, working. You have to understand the business and performance problem being addressed as if it were your own problem to solve.

An effective team is not just individuals who show up from time to time, provide an illustration, and bow out. It’s a team whose members stay with a project from beginning to end, think about solutions during lunch, and daydream about the most effective project solutions.

The level of individual involvement may change from time to time, especially in production activities, and not everyone assists in all tasks, of course. But beginning no later than with the initial prototypes, it’s important that all team members be participants.

Artists and programmers can make important contributions to instructional design, just as designers and managers can make important observations on media design. As the team works together, knowing that each of the players will be there at the finish line, everyone looks to take advantage of what is being done in the successive cycles to make the application better, stronger, and more effective in later iterations. Issues aren’t dismissed because the concerned individual probably won’t be back tomorrow. Committee design anomalies don’t occur, because each individual will be there to see if the specified outcome behaviors result. No one succeeds if the application fails; everyone succeeds if it does.

Studios

Allen Interactions’ greatest success has come from organizing into studios. A studio is a full complement of professionals capable of designing and developing all aspects of a powerful multimedia learning application. Clients work with the same team, the same individuals, from beginning to end. They meet and get to know every individual. They communicate directly about any and all issues and ideas.

The studio structure is not only a comforting and flexible structure for clients, it’s the only one I’ve found to consistently produce the kind of learning solutions everyone wants.

Production with Models

It is important to leverage technologies wherever helpful and to find every way possible to develop powerful learning experiences at the lowest cost. One very effective way to do this is to search for and identify similar learning tasks among the types of behaviors people must learn. Whether teaching dental assistants, airplane mechanics, telesales operators, or office managers, there are likely to be some similar tasks or repeating tasks with only content variations, not structural differences.

Sometimes the similar tasks are found to reoccur within one e-learning application. Other times, similar tasks are found in each of several different applications. Once an instructional approach has been designed and the delivery software is developed, it likely can be used repeatedly with specific content substitutions.
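A minimal sketch of the idea, assuming a deliberately simple multiple-choice interaction: the response logic is written once, and each use supplies only its own content. The class, the function, and the two sample items are hypothetical illustrations and are not taken from any of the applications discussed in this book.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ChoiceItem:
    """Content for one use of the model; only this part changes per course."""
    prompt: str
    feedback: Dict[str, str]  # choice key -> feedback shown for that choice
    correct: str              # key of the correct choice


def respond(item: ChoiceItem, answer: str) -> str:
    """Interaction logic shared, unchanged, by every use of the model."""
    if answer not in item.feedback:
        return "Please pick one of: " + ", ".join(sorted(item.feedback))
    prefix = "Correct. " if answer == item.correct else "Not quite. "
    return prefix + item.feedback[answer]


# Two hypothetical content substitutions for the same structure.
dental = ChoiceItem(
    prompt="Which instrument is handed over first?",
    feedback={"a": "The mirror is needed before anything else.",
              "b": "The probe comes after the mirror."},
    correct="a",
)
telesales = ChoiceItem(
    prompt="The caller asks about price first. What do you do?",
    feedback={"a": "Quoting immediately skips the needs check.",
              "b": "Confirming the need first lets you quote the right plan."},
    correct="b",
)

print(respond(dental, "a"))
print(respond(telesales, "a"))
```

Nothing in `respond` knows whether it is serving dental assistants or telesales operators, which is what makes the structure reusable. It is also why such a model can produce content-insensitive interactions if it is stretched too far.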

Models can vary in size from small structures, such as utilities for students to make notes in a personal notebook as they pursue their e-learning, to complex structures that are nearly complete training applications. Several applications used as examples in this book are actually models of varying size and complexity.

The corrective feedback model is a logic model shown in two applications: Who Wants to Be a Miller? and Just Ducky (see Figures 7.25 to 7.29). The user interface, graphics, and content vary tremendously from application to application, but the elegant and effective logic of this model is the same in each.

The task model is a structure that’s particularly effective in teaching procedural tasks, such as the use of a software application. Shown in the Breeze Thru Windows 95 application (Figures 7.22 to 7.23), this model has been used many times with a variety of content, such as teaching the use of custom and commercial software systems, medical devices, and restaurant seating and food-order-processing systems.

A problem-solving investigation model (Figures 7.30 to 7.38) was developed by DaimlerChrysler Quality Institute to require learners to practice applying statistical quality-control methods in an environment that simulates the complexities of large, real-life manufacturing processes. Rather than being given all the information needed, as might be done in an academic approach, learners must think about who might have the needed information, find what is known, interpret data, and determine what information is relevant to solve problems that have actually occurred but have proved difficult to solve. Once the structure was built, it was only a matter of substituting new content to build additional cases for learners to study.

When there are enough uses of an approach, it pays to develop formalized templates or models that are structured specifically for easy content substitution. (See Table 4.2.) Sometimes, it’s even worth going further to provide options for quick customization of how a model will work.

TABLE 4.2 Advantages and Disadvantages of Programmed Models

| Advantages | Disadvantages |
| --- | --- |
| Speed development. | Force design compromises. |
| Reduce costs. | Generate content-insensitive interactions. |
| Centralize software bugs and repairs. | May be incompatible with the design context or may force adoption of a vanilla context. |
| Provide interface consistency. | Lead to monotonous repetition. |
| Reduce risk of programming errors. | May not be adaptable as needs change or more appropriate interactivity is identified. |

When to Consider Models

Some projects are highly design-intensive. Almost every interaction is uniquely tailored to specific activities and outcome behaviors. As a result, models are not likely to be very helpful. Generalizing code so that content can be substituted is sometimes a costly and nontrivial effort, and it shouldn’t be undertaken just in case the structure might be used again when the prospects for reuse haven’t been identified. On the other hand, there are times when a logical structure can be used very widely. Not only is it then appropriate to generalize the code, but it is also important to document its use very carefully and sometimes even provide a number of options to help tailor the structure to a wider variety of applications.

Spotting Opportunities for Models

In the first pass of interaction design, one objective is to be certain that a prototype is developed for every type of learning activity. Although we reviewed in detail why it’s important to avoid refining these prototypes too early, there is yet another reason to delay refinement. Quite often, an approach used in one area of the application will be appropriate in another, but with only a slight twist. Sometimes in reviewing the original intended use, the twist will be valuable there as well. Sometimes not.

Because minimal effort was put into the early prototypes, ideas and structures remain temporary and fluid as instructional designs are considered for all of the content (breadth over depth). It is appropriate to reconsider individually developed designs that appear similar to each other to see whether they might be evolved into a single, more generally useful structure. This will tap the advantages of models, and, unless inappropriate compromises are made to force compatibility, it will also avoid most of their disadvantages. More interesting still, it may now be possible to build a more powerful, sophisticated set of interactions because of their broad utility.

Summary

The challenges of designing and implementing excellent e-learning applications are many and varied. Team approaches are necessary, even though some of the best work has been done by dedicated individuals working over long periods of time. Most projects cannot wait for the extended development time needed by individuals. Unfortunately, traditional team approaches have had their unique disadvantages as well, resulting in mediocre products, delayed implementation, and organizational tension.

Successive approximation is a pragmatic approach that has overcome many of the problems experienced with team design and development. It is an iterative process that begins with rapid prototyping and evolves the most promising designs into the best application possible within given constraints. Frequent review of emerging applications takes the lead, rather than single-pass development working from design documents. Above all else, the process must be iterative to be cost-effective.

A successful example of successive approximation called Savvy involves six key principles and activities:

- A Savvy Start

- Recent learners as designers

- Typical learner testing

- Breadth-over-depth sequencing of design and development efforts

- Team consistency and ownership

- Production with models