CHAPTER FIVE

LEARNER MOTIVATION

I am sometimes asked why I’ve stayed in the field of technology-based instruction for so many years. I can tell you that once you experience some successes and see what is possible, the allure of repeating those successes is very strong. Let me tell you a story of an early success with the PLATO system:

How often do kids break into school to study? How many situations do you know of in which school children voluntarily assemble to buckle down to schoolwork?

I have had many experiences in which I have personally seen technology-based instruction make a dramatic difference in the lives of learners. Each one of them has given me a craving for more. Each one of them has shown me how much untapped potential lies in e-learning. One area that definitely deserves more attention is the ability of e-learning to motivate learners.

Although outstanding teachers do their best to motivate learners on the first day of class and continually thereafter, many e-learning designers don’t even consider the issue of learner motivation, let alone take action to raise it. They tend to focus instead on the meticulous presentation of information or content. Perhaps they believe the techniques teachers use to motivate learners are beyond the capabilities of e-learning. Instead of looking for alternative ways to use the strengths of e-learning technology to address motivation, they simply drop the challenge.

The e-Learning Equation

Learning is an action taken by and occurring within the learner. Instructors cannot learn for their learners, and neither can e-learning technology, with all its graphics, animations, effects, audio, interactivity, and so on. Learners must be active participants and, in the end, do the learning themselves.

The learners’ motivation determines, in large part, the level of their participation in the learning activity and their ability to learn. Motivation is an essential element of learning success.

With apologies to Albert Einstein, let me advance a conceptual model through a simple equation:

$$e = m^2 c$$

where e = edification (or e-learning outcomes)

m = motivation

c = content presentation

The equation suggests that if there is no motivation (m = 0), there are no learning outcomes (e = 0), regardless of how perfectly structured and presented the content may be. Of course, if the content is inaccurate or faulty (c = 0), the learning outcomes will also be null. However, the equation suggests that emphasizing motivation in the course can have a far greater impact than simply being comprehensive in content presentation.

No matter what the speculative value of c (content presentation) might be, when m = 0, e = 0. If you think about it, you know this is quite possible. Surely you’ve attended a class that went into a topic you didn’t see as valuable or applicable to you. You started thinking about something else and later jerked to attention, realizing you had no idea of what had been said.

Motivation and Perception

Our selective perception allows us to filter out uninteresting, unimportant stimuli. It’s very important that we have the capability of ignoring unimportant stimuli; otherwise, we would be shifting our attention constantly. Without selective perception, we wouldn’t be able to attend properly to important events.

There are countless stimuli vying for our attention; however, most of these are unimportant and need to be ignored. As we sit in a lecture, for example, we can study the finish of the ceiling, note the kinds of shoes people are wearing, and check to see if our fingernails are trimmed evenly and to the desired length. The more we attend to unimportant things, the more difficult it becomes to effectively attend to those things that might actually be quite important to us.

Our motivations influence our perceptions and the process of focusing our attention. We attend to things of importance, whether they paint exciting opportunities in our minds or present dangers. When we see the possibility of winning a valued prize by correctly and quickly answering a question, for example, we focus and ready our whole body to respond. At that moment, we become completely oblivious to many other stimuli so that we can focus exclusively on the question. Conversely, if we expect little gratification from winning or have almost no possibility of winning, we might remain fully relaxed, not trying to generate an answer, or not even listening to the question. We might, instead, begin watching the behaviors of those really trying to win. If people watching provides little entertainment, that ceiling may take on a new fascination. There’s a good likelihood we’ll never even hear the question or the correct answer, because we’ll be listening to our internal thoughts instead.

Motivation and Persistence

As the gateway to learning, motivation first helps us attend to learning events. It then determines what actions we take in response to them. Viewed from the perspective of whether we achieve the behavioral changes targeted, success correlates with motivation as shown in Figure 5.1.

FIGURE 5.1 Effect of motivation on behavioral outcomes.

When we’re motivated to learn, we find needed information even if it’s not so easily accessed. We make the most of available resources. We stay on track with even a disorganized or inarticulate lecturer. We ask questions, plead for examples, or even suggest activities and topics for discussion. If the lecturer proves to be a steady, untiring adversary, we turn to other learners, the library, or even other instructors for the help we need. We might switch to another class if that’s an option, but somehow, if our motivation is high enough, we learn what we want to learn. And to the extent possible under our control, we refuse to waste time in unproductive activities.

Instructional Design Priorities

As we’ve seen, motivation controls our perception—what we see, hear, and experience. Motivation also fuels our persistence to achieve selected goals. Strong motivation, therefore, becomes critical for sustained learning.

There may be exceptions to this statement, as there are to most rules; however, many situations that appear to be exceptions are not. We learn things, for example, from simple observation. We learn from traumatic events, from surprises, and from shocking happenings. Are these exceptions? We find concurrent motivations at work even in these cases. Our motivations to belong, be safe, avoid unemployment, or win can all translate readily into motivation to learn. They cause what appears to be involuntary learning, but is, nonetheless, motivated learning.

Learning motivation is nearly always energized by other motivations, whether negative (such as avoidance of embarrassment, danger, or financial losses) or positive (such as competence, self-esteem, recognition, or financial gain).

e-Learning Design Can Heighten as Well as Stifle Motivation

In a circular fashion, e-learning can help build the motivation needed for success. Heightened motivation strengthens the effectiveness of the e-learning and therefore promotes learning. This self-energizing system is to be fostered.

An opposing, deadly cycle is the alternative. Poor e-learning saps any motivation learners have. As learners suspect their e-learning work is of little value, they attend less and participate less, thus reducing the possibility that it will be of value. With evidence that e-learning isn’t working for them, learner interest and motivation continue to drop. This self-defeating system is, of course, to be avoided.

e-Learning Dropouts

Many e-learning designs tacitly assume, expect, require, and depend on high learner motivation, as evidenced by the good measure of persistence it takes just to endure them. If learner motivation wanes before the completion of instruction, learners drop out mentally, if not physically. Know what? This is exactly what is reported: 70 percent of learners drop out of their e-learning applications without completing them (Islam 2002).

Optimists claim (or hope) that high e-learning dropout rates simply reflect the attrition of learners who have gotten all they needed. Learners quit, it is reasoned, because their needs have been satisfied and they feel ready to meet their performance expectations. This may be their excuse, but I doubt that learners feel their initial e-learning experiences were so successful that they need not complete the training.

MOVIEGOER: This movie is so good, let’s leave—quick, before it ends.

READER: This book is so good, I don’t think I’ll read any more of it.

E-LEARNER: This e-learning application is so good, I think I’ll quit.

Does this logic sound right to you? e-Learners more likely drop out because they can’t take the boredom and frustration than because the instruction has served their needs so well. The time, effort, and patience required are greater than the perceived benefit.

Even Excellent Instruction Must Be Sold to the Learner

To create successful e-learning—or any successful learning program, for that matter—we need to make sure that value really is there. But perhaps just as important, we also need to make sure learners see and appreciate that value in concrete terms. Each learner must buy into the value of the learning—not just in general, but for specific, meaningful benefits. In other words, we need to sell learners on the truthful proposition that participation will provide benefits worth the time and effort. Doing so will stimulate vital motivation and give the program a chance to succeed.

I’m not talking about marketing spin meant to mask a miserable experience (although if the experience is going to be miserable, it’s more important than ever to sell it successfully to the learner). Nor do I suggest cajoling learners or propositioning them: “If you struggle through this, you’ll be much the better for it.”

Adult learners are sensitive to manipulation. If they feel they are being manipulated, they are likely to react defensively. They may be motivated to prove the instruction was unnecessary or ineffective. Rather, everyone has much to gain if the learner sees the personal advantages of learning. Again, the value must truly exist and learners must be able to envision and appraise the win firsthand.

All of this is done to ensure that the m in e = m²c reaches the highest value possible.

It Isn’t Bad News That Motivation Is Essential

Knowing the importance of learner motivation gives us an explanation of many e-learning failures and points the way to success. This is good news.

Actually, even better news lies in knowing that motivation levels change from situation to situation and from moment to moment. In other words, motivation levels are context-sensitive and can be influenced. We don’t have to be satisfied with the levels of motivation learners carry into a learning event. If a learner’s motivation is low, we can do things that are likely to raise it.

$$e = m^2 c$$

You may have noted that, in contrast to Einstein’s equation (e = mc²), I have squared the motivation factor. This is done to emphasize not only that motivation is essential, as would be indicated simply by e = mc, but that the learning outcome is more likely to be affected by motivational factors than it is by the content presentation. Again, if motivation is high, learners will make the most of whatever content information is available. If motivation is low, refining presentation text and graphics may help to improve learning somewhat, but not to the same level as heightening motivation.
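Reading e = m²c only as the conceptual model intended here (there are no real units for m or c), a quick scaling check shows why squaring m gives motivation the larger weight. Here k is an arbitrary positive scaling factor introduced for illustration:

$$e(km,\, c) = (km)^2 c = k^2 \,(m^2 c) = k^2\, e(m, c)$$
$$e(m,\, kc) = m^2 (kc) = k \,(m^2 c) = k\, e(m, c)$$
$$e(0,\, c) = 0^2 \cdot c = 0 \quad \text{for any } c$$

With k = 2, doubling motivation quadruples the outcome, while doubling the quality of content presentation only doubles it. With m = 0, no amount of content presentation produces any outcome.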

It’s also critical that these two elements complement each other. Content can be structured and presented in ways that are sensitive to the issue of motivation. That is, just as confusing and incomprehensible content presentation can extinguish motivation (i.e., c can be 0), selection of the right content at the right time can stimulate motivation.

Further, interactivity allows learners to act on their motivations. Seeing their efforts advance themselves toward their goals reinforces motivation. We might, therefore, extend our equation to include the value of interactivity:

$$e = m^2 c i$$

where i = interactivity

The equation is not to be taken in any literal, computational sense, of course. We have no practical units of measurement applicable to content presentation nor standardized measures of motivation; however, the factors that determine learning shown here are functional and easily observed. The equation serves as a reminder not to omit attention to each factor.

Motivation to Learn versus Motivation to Learn via e-Learning

Motivation has focus. It has a goal. We can hold several motivations at once, each with its own goal, and they are always competing with one another. In some contexts, one motive becomes strong enough to dominate our attention. At these moments, stimuli unrelated to the dominant motivation won’t even reach our consciousness.

Realizing the context sensitivity of motivations, we can also understand that e-learning events have the power to both increase and decrease learner motivation. Like every other aspect of human behavior, learner motivation is complex, but a simple view of motivation is sufficient to reveal powerful design principles for interactive instruction.

The simple view is this:

- If we want to learn, we will find a way.

- If we don’t want to learn, we won’t.

- If we want to learn but the e-learning application isn’t working for us, we will turn to something else.

When we want the cost savings, quality control, easy access, and other advantages of e-learning, the question becomes, “How do we get learners to want to learn via e-learning?”

The answer: Seven Magic Keys.

Seven Magic Keys to Motivating e-Learning

Yes, I have seven (rim shot please) “magic keys” to making e-learning experiences compelling and engaging.

It’s never as easy as following a recipe in e-learning. I can’t emphasize enough that there is a lot to know about instructional design, and that although good e-learning design may look simple to create, it isn’t. A great idea will often look obvious, and an effective implementation of it may look easy when complete, but uncovering the simple, “obvious” ideas can be a very challenging task.

It is therefore important to set out in promising directions right from the start. The magic in these keys is that they are such reliable and widely applicable techniques. Their presence or absence correlates well with the likely effectiveness of an e-learning application—at least to the extent that it provides motivating and engaging experiences. Thankfully, these features are not often more difficult to implement than many less effective interactions. These are realistic, practical approaches to highly effective e-learning. Let’s begin with Table 5.1, which lists seven ways to enhance motivation—the Seven Magic Keys—and then discuss them in more detail with examples.

TABLE 5.1 Ways to Enhance Learning Motivation—the Magic Keys

| Key | Comments |
| --- | --- |
| 1. Build on anticipated outcomes. | Help learners see how their involvement in the e-learning will produce outcomes they care about. |
| 2. Put the learner at risk. | If learners have something to lose, they pay attention. |
| 3. Select the right content for each learner. | If it’s meaningless or learners already know it, it’s not going to be an enjoyable learning experience. |
| 4. Use an appealing context. | Novelty, suspense, fascinating graphics, humor, sound, music, animation—all draw learners in when done well. |
| 5. Have the learner perform multistep tasks. | Having people attempt real (or “authentic”) tasks is much more interesting than having them repeat or mimic one step at a time. |
| 6. Provide intrinsic feedback. | Seeing the positive consequences of good performance is better feedback than being told, “Yes, that was good.” |
| 7. Delay judgment. | If learners have to wait for confirmation, they will typically reevaluate for themselves while the tension mounts—essentially reviewing and rehearsing! |

Using the Magic Keys

You don’t have to use every Magic Key in every application. You would be challenged to do it even if you were so inclined. On the other hand, the risk of failure rises dramatically if you employ none of them.

Although it’s rather bold to say so, I do contend that if you fully employ just one of these motivation stimulants, your learning application is likely to be far more effective than the average e-learning application. Everything else you’d do would become more powerful because the learner would be a more active, interested participant.

Magic Key 1: Build on Anticipated Outcomes

We have motives from the time we are born. As we mature, learned motives expand on our instinctive motives. All our learned motives can probably be traced to our instinctive motivations in some way, but the helpful observation here is that all persons have an array of motivations that can be employed to make e-learning successful (Figure 5.2). A simple and effective technique to build interest in an e-learning application is to relate its benefits to learner desires for comfort, power, self-esteem, and other prevalent motivations.

FIGURE 5.2 Prevalent motivations.

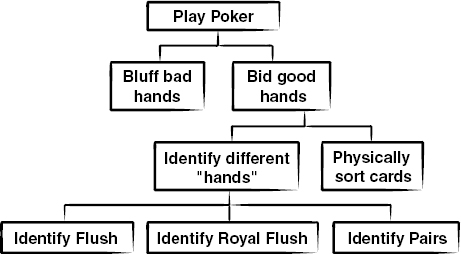

Instructional Objectives

Much has been made of targeted outcome statements or instructional objectives, perhaps beginning with Robert Mager’s insightful and pragmatic how-to books on instructional design, such as Preparing Instructional Objectives (Mager 1997c), Measuring Instructional Results (Mager 1997b), and Goal Analysis (Mager 1997a; Atlanta, GA: Center for Effective Performance). Instructional designers are taught to prepare objectives early in the design process and to list learning objectives at the beginning of each module of instruction. Few classically educated instructional designers would consider omitting the opening list of objectives.

Mager provides three primary reasons why objectives are important:

Objectives . . . are useful in providing a sound basis (1) for the selection or designing of instructional content and procedures, (2) for evaluating or assessing the success of the instruction, and (3) for organizing the learners’ own efforts and activities for the accomplishment of the important instructional intents. (Mager 1997c, p. 6)

There’s no doubt about the first two uses. If you don’t know what abilities you are helping your learners build, how can you know if you’re having them do the right things? As I’ve emphasized before, success depends on people doing the right thing at the right time. If no declaration of the right thing is available, you can neither develop effective training nor measure the effectiveness of it—except, perhaps, by sheer luck. Objectives are a studied and effective way of declaring what the “right thing” is. As Mager says, “if you know where you are going, you have a better chance of getting there” (Mager 1997c, p. 6).

It’s the third point that’s of interest here—using objectives to help learners organize their learning efforts. Certainly objectives can help. When objectives are not present, learners must often guess what is important. In academic or certification contexts, learners without objectives must guess what will be included in the all-important final examination. After they have taken the final exam, learners know how they might have better organized their learning efforts. But, of course, it’s too late by then.

For objectives to provide benefits to learners, learners have to read, understand, and think about them. Unfortunately, learners rarely spend the time to read objectives, much less use them as learning tools. Rather, they discover that the objectives page is, happily, a page that can be skipped over quickly. Learners think, “I’m supposed to do my best to learn whatever is here, so I might as well spend all my time learning it rather than reading about learning it.”

Lists of Objectives Are Not Motivating!

Many designers hope objectives will not only help learners organize their study, but also motivate them to want to learn the included content. Will they? Not if learners don’t read them. How readable are they? It depends, of course, on how they are written.

Accomplished writers know that objectives should have three parts:

- A description of behavior that demonstrates learning

- The criterion for determining acceptable performance

- The conditions in which the performance must be given

Writers have learned the importance of using the right vocabulary; see Table 5.2 to get the gist of it.

TABLE 5.2 Behavioral Objectives—Acceptable Verbs

| Not Measurable | Measurable |
| --- | --- |
| To know | To recall |
| To understand | To apply |
| To appreciate | To choose |
| To think | To solve |

Measurable behavioral objectives are, indeed, critical components to guide the design of effective training applications. Designers need such objectives, and none of these components should be missing from their design plans. But the question here is about their use as motivators.

You can hardly yawn fast enough when you read a block of statements containing such “proper” objectives as:

Given a typical business letter, you will be able to identify at least 80% of the common errors by underlining inappropriate elements or placing an “X” where essential components are missing.

Motivating? I don’t think so. Objectives are certainly important, but listing such statements as this in bullet points at the start of a program is boring and ineffective. There are better ways to motivate learners.

How about Better-Written Objectives?

You can certainly write objectives in more interesting ways and in ways more relevant to the learner. Frankly, when deciding just how much energy and involvement to commit, learners want to know what’s in it for them (i.e., how it relates to their personal network of motivations). Effective objectives answer the question and give motivation a little boost; see Table 5.3.

TABLE 5.3 More Motivating Objective Statements

| Instead of Saying . . . | You Could Say . . . |
| --- | --- |
| After you have completed this chapter, given a list of possible e-learning components, you will be able to list the essential components of high-impact e-learning. | In a very short time, say about two hours, you will learn to spot the flaws in typical designs that make e-learning deathly boring and you will know some ways to fix them. Ready? |

Remember that the more fully we can sell the learner on the advantages of learning the material at hand, the more effective the material will be. But if you’ve come to agree on this point, you might wonder whether textual listings of objectives are really the best way to sell anyone on learning. Good thought. We can do better!

Don’t List Objectives

If learners aren’t going to read objectives, even valuable and well-written objectives, listing them at the beginning of each module of instruction isn’t a very effective thing to do.

In e-learning, we have techniques for drawing attention to vital information. We use interactivity, graphics, animation—in short, all the powers of interactive multimedia—to help learners focus on beneficial content. Why, then, shouldn’t we use these same powers to portray the objectives and sell the learning opportunity to the learner?

Instead of just listing the objectives, provide meaningful and memorable experiences.

Example 1: Put the Learner to Work

Perhaps the clearest statement of possible outcomes comes from setting the learner to work on a stated task. If learners take a try and fail, at least they’ll know exactly what they are going to be able to do when they complete the learning.

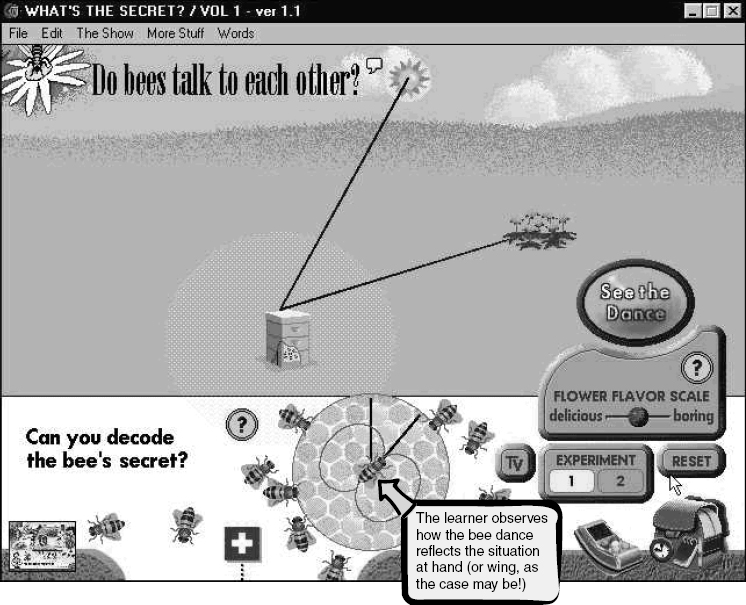

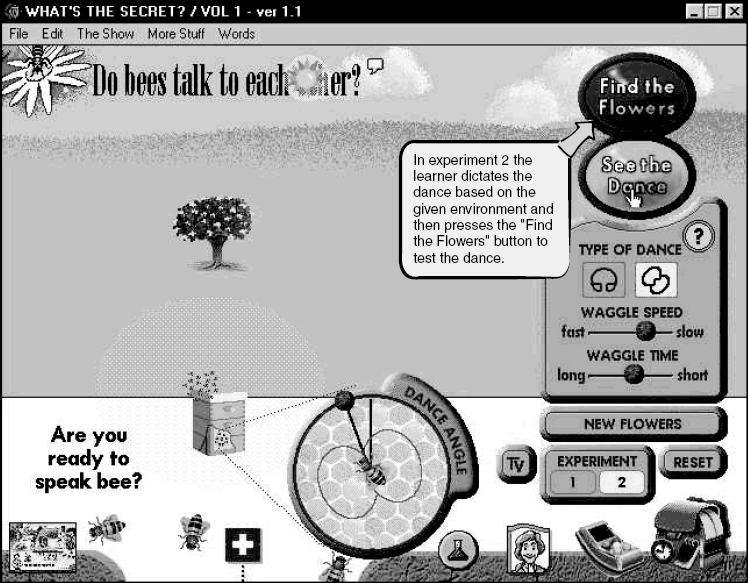

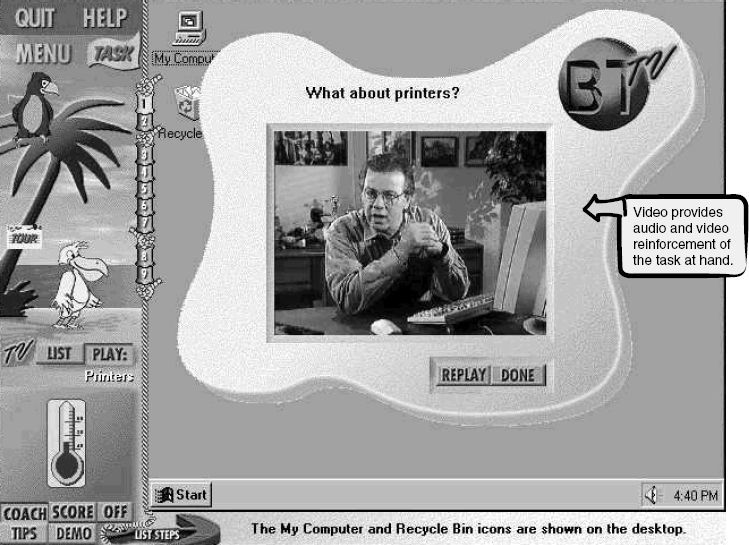

In this example of an award-winning application to teach users the ins and outs of Microsoft Windows (Figures 5.3 to 5.8), individual objectives are quite clear in the presentation of the task itself. Learners are immediately put to work to see if they can select a printer. Many learning aids are available, including a complete demonstration, a demonstration of the next step, hints, and sometimes an amusing video that puts the overall utility of knowing how to perform the task in context. Learners, engaged in their task, are motivated to seek and apply help as needed.

FIGURE 5.3 A meaningful task challenge takes the place of a traditional objective statement.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

FIGURE 5.4 Various types of aids are available.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

FIGURE 5.5 Learners can request a demonstration.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

FIGURE 5.6 Tips are available to suggest alternate ways of completing the task.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

FIGURE 5.7 Videos add humor and perspective.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

FIGURE 5.8 Scoring and progress indicators encourage learners to practice for perfection.

Breeze Thru Windows 95 Basics. Courtesy of Allen Interactions Inc.

You might be concerned that some failures, especially early on, will frustrate or demoralize learners. This is an appropriate concern. Interactive features must be provided to help all learners work effectively with risk. Please see Magic Key 2 for a discussion of risk management.

Example 2: Drama

Motivation is not an exclusively cognitive process. Motivations involve our emotions and our physiological drives, as well. Good speakers, writers, and filmmakers are able to spirit us on to take action, to reevaluate currently held positions, and to stir emotions that stick with us and guide our future decisions.

Imagine this scenario. Airline mechanics are to be trained in the process of changing tires on an aircraft in its current position at a gate. You can easily imagine the typical first page of the training materials reading something like this:

At the completion of this course, you will be able to:

- Determine safety concerns and proper methods of addressing each.

- Confirm and cross-check location of tire(s) to replace.

- Obtain appropriate replacement tire.

- Select appropriate chock or antiroll mechanisms and secure according to approved procedures for each.

Now imagine this approach. You press a key to begin your training. Your computer screen slowly fades to full black.

Lightning sounds crash and your screen flickers bright white a few times. You see a few bolts of lightning between the tall panes of commercial windows just barely visible. Through the window, you now see an airplane at the gate. The rain is splashing off the fuselage. A gust of wind makes a familiar sound.

The scene cuts to two men in yellow rain slickers shouting at each other to be heard over the background noise of the storm and airport traffic.

“You’ll have to change that tire in record speed. The pilot insists there’s a problem, and that’s all it takes to mandate the change. There’s going to be a break in the storm and there are thirty-some flights hoping to get out before we’ll probably have to close down again.”

“No problem, Bob. We’re on it.”

Return to the windows, where we now can see people at the gate—a young man in business attire is pacing worriedly past an elderly woman seated near the windows.

“Don’t worry, young man. They won’t take off if the storm presents a serious danger. Even in a storm and with all the possible risks of air travel, I still think it’s the safest way for me to get around.”

“Oh, thanks, ma’am. But you see, my wife is in labor with our first child. I missed an earlier flight by ten minutes, and now this is the last one out tonight. I’m so worried this flight will be delayed for hours—or worse, even cancelled due to weather. I’m not sure what my options are, but I need to get home tonight.”

Back outside, Bob runs up to three mechanics as they run toward him in the rain.

“All done, Bob. A little final paperwork inside where it’s dry, and she’s set to fly.”

“Your team must have set a record. Even under pleasant circumstances, I don’t know any other employees who could have done such a good job so fast. The departure of this aircraft won’t be delayed one minute because of that tire change. A lot of people will benefit from your work tonight.

“This is going into the company’s newsletter!”

I hope you can see how this dramatic context can much more effectively communicate the learning objectives and motivate learners to pursue them. Who wouldn’t want to be a hero in this circumstance? Who wouldn’t begin thinking, “I’ll bet I could learn to do that . . . maybe even do it better.” And then, “I wonder what it takes. Hope I get a chance to find out in this learning program.”

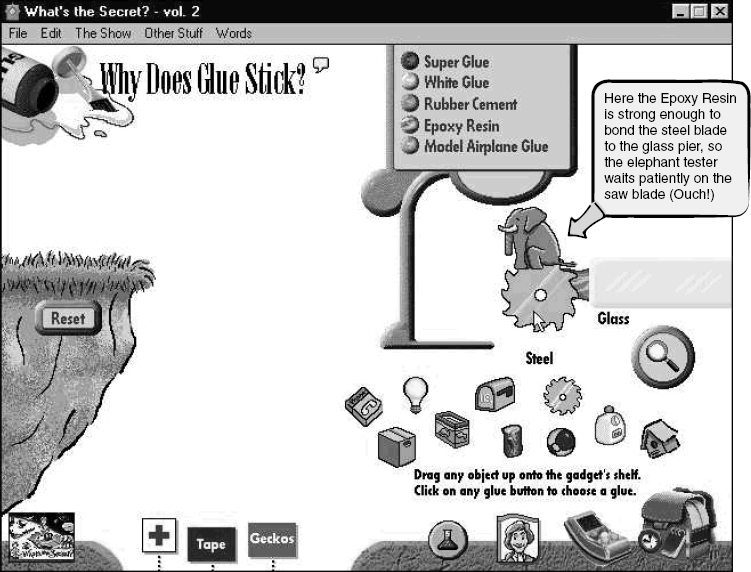

Example 3: Game Quiz

Many interactive courses start with an opening assessment. It’s a sound instructional principle. In order to select appropriate experiences for our learners, we need to know which skills our learners already have and which they don’t.

The problem is that taking a test isn’t an eagerly sought out experience. Many learners, in fact, become terribly fearful just at the thought. They would go to great lengths to avoid being tested, and, if not able to escape the test, may perform far below their actual abilities simply because of fright.

So, while there are good reasons to begin training activities with an assessment, there are also very good reasons not to. The challenge is how to:

- Motivate learners.

- Create a positive attitude about engaging with the e-learning application.

- Determine learner skill levels.

- Communicate what can be learned in the course.

- Set expectations in a convincing manner.

Sometimes it is not so much what you do as how you do it. One of the advantages of technology-based instruction is that learning experiences can be very private. Some of the fear of testing comes from apprehension about what others will think of a poor public performance.

There’s much emotional carryover into e-learning from other experiences, even when concerns about embarrassment truly are not applicable. These concerns cannot be simply ignored, appropriate or not. Experience does show that nearly everyone can become accustomed to, and even appreciate, private testing when the results are kept private and are used to make learning experiences more fitting and enjoyable.

Not all quizzes are alike. While many are threatening, it’s possible to make them fun. By creating as much distance as possible between the feared, graded academic test and a game in which not knowing an answer is fully acceptable and knowing one is a happy surprise to everyone, it’s possible to meet all the challenges previously mentioned. Learners get into it and are energized. They see that there will be humor about mistakes and opportunities to address weaknesses (that’s what the training is all about). They see whether they are beginners, intermediate performers, or even too advanced for the course. They see what content is covered and see some of the things that are important. And finally, if the testing is done very well (perhaps even involving some simulations), they see what kinds of tasks they will be able to perform once the training has been completed.

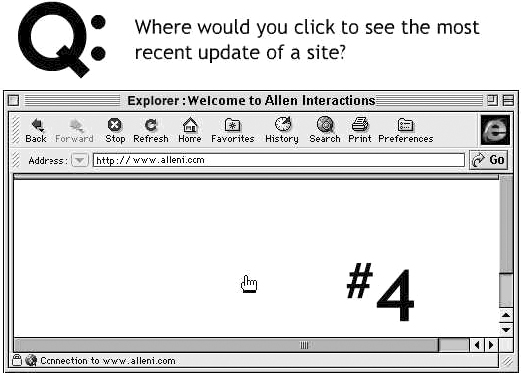

In this example, Fallon Worldwide (an advertising agency) wanted to assess each employee’s level of Internet knowledge to best provide appropriate training. The idea of a formal test was unlikely to be an attractive proposition and would unnecessarily prejudice learners against the effort. This engaging quiz plays more like a game than an assessment tool. It moves quickly, is entertaining in its visual and audio effects, previews and highlights important information about the Internet, and still manages to gather all the desired information (Figures 5.9 to 5.11). You’ll need to check this one out on the CD to get the full impact.

FIGURE 5.9 Opening sequence sets expectations for challenge and fun.

Internet Readiness Quiz. Courtesy of Fallon Worldwide.

FIGURE 5.10 Presentation uses engaging and amusing animation to present questions.

Internet Readiness Quiz. Courtesy of Fallon Worldwide.

FIGURE 5.11 Question formats vary with content to give learners a preview of instructional content.

Internet Readiness Quiz. Courtesy of Fallon Worldwide.

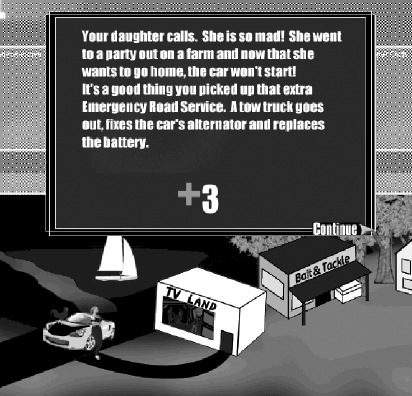

Magic Key 2: Put the Learner at Risk

When do our senses become most acute? When are we most alive and ready to respond? It’s when we are at risk, when we sense danger (even pretended danger) and must make decisions to avert it. It’s when we see an opportunity to win and the possibility of losing.

Games energize us primarily by putting us at risk and rewarding us for success. Although it’s easy to point to the rewards of winning as the allure, it is also the energizing capabilities of games that make them so attractive. Risk makes games fun to play. It feels good to be active, win or lose.

Proper application of risk seems to provide optimal learning conditions. I used to think, in fact, that putting learners at some level of risk was the only effective motivator worth considering in e-learning and the most frequently omitted essential element. While I have identified other essential elements—the other Magic Keys—I continue to believe that risk is the most effective in the most situations. There are positives and negatives, however; see Table 5.4.

TABLE 5.4 Risk as a Motivator

| The Positives | The Negatives |
| --- | --- |
| Energizes learners, avoids boredom | May frighten learners, causing anxiety that inhibits learning and performance |
| Focuses learners on primary points and on performance | May rush learners to perform and not allow enough time to build a thorough understanding |
| Builds confidence in meeting challenges through rehearsed success | May damage confidence and self-image through a succession of failures |

Problems with Risk as a Motivator

Consider instructor-led training for a moment. In the classroom, putting the learner at risk can be as simple as the instructor posing a question to an individual learner. The risk to the learner goes far beyond failing to answer correctly. Public performance can affect social status, social image, and self-confidence for better or worse. Even if we’re in a class in which we know none of our classmates, being asked a question typically causes a rush of adrenaline because of what’s at stake.

Competition is a risk-based device used by many classroom instructors to motivate learners. In some of my early work with PLATO, I managed an employee who considered one of PLATO’s greatest strengths to be its underlying communication capabilities, which were able to pit multiple users against each other in various forms of competitive combat. Although these capabilities were often used just for gaming, he was intrigued with the idea of using them to motivate learners.

Competitive e-learning environments can certainly be created, but, unfortunately, pitting learners against each other often constructs a win/lose environment. It may be true that even the losers are gaining strengths, and they may be effectively motivated to do their best as long as they aren’t overly intimidated by the situation or their competitors. But it’s difficult to prevent the nonwinners from seeing themselves as losers.

Private versus Social Learning Environments

There are advantages to being in the company of others when we learn. We are motivated to keep up. We learn from watching the mistakes and successes of others. Communication with others helps round out our understanding. Successful public performance gives us confidence.

On the other hand, the prospect of public humiliation is, for many, a frightening one. While a successful public performance can easily meet our two essential criteria of being a meaningful and memorable learning event, the event may be memorable mainly because of the fear associated with it. And although traumatic experiences may be memorable, the emotional penalty is too high. For many individuals, practice in a private environment avoids any risk of humiliation and can bring significant learning rewards.

Interestingly, research finds that people respond to their computers as if they were people (Reeves and Nass 1999). We try to win the favor of our computers and respond to compliments extended to us by computer software. Quite surprisingly, solo learning activities undertaken with the computer have more characteristics of social learning environments than one would expect. Talented instructional designers build personality into their instructional software so as to maximize the positive social aspect of the learning environment.

Asynchronous electronic communications such as e-mail and synchronous learning events such as are now possible with various implementations of remote learning can build a sense of being together. Where resources are available to assist learning through such technologies, a stronger social learning environment can be offered, although here, just as with other forms of e-learning, design of learning events, not the mere use of technology, determines the success achieved.

For many organizations, though, one of the greatest advantages of e-learning comes from its constant availability. When there’s a lull in their work, employees can be building skills rather than being simply unproductive while waiting for either more work assignments or scheduled classes to roll around. Unless you can count on the availability of appropriate colearners, it is probably best to design independent learning opportunities.

Don’t Baby Your Learners

Some organizations are very concerned about frustrating learners, generating complaints, or simply losing learners because they were too strongly challenged. They are so concerned, in fact, that they make sure that learners seldom make mistakes. Learners can cruise through the training, getting frequent rewards for little accomplishment.

The organization expects to get outstanding ratings from learners on surveys, and may get them regardless of whether any significant learning occurred. But even greater ratings may be achieved by actually helping learners improve their skills. More important, both individual and organizational success might be achieved.

Break the rules and put learners at some measure of risk. Then provide structures that avoid the potential perils of doing so. Here are some suggestions:

Avoiding Risk Negatives

The positives of risk are extremely valuable, but they don’t always outweigh the negatives. Fortunately, the negatives can be avoided in almost every instance, so that we’re left only with the truly precious positive benefits. Effective techniques include:

- Allowing learners to ask for the correct answer. If learners see they aren’t forced into a risk and can back out at any time, they often warm up to taking chances, especially when they see the advantages of doing so.

- Allowing learners to set the level of challenge. Low challenge levels become uninteresting with repetition. Given the option, learners will usually choose successively greater challenges.

- Complimenting learners on their attempts. Some encouragement, even when learner attempts are unsuccessful, does a lot to keep learners trying. It is important for learners to know that a few failures here and there are helpful and respected.

- Providing easier challenges after failures. A few successes help ready learners to take a run at greater challenges.

- Providing multiple levels of assistance. Instead of all or nothing, learners can ask for and receive help ranging from general hints to high-fidelity demonstrations.

Using these techniques, it is possible to offer highly motivating e-learning experiences with almost none of the typical side effects that accompany learning risk in other environments.
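For developers, several of these techniques (learner-set challenge levels, easier challenges after failures, encouragement on every attempt, and multiple levels of assistance) can be combined in one small piece of lesson logic. The Python sketch below is only an illustration under stated assumptions: the class name `ChallengeTracker`, the five-level scale, the one-step-up/one-step-down rule, and the hint tiers are all hypothetical choices, not taken from any particular product.

```python
from dataclasses import dataclass

# Hypothetical help tiers, ordered from least to most revealing.
HINT_TIERS = ["general hint", "specific hint", "demonstration", "show answer"]


@dataclass
class ChallengeTracker:
    """Tracks one learner's challenge level and help requests.

    Assumed rules (illustrative only): levels run from 1 to max_level,
    success moves up one level, failure moves down one level.
    """

    level: int = 1
    max_level: int = 5
    hints_used: int = 0

    def set_level(self, requested: int) -> None:
        # Learners may choose their own challenge, clamped to the valid range.
        self.level = max(1, min(self.max_level, requested))

    def record_attempt(self, correct: bool) -> str:
        self.hints_used = 0  # every new challenge starts with a fresh help ladder
        if correct:
            self.level = min(self.max_level, self.level + 1)
            return "Nice work! Ready for a tougher one?"
        # After a failure, offer an easier challenge and encourage another try.
        self.level = max(1, self.level - 1)
        return "Good try. Let's warm up with an easier one."

    def request_help(self) -> str:
        # Escalate one tier per request instead of all-or-nothing help;
        # once the last tier is reached, keep offering it.
        tier = HINT_TIERS[min(self.hints_used, len(HINT_TIERS) - 1)]
        self.hints_used += 1
        return tier
```

Stepping down only one level after a miss reflects the idea that a few successes ready learners for greater challenges; a real course would tune this rule to its content and audience.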

Example: Stacked Challenges

Why do kids (and adults) play such video games as the classic Super Mario Bros. for hours on end? Shortly after home versions of the game became available, our son and all the children on our block knew every opportunity hidden throughout hundreds of screens under a multiplicity of changing variables. They stayed up too late and would have missed meals, if allowed to, in order to find every kickable brick in walls of thousands of bricks (Figure 5.12). They learned lightning-fast responses to jump and duck at exactly the right times in hundreds of situations. And for what? Toys? Money? Vacations? Prizes? Nope.

FIGURE 5.12 Computer games successfully teach hundreds of facts and procedures.

We play these games as skillfully as possible to be able to continue playing, to see how far we can get, to avoid dying and having to start over, to feel good about ourselves, and to enjoy the energy of a riskful, but not harmful, situation.

In the process of starting over (and over, and over), we practice all the skills from the basics through the recently acquired skills, overlearning them in the process and becoming ever more proficient. We take satisfaction in confirming our abilities as our adrenaline rises in anticipation of reencountering the challenges that doomed us last time.

Imagine, in contrast, the typical schoolteacher setting out to teach students to identify which bricks hide award points. There would be charts of the many thousands of bricks in walls of various configurations. You can just imagine the homework assignments requiring students to memorize locations by counting over and down so many bricks and circling the correct ones. In class, students would grade each other’s papers.

Students would be so bored that behavior problems would soon erupt. The teacher would remind students that someday in the future, they would be glad they could identify the bricks to kick. Great success would come to those learners who worked diligently. After several years of pushing students through these exercises, the teacher might turn to e-learning for help. With a happy thought of transferring the teaching tedium to the computer, the teacher would create an online version of the same boring instructional activities.

To me, this sounds similar to a lot of corporate training. While we might not see our trainees pulling each other’s hair, making crude noises, and writing on desks, we can be sure they will be thinking about things other than the training content when learning tasks are so uninteresting.

Just teaching one task within the complex behaviors of the venerable Super Mario Bros. player would be a daunting challenge for many educators, yet millions of people have become adroit at these tasks with no instruction—at least with no typical instruction. Why aren’t the obviously effective instructional techniques applied to e-learning, with all its interactive multimedia capabilities?

Good question. Why not, indeed!

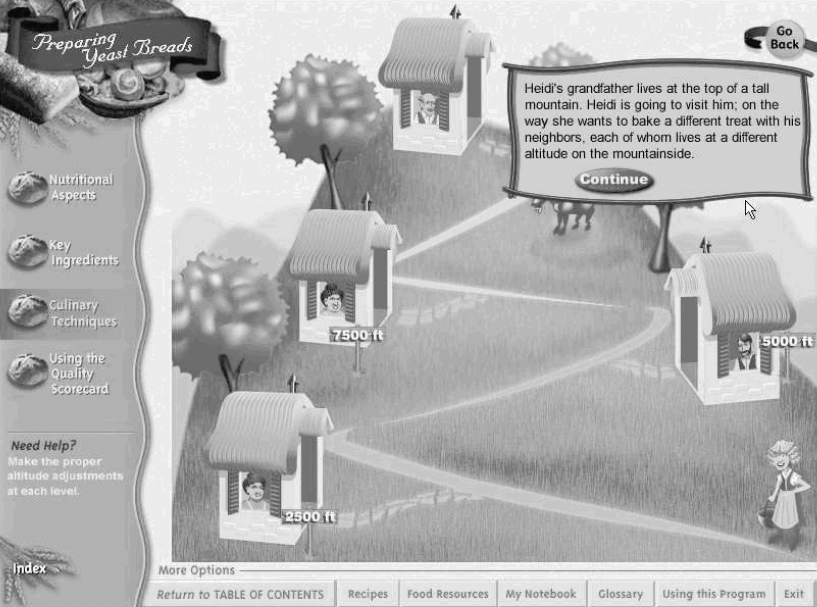

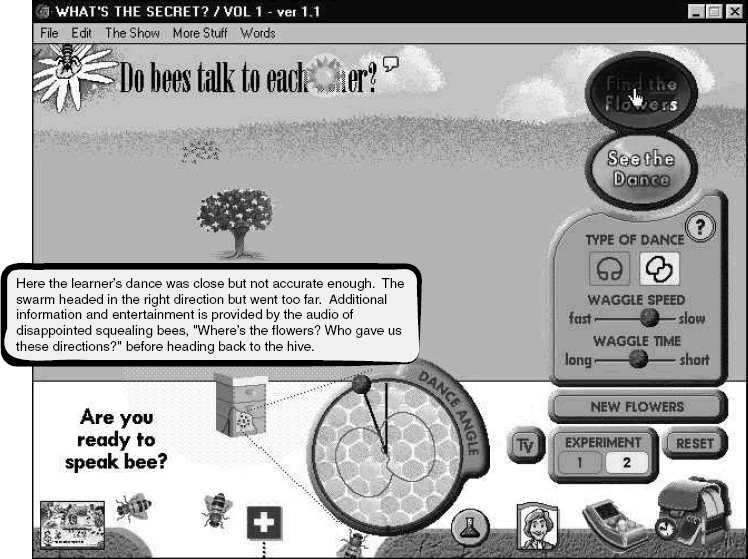

In a project done for the National Food Service Management Institute (NFSMI), Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains, there were two goals: to teach specific culinary techniques and to teach concepts to give learners a deeper understanding of some of the more complicated aspects of quantity food preparation.

Food service workers must be able to adjust recipes for proper results at different altitudes. Adjustments can be made to the leavening, tougheners, tenderizers, liquids, baking temperature, pan greasing, and storage. The lesson provides details of these required adjustments, but it is unlikely that these charts will have any long-lasting effect on a learner who simply reads them (Figure 5.13).

FIGURE 5.13 Explanation of high-altitude baking adjustments provides a lot to remember.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

The adjustment tasks are not complex, difficult to understand, or difficult to do: Increase leavening, reduce liquids, and so on. The problem lies in the fact that there are so many factors to be learned. In a typical training design, there would be concern about how you could keep people awake and focused on the task while you tried to train them on all these pieces of information.

But think about how many specific pieces of information children eagerly learn in the Super Mario Bros. games. If they can learn all of that information, then we should similarly be able to teach this information. What techniques can we borrow from the Nintendo game to make an e-learning experience successful?

Repetition and Goals

Repetition is a primary way of getting information stored in our brains (see Figure 3.17). Most theorists believe we first perceive new information, transfer it to short-term memory, and then, through repetition, eventually integrate new information with existing information already held in long-term memory. Whether this description of the internal process is exactly correct really doesn’t matter. What is clearly observable is that repetition facilitates learning, recall, and performance.

The problem is that repetition is generally boring. So, we need a way to make repetition palatable. This is where goals come in. By establishing goals we draw attention away from the repetition itself and can focus on the results that repetition achieves. If learners fail over and over again, they will generally want to stop. However, if they fail in a more advanced place each time, they’re motivated to keep working toward perfection. Visible progress keeps learners trying.

These motivators were incorporated fully into the Heidi altitude baking exercise in Cooking with Flair. NFSMI used the visual context of a mountain that the learner’s character (in this case Heidi rather than Mario) has to climb. She wants to visit her grandfather, who lives at the top, and prepare some baked goods for him to enjoy (Figure 5.14).

FIGURE 5.14 Learners face the challenge of helping Heidi climb a mountain to reach her grandfather.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

Along the way, she stops to visit his neighbors, who live at different altitudes. Her task is to prepare gifts of baked goods as she travels along (Figure 5.15). The problem is that all her recipes expect preparation at sea level. If she doesn’t adapt them properly, her baked gifts will be terrible (Figure 5.16), and the mountain goat will knock Heidi down to the base of the mountain (Figure 5.17). She rolls down giggling; there are no injuries or violence here (Figure 5.18). The learner must start all over again.

FIGURE 5.15 Learners attempt to adjust recipes for each altitude.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

FIGURE 5.16 When learners fail to adjust recipes properly, detailed information supports the learning opportunity.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

FIGURE 5.17 When recipe adjustments fail, Heidi meets the goat.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

FIGURE 5.18 Heidi rolls down the hill to start all over again.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

FIGURE 5.19 After succeeding with several altitude adjustments, learners put extra effort into their final challenges.

Cooking with Flair: Preparing Yeast Breads, Quick Breads, Cakes, Pasta, Rice & Grains. Courtesy of National Food Service Management Institute, University of Mississippi.

Learners don’t need to remember all the detail. Instead, they respond at the generalization level. “At this altitude, do I put in more or less flour?” Decisions the learner must make to adapt recipes for each altitude are simple check boxes, but the challenge is a considerable one, given all the possibilities. Feedback is both specific and dramatic.

Specific information is provided in response to the learner’s choices.

Because learners must start over each time they fall, they get the most practice making adjustments at the 2,500-ft level. The extra practice at 2,500 ft is especially important because, on the job, more learners will have to make adjustments for this altitude than for any other.
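A little arithmetic confirms why the lowest level gets the most practice. The Python sketch below assumes, as in the Heidi exercise, that any failure sends the learner back to the first stage. It counts attempts per stage for a hypothetical sequence of pass/fail results; the function name and the stage numbering are illustrative.

```python
from collections import Counter
from typing import Iterable


def practice_counts(outcomes: Iterable[bool], num_stages: int) -> Counter:
    """Count attempts at each stage when any failure restarts at stage 0.

    `outcomes` is a hypothetical sequence of pass/fail results, one per
    attempt, in the order the learner makes them. Counting stops when the
    top stage is passed or the outcomes run out.
    """
    counts: Counter = Counter()
    stage = 0
    for passed in outcomes:
        counts[stage] += 1
        if passed:
            stage += 1
            if stage == num_stages:
                break  # reached the top of the mountain
        else:
            stage = 0  # the goat strikes: back to the bottom
    return counts
```

With three hypothetical stages, where stage 0 stands for the 2,500-ft level, the sequence `[False, True, False, True, True, False, True, True, True]` produces 4 attempts at stage 0, 3 at stage 1, and 2 at stage 2. Because every attempt at a higher stage must follow a pass at the stage just below it, no stage can ever receive more practice than the one beneath it.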

Intense learner involvement builds quickly in this exercise. Learners feel great satisfaction when they finally get Heidi all the way up the mountain to her grandfather (Figure 5.19).

Memory and interest continue to build as Heidi moves up the mountain and closer to her goal.

Magic Key 3: Select the Right Content for Each Learner

What’s boring? Presentations of information you already know, find irrelevant, or can’t understand.

What’s interesting?

- Learning how your knowledge can be put to new and valuable uses

- Understanding something that has always been puzzling (such as how those magicians switch places with tigers)

- Seeing new possibilities for success

- Discovering talents and capabilities you didn’t know you had

- Doing something successfully that you’ve always failed at before

Moral? It’s not enough for learners to believe there is value in prospective instruction (so that they have sufficient initial motivation to get involved). There must also be real value for them as learners. The content must fit with what they are ready to learn and capable of learning.

Individualization

From some of the earliest work with computers in education, there has been an alluring vision: a vision that someday technology would make practical the adaptation of instruction to the different needs of individual learners. Some learners grasp concepts easily, whereas others need more time, more examples, more analogies, or more counterexamples. Some can concentrate for long periods of time; others need frequent breaks. Some learn quickly from verbal explanations, others from graphics, others from hands-on manipulation. The vision saw that, while instructors must generally provide information appropriate to the needs of the average learner, technology-delivered instruction could vary the pacing and selection of content as dictated by the needs of each learner.

Not only could appropriate content be selected for each learner’s goals, interests, and abilities, instruction could also be adapted to learning styles, cultural backgrounds, and even personal lifestyles and interests. Perhaps no instructional path would ever be exactly the same for two learners. It would be possible for some learners to take cross-discipline paths, while others might pursue a single topic in great depth before turning to another. Some learners would need to keep diversions to a minimum while working on basic skills; others would benefit from putting basic skills into real-life contexts almost from the beginning. The areas of potential individualization seemed almost endless, yet achievable. And given enough learners across which to amortize the cost of development, the individualization could be quite practical.

Many classroom instructors do their best to address individual needs, but they know there are severe limits to what they can do specifically for individual learners, especially when there are high levels of variation among learners in a class. The pragmatic issues of determining each learner’s needs, constructing an individual road map, and communicating the plan to the learner are insurmountable even without considering the mainstay of the typical instructor’s day: presenting information. It’s impossible to handle more than a small number of learners in a highly individualized program without the aid of technology.

With e-learning, we do have the technology necessary for truly exciting programs that can adapt effectively to the needs of individual learners. Through technology, the delivery and support of a highly individualized process of instruction is quite practical, and there are actually many ways instruction can be fitted to individual needs.

Fortunately, it’s not necessary to get esoteric in the approach. The simplest approaches contribute great advantages over nonindividualized approaches and probably have the greatest return on investment. It may require an expanded up-front investment, of course, as there’s hardly anything cheaper than delivering one form of the curriculum in the same manner to many learners at the same time. But there are some practical tactics that result in far more individualized instruction than is often provided.

Let’s look at some alternative paradigms to see how instruction can be individualized. Our emphasis here will be on just one parameter—content matching. Efforts to match learning style, psychological profiles, and other learner characteristics are technically possible. But where we haven’t begun to match content to individual needs, we’ll hardly be ready for the more refined subtleties of matching instructional characteristics to learners.

Common Instruction

The most prevalent form of instruction makes no attempt to adapt content to learners. Learners show up. The presentations and activities commence. After a while, a test is given and grades are issued. Done. Next class, please.

This form of instruction has been perpetrated on learners almost since the concept of organized group instruction originated. It’s as simple and inexpensive as you can get, and it’s credible today due not to its effectiveness but to its ubiquity.

It’s unfortunate that so many use common instruction as a model for education and training. This tell-and-test paradigm is truly content-centric. With honorable intentions and dedication, designers frequently put great effort into the organization and presentation of the content and sometimes also into the preparation of test questions, but they do nothing to determine the readiness of learners or the viability of planned presentations for specific individuals. Although this approach easily handles any number of learners and has almost no administrative or management problems, it doesn’t motivate the learner.

The effectiveness of common instruction ranges widely, depending on many factors that are usually not considered, such as motivation of the learners, their expectations, their listening or reading skills, their study habits, and their content-specific entry skills. It is often thought that the testing event and grading provide sufficient motivation, so the issue of motivation is moot. Besides, motivation is really the learners’ problem, right? If they don’t learn, they’ll have to pay the consequences—and hopefully will know better next time.

Wrong thinking, at least in the business world. It’s the employer and the organization as a whole who will pay the consequences—consequences that are far greater than most organizations contemplate (as discussed in Chapter 1).

Individualization rating for common instruction:

Selective Instruction

A front end is added to common instruction in the creation of selective instruction. The front end is designed to improve effectiveness of the inevitable common instruction by narrowing the range of learners to be taught. Clever, huh? Change the target audience rather than offer better instruction.

Most American colleges and universities employ selective instruction at an institutional level; that is, they select and prepare to teach only those learners who meet minimum standards. Students are chosen through use of entry examinations, measures of intellectual abilities, academic accomplishments, and so on. The higher those standards can be, the better (well, the easier) the teaching task will be, anyway.

It makes sense that learners who have been highly successful in previous common instruction activities will be likely to again deal effectively with common instruction’s unfortunate limitations. Organizations of higher learning will, therefore, find their instructional tasks simplified. They need to do little in the way of motivating learners and adapting instruction to individual needs. They can just find bright, capable, energetic learners, and then do whatever they want for instruction. The learners will find a way to make the best of it and learn.

It dismays me that our most prestigious institutions boast of the entrance examination scores achieved by their entering learners. What they know, but aren’t saying, is that they will have minimal instructional challenges, and their learners will continue to be outstanding performers. By turning away learners with lower scores, they will have far less challenging instructional tasks and will be able, if they wish, to devote more of their time to research and publishing. They actually could, if they wished (and I don’t mean to suggest that they do), provide the weakest instructional experience and still anticipate impressive learning outcomes.

We do need organizations that can take our best learners and move them to ever higher levels of achievement. But perhaps the more significant instructional challenges lie in working with more typical learners who require greater instructional support for learning. Here, common instruction probably has been less effective than desired and should be supplanted by instruction that is based more on our knowledge of human learning than on tradition.

Individualization rating for selective instruction:

Remedial Instruction

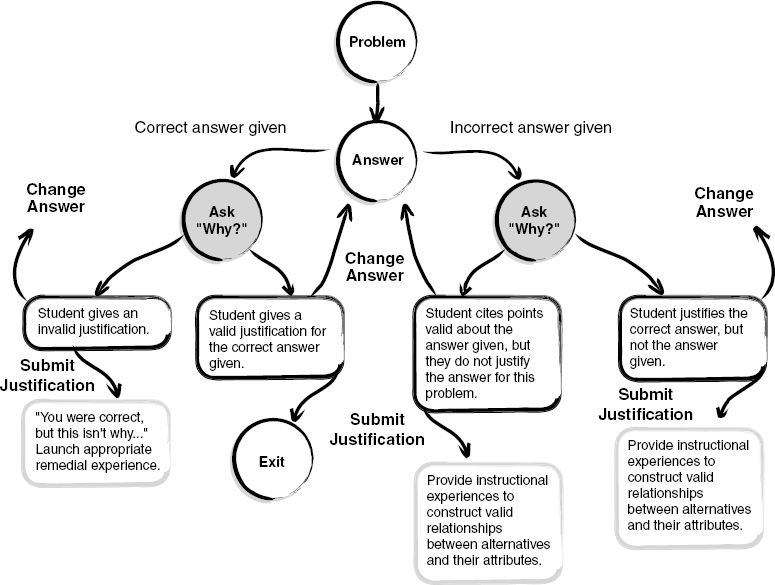

Recognizing that issuing grades is not the purpose of training, some have tried modifying common instruction to actually help failing learners. In this remedial approach, when testing indicates that a learner has not reached an acceptable level of proficiency, alternate instructional events are provided to remedy the situation.

These added events, it is hoped, will bring failing learners to an acceptable level of performance.

Remediation is something of an advance toward purposeful instruction, because, rather than simply issuing grades, it attempts to help all learners achieve needed performance levels.

If learners fail on retesting, it is possible to continue the remediation, perhaps by providing alternate activities and support, until they achieve the required performance levels.
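Reduced to its logic, remediation is a loop: test, and while the learner fails, deliver the next alternate activity and retest. The Python sketch below uses hypothetical `passes_test` and activity callbacks to stand in for real assessments and materials. Notice that, just as the critique below points out, nothing individualized happens until after a failure.

```python
from typing import Callable, Optional, Sequence


def remediate(
    passes_test: Callable[[], bool],
    remedial_activities: Sequence[Callable[[], None]],
) -> Optional[int]:
    """Retest after each alternate activity until the learner passes.

    Assumes the common instruction has already been delivered. Returns how many
    remedial activities were used (0 if the learner passed straight away), or
    None if the alternatives ran out before the learner passed.
    """
    used = 0
    while not passes_test():
        if used == len(remedial_activities):
            return None  # no alternatives left; escalate to an instructor
        remedial_activities[used]()  # deliver the next alternate activity
        used += 1
    return used
```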

One has to ask, if the remediation is more likely to produce acceptable performance, why isn’t it provided initially? The answer could be that it requires more instructor time or more expensive resources. If many learners can succeed without it, they can move on while special attention is given to the remedial learners.

The unfortunate thing about remedial instruction is that its individualization is based on failure. Until all the telling is done, instructors may not discover that some learners are not adequately prepared for the course, or don’t have adequate language skills, or aren’t sufficiently motivated. Although more appropriate learning events can be arranged after the testing reveals individual problems, precious time may have been wasted. The instructor and many learners (including those for whom the instruction is truly appropriate) may have been grievously frustrated, and remedial learners may be more confused and difficult to teach.

All in all, remediation represents better recognition of the true goals of instruction but employs a very poor process of reaching them.

Individualization rating for remedial instruction:

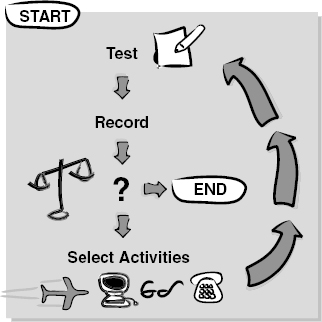

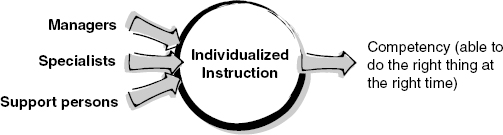

Individualized Instruction

The same components of telling, testing, and deciding what to do next can be resequenced to achieve a remarkably different instructional process. As with so many instructional designs, a seemingly small difference can create a dramatically different experience with consequential differences.

A basic individualized system begins with assessment. The intent of the assessment is to determine the readiness of learners for learning with the instructional support available. Learners are not ready if, on one hand, they already possess the competencies that are the goal of the program, or, on the other hand, they do not meet the necessary entry requirements to understand and work with the instructional program. Unless accurate assumptions can be made about learner competencies (which is rarely the case, despite a common willingness to make such assumptions), it’s very important to test for both entry readiness and prior mastery.
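The two-sided readiness check can be expressed as a simple classification. This Python sketch assumes hypothetical pretest scores from 0.0 to 1.0 for prerequisite skills and for the program’s own objectives, and a single pass threshold of 0.8; real programs would likely set thresholds per skill.

```python
from enum import Enum
from typing import Dict


class Readiness(Enum):
    NOT_READY = "missing entry requirements"
    ALREADY_MASTERED = "already has the target competencies"
    READY = "ready for this program"


def assess_readiness(
    entry_scores: Dict[str, float],
    mastery_scores: Dict[str, float],
    threshold: float = 0.8,
) -> Readiness:
    """Classify a learner from two kinds of pretest results.

    entry_scores: prerequisite skills the program assumes (0.0 to 1.0).
    mastery_scores: the program's own target objectives (0.0 to 1.0).
    The single pass threshold is a simplifying assumption.
    """
    if any(score < threshold for score in entry_scores.values()):
        return Readiness.NOT_READY  # route to prerequisite work first
    if all(score >= threshold for score in mastery_scores.values()):
        return Readiness.ALREADY_MASTERED  # nothing left to teach here
    return Readiness.READY
```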

Record keeping is essential for individualized programs to work, because progress and needs are frequently reassessed to be sure the program is responding to the learner’s evolving competencies. Specific results of each testing event must be kept, not just as an overall performance score, but as a measurement of progress and achievement for every instructional objective each learner is pursuing.
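Keeping results per objective, rather than as one overall score, can be as simple as a dictionary of result histories. The class below is a hypothetical Python sketch, assuming string objective names, scores from 0.0 to 1.0, and results recorded in chronological order.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Optional, Tuple


class LearnerRecord:
    """Keeps every assessment result for every objective, not one overall score.

    Hypothetical structure: objectives are named by strings, scores are 0.0 to 1.0,
    and results are recorded in chronological order.
    """

    def __init__(self, learner_id: str, mastery_threshold: float = 0.8) -> None:
        self.learner_id = learner_id
        self.mastery_threshold = mastery_threshold
        self.history: Dict[str, List[Tuple[date, float]]] = defaultdict(list)

    def record(self, objective: str, score: float, when: date) -> None:
        self.history[objective].append((when, score))

    def latest(self, objective: str) -> Optional[float]:
        results = self.history.get(objective)  # .get() avoids creating empty entries
        return results[-1][1] if results else None

    def unmastered(self, objectives: List[str]) -> List[str]:
        # An objective with no result yet counts as not mastered.
        needs = []
        for objective in objectives:
            score = self.latest(objective)
            if score is None or score < self.mastery_threshold:
                needs.append(objective)
        return needs
```

Keeping the full history, not just the latest score, lets a designer see how each learner’s competencies evolve across reassessments.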

As progress is made and records are updated, readiness and needs are reassessed. If there are (or remain) areas of weakness in which the training program can assist, learning activities are selected for the individual learner. Any conceivable type of learning activity that can be offered to the learner can be included, electronically delivered or not, including workbook exercises, group activities, field trips, computer-based training, videos, and so on.

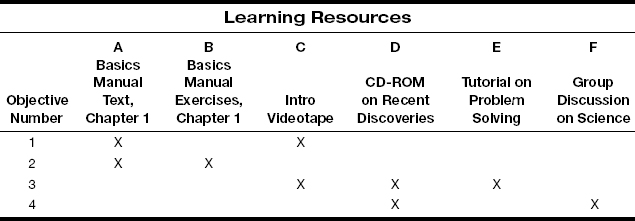

The learning system is greatly empowered if alternate activities are available for each objective or set of objectives. Note that it is not necessary to have separate learning activities available for each objective, although it helps to have some that are limited to single objectives wherever possible. An arrangement like the one shown in Table 5.5 would be advantageous and perhaps typical. However, great variations are workable; it is often possible to set up an individualized learning program based on a variety of existing support materials.

TABLE 5.5 Learning Resource Selection

Looking at Table 5.5, you can see how an individualized learning system works. If a learner’s assessment indicated needed instruction on all four objectives, what would you suggest the learner do? You’d probably select resources A and D, because we don’t happen to have one resource that covers all four and because together A and D provide complete coverage of the four objectives.

Further, to their benefit, these two resources cover more than one objective each. When resources cover multiple objectives, it is more likely that they will discuss how various concepts relate to each other. Such discussions provide bridges and a supportive context for deeper understanding. Some supportive context, particularly important for a novice, is likely to be there in the selection of A and D in the preceding example, whereas it would be less likely in the combination of C, B, and F.
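Choosing resources this way is essentially a set-cover problem. One common heuristic, sketched here in Python, repeatedly picks the resource that covers the most objectives still needed, which naturally favors multi-objective resources like A and D. The catalog below is hypothetical and is not the actual contents of Table 5.5; the greedy method is a swapped-in illustration and does not always find the smallest possible set.

```python
from typing import Dict, List, Set

# Hypothetical catalog: which objectives each learning resource covers.
# Illustrative only; these are not the actual contents of Table 5.5.
CATALOG: Dict[str, Set[int]] = {
    "A": {1, 2},
    "B": {2},
    "C": {1},
    "D": {3, 4},
    "E": {3},
    "F": {4},
}


def select_resources(needed: Set[int], catalog: Dict[str, Set[int]]) -> List[str]:
    """Greedy set cover over the learner's needed objectives.

    Each round picks the resource covering the most still-uncovered objectives.
    Ties go to the alphabetically first name. Greedy cover is not guaranteed
    to be minimal.
    """
    uncovered = set(needed)
    chosen: List[str] = []
    while uncovered:
        # max() returns the first maximal item, so sorting makes ties alphabetical.
        best = max(sorted(catalog), key=lambda name: len(catalog[name] & uncovered),
                   default=None)
        if best is None or not catalog[best] & uncovered:
            break  # no resource addresses the remaining objectives
        chosen.append(best)
        uncovered -= catalog[best]
    return chosen
```

For a learner who needs all four objectives, `select_resources({1, 2, 3, 4}, CATALOG)` returns `["A", "D"]` with this hypothetical catalog.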

Just as with remedial instruction, if a prescribed course of study proves unsuccessful, it’s better to have an alternative available than to simply send the learner back to repeat an unsuccessful experience. If the learner assigned to A and D were reassessed and still showed insufficient mastery of Objective 3, it would probably be more effective to assign Learning Resource E at this point than to suggest repetition of D.

The closed loop of individualized instruction systems returns learners to assessment following learning activities, where readiness and needs are again determined. Once the learner has mastered all the targeted objectives that the learning system supports, the accomplishment is documented, and the learner is ready to move on.
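The whole closed loop (assess, prescribe, study, reassess) fits in a short function. In this Python sketch, `assess` and `study` are hypothetical callbacks standing in for real assessments and learning activities, and the `max_rounds` safety limit is an assumption. Resources already tried are never prescribed again, so a learner who still lacks Objective 3 after A and D is offered an alternative such as E rather than a repeat of D.

```python
from typing import Callable, Dict, List, Set, Tuple


def individualized_loop(
    objectives: Set[int],
    catalog: Dict[str, Set[int]],
    assess: Callable[[Set[int]], Set[int]],
    study: Callable[[str], None],
    max_rounds: int = 10,
) -> Tuple[Set[int], List[str]]:
    """Assess, prescribe, study, and reassess until the objectives are mastered.

    assess(objectives) is a hypothetical callback returning the objectives not yet
    mastered; study(name) stands in for the learner working through a resource.
    Returns (objectives still unmastered, resources used in order).
    """
    tried: List[str] = []
    needs = assess(objectives)
    for _ in range(max_rounds):
        if not needs:
            break  # all objectives mastered: document it and move on
        # Greedy prescription from untried resources, most coverage first.
        uncovered: Set[int] = set(needs)
        plan: List[str] = []
        while uncovered:
            options = [n for n in sorted(catalog)
                       if n not in tried and catalog[n] & uncovered]
            if not options:
                break
            best = max(options, key=lambda n: len(catalog[n] & uncovered))
            plan.append(best)
            uncovered -= catalog[best]
        if not plan:
            break  # no untried alternatives remain; refer the learner elsewhere
        for name in plan:
            study(name)
            tried.append(name)
        needs = assess(objectives)  # close the loop: back to assessment
    return needs, tried
```

With a simulated learner for whom resource D does not succeed on Objective 3, a hypothetical catalog like the one used for resource selection produces the sequence A, D, then E, after which nothing remains unmastered.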

The framework of individualized instruction is very powerful, yet, happily, very simple in concept. Its implementation can be extraordinarily sophisticated, as is the case with some learning management systems (LMSs) or learning content management systems (LCMSs), whether custom-built or off the shelf. However, it can also be built rather simply within an instructional application and have great success.

Note that this structure does not require all learners to pursue exactly the same set or the same order of objectives. Very different programs of study are easily managed. Learners who require advanced work in some areas or reduced immersion in others are easily accommodated.

Individualization Rating for individualized instruction:

Examples

As we have noted, there is a continuum of individualization. Some individualization is more valuable than none, even if the full possibilities of individualized instruction are beyond reach for a particular project. One of the most practical ways of achieving a valuable measure of individualization is to simply reverse the paradigm of tell and test in common instruction.

Fixed Time, Variable Learning

With the almost-never-appropriate tell-and-test approach, exemplified by prevalent instructor-led practices, learners are initially told everything they need to know through classroom presentations, textbooks, videotapes, and other available means. Learners are then tested to see what they have learned (more likely, what they can recall). After the test, if time permits (since instructor-led programs almost always work within a preset, fixed time period, such as a week, quarter, or semester), more telling will be followed by more testing until the time is exhausted. The program appears well-planned if a final test closely follows the final content presentation and occurs just before the time period expires.

When e-learning follows this ancient plan, many of its archaic weaknesses are preserved.

Fixed Content, Variable Learning

Instead of a predetermined time period, content coverage can be the controlling factor. The process of alternately telling and testing is repeated until all the content has been exhausted. The program then ends.

To emphasize the major weakness of this design (among its many flaws), the tell-and-test approach is often characterized as fixed time, variable learning—or, in the case of e-learning implementations, fixed content, variable learning. In other words, the approach does not ensure sufficient learning to meet any standards. It simply ensures that the time slot will be filled or that all the content will be presented.

Fixed Learning, Variable Time and Content

To achieve success through enabling people to do the right thing at the right time, we are clearly most interested in helping all learners master skills. It is not our objective to simply cover all the content or fill the time period. To get everyone on the job and performing as desired as quickly as possible, we would most appropriately choose to let time vary, cover only needed content, and ensure that all learners had achieved competency.

Put the Test First

Many instructional designers embark on a path that results in tell-and-test applications. They may disguise the underlying approach rather effectively, perhaps by the clever use of technomedia, but the tell-and-test method nevertheless retains all the weaknesses of common instruction and none of the advantages of individualized instruction.

Let’s compare the tell-and-test and test-and-tell methods (see Table 5.6). The advantages gained by simply putting the test first and allowing learners to ask for content assistance as they need it are amazing. As previously noted, just a slight alteration of an instructional approach often makes a dramatic difference. This is one of those cases. There is very little difference in expense or effort between tell and test on the one hand and test and tell on the other, but the learning experiences are fundamentally different.

TABLE 5.6 Selecting Content to Match Learner Needs

| Tell and Test | Test and Tell |
| --- | --- |
| Because they have to wait for the test to reveal what is really important to learn (or it wouldn’t be on the test), learners may have to guess at what is important during the extended tell time. They may discover too late that they have misunderstood as they stare at unexpected questions on the test that will determine their grades. | Learners are immediately confronted with challenges that the course will enable them to meet. Learners witness instantly what they need to be learning. |
| All learners receive the same content presentations. | Learners can skip over content they already know, as evidenced by their initial performance. |
| Learners are passive, but they try to absorb the content slung at them, which they hope will prove to be empowering at some point. | Learners in well-designed test-and-tell environments become active learners; they are encouraged to ask for help when they cannot handle test items. The presentation material (which can be much the same as that used in tell and test) is presented in pieces relevant to specific skills. Learners see the need for it and put it to use. |
| It’s boring. | Not likely to be boring. |

Getting novice designers to break the tendency to begin their applications with a lot of telling is not simple. Indeed, almost everyone seems to have the tendency to launch into content presentation as the natural, appropriate, and most essential thing to do. I have been frustrated over the years as my learners and employees, especially novices to instructional design, have found themselves drawn almost magnetically to this fundamental error. I have, however, discovered a practical remedy: After designers complete their first prototype, I simply ask them to switch it around in the next iteration. This makes content presentations available on demand and subject to learner needs.

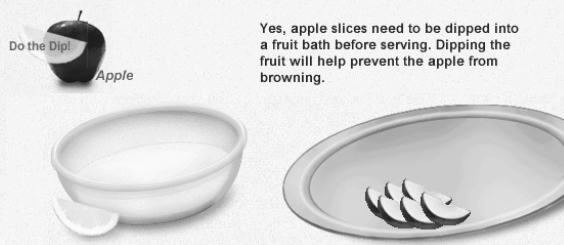

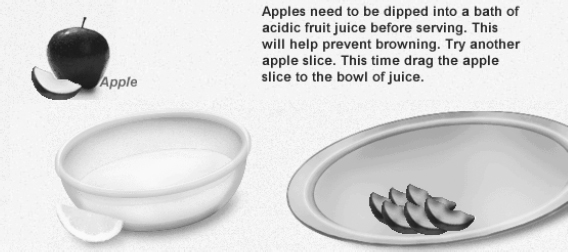

Another example drawn from e-learning initiatives at NFSMI illustrates the power of the test-and-tell technique. A companion CD to the Breads and Grains module illustrated earlier, Cooking with Flair: Preparing Fruits, Salads, and Vegetables, teaches a range of facts and procedural information regarding the preparation of fruits and vegetables. The target learners usually have some general but incomplete knowledge of the topics to be covered, but the gaps in their knowledge vary greatly. A typical strategy in such a context is to tell everybody everything, regardless of whether they need it. This is rarely helpful, as learners tend to stop paying attention when forced to read information that they already know. This is exactly the situation in which test and tell is most powerful.

We’ll look at a content area called “Do the Dip,” in which learners are to master information about how to keep freshly cut fruit from turning brown (Figure 5.20). The principle at hand is that fruits that tend to darken should be dipped in a dilute solution of citrus juice; some other fruits do not need to be treated in this way. The learner is to learn which common fruits this rule applies to. The setup here is very simple. Learners are given a very brief statement that sets the context. Then they are immediately tasked with the job of preparing a fruit tray. They can place the cut fruit directly on the serving tray, or they can first dip the fruit in a dilute solution of lemon juice.

FIGURE 5.20 Learner must decide whether it is appropriate to dip fruit in preparing fresh fruit tray.

Cooking with Flair: Preparing Fruits, Salads, and Vegetables. Courtesy of National Food Service Management Institute, University of Mississippi.

If the user correctly dips a fruit, the information regarding that choice is confirmed with a message and a Do the Dip! flag on the fruit (Figure 5.21). Whether the learner already knew the concept or guessed correctly, the “tell” part of the instruction is contained in the feedback—presented after the learner’s attention has been focused by the challenge.

FIGURE 5.21 Correct response is reinforced with Do the Dip! stamp on selected fruit.

Cooking with Flair: Preparing Fruits, Salads, and Vegetables. Courtesy of National Food Service Management Institute, University of Mississippi.

How about when the learner is wrong? When a fruit is not dipped when it should be, the fruit turns brown and unappealing, while instructional text specific to the error is presented on the screen (Figure 5.22).

FIGURE 5.22 Incorrect response results in brown, unappealing fruit; user must repeat this fruit correctly.

Cooking with Flair: Preparing Fruits, Salads, and Vegetables. Courtesy of National Food Service Management Institute, University of Mississippi.

After the exercise is complete, the learner is left with a summary screen: a complete and attractive fruit platter and screen elements that further reinforce the lesson of which fruits require a citrus treatment (Figure 5.23).

FIGURE 5.23 Success results in completed tray and review of learned content through markers on fruit.

Cooking with Flair: Preparing Fruits, Salads, and Vegetables. Courtesy of National Food Service Management Institute, University of Mississippi.
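The feedback rules of the Do the Dip exercise can be summarized in a short Python sketch. It is a reconstruction from the description above, not the module’s actual code: the fruit list (apple and banana darken; pineapple and orange do not) and the message wording are assumptions, and the handling of an unnecessary dip is invented because that case is not described here.

```python
# Illustrative assumption: the module's real fruit list and wording are not given here.
NEEDS_DIP = {"apple": True, "banana": True, "pineapple": False, "orange": False}

def place_fruit(fruit, dipped):
    """Return (accepted, feedback) for one placement on the tray."""
    if NEEDS_DIP[fruit]:
        if dipped:
            return True, f"Do the Dip! The {fruit} stays fresh in dilute citrus juice."
        return False, (f"The {fruit} turned brown: fruits that darken need a "
                       "citrus dip. Try again.")
    if dipped:
        # Assumption: this case is not described in the source; treated as a redo.
        return False, f"No dip needed: the {fruit} does not darken when cut. Try again."
    return True, f"Correct: the {fruit} can go straight onto the tray."

def build_tray(choices):
    """choices maps each fruit to the learner's successive dip decisions.
    A fruit must be placed correctly before it joins the finished tray."""
    tray, log = [], []
    for fruit, attempts in choices.items():
        for dipped in attempts:
            ok, message = place_fruit(fruit, dipped)
            log.append(message)
            if ok:
                # The flag doubles as the review marker on the summary screen.
                tray.append((fruit, "Do the Dip!" if dipped else ""))
                break
    return tray, log

tray, log = build_tray({"apple": [False, True], "pineapple": [False]})
print(tray)  # [('apple', 'Do the Dip!'), ('pineapple', '')]
```

The error message carries the “tell” content, and it appears only when the learner’s own choice has made it relevant.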

This is a small and straightforward application of the test-and-tell principle. You should notice this magic key in action, in more sophisticated ways, in nearly every other example described in this book. It’s engaging, efficient, and effective. Nonetheless, a common response from instructional designers is, “Isn’t it unfair to ask learners to do a task for which you haven’t prepared them?” (Interestingly, we rarely if ever hear this complaint from actual students!) But it really isn’t unfair at all. Quite the reverse: The challenge focuses learners’ attention on the task at hand, allows learners who already have experience with the content to succeed quickly (saving time and frustration), provides learning content to the right people at the right time, and motivates all learners to think critically about a task instead of simply being passive recipients of training. When the “tell” information is presented in the context of a learner action (i.e., right after a mistake), learners are in an optimal position to assimilate the new information meaningfully into their existing understanding of the topic.

Magic Key 4: Use an Appealing Context

Learning contexts have many attributes. The delivery system is one very visible attribute, as is the graphic design or aesthetics of an e-learning application. More important than these, however, are the role the learner might be asked to play, the relevance and strength of the situational context, and the dramatic presentation of contextual content.

It’s easy to confuse needed attributes of the learning context with other contextual issues. Unfortunately, such confusion can lead to much weaker applications than intended. However, strong learning context can amplify the unique learning capabilities of e-learning technologies. Let’s first look at some mistakes that have continued since the early days of e-learning.

The Typing Ball Syndrome

When I did my first major project with computer-based instruction, it was with computer terminals connected to a remote IBM 360 central computer. The terminals were IBM 2741s, which had no display screen but were more like typewriters, employing an IBM Selectric typewriter ball (Figure 5.24).

FIGURE 5.24 IBM Selectric typewriter and ball.

Continuous sheets of fanfold paper were threaded into the machine. A ball spun and tilted almost magically to align each character to be typed. With thin flat wires manipulating the ball’s mechanism, the ball, like a mesmerized marionette, hammered the typewriter ribbon to produce crisp, perfectly aligned text.

Selectric typewriters were something of an engineering fascination. They produced a clean, sharp character so quickly in response to a tap on the keyboard that it was hard to see the movement. And yet, since a perfect character appeared on the paper, you knew the ball had spun to precisely the correct place and had tapped against the ribbon with exactly the right force.

If the Selectric typewriter was fascinating, the IBM 2741 terminal was enthralling. Its Selectric ball typed by itself like a player piano! Looking very much like a Selectric typewriter on a floor stand, the terminal could receive output from a remote computer and type faster than any human. The keys on the keyboard didn’t move. The ball just did its dance while letters appeared on the paper.

The computer could type out information and learners could type their answers to be evaluated by the computer. The technology was fascinating. There was much talk about this being the future of education. Classroom delivery of instruction was clearly near the end of its life span, and, despite fears of lost teaching jobs, there was great excitement that private, personalized learning supported by amazingly intelligent computers would truly make learning fun and easy.

Novelty versus Reality

There were some very positive signs that technology-led instruction would indeed be a reality in the near future. Learners eagerly signed up for courses taught with the use of computer terminals. Seats filled up rapidly, requiring dawdling learners to sign up for traditionally taught classes or wait another term to see if they would be lucky enough to get into one of the computer-assisted classes.

But some disturbing realities crept in, realities that countered initial perceptions and rosy predictions. For one thing, it took a lot longer to have information typed out than to find it in a book. While you didn’t get to watch them being typed, books were pretty fast at presenting information, and they were portable, too. They didn’t make any noise, either, so they could be used in libraries and other quiet places that are conducive to study. They often included graphics and visual aids, things our Selectric typewriters couldn’t provide. You could earmark a page and easily review it. You could skim ahead to get some orientation and a preview of what was to come.