![]()

Considering Data Access Strategies

At this point in the process of covering the topic of database design, we have designed and implemented the database, devised effective security and indexing strategies, implemented concurrency controls, organized the database(s) into a manageable package and taken care of all of the other bits and pieces that go along with the task of creating a database. The next logical step is to decide on the data-access strategy and how best to implement and distribute data-centric business logic. Of course, in reality, design is not really a linear process, as performance tuning and indexing require a test plan, and data access is going to require that tools and some form of UI has been designed and that tools have been chosen to get an idea of how the data is going to be accessed. In this chapter I will take a rather brief look at some data access concerns and provide pros and cons of different methods…in other words opinions, technically-based opinions nevertheless.

Regardless of whether your application is a good, old-fashioned, client-server application, a multi-tier web application, uses an object relational mapper, or uses some new application pattern that hasn’t been yet been created, data must be stored in and retrieved from tables. Therefore, most of the advice presented in this chapter will be relevant regardless of the type of application you’re building. One way or another, you are going to have to build some interface between the data and the applications that use them. At minimum I will point out some of the pros and cons of the methods you will be using.

The really “good” arguments tend to get started about the topics in this chapter. Take every squabble to decide whether or not to use surrogate keys as primary keys, add to it all of the discussions about whether or not to use triggers in the application, and then multiply that by the number of managers it takes to screw in a light bulb, which I calculate as about 3.5. That is about how many times I have argued with a system implementer to decide whether stored procedures were a good idea.

![]() Note The number of managers required to screw in a light bulb, depending on the size of the organization, would generally be a minimum of three or four: one manager to manage the person who notices the burnt out bulb, the manager of building services, and the shift manager of the person who actually changes light bulbs. Sometimes, the manager of the two managers might have to get involved to actually make things happen, so figure about three and a half.

Note The number of managers required to screw in a light bulb, depending on the size of the organization, would generally be a minimum of three or four: one manager to manage the person who notices the burnt out bulb, the manager of building services, and the shift manager of the person who actually changes light bulbs. Sometimes, the manager of the two managers might have to get involved to actually make things happen, so figure about three and a half.

In this chapter, I will present a number of opinions on how to use stored procedures, ad hoc SQL , and the CLR. Each of these opinions is based on years of experience working with SQL Server technologies, but I am not so set in my ways that I cannot see the point of the people on the other side of any fence—anyone whose mind cannot be changed is no longer learning. So if you disagree with this chapter, feel free to e-mail me at [email protected] ; you won’t hurt my feelings and we will both probably end up learning something in the process.

In this chapter, I am going to discuss the following topics:

- Using ad hoc SQL: Formulating queries in the application’s presentation and manipulation layer (typically functional code stored in objects, such as .NET or Java, and run on a server or a client machine).

- Using stored procedures: Creating an interface between the presentation/manipulation layer and the data layer of the application. Note that views and functions, as well as procedures, also form part of this data-access interface. You can use all three of these object types.

- Using CLR in T-SQL : In this section, I will present some basic opinions on the usage of the CLR within the realm of T-SQL.

Each section will analyze some of the pros and cons of each approach, in terms of flexibility, security, performance, and so on. Along the way, I’ll offer some personal opinions on optimal architecture and give advice on how best to implement both types of access.

![]() Note You may also be thinking that another thing I might discuss is object-relational mapping tools, like Hibernate, Spring, or even the ADO.NET Entity Framework. In the end, however, these tools are really using ad hoc access, in that they are generating SQL on the fly. For the sake of this book, they should be lumped into the ad hoc group, unless they are used with stored procedures (which is pretty rare).

Note You may also be thinking that another thing I might discuss is object-relational mapping tools, like Hibernate, Spring, or even the ADO.NET Entity Framework. In the end, however, these tools are really using ad hoc access, in that they are generating SQL on the fly. For the sake of this book, they should be lumped into the ad hoc group, unless they are used with stored procedures (which is pretty rare).

The most difficult part of a discussion of this sort is that the actual arguments that go on are not so much about right and wrong, but rather the question of which method is easier to program and maintain. SQL Server and Visual Studio .NET give you lots of handy-dandy tools to build your applications, mapping objects to data, and as the years pass, this becomes even truer with the Entity Framework and many of the object relational mapping tools out there.

The problem is that these tools don’t always take enough advantage of SQL Server’s best practices to build applications in their most common form of usage. Doing things in a best practice manner would mean doing a lot of coding manually, without the ease of automated tools to help you. Some organizations do this manual work with great results, but such work is rarely going to be popular with developers who have never hit the wall of having to support an application that is extremely hard to optimize once the system is in production.

A point that I really should make clear is that I feel that the choice of data-access strategy shouldn’t be linked to the methods used for data validation nor should it be linked to whether you use (or how much you use) check constraints, triggers, and such. If you have read the entire book, you should be kind of tired of hearing how much I feel that you should do every possible data validation on the SQL Server data that can be done without making a maintenance nightmare. Fundamental data rules that are cast in stone should be done on the database server in constraints and triggers at all times so that these rules can be trusted by the user (for example, an ETL process). On the other hand, procedures or client code is going to be used to enforce a lot of the same rules, plus all of the mutable business rules too, but in either situation, non-data tier rules can be easily circumvented by using a different access path. Even database rules can be circumvented using bulk loading operations, so be careful there too.

![]() Note While I stand by all of the concepts and opinions in this entire book (typos not withstanding), I definitely do not suggest that your educational journey end here. Please read other people’s work, try out everything, and form your own opinions. If some day you end up writing a competitive book to mine, the worst thing that happens is that people have another resource to turn to.

Note While I stand by all of the concepts and opinions in this entire book (typos not withstanding), I definitely do not suggest that your educational journey end here. Please read other people’s work, try out everything, and form your own opinions. If some day you end up writing a competitive book to mine, the worst thing that happens is that people have another resource to turn to.

Ad Hoc SQL

Ad hoc SQL is sometimes referred to as “straight SQL” and generally refers to the formulation of SELECT, INSERT, UPDATE, and DELETE statements (as well as any others) in the client. These statements are then sent to SQL Server either individually or in batches of multiple statements to be syntax checked, compiled, optimized (producing a plan), and executed. SQL Server may use a cached plan from a previous execution, but it will have to pretty much exactly match the text of one call to another to do so, the only difference can be some parameterization of literals, which we will discuss a little later in the chapter.

I will make no distinction between ad hoc calls that are generated manually and those that use a middleware setup like LINQ: from SQL Server’s standpoint, a string of characters is sent to the server and interpreted at runtime. So whether your method of generating these statements is good or poor is of no concern to me in this discussion, as long as the SQL generated is well formed and protected from users’ malicious actions. (For example, injection attacks are generally the biggest offender. The reason I don’t care where the ad hoc statements come from is that the advantages and disadvantages for the database support professionals are pretty much the same, and in fact, statements generated from a middleware tool can be worse, because you may not be able to change the format or makeup of the statements, leaving you with no easy way to tune statements, even if you can modify the source code.

Sending queries as strings of text is the way that most tools tend to converse with SQL Server, and is, for example, how SQL Server Management Studio does all of its interaction with the server metadata. If you have never used Profiler to watch the SQL that any of management and development tools uses, you should; just don’t use it as your guide for building your OLTP system. It is, however, a good way to learn where some bits of metadata that you can’t figure out come from.

There’s no question that users will perform some ad hoc queries against your system, especially when you simply want to write a query and execute it just once. However, the more pertinent question is: should you be using ad hoc SQL when building the permanent interface to an OLTP system’s data?

![]() Note This topic doesn’t include ad hoc SQL statements executed from stored procedures (commonly called dynamic SQL), which I’ll discuss in the section "Stored Procedures."

Note This topic doesn’t include ad hoc SQL statements executed from stored procedures (commonly called dynamic SQL), which I’ll discuss in the section "Stored Procedures."

Advantages

Using uncompiled ad hoc SQL has the following advantages over building compiled stored procedures:

- Runtime control over queries : Queries are built at runtime, without having to know every possible query that might be executed. This can lead to better performance as queries can be formed at runtime; you can retrieve only necessary data for SELECT queries or modify data that’s changed for UPDATE operations.

- Flexibility over shared plans and parameterization: Because you have control over the queries, you can more easily build queries at runtime that use the same plans and even can be parameterized as desired, based on the situation.

Runtime Control over Queries

Unlike stored procedures, which are prebuilt and stored in the SQL Server system tables, ad hoc SQL is formed at the time it’s needed: at runtime. Hence, it doesn’t suffer from some of the inflexible requirements of stored procedures. For example, say you want to build a user interface to a list of customers. You can add several columns to the SELECT clause, based on the tables listed in the FROM clause. It’s simple to build a list of columns into the user interface that the user can use to customize his or her own list. Then the program can issue the list request with only the columns in the SELECT list that are requested by the user. Because some columns might be large and contain quite a bit of data, it’s better to send back only the columns that the user really desires instead of also including a bunch of columns the user doesn’t care about.

For instance, consider that you have the following table to document contacts to prospective customers (it’s barebones for this example). In each query, you might return the primary key but show or not show it to the user based on whether the primary key is implemented as a surrogate or natural key—it isn’t important to our example either way. You can create this table in any database you like. In the sample code, I’ve created a database named architectureChapter.

CREATE SCHEMA sales;

GO

CREATE TABLE sales.contact

(

contactId int CONSTRAINT PKsales_contact PRIMARY KEY,

firstName varchar(30),

lastName varchar(30),

companyName varchar(100),

salesLevelId int, --real table would implement as a foreign key

contactNotes varchar(max),

CONSTRAINT AKsales_contact UNIQUE (firstName, lastName, companyName)

);

--a few rows to show some output from queries

INSERT INTO sales.contact

(contactId, firstName, lastname, companyName, saleslevelId, contactNotes)

VALUES( 1,'Drue','Karry','SeeBeeEss',1,

REPLICATE ('Blah…',10) + 'Called and discussed new ideas'),

( 2,'Jon','Rettre','Daughter Inc',2,

REPLICATE ('Yada…',10) + 'Called, but he had passed on'),

One user might want to see the person’s name and the company, plus the end of the contactNotes, in his or her view of the data:

SELECT contactId, firstName, lastName, companyName,

RIGHT(contactNotes,30) AS notesEnd

FROM sales.contact;

So something like:

| contactId | firstName | lastName | companyName | notesEnd |

| --------- | --------- | -------- | ------------ | ------------------------------ |

| 1 | Drue | Karry | SeeBeeEss | Called and discussed new ideas |

| 2 | Jon | Rettre | Daughter Inc | ..Called, but he had passed on |

Another user might want (or need) to see less:

SELECT contactId, firstName, lastName, companyName

FROM sales.contact;

Which returns:

| contactId | firstName | lastName | companyName |

| --------- | -------- | -------- | ------------ |

| 1 | Drue | Karry | SeeBeeEss |

| 2 | Jon | Rettre | Daughter Inc |

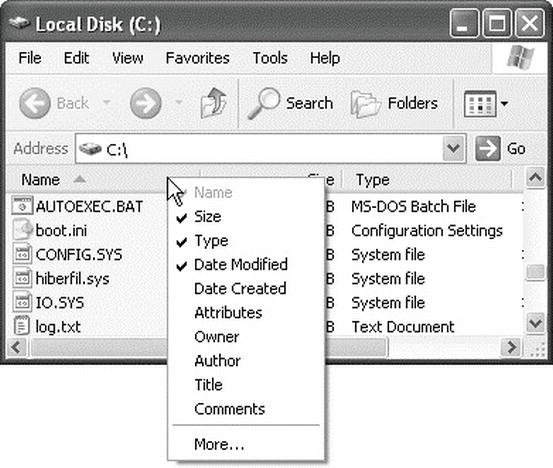

And yet another may want to see all columns in the table, plus maybe some additional information. Allowing the user to choose the columns for output can be useful. Consider how the file-listing dialog works in Windows, as shown in Figure 13-1.

Figure 13-1. The Windows file-listing dialog

You can see as many or as few of the attributes of a file in the list as you like, based on some metadata you set on the directory. This is a useful method of letting the users choose what they want to see. Let’s take this one step further. Consider that the contact table is then related to a table that tells us if a contact has purchased something:

CREATE TABLE sales.purchase

(

purchaseId int CONSTRAINT PKsales_purchase PRIMARY KEY,

amount numeric(10,2),

purchaseDate date,

contactId int

CONSTRAINT FKsales_contact$hasPurchasesIn$sales_purchase

REFERENCES sales.contact(contactId)

);

INSERT INTO sales.purchase(purchaseId, amount, purchaseDate, contactId)

VALUES (1,100.00,'2012-05-12',1),(2,200.00,'2012-05-10',1),

(3,100.00,'2012-05-12',2),(4,300.00,'2012-05-12',1),

(5,100.00,'2012-04-11',1),(6,5500.00,'2012-05-14',2),

(7,100.00,'2012-04-01',1),(8,1020.00,'2012-06-03',2);

Now consider that you want to calculate the sales totals and dates for the contact and add these columns to the allowed pool of choices. By tailoring the output when transmitting the results of the query back to the user, you can save bandwidth, CPU, and disk I/O. As I’ve stressed, values such as this should usually be calculated rather than stored, especially when working on an OLTP system.

In this case, consider the following two possibilities. If the user asks for a sales summary column, the client will send the whole query:

SELECT contact.contactId, contact.firstName, contact.lastName,

sales.yearToDateSales, sales.lastSaleDate

FROM sales.contact AS contact

LEFT OUTER JOIN

(SELECT contactId,

SUM(amount) AS yearToDateSales,

MAX(purchaseDate) AS lastSaleDate

FROM sales.purchase

WHERE purchaseDate >= --the first day of the current year

DATEADD(day, 0, DATEDIFF(day, 0, SYSDATETIME() )

- DATEPART(dayofYear,SYSDATETIME() ) + 1)

GROUP BY contactId) AS sales

ON contact.contactId = sales.contactId

WHERE contact.lastName Like 'Rett%';

Which returns:

| contactId | firstName | lastName | yearToDateSales | lastSaleDate |

| ---------- | --------- | -------- | --------------- | ------------ |

| 2 | Jon | Rettre | 6620.00 | 2012-06-03 |

If the user doesn’t ask for a sales summary column, the client will send only the bolded query:

SELECT contact.contactId, contact.firstName, contact.lastName

-- ,sales.yearToDateSales, sales.lastSaleDate

FROM sales.contact AS contact

--LEFT OUTER JOIN

-- (SELECT contactId,

-- SUM(amount) AS yearToDateSales,

-- MAX(purchaseDate) AS lastSaleDate

-- FROM sales.purchase

-- WHERE purchaseDate >= --the first day of the current year

-- DATEADD(Day, 0, DATEDIFF(Day, 0, SYSDATETIME() )

-- -DATEPART(dayofyear,SYSDATETIME() ) + 1)

-- GROUP by contactId) AS sales

-- ON contact.contactId = sales.contactId

WHERE contact.lastName Like 'Karr%';

Which only returns:

contactId firstName lastName

---------- --------- ---------

1 Drue Karry

Not wasting the resources to do calculations that aren’t needed can save a lot of system resources if the aggregates in the derived table were very costly to execute

In the same vein, when using ad hoc calls, it’s trivial (from a SQL standpoint) to build UPDATE statements that include only the columns that have changed in the set lists, rather than updating all columns, as can be necessary for a stored procedure. For example, take the customer columns from earlier: customerId, name, and number. You could just update all columns:

UPDATE sales.contact

SET firstName = 'Drew',

lastName = 'Carey',

salesLevelId = 1, --no change

companyName = 'CBS',

contactNotes = 'Blah…Blah…Blah…Blah…Blah…Blah…Blah…Blah…Blah…'

+ 'Blah…Called and discussed new ideas' --no change

WHERE contactId = 1;

But what if only the firstName and lastName columns change? What if the company column is part of an index, and it has data validations that take three seconds to execute? How do you deal with varchar(max) columns (or other long types)? Say the notes columns for contactId = 1 contain 3 MB each. Execution could take far more time than is desirable if the application passes the entire value back and forth each time. Using ad hoc SQL, to update the firstName column only, you can simply execute the following code:

UPDATE sales.contact

SET firstName = 'John',

lastName = 'Ritter'

WHERE contactId = 1;

Some of this can be done with dynamic SQL calls built into the stored procedure, but it’s far easier to know if data changed right at the source where the data is being edited, rather than having to check the data beforehand. For example, you could have every data-bound control implement a “data changed” property, and perform a column update only when the original value doesn’t match the value currently displayed. In a stored-procedure-only architecture, having multiple update procedures is not necessarily out of the question, particularly when it is very costly to modify a given column.

One place where using ad hoc SQL can produce more reasonable code is in the area of optional parameters. Say that, in your query to the sales.contact table, your UI allowed you to filter on either firstName, lastName, or both. For example, take the following code to filter on both firstName and lastName:

SELECT firstName, lastName, companyName

FROM sales.contact

WHERE firstName Like 'J%'

AND lastName Like 'R%';

What if the user only needed to filter by last name? Sending the '%' wildcard for firstName can cause code to perform less than adequately, especially when the query is parameterized. (I’ll cover query parameterization in the next section, “Performance.”)

SELECT firstName, lastName, companyName

FROM sales.contact

WHERE firstName LIKE '%'

AND lastName LIKE 'Carey%';

If you think this looks like a very silly query to execute, you are right. If you were writing this query, you would write the more logical version of this query, without the superfluous condition:

SELECT firstName, lastName, companyName

FROM sales.contact

WHERE lastName LIKE 'Carey%';

This doesn’t require any difficult coding. Just remove one of the criteria from the WHERE clause, and the optimizer needn’t consider the other. What if you want to OR the criteria instead? Simply build the query with OR instead of AND. This kind of flexibility is one of the biggest positives to using ad hoc SQL calls.

![]() Note The ability to change the statement programmatically does sort of play to the downside of any dynamically built statement, as now, with just two parameters, we have three possible variants of the statement to be used, so we have to consider performance for all three when we are building our test cases.

Note The ability to change the statement programmatically does sort of play to the downside of any dynamically built statement, as now, with just two parameters, we have three possible variants of the statement to be used, so we have to consider performance for all three when we are building our test cases.

For a stored procedure, you might need to write code that functionally works in a manner such as the following:

IF @firstNameValue <> '%'

SELECT firstName, lastName, companyName

FROM sales.contact

WHERE firstName LIKE @firstNameValue

AND lastName LIKE @lastNameValue;

ELSE

SELECT firstName, lastName, companyName

FROM sales.contact

WHERE lastName LIKE @lastNameValue;

Or do something messy like this in your WHERE clause so if there is any value passed in it uses it, or uses '%' otherwise:

WHERE Firstname Like isnull(nullif(ltrim(@FirstNamevalue) +'%','%'),Firstname)

and Lastname Like isnull(nullif(ltrim(@LastNamevalue) +'%','%'),Lastname)

Unfortunately though, this often does not optimize very well because the optimizer has a hard time optimizing for factors that can change based on different values of a variable—leading to the need for the branching solution mentioned previously to optimize for specific parameter cases. A better way to do this with stored procedures might be to create two stored procedures—one with the first query and another with the second query—especially if you need extremely high performance access to the data. You’d change this to the following code:

IF @firstNameValue <> '%'

EXECUTE sales.contact$get @firstNameValue, @lastNameValue;

ELSE

EXECUTE sales.contact$getLastOnly @lastNameValue;

You can do some of this kind of ad hoc SQL writing using dynamic SQL in stored procedures. However, you might have to do a good bit of these sorts of IF blocks to arrive at which parameters aren’t applicable in various datatypes. Because you know which parameters are applicable, due to knowing what the user filled in, it can be far easier to handle this situation using ad hoc SQL. Getting this kind of flexibility is the main reason that I use an ad hoc SQL call in an application (usually embedded in a stored procedure): you can omit parts of queries that don’t make sense in some cases, and it’s easier to avoid executing unnecessary code.

Flexibility over Shared Plans and Parameterization

Queries formed at runtime, using proper techniques, can actually be better for performance in many ways than using stored procedures. Because you have control over the queries, you can more easily build queries at runtime that use the same plans, and even can be parameterized as desired, based on the situation.

This is not to say that it is the most favorable way of implementing parameterization. (If you want to know the whole picture you have to read the whole section on ad hoc and stored procedures.) However, the fact is that ad hoc access tends to get a bad reputation for something that Microsoft fixed several versions back. In the following sections, Shared Execution Plans and Parameterization, I will take a look at the good points and the caveats you will deal with when building ad hoc queries and executing them on the server.

The age-old reason that people used stored procedures was because the query processor cached their plans. Every time you executed a procedure, you didn’t have to decide the best way to execute the query. As of SQL Server 7.0 (which, was released in 1998!), cached plans were extended to include ad hoc SQL. However, the standard for what can be cached is pretty strict. For two calls to the server to use the same plan, the statements that are sent must be identical, except possibly for the literal values in search arguments. Identical means identical; add a comment, change the case, or even add a space character, and the plan will no longer match. SQL Server can build query plans that have parameters, which allow plan reuse by subsequent calls. However, overall, stored procedures are better when it comes to using cached plans for performance, primarily because the matching and parameterization are easier for the optimizer to do, since it can be done by object_id, rather than having to match larger blobs of text.

A fairly major caveat is that for ad hoc queries to use the same plan, they must be exactly the same, other than any values that can be parameterized. For example, consider the following two queries. (I’m using AdventureWorks2012 tables for this example, as that database has a nice amount of data to work with.)

SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.address as address

JOIN Person.StateProvince as state

on address.StateProvinceID = state.StateProvinceID

WHERE address.addressLine1 = '1, rue Pierre-Demoulin';

Next, run the following query. See whether you can spot the difference between the two queries.

SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

JOIN Person.StateProvince AS state

on address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 = '1, rue Pierre-Demoulin';

These queries can’t share plans because the AS in the first query’s FROM clause is lowercase (FROM Person.Address as address); but in the second, it’s uppercase. Using the sys.dm_exec_query_stats dynamic management view, you can see that the case difference does cause two plans by running:

SELECT *

FROM (SELECT execution_count,

SUBSTRING(st.text, (qs.statement_start_offset/2)+1,

((CASE qs.statement_end_offset

WHEN -1 THEN DATALENGTH(st.text)

ELSE qs.statement_end_offset

END - qs.statement_start_offset)/2) + 1) AS statement_text

FROM sys.dm_exec_query_stats AS qs

CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st

) AS queryStats

WHERE queryStats.statement_text LIKE 'SELECT address.AddressLine1%';

This SELECT statement will return at least two rows; one for each query you have just executed. (It could be more depending on whether or not you have executed the statement in this statement more than two times). Hence, trying to use some method to make sure that every query sent that is essentially the same query is formatted the same is important: queries must use the same format, capitalization, and so forth.

Parameterization

The next performance query plan topic to discuss is parameterization. When a query is parameterized, only one version of the plan is needed to service many queries. Stored procedures are parameterized in all cases, but SQL Server does parameterize ad hoc SQL statements. By default, the optimizer doesn’t parameterize most queries, and caches most plans as straight text, unless the query is “simple.” For example, it can only reference a single table (search for “Forced Parameterization” in Books Online for the complete details). When the query meets the strict requirements, it changes each literal it finds in the query string into a parameter. The next time the query is executed with different literal values, the same plan can be used. For example, take this simpler form of the previous query:

SELECT address.AddressLine1, address.AddressLine2

FROM Person.Address AS address

WHERE address.AddressLine1 = '1, rue Pierre-Demoulin';

The plan (from using showplan_text on in the manner we introduced in Chapter 10 “Basic Index Usage Patterns”) is as follows:

|--Index Seek(OBJECT:([AdventureWorks2012].[Person].[Address].|

IX_Address_AddressLine1_AddressLine2_City_StateProvinceID_PostalCode] AS [address]),

SEEK:([address].[AddressLine1]=CONVERT_IMPLICIT(nvarchar(4000),[@1],0)) ORDERED FORWARD)

The value of N'1, rue Pierre-Demoulin' has been changed to @1 (which is in bold in the plan), and the value is filled in from the literal at execute time. However, try executing this query that accesses two tables:

SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

JOIN Person.StateProvince AS state

ON address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 ='1, rue Pierre-Demoulin';

The plan won’t recognize the literal and parameterize it:

|--Nested Loops(Inner Join, OUTER REFERENCES:([address].[StateProvinceID])) |--Index Seek(OBJECT:([AdventureWorks].[Person].[Address]. [IX_Address_AddressLine1_AddressLine2_City_StateProvinceID_PostalCode] AS [address]),SEEK:([address].[AddressLine1]=N'1, rue Pierre-Demoulin') ORDERED FORWARD) |--Clustered Index Seek(OBJECT:([AdventureWorks2008].[Person].[StateProvince]. [PK_StateProvince_StateProvinceID] AS [state]), SEEK:([state].[StateProvinceID]=[AdventureWorks].[Person]. [Address].[StateProvinceID] as [address].[StateProvinceID]) ORDERED FORWARD)

Note that the literal (bolded in this plan) from the query is still in the plan, rather than a parameter. Both plans are cached, but the first one can be used regardless of the literal value included in the WHERE clause. In the second, the plan won’t be reused unless the precise literal value of N'1, rue Pierre-Demoulin' is passed in.

If you want the optimizer to be more liberal in parameterizing queries, you can use the ALTER DATABASE command to force the optimizer to parameterize:

ALTER DATABASE AdventureWorks2012

SET PARAMETERIZATION FORCED;

Try the plan of the query with the join. It now has replaced the N'1, rue Pierre-Demoulin' with CONVERT_IMPLICIT(nvarchar(4000),[@0],0) . Now the query processor can reuse this plan no matter what the value for the literal is. This can be a costly operation in comparison to normal, text-only plans, so not every system should use this setting. However, if your system is running the same, reasonably complex-looking queries over and over, this can be a wonderful setting to avoid the need to pay for the query optimization.

|--Nested Loops(Inner Join, OUTER REFERENCES:([address].[StateProvinceID]))

|--Index Seek(OBJECT:([AdventureWorks2012].[Person].[Address].

[IX_Address_AddressLine1_AddressLine2_City_StateProvinceID_PostalCode]

AS [address]),

SEEK:([address].[AddressLine1]= CONVERT_IMPLICIT(nvarchar(4000),[@0],0))

ORDERED FORWARD)

|--Clustered Index Seek(OBJECT:([AdventureWorks2012].[Person].[StateProvince].

[PK_StateProvince_StateProvinceID] AS [state]),

SEEK:([state].[StateProvinceID]=[AdventureWorks2012].[Person].

[Address].[StateProvinceID] as [address].[StateProvinceID]

Not every query will be parameterized when forced parameterization is enabled. For example, change the equality to a LIKE condition:

SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

JOIN Person.StateProvince AS state

ON address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 Like '1, rue Pierre-Demoulin';

The plan will contains the literal, rather than the parameter, because it cannot parameterize the second and third arguments of the LIKE operator (the arguments are arg1 LIKE arg2 [ESCAPE arg3]).

|--Nested Loops(Inner Join, OUTER REFERENCES:([address].[StateProvinceID]))

|--Index Seek(OBJECT:([AdventureWorks2012].[Person].[Address].

[IX_Address_AddressLine1_AddressLine2_City_StateProvinceID_PostalCode]

AS [address]),

SEEK:([address].[AddressLine1] >= N'1, rue Pierre-Demoulin'

AND [address].[AddressLine1] <= N'1, ru …(cut off in view)

|--Clustered Index Seek(OBJECT:([AdventureWorks2012].[Person].[StateProvince].

[PK_StateProvince_StateProvinceID] AS [state]),

SEEK:([state].[StateProvinceID]=[AdventureWorks2012].[Person].

[Address].[StateProvinceID] AS [address].[StateProvinceID]

If you change the query to end with WHERE '1, rue Pierre-Demoulin' Like address.AddressLine1, it would be parameterized, but that construct is rarely what is desired.

For your applications, another method is to use parameterized calls from the data access layer. Basically using ADO.NET, this would entail using T-SQL variables in your query strings, and then using a SqlCommand object and its Parameters collection. The plan that will be created from SQL parameterized on the client will in turn be parameterized in the plan that is saved.

The myth that performance is definitely worse with ad hoc calls is just not quite true (certainly after 7.0). Performance can actually be less of a worry than you might have been led to believe when using ad hoc calls to the SQL Server in your applications. However, don’t stop reading here. While performance may not suffer tremendously, performance tuning is one of the pitfalls, since once you have compiled that query into your application, changing the query is never as easy as it might seem during the development cycle.

So far, we have just executed queries directly, but there is a better method when building your interfaces that allows you to parameterize queries in a very safe manner. Using sp_executeSQL , you can fashion your SQL statement using variables to parameterize the query:

DECLARE @AddressLine1 nvarchar(60) = '1, rue Pierre-Demoulin',

@Query nvarchar(500),

@Parameters nvarchar(500)

SET @Query= N'SELECT address.AddressLine1, address.AddressLine2,address.City,

state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

JOIN Person.StateProvince AS state

ON address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 Like @AddressLine1';

SET @Parameters = N'@AddressLine1 nvarchar(60)';

EXECUTE sp_executesql @Query, @Parameters, @AddressLine1 = @AddressLine1;

Using sp_executesql is generally considered the safest way to parameterize queries because it does a good job of parameterizing the query and helps avoid issues like SQL injection attacks, which I will cover later in this chapter.

Finally, if you know you need to reuse the query multiple times, you can compile it and save the plan for reuse. This is generally useful if you are going to have to call the same object over and over, Instead of sp_executesql, use sp_prepare to prepare the plan; only this time you won’t use the actual value:

DECLARE @Query nvarchar(500),

@Parameters nvarchar(500),

@Handle int

SET @Query= N'SELECT address.AddressLine1, address.AddressLine2,address.City,

state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

JOIN Person.StateProvince AS state

ON address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 Like @AddressLine1';

SET @Parameters = N'@AddressLine1 nvarchar(60)';

EXECUTE sp_prepare @Handle output, @Parameters, @Query;

SELECT @handle;

That batch will return a value that corresponds to the prepared plan, in my case it was 1. This value is you handle to the plan that you can use with sp_execute on the same connection only. All you need to execute the query is the parameter values and the sp_execute statement, and you can use and reuse the plan as needed:

DECLARE @AddressLine1 nvarchar(60) = '1, rue Pierre-Demoulin';

EXECUTE sp_execute 1, @AddressLine1;

SET @AddressLine1 = '6387 Scenic Avenue';

EXECUTE sp_execute 1, @AddressLine1;

You can unprepare the statement using sp_unprepare and the handle number. It is fairly rare that anyone will manually execute sp_prepare and sp_execute, but it is very frequently built into engines that are built to manage ad hoc access for you. It can be good for performance, but it is a pain for troubleshooting because you have to decode what the handle 1 actually represents. You can release the plan using sp_unprepare with a parameter of the handle.

What you end up with is pretty much the same as a procedure for performance, but it has to be done every time you run your app, and it is scoped to a connection, not shared on all connections. The better solution for parameterizing complex statements is a stored procedure. Generally, the only way this makes sense as a best practice is when you cannot use procedures, perhaps because of your tool choice or using a third-party application. Why? Well, the fact is with stored procedures, the query code is stored on the server and is a layer of encapsulation that reduces coupling; but more on that in the stored procedure section.

Pitfalls

I’m glad you didn’t stop reading at the end of the previous section, because although I have covered the good points of using ad hoc SQL, there are the following significant pitfalls, as well:

- Low cohesion, high coupling

- Batches of statements

- Security issues

- SQL injection

- Performance-tuning difficulties

The number-one pitfall of using ad hoc SQL as your interface relates to what you learned back in Programming 101: strive for high cohesion, low coupling. Cohesion means that the different parts of the system work together to form a meaningful unit. This is a good thing, as you don’t want to include lots of irrelevant code in the system, or be all over the place. On the other hand, coupling refers to how connected the different parts of a system are to one another. It’s considered bad when a change in one part of a system breaks other parts of a system. (If you aren’t too familiar with these terms, I suggest you go to www.wikipedia.org and search for these terms. You should build all the code you create with these concepts in mind.)

When issuing T-SQL statements directly from the application, the structures in the database are tied directly to the client interface. This sounds perfectly normal and acceptable at the beginning of a project, but it means that any change in database structure might require a change in the user interface. This makes making small changes to the system just as costly as large ones, because a full testing cycle is required.

![]() Note When I started this section, I told you that I wouldn’t make any distinction between toolsets used. This is still true. Whether you use a horribly, manually coded system or the best object-relational mapping system, the fact that the application tier knows and is built specifically with knowledge of the base structure of the database is an example of the application and data tiers being highly coupled. Though stored procedures are similarly inflexible, they are stored with the data, allowing the disparate systems to be decoupled: the code on the database tier can be structurally dependent on the objects in the same tier without completely sacrificing your loose coupling.

Note When I started this section, I told you that I wouldn’t make any distinction between toolsets used. This is still true. Whether you use a horribly, manually coded system or the best object-relational mapping system, the fact that the application tier knows and is built specifically with knowledge of the base structure of the database is an example of the application and data tiers being highly coupled. Though stored procedures are similarly inflexible, they are stored with the data, allowing the disparate systems to be decoupled: the code on the database tier can be structurally dependent on the objects in the same tier without completely sacrificing your loose coupling.

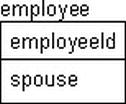

For example, consider that you’ve created an employee table, and you’re storing the employee’s spouse’s name, as shown in Figure 13-2.

Figure 13-2. An employee table

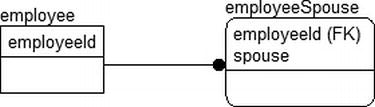

Now, some new regulation requires that you have to include the ability to have more than one spouse, which necessitates a new table, as shown in Figure 13-3.

Figure 13-3. Adding the ability to have more than one spouse

The user interface must immediately be morphed to deal with this case, or at the least, you need to add some code to abstract this new way of storing data. In a scenario such as this, where the condition is quite rare (certainly most everyone will have zero or one spouse), a likely solution would be simply to encapsulate the one spouse into the employee table via a view, change the name of the object the non-data tier accesses, and the existing UI would still work. Then you can add support for the atypical case with some sort of otherSpouse functionality that would be used if the employee had more than one spouse. The original UI would continue to work, but a new form would be built for the case where COUNT(spouse) > 1.

Batches of More Than One Statement

A major problem with ad hoc SQL access is that when you need to do multiple commands and treat them as a single operation, it becomes increasingly more difficult to build the mechanisms in the application code to execute multiple statements as a batch, particularly when you need to group statements together in a transaction. When you have only individual statements, it’s easy to manage ad hoc SQL for the most part. Some queries can get mighty complicated and difficult, but generally speaking, things pretty much work great when you have single statements per transaction. However, as complexity rises in the things you need to accomplish in a transaction, things get tougher. What about the case where you have 20 rows to insert, update, and/or delete at one time and all in one transaction?

Two different things usually occur in this case. The first way to deal with this situation is to start a transaction using functional code. For the most part, as discussed in Chapter 10, the best practice was stated as never to let transactions span batches, and you should minimize starting transactions using an ADO.NET object (or something like it). This isn’t a hard and fast rule, as it’s usually fine to do this with a middle-tier object that requires no user interaction. However, if something occurs during the execution of the object, you can still leave open connections and transactions to the server.

The second way to deal with this is to build a batching mechanism for batching SQL calls. Implementing the first method is self explanatory, but the second is to build a code-wrapping mechanism, such as the following:

BEGIN TRY

BEGIN TRANSACTION;

<-- statements go here

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION;

THROW 50000, '<describe what happened>',1;

END CATCH

For example, if you wanted to send a new invoice and line items, the application code would need to build a batch such as in the following code. Each of the SET @Action = … and INSERT statements would be put into the batch by the application with the rest being boilerplate code that is repeatable:

SET NOCOUNT ON;

BEGIN TRY

BEGIN TRANSACTION;

DECLARE @Action nvarchar(200);

SET @Action = 'Invoice Insert';

INSERT invoice (columns) values (values);

SET @Action = 'First InvoiceLineItem Insert';

INSERT invoiceLineItem (columns) values (values);

SET @Action = 'Second InvoiceLineItem Insert';

INSERT invoiceLineItem (columns) values (values);

SET @Action = 'Third InvoiceLineItem Insert';

INSERT invoiceLineItem (columns) values (values);

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION;

DECLARE @Msg nvarchar(4000);

SET @Msg = @Action + ': Error was: ' + CAST(ERROR_NUMBER() as varchar(10)) + ':' +

ERROR_MESSAGE ();

THROW 50000, @Msg,1 ;

END CATCH

Executing multiple statements in a transaction is done on the server and the transaction either completes or not. There’s no chance that you’ll end up with a transaction swinging in the wind, ready to block the next user who needs to access the locked row (even in a table scan for unrelated items). A downside is that it does stop you from using any interim values from your statements (without some really tricky coding/forethought), but starting transactions outside of the batch you commit them is asking for blocking and locking due to longer batch times.

Starting the transaction outside of the server using application code is likely easier, but building this sort of batching interface is always the preferred way to go. First, it’s better for concurrency, because only one batch needs to be executed instead of many little ones. Second, the execution of this batch won’t have to wait on communications back and forth from the server before sending the next command. It’s all there and happens in the single batch of statements.

![]() Note The problem I have run into in almost all cases was that building a batch of multiple SQL statements is a very unnatural thing for the object oriented code to do. The way the code is generally set up is more one table to one object, where the invoice and invoiceLineItem objects have the responsibility of saving themselves. It is a lot easier to code, but too often issues come up in the execution of multiple statements and then the connection gets left open and other connections get blocked behind the locks that are left open due because of the open transaction.

Note The problem I have run into in almost all cases was that building a batch of multiple SQL statements is a very unnatural thing for the object oriented code to do. The way the code is generally set up is more one table to one object, where the invoice and invoiceLineItem objects have the responsibility of saving themselves. It is a lot easier to code, but too often issues come up in the execution of multiple statements and then the connection gets left open and other connections get blocked behind the locks that are left open due because of the open transaction.

Security is one of the biggest downsides to using ad hoc access. For a user to use his or her own Windows login to access the server, you have to grant too many rights to the system, whereas with stored procedures, you can simply give access to the stored procedures. Using ad hoc T-SQL, you have to go with one of three possible security patterns, each with their own downsides:

- Use one login for the application: This, of course, means that you have to code your own security system rather than using what SQL Server gives you. This even includes some form of login and password for application access, as well as individual object access.

- Use application roles: This is slightly better: while you have to implement security in your code since all application users will have the same database security, at the very least, you can let SQL server handle the data access via normal logins and passwords (probably using Windows authentication). Using application roles can be better way to give a user multiple sets of permissions as having only the one password for users to log in with usually avoids the mass of sticky notes embossed in bold letters all around the office: payroll system ID: fred, password: fredsisawesome!

- Give the user direct access to the tables, or possibly views: This unfortunately opens up your tables to users who discover the magical world of Management Studio, where they can open a table and immediately start editing it without any of those pesky UI data checks or business rules that only exist in your data access layer that I warned you about.

Usually, almost all applications follow the first of the three methods. Building your own security mechanisms is just considered a part of the process, and just about as often, it is considered part of the user interface’s responsibilities. At the very least, by not giving all users direct access to the tables, the likelihood of them mucking around in the tables editing data all willy-nilly is greatly minimized. With procedures, you can give the users access to stored procedures, which are not natural for them to use and certainly would not allow them to accidentally delete data from a table.

The other issues security-wise are basically performance related. SQL Server must evaluate security for every object as it’s used, rather than once at the object level for stored procedures—that is, if the owner of the procedure owns all objects. This isn’t generally a big issue, but as your need for greater concurrency increases, everything becomes an issue!

![]() Caution If you use the single-application login method, make sure not to use an account with system administration or database owner privileges. Doing so opens up your application to programmers making mistakes, and if you miss something that allows SQL injection attacks, which I describe in the next section, you could be in a world of hurt.

Caution If you use the single-application login method, make sure not to use an account with system administration or database owner privileges. Doing so opens up your application to programmers making mistakes, and if you miss something that allows SQL injection attacks, which I describe in the next section, you could be in a world of hurt.

A big issue with ad hoc query being hacked by a SQL injection attack. Unless you (and/or your toolset) program your ad hoc SQL intelligently and/or (mostly and) use the parameterizing methods we discussed earlier in this section on ad hoc SQL, a user could inject something such as the following:

' + char(13) + char(10) + ';SHUTDOWN WITH NOWAIT;' + '--'

In this case, the command might just shut down the server if the user has rights, but you can probably see far greater attack possibilities. I’ll discuss more about injection attacks and how to avoid them in the “Stored Procedures” section. When using ad hoc SQL, you must be careful to avoid these types of issues for every call.

A SQL injection attack is not terribly hard to beat, but the fact is, for any use enterable text where you don’t use some form of parameterization, you have to make sure to escape any single quote characters that a user passes in. For general text entry, like a name, commonly if the user passes in a string like “O'Malley”, you know to change this to 'O''Malley'. For example, consider the following batch, where the resulting query will fail. (I have to escape the single quote in the literal to allow the query to execute.)

DECLARE @value varchar(30) = 'O''Malley';

SELECT 'SELECT '''+ @value + '''';

EXECUTE ('SELECT '''+ @value + ''''),

This will return:

SELECT 'O'Malley'

Msg 105, Level 15, State 1, Line 1

Unclosed quotation mark after the character string ''.

This is a very common problem that arises, and all too often the result is that the user learns not to enter a single quote in the query parameter value. But you can make sure this doesn’t occur by changing all single quotes in the value to double single quotes, like in the DECLARE @value statement:

DECLARE @value varchar(30) = 'O''Malley', @query nvarchar(300);

SELECT @query = 'SELECT ' + QUOTENAME(@value,''''),

SELECT @query;

EXECUTE (@query );

This returns:

SELECT 'O''Malley'

------------------

O'Malley

Now, if someone tries to put a single quote, semicolon, and some other statement in the value, it doesn’t matter; it will always be treated as a literal string:

DECLARE @value varchar(30) = 'O''; SELECT ''badness',

@query nvarchar(300);

SELECT @query = 'SELECT ' + QUOTENAME(@value,''''),

SELECT @query;

EXECUTE (@query );

The query is now, followed by the return:

SELECT 'O''; SELECT ''badness'

------------------------------

O'; SELECT 'badness

However, what isn’t quite so obvious is that you have to do this for every single string, even if the string value could never legally (due to application and constraints) have a single quote in it. If you don’t double up on the quotes, the person could put in a single quote and then a string of SQL commands—this is where you get hit by the injection attack. And as I said before, the safest method of avoiding injection issues is to always parameterize your queries; though it is very hard to use a variable query conditions using the parameterized method, losing some of the value of using ad hoc SQL.

DECLARE @value varchar(30) = 'O''; SELECT ''badness',

@query nvarchar(300),

@parameters nvarchar(200) = N'@value varchar(30)';

SELECT @query = 'SELECT ' + QUOTENAME(@value,''''),

SELECT @query;

EXECUTE sp_executesql @Query, @Parameters, @value = @value;

If you employ ad hoc SQL in your applications, I strongly suggest you do more reading on the subject of SQL injection, and then go in and look at the places where SQL commands can be sent to the server to make sure you are covered. SQL injection is especially dangerous if the accounts being used by your application have too much power because you didn’t set up particularly granular security.

Note that if all of this talk of parameterization sounds complicated, it kind of is. Generally there are two ways that parameterization happens well. Either by building a framework that forces you to follow the correct pattern (as do most object-relational tools, thought they often force (or at least lead) you into sub-optimal patterns of execution, like dealing with every statement separately without transactions.) The second way is stored procedures. Stored procedures parameterize in a manner that is impervious to SQL injection (except, when you use dynamic SQL, which, as I will discuss later, is subject to the same issues as ad hoc access from any client.)

Difficulty Tuning for Performance

Performance tuning is generally far more difficult when having to deal with ad hoc requests, for a few reasons:

- Unknown queries: The application can be programmed to send any query it wants, in any way. Unless very extensive testing is done, slow or dangerous scenarios can slip through. With procedures, you have a tidy catalog of possible queries that might be executed. Of course, this concern can be mitigated by having a single module where SQL code can be created and a method to list all possible queries that the application layer can execute (however, that takes more discipline than most organizations have.).

- Often requires people from multiple teams: It may seem silly, but when something is running slower, it is always blamed on SQL Server first. With ad hoc calls, the best thing that the database administrator can do is use a profiler to capture the query that is executed, see if an index could help, and call for a programmer, leading to the issue in the next bullet.

- Recompile required for changing queries: If you want to add a tip to a query to use an index, a rebuild and a redeploy are required. For stored procedures, you’d simply modify the query without the client knowing.

These reasons seem small during the development phase, but often they’re the real killers for tuning, especially when you get a third-party application and its developers have implemented a dumb query that you could easily optimize, but since the code is hard coded in the application, modification of the query isn’t possible (not that this regularly happens; no, not at all; nudge, nudge, wink, wink).

SQL Server 2005 gave us plan guides (and there are some improvements in their usability in later versions) that can be used to force a plan for queries, ad hoc calls, and procedures when you have troublesome queries that don’t optimize naturally, but the fact is, going in and editing a query to tune is far easier than using plan guides.

Stored Procedures

Stored procedures are compiled batches of SQL code that can be parameterized to allow for easy reuse. The basic structure of stored procedures follows. (See SQL Server Books Online at http://msdn.microsoft.com/en-us/library/ms130214.aspx for a complete reference.)

CREATE PROCEDURE <procedureName>

[(

@parameter1 <datatype> [ = <defaultvalue> [OUTPUT]]

@parameter2 <datatype> [ = <defaultvalue> [OUTPUT]]

…

@parameterN <datatype> [ = <defaultvalue> [OUTPUT]]

)]

AS

<T-SQL statements> | <CLR Assembly reference>

There isn’t much more to it. You can put any statements that could have been sent as ad hoc calls to the server into a stored procedure and call them as a reusable unit. You can return an integer value from the procedure by using the RETURN statement, or return almost any datatype by declaring the parameter as an output parameter other than text or image, though you should be using (max) datatypes instead because they will not likely exist in the version after 2012. (You can also not return a table valued parameter.) After the AS, you can execute any T-SQL commands you need to, using the parameters like variables.

The following is an example of a basic procedure to retrieve rows from a table (continuing to use the AdventureWorks2012 tables for these examples):

CREATE PROCEDURE Person.Address$select

(

@addressLine1 nvarchar(120) = '%',

@city nvarchar(60) = '%',

@state nchar(3) = '___', --special because it is a char column

@postalCode nvarchar(8) = '%'

) AS

--simple procedure to execute a single query

SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address as address

JOIN Person.StateProvince as state

ON address.StateProvinceID = state.StateProvinceID

WHERE address.AddressLine1 Like @addressLine1

AND address.City Like @city

AND state.StateProvinceCode Like @state

AND address.PostalCode Like @postalCode;

Now instead of having the client programs formulate a query by knowing the table structures, the client can simply issue a command, knowing a procedure name and the parameters. Clients can choose from four possible criteria to select the addresses they want. For example, they’d use the following if they want to find people in London:

Person.Address$select @city = 'London';

Or they could use the other parameters:

Person.Address$select @postalCode = '98%', @addressLine1 = '%Hilltop%';

The client doesn’t know whether the database or the code is well built or even if it’s horribly designed. Originally our state value might have been a part of the address table but changed to its own table when we realized that it was necessary to store more information about a state than a simple code. The same might be true for the city, the postalCode, and so on. Often, the tasks the client needs to do won’t change based on the database structures, so why should the client need to know the database structures?

For much greater detail about how to write stored procedures and good T-SQL, consider the books Pro T-SQL 2008 Programmer’s Guide by Michael Coles (Apress, 2008); Inside Microsoft SQL Server 2008: T-SQL Programming (Pro-Developer) by Itzik Ben-Gan, et al. (Microsoft Press, 2009); or search for “stored procedures” in Books Online. In this section, I’ll look at some of the characteristics of using stored procedures as our only interface between client and data. I’ll discuss the following topics:

- Encapsulation : Limits client knowledge of the database structure by providing a simple interface for known operations

- Dynamic procedures: Gives the best of both worlds, allowing for ad hoc–style code without giving ad hoc access to the database

- Security : Provides a well-formed interface for known operations that allows you to apply security only to this interface and disallow other access to the database

- Performance: Allows for efficient parameterization of any query, as well as tweaks to the performance of any query, without changes to the client interface

- Pitfalls: Drawbacks associated with the stored-procedure–access architecture

To me, encapsulation is the primary reason for using stored procedures, and it’s the leading reason behind all the other topics that I’ll discuss. When talking about encapsulation, the idea is to hide the working details from processes that have no need to know about the details. Encapsulation is a large part of the desired “low coupling” of our code that I discussed in the pitfalls of ad hoc access. Some software is going to have to be coupled to the data structures, of course, but locating that code with the structures makes it easier to manage. Plus, the people who generally manage the SQL Server code on the server are not the same people who manage the compiled code.

For example, when we coded the person.address$select procedure, it’s unimportant to the client how the procedure was coded. We could have built it based on a view and selected from it, or the procedure could call 16 different stored procedures to improve performance for different parameter combinations. We could even have used the dreaded cursor version that you find in some production systems:

--pseudocode:

CREATE PROCEDURE person.address$select

…

Create temp table;

Declare cursor for (select all rows from the address table);

Fetch first row;

While not end of cursor (@@fetch_status)

Begin

Check columns for a match to parameters;

If match, put into temp table;

Fetch next row;

End;

SELECT * FROM temp table;

This would be horrible, horrible code to be sure. I didn’t give real code so it wouldn’t be confused for a positive example and imitated. (Somebody would end up blaming me, though definitely not you!) However, it certainly could be built to return correct data and possibly could even be fast enough for smaller data sets. Even better, when the client executes the following code, they get the same result, regardless of the internal code:

Person.Address$select @city = 'london';

What makes procedures great is that you can rewrite the guts of the procedure using the server’s native language without any concern for breaking any client code. This means that anything can change, including table structures, column names, and coding method (cursor, join, and so on), and no client code need change as long as the inputs and outputs stay the same.

The only caveat to this is that you can get some metadata about procedures only when they are written using compiled SQL without conditionals (if condition select … else select…). For example, using sp_describe_first_result_set you can metadata about what the procedure we wrote earlier returns:

EXECUTE sp_describe_first_result_set

N'Person.Address$select @postalCode = ''98%'', @addressLine1 = ''%Hilltop%'';'

This returns metadata about what will be returned (this is just a small amount of what is returned):

| column_ordinal | name | system_type_name |

| -------------- | ----------------- | ---------------- |

| 1 | AddressLine1 | nvarchar(60) |

| 2 | AddressLine2 | nvarchar(60) |

| 3 | City | nvarchar(30) |

| 4 | StateProvinceCode | nchar(3) |

| 5 | PostalCode | nvarchar(15) |

For poorly formed procedures, (even if it returns what appears to be the exact same result set, you will not be as able to get the metadata from the procedure. For example, consider the following procedure:

CREATE PROCEDURE test (@value int = 1)

AS

IF @value = 1

SELECT 'FRED' AS name;

ELSE

SELECT 200 AS name;

If you run the procedure with 1 for the parameter, it will return FRED, for any other value, it will return 200. Both are named “name”, but they are not the same type. So checking the result set:

EXECUTE sp_describe_first_result_set N'test'

Returns this (actually quite) excellent error message:

Msg 11512, Level 16, State 1, Procedure sp_describe_first_result_set, Line 1

The metadata could not be determined because the statement 'SELECT 'FRED' as name;' in procedure 'test' is not compatible with the statement 'SELECT 200 as name;' in procedure 'test'.

This concept of having easy access to the code may seem like an insignificant consideration, especially if you generally only work with limited sized sets of data. The problem is, as data set sizes fluctuate, the types of queries that will work often vary greatly. When you start dealing with increasing orders of magnitude in the number of rows in your tables, queries that seemed just fine somewhere at ten thousand rows start to fail to produce the kinds of performance that you need, so you have to tweak the queries to get results in an amount of time that users won’t complain to your boss about. I will cover more about performance tuning in a later section.

![]() Note Some of the benefits of building your objects in the way that I describe can also be achieved by building a solid middle-tier architecture with a data layer that is flexible enough to deal with change. However, I will always argue that it is easier to build your data access layer in the T-SQL code that is built specifically for data access. Unfortunately, it doesn’t solve the code ownership issues (functional versus relational programmers) nor does it solve the issue with performance-tuning the code.

Note Some of the benefits of building your objects in the way that I describe can also be achieved by building a solid middle-tier architecture with a data layer that is flexible enough to deal with change. However, I will always argue that it is easier to build your data access layer in the T-SQL code that is built specifically for data access. Unfortunately, it doesn’t solve the code ownership issues (functional versus relational programmers) nor does it solve the issue with performance-tuning the code.

Dynamic Procedures

You can dynamically create and execute code in a stored procedure, just like you can from the front end. Often, this is necessary when it’s just too hard to get a good query using the rigid requirements of precompiled stored procedures. For example, say you need a procedure that needs a lot of optional parameters. It can be easier to include only parameters where the user passes in a value and let the compilation be done at execution time, especially if the procedure isn’t used all that often. The same parameter sets will get their own plan saved in the plan cache anyhow, just like for typical ad hoc SQL.

Clearly, some of the problems of straight ad hoc SQL pertain here as well, most notably SQL injection. You must always make sure that no input users can enter can allow them to return their own results, allowing them to poke around your system without anyone knowing. As mentioned before, a common way to avoid this sort of thing is always to check the parameter values and immediately double up the single quotes so that the caller can’t inject malicious code where it shouldn’t be.

Make sure that any parameters that don’t need quotes (such as numbers) are placed into the correct datatype. If you use a string value for a number, you can insert things such as 'novalue' and check for it in your code, but another user could put in the injection attack value and be in like Flynn. For example, take the sample procedure from earlier, and let’s turn it into the most obvious version of a dynamic SQL statement in a stored procedure:

ALTER PROCEDURE Person.Address$select

(

@addressLine1 nvarchar(120) = '%',

@city nvarchar(60) = '%',

@state nchar(3) = '___',

@postalCode nvarchar(50) = '%'

) AS

BEGIN

DECLARE @query varchar(max);

SET @query =

'SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

join Person.StateProvince AS state

on address.StateProvinceID = state.StateProvinceID

WHERE address.City LIKE ''' + @city + '''

AND state.StateProvinceCode Like ''' + @state + '''

AND address.PostalCode Like ''' + @postalCode + '''

--this param is last because it is largest

--to make the example

--easier as this column is very large

AND address.AddressLine1 Like ''' + @addressLine1 + '''';

SELECT @query; --just for testing purposes

EXECUTE (@query);

END;

There are two problems with this version of the procedure. The first is that you don’t get the full benefit, because in the final query you can end up with useless parameters used as search arguments that make using indexes more difficult, which is one of the main reasons I use dynamic procedures. I’ll fix that in the next version of the procedure, but the most important problem is the injection attack. For example, let’s assume that the user who’s running the application has dbo powers or rights to sysusers . The user executes the following statement:

EXECUTE Person.Address$select

@addressLine1 = '∼''select name from sysusers--';

This returns three result sets: the two (including the test SELECT) from before plus a list of all of the users in the AdventureWorks2012 database. No rows will be returned to the proper result sets, because no address lines happen to be equal to '∼', but the list of users is not a good thing because with some work, a decent hacker could probably figure out how to use a UNION and get back the users as part of the normal result set.

The easy way to correct this is to use the quotename() function to make sure that all values that need to be surrounded by single quotes are formatted in such a way that no matter what a user sends to the parameter, it cannot cause a problem. Note that if you programmatically chose columns that you ought to use the quotename() function to insert the bracket around the name. SELECT quotename('FRED') would return [FRED].

In the next code block, I will change the procedure to safely deal with invalid quote characters, plus instead of just blindly using the parameters, if the parameter value is the same as the default, I will leave off the values from the WHERE clause. Note that using sp_executeSQL and parameterizing will not be possible if you want to do a variable WHERE or JOIN clause , so you have to take care to avoid SQL injection in the query itself.

ALTER PROCEDURE Person.Address$select

(

@addressLine1 nvarchar(120) = '%',

@city nvarchar(60) = '%',

@state nchar(3) = '___',

@postalCode nvarchar(50) = '%'

) AS

BEGIN

DECLARE @query varchar(max);

SET @query =

'SELECT address.AddressLine1, address.AddressLine2,

address.City, state.StateProvinceCode, address.PostalCode

FROM Person.Address AS address

join Person.StateProvince AS state

on address.StateProvinceID = state.StateProvinceID

WHERE 1=1';

IF @city <> '%'

SET @query = @query + ' AND address.City Like ' + quotename(@city,''''),

IF @state <> '___'

SET @query = @query + ' AND state.StateProvinceCode Like ' + quotename(@state,''''),

IF @postalCode <> '%'

SET @query = @query + ' AND address.City Like ' + quotename(@city,''''),

IF @addressLine1 <> '%'

SET @query = @query + ' AND address.AddressLine1 LIKE ' +

quotename(@addressLine1,''''),

SELECT @query;

EXECUTE (@query);

END;

Now you might get a much better plan, especially if there are several useful indexes on the table. That’s because SQL Server can make the determination of what indexes to use at runtime based on the parameters needed, rather than using a single stored plan for every possible combination of parameters. You also don’t have to worry about injection attacks, because it’s impossible to put something into any parameter that will be anything other than a search argument, and that will execute any code other than what you expect. Basically this version of a stored procedure is the answer to the flexibility of using ad hoc SQL, though it is a bit messier to write. However, it is located right on the server where it can be tweaked as necessary.

Try executing the evil version of the query, and look at the WHERE clause it fashions:

WHERE 1=1

AND address.AddressLine1 Like '∼''select name from sysusers--'

The query that is formed, when executed will now just return two result sets (one for the query and one for the results), and no rows for the executed query. This is because you are looking for rows where address.addressLine1 is like ∼'select name from sysusers--. While not being exactly impossible, this is certainly very, very unlikely.

I should also note that in versions of SQL Server before 2005, using dynamic SQL procedures would break the security chain, and you’d have to grant a lot of extra rights to objects just used in a stored procedure. This little fact was enough to make using dynamic SQL not a best practice for SQL Server 2000 and earlier versions. However, in SQL Server 2005 you no longer had to grant these extra rights, as I’ll explain in the next section. (Hint: you can EXECUTE AS someone else.)

Security

My second most favorite reason for using stored-procedure access is security. You can grant access to just the stored procedure, instead of giving users the rights to all the different resources used by the stored procedure. Granting rights to all the objects in the database gives them the ability to open Management Studio and do the same things (and more) that the application allows them to do. This is rarely the desired effect, as an untrained user let loose on base tables can wreak havoc on the data. (“Oooh, I should change that. Oooh, I should delete that row. Hey, weren’t there more rows in this table before?”) I should note that if the database is properly designed, users can’t violate core structural business rules, but they can circumvent business rules in the middle tier and can execute poorly formed queries that chew up important resources.

![]() Note In general, it is best to keep your users away from the power tools like Management Studio and keep them in a sandbox where even if they have advanced powers (like because they are CEO) they cannot accidentally see too much (and particularly modify and/or delete) data that they shouldn’t. Provide tools that hold user’s hands and keep them from shooting off their big toe (or really any toe for that matter).

Note In general, it is best to keep your users away from the power tools like Management Studio and keep them in a sandbox where even if they have advanced powers (like because they are CEO) they cannot accidentally see too much (and particularly modify and/or delete) data that they shouldn’t. Provide tools that hold user’s hands and keep them from shooting off their big toe (or really any toe for that matter).

With stored procedures, you have a far clearer surface area of a set of stored procedures on which to manage security at pretty much any granularity desired, rather than tables, columns, groups of rows (row-level security), and actions (SELECT, UPDATE, INSERT, DELETE), so you can give rights to just a single operation, in a single way. For example, the question of whether users should be able to delete a contact is wide open, but should they be able to delete their own contacts? Sure, so give them rights to execute deletePersonalContact (meaning a contact that the user owned). Making this choice easy would be based on how well you name your procedures. I use a naming convention of <tablename | subject area>$<action>. For example, to delete a contact, the procedure might be contact$delete , if users were allowed to delete any contact. How you name objects is completely a personal choice, as long as you follow a standard that is meaningful to you and others.

As discussed back in the ad hoc section, a lot of architects simply avoid this issue altogether by letting objects connect to the database as a single user (or being forced into this by political pressure, either way), and let the application handle security. That can be an adequate method of implementing security, and the security implications of this are the same for stored procedures or ad hoc usage. Using stored procedures still clarifies what you can or cannot apply security to.

In SQL Server 2005, the EXECUTE AS clause was added on the procedure declaration. In versions before SQL Server 2005, if a different user owned any object in the procedure (or function, view, or trigger), the caller of the procedure had to have explicit rights to the resource. This was particularly annoying when having to do some small dynamic SQL operation in a procedure, as discussed in the previous section.

The EXECUTE AS clause gives the programmer of the procedure the ability to build procedures where the procedure caller has the same rights in the procedure code as the owner of the procedure—or if permissions have been granted, the same rights as any user or login in the system.

For example, consider that you need to do a dynamic SQL call to a table. (In reality, it ought to be more complex, like needing to use a sensitive resource.) First, create a test user:

CREATE USER fred WITHOUT LOGIN;

Next, create a simple stored procedure:

CREATE PROCEDURE dbo.testChaining

AS

EXECUTE ('SELECT CustomerID, StoreID, AccountNumber

FROM Sales.Customer'),

GO

GRANT EXECUTE ON testChaining TO fred;