![]()

“It has been my observation that most people get ahead during the time that others waste.”

—Henry Ford

Concurrency is all about having the computer utilize all of its resources simultaneously, or basically having more than one thing done at the same time when serving multiple users (technically, in SQL Server, you open multiple requests, on one or more connections). Even if you haven’t done much with multiple users, if you know anything about computing you probably are familiar with the term multitasking. The key here is that when multiple processes or users are accessing the same resources, each user expects to see a consistent view of the data and certainly expects that other users will not be stomping on his or her results.

The topics of this chapter will center on understanding why and how you should write your database code or design your objects to make them accessible concurrently by as many users as you have in your system. In this chapter, I’ll discuss the following:

- OS and hardware concerns: I’ll briefly discuss various issues that are out of the control of SQL code but can affect concurrency.

- Transactions: I’ll give an overview of how transactions work and how to start and stop them in T-SQL code.

- SQL Server concurrency controls: In this section, I’ll explain locks and isolation levels that allow you to customize how isolated processes are from one another

- Coding for concurrency: I’ll discuss methods of coding data access to protect from users simultaneously making changes to data and placing data into less-than-adequate situations. You’ll also learn how to deal with users stepping on one another and how to maximize concurrency.

The key goal of this chapter is to acquaint you with many of the kinds of things SQL Server does to make it fast and safe to have multiple users doing the same sorts of tasks with the same resources and how you can optimize your code to make it easier for this to happen.

Resource Governor

SQL Server 2008 had a new feature that is concurrency related (especially as it relates to performance tuning), though it is more of a management tool than a design concern. The feature is called Resource Governor, and it allows you to partition the workload of the entire server by specifying maximum and minimum resource allocations (memory, CPU, concurrent requests, etc.) to users or groups of users. You can classify users into groups using a simple user-defined function that, in turn, takes advantage of the basic server-level functions you have for identifying users and applications (IS_SRVROLEMEMBER, APP_NAME, SYSTEM_USER, etc.). Like many of the high-end features of SQL Server, Resource Governor is only available with the Enterprise Edition and higher editions.

Using Resource Governor , you can group together and limit the users of a reporting application, of Management Studio, or of any other application to a specific percentage of the CPU, a certain percentage and number of processors, and limited requests at one time. In SQL Server 2012, I/O limits are still not available in Resource Governor.

One nice thing about Resource Governor is that some settings can only apply when the server is under a load. So if the reporting user is the only active process, that user might get the entire server’s power. But if the server is being heavily used, users would be limited to the configured amounts. I won’t talk about Resource Governor anymore in this chapter, but it is definitely a feature that you might want to consider if you are dealing with different types of users in your applications.

What Is Concurrency?

The concept of concurrency can be boiled down to the following statement:

Maximize the amount of work that can be done by all users at the same time, and most importantly, make all users feel like they’re important.

Because of the need to balance the amount of work with the user’s perception of the amount of work being done, there are going to be the following tradeoffs:

- Number of concurrent users: How many users can (or need to) be served at the same time?

- Overhead: How complex are the algorithms to maintain concurrency?

- Accuracy: How correct must the results be?

- Performance: How fast does each process finish?

- Cost: How much are you willing to spend on hardware and programming time?

As you can probably guess, if all the users of a database system never needed to run queries at the same time, life in database-system–design land would be far simpler. You would have no need to be concerned with what other users might want to do. The only real performance goal would be to run one process really fast and move to the next process. If no one ever shared resources, multitasking server operating systems would be unnecessary. All files could be placed on a user’s local computer, and that would be enough. And if we could single-thread all activities on a server, more work might be done, but just like the old days, people would sit around waiting for their turns (yes, with mainframes, people actually did that sort of thing). Internally, the situation is still technically the same in a way, as a computer cannot process more individual instructions than it has cores in its CPUs, but it can run and swap around fast enough to make hundreds or thousands of people feel like they are the only users. This is especially true if your system engineer builds computers that are good as SQL Server machines (and not just file servers) and the architects/programmers build systems that meet the requirements for a relational database (and not just what seems expedient at the time).

A common scenario for a multiuser database involves a sales and shipping application. You might have 50 salespeople in a call center trying to sell the last 25 closeout items that are in stock. It isn’t desirable to promise the last physical item accidentally to multiple customers, since two users might happen to read that it was available at the same time and both be allowed to place an order for it. In this case, stopping the first order wouldn’t be necessary, but you would want to disallow or otherwise prevent the second (or subsequent) orders from being placed, since they cannot be fulfilled immediately.

Most programmers instinctively write code to check for this condition and to try to make sure that this sort of thing doesn’t happen. Code is generally written that does something along these lines:

- Check to make sure that there’s adequate stock.

- Create a shipping row to allocate the product to the customer.

That’s simple enough, but what if one person checks to see if the product is available at the same time as another, and more orders are placed than you have adequate stock for? This is a far more common possibility than you might imagine. Is this acceptable? If you’ve ever ordered a product that you were promised in two days and then found out your items are on backorder for a month, you know the answer to this question: “No! It is very unacceptable.” When this happens, you try another retailer next time, right?

I should also note that the problems presented by concurrency aren’t quite the same as those for parallelism, which is having one task split up and done by multiple resources at the same time. Parallelism involves a whole different set of problems and luckily is more or less not your problem. In writing SQL Server code, parallelism is done automatically, as tasks can be split among resources (sometimes, you will need to adjust just how many parallel operations can take place, but in practice, SQL Server does most of that work for you). When I refer to concurrency, I generally mean having multiple different operations happening at the same time by different connections to SQL Server. Here are just a few of the questions you have to ask yourself:

- What effect will there be if a query modifies rows that have already been used by a query in a different batch?

- What if the other query creates new rows that would have been important to the other batch’s query? What if the other query deletes others?

- Most importantly, can one query corrupt another’s results?

You must consider a few more questions as well. Just how important is concurrency to you, and how much are you willing to pay in performance? The whole topic of concurrency is basically a set of tradeoffs between performance, consistency, and the number of simultaneous users.

![]() Tip Starting with SQL Server 2005, a new way to execute multiple batches of SQL code from the same connection simultaneously was added; it is known as Multiple Active Result Sets (MARS)

. It allows interleaved execution of several statements, such as SELECT, FETCH, RECEIVE READTEXT, or BULK INSERT. As the product continues to mature, you will start to see the term “request” being used in the place where we commonly thought of connection in SQL Server 2000 and earlier. Admittedly, this is still a hard change that has not yet become embedded in people’s thought processes, but in some places (like in the Dynamic Management Views), you need to understand the difference.

Tip Starting with SQL Server 2005, a new way to execute multiple batches of SQL code from the same connection simultaneously was added; it is known as Multiple Active Result Sets (MARS)

. It allows interleaved execution of several statements, such as SELECT, FETCH, RECEIVE READTEXT, or BULK INSERT. As the product continues to mature, you will start to see the term “request” being used in the place where we commonly thought of connection in SQL Server 2000 and earlier. Admittedly, this is still a hard change that has not yet become embedded in people’s thought processes, but in some places (like in the Dynamic Management Views), you need to understand the difference.

MARS is principally a client technology and must be enabled by a connection, but it can change some of the ways that SQL Server handles concurrency. I’ll note places where MARS affects the fundamentals of concurrency.

SQL Server is designed to run on a variety of hardware types. Essentially the same basic code runs on a low-end netbook and on a clustered array of servers that rivals many supercomputers. Every machine running a version of SQL Server, from Express to Enterprise Edition, can have a vastly different concurrency profile. Each edition will also be able to support different amounts of hardware: Express supports 1GB of RAM and one processor socket (with up to 4 cores, which is still more than our first SQL Server that had 16MB of RAM), and at the other end of the spectrum, the Enterprise Edition can handle as much hardware as a manufacturer can stuff into one box. Additionally, a specialized version called the Parallel Data Warehouse edition is built specifically for data warehousing loads. The fact is that, in every version, many of the very same concerns exist concerning how SQL Server handles multiple users using the same resources seemingly simultaneously. Fast-forward to the future (i.e., now), and the Azure platform allows you to access your data in the cloud on massive computer systems from anywhere. In this section, I’ll briefly touch on some of the issues governing concurrency that our T-SQL code needn’t be concerned with, because concurrency is part of the environment we work in.

SQL Server and the OS balance all the different requests and needs for multiple users. It’s beyond the scope of this book to delve too deeply into the details, but it’s important to mention that concurrency is heavily tied to hardware architecture. For example, consider the following subsystems:

- Processor: The heart of the system is the CPU. It controls the other subsystems, as well as doing any calculations needed. If you have too few processors, excessive time can be wasted switching between requests.

-

Disk subsystem: Disk is always the slowest part of the system (even with solid state drives becoming more and more prevalent). A slow disk subsystem is the downfall of many systems, particularly because of the expense involved. Each drive can read only one piece of information at a time, so to access disks concurrently, it’s necessary to have multiple disk drives, and even multiple controllers or channels to disk drive arrays. Especially important is the choice between RAID systems, which take multiple disks and configure them for performance and redundancy:

- 0: Striping across all disks with no redundancy, performance only.

-

1: Mirroring between two disks, redundancy only.

- 5: Striping with distributed parity; excellent for reading, but can be slow for writing. Not typically suggested for most SQL Server OLTP usage, though it isn’t horrible for lighter loads.

- 0+1: Mirrored stripes. Two RAID 1 arrays, mirrored. Great for performance, but not tremendously redundant.

- 1+0 (also known as 10): Striped mirrors. Some number of RAID 0 mirrored arrays, then striped across the mirrors. Usually, the best mix of performance and redundancy for an OLTP SQL Server installation.

- Network interface: Bandwidth to the users is critical but is usually less of a problem than disk access. However, it’s important to attempt to limit the number of round trips between the server and the client. This is highly dependent on whether the client is connecting over a dialup connection or a gigabit Ethernet (or even multiple network interface cards). Turning on SET NOCOUNT in all connections and coded objects, such as stored procedures and triggers, is a good first step, because otherwise, a message is sent to the client for each query executed, requiring bandwidth (and processing) to deal with them.

- Memory: One of the cheapest commodities that you can improve substantially on a computer is memory. SQL Server 2012 can use a tremendous amount of memory within the limits of the edition you used (and the amount of RAM will not affect your licensing costs like processor cores either.)

Each of these subsystems needs to be in balance to work properly. You could theoretically have 100 CPUs and 128GB of RAM, and your system could still be slow. In this case, a slow disk subsystem could be causing your issues. The goal is to maximize utilization of all subsystems—the faster the better—but it’s useless to have super-fast CPUs with a super-slow disk subsystem. Ideally, as your load increases, disk, CPU, and memory usage would increase proportionally, though this is a heck of a hard thing to do. The bottom line is that the number of CPUs, disk channels, disk drives, and network cards and the amount of RAM you have all affect concurrency.

Monitoring hardware and OS performance issues is a job primarily for perfmon and/or the data collector. Watching counters for CPU, memory, SQL Server, and so on lets you see the balance among all the different subsystems. In the end, poor hardware configuration can kill you just as quickly as poor SQL Server implementation.

For the rest of this chapter, I’m going to ignore these types of issues and leave them to others with a deeper hardware focus, such as the MSDN web site ( http://msdn.microsoft.com ) or great blogs like Glenn Berry’s ( http://sqlserverperformance.wordpress.com ). I’ll be focusing on design- and coding-related issues pertaining to how to code better SQL to manage concurrency between SQL Server processes.

No discussion of concurrency can really have much meaning without an understanding of the transaction. Transactions are a mechanism that allows one or more statements to be guaranteed either to be fully completed or to fail totally. It is an internal SQL Server mechanism that is used to keep the data that’s written to and read from tables consistent throughout a batch, as required by the user.

Whenever data is modified in the database, the changes are not written to the physical data files, but first to a page in RAM and then a log of every change is written to the transaction log immediately before the change is registered as complete. (Any log files need to be on a very fast disk drive subsystem for this reason). Later, the physical table structure is written to when the system is able to do the write (during what is called a checkpoint ). Understanding the process of how modifications to data are made is essential, because while tuning your overall system, you have to be cognizant that every modification operation is logged when considering how large to make your transaction log.

The purpose of transactions is to provide a mechanism to allow multiple processes access to the same data simultaneously, while ensuring that logical operations are either carried out entirely or not at all. To explain the concurrency issues that transactions help with, there’s a common acronym: ACID. It stands for the following:

- Atomicity: Every operation within a transaction is treated as a singular operation; either all its data modifications are performed, or none of them is performed.

- Consistency: Once a transaction is completed, the system must be left in a consistent state. This means that all the constraints on the data that are part of the RDBMS definition must be honored.

- Isolation: This means that the operations within a transaction must be suitably isolated from other transactions. In other words, no other transactions ought to see data in an intermediate state, within the transaction, until it’s finalized. This is typically done by using locks (for details on locks, refer to the section “SQL Server Concurrency Controls” later in this chapter).

- Durability: Once a transaction is completed (committed), all changes must be persisted as requested. The modifications should persist even in the event of a system failure.

Transactions are used in two different ways. The first way is to provide for isolation between processes. Every DML and DDL statement, including INSERT, UPDATE, DELETE, CREATE TABLE, ALTER TABLE, CREATE INDEX, and even SELECT statements, that is executed in SQL Server is run within a transaction. If you are in the middle of adding a column to the table, you don’t want another user to try to modify data in the table at the same time. For DDL and modification statements, such as INSERT, UPDATE, and DELETE, locks are placed, and all system changes are recorded in the transaction log. If any operation fails, or if the user asks for an operation to be undone, SQL Server uses the transaction log to undo the operations already performed. For SELECT operations (and during the selection of rows to modify/remove from a table), locks will also be used to ensure that data isn’t changed as it is being read.

Second, the programmer can use transaction commands to batch together multiple commands into one logical unit of work. For example, if you write data to one table successfully, and then try unsuccessfully to write to another table, the initial writes can be undone. This section will mostly be about defining and demonstrating this syntax.

The key to using transactions is that, when writing statements to modify data using one or more SQL statements, you need to make use of transactions to ensure that data is written safely and securely. A typical problem with procedures and operations in T-SQL code is underusing transactions, so that when unexpected errors (such as security problems, constraint failures, and hardware glitches) occur, orphaned or inconsistent data is the result. And when, a few weeks later, users are complaining about inconsistent results, you have to track down the issues; you lose some sleep; and your client loses confidence in the system you have created and more importantly loses confidence in you.

How long the log is stored is based on the recovery model under which your database is operating. There are three models:

- Simple : The log is maintained only until the operation is executed and a checkpoint is executed (by SQL Server automatically or manually). A checkpoint operation makes certain that the data has been written to the data files, so it is permanently stored.

- Full : The log is maintained until you explicitly clear it out.

- Bulk logged : This keeps a log much like the full recovery model but doesn’t fully log some operations, such as SELECT INTO, bulk loads, index creations, or text operations. It just logs that the operation has taken place. When you back up the log, it will back up extents that were added during BULK operations, so you get full protection with quicker bulk operations.

Even in the simple model, you must be careful about log space, because if large numbers of changes are made in a single transaction or very rapidly, the log rows must be stored at least until all transactions are committed and a checkpoint takes place. This is clearly just a taste of transaction log management; for a more complete explanation, please see SQL Server 2012 Books Online.

Transaction Syntax

The syntax to start and stop transactions is pretty simple. I’ll cover four variants of the transaction syntax in this section:

- Basic transactions: The syntax of how to start and complete a transaction

- Nested transactions: How transactions are affected when one is started when another is already executing

- Savepoints: Used to selectively cancel part of a transaction

- Distributed transactions: Using transactions to control saving data on multiple SQL Servers

In the final part of this section, I’ll also cover explicit versus implicit transactions. These sections will give you the foundation needed to move ahead and start building proper code, ensuring that each modification is done properly, even when multiple SQL statements are necessary to form a single-user operation.

In transactions’ basic form, three commands are required: BEGIN TRANSACTION (to start the transaction), -COMMIT TRANSACTION (to save the data), and ROLLBACK TRANSACTION (to undo the changes that were made). It’s as simple as that.

For example, consider the case of building a stored procedure to modify two tables. Call these tables table1 and table2. You’ll modify table1, check the error status, and then modify table2 (these aren’t real tables, just syntax examples):

BEGIN TRY

BEGIN TRANSACTION;

UPDATE table1

SET value = 'value';

UPDATE table2

SET value = 'value';

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION;

THROW 50000,'An error occurred',16

END CATCH

Now, if some unforeseen error occurs while updating either table1 or table2, you won’t get into the case where table1 is updated and table2 is not. It’s also imperative not to forget to close the transaction (either save the changes with COMMIT TRANSACTION, or undo the changes with ROLLBACK TRANSACTION), because the open transaction that contains your work is in a state of limbo, and if you don’t either complete it or roll it back, it can cause a lot of issues just hanging around in an open state. For example, if the transaction stays open and other operations are executed within that transaction, you might end up losing all work done on that connection. You may also prevent other connections from getting their work done, because each connection is isolated from one another messing up or looking at their unfinished work. Another user who needed the affected rows in table1 or table2 would have to wait (more on why this is throughout this chapter). The worst case of this I saw a number of years back was a single connection that was open all day with a transaction open after a failure because there was no error handling on the transaction. We lost a day’s work because we finally had to roll back the transactions when we killed the process.

There’s an additional setting for simple transactions known as named transactions, which I’ll introduce for completeness. (Ironically, this explanation will take more ink than introducing the more useful transaction syntax, but it is something good to know and can be useful in rare circumstances!) You can extend the functionality of transactions by adding a transaction name, as shown:

BEGIN TRANSACTION <tranName> or <@tranvariable>;

This can be a confusing extension to the BEGIN TRANSACTION statement. It names the transaction to make sure you roll back to it, for example:

BEGIN TRANSACTION one;

ROLLBACK TRANSACTION one;

Only the first transaction mark is registered in the log, so the following code returns an error:

BEGIN TRANSACTION one;

BEGIN TRANSACTION two;

ROLLBACK TRANSACTION two;

The error message is as follows:

Msg 6401, Level 16, State 1, Line 3

Cannot roll back two. No transaction or savepoint of that name was found.

Unfortunately, after this error has occurred, the transaction is still left open. For this reason, it’s seldom a good practice to use named transactions in your code unless you have a very specific purpose. The specific use that makes named transactions interesting is when named transactions use the WITH MARK setting. This allows marking the transaction log, which can be used when restoring a transaction log instead of a date and time. A common use of the marked transaction is to restore several databases back to the same condition and then restore all of the databases to a -common mark.

![]() Note A very good practice in testing your code that deals with transactions is to make sure that there are no transactions by executing ROLLBACK TRANSACTION until the message "The ROLLBACK TRANSACTION request has no corresponding BEGIN TRANSACTION" is returned. At that point, you can feel safe that you are outside a transaction. In code, you should use @@trancount to check, which I will demonstrate later in this chapter.

Note A very good practice in testing your code that deals with transactions is to make sure that there are no transactions by executing ROLLBACK TRANSACTION until the message "The ROLLBACK TRANSACTION request has no corresponding BEGIN TRANSACTION" is returned. At that point, you can feel safe that you are outside a transaction. In code, you should use @@trancount to check, which I will demonstrate later in this chapter.

The mark is only registered if data is modified within the transaction. A good example of its use might be to build a process that marks the transaction log every day before some daily batch process, especially one where the database is in single-user mode. The log is marked, and you run the process, and if there are any troubles, the database log can be restored to just before the mark in the log, no matter when the process was executed. Using the AdventureWorks2012 database, I’ll demonstrate this capability. You can do the same, but be careful to do this somewhere where you know you have a proper backup (just in case something goes wrong).

We first set up the scenario by putting the AdventureWorks2012 database in full recovery model.

USE Master;

GO

ALTER DATABASE AdventureWorks2012

SET RECOVERY FULL;

Next, we create a couple of backup devices to hold the backups we’re going to do:

EXEC sp_addumpdevice 'disk', 'TestAdventureWorks2012',

'C:SQLBackupAdventureWorks2012.bak';

EXEC sp_addumpdevice 'disk', 'TestAdventureWorks2012Log',

'C:SQLBackupAdventureWorks2012Log.bak';

![]() Tip You can see the current setting using the following code:

Tip You can see the current setting using the following code:

SELECT recovery_model_desc

FROM sys.databases

WHERE name = 'AdventureWorks2012';

Next, we back up the database to the dump device we created:

BACKUP DATABASE AdventureWorks2012 TO TestAdventureWorks2012;

Now, we change to the AdventureWorks2012 database and delete some data from a table:

USE AdventureWorks2012;

GO

SELECT COUNT(*)

FROM Sales.SalesTaxRate;

BEGIN TRANSACTION Test WITH MARK 'Test';

DELETE Sales.SalesTaxRate;

COMMIT TRANSACTION;

This returns 29. Run the SELECT statement again, and it will return 0. Next, back up the transaction log to the other backup device:

BACKUP LOG AdventureWorks2012 to TestAdventureWorks2012Log;

Now, we can restore the database using the RESTORE DATABASE command (the NORECOVERY setting keeps the database in a state ready to add transaction logs). We apply the log with RESTORE LOG. For the example, we’ll only restore up to before the mark that was placed, not the entire log:

USE Master

GO

RESTORE DATABASE AdventureWorks2012 FROM TestAdventureWorks2012

WITH REPLACE, NORECOVERY;

RESTORE LOG AdventureWorks2012 FROM TestAdventureWorks2012Log

WITH STOPBEFOREMARK = ’Test’, RECOVERY;

Now, execute the counting query again, and you can see that the 29 rows are in there.

USE AdventureWorks2012;

GO

SELECT COUNT(*)

FROM Sales.SalesTaxRate;

If you wanted to include the actions within the mark, you could use STOPATMARK instead of STOPBEFOREMARK. You can find the log marks that have been made in the MSDB database in the -logmarkhistory table.

Yes, I am aware that the title of this section probably sounds a bit like Marlin Perkins is going to take over and start telling of the mating habits of transactions, but I am referring to starting a transaction after another transaction has already been started. The fact is that you can nest the starting of transactions like this:

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

Technically speaking, there is really only one transaction being started, but an internal counter is keeping up with how many logical transactions have been started. To commit the transactions, you have to execute the same number of COMMIT TRANSACTION commands as the number of BEGIN TRANSACTION commands that have been executed. To tell how many BEGIN TRANSACTION commands have been executed without being committed, you can use the @@TRANCOUNT global variable. When it’s equal to one, then one BEGIN TRANSACTION has been executed. If it’s equal to two, then two have, and so on. When @@TRANCOUNT equals zero, you are no longer within a transaction context.

The limit to the number of transactions that can be nested is extremely large (the limit is 2,147,483,647, which took about 1.75 hours to reach in a tight loop on my old 2.27-GHz laptop with 2GB of RAM—clearly far, far more than any process should ever need).

As an example, execute the following:

SELECT @@TRANCOUNT AS zeroDeep;

BEGIN TRANSACTION;

SELECT @@TRANCOUNT AS oneDeep;

It returns the following results:

zeroDeep

--------

0

oneDeep

-------

1

Then, nest another transaction, and check @@TRANCOUNT to see whether it has incremented. Afterward, commit that transaction, and check @@TRANCOUNT again:

BEGIN TRANSACTION;

SELECT @@TRANCOUNT AS twoDeep;

COMMIT TRANSACTION; --commits very last transaction started with BEGIN TRANSACTION

SELECT @@TRANCOUNT AS oneDeep;

This returns the following results:

twoDeep

-------

2

oneDeep

-------

1

Finally, close the final transaction:

COMMIT TRANSACTION;

SELECT @@TRANCOUNT AS zeroDeep;

This returns the following result:

zeroDeep

--------

0

As I mentioned earlier in this section, technically only one transaction is being started. Hence, it only takes one ROLLBACK TRANSACTION command to roll back as many transactions as you have nested. So, if you’ve coded up a set of statements that end up nesting 100 transactions and you issue one rollback transaction, all transactions are rolled back—for example:

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

BEGIN TRANSACTION;

SELECT @@trancount as InTran;

ROLLBACK TRANSACTION;

SELECT @@trancount as OutTran;

This returns the following results:

InTran

-------

7

OutTran

-------

0

This is, by far, the trickiest part of using transactions in your code leading to some messy error handling and code management. It’s a bad idea to just issue a ROLLBACK TRANSACTION command without being cognizant of what will occur once you do—especially the command’s influence on the following code. If code is written expecting to be within a transaction and it isn’t, your data can get corrupted.

In the preceding example, if an UPDATE statement had been executed immediately after the ROLLBACK command, it wouldn’t be executed within an explicit transaction. Also, if COMMIT TRANSACTION is executed immediately after the ROLLBACK command, an error will occur:

SELECT @@trancount

COMMIT TRANSACTION

This will return

-----------

0

Msg 3902, Level 16, State 1, Line 2

The COMMIT TRANSACTION request has no corresponding BEGIN TRANSACTION.

In the previous section, I explained that all open transactions are rolled back using a ROLLBACK TRANSACTION call. This isn’t always desirable, so a tool is available to roll back only certain parts of a transaction. Unfortunately, it requires forethought and a special syntax. Savepoints are used to provide “selective” rollback.

For this, from within a transaction, issue the following statement:

SAVE TRANSACTION <savePointName>; --savepoint names must follow the same rules for

--identifiers as other objects

For example, use the following code in whatever database you desire. In the source code, I’ll continue to place it in the tempdb, because the examples are self-contained.

CREATE SCHEMA arts;

GO

CREATE TABLE arts.performer

(

performerId int NOT NULL identity,

name varchar(100) NOT NULL

);

GO

BEGIN TRANSACTION;

INSERT INTO arts.performer(name) VALUES ('Elvis Costello'),

SAVE TRANSACTION savePoint;

INSERT INTO arts.performer(name) VALUES ('Air Supply'),

--don't insert Air Supply, yuck! …

ROLLBACK TRANSACTION savePoint;

COMMIT TRANSACTION;

SELECT *

FROM arts.performer;

The output of this listing is as follows:

performerId name

----------- --------------

1 Elvis Costello

In the code, there were two INSERT statements within the transaction boundaries, but in the output, there’s only one row. Obviously, the row that was rolled back to the savepoint wasn’t persisted.

Note that you don’t commit a savepoint; SQL Server simply places a mark in the transaction log to tell itself where to roll back to if the user asks for a rollback to the savepoint. The rest of the operations in the overall transaction aren’t affected. Savepoints don’t affect the value of @@trancount, nor do they release any locks that might have been held by the operations that are rolled back, until all nested transactions have been committed or rolled back.

Savepoints give the power to effect changes on only part of the operations transaction, giving you more control over what to do if you’re deep in a large number of operations.

I’ll mention savepoints later in this chapter when writing stored procedures, as they allow the rolling back of all the actions of a single stored procedure without affecting the transaction state of the stored procedure caller, though in most cases, it is usually just easier to roll back the entire transaction. Savepoints do, however, allow you to perform some operation, check to see if it is to your liking, and if it’s not, roll it back.

You can’t use savepoints in a couple situations:

- When you’re using MARS and executing more than one batch at a time

- When the transaction is enlisted into a distributed transaction (the next section discusses this)

How MARS Affects Transactions

There’s a slight wrinkle in how multiple statements can behave when using OLE DB or ODBC native client drivers to retrieve rows in SQL Server 2005 or later. When executing batches under MARS, there can be a couple scenarios:

- Connections set to automatically commit : Each executed batch is within its own transaction, so there are multiple transaction contexts on a single connection.

- Connections set to be manually committed : All executed batches are part of one transaction.

When MARS is enabled for a connection, any batch or stored procedure that starts a transaction (either implicitly in any statement or by executing BEGIN TRANSACTION) must commit the transaction; if not, the transaction will be rolled back. These transactions were new to SQL Server 2005 and are referred to as batch-scoped transactions.

It would be wrong not to at least bring up the subject of distributed transactions. Occasionally, you might need to update data on a server that’s different from the one on which your code resides. The Microsoft Distributed Transaction Coordinator service (MS DTC) gives us this ability.

If your servers are running the MS DTC service, you can use the BEGIN DISTRIBUTED TRANSACTION command to start a transaction that covers the data residing on your server, as well as the remote server. If the server configuration 'remote proc trans' is set to 1, any transaction that touches a linked server will start a distributed transaction without actually calling the BEGIN DISTRIBUTED TRANSACTION command. However, I would strongly suggest you know if you will be using another server in a transaction (check sys.configurations or sp_configure for the current setting, and set the value using sp_configure). Note also that savepoints aren’t supported for distributed transactions.

The following code is just pseudocode and won’t run as is, but this is representative of the code needed to do a distributed transaction:

BEGIN TRY

BEGIN DISTRIBUTED TRANSACTION;

--remote server is a server set up as a linked server

UPDATE remoteServer.dbName.schemaName.tableName

SET value = 'new value'

WHERE keyColumn = 'value';

--local server

UPDATE dbName.schemaName.tableName

SET value = 'new value'

WHERE keyColumn = 'value';

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION;

DECLARE @ERRORMessage varchar(2000);

SET @ERRORMessage = ERROR_MESSAGE();

THROW 50000, @ERRORMessage,16;

END CATCH

The distributed transaction syntax also covers the local transaction. As mentioned, setting the configuration option 'remote proc trans' automatically upgrades a BEGIN TRANSACTION command to a BEGIN DISTRIBUTED TRANSACTION command. This is useful if you frequently use distributed transactions. Without this setting, the remote command is executed, but it won’t be a part of the current -transaction.

Explicit vs. Implicit Transactions

Before finishing the discussion of transaction syntax, there’s one last thing that needs to be covered for the sake of completeness. I’ve alluded to the fact that every statement is executed in a transaction (again, this includes even SELECT statements). This is an important point that must be understood when writing code. Internally, SQL Server starts a transaction every time a SQL statement is started. Even if a transaction isn’t started explicitly with a BEGIN TRANSACTION statement, SQL Server automatically starts a new transaction whenever a statement starts and commits or rolls it back depending on whether or not any errors occur. This is known as an autocommit transaction. When the SQL Server engine commits the transaction, it starts for each statement-level transaction.

SQL Server gives us a setting to change this behavior of automatically committing the transaction: SET IMPLICIT_TRANSACTIONS. When this setting is turned on and the execution context isn’t already within a transaction, such as one explicitly declared using BEGIN TRANSACTION, BEGIN TRANSACTION is automatically (logically) executed when any of the following statements are executed: INSERT, UPDATE, DELETE, SELECT, TRUNCATE TABLE, DROP, ALTER TABLE, REVOKE, CREATE, GRANT, FETCH, or OPEN. This will mean that a COMMIT TRANSACTION or ROLLBACK TRANSACTION command has to be executed to end the transaction. Otherwise, once the connection terminates, all data is lost (and until the transaction terminates, locks that have been accumulated are held, other users are blocked, and pandemonium might occur).

SET IMPLICIT_TRANSACTIONS isn’t a typical setting used by SQL Server programmers or administrators but is worth mentioning because if you change the setting of ANSI_DEFAULTS to ON, IMPLICIT_TRANSACTIONS will be enabled!

I’ve mentioned that every SELECT statement is executed within a transaction, but this deserves a bit more explanation. The entire process of rows being considered for output, then transporting them from the server to the client is contained inside a transaction. The SELECT statement isn’t finished until the entire result set is exhausted (or the client cancels the fetching of rows), so the transaction doesn’t end either. This is an important point that will come back up in the “Isolation Levels” section, as I discuss how this transaction can seriously affect concurrency based on how isolated you need your queries to be.

Now that I’ve discussed the basics of transactions, it’s important to understand some of the slight differences involved in using them within compiled code versus the way we have used transactions so far in batches. You can’t use transactions in user-defined functions (you can’t change system state in a function, so they aren’t necessary anyhow), but it is important to understand the caveats when you use them in

- Stored procedures

- Triggers

Stored procedures, simply being compiled batches of code, use transactions as previously discussed, with one caveat. The transaction nesting level cannot be affected during the execution of a procedure. In other words, you must commit at least as many transactions as you begin in a stored procedure if you want everything to behave smoothly.

Although you can roll back any transaction, you shouldn’t roll it back unless the @@TRANCOUNT was zero when the procedure started. However, it’s better not to execute a ROLLBACK TRANSACTION statement at all in a stored procedure, so there’s no chance of rolling back to a transaction count that’s different from when the procedure started. This protects you from the situation where the procedure is executed in another transaction. Rather, it’s generally best to start a transaction and then follow it with a savepoint. Later, if the changes made in the procedure need to be backed out, simply roll back to the savepoint, and commit the transaction. It’s then up to the stored procedure to signal to any caller that it has failed and to do whatever it wants with the transaction.

As an example, let’s build the following simple procedure that does nothing but execute a BEGIN TRANSACTION and a ROLLBACK TRANSACTION:

CREATE PROCEDURE tranTest

AS

BEGIN

SELECT @@TRANCOUNT AS trancount;

BEGIN TRANSACTION;

ROLLBACK TRANSACTION;

END;

Execute this procedure outside a transaction, and you will see it behaves like you would expect:

EXECUTE tranTest;

It returns

Trancount

---------

0

However, say you execute it within an existing transaction:

BEGIN TRANSACTION;

EXECUTE tranTest;

COMMIT TRANSACTION;

The procedure returns the following results:

Trancount

---------

1

Msg 266, Level 16, State 2, Procedure tranTest, Line 0

Transaction count after EXECUTE indicates a mismatching number of BEGIN and COMMIT statements. Previous count = 1, current count = 0.

Msg 3902, Level 16, State 1, Line 3

The COMMIT TRANSACTION request has no corresponding BEGIN TRANSACTION.

The errors occur because the transaction depth has changed while rolling back the transaction inside the procedure. This error is one of the most frightening errors out there, because it usually says that you probably have been doing work that you expected to be in a transaction outside of a transaction, thus you will probably end up with out-of-sync data that needs to be repaired.

Finally, let’s recode the procedure as follows, putting a savepoint name on the transaction so we only roll back the code in the procedure:

ALTER PROCEDURE tranTest

AS

BEGIN

--gives us a unique savepoint name, trim it to 125 characters if the

--user named the procedure really really large, to allow for nestlevel

DECLARE @savepoint nvarchar(128) =

cast(object_name(@@procid) AS nvarchar(125)) +

cast(@@nestlevel AS nvarchar(3));

SELECT @@TRANCOUNT AS trancount;

BEGIN TRANSACTION;

SAVE TRANSACTION @savepoint;

--do something here

ROLLBACK TRANSACTION @savepoint;

COMMIT TRANSACTION;

END;

Now, you can execute it from within any number of transactions, and it will never fail, but it will never actually do anything either:

BEGIN TRANSACTION;

EXECUTE tranTest;

COMMIT TRANSACTION;

Now, it returns

Trancount

---------

1

You can call procedures from other procedures (even recursively from the same procedure) or external programs. It’s important to take these precautions to make sure that the code is safe under any calling circumstances.

![]() Caution As mentioned in the “Savepoints” section, you can’t use savepoints with distributed transactions or when sending multiple batches over a MARS–enabled connection. To make the most out of MARS, you might not be able to use this strategy. Frankly speaking, it might simply be prudent to execute modification procedures one at a time anyhow.

Caution As mentioned in the “Savepoints” section, you can’t use savepoints with distributed transactions or when sending multiple batches over a MARS–enabled connection. To make the most out of MARS, you might not be able to use this strategy. Frankly speaking, it might simply be prudent to execute modification procedures one at a time anyhow.

Naming savepoints is important. Because savepoints aren’t scoped to a procedure, you must ensure that they’re always unique. I tend to use the procedure name (retrieved here by using the object_name function called for the @@procId, but you could just enter it textually) and the current transaction nesting level. This guarantees that I can never have the same savepoint active, even if calling the same procedure recursively.

Let’s look briefly at how to code this into procedures using proper error handling:

ALTER PROCEDURE tranTest

AS

BEGIN

--gives us a unique savepoint name, trim it to 125

--characters if the user named it really large

DECLARE @savepoint nvarchar(128) =

cast(object_name(@@procid) AS nvarchar(125)) +

cast(@@nestlevel AS nvarchar(3));

--get initial entry level, so we can do a rollback on a doomed transaction

DECLARE @entryTrancount int = @@trancount;

BEGIN TRY

BEGIN TRANSACTION;

SAVE TRANSACTION @savepoint;

--do something here

THROW 50000, 'Invalid Operation',16;

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

--if the tran is doomed, and the entryTrancount was 0,

--we have to roll back

IF xact_state()= -1 and @entryTrancount = 0

rollback transaction;

--otherwise, we can still save the other activities in the

--transaction.

ELSE IF xact_state() = 1 --transaction not doomed, but open

BEGIN

ROLLBACK TRANSACTION @savepoint;

COMMIT TRANSACTION;

END

DECLARE @ERRORmessage nvarchar(4000);

SET @ERRORmessage = 'Error occurred in procedure ''' + OBJECT_NAME(@@procid)

+ ''', Original Message: ''' + ERROR_MESSAGE() + '''';

THROW 50000, @ERRORmessage,16;

RETURN -100

END CATCH

END

In the CATCH block, instead of rolling back the transaction, I checked for a doomed transaction, and if we were not in a transaction at the start of the procedure, I rolled back. A doomed transaction is one in which some operation has made it impossible to do anything other than roll back the transaction. A common cause is a trigger-based error message. It is still technically an active transaction, giving you the chance to roll it back so operations do occur outside of the expected transaction space.

If the transaction was not doomed, I simply rolled back the savepoint. An error is returned for the caller to deal with. You could also eliminate RAISERROR altogether if the error wasn’t critical and the caller needn’t ever know of the rollback. You can place any form of error handling in the CATCH block, and as long as you don’t roll back the entire transaction and the transaction does not become doomed, you can keep going and later commit the transaction.

If this procedure called another procedure that used the same error handling, it would roll back its part of the transaction. It would then raise an error, which, in turn, would cause the CATCH block to be called, roll back the savepoint, and commit the transaction (at that point, the transaction wouldn’t contain any changes at all). If the transaction is doomed, when you get to the top level, it is rolled back. You might ask why you should go through this exercise if you’re just going to roll back the transaction anyhow. The key is that each level of the calling structure can decide what to do with its part of the transaction. Plus, in the error handler we have created, we get the basic call stack for debugging purposes.

As an example of how this works, consider the following schema and table (create it in any database you desire, likely tempdb, as this sample is isolated to this section):

CREATE SCHEMA menu;

GO

CREATE TABLE menu.foodItem

(

foodItemId int not null IDENTITY(1,1)

CONSTRAINT PKmenu_foodItem PRIMARY KEY,

name varchar(30) not null

CONSTRAINT AKmenu_foodItem_name UNIQUE,

description varchar(60) not null,

CONSTRAINT CHKmenu_foodItem_name CHECK (name <> ''),

CONSTRAINT CHKmenu_foodItem_description CHECK (description <> '')

);

Now, create a procedure to do the insert:

CREATE PROCEDURE menu.foodItem$insert

(

@name varchar(30),

@description varchar(60),

@newFoodItemId int = null output --we will send back the new id here

)

AS

BEGIN

SET NOCOUNT ON;

--gives us a unique savepoint name, trim it to 125

--characters if the user named it really large

DECLARE @savepoint nvarchar(128) =

cast(object_name(@@procid) AS nvarchar(125)) +

cast(@@nestlevel AS nvarchar(3));

--get initial entry level, so we can do a rollback on a doomed transaction

DECLARE @entryTrancount int = @@trancount;

BEGIN TRY

BEGIN TRANSACTION;

SAVE TRANSACTION @savepoint;

INSERT INTO menu.foodItem(name, description)

VALUES (@name, @description);

SET @newFoodItemId = scope_identity(); --if you use an instead of trigger,

--you will have to use name as a key

--to do the identity "grab" in a SELECT

--query

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

--if the tran is doomed, and the entryTrancount was 0,

--we have to roll back

IF xact_state()= -1 and @entryTrancount = 0

ROLLBACK TRANSACTION;

--otherwise, we can still save the other activities in the

--transaction.

ELSE IF xact_state() = 1 --transaction not doomed, but open

BEGIN

ROLLBACK TRANSACTION @savepoint;

COMMIT TRANSACTION;

END

DECLARE @ERRORmessage nvarchar(4000);

SET @ERRORmessage = 'Error occurred in procedure ''' + object_name(@@procid)

+ ''', Original Message: ''' + ERROR_MESSAGE() + '''';

--change to RAISERROR (50000, @ERRORmessage,16) if you want to continue processing

THROW 50000,@ERRORmessage, 16;

RETURN -100;

END CATCH

END;

Next, try out the code:

DECLARE @foodItemId int, @retval int;

EXECUTE @retval = menu.foodItem$insert @name ='Burger',

@description = 'Mmmm Burger',

@newFoodItemId = @foodItemId output;

SELECT @retval as returnValue;

IF @retval >= 0

SELECT foodItemId, name, description

FROM menu.foodItem

where foodItemId = @foodItemId;

There's no error, so the row we created is returned:

returnValue

-----------

0

foodItemId name description

---------- ---- -----------

1 Burger Mmmm Burger

Now, try out the code with an error:

DECLARE @foodItemId int, @retval int;

EXECUTE @retval = menu.foodItem$insert @name ='Big Burger',

@description = '',

@newFoodItemId = @foodItemId output;

SELECT @retval as returnValue;

IF @retval >= 0

SELECT foodItemId, name, description

FROM menu.foodItem

where foodItemId = @foodItemId;

Because the description is blank, an error is returned:

Msg 50000, Level 16, State 16, Procedure foodItem$insert, Line 50

Error occurred in procedure 'foodItem$insert', Original Message: 'The INSERT statement conflicted with the CHECK constraint "CHKmenu_foodItem_description". The conflict occurred in database "ContainedDatabase", table "menu.foodItem", column 'description'.'

Note that no code in the batch is executed after the THROW statement is executed. Using RAISERROR will allow the processing to continue if you so desire.

Just as in stored procedures, you can start transactions, set savepoints, and roll back to a savepoint. However, if you execute a ROLLBACK TRANSACTION statement in a trigger, two things can occur:

- Outside a TRY-CATCH block, the entire batch of SQL statements is canceled.

- Inside a TRY-CATCH block, the batch isn’t canceled, but the transaction count is back to zero.

Back in Chapters 6 and 7, we discussed and implemented triggers that consistently used rollbacks when any error occurred. If you’re not using TRY-CATCH blocks, this approach is generally exactly what’s desired, but when using TRY-CATCH blocks, it can make things more tricky. To handle this, in the CATCH block of stored procedures I’ve included this code:

--if the tran is doomed, and the entryTrancount was 0,

--we have to roll back

IF xact_state()= -1 and @entryTrancount = 0

rollback transaction;

--otherwise, we can still save the other activities in the

--transaction.

ELSE IF xact_state() = 1 --transaction not doomed, but open

BEGIN

ROLLBACK TRANSACTION @savepoint;

COMMIT TRANSACTION;

END

This is an effective, if perhaps limited, method of working with errors from triggers that works in most any situation. Removing all ROLLBACK TRANSACTION commands but just raising an error from a trigger dooms the transaction, which is just as much trouble as the rollback. The key is to understand how this might affect the code that you’re working with and to make sure that errors are handled in an understandable way. More than anything, test all types of errors in your system (trigger, constraint, and so on).

For an example, I will create a trigger based on the framework we used for triggers in Chapter 6 and 7, which is presented in more detail in Appendix B. Instead of any validations, I will just immediately cause an error with the statement THROW 50000,'FoodItem''s cannot be done that way',16. Note that my trigger template does do a rollback in the trigger, assuming that users of these triggers follow the error handing setup here, rather than just dooming the transaction. Dooming the transaction could be a safer way to go if you do not have full control over error handling.

CREATE TRIGGER menu.foodItem$InsertTrigger

ON menu.foodItem

AFTER INSERT

AS

BEGIN

SET NOCOUNT ON;

SET ROWCOUNT 0; --in case the client has modified the rowcount

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

DECLARE @msg varchar(2000), --used to hold the error message

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

@rowsAffected int = (SELECT COUNT(*) FROM inserted);

--@rowsAffected int = (SELECT COUNT(*) FROM deleted);

--no need to continue on if no rows affected

IF @rowsAffected = 0 RETURN;

BEGIN TRY

--[validation blocks][validation section]

THROW 50000, 'FoodItem''s cannot be done that way',16

--[modification blocks][modification section]

END TRY

BEGIN CATCH

IF @@trancount > 0

ROLLBACK TRANSACTION;

THROW;

END CATCH

END

In the downloadable code, I have modified the error handling in the stored procedure to put out markers, so you can see what branch of the code is being executed:

SELECT 'In error handler'

--if the tran is doomed, and the entryTrancount was 0,

--we have to roll back

IF xact_state()= -1 and @entryTrancount = 0

begin

SELECT 'Transaction Doomed'

ROLLBACK TRANSACTION

end

--otherwise, we can still save the other activities in the

--transaction.

ELSE IF xact_state() = 1 --transaction not doomed, but open

BEGIN

SELECT 'Savepoint Rollback'

ROLLBACK TRANSACTION @savepoint

COMMIT TRANSACTION

END

Executing the code that contains an error that the constraints catch:

DECLARE @foodItemId int, @retval int;

EXECUTE @retval = menu.foodItem$insert @name ='Big Burger',

@description = '',

@newFoodItemId = @foodItemId output;

SELECT @retval;

This is the output, letting us know that the transaction was still technically open and we could have committed any changes we wanted to:

----------------

In Error Handler

------------------

Savepoint Rollback

Msg 50000, Level 16, State 16, Procedure foodItem$insert, Line 57

Error occurred in procedure 'foodItem$insert', Original Message: 'The INSERT statement conflicted with the CHECK constraint "CHKmenu_foodItem_description". The conflict occurred in database "ContainedDatabase", table "menu.foodItem", column 'description'.'

You can see the constraint message, after the template error. Now, try to enter some data that is technically correct but is blocked by the trigger with the ROLLBACK:

DECLARE @foodItemId int, @retval int;

EXECUTE @retval = menu.foodItem$insert @name ='Big Burger',

@description = 'Yummy Big Burger',

@newFoodItemId = @foodItemId output;

SELECT @retval;

These results are a bit more mysterious, though the transaction is clearly in an error state. Since the rollback operation occurs in the trigger, once we reach the error handler, there is no need to do any savepoint or rollback, so it just finishes:

----------------

In Error Handler

Msg 50000, Level 16, State 16, Procedure foodItem$insert, Line 57

Error occurred in procedure 'foodItem$insert', Original Message: 'FoodItem's cannot be done that way'

For the final demonstration, I will change the trigger to just do a RAISERROR, with no other error handling:

ALTER TRIGGER menu.foodItem$InsertTrigger

ON menu.foodItem

AFTER INSERT

AS

BEGIN

DECLARE @rowsAffected int, --stores the number of rows affected

@msg varchar(2000); --used to hold the error message

SET @rowsAffected = @@rowcount;

--no need to continue on if no rows affected

IF @rowsAffected = 0 return;

SET NOCOUNT ON; --to avoid the rowcount messages

SET ROWCOUNT 0; --in case the client has modified the rowcount

THROW 50000,'FoodItem''s cannot be done that way',16;

END;

Then, reexecute the previous statement that caused the trigger error:

----------------

In Error Handler

------------------

Transaction Doomed

Msg 50000, Level 16, State 16, Procedure foodItem$insert, Line 57

Error occurred in procedure 'foodItem$insert', Original Message: 'FoodItem's cannot be done that way'

Hence, our error handler covered all of the different bases of what can occur for errors. In each case, we got an error message that would let us know where an error was occurring and that it was an error. The main thing I wanted to show in this section is that error handling is messy and adding triggers, while useful, complicates the error handling process, so I would certainly use constraints as much as possible and triggers as rarely as possibly (the primary uses I have for them were outlined in Chapter 7). Also, be certain to develop an error handler in your T-SQL code and applications that is used with all of your code so that you capture all exceptions in a manner that is desirable for you and your developers.

In the previous section, I introduced transactions, which are the foundation of the SQL Server concurrency controls. Even without concurrency they would be useful, but now, we are going to get a bit deeper into concurrency controls and start to demonstrate how multiple users can be manipulating and modifying the exact same data, making sure that all users get consistent usage of the data. Picture a farm with tractors and people picking vegetables. Both sets of farm users are necessary, but you definitely want to isolate their utilization from one another.

Of the ACID properties discussed earlier, isolation is probably the most difficult to understand and certainly the most important to get right. You probably don’t want to make changes to a system and have them trampled by the next user any more than the farm hand wants to become tractor fodder (well, OK, perhaps his concern is a bit more physical—a wee bit at least). In this section, I will introduce a couple important concepts that are essential to building concurrent applications, both in understanding what is going on, and introduce the knobs you can use to tune the degree of isolation for sessions:

- Locks: These are holds put by SQL Server on objects that are being used by users.

- Isolation levels: These are settings used to control the length of time for which SQL Server holds onto the locks.

These two important things work together to allow you to control and optimize a server’s concurrency, allowing users to work at the same time, on the same resources, while still maintaining consistency. However, just how consistent your data remains is the important thing, and that is what you will see in the “Isolation Levels” section.

Locks

Locks are tokens laid down by the SQL Server processes to stake their claims to the different resources available, so as to prevent one process from stomping on another and causing inconsistencies or prevent another process from seeing data that has not yet been verified by constraints or triggers. They are a lot like the “diver down” markers that deep-sea divers place on top of the water when working below the water. They do this to alert other divers, pleasure boaters, fishermen, and others that they’re below. Other divers are welcome, but a fishing boat with a trolling net should please stay away, thank you very much! Every SQL Server process applies a lock to anything it does to ensure that that other user processes know what they are doing as well as what they are planning to do and to ensure that other processes don’t get in it’s way. Minimally, a lock is always placed just to make sure that the database that is in use cannot be dropped.

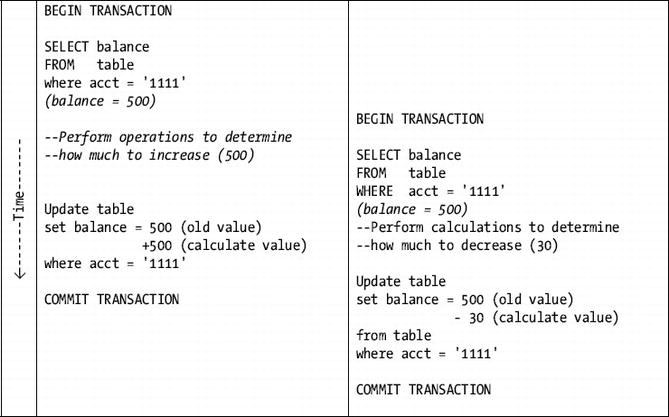

The most common illustration of why locks are needed is called the lost update , as illustrated in Figure 11-1.

Figure 11-1. A lost update illustration (probably one of the major inspirations for the other definition of multitasking: “screwing up everything simultaneously”)

In the scenario in Figure 11-1, you have two concurrent users. Each of these executes some SQL statements adding money to the balance, but in the end, the final value is going to be the wrong value, and 500 will be lost from the balance. Why? Because each user fetched a reality from the database that was correct at the time and then acted on it as if it would always be true.

Locks act as a message to other processes that a resource is being used, or at least probably being used. Think of a railroad-crossing sign. When the bar crosses the road, it acts as a lock to tell you not to drive across the tracks because the train is going to use the resource. Even if the train stops and never reaches the road, the bar comes down, and the lights flash. This lock can be ignored (as can SQL Server locks), but it’s generally not advisable to do so, because if the train does come, you may not have the ability to go back to Disney World, except perhaps to the Haunted Mansion. (Ignoring locks isn’t usually as messy as ignoring a train-crossing signal, unless you are creating the system that controls that warning signal. Ignore those locks—ouch.)

In this section, I will look at a few characteristics of locks:

- Type of lock: Indicates what is being locked

- Mode of lock: Indicates how strong the lock is

If you’ve been around for a few versions of SQL Server, you probably know that since SQL Server 7.0, SQL Server primarily uses row-level locks. That is, a user locking some resource in SQL Server does it on individual rows of data, rather than on pages of data, or even on complete tables.

However, thinking that SQL Server only locks at the row level is misleading, as SQL Server can use six different types of locks to lock varying portions of the database, with the row being the finest type of lock, all the way up to a full database lock. And each of them will be used quite often. The types of locks in Table 11-1 are supported.

![]() Tip In terms of locks, database object locks (row, RID, key range, key, page, table, database) are all you have much knowledge of or control over in SQL Server, so these are all I’ll cover. However, you should be aware that many more locks are in play, because SQL Server manages its hardware and internal needs as you execute queries. Hardware and internal resource locks are referred to as latches, and you’ll occasionally see them referenced in SQL Server Books Online, though the documentation is not terribly deep regarding them. You have little control over them, because they control physical resources, like the lock on the lavatory door in an airplane. Like the lavatory, though, you generally only want one user accessing a physical resource at a time.

Tip In terms of locks, database object locks (row, RID, key range, key, page, table, database) are all you have much knowledge of or control over in SQL Server, so these are all I’ll cover. However, you should be aware that many more locks are in play, because SQL Server manages its hardware and internal needs as you execute queries. Hardware and internal resource locks are referred to as latches, and you’ll occasionally see them referenced in SQL Server Books Online, though the documentation is not terribly deep regarding them. You have little control over them, because they control physical resources, like the lock on the lavatory door in an airplane. Like the lavatory, though, you generally only want one user accessing a physical resource at a time.

Table 11-1. Lock Types

| Type of Lock | Granularity |

|---|---|

| Row or row identifier (RID) | A single row in a table |

| Key or key range | A single value or range of values (for example, to lock rows with values from A–M, even if no rows currently exist) |

| Page | An 8-KB index or data page |

| Extent | A group of eight 8-KB pages (64KB), generally only used when allocating new space to the database |

| HoBT | An entire heap or B-tree structure |

| Table | An entire table, including all rows and indexes |

| File | An entire file, as covered in Chapter 10 |

| Application | A special type of lock that is user defined (will be covered in more detail later in this chapter) |

| Metadata | Metadata about the schema, such as catalog objects |

| Allocation unit | A group of 32 extents |

| Database | The entire database |

At the point of request, SQL Server determines approximately how many of the database resources (a table, a row, a key, a key range, and so on) are needed to satisfy the request. This is calculated on the basis of several factors, the specifics of which are unpublished. Some of these factors include the cost of acquiring the lock, the amount of resources needed, and how long the locks will be held (the next major section, “Isolation Levels,” will discuss the factors surrounding the question “how long?”). It’s also possible for the query processor to upgrade the lock from a more granular lock to a less specific type if the query is unexpectedly taking up large quantities of resources.

For example, if a large percentage of the rows in a table are locked with row locks, the query processor might switch to a table lock to finish out the process. Or, if you’re adding large numbers of rows into a clustered table in sequential order, you might use a page lock on the new pages that are being added.

Beyond the type of lock, the next concern is how strongly to lock the resource. For example, consider a construction site. Workers are generally allowed onto the site but not civilians who are not part of the process. Sometimes, however, one of the workers might need exclusive use of the site to do something that would be dangerous for other people to be around (like using explosives, for example.)

Where the type of lock defined the amount of the database to lock, the mode of the lock refers to how strict the lock is and how protective the engine is when dealing with other locks. Table 11-2 lists these available modes.

Table 11-2. Lock Modes

| Mode | Description |

|---|---|

| Shared | This lock mode grants access for reads only. It’s generally used when users are looking at but not editing the data. It’s called “shared” because multiple processes can have a shared lock on the same resource, allowing read-only access to the resource. However, sharing resources prevents other processes from modifying the resource. |

| Exclusive | This mode gives exclusive access to a resource and can be used during modification of data also. Only one process may have an active exclusive lock on a resource. |

| Update | This mode is used to inform other processes that you’re planning to modify the data but aren’t quite ready to do so. Other connections may also issue shared, but not update or exclusive, locks while you’re still preparing to do the modification. Update locks are used to prevent deadlocks (I’ll cover them later in this section) by marking rows that a statement will possibly update, rather than upgrading directly from a shared lock to an exclusive one. |

| Intent | This mode communicates to other processes that taking one of the previously listed modes might be necessary. You might see this mode as intent shared, intent exclusive, or shared with intent exclusive. |

| Schema | This mode is used to lock the structure of an object when it’s in use, so you cannot alter a table when a user is reading data from it. |

Each of these modes, coupled with the granularity, describes a locking situation. For example, an exclusive table lock would mean that no other user can access any data in the table. An update table lock would say that other users could look at the data in the table, but any statement that might modify data in the table would have to wait until after this process has been completed.

To determine which mode of a lock is compatible with another mode of lock, we deal with lock compatibility. Each lock mode may or may not be compatible with the other lock mode on the same resource (or resource that contains other resources). If the types are compatible, two or more users may lock the same resource. Incompatible lock types would require the any additional users simply to wait until all of the incompatible locks have been released.

Table 11-3 shows which types are compatible with which others.

Table 11-3. Lock Compatibility Modes

| Mode | IS | S | U | IX | SIX | X |

|---|---|---|---|---|---|---|

| Intent shared (IS) | • | • | • | • | • | |

| Shared (S) | • | • | • | |||

| Update (U) | • | • | ||||

| Intent exclusive (IX) | • | • | ||||

| Shared with intent exclusive (SIX) | • | |||||

| Exclusive (X) |

Although locks are great for data consistency, as far as concurrency is considered, locked resources stink. Whenever a resource is locked with an incompatible lock type and another process cannot use it to complete its processing, concurrency is lowered, because the process must wait for the other to complete before it can continue. This is generally referred to as blocking: one process is blocking another from doing something, so the blocked process must wait its turn, no matter how long it takes.

Simply put, locks allow consistent views of the data by only letting a single process modify a single resource at a time, while allowing multiple viewers simultaneous utilization in read-only access. Locks are a necessary part of SQL Server architecture, as is blocking to honor those locks when needed, to make sure one user doesn’t trample on another’s data, resulting in invalid data in some cases.

In the next section, I’ll discuss isolation levels, which determine how long locks are held. Executing SELECT * FROM sys.dm_os_waiting_tasks gives you a list of all processes that tells you if any users are blocking and which user is doing the blocking. Executing SELECT * FROM sys.dm_tran_locks lets you see locks that are being held. SQL Server Management Studio has a decent Activity Monitor, accessible via the Object Explorer in the Management folder.

It’s possible to instruct SQL Server to use a different type of lock than it might ordinarily choose by using table hints on your queries. For individual tables in a FROM clause, you can set the type of lock to be used for the single query like so:

FROM table1 [WITH] (<tableHintList>)

join table2 [WITH] (<tableHintList>)

Note that these hints work on all query types. In the case of locking, you can use quite a few. A partial list of the more common hints follows:

- PageLock: Forces the optimizer to choose page locks for the given table.

- NoLock: Leave no locks, and honor no locks for the given table.

- RowLock: Force row-level locks to be used for the table.

- Tablock: Go directly to table locks, rather than row or even page locks. This can speed some operations, but seriously lowers write concurrency.

- TablockX: This is the same as Tablock, but it always uses exclusive locks (whether it would have normally done so or not).

- XLock: Use exclusive locks.

- UpdLock: Use update locks.

Note that SQL Server can override your hints if necessary. For example, take the case where a query sets the table hint of NoLock, but then rows are modified in the table in the execution of the query. No shared locks are taken or honored, but exclusive locks are taken and held on the table for the rows that are modified, though not on rows that are only read (this is true even for resources that are read as part of a trigger or constraint).

A very important term that’s you need to understand is “deadlock.” A deadlock is a circumstance where two processes are trying to use the same objects, but neither will ever be able to complete because each is blocked by the other connection. For example, consider two processes (Processes 1 and 2), and two resources (Resources A and B). The following steps lead to a deadlock:

- Process 1 takes a lock on Resource A, and at the same time, Process 2 takes a lock on Resource B.

- Process 1 tries to get access to Resource B. Because it’s locked by Process 2, Process 1 goes into a wait state.

- Process 2 tries to get access to Resource A. Because it’s locked by Process 1, Process 2 goes into a wait state.

At this point, there’s no way to resolve this issue without ending one of the processes. SQL Server arbitrarily kills one of the processes, unless one of the processes has voluntarily raised the likelihood of being the killed process by setting DEADLOCK_PRIORITY to a lower value than the other. Values can be between integers –10 and 10, or LOW (equal to –5), NORMAL (0), or HIGH (5). SQL Server raises error 1205 to the client to tell the client that the process was stopped:

Server: Msg 1205, Level 13, State 1, Line 4

Transaction (Process ID 55) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

At this point, you could resubmit the request, as long as the call was coded such that the application knows when the transaction was started and what has occurred (something every application programmer ought to strive to do).

![]() Tip Proper deadlock handling requires that you build your applications in such a way that you can easily tell how much of an operation succeeded or failed. This is done by proper use of transactions. A good practice is to send one transaction per batch from a client application. Keep in mind that the engine views nested transactions as one transaction, so what I mean here is to start and complete one high-level transaction per batch.

Tip Proper deadlock handling requires that you build your applications in such a way that you can easily tell how much of an operation succeeded or failed. This is done by proper use of transactions. A good practice is to send one transaction per batch from a client application. Keep in mind that the engine views nested transactions as one transaction, so what I mean here is to start and complete one high-level transaction per batch.

Deadlocks can be hard to diagnose, as you can deadlock on many things, even hardware access. A common trick to try to alleviate frequent deadlocks between pieces of code is to order object access in the same order in all code (so table dbo.Apple, dbo.Bananna, etc) if possible. This way, locks are more likely to be taken in the same order, causing the lock to block earlier, so that the next process is blocked instead of deadlocked.