![]()

Table Structures and Indexing

A lot of us have jobs where we need to give people structure but that is different from controlling.

—Keith Miller

To me, the true beauty of the relational database engine comes from its declarative nature. As a programmer, I simply ask the engine a question, and it answers it. The questions I ask are usually pretty simple; just give me some data from a few tables, correlate it on some of the data, do a little math perhaps, and give me back these pieces of information (and naturally do it incredibly fast if you don’t mind). Generally, the engine obliges with an answer extremely quickly. But how does it do it? If you thought it was magic, you would not be right. It is a lot of complex code implementing a massive amount of extremely complex algorithms that allow the engine to answer your questions in a timely manner. With every passing version of SQL Server, that code gets better at turning your relational request into a set of operations that gives you the answers you desire in remarkably small amounts of time. These operations will be shown to you on a query plan, which is a blueprint of the algorithms used to execute your query. I will use query plans often in this chapter and others to show you how your design choices can affect the way work gets done.

Our job as data-oriented designers and programmers is to assist the query optimizer (which takes your query and turns it into a plan of how to run the query), the query processor (which takes the plan and uses it to do the actual work), and the storage engine (which manages IO for the whole process) by first designing and implementing as close to the relational model as possible by normalizing your structures, using good set-based code (no cursors), following best practices with coding T-SQL, and so on. This is a design book, so I won’t cover T-SQL coding, but it is a skill you should master. Consider Apress’s Beginning T-SQL 2012 by Kathi Kellenberger (Aunt Kathi!) and Scott Shaw or perhaps one of Itzik Ben-Gan’s Inside SQL books on T-SQL for some deep learning on the subject. Once you have built your system correctly, the next step is to help out by adjusting the physical structures using indexing, filegroups, files, partitioning, and everything else you can do to adjust the physical layers to assist the optimizer deal with your commonly asked questions.

When it comes to tuning your database structures, you must maintain a balance between doing too much and too little. Indexing strategies are a great example of this. If you don’t use indexes enough, searches will be slow, as the query processor could have to read every row of every table for every query (which, even if it seems fast on your machine, can cause the concurrency issues we will cover in the next chapter by forcing the query processor to lock a lot more resources than is necessary). Use too many indexes, and modifying data could take too long, as indexes have to be maintained. Balance is the key, kind of like matching the amount of fluid to the size of the glass so that you will never have to answer that annoying question about a glass that has half as much fluid as it can hold. (The answer is either that the glass is too large or the waitress needs to refill your glass immediately, depending on the situation.)

Everything we have done so far has been centered on the idea that the quality of the data is the number one concern. Although this is still true, in this chapter, we are going to assume that we’ve done our job in the logical and implementation phases, so the data quality is covered. Slow and right is always better than fast and wrong (how would you like to get paid a week early, but only get half your money?), but the obvious goal of building a computer system is to do things right and fast. Everything we do for performance should affect only the performance of the system, not the data quality in any way.

We have technically added indexes in previous chapters as a side effect of adding primary key and unique constraints (in that a unique index is built by SQL Server to implement the uniqueness condition). In many cases, those indexes will turn out to be a lot of what you need to make normal queries run nicely, since the most common searches that people will do will be on identifying information. Of course, you will likely discover that some of the operations you are trying to achieve won’t be nearly as fast as you hope. This is where physical tuning comes in, and at this point, you need to understand how tables are structured and consider organizing the physical structures.

The goal of this chapter is to provide a basic understanding of the types of things you can do with the physical database implementation, including the indexes that are available to you, how they work, and how to use them in an effective physical database strategy. This understanding relies on a base knowledge of the physical data structures on which we based these indexes—in other words, of how the data is structured in the physical SQL Server storage engine. In this chapter, I’ll cover the following:

- Physical database structure: An overview of how the database and tables are stored. This acts mainly as foundation material for subsequent indexing discussion, but the discussion also highlights the importance of choosing and sizing your datatypes carefully.

- Indexing: A survey of the different types of indexes and their structure. I’ll demonstrate many of the index settings and how these might be useful when developing your strategy, to correct any performance problems identified during optimization testing.

- Index usage scenarios: I’ll discuss a few specialized cases of how to apply and use indexes.

- Index Dynamic Management View queries: In this section, I will introduce a couple of the dynamic management views that you can use to help determine what indexes you may need and to see which indexes have been useful in your system.

Once you understand the physical data structures, it will be a good bit easier to visualize what is occurring in the engine and then optimize data storage and access without affecting the correctness of the data. It’s essential to the goals of database design and implementation that the physical storage not affect the physically implemented model. This is what Codd’s eighth rule, also known as the Physical Data Independence rule—is about. As we discussed in Chapter 1, this rule states that the physical storage can be implemented in any manner as long as the users don’t have to know about it. It also implies that if you change the physical storage, the users shouldn’t be affected. The strategies we will cover should change the physical model but not the model that the users (people and code) know about. All we want to do is enhance performance, and understanding the way SQL Server stores data is an important step.

![]() Note I am generally happy to treat a lot of the deeper internals of SQL Server as a mystery left to the engine to deal with. For a deeper explanation consider any of Kalen Delaney’s books, where I go whenever I feel the pressing need to figure out why something that seems bizarre is occurring. The purpose of this chapter is to give you a basic feeling for what the structures are like, so you can visualize the solution to some problems and understand the basics of how to lay out your physical structures.

Note I am generally happy to treat a lot of the deeper internals of SQL Server as a mystery left to the engine to deal with. For a deeper explanation consider any of Kalen Delaney’s books, where I go whenever I feel the pressing need to figure out why something that seems bizarre is occurring. The purpose of this chapter is to give you a basic feeling for what the structures are like, so you can visualize the solution to some problems and understand the basics of how to lay out your physical structures.

Some of the samples may not work 100% the same way on your computer, depending on our hardware situations, or changes to the optimizer from updates or service packs.

Physical Database Structure

In SQL Server, databases are physically structured as several layers of containers that allow you to move parts of the data around to different disk drives for optimum access. As discussed in Chapter 1, a database is a collection of related data. At the logical level, it contains tables that have columns that contain data. At the physical level, databases are made up of files, where the data is physically stored. These files are basically just typical Microsoft Windows files, and they are logically grouped into filegroups that control where they are stored on a disk. Each file contains a number of extents, which are is an allocation of 64-KB in a database file that’s made up of eight individual contiguous 8-KB pages. The page is the basic unit of data storage in SQL Server databases. Everything that’s stored in SQL Server is stored on pages of several types—data, index, overflow and others—but these are the ones that are most important to you (I will list the others later in the section called “Extents and Pages”). The following sections describe each of these containers in more detail, so you understand the basics of how data is laid out on disk.

![]() Note Because of the extreme variety of hardware possibilities and needs, it’s impossible in a book on design to go into serious depth about how and where to place all your files in physical storage. I’ll leave this task to the DBA-oriented books. For detailed information about choosing and setting up your hardware, check out

http://msdn.microsoft.com

or most any of Glenn Berry’s writing. Glenn’s blog (at

http://sqlserverperformance.wordpress.com/

at the time of this writing) contained a wealth of information about SQL Server hardware, particularly CPU changes.

Note Because of the extreme variety of hardware possibilities and needs, it’s impossible in a book on design to go into serious depth about how and where to place all your files in physical storage. I’ll leave this task to the DBA-oriented books. For detailed information about choosing and setting up your hardware, check out

http://msdn.microsoft.com

or most any of Glenn Berry’s writing. Glenn’s blog (at

http://sqlserverperformance.wordpress.com/

at the time of this writing) contained a wealth of information about SQL Server hardware, particularly CPU changes.

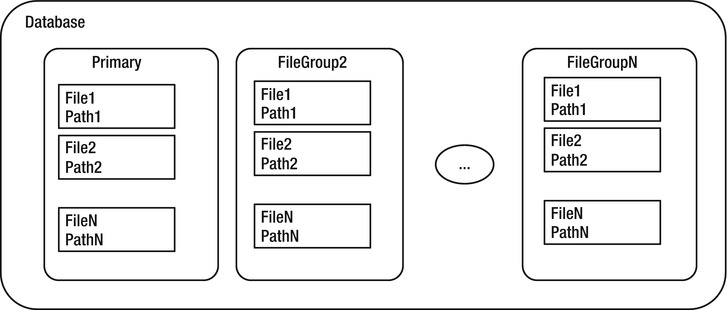

Figure 10-1 provides a high-level depiction of the objects used to organize the files (I’m ignoring logs in this chapter, because you don’t have direct access to them).

Figure 10-1. Database storage organization

At the top level of a SQL Server instance, we have the database. The database is comprised of one or more filegroups, which are logical groupings of one or more files. We can place different filegroups on different disk drives (hopefully on a different disk drive controller) to distribute the I/O load evenly across the available hardware. It’s possible to have multiple files in the filegroup, in which case SQL Server allocates space across each file in the filegroup. For best performance, it’s generally best to have no more files in a filegroup than you have physical CPUs (not including hyperthreading, though the rules with hyperthreading are changing as processors continue to improve faster than one could write books on the subject).

A filegroup contains one or more files, which are actual operating system files. Each database has at least one primary filegroup, whose files are called primary files (commonly suffixed as .mdf, although there’s no requirement to give the files any particular names or extension). Each database can possibly have other secondary filegroups containing the secondary files (commonly suffixed as .ndf), which are in any other filegroups. Files may only be a part of a single filegroup. SQL Server proportionally fills files by allocating extents in each filegroup equally, so you should make all of the files the same size if possible. (There is also a file type for full-text indexing and a filegroup type we used in Chapter 7 for filestream types of data that I will largely ignore in this chapter as well. I will focus only on the core file types that you will use for implementing your structures.)

You control the placement of objects that store physical data pages at the filegroup level (code and metadata is always stored on the primary filegroup, along with all the system objects). New objects created are placed in the default filegroup, which is the PRIMARY filegroup (every database has one as part of the CREATE DATABASE statement, or the first file specified is set to primary) unless another filegroup is specified in any CREATE <object> commands. For example, to place an object in a filegroup other than the default, you need to specify the name of the filegroup using the ON clause of the table- or index-creation statement:

CREATE TABLE <tableName>

(…) ON <fileGroupName>

This command assigns the table to the filegroup, but not to any particular file. Where in the files the object is created is strictly out of your control.

![]() Tip If you want to move a table to a different filegroup, you can use the MOVE TO option of the ALTER TABLE statement if the table has a clustered index, or for a heap (a table without a clustered index, covered later in this chapter), create a clustered index on the object on the filegroup you want it and then drop it. For nonclustered indexes, use the DROP_EXISTING setting on the CREATE INDEX statement.

Tip If you want to move a table to a different filegroup, you can use the MOVE TO option of the ALTER TABLE statement if the table has a clustered index, or for a heap (a table without a clustered index, covered later in this chapter), create a clustered index on the object on the filegroup you want it and then drop it. For nonclustered indexes, use the DROP_EXISTING setting on the CREATE INDEX statement.

Use code like the following to create indexes and specify a filegroup:

CREATE INDEX <indexName> ON <tableName> (<columnList>) ON <filegroup>;

Use the following type of command (or use ALTER TABLE) to create constraints that in turn create indexes (UNIQUE, PRIMARY KEY):

CREATE TABLE <tableName>

(

…

<primaryKeyColumn> int CONSTRAINT PKTableName ON <fileGroup>

…

);

For the most part, having just one filegroup and one file is the best practice for a large number of databases. If you are unsure if you need multiple filegroups, my advice is to build your database on a single filegroup and see if the data channel provided can handle the I/O volume (for the most part, I will avoid making too many such generalizations, as tuning is very much an art that requires knowledge of the actual load the server will be under). As activity increases and you build better hardware with multiple CPUs and multiple drive channels, you might place indexes on their own filegroup, or even place files of the same filegroup across different controllers.

In the following example, I create a sample database with two filegroups, with the secondary filegroup having two files in it (I put this sample database in an SQLData folder in the root of the C drive to keep the example simple (and able to work on the types of drives that many of you will probably be testing my code on), but it is rarely a good practice to place your files on the C: drive when you have others available. I generally put a sql directory on every drive and put everything SQL in that directory in subfolders to keep things consistent over all of our servers. Put the files wherever works best for you.):

CREATE DATABASE demonstrateFilegroups ON

PRIMARY ( NAME = Primary1, FILENAME = 'c:sqldatademonstrateFilegroups_primary.mdf',

SIZE = 10MB),

FILEGROUP SECONDARY

( NAME = Secondary1, FILENAME = 'c:sqldatademonstrateFilegroups_secondary1.ndf',

SIZE = 10MB),

( NAME = Secondary2, FILENAME = 'c:sqldatademonstrateFilegroups_secondary2.ndf',

SIZE = 10MB)

LOG ON ( NAME = Log1,FILENAME = 'c:sqllogdemonstrateFilegroups_log.ldf', SIZE = 10MB);

You can define other file settings, such as minimum and maximum sizes and growth. The values you assign depend on what hardware you have. For growth, you can set a FILEGROWTH parameter that allows you to grow the file by a certain size or percentage of the current size, and a MAXSIZE parameter, so the file cannot just fill up existing disk space. For example, if you wanted the file to start at 1GB and grow in chunks of 100MB up to 2GB, you could specify the following:

CREATE DATABASE demonstrateFileGrowth ON

PRIMARY ( NAME = Primary1,FILENAME = 'c:sqldatademonstrateFileGrowth_primary.mdf',

SIZE = 1GB, FILEGROWTH=100MB, MAXSIZE=2GB)

LOG ON ( NAME = Log1,FILENAME = 'c:sqlldatademonstrateFileGrowth_log.ldf', SIZE = 10MB);

The growth settings are fine for smaller systems, but it’s usually better to make the files large enough so that there’s no need for them to grow. File growth can be slow and cause ugly bottlenecks when OLTP traffic is trying to use a file that’s growing. When SQL Server is running on a desktop operating system like Windows XP or greater (and at this point you probably ought to be using something greater like Windows 7—(or presumably Windows 8 or 9 depending on when you are reading this) or on a server operating system such as Windows Server 2003 or greater (again, it is 2012, so emphasize “or greater”), you can improve things by using “instant” file allocation (though only for data files). Instead of initializing the files, the space on disk can simply be allocated and not written to immediately. To use this capability, the system account cannot be LocalSystem, and the user account that the SQL Server runs under must have SE_MANAGE_VOLUME_NAME Windows permissions. Even with the existence of instant file allocation, it’s still going to be better to have some idea of what size data you will have and allocate space proactively, as you then have cordoned off the space ahead of time: no one else can take it from you, and you won’t fail when the file tries to grow and there isn’t enough space. In either event, the DBA staff should be on top of the situation and make sure that you don’t run out of space.

You can query the sys.filegroups catalog view to view the files in the newly created database:

USE demonstrateFilegroups;

GO

SELECT case WHEN fg.name IS NULL

then CONCAT('OTHER-',df.type_desc COLLATE database_default)

ELSE fg.name end as file_group,

df.name as file_logical_name,

df.physical_name as physical_file_name

FROM sys.filegroups fg

RIGHT JOIN sys.database_files df

ON fg.data_space_id = df.data_space_id;

This returns the following results:

| file_group | file_logical_name | physical_file_name |

| ========= | ------------- | ------------- |

| PRIMARY | Primary1 | c:sqldatademonstrateFilegroups_primary.mdf |

| OTHER-LOG | Log1 | c:sqllogdemonstrateFilegroups_log.ldf |

| SECONDARY | Secondary1 | c:sqldatademonstrateFilegroups_secondary1.ndf |

| SECONDARY | Secondary2 | c:sqldatademonstrateFilegroups_secondary2.ndf |

The LOG file isn’t technically part of a filegroup, so I used a right outer join to the database files and gave it a default filegroup name of OTHER plus the type of file to make the results include all files in the database. You may also notice a couple other interesting things in the code. First, the CONCAT function is new to SQL Server 2012 to add strings together.. Second is the COLLATE database_default. The strings in the system functions are in the collation of the server, while the literal OTHER- is in the database collation. If the server doesn’t match the database, this query would fail.

There’s a lot more information than just names in the catalog views I’ve referenced already in this chapter. If you are new to the catalog views, dig in and learn them. There is a wealth of information in those views that will be invaluable to you when looking at systems to see how they are set up and to determine how to tune them.

![]() Tip An interesting feature of filegroups is that you can back up and restore them individually. If you need to restore and back up a single table for any reason, placing it in its own filegroup can achieve this.

Tip An interesting feature of filegroups is that you can back up and restore them individually. If you need to restore and back up a single table for any reason, placing it in its own filegroup can achieve this.

These databases won’t be used anymore, so if you created them, just drop them if you desire:

USE MASTER;

GO

DROP DATABASE demonstrateFileGroups;

GO

DROP DATABASE demonstrateFileGrowth;

Extents and Pages

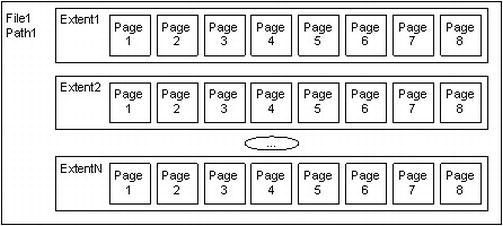

As shown in Figure 10-2, files are further broken down into a number of extents , each consisting of eight separate 8-KB pages where tables, indexes, and so on are physically stored. SQL Server only allocates space in a database to extents. When files grow, you will notice that the size of files will be incremented only in 64-KB increments.

Figure 10-2. Files and extents

Each extent in turn has eight pages that hold one specific type of data each:

- Data: Table data.

- Index: Index data.

- Overflow data : Used when a row is greater than 8,060 bytes or for varchar(max), varbinary(max), text, or image values.

- Allocation map : Information about the allocation of extents.

- Page free space : Information about what different pages are allocated for.

- Index allocation : Information about extents used for table or index data.

- Bulk changed map : Extents modified by a bulk INSERT operation.

- Differential changed map : Extents that have changed since the last database backup command. This is used to support differential backups.

In larger databases, most extents will contain just one type of page, but in smaller databases, SQL Server can place any kind of page in the same extent. When all data is of the same type, it’s known as a uniform extent. When pages are of various types, it’s referred to as a mixed extent.

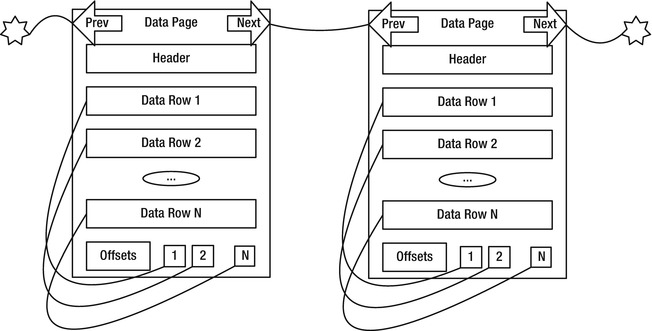

SQL Server places all table data in pages, with a header that contains metadata about the page (object ID of the owner, type of page, and so on), as well as the rows of data, which I’ll cover later in this chapter. At the end of the page are the offset values that tell the relational engine where the rows start.

Figure 10-3 shows a typical data page from a table. The header of the page contains identification values such as the page number, the object ID of the object the data is for, compression information, and so on. The data rows hold the actual data. Finally, there’s an allocation block that has the offsets/pointers to the row data.

Figure 10-3. Data pages

Figure 10-3 shows that there are pointers from the next to the previous rows. These pointers are only used when pages are ordered, such as in the pages of an index. Heap objects (tables with no clustered index) are not ordered. I will cover this a bit later in the “Index Types” section.

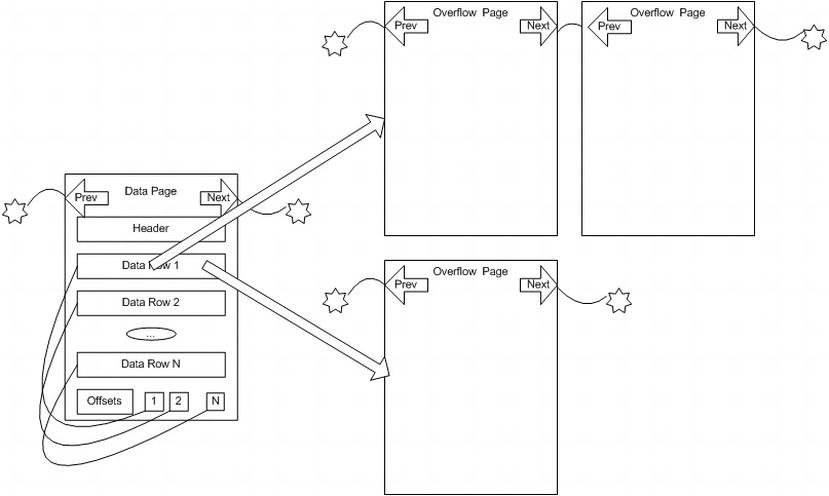

The other kind of page that is frequently used that you need to understand is the overflow page . It is used to hold row data that won’t fit on the basic 8,060-byte page. There are two reasons an overflow page is used:

- The combined length of all data in a row grows beyond 8,060 bytes. In versions of SQL Server prior to 2000, this would cause an error. In versions after this, data goes on an overflow page automatically, allowing you to have virtually unlimited row sizes.

- By setting the sp_tableoption setting on a table for large value types out of row to 1, all the (max) and XML datatype values are immediately stored out of row on an overflow page. If you set it to 0, SQL Server tries to place all data on the main page in the row structure, as long as it fits into the 8,060-byte row. The default is 0, because this is typically the best setting when the typical values are short enough to fit on a single page.

For example, Figure 10-4 depicts the type of situation that might occur for a table that has the large value types out of row set to 1. Here, Data Row 1 has two pointers to a varbinary(max) columns: one that spans two pages and another that spans only a single page. Using all of the data in Data Row 1 will now require up to four reads (depending on where the actual page gets stored in the physical structures), making data access far slower than if all of the data were on a single page. This kind of performance problem can be easy to overlook, but on occasion, overflow pages will really drag down your performance, especially when other programmers use SELECT * on tables where they don’t really need all of the data.

Figure 10-4. Sample overflow pages

The overflow pages are linked lists that can accommodate up to 2GB of storage in a single column. Generally speaking, it isn’t really a very good idea to store 2GB in a single column (or even a row), but the ability to do so is available if needed.

Understand that storing large values that are placed off of the main page will be far more costly when you need these values than if all of the data can be placed in the same data page. On the other hand, if you seldom use the data in your queries, placing them off the page can give you a much smaller footprint for the important data, requiring far less disk access on average. It is a balance that you need to take care with, as you can imagine how costly a table scan of columns that are on the overflow pages is going to be. Not only will you have to read extra pages, you have to be redirected to the overflow page for every row that’s overflowed.

Be careful when allowing data to overflow the page. It’s guaranteed to make your processing more costly, especially if you include the data that’s stored on the overflow page in your queries—for example, if you use the dreaded SELECT * regularly in production code! It’s important to choose your datatypes correctly to minimize the size of the data row to include only frequently needed values. If you frequently need a large value, keep it in row; otherwise, consider placing it off row or even create two tables and join them together as needed.

![]() Tip The need to access overflow pages is just one of the reasons to avoid using SELECT * FROM <tablename>–type queries in your production code, but it is an important one. Too often, you get data that you don’t intend to use, and when that data is located off the main data page, performance could suffer tremendously, and, in most cases, needlessly.

Tip The need to access overflow pages is just one of the reasons to avoid using SELECT * FROM <tablename>–type queries in your production code, but it is an important one. Too often, you get data that you don’t intend to use, and when that data is located off the main data page, performance could suffer tremendously, and, in most cases, needlessly.

Data on Pages

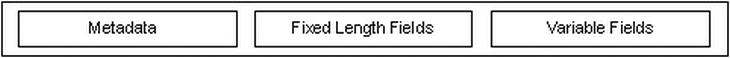

When you get down to the row level, the data is laid out with metadata, fixed length fields, and variable length fields, as shown in Figure 10-5. (Note that this is a generalization, and the storage engine does a lot of stuff to the data for optimization, especially when you enable compression.)

Figure 10-5. Data row

The metadata describes the row, gives information about the variable length fields, and so on. Generally speaking, since data is dealt with by the query processor at the page level, even if only a single row is needed, data can be accessed very rapidly no matter the exact physical representation.

![]() Note I use the term “column” when discussing logical SQL objects such as tables and indexes, but when discussing the physical table implementation, “field” is the proper term. Remember from Chapter 1 that a field is a physical location within a record.

Note I use the term “column” when discussing logical SQL objects such as tables and indexes, but when discussing the physical table implementation, “field” is the proper term. Remember from Chapter 1 that a field is a physical location within a record.

The maximum amount of data that can be placed on a single page (including overhead from variable fields) is 8,060 bytes. As illustrated in Figure 10-4, when a data row grows larger than 8,060 bytes, the data in variable length columns was can spill out onto an overflow page. A 16-byte pointer is left on the original page and points to the page where the overflow data is placed.

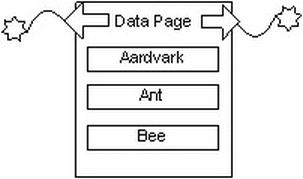

When inserting or updating rows, SQL Server might have to rearrange the data on the pages due to the pages being filled up. Such rearranging can be a particularly costly operation. Consider the situation from our example shown in Figure 10-6, assuming that only three values can fit on a page.

Figure 10-6. Sample data page before page split

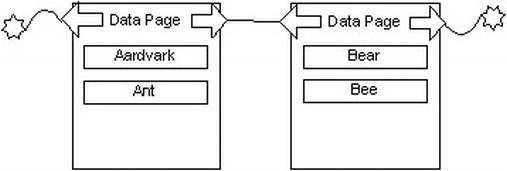

Say we want to add the value Bear to the page. If that value won’t fit onto the page, the page will need to be reorganized. Pages that need to be split are split into two, generally with 50 percent of the data on one page, and 50 percent on the other (there are usually more than three values on a real page). Once the page is split and its values are reinserted, the new pages would end up looking something like Figure 10-7.

Figure 10-7. Sample data page after page split

Page splits are awfully costly operations and can be terrible for performance, because after the page split, data won’t be located on successive physical pages. This condition is commonly known as fragmentation. Page splits occur in a normal system and are simply a part of adding data to your table. However, they can occur extremely rapidly and seriously degrade performance if you are not careful. Understanding the effect that page splits can have on your data and indexes is important as you tune performance on tables that have large numbers of inserts or updates.

To tune your tables and indexes to help minimize page splits, you can use the FILL FACTOR of the index. When you build or rebuild an index or a table (using ALTER TABLE <tablename> REBUILD, a command that was new in SQL Server 2008), the fill factor indicates how much space is left on each page for future data. If you are inserting random values all over the structures, a common situation that occurs when you use a nonsequential uniqueidentifier for a primary key, you will want to leave adequate space on each page to cover the expected numbers of rows that will be created in the future. During a page split, the data page is always split approximately fifty-fifty, and it is left half empty on each page, and even worse, the structure is becoming, as mentioned, fragmented.

Let’s jump ahead a bit: one of the good things about using a monotonously increasing value for a clustered index is that page splits over the entire index are greatly decreased. The table grows only on one end of the index, and while the index does need to be rebuilt occasionally using ALTER INDEX REORGANIZE or ALTER INDEX REBUILD, you don’t end up with page splits all over the table. And since the new value that won’t fit on the page comes at the end of the table, instead of a split, another page can be added to the chain. In the “Index Dynamic Management Views” section later, I will provide a query that will help you to know when to rebuild the index due to fragmentation.

Compression

The reason that the formats for pages and rows are generalized in the previous sections is that in SQL Server 2008, Microsoft implemented compression of data at the row and page levels. In versions prior to SQL Server 2005 SP2, all data was stored on a page in a raw format. However, in SQL Server 2005 SP2, Microsoft introduced datatype-level compression, which allowed data of the decimal datatype to be stored in a variable length field, also referred to as vardecimal. For SQL Server 2005, datatype compression was set using the sp_tableoption procedure with a setting of 'vardecimal storage format'.

In SQL Server 2008, the concept of compression was extended even further to all of the fixed length datatypes, including int, char, and float. Basically, you can allow SQL Server to save space by storing your data like it was a variable-sized type, yet in usage, the data will appear and behave like a fixed length type. Then in 2008R2, the feature was expanded yet again to include Unicode values. In Appendix A, I will note how compression will affect each datatype individually.

For example, if you stored the value of 100 in an int column, SQL Server needn’t use all 32 bits; it can store the value 100 the same amount of space as a tinyint. So instead of taking a full 32 bits, SQL Server can simply use 8 bits (1 byte). Another case is when you use a fixed length type like char(30) column but store only two characters; 28 characters could be saved, and the data padded as it is used. There is an overhead of 2 bytes per variable length column (or 4 bits if the size of the column is less than 8 bytes). Note that compression is only available in the Enterprise Edition.

This datatype-level compression is referred to as row compression , where each row in the table will be compressed as datatypes allow, shrinking the size on disk, but not making any major changes to the page structure. In Appendix B, I will indicate how each of the datatypes is affected by row compression, or for a list that may show any recent changes, you can check SQL Server Books Online for the topic of “Row Compression Implementation.” Row compression is a very interesting thing for many databases that use lots of fixed length data (for example, integers, especially for surrogate keys).

SQL Server also includes an additional compression capability called page compression. With page compression, first the data is compressed in the same manner as row compression, and then, the storage engine does a couple of interesting things to compress the data on a page:

- Prefix compression : Looks for repeated values in a value (like '0000001' and compresses the prefix to something like 6-0 (six zeros)

- Dictionary compression : For all values on the page, the storage engine looks for duplication, stores the duplicated value once, and then stores pointers on the data pages where the duplicated values originally resided.

You can apply data compression to your tables and indexes with the CREATE TABLE, ALTER TABLE, CREATE INDEX, and ALTER INDEX syntaxes. As an example, I will create a simple table, called test, and enable page compression on the table, row compression on a clustered index, and page compression on another index. (This code will work only on an Enterprise installation, or if you are using Developer Edition for testing.)

USE tempdb;

GO

CREATE TABLE testCompression

(

testCompressionId int NOT NULL,

value int NOT NULL

);

WITH (DATA_COMPRESSION = ROW) -- PAGE or NONE

ALTER TABLE testCompression REBUILD WITH (DATA_COMPRESSION = PAGE);

CREATE CLUSTERED INDEX XTestCompression_value

ON testCompression (value) WITH ( DATA_COMPRESSION = ROW );

ALTER INDEX XTestCompression_value

ON testCompression REBUILD WITH ( DATA_COMPRESSION = PAGE );

![]() Note The syntax of the

CREATE INDEX command allows for compression of the partitions of an index in different manners. I mention partitioning in the next section of the chapter. For full syntax, refer to SQL Server Books Online.

Note The syntax of the

CREATE INDEX command allows for compression of the partitions of an index in different manners. I mention partitioning in the next section of the chapter. For full syntax, refer to SQL Server Books Online.

Giving advice on whether to use compression is not really possible without knowing the factors that surround your actual situation. One tool you should use is the system procedure—sp_estimate_data_compression_savings—to check existing data to see just how compressed the data in the table or index would be after applying compression, but it won’t tell you how the compression will positively or negatively affect your performance. There are trade-offs to any sorts of compression. CPU utilization will go up in most cases, because instead of directly using the data right from the page, the query processor will have to translate the values from the compressed format into the uncompressed format that SQL Server will use. On the other hand, if you have a lot of data that would benefit from compression, you could possibly lower your I/O enough to make doing so worth the cost. Frankly, with CPU power growing by leaps and bounds with multiple-core scenarios these days and I/O still the most difficult to tune, compression could definitely be a great thing for many systems. However, I suggest testing with and without compression before applying in your production systems.

The last general physical structure concept that I will introduce is partitioning. Partitioning allows you to break a table (or index) into multiple physical structures by breaking them into more manageable chunks. Partitioning can allow SQL Server to scan data from different processes, enhancing opportunities for parallelism. SQL Server 7.0 and 2000 had partitioned views, where you would define a view and a set of tables, with each serving as a partition of the data. If you have properly defined (and trusted) constraints, SQL Server would use the WHERE clause to know which of the tables referenced in the view would have to be scanned in response to a given query. The data in the view was also editable like in a normal table. One thing you still can do with partitioned views is to build distributed partitioned views, which reference tables on different servers.

In SQL Server 2005, you could begin to define partitioning as part of the table structure. Instead of making a physical table for each partition, you define, at the DDL level, the different partitions of the table. Internally, the table is broken into the partitions based on a scheme that you set up. Note, too, that this feature is only included in the Enterprise Edition.

At query time, SQL Server can then dynamically scan only the partitions that need to be searched, based on the criteria in the WHERE clause of the query being executed. I am not going to describe partitioning too much, but I felt that it needed a mention in this edition of this book as a tool at your disposal with which to tune your databases, particularly if they are very large or very active. For deeper coverage, I would suggest you consider on of Kalen Delaney’s SQL Server Internals books. They are the gold standard in understanding the internals of SQL Server.

I will, however, present the following basic example of partitioning. Use whatever database you desire. I used tempdb for the data and AdventureWorks2012 for the sample data on my test machine and included the USE statement in the code download. The example is that of a sales order table. I will partition the sales into three regions based on the order date. One region is for sales before 2006, another for sales between 2006 and 2007, and the last for 2007 and later. The first step is to create a partitioning function. You must base the function on a list of values, where the VALUES clause sets up partitions that the rows will fall into based on the smalldatetime values that are presented to it, for example:

CREATE PARTITION FUNCTION PartitionFunction$dates (smalldatetime)

AS RANGE LEFT FOR VALUES ('20060101','20070101'),

--set based on recent version of

--AdventureWorks2012.Sales.SalesOrderHeader table to show

--partition utilization

Specifying the function as RANGE LEFT says that the values in the comma-delimited list should be considered the boundary on the side listed. So in this case, the ranges would be as follows:

- value <= '20060101'

- value > '20060101' and value <= '20070101'

- value > '20070101'

Specifying the function as RANGE RIGHT would have meant that the values lie to the right of the values listed, in the case of our ranges, for example:

- value < '20060101'

- value >= '20060101' and value < '20070101'

- value >= '20070101'

Next, use that partition function to create a partitioning scheme:

CREATE PARTITION SCHEME PartitonScheme$dates

AS PARTITION PartitionFunction$dates ALL to ( [PRIMARY] );

which will let you know:

Partition scheme 'PartitonScheme$dates' has been created successfully. 'PRIMARY' is marked as the next used filegroup in partition scheme 'PartitonScheme$dates'.

With the CREATE PARTITION SCHEME command, you can place each of the partitions you previously defined on a specific filegroup. I placed them all on the same filegroup for clarity and ease, but in practice, you usually want them on different filegroups, depending on the purpose of the partitioning. For example, if you were partitioning just to keep the often-active data in a smaller structure, placing all partitions on the same filegroup might be fine. But if you want to improve parallelism or be able to just back up one partition with a filegroup backup, you would want to place your partitions on different filegroups.

Next, you can apply the partitioning to a new table. You’ll need a clustered index involving the partition key. You apply the partitioning to that index. Following is the statement to create the partitioned table:

CREATE TABLE dbo.salesOrder

(

salesOrderIdint NOT NULL,

customerIdint NOT NULL,

orderAmountdecimal(10,2) NOT NULL,

orderDatesmalldatetime NOT NULL,

CONSTRAINT PKsalesOrder primary key nonclustered (salesOrderId)

ON [Primary],

CONSTRAINT AKsalesOrder unique clustered (salesOrderId, orderDate)

) on PartitonScheme$dates (orderDate);

Next, load some data from the AdventureWorks2012.Sales.SalesOrderHeader table to make looking at the metadata more interesting. You can do that using an INSERT statement such as the following:

INSERT INTO dbo.salesOrder(salesOrderId, customerId, orderAmount, orderDate)

SELECT SalesOrderID, CustomerID, TotalDue, OrderDate

FROM AdventureWorks2012.Sales.SalesOrderHeader;

You can see what partition each row falls in using the $partition function. You suffix the $partition function with the partition function name and the name of the partition key (or a partition value) to see what partition a row’s values are in, for example:

SELECT *, $partition.PartitionFunction$dates(orderDate) as partiton

FROM dbo.salesOrder;

You can also view the partitions that are set up through the sys.partitions catalog view. The following query displays the partitions for our newly created table:

SELECT partitions.partition_number, partitions.index_id,

partitions.rows, indexes.name, indexes.type_desc

FROM sys.partitions as partitions

JOIN sys.indexes as indexes

on indexes.object_id = partitions.object_id

AND indexes.index_id = partitions.index_id

WHERE partitions.object_id = object_id('dbo.salesOrder'),

This will return the following:

| partition_number | index_id | rows | name | type_desc |

| ========= | ------------- | ------------- | ------------- | ------------- |

| 1 | 1 | 1424 | 1424 | 1424 |

| 2 | 1 | 3720 | AKsalesOrder | CLUSTERED |

| 3 | 1 | 26321 | AKsalesOrder | CLUSTERED |

| 1 | 2 | 31465 | PKsalesOrder | NONCLUSTERED |

Partitioning is not a general purpose tool that should be used on every table, which is one of the reasons why it is only included in Enterprise Edition. However, partitioning can solve a good number of problems for you, if need be:

- Performance: If you only ever need the past month of data out of a table with three years’ worth of data, you can create partitions of the data where the current data is on a partition and the previous data is on a different partition.

- Rolling windows: You can remove data from the table by dropping a partition, so as time passes, you add partitions for new data and remove partitions for older data (or move to a different archive table).

- Maintainence: Some maintainance can be done at the partition level rather than the entire table, so once partition data is read-only, you may not need to maintain any longer. Some caveats do apply (you cannot rebuild a partitioned index online, for example.)

Indexes Overview

Indexes allow the SQL Server engine to perform fast, targeted data retrieval rather than simply scanning though the entire table. A well-placed index can speed up data retrieval by orders of magnitude, while a haphazard approach to indexing can actually have the opposite effect when creating, updating, or deleting data.

Indexing your data effectively requires a sound knowledge of how that data will change over time, the sort of questions that will be asked of it, and the volume of data that you expect to be dealing with. Unfortunately, this is what makes any topic about physical tuning so challenging. To index effectively, you almost need the psychic ability to fortell the future of your exact data usage patterns. Nothing in life is free, and the creation and maintenance of indexes can be costly. When deciding to (or not to) use an index to improve the performance of one query, you have to consider the effect on the overall performance of the system.

In the upcoming sections, I’ll do the following:

- Introduce the basic structure of an index.

- Discuss the two fundamental types of indexes and how their structure determines the structure of the table.

- Demonstrate basic index usage, introducing you to the basic syntax and usage of indexes.

- Show you how to determine whether SQL Server is likely to use your index and how to see if SQL Server has used your index.

If you are producing a product for sale that uses SQL Server as the backend, indexes are truly going to be something that you could let your customers manage unless you can truly effectively constrain how users will use your product. For example, if you sell a product that manages customers, and your basic expectation is that they will have around 1,000 customers, what happens if one wants to use it with 100,000 customers? Do you not take their money? Of course you do, but what about performance? Hardware improvements generally cannot even give linear improvement in performance. So if you get hardware that is 100 times “faster,” you would be extremely fortunate to get close to 100 times improvement. However, adding a simple index can provide 100,000 times improvement that may not even make a difference at all on the smaller data set. (This is not to pooh pooh the value of faster hardware at all. Just that situationally you get far greater gain from writing better code than you will from just throwing hardware at the problem. The ideal situation is adequate hardware and excellent code, naturally).

An index is an object that SQL Server can maintain to optimize access to the physical data in a table. You can build an index on one or more columns of a table. In essence, an index in SQL Server works on the same principle as the index of a book. It organizes the data from the column (or columns) of data in a manner that’s conducive to fast, efficient searching, so you can find a row or set of rows without looking at the entire table. It provides a means to jump quickly to a specific piece of data, rather than just starting on page one each time you search the table and scanning through until you find what you’re looking for. Even worse, unless SQL Server knows exactly how many rows it is looking for, it has no way to know if it can stop scanning data when one row had been found. Also, like the index of a book, an index is a separate entity from the actual table (or chapters) being indexed.

As an example, consider that you have a completely unordered list of employees and their details. If you had to search this list for persons named “Davidson”, you would have to look at every single name on every single page. Soon after trying this, you would immediately start trying to devise some better manner of searching. On first pass, you would probably sort the list alphabetically. But what happens if you needed to search for an employee by an employee identification number? Well, you would spend a bunch of time searching through the list sorted by last name for the employee number. Eventually, you could create a list of last names and the pages you could find them on and another list with the employee numbers and their pages. Following this pattern, you would build indexes for any other type of search you’d regularly perform on the list. Of course, SQL Server can page through the phone book one name at a time in such a manner that, if you need to do it occasionally, it isn’t such a bad thing, but looking at two or three names per search is always more efficient than two or three hundred, much less two or three million.

Now, consider this in terms of a table like an Employee table. You might execute a query such as the following:

SELECT LastName, <EmployeeDetails>

FROM Employee

WHERE LastName = 'Davidson';

In the absence of an index to rapidly search, SQL Server will perform a scan of the data in the entire table (referred to as a table scan) on the Employee table, looking for rows that satisfy the query predicate. A full table scan generally won’t cause you too many problems with small tables, but it can cause poor performance for large tables with many pages of data, much as it would if you had to manually look through 20 values versus 2,000. Of course, when you have a light load, like on your development box, you probably won’t be able to discern the difference between a seek and a scan (or even hundreds of scans). Only when you are experiencing a reasonably heavy load will the difference be noticed.

If we instead created an index on the LastName column, the index would sort the LastName rows in a logical fashion (in ascending alphabetical order by default) and the database engine can move directly to rows where the last name is Davidson and retrieve the required data quickly and efficiently. And even if there are ten people with the last name of Davidson, SQL Server knows to stop when it hits 'Davidtown'.

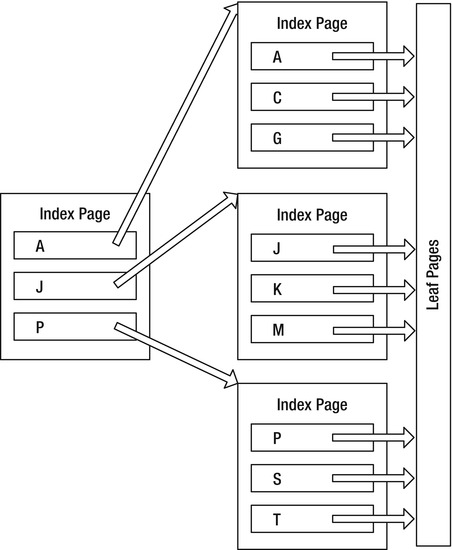

Of course, as you might imagine, the engineer types who invented the concept of indexing and searching data structures don’t simply make lists to search through. Instead, indexes are implemented using what is known as a balanced tree (B-tree) structure. The index is made up of index pages structured, again, much like an index of a book or a phone book. Each index page contains the first value in a range and a pointer to the next lower page in the index. The last level in the index is referred to as the leaf page, which contains the actual data values that are being indexed, plus either the data for the row or pointers to the data. This allows the query processor to go directly to the data it is searching for by checking only a few pages, even when there are a millions of values in the index.

Figure 10-8 shows an example of the type of B-tree that SQL Server uses for indexes. Each of the outer rectangles is an 8K index page, just as we discussed earlier. The three values—A, J, and P—are the index keys in this top-level page of the index. The index page has as many index keys as is possible. To decide which path to follow to reach the lower level of the index, we have to decide if the value requested is between two of the keys: A to I, J to P, or greater than P. For example, say the value we want to find in the index happens to be I. We go to the first page in the index. The database determines that I doesn’t come after J, so it follows the A pointer to the next index page. Here, it determines that I comes after C and G, so it follows the G pointer to the leaf page.

Figure 10-8. Basic index structure

Each of these pages is 8KB in size. Depending on the size of the key (determined by summing the data lengths of the columns in the key, up to a maximum of 900 bytes), it’s possible to have anywhere from 8 entries to over 1,000 on a single page. The more keys you can fit on a page, the greater the number of pages you can have on each level of the index. The more pages are linked from each level to the next, the fewer numbers of steps from the top page of the index to reach the leaf.

B-tree indexes are extremely efficient, because for an index that stores only 500 different values on a page—a reasonable number for a typical index of an integer—it has 500 pointers on the next level in the index, and the second level has 500 pages with 500 values each. That makes 250,000 different pointers on that level, and the next level has up to 250,000 * 500 pointers. That’s 125,000,000 different values in just a three-level index. Change that to a 100-byte key, do the math, and you will see why smaller keys are better! Obviously, there’s overhead to each index key, and this is just a rough estimation of the number of levels in the index.

Another idea that’s mentioned occasionally is how well balanced the tree is. If the tree is perfectly balanced, every index page would have exactly the same number of keys on it. Once the index has lots of data on one end, or data gets moved around on it for insertions or deletions, the tree becomes ragged, with one end having one level, and another many levels. This is why you have to do some basic maintenance on the indexes, something I have mentioned already.

Index Types

How indexes are structured internally is based on the existence (or nonexistence) of a clustered index. For the nonleaf pages of an index, everything is the same for all indexes. However, at the leaf node, the indexes get quite different—and the type of index used plays a large part in how the data in a table is physically organized.

There are two different types of relational indexes:

- Clustered: This type orders the physical table in the order of the index.

- Nonclustered: These are completely separate structures that simply speed access.

In the upcoming sections, I’ll discuss how the different types of indexes affect the table structure and which is best in which situation.

A clustered index physically orders the pages of the data in the table. The leaf pages of the clustered indexes are the data pages of the table. Each of the data pages is then linked to the next page in a doubly linked list to provide ordered scanning. The leaf pages of the clustered index are the actual data pages. In other words, the records in the physical structure are sorted according to the fields that correspond to the columns used in the index. Tables with clustered indexes are referred to as clustered tables.

The key of a clustered index is referred to as the clustering key, and this key will have additional uses that will be mentioned later in this chapter. For clustered indexes that aren’t defined as unique, each record has a 4-byte value (commonly known as an uniquifier) added to each value in the index where duplicate values exist. For example, if the values were A, B, and C, you would be fine. But, if you added another value B, the values internally would be A, B + 4ByteValue, B + Different4ByteValue, and C. Clearly, it is not optimal to get stuck with 4 bytes on top of the other value you are dealing with in every level of the index, so in general, you should try to use the clustered index on a set of columns where the values are unique.

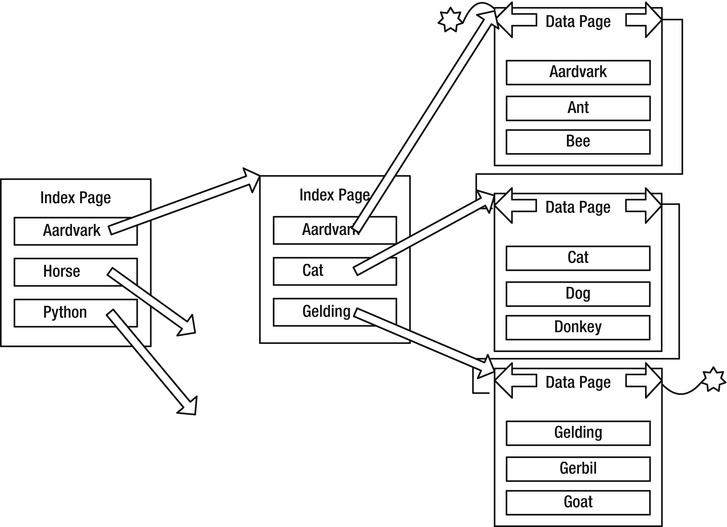

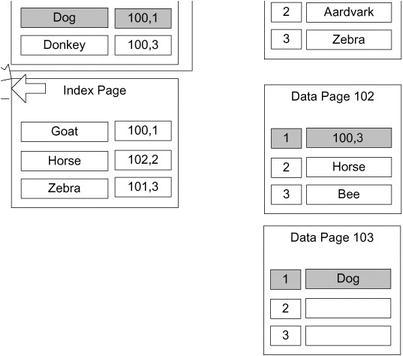

Figure 10-9 shows, at a high level, what a clustered index might look like for a table of animal names. (Note that this is just a partial example, there would likely be more second-level pages for Horse and Python at a minimum.)

Figure 10-9. Clustered index example

You can have only one clustered index on a table, because the table cannot be ordered in more than one direction. (Remember this; it is one of the most fun interview questions. Answering anything other than “one clustered index per table” leads to a fun line of followup questioning.)

A good real-world example of a clustered index would be a set of old-fashioned encyclopedias. Each letter is a level of the index, and on each page, there is another level that denotes the things you can find on each page (e.g., Office–Officer). Then. each topic is the leaf level of the index. The encyclopedia is are clustered on the topics in these books, just as the example was clustered on the name of the animal. In essence, the entire book is a table of information in clustered ordered. And indexes can be partitioned as well. The encylopedias are partitioned by letter into multiple books.

Now, consider a dictionary. Why are the words sorted, rather than just having a separate index with the words not in order? I presume that at least part of the reason is to let the readers scan through words they don’t know exactly how to spell, checking the definition to see if the word matches what they expect. SQL Server does something like this when you do a search. For example, back in Figure 10-9, if you were looking for a cat named George, you could use the clustered index to find rows where animal = 'Cat', then scan the data pages for the matching pages for any rows where name = 'George'.

I must caution you that although it’s true, physically speaking, that tables are stored in the order of the clustered index; logically speaking, tables must be thought of as having no order. (I know I promised to not mention this again back in Chapter 1, but it really is an important thing to remember.) This lack of order is a fundamental truth of relational programming: you aren’t required to get back data in the same order when you run the same query twice. The ordering of the physical data can be used by the query processor to enhance your performance, but during intermediate processing, the data can be moved around in any manner that results in faster processing the answer to your query. It’s true that you do almost always get the same rows back in the same order, mostly because the optimizer is almost always going to put together the same plan every time the same query is executed under the same conditions. However, load up the server with many requests, and the order of the data might change so SQL Server can best use its resources, regardless of the data’s order in the structures. SQL Server can choose to return data to us in any order that’s fastest for it. If disk drives are busy in part of a table and it can fetch a different part, it will. If order matters, use an ORDER BY clause to make sure that data is returned as you want.

Nonclustered index structures are fully independent of the underlying table. Where a clustered index is like a dictionary with the index physically linked to the table (since the leaf pages of the index are a part of the table), nonclustered indexes are more like indexes in a textbook. A nonclustered index is completely separate from the data, and on the leaf page, there are pointers to go to the data pages much like the index of a book contains page numbers.

Each leaf page in a nonclustered index contains some form of pointer to the rows on the data page. The pointer from the index to a data row is known as a row locator. Exactly how the row locator of a nonclustered indexes is structured is based on whether or not the underlying table has a clustered index.

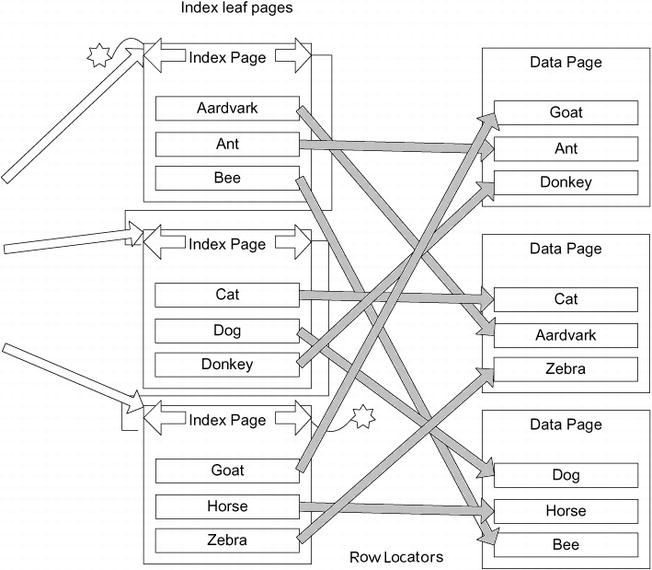

In this section, I will first show an abstract representation of the nonclustered index and then show the differences between the implementation of a nonclustered index when you do and do not also have a clustered index. At an abstract level, all nonclustered indexes follow the form shown in Figure 10-10.

Figure 10-10. Sample nonclustered index

The major difference between the two possibilities comes down to the row locator being different based on whether the underlying table has a clustered index. There are two different types of pointer that will be used:

- Tables with a clustered index: Clustering key

- Tables without a clustered index: Pointer to physical location of the data

In the next two sections, I’ll explain these in more detail. New in 2012 is a new type of index that I will briefly mention called a columnstore index. Rather than store index data per row, they store data by column. They are typical indexes, in that they don’t change the structure of the data, but they do make the table read only. It is a very exciting feature for data warehouse types of queries, but in relational, OLTP databases, there isn’t really a use for columnstore indexes. In Chapter 14, a columnstore index will be used in an example for the data warehouse overview, but that will be the extent of discussion on the subject. They are structured quite differently from classic B-tree indices, so if you do get to building a data warehouse (or if you are also involved with building dimensional databases), you will want to understand how they work and how they are structured as they will likely provide your star schema queries with a tremendous performance increase.

![]() Tip You can place nonclustered indexes on a different filegroup than the data pages to maximize the use of your disk subsystem in parallel. Note that the filegroup you place the indexes on ought to be on a different controller channel than the table; otherwise, it’s likely that there will be minimal or no gain.

Tip You can place nonclustered indexes on a different filegroup than the data pages to maximize the use of your disk subsystem in parallel. Note that the filegroup you place the indexes on ought to be on a different controller channel than the table; otherwise, it’s likely that there will be minimal or no gain.

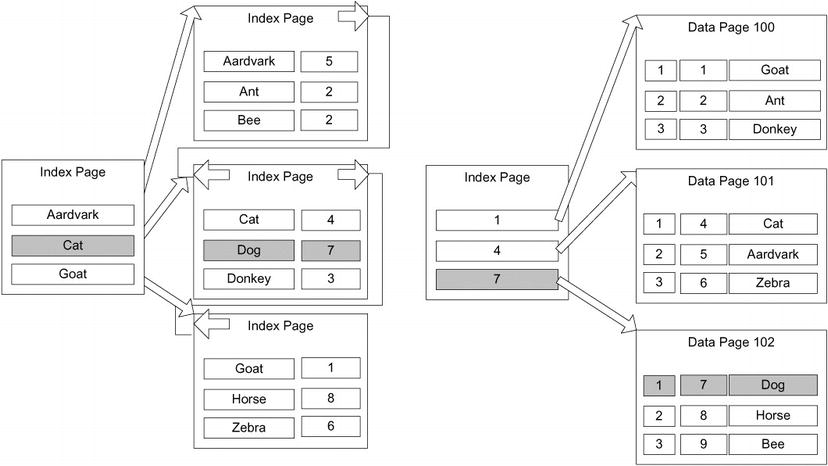

Nonclustered Indexes on Clustered Tables

When a clustered index exists on the table, the row locator for the leaf node of any nonclustered index is the clustering key from the clustered index. In Figure 10-10, the structure on the right side represents the clustered index, and on the left, the nonclustered index. To find a value, you start at the leaf node of the index and traverse the leaf pages. The result of the index traversal is the clustering key, which you then use to traverse the clustered index to reach the data, as shown in Figure 10-11.

Figure 10-11. Nonclustered index on a clustered table

The overhead of the operation I’ve just described is minimal as long as you keep your clustering key optimal and the index maintained. While having to scan two indexes is more work than just having a pointer to the physical location you have to think of the overall picture. Overall, it’s better than having direct pointers to the table, because only minimal reorganization is required for any modification of the values in the table. Consider if you had to maintain a book index manually. If you used the book page as the way to get to an index value, if had to add a page to the book in the middle you would have to update all of the page numbers. But if all of the topics were ordered alphabetically, and you just pointed to the topic name adding a topic would be easy.

The same is true for SQL Server and the structures can be changed thousands of times a second or more. Since there is very little hardware-based information lingering in the structure, data movement is easy for the query processor, and maintaining indexes is an easy operation. Early versions of SQL Server used physical location pointers, and this led to all manners of corruption in our indexes and tables. And let’s face it, the people with better understanding of such things also tell us that when the size of the clustering key is adequately small, this method is remarkably faster overall than having pointers directly to the table.

The primary benefit of the key structure becomes more obvious when we talk about modification operations. Because the clustering key is the same regardless of physical location, only the lowest level of the clustered index need know where the physical data is. Add to this that the data is organized sequentially, and the overhead of modifying indexes is significantly lowered making all of the data modification operations far faster. Of course, this benefit is only true if the clustering key rarely, or never, changes. Therefore, the general suggestion is to make the clustering key a small nonchanging value, such as an identity column (but the advice section is still a few pages away).

Nonclustered Indexes on a Heap

If a table does not have a clustered index, the table is physically referred to as a heap. One definition of a heap is “a group of things placed or thrown one on top of the other.” This is a great way to explain what happens in a table when you have no clustered index: SQL Server simply puts every new row on the end of the last page for the table. Once that page is filled up, it puts a data on the next page or a new page as needed.

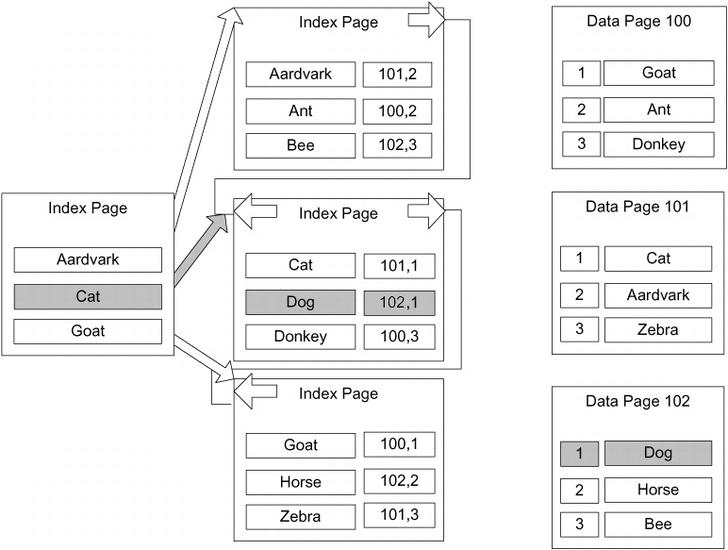

When building a nonclustered index on a heap, the row locator is a pointer to the physical page and row that contains the row. As an example, take the example structure from the previous section with a nonclustered index on the name column of an animal table, represented in Figure 10-12.

Figure 10-12. Nonclustered index on a heap

If you want to find the row where name ='Dog', you first find the path through the index from the top-level page to the leaf page. Once you get to the leaf page, you get a pointer to the page that has a row with the value, in this case Page 102, Row 1. This pointer consists of the page location and the record number on the page to find the row values (the pages are numbered from 0, and the offset is numbered from 1). The most important fact about this pointer is that it points directly to the row on the page that has the values you’re looking for. The pointer for a table with a clustered index (a clustered table) is different, and this distinction is important to understand because it affects how well the different types of indexes perform.

To avoid the types of physical corruption issues that, as I mentioned in the previous section, can occur when you are constantly managing pointers and physical locations, heaps use a very simple method of keeping the row pointers from getting corrupted. Instead of reordering pages, or changing pointers if the page must be split, it moves rows to a different page and a forwarding pointer is left to point to the new page where the data is now. So if the row where name ='Dog' had moved (for example, due to a large varchar(3000) column being updated from a data length of 10 to 3,000), you might end up with following situation to extend the number of steps required to pick up the data. In Figure 10-13, a forwarding pointer is illustrated.

Figure 10-13. Fowarding pointer

All existing indexes that have the old pointer simply go to the old page and follow the forwarding pointer on that page to the new location of the data. If you are using heaps, which should be rare, it is important to be careful with your structures to make sure that data should rarely be moved around within a heap. For example, you have to be careful if you’re often updating data to a larger value in a variable length column that’s used as an index key, it’s possible that a row may be moved to a different page. This adds another step to finding the data, and if the data is moved to a page on a different extent, another read to the database. This forwarding pointer is immediately followed when scanning the table, causing possible horrible performance over time if it’s not managed.

Space is not reused in the heap without rebuilding the table (by selecting into another table, adding a clustered index temporarily, or in 2008, using the ALTER TABLE command with the REBUILD option). In the last section of this chapter on Indexing Dynamic Management View queries, I will provide a query that will give you information on the structure of your index, including the count of forwarding pointers in your table.

The basic syntax for creating an index is as follows:

CREATE [UNIQUE] INDEX [CLUSTERED | NONCLUSTERED] <indexName>

ON <tableName> (<columnList>);

As you can see, you can specify either a clustered or nonclustered index, with nonclustered being the default type. (Recall that the default index created for a primary key constrant is a clustered index.) Each type of index can be unique or nonunique. If you specify that your index must be unique, every row in the indexed column must have a different value—no duplicate entries are accepted, just like one of the uniqueness constraints.

The <columnList> is a comma-delimited list of columns in the table. Each column can be specified either in ascending (ASC) or descending (DESC) order for each column, with ascending being the default. SQL Server can traverse the index in either direction for searches, so the direction is generally only important when you have multiple columns in the index.

For example, the following statement creates an index called XtableA_column1AndColumn2 on column1 and column2 of tableA, with ascending order for column1 and descending order for column2:

CREATE INDEX XtableA_column1AndColumn2 ON tableA (column1, column2 DESC);

Let’s take a look at a more detailed example. First, we need to create a base table. Use whatever database you desire. I used tempdb on my test machine and included the USE statement in the code download.

CREATE SCHEMA produce;

GO

CREATE TABLE produce.vegetable

(

--PK constraint defaults to clustered

vegetableId int NOT NULL CONSTRAINT PKproduce_vegetable PRIMARY KEY,

name varchar(15) NOT NULL

CONSTRAINT AKproduce_vegetable_name UNIQUE,

color varchar(10) NOT NULL,

consistency varchar(10) NOT NULL,

filler char(4000) default (replicate('a', 4000)) NOT NULL

);

![]() Note I included a huge column in the produce table to exacerbate the issues with indexing the table in lieu of having tons of rows to work with. This causes the data to be spread among several data pages, and as such, forces some queries to perform more like a table with a lot more data. I will not return this filler column or acknowledge it much in the chapter, but this is its purpose. Obviously, creating a filler column is not a performance tuning best practice, but it is a common trick to force a table’s data pages to take up more space in a demonstration.

Note I included a huge column in the produce table to exacerbate the issues with indexing the table in lieu of having tons of rows to work with. This causes the data to be spread among several data pages, and as such, forces some queries to perform more like a table with a lot more data. I will not return this filler column or acknowledge it much in the chapter, but this is its purpose. Obviously, creating a filler column is not a performance tuning best practice, but it is a common trick to force a table’s data pages to take up more space in a demonstration.

Now, we create two single-column nonclustered indexes on the color and consistency columns, respectively:

CREATE INDEX Xproduce_vegetable_color ON produce.vegetable(color);

CREATE INDEX Xproduce_vegetable_consistency ON produce.vegetable(consistency);

Then, we create a unique composite index on the vegetableID and color columns. We make this index unique, not to guarantee uniqueness of the values in the columns but because the values in vegetableId must be unique because it’s part of the PRIMARY KEY constraint. Making this unique signals to the optimizer that the values in the index are unique (note that this index is probably not very useful in reality but is created to demonstrate a unique index that isn’t a constraint).

CREATE UNIQUE INDEX Xproduce_vegetable_vegetableId_color

ON produce.vegetable(vegetableId, color);

Finally, we add some test data:

INSERT INTO produce.vegetable(vegetableId, name, color, consistency)

VALUES (1,'carrot','orange','crunchy'), (2,'broccoli','green','leafy'),

(3,'mushroom','brown',’squishy'), (4,'pea','green',’squishy'),

(5,'asparagus','green','crunchy'), (6,’sprouts','green','leafy'),

(7,'lettuce','green','leafy'),( 8,'brussels sprout','green','leafy'),

(9,’spinach','green','leafy'), (10,'pumpkin','orange',’solid'),

(11,'cucumber','green',’solid'), (12,'bell pepper','green',’solid'),

(13,’squash','yellow',’squishy'), (14,'canteloupe','orange',’squishy'),

(15,'onion','white',’solid'), (16,'garlic','white',’solid'),

To see the indexes on the table, we check the following query:

SELECT name, type_desc, is_unique

FROM sys.indexes

WHERE OBJECT_ID('produce.vegetable') = object_id;

This returns the following results:

| name | type_desc | is_unique |

| ========= | ------------- | ------------- |

| PKproduce_vegetable | CLUSTERED | 1 |

| AKproduce_vegetable_name | NONCLUSTERED | 1 |

| Xproduce_vegetable_color | NONCLUSTERED | 0 |

| Xproduce_vegetable_consistency | NONCLUSTERED | 0 |

| Xproduce_vegetable_vegetableId_color | NONCLUSTERED | 1 |

One thing to remind you here is that PRIMARY KEY and UNIQUE constraints were implemented behind the scenes using indexes. The PK constraint is, by default, implemented using a clustered index and the UNIQUE constraint via a nonclustered index. As the primary key is generally chosen to be an optimally small value, it tends to make a nice clustering key.

![]() Note Foreign key constraints aren’t automatically implemented using an index, though indexes on migrated foreign key columns are often useful for performance reasons. I’ll return to this topic later when I discuss the relationship between foreign keys and indexes.

Note Foreign key constraints aren’t automatically implemented using an index, though indexes on migrated foreign key columns are often useful for performance reasons. I’ll return to this topic later when I discuss the relationship between foreign keys and indexes.

The remaining entries in the output show the three nonclustered indexes that we explicitly created and that the last index was implemented as unique since it included the unique key values as one of the columns. Before moving on, briefly note that to drop an index, use the DROP INDEX statement, like this one to drop the Xproduce_vegetable_consistency index we just created:

DROP INDEX Xproduce_vegetable_consistency ON produce.vegetable;

One last thing I want to mention on the basic index creation is a feature that was new to 2008. That feature is the ability to create filtered indexes. By including a WHERE clause in the CREATE INDEX statement, you can restrict the index such that the only values that will be included in the leaf nodes of the index are from rows that meet the where clause. We built a filtered index back in Chapter 8 when I introduced selective uniqueness, and I will mention them again in this chapter to show how to optimize for certain WHERE clauses.

The options I have shown so far are clearly not all of the options for indexes in SQL Server, nor are these the only types of indexes available. For example, there are options to place indexes on different filegroups from tables. Also at your disposal are filestream data (mentioned in Chapter 8), data compression, setting the maximum degree of parallelism to use with an index, locking (page or row locks), rebuilding, and several other features. There are also XML, spatial index types, and as previously mentioned, columnstore indexes. This book focuses specifically on relational databases, so relational indexes are really all that I am covering in any depth.

Basic Index Usage Patterns

In this section, I’ll look at some of the basic usage patterns of the different index types, as well as how to see the use of the index within a query plan:

- Clustered indexes: I’ll discuss the choices you need to make when choosing the columns in which to put the clustered index.

- Nonclustered indexes: After the clustered index is applied, you need to decide where to apply nonclustered indexes.

- Unique indexes: I’ll look at why it’s important to use unique indexes as frequently as possible.

All plans that I present will be obtained using the SET SHOWPLAN_TEXT ON statement. When you’re doing this locally, it can be easier to use the graphical showplan from Management Studio. However, when you need to post the plan or include it in a document, use one of the SET SHOWPLAN_TEXT commands. You can read about this more in SQL Server Books Online. Note that using SET SHOWPLAN_TEXT (or the other versions of SET SHOWPLAN that are available, such as SET SHOWPLAN_XML), do not actually execute the statement/batch; rather, they show the estimated plan. If you need to execute the statement (like to get some dynamic SQL statements to execute to see the plan), you can use SET STATISTICS PROFILE ON to get the plan and some other pertinent information about what has been executed. Each of these session settings will need to be turned OFF explicitly once you have finished, or they will continue executing returning plans where you don’t want so.

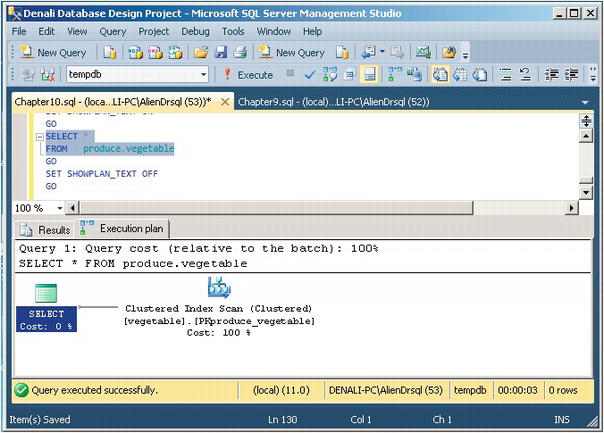

For example, say you execute the following query:

SET SHOWPLAN_TEXT ON;

GO

SELECT *

FROM produce.vegetable;

GO

SET SHOWPLAN_TEXT OFF;

GO

Running these statements echoes the query as a single column result set of StmtText and then returns another with the same column name that displays the plan:

|--Clustered Index Scan(OBJECT:([tempdb].[produce].[vegetable].[PKproduce_vegetable]))

Although this is the best way to communicate the plan in text (for example, to post to the MSDN/TechNet or any other forums to get help on why your query is so slow), it is not the richest or easiest experience. In SSMS, click the Query menu and choose Display Estimated Execution Plan (Ctrl+L); you’ll see the plan in a more interesting way, as shown in Figure 10-14. Or by choosing Include Actual Execution Plan, you can see exactly what SQL Server did (which is analogous to SET STATISTICS PROFILE ON).

Figure 10-14. Plan display in Management Studio

Before breaking down the different index types, we need to start out by introducing a few terms need to be introduced:

- Scan : This refers to an unordered search, where SQL Server scans the leaf pages of the index looking for a value. Generally speaking, all leaf pages would be considered in the process.

- Seek : This refers to an ordered search, in that the index pages are used to go to a certain point in the index and then a scan is done on a range of values. For a unique index, this would always return a single value.

- Lookup : The clustered index is used to look up a value for the nonclustered index.