C H A P T E R 7

Structured Design

Invest in the abstraction, not the implementation. Abstractions can survive the barrage of changes from different implementations and new technologies”

—Andy Hunt and Dave Thomas1

Structured Programming

Structured design has its genesis in Edsger Dijkstra's famous 1968 letter to the Communications of the ACM, “Go To Statement Considered Harmful.” Dijkstra's paper concludes with

The go to statement as it stands is just too primitive; it is too much an invitation to make a mess of one's program. One can regard and appreciate the clauses considered (ed. if-then-else, switch, while-do, and do-while) as bridling its use. I do not claim that the clauses mentioned are exhaustive in the sense that they will satisfy all needs, but whatever clauses are suggested (e.g. abortion clauses) they should satisfy the requirement that a programmer independent coordinate system can be maintained to describe the process in a helpful and manageable way.2

Programming languages created from this point onward, while not eliminating the goto statement (except for Java, which has none), certainly downplayed its use, and courses that taught programming encouraged students to avoid it. Instead, problem-solving was taught in a top-down structured manner, where one begins with the problem statement and attempts to break the problem down into a set of solvable sub-problems. The process continues until each sub-problem is small enough to be either trivial or very easy to solve. This technique is called structured programming. Before the advent and acceptance of object-oriented programming in the mid-80s, this was the standard approach to problem solving and programming. It is still one of the best ways to approach a large class of problems.

__________

1 Hunt, A. and D. Thomas. The Pragmatic Programmer: From Journeyman to Master. (Boston, MA: Addison-Wesley, 2000.)

2 Dijkstra, E. “GoTo Statement Considered Harmful.” Communications of the ACM 11(3): 147-148. (1968)

Stepwise Refinement

Niklaus Wirth formalized the technique in his 1971 paper, “Program Development by Stepwise Refinement.”3 Stepwise refinement contends that designing programs consists of a set of refinement steps. In each step, a given task is broken up into a number of subtasks. Each refinement of a task must be accompanied by a refinement of the data description and the interface. The degree of modularity obtained will determine the ease or difficulty with which a program can be adapted to changes in requirements or environment.

During refinement, you use a notation that is natural to the problem space. Avoid using a programming language for description as long as possible. Each refinement implies a number of design decisions based on a set of design criteria. These criteria include efficiency of time and space, clarity, and regularity of structure (simplicity).

Refinement can proceed in two ways, top-down or bottom-up. Top-down refinement is characterized by moving from a general description of the problem to detailed statements of what individual modules or routines do. The guiding principle behind stepwise refinement is that humans can concentrate on only a few things at a time; Miller's famous 7 +/− 2 chunks of data rule.4 One works by

- analyzing the problem and trying to identify the outlines of a solution and the pros and cons of each possibility;

- then, designing the top levels first;

- steering clear of language-specific details;

- pushing down the details until you get to the lower levels;

- formalizing each level;

- verifying each level; and

- moving to the next lower level to make the next set of refinements. (That is, repeat.)

One continues to refine the solution until it seems as if it would be easier to code than to decompose; we'll see an example of this process later in this chapter.

That is, you work until you become impatient at how obvious and easy the design becomes. The down-side here is that you really have no good metric on “when to stop.” It just takes practice.

If you can't get started at the top, then start at the bottom.

- Ask yourself, “What do I know that the system needs to do?” This usually involves lower level I/O operations, other low-level operations on data structures, and so on.

- Identify as many low-level functions and components as you can from that question.

- Identify common aspects of the low-level components and group them together.

- Continue with the next level up, or go back to the top and try again to work down.

__________

3 Wirth, N. “Program Development by Stepwise Refinement.” CACM 14(4): 221-227. (1971)

4 Miller, G. A. “The magical number seven, plus or minus two: Some limits on our capacity for processing information.” Psychological Review 63: 81-97. (1956)

Bottom-up assessment usually results in early identification of utility routines, which can lead to a more compact design. It also helps promote reuse – because you are reusing the lower level routines. On the downside, bottom-up assessment is hard to use exclusively – you nearly always end up switching to a top down approach at some point, sometimes you find you just can't put a larger piece together from the bottom-up. This isn't really stepwise refinement, but it can help get you started. Most real step-wise refinements involve alternating between top-down and bottom-up design elements. Fortunately, top-down and bottom-up design methodologies can be very complementary.

Example of Stepwise Refinement: The Eight-Queens Problem

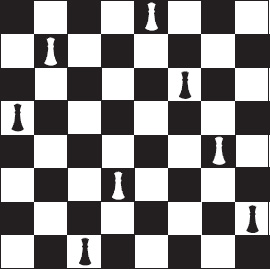

The eight queens problem is familiar to most students. The problem is to find a placement of eight queens on a standard 8 × 8 chess board in such a way that no queen can be attacked by any other. One possible solution to the problem is shown in Figure 7-1.

Figure 7-1. One solution to the eight-queens problem

Remember that queens can move any number of spaces horizontally, vertically, or diagonally. It turns out that no one has yet found an analytical solution to this problem, and it's likely one does not exist. So how would you approach this problem? Go ahead, think about it; I'll wait.

…

…

Done? Okay. Let's see one way to decompose this problem.

Proposed Solution 1

The first thing we need to do is to look at the problem and tease out the requirements and the outline of a solution. This will start us down the road of answering the question of what the top-level decomposition should be.

First you could think of solving the problem using brute force; just try all the possible arrangements of queens and pick the ones that work. With 8 queens and 64 possible squares there are

![]()

possible board configurations, where n is the number of squares on the board and k is the number of queens, which is only 4,426,165,368 (a bit over 4 billion configurations). These days, that's not very many, so brute force might be the way to go.

So if we generate a set A of all the possible board combinations, we can create a test called q(x) that returns a true if the board configuration x is a solution, and returns false if x is not a solution. Then we can create a program that looks like the following:

Generate the set A of all board configurations;

while there are still untested configurations in A do

x = the next configuration from A

if (q(x) == true) then print the solution x and stop

go back to the top and do it again.

Notice that all the work is getting done in two steps – generating the set A and performing the test q(x). The generation of A only happens once, but performing the test q(x) happens once for every configuration in A until you find a solution. While this decomposition will surely work, it's not terribly efficient. So let's just say that we'd rather reduce the number of combinations. Efficiency is a good thing, after all.

Proposed Solution 2

Again, we need to start at the top level. But this time we've done some analysis, so we have a clearer idea of what has to happen. We've eliminated brute-force, but we see that we can think in terms of board configurations. In order to reduce the number of total possible configurations and then come up with a more efficient algorithm, we need to think about the problem. The first thing to notice is that you can never have more than one queen in a column, in fact, you must have exactly one queen per column. That reduces the number of possible combinations to 224 or just 16 million. Although this is good, it doesn't really change the algorithm. Our proposed solution would now look like:

Generate the set B of restricted board configurations;

while there are still untested configurations in B do

x = the next configuration from B

if (q(x) == true) then print the solution x and stop

go back to the top and do it again.

This version requires generating the set, B, of board positions with one queen in each column and still requires visiting up to 16 million possible board positions. Generating B is now more complicated than generating A because we now have to test to see if a proposed board position meets the one queen per column restriction. There must be a better way.

Proposed Solution 3

Well, of course there is. We just need to be more intelligent about generating board configurations and evaluate board positions while we're generating them. Instead of generating a complete board configuration and then testing it, why not generate and test partial solutions? If we can stop as soon as we know we don't have a valid solution, then things should go faster. Also, if we can back up from a bad solution to the last good partial solution, we can eliminate bad configurations more quickly.

Now we're at the point where we can do that top-level design, formalize it, and move down to the next refinement level.

Refinement 1

Here's the idea.

- Put down a queen on the next row in the next available column.

- Test the queen to see if she can attack any other queen. (That's a variation on the q(x) test above.)

- If she can attack, pick her back up, back up to the previous trial solution and try again.

- If she can't attack, leave her alone and go back to the top and try the next queen.

With this method, we're guaranteed that the trial solution at column j is correct. We then attempt to generate a new solution by adding a queen in column j+1. If this fails, we just need to back up to our previous correct trial solution at column j and try again. This technique of creating and testing partial solutions is called a stepwise construction of trial solutions by Wirth. And the backing up technique is, of course, called backtracking. Here's more formal pseudo-code to find a single solution:

do {

while ((row < 8) && (col < 8)) {

if (the current queen is safe) then

advance: keep the queen on the board and advance to the next col.

else

the queen is not safe, so move up to the next row.

}

if (we've exhausted all the rows in this column) then

regress: retreat a column, move its queen up a row, and start again.

} while ((col < 8) && (col >= 0));

if (we've reached column 8) then

we have a solution, print it.

This solution tests only a single queen at a time. One implication of this is that, at any given time, there are only j queens on the board and so only j queens need to be tested each time through the outer loop. (One only needs to test the queens up through column j.) That reduces the amount of work in the testing routine.

This algorithm is our first formal view of the solution. Notice in the method described above that we're using pseudo-code rather than a real programming language. This is because we want to push language details further down the refinement levels. Also, while we've got a general outline of the method, there are a lot of details still to be considered. These details have been pushed down in the hierarchy of control we're creating, and we'll get to them in the next refinement iteration. This is also a function of the stepwise refinement.

Now that we have a description of the algorithm, we can also work to verify it. The final verification will, of course, be watching the program produce a correct solution but we're not at that point yet. However, we can surely take a chess board (or a piece of paper) and walk through this algorithm by hand to verify that we can generate a placement of queens on the board that is a solution to the problem.

At this point we've got a more formal top-level description, we've done what verification we can, and we're ready to expand those fuzzy steps we see above.

Refinement 2

Now that we've got a first cut at the program, we need to examine each of the steps in the program and see what they are made of. The steps we're interested in are

- Check to see if the current queen is safe.

- Keep a safe queen on the board and advance to the next column.

- Advance an unsafe queen up a row.

- Retreat a column and reset the queen in that column.

Checking to see if the current queen is safe means that we need to check that there are no other queens on either of the diagonals (the up or down diagonals) or the row that the current queen is on. The row check is easy, one just checks all the other squares in the same row. To check the up and down diagonals, remember that if the current queen is at column j, that we only need to check columns 1 through j-1. If you think about it for a minute, you'll see that the difference between the row and column indexes of all the squares on the up diagonal (those that go from lower-left to upper-right) are a constant. Similarly, the sum of the row and column indexes of all the squares on the down diagonal (those that go from upper-left to lower-right) are also a constant. This makes it easier to figure out which cells are on the diagonal of a queen and how to check them.

Now we're ready to start considering data structures. Where did this come from, you ask? Well, stepwise refinement is mostly about describing the control flow of the program. But at some point you need to decide on exactly what the data will look like. For each problem you try to solve, this will happen at a different place in your refinement process. For this problem we're at a place where in the next refinement we should be writing more detailed pseudo-code. That is pretty much forcing us to think about data structures now, so we can do the pseudo-code.

In particular now we need to ask ourselves, how are we going to represent the board and the queens on the board? How are we going to represent the empty cells? We need a data structure that will allow us to efficiently represent queens and check whether they can be attacked. A first cut at this might be an 8 × 8 two-dimensional array where we place queens at the appropriate row and column intersections. Because we don't need to do any computation on this matrix – all we need is to indicate the presence or absence of a queen – we can save space by making it a boolean array. This data structure also allows us to quickly check the rows, and the up and down diagonals for queens that can attack the current queen. So we should use a 2D boolean array, right?

Well, not so fast. This isn't the only way to think about the data representation for queens. In fact, if we think about the data structure and the operations we need to perform during the safety check we might be able to simplify things a bit.

First of all, since we know that there can only be one queen in each column and one queen in each row, why not combine that information? Instead of a 2D array, why not just use a 1D boolean array, say

boolean column[8];

where column[i] = true means that the ith column is still free. For the diagonals, we can use the property about the constant difference or sum of up and down diagonals to create two other arrays

boolean up[-7..+7], down[0..14];

that will indicate which diagonal squares are free. With this arrangement, the test for a queen being safe is

(column[i] and up[row-col] and down[row+col])5

All right. This seems simple enough, so we're finally done with this, right?

Well, no. There's yet another way to think about this. Going back to using a 1D array, but this time using an integer array

int board[8];

where each index into the array represents a column (0 through 7 in the eight-queens case), and each value stored in the array represents the row on which a queen is deposited (also 0 through 7 in the eight-queens case). Because we now have data on the exact location (rows and columns) of each queen, we don't need separate arrays for the up and down diagonals. The test for safety is a bit more difficult, but still simple. This might be the time for some more code. At this point it seems appropriate to move from pseudo-code to a real language. You'll have to make that move at some point in the refinement process. Just like deciding when to define your data structures, exactly when to insert language-specific features depends on the problem and what how detailed the refinement is at this point. A Java method to test for safety might look like:

public boolean isSafe (int[ ] board) {

boolean safe = true;

for (int i = 0; i < col; i++) {

if ( ( ( board[i] + i) == (row + col) ) || // down diagonal test

( ( board[i] - i) == (row - col) ) || // up diagonal test

( board[i] == row) ) // row test

safe = false;

}

return safe;

}

Remember that, because we're creating partial solutions by adding one queen to a column at a time, we only need to test the first col columns each time.

__________

5 Dahl, O. J., E. Dijkstra, et al. (1972). Structured Programming. (London, UK: Academic Press, 1972.)

Now that we have the safety procedure out of the way and we've decided on a simple data structure to represent the current board configuration, we can proceed to the remaining procedures in the decomposition. The remaining procedures are

5. Keep a safe queen on the board and advance to the next column;

6. Advance an unsafe queen up a row; and

7. Retreat a column and reset the queen in that column.

These are all simple enough to just write without further decomposition. This is a key point of structured programming – keep doing the decompositions until a procedure becomes obvious, then you can code. These three methods then look like the following when written in code:

/*

* keep a safe queen on the board and advance to the next column

* the queen at (row, col) is safe, so we have a partial solution.

* advance to the next column

*/

public void advance (int[] board) {

board[col] = row; // put the queen at (row, col) on the board

col++; // move to the next column

row = 0; // and start at the beginning of the column

}

For advance an unsafe queen up a row we don't even need a method. The test in the main program for safety moves the queen up a row if the isSafe() method determines that the current (row, col) position is unsafe. The code for this is:

if (isSafe(board))

advance(board);

else

row++;

Finally, we have:

/**

* retreat a column and reset the queen in that column

* we could not find a safe row in current col

* so back up one col and move that queen

* up a row so we can start looking again

*/

public void retreat (int[] board) {

col--;

row = board[col] + 1;

}

The complete Java program is in the Appendix.

Modular Decomposition

In 1972, David Parnas published a paper titled “On the Criteria to Be Used in Decomposing Systems into Modules” that proposed that one could design programs using a technique called modularity.6 Parnas' paper was also one of the first papers to describe a decomposition based on information hiding, one of the key techniques in object-oriented programming. In his paper, Parnas highlighted the differences between a top-down decomposition of a problem based on the flow of control of a problem solution and a decomposition of the problem that used encapsulation and information hiding to isolate data definitions and their operations from each other. His paper is a clear precursor to object-oriented analysis and design (OOA&D), which we'll see in the next chapter.

While Parnas' paper pre-dates the idea, he was really talking about a concept called separation of concerns. “In computer science, separation of concerns is the process of separating a computer program into distinct features that overlap in functionality as little as possible. A concern is any piece of interest or focus in a program. Typically, concerns are synonymous with features or behaviors. Progress towards separation of concerns is traditionally achieved through modularity of programming and encapsulation (or “transparency” of operation), with the help of information hiding.”7 Traditionally, separation of concerns was all about separating functionality of the program. Parnas added the idea of separating the data as well, so that individual modules would control data as well as the operations that acted on the data and the data would be visible only through well-defined interfaces.

There are three characteristics of modularity that are key to creating modular programs:

- Encapsulation

- Loose coupling (how closely do modules relate to each other)

- Information hiding

In a nutshell, encapsulation means to bundle a group of services defined by their data and behaviors together as a module, and keep them together. This group of services should be coherent and clearly belong together. (Like a function, a module should do just one thing.) The module then presents an interface to the user and that interface is ideally the only way to access the services and data in the module. An objective of encapsulating services and data is high cohesion. This means that your module should do one thing and all the functions inside the module should work towards making that one thing happen. The closer you are to this goal, the higher the cohesion in your module. This is a good thing.

The complement of encapsulation is loose coupling. Loose coupling describes how strongly two modules are related to each other. This means we want to minimize the dependence any one module has on another. We separate modules to minimize interactions and make all interactions between modules through the module interface. The goal is to create modules with internal integrity (strong cohesion) and small, few, direct, visible, and flexible connections to other modules (loose coupling). Good coupling between modules is loose enough that one module can easily be called by others.

__________

6 Parnas, D. “On the Criteria to be Used in Decomposing Systems into Modules.” Communications of the ACM 15(12): 1053-1058. (1972)

7 Wikipedia. Separation of Concerns. 2009. http://en.wikipedia.org/wiki/Separation_of_concerns. Retrieved on December 7, 2009.

Coupling falls into four broad categories that go from good to awful:

- Simple data coupling: Where non-structured data is passed via parameter lists. This is the best kind of coupling, because it lets the receiving module structure the data as it sees fit and it allows the receiving module to decide what to do with the data.

- Structured data coupling: Where structured data is passed via parameter lists. This is also a good kind of coupling, because the sending module keeps control of the data formats and the receiving module gets to do what it wants to with the data.

- Control coupling: Where data from module A is passed to module B and the content of the data tells module B what to do. This is not a good form of coupling; A and B are too closely coupled in this case because module A is controlling how functions in module B will execute.

- Global-data coupling: Where the two modules make use of the same global data. This is just awful. It violates a basic tenet of encapsulation by having the modules share data. This invites unwanted side effects and ensures that at any given moment during the execution of the program that neither module A nor module B will know precisely what is in the globally shared data. And what the heck are you doing using global variables anyway? Bad programmer!

Information hiding is often confused with encapsulation, but they are not the same thing. Encapsulation describes a process of wrapping both data and behaviors into a single entity – in our case, a module. Data can be publicly visible from within a module, and thus not hidden. Information hiding, on the other hand, says that the data and behaviors in a module should be controlled and visible only to the operations that act on the data within the module, so it's invisible to other, external, modules. This is an important feature of modules (and later of objects as well) because it leaves control of data in the module that understands best how to manipulate the data and it protects the data from side effects that can arise from other modules reaching in and tweaking the data.

Parnas was not just talking about hiding data in modules. His definition of information hiding was even more concerned with hiding design decisions in the module definition. “We propose … that one begins with a list of difficult design decisions or design decisions which are likely to change. Each module is then designed to hide such a decision from the others.”8 Hiding information in this manner allows clients of a module to use the module successfully without needing to know any of the design decisions that went into constructing the module. It also allows developers to change the implementation of the module without affecting how the client uses the module.

Example: Keyword in Context: Indexes for You and Me

Back in the day, when Unix was young and the world was new, the Unix documentation was divided into eight (8) different sections and the entire manual started with a permuted index. The problem with Unix is not the command line interface, it's not the inverted tree file system structure. No, the problem with Unix is that the three guys who developed it Kernighan, Ritchie, and Thompson, are the three laziest guys on the planet. How do I know? Where's my proof? Well, the proof is in practically every Unix command- ls, cat, cp, mv, mkdir, ps, cc, as, ld, m4 … I could go on. Unix has to have the most cryptic command line set of any operating system on the planet. The cardinal rule for creating Unix command line tools was apparently, “why use three characters when two will do?”

__________

8 Parnas, 1972.

So finding anything in any of the 8 sections of Unix documentation could have been a real trial. Enter the permuted index. Every Unix man page starts with a header line that contains the name of the command, and a short description of what the command does. For example, the cat(1) man page begins

cat -- concatenate and print files

The problem is, what if I don't know the name of a command, but I do know what it does? The permuted index solves this problem by making most of the words in the description (the articles were ignored) of the command part of the index itself. So that cat could be found under “cat” and also “concatenate”, “print” and “files.” This is known as a Keyword in Context (KWIC) index. It works just dandy.

So our problem is to take as input two files, the first of which contains words to ignore, the second of which contains lines of text to index, and create a KWIC index for them. For example, say that we're ignoring the articles for, the, and, et.c, and the second file looks like

The Sun also Rises

For Whom the Bell Tolls

The Old Man and the Sea

Our KWIC index would look like:

the sun ALSO rises

for whom the BELL tolls

the old MAN and the sea

the OLD man and the sea

the sun also RISES

the old man and the SEA

the SUN also rises

for whom the bell TOLLS

for WHOM the bell tolls

Note that each keyword is in all caps, each input line appears once for every index word in the line, and the keywords are sorted alphabetically. Each line of text has its keywords made visible by circularly shifting the words in the line. In the case of a tie (two lines of text have the same index word and so should appear together in the output), the lines of text should appear in the same order in which they appeared in the text input file. So the question we have to answer is is, how do we create the KWIC index? A secondary question we'll need to answer almost immediately is, how do we store the data?

Top-Down Decomposition

We'll start by designing the problem solution using a top-down decomposition. Top-down decompositions, as we've seen with the eight queens problem earlier in this chapter, are all about control flow. We want to figure out how to sequentially solve the problem, making progress with each step we take. It is assumed that the data are stored separately from the routines and each subroutine in the control flow can access the data it needs. The alternative is to pass the data along to each subroutine as we call it; this can be cumbersome and time consuming because the data usually has to be copied each time you pass it to a routine. A first decomposition of this problem might look like:

- Input the words to ignore and the text.

- Create a data structure containing the circularly shifted lines of text, keeping track of which word in the line is the index word for this line.

- Sort the circularly shifted lines of text by the index words.

- Format the output lines.

- Output the text.

Note that these five steps can easily become five subroutines that are all called in sequence from a main program. The data structure used for the input text could be an array of characters for each line, a String for each line, or an array of Strings for the entire input file. One could also use a map data structure that uses each index word as the key and a String containing the input text line as the value of the map element. There are certainly other possible data structures to be used. Sorting can be done by any of the stable sorting algorithms and which algorithm to use would depend on the data structure chosen and on the expected size of the input text. Your sort must be stable because of the requirement that identical index words sort their respective lines in the same order that they appear in the input text file. Depending on the programming language you use and the data structure you choose, sorting might be done automatically for you. The data structure you choose will affect how the circular shifts are done and how the output routine does the work of formatting each output line.

Now that we've got a feel for how a top-down decomposition might proceed, let's move on and consider a modular decomposition.

Modular Decomposition of KWIC

A modular decomposition of the KWIC problem can be based on information hiding in the sense that we will hide both data structures and design decisions. The modules we create will not necessarily be the sequential list we have above, but will be modules that can cooperate with each other and are called when needed. One list of modules for KWIC is:

- A Line module (for lines of input text)

- A Keyword-Line pair module

- A KWICIndex module to create the indexed list itself

- A Circular Shift module

- A module to format and print the output

- A master control module

The Line module will use the Keyword-Line module to create a map data structure where each Line is a keyword and a list of lines that contain that keyword. The KWICIndex module will use the Line module to create the indexed list. The Circular Shift module will use the KWICIndex module (and recursively, the Line and Keyword-Line modules) and create the circularly shifted set of keyword-line pairs. Sorting will be taken care of internally in the KWICIndex module; ideally the index will be created as a sorted list and any additions to the list will maintain the ordering of the index. The format and print module will organize the keyword-lines so that the keywords are printed in all caps and centered on the output line. Finally, the master control module will read the input, create the KWICIndex and cause it to print correctly.

The key of these modules is that one can describe the modules and their interactions without needing the details of how each module is implemented and how the data is stored. That is hidden in the module description itself. Other designs are also possible. For example, it might be better to subsume the circular shift operations inside the Line module, allowing it to store the input lines and their shifts. Regardless, the next step in the design is to create the interface for each module and to coordinate the interfaces so that each module can communicate with every other module regardless of the internal implementation.

We'll continue this discussion in way more detail in the next chapter on object-oriented design.

Conclusion

Structured design describes a set of classic design methodologies. These design ideas work for a large class of problems. The original structured design idea, stepwise refinement, has you decompose the problem from the top-down, focusing on the control flow of the solution. It also relates closely to some of the architectures mentioned in Chapter 5, particularly the main program-subroutine and pipe-and-filter architectures. Modular decomposition is the immediate precursor to the modern object-oriented methodologies, and introduced the ideas of encapsulation and information hiding. These ideas are the fundamentals of your design toolbox.

References

Dahl, O. J., E. Dijkstra, et al. (1972). Structured Programming. (London, UK: Academic Press, 1972.)

Dijkstra, E. “GoTo Statement Considered Harmful.” Communications of the ACM 11(3): 147-148. (1968)

Hunt, A. and D. Thomas. The Pragmatic Programmer: From Journeyman to Master. (Boston, MA: Addison-Wesley, 2000.)

McConnell, S. Code Complete 2. (Redmond, WA: Microsoft Press, 2004.)

Miller, G. A. “The magical number seven, plus or minus two: Some limits on our capacity for processing information.” Psychological Review 63: 81-97. (1956)

Parnas, D. “On the Criteria to be Used in Decomposing Systems into Modules.” Communications of the ACM 15(12): 1053-1058. (1972)

Wikipedia. Separation of Concerns. 2009. http://en.wikipedia.org/wiki/Separation_of_concerns. Retrieved on December 7, 2009.

Wirth, N. “Program Development by Stepwise Refinement.” CACM 14(4): 221-227. (1971)

Appendix: The Complete Non-Recursive Eight-Queens Program

/*

* NQueens.java

* 8-Queens Program

* A non-recursive version for a single solution

* jfd

*/

import java.util.*;

public class NQueens

{

static int totalcount = 0;

static int row = 0;

static int col = 0;

/*

* the queen at (row, col) is safe,

* so we have a partial solution

* advance to the next colum

*/

public void advance (int[] board) {

board[col] = row;

col++;

row = 0;

}

/*

* could not find a safe row in current col

* so back up one col and move that quee

* up a ro

*/

public void retreat (int[] board) {

col--;

row = board[col] + 1;

}

/*

* check to see if queen at (row, col) can be

* attacked

*/

public boolean isSafe (int[] board) {

boolean safe = true;

totalcount++;

/*

* check diagonals and row for attacks

* since we're just checking partial solutions

* only need to go up to current col

*/

for (int i=0; i<col; i++) {

if (( (board[i] + i) == (row + col) ) || // up diagonal

( (board[i] - i) == (row - col) ) || // down diagonal

(board[i] == row) ) {

safe = false;

}

}

return safe;

}

public static void main(String args[]) {

int N = 8; // default board size

System.out.print("Enter the size of the board: ");

Scanner stdin = new Scanner(System.in);

N = stdin.nextInt();

System.out.println();

NQueens queen = new NQueens();

/*

* index into board is a column number

* value stored in board is a row number

* so board[2] = 3; says put a queen on col 2, row 3

*/

int[] board = new int [N]; /*

* simple algorithm to build partial solutions

* for N-queens problem. Place a queen in the

* next available column, test to see if it

* can be attacked. If not, then move to the next

* column. If it can be attacked, move the queen

* up a row and try again.

* If we exhaust all the rows in a column, back up

* reset the previous column and try again.

*/

do {

while ((row < N) && (col < N)) {

if (queen.isSafe(board)) {

queen.advance(board);

} else {

row++;

}

}

if (row == N) {

queen.retreat(board);

}

} while ((col < N) && (col >= 0));

/* If we've placed all N queens, we've got a solution */

if (col == N) {

for (int i = 0; i < N; i++) {

System.out.print(board[i] + " ");

}

} else {

System.out.println("No solution. ");

}

System.out.println();

System.out.println("after trying " + totalcount +

" board positions.");

}

}