Chapter 8

Operating Systems History

Chapter 7 covered the evolution of hardware and software. In this chapter, the focus is on the evolution of operating systems, with particular emphasis on the development of Linux and Unix and, separately, the development of PC-based GUI operating systems. The examination of Linux covers two separate threads: first, the variety of Linux distributions that exist today, and second, the impact that the open source community has had on the development of Linux.

The learning objectives of this chapter are to

- Describe how operating systems have evolved.

- Discuss the impact that Linux has had in the world of computing.

- Compare various Linux distributions.

- Describe the role that the open source community has played.

- Trace the developments of PC operating systems.

In this chapter, we look at the history and development of various operating systems (OSs), concentrating primarily on Windows and Linux. We spotlight these two OSs because they are two of the most popular in the world, and they present two separate philosophies of software. Windows, a product of the company Microsoft, is an OS developed as a commercial platform and therefore is proprietary, with versions released in an effort to entice or force users to upgrade and thus spend more money. Linux, which evolved from the OS Unix, embraces the Open Source movement. It is free, but more than this, the contents of the OS (the source code) are openly available so that developers can enhance or alter the code and produce their own software for the OS, as long as they follow the GNU General Public License (GPL). Before we look at either Windows or Linux, we look at the evolution of earlier OSs.

Before Linux and Windows

As discussed in Chapter 7, early computers had no OSs. The users of these computers were the programmers and the engineers who built the computers. It was expected that their programs would run with few or no resources (perhaps access to punch cards for input, with the output being saved to tape). The operations of reading from punch cards and writing to tape had to be inserted into the programs themselves, and thus were written by the users.

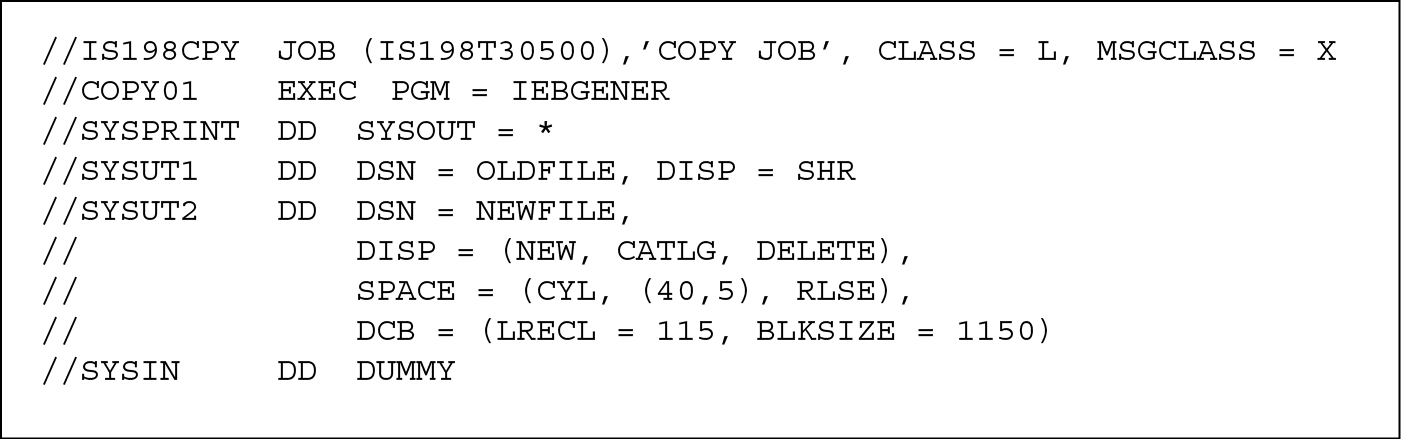

Several changes brought about the need for an OS. The first was the development of language translators. To run a program, a programmer would have to first compile (or assemble) the program from its original language into machine language. The programmer would have to program the computer for several distinct steps. First, a translation program (compiler or assembler) would have to be input from tape or punch card. Second, the source code would have to be input, again from tape or punch card. The translation program would execute, loading the source code, translating the source code, and saving the resulting executable code onto magnetic tape. Then the executable program would be input from tape, with data being input again from card or tape. The program would be executed and the output would be sent to magnetic tape to be printed later. Without an OS, every one of the input and output tasks would have to be written as part of the program. To simplify matters, the resident monitor was created to handle these operations based on a few commands rather than dozens or hundreds of program instructions. See Figure 8.1, which illustrates in Job Control Language (JCL; used on IBM mainframes) the instructions to copy a file from one location to another.

Another change that brought about the need for OSs was the availability of computers. Into the second generation, computers were less expensive, resulting in more computers being sold and more organizations having access to them. With the increase in computers came an increase in users. Users, starting in the second generation, were not necessarily programmers or engineers. Now, a typical employee might use a computer. The OS was a mechanism that allowed people to use a computer without necessarily having to understand how to program a computer.

Additionally, the improvement in computer hardware led to OSs in two ways. First, the improved speed led to programs requiring less time to execute. In the first generation, the computer user was also the engineer and the programmer. Switching from one program and thus one user to another was time consuming. With programs taking less time to execute, the desire to improve this transition led to OSs. Also, the improved reliability of computers (having abandoned short lifespan vacuum tubes) permitted longer programs to execute to completion. With longer programs came a need for handling additional computing resources and therefore a greater demand for an OS.

OSs grew more complex with the development of multiprogramming and time sharing (multitasking). This, in turn, permitted dozens or hundreds or thousands of users to use the computer at the same time. This increase in usage, in turn, required that OSs handle more and more tasks, and so the OSs became even more complex. And as discussed in Chapter 7, windowing OSs in the fourth generation changed how users interact with computers. What follows is a brief description of some of the early OSs and their contributions.

The earliest OS used for “real work” was GM-NAA I/O, written by General Motors and North American Aviation for use on IBM 704 mainframe computers. Its main purpose was to automatically execute a new program once the previously executing program had terminated. It used batch processing whereby input was supplied with the program. It consisted of an expanded version of a resident monitor written in 1955, along with programs that could access the input and output devices connected to the mainframe.

Atlas Supervisor, developed in 1957 for Manchester University, permitted concurrent user access. It is considered to be the first true OS (rather than resident monitor). Concurrent processing would not generally be available until the early 1960s (see CTSS). Additionally, the Atlas was one of the first to offer virtual memory.

BESYS was Bell Operating System, developed by Bell Laboratories in 1957 for IBM 704 mainframes. It could handle input from both punch cards and magnetic tape, and output to either printer or magnetic tape. It was set up to compile and run FORTRAN programs.

IBSYS, from 1960, was released by IBM with IBM 7090 and 7094 mainframes. OS commands were embedded in programs by inserting a $ in front of any OS command to differentiate it from a FORTRAN instruction. This approach would later be used to implement JCL (Figure 8.1) instructions for IBM 360 and IBM 370 mainframe programs.

CTSS, or Compatible Time-Sharing System, released in 1961 by the Massachusetts Institute of Technology’s (MIT) Computation Center, was the first true time-sharing (multitasking) OS. Unlike the Atlas Supervisor, one component of CTSS was in charge of cycling through user processes, offering each a share of CPU time (thus the name time sharing).
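The cycling behavior described above can be illustrated with a minimal round-robin sketch. This is not the CTSS scheduler itself; the function name `round_robin`, the job names, and the time quantum are all hypothetical values chosen for illustration.

```python
from collections import deque

def round_robin(jobs, quantum):
    """Simulate time sharing: each job gets at most `quantum` units per turn.

    `jobs` maps a (hypothetical) user name to the CPU time that user's
    process still needs. Returns the list of (name, time_used) slices
    in the order they were granted.
    """
    queue = deque(jobs.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        used = min(quantum, remaining)
        schedule.append((name, used))
        if remaining > used:
            # Unfinished jobs go to the back of the line for another turn.
            queue.append((name, remaining - used))
    return schedule

slices = round_robin({"alice": 5, "bob": 2, "carol": 4}, quantum=2)
print(slices)
```

Because no job may hold the CPU for longer than one quantum, a short job such as `bob` finishes quickly even though a longer job arrived first, which is the main appeal of time sharing for interactive users.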

EXEC 8, in 1964, was produced for Remington Rand’s UNIVAC mainframes. It is notable because it was the first successful commercial multiprocessing OS (i.e., an OS that runs on multiple processors). It supported multiple forms of process management: batch, time sharing, and real-time processing. The latter means that processes were expected to run immediately without delay and complete within a given time limit, offering real-time interaction with the user.

TOPS-10, also from 1964, was released by Digital Equipment Corporation (DEC) for their series of PDP-10 mainframes. TOPS-10 is another notable time sharing OS because it introduced shared memory. Shared memory would allow multiple programs to communicate with each other through memory. To demonstrate the use of shared memory, a multiplayer Star Trek–based computer game was developed called DECWAR.
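As a rough modern illustration of communicating through shared memory, the sketch below uses Python's standard `multiprocessing.shared_memory` module. It is not how TOPS-10 worked internally; the helper names `write_message` and `read_message` are hypothetical, and for simplicity both the writer and the reader run in the same process, whereas in practice the reader would be a separate program that is given the block's name.

```python
from multiprocessing import shared_memory

def write_message(text: bytes) -> shared_memory.SharedMemory:
    """Create a named shared block and copy `text` into it."""
    block = shared_memory.SharedMemory(create=True, size=len(text))
    block.buf[:len(text)] = text
    return block

def read_message(name: str, length: int) -> bytes:
    """Attach to an existing block by name and copy `length` bytes out."""
    view = shared_memory.SharedMemory(name=name)
    try:
        return bytes(view.buf[:length])
    finally:
        view.close()

message = b"ENGAGE"
block = write_message(message)
try:
    # The reader needs only the block's name, not a reference to `block`.
    received = read_message(block.name, len(message))
finally:
    block.close()
    block.unlink()  # release the block once all users are finished
print(received)
```

The length is passed explicitly because the OS may round the block size up, so the reader cannot rely on the block being exactly as long as the message.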

MULTICS, from 1964, introduced dynamic linking of program code. In software engineering, it is common for programmers to call upon library routines (pieces of code written by other programmers, compiled and stored in a library). By using dynamic linking, those pieces of code are loaded into memory only when needed. MULTICS was the first to offer this. Today, we see a similar approach in Windows with the use of “dll” files. MULTICS was a modularized OS so that it could support many different hardware platforms and was scalable so that it could still be efficient when additional resources were added to the system. Additionally, MULTICS introduced several new concepts including access control lists for file access control, sharing process memory and the file system (i.e., treating running process memory as if it were file storage, as with Unix and Linux using the /proc directory), and a hierarchical file system. Although MULTICS was not a very commercially successful OS, it remained in operation for more than a decade, still in use by some organizations until 1980. It was one of the most influential OSs though because it formed a basis for the later Unix OS.
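The idea behind dynamic linking, loading library code only when it is first needed, can be sketched by analogy in Python using the standard `importlib` module. This is only an analogy and not the MULTICS mechanism; the class name `LazyLibrary` and its `load_count` attribute are hypothetical names introduced for the illustration.

```python
import importlib

class LazyLibrary:
    """Load library modules on first use rather than at program start."""

    def __init__(self):
        self._modules = {}   # modules loaded so far, keyed by name
        self.load_count = 0  # how many load operations were performed

    def call(self, module_name, routine_name, *args):
        module = self._modules.get(module_name)
        if module is None:
            # First request for this library: load it now and remember it.
            module = importlib.import_module(module_name)
            self._modules[module_name] = module
            self.load_count += 1
        return getattr(module, routine_name)(*args)

library = LazyLibrary()
first = library.call("math", "gcd", 12, 18)
second = library.call("math", "gcd", 7, 21)
print(first, second, library.load_count)
```

The second call reuses the module loaded by the first, so only one load is performed. A program that never calls a routine never pays the cost of loading its library.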

OS360 for IBM 360 (and later, OS370 for IBM 370) mainframe computers was released in 1966. It was originally a batch OS, and later added multiprogramming. Additionally, within any single task, the task could be executed through multitasking (in essence, there was multithreading in that a process could be multitasked, but there was no multitasking between processes). OS360 used JCL for input/output (I/O) instructions. OS360 shared a number of innovations introduced in other OSs such as virtual memory and a hierarchical file system, but also introduced its own virtual storage access method (which would later become the basis for database storage), and the ability to spawn child processes. Another element of OS360 was a data communications facility that allowed the OS to communicate with any type of terminal. Because of the popularity of the IBM 360 and 370 mainframes, OS360 became one of the most popular OSs of its time. When modified for the IBM 370, OS360 (renamed System/370) had few modifications itself. Today, OS360 remains a popular experimental OS and is available for free download.

Unics (later, UNIX) was developed by AT&T in 1969 (see the next section for details). Also in 1969, the IBM Airline Control Program (ACP) was separated from the remainder of IBM’s airline automation system that processed airline reservations. Once separated, ACP, later known as TPF (Transaction Processing Facility), was a transaction processing OS for airline database transactions to handle such tasks as credit card processing, and hotel and rental car reservations. Although not innovative in itself, it provides an example of an OS tailored for a task rather than a hardware platform.

In the early 1970s, most OSs merely expanded upon the capabilities introduced during the 1960s. One notable OS, VM used by IBM, and released in 1972, allowed users to create virtual machines.

A History of Unix

The Unix OS dates back to the late 1960s. It is one of the most powerful and portable OSs. Its power comes from a variety of features: file system administration, strong network components, security, custom software installation, and the ability to define your own kernel programs, shells, and scripts to tailor the environment. Part of Unix’s power comes from its flexibility of offering a command line to receive OS inputs. The command line allows the user or system administrator to enter commands with a large variety of options. It is portable because it is written in C, a language that can be compiled for nearly any platform, and has been ported to a number of very different types of computers including supercomputers, mainframes, workstations, PCs, and laptops. In fact, Unix has become so popular and successful that it is now the OS for the Macintosh (although the Mac windowing system sits on top of it).

Unix was created at AT&T Bell Labs. The original use of the OS was on the PDP-7 so that employees could play a game on that machine (called Space Travel). Unix was written in assembly language and was not portable. Early on, it was not a successful OS; in fact, it was not much of a system at all. After the initial implementation, two Bell Laboratories employees, Ken Thompson and Dennis Ritchie, began enhancing Unix by adding facilities to handle files (copy, delete, edit, print) and the command-line interpreter so that a user could enter commands one at a time (rather than through a series of punch cards called a “job”). By 1970, the OS was formally named Unix.

By the early 1970s, Unix was redeveloped to run on the PDP-11, a much more popular machine than the PDP-7. Before it was rewritten, Ritchie first designed and implemented a new programming language, C. He specifically developed the language to be one that could implement an OS, and then he used C to rewrite Unix for the PDP-11. Other employees became involved in the rewriting of Unix and added such features as pipes (these are discussed in Chapter 9). The OS was then distributed to other companies (for a price, not for free).

By 1976, Unix had been ported to a number of computers, and there was an ever-increasing Unix interest group discussing and supporting the OS. In 1976, Thompson took a sabbatical from Bell Labs to teach at University of California–Berkeley, where he and UCB students developed the Berkeley Software Distribution version of Unix, now known as BSD Unix. BSD version 4.2 would become an extremely popular release. During this period, Unix also adopted the TCP/IP protocol so that computers could communicate over a network. TCP/IP was the protocol used by the ARPAnet (what would become the Internet), and Unix was there to facilitate this.

Into the 1980s, Unix’s increasing popularity continued unabated, and it became the OS of choice for many companies purchasing mainframe and minicomputers. Although expensive and complex, it was perhaps the best choice available. In 1985, the Free Software Foundation (FSF) was founded with the express intent that software and OSs be developed freely. The term “free” is not what you might expect. The founder, Richard Stallman, would say “free as in freedom, not beer.” He intended that the Unix user community would invest time into developing a new OS and support software so that anyone could obtain it, use it, modify it, and publish the modifications. The catch: anything developed and released could only be released under the same “free” concept. This required that all code be made available for free as source code. He developed the GPL (GNU* General Public License), which stated that any software produced from GPL software had to also be published under the GPL license and thus be freely available. This caused a divide in the Unix community between those willing to work for free and those who worked for profit.

The FSF still exists and is still producing free software, whereas several companies are producing and selling their own versions of Unix. Control of AT&T’s Unix was passed on to Novell and then later to Santa Cruz Operation, whereas other companies producing Unix include Sun (which produces a version called Solaris), Hewlett Packard (which sells HP-UX), IBM (which sells AIX), and Compaq (which sells Tru64 Unix). Unix is popular today, but perhaps not as popular as Linux.

A History of Linux

Linux looks a lot like Unix but was based on a free “toy” OS called Minix. Minix was available as a sample OS from textbook author Andrew Tanenbaum. University of Helsinki student Linus Torvalds wanted to explore an OS in depth, and although he enjoyed learning from Minix, he ultimately found it unsatisfying, so he set about to write his own. On August 25, 1991, he posted to the comp.os.minix Usenet newsgroup to let people know of his intentions and to see if others might be interested. His initial posting stated that he was building his own version of Minix for Intel 386/486 processors (IBM AT clones). He wanted to pursue this as a hobby, and his initial posting was an attempt to recruit other hobbyists who might be interested in playing with it. His initial implementation contained both a Bash interpreter and the current GNU C compiler, gcc. Bash is the Bourne Again Shell, a variation of the original Bourne shell made available for Unix. Bash is a very popular shell, and you will learn about it in Chapter 9. He also stated that this initial implementation was completely free of any Minix code. However, this early version of an OS lacked device drivers for any disk drive other than AT hard disks.

Torvalds released version .01 in September of 1991 and version .02 in October having received a number of comments from users who had downloaded and tested the fledgling OS. On October 5, to announce the new release, Torvalds posted a follow-up message to the same newsgroup. Here, he mentions that his OS can run a variety of Unix programs including Bash, gcc, make (we cover make in Chapter 13), sed (mentioned in Chapter 10), and compress. He made the source code available for others to not only download and play with, but to work on the code in order to bring about more capabilities.

By December, Torvalds released version .10 even though the OS was still very much in a skeletal form. For instance, it had no login capabilities, booting directly to a Bash shell, and only supported IBM AT hard disks. Version .11 had support for multiple devices, and after version .12, Torvalds felt that the OS was both stable enough and useful enough to warrant the release number .95. Interestingly, at this point, Torvalds heard back from Tanenbaum, who criticized Torvalds’ concept of an OS with a “monolithic” kernel, remarking that “Linux is obsolete.” Tanenbaum would be proved wrong. In releasing his first full-blown version, Linus Torvalds decided to adopt the GNU General Public License. This permitted anyone to obtain a free copy of the OS, make modifications, and publish those modifications for free. However, Torvalds also decided to permit others to market versions of Linux. This decision to have both free versions (with source code available for modification) and commercial versions turned out to be a fortuitous one.

Within a few years, Linux group supporters numbered in the hundreds of thousands. Commercial versions were being developed while the user community continued to contribute to the software to make it more useful and desirable. Graphical user interfaces were added to make Linux more useable to the general computing populace.

Today, both Unix and Linux are extremely popular OS formats. Both are available for a wide range of machines from handheld devices to laptops and PCs to mainframes to supercomputers. In fact, Linux can run on all of the following machines/processors: Sun’s Sparc, Compaq’s Alpha, MIPS processors, ARM processors (found in many handheld devices), Intel’s x86/Pentium, PowerPC processors, Motorola’s 680x0 processors, IBM’s RS6000, and others. One reason why the OSs are so popular is that most servers on the Internet run some version of Unix or Linux.

Here are a few interesting facts about Linux:

- The world’s most powerful supercomputer, IBM’s Sequoia, runs on Linux, and 446 of the top 500 supercomputers run Linux.

- Ninety-five percent of the servers used in Hollywood animation studios run Linux.

- Google runs its web servers in Linux.

- The OSs for Google’s Android and Nokia’s Maemo are built on top of the Linux kernel.

- Notably, 33.8% of all servers run Linux (as of 2009), whereas only 7.3% run a Microsoft server.

- Available application software for Linux includes Mozilla Firefox and OpenOffice, which are free, and Acrobat Reader and Adobe Flash Player, which are proprietary.

- There are more than 300 distributions of Linux deployed today.

- The Debian version of Linux consists of more than 280 million lines of code, which, if developed commercially, would cost more than $7 billion; the original Linux kernel (1.0.0) had 176,250 lines of code.

On the other hand, as of January 2010, slightly more than 1% of all desktops run Linux.

Aside from the popularity of Linux, its cross-platform capabilities and the ability to obtain Linux versions and application software for free, there are several other appealing characteristics of Linux. First, Linux is a stable OS. If you are a Windows user, you have no doubt faced the frustration of seeing application software crash on you with no notice and little reason. There are also times when rebooting Windows is a necessity because the OS has been active for too long (reasons for the need to reboot Windows include corrupted OS pages and fragmented swap space). Linux almost never needs to be rebooted. In fact, Linux only tends to stop if there is a hardware failure or the user shuts the system down. Anything short of a system upgrade does not require a reboot. Furthermore, Linux is far less susceptible to computer viruses. Although most viruses target the Windows OS, if someone were to write a virus to target Linux, it would most likely fail because of Linux’s memory management, which ensures no memory violations (see Chapter 4).† Also, Linux is able to support software more efficiently. As an experiment, Oracle compared the performance of Oracle 9i when run on Windows 2000 versus Linux. The Linux execution was 25–30% more efficient.

In a survey of attendees at LinuxCon 2011, the results showed that the most popular distribution of Linux was Ubuntu (34%), followed by Fedora/Red Hat (28%). Of those surveyed, nearly half used Linux at home, work, and/or school, whereas 38% used Linux at work and 14% at home.

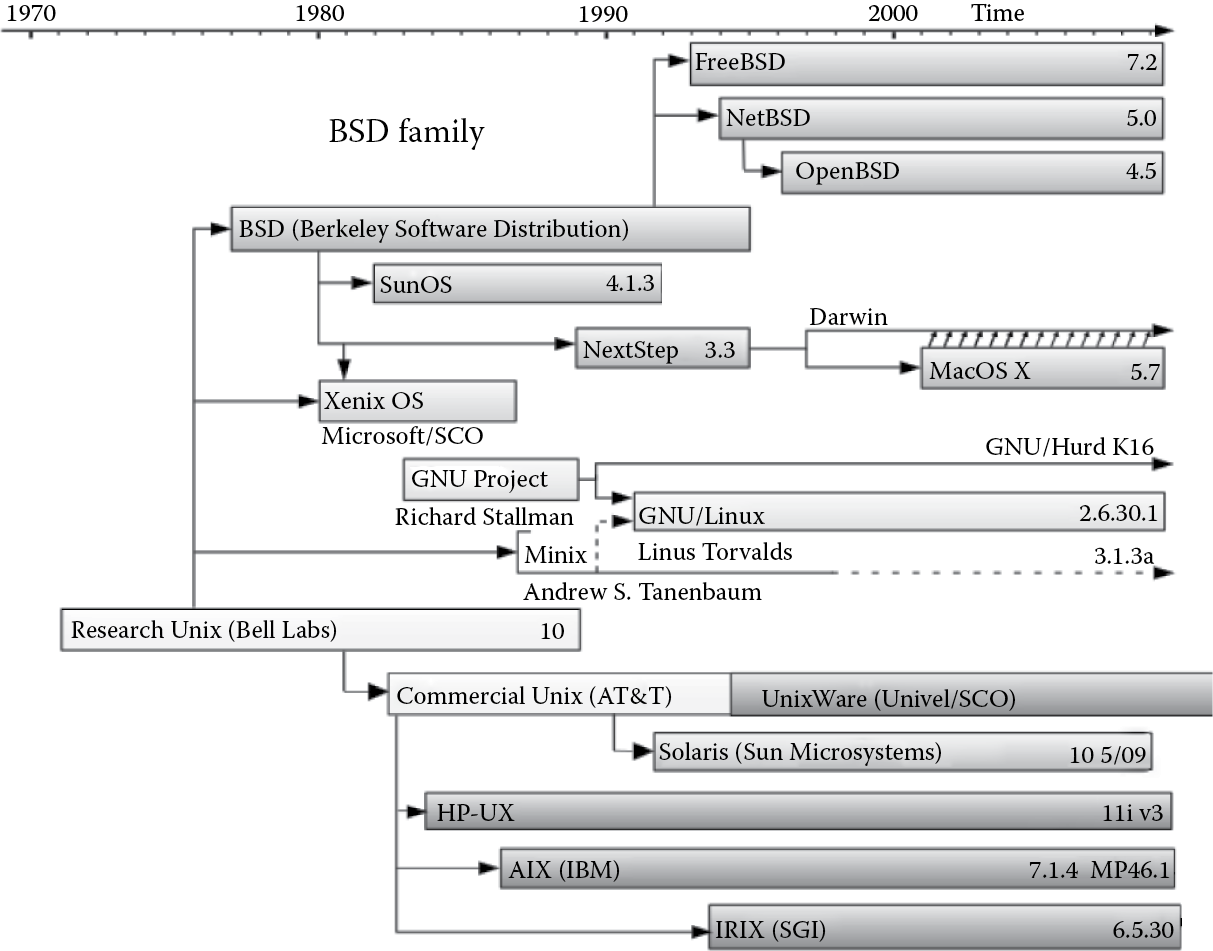

Differences and Distributions

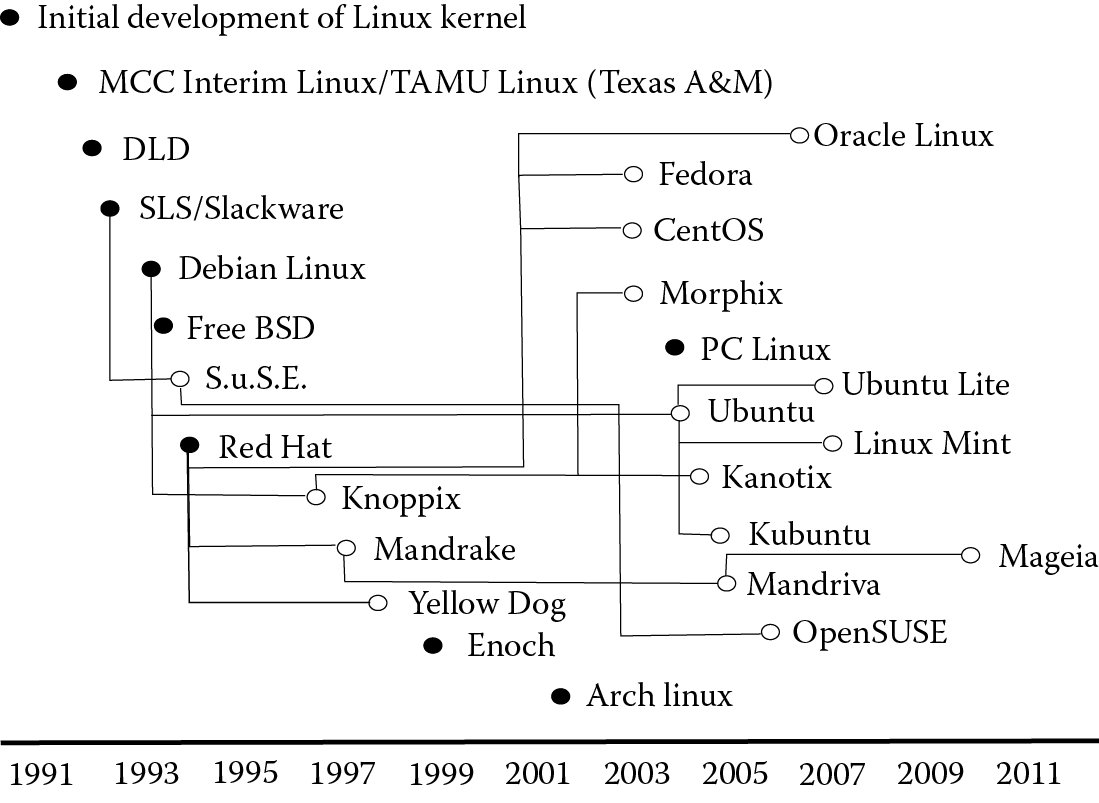

Figure 8.2 provides a timeline for the various releases from the original Unics OS through Unix, MacOS, and Linux releases (up to 2010). See Figure 8.3 for a look at the more significant Linux distribution releases. What are the differences between Unix and Linux? What are the differences between the various distributions of Linux (Debian, Red Hat, etc.)? Why are there so many releases? Why is this so confusing?

Unix/Linux timeline. (Courtesy of http://commons.wikimedia.org/wiki/File:Unix.png , author unknown.)

Let us consider how Linux and Unix differ. If you look at the two OSs at a shallow level, they will look very similar. They have nearly identical commands (e.g., ls, cd, rm, pwd), and most of those commands have overlapping or identical options. They have many of the same shells available, and the most common graphical user interfaces (GUIs) run on both platforms (KDE and Gnome). They have very similar top level directory structures. Additionally, both Linux and Unix are reliable (as compared to say Windows), portable to many different types of hardware, and on the small size (when compared to an OS like Windows). What are the differences then?

Although Linux is open source and many of the software products written for Linux and Unix are open source, commercial versions of Unix are not open source, and most versions of Unix are commercial products. There are standards established for the various Unix releases that help maintain consistency between versions. This does not guarantee that a software product written for one version of Unix will run on another, but it does help. For Linux, the Linux Standard Base project began in 2001 and is an ongoing attempt to help create standards for all Linux releases. The standards describe what should or should not be contained in a distribution. For instance, the standard dictates which version of the GNU C++ compiler and which C++ libraries should be included. In 2011, the Java compiler was removed from the standard. However, Linux programmers do not have to follow the Linux Standard Base. This can lead to applications software that may not run on a given distribution, patches that are required for one release but not another, and command line arguments that work in one distribution but not in another. This can be very frustrating for both programmers and users.

Other differences between Linux and Unix are subtle and perhaps not noticeable to end users. Some differences are seen by system administrators because configuration files will differ (different locations, different names, different formats). A programmer might see deeper differences. For instance, the Linux kernel rarely executes in a threaded form whereas in Unix, kernel threads are common.

What about the Linux releases? As you can see in Figure 8.3, there are many different forms, or distributions, of Linux, and the various “spinoff” distributions have occurred numerous times over the past 20 years. For instance, as shown in the figure, SLS/Slackware Linux was used to develop S.u.S.E Linux, which itself was used to develop OpenSUSE. Debian Linux led to both Knoppix and Ubuntu, whereas Ubuntu led to Kubuntu, Linux Mint, and Ubuntu Lite. Red Hat was the basis for Mandrake, Yellow Dog, CentOS, Fedora, and Oracle Linux. Out of Mandrake came PC Linux and Mandriva.

The main two distributions of Linux are Debian and Red Hat, which along with Slackware (SLS), were the earliest distributions. Although Figure 8.3 shows the major distributions of Linux, there are dozens of other releases that are not shown.

There are far too many differences in the various distributions to list here. In fact, there are websites whose primary reason for existence is to list such differences. Here, we will limit ourselves to just four releases: Red Hat, Debian, Ubuntu, and SUSE.

- Red Hat—Released in 1994 by Bob Young and Marc Ewing, it is the leading version of Linux in terms of development and deployment. It can be found anywhere from embedded devices to Internet servers. Red Hat (the OS) is open source. However, Red Hat Enterprise Linux is only available for purchase, although it comes with support. Red Hat supports open source applications software, and in an interesting development, has acquired proprietary software and made it available as open source as well. Some of the innovations of Red Hat include their software management package, RPM (Red Hat Package Manager), SELinux (Security-Enhanced Linux), and the JBoss middleware software (middleware permits multiprocessing between applications software and the OS). Two spinoffs of Red Hat are Fedora and CentOS, both of which were first released in 2004 and are free and open source. CentOS Linux is currently the most popular Linux distribution for web servers.

- Debian—Released in 1993 by Ian Murdock, Debian was envisioned to be entirely noncommercial, unlike Red Hat for which organizations can purchase and obtain support. Debian boasts more than 20,000 software packages. Debian has been cited as the most stable and least buggy release of any Linux. Debian also runs on more architectures (processors) than any other version of Linux. However, these two advantages may not necessarily be as good as they sound, as to support this the OS developers are more conservative in their releases. Each release is limited regarding new developments and features. In fact, Debian major releases come out at a much slower pace than other versions of Linux (every 1 to 3 years). Debian, like Red Hat, can run in embedded devices. Debian introduced the APT (Advanced Package Tool) for software management. Unlike Red Hat, the Debian OS can be run from removable media (known as a live CD). For instance, one could boot Debian from either CD or USB device rather than having Debian installed on the hard disk. Aside from Ubuntu (see below), there are several other Debian spinoffs, most notably MEPIS, Linux Mint, and Knoppix, all of which can be run from a boot disk or USB.

- Ubuntu—This was released in 2004 by Mark Shuttleworth and based on an unstable version of Debian, but has moved away from Debian so that it is not completely compatible. Unlike Debian, Ubuntu has regular releases (every 6 months) with occasional long-term support releases (every 3 to 5 years). Ubuntu, more than other Linux releases, features a desktop theme and assistance for users who wish to move from Windows to Linux. There are 3-D effects available, and Ubuntu provides excellent support for Wiki-style documentation. Shuttleworth is a multimillionaire and shared Ubuntu with everyone, including installation CDs for free (this practice was discontinued in 2011). As with other Debian releases, Ubuntu can be run from a boot disk or USB device.

- SUSE—Standing for Software und System-Entwicklung (German for software and systems development), it is also abbreviated as SuSE. This version of Linux is based on Slackware, a version of Linux based on a German version of Softlanding Linux Systems (SLS). Since SLS became defunct, SuSE is then an existing descendant of SLS. The first distribution of SuSE came in 1996, and the SuSE company became the largest distributor of Linux in Germany. SuSE entered the U.S. market in 1997 and other countries shortly thereafter. In 2003, Novell acquired SuSE for $210 million. Although SuSE started off independently of the Linux versions listed above, it has since incorporated aspects of Red Hat Linux including Red Hat’s package manager and Red Hat’s file structure. In 2006, Novell signed an agreement with Microsoft so that SuSE would be able to support Microsoft Windows software. This agreement created something of a controversy with the Free Software Foundation (FSF; discussed in the next section).

Still confused? Probably so.

Open Source Movement

The development of Linux is a more recent event in the history of Unix. Earlier, those working in Unix followed two different paths. On one side, people were working in BSD Unix, a version that was developed at UC Berkeley from work funded by the Defense Advanced Research Projects Agency (DARPA). The BSD users, who came from all over the world, but largely were a group of hackers from the West Coast, helped debug, maintain, and improve Unix over time. Around the same time in the late 1970s or early 1980s, Richard Stallman, an artificial intelligence researcher at MIT, developed his own project, known as GNU.‡ Stallman’s GNU project was to develop software for the Unix OS in such a way that the software would be free, not only in terms of cost but also in terms of people’s ability to develop the software further.

Stallman’s vision turned into the free software movement. The idea is that any software developed under this movement would be freely available and people would be free to modify it. Therefore, the software would have to be made available in its source code format (something that was seldom if ever done). Anyone who altered the source code could then contribute the new code back to the project. The Unix users who followed this group set about developing their own Unix-like OS called GNU (or sometimes GNU/Linux). It should be noted that although Stallman’s project started as early as 1983, no stable version of GNU has ever been released.

In 1985, Stallman founded the FSF. The FSF, and the entire notion of a free software movement, combines both a sociological and political view—users should have free access to software to run it, to study it, to change it, and to redistribute copies with or without changes.

It is the free software movement that has led to the Open Source Initiative (OSI), an organization created in 1998 to support open source software. However, the OSI is not the same as the FSF; in fact, there are strong disagreements between the two (mostly brought about by Stallman himself). Under the free software movement, all software should be free. Selling software is viewed as ethically and morally wrong. Software itself is the implementation of ideas, and ideas should be available to all; therefore, the software should be available to all. To some, particularly those who started or are involved in OSI, this view is overly restrictive. If one’s career is to produce software, surely that person has a right to earn money in doing so. The free software movement would instead support hackers who would illegally copy and use copyrighted software.

The open source community balances between the extremes of purely commercial software (e.g., Microsoft) and purely free software (FSF) by saying that software contributed to the open source community should remain open. However, one who uses open source software to develop a commercial product should be free to copyright that software and sell it as long as the contributions made by others are still freely available. This has led to the widespread use of the GNU General Public License (GPL). Although the GPL was written by Stallman for his GNU Project, it is in fact regularly used by the open source community. It states that the given software title is freely available for distribution and modification as long as anything modified retains the GPL. Stallman called the GPL a copyleft instead of a copyright. It is the GPL that has allowed Linux to have the impact it has had. About 50% of the code in Red Hat Linux 7.1 is licensed under the GPL, as are many of the software products written for the Linux environment. In fact, the GPL accounts for nearly 65% of all free software projects.§

Today, the open source community is thriving. Thousands of people dedicate time to work on software and contribute new ideas, new components within the software, and new software products. Without the open source philosophy, it is doubtful that Linux would have attained the degree of success that it has because there would be no global community contributing new code. The open source community does not merely contribute to Linux, but Linux is the largest project and the most visible success to come from the community. It has also led some companies to rethink their commercial approaches. For instance, Microsoft itself has several open source projects.

A History of Windows

The history of Windows in some ways mirrors the history of personal computers because Windows and its predecessor, MS-DOS, have been the most popular OSs for personal computers for decades. Yet, to fully understand the history of Windows, we also have to consider another OS, that of the Macintosh. First though, we look at MS-DOS.

MS-DOS was first released in 1981 to accompany the release of the IBM PC, the first personal computer released by IBM. IBM decided, when designing their first personal computer for the market, to use an open architecture; they used all off-the-shelf components to construct their PC, including the Intel 8088 microprocessor. This, in effect, invited other companies to copy the architecture and release similar computers, which were referred to as IBM PC Clones (or Compatibles). Since these computers all shared the same processor, they could all run the same programs. Therefore, with MS-DOS available for the IBM PC, it would also run on the PC Clones. The popularity of the IBM PC Clones was largely because (1) the computers were cheaper than many competitors since the companies (other than IBM) did not have to invest a great deal in development, and (2) there was more software being produced for this platform because there were more computers of this type in the marketplace. As their popularity increased, so did MS-DOS’s share in the marketplace.

Learning Multiple Operating Systems

As an IT professional, you should learn multiple OSs. However, you do not need to buy multiple computers to accomplish this. Here, we look at three approaches to having multiple systems on your computer.

The first is the easiest. If you have an Apple Macintosh running Mac OS X, you can get to a Unix command line any time you want by opening a terminal window. The Terminal application is available under Utilities. The shell in that window runs on top of the Mac OS X kernel, which combines the Mach kernel with components based, at least in part, on the FreeBSD and NetBSD versions of Unix. This may not be the Unix you would find on a Linux or BSD machine, but it looks very similar in nearly every way.

Another approach, no matter which platform of computer you are using, is to install a virtual machine. The VM can mimic most other types of machines. Therefore, you install a VM in your computer, you boot your computer normally, and then you run the VM software, which boots your VM. Through a virtual machine, you can actually run several different OSs. The drawbacks of this approach are that you will have to install the OSs, which may require that you purchase them from vendors (whereas Linux is generally free, Windows and the Mac OS are not). Additionally, the VM takes a lot of resources to run efficiently, and so you might find your system slows down when running a VM. A multicore processor alleviates this problem.

Finally, if you are using a Windows machine, and you do not want to use a virtual machine, then you are limited to dual booting. A dual boot computer is one that has two (or more) OSs available. Upon booting the computer, the Windows boot loader can be paused and you can transfer control to a Linux boot loader. The two most popular Linux boot loaders are LILO (Linux Loader) and GRUB (Grand Unified Bootloader). Thus, Windows and Linux share your hard disk space and, although the default is to boot to Windows, you can override this to boot to Linux any time. Unlike the Macintosh or the virtual machine approach, however, you will have to shut down one OS to bring up the other.

DOS was a single tasking, text-based (not GUI) OS. The commands were primarily those that operated on the file system: changing directories, creating directories, moving files, copying files, deleting files, and starting/running programs. As a single tasking OS, the OS would run, leaving a prompt on the screen for the user. The user would enter a command. The OS would carry out that command.

If the user command was to start a program, then the program would run until it completed and control would return as a prompt to the user. The only exceptions to this single tasking nature were if the program required some I/O, in which case the program would be interrupted in favor of the OS, or the user did something that caused an interrupt to arise, such as by typing Ctrl+C or Ctrl+Alt+Del. Most software would also be text-based, although many software products used (text-based) menus that could be accessed via the arrow keys and some combination of control keys or function keys.
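The prompt, command, run-to-completion cycle described above can be sketched in a few lines of Python. This is only an illustration of the single tasking idea, not a model of how MS-DOS was implemented; the function name, the prompt string, the error message, and the two toy commands are all assumptions made for the example.

```python
def run_single_tasking_shell(command_lines, programs):
    """Process commands strictly one at a time, as a single tasking OS would."""
    log = []
    for line in command_lines:
        if not line.strip():
            continue                      # nothing typed; show the prompt again
        log.append(f"C:\\> {line}")       # the prompt, followed by the user's command
        name, *args = line.split()
        program = programs.get(name.lower())
        if program is None:
            log.append("Bad command or file name")
            continue
        # The program runs to completion here; nothing else can run
        # until it returns control to the shell.
        log.append(program(*args))
    return log


# Hypothetical stand-ins for two commands (not real DOS programs).
PROGRAMS = {
    "echo": lambda *words: " ".join(words),
    "ver": lambda: "Toy DOS 1.0",
}

session = run_single_tasking_shell(["ver", "echo hello world", "format"], PROGRAMS)
print("\n".join(session))
```

Notice that the loop never starts a second program while the first is still running; that restriction is what the multitasking versions of Windows discussed later set out to remove.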

In the 1970s, researchers at the Xerox Palo Alto Research Center (Xerox PARC) had developed a graphical interface for their OS. They did this mostly to simplify their own research in artificial intelligence, not considering the achievement to be one that would lead to a commercial product. Steve Jobs and Steve Wozniak, the men behind Apple computers, toured Xerox PARC and realized that a windows-based OS could greatly impact the fledgling personal computer market.

Apple began development of a new OS shortly thereafter. Steve Jobs went to work on the Apple Lisa, which was to be the first personal computer with a GUI. However, because of development cost overruns, the project lagged behind and Jobs was removed from the project. He then joined the Apple Macintosh group. Although the Lisa beat the Macintosh to market, it had very poor sales, in part because of an extremely high cost ($10,000 per unit). The Macintosh went on sale 1 year later and caught on immediately. The Apple Macintosh’s OS is considered to be the first commercial GUI-based OS because of the Mac’s success. During the Macintosh development phase, Apple hired Microsoft to help develop applications software. Bill Gates, whose company had been developing MS-DOS, realized the significance of a windows OS and started having Microsoft develop a similar product.

MS-DOS was released in 1981; Microsoft adapted it from 86-DOS, an OS it acquired from Seattle Computer Products. In the early 1980s, Microsoft began working on Windows, originally called Interface Manager. It would reside on top of MS-DOS, meaning that you would first boot your computer to MS-DOS, and then you would run the GUI on top of it. Because of this reliance on MS-DOS, the user could use the GUI or fall back to the DOS prompt whenever desired. Windows 1.0 was released in 1985, 1 year after the release of the Apple Macintosh. The Macintosh had no prompt whatsoever; you had to accomplish everything from the GUI.

Microsoft followed Windows 1.0 with Windows 2.0 in 1987 and Windows 3.0 in 1990. At this point, Windows was developed to be run on the Intel 80386 (or just 386) processor. In 1992, Windows 3.1 was released and, at the time, became the most popular and widely used of all OSs, primarily targeting the Intel 486 processor. All of these OSs were built upon MS-DOS and they were all multiprogramming systems in that the user could force a switch from one process (window) to another. This is a form of cooperative multitasking, in which a running process keeps the processor until it gives up control. None (excluding a version of Windows 2.1 when run with special hardware and software) would perform true competitive multitasking (also called preemptive multitasking), in which the OS itself interrupts a running process to give another process a turn.
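To make the idea of cooperative multitasking concrete, here is a minimal sketch in Python. It is not how Windows 3.x was implemented; it simply uses Python generators as stand-ins for processes, with each `yield` marking the point where a task voluntarily hands control back. The names `task` and `run_cooperatively`, and the two example tasks, are hypothetical.

```python
from collections import deque


def task(name, steps, log):
    """A toy process that does one unit of work, then yields control."""
    for i in range(1, steps + 1):
        log.append(f"{name} step {i}")
        yield  # voluntarily hand control back to the scheduler


def run_cooperatively(tasks):
    """Round-robin scheduler: each task runs until it chooses to yield."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task up to its next yield
        except StopIteration:
            continue               # the task has finished; drop it
        ready.append(current)      # otherwise it rejoins the back of the queue


log = []
run_cooperatively([task("editor", 2, log), task("spreadsheet", 3, log)])
print(log)
```

The scheduler here has no way to take the processor away from a task. If one task looped forever without reaching a `yield`, every other task would wait indefinitely, which is the weakness that competitive multitasking addresses by letting the OS interrupt a running process.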

Around the same time, Microsoft also released Windows NT, a 32-bit, networked OS. Aside from being faster because of its 32-bit nature, Windows NT was designed for client–server networks. It was also the first version of Windows to support competitive multitasking. Windows NT went on to make a significant impact for organizations.

The next development in Windows history came with the release of Windows 95. Windows 95 was the first with built-in Internet capabilities, combining some of the features of Windows 3.1 with Windows NT. As with Windows NT, Windows 95 also had competitive multitasking. Another innovation in Windows 95 was plug and play capabilities. Plug and play means that the user can easily install new hardware on the fly. This means that the computer can recognize devices as they are added. Windows 95 was developed for the Intel Pentium processor.

In quick succession, several new versions of Windows were released in 1998 (Windows 98), 2000 [Windows 2000 and Windows Millennium Edition (Windows ME)], 2001 (Windows XP, a version based on NT), and 2003 (Windows Server). In addition, in 1996, Microsoft released Windows CE, a version of Windows for mobile phones and other “scaled down” devices (e.g., navigation systems). In 2007, Microsoft released their next-generation windowing system, Vista. Although Vista contained a number of new security features, it was plagued with problems and poor performance. Finally, in 2009, Windows 7 was released.

In an interesting twist of fate, because of Microsoft’s large portion of the marketplace, Apple struggled to stay in business. In 1997, Microsoft made a $150 million investment in Apple. The result has had a major impact on Apple Computers. First, starting in 2005, Apple moved the Macintosh from the RISC-based PowerPC processor to Intel processors. Second, the change permitted Macintosh computers to run most of the software that could run in Windows. Macintosh retained their windows-based OS, known as Mac OS, now in version X. Unlike Windows, which was originally based on MS-DOS, Mac OS X is built on top of the Unix OS. If one desires, one can open up a Unix shell and work with a command line prompt. Windows still provides a DOS-style command prompt, so one can likewise work from a command line there.

Further Reading

Aside from texts describing the hardware evolution as covered in Chapter 7, here are additional books that spotlight either the rise of personal computer-based OSs (e.g., Fire in the Valley) or the open source movement in Linux/Unix. Of particular note are both Fire in the Valley, which provides a dramatic telling of the rise of Microsoft, and The Cathedral and the Bazaar, which differentiates the open source movement from the Free Software Foundation.

- Freiberger, P. and Swaine, M. Fire in the Valley: The Making of the Personal Computer. New York: McGraw Hill, 2000.

- Gancarz, M. Linux and the Unix Philosophy. Florida: Digital Press, 2003.

- Gay, J., Stallman, R., and Lessig, L. Free Software, Free Society: Selected Essays of Richard M. Stallman. Washington: CreateSpace, 2009.

- Lewis, T. Microsoft Rising: . . . and Other Tales of Silicon Valley. New Jersey: Wiley and Sons, 2000.

- Moody, G. Rebel Code: Linux and the Open Source Revolution. New York: Basic Books, 2002.

- Raymond, E. The Cathedral and the Bazaar: Musings on Linux and Open Source by an Accidental Revolutionary. Cambridge, MA: O’Reilly, 2001.

- St. Laurent, A. Understanding Open Source and Free Software Licensing. Cambridge, MA: O’Reilly, 2004.

- Watson, J. A History of Computer Operating Systems: Unix, Dos, Lisa, Macintosh, Windows, Linux. Ann Arbor, MI: Nimble Books, 2008.

- Williams, S. Free as in Freedom: Richard Stallman’s Crusade for Free Software. Washington: CreateSpace, 2009.

The website http://distrowatch.com/ provides a wealth of information on various Linux distributions. Aside from having information on just about every Linux release, you can read about how distributions differ from an implementation point of view. If you find Figure 8.3 difficult to read, see http://futurist.se/gldt/ for a complete and complex timeline of Linux releases.

Review Terms

Terminology introduced in this chapter:

- Debian Linux

- Free software movement

- GNU GPL

- GNU project

- Linux

- Live CD

- Macintosh OS

- Minix

- MS-DOS

- Open architecture

- Open source

- Open source initiative

- Red Hat Linux

- SuSE Linux

- Ubuntu Linux

- Unix

- Windows

Review Questions

- What is the difference between a resident monitor and an operating system?

- What is the relationship between Windows and MS-DOS?

- In which order were these operating systems released: Macintosh OS, Windows, MS-DOS, Windows 7, Windows 95, Windows NT, Unix, Linux?

- What is the difference between the free software movement and the open source initiative?

- What does the GNU General Public License state?

Discussion Questions

- How can the understanding of the rather dramatic history of operating systems impact your career in IT?

- Provide a list of debate points that suggest that proprietary/commercial software is the best approach in our society.

- Provide a list of debate points that suggest that open source software is the best approach in our society.

- Provide a list of debate points that suggest that free software, using Stallman’s movement, is the best approach in our society.

- How important is it for an IT person to have experience in more than one operating system? Which operating system(s) would it make most sense to understand?

* GNU stands for GNU Not Unix. This is a recursive definition that really does not mean anything. What Stallman was trying to convey was that the operating system was not Unix, but a Unix-like operating system that would be composed solely of free software.

† Linux does not claim to be virus-free, but years of research have led to an operating system able to defeat many of the mechanisms used by viruses to propagate themselves.

‡ GNU stands for GNU Not Unix, thus a recursive definition, which no doubt appeals to Stallman as recursion is a common tool for AI programming.

§ The GPL discussed here is actually the second and third versions, GPLv2 and GPLv3, released in 1991 and 2007, respectively. GPLv1 did not necessarily support the ideology behind FSF, because it allowed people to distribute software in binary form and thus restrict some from being able to modify it.