Chapter 2. Drawing

The views illustrated in Chapter 1 were mostly colored rectangles; they had a backgroundColor and no more. But that, of course, is not what a real iOS program looks like. Everything the user sees is a UIView, and what the user sees is a lot more than a bunch of colored rectangles. That’s because the views that the user sees have content. They contain drawing.

Many UIView subclasses, such as a UIButton or a UILabel, know how to draw themselves. Sooner or later, you’re also going to want to do some drawing of your own. You can prepare your drawing as an image file beforehand. You can draw an image as your app runs, in code. You can display an image in a UIView subclass that knows how to show an image, such as a UIImageView or a UIButton. A pure UIView is all about drawing, and it leaves that drawing largely up to you; your code determines what the view draws, and hence what it looks like in your interface.

This chapter discusses the mechanics of drawing. Don’t be afraid to write drawing code of your own! It isn’t difficult, and it’s often the best way to make your app look the way you want it to. (For how to draw text, see Chapter 10.)

Images and Image Views

The basic general UIKit image class is UIImage. UIImage can read a file from disk, so if an image does not need to be created dynamically, but has already been created before your app runs, then drawing may be as simple as providing an image file as a resource in your app’s bundle. The system knows how to work with many standard image file types, such as TIFF, JPEG, GIF, and PNG; when an image file is to be included in your app bundle, iOS has a special affinity for PNG files, and you should prefer them whenever possible. You can also obtain image data in some other way, such as by downloading it, and transform this into a UIImage.

(The converse operation, saving image data to disk as an image file, is discussed in Chapter 22.)

Image Files

A pre-existing image file in your app’s bundle can be obtained through the UIImage initializer init(named:). This method looks in two places for the image:

- Asset catalog: We look in the asset catalog for an image set with the supplied name. The name is case-sensitive.

- Top level of app bundle: We look at the top level of the app’s bundle for an image file with the supplied name. The name is case-sensitive and should include the file extension; if it doesn’t include a file extension, .png is assumed.

When calling init(named:), an asset catalog is searched before the top level of the app’s bundle. If there are multiple asset catalogs, they are all searched, but the search order is indeterminate and cannot be specified, so avoid image sets with the same name.

A nice thing about init(named:) is that the image data may be cached in memory, and if you ask for the same image by calling init(named:) again later, the cached data may be supplied immediately. Alternatively, you can read an image file from anywhere in your app’s bundle directly and without caching, using init(contentsOfFile:), which expects a pathname string; you can get a reference to your app’s bundle with Bundle.main, and Bundle then provides instance methods for getting the pathname of a file within the bundle, such as path(forResource:ofType:).

Methods that specify a resource in the app bundle, such as init(named:) and path(forResource:ofType:), respond to special suffixes in the name of an actual resource file:

- High-resolution variants: On a device with a double-resolution screen, when an image is obtained by name from the app bundle, a file with the same name extended by @2x, if there is one, will be used automatically, with the resulting UIImage marked as double-resolution by assigning it a scale property value of 2.0. Similarly, if there is a file with the same name extended by @3x, it will be used on a triple-resolution screen, such as that of the iPhone 6 Plus, with a scale property value of 3.0. In this way, your app can contain multiple versions of an image file at different resolutions. Thanks to the scale property, a high-resolution version of an image is drawn at the same size as the single-resolution image. Thus, on a high-resolution screen, your code continues to work without change, but your images look sharper.

- Device type variants: A file with the same name extended by ~ipad will automatically be used if the app is running natively on an iPad. You can use this in a universal app to supply different images automatically depending on whether the app runs on an iPhone or iPod touch, on the one hand, or on an iPad, on the other. (This is true not just for images but for any resource obtained by name from the bundle. See Apple’s Resource Programming Guide.)
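The suffix convention can be modeled as a list of candidate file names. The sketch below is a hypothetical helper (candidateFileNames is not a UIKit API), assuming that at each scale the device-specific file is preferred and that a plain 1x file is the final fallback; this simplified model does not try any other scales. The combined form, with the scale modifier before the device modifier (Mars@2x~ipad.png), follows Apple’s naming convention.

```swift
import Foundation

// Hypothetical helper: file names a by-name bundle lookup might consider,
// most specific first. It models the naming convention only; it is not
// UIKit's actual search code.
func candidateFileNames(base: String, ext: String = "png",
                        scale: Int, isPad: Bool) -> [String] {
    var names: [String] = []
    // Try the device's own scale suffix first, then no suffix (1x).
    let scaleSuffixes = scale > 1 ? ["@\(scale)x", ""] : [""]
    for suffix in scaleSuffixes {
        // On an iPad, the ~ipad variant beats the generic one at each scale.
        if isPad { names.append("\(base)\(suffix)~ipad.\(ext)") }
        names.append("\(base)\(suffix).\(ext)")
    }
    return names
}
```

For example, on a triple-resolution iPad-style device this model yields Mars@3x~ipad.png, Mars@3x.png, Mars~ipad.png, and Mars.png, in that order.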

One of the great benefits of an asset catalog, though, is that you can forget all about those name suffix conventions. An asset catalog knows when to use an alternate image within an image set, not from its name, but from its place in the catalog. Put the single-, double-, and triple-resolution alternatives into the slots marked “1x,” “2x,” and “3x” respectively. For a distinct iPad version of an image, check iPhone and iPad in the Attributes inspector for the image set; separate slots for those device types will appear in the asset catalog.

An asset catalog can also distinguish between versions of an image intended for different size class situations. (See the discussion of size classes and trait collections in Chapter 1.) In the Attributes inspector for your image set, use the Width Class and Height Class pop-up menus to specify which size class possibilities you want to distinguish. Thus, for example, if we’re on an iPhone with the app rotated to landscape orientation, and if there’s both an Any Height and a Compact Height alternative in the image set, the Compact Height version is used. These features are live as the app runs; if the app rotates from landscape to portrait, and there’s both an Any Height and a Compact Height alternative in the image set, the Compact Height version is replaced with the Any Height version in your interface, there and then, automatically.
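The selection rule just described (use an exact size class match if one exists, otherwise the Any slot) can be sketched as a toy model. ImageSetModel and SizeClass are hypothetical stand-ins, with images represented by their names; the real mechanism is UIImageAsset, described next.

```swift
// Hypothetical vertical size class values; .unspecified plays the role of
// the asset catalog's "Any" slot.
enum SizeClass { case unspecified, compact, regular }

// A toy image set: at most one image name per vertical size class.
struct ImageSetModel {
    var variants: [SizeClass: String] = [:]

    mutating func register(_ name: String, for sizeClass: SizeClass) {
        variants[sizeClass] = name
    }

    // Prefer an exact match for the current size class; otherwise fall back to "Any".
    func image(for sizeClass: SizeClass) -> String? {
        return variants[sizeClass] ?? variants[.unspecified]
    }
}
```

With an Any Height and a Compact Height image registered, a compact vertical size class gets the compact image, and rotating back to regular falls through to the Any image.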

How does an asset catalog perform this magic? When an image is obtained from an asset catalog through init(named:), its imageAsset property is a UIImageAsset that effectively points back into the asset catalog at the image set that it came from. Each image in the image set has a trait collection associated with it (its traitCollection). By calling image(with:) and supplying a trait collection, you can ask an image’s imageAsset for the image from the same image set appropriate to that trait collection. A built-in interface object that displays an image is automatically trait collection–aware; it receives the traitCollectionDidChange(_:) message and responds accordingly.

To demonstrate how this works under the hood, we can build a custom UIView with an image property that behaves the same way:

    class MyView: UIView {
        var image : UIImage!
        override func traitCollectionDidChange(_ previous: UITraitCollection?) {
            super.traitCollectionDidChange(previous)
            self.setNeedsDisplay() // causes draw(_:) to be called
        }
        override func draw(_ rect: CGRect) {
            if var im = self.image {
                // ask the image set for the variant matching our current traits
                if let asset = im.imageAsset {
                    im = asset.image(with:self.traitCollection)
                }
                im.draw(at:.zero)
            }
        }
    }

It is also possible to associate images as trait-based alternatives for one another without using an asset catalog. You might do this, for example, because you have constructed the images themselves in code, or obtained them over the network while the app is running. The technique is to instantiate a UIImageAsset and then associate each image with a different trait collection by registering it with this same UIImageAsset. For example:

    let tcreg = UITraitCollection(verticalSizeClass: .regular)
    let tccom = UITraitCollection(verticalSizeClass: .compact)
    let moods = UIImageAsset()
    let frowney = UIImage(named:"frowney")!
    let smiley = UIImage(named:"smiley")!
    moods.register(frowney, with: tcreg)
    moods.register(smiley, with: tccom)

The amazing thing is that if we now display either frowney or smiley in a UIImageView, we automatically see the image associated with the environment’s current vertical size class, and it automatically switches to the other image when the app changes orientation. Moreover, this works even though I didn’t keep any persistent reference to frowney, smiley, or the UIImageAsset! (The reason is that the images are cached by the system and they maintain a strong reference to the UIImageAsset with which they are registered.)

Tip

In Xcode 8, an image set in an asset catalog can make numerous further distinctions based on a device’s processor type, wide color capabilities, and more. Moreover, these distinctions are used not only by the runtime when the app runs, but also by the App Store when thinning your app for a specific target device. You should thus prefer asset catalogs to keeping your images at the top level of the app bundle.

Image Views

Many built-in Cocoa interface objects will accept a UIImage as part of how they draw themselves; for example, a UIButton can display an image, and a UINavigationBar or a UITabBar can have a background image. I’ll discuss those in Chapter 12. But when you simply want an image to appear in your interface, you’ll probably hand it to an image view — a UIImageView — which has the most knowledge and flexibility with regard to displaying images and is intended for this purpose.

The nib editor supplies some shortcuts in this regard: the Attributes inspector of an interface object that can have an image will have a pop-up menu listing known images in your project, and such images are also listed in the Media library (Command-Option-Control-4). Media library images can often be dragged onto an interface object such as a button in the canvas to assign them; and if you drag a Media library image into a plain view, the image is transformed into a UIImageView displaying that image.

A UIImageView can actually have two images, one assigned to its image property and the other assigned to its highlightedImage property; the value of the UIImageView’s isHighlighted property dictates which of the two is displayed at any given moment. A UIImageView does not automatically highlight itself merely because the user taps it, the way a button does. However, there are certain situations where a UIImageView will respond to the highlighting of its surroundings; for example, within a table view cell, a UIImageView will show its highlighted image when the cell is highlighted (Chapter 8).

A UIImageView is a UIView, so it can have a background color in addition to its image, it can have an alpha (transparency) value, and so forth (see Chapter 1). An image may have areas that are transparent, and a UIImageView will respect this; thus an image of any shape can appear. A UIImageView without a background color is invisible except for its image, so the image simply appears in the interface, without the user being aware that it resides in a rectangular host. A UIImageView without an image and without a background color is invisible, so you could start with an empty UIImageView in the place where you will later need an image and subsequently assign the image in code. You can assign a new image to substitute one image for another, or set the image view’s image property to nil to remove it.

How a UIImageView draws its image depends upon the setting of its contentMode property (UIViewContentMode). (This property is inherited from UIView; I’ll discuss its more general purpose later in this chapter.) For example, .scaleToFill means the image’s width and height are set to the width and height of the view, thus filling the view completely even if this alters the image’s aspect ratio; .center means the image is drawn centered in the view without altering its size. The best way to get a feel for the meanings of the various contentMode settings is to assign a UIImageView a small image in the nib editor and then, in the Attributes inspector, change the Mode pop-up menu, and see where and how the image draws itself.
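The geometry behind a few contentMode settings is easy to compute by hand. This sketch models four of them with a hypothetical FitMode enum (not UIViewContentMode itself) and returns the rect in which the image would be drawn, centered in the view’s bounds; it assumes only Foundation’s CGRect and CGSize and is not UIKit’s implementation.

```swift
import Foundation

// Hypothetical mirror of four UIViewContentMode cases.
enum FitMode { case scaleToFill, scaleAspectFit, scaleAspectFill, center }

// Where an image of `imageSize` would be drawn inside `bounds`.
func drawingRect(imageSize: CGSize, in bounds: CGRect, mode: FitMode) -> CGRect {
    switch mode {
    case .scaleToFill:
        // Width and height both match the view; the aspect ratio may change.
        return bounds
    case .scaleAspectFit, .scaleAspectFill:
        let sx = bounds.width / imageSize.width
        let sy = bounds.height / imageSize.height
        // Fit uses the smaller factor (whole image visible);
        // fill uses the larger one (view completely covered).
        let s = mode == .scaleAspectFit ? min(sx, sy) : max(sx, sy)
        let w = imageSize.width * s, h = imageSize.height * s
        return CGRect(x: bounds.midX - w / 2, y: bounds.midY - h / 2, width: w, height: h)
    case .center:
        // Unscaled, centered.
        return CGRect(x: bounds.midX - imageSize.width / 2,
                      y: bounds.midY - imageSize.height / 2,
                      width: imageSize.width, height: imageSize.height)
    }
}
```

Notice that for a 100-by-50 image in a 200-by-200 view, the .scaleAspectFill rect is (-100, 0, 400, 200): it extends beyond the view, and whether that overflow is visible is exactly what clipsToBounds decides.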

You should also pay attention to a UIImageView’s clipsToBounds property; if it is false, its image, even if it is larger than the image view and even if it is not scaled down by the contentMode, may be displayed in its entirety, extending beyond the image view itself.

Warning

By default, the clipsToBounds of a UIImageView created in the nib editor is false. This is very unlikely to be what you want!

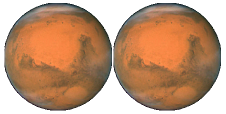

When creating a UIImageView in code, you can take advantage of a convenience initializer, init(image:). The default contentMode is .scaleToFill, but the image is not initially scaled; rather, the view itself is sized to match the image. You will still probably need to position the UIImageView correctly in its superview. In this example, I’ll put a picture of the planet Mars in the center of the app’s interface (Figure 2-1; for the CGRect center property, see Appendix B):

    let iv = UIImageView(image:UIImage(named:"Mars"))
    self.view.addSubview(iv)
    iv.center = iv.superview!.bounds.center
    iv.frame = iv.frame.integral

Figure 2-1. Mars appears in my interface
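That example, and several that follow, rely on convenience extensions that the text attributes to Appendix B: an unlabeled CGRect initializer, an unlabeled CGSize initializer, and a CGRect center property. Their exact definitions aren’t shown in this chapter; here is a minimal sketch of what such extensions might look like, assuming nothing beyond Foundation’s geometry types.

```swift
import Foundation

// Hypothetical convenience extensions in the spirit of those the text
// attributes to Appendix B; the book's own definitions may differ.
extension CGRect {
    // Unlabeled initializer, so that CGRect(0,0,100,100) compiles.
    init(_ x: CGFloat, _ y: CGFloat, _ w: CGFloat, _ h: CGFloat) {
        self.init(x: x, y: y, width: w, height: h)
    }
    // The rect's midpoint, handy for centering a view in its superview's bounds.
    var center: CGPoint {
        return CGPoint(x: self.midX, y: self.midY)
    }
}

extension CGSize {
    // Unlabeled initializer, so that CGSize(100,100) compiles.
    init(_ width: CGFloat, _ height: CGFloat) {
        self.init(width: width, height: height)
    }
}
```

With these in place, iv.superview!.bounds.center is the midpoint of the superview’s bounds, and the final line’s integral expands the frame to the smallest rect with whole-number coordinates that contains it.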

What happens to the size of an existing UIImageView when you assign an image to it depends on whether the image view is using autolayout. If it isn’t, the image view’s size doesn’t change. But under autolayout, the size of the new image becomes the image view’s new intrinsicContentSize, so the image view will adopt the image’s size unless other constraints prevent it.

An image view automatically acquires its alignmentRectInsets from its image’s alignmentRectInsets. Thus, if you’re going to be aligning the image view to some other object using autolayout, you can attach appropriate alignmentRectInsets to the image that the image view will display, and the image view will do the right thing. To do so, derive a new image by calling the original image’s withAlignmentRectInsets(_:) method.

Warning

In theory, you should be able to set an image’s alignmentRectInsets in an asset catalog (using the image’s Alignment fields). As of this writing, however, this feature is not working correctly.

Resizable Images

Certain places in the interface require a resizable image; for example, a custom image that serves as the track of a slider or progress view (Chapter 12) must be resizable, so that it can fill a space of any length. And there can frequently be other situations where you want to fill a background by tiling or stretching an existing image.

To make a resizable image, start with a normal image and call its resizableImage(withCapInsets:resizingMode:) method. The capInsets: argument is a UIEdgeInsets, whose components represent distances inward from the edges of the image. In a context larger than the image, a resizable image can behave in one of two ways, depending on the resizingMode: value (UIImageResizingMode):

- .tile: The interior rectangle of the inset area is tiled (repeated) in the interior; each edge is formed by tiling the corresponding edge rectangle outside the inset area. The four corner rectangles outside the inset area are drawn unchanged.

- .stretch: The interior rectangle of the inset area is stretched once to fill the interior; each edge is formed by stretching the corresponding edge rectangle outside the inset area once. The four corner rectangles outside the inset area are drawn unchanged.
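To make the cap-inset geometry concrete, here is a sketch that splits an image’s size into the nine regions both modes work with: four corners (drawn unchanged), four edges, and the interior. Insets is a hypothetical stand-in for UIEdgeInsets, with the same top, left, bottom, right order, so that the code runs with Foundation alone; how each region is then tiled or stretched is left to UIKit.

```swift
import Foundation

// Hypothetical stand-in for UIEdgeInsets (distances inward from each edge).
struct Insets { var top, left, bottom, right: CGFloat }

// Splits an image of `size` into the nine regions implied by cap insets.
func slices(of size: CGSize, capInsets i: Insets)
    -> (corners: [CGRect], edges: [CGRect], interior: CGRect) {
    // Column and row origins and extents: cap, middle, cap.
    let xs: [CGFloat] = [0, i.left, size.width - i.right]
    let ys: [CGFloat] = [0, i.top, size.height - i.bottom]
    let ws: [CGFloat] = [i.left, size.width - i.left - i.right, i.right]
    let hs: [CGFloat] = [i.top, size.height - i.top - i.bottom, i.bottom]
    var corners: [CGRect] = [], edges: [CGRect] = []
    var interior = CGRect.zero
    for r in 0..<3 {
        for c in 0..<3 {
            let rect = CGRect(x: xs[c], y: ys[r], width: ws[c], height: hs[r])
            switch (r, c) {
            case (1, 1): interior = rect                                   // tiled or stretched
            case (0, 0), (0, 2), (2, 0), (2, 2): corners.append(rect)      // drawn as is
            default: edges.append(rect)                                    // tiled or stretched along one axis
            }
        }
    }
    return (corners, edges, interior)
}
```

With UIEdgeInsets.zero, as in the first example below, every corner and edge has zero size and the interior is the whole image, which is why the entire image gets tiled.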

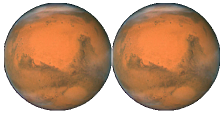

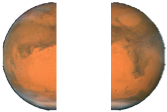

In these examples, assume that self.iv is a UIImageView with absolute height and width (so that it won’t adopt the size of its image) and with a contentMode of .scaleToFill (so that the image will exhibit resizing behavior). First, I’ll illustrate tiling an entire image (Figure 2-2); note that the capInsets: is UIEdgeInsets.zero:

    let mars = UIImage(named:"Mars")!
    let marsTiled = mars.resizableImage(withCapInsets:.zero, resizingMode: .tile)
    self.iv.image = marsTiled

Figure 2-2. Tiling the entire image of Mars

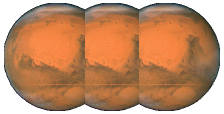

Now we’ll tile the interior of the image, changing the capInsets: argument from the previous code (Figure 2-3):

    let marsTiled = mars.resizableImage(withCapInsets:
        UIEdgeInsetsMake(
            mars.size.height / 4.0,
            mars.size.width / 4.0,
            mars.size.height / 4.0,
            mars.size.width / 4.0
        ), resizingMode: .tile)

Figure 2-3. Tiling the interior of Mars

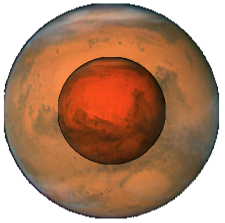

Next, I’ll illustrate stretching. We’ll start by changing just the resizingMode: from the previous code (Figure 2-4):

    let marsTiled = mars.resizableImage(withCapInsets:
        UIEdgeInsetsMake(
            mars.size.height / 4.0,
            mars.size.width / 4.0,
            mars.size.height / 4.0,
            mars.size.width / 4.0
        ), resizingMode: .stretch)

Figure 2-4. Stretching the interior of Mars

A common stretching strategy is to make almost half the original image serve as a cap inset, leaving just a tiny rectangle in the center that must stretch to fill the entire interior of the resulting image (Figure 2-5):

    let marsTiled = mars.resizableImage(withCapInsets:
        UIEdgeInsetsMake(
            mars.size.height / 2.0 - 1,
            mars.size.width / 2.0 - 1,
            mars.size.height / 2.0 - 1,
            mars.size.width / 2.0 - 1
        ), resizingMode: .stretch)

Figure 2-5. Stretching a few pixels at the interior of Mars
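A little arithmetic shows why this strategy works for an image of any size: subtracting two caps of (dimension / 2 - 1) always leaves a center 2 points on a side, and that small center absorbs all of the growth. The function below is hypothetical and does only the arithmetic.

```swift
import Foundation

// Size of the stretchable center before and after resizing to `target`,
// when every cap inset is (half the image dimension - 1), as in the code above.
func centerStretch(imageSize s: CGSize, target t: CGSize) -> (before: CGSize, after: CGSize) {
    let capW = s.width / 2 - 1, capH = s.height / 2 - 1
    // The caps keep their size; whatever remains belongs to the center.
    let before = CGSize(width: s.width - 2 * capW, height: s.height - 2 * capH)
    let after = CGSize(width: t.width - 2 * capW, height: t.height - 2 * capH)
    return (before, after)
}
```

For a 200-by-100 image drawn at 400 by 300, the 2-by-2 center becomes 202 by 202, while the caps are drawn unchanged.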

You should also experiment with different scaling contentMode settings. In the preceding example, if the image view’s contentMode is .scaleAspectFill, and if the image view’s clipsToBounds is true, we get a sort of gradient effect, because the top and bottom of the stretched image are outside the image view and aren’t drawn (Figure 2-6).

Figure 2-6. Mars, stretched and clipped

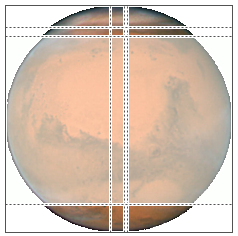

Alternatively, you can configure a resizable image without code, in the project’s asset catalog. It is often the case that a particular image will be used in your app chiefly as a resizable image, and always with the same capInsets: and resizingMode:, so it makes sense to configure this image once rather than having to repeat the same code.

To configure an image in an asset catalog as a resizable image, select the image and, in the Slicing section of the Attributes inspector, change the Slices pop-up menu to Horizontal, Vertical, or Horizontal and Vertical. When you do this, additional interface appears. You can specify the resizingMode with the Center pop-up menu. You can work numerically, or click Show Slicing at the lower right of the canvas and work graphically. The graphical editor is zoomable, so zoom in to work comfortably.

This feature is actually even more powerful than resizableImage(withCapInsets:resizingMode:). It lets you specify the end caps separately from the tiled or stretched region, with the rest of the image being sliced out. In Figure 2-7, for example, the dark areas at the top left, top right, bottom left, and bottom right will be drawn as is. The narrow bands will be stretched, and the small rectangle at the top center will be stretched to fill most of the interior. But the rest of the image, the large central area covered by a sort of gauze curtain, will be omitted entirely. The result is shown in Figure 2-8.

Figure 2-7. Mars, sliced in the asset catalog

Figure 2-8. Mars, sliced and stretched

Transparency Masks

Several places in an iOS app’s interface want to treat an image as a transparency mask, also known as a template. This means that the image color values are ignored, and only the transparency (alpha) values of each pixel matter. The image shown on the screen is formed by combining the image’s transparency values with a single tint color. Such, for example, is the behavior of a tab bar item’s image.

The way an image will be treated is a property of the image, its renderingMode. This property is read-only; to change it, start with an image and generate a new image with a different rendering mode, by calling its withRenderingMode(_:) method. The rendering mode values (UIImageRenderingMode) are:

- .automatic
- .alwaysOriginal
- .alwaysTemplate

The default is .automatic, which means that the image is drawn normally everywhere except in certain limited contexts, where it is used as a transparency mask. With the other two rendering mode values, you can force an image to be drawn normally, even in a context that would usually treat it as a transparency mask, or you can force an image to be treated as a transparency mask, even in a context that would otherwise treat it normally.
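The decision and the tinting can be sketched as a simplified model. RenderingMode mirrors the three UIImageRenderingMode cases, but Pixel, drawsAsTemplate, and templated are hypothetical, and treating the result as “tint color, with the pixel’s alpha scaled by the tint’s alpha” is an assumption of the model rather than a description of UIKit’s compositing code.

```swift
// Mirror of the three rendering mode values.
enum RenderingMode { case automatic, alwaysOriginal, alwaysTemplate }

// Hypothetical RGBA pixel with components in 0...1.
struct Pixel: Equatable { var r, g, b, a: Double }

// Whether an image is drawn as a template, given its mode and whether the
// context (a tab bar item, say) would treat an .automatic image as a template.
func drawsAsTemplate(_ mode: RenderingMode, contextPrefersTemplate: Bool) -> Bool {
    switch mode {
    case .automatic: return contextPrefersTemplate
    case .alwaysOriginal: return false
    case .alwaysTemplate: return true
    }
}

// Template rendering: ignore the pixel's color, keep its alpha, take the tint color.
func templated(_ p: Pixel, tint: Pixel) -> Pixel {
    return Pixel(r: tint.r, g: tint.g, b: tint.b, a: p.a * tint.a)
}
```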

To accompany this feature, iOS gives every UIView a tintColor, which will be used to tint any template images it contains. Moreover, this tintColor by default is inherited down the view hierarchy, and indeed throughout the entire app, starting with the window (Chapter 1). Thus, assigning your app’s main window a tint color is probably one of the few changes you’ll make to the window; otherwise, your app adopts the system’s blue tint color. (Alternatively, if you’re using a main storyboard, set the Global Tint color in its File inspector.) Individual views can be assigned their own tint color, which is inherited by their subviews. Figure 2-9 shows two buttons displaying the same background image, one in normal rendering mode, the other in template rendering mode, in an app whose window tint color is red. (I’ll say more about template images and tintColor in Chapter 12.)

Figure 2-9. One image in two rendering modes
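The inheritance rule for tintColor amounts to a walk up the view hierarchy. This is a hypothetical miniature (Node is not a UIKit class, and colors are represented by names): a node’s effective tint is its own if it has one, otherwise its nearest ancestor’s, otherwise a system default.

```swift
// Hypothetical view-tree node; "systemBlue" stands in for the system default tint.
final class Node {
    var ownTint: String?
    weak var parent: Node?
    var children: [Node] = []

    init(tint: String? = nil) { ownTint = tint }

    func add(_ child: Node) {
        child.parent = self
        children.append(child)
    }

    // Own tint, else inherited from the nearest ancestor, else the default.
    var effectiveTint: String {
        return ownTint ?? parent?.effectiveTint ?? "systemBlue"
    }
}
```

Giving the window (the root) a tint colors everything beneath it, while assigning a tint to an intermediate node overrides it for that node’s subtree only.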

An asset catalog can assign an image a rendering mode. Select the image set in the asset catalog, and use the Render As pop-up menu in the Attributes inspector to set the rendering mode to Default (.automatic), Original Image (.alwaysOriginal), or Template Image (.alwaysTemplate). This is an excellent approach whenever you have an image that you will use primarily in a specific rendering mode, because it saves you from having to remember to set that rendering mode in code every time you fetch the image. Instead, any time you call init(named:), this image arrives with the rendering mode already set.

Reversible Images

Starting in iOS 9, if your app is localized for a right-to-left language and runs on a system whose language is right-to-left, the entire interface is automatically reversed. In general, this probably won’t affect your images. The runtime assumes that you don’t want images to be reversed when the interface is reversed, so its default behavior is to leave them alone.

Nevertheless, you might want an image reversed when the interface is reversed. For example, suppose you’ve drawn an arrow pointing in the direction from which new interface will arrive when the user taps a button. If the button pushes a view controller onto a navigation interface, that direction is from the right on a left-to-right system, but from the left on a right-to-left system. This image has directional meaning within the app’s own interface; it needs to flip horizontally when the interface is reversed.

To make this possible, call the image’s imageFlippedForRightToLeftLayoutDirection method and use the resulting image in your interface. On a left-to-right system, the normal image will be used; on a right-to-left system, a reversed version of the image will be created and used automatically. You can override this behavior, even if the image is reversible, for a particular UIView displaying the image, such as a UIImageView, by setting that view’s semanticContentAttribute to prevent mirroring.
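The interplay between a reversible image and semanticContentAttribute can be sketched as a simplified model. Semantic and Direction are hypothetical mirrors of UISemanticContentAttribute and the layout direction; the model assumes that forced attributes win, that .playback and .spatial content stays left-to-right, and that a reversible image is mirrored only when the displaying view’s effective direction is right-to-left.

```swift
// Hypothetical mirrors of UISemanticContentAttribute and layout direction.
enum Semantic { case unspecified, playback, spatial, forceLeftToRight, forceRightToLeft }
enum Direction { case leftToRight, rightToLeft }

// The direction a view actually uses under this model.
func effectiveDirection(_ attr: Semantic, system: Direction) -> Direction {
    switch attr {
    case .unspecified: return system
    case .playback, .spatial, .forceLeftToRight: return .leftToRight
    case .forceRightToLeft: return .rightToLeft
    }
}

// Only a reversible image, in a view whose effective direction is
// right-to-left, is drawn mirrored.
func drawsMirrored(imageIsReversible: Bool, attr: Semantic, system: Direction) -> Bool {
    return imageIsReversible && effectiveDirection(attr, system: system) == .rightToLeft
}
```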

New in Xcode 8, you can make the same determination for an image in the asset catalog using the Direction pop-up menu (choose one of the Mirrors options). Moreover, new in iOS 10, the layout direction (as I mentioned in Chapter 1) is a trait. This means that, just as you can have pairs of images to be used on iPhone or iPad, or triples of images to be used on single-, double-, or triple-resolution screens, you can have pairs of images to be used under left-to-right or right-to-left layout. The easy way to configure such pairs is to choose Both in the asset catalog’s Direction pop-up menu; now there are left-to-right and right-to-left image slots where you can place your images. Alternatively, you can register the paired images with a UIImageAsset in code, as I demonstrated earlier in this chapter.

New in iOS 10, you can also force an image to be flipped horizontally without regard to layout direction or semantic content attribute by calling its withHorizontallyFlippedOrientation method.

Graphics Contexts

Instead of plopping an existing image file directly into your interface, you may want to create some drawing yourself, in code. To do so, you will need a graphics context.

A graphics context is basically a place you can draw. Conversely, you can’t draw in code unless you’ve got a graphics context. There are several ways in which you might obtain a graphics context; these are the most common:

- You create an image context: In iOS 9 and before, this was done by calling UIGraphicsBeginImageContextWithOptions. New in iOS 10, you use a UIGraphicsImageRenderer. I’ll go into detail later.

- Cocoa creates the graphics context: You subclass UIView and implement draw(_:). At the time your draw(_:) implementation is called, Cocoa has already created a graphics context and is asking you to draw into it, right now; whatever you draw is what the UIView will display.

- Cocoa passes you a graphics context: You subclass CALayer and implement draw(in:), or else you give a CALayer a delegate and implement the delegate’s draw(_:in:). The in: parameter is a graphics context. (Layers are discussed in Chapter 3.)

Moreover, at any given moment there either is or is not a current graphics context:

- When you create an image context, that image context automatically becomes the current graphics context.

- When UIView’s draw(_:) is called, the UIView’s drawing context is already the current graphics context.

- When CALayer’s draw(in:) or its delegate’s draw(_:in:) is called, the in: parameter is a graphics context, but it is not the current context. It’s up to you to make it current if you need to.

What beginners find most confusing about drawing is that there are two sets of tools for drawing, which take different attitudes toward the context in which they will draw. One set needs a current context; the other just needs a context:

- UIKit: Various Cocoa classes know how to draw themselves; these include UIImage, NSString (for drawing text), UIBezierPath (for drawing shapes), and UIColor. Some of these classes provide convenience methods with limited abilities; others are extremely powerful. In many cases, UIKit will be all you’ll need.

  With UIKit, you can draw only into the current context. If there’s already a current context, you just draw. But with CALayer, where you are handed a context as a parameter, if you want to use the UIKit convenience methods, you’ll have to make that context the current context; you do this by calling UIGraphicsPushContext (and be sure to restore things with UIGraphicsPopContext later).

- Core Graphics: This is the full drawing API. Core Graphics, often referred to as Quartz, or Quartz 2D, is the drawing system that underlies all iOS drawing; UIKit drawing is built on top of it. It is low-level and consists of C functions (though in Swift 3 these are mostly “renamified” to look like method calls). There are a lot of them! This chapter will familiarize you with the fundamentals; for complete information, you’ll want to study Apple’s Quartz 2D Programming Guide.

  With Core Graphics, you must specify a graphics context (a CGContext) to draw into, explicitly, for each bit of your drawing. With CALayer, you are handed the context as a parameter, and that’s the graphics context you want to draw into. But when the context you want to draw into has simply been made current for you (as in draw(_:)), you have no reference to it; to use Core Graphics, you need to get such a reference. You call UIGraphicsGetCurrentContext to obtain it.
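The push/pop discipline is easiest to see as a stack whose top is “the current context.” The sketch below is a hypothetical model (ContextStack and drawWithUIKit are not UIKit APIs, and contexts are represented by names): it makes a handed-in context current only for the duration of some UIKit-style drawing, then restores whatever was current before, using defer so the pop cannot be forgotten.

```swift
// Hypothetical model of the stack that UIGraphicsPushContext and
// UIGraphicsPopContext manipulate: "current" is the top of the stack.
struct ContextStack {
    var stack: [String] = []
    var current: String? { return stack.last }
    mutating func push(_ ctx: String) { stack.append(ctx) }
    mutating func pop() { _ = stack.popLast() }
}

// Mimics a CALayer delegate's draw(_:in:) that wants to use UIKit:
// make the handed-in context current, draw, then restore the previous one.
func drawWithUIKit(in ctx: String, stack: inout ContextStack,
                   draw: (String?) -> Void) {
    stack.push(ctx)           // like UIGraphicsPushContext(con)
    defer { stack.pop() }     // like UIGraphicsPopContext(), always runs
    draw(stack.current)       // UIKit calls would target the current context
}
```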

Tip

You don’t have to use UIKit or Core Graphics exclusively. On the contrary, you can intermingle UIKit calls and Core Graphics calls in the same chunk of code to operate on the same graphics context. They merely represent two different ways of telling a graphics context what to do.

So we have two sets of tools and three ways in which a context might be supplied; that makes six ways of drawing. I’ll now demonstrate all six of them! Without worrying just yet about the actual drawing commands, focus your attention on how the context is specified and on whether we’re using UIKit or Core Graphics. First, I’ll draw a blue circle by implementing a UIView subclass’s draw(_:), using UIKit to draw into the current context, which Cocoa has already prepared for me:

    override func draw(_ rect: CGRect) {
        let p = UIBezierPath(ovalIn: CGRect(0,0,100,100))
        UIColor.blue.setFill()
        p.fill()
    }

Now I’ll do the same thing with Core Graphics; this will require that I first get a reference to the current context:

    override func draw(_ rect: CGRect) {
        let con = UIGraphicsGetCurrentContext()!
        con.addEllipse(in:CGRect(0,0,100,100))
        con.setFillColor(UIColor.blue.cgColor)
        con.fillPath()
    }

Next, I’ll implement a CALayer delegate’s draw(_:in:). In this case, we’re handed a reference to a context, but it isn’t the current context. So I have to make it the current context in order to use UIKit (and I must remember to stop making it the current context when I’m done drawing):

    override func draw(_ layer: CALayer, in con: CGContext) {
        UIGraphicsPushContext(con)
        let p = UIBezierPath(ovalIn: CGRect(0,0,100,100))
        UIColor.blue.setFill()
        p.fill()
        UIGraphicsPopContext()
    }

To use Core Graphics in a CALayer delegate’s draw(_:in:), I simply keep referring to the context I was handed:

    override func draw(_ layer: CALayer, in con: CGContext) {
        con.addEllipse(in:CGRect(0,0,100,100))
        con.setFillColor(UIColor.blue.cgColor)
        con.fillPath()
    }

Finally, I’ll make a UIImage of a blue circle. We can do this at any time (we don’t need to wait for some particular method to be called) and in any class (we don’t need to be in a UIView subclass). The old way of doing this, in iOS 9 and before, was as follows:

1. You call UIGraphicsBeginImageContextWithOptions. It creates an image context and makes it the current context.

2. You draw, thus generating the image.

3. You call UIGraphicsGetImageFromCurrentImageContext to extract an actual UIImage from the image context.

4. You call UIGraphicsEndImageContext to dismiss the context.

The desired image is the result of step 3, and now you can display it in your interface, draw it into some other graphics context, save it as a file, or whatever you like.

New in iOS 10, UIGraphicsBeginImageContextWithOptions is superseded by UIGraphicsImageRenderer (though you can still use the old way if you want to). The reason for this change is that the old way assumed you wanted an sRGB image with 8-bit color pixels, whereas the introduction of the iPad Pro 9.7-inch and iPhone 7 makes that assumption wrong: they can display “wide color,” meaning that you probably want a P3 image with 16-bit color pixels. UIGraphicsImageRenderer knows how to make such an image, and will do so by default if we’re running on a “wide color” device.

Another nice thing about UIGraphicsImageRenderer is that its image method takes a function containing your drawing commands and returns the image. Thus there is no need for the step-by-step imperative style of programming required by UIGraphicsBeginImageContextWithOptions, where after drawing you had to remember to fetch the image and dismiss the context yourself.
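Why does a function that takes your drawing as a closure help? Because the function itself can guarantee the bookkeeping. The sketch below is a hypothetical model, not UIGraphicsImageRenderer’s implementation: the log strings stand in for the four steps of the old way, and defer ensures that the “end” step runs even if the drawing code exits early by throwing (the real image method’s closure does not throw; that is a liberty of the model).

```swift
// Hypothetical model of the begin / draw / fetch / end sequence wrapped in
// a closure-taking function. Log entries stand in for the real UIKit calls.
func renderImage(log: inout [String],
                 actions: () throws -> Void) rethrows -> String {
    log.append("begin")          // step 1: UIGraphicsBeginImageContextWithOptions
    defer { log.append("end") }  // step 4: UIGraphicsEndImageContext, always runs
    try actions()                // step 2: the caller's drawing commands
    log.append("fetch")          // step 3: UIGraphicsGetImageFromCurrentImageContext
    return "image"
}
```

The caller supplies only the drawing; it cannot forget to fetch the image or to dismiss the context.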

In this edition of the book, therefore, I will adopt UIGraphicsImageRenderer throughout. If you need to know the details of UIGraphicsBeginImageContextWithOptions, consult an earlier edition. If you need a backward compatible way to draw an image — you want to use UIGraphicsBeginImageContextWithOptions on iOS 9 and before, but UIGraphicsImageRenderer on iOS 10 and later — see Appendix B, which provides a utility function for that very purpose.

So now, I’ll draw my image using UIKit:

let r = UIGraphicsImageRenderer(size:CGSize(100,100))
let im = r.image { _ in
    let p = UIBezierPath(ovalIn: CGRect(0,0,100,100))
    UIColor.blue.setFill()
    p.fill()
}
// im is the blue circle image, do something with it here ...

And here’s the same thing using Core Graphics:

let r = UIGraphicsImageRenderer(size:CGSize(100,100))
let im = r.image { _ in
    let con = UIGraphicsGetCurrentContext()!
    con.addEllipse(in:CGRect(0,0,100,100))
    con.setFillColor(UIColor.blue.cgColor)
    con.fillPath()
}
// im is the blue circle image, do something with it here ...

Tip

Instead of calling image, you can call UIGraphicsImageRenderer methods that generate JPEG or PNG image data, suitable for saving to disk.
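Concretely, the two methods are pngData(actions:) and jpegData(withCompressionQuality:actions:); each takes the same kind of drawing function as image and returns Data. Here’s a minimal sketch reusing the blue circle; the function name drawCircle is hypothetical.

```swift
import UIKit

let renderer = UIGraphicsImageRenderer(size: CGSize(width: 100, height: 100))

// Hypothetical drawing function, shared by both calls below.
func drawCircle(_ ctx: UIGraphicsImageRendererContext) {
    UIColor.blue.setFill()
    UIBezierPath(ovalIn: CGRect(x: 0, y: 0, width: 100, height: 100)).fill()
}

// PNG-encoded data; PNG preserves transparency.
let pngData: Data = renderer.pngData(actions: drawCircle)
// JPEG-encoded data; JPEG has no alpha channel. 0.8 is the compression quality.
let jpegData: Data = renderer.jpegData(withCompressionQuality: 0.8, actions: drawCircle)

// Either Data value could now be written to disk, e.g. with write(to:).
```

The compression quality runs from 0 (smallest file) to 1 (best quality).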

In those examples, we’re calling UIGraphicsImageRenderer’s init(size:) and accepting its default configuration, which is usually what’s wanted. To configure its image context further, call the UIGraphicsImageRendererFormat class method default, configure the format through its properties, and pass it to UIGraphicsImageRenderer’s init(size:format:). Those properties are:

- opaque: By default, false; the image context is transparent. If true, the image context is opaque and has a black background, and the resulting image has no transparency.
- scale: By default, the same as the scale of the main screen, UIScreen.main.scale. This means that the resolution of the resulting image will be correct for the device we’re running on.
- prefersExtendedRange: By default, true only if we’re running on a device that supports “wide color.”
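Here’s a sketch of configuring a format before creating the renderer. The particular values are arbitrary choices for illustration. Note too that in later SDKs prefersExtendedRange was superseded by a preferredRange property, so a current compiler may warn about it.

```swift
import UIKit

// Start from the default format and override selected properties.
let format = UIGraphicsImageRendererFormat.default()
format.opaque = true                // no alpha channel; undrawn areas come out black
format.scale = 1                    // one pixel per point, whatever the device
format.prefersExtendedRange = false // don't ask for a wide-color image

let renderer = UIGraphicsImageRenderer(size: CGSize(width: 100, height: 100),
                                       format: format)
let im = renderer.image { _ in
    UIColor.white.setFill()
    UIRectFill(CGRect(x: 0, y: 0, width: 100, height: 100)) // avoid the black background
    UIColor.blue.setFill()
    UIBezierPath(ovalIn: CGRect(x: 0, y: 0, width: 100, height: 100)).fill()
}
```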

You may also be wondering about the parameter that arrives into the UIGraphicsImageRenderer’s image function (which is ignored in the preceding examples). It’s a UIGraphicsImageRendererContext. This provides access to the configuring UIGraphicsImageRendererFormat (its format). It also lets you obtain the graphics context (its cgContext); you can alternatively get this by calling UIGraphicsGetCurrentContext, and the preceding code does so, for consistency with the other ways of drawing. In addition, the UIGraphicsImageRendererContext can hand you a copy of the image as drawn up to this point (its currentImage); also, it implements a few basic drawing commands of its own.
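To make those features concrete, here’s a sketch that uses each of them inside a single image call: fill(_:) (one of the context’s own drawing commands), currentImage, format, and cgContext. The variables halfway and usedScale are hypothetical places to stash values for inspection.

```swift
import UIKit

let r = UIGraphicsImageRenderer(size: CGSize(width: 100, height: 100))
var halfway: UIImage? = nil // will hold a copy of the work in progress
var usedScale: CGFloat = 0  // will hold the scale the renderer is using
let im = r.image { ctx in
    // The renderer context's own command: fill a rect with the current fill color.
    UIColor.yellow.setFill()
    ctx.fill(CGRect(x: 0, y: 0, width: 100, height: 100))
    // A copy of the image as drawn so far (just the yellow square).
    halfway = ctx.currentImage
    // The configuring format, and the underlying Core Graphics context.
    usedScale = ctx.format.scale
    let con = ctx.cgContext
    con.setFillColor(UIColor.blue.cgColor)
    con.fillEllipse(in: CGRect(x: 0, y: 0, width: 100, height: 100))
}
```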

Tip

A nice thing about UIGraphicsImageRenderer is that it doesn’t have to be torn down after use. If you know that you’re going to be drawing multiple images with the same size and format, you can keep a reference to the renderer and call its image method again.
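For instance, a helper that hands out circles of different colors can hold onto one renderer and call image repeatedly. The names circleRenderer and circle are hypothetical; this is a sketch, not a caching strategy you must adopt.

```swift
import UIKit

// One renderer, created once and kept around.
let circleRenderer = UIGraphicsImageRenderer(size: CGSize(width: 40, height: 40))

func circle(_ color: UIColor) -> UIImage {
    // Each call to image starts from a fresh, empty canvas.
    return circleRenderer.image { _ in
        color.setFill()
        UIBezierPath(ovalIn: CGRect(x: 0, y: 0, width: 40, height: 40)).fill()
    }
}

let colors: [UIColor] = [.red, .green, .blue]
let dots = colors.map(circle)
```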

UIImage Drawing

A UIImage provides methods for drawing itself into the current context. We know how to obtain a UIImage, and we know how to obtain a graphics context and make it the current context, so we can experiment with these methods.

Here, I’ll make a UIImage consisting of two pictures of Mars side by side (Figure 2-10):

let mars = UIImage(named:"Mars")!
let sz = mars.size
let r = UIGraphicsImageRenderer(size:CGSize(sz.width*2, sz.height))
let im = r.image { _ in
    mars.draw(at:CGPoint(0,0))
    mars.draw(at:CGPoint(sz.width,0))
}

Figure 2-10. Two images of Mars combined side by side

Observe that image scaling works perfectly in that example. If we have multiple resolution versions of our original Mars image, the correct one for the current device is used, and is assigned the correct scale value. The image context that we are drawing into also has the correct scale by default. And the resulting image has the correct scale as well. Thus, this same code produces an image that looks correct on the current device, whatever its screen resolution may be.

Additional UIImage methods let you scale an image into a desired rectangle as you draw, and specify the compositing (blend) mode whereby the image should combine with whatever is already present. To illustrate, I’ll create an image showing Mars centered in another image of Mars that’s twice as large, using the .multiply blend mode (Figure 2-11):

let mars = UIImage(named:"Mars")!
let sz = mars.size
let r = UIGraphicsImageRenderer(size:CGSize(sz.width*2, sz.height*2))
let im = r.image { _ in
    mars.draw(in:CGRect(0,0,sz.width*2,sz.height*2))
    mars.draw(in:CGRect(sz.width/2.0, sz.height/2.0, sz.width, sz.height),
        blendMode: .multiply, alpha: 1.0)
}

Figure 2-11. Two images of Mars in different sizes, composited

There is no UIImage drawing method for specifying the source rectangle — that is, for times when you want to extract a smaller region of the original image. You can work around this by creating a smaller graphics context and positioning the image drawing so that the desired region falls into it. For example, to obtain an image of the right half of Mars, you’d make a graphics context half the width of the mars image, and then draw mars shifted left, so that only its right half intersects the graphics context. There is no harm in doing this, and it’s a perfectly standard strategy; the left half of mars simply isn’t drawn (Figure 2-12):

let mars = UIImage(named:"Mars")!
let sz = mars.size
let r = UIGraphicsImageRenderer(size:CGSize(sz.width/2.0, sz.height))
let im = r.image { _ in
    mars.draw(at:CGPoint(-sz.width/2.0,0))
}

Figure 2-12. Half the original image of Mars
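That strategy generalizes into a small helper. This sketch (the name crop(_:to:) is hypothetical) takes the desired region in points, sizes the renderer to that region, and shifts the drawing so that only the region lands in the context. Setting the format’s scale to the source image’s scale keeps the result at the original resolution rather than the device’s.

```swift
import UIKit

// Hypothetical helper: extract the region `rect` (in points) of `image`.
// Only UIImage drawing is used, so scale and orientation are handled for us.
func crop(_ image: UIImage, to rect: CGRect) -> UIImage {
    let format = UIGraphicsImageRendererFormat.default()
    format.scale = image.scale // keep the source image's resolution
    let r = UIGraphicsImageRenderer(size: rect.size, format: format)
    return r.image { _ in
        // Shift the image so the desired region sits at the context's origin;
        // everything outside the context simply isn't drawn.
        image.draw(at: CGPoint(x: -rect.origin.x, y: -rect.origin.y))
    }
}
```

For the right half of Mars, you’d call crop(mars, to: CGRect(x: mars.size.width/2, y: 0, width: mars.size.width/2, height: mars.size.height)).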

CGImage Drawing

The Core Graphics version of UIImage is CGImage. In essence, a UIImage is (usually) a wrapper for a CGImage: the UIImage is bitmap image data plus scale, orientation, and other information, whereas the CGImage is the bare bitmap image data alone. The two are easily converted to one another: a UIImage has a cgImage property that accesses its Quartz image data, and you can make a UIImage from a CGImage using init(cgImage:) or its more configurable sibling, init(cgImage:scale:orientation:).
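Here’s a sketch of the round trip, using an image generated on the spot in place of an image file. The point to notice is that the CGImage is measured in pixels while the UIImage is measured in points, so to get back a UIImage of the same size you must supply the original scale.

```swift
import UIKit

// A UIImage to experiment with (stands in for an image file).
let ui = UIGraphicsImageRenderer(size: CGSize(width: 50, height: 30)).image { _ in
    UIColor.orange.setFill()
    UIRectFill(CGRect(x: 0, y: 0, width: 50, height: 30))
}
// UIImage -> CGImage: the bare bitmap, whose width and height are in pixels.
let cg = ui.cgImage!
// CGImage -> UIImage, restoring scale and orientation so the size in points matches.
let back = UIImage(cgImage: cg, scale: ui.scale, orientation: ui.imageOrientation)
// Without a scale, the new UIImage assumes one pixel per point.
let naive = UIImage(cgImage: cg)
```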

A CGImage lets you create a new image cropped from a rectangular region of the original image, which you can’t do with UIImage. (A CGImage has other powers a UIImage doesn’t have; for example, you can apply an image mask to a CGImage.) I’ll demonstrate by splitting the image of Mars in half and drawing the two halves separately (Figure 2-13):

let mars = UIImage(named:"Mars")!
// extract each half as CGImage
let marsCG = mars.cgImage!
let sz = mars.size
let marsLeft = marsCG.cropping(to:
    CGRect(0,0,sz.width/2.0,sz.height))!
let marsRight = marsCG.cropping(to:
    CGRect(sz.width/2.0,0,sz.width/2.0,sz.height))!
let r = UIGraphicsImageRenderer(size: CGSize(sz.width*1.5, sz.height))
let im = r.image { ctx in
    let con = ctx.cgContext
    con.draw(marsLeft, in:
        CGRect(0,0,sz.width/2.0,sz.height))
    con.draw(marsRight, in:
        CGRect(sz.width,0,sz.width/2.0,sz.height))
}

Figure 2-13. Image of Mars split in half (and flipped)

But there’s a problem with that example: the drawing is upside-down! It isn’t rotated; it’s mirrored top to bottom, or, to use the technical term, flipped. This phenomenon can arise when you create a CGImage and then draw it, and is due to a mismatch in the native coordinate systems of the source and target contexts.

There are various ways of compensating for this mismatch between the coordinate systems. One is to draw the CGImage into an intermediate UIImage and extract another CGImage from that. Example 2-1 presents a utility function for doing this.

Example 2-1. Utility for flipping an image drawing

func flip (_ im: CGImage) -> CGImage {
    let sz = CGSize(CGFloat(im.width), CGFloat(im.height))
    let r = UIGraphicsImageRenderer(size:sz)
    return r.image { ctx in
        ctx.cgContext.draw(im, in: CGRect(0, 0, sz.width, sz.height))
    }.cgImage!
}

Armed with the utility function from Example 2-1, we can fix our CGImage drawing calls in the previous example so that they draw the halves of Mars the right way up:

con.draw(flip(marsLeft), in:
    CGRect(0,0,sz.width/2.0,sz.height))
con.draw(flip(marsRight), in:
    CGRect(sz.width,0,sz.width/2.0,sz.height))

However, we’ve still got a problem: on a high-resolution device, if there is a high-resolution variant of our image file, the drawing comes out all wrong. The reason is that we are obtaining our initial Mars image using UIImage’s init(named:), which returns a UIImage that compensates for the increased size of a high-resolution image by setting its own scale property to match. But a CGImage doesn’t have a scale property, and knows nothing of the fact that the image dimensions are increased! Therefore, on a high-resolution device, the CGImage that we extract from our Mars UIImage as mars.cgImage is larger (in each dimension) than mars.size, and all our calculations after that are wrong.

The best approach, therefore, is to wrap each CGImage in a UIImage and draw the UIImage instead of the CGImage. The UIImage can be formed in such a way as to compensate for scale: call init(cgImage:scale:orientation:). Moreover, by drawing a UIImage instead of a CGImage, we avoid the flipping problem! So here’s an approach that deals with both flipping and scale, with no need for the flip utility:

let mars = UIImage(named:"Mars")!
let sz = mars.size
let marsCG = mars.cgImage!
let szCG = CGSize(CGFloat(marsCG.width), CGFloat(marsCG.height))
let marsLeft =
    marsCG.cropping(to:
        CGRect(0,0,szCG.width/2.0,szCG.height))
let marsRight =
    marsCG.cropping(to:
        CGRect(szCG.width/2.0,0,szCG.width/2.0,szCG.height))
let r = UIGraphicsImageRenderer(size:CGSize(sz.width*1.5, sz.height))
let im = r.image { _ in
    UIImage(cgImage: marsLeft!,
        scale: mars.scale,
        orientation: mars.imageOrientation).draw(at:CGPoint(0,0))
    UIImage(cgImage: marsRight!,
        scale: mars.scale,
        orientation: mars.imageOrientation).draw(at:CGPoint(sz.width,0))
}

Tip

Yet another solution to flipping is to apply a transform to the graphics context before drawing the CGImage, effectively flipping the context’s internal coordinate system. This is elegant, but can be confusing if there are other transforms in play. I’ll talk more about graphics context transforms later in this chapter.
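Here’s a sketch of that transform approach as a hypothetical helper, drawUpright(_:in:context:). It brackets the flip with saveGState and restoreGState (discussed later in this chapter) so that the flip doesn’t leak into subsequent drawing. It does nothing about the scale problem, so rect must already be correct for the image.

```swift
import UIKit

// Hypothetical helper: draw a CGImage into `rect` of a UIKit-style context
// (origin at top left) without it coming out upside down.
func drawUpright(_ image: CGImage, in rect: CGRect, context con: CGContext) {
    con.saveGState() // so the flip doesn't affect later drawing
    // Move the origin to rect's bottom edge, then invert the y axis.
    con.translateBy(x: 0, y: rect.maxY)
    con.scaleBy(x: 1, y: -1)
    // In the flipped system, y from 0 to rect.height covers rect's original vertical span.
    con.draw(image, in: CGRect(x: rect.minX, y: 0, width: rect.width, height: rect.height))
    con.restoreGState()
}
```

In the Mars example, you would call drawUpright(marsLeft, in: CGRect(0,0,sz.width/2.0,sz.height), context: con) instead of con.draw(marsLeft, in: ...).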

Snapshots

An entire view — anything from a single button to your whole interface, complete with its contained hierarchy of views — can be drawn into the current graphics context by calling the UIView instance method drawHierarchy(in:afterScreenUpdates:). (This method is much faster than the CALayer method render(in:); nevertheless, the latter does still come in handy, as I’ll show in Chapter 5.) The result is a snapshot of the original view: it looks like the original view, but it’s basically just a bitmap image of it, a lightweight visual duplicate.

An even faster way to obtain a snapshot of a view is to use the UIView (or UIScreen) instance method snapshotView(afterScreenUpdates:). The result is a UIView, not a UIImage; it’s rather like a UIImageView that knows how to draw only one image, namely the snapshot. Such a snapshot view will typically be used as is, but you can enlarge its bounds and the snapshot image will stretch. If you want the stretched snapshot to behave like a resizable image, call resizableSnapshotView(from:afterScreenUpdates:withCapInsets:) instead. It is perfectly reasonable to make a snapshot view from a snapshot view.
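To see the calls side by side, here’s a sketch using a plain purple view as a stand-in for real interface. In practice the view would be in a window, which is what the snapshot methods expect; the cap insets in the last call are arbitrary values chosen for illustration.

```swift
import UIKit

let v = UIView(frame: CGRect(x: 0, y: 0, width: 120, height: 80))
v.backgroundColor = .purple

// A UIImage snapshot: draw the view's hierarchy into an image renderer.
let r = UIGraphicsImageRenderer(bounds: v.bounds)
let snapshotImage = r.image { _ in
    // Returns false if image data is missing for some view in the hierarchy.
    _ = v.drawHierarchy(in: v.bounds, afterScreenUpdates: true)
}

// A snapshot view: faster, but the result is a UIView, not a UIImage.
if let snap = v.snapshotView(afterScreenUpdates: true) {
    snap.frame = v.frame // put the stand-in where the original is
}

// A resizable snapshot view: the cap-inset margins don't stretch when its bounds grow.
let stretchy = v.resizableSnapshotView(from: v.bounds, afterScreenUpdates: true,
    withCapInsets: UIEdgeInsets(top: 10, left: 10, bottom: 10, right: 10))
```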

Snapshots are useful because of the dynamic nature of the iOS interface. For example, you might place a snapshot of a view in your interface in front of the real view to hide what’s happening, or use it during an animation to present the illusion of a view moving when in fact it’s just a snapshot.

Here’s an example from one of my apps. It’s a card game, and its views portray cards. I want to animate the removal of all those cards from the board, flying away to an offscreen point. But I don’t want to animate the views themselves! They need to stay put, to portray future cards. So I make a snapshot view of each of the card views; I then make the card views invisible, put the snapshot views in their place, and animate the snapshot views. This code will mean more to you after you’ve read Chapter 4, but the strategy is evident:

for v in views {
    let snapshot = v.snapshotView(afterScreenUpdates: false)!
    let snap = MySnapBehavior(item:snapshot, snapto:CGPoint(
        x: self.anim.referenceView!.bounds.midX,
        y: -self.anim.referenceView!.bounds.height)
    )
    self.snaps.append(snapshot) // keep a list so we can remove them later
    snapshot.frame = v.frame
    v.isHidden = true
    self.anim.referenceView!.addSubview(snapshot)
    self.anim.addBehavior(snap)
}

CIFilter and CIImage

The “CI” in CIFilter and CIImage stands for Core Image, a technology for transforming images through mathematical filters. Core Image started life on the desktop (macOS), and when it was originally migrated into iOS 5, some of the filters available on the desktop were not available in iOS (presumably because they were too intensive mathematically for a mobile device). Over the years, however, more and more macOS filters were added to the iOS repertoire, and now the two have complete parity: all macOS filters are available in iOS, and the two platforms have nearly identical APIs.

A filter is a CIFilter. The 180 available filters fall naturally into several broad categories:

- Patterns and gradients: These filters create CIImages, such as a single color, a checkerboard, stripes, or a gradient, that can then be combined with other CIImages.
- Compositing: These filters combine one image with another, using compositing blend modes familiar from image processing programs.
- Color: These filters adjust or otherwise modify the colors of an image. Thus you can alter an image’s saturation, hue, brightness, contrast, gamma and white point, exposure, shadows and highlights, and so on.
- Geometric: These filters perform basic geometric transformations on an image, such as scaling, rotation, and cropping.
- Transformation: These filters distort, blur, or stylize an image.
- Transition: These filters provide a frame of a transition between one image and another; by asking for frames in sequence, you can animate the transition (I’ll demonstrate in Chapter 4).
- Special purpose: These filters perform highly specialized operations such as face detection and generation of QR codes.

The basic use of a CIFilter is quite simple:

- You specify what filter you want by supplying its string name; to learn what these names are, consult Apple’s Core Image Filter Reference, or call the CIFilter class method filterNames(inCategories:) with a nil argument.
- Each filter has a small number of keys and values that determine its behavior (as if a filter were a kind of dictionary). You can learn about these keys entirely in code, but typically you’ll consult the documentation. For each key that you’re interested in, you supply a key–value pair. In supplying values, a number must be wrapped up as an NSNumber (Swift will take care of this for you), and there are a few supporting classes such as CIVector (like CGPoint and CGRect combined) and CIColor, whose use is easy to grasp.
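Both kinds of discovery can be done in code. This sketch lists filter names (all of them, and just the blur category) and then asks the CIRadialGradient filter about its keys: inputKeys gives the key names, and attributes describes each key in more detail.

```swift
import CoreImage

// Every filter name the system knows about (nil means all categories).
let allNames = CIFilter.filterNames(inCategories: nil)
// Just the names of the blur filters.
let blurNames = CIFilter.filterNames(inCategory: kCICategoryBlur)

// Learning a filter's keys in code rather than from the documentation.
let grad = CIFilter(name: "CIRadialGradient")!
print(grad.inputKeys) // the keys you can set
// attributes describes each key: its class, default value, range, and so on.
if let radius0 = grad.attributes["inputRadius0"] as? [String: Any] {
    print(radius0[kCIAttributeDefault] ?? "no default")
}
```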

Among a CIFilter’s keys may be the input image or images on which the filter is to operate; such an image must be a CIImage. You can obtain this CIImage from a CGImage with init(cgImage:) or from a UIImage with init(image:).

Warning

Do not attempt, as a shortcut, to obtain a CIImage directly from a UIImage through the UIImage’s ciImage property. This property does not transform a UIImage into a CIImage! It merely points to the CIImage that already backs the UIImage, if the UIImage is backed by a CIImage. Your images are not backed by a CIImage; they are backed by a CGImage, so for them this property is nil. I’ll explain where a CIImage-backed UIImage comes from in just a moment.

Alternatively, you can obtain a CIImage as the output of a filter — which means that filters can be chained together.
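Here’s a sketch of all three sources of a CIImage, starting from a solid-color UIImage generated on the spot: CIImage(image:), CIImage(cgImage:), and the outputImage of a filter. CIColorControls with an inputSaturation of 0 is used only as a convenient filter to chain; any filter taking an input image would do.

```swift
import UIKit
import CoreImage

let ui = UIGraphicsImageRenderer(size: CGSize(width: 40, height: 40)).image { _ in
    UIColor.red.setFill()
    UIRectFill(CGRect(x: 0, y: 0, width: 40, height: 40))
}
// ui is backed by a CGImage, so ui.ciImage is nil; make real CIImages instead.
let ci1 = CIImage(image: ui)!            // from the UIImage
let ci2 = CIImage(cgImage: ui.cgImage!)  // from its CGImage

// The output of one filter can be the input of the next.
let mono = CIFilter(name: "CIColorControls")!
mono.setValue(ci1, forKey: "inputImage")
mono.setValue(0.0, forKey: "inputSaturation")
let ci3 = mono.outputImage!
```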

There are three ways to describe and use a filter:

- Create the filter with CIFilter’s init(name:). Now append the keys and values by calling setValue(_:forKey:) repeatedly, or by calling setValuesForKeys(_:) with a dictionary. Obtain the output CIImage as the filter’s outputImage.
- Create the filter and supply the keys and values in a single move, by calling CIFilter’s init(name:withInputParameters:). Obtain the output CIImage as the filter’s outputImage.
- If a CIFilter requires an input image and you already have a CIImage to fulfill this role, specify the filter and supply the keys and values, and receive the output CIImage as a result, all in a single move, by calling the CIImage instance method applyingFilter(_:withInputParameters:).
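Here are the three ways side by side, applying the same CIColorControls desaturation to a solid red CIImage. One caveat: this sketch is written for a current SDK, where the Swift names have since been shortened, so init(name:withInputParameters:) appears as init(name:parameters:), applyingFilter(_:withInputParameters:) as applyingFilter(_:parameters:), and CIImage’s cropping(to:) as cropped(to:). On a Swift 3 toolchain, use the names given in the text.

```swift
import UIKit
import CoreImage

// A solid red input image, cropped to a finite 50-by-50 extent.
let input = CIImage(color: CIColor(red: 1, green: 0, blue: 0))
    .cropped(to: CGRect(x: 0, y: 0, width: 50, height: 50))

// 1. init(name:), then supply keys and values one at a time.
let f1 = CIFilter(name: "CIColorControls")!
f1.setValue(input, forKey: "inputImage")
f1.setValue(0.0, forKey: "inputSaturation")
let out1 = f1.outputImage!

// 2. Create the filter and supply the keys and values in a single move.
let f2 = CIFilter(name: "CIColorControls",
                  parameters: ["inputImage": input, "inputSaturation": 0.0])!
let out2 = f2.outputImage!

// 3. Start from the CIImage and get the output CIImage back directly.
let out3 = input.applyingFilter("CIColorControls",
                                parameters: ["inputSaturation": 0.0])

// Nothing has been rendered yet: out1, out2, and out3 are only recipes.
```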

As you build a chain of filters, nothing actually happens. The only calculation-intensive move comes at the very end, when you transform the final CIImage in the chain into a bitmap drawing. This is called rendering the image. There are two main ways to do this:

- With a CIContext: Create a CIContext by calling init() or init(options:), and then call its createCGImage(_:from:), handing it the final CIImage as the first argument. This renders the image. The only mildly tricky thing here is that a CIImage doesn’t have a frame or bounds; it has an extent. You will often use this as the second argument to createCGImage(_:from:). The final output CGImage is ready for any purpose, such as for display in your app, for transformation into a UIImage, or for use in further drawing.
- With a UIImage: Create a UIImage directly from the final CIImage by calling init(ciImage:) or init(ciImage:scale:orientation:). You then draw the UIImage into some graphics context. At the moment of drawing, the image is rendered. (Apple claims that you can simply hand a UIImage created by calling init(ciImage:) to a UIImageView, as its image, and that the UIImageView will render the image. In my experience, this is not true. You must draw the image explicitly in order to render it.)

Warning

Rendering a CIImage in either of these ways is slow and expensive. With the first approach, the expense comes at the moment when you create the CIContext; wherever possible, you should create your CIContext once, beforehand — preferably, once per app — and reuse it each time you render. With the second approach, the expense comes at the moment of drawing the UIImage. Other ways of rendering a CIImage, involving things like GLKView or CAEAGLLayer, which are not discussed in this book, have the advantage of being very fast and suitable for animated or rapid rendering.
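One way to follow that advice is to keep a single CIContext in a static property, which Swift initializes lazily, exactly once. The enum CIRendering and its method uiImage(from:scale:) are hypothetical names for this sketch. The CIImage you pass must have a finite extent, since its extent is used as the region to render; an infinite image such as a bare gradient needs cropping first.

```swift
import UIKit
import CoreImage

// Hypothetical namespace for app-wide Core Image rendering.
enum CIRendering {
    // A static let is created lazily, on first use, and only once;
    // every render below reuses this one expensive object.
    static let context = CIContext()

    // Render a CIImage with a finite extent into a UIImage.
    static func uiImage(from ci: CIImage, scale: CGFloat = 1) -> UIImage? {
        guard let cg = context.createCGImage(ci, from: ci.extent) else { return nil }
        return UIImage(cgImage: cg, scale: scale, orientation: .up)
    }
}
```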

To illustrate, I’ll start with an ordinary photo of myself (it’s true I’m wearing a motorcycle helmet, but it’s still ordinary) and create a circular vignette effect (Figure 2-14). We derive from the image of me (moi) a CIImage (moici). We use a CIFilter (grad) to form a radial gradient between the default colors of white and black. Then we use a second CIFilter that treats the radial gradient as a mask for blending between the photo of me and a default clear background: where the radial gradient is white (everything inside the gradient’s inner radius) we see just me, and where the radial gradient is black (everything outside the gradient’s outer radius) we see just the clear color, with a gradation in between, so that the image fades away in the circular band between the gradient’s radii. The code illustrates two different ways of configuring a CIFilter:

let moi = UIImage(named:"Moi")!
let moici = CIImage(image:moi)!
let moiextent = moici.extent
let center = CIVector(x: moiextent.width/2.0, y: moiextent.height/2.0)
let smallerDimension = min(moiextent.width, moiextent.height)
let largerDimension = max(moiextent.width, moiextent.height)
// first filter
let grad = CIFilter(name: "CIRadialGradient")!
grad.setValue(center, forKey:"inputCenter")
grad.setValue(smallerDimension/2.0 * 0.85, forKey:"inputRadius0")
grad.setValue(largerDimension/2.0, forKey:"inputRadius1")
let gradimage = grad.outputImage!
// second filter
let blendimage = moici.applyingFilter("CIBlendWithMask",
    withInputParameters: [ "inputMaskImage":gradimage ])

Figure 2-14. A photo of me, vignetted

We now have the final CIImage in the chain (blendimage); remember, the processor has not yet performed any rendering. Now, however, we want to generate the final bitmap and display it. Let’s say we’re going to display it as the image of a UIImageView self.iv. We can do it in two different ways. We can create a CGImage by passing the CIImage through a CIContext; in this code, I have prepared this CIContext beforehand as a property, self.context, by calling CIContext():

let moicg = self.context.createCGImage(blendimage, from: moiextent)!
self.iv.image = UIImage(cgImage: moicg)

Alternatively, we can capture our final CIImage as a UIImage and then draw with it in order to generate the bitmap output of the filter chain:

let r = UIGraphicsImageRenderer(size:moiextent.size)
self.iv.image = r.image { _ in
    UIImage(ciImage: blendimage).draw(in:moiextent)
}

A filter chain can be encapsulated into a single custom filter by subclassing CIFilter. Your subclass just needs to override the outputImage property (and possibly other methods such as setDefaults), with additional properties to make it key–value coding compliant for any input keys. Here’s our vignette filter as a simple CIFilter subclass, where the input keys are the input image and a percentage that adjusts the gradient’s smaller radius:

class MyVignetteFilter : CIFilter {
    var inputImage : CIImage?
    var inputPercentage : NSNumber? = 1.0
    override var outputImage : CIImage? {
        return self.makeOutputImage()
    }
    private func makeOutputImage () -> CIImage? {
        guard let inputImage = self.inputImage else {return nil}
        guard let inputPercentage = self.inputPercentage else {return nil}
        let extent = inputImage.extent
        let grad = CIFilter(name: "CIRadialGradient")!
        let center = CIVector(x: extent.width/2.0, y: extent.height/2.0)
        let smallerDimension = min(extent.width, extent.height)
        let largerDimension = max(extent.width, extent.height)
        grad.setValue(center, forKey:"inputCenter")
        grad.setValue(smallerDimension/2.0 * CGFloat(inputPercentage),
            forKey:"inputRadius0")
        grad.setValue(largerDimension/2.0, forKey:"inputRadius1")
        let blend = CIFilter(name: "CIBlendWithMask")!
        blend.setValue(inputImage, forKey: "inputImage")
        blend.setValue(grad.outputImage, forKey: "inputMaskImage")
        return blend.outputImage
    }
}

And here’s how to use our CIFilter subclass and display its output in a UIImageView:

let vig = MyVignetteFilter()
let moici = CIImage(image: UIImage(named:"Moi")!)!
vig.setValuesForKeys([
    "inputImage":moici,
    "inputPercentage":0.7
])
let outim = vig.outputImage!
let outimcg = self.context.createCGImage(outim, from: outim.extent)!
self.iv.image = UIImage(cgImage: outimcg)

Blur and Vibrancy Views

Certain views on iOS, such as navigation bars and the control center, are translucent and display a blurred rendition of what’s behind them. To help you imitate this effect, iOS provides the UIVisualEffectView class. You can place other views in front of a UIVisualEffectView, but any subviews should be placed inside its contentView. To tint what’s seen through a UIVisualEffectView, set the backgroundColor of its contentView.

To use a UIVisualEffectView, create it with init(effect:); the effect: argument will be an instance of a UIVisualEffect subclass:

- UIBlurEffect: To initialize a UIBlurEffect, call init(style:); the styles (UIBlurEffectStyle) are .dark, .light, and .extraLight. (.extraLight is suitable particularly for pieces of interface that function like a navigation bar or toolbar.) For example:

    let fuzzy = UIVisualEffectView(effect: UIBlurEffect(style: .light))

- UIVibrancyEffect: To initialize a UIVibrancyEffect, call init(blurEffect:). Vibrancy tints a view so as to make it harmonize with the blurred colors underneath it. The intention here is that the vibrancy effect view should sit in front of a blur effect view, typically in its contentView, adding vibrancy to a single UIView that’s inside its own contentView; you tell the vibrancy effect what the underlying blur effect is, so that they harmonize. You can fetch a visual effect view’s blur effect as its effect property, but that’s a UIVisualEffect (the superclass), so you’ll have to cast down to a UIBlurEffect in order to hand it to init(blurEffect:).

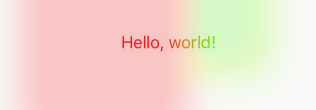

Here’s an example of a blur effect view covering and blurring the interface (self.view), and containing a UILabel wrapped in a vibrancy effect view (Figure 2-15):

let blur = UIVisualEffectView(effect: UIBlurEffect(style: .extraLight))
blur.frame = self.view.bounds
blur.autoresizingMask = [.flexibleWidth, .flexibleHeight]
let vib = UIVisualEffectView(effect: UIVibrancyEffect(
    blurEffect: blur.effect as! UIBlurEffect))
let lab = UILabel()
lab.text = "Hello, world!"
lab.sizeToFit()
vib.frame = lab.frame
vib.contentView.addSubview(lab)
vib.center = CGPoint(blur.bounds.midX, blur.bounds.midY)
vib.autoresizingMask = [.flexibleTopMargin, .flexibleBottomMargin,
    .flexibleLeftMargin, .flexibleRightMargin]
blur.contentView.addSubview(vib)
self.view.addSubview(blur)

Figure 2-15. A blurred background and a vibrant label

Apple seems to think that vibrancy makes a view more legible in conjunction with the underlying blur, but I’m not persuaded. The vibrant view’s color is made to harmonize with the blurred color behind it, but harmony implies similarity, which can make the vibrant view less legible. You’ll have to experiment. With the particular interface I’m blurring, the vibrant label in Figure 2-15 looks okay with a .dark or .extraLight blur effect view, but is hard to see with a .light blur effect view.

There are a lot of useful additional notes, well worth consulting, in the UIVisualEffectView.h header. For example, the header points out that an image displayed in an image view needs to be a template image in order to receive the benefit of a vibrancy effect view.
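For example, here’s a sketch of an image view placed inside a vibrancy effect view, with its image converted to a template image by calling withRenderingMode(.alwaysTemplate). The circular glyph is a stand-in generated on the spot; in a template image, only the alpha channel matters.

```swift
import UIKit

let blur = UIVisualEffectView(effect: UIBlurEffect(style: .light))
let vib = UIVisualEffectView(effect: UIVibrancyEffect(
    blurEffect: blur.effect as! UIBlurEffect))

// Stand-in glyph: only its shape (alpha) matters once it's a template.
let glyph = UIGraphicsImageRenderer(size: CGSize(width: 24, height: 24)).image { _ in
    UIBezierPath(ovalIn: CGRect(x: 0, y: 0, width: 24, height: 24)).fill()
}
// Template rendering lets the vibrancy effect supply the color.
let iv = UIImageView(image: glyph.withRenderingMode(.alwaysTemplate))
vib.frame = iv.frame
vib.contentView.addSubview(iv)
blur.contentView.addSubview(vib)
```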

Observe that both a blur effect view and a blur effect view with an embedded vibrancy effect view are available as built-in objects in the nib editor.

Drawing a UIView

The examples of drawing so far in this chapter have mostly produced UIImage objects, suitable for display by a UIImageView or any other interface object that knows how to display an image. But, as I’ve already explained, a UIView itself provides a graphics context; whatever you draw into that graphics context will appear directly in that view. The technique here is to subclass UIView and implement the subclass’s draw(_:) method.

So, for example, let’s say we have a UIView subclass called MyView. You would then instantiate this class and get the instance into the view hierarchy. One way to do this would be to drag a UIView into a view in the nib editor and set its class to MyView in the Identity inspector; another would be to run code that creates the MyView instance and puts it into the interface.

The result is that, from time to time, or whenever you send it the setNeedsDisplay message, MyView’s draw(_:) will be called. This is your subclass, so you get to write the code that runs at that moment. Whatever you draw will appear inside the MyView instance. There will usually be no need to call super, since UIView’s own implementation of draw(_:) does nothing. At the time that draw(_:) is called, the current graphics context has already been set to the view’s own graphics context. You can use Core Graphics functions or UIKit convenience methods to draw into that context. I gave some basic examples earlier in this chapter (“Graphics Contexts”).
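Here’s a minimal sketch of such a subclass; the name CircleView is hypothetical. Its draw(_:) fills an oval inscribed in its bounds, using the current context that has already been set up for it.

```swift
import UIKit

// Hypothetical custom view that draws a blue circle filling its bounds.
class CircleView: UIView {
    override func draw(_ rect: CGRect) {
        // No call to super: UIView's own draw(_:) does nothing.
        // The current context is already this view's graphics context.
        let p = UIBezierPath(ovalIn: self.bounds)
        UIColor.blue.setFill()
        p.fill()
    }
}

// Putting an instance into the interface in code, e.g. in a view controller:
// let cv = CircleView(frame: CGRect(x: 20, y: 20, width: 100, height: 100))
// cv.isOpaque = false // otherwise the corners outside the circle come out black
// self.view.addSubview(cv)
```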

The need to draw in real time, on demand, surprises some beginners, who worry that drawing may be a time-consuming operation. This can indeed be a reasonable consideration, and where the same drawing will be used in many places in your interface, it may well make sense to construct a UIImage instead, once, and then reuse that UIImage by drawing it in a view’s draw(_:). In general, however, you should not optimize prematurely. The code for a drawing operation may appear verbose and yet be extremely fast. Moreover, the iOS drawing system is efficient; it doesn’t call draw(_:) unless it has to (or is told to, through a call to setNeedsDisplay), and once a view has drawn itself, the result is cached so that the cached drawing can be reused instead of repeating the drawing operation from scratch. (Apple refers to this cached drawing as the view’s bitmap backing store.) You can readily satisfy yourself of this fact with some caveman debugging, logging in your draw(_:) implementation; you may be amazed to discover that your custom UIView’s draw(_:) code is called only once in the entire lifetime of the app! In fact, moving code to draw(_:) is commonly a way to increase efficiency. This is because it is more efficient for the drawing engine to render directly onto the screen than for it to render offscreen and then copy those pixels onto the screen.

Where drawing is extensive and can be compartmentalized into sections, you may be able to gain some additional efficiency by paying attention to the parameter passed into draw(_:). This parameter is a CGRect designating the region of the view’s bounds that needs refreshing. Normally, this is the view’s entire bounds; but if you call setNeedsDisplay(_:), which takes a CGRect parameter, it will be the CGRect that you passed in as argument. You could respond by drawing only what goes into those bounds; but even if you don’t, your drawing will be clipped to those bounds, so, while you may not spend less time drawing, the system will draw more efficiently.
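Here’s a sketch of that idea for a hypothetical checkerboard view. The square-selecting logic is pulled out into a plain function, checkerSquares(in:cell:dirty:), so it can be reasoned about on its own; draw(_:) fills only the squares that intersect the rect it receives.

```swift
import UIKit

// Hypothetical helper: the dark squares of a checkerboard of `cell`-sized
// squares covering `bounds`, limited to those that intersect `dirty`.
func checkerSquares(in bounds: CGRect, cell: CGFloat, dirty: CGRect) -> [CGRect] {
    var result = [CGRect]()
    let cols = Int((bounds.width / cell).rounded(.up))
    let rows = Int((bounds.height / cell).rounded(.up))
    for row in 0..<rows {
        for col in 0..<cols where (row + col) % 2 == 0 {
            let square = CGRect(x: bounds.minX + CGFloat(col) * cell,
                                y: bounds.minY + CGFloat(row) * cell,
                                width: cell, height: cell)
            if square.intersects(dirty) { // skip squares outside the region being refreshed
                result.append(square)
            }
        }
    }
    return result
}

class CheckerView: UIView {
    override func draw(_ rect: CGRect) {
        UIColor.darkGray.setFill()
        for square in checkerSquares(in: self.bounds, cell: 20, dirty: rect) {
            UIRectFill(square)
        }
    }
}

// Elsewhere, to refresh just the top-left 40-by-40 region:
// checkerView.setNeedsDisplay(CGRect(x: 0, y: 0, width: 40, height: 40))
```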

When a custom UIView subclass has a draw(_:) implementation and you create an instance of this subclass in code, you may be surprised (and annoyed) to find that the view has a black background! This can be frustrating if what you expected and wanted was a transparent background, and it’s a source of considerable confusion among beginners. The black background arises when two things are true:

- The view’s backgroundColor is nil.
- The view’s isOpaque is true.

Unfortunately, when creating a UIView in code, both those things are true by default! So if you don’t want the black background, you must do something about one or the other of them (or both). If this view isn’t going to be opaque, then, this being your own UIView subclass, you could implement its init(frame:) (the designated initializer) to have the view set its own isOpaque to false:

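class MyView : UIView {
    override init(frame: CGRect) {
        super.init(frame:frame)
        self.isOpaque = false
    }
    // overriding init(frame:) obliges us to supply UIView's required init(coder:) too
    required init?(coder aDecoder: NSCoder) {
        super.init(coder: aDecoder)
    }
    // ...
}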

With a UIView created in the nib, on the other hand, the black background problem doesn’t arise. This is because such a UIView’s backgroundColor is not nil. The nib assigns it some actual background color, even if that color is UIColor.clear.

On the other other hand, if a view fills its rectangle with opaque drawing or has an opaque background color, you can leave isOpaque set to true and gain some drawing efficiency (see Chapter 1).

Warning

You should never call draw(_:) yourself! If a view needs updating and you want its draw(_:) called, send the view the setNeedsDisplay message. This will cause draw(_:) to be called at the next proper moment. Also, don’t override draw(_:) unless you are assured that this is legal. For example, it is not legal to override draw(_:) in a subclass of UIImageView; you cannot combine your drawing with that of the UIImageView. Finally, do not do anything in draw(_:) except draw! Other configuration, such as setting the view’s background color, or giving it subviews or sublayers, should be performed elsewhere, such as its initializer override.
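Putting those rules together, here’s a sketch of a hypothetical BadgeView: its configuration (isOpaque) happens in its initializers, its draw(_:) does nothing but draw, and changing its fillColor property triggers a redraw through setNeedsDisplay rather than a direct call to draw(_:).

```swift
import UIKit

// Hypothetical view whose appearance depends on a property.
class BadgeView: UIView {
    var fillColor: UIColor = .red {
        didSet { self.setNeedsDisplay() } // redraw at the next proper moment
    }

    override init(frame: CGRect) {
        super.init(frame: frame)
        self.isOpaque = false // configuration belongs here, not in draw(_:)
    }
    required init?(coder aDecoder: NSCoder) {
        super.init(coder: aDecoder)
        self.isOpaque = false
    }

    override func draw(_ rect: CGRect) {
        // Nothing but drawing in here.
        self.fillColor.setFill()
        UIBezierPath(ovalIn: self.bounds).fill()
    }
}
```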

Graphics Context Commands

Whenever you draw, you are giving commands to the graphics context into which you are drawing. This is true regardless of whether you use UIKit methods or Core Graphics functions. Thus, learning to draw is really a matter of understanding how a graphics context works. That’s what this section is about.

Under the hood, Core Graphics commands to a graphics context are global C functions with names like CGContextSetFillColor; but in Swift 3, “renamification” recasts them as if a CGContext were a genuine object representing the graphics context, and the commands were methods sent to it. Moreover, thanks to Swift overloading, multiple functions are collapsed into a single command; thus, for example, CGContextSetFillColor and CGContextSetFillColorWithColor and CGContextSetRGBFillColor and CGContextSetGrayFillColor all become setFillColor.
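For example, here are three of the C fill-color functions and their Swift 3 counterparts, all spelled setFillColor and distinguished by their parameters. This is a sketch inside an image renderer, with the original C function named in a comment above each call.

```swift
import UIKit

let im = UIGraphicsImageRenderer(size: CGSize(width: 60, height: 20)).image { _ in
    let con = UIGraphicsGetCurrentContext()!
    // C: CGContextSetFillColorWithColor
    con.setFillColor(UIColor.red.cgColor)
    con.fill(CGRect(x: 0, y: 0, width: 20, height: 20))
    // C: CGContextSetRGBFillColor
    con.setFillColor(red: 0, green: 1, blue: 0, alpha: 1)
    con.fill(CGRect(x: 20, y: 0, width: 20, height: 20))
    // C: CGContextSetGrayFillColor
    con.setFillColor(gray: 0.5, alpha: 1)
    con.fill(CGRect(x: 40, y: 0, width: 20, height: 20))
}
```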

Graphics Context Settings

As you draw in a graphics context, the drawing obeys the context’s current settings. Thus, the procedure is always to configure the context’s settings first, and then draw. For example, to draw a red line followed by a blue line, you would first set the context’s line color to red, and then draw the first line; then you’d set the context’s line color to blue, and then draw the second line. To the eye, it appears that the redness and blueness are properties of the individual lines, but in fact, at the time you draw each line, line color is a feature of the entire graphics context.

A graphics context thus has, at every moment, a state, which is the sum total of all its settings; the way a piece of drawing looks is the result of what the graphics context’s state was at the moment that piece of drawing was performed. To help you manipulate entire states, the graphics context provides a stack for holding states. Every time you call saveGState, the context pushes the entire current state onto the stack; every time you call restoreGState, the context retrieves the state from the top of the stack (the state that was most recently pushed) and sets itself to that state.

Thus, a common pattern is:

- Call saveGState.

- Manipulate the context’s settings, thus changing its state.

- Draw.

- Call restoreGState to restore the state and the settings to what they were before you manipulated them.

You do not have to do this before every manipulation of a context’s settings, however, because settings don’t necessarily conflict with one another or with past settings. You can set the context’s line color to red and then later to blue without any difficulty. But in certain situations you do want your manipulation of settings to be undoable, and I’ll point out several such situations later in this chapter.
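The save/manipulate/draw/restore pattern might look like the following sketch. The function `drawShadowedSquare(in:)` is a hypothetical name, and UIGraphicsImageRenderer is assumed only as a convenient way to get a context. The shadow and red fill apply to the first square. After restoreGState, the second square is drawn with the earlier settings: no shadow and the default black fill.

```swift
import UIKit

// Hypothetical helper illustrating the save / manipulate / draw / restore pattern.
func drawShadowedSquare(in con: CGContext) {
    con.saveGState()                                   // push the current state
    con.setShadow(offset: CGSize(width: 5, height: 5), blur: 3,
                  color: UIColor.gray.cgColor)         // change settings
    con.setFillColor(UIColor.red.cgColor)
    con.fill(CGRect(x: 10, y: 10, width: 40, height: 40)) // draw
    con.restoreGState()                                // pop: shadow and red fill are gone
    // This square uses the earlier state again (no shadow, default black fill).
    con.fill(CGRect(x: 60, y: 10, width: 40, height: 40))
}

let img = UIGraphicsImageRenderer(size: CGSize(width: 110, height: 60)).image { ctx in
    drawShadowedSquare(in: ctx.cgContext)
}
```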

Many of the settings that constitute a graphics context’s state, and that determine the behavior and appearance of drawing performed at that moment, are similar to those of any drawing application. Here are some of them, along with some of the commands that determine them (and some UIKit properties and methods that call them):

- Line thickness and dash style: setLineWidth(_:), setLineDash(phase:lengths:) (and UIBezierPath lineWidth, setLineDash(_:count:phase:))

- Line end-cap style and join style: setLineCap(_:), setLineJoin(_:), setMiterLimit(_:) (and UIBezierPath lineCapStyle, lineJoinStyle, miterLimit)

- Line color or pattern: setStrokeColor(_:), setStrokePattern(_:colorComponents:) (and UIColor setStroke)

- Fill color or pattern: setFillColor(_:), setFillPattern(_:colorComponents:) (and UIColor setFill)

- Shadow: setShadow(offset:blur:color:)

- Overall transparency and compositing: setAlpha(_:), setBlendMode(_:)

- Anti-aliasing: setShouldAntialias(_:)
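As a rough sketch of how some of these settings pair up, the following strokes a dashed, round-capped line twice. The first time uses CGContext commands, and the second uses the corresponding UIBezierPath properties and UIColor method. UIGraphicsImageRenderer is assumed as the source of the context. The coordinates and colors are arbitrary.

```swift
import UIKit

let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 100)).image { ctx in
    let con = ctx.cgContext
    // Core Graphics: configure the context, then stroke
    con.setLineWidth(8)
    con.setLineCap(.round)
    con.setLineDash(phase: 0, lengths: [20, 12])
    con.setStrokeColor(UIColor.blue.cgColor)
    con.move(to: CGPoint(x: 10, y: 30))
    con.addLine(to: CGPoint(x: 190, y: 30))
    con.strokePath()
    // UIKit: the path carries its own line settings
    let p = UIBezierPath()
    p.move(to: CGPoint(x: 10, y: 70))
    p.addLine(to: CGPoint(x: 190, y: 70))
    p.lineWidth = 8
    p.lineCapStyle = .round
    let dashes: [CGFloat] = [20, 12]
    p.setLineDash(dashes, count: dashes.count, phase: 0)
    UIColor.red.setStroke()
    p.stroke()
}
```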

Additional settings include:

- Clipping area: Drawing outside the clipping area is not physically drawn.

- Transform (or “CTM,” for “current transform matrix”): Changes how points that you specify in subsequent drawing commands are mapped onto the physical space of the canvas.

Many of these settings will be illustrated by examples later in this chapter.
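Here is a brief sketch of the two additional settings, assuming UIGraphicsImageRenderer as the source of the context. The transform shifts the origin to the center and rotates by 45 degrees, so a square specified around (0, 0) appears as a centered diamond. saveGState and restoreGState then remove the transform. A rectangular clip limits the following red circle to the left half of the canvas.

```swift
import UIKit

let img = UIGraphicsImageRenderer(size: CGSize(width: 100, height: 100)).image { ctx in
    let con = ctx.cgContext
    con.saveGState()
    // Transform: later coordinates are interpreted in the shifted, rotated space
    con.translateBy(x: 50, y: 50)
    con.rotate(by: .pi / 4)
    con.fill(CGRect(x: -20, y: -20, width: 40, height: 40)) // a centered diamond
    con.restoreGState() // the transform is undone
    // Clipping: the red circle is painted only where it falls in the left half
    con.clip(to: CGRect(x: 0, y: 0, width: 50, height: 100))
    con.setFillColor(UIColor.red.cgColor)
    con.fillEllipse(in: CGRect(x: 30, y: 30, width: 40, height: 40))
}
```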

Paths and Shapes

By issuing a series of instructions for moving an imaginary pen, you construct a path, tracing it out from point to point. You must first tell the pen where to position itself, setting the current point; after that, you issue a series of commands telling it how to trace out each subsequent piece of the path. Each additional piece of the path starts at the current point; its end becomes the new current point.

Note that a path, in and of itself, does not constitute drawing! First you provide a path; then you draw. Drawing can mean stroking the path or filling the path, or both. Again, this should be a familiar notion from certain drawing applications.

Here are some path-drawing commands you’re likely to give:

- Position the current point: move(to:)

- Trace a line: addLine(to:), addLines(between:)

- Trace a rectangle: addRect(_:), addRects(_:)

- Trace an ellipse or circle: addEllipse(in:)

- Trace an arc: addArc(tangent1End:tangent2End:radius:)

- Trace a Bezier curve with one or two control points: addQuadCurve(to:control:), addCurve(to:control1:control2:)

- Close the current path: closePath. This appends a line from the last point of the path to the first point. There’s no need to do this if you’re about to fill the path, since it’s done for you.

- Stroke or fill the current path: strokePath, fillPath(using:), drawPath(using:). Stroking or filling the current path clears the path. Use drawPath(using:) if you want both to fill and to stroke the path in a single command, because if you merely stroke it first with strokePath, the path is cleared and you can no longer fill it.

There are also some convenience functions that create a path from a CGRect or similar and stroke or fill it all in a single move:

- stroke(_:), strokeLineSegments(between:)

- fill(_:)

- strokeEllipse(in:)

- fillEllipse(in:)
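To illustrate the convenience functions, here is a short sketch, assuming UIGraphicsImageRenderer as the context source. Each call constructs, paints, and clears its path in one step, so no move(to:) or strokePath is needed. strokeLineSegments(between:) treats its array as consecutive pairs of start and end points.

```swift
import UIKit

let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 100)).image { ctx in
    let con = ctx.cgContext
    // Fill a rectangle in one call
    con.setFillColor(UIColor.yellow.cgColor)
    con.fill(CGRect(x: 10, y: 10, width: 80, height: 80))
    // Stroke a circle in one call
    con.setStrokeColor(UIColor.blue.cgColor)
    con.setLineWidth(4)
    con.strokeEllipse(in: CGRect(x: 110, y: 10, width: 80, height: 80))
    // Two separate segments: (start, end), (start, end)
    con.strokeLineSegments(between: [
        CGPoint(x: 10, y: 95), CGPoint(x: 90, y: 95),
        CGPoint(x: 110, y: 95), CGPoint(x: 190, y: 95)
    ])
}
```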

A path can be compound, meaning that it consists of multiple independent pieces. For example, a single path might consist of two separate closed shapes: a rectangle and a circle. When you call move(to:) in the middle of constructing a path (that is, after tracing out a path and without clearing it by filling or stroking it), you pick up the imaginary pen and move it to a new location without tracing a segment, thus preparing to start an independent piece of the same path. If you’re worried, as you begin to trace out a path, that there might be an existing path and that your new path might be seen as a compound part of that existing path, you can call beginPath to specify that this is a different path; many of Apple’s examples do this, but in practice I usually do not find it necessary.
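Here is a sketch of a compound path. Two separate triangles are traced into one path, with move(to:) in the middle picking up the pen. A single drawPath(using: .fillStroke) then both fills and strokes the whole thing. UIGraphicsImageRenderer is assumed as the context source. The variable `pathWasCleared` is a hypothetical name that records isPathEmpty afterward, to confirm that drawing consumed the path.

```swift
import UIKit

var pathWasCleared = false
let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 100)).image { ctx in
    let con = ctx.cgContext
    con.beginPath() // make sure no earlier path is still pending
    // First piece of the path
    con.move(to: CGPoint(x: 10, y: 90))
    con.addLine(to: CGPoint(x: 50, y: 10))
    con.addLine(to: CGPoint(x: 90, y: 90))
    con.closePath()
    // Pick up the pen: a second, independent piece of the same path
    con.move(to: CGPoint(x: 110, y: 90))
    con.addLine(to: CGPoint(x: 150, y: 10))
    con.addLine(to: CGPoint(x: 190, y: 90))
    con.closePath()
    con.setFillColor(UIColor.green.cgColor)
    con.setStrokeColor(UIColor.black.cgColor)
    con.setLineWidth(3)
    // Fill and stroke both pieces with one command
    con.drawPath(using: .fillStroke)
    pathWasCleared = con.isPathEmpty
}
```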

To illustrate the typical use of path-drawing commands, I’ll generate the up-pointing arrow shown in Figure 2-16. This might not be the best way to create the arrow, and I’m deliberately avoiding use of the convenience functions, but it’s clear and shows a nice basic variety of typical commands:

// obtain the current graphics context
let con = UIGraphicsGetCurrentContext()!
// draw a black (by default) vertical line, the shaft of the arrow
con.move(to:CGPoint(x:100, y:100))
con.addLine(to:CGPoint(x:100, y:19))
con.setLineWidth(20)
con.strokePath()
// draw a red triangle, the point of the arrow
con.setFillColor(UIColor.red.cgColor)
con.move(to:CGPoint(x:80, y:25))
con.addLine(to:CGPoint(x:100, y:0))
con.addLine(to:CGPoint(x:120, y:25))
con.fillPath()
// snip a triangle out of the shaft by drawing in Clear blend mode
con.move(to:CGPoint(x:90, y:101))
con.addLine(to:CGPoint(x:100, y:90))
con.addLine(to:CGPoint(x:110, y:101))
con.setBlendMode(.clear)
con.fillPath()

Figure 2-16. A simple path drawing

If a path needs to be reused or shared, you can encapsulate it as a CGPath. In Swift 3, CGPath and its mutable partner CGMutablePath are treated as class types, and the global C functions that manipulate them are treated as methods. You can copy the graphics context’s current path using the CGContext path property, or you can create a new CGMutablePath and construct the path using methods such as move(to:transform:) and addLine(to:transform:), which parallel the CGContext path-construction commands. There are also ways to create a path based on simple geometry or on an existing path:

- init(rect:transform:)

- init(ellipseIn:transform:)

- init(roundedRect:cornerWidth:cornerHeight:transform:)

- copy(strokingWithWidth:lineCap:lineJoin:miterLimit:transform:)

- copy(dashingWithPhase:lengths:transform:)

- copy(using:) (takes a pointer to a CGAffineTransform)
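Here is a minimal sketch of building a CGMutablePath once and reusing it. The helper name `makeTriangle()` is hypothetical, and UIGraphicsImageRenderer is assumed as the context source. The triangle is filled once, then copied through copy(using:) with a translation and stroked. A second path comes from simple geometry via init(ellipseIn:transform:).

```swift
import UIKit

// Hypothetical helper: build the arrow's point once as a reusable path.
func makeTriangle() -> CGPath {
    let path = CGMutablePath()
    path.move(to: CGPoint(x: 80, y: 25))
    path.addLine(to: CGPoint(x: 100, y: 0))
    path.addLine(to: CGPoint(x: 120, y: 25))
    path.closeSubpath()
    return path
}

let triangle = makeTriangle()
// A path created from simple geometry
let circle = CGPath(ellipseIn: CGRect(x: 0, y: 0, width: 50, height: 50), transform: nil)

let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 60)).image { ctx in
    let con = ctx.cgContext
    con.addPath(triangle)
    con.setFillColor(UIColor.red.cgColor)
    con.fillPath()
    // Reuse the same path, shifted down 30 points, and stroke it this time
    var shift = CGAffineTransform(translationX: 0, y: 30)
    if let moved = triangle.copy(using: &shift) {
        con.addPath(moved)
        con.strokePath()
    }
    con.addPath(circle)
    con.strokePath()
}
```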

The UIKit class UIBezierPath is actually a wrapper for CGPath; the wrapped path is its cgPath property. It provides methods parallel to the CGContext and CGPath functions for constructing a path, such as:

- init(rect:)

- init(ovalIn:)

- init(roundedRect:cornerRadius:)

- move(to:)

- addLine(to:)

- addArc(withCenter:radius:startAngle:endAngle:clockwise:)

- addQuadCurve(to:controlPoint:)

- addCurve(to:controlPoint1:controlPoint2:)

- close

When you call the UIBezierPath instance methods fill or stroke or fill(with:alpha:) or stroke(with:alpha:), the current graphics context settings are saved, the wrapped CGPath is made the current graphics context’s path and stroked or filled, and the current graphics context settings are restored.
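The following sketch shows that save-and-restore behavior in practice. It assumes UIGraphicsImageRenderer as the context source. The .clear blend mode passed to fill(with:alpha:) affects only that one fill, so the later Core Graphics stroke draws normally. The same rounded rectangle is handed to Core Graphics through its cgPath property.

```swift
import UIKit

let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 100)).image { ctx in
    let con = ctx.cgContext
    // A rounded rectangle built with UIKit
    let rr = UIBezierPath(roundedRect: CGRect(x: 10, y: 10, width: 180, height: 80),
                          cornerRadius: 15)
    UIColor.orange.setFill()
    rr.fill()
    // The blend mode and alpha apply only to this fill; settings are restored afterward
    let hole = UIBezierPath(ovalIn: CGRect(x: 80, y: 30, width: 40, height: 40))
    hole.fill(with: .clear, alpha: 1.0)
    // The wrapped CGPath, used directly with Core Graphics
    con.addPath(rr.cgPath)
    con.setStrokeColor(UIColor.black.cgColor)
    con.setLineWidth(2)
    con.strokePath()
}
```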

Thus, using UIBezierPath together with UIColor, we could rewrite our arrow-drawing routine entirely with UIKit methods:

let p = UIBezierPath()
// shaft
p.move(to:CGPoint(x:100, y:100))
p.addLine(to:CGPoint(x:100, y:19))
p.lineWidth = 20
p.stroke()
// point
UIColor.red.set()
p.removeAllPoints()
p.move(to:CGPoint(x:80, y:25))
p.addLine(to:CGPoint(x:100, y:0))
p.addLine(to:CGPoint(x:120, y:25))
p.fill()
// snip
p.removeAllPoints()
p.move(to:CGPoint(x:90, y:101))
p.addLine(to:CGPoint(x:100, y:90))
p.addLine(to:CGPoint(x:110, y:101))
p.fill(with:.clear, alpha:1.0)

There’s no savings of code here over calling Core Graphics functions, so your choice of Core Graphics or UIKit is a matter of taste. UIBezierPath is also useful when you want to capture a CGPath and pass it around as an object; an example appears in Chapter 20. See also the discussion in Chapter 3 of CAShapeLayer, which takes a CGPath that you’ve constructed and draws it for you within its own bounds.

Clipping

A path can be used to mask out areas, protecting them from future drawing. This is called clipping. By default, a graphics context’s clipping region is the entire graphics context: you can draw anywhere within the context.

The clipping area is a feature of the context as a whole, and any new clipping area is applied by intersecting it with the existing clipping area; so if you apply your own clipping region, the way to remove it from the graphics context later is to plan ahead and wrap things with calls to saveGState and restoreGState.

To illustrate, I’ll rewrite the code that generated our original arrow (Figure 2-16) to use clipping instead of a blend mode to “punch out” the triangular notch in the tail of the arrow. This is a little tricky, because what we want to clip to is not the region inside the triangle but the region outside it. To express this, we’ll use a compound path consisting of more than one closed area — the triangle, and the drawing area as a whole (which we can obtain as the context’s boundingBoxOfClipPath).

Both when filling a compound path and when using it to express a clipping region, the system follows one of two rules:

- Winding rule: The fill or clipping area is denoted by an alternation in the direction (clockwise or counterclockwise) of the path demarcating each region.

- Even-odd rule (EO): The fill or clipping area is denoted by a simple count of the paths demarcating each region.
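The difference between the two rules can be checked with a sketch like this one. It draws into an offscreen Core Graphics bitmap context so the pixels can be read back. The function name `centerIsFilled(using:)` is hypothetical. Two concentric circles are added with addEllipse(in:), so both are traced in the same direction. Under the winding rule the inner region is filled. Under the even-odd rule it is crossed twice, so it is left empty and the result is a ring.

```swift
import CoreGraphics

// Hypothetical helper: fill two concentric circles under a rule,
// then report whether the center pixel was painted.
func centerIsFilled(using rule: CGPathFillRule) -> Bool {
    let size = 100
    guard let con = CGContext(data: nil, width: size, height: size,
                              bitsPerComponent: 8, bytesPerRow: 0,
                              space: CGColorSpaceCreateDeviceRGB(),
                              bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue)
    else { return false }
    con.clear(CGRect(x: 0, y: 0, width: size, height: size)) // start fully transparent
    // One compound path: an outer circle and an inner circle
    con.addEllipse(in: CGRect(x: 10, y: 10, width: 80, height: 80))
    con.addEllipse(in: CGRect(x: 30, y: 30, width: 40, height: 40))
    con.setFillColor(red: 0, green: 0, blue: 0, alpha: 1)
    con.fillPath(using: rule)
    // Read the alpha byte (RGBA order) of the center pixel
    guard let data = con.data else { return false }
    let bytesPerRow = con.bytesPerRow
    let pixels = data.bindMemory(to: UInt8.self, capacity: bytesPerRow * size)
    let offset = (size / 2) * bytesPerRow + (size / 2) * 4
    return pixels[offset + 3] != 0
}

print(centerIsFilled(using: .winding)) // true: the inner region is filled
print(centerIsFilled(using: .evenOdd)) // false: a ring with a hole in the middle
```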

Our situation is extremely simple, so it’s easier to use the even-odd rule:

// obtain the current graphics context
let con = UIGraphicsGetCurrentContext()!
// punch triangular hole in context clipping region
con.move(to:CGPoint(x:90, y:100))
con.addLine(to:CGPoint(x:100, y:90))
con.addLine(to:CGPoint(x:110, y:100))
con.closePath()
con.addRect(con.boundingBoxOfClipPath)
con.clip(using:.evenOdd)
// draw the vertical line
con.move(to:CGPoint(x:100, y:100))
con.addLine(to:CGPoint(x:100, y:19))
con.setLineWidth(20)
con.strokePath()
// draw the red triangle, the point of the arrow
con.setFillColor(UIColor.red.cgColor)
con.move(to:CGPoint(x:80, y:25))
con.addLine(to:CGPoint(x:100, y:0))
con.addLine(to:CGPoint(x:120, y:25))
con.fillPath()

The UIBezierPath clipping commands are usesEvenOddFillRule and addClip.
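Here is a sketch of the same notch clip expressed with those UIBezierPath commands. The function name `drawNotchedShaft(in:)` is hypothetical, and UIGraphicsImageRenderer is assumed only to supply a current context. Unlike the code above, the clip is wrapped in saveGState and restoreGState, so it is removed once the shaft has been drawn.

```swift
import UIKit

// Hypothetical function: the arrow shaft with its notch, clipped using UIBezierPath.
func drawNotchedShaft(in bounds: CGRect) {
    guard let con = UIGraphicsGetCurrentContext() else { return }
    con.saveGState() // so the clip can be undone afterward
    // Compound path: the notch triangle plus the whole drawing area
    let clipPath = UIBezierPath()
    clipPath.move(to: CGPoint(x: 90, y: 100))
    clipPath.addLine(to: CGPoint(x: 100, y: 90))
    clipPath.addLine(to: CGPoint(x: 110, y: 100))
    clipPath.close()
    clipPath.append(UIBezierPath(rect: bounds))
    clipPath.usesEvenOddFillRule = true
    clipPath.addClip() // everything except the triangle remains drawable
    // The shaft
    let shaft = UIBezierPath()
    shaft.move(to: CGPoint(x: 100, y: 100))
    shaft.addLine(to: CGPoint(x: 100, y: 19))
    shaft.lineWidth = 20
    UIColor.black.setStroke()
    shaft.stroke()
    con.restoreGState() // the clipping region is back to what it was
}

let img = UIGraphicsImageRenderer(size: CGSize(width: 200, height: 120)).image { _ in
    drawNotchedShaft(in: CGRect(x: 0, y: 0, width: 200, height: 120))
}
```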

Gradients

Gradients can range from the simple to the complex. A simple gradient (which is all I’ll describe here) is determined by a color at one endpoint along with a color at the other endpoint, plus (optionally) colors at intermediate points; the gradient is then painted either linearly between two points or radially between two circles.

You can’t use a gradient as a path’s fill color, but you can restrict a gradient to a path’s shape by clipping, which amounts to the same thing.
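As a sketch of clipping a gradient to a shape, here is the radial variant; the example that follows uses a linear one. The colors, sizes, and the use of UIGraphicsImageRenderer are illustrative assumptions. Red sits at location 0 and blue at location 1. The gradient is painted between a circle of radius 0 and a circle of radius 40 at the same center, and a circular clip restricts it to that shape.

```swift
import UIKit

let img = UIGraphicsImageRenderer(size: CGSize(width: 100, height: 100)).image { ctx in
    let con = ctx.cgContext
    // Colors at the two endpoints
    let colors = [UIColor.red.cgColor, UIColor.blue.cgColor] as CFArray
    let locations: [CGFloat] = [0, 1]
    guard let grad = CGGradient(colorsSpace: CGColorSpaceCreateDeviceRGB(),
                                colors: colors, locations: locations) else { return }
    con.saveGState()
    // Restrict the gradient to a circle by clipping
    con.addEllipse(in: CGRect(x: 10, y: 10, width: 80, height: 80))
    con.clip()
    // Radial: painted between two circles sharing a center
    let center = CGPoint(x: 50, y: 50)
    con.drawRadialGradient(grad, startCenter: center, startRadius: 0,
                           endCenter: center, endRadius: 40, options: [])
    con.restoreGState() // remove the clip
}
```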

To illustrate, I’ll redraw our arrow, using a linear gradient as the “shaft” of the arrow (Figure 2-17):

// obtain the current graphics context
let con = UIGraphicsGetCurrentContext()!
con.saveGState()
// punch triangular hole in context clipping region
con.move(to:CGPoint(x:10, y:100))
con.addLine(to:CGPoint(x:20, y:90))
con.addLine(to:CGPoint(x:30, y:100))
con.closePath()
con.addRect(con.boundingBoxOfClipPath)
con.clip(using: .evenOdd)
// draw the vertical line, add its shape to the clipping region
con.move(to:CGPoint(x:20, y:100))
con.addLine(to:CGPoint(x:20, y:19))
con.setLineWidth(20)
con.replacePathWithStrokedPath()
con.clip()
// draw the gradient