Chapter 6. What Does a Validated Hypothesis Look Like?

At first people would tell me, “That’s a good idea,” and I would get excited. But after doing a few interviews, I would see some people have an a-ha moment and start talking and talking excitedly. The more interviews I did, the more I felt I could tell the difference between people who were trying to be nice and people who really had a problem that I could solve.

—Bartosz Malutko, Starters CEO

Now that you’ve started doing customer development interviews, you’re probably impatiently awaiting some answers. You created focused and falsifiable hypotheses, you found people to talk to, and you were disciplined in asking open-ended questions and letting the customer be the expert. Now is the time to start taking what you’ve learned and using it to guide your decisions.

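In this chapter, we’ll talk through how to get reliable answers from your interview responses. You’ll learn why you should be a temporary pessimist, when to distrust what you’re hearing, and how to reduce bias. We’ll also cover how to keep organized notes and share them with your team, how many interviews you need, and what a validated hypothesis looks like.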

What does validating a hypothesis mean? Does it mean you have a guaranteed product success? Does it mean that you cannot build anything until you’ve completed enough interviews? Does it mean that you can stop doing customer development now?

No, no, and no.

Validation simply means that you’re confident enough to continue investing time and effort in this direction. It doesn’t mean that you need to wait until you’ve completed 10, 15, or 20 interviews before writing a line of code! Customer development happens in parallel with product development, which means that you may be starting to create an MVP at any point in your interview timeline. It also means that you should be continuing to talk to customers even after you’ve started building your product or service.

Maintaining a Healthy Skepticism

Customer development interviews are subjective, and that’s a challenge: it’s easy to hear what you want to hear. Without even meaning to, most people focus on the positives. We hear the word maybe, we see a smile, and we ignore the hesitation or tense body language.

It’s all too easy to interpret responses to prove your hypotheses right even if they aren’t. If you do that, you’ve wasted a ton of time. Let’s avoid that fate.

Are They Telling You What You Want to Hear?

What will you hear when people are too eager to please? There’s often a difference between politeness and honesty. You have to learn to read between the lines, and the subtext of the conversation can be slightly different depending on whether your product is aimed at consumers or at businesses.

Generally speaking, consumers don’t want to disappoint you. If you’ve done a good job in building rapport and sounding human, your interviewee wants to cooperate and make you happy. She may say that she’s experiencing a problem or behaving in a certain way even though it’s not true. She may say that she would pay for a solution that allowed her to accomplish something, but in reality she would never hand over her credit card.

For those of you who work with enterprise products, this takes on a different tone. Your interviewee may feel that being professional means valuing politeness over honesty. He may think he is being diplomatic by agreeing that something is possible when he knows it’s highly improbable. He may also believe that these conversations are an invitation to verbal horse-trading: by agreeing with you in one area, he may feel that he has earned the right to ask for something that he wants later.

In both of these situations, you may hear responses that align a little too perfectly with what you were hoping to hear. When you hear an interviewee say something that confirms your assumptions, don’t stop there. Be skeptical.

I often say, “If someone says maybe, write it down as no.”

Is the Customer Saying Something Real or Aspirational?

It’s not enough for someone to say that she wants something—a product, a service, the ability to accomplish a task. It’s free and easy to want.

Actually changing behaviors, spending money, or learning something new has a cost. You need to figure out the difference between want and will, and uncovering that difference requires discipline in how you talk to your target customers. If you ask a question and the person says “yes,” that carries significantly less weight than if he brought up the subject unprompted.

In Chapter 4, I talked about the importance of asking open-ended questions. It’s almost always better to avoid yes-or-no questions entirely, but in the beginning that’s a hard habit to form. You’ll slip up occasionally and ask something like “Would you do X?” or “Would you like X?” That’s fine, as long as you recognize that you can’t take the customer’s response at face value. You need to challenge it.

Years ago, I worked with a large bank on some in-person customer research. An executive with the bank insisted on asking the question “Are you concerned with the security of your financial information?” I pointed out that no one would ever say “no” to that question, but to no avail. As I predicted, all 10 people I spoke with answered that, of course, they were very concerned with the security and privacy of their financial information. As one of them was leaving, I thanked him and said, “In order to get your $50 gratuity, I’ll need you to write down your mother’s maiden name and Social Security number.” Without hesitation, the man grabbed a ballpoint pen and reached for my sheet of paper. I stopped him before he could write anything, but my point was made. Very concerned about security... until $50 was on the line.

A better way to phrase that initial question would have been to ask about existing behavior, such as “What actions do you believe people can take to keep their financial information secure?” If you’ve already asked a yes-or-no question and gotten a “yes,” you’ll want to use a more open-ended question in subsequent interviews.

A reliable answer should include some tangible actions, such as “choose secure passwords” or “always log out after using a bank website.” In contrast, a response like “I don’t know” or “I always read about someone hacking into somewhere and getting credit card numbers, but there’s really nothing you can do to stop that” suggests that the stated concern isn’t backed by any action.

Even if you’ve phrased your questions well, sometimes customers will sound extremely enthusiastic even though they’re unlikely to buy or use your product. I experienced this firsthand when I ran design and research for a personal finance product. When I did customer interviews to select people for our beta program, almost everyone was passionate about problems with managing their money. They described their frustrations in detail. When they saw a demo, they loved the idea of the product. Most said that they would definitely use such a product, even pay for it. And yet somehow, we didn’t end up with anything approaching 100% usage. (You’re shocked, I know.)

Over time, I began to see differences in the specific words people used. The people who became customers used a more active voice and described specifics; others used a more passive voice and talked in hypothetical terms. If you’ve taken verbatim notes, look for patterns like those in Table 6-1.

| People who became customers said | Noncustomers said |
|---|---|
| I’ve already tried... Here’s how I do... | Plan on doing... Haven’t tried yet... Keep meaning to... |
| I need to be able to do [task] faster/better because... Here are the things making it difficult for me to do [task] currently... | [Task] is impossible... I just don’t know how anyone does [task]... |
| This would help me achieve [goal]... If I had this, here’s what I could do... | I wish I had... It’d be interesting to see... Well, once I had it, I’d be able to figure out how to use it... |
| Right now... | Soon... As soon as [some event happens]... Almost ready to... |
| Here’s how I do... | I don’t! I really should do... |
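If your verbatim notes are already in a text file, a quick keyword pass can flag which quotes lean toward current behavior and which lean toward aspiration before you reread them carefully. The following is a minimal Python sketch under stated assumptions: the cue lists are a hypothetical selection of phrases from Table 6-1, matching is naive lowercase substring search, and the function name `tag_quote` is invented for illustration. Treat it as a triage aid, not a substitute for reading the notes.

```python
# Hypothetical cue lists drawn from Table 6-1; illustrative, not exhaustive.
ACTIVE_CUES = ["i've already tried", "here's how i do", "right now",
               "i need to be able to", "if i had this"]
ASPIRATIONAL_CUES = ["plan on", "haven't tried yet", "keep meaning to",
                     "i wish i had", "it'd be interesting", "soon",
                     "as soon as", "almost ready to", "i really should"]


def tag_quote(quote: str) -> str:
    """Label a verbatim quote as 'active', 'aspirational', 'mixed', or 'unclear'."""
    # Lowercase and normalize curly apostrophes so the cues match.
    text = quote.lower().replace("\u2019", "'")
    active = any(cue in text for cue in ACTIVE_CUES)
    aspirational = any(cue in text for cue in ASPIRATIONAL_CUES)
    if active and aspirational:
        return "mixed"
    if active:
        return "active"
    if aspirational:
        return "aspirational"
    return "unclear"


print(tag_quote("I’ve already tried three budgeting apps."))
print(tag_quote("I keep meaning to set up a budget."))
print(tag_quote("Right now I export to a spreadsheet, but I really should automate it."))
print(tag_quote("That’s a good idea."))
```

The sketch returns “mixed” instead of picking a winner because a quote that describes current behavior and an aspiration in the same breath deserves a closer human look. A polite “That’s a good idea” comes back as “unclear,” which is exactly the kind of answer this chapter warns you not to count as validation.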

It turned out I was hearing from a lot of people who were smiling and passionate and talking about what they wished they would do. Managing your finances well is one of those goals, like losing 10 pounds or flossing daily, which everyone feels they ought to do.

The best predictor of future behavior is current behavior. Focusing on specific examples of current behavior is the best way to defuse aspirational speak. If an interviewee talks about wanting to lose 10 pounds, ask what exercise she’s done in the past week. If someone talks about wanting to streamline the project management process, ask which meetings or bottlenecks she’s eliminated so far.

If your interview notes contain lots of exclamation points but little real-world behavioral evidence, you’re not validating your hypothesis. We’ll talk more about validating (or invalidating) your hypothesis later in this chapter. Remember that the most important thing is to do one or the other quickly. If your hypothesis is invalid, the sooner you know that, the sooner you can adjust it and make progress. If you validate your hypothesis quickly, you can get on with building an MVP (which I’ll cover in more detail in Chapter 7).

But before we get too far ahead of ourselves, let’s talk about your notes, which will help you in validating or invalidating your hypothesis.

Keeping Organized Notes

When I was at KISSmetrics, consolidating customer development insights across the team was one of my highest priorities. I averaged 10–15 customer interviews per week (and some weeks, many more). The founders typically had as many conversations (though rarely as structured) with customers. As the team grew, customer support and sales engineering also talked with customers daily. That’s a lot of notes to keep straight!

Keeping track of that much information is tricky enough with just one person. And ideally, many if not all of the people building products on your team are participating in customer development.

I quickly learned that if you’ve taken enough notes to accurately capture what someone said in a 20-minute interview, you’ve got more words than you can effectively share with your team. It’s impossible for every person to read every word of customer development notes. Having some structure around summarizing and maintaining notes is critical.

Keeping Your Notes in One File

For projects where I am the primary interviewer, I keep a single Word document with all of the notes from all customer interviews. Right before I talk to a new person, I add a few blank lines, type in the person’s name and company in bold, and copy and paste my simple interview template (which was presented in Chapter 5).

If you have multiple people interviewing, Google Docs is a better choice because it supports co-editing. You won’t need to worry about accidentally overwriting each other’s notes if two or more people are conducting interviews at the same time.

Having all of your notes in a single document makes it easier to search later on. If you remember that someone previously mentioned “email integration,” you could search for that phrase instead of having to remember who said it. It’s also easy to see the number of times a specific word or product name was used. You can do this across multiple documents, but it’s much slower.
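Keeping everything in one file also makes simple counts easy to script. The following Python sketch assumes, hypothetically, that you export your notes to plain text and start each interview with a line such as `Interview: Joanne, Example Co`; the header convention and the function names are illustrative and not part of any tool.

```python
import re
from collections import Counter

# Hypothetical convention: each interview begins with a line like
# "Interview: Joanne, Example Co". Adjust the pattern to match your notes.
HEADER = re.compile(r"^Interview:[ \t]*(.+)$", re.MULTILINE)


def split_interviews(notes: str) -> dict[str, str]:
    """Map each interview header to the notes text that follows it."""
    headers = list(HEADER.finditer(notes))
    interviews = {}
    for i, match in enumerate(headers):
        # Each interview runs until the next header (or the end of the file).
        end = headers[i + 1].start() if i + 1 < len(headers) else len(notes)
        interviews[match.group(1).strip()] = notes[match.end():end]
    return interviews


def phrase_mentions(notes: str, phrases: list[str]) -> dict[str, Counter]:
    """For each phrase, count case-insensitive mentions per interviewee."""
    interviews = split_interviews(notes)
    result = {}
    for phrase in phrases:
        pattern = re.compile(re.escape(phrase), re.IGNORECASE)
        counts = Counter()
        for person, text in interviews.items():
            hits = len(pattern.findall(text))
            if hits:
                counts[person] = hits
        result[phrase] = counts
    return result


notes = """Interview: Joanne, Example Co
Wants email integration. Email integration came up twice.
Interview: Sam, Other Inc
Uses Google Analytics daily.
"""
print(phrase_mentions(notes, ["email integration", "Google Analytics"]))
```

In this made-up example, “email integration” is attributed twice to Joanne and “Google Analytics” once to Sam, so you can see both how often a phrase came up and who said it without remembering the conversation.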

Some people I’ve worked with recommend Evernote for customer development. One advantage of Evernote is its availability across multiple platforms. If you think of something later, you can look it up on your phone or iPad and make a quick note about it. But personally, I use Word or Google Docs.

Creating a Summary

I use a second Word document when creating summaries. Using a separate document for summaries makes it easier to skim and look for patterns. This document is lightweight enough that I can share it with my team without burying them in information.

The first step is to summarize what you learned from each interview. But don’t stop there. Don’t simply shorten what the interviewee told you; draw conclusions and recommend next steps. Customer development is only effective if it drives your team to focus and avoid spending time on ineffective activities.

If the customer disagrees with the team’s beliefs, a summary document probably won’t be enough to convince people to change their minds. This is why I emphasized the practice of pair interviewing in Chapter 5. A team member who has participated in a few interviews, even if only as a note-taker, will be more receptive to summarized feedback. Pull out the full version of your notes for people who need more proof of what customers are saying, and see if you can persuade some of those folks to act as note-takers in future interviews.

As you remember from Chapter 5, the most important areas to capture in note-taking are:

Something that validates your hypotheses

Something that invalidates your hypotheses

Anything that takes you by surprise

Anything full of emotion

Those areas are where most of your learning will happen. When I’m summarizing, I force myself to boil each interview down to five to seven bullet points containing the most interesting information. This makes me prioritize what I heard and pick out the most valuable things I learned from each interview.

When you have multiple interviewers, each person can prioritize her own notes into a total of five to seven bullets for three sections: Validates, Invalidates, and Also Interesting.

If you’re not sure how to prioritize, let the interviewee’s emotion be the deciding factor. A matter-of-fact statement that supports one of your assumptions is interesting, but not nearly as interesting as an enthusiastic five-minute tangent on a competitor’s product.

Here’s a five-point summary from a KISSmetrics interview:

Joanne, marketing director, midsized company

- Validates

“I use Google Analytics but it leaves me with more questions than it answers, honestly.” (We assumed that our customers already use Google Analytics but have outgrown it.)

Analytics is a lower priority because developers are working on product features, so she feels like she’s working in the dark. (As we thought, setting up the product only happens if it requires minimal developer resources.)

- Invalidates

No online marketing efforts at all! “Knowing where people came from before they got to my site—well, it doesn’t affect me, right? So I’m not sure why I’d need to know.” (We’d assumed that market attribution was critical to our customers—this invalidates that assumption!)

- Also interesting

Long tangent on customer support—others in the company don’t see some of the product problems because they don’t have access to support emails that make the problem obvious.

Interested in early beta access; willing to pay a developer to help with install.
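If your team keeps summaries somewhere they can be checked automatically, the three-section format and the five-to-seven-bullet limit map onto a small record. This is a hypothetical Python sketch; the class and method names are invented for illustration, and the bullets paraphrase the Joanne example.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewSummary:
    """One interview boiled down to Validates / Invalidates / Also interesting."""
    interviewee: str
    validates: list[str] = field(default_factory=list)
    invalidates: list[str] = field(default_factory=list)
    also_interesting: list[str] = field(default_factory=list)

    def bullet_count(self) -> int:
        return len(self.validates) + len(self.invalidates) + len(self.also_interesting)

    def within_budget(self, low: int = 5, high: int = 7) -> bool:
        """True if the summary keeps to the five-to-seven-bullet limit."""
        return low <= self.bullet_count() <= high

    def to_text(self) -> str:
        """Render the summary as a name followed by titled sections of bullets."""
        lines = [self.interviewee]
        sections = (("Validates", self.validates),
                    ("Invalidates", self.invalidates),
                    ("Also interesting", self.also_interesting))
        for title, bullets in sections:
            if bullets:  # skip empty sections
                lines.append(f"- {title}")
                lines.extend(f"  {bullet}" for bullet in bullets)
        return "\n".join(lines)


joanne = InterviewSummary(
    "Joanne, marketing director, midsized company",
    validates=["Uses Google Analytics but has outgrown it",
               "Analytics loses out to product features for developer time"],
    invalidates=["No online marketing efforts, so attribution doesn't matter to her"],
    also_interesting=["Long tangent on customer support visibility",
                      "Wants early beta access; will pay a developer to install"],
)
print(joanne.bullet_count(), joanne.within_budget())
print(joanne.to_text())
```

Joanne’s summary has two Validates bullets, one Invalidates bullet, and two Also interesting bullets, so it comes to five and passes the check. The limit is a forcing function for prioritizing, not a rule to satisfy mechanically.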

Rallying the Team Around New Information

The best way to ensure that your customer development efforts improve your product development efforts is to maximize the number of people on your team who talk to customers. Those people will organically bring that customer knowledge into their day-to-day decisions around product scope, implementation details, design details, and marketing.

Pair interviewing is one valuable technique for bringing more people into the customer’s world. Another technique is to invite a large group of people to help categorize customer feedback. At Yammer, we typically do this using sticky notes in a large physical space. This requires retranscribing feedback, which takes extra time. However, the act of moving around physical sticky notes encourages people to discuss and react to the feedback and helps it to sink in more effectively (Figure 6-1).

Minds don’t change immediately, though. Even when someone sees her assumptions invalidated firsthand, it takes time and repetition to turn that new information into a course correction.

Your job is to help your team to see the world through the eyes of the customer and make the necessary changes to stay focused and avoid wasted effort. Here are some tips to help you share what you’re learning effectively:

- Sell what you’re learning

What you’re learning is critical. You’re telling a story that may save or doom your product, so frame these insights with the importance they deserve. Don’t send out an email with a boring subject line like “Customer interview summaries” unless you want people to tune you out.

- They lack context: supply it

One of the biggest challenges in reporting back to your team is remembering that they lack context. Once you’ve heard multiple customers share how they behave and what they believe, it’s hard to remember not understanding their situations. This isn’t unique to customer development; it goes back to the curse of knowledge mentioned in Chapter 5. The curse of knowledge blinds you to the realization that you need to explain each step that brought you closer to a conclusion. For people who didn’t participate in the conversation, it isn’t immediately obvious which comments, body language, or questions are significant.

- Encourage questions (don’t jump to recommendations)

Encourage questions and discussion. Jumping directly to your recommendations or opinions effectively shuts your team out of the loop. If others don’t feel that they’re participating in the customer development process, they may start to subtly resist it. The first step to getting people to participate? Make sure they’re listening.

- Be where the decisions are made

Customer development insights should turn into action items and decisions. It’s better to share information for five minutes during a product scope or prioritization meeting than to hold an hour-long meeting where people listen and no one takes action.

Set up a regular schedule for sharing what you’ve learned. How often should that be?

It depends. Use the frequency that makes sense for the size and velocity of your company. Let me tell you what worked for me in various settings.

At KISSmetrics, I typically consolidated and shared customer development feedback just before our weekly product prioritization meetings. In a typical week, I might have completed 5–10 interviews, each of which I summarized as described in the previous section.

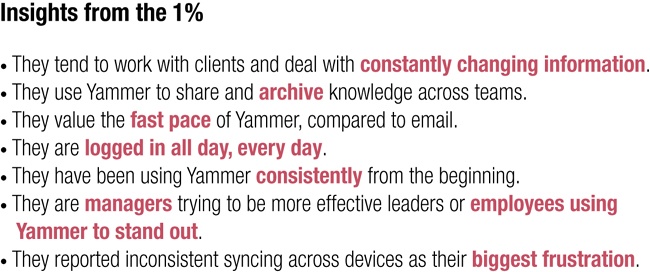

At Yammer, those of us doing customer development post our interview notes to our internal Yammer network as soon as the interview is completed. Everyone on the project team can read the interview notes immediately and ask questions. To spread information across product teams, we also share summaries at a monthly meeting to discuss user research and analytics updates. Those meetings are more conversational, so we present lightweight slide decks with just enough information to spur a conversation (see Figure 6-2).

How Many Interviews Do You Need?

Short answer 1: It depends.

Short answer 2: 15–20.

I’m not sure that either of these short answers is really helpful, but I’d hate to have you skim the next few pages looking for a real answer. So let’s start with these answers, and I’ll explain more about what you’ll encounter along the way.

After two interviews, look at your questions and notes and adapt your interview.

After five interviews, you should have encountered at least one excited person.

After 10 interviews, you should see patterns in the responses you’re getting.

How many interviews are enough?

After enough interviews, you’ll know it.[49] You’ll no longer hear things that surprise you.

In the following sections, I’ll expand on these answers.

After Two Interviews: Are You Learning What You Need to Learn?

In Chapter 5, we talked about taking a few minutes after your first customer interview to figure out which elements were most successful. Most likely, the first changes you made to your interviews related to your tone and the phrasing of your questions.

Once you’ve completed two or three interviews, you’ll have to make a more critical assessment. Are you learning what you need to learn?

Components of a validated hypothesis

A validated hypothesis typically has four components, expressed enthusiastically:

The customer confirms that there is definitely a problem or pain point

The customer believes that the problem can and should be resolved

The customer has actively invested (effort, time, money, learning curve) in trying to solve this problem

The customer doesn’t have circumstances beyond his control that prevent him from trying to fix the problem or pain point[50]

What each interview should tell you

When you reread your notes from an interview, you need to feel confident that you understood the depth of this person’s pain point and the level of her motivation to fix it.

You should be able to confidently answer questions like:

If I had a product today that completely solved this customer’s problem, do I see any obstacles that would prevent her from buying or using it?

How would she use it and fit it into her day-to-day activities?

What would it replace?

If she would not buy my solution, what are the specific reasons why not?

If you can’t answer these questions, modify your interview questions (see the sidebar below).

Two interviews are not enough to give you confidence that you are correct. They only indicate that you’re not completely incorrect.

Within Five Interviews: The First Really Excited Person

Within the first five interviews, you’ll encounter at least one person who is really excited about your idea. At minimum, if you’ve identified a good problem to solve, someone will give you a direct referral (“This doesn’t affect me, but I know exactly who you should talk to”). If you’ve heard something like that, great! Keep going.

If you haven’t, one of the following is likely true:

You’re talking to the wrong people

Your problem isn’t really a problem

Either way, this invalidates your hypothesis (remember that the hypothesis consists of two parts: that this type of person has this type of problem).

It’s possible to talk to one outlier. It’s possible to talk to two outliers. It’s highly unlikely that you’ll talk to five in a row. If five people aren’t interested, you’ve probably invalidated your hypothesis.

“But wait!” you might say. “Maybe I wasn’t asking the right questions.” That’s unlikely. When you’re talking to a person who really cares about solving her problem, her passion will shine through and she’ll answer the question you should have asked.

Once you’ve heard your first really excited person, you may want to think about how quickly you can assemble an MVP. I generally recommend starting with 10 interviews, but if you can put together an MVP in less time than it would take to do 5 more interviews, why wait?

No matter how excited someone sounds about your product, the real test is whether or not he is willing to throw down money (or some other personal currency, such as his time, a pre-order, or an email address). If you can figure out a way to collect currency from a user within a couple of hours, go for it!

Within 10 Interviews: Patterns Emerge

Within 10 interviews, you’re likely to have heard some repetition. This may come in the form of two or three people expressing similar frustrations, motivations, process limitations, or things they wish they could do.

Challenging the patterns

Once I’ve heard a concept three times, I deliberately try to challenge it in future interviews. I use the word challenge because the goal here is to test the pattern, not to assume that it will hold. It’s tempting to use yes-or-no or “Do you agree?” questions on future participants. That introduces bias. Focus on asking about scenarios and past behaviors and let the interviewee naturally confirm the pattern (or not).

If the topic doesn’t naturally come up, a helpful way to challenge the pattern you’ve seen is the “other people” method. You talk about invented “other people” and claim that they feel the opposite way or act in the opposite way of the pattern you’ve been hearing. For example:

- Pattern

You’ve heard multiple people say that they research cars online and then visit a dealer.

- Challenge

You might say the opposite: “Other people have told me that they prefer to visit the dealer and test drive a car first, then do research online. Could you tell me how you shop for cars?”

Be sure to follow up an “other people do this” statement with a question. This provokes your interviewee to pay more attention to what you’ve said and answer in more detail.[51] Whether he agrees or disagrees with your mythical other people, he will react to the comparison by describing exactly how he is different from or similar to them.

No patterns yet?

What if you aren’t hearing patterns? It is possible to talk with 10 or more people and hear a lot of responses that seem genuine and evoke emotion but don’t seem to fall into a pattern. What this typically means is that you’ve targeted too broad a customer audience. You may be talking to multiple different types of people, all of whom are plausible customers but have different needs.

I recommend narrowing the type of people you’re talking to. Try to focus in on one job title, lifestyle, technical competence, type of company, or other limiting category. This will help you to spot patterns more quickly so you can continue learning and don’t get frustrated. Don’t worry about aiming too narrow—your goal is to validate or invalidate as quickly as possible.

How Many Interviews Are Enough?

As I mentioned earlier, the real answer is “it depends.” Remember that the aim of customer development is to reduce risk. As such, the number of interviews you need to do depends on:

Experience with customer development and your domain

Complexity of your business model and number of dependencies

Investment required to create and validate your MVP

Experience with customer development and industry

The more experience and comfort you have with customer development, the fewer interviews you need. (And this isn’t because you substitute your judgment for getting out of the building and talking to people!) Your improved ability to target individuals means you’ll talk to more of the right people early on. Asking better questions gets you better insights.

Similarly, familiarity with customers or your industry vertical shortens your cycle because your early hypotheses are based on current behaviors, decision-making patterns, and expressed needs. They’re still unlikely to be completely right, but you’ll have less trial-and-error time. When I was at Yodlee, I had been working with our users for several years prior to introducing customer development interviews. I already had quite a bit of insight into how users were handling their finances online and offline, so I was able to get away with fewer interviews before beginning to build product features.

Complexity of business model and number of dependencies

Even if you’re a customer development and domain expert, certain business models require that you validate a greater number of assumptions. If your business has a dependency on working with suppliers, distributors, or other third parties, you’ll need to speak with those people as well as your direct customers. If you are brokering a two-sided market, you’ll need to listen to both sides and ensure that you’re bringing value to both. This may double or triple the number of interviews you need to conduct. Because each party has its own pain points and constraints, you’ll need to allow for enough conversations to let those patterns emerge for each target stakeholder.

Investment required to create your MVP

In the Preface, I mentioned that I spent a month doing customer development interviews for KISSmetrics. In that time, I did about 50 interviews, which is probably near the high end of the spectrum. Let me explain why we needed that many interviews to support our MVP.

Technically, the beta version of KISSmetrics that came out of those 50 interviews was not the first step toward customer validation. We already had a prelaunch site where hundreds of customers had signed up and provided information about themselves and their companies. What we needed to validate was not their interest but that our approach to web analytics would be effective and differentiated for customers.

In that specific situation—a data-driven product where every customer’s experience would be different and accuracy was critical—we needed to invest significant engineering resources to build something that would allow us to learn. By stacking 50 customer development interviews up-front, we were able to minimize that investment as much as possible.

In general, though, if you find yourself thinking that you will need a month or more to work on your MVP, you are probably vastly overengineering. It’s worth seeking advice from a mentor who can sanity-check your idea of an MVP.

After Enough Interviews You Stop Hearing Things That Surprise You

The best indicator that you’re done is that you stop hearing people say things that surprise you. You’ll feel confident that you’ve gotten good enough insights on your customers’ common problems, motivations, frustrations, and stakeholders.

Typically, it has taken me 15–20 interviews to feel confident that the problem and solution have potential. Between recruiting participants, preparing questions, taking notes, and summarizing, that equates to about two weeks of work. That may sound like a lot of effort, but if you learn that you can cut a single feature, you’ve already justified the investment in customer development.

This process can and should happen in parallel with developing your MVP.

One of my recent customer development projects involved learning about Yammer and how our customers are measuring success with it.

We knew that many customers were reporting on Yammer usage and successes to their bosses. (Any employee can start a free Yammer internal social media network for their company without top-down permission. As a result, there is often internal selling by advocates of Yammer to executives who need to learn more about its value.) They expressed frustration with our existing reporting tool, which we built based on “what customers asked for” as opposed to controlled research into their needs and behaviors. Before we committed to building more functionality, I wanted to make sure that we fully understood their problems so that we could provide a more effective solution.

Over a two-week period, I conducted 22 short customer interviews. By the last few interviews, I wasn’t hearing any comments that were both new and significant. I could confidently make some assertions:

How our customers put together reporting slide decks

Which stakeholders they needed to please

What frustrations they were encountering

The root problems that explained why they were requesting certain product changes

Based on that information, I was able to identify that a couple of requests were tied to genuine pain points and recommend not building the others. More importantly, I was able to summarize what I’d learned from the interviews and share that back with our customers. This was an extra step that is particularly helpful with existing customers. Closing that loop (showing that we had taken their concerns seriously and identified their problems, and pointing to the changes we had implemented in response) strengthened our relationship with those customers.[52]

What Does a Validated Hypothesis Look Like?

And now: what you’ve all been waiting for—what does a validated hypothesis look like?

As an example, I’ll walk through how KISSmetrics decided to build a second product, KISSinsights, based on what we learned from customers. Like most real-world stories, it’s messier than pure theory.

I didn’t begin by creating a problem hypothesis because I didn’t realize that the problem existed until after I started talking to customers!

I didn’t set out to find a new product idea. I was conducting customer development interviews for the KISSmetrics web analytics product, and the problem that ended up becoming KISSinsights emerged as a pattern I couldn’t ignore.

I asked, keeping my language deliberately vague, what tools people were using to track metrics for their websites. Nearly every potential customer mentioned two tools: Google Analytics and UserVoice. Many had also done usability testing, either by outsourcing it or by using UserTesting.com or similar online tools to run tests in-house.

Originally, the purpose of my interviews was to validate that people needed better analytics—that they’d be willing to fire the free Google Analytics tool and instead pay to use KISSmetrics.

We weren’t competing with qualitative feedback platforms or usability testing. This was a tangent—a distraction from what I was trying to learn about our web analytics product—but it was an interesting tangent.

When customers keep telling you something, you’d be crazy to ignore them.

After four or five interviews, I was already seeing a pattern. People were getting frustrated when they talked about getting feedback and understanding users. They felt helpless. I heard:

I wish I knew what people were thinking when they’re on my site.

I tried surveys but they were a waste of time—takes forever to write one, and then you get, like, two responses.

All I hear from that feedback tab is “you’re awesome” and “you suck,” and neither of those is helpful.

I wish I could sit on a customer’s shoulder and, at the exact right moment, ask “why aren’t you buying?” or “what is confusing you right now?”

After the fourth or fifth unprompted comment, I formed a simple hypothesis:

Product manager types of people have a problem doing fast/effective/frequent customer research.

To learn more, the KISSmetrics team did two things in parallel:

The developers created an MVP to validate customer demand: a splash page that summarized the concept and led to a survey form where customers could sign up to use the beta product once it was available.

I began conducting customer interviews specific to this qualitative research concept. I asked what people were learning from qualitative feedback forms. I asked about other types of usability testing or research they’d done. I asked what was stopping them from doing more research. I asked what, if they could wave a magic wand and know anything about their visitors, they’d like to know.

Because we had an existing customer base, I was able to recruit quickly and conduct 20 interviews. By the end of those 20 interviews, I had a summary that looked like this:

- What are customers doing right now to solve this problem?

Going without customer research.

- What are other tools not providing/solving that customers really want?

Nonpublic feedback, ability to target people on specific pages or in the midst of specific actions.

- Who is involved in collecting customer feedback today?

The product manager, who is eager to get this information. The developer, who is reluctant to take time away from coding features to work on this.

- How severe/frequent is the pain?

Constant—“Whenever we make decisions on what to build.” No visibility into why things aren’t working.

- What else did people have strong emotions around?

Hate writing surveys, hate having to ask a developer for help, embarrassed that they don’t know where to start.

This made us confident enough to invest in building a very quick MVP that was crudely styled and hard-coded to work only on the KISSmetrics site. As soon as our existing customers saw it, they started emailing us to ask, "How can I get that survey thing for my site?"

What felt like a tangent turned into an entirely new product: KISSinsights. Instead of a persistent and generic feedback tab, the KISSinsights survey could be configured to pop up only for visitors who'd been on a page for a certain amount of time and to show a highly relevant, specific question. Best of all, we could provide prewritten survey questions so that people didn't have to write their own.

Instead of the typical 1% to 2% response rates on surveys, KISSinsights customers were seeing anywhere from 10% to 40% response rates with high-signal, actionable responses. One customer, OfficeDrop, used KISSinsights to identify issues that led to a 40% increase in their signup conversion rate.[53] In 2012, the KISSinsights product was sold to another company and rebranded as Qualaroo. Qualaroo continues to use customer development to evolve the product and business model.

Now What?

There’s a natural ebb and flow to customer development. Once you’ve validated a hypothesis, the next step is to move forward in that direction.

If you don’t have a product yet, you’ll need to take what you’ve learned and use it in building (or changing) your MVP. In Chapter 7, we’ll cover different types of MVPs so that you can figure out which best maps to the resources you have and the questions you need to answer.

If you’re working with an existing product and looking for ways to improve it, Chapter 8 will feel like more familiar territory to you. Still, I encourage you to read Chapter 7—you may be surprised by how well MVPs have worked for even the largest companies.

[49] I know; this is a very frustrating and subjective answer. It reminds me of verbal driving directions in New England: go to the center of town and turn left. How will you know when you get to the center of town? Unless you’ve been in a small New England town, you might not believe this is enough information. Once you have, you realize that it is.

[50] Even if the customer is willing to invest time and resources into solving a problem, she may be constrained by other stakeholders, by rules or regulations, or by cultural or social norms about what is acceptable.

For example, teachers may be willing to invest in a solution that allows them to more effectively educate, but be unable to override a curriculum set at the state level. Restaurant workers may wish to reduce food waste, but be constrained by health regulations. Teenagers may be desperate for a job training program but live in a place with no viable public transit and no adult able to drive them to the location.

[51] Social psychology research theorizes that questions "arouse the reader's uncertainty and motivate more intensive processing of message content than statements." See Robert E. Burnkrant and Daniel J. Howard, "Effects of the Use of Introductory Rhetorical Questions Versus Statements on Information Processing," Journal of Personality and Social Psychology, vol. 47, no. 6 (December 1984).

[52] To be fair, customers would still prefer it if you said, “Yes, of course we’ll build what you asked for.” But they’re fairly well placated by hearing that we talked to customers, put a lot of thought into their problems, and have made progress toward fixing them—even if it’s not in the manner the customer expected.