Chapter 9. Using the cloud

- Introducing cloud computing

- Working with multiple cloud providers

- Gathering information on the cloud host

- Compiling to various operating systems and architectures

- Monitoring the Go runtime in an application

Cloud computing has become one of the buzzwords of modern computing. Is it just a buzzword or something more? This chapter opens with an introduction to cloud computing that explores this question and what cloud computing looks like in a practical sense. You’ll see how it relates to the traditional models working with hardware servers and virtual machines.

Cloud computing is a space filled with various cloud providers. It’s easy to build an application that ends up being locked into a single vendor. You’ll learn how to avoid cloud vendor lock-in while architecting code in a manner that’s easier to develop locally and test.

When you’re ready to run your application in the cloud, you’ll find situations you need to work with, such as learning about the host your application is running on, monitoring the Go runtime inside every application, and cross-compiling to various systems before deploying. You’ll explore how to do this while avoiding pitfalls that can catch you off guard.

This chapter rounds out some key cloud concepts. After you’ve completed this chapter and the previous chapters, you’ll have what you need to build and operate cloud-based applications written in Go.

9.1. What is cloud computing?

This is one of the fundamental questions that can make all the difference in both software development and operating applications. Is cloud a marketing term? Yes. Is there something else fundamentally different going on? Yes. Given the way the term is thrown around for use in everything from web applications and phone applications to physical servers and virtual machines, its meaning can be difficult to navigate for someone not familiar with the space. In this introductory section, you’ll learn about cloud computing in a way that you can apply to software development and operations.

9.1.1. The types of cloud computing

In the simplest form of cloud computing, part of a system is managed by someone else. This can be someone else in your company, an outside service provider, an automation system, or any combination of these. If an outside service provider is providing part of the stack, what parts are they providing? Figure 9.1 shows the three forms of cloud computing and how they compare to an environment where you own the entire stack.

Figure 9.1. The types of cloud computing

With a traditional server or rack of servers, you need to manage all of the components of the stack, right down to the physical space holding the hardware. When changes are needed, someone needs to order the hardware, wait for it to show up, connect it, and manage it. This can take a bit of time.

Infrastructure as a service

Accessing infrastructure as a service (IaaS) is different from previous forms of working with virtual machines. Sure, services have been providing virtual machines and colocated servers for years. When IaaS came into being, the change was the way those servers were used and accessed.

Prior to IaaS, you’d typically get a server and then continue to use that server for months or years. IaaS turned that on its head. Under an IaaS setup, virtual servers are created and destroyed as needed. When you need a server, it’s created. As soon as you no longer need it, you return it to the pool of available resources. For example, if you need to test an idea, you might create many servers, test the idea, and then immediately get rid of the servers.

Creating and working with IaaS resources happens via a programmable API. This is typically a REST API that can be used by command-line tools and applications to manage the resources.

IaaS is about more than servers. Storage, networking, and other forms of infrastructure are accessible and configurable in the same way. As the name suggests, the infrastructure is the configurable part. The operating system, runtime environment, application, and data are all managed by cloud consumers and their management software.

Examples of an IaaS include services provided by Amazon Web Services, Microsoft Azure, and Google Cloud.

Platform as a service

Platform as a service (PaaS) differs from IaaS in some important ways. One of the simplest is in how you work with it. To deploy an application to a platform, you use an API to deploy your application code and supporting metadata, such as the language the application was written in. The platform takes this information and then builds and runs the application.

In this model, the platform manages the operating system and runtime. You don’t need to start virtual machines, choose their resource sizes, choose an operating system, or install system software. Handling those tasks is left up to the platform to manage. You gain back the time typically spent managing systems so you can focus on other tasks such as working on your application.

To scale applications, instances of the application are created and run in parallel. In this way, applications are scaled horizontally. A PaaS may be able to do some scaling automatically, or you can use an API to choose the number of instances yourself.

Heroku, Cloud Foundry, and Deis are three of the most widely known examples of a PaaS.

Software as a service

Say your application needs a database to store data in. You could create a cluster of virtual machines using IaaS, install the database software and configure it, monitor the database to make sure everything works properly, and continually update the system software for security. Or you could consume a database as a service and leave the operations, scale, and updates to a service provider. Using APIs, you set up a database and access it. This ladder case is an example of software as a service (SaaS), shown in figure 9.2.

Figure 9.2. The layers of cloud services can sit on each other.

SaaS comprises a wide range of software, from the building blocks to other applications to consumer applications. Using SaaS for application building blocks, such as databases and storage, allows teams to focus on the activities that make their applications different.

SaaS examples are wide-ranging and include Salesforce, Microsoft Office 365, and the payment processor Stripe.

9.1.2. Containers and cloud-native applications

When Docker, the container management software, came into the public eye, using containers to run and distribute applications became popular. Containers are different from virtual machines or traditional servers.

Figure 9.3 compares virtual machines and containers. On the left is a system, going all the way down to the hardware server, that runs virtual machines. On the right is a separate system running containers. Each is running two applications as workloads, with App A being scaled horizontally to have two instances, for a total of three workload instances.

Figure 9.3. Comparing containers and virtual machines

When virtual machines run, the hypervisor provides an environment for an operating system to run in that emulates hardware or uses special hardware for virtual machines. Each virtual machine has a guest operating system with its own kernel. Inside the operating system are all the applications, binaries, and libraries in the operating system or set up by the users. Applications run in this environment. When two instances of an application run in parallel (which is how horizontal scaling works), the entire guest operating system, applications, libraries, and your application are replicated.

Using virtual machines comes with certain architectural elements worth noting:

- When a virtual machine starts up, the kernel and guest operating system need time to boot up. This takes time because computers take time to boot up.

- Hypervisors and modern hardware can enforce a separation between each virtual machine.

- Virtual machines provide an encapsulated server with resources being assigned to it. Those resources may be used by that machine or held for that machine.

Containers operate on a different model. The host server, whether it’s a physical server or virtual machine, runs a container manager. Each container runs in the host operating system. When using the host kernel, its startup time is almost instantaneous. Containers share the kernel, and hardware drivers and the operating system enforce a separation between the containers. The binaries and libraries used, commonly associated with the operating system, can be entirely separate. For example, in figure 9.3, App A could be using the Debian binaries and libraries, and App B could be running on those from CentOS. The applications running in those containers would see their environment as Debian or CentOS.

Cloud-native applications is a term that tends to be used alongside containers. Cloud-native applications take advantage of the programmatic nature of the cloud to scale with additional instances on demand, remediate failures so many problems in the systems are never experienced by end users, tie together microservices to build larger applications, and more. Containers, with their capability to start almost instantly while being more densely placed on the underlying servers than virtual machines, provide an ideal environment for scaling, remediation, and microservices.

Definition

Remediation is the automatic correction of problems in running applications. Microservices are small, independent processes that communicate with other small processes over defined APIs. Microservices are used together to create larger applications. Microservices are covered in more detail in chapter 10.

This only scratches the surface of containers, cloud-native computing, and cloud computing in general. If you’re interested in more information, numerous books, training courses, and other information cover the topic in more detail.

One of the most important elements of cloud services that we need to look at in more detail is the way you manage the services. The interface to manage cloud services provides a point of interaction for Go applications.

9.2. Managing cloud services

Managing cloud services, whether they’re IaaS, PaaS, or SaaS, typically happens through an API. This enables command-line tools, custom user interfaces, autonomous applications (bots), and other tools to manage the services for you. Cloud services are programmable.

Web applications, such as the web console each cloud service vendor provides, appear to be simple and effective ways to view and manage cloud services. For some simple cases, this works. But the full power of the cloud is in the ability to program it. That includes automatically scaling horizontally, automatically repairing problems, and operating on large numbers of cloud assets at the same time.

Each of the cloud providers offers a REST API, and most of the time an SDK is built to interact with the API. The SDK or the API can be used within your applications to use the cloud services.

9.2.1. Avoiding cloud provider lock-in

Cloud services are like platforms, and each has its own API. Although they provide the same or similar feature sets, their programmable REST API is often quite different. An SDK designed for one service provider won’t work against a competitor. There isn’t a common API specification that they all implement.

Technique 56 Working with multiple cloud providers

Many cloud service providers exist, and they’re distributed all over the world. Some are region-specific, conforming to local data-sovereignty laws, whereas others are global companies. Some are public and have an underlying infrastructure that’s shared with others; others are private, and everything down to the hardware is yours. These cloud providers can compete on price, features, and the changing needs of the global landscape.

Given the continuously changing landscape, it’s useful to remain as flexible as possible when working with cloud providers. We’ve seen code written specifically for one cloud provider that has caused an application to become stuck on that provider, even when the user wanted to switch. Switching would be a lot of work, causing delays in new features. The trick is to avoid this kind of lock-in up front.

Problem

Cloud service providers typically have their own APIs, even when they offer the same or similar features. Writing an application to work with one API can lead to lock-in with that API and that vendor.

Solution

The solution has two parts. First, create an interface to describe your cloud interactions. If you need to save a file or create a server instance, have a definition on the interface for that. When you need to use these cloud features, be sure to use the interface.

Second, create an implementation of the interface for each cloud provider you’re going to use. Switching cloud service providers becomes as simple as writing an implementation of an interface.

Discussion

This is an old model that’s proven to be effective. Imagine if computers could work with only a single printer manufacturer. Instead, operating systems have interfaces, and drivers are written to connect the two. This same idea can apply to cloud providers.

The first step is to define and use an interface for a piece of functionality rather than to write the software to use a specific provider’s implementation. The following listing provides an example of one designed to work with files.

Listing 9.1. Interface for cloud functionality

File handling is a useful case to look at because cloud providers offer different types of file storage, operators have differing APIs, and file handling is a common operation.

After an interface is defined, you need a first implementation. The easiest one, which allows you to test and locally develop your application, is to use the local filesystem as a store. This allows you to make sure the application is working before introducing network operations or a cloud provider. The following listing showcases an implementation of the File interface that loads and saves from the local filesystem.

Listing 9.2. Simple implementation of cloud file storage

After you have this basic implementation, you can write application code that can use it. The following listing shows a simple example of saving and loading a file.

Listing 9.3. Example using a cloud provider interface

The fileStore function retrieves an object implementing the File interface from listing 9.1. That could be an implementation connected to a cloud provider such as Amazon Web Services, Google Cloud, or Microsoft Azure. A variety of types of cloud file storage are available, including object storage, block storage, and file storage. The implementation could be connected to any of these. Although the implementation can vary, where it’s used isn’t tied to any one implementation.

The file store to use can be chosen by configuration, which was covered in chapter 2. In the configuration, the details of the type and any credentials can be stored. The credentials are important because development and testing should happen by using different account details from production.

In this example, the following function uses the local filesystem to store and retrieve files. In an application, the path will likely be a specific location:

func fileStore() (File, error) {

return &LocalFile{Base: "."}, nil

}

This same concept applies to every interaction with a cloud provider, whether it’s adding compute resourcing (for example, creating virtual machines), adding users to an SaaS customer relationship management (CRM) system, or anything else.

9.2.2. Dealing with divergent errors

Handling errors is just as important as handling successful operations when interacting with different cloud providers. Part of what has made the internet and those known for using the cloud successful is handling errors well.

Technique 57 Cleanly handling cloud provider errors

In technique 56, errors from the Load and Save methods were bubbled up to the code that called them. In practice, details from the errors saving to the local filesystem or any cloud provider, which are different in each implementation, were bubbled up to the application code.

If the application code that calls methods such as Load and Save is going to display errors to end users or attempt to detect meaning from the errors in order to act on them, you can quickly run into problems when working with multiple cloud providers. Each cloud provider, SDK, or implementation can have different errors. Instead of having a clean interface, such as the File interface in listing 9.1, the application code needs to know details about the implementations errors.

Problem

How can application code handle interchangeable implementations of an interface while displaying or acting on errors returned by methods in the interface?

Solution

Along with the methods on an interface, define and export errors. Instead of returning implementation-specific errors, return package errors.

Discussion

Continuing the examples from technique 56, the following listing defines several common errors to go along with the interface.

Listing 9.4. Errors to go with cloud functionality interface

By defining the errors as variables that are exported by the package, they can be used in comparisons. For example:

if err == ErrFileNotFound {

fmt.Println("Cannot find the file")

}

To illustrate using these errors in implementations of an interface, the following listing rewrites the Load method from listing 9.2 to use these errors.

Listing 9.5. Adding errors to file load method

Logging the original error is important. If a problem occurs when connecting to the remote system, that problem needs to be logged. A monitoring system can catch errors communicating with external systems and raise alerts so you have an opportunity to remediate the problems.

By logging the original error and returning a common error, the implementation error can be caught and handled, if necessary. The code calling this method can know how to act on returned errors without knowing about the implementation.

Logging is important to observe an application operating in its environment and debug issues, but that’s just the beginning of operating in the cloud. Detecting the environment, building in environment tolerance, monitoring the runtime, detecting information when it’s needed, and numerous other characteristics affect how well it runs and your ability to tune the application. Next you’ll look at applications running in the cloud.

9.3. Running on cloud servers

When an application is being built to run in the cloud, at times you may know all the details of the environment, but at other times information will be limited. Building applications that are tolerant to unknown environments will aid in the detection and handling of problems that could arise.

At the same time, you may develop applications on one operating system and architecture but need to operate them on another. For example, you could develop an application on Windows or Mac OS X and operate it on Linux in production.

In this section, you’ll explore how to avoid pitfalls that can come from assuming too much about an environment.

9.3.1. Performing runtime detection

It’s usually a good idea to detect the environment at runtime rather than to assume characteristics of it in your code. Because Go applications communicate with the kernel, one thing you need to know is that if you’re on Linux, Windows, or another system, details beyond the kernel that Go was compiled for can be detected at runtime. This allows a Go application to run on Red Hat Linux or Ubuntu. Or a Go application can tell you if a dependency is missing, which makes troubleshooting much easier.

Technique 58 Gathering information on the host

Cloud applications can run in multiple environments such as development, testing, and production environments. They can scale horizontally, with the potential to have many instances dynamically scheduled. And they can run in multiple data centers at the same time. Being run in this manner makes it difficult to assume information about the environment or pass in all the details with application configuration.

Instead of knowing through configuration, or assuming host environment details, it’s possible to detect information about the environment.

Problem

How can information about a host be detected within a Go application?

Solution

The os package enables you to get information about the underlying system. Information from the os package can be combined with information detected through other packages, such as net, or from calls to external applications.

Discussion

The os package has the capability to detect a wide range of details about the environment. The following list highlights several examples:

- os.Hostname() returns the kernel’s value for the hostname.

- The process ID for the application can be retrieved with os.Getpid().

- Operating systems can and do have different path and path list separators. Using os.PathSeparator or os.PathListSeparator instead of characters allows applications to work with the system they’re running on.

- To find the current working directory, use os.Getwd().

Information from the os package can be used in combination with other information to know more about a host. For example, if you try to look up the IP addresses for the machine an application is running on by looking at all the addresses associated with all the interfaces to the machine, you can end up with a long list. That list would include the localhost loop-back and IPv4 and IPv6 addresses, even when one case may not be routable to the machine. To find the IP address to use, an application can look up the hostname, known by the system, and find the associated IP address. The following listing shows this method.

Listing 9.6. Look up the host’s IP addresses via the hostname

The system knows its own hostname, and looking up the address for that hostname will return the local one. This is useful for an application that can be run in a variety of environments or scaled horizontally. The hostname and address information could change or have a high rate of variability.

Go applications can be compiled for a variety of operating systems and run in various environments. Applications can detect information about their environment rather that assuming it. This removes the opportunity for bugs or other unexpected situations.

Technique 59 Detecting dependencies

In addition to communicating with the kernel or base operating system, Go applications can call other applications on the system. This is typically accomplished with the os/exec package from the standard library. But what happens if the application being called isn’t installed? Assuming that a dependency is present can lead to unexpected behavior, and a failure to detect any issues in a reportable way makes detecting the problem in your application more difficult.

Problem

How can you ensure that it’s okay to execute an application before calling it?

Solution

Prior to calling a dependent application for the first time, detect whether the application is installed and available for you to use. If the application isn’t present, log an error to help with troubleshooting.

Discussion

We’ve already talked about how Go applications can run on a variety of operating systems. For example, if an application is compiled for Linux, it could be running on a variety of distributions with different applications installed. If your application relies on another application, it may or may not be installed. This becomes more complicated with the number of distributions available and used in the cloud. Some specialized Linux distributions for the cloud are small, with limited or virtually no commands installed.

Anytime a cloud application relies on another application being installed, it should validate that dependency and log the absence of the missing component. This is relatively straightforward to do with the os/exec package. The following listing provides a function to perform detection.

Listing 9.7. Function to check whether the application is available

This function can be used within the flow of an application to check whether a dependency exists. The following snippet shows an example of checking and acting on an error:

err := checkDep("fortune")

if err != nil {

log.Fatalln(err)

}

fmt.Println("Time to get your fortunte")

In this example, the error is logged when a dependency isn’t installed. Logging isn’t always the action to take. There may be a fallback method to retrieve the missing dependency or an alternative dependency to use. Sometimes a missing dependency may be fatal to an application, and other times it can skip an action when a dependency isn’t installed. When you know something is missing, you can handle the situation appropriately.

9.3.2. Building for the cloud

There’s no one hardware architecture or operating system for the cloud. You may write an application for the AMD64 architecture running on Windows and later find you need to run it on ARM8 and a Linux distribution. Building for the cloud requires designing to support multiple environments, which is easier handled up front in development and is something the standard library can help you with.

Technique 60 Cross-compiling

In addition to the variety of environments in cloud computing, it’s not unusual to develop a Go application on Microsoft Windows or Apple’s OS X and want to operate it on a Linux distribution in production, or to distribute an application via the cloud with versions for Windows, OS X, and Linux. In a variety of situations, an application is developed in one operating system but needs to run in a different one.

Problem

How can you compile for architectures and operating systems other than the one you’re currently on?

Solution

The go toolchain provides the ability to cross-compile to other architectures and operating systems. In addition to the go toolchain, gox allows you to cross-compile multiple binaries in parallel. You also can use packages, such as filepath, to handle differences between operating systems instead of hardcoding values, such as the POSIX path separator /.

Discussion

As of Go 1.5, the compiler installed with the go toolchain can cross-compile out of the box. This is done by setting the GOARCH and GOOS environment variables to specify the architecture and operating system. GOARCH specifies the hardware architecture such as amd64, 386, or arm, whereas GOOS specifies the operating system such as windows, linux, darwin, or freebsd.

The following example provides a quick illustration:

$ GOOS=windows GOARCH=386 go build

This tells go to build a Windows binary for the 386 architecture. Specifically, the resulting executable will be of the type “PE32 executable for MS Windows (console) Intel 80386 32-bit.”

Warning

If your application is using cgo to interact with C libraries, complications can arise. Be sure to test the applications on all cross-compiled platforms.

If you want to compile to multiple operating systems and architectures, one option is gox, which enables building multiple binaries concurrently, as shown in figure 9.4.

Figure 9.4. gox builds binaries for different operating systems and architectures concurrently.

You can install gox as follows:

$ go get -u github.com/mitchellh/gox

After gox is installed, you can create binaries in parallel by using the gox command. The following listing provides an example of building an application for OS X, Windows, and Linux on both the AMD64 and 386 architectures.

Listing 9.8. Cross-compile an application with gox

When building binaries in other operating systems—especially when operating them in the cloud—it’s a best practice to test the result before deploying. This way, any environment bugs can be detected before deploying to that environment.

Besides compiling for different environments, differences between operating systems need to be handled within an application. Go has two useful parts to help with that.

First, packages provide a single interface that handles differences behind the scenes. For example, one of the most well-known is the difference between path and path list separators. On Linux and other POSIX-based systems, these are / and :, respectively. On Windows, they’re and ;. Instead of assuming these, use the path/filepath package to make sure any paths are handled safely. This package provides features such as the following:

- filepath.Separator and filepath.ListSeparator—Represent the appropriate path and list separator values on any operating system the application is compiled to. You can use these when you need direct access to the separators.

- filepath.ToSlash—Take a string representing a path and convert the separators to the correct value.

- filepath.Split and filepath.SplitList—Split a path into its parts or split a list of paths into individual paths. Again, the correct separators will be used.

- filepath.Join—Join a list of parts into a path, using the correct separator for the operating system.

The go toolchain also has build tags that allow code files to be filtered, based on details such as operating system and architecture when being compiled. A build tag is at the start of a file and looks like this:

// +build !windows

This special comment tells the compiler to skip this file on Windows. Build tags can have multiple values. The following example skips building a file on Linux or OS X (darwin):

// +build !linux,!darwin

These values are linked to GOOS and GOARCH options.

Go also provides the ability to name files in such a way that they’re picked up for the different environments. For example, foo_windows.go would be compiled and used for a Windows build, and foo_386.go would be used when compiling for the 386 (sometimes called x86) hardware architecture.

These features enable applications to be written for multiple platforms while working around their differences and tapping into what makes them unique.

9.3.3. Performing runtime monitoring

Monitoring is an important part of operating applications. It’s typical to monitor running systems to find issues, to detect when the load has reached levels that require scaling up or down, or to understand what’s going on within an application to speed it up.

The easiest way to monitor an application is to write issues and other details to a log. The log subsystem can write to disk and another application can read it or the log subsystem can push it out to a monitoring application.

Technique 61 Monitoring the Go runtime

Go applications include more than application code or code from libraries. The Go runtime sits in the background, handling the concurrency, garbage collection, threads, and other aspects of the application.

The runtime has access to a wealth of information. That includes the number of processors seen by the application, current number of goroutines, details on memory allocation and usage, details on garbage collection, and more. This information can be useful for identifying problems within the application or for triggering events such as horizontal scaling.

Problem

How can your application log or otherwise monitor the Go runtime?

Solution

The runtime and runtime/debug packages provide access to the information within the runtime. Retrieve information from the runtime by using these packages and write it to the logs or other monitoring service at regular intervals.

Discussion

Imagine that an imported library update includes a serious bug that causes the goroutines it created to stop going away. The goroutines slowly accumulate so that millions of them are being handled by the runtime when it should have been hundreds. (We, the authors, don’t need to imagine this situation, because we’ve encountered it.) Monitoring the runtime enables you to see when something like this happens.

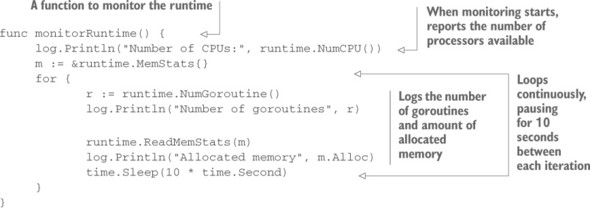

When an application starts up, it can start a goroutine to monitor the runtime and write details to a log. Running in a goroutine allows you to run the monitoring and write to the logs concurrently, alongside the rest of the application, as the following listing shows.

Listing 9.9. Monitor an application’s runtime

It’s important to know that calls to runtime.ReadMemStats momentarily halt the Go runtime, which can have a performance impact on your application. You don’t want to do this often, and you may want to perform operations that halt the Go runtime only when in a debug mode.

Organizing your runtime monitoring this way allows you to replace writing to the log with interaction with an outside monitoring service. For example, if you were using one of the services from New Relic, a monitoring service, you would send the runtime data to their API or invoke a library to do this.

The runtime package has access to a wealth of information:

- Information on garbage collection, including when the last pass was, the heap size that will cause the next to trigger, how long the last garbage collection pass took, and more

- Heap statistics, such as the number of objects it includes, the heap size, how much of the heap is in use, and so forth

- The number of goroutines, processors, and cgo calls

We’ve found that monitoring the runtime can provide unexpected knowledge and highlight bugs. It can help you find goroutine issues, memory leaks, or other problems.

9.4. Summary

Cloud computing has become one of the biggest trends in computing and is something Go is quite adept at. In this chapter, you learned about using Go in the cloud. Whereas previous chapters touched on complementary topics to cloud computing, this chapter covered aspects for making successful Go applications in the cloud, including the following:

- Working with various cloud providers, while avoiding vendor lock-in

- Gathering information about the host rather than assuming details of it

- Compiling applications for varying operating systems and avoiding operating system lock-in

- Monitoring the Go runtime to detect issues and details about a running application

In the next chapter, you’ll explore communicating between cloud services by using techniques other than REST APIs.