Chapter 11: Hash Object Memory Management

11.2 Memory vs. Disk Trade-Off

11.2.2 Hash Memory Overload Scenarios and Solutions

11.3 Making Use of Existing Key Order

11.4.2 MD5 Key Reduction in Sample Data

11.5 Data Portion Offload (Hash Index)

11.5.2 Selective Unduplication

11.6.1 Uniform Split Using Key Properties

11.6.2 Aggregation via Partial Key Split

11.6.3 Aggregation via Key Byte Split

11.6.4 Joining via Key Byte Split

11.7 Uniform MD5 Split On the Fly

11.7.1 MD5 Split Aggregation On the Fly

11.7.2 MD5 Split Join On the Fly

11.8 Uniform Split Using a SAS Index

11.9 Combining Hash Memory-Saving Techniques

11.10 MD5 Argument Concatenation Ins and Outs

11.10.1 MD5 Collisions and SHA256

11.10.2 Concatenation Length Sizing

11.10.4 Concatenation Delimiters and Endpoints

11.10.5 Auto-Formatting and Explicit Formatting

11.10.6 Concatenation Order and Consistency

11.1 Introduction

Anticipating performance and memory issues, the Bizarro Ball IT users have asked us to address several concerns relating to data access times and memory management. Should they decide to proceed with a follow-on project, they want to know what options are available as the amount of data grows. The data generated for our Proof of Concept covered only one year; any follow-on project will have to deal with multiple years of data.

This chapter focuses on the following three (overlapping) techniques:

1. Reducing the size of the key portion of the hash items.

2. Reducing the size of the data portion of the hash items.

3. Partitioning the data into groups that can be processed independently of one another.

This chapter addresses some of those concerns. Since its focus is memory and performance rather than the individual SAS programs, the IT and business users agreed that we need not annotate the programs in as much detail as most of the previous ones.

Many of the examples here replicate samples from Chapters 6 and 10, incorporating the techniques discussed in this chapter.

11.2 Memory vs. Disk Trade-Off

The data processing efficiency offered by the SAS hash object rests on two elements:

1. Its key-access operations, with the hashing algorithm running underneath, are executed in O(1) time.

2. Its data and underlying structure reside completely in memory.

These advantages over disk-based structures are easy to verify with a simple test. Consider such ubiquitous data processing actions as key search and data retrieval performed against a hash table on the one hand and against an indexed SAS data set on the other. For example, suppose that we have a SAS data set created from data set Dw.Pitches and indexed on a variable RID whose values are its unique observation numbers. The DATA step creating it is shown as part of Program 11.1 below.

The DATA _NULL_ step in this program does the following:

1. Loads file Pitches into a hash table H with RID as its key and Pitcher_ID and Result as its data.

2. For all RID key-values in Pitches and an equal number of key-values not in Pitches, searches for RID in the table. If the key-value is found, retrieves the corresponding data values of Pitcher_ID and Result from the hash table into the PDV.

3. Does exactly the same against the indexed data set Pitches using its SAS index for search and retrieval.

4. Repeats both tests above the same number of times (specified in the macro variable test_reps below).

5. Calculates the summary run times for both approaches and reports them in the SAS log.

Program 11.1 Chapter 11 Hash Vs SAS Index Access Speed.sas

data Pitches (index=(RID) keep=RID Pitcher_ID Result) ;

set dw.Pitches ;

RID = _N_ ;

run ;

%let test_reps = 10 ;

data _null_ ;

dcl hash h (dataset: "Pitches", hashexp:20) ;

h.definekey ("RID") ;

h.defineData ("Pitcher_ID", "Result") ;

h.defineDone () ;

time = time() ;

do Rep = 1 to &test_reps ;

do RID = 1 to N * 2 ;

rc = h.find() ;

end ;

end ;

Hash_time = time() - time ;

time = time() ;

do Rep = 1 to &test_reps ;

do RID = 1 to N * 2 ;

set Pitches key=RID nobs=N ;

end ;

end ;

_error_ = 0 ; * prevent log error notes ;

Indx_time = time() - time ;

put "Hash_time =" Hash_time 6.2-R

/ "Indx_time =" Indx_time 6.2-R ;

stop ;

run ;

Note that the value of N used in both loops is initialized to the number of observations in Pitches because N is the variable specified in the NOBS= option of the SET statement, and SAS assigns that value at compile time, before the hash loop executes. Also note that the automatic variable _ERROR_ is set to zero above to prevent the error notes in the log when the SET statement with the KEY=RID option fails to find the key.

When the program is run, the PUT statement prints the following in the SAS log:

Hash_time = 2.15

Indx_time = 21.58

As we can see, a run with an equal number of search successes and failures shows that, for the purposes of key search and data retrieval, the SAS hash object works faster than the SAS index by about an order of magnitude. This is a consequence both of the difference between the search algorithms (binary search versus hash search) and of the difference in access speed between memory and disk storage. The run-time figures above were obtained on the X64_7PRO platform. We told our IT users that, if they are interested in trying it out on a different system, the program is included in the program file Chapter 11 Hash Vs SAS Index Access Speed.sas.

Note that the test above is strongly biased in favor of the SAS index because it always reads adjacent records, which reduces paging (i.e., block I/O). Running it with the RID values picked randomly between 1 and N*2 shows that, in this case, the hash-to-SAS-index speed ratio exceeds 50:1.
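The randomized variant mentioned above can be sketched as follows. This is our illustration rather than the book's program: it assumes data set Pitches from Program 11.1 exists and is indexed on RID, uses an arbitrary seed, and adds the /UNIQUE option to the KEY= lookup so that a random key equal to the previous one is searched from the top of the index instead of being treated as a request for the next duplicate. No timings are claimed for it here.

```sas
/* Randomized variant of the Program 11.1 test (a sketch, not the book's
   code). Assumes data set Pitches from Program 11.1 exists and is indexed
   on RID. N comes from NOBS=, which is set at compile time. */
%let test_reps = 10 ;

data _null_ ;
  dcl hash h (dataset: "Pitches", hashexp: 20) ;
  h.defineKey ("RID") ;
  h.defineData ("Pitcher_ID", "Result") ;
  h.defineDone () ;
  call streaminit (12345) ;                     /* arbitrary seed */

  Start = time () ;
  do Rep = 1 to &test_reps ;
    do _i = 1 to N * 2 ;
      RID = ceil (2 * N * rand ("uniform")) ;   /* random key in 1..2N */
      rc = h.find () ;
    end ;
  end ;
  Hash_time = time () - Start ;

  Start = time () ;
  do Rep = 1 to &test_reps ;
    do _i = 1 to N * 2 ;
      RID = ceil (2 * N * rand ("uniform")) ;
      /* /UNIQUE starts every search at the top of the index, so a key equal
         to the previous one is not treated as a request for its duplicate */
      set Pitches key = RID / unique nobs = N ;
    end ;
  end ;
  _error_ = 0 ;                                 /* suppress PDV dump for misses */
  Indx_time = time () - Start ;

  put "Hash_time =" Hash_time 6.2-R / "Indx_time =" Indx_time 6.2-R ;
  stop ;
run ;
```

Both loops draw keys from the same distribution, so the comparison stays fair even though they do not probe identical key sequences.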

11.2.1 General Considerations

Holding data in memory comes at a price, and the larger the volume of data held this way, the steeper the price. At some point, it may approach or exceed the RAM capacity of the machine being used to process the data. As the IT users pointed out, the sample baseball data used in this book, though large and rich enough for our illustrative purposes, presents no challenge from this standpoint: its largest data set, about 100 MB in size, fits easily in the memory of even the most modest modern computing device. However, industrial practice, with its need to manipulate big and ever-increasing volumes of data, presents a different picture. As the number of hash items, the number of hash variables, and the cardinality of the table keys grow, the hash memory footprint required to accomplish a task can easily reach into the hundreds of gigabytes.

The sledgehammer approach, of course, is to install more memory. Under some circumstances, this indeed makes sense. For instance, if it is vital for a large business to make crucial decisions based on frequently aggregated data (a "lead indicators" report in the managed health industry could be a good example), it appears to make more sense to invest in more RAM than to pay for coding around woeful hardware inadequacies. But as the authors told the Bizarro Ball business users, our own experience on a number of industrial projects shows that this route can be blocked for a variety of reasons.

Even if more machine resources can be obtained, this may turn out to be a temporary fix, working only until the data volume grows or business demands change (for example, to require more hierarchical levels of data aggregation). In other words, crudely unoptimized code does not scale well. Thus, responsible SAS programmers do not merely throw a glaringly unoptimized program at the machine in the hope that it will cope, and then clamor for more hardware when it fails due to insufficient resources. Rather, they endeavor to avoid at least the gross inefficiencies, whether or not the hardware can currently cover for them, by taking obvious, inexpensive measures to improve performance. Examples include making sure that the program reads and writes only the variables and records it needs, avoiding unnecessary sorts, etc.

Furthermore, sensible programmers aim to foresee how their code will behave if the data, its volume, or business needs change in the future, and they structure their programs accordingly. Doing so requires more time and higher skill, yet in the end the result can prove well worth the price. Typical examples of this kind of effort are employing more efficient algorithms (e.g., binary search rather than linear search) and using table lookup (e.g., via a format or a hash table) instead of sorting and merging. This way, if (or rather when) the changes happen, chances are that the program will still run successfully.

The hash object is no different. Memory, though increasingly cheap and abundant, is still a valuable resource. Hence, as the first line of defense, a programmer using the hash object should not overload it with unnecessary information for the sake of seemingly "simpler" coding and fewer lines of code. For instance, to save memory, a hash table entry should contain only the variables it needs to do the job. Thus, using the argument tag ALL:"Y" with the DEFINEKEY and DEFINEDATA methods as a coding shortcut (instead of defining only the needed variables in one way or another) must be judged accordingly. Likewise, if a hash table is used only for pure search (to find out whether a key is there), the MULTIDATA:"Y" argument tag is extraneous, and so is anything in the data portion beyond a single short variable.
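As an illustration of these guidelines, here is a minimal sketch of a pure-search table. It keeps only those Dw.AtBats at bats with runs that have at least one matching record in Dw.Runs (a semi-join). The key is limited to the four linking variables, MULTIDATA is not used, and the data portion holds only the automatic variable _N_ as a dummy. The output data set name AtBats_with_runners is our own.

```sas
/* Pure search: the table answers only "is this key present?", so the data
   portion holds a single numeric dummy (_N_) and MULTIDATA is omitted. */
data AtBats_with_runners ;
  if _n_ = 1 then do ;
    dcl hash seen () ;
    seen.defineKey ("Game_SK", "Inning", "Top_Bot", "AB_Number") ;
    seen.defineData ("_N_") ;
    seen.defineDone () ;
    do until (LR) ;
      set dw.Runs (keep = Game_SK Inning Top_Bot AB_Number) end = LR ;
      if seen.check () ne 0 then seen.add () ;   /* store each key once */
    end ;
  end ;
  set dw.AtBats (where = (Runs)) ;
  if seen.check () = 0 ;   /* subsetting IF: keep at bats present in Dw.Runs */
run ;
```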

As Bizarro Ball considers future expansion to more leagues, teams, and so on (e.g., some of the executives would like to offer the data analysis services to collegiate teams), expected data volumes may very well increase dramatically. Thus, hash memory management could be an important issue in the near term.

11.2.2 Hash Memory Overload Scenarios and Solutions

A separate question is how to deal with more complex scenarios in which the hash object runs out of memory in spite of these obvious precautions. Typical circumstances under which this can occur include, but are not limited to, the following:

● A hash table is used as a lookup table for joining files. It can run out of memory if:

1. The file loaded into it has too many records.

2. The files are joined by a composite key with too many and/or too long components.

3. The satellite variables loaded into the data portion for future retrieval are too numerous and/or too long.

● A hash table is used as a container for data aggregation. It can run out of memory if:

1. The aggregation occurs at too many distinct key levels, consequently requiring too many unique-key table items.

2. The statistics to be aggregated are too numerous, and so are the corresponding data portion variables needed to hold them.

3. The keys are too numerous and/or long, and so are both the key and data portion variables needed to hold them (in the data portion, they are required in order to appear in the output).

Here are several tactics for resolving these hash memory overload problems, which we will discuss in detail in the rest of this chapter:

● Take advantage of intrinsic pre-grouping. If the data input is sorted or grouped by one or more leading components of a composite key, use the BY statement to process each BY group separately, clearing the hash table after each group is processed. It works best if the BY groups are approximately equal in size.

● Reduce the key portion length. If the hash key is composite and the summary length of its components significantly exceeds 16 bytes, use the one-way hash function MD5 to shorten the entire key portion to a single $16 key. This can be done regardless of the total length of the composite key or the number of its components.

● Offload the data portion variables (use a hash index). It means that only the key (simple, composite, or its MD5 signature) is stored in the key portion, and only the file record identifier (RID) is stored in the data portion. When in the course of data processing a key-value is found in the table, the requisite satellite variables, instead of being carried in the data portion, are brought into the PDV by reading the input file via the SET POINT=RID statement. Effectively, this is tantamount to using a hash index created in the DATA step and existing for its duration.

● Use a known key distribution to split the input. If the values of some composite key component (or any of its bytes or groups of bytes, for that matter) are known to correspond to more or less equal numbers of distinct composite key-values, process the file separately for each such distinct value (or group of distinct values) of that component, one at a time, using the WHERE clause. As a result, the hash memory footprint will be reduced by a factor roughly equal to the number of separate passes.

● Use the MD5 function to split the input. Concatenate the components of the composite key and create an MD5 signature of the concatenated result. Because the MD5 function is a good hash function, the value of any byte (or a combination of bytes) of its signature will correspond to approximately the same number of different composite key-values. Then process the input in separate chunks based on these values using the WHERE clause as suggested above.
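A minimal sketch of the MD5 split follows, assuming a hypothetical aggregation task (counting runs per runner per game in Dw.Runs) and a split into four passes. Instead of a WHERE clause, it uses an IF test inside the DoW loop, which has the same effect on the hash table. The number of passes, the "|" delimiter, and the data set names are illustrative choices.

```sas
/* Aggregate runs at the (Game_SK, Runner_ID) level in &chunks passes.
   In pass i, only records whose MD5 signature has a first byte congruent
   to i-1 modulo &chunks are processed, so each pass holds roughly
   1/&chunks of the distinct keys. */
%let chunks = 4 ;

%macro md5_split_agg ;
  %local i ;
  %do i = 1 %to &chunks ;
    data _chunk_&i (keep = Game_SK Runner_ID Runs_Scored) ;
      length _MD5 $ 16 ;
      dcl hash h () ;
      h.defineKey ("Game_SK", "Runner_ID") ;
      h.defineData ("Game_SK", "Runner_ID", "Runs_Scored") ;
      h.defineDone () ;
      dcl hiter ih ("h") ;
      do until (LR) ;
        set dw.Runs (keep = Game_SK Runner_ID) end = LR ;
        _MD5 = md5 (catx ("|", Game_SK, Runner_ID)) ;
        /* skip records whose key belongs to another pass */
        if mod (rank (char (_MD5, 1)), &chunks) ne %eval (&i - 1) then continue ;
        if h.find () ne 0 then Runs_Scored = 0 ;
        Runs_Scored + 1 ;
        h.replace () ;
      end ;
      do while (ih.next () = 0) ;
        output ;
      end ;
      stop ;
    run ;
  %end ;
%mend md5_split_agg ;

%md5_split_agg

data Runner_runs ;
  set _chunk_1-_chunk_&chunks ;
run ;
```

Choosing a number of passes that divides 256 (2, 4, 8, ...) assigns the possible byte values evenly to the passes; since MD5 output bytes are close to uniformly distributed, each pass then handles roughly the same number of distinct keys. The combined output is ordered by pass rather than by key.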

Note that these methods are not mutually exclusive and can be combined. For example, you can both reduce the key portion length via the MD5 function and offload the data portion; any method can be combined with uniform input splitting; and so on.
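To make such a combination concrete, here is a minimal sketch that applies two of the tactics at once to the Dw.Runs/Dw.AtBats join. The key portion holds a single $16 MD5 signature of (Game_SK, Inning, Top_Bot, AB_Number), and the data portion holds only a record identifier into Dw.AtBats, so the satellite variables are read from disk with SET POINT= after a successful search. It assumes the composite key is unique among the Dw.AtBats records with runs (FIND returns a single match), ignores the remote possibility of an MD5 collision, and uses a "|" delimiter in CATX as an illustrative choice. The output name Runs_AtBats_hx is hypothetical. Because POINT= cannot be combined with WHERE processing, the Runs filter is applied with an IF test during loading.

```sas
/* Key portion:  one $16 MD5 signature of the four-variable composite key.
   Data portion: one numeric record identifier (_RID) into dw.AtBats. */
data Runs_AtBats_hx (drop = _: Runs) ;
  length _MD5 $ 16 ;
  if _n_ = 1 then do ;
    dcl hash hx () ;
    hx.defineKey ("_MD5") ;
    hx.defineData ("_RID") ;
    hx.defineDone () ;
    /* index the at bats with runs by their observation numbers */
    do _p = 1 to _nAB ;
      set dw.AtBats (keep = Game_SK Inning Top_Bot AB_Number Runs)
          point = _p nobs = _nAB ;
      if not Runs then continue ;
      _RID = _p ;
      link sign ;
      _rc = hx.add () ;   /* rc captured: a duplicate key is skipped quietly */
    end ;
  end ;
  set dw.Runs ;
  link sign ;
  call missing (Batter_ID, Is_A_Hit, Result) ;
  /* on a successful search, fetch the satellite variables from disk */
  if hx.find () = 0 then do ;
    _q = _RID ;
    set dw.AtBats (keep = Batter_ID Is_A_Hit Result) point = _q ;
  end ;
  return ;
  sign:   /* one routine builds the signature for both files */
  _MD5 = md5 (catx ("|", Game_SK, Inning, Top_Bot, AB_Number)) ;
  return ;
run ;
```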

11.3 Making Use of Existing Key Order

It often happens that the input we intend to process using a hash table is pre-sorted or pre-grouped by one or more leading variables of the composite key in question. For example, consider data set Dw.Runs where each run is recorded as a record. A subset of the file for two arbitrarily selected games is shown below. Note that the column From_obs is not a variable in the file; it just indicates the observations from which the records in the subset come.

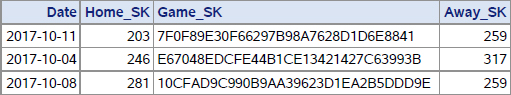

Output 11.1 Chapter 11 Two Games from Dw.Runs

Suppose that we want to process it in some way using the hash object – for example, aggregate or unduplicate it – by the composite key (Game_SK,Top_Bot). In general, to do so, we would create a hash table keyed by the entire composite key and proceed as described earlier in the book according to the type of task at hand.

However, this file, though not sorted or grouped by the whole composite key, is intrinsically sorted by its leading component Game_SK, whose distinct values correspond to approximately equal numbers of distinct key-values of the entire composite key. Since Game_SK is part of the composite key, no two composite key-values can be the same if their Game_SK values differ. In other words, no composite key-value is shared between groups of records with different values of Game_SK. Therefore, we can use Game_SK in the BY statement and process each BY group independently. After each BY group is processed, we add the needed records to the output and clear the hash table to prepare it for processing the next BY group. From the standpoint of memory usage, doing so offers three advantages:

1. The hash table can now be keyed only by Top_Bot rather than by both Game_SK and Top_Bot. This shortens the key portion of the table.

2. Game_SK does not have to be replicated in the data portion. It shortens the data portion of the table.

3. The table only has to hold the information pertaining to the largest BY group rather than to the entire file. This reduces the maximum number of items the hash table must hold.

To see more clearly how this concept works, let us consider three very basic examples:

1. Data aggregation

2. Data unduplication

3. Joining data

11.3.1 Data Aggregation

Suppose that for each game (identified by Game_SK) we would like to find the score by each of the opposing teams. Since each record in the Runs file represents a run, it means that we would need to count the records for the top and bottom of the inning (i.e., the 2 values of Top_Bot) within each game. In other words, we would need to aggregate at each level of composite key (Game_SK,Top_Bot).

From the data aggregation chapter in this book (Chapter 8) we already know how a hash object frontal attack on this task can be carried out:

1. Use a hash table to count the records at each distinct (Game_SK,Top_Bot) key-value level.

2. Output its content when all of the input has been processed.

Program 11.2 Chapter 11 Frontal Attack Aggregation.sas

data Scores (keep = Game_SK Top_Bot Score) ;

if _n_ = 1 then do ;

dcl hash h (ordered:"A") ;

h.defineKey ("Game_SK", "Top_Bot") ;

h.defineData ("Game_SK", "Top_Bot", "Score") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set Dw.Runs (keep = Game_SK Top_Bot) end = LR ;

if h.find() ne 0 then Score = 1 ;

else Score + 1 ;

h.replace() ;

end ;

do while (ih.next() = 0) ;

output ;

end ;

run ;

For detailed explanations of the mechanics of this basic aggregation process, see any of the examples in Chapter 8. Running the program, we get the following output for the two selected games shown in Output 11.1:

Output 11.2 Chapter 11 Dw.Runs Aggregates

Note that, for these two games, hash table H in its final state (before its content is written out to data set Scores) holds 4 items, which corresponds to the number of output records.

However, we know that the input is pre-sorted by Game_SK. Thus, we can reduce both the key portion and data portion lengths by aggregating the same file one Game_SK BY group at a time. The changes from the frontal attack Program 11.2 are shown in bold:

Program 11.3 Chapter 11 Pregrouped Aggregation.sas

data Scores_grouped (keep = Game_SK Top_Bot Score) ;

if _n_ = 1 then do ;

dcl hash h (ordered:"A") ;

h.defineKey ("Top_Bot") ; ❶

h.defineData ("Top_Bot", "Score") ; ❷

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (last.Game_SK) ; ❸

set Dw.Runs (keep = Game_SK Top_Bot) ;

by Game_SK ;

if h.find() ne 0 then Score = 1 ;

else Score + 1 ;

h.replace() ;

end ;

do while (ih.next() = 0) ; ❹

output ;

end ;

h.clear() ; ❺

run ;

The program generates exactly the same output as the frontal attack Program 11.2; for our two sample games, it is identical to Output 11.2 above. Let us go over some focal points of Program 11.3:

❶ When the key portion is defined, the leading component Game_SK of the composite key (Game_SK,Top_Bot) is omitted. Including it is unnecessary since the aggregation occurs by the level of Top_Bot alone within each separate BY value of Game_SK.

❷ The data portion does not contain Game_SK either. Its output value comes from the PDV as populated directly by the SET statement.

❸ The DoW loop, instead of iterating over the whole file, now iterates over one Game_SK BY group per execution of the DATA step (i.e., per iteration of its implied loop). Note that the logical structure of the program remains intact. Also note that if the data were grouped, but not sorted, by Game_SK, we could add the NOTSORTED option to the BY statement.

❹ At this point, hash table H contains the distinct key-values of Top_Bot and respective sums accumulated for the BY group just processed. Hash iterator IH linked to hash table H enumerates the table, and the data portion values of its items are written as records to the output data set Scores_grouped. Every BY group that follows adds its own records to the output file in the same manner.

❺ Now that the needed information (resulting from the processing of the current BY group) is written out, the table is cleared before the processing of the next BY group commences. Thus, the memory occupied by the hash data from the current BY group is released. Therefore, the largest hash memory footprint the program will create is that for the largest BY Game_SK group available in the file.

From the standpoint of saving hash memory, the more uniformly the values of Game_SK are distributed throughout the input file and the more distinct values of Game_SK (i.e., BY groups) we have, the better this method works. Running this program back-to-back with the frontal attack program with the FULLSTIMER system option turned on shows that, in this case, making use of the intrinsic leading key order cuts memory usage roughly by half. With a rather small file like Dw.Runs it may not matter much. However, in an industrial application working against billions of input records such a reduction can make a crucial difference.
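Besides FULLSTIMER, the per-item effect of dropping Game_SK can be checked directly with the hash object's ITEM_SIZE attribute, which reports the size of one item in bytes. The sketch below is our illustration: it only defines the two tables used by Programs 11.2 and 11.3 without loading them, and the exact figures it prints depend on the platform and variable lengths, so none are claimed here. The macro variable name saved_bytes is our own.

```sas
/* Compare bytes per item: frontal attack table vs. pre-grouped table */
data _null_ ;
  if 0 then set dw.Runs (keep = Game_SK Top_Bot) ;  /* host variables */
  Score = 0 ;
  dcl hash full () ;
  full.defineKey ("Game_SK", "Top_Bot") ;
  full.defineData ("Game_SK", "Top_Bot", "Score") ;
  full.defineDone () ;
  dcl hash grouped () ;
  grouped.defineKey ("Top_Bot") ;
  grouped.defineData ("Top_Bot", "Score") ;
  grouped.defineDone () ;
  Full_size    = full.item_size ;
  Grouped_size = grouped.item_size ;
  Saved        = Full_size - Grouped_size ;
  put Full_size= Grouped_size= Saved= ;
  call symputx ("saved_bytes", Saved) ;
  stop ;
run ;
```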

Even if the distribution of the leading key(s) values is skewed, using the existing pre-grouping still saves hash memory by shortening both the key and data portions. In the examples shown above, the savings amount to 32 bytes per hash entry. Again, it may sound trivial but can be very significant if the items in a hash summary table should number in tens or hundreds of millions, as does indeed happen in reality. For example, if Bizarro Ball offered these services to college-level teams and they all accepted the offer, there would be orders of magnitude more games and thus Game_SK values.

This is all the more true because the composite key in question may have more than two variables and may be pre-grouped by more than one of them. In general, a composite key (at whose level aggregation is requested) consists of a number of leading key variables KL (by which the file is pre-grouped) and a number of trailing key variables KT:

(KL1, KL2, ..., KLn, KT1, KT2, ..., KTm)

In such a general case, both the key and data portions of the hash table would include:

("KT1", "KT2", ..., "KTm")

and the corresponding DoW loop similar to the loop shown in Program 11.3 would look as follows:

do until (last.KLn) ;

set ... ;

by KL1 KL2 ... KLn ;

...

end ;

The more leading keys KLn (by which the file is pre-grouped) are available relative to the number of the trailing keys KTm, the more hash memory this technique saves compared to the frontal attack by the whole composite key.
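As a concrete instance of this template, the sketch below counts runs per half-inning, i.e., at the level of (Game_SK, Inning, Top_Bot), with KL1 = Game_SK, KL2 = Inning, and KT1 = Top_Bot. It assumes, as this chapter does for the join example, that Dw.Runs is sorted by Game_SK and Inning; the output name Half_inning_runs is our own.

```sas
data Half_inning_runs (keep = Game_SK Inning Top_Bot Runs_Scored) ;
  if _n_ = 1 then do ;
    dcl hash h (ordered:"A") ;
    h.defineKey ("Top_Bot") ;                 /* trailing key KT1 only */
    h.defineData ("Top_Bot", "Runs_Scored") ;
    h.defineDone () ;
    dcl hiter ih ("h") ;
  end ;
  do until (last.Inning) ;                    /* last.KLn */
    set dw.Runs (keep = Game_SK Inning Top_Bot) ;
    by Game_SK Inning ;                       /* KL1 KL2 */
    if h.find () ne 0 then Runs_Scored = 0 ;
    Runs_Scored + 1 ;
    h.replace () ;
  end ;
  do while (ih.next () = 0) ;                 /* Game_SK, Inning come from the PDV */
    output ;
  end ;
  h.clear () ;                                /* release memory before next group */
run ;
```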

11.3.2 Data Unduplication

The same technique works, almost verbatim, for data unduplication. Suppose that we want to use the same file Dw.Runs to find, for each game, the inning and its half (i.e., top vs. bottom) in which each scoring player scored first. Let us look, for example, at the records for the first game in the file Dw.Runs (some variables are hidden, and the column From_obs indicates the respective observation numbers in the file):

Output 11.3 Chapter 11 Records for the First Game in Dw.Runs

From the data processing viewpoint, our task means that, for each game and runner, we need to output only the first record in which the corresponding Runner_ID value is encountered. Thus, in the sample above, we would need to keep records 1, 2, 3, 5, 8, 11, and 13 and discard the rest. In other words, we would unduplicate the file by keeping the first record for each distinct key-value of the composite key (Game_SK,Runner_ID).

If file Dw.Runs above were not pre-sorted by Game_SK, we would have to include the entire composite key (Game_SK,Runner_ID) in the key portion to execute a frontal attack as shown in Program 11.4 below. It uses the same logic as Program 10.2 in Chapter 10.

Program 11.4 Chapter 11 Frontal Attack Unduplication.sas

data First_scores ;

if _n_ = 1 then do ;

dcl hash h () ;

h.defineKey ("Game_SK", "Runner_ID") ;

h.defineData ("_N_") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set dw.Runs end = LR ;

if h.check() = 0 then continue ;

output ;

h.add() ;

end ;

run ;

The logic in the DO loop requires no more than checking whether the composite key-value from the current record of Dw.Runs is in table H. Therefore, the CHECK method is called to perform the Search operation, and the data portion plays no functional role. However, since a hash table cannot exist without a data portion (if DEFINEDATA is not called, the key variables are placed in it by default), we want to make it as short as possible to minimize the hash memory footprint. The simplest way to do so is to define the data portion with a single numeric variable. Since in the context of the program it never interacts with the PDV, it does not matter which one we pick. The automatic variable _N_, chosen above as the dummy placeholder, suits the purpose well since it is (a) numeric and (b) always present in the PDV. Therefore, no parameter type matching issues can arise.

For the input records of the game shown in Output 11.3, the program generates the following output:

Output 11.4 Chapter 11 Dw.Runs First Game Subset Unduplicated

However, if we make use of the fact that the file is intrinsically sorted by Game_SK, the program can be re-coded as shown in Program 11.5 below (the changes from Program 11.4 are shown in bold). This program generates exactly the same output as the frontal attack Program 11.4 above.

Program 11.5 Chapter 11 Pregrouped Unduplication.sas

data First_scores_grouped ;

if _n_ = 1 then do ;

dcl hash h () ;

h.defineKey ("Runner_ID") ; ❶

h.defineData ("_iorc_") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (last.Game_SK) ; ❷

set dw.Runs (keep = Game_SK Inning Top_Bot Runner_ID) ;

by Game_SK ; ❸

if h.check() = 0 then continue ;

output ;

h.add() ;

end ;

h.clear() ; ❹

run ;

❶ Note that Game_SK is no longer included in the key portion of table H.

❷ Instead of looping through the entire file, the DoW loop now iterates through one Game_SK BY group per DATA step execution.

❸ This is technically made possible by the BY statement, which sets last.Game_SK=1 each time the last game record is read and thus terminates the DoW loop.

❹ After the current game is processed, the hash table is cleared of all items, ceding the memory occupied by them to the operating system. Program control is then passed to the top of the DATA step, and the next game is processed.

Just like with data aggregation, taking advantage of partial pre-sorting saves hash memory in two ways:

● It reduces the key portion length by the summary length of the pre-sorted leading keys (in this case, just Game_SK).

● The maximum number of items in the table corresponds to the game with the largest number of distinct runners. Contrast this with the frontal attack program above, where the final number of items is the number of distinct (Game_SK,Runner_ID) combinations in the whole file. Note that, from the perspective of understanding the data, the number of runners in a given game can never exceed the total number of players on both teams. Thus, we have a virtual guarantee of an upper bound on the number of items in the hash table at any given point in the data.
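This upper bound can also be observed empirically by recording the largest value of the NUM_ITEMS attribute reached just before each CLEAR call. The sketch below is our own illustration: it reuses the pre-grouped logic of Program 11.5 but writes only a log line and a macro variable (max_items, a name we chose) instead of an output data set.

```sas
data _null_ ;
  if _n_ = 1 then do ;
    dcl hash h () ;
    h.defineKey ("Runner_ID") ;
    h.defineData ("_N_") ;
    h.defineDone () ;
  end ;
  retain Max_items 0 ;
  do until (last.Game_SK) ;
    set dw.Runs (keep = Game_SK Runner_ID) end = LR ;
    by Game_SK ;
    if h.check () ne 0 then h.add () ;
  end ;
  _items = h.num_items ;                 /* distinct runners in this game */
  Max_items = max (Max_items, _items) ;
  h.clear () ;
  if LR then do ;
    put "Largest per-game table: " Max_items= ;
    call symputx ("max_items", Max_items) ;
  end ;
run ;
```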

11.3.3 Joining Data

In the cases of data aggregation and unduplication, we have dealt with a single file partially pre-grouped by a leading component of the composite key. An inquisitive SAS programmer may ask whether one can take advantage, in a similar fashion, of partial pre-grouping if both files to be joined by using a hash table are identically pre-grouped by one or more leading keys. The answer to this question is "Yes". However, the implementation is a tad more involved, as in this case it requires interleaving the files by the pre-grouped key components and a bit more programming gymnastics.

To illustrate how this can be done, suppose that, for each runner record in data set Dw.Runs, we would like to obtain a number of related pieces of information from those records of data set Dw.AtBats where the value of variable Runs is not zero (indicating one or more runs). For example, we might want to know:

● The batter, i.e., which batter was responsible for the runner scoring.

● "How deep in the count" (reasonably standard baseball terminology for how many pitches there were in the at bat) the at bat went and what the ball/strike count was. The Dw.AtBats fields furnishing this information are Number_of_Pitches, Balls, and Strikes.

● Whether these were two out runs, i.e., scored when there had already been two outs. The Dw.AtBats field for this is Outs. (The batters and runners who contribute to runs with two outs are often considered to be clutch players.)

● Whether the runner scored as the result of a hit and the type of hit indicated by the values of variables Is_A_Hit and Result from file Dw.AtBats.

The files Dw.Runs and Dw.AtBats are linked by the following composite key:

(Game_SK, Inning, Top_Bot, AB_Number)

For demo purposes, we have picked three games (the same ones from both files) and a few rows from each game. The samples are shown below in Output 11.5 and Output 11.6. Note that the column From_Obs is not a variable; it is included below just to show which observations have been picked. For Dw.Runs:

Output 11.5 Chapter 11 Dw.Runs Subset for Joining

For Dw.AtBats with Runs>0 and non-key variables limited to Batter_ID, Result, and Is_A_Hit:

Output 11.6 Chapter 11 Dw.AtBats Subset for Joining

To join the files by a hash object using a frontal attack, as in Program 6.6 Chapter 6 Left Join via Hash.sas, we have to:

● Key the hash table by all of the composite key variables.

● Place all of them, together with the satellite variables from Dw.AtBats, into the data portion.

Using this approach for the task at hand, we could proceed as follows.

Program 11.6 Chapter 11 Frontal Attack Join.sas

%let comp_keys = Game_SK Inning Top_Bot AB_Number ; ❶

%let data_vars = Batter_ID Is_A_Hit Result ;

%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ; ❷

data Join_Runs_AtBats (drop = _: Runs) ;

if _n_ = 1 then do ;

dcl hash h (multidata:"Y", ordered:"A") ;

do _k = 1 to countw ("&comp_keys") ; ❸

h.defineKey (scan ("&comp_keys", _k)) ;

end ;

do _k = 1 to countw ("&data_vars") ; ❹

h.defineData (scan ("&data_vars", _k)) ;

end ;

h.defineDone() ;

do until (LR) ;

set dw.AtBats (keep=&comp_keys &data_vars Runs

where=(Runs)) end = LR ;

h.add() ;

end ;

end ;

set dw.Runs ;

call missing (&data_list, _count) ;

do while (h.do_over() = 0) ;

_count = sum (_count, 1) ;

output ;

end ;

if not _count then output ;

run ;

❶ Assign the lists of key variables and satellite variables (from Dw.AtBats) to macro variables. Their resolved values are used later in the program instead of hard-coding the variable lists repeatedly.

❷ Create a comma-separated list of variable names in data_vars (using macro language facilities that we agreed to discuss with the IT users later) to be used in the CALL MISSING routine later.

❸ Place all the composite key variables in the key portion. Note that instead of passing their names to the DEFINEKEY method as a single comma-separated list of quoted names, the hash variables are added one at a time in a DO loop by passing a character expression with the SCAN function to the method call.

❹ Place the satellite variables into the data portion using the same looping technique.
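To see what the TRANWRD-based %LET in ❷ resolves to, it can be run on its own, as in the short sketch below. Here %STR( ) supplies the single blank to be replaced, and %STR(,) supplies the comma so that it is not taken as an argument separator by %SYSFUNC.

```sas
%let data_vars = Batter_ID Is_A_Hit Result ;
%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ;
%put &=data_list ;   /* writes DATA_LIST=Batter_ID,Is_A_Hit,Result */
```

Note that the conversion assumes the names in data_vars are separated by single blanks; two consecutive blanks would produce two consecutive commas.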

In general, the program follows the principle of using a hash table to join files described in section 6.3.5 “Unique-Key Joins.” Running it results in the following joined output (for the sample rows from Output 11.5 and Output 11.6):

Output 11.7 Chapter 11 Dw.Runs and Dw.AtBats Subsets Join Result

From the standpoint of hash memory usage, this head-on approach has two disadvantages:

● All components of the composite key (Game_SK,Inning,Top_Bot,AB_Number) with the summary length of 40 bytes must be present in both the key and the data portions.

● The number of items in table H equals the total number of records in the file Dw.AtBats.

However, since files Dw.Runs and Dw.AtBats are both pre-sorted by their common leading key variables (Game_SK,Inning), both issues above can be addressed via processing each (Game_SK,Inning) BY group separately. But since now we have to deal with two files, it involves the extra technicality of interleaving them in the proper order and treating the records from each file differently within the same BY group. The differences between the head-on approach and this one are shown in bold:

Program 11.7 Chapter 11 Pregrouped Join.sas

%let comp_keys = Game_SK Inning Top_Bot AB_Number ;

%let data_vars = Batter_ID Is_A_Hit Result ;

%let data_list = Batter_ID,Is_A_Hit,Result ;

%let sort_keys = Game_SK Inning ; ❶

%let tail_keys = Top_Bot Ab_Number ; ❷

%let last_key = Inning ; ❸

data Join_Runs_AtBats_grouped (drop = _: Runs) ;

if _n_ = 1 then do ;

dcl hash h (multidata:"Y", ordered:"A") ;

do _k = 1 to countw ("&tail_keys") ;

h.defineKey (scan ("&tail_keys", _k)) ; ❹

end ;

do _k = 1 to countw ("&data_vars") ;

h.defineData (scan ("&data_vars", _k)) ;

end ;

h.defineDone() ;

end ;

do until (last.&last_key) ; ❺

set dw.atbats (in=A keep=&comp_keys &data_vars

Runs where=(Runs)) ❻

dw.runs (in=R keep=&comp_keys Runner_ID)

;

by &sort_keys ;

if A then h.add() ; ❼

if not R then continue ; ❽

call missing (&data_list, _count) ; ❾

do while (h.do_over() = 0) ;

_count = sum (_count, 1) ;

output ;

end ;

if not _count then output ;

end ;

h.clear() ; ❿

run ;

❶ Assign the list of the leading key variable names by which the files are pre-sorted to macro variable sort_keys.

❷ Assign the list of the key variables names, except for those in sort_keys, to the macro variable tail_keys.

❸ Assign the name of the last key variable in sort_keys to the macro variable last_key. It is needed to properly specify the last.<variable-name> in the UNTIL condition of the DoW loop.

❹ Define the key portion only with the key variables in the tail_keys list – i.e., with those not in the sort_keys list. Note that, just as in the frontal attack program, they are defined dynamically one at a time.

❺ Use the DoW loop to process one (Game_SK,Inning) key-value BY group per DATA step execution. Note that the UNTIL condition includes the trailing key variable last.&last_key from the sort_keys list.

❻ Interleave Dw.AtBats and Dw.Runs – exactly in this sequence – using the key variables in the sort_keys list as BY variables. Doing so forces all the records from Dw.AtBats to come before those from Dw.Runs within each BY group. The temporary variables A and R created by the IN= data set option take binary values (1,0), identifying the records from Dw.AtBats if A=1 (while R=0) and the records from Dw.Runs if R=1 (while A=0).

❼ Within each BY group, reading the records from Dw.AtBats before any records from Dw.Runs allows using the IF condition to load the hash table with the key and data values from Dw.AtBats only. If some BY group has no records from Dw.AtBats, so be it – all it means is that the hash table for this BY group will have no items.

❽ If this condition evaluates true, the record is not from Dw.Runs. Hence, we can just proceed to the next record.

❾ Otherwise, we use the standard Keynumerate operation to look up the current (Top_Bot,AB_Number) key-value pair coming from Dw.Runs in the hash table. If it is there, we retrieve the corresponding values of the satellite hash variables (listed in data_vars) into their PDV host variables. The loop outputs as many records as there are hash items with the current (Top_Bot,AB_Number) key-value in the current BY group. Calling the MISSING routine ensures that, if there is no match, the satellite variables from the data_vars list for the current output record have missing values regardless of the values that may have been retrieved in preceding records. (This is necessary to ensure proper output in the case of a left join.) The MISSING routine also initializes the value of _count for each new (Top_Bot,AB_Number) key-value.

Note that the "if not _count then output;" statement is needed only if a left join, rather than an inner join, is requested. With these two particular files, there is no difference, as every composite key in the comp_keys list in one file has its match in the other.

❿ After the current BY group is processed, the Clear operation is performed to empty out the table before it is loaded from the next BY group. Thus, in this program the maximum number of items in the hash table is controlled not by the total number of records in file Dw.AtBats but by the number of records in its largest (Game_SK,Inning) BY group.
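Whether the Clear operation pays off can be gauged before running the join by comparing the size of the largest (Game_SK,Inning) BY group with the total number of items a table loaded from all the same records would hold. Below is a minimal sketch in PROC SQL. It applies the same where=(Runs) filter to Dw.AtBats as the program does, and the macro variable names max_group_items and total_items are illustrative only:

```sas
proc sql noprint ;
  /* Count the Dw.AtBats records (with Runs) in each (Game_SK,Inning) group, */
  /* then take the largest group size and the total over all groups          */
  select max (n), sum (n)
    into :max_group_items trimmed, :total_items trimmed
    from (select count (*) as n
            from dw.AtBats (where = (Runs))
            group by Game_SK, Inning) ;
quit ;

%put NOTE: Largest BY group: &max_group_items items, all groups: &total_items items ;
```

The first number bounds the item count of table H in Program 11.7. The second is what a single table loaded from the same filtered records would hold.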

This program generates exactly the same output (Output 11.7) as the frontal attack Program 11.6.

Note that instead of hard-coding the lists data_list, tail_keys, and last_key, they can be composed automatically using the macro function %SYSFUNC:

%let comp_keys = Game_SK Inning Top_Bot AB_Number ;

%let data_vars = Batter_ID Is_A_Hit Result ;

%let sort_keys = Game_SK Inning ;

%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ; ❶

%let tail_keys = %sysfunc (tranwrd (&comp_keys, &sort_keys, %str())) ; ❷

%let last_key = %sysfunc (scan (&sort_keys, -1)) ; ❸

❶ Insert commas between the elements of the data_vars list by replacing the blanks between them with commas using the TRANWRD function.

❷ Take the sort keys out of the comp_keys list by replacing their names with single blanks using the TRANWRD function.

❸ Extract the trailing element from the sort_keys list using the SCAN function with a negative count (-1 means the first element counting from the right instead of the left).

From now on, we are going to use this more robust functionality instead of hard-coded lists.
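Because these lists are now built by functions rather than typed in, it is worth echoing them to the log once before a DATA step relies on them. The following is a minimal sketch that reuses the statements above. With the lists shown, data_list should resolve to Batter_ID,Is_A_Hit,Result and last_key to Inning. The value of tail_keys should contain Top_Bot AB_Number, possibly preceded by a blank left over from the replacement.

```sas
%let comp_keys = Game_SK Inning Top_Bot AB_Number ;
%let data_vars = Batter_ID Is_A_Hit Result ;
%let sort_keys = Game_SK Inning ;

%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ;
%let tail_keys = %sysfunc (tranwrd (&comp_keys, &sort_keys, %str())) ;
%let last_key  = %sysfunc (scan (&sort_keys, -1)) ;

/* Echo the derived lists to the log before any DATA step relies on them */
%put NOTE: data_list=&data_list ;
%put NOTE: tail_keys=&tail_keys ;
%put NOTE: last_key=&last_key ;
```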

Although this program and the frontal attack Program 11.6 generate the same output, their hash memory usage is quite different. Tested under X64_7PRO, the frontal attack step consumes approximately 10 times more memory (about 12 megabytes) than the step making use of the pre-sorted order. While a dozen megabytes of RAM may be a pittance by today's standards, with much bigger data an order-of-magnitude reduction in hash memory footprint can make the difference between a program that delivers its output on time and one that fails to run to completion.

11.4 MD5 Hash Key Reduction

In real-life industrial applications, data often needs to be processed by a number of long composite keys with numerous components of both numeric and character type. A typical example of such a need is pre-aggregating data for online reporting at many levels of composite categorical variables. It is not uncommon in such cases to see a composite key grow to the length of hundreds of bytes. A large hash table keyed by such a multi-component key can easily present a serious challenge to the available memory resources.

This is all the more true because the entry size of a hash table in bytes does not merely equal the sum of the lengths of the variables in its key and data portions but usually exceeds it by a sizable margin. For example, let us consider the hash table below, keyed by 20 numeric variables with only a single numeric variable in its data portion, and use the ITEM_SIZE attribute to find out what the resulting hash entry size will be. Then let us load the table with 1 million items and see if the DATA step memory footprint reported in the log is what we intuitively expect it to be.

Program 11.8 Chapter 11 Hash Entry Size Test.sas

data _null_ ;

array KN [20] (20 * 1) ;

dcl hash h() ;

do i = 1 to dim (KN) ;

h.defineKey (vname(KN[i])) ;

end ;

h.defineData ("KN1") ;

h.definedone() ;

Hash_Entry_Size = h.item_size ;

put Hash_Entry_Size= ;

do KN1 = 1 to 1E6 ;

h.add() ;

end ;

run ;

Running this step (on the X64_7PRO platform) results in the following printout in the SAS log:

Hash_Entry_Size=208

It would seem that, with 21 hash variables of 8 bytes apiece (20 in the key portion plus KN1 in the data portion), the hash entry size would be 168 bytes; yet in reality it is 208. Likewise, with 1 million items, we would expect a memory footprint of about 168 megabytes, and yet the FULLSTIMER option reports that in actuality it is 208 megabytes, i.e., about 25 percent larger. Though the hash entry size for a given number, length, and data type of hash variables varies depending on the operating system (and whether it is 32-bit or 64-bit), the tendency of the hash memory footprint to be larger than perceived persists across the board.

Note that in order to determine the hash table entry size using the ITEM_SIZE attribute, it is unnecessary to load even a single item into the table. In fact, it makes good sense to do it against a (properly defined and created) empty table at the program planning stage if odds are that the table can be loaded with a lot of items. Estimating their future number and multiplying it by the hash entry size gives a fairly accurate idea of how much memory the table will consume when the program is actually run. In turn, the result may lead us to decide ahead of time whether any memory-saving techniques described here should be employed to reduce the hash memory footprint.
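The planning-stage estimate described above can be sketched as follows. The sketch builds an empty table keyed and defined like the frontal attack join table (composite key in the key portion, satellite variables in the data portion) and multiplies its ITEM_SIZE by an item count taken from the file's metadata. Using the observation count of Dw.AtBats as the item count is an assumption that gives a rough upper bound. The variable names entry_size, n_items, and est_MB are illustrative.

```sas
data _null_ ;
  /* Make the host variables known to the compiler without reading any data */
  if 0 then set dw.AtBats (keep = Game_SK Inning Top_Bot AB_Number
                                  Batter_ID Is_A_Hit Result) ;
  dcl hash h () ;
  h.defineKey  ("Game_SK", "Inning", "Top_Bot", "AB_Number") ;
  h.defineData ("Batter_ID", "Is_A_Hit", "Result") ;
  h.defineDone () ;
  entry_size = h.item_size ;              /* bytes per item; table is empty */
  /* Rough upper bound for the item count: logical observations in the file */
  _dsid   = open ("dw.AtBats") ;
  n_items = attrn (_dsid, "NLOBS") ;
  _rc     = close (_dsid) ;
  est_MB  = entry_size * n_items / 1024**2 ;
  put entry_size= n_items= est_MB= 12.1 ;
  call symputx ("est_MB", est_MB) ;       /* keep the estimate for later use */
  stop ;
run ;
```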

Table H used in the test above is actually rather small. The authors have encountered real-life projects where aggregate hash tables had to be keyed by composite keys with hash entry lengths upward of 400 bytes and would grow to hundreds of gigabytes of occupied memory if special measures to shorten their entries were not taken. One such measure, described above, makes use of an existing pre-sorted input order. However, in the situations where no such order exists, no variables can be relegated from the key portion of the hash table to the BY statement.

Thankfully, there exists another mechanism by means of which all key variables of the table (i.e., its composite key) can be effectively replaced by a single key variable of length $16 regardless of the number, data type, and lengths of the composite key components. "Effectively" means that from the standpoint of the data processing task result, using the replacement hash key is equivalent to using the composite key it replaces.

11.4.1 The General Concept

SAS software offers a number of one-way hash functions (note that, despite the name and the use of hashing algorithms, they are not related to the SAS hash object per se). The simplest and fastest of them is the MD5 function, which implements the well-known MD5 message-digest algorithm. It operates in the following manner:

signature = MD5 (<character_expression>) ;

If the defined length of the character variable signature is greater than 16, MD5 populates its first 16 bytes with the result and pads the rest with trailing blanks. This 16-byte string (called the MD5 signature) is, for all practical purposes, different for every distinct value of the argument: a given character string always produces the same MD5 signature, and different strings produce different ones. (We will briefly address the theoretical possibility of deviation from this rule in section 11.10.1 “MD5 Collisions and SHA256.”)

The one-to-one relationship between the values of an MD5 argument and its response means that if we need to key a hash table by a composite key, we can instead:

● Concatenate its components into a character expression.

● Pass the expression to the MD5 function.

● Use its 16-byte signature in the key portion as a single simple key instead of the original composite key.
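The three steps can be tried out on literal values. In the minimal sketch below, the component values are made up. Two identical composite key-values produce the same signature, and a composite key differing in a single component does not. The $HEX32. format is used only to make the 16 binary bytes printable. The log shows same=1 diff=1.

```sas
data _null_ ;
  length _MD5a _MD5b _MD5c $ 16 ;
  /* Hypothetical composite key-values: (League, Away_SK, Home_SK) */
  _MD5a = md5 (catx (":", "AL", 101, 202)) ;
  _MD5b = md5 (catx (":", "AL", 101, 202)) ;   /* same components        */
  _MD5c = md5 (catx (":", "AL", 101, 203)) ;   /* one component differs  */
  same = (_MD5a = _MD5b) ;
  diff = (_MD5a ne _MD5c) ;
  put same= diff= ;
  put _MD5a= $hex32. ;    /* the 16 binary bytes shown as 32 hex digits */
  call symputx ("same", same) ;
  call symputx ("diff", diff) ;
run ;
```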

The advantage of this approach is a significant reduction of the key portion length and, consequently, of the entire hash entry length. Suppose, for instance, that the total length of the composite key in the key portion is 400 bytes, 25 times the length of its 16-byte MD5 replacement key. Due to the internal hash object storage rules, this does not mean that the hash entry length will actually be reduced 25 times. For example, if the length of the keys is 400 bytes and the data portion has one 8-byte variable, replacing the composite key by its MD5 signature results in a total hash entry size of 64 bytes (as returned by the ITEM_SIZE attribute on the X64_7PRO platform). While this is not a 25-fold reduction (which would be great, of course), the resulting 7-fold reduction of the hash memory footprint is quite significant as well.

The concept delineated here is not unique to a particular data processing task. On the contrary, it applies to a great variety of them. As an alert IT user noticed, it is illustrated below using various data processing tasks. The intent is not to repeat what is already known but to drive home the point that the same concept works across the board and to demonstrate how it is implemented under different guises, at the same time offering the IT users a number of sample programs where specific technicalities of those guises have already been developed.

11.4.2 MD5 Key Reduction in Sample Data

Note that essentially the same technique has already been used in this book to generate sample data. To wit, in the program Chapter 5 GenerateSchedule.sas, the surrogate 16-byte character key Game_SK is created using the following expression:

Game_SK = md5 (catx (":", League, Away_SK, Home_SK, Date,Time)) ;

You can access the blog entry that describes this program from the author page at http://support.sas.com/authors. Select either “Paul Dorfman” or “Don Henderson.” Then look for the cover thumbnail of this book, and select “Blog Entries.” This variable is used as a universal surrogate key linking data files AtBats, Lineups, Pitches, and Runs to each other and to the data file Games. The latter cross-references each distinct value of Game_SK with the corresponding distinct value of composite key (League,Away_SK,Home_SK,Date,Time) along with other variables related to the specific game. Using this approach affords a number of advantages:

● Identifies each particular game in the data sets listed above using a single key instead of five separate keys.

● Reduces the record length of each of the data sets by (5*8-16)=24 bytes.

● Makes it easier and simpler to process (i.e., aggregate, unduplicate, join, etc.) these files anytime the program needs a game identifier, regardless of whether the hash object is used in the process or not.

● If the hash object is used, it needs a single $16 key Game_SK instead of the composite key with 5 components of 8 bytes each, thus reducing the hash table entry length. Also, it makes code shorter and clearer.

● This approach also provides great flexibility should the key structure need to change. For example, if the components of the key are augmented with additional variables, those fields can simply be included. For the example shown above that creates Game_SK, if an additional key (e.g., League Type: Majors, AAA, AA, A, Collegiate) is needed, the field League_Type would be included in the CATX function above and in our Games dimension table. No other program changes would be needed.

However, while having such a key as Game_SK already available as part of the data is of great utility, it does not make using the MD5 function to reduce the hash key portion length even further on the fly any less desirable or necessary. First, not all data collections have such a convenient provision as Game_SK in our sample data. Second, as we have seen in previous examples, data processing may involve more hash keys in addition to Game_SK. The examples in the rest of this section illustrate how this technique is used in different data processing tasks.

In the following subsections we will illustrate the applicability of this approach to data aggregation, data unduplication and joining data just as we did in the section “Making Use of Existing Key Order”.

11.4.3 Data Aggregation

Suppose that we would like to expand the task of finding the score for each game already discussed above and find the score for each game and each inning using data set Dw.Runs. In other words, we need to count the records in the bottom and top of each inning of each game. To recap, for the two selected games shown in Output 11.1, the input data with the added variable Inning looks as follows:

Output 11.8 Chapter 11 Two Games from Dw.Runs

Using the hash object to solve the problem head-on is practically the same as in the first step of Program 11.3 Chapter 11 Pregrouped Aggregation.sas. The only difference is that now, in addition to Game_SK, we have to include both Top_Bot and Inning in the key portion and data portion:

Program 11.9 Chapter 11 Frontal Attack Aggregation Inning.sas

data Scores_game_inning (keep = Game_SK Inning Top_Bot Score) ;

if _n_ = 1 then do ;

dcl hash h (ordered:"A") ;

h.defineKey ("Game_SK", "Inning", "Top_Bot") ;

h.defineData ("Game_SK", "Inning", "Top_Bot", "Score") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set Dw.Runs (keep = Game_SK Inning Top_Bot) end = LR ;

if h.find() ne 0 then Score = 1 ;

else Score + 1 ;

h.replace() ;

end ;

do while (ih.next() = 0) ;

output ;

end ;

run ;

The output from the program (for our small visual input sample) looks as follows:

Output 11.9 Chapter 11 Frontal Attack Aggregation by (Game_SK,Top_Bot,Inning)

Note that all components of the composite key (Game_SK,Inning,Top_Bot) are included in the data portion of hash table H. Let us now use the MD5 function to replace the entire composite key with a single 16-byte key _MD5. The changes to the frontal attack Program 11.9 are shown below in bold:

Program 11.10 Chapter 11 MD5 Key Reduction Aggregation.sas

%let cat_length = 36 ; ❶

data Scores_game_inning_MD5 (keep = Game_SK Inning Top_Bot Score) ;

if _n_ = 1 then do ;

dcl hash h () ; ❷

h.defineKey ("_MD5") ;

h.defineData ("Game_SK", "Inning", "Top_Bot", "Score") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set Dw.Runs end = LR ;

length _concat $ &cat_length _MD5 $ 16 ; ❶

_concat = catx (":", Game_SK, Inning, Top_Bot) ; ❸

_MD5 = MD5 (_concat) ;

if h.find() ne 0 then Score = 1 ; ❹

else Score + 1 ;

h.replace() ;

end ;

do while (ih.next() = 0) ;

output ;

end ;

run ;

❶ Sizing the _concat variable as $36 to accept the ensuing concatenation accounts for the defined lengths of Game_SK ($16) and Top_Bot ($1), 17 bytes for the numeric variable Inning, plus 2 bytes for the CATX separator characters. With only 3 key variables in question, it is not difficult to do; but with many more, this kind of hard-coding can become error-prone. So, instead of doing that, an astutely lazy programmer would notice that the key variables come from a SAS data set and use the system table Dictionary.Columns (or view Sashelp.Vcolumn) to populate the macro variable cat_length programmatically.

❷ No ORDERED argument tag is used here since the order by the _MD5 values is different from the order by the original composite key replaced by _MD5, and so ordering the output by _MD5 offers no benefit.

❸ Concatenate the components of the composite key using a colon as a separator. (Using the functions of the CAT family for this purpose is not without caveats, as discussed in section 11.10 “MD5 Argument Concatenation Ins and Outs.”) Then feed variable _concat to the MD5 function. Its response _MD5 will now be used as the key-value.

❹ Invoke the standard data aggregation mechanism, except that now we are looking up, not the value of the original composite key, but its MD5 signature _MD5. As the relationship between them is one-to-one, it makes no difference for which one's distinct values Score is accumulated. Note that table H in this form is essentially a cross-reference between the _MD5 values in the key portion and the (Game_SK,Inning,Top_Bot) tuple in the data portion. Though the aggregation is done by the unique values of _MD5, the related values of the original composite key (Game_SK,Inning,Top_Bot) end up in the output.

For our two sample games, the program produces the following output:

Output 11.10 Chapter 11 MD5 Key Reduction Aggregation by (Game_SK,Top_Bot,Inning)

Note that its record order is different from that coming out of the frontal attack program. This is because the table is keyed by _MD5 whose values, though uniquely related to those of (Game_SK,Inning,Top_Bot), follow a totally different order. However, it does not present a problem because:

● Except for the inconvenience of eyeballing the unsorted result, the output is exactly the same.

● If we still want the file sorted by the original keys, it is not too much work since aggregate files are usually much smaller than unaggregated input. Also, the aggregated file can be indexed instead.

● If we wanted to display the values of the component variables used to create Game_SK, we could simply use it as a key to do a table lookup against the data set Games to get the desired values.
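Callout ❶ of Program 11.10 suggests deriving cat_length from the column metadata instead of hard-coding it. The following is a minimal sketch of that idea. It assumes the same sizing rule: the defined length for a character variable, 17 bytes for a numeric one, and one byte per colon separator. The macro variable key_vars is a hypothetical helper. For Dw.Runs, the sketch should reproduce the hand-computed value of 36.

```sas
%let key_vars = "GAME_SK", "INNING", "TOP_BOT" ;   /* names in uppercase */

proc sql noprint ;
  /* Sum the per-variable sizes, then add one byte per separator */
  select sum (case when type = "num" then 17 else length end)
         + count (*) - 1
    into :cat_length trimmed
    from dictionary.columns
    where libname = "DW" and memname = "RUNS"
      and upcase (name) in (&key_vars) ;
quit ;

%put &=cat_length ;
```

Because Dictionary.Columns stores library and member names in uppercase, the WHERE clause compares against uppercase literals and upcases the variable names.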

11.4.4 Data Unduplication

Exactly the same principle of using the MD5 function applies if we want to shorten the key portion when unduplicating data. Let us recall the DATA step of Program 11.4 Chapter 11 Frontal Attack Unduplication.sas in section 11.3.2. There, we used the composite hash key (Game_SK,Runner_ID) to find and keep the records where each of its distinct key-values occurs first.

Using the MD5 function, we can now replace the composite key (Game_SK,Runner_ID) with a single 16-byte key _MD5 (the changes from Program 11.4 are shown in bold):

Program 11.11 Chapter 11 MD5 Key Reduction Unduplication.sas

%let cat_length = 34 ;

data First_scores_MD5 (drop = _:) ;

if _n_ = 1 then do ;

dcl hash h() ;

h.defineKey ("_MD5") ;

h.defineData ("_N_") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set dw.Runs end = LR ;

length _concat $ &cat_length _MD5 $ 16 ;

_concat = catx (":", Game_SK, Runner_ID) ;

_MD5 = MD5 (_concat) ;

if h.check() = 0 then continue ;

output ;

h.add() ;

end ;

run ;

The principal differences between the head-on and MD5 approaches are these:

● With the former, we store the distinct composite key-values (Game_SK,Runner_ID) in the hash table and search the table to see if the key-value from the current record is already in the table.

● With the latter, we use the fact that the composite key-values of (Game_SK,Runner_ID) and _MD5 are related as one-to-one and key the table with the latter.

Since in this case the sequence of the input records defines the output sequence, the relative record orders in both are the same (in other words, the unduplication process is stable), and the replacement of the composite key by _MD5 does not affect it. Note that if the SORT or SQL procedure were used to accomplish the task, the same record order would not (or would not be guaranteed to) be preserved.

For data unduplication the benefit of using the MD5 key-reduction technique is aided by the fact that nothing needs to be retrieved from the data portion (the very reason it is merely filled with the dummy placeholder _N_). So, the reduction of the hash entry length as a whole is roughly proportional to the reduction of the key portion length. In the example above, the shortening of the key length from 24 bytes to 16 may look trivial. However, under different circumstances it can reach an order of magnitude or more.
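The effect on the entry size itself can be checked with ITEM_SIZE on two empty tables, one keyed like Program 11.4 and one like Program 11.11. This is a minimal sketch. The lengths $16 for Game_SK and 8 for the numeric Runner_ID are assumed from the 24-byte figure above, and the actual sizes reported depend on the platform.

```sas
data _null_ ;
  length Game_SK _MD5 $ 16 Runner_ID 8 ;
  call missing (Game_SK, _MD5, Runner_ID) ;
  /* Table keyed by the composite key, as in the frontal attack */
  dcl hash h_comp () ;
  h_comp.defineKey ("Game_SK", "Runner_ID") ;
  h_comp.defineData ("_N_") ;
  h_comp.defineDone () ;
  /* Table keyed by the single MD5 signature */
  dcl hash h_md5 () ;
  h_md5.defineKey ("_MD5") ;
  h_md5.defineData ("_N_") ;
  h_md5.defineDone () ;
  size_comp = h_comp.item_size ;
  size_md5  = h_md5.item_size ;
  put size_comp= size_md5= ;
  call symputx ("size_comp", size_comp) ;
  call symputx ("size_md5", size_md5) ;
run ;
```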

One such scenario is cleansing a data set where whole records, i.e., the values of all variables, have been duplicated for some reason, which is a typical occurrence in ETL processes. Imagine, say, that due to either programming or clerical error, some records in the data set Dw.Games have been completely duplicated. For example, suppose that the data for a given date were loaded twice. Since that did not occur when we created the sample data, we can emulate such a situation as follows:

Program 11.12 Chapter 11 Full Unduplication.sas (Part 1)

data Games_dup ;

set Dw.Games ;

do _n_ = 1 to ceil (ranuni(1) * 3) ; /* 1 to 3 copies, so no record is lost */

output ;

end ;

run ;

As a result, file Games_dup would have about twice as many records as the original, some records wholly duplicated. Output 11.11 below shows the first 8 records from Games_dup. The column From_obs is not a variable from the file but indicates the original observation numbers from Dw.Games.

Output 11.11 Chapter 11 Work.Games_dup Sample

Suppose that we need to cleanse the file by keeping only the first copy of each duplicate record while preserving the relative sequence of the input records. In other words, after the cleansing is done, our output should match the original file Dw.Games.

If we approached the task headlong using the frontal hash object attack, we would have to include all 9 variables from Games_dup (with the total byte length of 80) in the key portion, plus a dummy placeholder variable in the data portion. (Although, for this task, we do not need our key variables in the data portion since their output values come directly from the input file records, we still need at least one data portion variable due to the hash object design.) Having to include all input file variables in the key portion has its consequences:

● If the variables in the file are numerous, it will result in a large hash entry length.

● Even in this simple case with only 9 key portion variables (and 1 dummy data portion variable), the hash entry length on a 64-bit platform would be 128 bytes. For a real-world file with 100 million distinct records, it would result in a hash memory footprint of roughly 13 GB of RAM (128 bytes times 100 million items).

However, the problem can be easily addressed by using the MD5 key reduction approach because it replaces all key portion hash variables with a single 16-byte _MD5 key variable. This approach is demonstrated by the program below modified from Program 11.11 (the changes are shown in bold):

Program 11.12 Chapter 11 Full Unduplication.sas (Part 2)

%let cat_length = 53 ;

data Games_nodup (drop = _:) ;

if _n_ = 1 then do ;

dcl hash h() ;

h.defineKey ("_MD5") ;

h.defineData ("_N_") ;

h.defineDone () ;

dcl hiter ih ("h") ;

end ;

do until (LR) ;

set Games_dup end = LR ;

array NN[*] _numeric_ ; ❶

array CC[*] _character_ ;

length _concat $ &cat_length _MD5 $ 16 ;

_concat = catx (":", of NN[*], of CC[*]) ; ❷

_MD5 = MD5 (trim(_concat)) ;

if h.check() = 0 then continue ;

output ;

h.add() ;

end ;

run ;

Principally, the program is not much different from Program 11.11. The differences are noted below:

❶ The ARRAY statement in this form automatically incorporates all non-automatic numeric variables currently in the PDV into array NN. Likewise, the next statement automatically incorporates all non-automatic character variables currently in the PDV into array CC. Since during compile time at this point in the step the only non-automatic variables the compiler has seen are the variables from the descriptor of file Games_dup, these are the only variables the arrays incorporate. Note that it is incumbent upon the programmer to ensure that no other non-automatic variables get in the PDV before the arrays are declared; otherwise we may end up concatenating variables we do not need.

❷ Concatenate the needed variables into the MD5 argument using the shortcut array notation [*]. Note that since all the numeric variables are concatenated first followed by all the character variables (due to the order in which the arrays are declared), the concatenation order may differ from that of the variables in the file. This is okay since from the standpoint of the final result the concatenation order does not matter. This aspect of the technique (actually adding to its robustness) will be discussed in some more detail in the section "MD5 Argument Concatenation Ins and Outs".

For our first 8 sample records from Games_dup (Output 11.11), the program generates the following output:

Output 11.12 Chapter 11 Work.Games_dup Unduplicated

11.4.5 Joining Data

Let us now turn to the case of joining data discussed earlier in the section “Joining Data”. There, Program 11.6 Chapter 11 Frontal Attack Join.sas represents a frontal attack where all components of the joining composite key (Game_SK,Inning,Top_Bot,AB_Number) are included in the key portion. In the program below, files Dw.Runs and Dw.AtBats are joined by a single key variable _MD5 instead. The differences between this code and the frontal attack Program 11.6 are shown in bold:

Program 11.13 Chapter 11 MD5 Key Reduction Join.sas

%let comp_keys = Game_SK Inning Top_Bot AB_Number ;

%let data_vars = Batter_ID Position_code Is_A_Hit Result ;

%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ;

%let keys_list = %sysfunc (tranwrd (&comp_keys, %str( ), %str(,))) ; ❶

%let cat_length = 52 ;

data Join_Runs_AtBats_MD5 (drop = _: Runs) ;

if _n_ = 1 then do ;

dcl hash h (multidata:"Y", ordered:"A") ;

h.defineKey ("_MD5") ; ❷

do _k = 1 to countw ("&data_vars") ;

h.defineData (scan ("&data_vars", _k)) ;

end ;

h.defineDone() ;

do until (LR) ;

set dw.AtBats (keep=&comp_keys &data_vars Runs

where=(Runs)) end = LR ;

link MD5 ; ❸

h.add() ;

end ;

end ;

set dw.Runs ;

link MD5 ; ❹

call missing (&data_list, _count) ;

do while (h.do_over() = 0) ;

_count = sum (_count, 1) ;

output ;

end ;

if not _count then output ;

return ;

MD5: length _concat $ &cat_length _MD5 $ 16 ; ❺

_concat = catx (":", &keys_list) ;

_MD5 = MD5 (_concat) ;

return ;

run ;

❶ A comma-separated list of key variable names is prepared to be used in the CATX function later.

❷ Rather than defining separate key variables in a loop, a single key variable _MD5 is defined.

❸ The LINK routine MD5 is called for every record from Dw.AtBats (the right side of the join) to concatenate the components of its composite key and pass the result to the MD5 function. Its signature _MD5 is added to table H along with the values of the data_vars list variables as a new hash item.

❹ For each record read from file Dw.Runs, the LINK routine MD5 is called again, now for the left side of the join. It generates the value of _MD5 key to be looked up in the hash table.

❺ The MD5 LINK routine is relegated to the bottom of the step to avoid repetitive coding. This way, we have only one set of code creating the _MD5 key for both data sets involved in the join; and this ensures that they are kept in sync (e.g., if the key structure should change for some reason, we would have only one set of code to change).

The principal difference between using the MD5 key reduction technique in this program and in the programs used for data aggregation and unduplication is that, since in this case we have two files (Dw.Runs and Dw.AtBats), the composite key needs to be converted to its MD5 signature for each record from both input files: for Dw.AtBats before its data is loaded into the hash table, and for Dw.Runs before the Keynumerate operation is invoked.

11.5 Data Portion Offload (Hash Index)

Thus far in this chapter, we have dealt with measures of reducing the length of the key portion by either moving its hash variables elsewhere or replacing them with a surrogate key. In the related examples, similar measures cannot be used to reduce the length of the data portion as well because its hash variables are needed for the Retrieve operation to extract the data into the PDV host variables.

However, in some cases the size of the data portion can be reduced by replacing the data retrieval from the hash table with extracting the same data directly from disk. Doing so effectively relegates the hash table to the role of a searchable hash index. This is a tradeoff: It sacrifices a small percentage of execution speed for the possibility to remove all data portion variables and replace them with a single numeric pointer variable. Two data processing tasks that benefit from this approach most readily are (a) joining data and (b) selective unduplication.
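The hash index idea can be tried out in isolation on SASHELP.CLASS, which ships with SAS. In this minimal sketch, the table holds only the key Name and the pointer RID, while Age and Height are fetched from disk with the POINT= option. The small data set Lookup and its names are made up for the illustration; Zed deliberately has no match.

```sas
/* Hypothetical lookup keys: Carol exists in SASHELP.CLASS, Zed does not */
data Lookup ;
  input Name :$8. ;
  datalines ;
Carol
Zed
;
run ;

data Class_enriched ;
  if _n_ = 1 then do ;
    dcl hash h () ;
    h.defineKey ("Name") ;
    h.defineData ("RID") ;          /* pointer only, no satellite variables */
    h.defineDone () ;
    /* RID counts every record read, so it equals the observation number */
    do RID = 1 by 1 until (LR) ;
      set sashelp.class (keep = Name) end = LR ;
      h.add () ;
    end ;
  end ;
  set Lookup ;
  call missing (Age, Height) ;      /* no stale values for unmatched keys */
  /* On a match, read the satellite variables from disk by observation number */
  if h.find () = 0 then set sashelp.class (keep = Age Height) point = RID ;
  drop RID ;
run ;
```

Only Name and RID occupy hash memory. Adding more satellite variables to the POINT= read leaves the hash entry size unchanged.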

11.5.1 Joining Data

Let us return to our archetypal case of joining file Dw.Runs to file Dw.AtBats that we have already discussed in this chapter. See the section “Joining Data” for the task outline, visual data samples, and Program 11.6 Chapter 11 Frontal Attack Join.sas.

To recap, the task is to join the two files by composite key (Game_SK,Inning,Top_Bot,AB_Number) with the intent to enrich Dw.Runs with the satellite fields Batter_ID, Is_A_Hit, and Result from Dw.AtBats. In the frontal attack program, all these data fields are included in the data portion of the hash table. As mentioned earlier, we could potentially need to bring in many more fields depending on the questions asked about the game results. In real industrial applications, data portion variables may be much more numerous – the more of them, the greater hash memory footprint they impose. The question is, can their number be reduced; and if yes, then how?

To find out, let us observe that each observation in file Dw.AtBats can be marked by its serial number – that is, the observation number, in SAS terminology. This number is commonly referred to as the Record Identification Number (or RID Number or just RID). The idea here is to include RID in the data portion and load the table from Dw.AtBats with its RID values instead of the values of satellite variables. Then, for each hash item whose composite key (Game_SK,Inning,Top_Bot,AB_Number) matches its counterpart from Dw.Runs, the value of RID retrieved from the table can be used to extract the corresponding data directly from Dw.AtBats by using the SET statement with POINT=RID option. With these changes in mind, the frontal attack Program 11.6 can be re-coded this way (the changes are shown in bold):

Program 11.14 Chapter 11 Hash Index Join.sas

%let comp_keys = Game_SK Inning Top_Bot AB_Number ;

%let data_vars = Batter_ID Is_A_Hit Result ;

%let data_list = %sysfunc (tranwrd (&data_vars, %str( ), %str(,))) ;

data Join_Runs_AtBats_RID (drop = _: Runs) ;

if _n_ = 1 then do ;

dcl hash h (multidata:"Y", ordered:"A") ;

do _k = 1 to countw ("&comp_keys") ;

h.defineKey (scan ("&comp_keys", _k)) ;

end ;

h.defineData ("RID") ; ❶

h.defineDone() ;

do RID = 1 by 1 until (LR) ; ❷

set dw.AtBats (keep=&comp_keys Runs) end = LR ;

if Runs then h.add() ; *Filter after counting, so RID stays the true obs number ;

end ;

end ;

set dw.Runs ;

call missing (&data_list, _count) ;

do while (h.do_over() = 0) ; ❸

_count = sum (_count, 1) ;

set dw.AtBats (keep = &data_vars) point = RID ; ❹

output ;

end ;

if not _count then output ;

run ;

❶ The data portion is defined with variable RID (i.e., Dw.AtBats observation number) and none of the satellite variables from the data_vars list.

❷ The sequential values of RID (i.e., the sequential observation numbers) are created here by incrementing RID by 1 for each successive record read from Dw.AtBats. Each record that passes the Runs filter is inserted into the hash table as a new item, along with the corresponding key-values from the comp_keys list. This way, the values of RID in the table are linked with the respective records from Dw.AtBats, and thus with the respective values of the satellite variables.

❸ The Keynumerate operation searches the table for the key-values coming from each record from Dw.Runs and retrieves the RID values pointing to records in Dw.AtBats.

❹ If the PDV composite key-value accepted by the DO_OVER method is found in the table, the Keynumerate operation retrieves the RID values from the group of items with this key-value, one at a time, into PDV host variable RID. For each enumerated item, the retrieved value of RID is then used to access Dw.AtBats directly via the SET statement with the POINT=RID option, the value of RID pointing to the exact record we need. Thus, the values of the variables from the data_vars list appear in the PDV because they are read directly from the file, not as a result of their retrieval from the hash table as in the frontal attack program. (Note that since variable RID is named in the POINT= option, it is automatically dropped despite also being used as a DO loop index.)
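The hash-index join can be mimicked in a few lines of Python to show the moving parts. In this sketch, which is an illustration rather than a translation of Program 11.14, a list stands in for Dw.AtBats on disk and its 1-based position plays the role of RID. A dict of RID lists stands in for the multidata hash table. The sample rows and the Runner_ID field are hypothetical. Note that RID is counted for every record read, and the Runs filter is applied only afterward, so each stored RID is the record's true position.

```python
# Hypothetical stand-in for Dw.AtBats; list position + 1 plays the role of the RID.
# Columns: Game_SK, Inning, Top_Bot, AB_Number, Runs, Batter_ID, Is_A_Hit, Result
at_bats = [
    ("g1", 1, "T", 1, 0, 11, 0, "Out"),
    ("g1", 1, "T", 2, 1, 12, 1, "Double"),
    ("g1", 2, "B", 1, 2, 21, 1, "Home Run"),
]
# Hypothetical stand-in for Dw.Runs. Columns: Game_SK, Inning, Top_Bot, AB_Number, Runner_ID
runs = [
    ("g1", 1, "T", 2, 12),
    ("g1", 2, "B", 1, 21),
    ("g1", 2, "B", 1, 20),
    ("g1", 3, "T", 4, 30),
]


def point(rid):
    """Mimic SET ... POINT=RID: fetch a record by its 1-based observation number."""
    return at_bats[rid - 1]


# Build the hash index: composite key -> list of RIDs (the multidata case).
# RID counts EVERY record read; the Runs filter is applied after counting.
index = {}
for rid, rec in enumerate(at_bats, start=1):
    if rec[4]:
        index.setdefault(rec[:4], []).append(rid)

output = []
for run in runs:
    rids = index.get(run[:4], [])
    for rid in rids:                         # like the DO_OVER loop
        output.append(run + point(rid)[5:])  # satellites are read from "disk"
    if not rids:                             # like: if not _count then output
        output.append(run + (None, None, None))

for row in output:
    print(row)
```

The dict holds only keys and integer pointers. The three satellite values never enter it, which is the memory saving the text describes.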

Running this program generates exactly the same output as the frontal attack Program 11.6. The technique used in it is notable in a number of aspects:

● Memory-Disk Tradeoff. Offloading the data portion variables in this manner means that the satellite values are extracted from disk. Obviously, it is slower than retrieving them from hash table memory. However, this is offset by the fact that now those same variables need not be either loaded into or read from the hash table. More importantly, the action affecting performance most – namely, searching for a key – is still done using the hash lookup in memory.

● Performance. Testing shows that, overall, the loss of execution speed is insignificant, because POINT= access is in itself extremely fast, even compared with access via a SAS index. If the satellite variables involved in the process are numerous and long, the trade-off is well worth the price: it can reduce hash memory usage from the point where the table fails to fit in memory (which would abend the program) to the point where the program still runs to completion successfully.

● Hash-Indexing. This technique has yet another interesting aspect. Effectively, by pairing RID in the hash table with its file record, we have created, for the duration of the DATA step, a hash index for file Dw.AtBats defined with the composite key from the comp_keys list. Both such a hash index and a standard SAS index (residing in an index file) do essentially the same job: they search for RID given a key-value, simple or composite, and, if it is found, both use the RID value to locate the corresponding record and extract the data from it. The difference is that the memory-resident hash index, coupled with the hashing algorithm, renders both the key search and the data extraction (using the POINT= option) much faster.

● Hybrid Approach. The hash-indexing approach can be readily adapted to a hybrid situation where it may be expedient to define a few specific satellite variables in the data portion along with RID. This way, if the programming logic dictates so, the values of these specific hash variables can be retrieved into their PDV host counterparts from the hash table without incurring extra I/O. And when the logic requires, at some point, the values of more (or, indeed, all) satellite variables in the PDV, they can be extracted directly from disk via the SET statement with the POINT=RID option, as shown above.
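A minimal Python sketch of the hybrid idea follows, assuming unique keys (so a plain dict replaces the hash table) and hypothetical sample rows. The data portion carries one satellite the logic tests often (Is_A_Hit) plus RID. The `reads` list records each simulated POINT= read, so it shows that the "disk" is touched only when the logic actually needs the rest of the record.

```python
# Hypothetical stand-in for Dw.AtBats; list position + 1 is the RID.
# Columns: Game_SK, Inning, Top_Bot, AB_Number, Batter_ID, Is_A_Hit, Result
at_bats = [
    ("g1", 1, "T", 2, 12, 1, "Double"),
    ("g1", 2, "B", 1, 21, 0, "Sac Fly"),
]
reads = []  # logs every simulated POINT= read


def point(rid):
    """Mimic SET ... POINT=RID and log the read."""
    reads.append(rid)
    return at_bats[rid - 1]


# Hybrid data portion: one satellite needed by the logic (Is_A_Hit) plus RID.
index = {rec[:4]: (rec[5], rid) for rid, rec in enumerate(at_bats, start=1)}


def describe(key):
    is_a_hit, rid = index[key]  # retrieved from memory: no I/O
    if not is_a_hit:
        return "no hit"
    return point(rid)[6]        # only hits need the rest of the record


print(describe(("g1", 1, "T", 2)), describe(("g1", 2, "B", 1)), len(reads))
# Double no hit 1
```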

11.5.2 Selective Unduplication

The hash-indexing scheme as described above can work for any data task where a hash table is used to store satellite variables with the intent of eventually retrieving their hash values into the PDV – for example, for data manipulation or generating output. From this standpoint:

● Using it for simple file unduplication is pointless. Indeed, for this task the Retrieve operation is not used, and the only variable in the data portion is a dummy placeholder.

● By contrast, selective stable unduplication illustrated by Program 10.3 Chapter 10 Selective Unduplication via PROC SORT + DATA Step.sas can benefit from data portion disk offloading because, in this case, the variables in the data portion are retrieved from the hash table into the PDV in order to generate the output.

Let us recall the subset of file Dw.Games used to illustrate selective unduplication in Chapter 10. (Again, From_obs is not a data set variable. It just shows the observation numbers in Dw.Games related to the records in the subset below.)

Output 11.13 Chapter 11 Subset from Dw.Games for Selective Unduplication

To recap, the task lies in the following:

● Using this data, find the away team which each home team played last. In other words, we need to unduplicate the records for each key-value of Home_SK by selecting its record with the latest value of Date.

● Along with Home_SK, output the values of Away_SK, Date, and Game_SK from the corresponding record with the most recent value of Date.

● Note that this time, the requirements include the extra variable Game_SK in the output.

● Also note that in the data sample above, the records we want in the output are shown in bold.

To solve the task by using a frontal attack approach, it is enough to slightly modify Program 10.3 Chapter 10 Selective Unduplication via Hash:

Program 11.15. Chapter 11 Frontal Attack Selective Unduplication.sas

data _null_ ;

dcl hash h (ordered:"A") ; *Output in Home_SK order;

h.defineKey ("Home_SK") ;

h.defineData ("Home_SK", "Away_SK", "Date", "Game_SK") ;

h.defineDone () ;

do until (lr) ;

set dw.games (keep = Game_SK Date Home_SK Away_SK) end = lr ;

_Away_SK = Away_SK ;

_Date = Date ;

_Game_SK = Game_SK ;

if h.find() ne 0 then h.add() ;

else if _Date > Date then

h.replace (key:Home_SK, data:Home_SK, data:_Away_SK, data:_Date, data:_Game_SK) ;

end ;

h.output (dataset: "Last_games_hash") ;

stop ;

run ;

This program generates the following output for the records of our sample subset from Dw.Games:

Output 11.14 Chapter 11 Results of Frontal Attack Selective Unduplication

Note that the variables Away_SK and Game_SK are included in the data portion, since their values are needed for the OUTPUT method call. Also, the temporary variables _Away_SK, _Date, and _Game_SK are included in the explicit REPLACE method call to update the data-values in table H whenever the input PDV value of Date is greater than its presently stored hash value.
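For readers who want to trace the FIND/ADD/REPLACE logic outside SAS, here is a rough Python analogue of Program 11.15. It is a sketch, not the program itself. A dict keyed by Home_SK plays the hash table, and the games rows are hypothetical. Dates are ISO strings so that `>` compares them chronologically. As in the frontal attack, every satellite value travels with each stored item and must be supplied again on every replacement.

```python
# Hypothetical rows standing in for Dw.Games: (Game_SK, Date, Home_SK, Away_SK).
games = [
    ("G01", "2017-04-02", 201, 205),
    ("G02", "2017-04-09", 202, 201),
    ("G03", "2017-04-16", 201, 203),
    ("G04", "2017-04-23", 202, 204),
    ("G05", "2017-04-30", 201, 204),
]

table = {}  # Home_SK -> full data portion: (Home_SK, Away_SK, Date, Game_SK)
for game_sk, date, home_sk, away_sk in games:
    stored = table.get(home_sk)  # like h.find()
    if stored is None:
        table[home_sk] = (home_sk, away_sk, date, game_sk)  # like h.add()
    elif date > stored[2]:
        # like h.replace(): every satellite value is supplied explicitly
        table[home_sk] = (home_sk, away_sk, date, game_sk)

last_games = [table[k] for k in sorted(table)]  # like h.output() with ordered:"A"
for row in last_games:
    print(row)
```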

In this rather simple situation, there are only three satellite variables to be stored in the data portion and hard-coded in the explicit REPLACE method call. In other situations with many more satellite variables, two things with the frontal attack approach can become bothersome:

● With large data volumes and numerous satellite variables, hash memory can get overloaded.

● Hard-coding many arguments in the REPLACE method call is unwieldy and error-prone. In fact, even with a mere five arguments, as above, typing them in already gets pretty tedious.

However, both issues are easily addressed by using the hash index approach. In the program below, a hash table index keyed by Home_SK is used to keep the data portion variables that are not critical to the program logic out of the data portion. The program may seem to be a tad longer than the frontal attack. However, as we will see, its benefits far outweigh the cost of the few extra lines of code:

Program 11.16 Chapter 11 Hash Index Selective Unduplication.sas

data Last_away_hash_RID (drop = _:) ;

dcl hash h (ordered:"A") ;

h.defineKey ("Home_SK") ;

h.defineData ("Date", "RID") ; ❶

h.defineDone () ;

do _RID = 1 by 1 until (lr) ; ❷

set dw.Games (keep = Date Home_SK) end = lr ; ❸

_Date = Date ;

RID = _RID ; ❹

if h.find() ne 0 then h.add() ;

else if _Date > Date then

h.replace (key:Home_SK, data:_Date, data:_RID) ; ❺

end ;

dcl hiter hi ("h") ; ❻

do while (hi.next() = 0) ;

set dw.Games (keep = Home_SK Away_SK Game_SK) point = RID ; ❼

output ;

end ;

stop ;

run ;

❶ The data portion is defined with variables Date and RID. Note the absence of other variables needed in the frontal attack Program 11.15.

❷ A temporary variable _RID instead of RID is used to increment the record identifier to safeguard its values from being overwritten by the FIND method call.

❸ No need to keep Away_SK and Game_SK in this input, as their values on disk are linked to by RID.

❹ RID is repopulated with the memorized value of _RID to ensure its value is correct, just in case it may have been overwritten in the previous iteration of the DO loop by the FIND method call.

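❺ _RID in this explicit REPLACE call takes the place of the Home_SK, _Away_SK, and _Game_SK arguments used in Program 11.15. Note that _RID, not RID, must be passed here: since FIND has just succeeded, RID holds the stored hash value rather than the number of the current record. If we had more satellite variables, the single _RID argument assigned to the argument tag DATA would spare us from coding any of them. Essentially, all references to the satellite variables here are replaced en masse with a single RID pointer to their location on disk.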

❻ The hash iterator is used to render the output instead of the OUTPUT method, since the satellite variables needed in the output are no longer in the data portion (the same would be true of all other satellite variables if we had more of them). The satellite variable values will be extracted directly from the input file Dw.Games via the POINT=RID option.

❼ Use RID as a record pointer to read Home_SK, Away_SK, and Game_SK directly from the file record with the latest Date for the current Home_SK; then output a record with these values and the Date retrieved from the current hash item.
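The same hypothetical games rows can be run through a Python analogue of Program 11.16 to show how small the stored items become. This is a sketch under the same assumptions as before: a dict plays the hash table, list position + 1 plays RID, and indexing the list plays SET with POINT=. Each stored item holds only Date and RID. Because a dict lookup in Python does not overwrite local variables the way FIND overwrites PDV host variables, the _RID safeguard has no counterpart here, and ADD and REPLACE collapse into one assignment.

```python
# Hypothetical rows: (Game_SK, Date, Home_SK, Away_SK); list position + 1 is the RID.
games = [
    ("G01", "2017-04-02", 201, 205),
    ("G02", "2017-04-09", 202, 201),
    ("G03", "2017-04-16", 201, 203),
    ("G04", "2017-04-23", 202, 204),
    ("G05", "2017-04-30", 201, 204),
]

table = {}  # Home_SK -> (Date, RID): only the compared variable and the pointer
for rid, (_, date, home_sk, _) in enumerate(games, start=1):
    stored = table.get(home_sk)
    if stored is None or date > stored[0]:
        table[home_sk] = (date, rid)  # add or replace with just two values

last_games = []
for home_sk in sorted(table):  # like walking the ordered table with the iterator
    date, rid = table[home_sk]
    game_sk, _, home_from_file, away_sk = games[rid - 1]  # like SET ... POINT=RID
    last_games.append((home_from_file, away_sk, game_sk, date))

for row in last_games:
    print(row)
```

Adding more satellite columns to the rows would change nothing in the stored items; only the final read from the list would pick them up.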

This program generates the following output for the sample records shown in Output 11.13:

Output 11.15 Chapter 11 Results of Hash Index Selective Unduplication

The output contains the same data as Output 11.14, except for the order of the variables. If it matters, it can be easily addressed by inserting a RETAIN statement listing the variables, unvalued, in the desired order immediately after the DATA statement. As we see, using a hash index instead of the frontal attack offers the following benefits:

● It reduces the data portion variables to only those required by the program logic (such as Date above) and the pointer variable RID.

● In turn, this may result in substantial savings in terms of the hash memory footprint.

● It greatly simplifies coding because it is no longer necessary to code numerous variable names in the DEFINEDATA or REPLACE method calls.