In the previous chapter, the TurtleBot robot was described as a two-wheeled differential drive robot developed by Willow Garage. The setup of the TurtleBot hardware, netbook, network system, and remote computer was explained so that users could set up and operate their own TurtleBot. Then, the TurtleBot was driven around using keyboard control, command-line control, and a Python script.

In this chapter, we will expand TurtleBot's capabilities by giving the robot vision. The chapter begins by describing 3D vision systems and how they are used to map obstacles within the camera's field of view. The three types of 3D sensors typically used for TurtleBot are shown and described, detailing their specifications.

Setting up the 3D sensor for use on TurtleBot is described and the configuration is tested in a standalone mode. To visualize the sensor data coming from TurtleBot, two ROS tools are utilized: Image Viewer and rviz. Then, an important aspect of TurtleBot is described and realized: navigation. TurtleBot will be driven around and the vision system will be used to build a map of the environment. The map is loaded into rviz and used to give the user point and click control of TurtleBot so that it can autonomously navigate to a location selected on the map.

In this chapter, you will learn the following topics:

- How 3D vision sensors work

- The difference between the three primary 3D sensors for TurtleBot

- Information on TurtleBot environmental variables and the ROS software required for the sensors

- ROS tools for the rgb and depth camera output

- How to use TurtleBot to map a room using Simultaneous Localization and Mapping (SLAM)

- How to operate TurtleBot in autonomous navigation mode using Adaptive Monte Carlo Localization (amcl)

TurtleBot's capabilities are greatly enhanced by the addition of a 3D vision sensor. The function of a 3D sensor is to map the environment around the robot by detecting nearby objects, whether stationary or moving. This mapping must happen in real time so that the robot can move around the environment, evaluate its path choices, and avoid obstacles. Autonomous vehicles, such as Google's self-driving cars, accomplish 3D mapping with a high-cost LIDAR system, which illuminates the environment with laser light and analyzes the reflected light. For TurtleBot, we will present a number of low-cost but effective options. The standard 3D sensors for TurtleBot are Kinect sensors, ASUS Xtion sensors, and PrimeSense Carmine sensors.

The 3D vision systems that we describe for TurtleBot share a common infrared technology for sensing depth. This technology was developed by PrimeSense, an Israeli 3D sensing company, and was originally licensed to Microsoft in 2010 for the Kinect motion sensor used with the Xbox 360 gaming system. The depth camera uses an infrared projector to cast a known pattern of infrared dots onto the scene, and a monochrome Complementary Metal–Oxide–Semiconductor (CMOS) sensor continuously captures the reflected pattern. Because the projector and the sensor are mounted a fixed distance apart, the pattern appears shifted by an amount that depends on how far away each surface is, and the sensor converts this shift into depth information. The result is an x, y, and z coordinate for each measured point, expressed in the sensor's reference frame. Kinect for Windows v2 is an exception: it uses a time-of-flight camera that measures how long the infrared light takes to return.
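The last step, turning a depth reading into an x, y, z point, can be sketched with the standard pinhole camera model. This is a minimal illustration, not the driver's actual code. The intrinsic parameters used below (fx = fy = 500, cx = 320, cy = 240) are hypothetical round numbers chosen for easy arithmetic; a real sensor's calibrated values are published by the ROS camera drivers in a `sensor_msgs/CameraInfo` message. The output follows the ROS optical-frame convention: x to the right, y down, and z forward out of the lens.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Intrinsics:
    """Pinhole camera parameters, in pixels (hypothetical values in the demo)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x
    cy: float  # principal point, y


def deproject(u: float, v: float, depth_m: float,
              k: Intrinsics) -> Optional[Tuple[float, float, float]]:
    """Return (x, y, z) in meters in the camera optical frame, or None.

    depth_m is the distance along the optical (z) axis, which is what a
    depth image stores, not the straight-line range to the point.
    """
    # Invalid depth readings are typically reported as 0 or NaN.
    if not math.isfinite(depth_m) or depth_m <= 0.0:
        return None
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640 x 480 depth image.
    k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    print(deproject(320.0, 240.0, 2.0, k))  # (0.0, 0.0, 2.0): image center
    print(deproject(570.0, 240.0, 2.0, k))  # (1.0, 0.0, 2.0): 250 px right
    print(deproject(100.0, 100.0, 0.0, k))  # None: invalid reading
```

Applying this conversion to every valid pixel of a depth image is, in essence, how a depth image becomes the point cloud that visualization tools display.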

Note

For a quick explanation of how 3D sensors work, the video How the Kinect Depth Sensor Works in 2 Minutes is worth watching at https://www.youtube.com/watch?v=uq9SEJxZiUg.

This 3D sensor technology is primarily for indoor use and typically does not work well outdoors, because the infrared component of sunlight overwhelms the sensor's own infrared light and degrades the quality of the depth readings. Shiny or curved objects also present a challenge for the depth camera.

Currently, three manufacturers produce 3D vision sensors that have been integrated with the TurtleBot. Microsoft Kinect, ASUS Xtion, and PrimeSense Carmine have all been integrated with camera drivers that provide a ROS interface. The ROS packages that handle the processing for these 3D sensors will be described in an upcoming section, but first, a comparison of the three products is provided.

Kinect was developed by Microsoft as a motion sensing device for video games, but it also works well as a mapping tool for TurtleBot. Kinect is equipped with an rgb camera, a depth camera, an array of microphones, a 3-axis accelerometer, and a tilt motor.

The rgb camera acquires 2D color images in the same way that smartphones and webcams acquire color video. The Kinect microphones can be used to capture sound data, and a 3-axis accelerometer can be used to find the orientation of the Kinect. These features hold the promise of exciting applications for TurtleBot, but unfortunately, this book does not delve into these Kinect capabilities.

Kinect is connected to the TurtleBot netbook through a USB 2.0 port (USB 3.0 for Kinect v2). Software for Kinect can be developed using the Kinect Software Development Kit (SDK), freenect, and Open Source Computer Vision (OpenCV). Microsoft created the Kinect SDK for developing Kinect apps, but unfortunately, it runs only on Windows. OpenCV is an open source library of hundreds of computer vision algorithms and mainly supports 2D image processing. 3D depth sensors, such as the Kinect, ASUS, and PrimeSense sensors, are supported through the VideoCapture class of OpenCV when OpenCV is built with OpenNI support. The freenect packages and libraries are open source software that provide ROS support for Microsoft Kinect. More details on freenect will be provided in an upcoming section titled Configuring TurtleBot and installing 3D sensor software.

Microsoft has developed three versions of Kinect to date: Kinect for Xbox 360, Kinect for Xbox One, and Kinect for Windows v2. The following figure shows these variations, and the subsequent table lists their specifications:

Microsoft Kinect versions

Microsoft Kinect version specifications:

| Spec | Kinect 360 | Kinect One | Kinect for Windows v2 |
|---|---|---|---|
| Release date | November 2010 | November 2013 | July 2014 |
| Horizontal field of view (degrees) | 57 | 57 | 70 |
| Vertical field of view (degrees) | 43 | 43 | 60 |
| Color camera data | 640 x 480 32-bit @ 30 fps | 640 x 480 @ 30 fps | 1920 x 1080 @ 30 fps |
| Depth camera data | 320 x 240 16-bit @ 30 fps | 320 x 240 @ 30 fps | 512 x 424 @ 30 fps |
| Depth range (meters) | 1.2 – 3.5 | 0.5 – 4.5 | 0.5 – 4.5 |
| Audio | 16-bit @ 16 kHz | 4 microphones | 4 microphones |
| Dimensions | 28 x 6.5 x 6.5 cm | 25 x 6.5 x 6.5 cm | 25 x 6.5 x 7.5 cm |
| Additional information | Motorized tilt base range ± 27 degrees; USB 2.0 | Manual tilt base; USB 2.0 | No tilt base; USB 3.0 only |
| Power | Requires external power | Requires external power | Requires external power |

fps: frames per second
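The field of view figures are easier to reason about as a physical footprint. For a flat surface facing the sensor at distance d, the covered width is 2·d·tan(HFOV/2), and the height follows the same formula with the vertical field of view. The sketch below applies this to the Kinect 360 values from the preceding table; the function name `footprint` is illustrative only.

```python
import math
from typing import Tuple


def footprint(distance_m: float, hfov_deg: float,
              vfov_deg: float) -> Tuple[float, float]:
    """Width and height (meters) of the area seen at distance_m.

    Assumes a flat surface perpendicular to the sensor's optical axis.
    """
    width = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    height = 2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0)
    return width, height


if __name__ == "__main__":
    # Kinect 360: 57 x 43 degrees, rated depth range 1.2 - 3.5 m.
    for d in (1.2, 2.0, 3.5):
        w, h = footprint(d, 57.0, 43.0)
        print(f"{d:.1f} m: {w:.2f} m wide x {h:.2f} m high")
```

At 2 m, for example, the Kinect 360 sees an area roughly 2.17 m wide by 1.58 m high, which gives a feel for how much of a room the robot can observe from one position.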

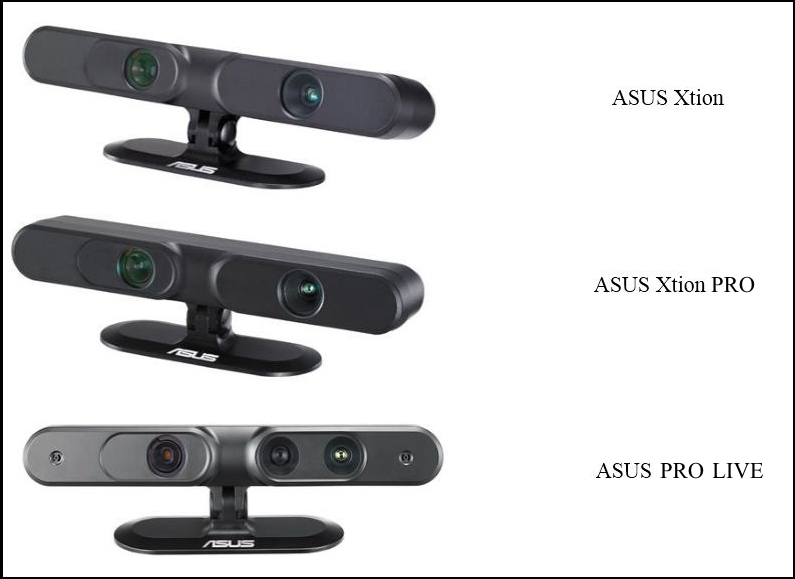

ASUS Xtion, Xtion PRO, and PRO LIVE are also 3D vision sensors designed for motion sensing applications. The technology is similar to the Kinect, using an infrared projector and a monochrome CMOS receptor to capture the depth information.

The ASUS sensor is connected to the TurtleBot netbook through a USB 2.0 port, and no external power is required. Applications for the ASUS Xtion PRO can be developed using the ASUS development solution software, OpenNI, and OpenCV (for the PRO LIVE rgb camera). OpenNI packages and libraries are open source software that provide support for ASUS and PrimeSense 3D sensors. More details on OpenNI will be provided in the upcoming Configuring TurtleBot and installing 3D sensor software section.

The following figure presents images of the ASUS sensor variations and the subsequent table shows their specifications:

ASUS Xtion and PRO versions

ASUS Xtion and PRO version specifications:

| Spec | Xtion | Xtion PRO | Xtion PRO LIVE |
|---|---|---|---|
| Horizontal field of view (degrees) | 58 | 58 | 58 |
| Vertical field of view (degrees) | 45 | 45 | 45 |
| Color camera data | none | none | 1280 x 1024 |
| Depth camera data | unspecified | 640 x 480 @ 30 fps; 320 x 240 @ 60 fps | 640 x 480 @ 30 fps; 320 x 240 @ 60 fps |
| Depth range (meters) | 0.8 – 3.5 | 0.8 – 3.5 | 0.8 – 3.5 |
| Audio | none | none | 2 microphones |
| Dimensions | 18 x 3.5 x 5 cm | 18 x 3.5 x 5 cm | 18 x 3.5 x 5 cm |
| Additional information | USB 2.0 | USB 2.0 | USB 2.0 / 3.0 |
| Power | No additional power required; powered through USB | No additional power required; powered through USB | No additional power required; powered through USB |
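Combining the field of view with the depth image resolution gives a rough idea of how finely the sensor samples a surface. Under the pinhole model, the focal length in pixels is (image width / 2) / tan(HFOV/2), and the spacing between neighboring depth pixels on a flat surface facing the sensor is the distance divided by that focal length. The sketch below uses the Xtion PRO's 58 degree field of view and 640-pixel-wide depth stream from the preceding table; the function names are illustrative, lens distortion is ignored, and the result describes sampling density only, not depth accuracy.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels implied by a horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixel_spacing_m(distance_m: float, image_width_px: int,
                    hfov_deg: float) -> float:
    """Horizontal distance between neighboring depth pixels on a flat
    surface facing the sensor at distance_m (ignores lens distortion)."""
    return distance_m / focal_length_px(image_width_px, hfov_deg)


if __name__ == "__main__":
    # Xtion PRO depth stream: 640 x 480 with a 58 degree horizontal FOV.
    for d in (0.8, 2.0, 3.5):
        mm = 1000.0 * pixel_spacing_m(d, 640, 58.0)
        print(f"{d:.1f} m: about {mm:.1f} mm between depth pixels")
```

At 2 m this works out to roughly 3.5 mm between depth pixels. Switching to the 320 x 240 @ 60 fps mode halves the image width and therefore doubles the spacing, trading detail for frame rate.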

PrimeSense Carmine

PrimeSense was the original developer of the 3D vision sensing technology using near-infrared light. The company also developed the NiTE software, which allows developers to analyze people, track their motions, and develop user interfaces based on gesture control. PrimeSense offered its own sensors, the Carmine 1.08 and 1.09, before the company was bought by Apple in November 2013. The Carmine sensor is shown in the following image. The ROS OpenNI packages and libraries also support the PrimeSense Carmine sensors. More details on OpenNI will be provided in an upcoming section titled Configuring TurtleBot and installing 3D sensor software.

PrimeSense Carmine

PrimeSense produced two versions of the Carmine sensor: the 1.08 and the short-range 1.09. The preceding image shows how the sensor looks, and the following table lists the specifications of both versions:

| Spec | Carmine 1.08 | Carmine 1.09 |
|---|---|---|
| Horizontal field of view (degrees) | 57.5 | 57.5 |
| Vertical field of view (degrees) | 45 | 45 |
| Color camera data | 640 x 480 @ 30 Hz | 640 x 480 @ 60 Hz |
| Depth camera data | 640 x 480 @ 60 Hz | 640 x 480 @ 60 Hz |
| Depth range (meters) | 0.8 – 3.5 | 0.35 – 1.4 |
| Audio | 2 microphones | 2 microphones |
| Dimensions | 18 x 2.5 x 3.5 cm | 18 x 3.5 x 5 cm |
| Additional information | USB 2.0 / 3.0 | USB 2.0 / 3.0 |
| Power | No additional power required; powered through USB | No additional power required; powered through USB |
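Depth range is often the deciding factor when choosing among these sensors. As a small illustration, the sketch below records the minimum and maximum depth range from the three preceding specification tables and lists which sensors can measure a given distance. The dictionary `DEPTH_RANGE_M` and the function `sensors_covering` are hypothetical names, and the values are only as reliable as the published specifications they were copied from.

```python
from typing import Dict, List, Tuple

# (min, max) depth range in meters, copied from the specification tables.
DEPTH_RANGE_M: Dict[str, Tuple[float, float]] = {
    "Kinect 360": (1.2, 3.5),
    "Kinect One": (0.5, 4.5),
    "Kinect for Windows v2": (0.5, 4.5),
    "Xtion / Xtion PRO / Xtion PRO LIVE": (0.8, 3.5),
    "Carmine 1.08": (0.8, 3.5),
    "Carmine 1.09": (0.35, 1.4),
}


def sensors_covering(distance_m: float) -> List[str]:
    """Names of sensors whose rated depth range includes distance_m."""
    return [name for name, (near, far) in DEPTH_RANGE_M.items()
            if near <= distance_m <= far]


if __name__ == "__main__":
    for d in (0.4, 1.0, 4.0):
        print(d, sensors_covering(d))
```

By these figures, only the short-range Carmine 1.09 covers 0.4 m, while only the two newer Kinect models reach 4 m.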

TurtleBot uses 3D sensing for autonomous navigation and obstacle avoidance, as described later in this chapter. These 3D sensors are also used for 3D motion capture, skeleton tracking, face recognition, and voice recognition.

There are a few drawbacks to be aware of when using infrared 3D sensor technology for obstacle avoidance. These sensors have a narrow field of view of roughly 58 degrees horizontally and 43 to 45 degrees vertically. They also cannot detect anything closer than their minimum depth range, which is at least 0.5 meters (~20 inches) for all of the sensors listed above except the short-range Carmine 1.09. Highly reflective or transparent surfaces, such as metal, glass, or mirrors, may not be detected by the 3D vision sensors.
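These limits can be expressed as a simple visibility test: a point is seen only if its depth lies within the rated range and its angles off the optical axis are within half the horizontal and vertical fields of view. The sketch below is a hypothetical helper, not part of any ROS package; it assumes a point already expressed in the camera's optical frame (x right, y down, z forward, in meters) and, in the usage example, a generic sensor with the figures quoted above plus an assumed 3.5 m maximum range.

```python
import math
from typing import Tuple


def is_visible(point: Tuple[float, float, float], hfov_deg: float,
               vfov_deg: float, min_range_m: float, max_range_m: float) -> bool:
    """True if a point (x right, y down, z forward, meters, camera optical
    frame) lies inside the sensor's field of view and depth range."""
    x, y, z = point
    # Depth sensors report z, so the range limits are checked against z.
    if not (min_range_m <= z <= max_range_m):
        return False  # too close (blind zone) or too far away
    # Angles between the point and the optical axis, in degrees.
    h_angle = math.degrees(math.atan2(abs(x), z))
    v_angle = math.degrees(math.atan2(abs(y), z))
    return h_angle <= hfov_deg / 2.0 and v_angle <= vfov_deg / 2.0


if __name__ == "__main__":
    # Generic sensor: 58 x 43 degrees, 0.5 m minimum, assumed 3.5 m maximum.
    spec = (58.0, 43.0, 0.5, 3.5)
    print(is_visible((0.0, 0.0, 2.0), *spec))  # straight ahead at 2 m
    print(is_visible((0.0, 0.0, 0.3), *spec))  # straight ahead, too close
    print(is_visible((1.5, 0.0, 2.0), *spec))  # too far to the side
```

An object 0.3 m directly in front of the robot fails the range test even though it is centered in the image, and an object low on the floor close to the robot can fall below the vertical field of view; both are blind spots to keep in mind when relying on the depth camera for obstacle avoidance.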