Viewing images on the remote computer is the next step in setting up the TurtleBot. Two ROS tools can be used to visualize the rgb and depth camera images: Image Viewer and rviz. Both are used in the following sections to view the image streams published by the Kinect sensor.

A ROS node can allow us to view images that come from the rgb camera on Kinect. The camera_nodelet_manager node implements a basic camera capture program using OpenCV to handle publishing ROS image messages as a topic. This node publishes the camera images in the /camera namespace.

Three terminal windows will be required to launch the base and camera nodes on TurtleBot and launch the Image Viewer node on the remote computer. The steps are as follows:

- Terminal Window 1: Minimal launch of TurtleBot:

$ ssh <username>@<TurtleBot's IP Address>
$ roslaunch turtlebot_bringup minimal.launch

- Terminal Window 2: Launch freenect camera:

$ ssh <username>@<TurtleBot's IP Address>
$ roslaunch freenect_launch freenect.launch

This freenect.launch file starts the camera_nodelet_manager node, which prepares to publish both the rgb and depth stream data. When the node is running, we can check the topics by executing the rostopic list command. The topic list shows the /camera namespace with multiple depth, depth_registered, ir, rectify_color, rectify_mono, and rgb topics.

- To view the image messages, open a third terminal window and type the following command to bring up the Image Viewer:

$ rosrun image_view image_view image:=/camera/rgb/image_color

This command creates the /image_view node that opens a window, subscribes to the /camera/rgb/image_color topic, and displays the image messages. These image messages are published over the network from the TurtleBot to the remote computer (a workstation or laptop). If you want to save an image frame, you can right-click on the window and save the current image to your directory.

An image view of a rgb image

- To view depth camera images, press Ctrl + C keys to end the previous image_view process. Then, type the following command in the third terminal window:

$ rosrun image_view image_view image:=/camera/depth/image

A pop-up window for Image Viewer will appear on your screen:

An image view of a depth image
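The topic check mentioned earlier (running rostopic list once the freenect nodes are up) can also be scripted. The following is a minimal Python sketch and not part of the TurtleBot software: the function names topics_in_namespace, group_by_subnamespace, and list_camera_topics are hypothetical helpers, and the sample topic list is hand-written for illustration rather than captured from a robot.

```python
import subprocess


def topics_in_namespace(topic_lines, namespace="/camera"):
    """Return the sorted topics from `rostopic list` output under `namespace`."""
    prefix = namespace.rstrip("/") + "/"
    return sorted(t.strip() for t in topic_lines if t.strip().startswith(prefix))


def group_by_subnamespace(topics, namespace="/camera"):
    """Group topics by the first path element after the namespace (rgb, depth, ...)."""
    prefix = namespace.rstrip("/") + "/"
    groups = {}
    for topic in topics:
        first = topic[len(prefix):].split("/", 1)[0]
        groups.setdefault(first, []).append(topic)
    return groups


def list_camera_topics():
    """Query a live system. Needs a sourced ROS environment and a running master."""
    output = subprocess.check_output(["rostopic", "list"], universal_newlines=True)
    return topics_in_namespace(output.splitlines())


# Illustrative sample only; a real robot publishes many more topics.
sample = [
    "/camera/rgb/image_color",
    "/camera/depth/image",
    "/mobile_base/events/bumper",
    "/camera/rgb/camera_info",
]
for name, members in sorted(group_by_subnamespace(topics_in_namespace(sample)).items()):
    print(name, members)
```

On the remote computer, calling list_camera_topics() in place of the sample would apply the same filter to the live topic list, provided the ROS environment points at the TurtleBot's master.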

To close the Image Viewer and other windows, press Ctrl + C keys in each terminal window.

To visualize the 3D sensor data from the TurtleBot using rviz, begin by launching the TurtleBot minimal launch software. Next, open a second terminal window to start the launch software for the 3D sensor.

- Terminal Window 1: Minimal launch of TurtleBot:

$ ssh <username>@<TurtleBot's IP Address>
$ roslaunch turtlebot_bringup minimal.launch

- Terminal Window 2: Launch 3D sensor software:

$ ssh <username>@<TurtleBot's IP Address>
$ roslaunch turtlebot_bringup 3dsensor.launch

The 3dsensor.launch file within the turtlebot_bringup package configures itself based on the TURTLEBOT_3D_SENSOR environment variable set by the user. Using this variable, it includes a custom Kinect or ASUS Xtion PRO launch.xml file that contains all of the unique camera and processing parameters set for that particular 3D sensor. The 3dsensor.launch file turns on all the sensor processing modules as the default. These modules include the following:

- rgb_processing

- ir_processing

- depth_processing

- depth_registered_processing

- disparity_processing

- disparity_registered_processing

- scan_processing
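The sensor selection described above can be pictured as a lookup from the environment variable to a per-sensor include file. The sketch below is only an illustration in Python, not the launch file itself: the helper name sensor_include_name is hypothetical, the example values kinect and asus_xtion_pro are assumed settings for the two sensors named in the text, and the <value>.launch.xml naming pattern is an assumption about how the include is chosen.

```python
import os


def sensor_include_name(environ=None):
    """Return the assumed per-sensor include file name for TURTLEBOT_3D_SENSOR.

    `environ` defaults to the process environment; pass a dict to experiment
    without changing the shell settings.
    """
    environ = os.environ if environ is None else environ
    sensor = environ.get("TURTLEBOT_3D_SENSOR", "")
    if not sensor:
        raise KeyError("TURTLEBOT_3D_SENSOR is not set")
    # Assumption: the include is named after the variable's value.
    return sensor + ".launch.xml"


# Assumed example values for the Kinect and the ASUS Xtion PRO.
for value in ("kinect", "asus_xtion_pro"):
    print(value, "->", sensor_include_name({"TURTLEBOT_3D_SENSOR": value}))
```

Checking the variable this way before launching can catch an unset or mistyped sensor name early, which is a common reason for the camera nodes not starting as expected.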

It is typically not desirable to generate so much sensor data for an application. The 3dsensor.launch file allows users to set arguments to minimize the amount of sensor data generated. Typically, TurtleBot applications only turn on the sensor data needed in order to minimize the amount of processing performed. This is done by setting these roslaunch arguments to false when data is not needed.

When the 3dsensor.launch file is executed, the turtlebot_bringup package launches a /camera_nodelet_manager node with multiple nodelets. Nodelets were described in the Camera software structure section. The following is a list of nodelets that are started:

NODES
  /camera/
    camera_nodelet_manager (nodelet/nodelet)
    debayer (nodelet/nodelet)
    depth_metric (nodelet/nodelet)
    depth_metric_rect (nodelet/nodelet)
    depth_points (nodelet/nodelet)
    depth_rectify_depth (nodelet/nodelet)
    depth_registered_rectify_depth (nodelet/nodelet)
    disparity_depth (nodelet/nodelet)
    disparity_registered_hw (nodelet/nodelet)
    disparity_registered_sw (nodelet/nodelet)
    driver (nodelet/nodelet)
    points_xyzrgb_hw_registered (nodelet/nodelet)
    points_xyzrgb_sw_registered (nodelet/nodelet)
    rectify_color (nodelet/nodelet)
    rectify_ir (nodelet/nodelet)
    rectify_mono (nodelet/nodelet)
    register_depth_rgb (nodelet/nodelet)
  /
    depthimage_to_laserscan (nodelet/nodelet)
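Turning off unneeded processing modules, as described above, means passing name:=false arguments to roslaunch. The following Python sketch shows one way to assemble such a command line from the set of modules an application needs. The helper name build_3dsensor_command is hypothetical, the choice of needed modules in the example is arbitrary, and the sketch does not check whether one module depends on another.

```python
# Processing modules named in the text; each is a boolean roslaunch argument.
MODULES = [
    "rgb_processing",
    "ir_processing",
    "depth_processing",
    "depth_registered_processing",
    "disparity_processing",
    "disparity_registered_processing",
    "scan_processing",
]


def build_3dsensor_command(needed):
    """Return a roslaunch argument list that disables every module not in `needed`."""
    unknown = set(needed) - set(MODULES)
    if unknown:
        raise ValueError("unknown module(s): " + ", ".join(sorted(unknown)))
    command = ["roslaunch", "turtlebot_bringup", "3dsensor.launch"]
    for module in MODULES:
        if module not in needed:
            # Modules default to on, so only the unwanted ones need an argument.
            command.append(module + ":=false")
    return command


# Arbitrary example: keep only the rgb images and the laser scan.
print(" ".join(build_3dsensor_command({"rgb_processing", "scan_processing"})))
```

The printed line can be pasted into the second terminal window in place of the plain 3dsensor.launch command. Returning a list rather than a string also lets the result be passed directly to subprocess if the launch is to be started from a script.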

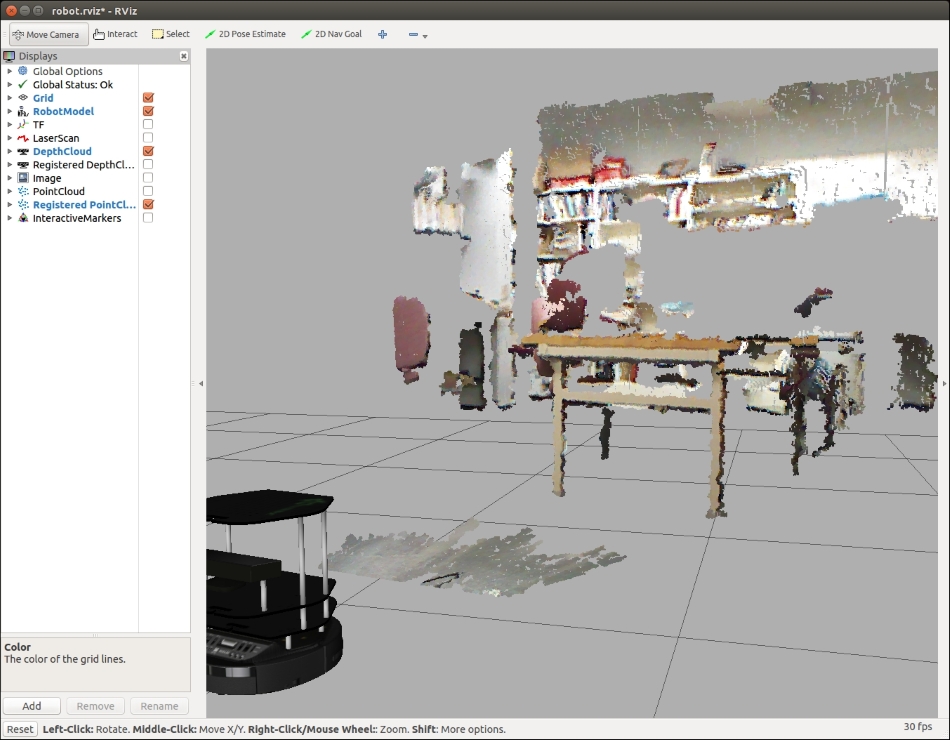

Next, rviz is launched to allow us to see the various forms of visualization data. A third terminal window will be opened for the following command:

- Terminal Window 3: View sensor data on rviz:

$ roslaunch turtlebot_rviz_launchers view_robot.launch

The turtlebot_rviz_launchers package provides the view_robot.launch file for bringing up rviz and is configured to visualize the TurtleBot and its sensor output.

Within rviz, the 3D sensor data can be displayed in many formats. If images are not visible in the environment window, set the Fixed Frame (under Global Options) on the Displays panel to /camera_link. Try checking the box for the Registered PointCloud and rotating the TurtleBot's screen environment in order to see what the Kinect is sensing. Then wait. Patience is required because displaying a point cloud involves a lot of processing power.

The following screenshot shows the rviz display of a Registered PointCloud image in our lab:

A Registered PointCloud image

On the rviz Displays panel, the following sensors can be added and checked for display in the environment window:

- DepthCloud

- Registered DepthCloud

- Image

- LaserScan

- PointCloud

- Registered PointCloud

The following table describes the different types of image sensor displays available in rviz and the message types that they display:

| Sensor name | Description | Messages used |
|---|---|---|
| Camera | This creates a new rendering window from the perspective of a camera and overlays the image on top of it. | sensor_msgs/Image, sensor_msgs/CameraInfo |
| DepthCloud, Registered DepthCloud | This displays point clouds based on depth maps. | sensor_msgs/Image |
| Image | This creates a new rendering window with an image. Unlike the camera display, this display does not use camera information. | sensor_msgs/Image |
| LaserScan | This shows data from a laser scan with different options for rendering modes, accumulation, and so on. | sensor_msgs/LaserScan |
| Map | This displays an occupancy grid on the ground plane. | nav_msgs/OccupancyGrid |
| PointCloud, PointCloud2, and Registered PointCloud | This shows data from a point cloud with different options for rendering modes, accumulation, and so on. | sensor_msgs/PointCloud, sensor_msgs/PointCloud2 |