To test Ceph tiering functionality, two RADOS pools are required. If you are running these examples on laptop or desktop hardware, spinning-disk-based OSDs can be used to create the pools, although SSDs are highly recommended if you intend to read and write any data. If you have multiple disk types available in your testing hardware, the base tier can live on spinning disks and the top tier can be placed on SSDs.

Let's create the tiers with the following steps; the tier-specific steps make use of the ceph osd tier command:

- Create two RADOS pools:

```shell
ceph osd pool create base 64 64 replicated
ceph osd pool create top 64 64 replicated
```

The preceding commands give the following output:

- Add the top pool as a tier of the base pool:

```shell
ceph osd tier add base top
```

The preceding command gives the following output:

- Set the cache mode of the top tier to writeback:

```shell
ceph osd tier cache-mode top writeback
```

The preceding command gives the following output:

- Make the top tier an overlay of the base tier, so that client I/O for the base pool is directed to the top tier:

```shell
ceph osd tier set-overlay base top
```

The preceding command gives the following output:

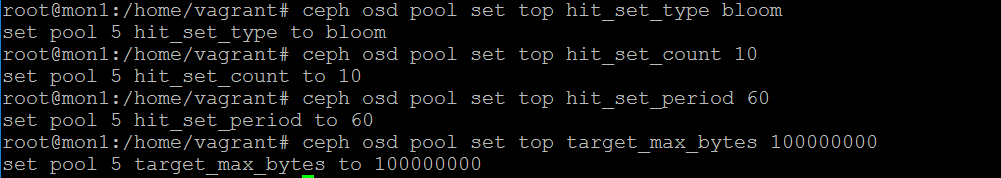
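The pool creation and tier commands so far can be collected into one small shell function, which is handy when rebuilding a test cluster. This is a minimal sketch, not part of Ceph: the function name `setup_cache_tier` and the `CEPH` override variable are hypothetical, and it assumes the `ceph` CLI is on the `PATH` with admin credentials. Setting `CEPH=echo` prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: create the base and top pools, then place "top" in front of
# "base" as a writeback cache tier, in the same order as the steps above.
# Hypothetical helper; CEPH defaults to the real ceph CLI and can be set
# to "echo" for a dry run that only prints the commands.
CEPH="${CEPH:-ceph}"

setup_cache_tier() {
  base_pool="${1:-base}"
  top_pool="${2:-top}"
  pg_count="${3:-64}"   # PG and PGP count used for both pools in this example

  "$CEPH" osd pool create "$base_pool" "$pg_count" "$pg_count" replicated || return 1
  "$CEPH" osd pool create "$top_pool" "$pg_count" "$pg_count" replicated || return 1
  # Attach top as a tier of base, make it a writeback cache,
  # and route client I/O for base through it.
  "$CEPH" osd tier add "$base_pool" "$top_pool" || return 1
  "$CEPH" osd tier cache-mode "$top_pool" writeback || return 1
  "$CEPH" osd tier set-overlay "$base_pool" "$top_pool"
}
```

Calling `setup_cache_tier base top 64` issues the same five commands shown above. The function stops at the first command that fails, so a half-configured tier does not go unnoticed.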

- Now that tiering is configured, we need to set a few simple values so that the tiering agent can function; without them, the tiering mechanism will not work properly. Note that these commands simply set variables on the top pool:

```shell
ceph osd pool set top hit_set_type bloom
ceph osd pool set top hit_set_count 10
ceph osd pool set top hit_set_period 60
ceph osd pool set top target_max_bytes 100000000
```

The preceding commands give the following output:

These commands tell Ceph to build HitSets using a bloom filter, to create a new HitSet every 60 seconds, and to keep 10 of them before discarding the oldest one. Finally, the top tier pool should hold no more than 100 MB; if it reaches this limit, I/O operations will block. More detailed explanations of these settings will follow in the next section.
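The retention window and size limit described above reduce to simple arithmetic. The short sketch below only reproduces the numbers from the commands; it does not contact a cluster, and the variable names are illustrative rather than anything Ceph defines.

```shell
#!/bin/sh
# Worked arithmetic for the HitSet and size settings on the "top" pool.
# The values mirror the commands above; nothing here contacts a cluster.
hit_set_count=10            # HitSets kept before the oldest is discarded
hit_set_period=60           # seconds covered by each HitSet
target_max_bytes=100000000  # byte limit for the top tier

# How far back, in seconds, the retained HitSets record object accesses.
history_secs=$((hit_set_count * hit_set_period))

# target_max_bytes is a raw byte count: decimal megabytes vs binary mebibytes.
target_mb=$((target_max_bytes / 1000000))
target_mib=$((target_max_bytes / 1048576))

echo "HitSet history: ${history_secs} s"
echo "target_max_bytes: ${target_mb} MB (about ${target_mib} MiB)"
```

Running it prints a 600-second (10-minute) history window and a limit of 100 MB, which is about 95 MiB because the value is given in decimal bytes.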

- Next, we need to configure the options that control when Ceph flushes dirty objects from the top tier down to the base tier and when it evicts objects from the top tier:

```shell
ceph osd pool set top cache_target_dirty_ratio 0.4
ceph osd pool set top cache_target_full_ratio 0.8
```

The preceding commands give the following output:

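This tells Ceph to start flushing dirty objects from the top tier down to the base tier once dirty objects make up 40% of the top tier, and to start evicting objects from the top tier once it is 80% full. Both percentages are measured against the target_max_bytes value set earlier, because Ceph cannot determine the size of a cache pool by itself.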
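Because both ratios are applied to the size given by target_max_bytes, the thresholds in bytes follow directly. This sketch assumes the 100000000-byte target set earlier; since POSIX shell arithmetic is integer-only, the ratios are written as percentages, and the variable names are illustrative.

```shell
#!/bin/sh
# Worked arithmetic: byte thresholds implied by the ratios set on "top".
# Assumes target_max_bytes=100000000 as set earlier; shell arithmetic is
# integer-only, so the 0.4 and 0.8 ratios are expressed as percentages.
target_max_bytes=100000000
dirty_pct=40   # cache_target_dirty_ratio 0.4
full_pct=80    # cache_target_full_ratio 0.8

flush_at=$((target_max_bytes * dirty_pct / 100))   # dirty bytes that trigger flushing
evict_at=$((target_max_bytes * full_pct / 100))    # used bytes that trigger eviction

echo "flush dirty objects once they reach ${flush_at} bytes"
echo "evict objects once the tier holds ${evict_at} bytes"
```

With these values, flushing starts at 40,000,000 bytes of dirty data and eviction at 80,000,000 bytes, that is, 40 MB and 80 MB.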

- Finally, the last two commands tell Ceph that an object must have been in the top tier for at least 60 seconds before it can be considered for flushing or eviction:

```shell
ceph osd pool set top cache_min_flush_age 60
ceph osd pool set top cache_min_evict_age 60
```

The preceding commands give the following output:
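The pool settings from the last three steps can also be applied in a single pass. This is a hedged sketch: `apply_top_tier_settings` and the `CEPH` override variable are hypothetical names, the values are the ones used in this section, and the function assumes a working `ceph` CLI unless `CEPH=echo` is set for a dry run that only prints the commands.

```shell
#!/bin/sh
# Sketch: apply every tiering-agent setting from this section to one pool.
# Hypothetical helper; CEPH defaults to the real ceph CLI and can be set
# to "echo" to print the commands instead of running them.
CEPH="${CEPH:-ceph}"

apply_top_tier_settings() {
  pool="${1:-top}"
  # One "key value" pair per line, copied from the commands above.
  # stdin is redirected from /dev/null so the ceph CLI cannot consume
  # the here-document that feeds the loop.
  while read -r key value; do
    "$CEPH" osd pool set "$pool" "$key" "$value" </dev/null || return 1
  done <<EOF
hit_set_type bloom
hit_set_count 10
hit_set_period 60
target_max_bytes 100000000
cache_target_dirty_ratio 0.4
cache_target_full_ratio 0.8
cache_min_flush_age 60
cache_min_evict_age 60
EOF
}
```

Keeping the values in one list makes it easy to tweak a single threshold and reapply everything. Reading a value back with ceph osd pool get top hit_set_count (and likewise for the other keys) is a quick way to confirm the settings took effect.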