Before we fail over the RBD to the secondary cluster, let's map it, create a file system, and place a file on it, so that we can confirm that the mirroring is working correctly. As of Linux kernel 4.11, the kernel RBD driver does not support the RBD journaling feature required for RBD mirroring, which means you cannot map the RBD using the kernel RBD client. Instead, we will use the rbd-nbd utility, which uses the librbd driver in combination with Linux nbd devices to map RBDs via user space:

sudo rbd-nbd map mirror_test

sudo mkfs.ext4 /dev/nbd0

sudo mount /dev/nbd0 /mnt

echo This is a test | sudo tee /mnt/test.txt

sudo umount /mnt

sudo rbd-nbd unmap /dev/nbd0

Now let's demote the RBD on the primary cluster and promote it on the secondary:

sudo rbd --cluster ceph mirror image demote rbd/mirror_test

sudo rbd --cluster remote mirror image promote rbd/mirror_test

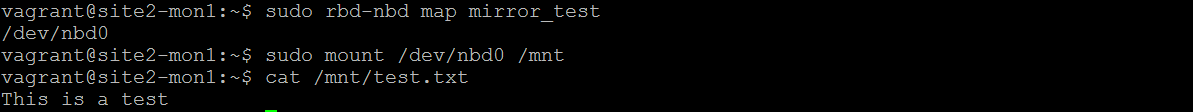
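
To confirm the role change before mapping the image, the mirroring state can be queried on the secondary cluster. This is a minimal sketch that reuses the `remote` cluster name and the `rbd/mirror_test` image from the commands above. The exact output format varies by Ceph release, so none is shown here.

```shell
# Show image details; on releases with mirroring support this should
# include a field indicating whether the image is primary
sudo rbd --cluster remote info rbd/mirror_test

# Show the mirroring state of the image as seen by the secondary cluster
sudo rbd --cluster remote mirror image status rbd/mirror_test
```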

Now, map and mount the RBD on the secondary cluster, and you should be able to read the test text file that you created on the primary cluster:
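
A sketch of those steps, following the commands used earlier on the primary cluster. It assumes that the secondary cluster is addressed with the same `--cluster remote` option used for the promote command, that rbd-nbd accepts this common Ceph option (omit it if the client's default configuration already points at the secondary cluster), and that the image is again mapped to `/dev/nbd0`. rbd-nbd prints the device it actually assigned, so adjust the path if it differs.

```shell
# Map the promoted image on the secondary cluster (prints the nbd device path)
sudo rbd-nbd --cluster remote map mirror_test

# Mount the existing ext4 file system; do not run mkfs again,
# as that would destroy the mirrored data
sudo mount /dev/nbd0 /mnt

# Read back the file that was written on the primary cluster
cat /mnt/test.txt
```

If mirroring worked, the file contains the line written earlier, `This is a test`.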

We can clearly see that the RBD has successfully been mirrored to the secondary cluster, and the file system content is just as we left it on the primary cluster.

This concludes the section on RBD mirroring.