14

SAP Intelligent Technologies

Today, many enterprises are increasingly leveraging intelligent technologies such as Artificial Intelligence (AI) in their business processes to forecast demand, augment employee productivity, and improve customer experience. As a result, businesses can prepare for new situations and address existing challenges more intelligently and proactively. Ultimately, by taking over repetitive and manual tasks, AI lets humans focus on value-adding work and reduces human error. AI can have a significant impact on every aspect of business processes.

In this chapter, we will discuss SAP's product and technology offerings in Machine Learning (ML) and AI, covering data preparation, training, inference, and AI operations. The following topics will be covered:

- SAP HANA ML

- SAP AI Core and AI Launchpad

- SAP AI Business Services

- AI capabilities in SAP Data Intelligence and SAP Analytics Cloud

- SAP Conversational AI

- SAP Process Automation

- Intelligent Scenario Lifecycle Management for S/4HANA

- Data architecture for AI

Throughout this chapter, you will learn the differences between the preceding technologies and gain an understanding of how to apply them in your business processes. Let’s get started!

Overview of Intelligent Technologies

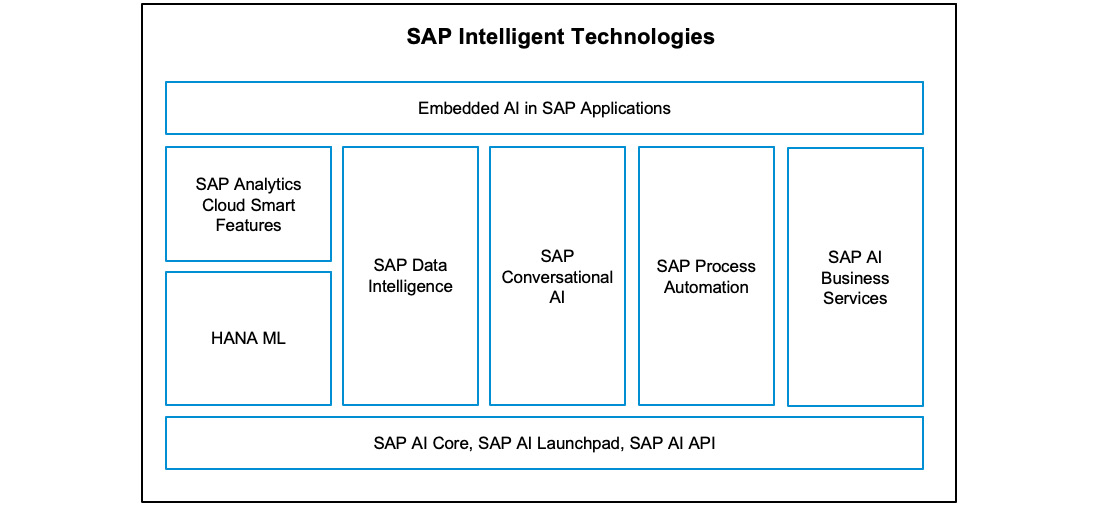

SAP Intelligent Technologies is a cornerstone of the Intelligent Enterprise strategy. SAP offers a full set of intelligent applications and services to help businesses make better decisions based on data and intelligence. The intelligent technologies are embedded in SAP’s intelligent suite applications to support and optimize business processes. They are also exposed as part of SAP BTP so that customers and ecosystem partners can extend existing solutions and create innovative new ones that provide competitive differentiation. As depicted in Figure 14.1, SAP’s intelligent offerings include the following:

- HANA ML: This contains in-database ML libraries and related tooling for AI modeling and algorithms, for structured and tabular data.

- AI Core and AI Launchpad: These are the foundation for the AI runtime and the lifecycle management of AI scenarios. AI Core offers a robust environment for training and serving. AI Launchpad is a SaaS offering to operationalize and manage the lifecycle of AI models.

- SAP AI Business Services: They provide ready-to-use AI capabilities as reusable business services on SAP BTP that can help solve specific business problems in various business processes. These services are typically consumed as APIs published on SAP API Business Hub.

- SAP Data Intelligence: This provides data integration, data governance, and features to create, deploy, and execute ML models.

- SAP Analytics Cloud (SAC): This provides smart features for citizen data scientists and business users to perform classification, prediction, and forecasting.

- SAP Conversational AI: This is a low-code/low-touch chatbot building platform, with capabilities to build, train, test, and manage intelligent AI-powered chatbots to simplify business tasks and workflows and improve the user experience.

- SAP Process Automation: This combines Intelligent Robotic Process Automation (iRPA) and workflow capabilities to automate repetitive processes and help users to focus on high-value tasks.

- Embedded AI: AI embedded in SAP applications enables customers to consume AI directly as part of the standard applications. In this way, AI is natively infused into the business processes of SAP’s Intelligent Enterprise scenarios, supported by AI clients such as Intelligent Scenario Lifecycle Management (ISLM) for S/4HANA.

Figure 14.1: Overview of SAP Intelligent Technologies

SAP’s AI strategy is to focus on infusing AI into applications as part of business processes. Tools cover areas such as AI design-time activities, data analysis, modeling, experiment tracking, data acquisition, production runtime, operations, and the lifecycle management of AI functionalities.

Next, we will discuss the aforementioned capabilities in more detail.

HANA ML

SAP HANA contains in-database ML libraries and related tooling for AI modeling and algorithms, based on relational data. ML use cases are supported in a few different ways: through the built-in functions of the Automated Predictive Library (APL) and the Predictive Analysis Library (PAL), and through the Python and R clients for SAP HANA ML.

APL and PAL

APL provides data mining capabilities based on the Automated Analytics engine of HANA through functions that run, by default, in the SAP HANA script server. APL functions provide the capabilities to develop predictive models, train the models, and run the models to answer business questions based on data stored in SAP HANA. PAL is another library that provides built-in algorithms and functions to leverage ML capabilities in HANA.

APL and PAL together cover a broad range of AI scenarios, such as classification, regression, time series forecasting, cluster analysis, association analysis, statistics, social network analysis, recommender systems, and miscellaneous other functions. In addition, PAL provides algorithms for outlier detection and advanced features, such as unified classification and regression interfaces that expose the same procedure interface across algorithms for easier consumption.

APL and PAL generate metrics that quantify AI model performance as part of the standard output of model training and function runs. The metrics can be persisted and used for comparison. Most algorithms provide functions for automated model evaluation and parameter search, as well as comparison for the selection of different regression algorithms. APL also supports additional metrics, such as Predictive Power and Prediction Confidence, to describe model performance. SAC, for example, uses these HANA ML capabilities for its smart features, as mentioned in the previous chapter.
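Automated parameter search can be made concrete with a minimal, library-agnostic sketch in Python. It tunes the smoothing factor of single exponential smoothing by comparing one-step-ahead squared errors on a small, made-up demand series. This is not APL or PAL code. The function names, the candidate grid, and the data are hypothetical; APL and PAL perform this kind of search inside the database.

```python
from typing import List, Sequence, Tuple


def one_step_sse(series: Sequence[float], alpha: float) -> float:
    """Sum of squared one-step-ahead errors for single exponential smoothing."""
    level = series[0]  # initialize the smoothed level with the first observation
    sse = 0.0
    for actual in series[1:]:
        error = actual - level  # the forecast for this period is the current level
        sse += error * error
        level = alpha * actual + (1 - alpha) * level  # update the level
    return sse


def search_alpha(series: Sequence[float], grid: Sequence[float]) -> Tuple[float, float]:
    """Evaluate every candidate alpha and return (best_alpha, best_sse)."""
    scored: List[Tuple[float, float]] = [(one_step_sse(series, a), a) for a in grid]
    best_sse, best_alpha = min(scored)  # lowest error wins; ties go to the smaller alpha
    return best_alpha, best_sse


# Hypothetical monthly demand figures, used only for illustration
demand = [12.0, 13.0, 12.5, 14.0, 15.0, 14.5, 16.0]
candidates = [0.1, 0.3, 0.5, 0.7, 0.9]
best_alpha, best_sse = search_alpha(demand, candidates)
print(f"best alpha={best_alpha}, SSE={best_sse:.3f}")
```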

APL and PAL are the embedded AI engines in SAP HANA, designed to deliver maximum performance by leveraging HANA’s parallelization capabilities. Because they are embedded in the database, both PAL and APL are invoked through SQLScript. They expect the training dataset in a relational format, coming from HANA data structures such as database tables, virtual structures such as calculation views, or remote data sources federated from other databases. Calls to APL and PAL can be placed in SQLScript files and SAP HANA stored procedures, and can also be included in table functions and calculation views. An important aspect of deployment is integration with SAP applications, so that insights are embedded in the business processes. One example is ABAP Managed Database Procedures (AMDPs), which wrap SQLScript code in standard ABAP syntax. SAP S/4HANA provides a framework for integrating AI scenarios based on AMDPs, called Intelligent Scenario Lifecycle Management (ISLM). We will discuss it later in this chapter.

APL provides another option for deployment. Models can be extracted as JavaScript code snippets, thus having the flexibility to run in existing JavaScript engines in addition to HANA runtimes.

Important Note

For more details about the supported algorithms in APL and PAL, please refer to the APL developer guide at https://help.sap.com/docs/apl/7223667230cb471ea916200712a9c682/59b79cbb6beb4607875fa3fe116a8eef.html and the PAL reference guide at https://help.sap.com/docs/HANA_CLOUD_DATABASE/319d36de4fd64ac3afbf91b1fb3ce8de/c9eeed704f3f4ec39441434db8a874ad.html.

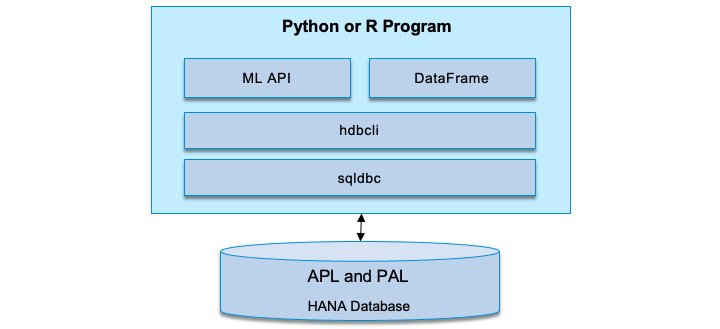

In addition, SAP HANA provides native client libraries for Python and R that make it easier for data scientists to consume the built-in APL and PAL functions.

Python and R ML clients for SAP HANA

Python and R are the most popular languages for data science. The goal of the Python and R clients is to offer an experience similar to that of any open source ML library, while delegating the actual execution to HANA so that no data needs to be transferred.

The Python and R clients include standard data manipulation operations based on DataFrames. An SAP HANA DataFrame lets you work with data in SAP HANA much like a native DataFrame in Python or R. However, all operations, including model training and scoring, are translated into SQL statements and executed in the database, which results in faster performance. Depending on the use case, the generated SQL statements can be captured into SQLScript or data model artifacts. An SAP HANA DataFrame is only available while the connection to HANA is open via the ConnectionContext object. The following statement creates a simple SAP HANA DataFrame instance:

```python
from hana_ml.dataframe import ConnectionContext
# Placeholders: replace 'address', port, 'user', and 'password' with real connection details
with ConnectionContext('address', port, 'user', 'password') as c_context:
    dataframe = c_context.table('TABLE1', schema='SCHEMA1').select('COL1', 'COL2', 'COL3')  # lazy view, no data fetched
```

An SAP HANA DataFrame doesn’t contain any data; it only provides a way to view data stored in HANA. You may need to manipulate and transform the data together with other datasets, or to use other native libraries. In that case, you can convert the SAP HANA DataFrame to a pandas DataFrame through the collect() function.
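The key idea behind the SAP HANA DataFrame is lazy evaluation. Each operation only extends a SQL statement, and data moves only when you ask for it. The following toy Python class is a hypothetical illustration of that idea, not the hana_ml implementation. The class name LazyFrame, its methods, and the shape of the generated SQL are assumptions chosen for clarity.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LazyFrame:
    """Hypothetical stand-in for a database-backed DataFrame: it stores SQL, not rows."""

    source_sql: str
    columns: Tuple[str, ...] = ()
    filters: Tuple[str, ...] = ()

    def select(self, *cols: str) -> LazyFrame:
        # Returns a new frame; nothing is executed against the database
        return LazyFrame(self.source_sql, tuple(cols), self.filters)

    def filter(self, condition: str) -> LazyFrame:
        return LazyFrame(self.source_sql, self.columns, self.filters + (condition,))

    @property
    def sql(self) -> str:
        """The single statement the database would run when the data is finally collected."""
        cols = ", ".join(f'"{c}"' for c in self.columns) or "*"
        statement = f"SELECT {cols} FROM ({self.source_sql}) AS T"
        if self.filters:
            statement += " WHERE " + " AND ".join(f"({cond})" for cond in self.filters)
        return statement


def table(name: str, schema: str) -> LazyFrame:
    return LazyFrame(f'SELECT * FROM "{schema}"."{name}"')


base = table("TABLE1", schema="SCHEMA1")
view = base.select("COL1", "COL2").filter('"COL1" > 100')
print(view.sql)
```

In hana_ml itself, collect() plays the role of the final step: it runs the generated statement and returns a pandas DataFrame.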

The library includes the following modules and algorithms:

- hana_ml.dataframe: This represents HANA data structures as DataFrames.

- hana_ml.algorithms.apl: This package includes APL functions such as gradient boosting classification and regression, time series, and clustering algorithms.

- hana_ml.algorithms.pal: This package contains various PAL algorithms for AutoML, classification, regression, clustering, exponential smoothing, association, time series forecasting, statistics, recommender systems, and miscellaneous algorithms such as ABC Analysis, as well as model, pipeline, and metrics support.

- Other modules and packages such as exception and error handling, model storage, visualizer, spatial, graph, and text mining packages.

SAP HANA Cloud provides the scalability and elasticity needed to manage resources and support AI workloads. The algorithm libraries support multi-threading options to speed up training. For the highest performance, HANA can be configured to keep ML models in main memory if the use case requires it. In addition, the Python client provides model storage that supports model versioning.

SAP HANA ML focuses on data scientist productivity and provides internal tooling and lifecycle management to support data preparation, model training, and evaluation. Because HANA supports multi-model data storage and processing, the ML libraries also integrate tightly with advanced HANA capabilities such as spatial and graph processing and text mining. Consider the SAP HANA ML libraries when HANA is the central data platform in your architecture. Within SAP, many products such as SAC, S/4HANA, and Integrated Business Planning (IBP) leverage HANA ML as the foundation of their intelligent capabilities. Figure 14.2 summarizes the AI capabilities in SAP HANA:

Figure 14.2: SAP HANA ML libraries

SAP HANA ML continues to bring more algorithms to improve the offerings for AI scenarios based on structured and tabular data in HANA. Next, we will talk about the ML/AI capabilities in SAP Data Intelligence.

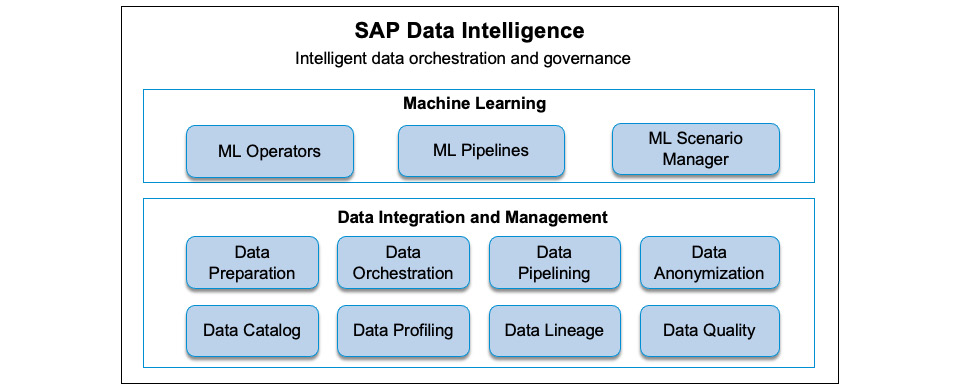

ML/AI in SAP Data Intelligence

SAP Data Intelligence is SAP’s data management solution to provide complex orchestration scenarios, data cataloging, data quality, and metadata management. It brings together data management capabilities that were previously available in SAP Data Hub and integrates them with ML capabilities from other SAP solutions. In Chapter 8, we already discussed the data integration capabilities of SAP Data Intelligence – here, we will focus on the ML capabilities of SAP Data Intelligence.

When all your data is already structured and stored in SAP HANA or SAP Data Warehouse Cloud, the built-in ML libraries discussed earlier might be sufficient. In many use cases, however, data is spread across multiple places, with a mix of structured and unstructured data such as IoT data. SAP Data Intelligence is recommended for use cases where multiple disparate data sources need to be consolidated, or where data pipelines need to be orchestrated into additional ML environments. SAP Data Intelligence includes more than 200 operators for different kinds of tasks, such as image classification and Optical Character Recognition (OCR), and lets you create custom operators for other requirements. In addition, it can perform data transformation and data cleaning, apply data quality rules, and run scripts in Python, R, and HANA ML, as mentioned earlier.

As part of SAP Data Intelligence, the ML Scenario Manager provides a single place to track all ML-related artifacts, such as datasets, notebooks, training runs, and pipelines. The Modeler provides a low-code interface for using APL and PAL functions without writing SQLScript. It is also possible to combine HANA ML with open source R and Python libraries in the same pipeline to enable an end-to-end ML scenario. Note that models running on standard SAP Data Intelligence servers cannot take advantage of GPUs. For scenarios that require GPUs, such as deep learning, an alternative solution such as AI Core is suggested; we will cover it later in this chapter. SAP Data Intelligence can still be used to pipeline the data to a different training or serving environment that supports GPUs.

Deployment of models is done using operators that wrap R and Python code and make it reusable in any pipeline. SAP Data Intelligence’s architecture is container-based and each operator runs in its own Docker container and can scale independently. Monitoring and pipeline metrics are available, and the pipelines can be scheduled to run as part of CI/CD through tools such as Git and Jenkins. Figure 14.3 depicts the core data orchestration and governance capabilities and the support of ML and AI scenarios in SAP Data Intelligence:

Figure 14.3: ML/AI support in SAP Data Intelligence
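Conceptually, an SAP Data Intelligence graph connects reusable operators so that the output of one feeds the next. The sketch below mimics that composition with plain Python generators. It is only an analogy: real operators are configured in the Modeler and run in their own containers. The operator names and sensor records are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Record = Dict[str, Any]
Operator = Callable[[Iterable[Record]], Iterator[Record]]


def drop_missing(field: str) -> Operator:
    """Reusable cleaning operator: skip records where `field` is missing."""
    def op(records: Iterable[Record]) -> Iterator[Record]:
        for record in records:
            if record.get(field) is not None:
                yield record
    return op


def flag_above(field: str, threshold: float) -> Operator:
    """Reusable scoring operator: add an 'alert' flag when `field` exceeds `threshold`."""
    def op(records: Iterable[Record]) -> Iterator[Record]:
        for record in records:
            yield {**record, "alert": record[field] > threshold}
    return op


def run_pipeline(source: Iterable[Record], *operators: Operator) -> List[Record]:
    stream: Iterable[Record] = source
    for operator in operators:
        stream = operator(stream)  # wire this operator's input to the previous output
    return list(stream)  # records only flow once the pipeline is consumed


readings = [
    {"sensor": "A", "temp": 71.0},
    {"sensor": "B", "temp": None},
    {"sensor": "C", "temp": 95.5},
]
result = run_pipeline(readings, drop_missing("temp"), flag_above("temp", 90.0))
print(result)
```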

However, SAP Data Intelligence does not provide dedicated MLOps lifecycle management tools; we will discuss these as part of AI Core. Before that, let’s review the smart features available in SAC that we discussed in the last chapter.

SAC smart features

SAC offers a set of smart features such as Smart Insights and Smart Predict, mainly for citizen data scientists and business users. We introduced the details of these capabilities in the last chapter, so here, we will briefly discuss what was not covered previously – the lifecycle and differences of predictive models, from data preparation to the operation of the models.

SAC supports two types of data sources for predictive models: datasets and planning-enabled models. Datasets, whether acquired or accessed through a live connection, can be used as data sources for any supported predictive scenario. Planning-enabled models can be used for time series forecasting.

SAC provides a simple way to create predictive models. Under the hood, its predictive engine uses capabilities similar to APL in SAP HANA. To keep things simple, SAC deliberately doesn’t let users parameterize the underlying algorithms.

To evaluate the quality of predictive models, the following metrics are made available in SAC, as shown in Table 14.1:

| Predictive Scenario | Accuracy | Robustness |
|---|---|---|
| Classification | Predictive Power | Prediction Confidence |
| Regression | Root Mean Squared Error (RMSE) | Prediction Confidence |
| Time Series Forecasting | Mean Absolute Percentage Error (MAPE) | |

Table 14.1: SAC Predictive Model Metrics
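RMSE and MAPE in Table 14.1 are standard error measures, so they can also be computed outside SAC. The following sketch uses their textbook definitions on made-up numbers. SAC’s exact computation may differ (for example, in how it treats zero actual values). Predictive Power and Prediction Confidence are SAP-specific indicators and are not reproduced here.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], predicted: Sequence[float]) -> None:
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root Mean Squared Error: square root of the mean squared residual."""
    _check(actual, predicted)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean Absolute Percentage Error in percent; undefined if any actual value is 0."""
    _check(actual, predicted)
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical actuals and forecasts
actual = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 400.0]
print(f"RMSE={rmse(actual, forecast):.3f}, MAPE={mape(actual, forecast):.2f}%")
```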

Predictive scenarios and predictive models are created and stored in SAC. End users typically consume the predictions in SAC stories and analytic applications. Predictive forecasts generated from planning-enabled models can be exported and written back to the source systems for further integration with specific business processes.

The lifecycle of predictive models is fully managed within SAC. Predictive models created on datasets need to be retrained manually for classification, regression, and time series forecasting. However, time series forecasting models can be created on top of either datasets or planning-enabled models, and the refresh of time series forecasts based on planning models can be automated.

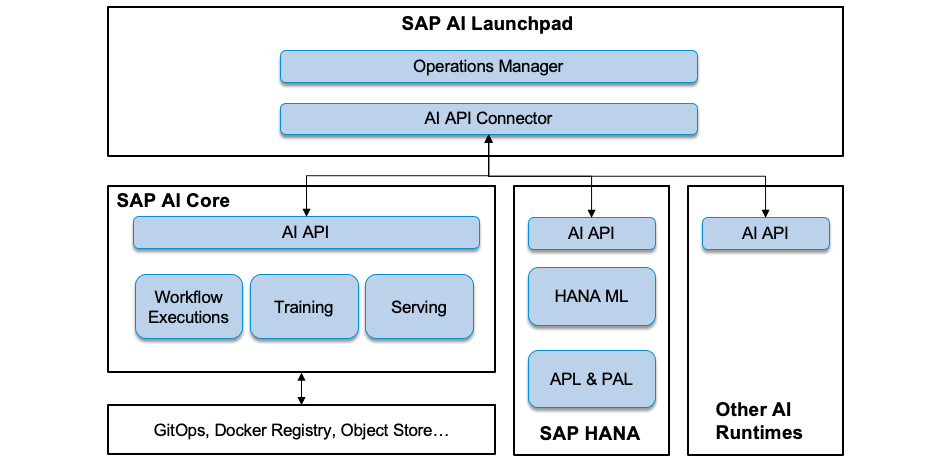

SAP AI Core, SAP AI Launchpad, and SAP AI API

SAP AI Core and SAP AI Launchpad are SAP’s two latest offerings for unified AI consumption and lifecycle management of all types of AI scenarios. AI scenarios can be realized through the AI runtimes provided by SAP AI Core or with any framework, such as TensorFlow. Operational management of AI scenarios, such as versioning, training, deployment, and serving, is provided through AI Launchpad. Together, they provide functionality similar to, and beyond, that of SAP Leonardo Machine Learning Foundation. Let’s start by understanding SAP AI Core.

SAP AI Core

SAP AI Core is SAP’s AI runtime for ML and AI workloads with integration in SAP BTP to deploy AI scenarios at scale. Operationalizing AI scenarios, such as bringing an AI model developed by a data scientist to production, is not easy. SAP AI Core is the solution to productize AI for Bring Your Own Model (BYOM) scenarios. However, SAP AI Core is not intended for developing models – models can be created by leveraging any open source ML library and packaged as a Docker container. Once a model is built into a training workflow, SAP AI Core can execute and schedule the training, including the support of GPUs for parallelization.

Typically, datasets are provided via object stores such as AWS S3. SAP AI Core integrates with the supported object stores of the different hyperscalers. SAP Data Intelligence is a general data preparation tool. SAP AI Core, by contrast, offers only limited data transformation capabilities and is suitable only for small, AI-processing-focused transformations. It is therefore recommended to prepare datasets for training outside SAP AI Core.

SAP AI Core offers the runtime environment for AI scenarios. It includes capabilities such as multi-tenancy, auto-scaling, GPU support, and KFServing to standardize ML operations on top of Kubernetes. SAP AI Core uses Argo Workflows, the open source, container-native workflow engine, following GitOps principles. Customers can create Argo workflows either by bringing their own models from experimental environments or by promoting training workflows within SAP AI Core. With multi-tenancy, each tenant can have multiple resource groups to isolate data and functions.

With a focus on productizing AI scenarios, SAP AI Core collects and stores a wide range of configurable metrics, including model performance metrics and statistics on training jobs. Through the AI API integration, a standardized list of metrics is provided, which customers can extend for their specific scenarios. Monitoring all key metrics is one of the main capabilities of SAP AI Core for enabling operations. Through this integration, the metrics are available and can be analyzed in SAP AI Launchpad.

SAP AI Launchpad

SAP AI Launchpad is the SaaS offering that provides a user interface (UI) for managing and operating AI scenarios across multiple deployments and instances of supported AI runtimes, such as SAP AI Core or third-party AI runtimes. SAP AI Launchpad also provides all the necessary information on metrics and artifacts, and supports the full lifecycle of training, executing, and operating AI scenarios across AI execution engines.

From an MLOps perspective, SAP AI Launchpad is envisioned as the tool for data scientists and ML engineers for all AI content development and productization across the SAP landscape. Customers can use SAP AI Launchpad to trigger deployment jobs and to productize existing trained models in supported AI runtimes, including SAP AI Core.

SAP AI Launchpad is considered the single place to explore and manage the full lifecycle of AI scenarios within the SAP landscape, regardless of runtimes.

SAP AI API

The lifecycle management of AI scenarios is standardized through a common set of APIs called SAP AI API. SAP AI Core and SAP AI Launchpad are also integrated through SAP AI API as the standard interface.

The AI API provides tools for managing the artifacts, workflows, and AI scenarios, such as the following:

- Register supported object stores such as AWS S3 and WebHDFS for storing training data and training models

- Create a Docker Registry secret to authorize pulling Docker images from your Docker Registry

- Create a configuration to specify the parameters (artifact references)

- Register an artifact for use in a configuration, such as a dataset or model

- Deploy a trained model and start to serve inference requests

- Manage training and execution metrics based on filtering conditions
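The configuration step can be illustrated with a sketch that builds, but does not send, a POST request for creating a configuration. The endpoint path /v2/lm/configurations, the AI-Resource-Group header, and the body field names reflect our understanding of the SAP AI API reference, so verify them before use. The base URL, token, and scenario, executable, parameter, and artifact IDs are hypothetical placeholders.

```python
import json
import urllib.request
from typing import Dict


def build_configuration_request(
    api_url: str,
    token: str,
    resource_group: str,
    *,
    name: str,
    scenario_id: str,
    executable_id: str,
    parameters: Dict[str, str],
    artifacts: Dict[str, str],
) -> urllib.request.Request:
    """Assemble a 'create configuration' call: parameter values plus input artifact references."""
    body = {
        "name": name,
        "scenarioId": scenario_id,
        "executableId": executable_id,
        "parameterBindings": [{"key": k, "value": v} for k, v in parameters.items()],
        "inputArtifactBindings": [{"key": k, "artifactId": a} for k, a in artifacts.items()],
    }
    return urllib.request.Request(
        url=f"{api_url.rstrip('/')}/v2/lm/configurations",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "AI-Resource-Group": resource_group,  # selects the tenant's resource group
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_configuration_request(
    "https://api.ai.example.com", "<token>", "default",
    name="demand-forecast-config", scenario_id="demand-forecast",
    executable_id="training-pipeline",
    parameters={"epochs": "10"}, artifacts={"training-data": "<artifact-id>"},
)
# urllib.request.urlopen(req) would submit it; a real call needs a valid OAuth token.
```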

SAP AI API is independent of the AI runtime, whether that runtime is SAP HANA ML or a partner technology such as the AI runtimes from Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Important Note

For more details about SAP AI API, please read the API reference at https://help.sap.com/doc/2cefe221fddf410aab23dce890b5c603/CLOUD/en-US/index.html.

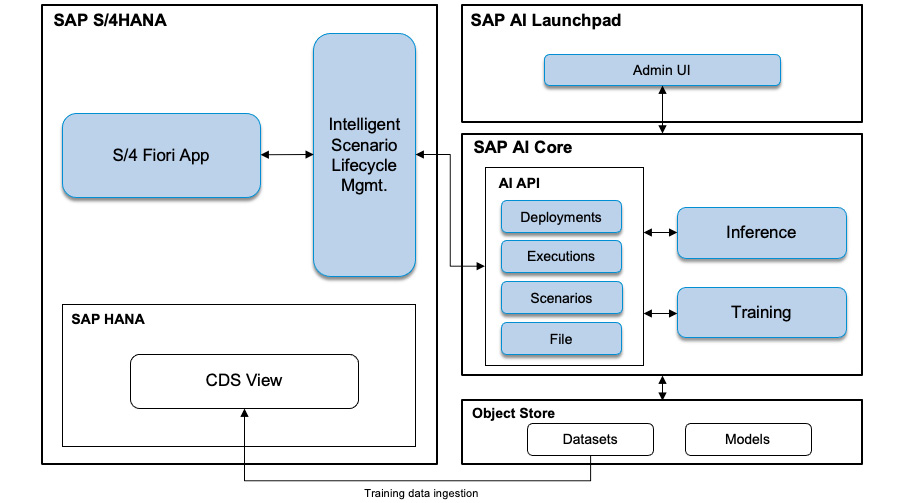

To summarize, Figure 14.4 depicts the integrations between SAP AI Core, SAP AI Launchpad, and SAP AI API:

Figure 14.4: SAP AI Core, AI Launchpad, and AI API

Next, let’s have a look at SAP AI Business Services, the simplest way to consume intelligent capabilities from SAP BTP.

SAP AI Business Services

SAP offers differentiated, business-centric AI services through SAP AI Business Services. SAP AI Business Services is not a single product but a set of reusable business services and applications that automate and optimize business processes by learning from historical data. It addresses specific business problems such as document processing and business entity recognition.

Most SAP AI Business Services are delivered as SaaS offerings with their own UIs or as ready-to-use APIs that can be integrated into existing business processes. By embedding SAP AI Business Services, some SAP applications such as S/4HANA provide out-of-the-box integrations that infuse intelligence into the business application. SAP customers and partners can also use SAP AI Business Services through SAP BTP for their own scenarios, applying the ready-to-use AI algorithms to their own data in their business context.

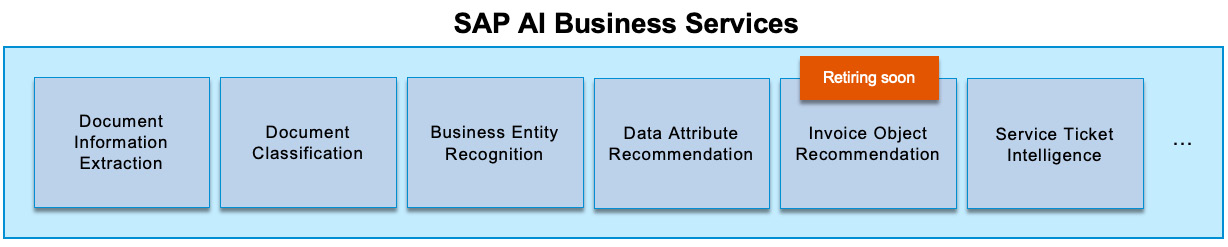

Figure 14.5 summarizes the list of services in SAP AI Business Services. SAP will continue to offer new services through SAP BTP:

Figure 14.5: SAP AI Business Services

Here are some more details:

- Document Information Extraction helps to process large numbers of business documents, such as invoices and payments, and extracts information from header fields and line items as structured data. It lets you use master data to enrich the extracted information and find the best match. It also lets you create your own templates for processing custom documents.

- Document Classification helps to classify and manage many documents with either pre-trained or custom classification models to improve overall document processing productivity. It supports various use cases such as contract management and invoice processing.

- Business Entity Recognition helps to detect and extract business entities from unstructured text. It supports pre-trained models and also allows users to create their own ML models to detect new types of entities. Business Entity Recognition is offered as an API-only service without a UI.

- Data Attribute Recommendation helps to match and classify master data, such as matching point-of-sale information with a product category and adding missing numerical attributes in sales orders. Data Attribute Recommendation is also offered as an API-only service without a UI.

- Invoice Object Recommendation helps the accounts payable department assign general ledger (G/L) accounts and cost objects more accurately for incoming invoices without a purchase order, reducing processing time and repetitive manual tasks. Invoice Object Recommendation is also offered as an API-only service without a UI. At the time of writing, Invoice Object Recommendation is marked for deprecation.

- Service Ticket Intelligence helps to classify service tickets into their categories and assign them to the right agent with recommended solutions or knowledge base articles to improve service response time and overall support productivity and efficiency. Service Ticket Intelligence is embedded in SAP Service Cloud or can be consumed as a REST-based API.

SAP AI Business Services provide ready-to-use AI models consumable through standard REST APIs, enabling developers to use AI services without the need for data science know-how. Most SAP AI Business Services provide APIs for both training and inference – SAP also takes care of the deployment and ongoing operations of the models. Due to its simplicity and position to solve specific business problems, SAP AI Business Services is considered the starting point of the AI journey for your SAP landscape, all through easy-to-consume cloud offerings.
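Consuming an AI Business Service from your own code typically involves two steps. First, you obtain an OAuth access token with the client credentials from the service key. Then, you call the REST API with that token. The sketch below builds both requests with the Python standard library but does not send them. The token path /oauth/token follows the common SAP BTP (XSUAA) pattern. The URLs, credentials, and API path are hypothetical placeholders; take the real values from your service key and the service’s API documentation.

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Any, Dict


def build_token_request(uaa_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """OAuth 2.0 client-credentials request; the credentials go in an HTTP Basic header."""
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode("utf-8")).decode("ascii")
    form = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii")
    return urllib.request.Request(
        f"{uaa_url.rstrip('/')}/oauth/token",
        data=form,  # supplying a body makes urllib send a POST
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def build_service_request(
    service_url: str, path: str, access_token: str, payload: Dict[str, Any]
) -> urllib.request.Request:
    """JSON POST to a service endpoint, authorized with the bearer token."""
    return urllib.request.Request(
        f"{service_url.rstrip('/')}/{path.lstrip('/')}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
        method="POST",
    )


token_req = build_token_request("https://my-subaccount.authentication.example.com", "my-client-id", "my-secret")
# With real credentials: token = json.load(urllib.request.urlopen(token_req))["access_token"]
api_req = build_service_request(
    "https://ai-service.example.com", "/hypothetical/endpoint", "<access-token>",
    {"text": "Invoice 4711 from ACME"},
)
```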

SAP AI Business Services is part of the Cloud Platform Enterprise Agreement (CPEA) commercial model of SAP BTP. This model allows customers and partners to consume the services with entitled cloud credits and to be charged only for actual consumption.

SAP Conversational AI

SAP Conversational AI (CAI) offers Natural Language Processing (NLP) capabilities to enable users to create intelligent chatbots to automate workflows. SAP CAI is the conversational AI layer of SAP BTP to help businesses to build bots through its low-code bot building platform and embed chatbot experiences into SAP applications to deliver a personalized experience and automate time-consuming and repetitive tasks.

As an enterprise conversational AI platform, SAP CAI provides the following capabilities:

- Build the bot: Give skills to the bot that include the trigger, requirements, and responses, and create the conversational flow with the Bot Builder tool

- Train the bot: Create intents and use expressions to teach the bot what it can understand and recognize from the users

- Connect the bot: Connect the bot to SAP solutions and various messaging platforms and integrate with a fallback channel to a human agent if required

- Monitor the bot: Understand how users interact with the bot, understand unaddressed requirements, and improve user experience
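The toy classifier below illustrates what intents and expressions are. It assigns a user utterance to the intent whose example expressions share the most words with it, measured by Jaccard similarity. SAP CAI uses trained NLP models rather than word overlap, so treat this purely as a conceptual sketch. The intent names, expressions, and threshold are hypothetical.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical intents, each taught through a few example expressions
INTENTS: Dict[str, List[str]] = {
    "check_leave_balance": ["how many vacation days do I have", "show my leave balance"],
    "request_leave": ["I want to take a day off", "book vacation next week"],
}


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def classify(utterance: str, intents: Dict[str, List[str]], min_score: float = 0.2) -> Optional[str]:
    """Return the intent with the most similar expression, or None if nothing is close enough."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, expressions in intents.items():
        for expression in expressions:
            expr_words = tokens(expression)
            score = len(words & expr_words) / len(words | expr_words)  # Jaccard similarity
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= min_score else None  # None -> fallback, e.g. a human agent


print(classify("how many leave days do I have left", INTENTS))
```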

SAP CAI is based on SAP’s acquisition of Recast.AI and enhances the NLP capabilities of SAP solutions by providing a conversational user experience for SAP customers. CAI is offered as a service in BTP and will continue to provide more integration and usage with other SAP solutions, such as the SAP SuccessFactors Human Experience Management suite.

SAP Process Automation

There is increasing demand for companies to let their employees focus on business-critical activities rather than manual and repetitive tasks. One approach is to upskill the workforce to use process automation tools that improve productivity and reduce cycle time. Think about use cases such as automating repetitive tasks like copying and pasting into a spreadsheet, or high-volume process steps such as entering sales order data or changing purchase orders. Other examples are the employee onboarding process and leave request approval.

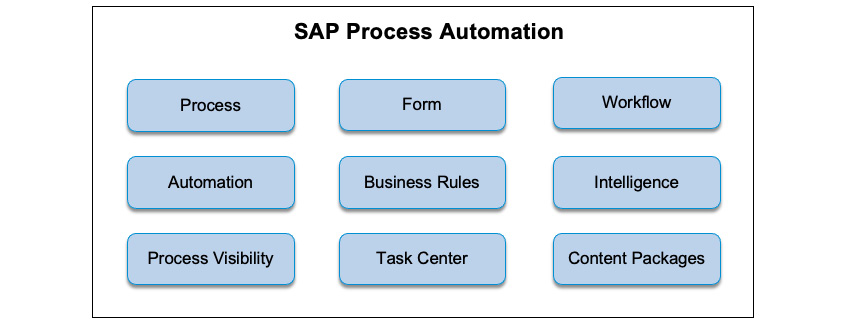

SAP Process Automation is an intelligent process automation platform that provides a low-code and no-code experience for building applications and process extensions. It combines the capabilities of SAP Workflow Management and SAP iRPA. It helps business users (aka Citizen Developers) build automations for their business processes without depending on the IT department. In this way, it enables domain experts who have process knowledge, but not necessarily developer skills, to take part in simplifying and automating business processes.

SAP Process Automation is available as part of BTP’s free tier and can be consumed through the CPEA or pay-as-you-go models. Once enabled in the BTP Cockpit, the SAP Process Automation application development workbench is the no-code environment to create, test, deploy and monitor the processes, with different skills such as automation, forms, and advanced workflows, as Figure 14.6 shows:

Figure 14.6: SAP Process Automation capabilities

As part of SAP Process Automation, the desktop agent is an on-premises component delivered as a Windows MSI installer, which can be downloaded from SAP Software Center.

SAP Process Automation organizes the features in the following areas:

- Lobby: For creating and managing business process projects or action projects. A business process project groups the tasks of a business scenario and can consist of different skills, such as automation, approval forms, or business rules. An action project encapsulates external APIs, typically created by IT teams, so that business users can consume them in a business process project.

- Store: The place to browse and use existing pre-packaged content curated by SAP, which includes templated automations, forms, actions, workflows, processes, and process visibility dashboards. All the content is versioned and free to use, but SAP doesn’t warrant its correctness.

- Monitor: Allows business users to see how automations are performing through process visibility dashboards. Users can create rich dashboards and collect events from automation jobs and workflows.

- Settings: For registering and configuring desktop agents, configuring backend settings such as a mail server or integration with SAP Cloud ALM, and configuring API keys, destinations, and so on.

Now that we have covered the intelligent technologies offered through SAP BTP, let’s have a look at how they are integrated with SAP applications such as SAP S/4HANA.

Intelligent Scenario Lifecycle Management for S/4HANA

ISLM is the standardized framework for the lifecycle management and operation of intelligent scenarios in the context of SAP S/4HANA. ISLM acts as the central cockpit for operating ML in SAP S/4HANA and cannot be used outside of it. An intelligent scenario is an ABAP representation of an ML-based use case.

The ISLM framework offers the necessary support to standardize the integration and consumption of intelligent scenarios for both embedded and side-by-side scenarios, which allows application developers for SAP S/4HANA to create scenarios and perform lifecycle operations including training, deploying, and activating models directly within the business applications. The operations of intelligent scenarios are supported by the Intelligent Scenario Management application. Depending on where the ML model runs, ISLM supports both of the following scenarios:

- Embedded: The ML model runs in the same stack as S/4HANA, leveraging SAP HANA ML capabilities such as the APL and PAL, and is consumed by the ABAP-based applications directly.

- Side-by-side: The ML model runs in a separate stack, such as SAP AI Core, or is consumed through SAP AI Business Services. Side-by-side scenarios have more flexibility to support advanced AI use cases, such as image recognition or deep learning based on neural networks.

Through ISLM, an intelligent scenario of either type can be created. ISLM lets customers perform prerequisite checks in the exploration phase to verify whether the scenario will run successfully in their environment. An ML model can be trained and deployed for inference consumption; deployment applies only to side-by-side scenarios. Consistent with the ABAP programming model, ISLM gives customers more control over which trained model is activated and used in production, through the additional step of activating an intelligent scenario. The inference result can be consumed through ABAP APIs for side-by-side scenarios and through CDS views for embedded scenarios. Batch inference requests can also be triggered from the business applications. Figure 14.7 depicts the architectural integration for the side-by-side scenario:

Figure 14.7: A side-by-side intelligent scenario in S/4HANA
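To make the lifecycle rules described above easier to follow, here is a minimal sketch that models them as plain Python state checks: models are trained, deployment is allowed only for side-by-side scenarios, and an explicit activation step selects the production model. The class `IntelligentScenario`, its methods, and the scenario names are hypothetical illustrations, not an SAP or ABAP API; the `infer` method merely stands in for consumption through ABAP APIs or CDS views.

```python
from enum import Enum, auto
from typing import List, Optional, Set


class ScenarioType(Enum):
    EMBEDDED = auto()      # model runs in the S/4HANA stack (e.g. HANA APL/PAL)
    SIDE_BY_SIDE = auto()  # model runs in a separate stack (e.g. SAP AI Core)


class IntelligentScenario:
    """Hypothetical model of the ISLM lifecycle rules; not an SAP API."""

    def __init__(self, name: str, scenario_type: ScenarioType) -> None:
        self.name = name
        self.scenario_type = scenario_type
        self.trained: List[str] = []
        self.deployed: Set[str] = set()
        self.active_model: Optional[str] = None

    def train(self, version: str) -> None:
        self.trained.append(version)

    def deploy(self, version: str) -> None:
        # Deployment only applies to side-by-side scenarios.
        if self.scenario_type is not ScenarioType.SIDE_BY_SIDE:
            raise ValueError("deployment applies only to side-by-side scenarios")
        if version not in self.trained:
            raise ValueError(f"model {version} has not been trained")
        self.deployed.add(version)

    def activate(self, version: str) -> None:
        # Explicit activation chooses which trained model serves production.
        if version not in self.trained:
            raise ValueError(f"model {version} has not been trained")
        if self.scenario_type is ScenarioType.SIDE_BY_SIDE and version not in self.deployed:
            raise ValueError(f"model {version} must be deployed before activation")
        self.active_model = version

    def infer(self, record: dict) -> str:
        # Stand-in for consumption via ABAP APIs (side-by-side) or CDS views (embedded).
        if self.active_model is None:
            raise RuntimeError(f"scenario {self.name} has no active model")
        return f"{self.name}/{self.active_model}: scored {len(record)} fields"


# Usage with a hypothetical scenario name
scenario = IntelligentScenario("DEMO_SIDE_BY_SIDE", ScenarioType.SIDE_BY_SIDE)
scenario.train("v1")
scenario.train("v2")
scenario.deploy("v2")
scenario.activate("v2")  # v1 stays trained but inactive
print(scenario.infer({"customer": "C1", "amount": 120.0}))
```

The activation check is kept separate from deployment on purpose: several models can be trained (and, for side-by-side scenarios, deployed), but only the one explicitly activated is used for inference.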

The focus of ISLM is to support more scenario types for the diverse needs of S/4HANA business applications and to provide enhanced capabilities for metering, model comparison, and multi-model management. In its current scope, ISLM doesn't provide data management capabilities. Depending on the scenario, it relies on HANA for embedded scenarios, and it can leverage a cloud object store or HANA data lake files for side-by-side scenarios. ISLM also integrates with SAP AI Launchpad for monitoring and metrics.

One of the key challenges in AI projects is how to handle the data – from data integration and transformation to data governance. Next, we will discuss the data architecture for AI that covers these aspects.

Data architecture for AI

Data is an essential ingredient for any AI scenario. One of the key elements for the success of an AI project is the data architecture – how to bring data together, store and process it, bring the results back, and integrate the insights and actions back into the applications. The following are some of the typical challenges of the data architecture for AI:

- Data is spread across different data sources, with different formats, systems, and both structured and unstructured data types.

- Data integration and consolidation require a common data model.

- Replicating data requires addressing data privacy and data protection concerns, as well as other compliance requirements.

- The data platform provides data for the AI execution engine and also needs to address data ingestion, data storage, and data lifecycle management.

- AI requires metadata, such as labels for supervised learning.

- AI lifecycle events can be tightly coupled with the data lifecycle, such as an inference triggered by a live data change.

- The insights and AI-based recommendations need to be served by the data platform and integrated back into the applications.

- There are performance requirements for the initial load and event-based data integration for incremental changes.

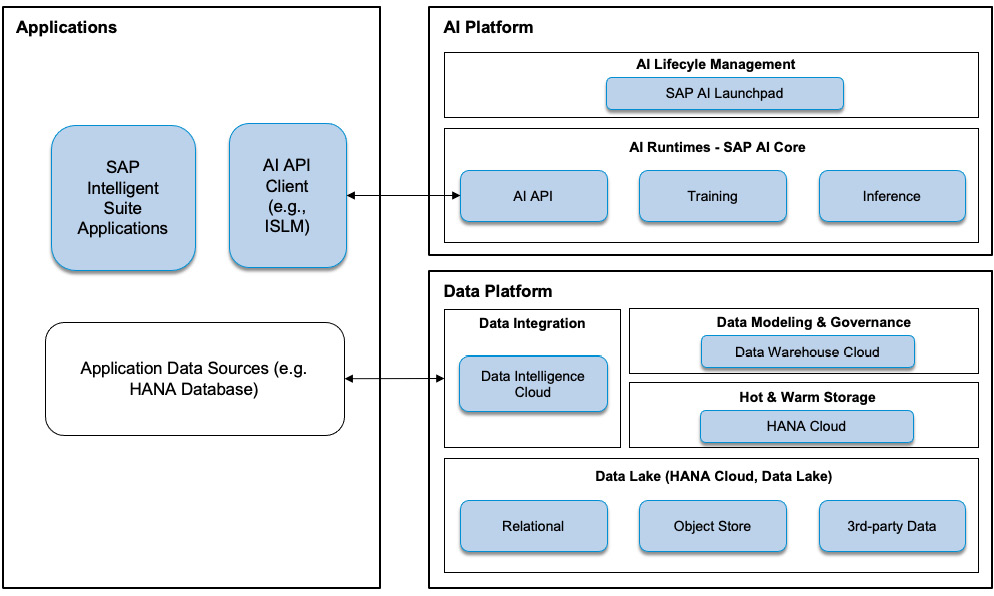

In this section, we will describe the data architecture for AI. In most cases, SAP AI Core serves as the AI execution engine, but SAP also supports AI execution engines from partners, such as the relevant services from AWS, Azure, and GCP. HANA ML, as described earlier, provides in-database AI and doesn't necessarily require an extended data architecture, so we will not discuss it further here.

The availability of data is critical for the success of AI projects, both at design time and at runtime in the production phase.

During design time, for exploration and experimentation, acquiring and accessing training data is the first important step. Data integration tools such as SAP Data Intelligence can be leveraged to extract data from source systems, in batch or through events, and to manage the lifecycle of data pipelines. Many use cases also require access to and management of third-party datasets. For that, the data architecture should be able to leverage scalable cloud storage, for example object stores such as AWS S3; AI runtimes such as SAP AI Core can work directly with object stores. In addition, content creation such as labeling can be labor-intensive and should be automated and coordinated as much as possible.

In production, the data architecture needs to support the serving, monitoring, and retention of the models. Data needs to be continuously available from various data sources, including SAP applications, for the retraining and inference processes. A frequent challenge is ensuring data governance and compliance across heterogeneous data sources, which requires a common approach to data governance and policy enforcement. It is also important to trace data lineage to understand where data comes from and how it is processed, especially when data is transported and transformed over several stages, as modern AI use cases often require.
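Tracing data lineage across several stages boils down to recording, for every derived dataset, which inputs it came from and which operation produced it, then walking that graph backward. The following is a minimal, tool-agnostic sketch of that idea; `LineageGraph`, its methods, and all dataset names are hypothetical and do not correspond to the lineage features of SAP Data Intelligence or SAP Data Warehouse Cloud.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Set


@dataclass
class LineageNode:
    name: str
    operation: str                                   # how the dataset was produced
    inputs: List[str] = field(default_factory=list)  # upstream dataset names


class LineageGraph:
    """Hypothetical lineage store: each dataset records its direct upstream inputs."""

    def __init__(self) -> None:
        self.nodes: Dict[str, LineageNode] = {}

    def record(self, name: str, operation: str, inputs: Sequence[str] = ()) -> None:
        # Require upstream datasets to be recorded first, so sources are always known.
        missing = [i for i in inputs if i not in self.nodes]
        if missing:
            raise KeyError(f"unknown upstream datasets: {missing}")
        self.nodes[name] = LineageNode(name, operation, list(inputs))

    def trace(self, name: str) -> List[str]:
        """Return all steps that produced `name`, sources first (post-order walk)."""
        steps: List[str] = []
        seen: Set[str] = set()

        def visit(node_name: str) -> None:
            if node_name in seen:
                return
            seen.add(node_name)
            node = self.nodes[node_name]
            for upstream in node.inputs:
                visit(upstream)
            steps.append(f"{node.name} <- {node.operation}")

        visit(name)
        return steps


# Usage with hypothetical dataset names
graph = LineageGraph()
graph.record("s4_sales_orders", "extracted from S/4HANA")
graph.record("crm_customers", "loaded from a third-party object store")
graph.record("orders_joined", "joined on customer ID", ["s4_sales_orders", "crm_customers"])
graph.record("orders_anonymized", "anonymized", ["orders_joined"])
graph.record("training_set_v1", "feature engineering", ["orders_anonymized"])
for step in graph.trace("training_set_v1"):
    print(step)
```

The post-order walk lists source systems first and the training dataset last, which is the order in which an auditor would typically read the processing history.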

At the same time, the data architecture must meet the non-functional requirements specific to business needs. These include, but are not limited to, multi-tenancy support to isolate different customers, the ability to monitor data quality, and the ability to ensure consistency with the training data. AI in production also requires operational readiness. For that, SAP AI Launchpad can act as the central operational tool for lifecycle management in production, supporting flexible AI runtime options including SAP AI Core.
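One common way to check that production data stays consistent with the training data is to compare the distribution of a feature in both datasets. The sketch below uses the population stability index (PSI), a generic drift metric chosen here for illustration; it is not a documented SAP AI Core or SAP AI Launchpad feature, and the equal-width binning, the `eps` smoothing value, and the sample data are assumptions.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values per bin; values outside the edges go to the outermost bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Advance while v reaches the next edge, but never past the last bin.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]  # assumes a non-empty sample


def psi(expected: Sequence[float], actual: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index of `actual` (live data) against `expected` (training data)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]  # equal-width bins from training data
    total = 0.0
    for pe, pa in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        pe, pa = max(pe, eps), max(pa, eps)  # avoid log(0) for empty bins
        total += (pa - pe) * math.log(pa / pe)
    return total


# Usage with synthetic data
training_feature = [i / 10 for i in range(100)]   # 0.0 .. 9.9
live_same = list(training_feature)
live_shifted = [5 + i / 10 for i in range(100)]   # 5.0 .. 14.9
print(psi(training_feature, live_same))
print(psi(training_feature, live_shifted) > 0.25)
```

A frequently used rule of thumb reads a PSI above roughly 0.25 as a significant shift that may warrant investigation or retraining; the right threshold depends on the use case.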

Based on the design-time needs and runtime requirements described previously, we can start to draw a data architecture covering three major components: a data platform, an AI platform, and business applications. We will describe the roles and responsibilities of each of them in detail here.

The data platform will be responsible for the following:

- Ingest and store data from SAP applications and third-party data sources

- Keep data up to date through streaming or delta loads from various data sources

- Expose data and make it consumable for AI runtimes

- Store and expose inference results and make them consumable for the applications and downstream consumers

- Store and manage the metadata such as domain models, labeling, connectivity, data cataloging, and data lineage

- Handle data privacy, data protection, and compliance requirements, such as data encryption at rest, data segregation, and audit logging

- Store the AI model and intermediate artifacts such as derived datasets and models
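Keeping data up to date through delta loads typically means applying only the changes that arrived since the last load, in source order, and remembering how far you got. The following minimal sketch illustrates that idea with a sequence-number watermark; the `ChangeRecord` structure, the `I`/`U`/`D` operation codes, and `apply_delta` are hypothetical and do not reflect the change data capture format of any specific SAP tool.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeRecord:
    key: str                        # primary key in the source system
    op: str                         # hypothetical codes: "I" insert, "U" update, "D" delete
    seq: int                        # monotonically increasing change sequence number
    payload: Optional[dict] = None  # full row image for inserts and updates


def apply_delta(target: Dict[str, dict], changes: Iterable[ChangeRecord],
                watermark: int) -> int:
    """Apply changes newer than `watermark` to `target` in order; return the new watermark."""
    for change in sorted(changes, key=lambda c: c.seq):
        if change.seq <= watermark:
            continue  # already applied by an earlier load (e.g. a replayed event)
        if change.op == "D":
            target.pop(change.key, None)
        elif change.op in ("I", "U"):
            target[change.key] = dict(change.payload or {})
        else:
            raise ValueError(f"unknown operation {change.op!r}")
        watermark = change.seq
    return watermark


# Usage: the initial load was applied up to sequence number 10
target = {
    "1000": {"customer": "C1", "status": "open"},
    "1001": {"customer": "C2", "status": "open"},
}
changes = [
    ChangeRecord("1000", "U", 11, {"customer": "C1", "status": "closed"}),
    ChangeRecord("1002", "I", 12, {"customer": "C3", "status": "open"}),
    ChangeRecord("1001", "D", 13),
    ChangeRecord("1000", "U", 9, {"customer": "C1", "status": "stale"}),  # replayed
]
watermark = apply_delta(target, changes, watermark=10)
print(sorted(target), target["1000"]["status"], watermark)
```

Persisting the returned watermark lets the next delta load skip replayed events, which keeps repeated loads idempotent.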

The AI platform will focus on the following responsibilities:

- Manage the AI scenarios and their lifecycle, such as training, deployment, and inference

- Manage the artifacts such as datasets and models as well as the intermediate artifacts

- Expose the AI lifecycle via AI APIs across different runtimes if necessary

- Provide capabilities for data anonymization, labeling, a data annotation workflow, and data quality management

While most of the heavy lifting is performed by the data platform and AI platform, the applications are still responsible for the following aspects:

- Provide and integrate data into the data platform by exposing APIs or data events

- Consume the inference results and integrate them back into the application for the end users

- Control the AI lifecycle using specific clients such as S/4HANA ISLM (optional)

This kind of data architecture is depicted in Figure 14.8, leveraging some of the offerings from SAP BTP described in this chapter and previous chapters:

Figure 14.8: Data architecture for AI

In this data architecture, as part of the data platform described earlier, SAP HANA Cloud, data lake provides cold storage and a processing layer for relational data, with object store support through SAP HANA Cloud, data lake Files. SAP HANA Cloud, data lake is a disk-based relational database optimized for OLAP workloads that integrates natively with a cloud object store through data lake Files, so that the object store can be leveraged as cheaper data storage with SQL on Files capabilities. SAP HANA Cloud, as the in-memory HTAP database, provides hot and warm storage and processing of relational and multi-model data such as spatial, document, and graph data; its multi-tier storage includes both in-memory and disk-based extended storage. SAP Data Warehouse Cloud focuses on data modeling and governance, providing the semantic layer and data access governance. In addition, SAP Data Intelligence provides the data integration and ingestion capabilities for extracting data from source systems and managing the lifecycle of data pipelines. Next, the AI platform, composed of SAP AI Core and SAP AI Launchpad, is responsible for managing AI scenarios across training, inference, monitoring, and operational readiness. Finally, applications serve as both data sources and consumers in an AI scenario.

While this data architecture illustrates how different technologies can be combined to serve AI needs, it is not required for every use case. Depending on the complexity and integration needs of your use cases, the data architecture can be dramatically simplified, and not all of the technology components mentioned will be required. For example, SAP AI Core can consume data directly from a cloud object store bucket and manage the AI lifecycle together with SAP AI Launchpad. In the simplest scenario, you may just call an API from SAP AI Business Services without having to worry about the operational complexity of AI models.

Data Privacy and Protection

Data Privacy and Protection (DPP) in the handling of customer data in AI scenarios is a critical enterprise quality that must be prioritized in the data architecture. In an ML/AI use case, data coming from different sources may contain Personally Identifiable Information (PII) and must therefore be handled properly.

When PII is involved, no human interaction with the data is allowed, whether the data is structured or unstructured. This applies to data extraction and integration, data inspection, model training, and data deletion. Concretely, the data architecture needs to consider the following concepts and mechanisms to support DPP-compliant data processing:

- Authorization management: Only authorized data can be processed, and the user's data authorization in the applications needs to be translated into data authorization on the replicated data. Authorization management is based on DPP purposes and can be achieved by passing the user's DPP context from the leading source system to the data platform. For example, a manager should only see the salary information of members of their own team.

- Data anonymization: An anonymization function translates a raw dataset into an anonymized dataset while preserving the information the AI use case needs. Anonymization can be applied in-database with HANA, or in transit as part of a data pipeline using the K-Anonymity operator of SAP Data Intelligence.

- Data access and change logging: If sensitive DPP data is stored, the data platform must provide read-access logging. Besides this, any change to personal data should be logged.

- Data deletion: Data is usually replicated to the data platform. If data is erased in the source system, for example when its retention period has expired or when the user requests deletion under the EU's General Data Protection Regulation (GDPR), the deletion must also be propagated to the replicated data in the data platform.
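To illustrate what k-anonymity means for the anonymization mechanism in the list above: a dataset is k-anonymous when every combination of quasi-identifier values (attributes that could re-identify a person when combined) occurs at least k times. The sketch below generalizes two quasi-identifiers and then checks the property. It is a self-contained illustration of the concept, not the implementation behind the SAP Data Intelligence K-Anonymity operator or HANA's in-database anonymization; the field names, generalization rules, and sample records are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def generalize(record: Dict) -> Dict:
    """Hypothetical generalization: drop the direct identifier, coarsen quasi-identifiers."""
    decade = (record["age"] // 10) * 10
    return {
        "age_range": f"{decade}-{decade + 9}",   # 34 -> "30-39"
        "zip_prefix": record["zip"][:3] + "**",  # "69190" -> "691**"
        "salary": record["salary"],              # attribute the AI use case still needs
    }


def is_k_anonymous(rows: Sequence[Dict], quasi_identifiers: Sequence[str], k: int) -> bool:
    """True if every combination of quasi-identifier values occurs at least k times."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return all(size >= k for size in groups.values())


# Usage with hypothetical records
raw: List[Dict] = [
    {"name": "employee_1", "age": 34, "zip": "69190", "salary": 70000},
    {"name": "employee_2", "age": 37, "zip": "69115", "salary": 72000},
    {"name": "employee_3", "age": 52, "zip": "10115", "salary": 90000},
    {"name": "employee_4", "age": 58, "zip": "10117", "salary": 95000},
]
anonymized = [generalize(r) for r in raw]
print(is_k_anonymous(raw, ["age", "zip"], k=2))
print(is_k_anonymous(anonymized, ["age_range", "zip_prefix"], k=2))
```

Note that k-anonymity alone does not prevent disclosure when all records in a group share the same sensitive value (here, salary); extensions such as l-diversity address that case.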

Summary

This chapter wrapped up the overall “data to value” offerings from SAP BTP. Throughout this chapter, we introduced a broader set of intelligent technologies, starting from the ML capabilities in SAP HANA through the APL and PAL. After that, we discussed the embedded ML/AI capabilities in SAP Analytics Cloud and how SAP Data Intelligence supports the orchestration and governance of AI scenarios.

We also briefly covered SAP CAI for the digital assistant experience and SAP Process Automation, which enables business users to automate repetitive tasks in workflows without depending on the IT department.

Of course, SAP AI Core and SAP AI Launchpad are the core offerings for advanced AI use cases such as deep learning, with SAP AI Core as the execution runtime and SAP AI Launchpad providing lifecycle management and monitoring of operational AI scenarios.

Lastly, data is essential for any AI scenario. In this context, we explored the typical challenges of data management for AI, the core components of a data architecture, the SAP technologies that can be used as part of it, and guidance for supporting DPP requirements.