Chapter 9

Sizing Software Deliverables

Up until this point our discussion of software cost estimating has dealt primarily with surface issues that are not highly complex. We are now beginning to deal with some of the software cost-estimating issues that are very complex indeed.

It will soon be evident why software cost-estimating tools either must be limited to a small range of software projects or else must utilize hundreds of rules and a knowledge base of thousands of projects in order to work well.

It is easier to build software estimating tools that aim at specific domains, such as those aimed only at military projects or at management information systems projects, than to build estimating tools that can work equally well with information systems, military software, systems and embedded software, commercial software, web applets, object-oriented applications, and the myriad of classes and types that comprise the overall software universe.

In this section we will begin to delve into some of the harder problems of software cost estimating that the vendors in the software cost-estimating business attempt to solve, and then we will place the solutions into our commercial software cost-estimating tools.

General Sizing Logic for Key Deliverables

One of the first and most important aspects of software cost estimating is that of sizing, or predicting the volumes of various kinds of software deliverable items. Sizing is a very complex problem for software cost estimating, but advances in sizing technology over the past 30 years have been impressive.

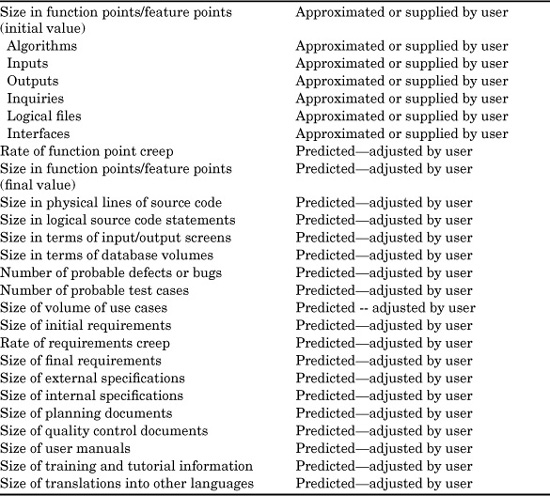

Software cost-estimating tools usually approach sizing in a sequential or cascade fashion. First, the overall size of the application is determined, using source code volumes, function point totals, use cases, stories, object points, screens, or some other metric of choice. Then the sizes for other kinds of artifacts and deliverable items are predicted based on the primary size in terms of LOC, function points, or whatever metric was selected.

TABLE 9.1 Software Artifacts for Which Size Information Is Useful

1. Use cases for software requirements

2. User stories for software requirements

3. Classes and methods for object-oriented projects

4. Function point sizes for new development

5. Function point sizes for reused material and packages

6. Function point sizes for changes and deletions

7. Function point sizes for creeping requirements

8. Function point sizes for reusable components

9. Function point sizes for object-oriented class libraries

10. Source code to be developed for applications

11. Source code to be developed for prototypes

12. Source code to be extracted from a library of reusable components

13. Source code to be updated for enhancement projects

14. Source code to be changed or removed during maintenance

15. Source code to be updated from software packages

16. Screens to be created for users

17. Screens to be reused from other applications

18. Text-based paper documents, such as requirements and specifications

19. Text-based paper documents, such as test plans and status reports

20. Percentages of text-based paper documents that are reused

21. Graphics-based paper documents, such as data flow diagrams or control flows

22. Percentages of graphics elements that are reused

23. Online HELP text

24. Graphics and illustrations

25. Multimedia materials (music and animation)

26. Defects or bugs in all deliverables

27. New test cases

28. Existing test cases from regression test libraries

29. Database contents

30. Percentage of database contents that are reused

Before discussing how sizing is performed, it is useful to consider some 30 examples of the kinds of software artifacts for which sizing may be required. The main software artifacts are shown in Table 9.1.

Although this list is fairly extensive, it covers only the more obvious software artifacts that need to be dealt with. Let us now consider the sizing implications of these various software artifacts at a somewhat more detailed level.

Sizing Methods Circa 2007

One of the major historical problems of the software engineering world has been the need to attempt to produce accurate cost estimates for software projects before the requirements are fully known.

The first step in software cost estimating requires a knowledge of the size of the application in some tangible measurement such as lines of code, function points, object points, use cases, story points, or some other alternative.

The eventual size of the application is, of course, derived from user requirements of the application. Thus sizing and understanding user requirements are essentially the same problem.

Empirical data from hundreds of measured software projects reveals that a full and perfect understanding of user requirements is not usually possible to achieve at one time. User requirements tend to unfold and grow over time. In fact, the measured rate of this growth of user requirements averages 2 percent per calendar month, from the end of the nominal “requirements phase” through the subsequent design and coding phases.

The total accumulated growth in requirements averages about 25 percent more than initially envisioned, but the maximum growth in requirements has exceeded 100 percent. That is, the final application ends up being about twice as large as initially envisioned.

Historically, the growth in requirements after the formal requirements phase has led to several different approaches for dealing with this phenomenon, such as the following:

![]() Improved methods of requirements gathering such as joint application design (JAD), where clients work side by side with designers using formal methods that are intended to gather requirements with few omissions.

Improved methods of requirements gathering such as joint application design (JAD), where clients work side by side with designers using formal methods that are intended to gather requirements with few omissions.

![]() Freezing requirements for the initial release at some arbitrary point. Additional requirements are moved into subsequent releases.

Freezing requirements for the initial release at some arbitrary point. Additional requirements are moved into subsequent releases.

![]() Including anticipated growth in the initial cost estimates. Often the first estimate will include an arbitrary “contingency factor” such as an additional 35 percent for handling unknown future requirements that occur after the estimate is produced.

Including anticipated growth in the initial cost estimates. Often the first estimate will include an arbitrary “contingency factor” such as an additional 35 percent for handling unknown future requirements that occur after the estimate is produced.

![]() Various forms of iterative development, where pieces of the final application are developed and used before starting to build the next set of features.

Various forms of iterative development, where pieces of the final application are developed and used before starting to build the next set of features.

![]() Various forms of Agile development, where development commences when only the most important and obvious features are understood. As experiences with using the first features accumulate, additional features are planned and constructed. The features for the final application may not be understood until a number of versions have been developed and used. For some of the Agile approaches, clients are part of the team and so the requirements evolve in real time.

Various forms of Agile development, where development commences when only the most important and obvious features are understood. As experiences with using the first features accumulate, additional features are planned and constructed. The features for the final application may not be understood until a number of versions have been developed and used. For some of the Agile approaches, clients are part of the team and so the requirements evolve in real time.

Software applications are a different kind of engineering problem from designing a house or a tangible object such as an automobile. Before construction starts on a house, a full set of design blueprints are developed by the architect, using inputs from the owners. It does happen that the owners may make changes afterwards, but usually the initial blueprint is more than 95 percent complete.

Unfortunately for the software world, clients usually demand cost estimates for 100 percent of the final application at a point in time where less than 50 percent of the final features are understood. A key challenge to software estimating specialists and software project managers is to find effective methods for sizing applications in the absence of full knowledge of their final requirements.

Some of the methods that have developed for producing software application size and cost estimates in the absence of full requirements include the following:

![]() Pattern matching, or using historical data from similar projects as the basis for both predicting the final size and costs of a new project.

Pattern matching, or using historical data from similar projects as the basis for both predicting the final size and costs of a new project.

![]() Using historical data on the average rate of requirements growth to predict the probable amount of growth from the time of the first estimate to the end of the project.

Using historical data on the average rate of requirements growth to predict the probable amount of growth from the time of the first estimate to the end of the project.

![]() Using various mathematical or statistical methods to attempt a final size prediction from partial requirements.

Using various mathematical or statistical methods to attempt a final size prediction from partial requirements.

![]() Using arbitrary rules of thumb to add “contingency” amounts to initial estimates to fund future requirements.

Using arbitrary rules of thumb to add “contingency” amounts to initial estimates to fund future requirements.

![]() Attempting to limit requirements growth by freezing requirements at a specific point, and deferring all additions to future versions that will have their own separate cost estimates.

Attempting to limit requirements growth by freezing requirements at a specific point, and deferring all additions to future versions that will have their own separate cost estimates.

![]() Producing formal cost estimates only for the features and requirements that are fully understood, and delaying the production of a full cost estimate until later when the requirements are finally defined.

Producing formal cost estimates only for the features and requirements that are fully understood, and delaying the production of a full cost estimate until later when the requirements are finally defined.

Let us consider the pros and cons of these six methods for dealing with sizing software applications in the absence of full requirements.

Pattern Matching from Historical Data

In 2007, about 70 percent of all software applications are repeats of legacy applications that have lived past their prime and need to be retired. However, only about 15 percent of these legacy applications have reasonably complete historical data available in terms of schedules, costs, and quality. What the legacy applications do have available is a known size. However this size data may only be available in terms of source code. Also, the programming language for the legacy software is probably not going to be the same as that of the new replacement application.

For example, if you are replacing a legacy order entry system circa 1990 with a new application, the size of the legacy application might be 5000 function points and 135,000 COBOL source statements in size.

The new version will probably include all of the existing legacy features, and some new features as well. You might also be planning to use an object-oriented method of developing the new version and the Smalltalk programming language.

You can assume that the set of features for the new application will be larger than the old but the source code volume might not be. Using the legacy application as the starting point, and some suitable conversion rules, you might predict that the new version will be about 6000 object points in size. The volume of code in the Smalltalk programming language might be about 36,000 Smalltalk statements since Smalltalk is a much more powerful language than COBOL.

As an additional assumption since you are using OO methods and code, you probably will have about 50 percent reusable code so you will only be developing about 3000 object points and 18,000 new Smalltalk statements.

Of course, this is not a perfect approach to sizing, but if you do happen to have historical size information available from one or more similar legacy applications, you can be reasonably sure that you will not under-state the size of the new application. The new application will almost certainly be somewhat larger than the old in terms of features.

If you have access to a large volume of historical data from a consulting company or benchmark group you might be able to evaluate a number of similar legacy applications. Doing this requires a benchmark database and a good taxonomy of application nature, class, scope, and type in order to ensure appropriate matches.

Pattern matching from legacy applications is the only method that can be used before requirements gathering is started. Thus, pattern matching provides the earliest chronology for creating a cost estimate that does not depend almost exclusively on guesswork.

Using Historical Data to Predict Growth in Requirements

It is a proven fact that for large software applications, the requirements are never fully defined at the end of the nominal requirements phase. It is also a proven fact that requirements typically grow at a rate of about 2 percent per calendar month assuming a standard waterfall development model and a normal requirements gathering and analysis process.

This growth continues during the subsequent design and coding phases, but stops at the testing phase. For an application with a six-month design phase and a nine-month coding phase, the cumulative growth will be about 30 percent in new features.

(However, if you are using one of the Agile methods that concentrates exclusively on the most obvious and important initial requirements, the monthly rate of growth in new requirements will be about 15 percent per calendar month. For an Agile application with a one-month design phase and four months of sprints or iterations, the cumulative growth in requirements will be about 75 percent in new features.)

Thus, historical data from past projects that show the rate at which requirements evolve and grow during the development cycle can be used to estimate the probable size of the entire application. Incidentally, predicting the growth of requirements over time is a useful adjunct to the method of “earned value” costing.

Several commercial software cost-estimating tools include features that attempt to predict the volume of growth of requirements after the requirements phase. The actual calculations, of course, are somewhat more complex than the simple examples discussed here.

Mathematical or Statistical Attempts to Extrapolate Size from Partial Requirements

Recall from Chapters 6, 7, and 8 that the taxonomy of nature, class, type, and scope places a software application squarely among similar applications. In fact, once an application has been placed in this taxonomy, simply raising the sum to the 2.35 power will yield a rough approximation of the function point total for the application as illustrated in Chapter 8. Similar rules can be applied to predict the number of object points, use case points, or other metrics.

One of the characteristics of applications that occupy the same place in a taxonomy is that such applications often have very similar distributions of function point values for the five elements: inputs, outputs, inquiries, logical files, and interfaces.

Assume that the taxonomy of your new application matches a legacy application that had 50 inputs, 75 outputs, 50 inquiries, 15 logical files, and 10 interfaces. When analyzing early requirements for the new application, you will probably start with “outputs” since that is the most common starting place for understanding user needs.

If you ascertain that your new application will have 80 outputs, then you have enough information to make a mathematical prediction of likely values for the missing data on inputs, inquiries, logical files, and interfaces. Thus, you can predict about 53 inputs, 80 outputs, 53 inquiries, 17 logical files, and 11 interfaces.

We started with knowledge only of the outputs, and used mathematical extrapolation to predict the missing values.

Similar kinds of predictions could also be made with object points or use case points. However, there is comparatively little historical data available that is expressed in terms of either object points or use case points.

Some commercial software cost-estimating tools do include extrapolation features from partial knowledge. It should be noted that there are two drawbacks to sizing using this approach: (1) you need firm knowledge of the size of at least one factor, and (2) you need access to historical data from similar projects.

Also, from time to time new applications will not actually match the volumes of inputs, outputs, inquiries, etc., from legacy or historical projects. Thus, this method occasionally will lead to erroneous sizing.

Arbitrary Rules of Thumb for Adding Contingency Factors

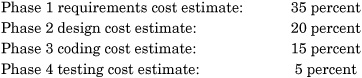

The oldest and simplest method for dealing with incomplete requirements is to add a contingency factor to each cost estimate. These contingency factors are usually expressed as simple percentages of the total cost estimate. For example, back in the 1970s IBM used the following sliding scale of contingency factors for software cost estimates:

The rationale for these contingency factors is that the extra money would be used to fund requirements that had not been understood or present at the time of each cost estimate. In other words, although expressed in terms of dollars, contingency factors are inserted to deal with the growth of unknown requirements and to handle the increase in size that these requirements will cause.

While the contingency factors were moderately successful in the 1970s, they gradually ran into technical difficulties. As typical IBM applications grew in size from less than 1000 function points in the 1970s to more than 10,000 function points in the 1990s, the contingency factors needed to be increased. This is because for large systems, less is known early and more growth occurs later on.

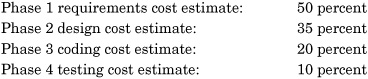

To use simple percentage contingency factors on applications in the 10,000–function point range, the values would have to approximate the following:

However, such large contingency factors are psychologically unsettling to executives and clients. They feel uncomfortable with estimates that are based on such large adjustment factors.

Freezing Requirements at Fixed Points in Time

Since the 1970s some large corporations such as AT&T, IBM, and Microsoft have had firm cut-off dates for all features that were intended to go out in a specific releases. After the initial release of a large system such as the AT&T ESS5 switching system, IBM’s MVS operating system, and Microsoft’s Windows XP operating system, future releases are planned at fixed intervals.

For example, IBM would plan a maintenance release for bug repairs six months after the initial release of an application, and then add new features 12 months after the initial release. Maintenance releases and new-feature releases would then continue to alternate on those schedules for about five calendar years. After about 36 months there would be a “mid-life kicker” or a release with quite a lot of interesting new features.

New features would be targeted for a specific release, but if there were problems that made a feature miss its planned release, it had to wait for another 12 months before going out. This sometimes led to rushing and poor quality in order to meet the deadline for an important new feature.

It also happens that for very large systems in the 10,000–function point range (equivalent to 500,000 Java statements or 1,250,000 C statements) the initial release usually contains only about 80 percent of the features that were originally intended. An analysis of large IBM applications by the author in the 1970s found that 20 percent of planned features missed the first release. However, about 30 percent of the features in the first release were not originally planned, but occurred later in the form of creeping requirements. This is a fairly typical pattern.

For large applications that are likely to last for five or ten years once deployed, having fixed release intervals is a fairly effective solution. Development teams soon become comfortable with fixed release intervals and can plan accordingly.

Also, customers or clients usually prefer a fixed release interval because it makes their maintenance and support planning easier and helps keep costs level over time. The one exception to the advantages of fixed release intervals is the case of high-severity defects, which need to be fixed and released as quickly as possible.

For example, users of Microsoft Windows receive very frequent updates from Microsoft when security breaches or other critical problems are found in Windows XP or Microsoft Office.

Producing Formal Cost Estimates Only for Subsets of the Total Application

Some of the Agile approaches attempt to avoid the problems of complete sizing and cost estimating in the absence of full requirements by producing cost estimates only for the next iteration or sprint that will be produced. The overall or final cost for the application is not attempted, on the grounds that it is probably unknowable until customers have used the early releases and decided what they want next.

This method does avoid large errors in sizing and cost estimating. But that is because the predictions of the final size and final costs are not attempted initially. Currently in 2007, this approach is used mainly for internal projects where the costs and schedule are not subject to contractual requirements. It is not currently the method of choice for fixed-price contracts or for other software applications where the total cost must be known for legal or corporate reasons.

The approach of formal estimates only for the next sprint or iteration does fit in reasonably well with the “earned value” approach, although not very many Agile projects have reported using earned value measurements to date.

However, as more and more Agile projects are completed there will be a steady accumulation of historical data. Within a few years, there should be enough information to know the total numbers of stories, story points, use cases, sprints, and other data from hundreds of Agile projects.

Within perhaps five or ten years, pattern matching approaches will begin to be widely available in an Agile context. Once there is a critical mass of completed Agile projects with historical data available, then it will be possible to use this information to predict overall sizes and costs for new applications.

This means that the Agile development teams will have to record historical information, which will add slightly to the effort for developing the applications.

The same kinds of patterns are starting to be developed for object-oriented projects. At some point in the near future, pattern matching will begin to be effective not only for the technical features of OO applications, but also for predicting costs, schedules, staffing, quality, and other business features as well.

Function Point Variations Circa 2007

Over and above the function point metric defined by IFPUG, there are close to 40 other variants that do not give the same results as the IFPUG method. Further, many of the function point variants have no published conversion rules to standard IFPUG function points or much data of any kind in print.

This means that the same application can appear to have very different sizes, based on whether the function point totals follow the IFPUG counting rules, the British Mark II counting rules, COSMIC function point counting rules, object-point counting rules, the SPR feature point counting rules, the Boeing 3D counting rules, or any of the other function point variants. Thus, application sizing and cost estimating based on function point metrics must also identify the rules and definitions of the specific form of function point being utilized.

These variants have introduced serious technical challenges into software benchmarks and economic analysis. Suppose you were a metrics consultant with a client in the telecommunications industry who wanted to know what methods and programming languages gave the best productivity for PBX switching systems. This is a fairly common kind of request.

You search various benchmark data bases and find 21 PBX switching systems that appear to be relevant to the client’s request. Now the problems start:

![]() Three of the PBXs were measured using “lines of code.” One counted physical lines, one counted logical statements, and one did not define which method was used.

Three of the PBXs were measured using “lines of code.” One counted physical lines, one counted logical statements, and one did not define which method was used.

![]() Three of the PBXs were object-oriented. One was counted using object points and two were counted with use case points.

Three of the PBXs were object-oriented. One was counted using object points and two were counted with use case points.

![]() Three of the PBXs were counted with IFPUG function points.

Three of the PBXs were counted with IFPUG function points.

![]() Three of the PBXs were counted with COSMIC function points.

Three of the PBXs were counted with COSMIC function points.

![]() Three of the PBXs were counted with NESMA function points.

Three of the PBXs were counted with NESMA function points.

![]() Three of the PBXs were counted with Feature points.

Three of the PBXs were counted with Feature points.

![]() Three of the PBXs were counted with Mark II function points

Three of the PBXs were counted with Mark II function points

As of 2007, there is no easy technical way to provide the client with an accurate answer to what is really a basic economic question. You cannot average the results of these 21 similar projects nor do any kind of useful statistical analysis because of the use of so many different metrics.

Prior to this book there have been no published conversion rules from one metric variant to another. Although this book does have some tentative conversion rules, they are not viewed by the author as being accurate enough to use for serious business purposes such as providing clients with valid comparisons between projects counted via different approaches.

In the author’s opinion, the developers of alternate function point metrics have a professional obligation to provide conversion rules from their new metrics to the older IFPUG function point metric. It is not the job of IFPUG to evaluation every new alternative.

Also, IFPUG itself introduced a major change in function point counting rules in 1994, when Version 4 of the rules was published. The Version 4 changes eliminated counts of some forms of error messages (over substantial protest, it should be noted) and, hence, reduced the counts from the prior Version 3.4 by perhaps 20 percent for projects with significant numbers of error messages.

The function point sizes in this book are based on IFPUG counts, with Version 4.1 being the most commonly used variant. However, from time to time points require that the older Version 3.4 form be used. The text will indicate which form is utilized for specific cases.

Over and above the need to be very clear as to which specific function point is being used, there are also some other issues associated with function point sizing that need to be considered.

The rules for counting function points using most of the common function point variants are rather complex. This means that attempts to count function points by untrained individuals generally lead to major errors. This is unfortunate, but is also true of almost any other significant metric.

Both the IFPUG and the equivalent organization in the United Kingdom, the United Kingdom Function Point (Mark II) Users Group, offer training and certification examinations. Other metrics organizations, such as the Australian Software Metrics Association (ASMA) and the Netherlands Software Metrics Association (NESMA) may also offer certification services. However, most of the minor function point variants have no certification examinations and have very little published data.

When reviewing data expressed in function points, it is important to know whether the published function point totals used for software cost estimates are derived from counts by certified function point counters, from attempts to create totals by untrained counters, or from four other common ways of deriving function point totals:

![]() Backfiring from source code counts, either manually or using tools such as those marketed by ViaSoft

Backfiring from source code counts, either manually or using tools such as those marketed by ViaSoft

![]() Automatic generation of function points from requirements and design, using tools

Automatic generation of function points from requirements and design, using tools

![]() Deriving function points by analogy, such as assuming that Project B will be the same size as Project A, a prior project that has a function point size of known value

Deriving function points by analogy, such as assuming that Project B will be the same size as Project A, a prior project that has a function point size of known value

![]() Counting function points using one of the many variations in functional counting methods (i.e., SPR feature points, Boeing 3D function points, COSMIC function points, Netherlands function points, etc.)

Counting function points using one of the many variations in functional counting methods (i.e., SPR feature points, Boeing 3D function points, COSMIC function points, Netherlands function points, etc.)

(Of course it is also important to know whether data expressed in lines of code is based on counts of physical lines or logical statements, just as it is important to know whether distance data expressed in miles refers to statute miles or nautical miles, or whether volumetric data expressed in terms of gallons refers to U.S. gallons or Imperial gallons.)

As a result of the lack of written information for legacy projects, the method called “backfiring,” or direct conversion from source code statements to equivalent function point totals, has become one of the most widely used methods for determining the function point totals of legacy applications. Since legacy applications far outnumber new software projects, this means that backfiring is actually the most widely used method for deriving function point totals.

Backfiring is highly automated, and a number of vendors provide tools that can convert source code statements into equivalent function point values. Backfiring is very easy to perform, so that the function point totals for applications as large as 1 million source code statements can be derived in only a few minutes of computer time.

The downside of backfiring is that it is based on highly variable relationships between source code volumes and function point totals. Although backfiring may achieve statistically useful results when averaged over hundreds of projects, it may not be accurate by even plus or minus 50 percent for any specific project. This is due to the fact that individual programming styles can create very different volumes of source code for the same feature. Controlled experiments by IBM in which eight programmers coded the same specification found variations of about 5 to 1 in the volume of source code written by the participants.

Also, backfiring results will vary widely based upon whether the starting point is a count of physical lines, or a count of logical statements. In general, starting with logical statements will give more accurate results. However, counts of logical statements are harder to find than counts of physical lines.

In spite of the uncertainty of backfiring, it is supported by more tools and is a feature of more commercial software estimating tools than any other current sizing method. The need for speed and low sizing costs explains why many of the approximation methods, such as backfiring, sizing by analogy, and automated function point derivations, are so popular: They are fast and cheap, even if they are not as accurate. It also explains why so many software-tool vendors are actively exploring automated rule-based function point sizing engines that can derive function point totals from requirements and specifications, with little or no human involvement.

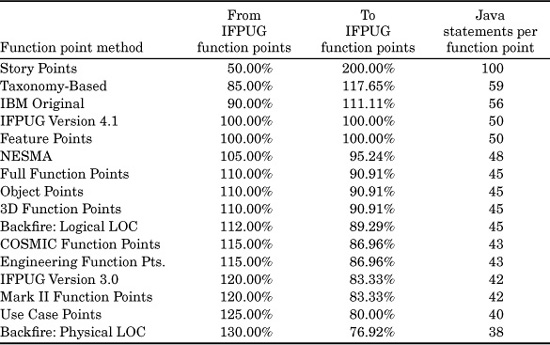

Since function point metrics have splintered in recent years, the family of possible function point variants used for estimation and measurement include at least 38 choices (see Table 9.2).

Note that this listing is not stated to be 100 percent complete. The 38 variants shown in Table 9.2 are merely the ones that have surfaced in the software measurement literature or been discussed at metrics conferences. No doubt, at least another 20 or so variants may exist that have not yet published any information or been presented at metrics conferences.

TABLE 9.2 Function Point Counting Variations Circa 2007

1. The 1975 internal IBM function point method

2. The 1979 published Albrecht IBM function point method

3. The 1982 DeMarco bang function point method

4. The 1983 Rubin/ESTIMACS function point method

5. The 1983 British Mark II function point method (Symons)

6. The 1984 revised IBM function point method

7. The 1985 SPR function point method using three adjustment factors

8. The 1985 SPR backfire function point method

9. The 1986 SPR feature point method for real-time software

10. The 1994 SPR approximation function point method

11. The 1997 SPR analogy-based function point method

12. The 1997 SPR taxonomy-based function point method

13. The 1986 IFPUG Version 1 method

14. The 1988 IFPUG Version 2 method

15. The 1990 IFPUG Version 3 method

16. The 1995 IFPUG Version 4 method

17. The 1989 Texas Instruments IEF function point method

18. The 1992 Reifer coupling of function points and Halstead metrics

19. The 1992 ViaSoft backfire function point method

20. The 1993 Gartner Group backfire function point method

21. The 1994 Boeing 3D function point method

22. The 1994 object point method

23. The 1994 Bachman Analyst function point method

24. The 1995 Compass Group backfire function point method

25. The 1995 Air Force engineering function point method

26. The 1995 Oracle function point method

27. The 1995 NESMA function point method

28. The 1995 ASMA function point method

29. The 1995 Finnish function point method

30. The 1996 CRIM micro–function point method

31. The 1996 object point method

32. The 1997 data point method for database sizing

33. The 1997 Nokia function point approach for telecommunications software

34. The 1997 full function point approach for real-time software

35. The 1997 ISO working group rules for functional sizing

36. The 1998 COSMIC function point approach

37. The 1999 story point method

38. The 2003 use case point method

With some reluctance, the author is providing a table of conversion factors between some of the more common function point variants and standard IFPUG function points (see Table 9.3). The accuracy of the conversion ratios is questionable in 2007. Hopefully, even the publication of incorrect conversion rules will lead to refinements and more accurate rules in the future. This is an area that needs a great deal of research.

TABLE 9.3 Approximate Conversion Ratios to IFPUG Function Points (Assumes Version 4.1 of the IFPUG Counting Rules)

Table 9.3 uses IFPUG 4.1 as its base. If you want to convert 100 IFPUG function points to COSMIC function points, use the “From IFPUG...” column. The result would be about 115 COSMIC function points. Going the other way, if you start with 100 COSMIC function points and want to convert to IFPUG, use the “To IFPUG...” column. The result would be about 87 IFPUG function points.

If you want to perform conversions among the other metrics, you will need to do a double conversion. For example, if you want to convert use case points into COSMIC function points, you will have to convert both values into IFPUG first. If you start with 100 use case points, that is equal to about 80 IFPUG function points. Then you would use the “From IFPUG...” value of 115 percent for COSMIC, and the result would be about 92 COSMIC function points.

The software industry has long been criticized for lacking good historical data and for inaccurate sizing and estimating of many applications. Unfortunately, the existence of so many different metrics is exacerbating an already difficult challenge for software estimators.

Reasons Cited for Creating Function Point Variations

The reasons why there are at least 38 variations in counting function points deserve some research and discussion. First, it was proven long ago in the 1970s that the lines-of-code-metrics cannot measure software productivity in an economic sense and is harmful for activity-based cost analysis. Therefore, there is a strong incentive to adopt some form of functional metric because the older LOC method has been proven to be unreliable.

However, the mushrooming growth of function point variations can be traced to other causes. From meetings and discussions with the developers of many function point variants, the following reasons have been noted as to why variations have been created.

First, a significant number of variations were created due to a misinterpretation of the nature of function point metrics. Because the original IBM function points were first applied to information systems, a belief grew up that standard function points don’t work for real-time and embedded software. This belief is caused by the fact that productivity rates for real-time and embedded software are usually well below the rates for information systems of the same size measured with function points.

Almost all of the function point variants yield larger counts for real-time and embedded software than do standard IFPUG function points.

The main factors identified as differentiating embedded and real-time software from information systems applications include the following:

![]() Embedded software is high in algorithmic complexity.

Embedded software is high in algorithmic complexity.

![]() Embedded software is often limited in logical files.

Embedded software is often limited in logical files.

![]() Embedded software’s inputs and outputs may be electronic signals.

Embedded software’s inputs and outputs may be electronic signals.

![]() Embedded software’s interfaces may be electronic signals.

Embedded software’s interfaces may be electronic signals.

![]() The user view for embedded software may not reflect human users.

The user view for embedded software may not reflect human users.

These differences are great enough that the real-time and systems community has been motivated to create a number of function point variations that give more weight to algorithms, give less weight to logical files, and expand the concept of inputs and outputs to deal with electronic signals and sensor-based data rather than human-oriented inputs and outputs, such as forms and screens. Are these alternative function point methods really useful? There is no definitive answer, but from the point of view of benchmarking and international comparisons they have caused more harm than they have created value.

Another phenomenon noted when exploring function point variations is the fact that many function point variants are aligned to national borders. The IFPUG is headquartered in the United States, and most of the current officers and committee chairs are U.S. citizens.

There is a widespread feeling in Europe and elsewhere that in spite of the association having international in its name, IFPUG is dominated by the United States and may not properly reflect the interests of Europe, South America, the Pacific Rim, or Australia. Therefore, some of the function point variants are more or less bounded by national borders, such as the Netherlands function point method and the older British Mark II function point method.

If function points are to remain viable into the next century, it is urgent to focus energies on perfecting one primary form of functional metric rather than dissipating energies into the creation of scores of minor function point variants, many of which have no published data and have only a handful of users.

Even worse, while many months of effort have been spent developing 38 function point variants, some major measurement and metrics issues are almost totally unexamined. As will be pointed out later in this chapter, the software engineering community lags physics and engineering in understanding and measuring complexity. Also, there are no effective measurements for database volumes or database quality. There are no effective measurements or metrics that can deal with intangible value. There are no good measurements or metrics for customer service. It would be far more useful for software engineering if metrics research began to concentrate on important topics that are beyond the current measurement state of the art, rather than dissipating energies on scores of minor function point variants.

Regardless of the reasons, the existence of so many variations in counting function points is damaging to the overall software metrics community and is not really advancing the state of the art of software measurement.

As the situation currently stands, the overall range of apparent function point counts for the same application can vary by perhaps two to one, based on which specific varieties of function point metrics are utilized. This situation obviously requires that the specific form of function point be recorded in order for the size information to have any value.

Although the large range of metric choices all using the name “function points” is a troublesome situation, it is not unique to software. Other measurements outside the software arena also have multiple choices for metrics that use the same name. For example, it is necessary to know whether statute miles or nautical miles are being used; whether American dollars, Australian dollars, or Canadian dollars are being used; and whether American gallons or Imperial gallons are being used. It is also necessary to know whether temperatures are being measured in Celsius or Fahrenheit degrees. There are also three ways of calculating the octane rating of fuel, and several competing methods for calculating fuel efficiency or miles per gallon. There are even multiple ways of calculating birthdays.

However, the explosion of the function point metric into 38 or so competitive claimants must be viewed as an excessive number of choices. Hopefully, the situation will not reach the point seen among programming languages, where 600 or more languages are competing for market share.

Volume of Function Point Data Available

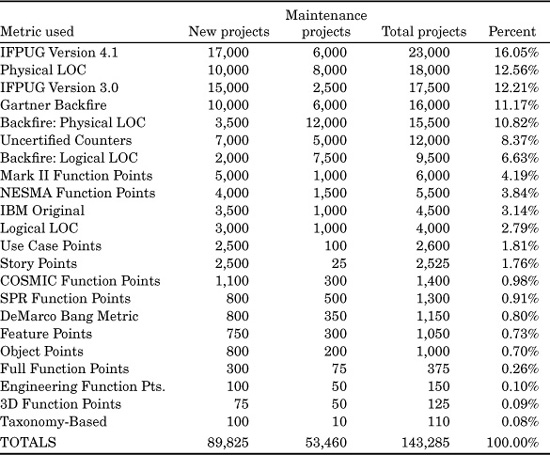

The next table is an attempt to quantify the approximate number of software projects that have been measured using various forms of metrics. IFPUG Version 4.1 is in the majority, but due to the global preponderance of aging legacy applications, various forms of backfiring appear to be the major sources of global function point data for maintenance projects.

The information in Table 9.4 is derived from discussions with various benchmarking companies, from the software metrics literature, and from informal discussions with function point users and developers during the course of software assessment and benchmarking studies. The table has a high margin of error and is simply a rough attempt to evaluate the size of the universe of function point data and lines of code data when all major variations are included.

TABLE 9.4: Approximate Numbers of Projects Measured

Because aging legacy applications comprise the bulk of all software projects in the world, the various forms of backfiring, or direct conversion between source code statements and function points, is the most widely utilized method for enumerating function points, especially for legacy applications. All of the major software benchmarking companies (e.g., Davids, Gartner Group, SPR, etc.) utilize backfiring for their client studies and, hence, have substantial data derived from backfiring.

Curiously, none of the major function point groups, such as IFPUG, the United Kingdom Function Point Users Group, or the Netherlands Software Metrics Association, have attempted any formal studies of backfiring, or even made any visible contribution to this popular technology. The great majority of reports and data on backfiring come from the commercial benchmark consulting companies, such as the David’s Consulting Company, Gartner Group, Rubin Systems, and Software Productivity Research.

As stated, the margin of error with Table 9.4 is very high, and the information is derived from informal surveys at various function point events in the United States, Europe, and the Pacific Rim. However, there seems to be no other source of this kind of information on the distribution of software projects among the various forms of function point analysis.

It is also curious that none of the function point user associations, such as IFPUG or the United Kingdom Function Point Users Group, have attempted to quantify the world number of projects measured using function points. IFPUG has attempted some benchmarking work, but only in the context of projects measured using the IFPUG Version 4 counting rules.

Unfortunately, the major function point associations, such as IFPUG in the United States, the British Mark II users group, NESMA, ASMA, and others, tend to view each other as political rivals and, hence, ignore one another’s data or sometimes even actively disparage one another.

Consider yet another issue associated with function point metrics. The minimum weighting factors assigned to standard IFPUG function points have a lower limit or cut-off point. These limits mean that the smallest practical project where such common function point metrics as IFPUG and Mark II can be used is in the vicinity of 10 to 15 function points. Below that size, the weighting factors tend to negate the use of the function point metric.

Because of the large number of small maintenance and enhancement projects, there is a need for some kind of micro–function point that can be used in the zone that runs from a fraction of a single function point up to the current minimum level where normal function points apply.

Since it is the weighting factors that cause the problem with small projects, one obvious approach would be to use unadjusted function point counts for small projects without applying any weights at all. However, this experimental solution would necessitate changes in the logic of software estimating tools to accommodate this variation.

The huge numbers of possible methods for counting function points are very troublesome for software cost-estimating tool vendors and for all those who build metrics tools and software project management tools. None of us can support all 38 variations in function point counting, so most of us support only a subset of the major methods.

Software Complexity Analysis

The topic of complexity is very important for software cost estimation because it affects a number of independent and dependent variables, such as the following:

![]() High complexity levels can increase bug or defect rates.

High complexity levels can increase bug or defect rates.

![]() High complexity levels can lower defect-removal efficiency rates.

High complexity levels can lower defect-removal efficiency rates.

![]() High complexity levels can decrease development productivity rates.

High complexity levels can decrease development productivity rates.

![]() High complexity levels can raise maintenance staffing needs.

High complexity levels can raise maintenance staffing needs.

![]() High complexity levels can lengthen development schedules.

High complexity levels can lengthen development schedules.

![]() High complexity levels increase the number of test cases needed.

High complexity levels increase the number of test cases needed.

![]() High complexity levels affect the size of the software application.

High complexity levels affect the size of the software application.

![]() High complexity levels change backfiring ratios.

High complexity levels change backfiring ratios.

Unfortunately, the concept of complexity is an ambiguous topic that has no exact definition agreed upon by all software researchers. When we speak of complexity in a software context, we can be discussing the difficulty of the problem that the software application will attempt to implement, the structure of the code, or the relationships among the data items that will be used by the application. In other words, the term complexity can be used in a general way to discuss problem complexity, code complexity, and data complexity.

The scientific and engineering literature encompasses no fewer than 30 different flavors of complexity, some or all of which may be found to be relevant for software applications. Unfortunately, most of the forms of scientific complexity are not even utilized in a software context. The software engineering community is far behind physics and other forms of engineering in measuring and understanding complexity.

In a very large book on software complexity, Dr. Horst Zuse (Software Complexity: Measures and Methods; Walter de Gruyter, Berlin 1990) discusses about 50 variants of structural complexity for programming alone. It is perhaps because of the European origin—functional metrics are not as dominant there as in the United States—but in spite of the book’s large size and fairly complete treatment, Zuse seems to omit all references to function point metrics and to the forms of complexity associated with functional metrics.

When software sizing and estimating tools utilize complexity as an adjustment factor, the methods tend to be highly subjective. Some of the varieties of complexity encountered in the scientific literature that show up in a software context include the following:

![]() Algorithmic complexity concerns the length and structure of the algorithms for computable problems. Software applications with long and convoluted algorithms are difficult to design, to inspect, to code, to prove, to debug, and to test. Although algorithmic complexity affects quality, development productivity, and maintenance productivity, it is utilized as an explicit factor by only a few software cost-estimating tools.

Algorithmic complexity concerns the length and structure of the algorithms for computable problems. Software applications with long and convoluted algorithms are difficult to design, to inspect, to code, to prove, to debug, and to test. Although algorithmic complexity affects quality, development productivity, and maintenance productivity, it is utilized as an explicit factor by only a few software cost-estimating tools.

![]() Code complexity concerns the subjective views of development and maintenance personnel about whether the code they are responsible for is complex or not. Interviewing software personnel and collecting their subjective opinions is an important step in calibrating more formal complexity metrics, such as cyclomatic and essential complexity. A number of software estimating tools have methods for entering and adjusting code complexity based on ranking tables that run from high to low complexity in the subjective view of the developers.

Code complexity concerns the subjective views of development and maintenance personnel about whether the code they are responsible for is complex or not. Interviewing software personnel and collecting their subjective opinions is an important step in calibrating more formal complexity metrics, such as cyclomatic and essential complexity. A number of software estimating tools have methods for entering and adjusting code complexity based on ranking tables that run from high to low complexity in the subjective view of the developers.

![]() Combinatorial complexity concerns the numbers of subsets and sets that can be constructed out of N components. This concept sometimes shows up in the way that modules and components of software applications might be structured. From a psychological vantage point, combinatorial complexity is a key reason why some problems seem harder to solve than others. However, this form of complexity is not utilized as an explicit estimating parameter.

Combinatorial complexity concerns the numbers of subsets and sets that can be constructed out of N components. This concept sometimes shows up in the way that modules and components of software applications might be structured. From a psychological vantage point, combinatorial complexity is a key reason why some problems seem harder to solve than others. However, this form of complexity is not utilized as an explicit estimating parameter.

![]() Computational complexity concerns the amount of machine time and the number of iterations required to execute an algorithm. Some problems are so high in computational complexity that they are considered to be noncomputable. Other problems are solvable but require enormous quantities of machine time, such as cryptanalysis or meteorological analysis of weather patterns. Computational complexity is sometimes used for evaluating the performance implications of software applications, but not the difficulty of building or maintaining them.

Computational complexity concerns the amount of machine time and the number of iterations required to execute an algorithm. Some problems are so high in computational complexity that they are considered to be noncomputable. Other problems are solvable but require enormous quantities of machine time, such as cryptanalysis or meteorological analysis of weather patterns. Computational complexity is sometimes used for evaluating the performance implications of software applications, but not the difficulty of building or maintaining them.

![]() Cyclomatic complexity is derived from graph theory and was made popular for software by Dr. Tom McCabe (IEEE Transactions on Software Engineering, Vol SE2, No. 4 1976). Cyclomatic complexity is a measure of the control flow of a graph of the structure of a piece of software. The general formula for calculating cyclomatic complexity of a control flow graph is edges − nodes + unconnected parts × 2. Software with no branches has a cyclomatic complexity level of 1. As branches increase in number, cyclomatic complexity levels also rise. Above a cyclomatic complexity level of 20, path flow testing becomes difficult and, for higher levels, probably impossible.

Cyclomatic complexity is derived from graph theory and was made popular for software by Dr. Tom McCabe (IEEE Transactions on Software Engineering, Vol SE2, No. 4 1976). Cyclomatic complexity is a measure of the control flow of a graph of the structure of a piece of software. The general formula for calculating cyclomatic complexity of a control flow graph is edges − nodes + unconnected parts × 2. Software with no branches has a cyclomatic complexity level of 1. As branches increase in number, cyclomatic complexity levels also rise. Above a cyclomatic complexity level of 20, path flow testing becomes difficult and, for higher levels, probably impossible.

Cyclomatic complexity is often used as a warning indicator for potential quality problems. Cyclomatic complexity is the most common form of complexity analysis for software projects and the only one with an extensive literature. At least 20 tools can measure cyclomatic complexity, and these tools range from freeware to commercial products. Such tools support many programming languages and operate on a variety of platforms.

![]() Data complexity deals with the number of attributes associated with entities. For example, some of the attributes that might be associated with a human being in a typical medical office database of patient records could include date of birth, sex, marital status, children, brothers and sisters, height, weight, missing limbs, and many others. Data complexity is a key factor in dealing with data quality. Unfortunately, there is no metric for evaluating data complexity, so only subjective ranges are used for estimating purposes.

Data complexity deals with the number of attributes associated with entities. For example, some of the attributes that might be associated with a human being in a typical medical office database of patient records could include date of birth, sex, marital status, children, brothers and sisters, height, weight, missing limbs, and many others. Data complexity is a key factor in dealing with data quality. Unfortunately, there is no metric for evaluating data complexity, so only subjective ranges are used for estimating purposes.

![]() Diagnostic complexity is derived from medical practice, where it deals with the combinations of symptoms (temperature, blood pressure, lesions, etc.) needed to identify an illness unambiguously. For example, for many years it was not easy to tell whether a patient had tuberculosis or histoplasmosis because the superficial symptoms were essentially the same. For software, diagnostic complexity comes into play when customers report defects and the vendor tries to isolate the relevant symptoms and figure out what is really wrong. However, diagnostic complexity is not used as an estimating parameter for software projects.

Diagnostic complexity is derived from medical practice, where it deals with the combinations of symptoms (temperature, blood pressure, lesions, etc.) needed to identify an illness unambiguously. For example, for many years it was not easy to tell whether a patient had tuberculosis or histoplasmosis because the superficial symptoms were essentially the same. For software, diagnostic complexity comes into play when customers report defects and the vendor tries to isolate the relevant symptoms and figure out what is really wrong. However, diagnostic complexity is not used as an estimating parameter for software projects.

![]() Entropic complexity is the state of disorder of the component parts of a system. Entropy is an important concept because all known systems have an increase in entropy over time. That is, disorder gradually increases. This phenomenon has been observed to occur with software projects because many small changes over time gradually erode the original structure. Long-range studies of software projects in maintenance mode attempt to measure the rate at which entropy increases and determine whether it can be reversed by such approaches as code restructuring. Surrogate metrics for evaluating entropic complexity are the rates at which cyclomatic and essential complexity change over time, such as on an annual basis. However, there are no direct measures for software entropy.

Entropic complexity is the state of disorder of the component parts of a system. Entropy is an important concept because all known systems have an increase in entropy over time. That is, disorder gradually increases. This phenomenon has been observed to occur with software projects because many small changes over time gradually erode the original structure. Long-range studies of software projects in maintenance mode attempt to measure the rate at which entropy increases and determine whether it can be reversed by such approaches as code restructuring. Surrogate metrics for evaluating entropic complexity are the rates at which cyclomatic and essential complexity change over time, such as on an annual basis. However, there are no direct measures for software entropy.

![]() Essential complexity is also derived from graph theory and was made popular by Dr. Tom McCabe (IEEE Transactions on Software Engineering, Vol SE2, No. 4 1976). The essential complexity of a piece of software is derived from cyclomatic complexity after the graph of the application has been simplified by removing redundant paths. Essential complexity is often used as a warning indicator for potential quality problems. As with cyclomatic complexity, a module with no branches at all has an essential complexity level of 1. As unique branching sequences increase in number, both cyclomatic and essential complexity levels will rise. Essential complexity and cyclomatic complexity are supported by a variety of software tools.

Essential complexity is also derived from graph theory and was made popular by Dr. Tom McCabe (IEEE Transactions on Software Engineering, Vol SE2, No. 4 1976). The essential complexity of a piece of software is derived from cyclomatic complexity after the graph of the application has been simplified by removing redundant paths. Essential complexity is often used as a warning indicator for potential quality problems. As with cyclomatic complexity, a module with no branches at all has an essential complexity level of 1. As unique branching sequences increase in number, both cyclomatic and essential complexity levels will rise. Essential complexity and cyclomatic complexity are supported by a variety of software tools.

![]() Fan complexity refers to the number of times a software module is called (termed fan in) or the number of modules that it calls (termed fan out). Modules with a large fan-in number are obviously critical in terms of software quality, since they are called by many other modules. However, modules with a large fan-out number are also important, and they are hard to debug because they depend upon so many extraneous modules. Fan complexity is relevant to exploration of reuse potentials. Fan complexity is not used as an explicit estimating parameter, although in real life this form of complexity appears to exert a significant impact on software quality.

Fan complexity refers to the number of times a software module is called (termed fan in) or the number of modules that it calls (termed fan out). Modules with a large fan-in number are obviously critical in terms of software quality, since they are called by many other modules. However, modules with a large fan-out number are also important, and they are hard to debug because they depend upon so many extraneous modules. Fan complexity is relevant to exploration of reuse potentials. Fan complexity is not used as an explicit estimating parameter, although in real life this form of complexity appears to exert a significant impact on software quality.

![]() Flow complexity is a major topic in the studies of fluid dynamics and meteorology. It deals with the turbulence of fluids moving through channels and across obstacles. A new subdomain of mathematical physics called chaos theory has elevated the importance of flow complexity for dealing with physical problems. Many of the concepts, including chaos theory itself, appear relevant to software and are starting to be explored. However, the application of flow complexity to software is still highly experimental.

Flow complexity is a major topic in the studies of fluid dynamics and meteorology. It deals with the turbulence of fluids moving through channels and across obstacles. A new subdomain of mathematical physics called chaos theory has elevated the importance of flow complexity for dealing with physical problems. Many of the concepts, including chaos theory itself, appear relevant to software and are starting to be explored. However, the application of flow complexity to software is still highly experimental.

![]() Function point complexity refers to the set of adjustment factors needed to calculate the final adjusted function point total of a software project. Standard U.S. function points as defined by the IFPUG have 14 complexity adjustment factors. The British Mark II function point uses 19 complexity adjustment factors. The SPR function point and feature point metrics use three complexity adjustment factors. Function point complexity is usually calculated by reference to tables of known values, many of which are automated and are present in software estimating tools or function point analysis tools.

Function point complexity refers to the set of adjustment factors needed to calculate the final adjusted function point total of a software project. Standard U.S. function points as defined by the IFPUG have 14 complexity adjustment factors. The British Mark II function point uses 19 complexity adjustment factors. The SPR function point and feature point metrics use three complexity adjustment factors. Function point complexity is usually calculated by reference to tables of known values, many of which are automated and are present in software estimating tools or function point analysis tools.

![]() Graph complexity is derived from graph theory and deals with the numbers of edges and nodes on graphs created for various purposes. The concept is significant for software because it is part of the analysis of cyclomatic and essential complexity, and also is part of the operation of several source code restructuring tools. Although derivative metrics, such as cyclomatic and essential complexity, are used in software estimating, graph theory itself is not utilized.

Graph complexity is derived from graph theory and deals with the numbers of edges and nodes on graphs created for various purposes. The concept is significant for software because it is part of the analysis of cyclomatic and essential complexity, and also is part of the operation of several source code restructuring tools. Although derivative metrics, such as cyclomatic and essential complexity, are used in software estimating, graph theory itself is not utilized.

![]() Halstead complexity is derived from the software-science research carried out by the late Dr. Maurice Halstead (Elements of Software Science, Elsevier North Holland, New York, 1977) and his colleagues and students at Purdue University. The Halstead software science treatment of complexity is based on four discrete units: (1) number of unique operators (i.e., verbs), (2) number of unique operands (i.e., nouns), (3) instances of operator occurrences, and (4) instances of operand occurrences.

Halstead complexity is derived from the software-science research carried out by the late Dr. Maurice Halstead (Elements of Software Science, Elsevier North Holland, New York, 1977) and his colleagues and students at Purdue University. The Halstead software science treatment of complexity is based on four discrete units: (1) number of unique operators (i.e., verbs), (2) number of unique operands (i.e., nouns), (3) instances of operator occurrences, and (4) instances of operand occurrences.

The Halstead work overlaps linguistic research, in that it seeks to enumerate such concepts as the vocabulary of a software project. Although the Halstead software-science metrics are supported in some software cost-estimating tools, there is very little recent literature on this topic.

![]() Information complexity is concerned with the numbers of entities and the relationships between them that might be found in a database, data repository, or data warehouse. Informational complexity is also associated with research on data quality. Unfortunately, all forms of research into database sizes and database quality are handicapped by the lack of metrics for dealing with data size, or for quantifying the forms of complexity that are likely to be troublesome in a database context.

Information complexity is concerned with the numbers of entities and the relationships between them that might be found in a database, data repository, or data warehouse. Informational complexity is also associated with research on data quality. Unfortunately, all forms of research into database sizes and database quality are handicapped by the lack of metrics for dealing with data size, or for quantifying the forms of complexity that are likely to be troublesome in a database context.

![]() Logical complexity is important for both software and circuit design. It is based upon the combinations of AND, OR, NOR, and NAND logic conditions that are concatenated together. This form of complexity is significant for expressing algorithms and for proofs of correctness. However, logical complexity is utilized as an explicit estimating parameter in only a few software cost-estimating tools.

Logical complexity is important for both software and circuit design. It is based upon the combinations of AND, OR, NOR, and NAND logic conditions that are concatenated together. This form of complexity is significant for expressing algorithms and for proofs of correctness. However, logical complexity is utilized as an explicit estimating parameter in only a few software cost-estimating tools.

![]() Mnemonic complexity is derived from cognitive psychology and deals with the ease or difficulty of memorization. It is well known that the human mind has both temporary and permanent memory. Some kinds of information (i.e., names and telephone numbers) are held in temporary memory and require conscious effort to be moved into permanent memory. Other kinds of information (i.e., smells and faces) go directly to permanent memory.

Mnemonic complexity is derived from cognitive psychology and deals with the ease or difficulty of memorization. It is well known that the human mind has both temporary and permanent memory. Some kinds of information (i.e., names and telephone numbers) are held in temporary memory and require conscious effort to be moved into permanent memory. Other kinds of information (i.e., smells and faces) go directly to permanent memory.

Mnemonic complexity is important for software debugging and during design and code inspections. Many procedural programming languages have symbolic conventions that are very difficult to either scan or debug because they oversaturate human temporary memory. Things such as nested loops that use multiple levels of parentheses—that is, (((...)))—tend to swamp human temporary memory capacity.

Mnemonic complexity appears to be a factor in learning and using programming languages, and is also associated with defect rates in various languages. However, little information is available on this potentially important topic in a software context, and it is not used as an explicit software estimating parameter.

![]() Organizational complexity deals with the way human beings in corporations arrange themselves into hierarchical groups or matrix organizations. This topic might be assumed to have only an indirect bearing on software, except for the fact that many large software projects are decomposed into components that fit the current organizational structure rather than the technical needs of the project. For example, many large software projects are decomposed into segments that can be handled by eight-person departments, whether or not that approach meets the needs of the system’s architecture.

Organizational complexity deals with the way human beings in corporations arrange themselves into hierarchical groups or matrix organizations. This topic might be assumed to have only an indirect bearing on software, except for the fact that many large software projects are decomposed into components that fit the current organizational structure rather than the technical needs of the project. For example, many large software projects are decomposed into segments that can be handled by eight-person departments, whether or not that approach meets the needs of the system’s architecture.

Although organizational complexity is seldom utilized as an explicit estimating parameter, it is known that large software projects that are well organized will outperform similar projects with poor organizational structures.

![]() Perceptional complexity is derived from cognitive psychology and deals with the arrangements of edges and surfaces that appear to be simple or complex to human observers. For example, regular patterns appear to be simple while random arrangements appear to be complex. This topic is important for studies of visualization, software design methods, and evaluation of screen readability. Unfortunately, the important topic of the perceptional complexity of various software design graphics has only a few citations in the literature, and none in the cost-estimating literature.

Perceptional complexity is derived from cognitive psychology and deals with the arrangements of edges and surfaces that appear to be simple or complex to human observers. For example, regular patterns appear to be simple while random arrangements appear to be complex. This topic is important for studies of visualization, software design methods, and evaluation of screen readability. Unfortunately, the important topic of the perceptional complexity of various software design graphics has only a few citations in the literature, and none in the cost-estimating literature.

![]() Problem complexity concerns the subjective views of people asked to solve various kinds of problems about their difficulty. Psychologists know that increasing the number of variables and the length of the chain of deductive reasoning usually brings about an increase in the subjective view that the problem is complex. Inductive reasoning also adds to the perception of complexity. In a software context, problem complexity is concerned with the algorithms that will become part of a program or system. Determining the subjective opinions of real people is a necessary step in calibrating more objective complexity measures.

Problem complexity concerns the subjective views of people asked to solve various kinds of problems about their difficulty. Psychologists know that increasing the number of variables and the length of the chain of deductive reasoning usually brings about an increase in the subjective view that the problem is complex. Inductive reasoning also adds to the perception of complexity. In a software context, problem complexity is concerned with the algorithms that will become part of a program or system. Determining the subjective opinions of real people is a necessary step in calibrating more objective complexity measures.

![]() Process complexity is mathematically related to flow complexity, but in day-to-day software work it is concerned with the flow of materials through a software development cycle. This aspect of complexity is often dealt with in a practical way by project management tools that can calculate critical paths and program evaluation and review technique (PERT) diagrams of software development processes.

Process complexity is mathematically related to flow complexity, but in day-to-day software work it is concerned with the flow of materials through a software development cycle. This aspect of complexity is often dealt with in a practical way by project management tools that can calculate critical paths and program evaluation and review technique (PERT) diagrams of software development processes.

![]() Semantic complexity is derived from the study of linguistics and is concerned with ambiguities in the definitions of terms. Already cited in this book are the very ambiguous terms quality, data, and complexity. The topic of semantic complexity is relevant to software for a surprising reason: Many lawsuits between software developers and their clients can be traced back to the semantic complexity of the contract when both sides claim different interpretations of the same clauses. Semantic complexity is not used as a formal estimating parameter.

Semantic complexity is derived from the study of linguistics and is concerned with ambiguities in the definitions of terms. Already cited in this book are the very ambiguous terms quality, data, and complexity. The topic of semantic complexity is relevant to software for a surprising reason: Many lawsuits between software developers and their clients can be traced back to the semantic complexity of the contract when both sides claim different interpretations of the same clauses. Semantic complexity is not used as a formal estimating parameter.

![]() Syntactic complexity is also derived from linguistics and deals with the grammatical structure and lengths of prose sections, such as sentences and paragraphs. A variety of commercial software tools are available for measuring syntactic complexity, using such metrics as the FOG index. (Unfortunately, these tools are seldom applied to software specifications, although they would appear to be valuable for that purpose.)

Syntactic complexity is also derived from linguistics and deals with the grammatical structure and lengths of prose sections, such as sentences and paragraphs. A variety of commercial software tools are available for measuring syntactic complexity, using such metrics as the FOG index. (Unfortunately, these tools are seldom applied to software specifications, although they would appear to be valuable for that purpose.)

![]() Topologic complexity deals with rotation and folding patterns. This topic is often explored by mathematicians, but it also has relevance for software. For example, topological complexity is a factor in some of the commercial source code restructuring tools.

Topologic complexity deals with rotation and folding patterns. This topic is often explored by mathematicians, but it also has relevance for software. For example, topological complexity is a factor in some of the commercial source code restructuring tools.