Chapter 18

Estimating Code Inspections

The topic of formal code inspections has a continuous stream of empirical data that runs back to the early 1970s. Formal code inspections were originally developed at the IBM Kingston programming laboratory by Michael Fagan and his colleagues, and have since spread throughout the programming world.

(It is interesting that Michael Fagan, the inventor of inspections, received an IBM outstanding contribution award for the discovery that design and code inspections benefit software quality, schedules, and costs simultaneously.)

Code Inspection Literature

Other researchers, such as Tom Gilb, Dr. Gerald Weinberg, and the author of this book, have followed the use of inspections in recent years, and the method is still the top-ranked method for achieving high levels of overall defect-removal efficiency.

Even in 2007 inspections continue to attract interest and research. One interesting new book is Peer Reviews in Software by Karl Wiegers (Addison-Wesley, 2002). Another interesting and useful book is High-Quality Low-Cost Software Inspections by Ron Radice (Paradoxicon Publishing, 2002). Ron was a colleague of Michael Fagan at IBM Kingston when the first design and code inspections were being tried out. Thus, Ron has been an active participant in formal inspections for almost 35 years.

An even newer book that discusses not only inspections per se, but also their economic value and the measurement approaches for collecting inspection data is Stephen Kan’s Metrics and Models in Software Quality Engineering (Addison-Wesley, 2003). This is the second edition of Kan’s popular book.

Roger Pressman’s book Software Engineering—A Practitioner’s Approach (McGraw-Hill, 2005) also discusses various forms of defect removal. The sixth edition of this book includes new chapters on Agile development, extreme programming, and several other of the newer methods. It provides an excellent context for the role of quality in all of the major development approaches.

It should be noted that code inspections work well on essentially every known programming language. They have been successfully utilized on APL, assembly language, Basic, C, C++, HTML, Java, Smalltalk, and many others. Inspections can be a bit tricky, however, on languages where “programming” can be performed via buttons or pull-down menus, such as those used in Visual Basic.

Effectiveness of Code Inspections

Formal code inspections are about twice as efficient as any known form of testing in finding deep and obscure programming bugs, and are the only known method to top 85 percent in defect-removal efficiency.

However, formal code inspections are fairly expensive and time-consuming, so they are most widely utilized on software projects where operational reliability and safety are mandatory, such as the following:

Mainframe operating systems

Telephone switching systems

Aircraft flight control software

Medical instrument software

Weapons systems software

It is an interesting observation, with solid empirical data to back it up, that large and complex systems (>1000 function points or >100,000 source code statements) that utilize formal code inspections will achieve shorter schedules and lower development and maintenance costs than similar software projects that use only testing for defect removal. Indeed, the use of formal inspections has represented a best practice for complex systems software for more than 35 years.

The reason for this phenomenon is that for complex software applications, finding and fixing bugs takes more schedule time, effort, and money than any other known cost factor. In fact, the cost of finding coding errors is about four times greater than the cost of writing the code. Without inspections, the testing cycle for a large system is often a nightmare of numerous bug reports, hasty patches, retests, slipping schedules, and lots of overtime.

Formal inspections eliminate so many troublesome errors early that when testing does occur, very few defects are encountered; hence, testing costs and schedules are only a fraction of those experienced when testing is the first and only form of defect removal. When considering the total cost of ownership of a major software application, the return on investment (ROI) from formal inspections can top $15 for every $1 spent, which ranks as one of the top ROIs of any software technology.

Formal code inspections overlap several similar approaches, and except among specialists the terms inspection, structured walkthrough, and code review are often used almost interchangeably. Following are the major differences among these variations.

Formal code inspections are characterized by the following attributes:

Training is given to novices before they participate in their first inspection.

The inspection team is comprised of a moderator, a recorder, one or more inspectors, and the person whose work product is being inspected.

Schedule and timing protocols are carefully adhered to for preparation time, the inspection sessions themselves, and follow-up activities.

Accurate data is kept on the number of defects found, hours of effort devoted to preparation, and the size of the work product inspected.

Standard metrics are calculated from the data collected during inspections, such as defect-removal efficiency, work hours per function point, work hours per KLOC, and work hours per defect.
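The standard metrics named in the last attribute can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed format: the record layout and field names are hypothetical, the sample numbers are invented, and defect-removal efficiency is assumed to mean defects found by the inspection divided by all defects eventually found (inspection plus later testing and field reports).

```python
from dataclasses import dataclass


@dataclass
class InspectionRecord:
    """Data kept for one inspected work product (field names are illustrative)."""
    defects_found: int         # defects found by the inspection
    defects_found_later: int   # defects found afterward (testing, field reports)
    work_hours: float          # preparation, session, and follow-up hours
    function_points: float     # size of the work product in function points
    source_statements: int     # size of the work product in source statements


def inspection_metrics(rec: InspectionRecord) -> dict:
    """Compute the four standard metrics; assumes nonzero sizes and defect counts."""
    total_defects = rec.defects_found + rec.defects_found_later
    return {
        "defect_removal_efficiency_pct": 100.0 * rec.defects_found / total_defects,
        "work_hours_per_function_point": rec.work_hours / rec.function_points,
        "work_hours_per_kloc": rec.work_hours / (rec.source_statements / 1000.0),
        "work_hours_per_defect": rec.work_hours / rec.defects_found,
    }


# Invented sample data for one component.
record = InspectionRecord(
    defects_found=65,
    defects_found_later=35,
    work_hours=130.0,
    function_points=100.0,
    source_statements=10_000,
)
for name, value in inspection_metrics(record).items():
    print(f"{name}: {value:.2f}")
```

Note that defect-removal efficiency cannot be finalized at the end of the inspection itself, because the count of defects found later keeps growing through testing and early field use.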

The less formal methods of structured walkthroughs and code reviews differ from the formal code inspection method in the following key attributes:

Training is seldom provided for novices before they participate.

The usage of a moderator and recorder seldom occurs.

Little or no data is recorded on defect rates, hours expended, costs, or other quantifiable aspects of the review or walkthrough.

As a result, it is actually harder to estimate the less formal methods, such as code walkthroughs, than it is to estimate formal code inspections. The reason is that formal code inspections generate accurate quantitative data as a standard output, while the less formal methods usually have very little data available on defects, removal efficiency, schedules, effort, or costs.

However, there is just enough data to indicate that the less formal methods are not as efficient and effective as formal code inspections, although they are still better than many forms of testing.

Formal code inspections will average about 65 percent in defect-removal efficiency, and the best results can top 85 percent.

Less formal structured walkthroughs average about 45 percent in defect-removal efficiency, and the best results can top about 70 percent.

For peer reviews in small applications using the Agile “pair-programming” concept, one colleague’s review of the other’s code will average about 50 percent in defect-removal efficiency. The best results can top about 75 percent, assuming a very experienced and capable programmer.

In general, the Agile methods and XP have not adopted formal inspections, because inspections are somewhat time-consuming and perhaps not fully necessary for the kinds of smaller applications typically developed under the Agile and XP methods.

However, since most forms of testing are less than 30 percent efficient in finding bugs, it can be seen that either formal code inspections or informal code walkthroughs can add value to defect-removal operations.
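One way to see the added value is to combine removal steps in series. The sketch below assumes that each step removes its stated share of whatever defects reach it, independently of the other steps; that independence assumption, the function name, and the choice of three test stages are illustrative and do not come from measured data. The 30 percent figure is used as an upper bound for each test stage.

```python
def cumulative_removal_efficiency(stage_efficiencies):
    """Combined efficiency of defect-removal stages applied in series.

    Assumes each stage removes its stated fraction of the defects that
    reach it, independently of the other stages.
    """
    remaining = 1.0
    for efficiency in stage_efficiencies:
        if not 0.0 <= efficiency <= 1.0:
            raise ValueError("efficiency must be between 0 and 1")
        remaining *= 1.0 - efficiency
    return 1.0 - remaining


# Three hypothetical test stages at 30 percent each, with and without
# a formal code inspection (65 percent average) ahead of them.
testing_only = cumulative_removal_efficiency([0.30, 0.30, 0.30])
with_inspection = cumulative_removal_efficiency([0.65, 0.30, 0.30, 0.30])

print(f"three test stages only: {testing_only:.1%}")
print(f"inspection, then the same tests: {with_inspection:.1%}")
```

Under these assumptions the testing-only series removes roughly 66 percent of defects, while the series that starts with an inspection removes roughly 88 percent. Substituting 0.45 for 0.65 gives the corresponding figure for an informal walkthrough.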

Another common variant on the inspection method is that of doing partial inspections on less than 100 percent of the code in an application. This variant makes estimating tricky, because there is no fixed ratio of code that will be inspected. Some of the variants that the author’s clients have utilized include the following:

Inspecting only the code that deals with the most complex and difficult algorithms (usually less than 10 percent of the total volume of code)

Inspecting only modules that are suspected of being error prone due to the volumes of incoming defect reports (usually less than 5 percent of the total volume of code)

Using time box inspections (such as setting aside a fixed period such as one month), and doing as much as possible during the assigned time box (often less than 50 percent of the total volume of code)

However, for really important applications that will affect human life or safety (e.g., medical instrument software, weapons systems, nuclear control systems, and aircraft flight control), anything less than 100 percent inspection of the entire application is hazardous and should be avoided.

Another aspect of inspections that makes estimation tricky is the fact that the inspection sessions are intermittent activities that must be slotted into other kinds of work. Using the formal protocols of the inspection process, inspection sessions are limited to a maximum of two hours each, and no more than two such sessions can be held in any given business day.

These protocols mean that for large systems, the inspection sessions can be strung out for several months. Further, because other kinds of work must be done and travel might occur, and because re-inspections may be needed, the actual schedules for the inspection sessions are unpredictable using simple algorithms and rules of thumb.
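The two protocol limits do at least give a lower bound on the calendar. The following sketch computes that bound for a single inspection team; the inspection rate of 150 source code statements per hour and the 50,000-statement system are hypothetical inputs chosen for illustration, and local data should replace them.

```python
import math

MAX_SESSION_HOURS = 2.0     # protocol limit on the length of one session
MAX_SESSIONS_PER_DAY = 2    # protocol limit on sessions per business day


def minimum_inspection_schedule(source_statements, statements_per_hour):
    """Lower bound on sessions and business days for one inspection team.

    Ignores travel, other work, re-inspections, and absences, all of
    which stretch the real schedule.
    """
    session_hours = source_statements / statements_per_hour
    sessions = math.ceil(session_hours / MAX_SESSION_HOURS)
    days = math.ceil(sessions / MAX_SESSIONS_PER_DAY)
    return session_hours, sessions, days


hours, sessions, days = minimum_inspection_schedule(50_000, 150)
print(f"{hours:.0f} session hours, {sessions} sessions, at least {days} business days")
```

With these inputs the bound is 167 sessions over at least 84 business days, or roughly four calendar months even if the team did nothing else.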

When a sophisticated scheduling tool such as Microsoft Project, Timeline, or Artemis is used, inspections are usually slotted among other activities over a period of several months. A common practice is to run inspections in the morning and leave the afternoons for other kinds of work.

However, one of the other kinds of work is preparing for the next inspection. Although preparation goes faster than the inspection sessions themselves, the preparation for the next code inspection session can easily amount to one hour per inspector prior to each planned inspection session.

Although the programmer whose work is being inspected may not have as much preparation work as the inspectors, after the inspection he or she may have to fix quite a few defects that the inspection churns up.
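Preparation and session effort can be added up with a small helper. This is a rough sketch under stated assumptions: only the inspectors are charged preparation time (one hour each per session), the moderator, recorder, and author are counted as three other participants, and the author's repair effort after the inspection is left out. The function and parameter names are hypothetical.

```python
def inspection_effort_hours(sessions, session_hours, inspectors,
                            other_participants=3,
                            prep_hours_per_inspector=1.0):
    """Staff hours for preparation plus sessions; excludes defect repair.

    other_participants defaults to 3: moderator, recorder, and author.
    """
    participants = inspectors + other_participants
    preparation = sessions * inspectors * prep_hours_per_inspector
    meetings = sessions * session_hours * participants
    return preparation, meetings, preparation + meetings


# Hypothetical component: 20 two-hour sessions with two inspectors.
prep, meetings, total = inspection_effort_hours(
    sessions=20, session_hours=2.0, inspectors=2
)
print(f"preparation {prep:.0f} h, sessions {meetings:.0f} h, total {total:.0f} h")
```

For this example the result is 40 hours of preparation and 200 hours in sessions, 240 staff hours in all before any defects are repaired.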

Yet another aspect of inspections that adds complexity to the estimation task is the fact that with more and more experience, the participants in formal code inspection benefit in two distinct ways:

Programmers who participate in inspections have reduced bug counts in their own work.

The inspectors and participants become significantly more efficient in preparation time and also in the time needed for the inspection sessions.

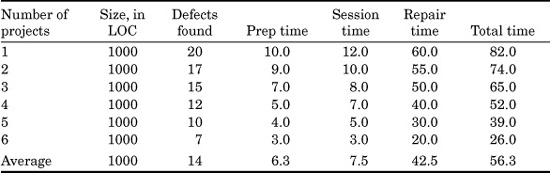

Table 18.1 illustrates these simultaneous improvements for a scenario that assumes that six different software projects will be inspected over time by more or less the same set of programmers. As can be seen, by the time the sixth project is reached, the team is quite a bit better than when it started with its first inspection.

As can be observed, inspections are beneficial in terms of both their defect-prevention aspects and their defect-removal aspects. Indeed, one of the most significant benefits of formal design and code inspections is that they raise the defect-removal efficiency of testing.

TABLE 18.1 Improvement in Code Inspection Performance with Practice

(Time in hours)

Some of the major problems of achieving high-efficiency testing are that the specifications are often incomplete, the code often does not match the specifications, and the poor and convoluted structure of the code makes testing of every path difficult. Formal design and code inspections will minimize these problems by providing test personnel with more complete and accurate specifications and by eliminating many of the problem areas of the code itself.

Considerations for Estimating Code Inspections

A simple rule of thumb is that every hour spent on formal code inspections will probably save close to three hours in subsequent testing and downstream defect-removal operations.
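Applied to an estimate, the rule of thumb is simple arithmetic. The sketch below treats the three-to-one ratio as an adjustable parameter, since it is only a rule of thumb; the function name and the 240-hour input are hypothetical.

```python
TESTING_HOURS_SAVED_PER_INSPECTION_HOUR = 3.0  # rule of thumb, not a measurement


def net_hours_saved(inspection_hours,
                    savings_ratio=TESTING_HOURS_SAVED_PER_INSPECTION_HOUR):
    """Return (downstream hours saved, net hours saved after inspection cost)."""
    saved = inspection_hours * savings_ratio
    return saved, saved - inspection_hours


saved, net = net_hours_saved(240.0)
print(f"downstream hours saved: {saved:.0f}, net of inspection effort: {net:.0f}")
```

For 240 hours of inspection effort this suggests about 720 hours saved downstream, or 480 hours net.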

Estimating both design and code inspections can be tricky, because both forms of inspection have wide ranges of possible variance, such as the following:

Preparation time is highly variable.

The number of participants in any session can range from three to eight.

Personal factors (flu, vacations, etc.) can cancel or delay inspections.

Inspections are intermittent events limited to two-hour sessions.

Inspections have to be slotted into other kinds of work activities.

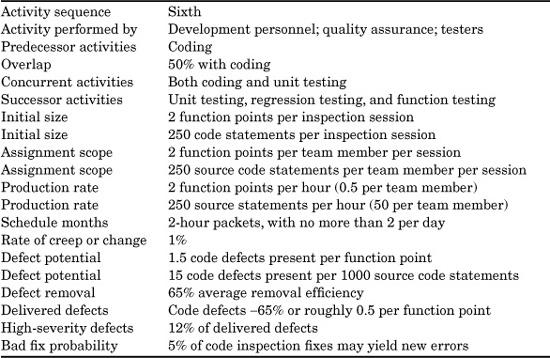

The nominal default values for code inspections are shown in Table 18.2, although these values should be replaced by local data as quickly as possible. Indeed, inspections lend themselves to template construction because local conditions can vary so widely.

Although design and code inspections are time-consuming and admittedly expensive, they will speed up test cycles to such a degree that follow-on test stages such as new-function test, regression test, system test, stress test, and customer acceptance test will often be reduced in time and cost by more than 50 percent. Thus, when estimating the costs and schedule impacts of formal inspections, don’t forget that testing costs and test schedules will be significantly lower.

When inspections become a normal part of software development processes in large corporations, there may be several, or even dozens, of inspections going on concurrently on any given business day. For some large systems, there may even be dozens of inspections for different components of the same system taking place simultaneously.

TABLE 18.2 Nominal Default Values for Code Inspections

This phenomenon raises some practical issues that need to be dealt with:

Possible conflicts in scheduling inspection conference rooms.

Possible conflicts in scheduling inspection participants.

Often, large companies that use inspections have an inspection coordinator, who may be part of the quality-assurance organization. The inspection coordinator handles the conference room arrangements and also the scheduling of participants. For scheduling individual participants, some kind of calendar management tool is usually used.

Although the inspection process originated as a group activity in which all members of the inspection team met face to face, software networking technologies are now powerful enough that some inspections are being handled remotely. There is even commercial software available that allows every participant to interact, to chat with the others, and to mark up the listings and associated documentation.

These online inspections are still evolving, but the preliminary data indicates that they are slightly more efficient than face-to-face inspections. Obviously, with online inspections there is no travel to remote buildings, and another less obvious advantage also tends to occur.

In face-to-face inspection sessions, sometimes as much as 15 to 20 minutes out of each two-hour session may be diverted into such unrelated topics as sports, the weather, politics, or whatever. With online inspections idle chat tends to be abbreviated, and, hence, the work at hand goes quicker. The usual result is that the inspection sessions themselves are shorter, and the online variants seldom run much more than 60 minutes, as opposed to the two-hour slots assigned to face-to-face inspections.

In addition, the production rates for online inspection sessions are often faster, and some can top 400 source code statements per hour. With experienced personnel who make few defects to slow down progress, inspections have been clocked at more than 750 source code statements per hour.

Given the power and effectiveness of formal inspections, it is initially surprising that they are not universally adopted and used on every critical software project. The reason why formal inspections are noted only among best-in-class organizations is that the average and lagging organizations simply do not know what works and what doesn’t.

In other words, both the project managers and the programming personnel in lagging companies that lack formal measurements of quality, formal process improvement programs, and the other attributes of successful software production do not know enough about the effectiveness of inspections to see how large an ROI they offer.

Consider the fact that lagging and average companies collect no historical data of their own and seldom review the data collected by other companies. As a result, lagging and average enterprises are not in a position to make rational choices about effective software technologies. Instead, they usually follow whatever current cult is in vogue, whether or not the results are beneficial. They also fall prey to pitches of various tool and methodology vendors, with or without any substantial evidence that what is being sold will be effective.

Leading companies, on the other hand, do measure such factors as defect-removal efficiency, schedules, costs, and other critical factors. Leading companies also tend to be more familiar with the external data and the software engineering literature. Therefore, leading enterprises are aware that design and code inspections have a major place in software engineering because they benefit quality, schedules, and costs simultaneously.

Another surprising reason why inspections are not more widely used is the fact that the method is in the public domain, so none of the testing-tool companies can generate any significant revenues from the inspection technology. Thus, the testing-tool companies tend to ignore inspections as though they did not even exist, although if the testing companies really understood software quality they would include inspection support as part of their offerings.

Judging from visits to at least a dozen public and private conferences by testing-tool and quality-assurance companies, inspections are sometimes discussed by speakers but almost never show up in vendors’ showcases or at quality tool fairs. This is unfortunate, because inspections are a powerful adjunct to testing and, indeed, can raise the defect-removal efficiency level of downstream testing by perhaps 15 percent as compared to the results from similar projects that do only testing. Not only will testing defect removal go up, but testing costs and schedules will go down once formal inspections are deployed.

From a software-estimating standpoint, the usage of formal inspections needs to be included in the estimate, of course. Even more significant, the usage of formal design and code inspections will have a significant impact on downstream activities that also need to be included in the estimate. For example, the usage of formal inspections will probably have the following downstream effects:

At least 65 percent of latent errors will be eliminated via inspections, so testing will be quicker and cheaper. The timeline for completing testing will be about 50 percent shorter than for similar projects that do not use inspections.

The inspections will clean up the specifications and, hence, will allow better test cases to be constructed, so the defect-removal efficiency levels of testing will be about 12 to 15 percent higher than for similar projects that do not use inspections.

The combined defect-removal efficiency levels of the inspections coupled with better testing will reduce maintenance costs by more than 50 percent compared to similar projects that don’t use inspections.

Projects that use formal inspections will usually score higher on customer-satisfaction surveys.
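The first three effects can be folded into a baseline estimate roughly as follows. This sketch makes several assumptions that should be checked against local data: the 12 to 15 percent gain in testing efficiency is read as percentage points and the lower value is used by default, the 50 percent reductions are applied as flat multipliers, and all names and baseline figures are hypothetical.

```python
def adjust_for_inspections(test_schedule_months, test_efficiency_pct,
                           maintenance_cost, efficiency_gain_points=12.0):
    """Adjust a testing-only baseline for the downstream effects of inspections.

    Assumes the efficiency gain is in percentage points and caps the
    result at 100 percent.
    """
    return {
        "test_schedule_months": test_schedule_months * 0.5,
        "test_efficiency_pct": min(100.0, test_efficiency_pct + efficiency_gain_points),
        "maintenance_cost": maintenance_cost * 0.5,
    }


# Hypothetical baseline for a project that relies on testing alone.
adjusted = adjust_for_inspections(
    test_schedule_months=6.0,
    test_efficiency_pct=70.0,
    maintenance_cost=1_000_000.0,
)
for name, value in adjusted.items():
    print(f"{name}: {value:,.1f}")
```

The cost and schedule of the inspections themselves still have to be added to the estimate separately; this helper covers only the downstream adjustments.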

There are some psychological barriers to introducing formal inspections for the first time. Most programmers are somewhat afraid of inspections because they imply a detailed scrutiny of their code and an evaluation of their programming styles.

One effective way to introduce inspections is to start them as a controlled experiment. Management asks that formal inspections be used for a six-week period. At the end of that period, the programming team decides whether to continue with inspections or revert to previous methods of testing and perhaps informal reviews.

When this approach is used and inspections are evaluated on their own merits, rather than being perceived as something forced by management, the teams will vote to continue with inspections about 75 percent of the time.

References

Dunn, Robert, and Richard Ullman: Quality Assurance for Computer Software, McGraw-Hill, New York, 1982.

Fagan, M.E.: “Design and Code Inspections to Reduce Errors in Program Development,” IBM Systems Journal, 15(3):182–211 (1976).

Friedman, Daniel P., and Gerald M. Weinberg: Handbook of Walkthroughs, Inspections, and Technical Reviews, Dorset House Press, New York, 1990.

Gilb, Tom, and D. Graham: Software Inspections, Addison-Wesley, Reading, Mass., 1993.

Jones, Capers: Assessment and Control of Software Risks, Prentice-Hall, Englewood Cliffs, N.J., 1994.

———: Patterns of Software System Failure and Success, International Thomson Computer Press, Boston, Mass., 1995.

———: Software Quality—Analysis and Guidelines for Success, International Thomson Computer Press, Boston, Mass., 1997.

———: Software Assessments, Benchmarks, and Best Practices, Addison-Wesley Longman, Boston, Mass., 2000.

———: Conflict and Litigation Between Software Clients and Developers, Software Productivity Research, Burlington, Mass., 2003.

Kan, Stephen H.: Metrics and Models in Software Quality Engineering, Second Edition, Addison-Wesley Longman, Boston, Mass., 2003.

Larman, Craig, and Victor Basili: “Iterative and Incremental Development—A Brief History,” IEEE Computer, June 2003, pp. 47–55.

Mills, H., M. Dyer, and R. Linger: “Cleanroom Software Engineering,” IEEE Software, 4, 5 (Sept. 1987), pp. 19–25.

Pressman, Roger: Software Engineering—A Practitioner’s Approach, Sixth Edition; McGraw-Hill, New York, 2005.

Putnam, Lawrence H.: Measures for Excellence—Reliable Software on Time, Within Budget, Yourdon Press/Prentice-Hall, Englewood Cliffs, N.J., 1992.

———, and Ware Myers: Industrial Strength Software—Effective Management Using Measurement, IEEE Computer Society Press, Washington D.C., 1997.

Quality Function Deployment (http://en.wikipedia.org/wiki/Quality_function_deployment).

Radice, Ronald A.: High-Quality Low-Cost Software Inspections, Paradoxicon Publishing, Andover, Mass., 2002.

Rubin, Howard: Software Benchmark Studies for 1997, Howard Rubin Associates, Pound Ridge, N.Y., 1997.

Wiegers, Karl E.: Peer Reviews in Software—A Practical Guide, Addison-Wesley, Boston, Mass., 2002.