5 Dealing with noisy tests

- explaining why tests are crucially important to CD

- creating and executing a plan to go from noisy test failures to a useful signal

- understanding what makes tests noisy

- treating test failures as bugs

- defining flaky tests and understanding why they are harmful

- retrying tests appropriately

It’d be nearly impossible to have continuous delivery (CD) without tests! For a lot of folks, tests are synonymous with at least the CI side of CD, but over time some test suites seem to degrade in value. In this chapter, we’ll look at how to deal with noisy test suites.

Continuous delivery and tests

How do tests fit into CD? As chapter 1 explained, CD is all about getting to a state where

- you can safely deliver changes to your software at any time.

- delivering that software is as simple as pushing a button.

How do you know you can safely deliver changes? You need to be confident that your code will do what you intended it to do. In software, you gain confidence about your code by testing it. Tests confirm to you that your code does what you meant for it to do.

This book isn’t going to teach you to write tests; you can refer to many great books written on the subject. I'm going to assume that not only do you know how to write tests, but also that most modern software projects have at least some tests defined for them (if that’s not the case for your project, it’s worth investing in adding tests as soon as possible).


Vocab time

A test suite is a grouping of tests. It often means “the set of tests that test this particular piece of software.”

Chapter 3 talked about the importance of continuously verifying every change. It is crucially important that tests are run not only frequently, but on every single change. This is all well and good when a project is new and has only a few tests, but as the project grows, so do the suites of tests, and they can become slower and less reliable over time. In this chapter, I'll show how to maintain these tests over time so you can keep getting a useful signal and be confident that your code is always in a releasable state.

Where does QA fit into CD?

With all this focus on test automation, you might wonder if CD means getting rid of the quality assurance (QA) role. It doesn’t! The important thing is to let humans do what humans do best: explore and think outside the box. Automate when you can, but automated tests will always do exactly what you tell them. If you want to discover new problems you’ve never even thought of, you’ll need humans performing a QA role!

Ice Cream for All outage

One company that’s really struggling with its test maintenance is the wildly successful ice-cream delivery company, Ice Cream for All. Its unique business proposition is that it connects you directly to ice-cream vendors in your area so that you can order your favorite ice cream and have it delivered directly to your house within minutes!

Ice Cream for All connects users to thousands of ice-cream vendors. To do this, the Ice Cream service needs to be able to connect to each vendor’s unique API.


Vocab time

A retrospective, sometimes called a postmortem, is an opportunity to reflect on processes, often when something goes wrong, and decide how to improve in the future.

July 4 is a peak day for Ice Cream for All. Every year on July 4, Ice Cream for All receives the most ice-cream orders of the year. But this year, the company had a terrible outage during the busiest part of the day! The Ice Cream service was down for more than an hour.

The team working on the Ice Cream service wrote up a retrospective to try to capture what went wrong and fix it in the future, and had an interesting discussion in the comments:

Signal vs. noise

Ice Cream for All has a problem with noisy tests. Its tests fail so frequently that the engineers often ignore the failures. And this caused real-world problems: ignoring a noisy test cost the company business on its busiest day of the year!

What should the team members do about their noisy tests? Before they do anything, they need to understand the problem. What does it mean for tests to be noisy?

The term noisy comes from the signal-to-noise ratio, which compares some desired information (the signal) to interfering information that obscures it (the noise).

When we’re talking about tests, what is the signal? What is the information that we’re looking for? This is an interesting question, because your gut reaction might be to say that the signal is passing tests. Or maybe the opposite, that failures are the signal.

The answer is: both! The signal is the information, and the noise is anything that distracts us from the information.

When tests pass, this gives you information: you know the system is behaving as you expect it to (as defined by your tests). When tests fail, that gives you information too. And it’s even more complicated than that. In the following chart, you can see that both failures and successes can be signals and can be noise.

| Tests | Succeed | Fail |
|---|---|---|
| Signal | Passes and should pass (i.e., catches the errors it was meant to catch) | Failures provide new information. |
| Noise | Passes but shouldn’t (i.e., the error condition is happening) | Failures do not provide any new information. |

Noisy successes

This can be a bit of a paradigm shift, especially if you are used to thinking of passing tests as providing a good signal, and failing tests as causing noise. This can be true, but as you’ve just seen, it’s a bit more complicated:

The signal is the information, and the noise is anything that distracts us from the information.

- Successes are signals unless they are covering up information.

- Failures are signals when they provide new information, and are noise when they don’t.

When can a successful test cover up information? One example is a test that passes but really shouldn’t, aka a noisy success. Consider the Orders class: a method recently added to the Ice Cream for All codebase was supposed to return the most recent order, and this test was added for it:

```python
def test_get_most_recent(self):
    orders = Orders()
    orders.add(datetime.date(2020, 9, 4), "swirl cone")
    orders.add(datetime.date(2020, 9, 7), "cherry glazed")
    orders.add(datetime.date(2020, 9, 10), "rainbow sprinkle")
    most_recent = orders.get_most_recent()
    self.assertEqual(most_recent, "rainbow sprinkle")
```

The test currently passes, but it turns out that the get_most_recent method is just returning the last order in the underlying dictionary:

```python
import collections

class Orders:
    def __init__(self):
        # Maps each date to the list of orders placed on that date
        self.orders = collections.defaultdict(list)

    def add(self, date, order):
        self.orders[date].append(order)

    def get_most_recent(self):
        most_recent_key = list(self.orders)[-1]
        return self.orders[most_recent_key][0]  # ❶
```

❶ Several things are wrong with this method, including not handling the case of no orders being added, but more importantly, what if the orders are added out of order?

The get_most_recent method doesn’t pay attention to when the orders were made at all. It just assumes that the last key in the dictionary corresponds to the most recent order. And since the test happens to add the most recent order last (and as of Python 3.7, dictionaries are guaranteed to preserve insertion order), the test passes.

But since the underlying functionality is broken, the test really shouldn’t be passing at all. As I said previously, this is what we call a noisy success: by passing, this test is covering up the information that the underlying functionality does not work as intended.
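One way to turn this noisy success back into a signal is to add a test that inserts the orders out of order, and then fix get_most_recent to compare dates instead of relying on insertion order. The following is a minimal sketch, not the book’s own fix. It assumes (the original doesn’t say) that an empty Orders should return None and that, among several orders on the same date, the last one added is the most recent; the test class name is hypothetical.

```python
import collections
import datetime
import unittest


class Orders:
    def __init__(self):
        # Maps each date to the list of orders placed on that date
        self.orders = collections.defaultdict(list)

    def add(self, date, order):
        self.orders[date].append(order)

    def get_most_recent(self):
        # Assumption: no orders means there is no most recent order.
        if not self.orders:
            return None
        # Pick the latest date, not whichever key happened to be inserted last.
        most_recent_date = max(self.orders)
        # Assumption: within one date, the last order added is the most recent.
        return self.orders[most_recent_date][-1]


class TestGetMostRecent(unittest.TestCase):
    def test_out_of_order_inserts(self):
        # Adding the newest order first exposes the original bug:
        # list(self.orders)[-1] would return "cherry glazed" here.
        orders = Orders()
        orders.add(datetime.date(2020, 9, 10), "rainbow sprinkle")
        orders.add(datetime.date(2020, 9, 4), "swirl cone")
        orders.add(datetime.date(2020, 9, 7), "cherry glazed")
        self.assertEqual(orders.get_most_recent(), "rainbow sprinkle")

    def test_no_orders(self):
        self.assertIsNone(Orders().get_most_recent())
```

Run against the original implementation, test_out_of_order_inserts fails, which is exactly the information the first test was hiding.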

How failures become noise

You’ve just seen how a test success can be noise. But what about failures? Are failures always noise? Always signal? Neither! The answer is that failures are signals when they provide new information, and are noise when they don’t. Remember:

The signal is the information, and the noise is anything that distracts us from the information.

- Successes are signals unless they are covering up information.

- Failures are signals when they provide new information, and are noise when they don’t.

When a test fails initially, it gives us new information: it tells us that some kind of mismatch occurs between the behavior the test expects and the actual behavior. This is a signal.

That same signal can become noise if you ignore the failure. The next time the same failure occurs, it’s giving us information that we already know: the test has failed before, so this new failure is not new information. By ignoring the test failure, we have turned it into noise.

This is especially common if it’s hard to diagnose the cause of the failure. If the failure doesn’t always happen (say, the test passes when run as part of the CI automation, but fails locally), it’s much more likely to get ignored, therefore creating noise.


It’s your turn: Evaluating signal vs. noise

Take a look at the following test situations that Ice Cream for All is dealing with and categorize them as noise or signal:

- While working on a new feature around selecting favorite ice-cream flavors, Pete creates a PR for his changes. One of the UI tests fails because Pete’s changes accidentally moved the Order button from the expected location to a different one.

- While running the integration tests on his machine, Piyush sees a test fail: TestOrderCancelledWhenPaymentRejected. He looks at the output from the test, looks at the test and the code being tested, and doesn’t understand why it fails. When he reruns the test, it passes.

- Although TestOrderCancelledWhenPaymentRejected failed once for Piyush, he can’t reproduce the issue, so he merges his changes. Later, he submits another change and sees the same test fail against his PR. He reruns it, and it passes, so he ignores the failure again and merges the changes.

- Nishi has been refactoring some of the code around displaying order history. While doing this, she notices that the logic in one of the tests is incorrect: TestPaginationLastPage is expecting the generated page to include three elements, but it should include only two. The pagination logic contains a bug.


Answers

- Signal. The failure of the UI test has given Pete new information: that he moved the Order button.

- Signal. Piyush didn’t understand what caused the failure of this test, but something caused the test to fail, and this revealed new information.

- Noise. Piyush suspected from his previous experience with this test that something might be wrong with it. Seeing the test fail again tells him that the information he got before was legitimate, but by allowing this failure and merging, he has created noise.

- Noise. The test Nishi discovered should have been failing; by passing, it was covering up information.

Going from noise to signal

Alerting systems are useful only if people pay attention to the alerts. When they are too noisy, people stop paying attention, so they may miss the signal.

Car alarms are an example: if you live in a neighborhood where a lot of cars are parked, and you hear an alarm go off, do you rush to your window with your phone out, ready to call in an emergency? It probably depends on how often it’s happened; if you’ve never heard an alarm like that, you might. But if you hear them every few days, you’re more likely thinking, “Oh, someone bumped into that car. I hope the alarm gets turned off soon.”

What if you live or work in an apartment building and the fire alarm goes off? You probably take it seriously and begrudgingly exit the building. What if it happens again the next day? You’ll probably still leave, because those alarms are loud, but you’d start to doubt that it’s a real emergency. By the third day, you’d almost certainly assume it’s a false alarm.

The longer you tolerate a noisy signal, the easier it is to ignore it and the less effective it is.

Getting to green

The longer you tolerate noisy tests, the easier it is to ignore them—even when they provide real information—and the less effective they are. Leaving them in this state seriously undermines their value. People get desensitized to the failures and feel comfortable ignoring them.


Vocab time

Test successes are often visualized as green, while failures are often red. Getting to green means that all your tests are passing!

This is the same position that Ice Cream for All is in: its engineers have gotten so used to ignoring their tests that they’ve let some major problems slip through. The tests caught these problems, but nobody acted on the failures, and the company has lost money as a result.

How do they fix this? The answer is to get to green as fast as possible: get to a state where the tests are consistently passing, so that any change in this state (failing) is a real signal that needs to be investigated.

Nishi is totally right: creating and maintaining tests isn’t something we do for the sake of the tests themselves; we do this because we believe they add value, and most of that value is the signals they give us. So she makes a hard decision: stop adding features until all the tests are fixed.

Tests provide value in other ways too. Creating unit tests, for example, can help improve the quality of your code. That topic is beyond the scope of this book. Look for a book about unit testing to learn more.

Another outage!

The team members do what Nishi requests: they freeze feature development for two weeks and during that time do nothing but fix tests. By the end of the second week, the test task in their pipeline is consistently passing. They got to green!

The team feels confident about adding new features again, and in the third week goes back to its regular work. At the end of that week, the team members ship another release and throw a small party to celebrate. But at 3 a.m., Nishi is roused from her sleep by an alert telling her another outage has occurred.


Noodle on it

Did Nishi make a good call? She decided to freeze feature development for two weeks, to focus on fixing tests, and the end result was another outage. Looking back at that decision, an easy conclusion to draw is that the (undoubtedly expensive) feature freeze was not worth it and did more harm than good.

Nishi was faced with a decision that many of us will often face: maintain the status quo (for Ice Cream for All, this means noisy tests that unexpectedly cause outages) or take some kind of action. Taking action means trying something out and making a change. Anytime you change the way you do things, you’re taking a risk: the change could be good or it could be bad. Often it’s both! And, often, when changes are made, there can be an adjustment period during which things are definitely worse, though ultimately they end up better.

What would you do if you were in Nishi’s position? Do you think she made the right call? What would you do differently in her shoes, if anything?

Passing tests can be noisy

Nishi jumps into the team’s group chat to investigate the outage she has just been alerted to:

Saturday 3:00 a.m.

The team members had felt good about their test suite because all the tests were passing. Unfortunately, they hadn’t actually removed the noise; they’d just changed it. Now, the successful tests were the noise.


Vocab time

I’ll explain in more detail in a few pages, but briefly: a flake is a test that sometimes passes and sometimes fails.

Getting the test suite from noise to signal was the right call to make, and getting the suite from often failing to green was a good first step, because it combats desensitization.

But just getting to green isn’t enough: test suites that pass can still be noisy and can hide serious problems.

The team at Ice Cream for All had fixed their desensitization problem, but hadn’t actually fixed their tests.


Takeaway

Nishi made a good call, but getting to green on its own isn’t enough. The goal is to get the tests into a consistent state (passing), so that any change in this state (failing) is a real signal that needs to be investigated. When dealing with noisy test suites, do the following:

- Get to green as fast as possible.

- Actually fix every failing test; just silencing them adds more noise.

Fixing test failures

You might be surprised to learn that knowing whether you have fixed a test is not totally straightforward. It comes back to the question of what constitutes a signal and what is noise when it comes to tests.

People often think that fixing a test means going from a failing test to a passing test. But there is more to it than that!

Technically, fixing the test means that you have gone from the state of the test being noise to it being a signal. This means that there are tests that are currently passing that may need fixing. More about that in a bit; for now, I'm going to talk about fixing tests that are currently failing.

Every time a test fails, this means one (or both) of two things has happened:

1. The test was written incorrectly (the system was not intended to behave in the way the test was written to expect).

2. The system has a bug (the test is correct, and it’s the system that isn’t behaving correctly).

What’s interesting is that we write tests with situation 2 in mind, but when tests fail (especially if we can’t immediately understand why), we tend to assume that the situation is 1 (that the tests themselves are the problem).

This is what is usually happening when people say their tests are noisy: their tests are failing, and they can’t immediately understand why, so they jump to the conclusion that something is wrong with the tests.

But both situations 1 and 2 have something in common:

When a test fails, a mismatch exists between how the test expects the system to behave and how the system is actually behaving.

Regardless of whether the fix is to update the test or to update the system, this mismatch needs to be investigated. This is the point in the test’s life cycle with the greatest chance that noise will be introduced. The test’s failure has given you information—specifically, that a mismatch exists between the tests and the system. If you ignore that information, every new failure isn’t telling you anything new. Instead it’s repeating what you already know: a mismatch exists. This is how test failures become noise.

The other way you can introduce noise is by misdiagnosing case 2 as case 1. It is often easier to change the test than it is to figure out why the system is behaving the way it is. If you do this without really understanding the system’s behavior, you’ve created a noisy successful test. Every time that test passes, it’s covering up information: the fact that a mismatch exists between the test and the system that was never fully investigated.

Treat every test failure as a bug and investigate it fully.

Ways of failing: Flakes

Complicating the story around signal and noise in tests is the most notorious kind of test failure: the test flake. Tests can fail in two ways:

- Consistently: every time the test is run, it fails.

- Inconsistently: sometimes the test succeeds, sometimes it fails, and the conditions that make it fail are not clear.

Tests that fail inconsistently are often called flakes or flaky. When these tests fail, this is often called flaking, because in the same way that you cannot rely on a flaky friend to follow through on plans you make with them, you cannot depend on these tests to consistently pass or fail.

Consistently failing tests are much easier to deal with than flakes, and much more likely to be acted on (hopefully, in a way that reduces noise). Flakes are the most common reason that a test suite ends up in a noisy state. And maybe because of that, or maybe just because it’s easier, people do not treat flakes as seriously as consistent failures:

- Flakes make test suites noisy.

- Flakes are likely to be ignored and treated as not serious.

This is kind of ironic, because we’ve seen that the noisier a test suite is, the less valuable it is. And what kind of test is likely to make a test suite noisy? The flake, which we are likely to ignore. What is the solution?

Treat flakes like any other kind of test failure: like a bug.

Just like any other case of test failure, flakes represent a mismatch between the system’s behavior and the behavior that the test expects. The only difference is that something about that mismatch is nondeterministic.

Reacting to failures

What went wrong with Ice Cream For All’s approach? The team members had the right initial idea:

When tests fail, stop the line: don’t move forward until they are fixed.

If you have failing tests in your codebase, it’s important to get to green as fast as possible; stop all merging into your main branch until those failures are fixed. And if the failing tests are happening in a branch, don’t merge that branch until the failures are fixed. But the question is, how do you fix those failures? You have a few options:

1. Actually fix it: ultimately, the goal is to understand why the test is failing and either fix the bug that is being revealed or update an incorrect test.

2. Delete the test: this is rare, but your investigation may reveal that this test was not adding any value and that its failure is not actionable. In that case, there’s no reason to keep it around and maintain it.

3. Disable the test: this is an extreme measure, and if you do it, do it only temporarily. Disabling the test means that you are hiding the signal. Any disabled tests should be investigated as fast as possible and either fixed (see option 1) or deleted.

4. Retry the test: this is another extreme measure that also hides the signal. It is a common way of dealing with flaky tests. The reasoning behind it is rooted in the idea that ultimately what we want the tests to do is pass, but this is incorrect: what we want the tests to do is provide us information. If a test sometimes fails, and you cover that up by retrying it, you’re hiding the information and creating more noise. Retrying is sometimes appropriate, but rarely at the level of the test itself.

Looking at these options, the only good ones are option 1 and in some rare cases, option 2. Both options 3 and 4 are stopgap measures that should be taken only temporarily, if at all, because they add noise to your signal by hiding failures.

Fixing the test: Change the code or the test?

Ice Cream for All has rolled back its latest release and once again frozen feature development as the team members look into the tests they had tried to “fix” previously.

Looking back through some of the fixes that had been merged, Nishi notices a disturbing pattern: many of the “fixes” change only the tests, and very few of them change the actual code being tested. Nishi knows this is an antipattern. For example, this test had been flaking, so it was updated to wait longer for the success condition:

The test was initially written with an assumption in mind: that the order would be considered acknowledged immediately after it had been submitted. And the code that called submit_orders was built with this assumption as well. But this test was flaking because submit_orders contains a race condition!

Instead of fixing this problem in the submit_orders function, someone updated the test, which covers up the bug and adds a noisy success to the test suite.

They were, in fact, hiding the bug.

Whenever you deal with a failing test, before you make any changes, you have to understand whether the test is failing because of a problem with the actual code being tested. That is, if the code acts like this when it is being used outside of tests, is that what it should be doing? If it is, then it’s appropriate to fix the test. But if not, the fix shouldn’t go in the test: it should be in the code.

This means making a mental shift from “let’s fix the test” (making the test pass) to “let’s understand the mismatch between the actual behavior of the code and the behavior the test expects, and make the fix in the appropriate place.”

Treat every test failure as a bug and investigate it fully.

Nishi asks the engineer who updated the test to investigate further. After finding the source of the race condition, they are able to fix the underlying bug, and the test doesn't need to be changed at all.
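The text doesn’t show submit_orders or its test, so the following is only a hypothetical sketch of the pattern: OrderService, _acknowledge, and the thread-per-order design are all assumptions. The racy version returns before the acknowledgements are recorded, which is the kind of bug that a longer wait in the test would hide. The fixed version changes the code so that returning from submit_orders really does mean the orders are acknowledged, and the test needs no waiting at all.

```python
import threading


class OrderService:
    """Hypothetical stand-in; the book doesn't show submit_orders."""

    def __init__(self):
        self.acknowledged = set()
        self._lock = threading.Lock()

    def _acknowledge(self, order_id):
        # Pretend this talks to a vendor, then records the acknowledgement.
        with self._lock:
            self.acknowledged.add(order_id)

    def submit_orders_racy(self, order_ids):
        # Buggy: acknowledgements happen on background threads and this
        # returns immediately, so callers (and tests) that check
        # `acknowledged` right away race against those threads.
        for order_id in order_ids:
            threading.Thread(target=self._acknowledge, args=(order_id,)).start()

    def submit_orders(self, order_ids):
        # Fixed: still acknowledges concurrently, but doesn't return until
        # every acknowledgement is recorded, matching what callers assume.
        threads = [
            threading.Thread(target=self._acknowledge, args=(order_id,))
            for order_id in order_ids
        ]
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()


def test_orders_acknowledged_after_submit():
    service = OrderService()
    service.submit_orders(["order-1", "order-2", "order-3"])
    # No sleep or polling needed: the fix lives in the code, not the test.
    assert service.acknowledged == {"order-1", "order-2", "order-3"}
```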

The dangers of retries

Retrying an entire test is usually not a good idea, because anything that causes the failure will be hidden. Take a look at this test in the Ice Cream service integration test suite, one of the tests for the integration with Mr. Freezie:

```python
# We don't want this test to fail just because
# the MrFreezie network connection is unreliable
@retry(retries=3)
def test_process_order(self):
    order = _generate_mr_freezie_order()
    mrf = MrFreezie()
    mrf.connect()
    mrf.process_order(order)
    _assert_order_updated(order)
```

During the development freeze, Pete decided that this test should be retried. His reasons were sound: the network connection to Mr. Freezie’s servers was known to be unreliable, so this test would sometimes flake because it couldn’t establish a connection successfully, and would immediately pass on a retry.

But the problem is that Pete is retrying the entire test. Therefore, if the test fails for any other reason, it will still be retried. And that’s exactly what happened. It turned out there was a bug in the way orders were being passed to Mr. Freezie that sometimes made the total charge incorrect. When this happened in the live system, users were being charged the wrong amount, leading to 500 errors and an outage.

What should Pete do instead? Remember that test failures represent a mismatch:

When a test fails, a mismatch exists between how the test expects the system to behave and how the system is actually behaving.

Pete needs to ask himself the question we need to ask every time we investigate a test failure:

Which represents the behavior we actually want: the test or the system?

A reasonable improvement on Pete’s strategy would be to change the retry logic to be just around the network connection:

```python
def test_process_order_better(self):
    order = _generate_mr_freezie_order()
    mrf = MrFreezie()

    # We don't want this test to fail just because
    # the MrFreezie network connection is unreliable
    def connect():
        mrf.connect()

    retry_network_errors(connect, retries=3)
    mrf.process_order(order)
    _assert_order_updated(order)
```

Retrying revisited

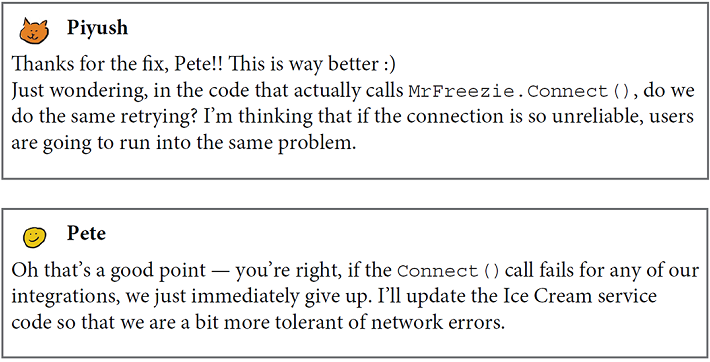

Pete improved his retry-based solution by retrying only the part of the test that he felt was okay to have fail sometimes. In code review, Piyush takes it a step further:

The retry was actually covering up two bugs: in addition to the bug in the way orders were being passed to Mr. Freezie, there was a larger problem in that none of the Ice Cream service code was tolerant of network failures (you don’t want your ice-cream order to fail just because of a temporary network problem, do you?).
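Pete’s improved test calls retry_network_errors, which the text doesn’t define. The following is a minimal sketch of such a helper, assuming network problems surface as Python’s built-in ConnectionError or TimeoutError and that retries counts extra attempts after the first. It retries only those exceptions and lets anything else (a ValueError from a wrong order total, a failed assertion) propagate immediately. Given Piyush’s point, a helper like this arguably belongs in the Ice Cream service code around calls such as mrf.connect(), not only in the tests.

```python
import time


def retry_network_errors(operation, retries=3, delay_seconds=0.1,
                         retryable=(ConnectionError, TimeoutError)):
    """Call operation(), retrying only on network-style errors.

    retries: extra attempts allowed after the first (assumed meaning).
    Exceptions not listed in `retryable` propagate on the first failure,
    so a real bug (a wrong total, a failed assertion) is never retried away.
    """
    for attempt in range(retries + 1):
        try:
            return operation()
        except retryable:
            if attempt == retries:
                raise  # out of attempts: report the network failure
            # Wait a little longer before each new attempt (exponential backoff).
            time.sleep(delay_seconds * (2 ** attempt))
```

The growing delay between attempts is a common choice for transient network errors; the 0.1-second base delay is an arbitrary assumption.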

Ice Cream for All was lucky that the engineers caught the issues the retry was introducing so quickly. If an outage hadn’t occurred, the engineers might never have noticed, and they probably would have used this retry strategy to deal with more flaky tests. You can imagine how this builds up over time: think how many bugs they would be hiding after a few years of applying this strategy.

Causing flaky tests to pass with retries introduces noise: the noise of tests that pass but shouldn’t.

The nature of software projects is that you are going to keep adding more and more complexity, which means the little shortcuts you take are going to get blown up in scope as the project progresses. Slowing down a tiny bit and rethinking stopgap measures like retries will pay off in the long run!


It’s your turn: Fixing a flake

Piyush is trying to deal with another flaky test in the Ice Cream service. This test fails less than once a week, even though the tests run at least a hundred times per day, and it’s very hard to reproduce locally:

```python
def test_add_to_cart(self):
    cart = _generate_cart()
    items = _generate_items(5)
    for item in items:
        cart.add_item(item)
    self.assertEqual(len(cart.get_items()), 5)  # ❶
```

❶ This assertion sometimes fails.

The cart is backed by a database. Every time an item is added to the cart, the underlying database is updated, and when items are read from the cart, they are read from the database.

- Assume that when the test fails, the number of items in the cart is 4 instead of 5. What do you think might be going wrong?

- What if the number of items is 6 instead of 5? What might be going wrong then?

- If Piyush deals with this by retrying the test, what bugs might he risk hiding?

- Let’s say Piyush notices this problem while he is trying to fix a critical production issue. What can he do to make sure he doesn’t add more noise, while not blocking his critical fix?


Answers

- If the number of items read is less than the number written, a race may be occurring somewhere. Some kind of synchronization needs to be introduced to ensure that the reads actually reflect the writes.

- If the number is greater, a fundamental flaw might exist in the way items are being written to the database.

- Either of the preceding scenarios suggests flaws in the cart logic that could lead to lost customer orders and incorrect customer charges.

- In this scenario, adding a temporary retry to unblock this work might be reasonable, as long as the issue with test_add_to_cart is subsequently treated as a bug and the retry logic is quickly removed.
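To make the first answer concrete, here is a hypothetical sketch in which add_item returns before its write is applied. Cart, the in-memory list standing in for the database, and the background writer thread are all assumptions, not Ice Cream for All’s actual design. get_items_racy can read before every queued write lands, so it can see fewer than five items; get_items adds the kind of synchronization the answer calls for by waiting until all pending writes have been applied before reading.

```python
import queue
import threading


class Cart:
    """Hypothetical cart; a list stands in for the database."""

    def __init__(self):
        self._rows = []
        self._rows_lock = threading.Lock()
        self._pending_writes = queue.Queue()
        threading.Thread(target=self._apply_writes, daemon=True).start()

    def _apply_writes(self):
        # Background writer: applies queued writes to the "database" in order.
        while True:
            item = self._pending_writes.get()
            with self._rows_lock:
                self._rows.append(item)
            self._pending_writes.task_done()

    def add_item(self, item):
        # Returns before the write is applied, like an asynchronous DB client.
        self._pending_writes.put(item)

    def get_items_racy(self):
        # Can miss writes still sitting in the queue (fewer than 5 items).
        with self._rows_lock:
            return list(self._rows)

    def get_items(self):
        # Read-your-writes: block until every queued write has been applied.
        self._pending_writes.join()
        with self._rows_lock:
            return list(self._rows)
```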

Why do we retry?

Given what we just looked at, you might be surprised that anyone retries failing tests at all. If it’s so bad, why do so many people do it, and why do so many test frameworks support it? There are a few reasons:

- People often have good reasons for using some kind of retrying logic; for example, Pete was right to want to retry network connections when they fail. But instead of taking the extra step of making sure the retry logic was in the appropriate place, it was easier to retry the whole test.

- If you’ve set up your pipelines appropriately, a failing test blocks development and slows people down. It’s reasonable that people often want to do the quickest, easiest thing they can to unblock development, and in situations like that, using retries as a temporary fix can be appropriate, as long as it really is temporary.

- It feels good to fix something, and it feels even better to fix something with a clever piece of technology; retries give you immediate satisfaction.

- Most importantly, people often have the mentality that the goal is to get the tests to pass, but that’s a misconception. We don’t make tests pass just for the sake of making tests pass. We maintain tests because we want to get information from them (the signal). When we cover up failures without addressing them properly, we’re reducing the value of our test suite by introducing noise.

Temporary is forever

Be careful whenever you make a temporary fix. These fixes reduce the urgency of addressing the underlying problem, and before you know it, two years have gone by and your temporary fix is now permanent.

So if you find yourself tempted to retry a test, try to slow down and see if you can understand what’s actually causing the problem. Retrying can be appropriate if it is

- applied only to nondeterministic elements that are outside of your control (for example, integrations with other running systems)

- isolated to precisely the operation you want to retry (for example, in Pete’s case, retrying only the connect() call instead of the entire test)
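One way to keep a temporary disable from quietly becoming permanent is to give it an expiry date. The quarantine decorator below is a hypothetical sketch, not a standard unittest feature: it applies unittest.skip only until a given date, after which the test runs (and, if it is still broken, fails) again, so the problem comes back to someone’s attention instead of being forgotten.

```python
import datetime
import unittest


def quarantine(reason, until):
    """Hypothetical helper: skip a test, but only until a fixed date.

    reason: why the test is disabled (ideally pointing at the bug tracking it)
    until:  a datetime.date after which the skip expires
    """
    def decorator(test_fn):
        if datetime.date.today() <= until:
            # Still inside the quarantine window: skip, and say why and for how long.
            return unittest.skip(f"quarantined until {until}: {reason}")(test_fn)
        # Quarantine expired: return the test unchanged so it runs again.
        return test_fn
    return decorator
```

For example, decorating test_add_to_cart with @quarantine("flaky item count; investigating as a bug", until=...) would skip it only until the chosen date, rather than indefinitely.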

Get to green and stay green

It seemed like no matter what Ice Cream for All did, something went wrong. In spite of that, the engineers had the right approach; they just ran into some valuable lessons that they needed to learn along the way—and hopefully we can learn from their mistakes!

Regardless of your project, your goal should be to get your test suite to green and keep it green. If you currently have a lot of tests that fail (whether they fail consistently or are flakes), it makes sense to take some drastic measures in order to get back to a meaningful signal:

-

Freezing development to fix the test suites will be worth the investment. If you can’t get the buy-in for this (it’s expensive, after all), all hope is not lost; getting to green will just be harder.

-

Disabling and retrying problematic tests, while not approaches you want to take in the long run, can help you get to green (back to a signal people will listen to), as long as you prioritize properly investigating them afterward!
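Before deciding which tests to fix, disable, or retry, it can help to sort the current failures into consistent failures and flakes. Here is a minimal Go sketch. It assumes you can rerun the suite several times against the same commit and collect one pass/fail result per run. The `Classify` function and the test names are hypothetical. A test that fails every time is consistently failing. A test that both passes and fails with no code change is flaky.

```go
package main

import (
	"fmt"
	"sort"
)

// Classify groups tests by their results across several runs of the same
// commit. Each slice entry is true for a pass and false for a failure.
func Classify(runs map[string][]bool) (failing, flaky, green []string) {
	for name, results := range runs {
		if len(results) == 0 {
			continue // no data, so no verdict
		}
		passes := 0
		for _, passed := range results {
			if passed {
				passes++
			}
		}
		switch {
		case passes == len(results):
			green = append(green, name) // always passed
		case passes == 0:
			failing = append(failing, name) // always failed
		default:
			flaky = append(flaky, name) // mixed results, same code
		}
	}
	// Map iteration order is random in Go; sort for stable output.
	sort.Strings(failing)
	sort.Strings(flaky)
	sort.Strings(green)
	return failing, flaky, green
}

func main() {
	runs := map[string][]bool{
		"TestCheckout":   {true, true, true},
		"TestFlavorList": {false, false, false},
		"TestSubscribe":  {true, false, true},
	}
	failing, flaky, green := Classify(runs)
	fmt.Println("failing:", failing) // failing: [TestFlavorList]
	fmt.Println("flaky:", flaky)     // flaky: [TestSubscribe]
	fmt.Println("green:", green)     // green: [TestCheckout]
}
```

A handful of reruns can only reveal flakiness that actually shows up in those runs. A test that passed three times in a row may still be flaky.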

Remember, there’s always a balance: no matter how hard you try and how well you maintain your tests, bugs will always exist. The question is, what is the cost of those bugs?

If you’re working on critical healthcare technology, the cost of those bugs is enormous, and it’s worth taking the time to carefully stamp out every bug you can. But if you’re working on a website that lets people buy ice cream, you can definitely get away with a lot more. (Not to say ice cream isn’t important; it’s delicious!)

Get to green and stay green. Treat every failure as a bug, but also don’t treat failures any more seriously than you need to.

Okay, come on: lots of tests are flaky and they don’t all cause outages, right?

It’s probably extreme that poor Ice Cream for All had multiple outages that could have been caught by these neglected tests, but it’s not out of the question. Usually the problems that slip past noisy tests are more subtle, but the point is that you never know. The bigger problem is that treating tests like this undermines their value over time. Imagine the difference between a fire alarm that sometimes means fire and sometimes doesn’t (or, even worse, sometimes fails to go off when there is a fire!) and one that always means fire. Which is more valuable?


Build engineer vs. developer

Depending on your role on the team, you may be reading this chapter in horror, thinking, “But I can’t change the tests!” It’s pretty common to divide up roles on a team so that the people responsible for the state of the test suite are not the same people who develop the features or write the tests. This often happens to folks in a role called build engineer, engineering productivity, or something similar: roles that are adjacent to, and support, a team’s feature development.

If you find yourself in this role, it can be tempting to lean on solutions that don’t require input or work from the developers on the team working on features. This is another big reason we end up seeing folks trying to rely on automation (e.g., retrying) instead of trying to tackle the problems in the tests directly.

But if the evolution of software development so far has taught us anything, it’s that drawing lines of responsibility too rigidly between roles is an antipattern. Just take a look at the whole DevOps movement: an attempt to break down the barriers between the developers and the operations team. Similarly, if we draw a hard line between build engineering and feature development, we’ll find ourselves walking down a similar path of frustration and wasted effort.

When we’re talking about CD in general, and about testing in particular, the truth is that we can’t do this effectively without effort from the feature developers themselves. Trying to do it without them will degrade the quality and effectiveness of the test suite over time.

So if you’re a build engineer, what do you do? You have three options:

-

Apply automation features like retries and accept the reality that this will cause the test suite to degrade over time.

-

Learn to put on the feature developer hat and make these required fixes (to the tests and the code you’re testing).

-

Get buy-in from the feature developers and work closely with them to address any test failures (e.g., opening bugs to track failing tests and trusting them to treat the bugs with appropriate urgency).
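Tracking failures as bugs, as in the third option, works best when each failing test gets exactly one bug that someone owns. Here is a minimal Go sketch. It assumes a hypothetical `Bug` type and a map of tests that already have an open bug; a real issue tracker would have its own API. `BugsToFile` returns one new bug per failing test. It skips tests that are already tracked and duplicates within the same batch of failures.

```go
package main

import (
	"fmt"
	"sort"
)

// Bug is a hypothetical, minimal issue record; a real tracker has its own API.
type Bug struct {
	Test  string
	Title string
}

// BugsToFile returns one new bug per failing test that isn't already tracked
// by an open bug. A test that failed several times in this batch still gets
// only one bug, and the result is sorted by test name for stable output.
func BugsToFile(failingTests []string, openBugs map[string]bool) []Bug {
	seen := make(map[string]bool)
	var bugs []Bug
	for _, name := range failingTests {
		if openBugs[name] || seen[name] {
			continue // already tracked, or already handled in this batch
		}
		seen[name] = true
		bugs = append(bugs, Bug{Test: name, Title: "Failing test: " + name})
	}
	sort.Slice(bugs, func(i, j int) bool { return bugs[i].Test < bugs[j].Test })
	return bugs
}

func main() {
	failures := []string{"TestSubscribe", "TestCheckout", "TestSubscribe"}
	open := map[string]bool{"TestCheckout": true} // already has an open bug
	for _, b := range BugsToFile(failures, open) {
		fmt.Println(b.Title) // prints "Failing test: TestSubscribe"
	}
}
```

Filing the bug is only half of this option. The other half is the feature developers treating those bugs with appropriate urgency.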

Conclusion

Testing is the beating heart of CD. Without testing, you don’t know whether the changes you are continuously integrating are safe to deliver. But the sad truth is that the way we maintain our test suites over time often causes them to degrade in value. In particular, this degradation often comes from a misunderstanding about what it means for tests to be noisy, but it’s something you can proactively address!

Summary

-

Tests are crucial to CD.

-

Both failing and passing tests can cause noise; noisy tests are any tests that obscure the information your test suite is intended to provide.

-

The best way to restore the value of a noisy test suite is to get to green (a passing suite of tests) as quickly as possible.

-

Treat test failures as bugs and understand that often the appropriate fix for the test is in the code and not the test itself. Either way, the failure represents a mismatch between the system’s behavior and the behavior the test expected, and the failure deserves a thorough investigation.

-

Retrying entire tests is rarely a good idea and should be done with caution.

Up next . . .

In the next chapter, we’ll continue to look at the kinds of issues that plague test suites as they grow over time, particularly their tendency to become slower, often to the point of slowing feature development.