6

Data Registration

Richard R. Brooks and Lynne Grewe

CONTENTS

6.1 Introduction

6.2 Registration Problem

6.3 Review of Existing Research

6.4 Registration Using Meta-Heuristics

6.5 Wavelet-Based Registration of Range Images

6.6 Registration Assistance/Preprocessing

6.7 Registration Using Elastic Transformations

6.8 Multimodal Image Registration

6.1 Introduction

Sensor fusion refers to the use of multiple sensor readings to infer a single piece of information. Inputs may be received from a single sensor over a period of time. They may also be received from multiple sensors of the same or different types. This is a very important technology for radiology and remote sensing applications. In medical imaging alone, the number of papers published per year has increased from 20 in 1990 to about 140 in 2001.1 Registering elastic images and finding theoretical bounds for image registration are particularly active research topics.

Fusion inputs may be raw data, extracted features, or higher-level decisions. Fusion provides increased robustness and accuracy in machine perception. This is conceptually similar to the use of repeated experiments to establish parameter values using statistics.2 Several reference books have been published on sensor fusion.3–6

One decomposition of the sensor fusion process is shown in Figure 6.1. Sensor readings are gathered, preprocessed, compared, and combined, arriving at the final result. An essential preprocessing step for comparing readings from independent physical sensors is transforming all input data into a common coordinate system. This is referred to as data registration. In this chapter, we describe data registration, provide a review of the existing methods, and discuss some recent results.

The data registration transformation is often assumed to be known a priori, partly because finding it is not trivial. Traditional methods are based on techniques developed by cartographers. These methods have a number of drawbacks and often make invalid assumptions concerning the input data.

FIGURE 6.1

Decomposition of sensor fusion process. (From Keller, Y. and Averbuch, A., IEEE Trans. Patt. Anal. Mach. Intell. 28, 794, 2006. With permission.)

Although data input includes raw sensor readings, features extracted from sensor data, and higher-level information, registration is a preprocessing stage and, therefore, is usually applied only to raw data or extracted features. Sensor readings can have one to n dimensions, and the number of dimensions need not be an integer. Most techniques deal with data of two or three dimensions; however, the same approaches can be trivially applied to one-dimensional (1D) readings. Depending on the sensing modalities used, occlusion may be a problem with data in more than two dimensions, because objects in the environment can hide data from one another depending on their relative positions. The specific case studies presented in this chapter use image data in two dimensions and range data in 2½ dimensions.

This chapter is organized as follows. Section 6.2 gives a formal definition of image registration. Section 6.3 provides a brief survey of existing methods. Section 6.4 discusses meta-heuristic techniques that have been used for image registration, including objective functions for sensor readings with various types of noise. Section 6.5 discusses a multiresolution implementation of image registration. Section 6.6 discusses registration assistance and preprocessing, including human-assisted registration. Section 6.7 looks at registration of warped images. In Section 6.8, we discuss problems faced by researchers registering data from multiple sensing modes. The theoretical bounds that have been established for the registration process are given in Section 6.9. Section 6.10 concludes with a brief summary discussion.

6.2 Registration Problem

Competitive multiple sensor networks consist of a large number of physical sensors providing readings that are at least partially redundant. The first step in fusing multiple sensor readings is registering them to a common frame of reference.7 Registration refers to finding the correct mapping of one image onto another. When an inaccurate estimate of the registration is known, finding the exact registration is referred to as refined registration. Another survey of image registration can be found in Ref. 8.

As shown in Figure 6.2, the general image registration problem is, given two n-dimensional sensor readings, to find the function F that best maps the reading from sensor 2, S2(x1, …, xn), onto the reading from sensor 1, S1(x1, …, xn). Ideally, F(S2(x1, …, xn)) = S1(x1, …, xn). Because all sensor readings contain some amount of measurement error or noise, the ideal case rarely occurs.

Many processes require that data from one image, called the observed image, be compared with or mapped to another image, called the reference image. Hence, a wide range of critical applications depends on image registration.

Perhaps the largest amount of image registration research is focused on medical imaging. One application is sensor fusion to combine outputs from several medical imaging technologies, such as positron emission tomography (PET) and magnetic resonance imaging (MRI), to form a more complete image of internal organs.9 Registered images are then used for medical diagnosis of illness10 and automated control of radiation therapy.11 Similar applications of registered and fused images are common12 in military applications (e.g., terrain “footprints”),13 remote sensing, and robotics. A novel application is registering portions of images to estimate motion; descriptions of the motion can then be used to construct intermediate images in television transmissions. Jain and Jain describe the application of this to bandwidth reduction in video communications.14 These are some of the more recent applications that rely on accurate image registration. Methods of image registration have been studied since the beginning of the field of cartography.

FIGURE 6.2

Registration is finding the mapping function F(S2).

6.3 Review of Existing Research

This section discusses the current state of research concerning image registration. Image registration is a basic problem in image processing, and a large number of methods have been proposed.

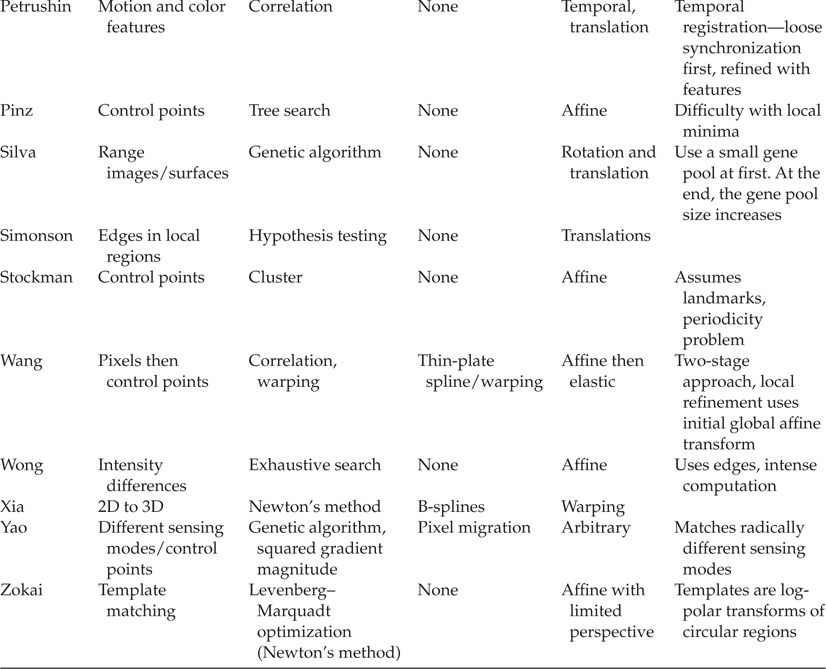

Table 6.1 summarizes the features of representative image registration methods discussed in this section. The established methodologies and algorithms currently in use are then discussed in more detail in the remainder of the section.

The traditional method of registering two images is an extension of methods used in cartography. A number of control points are found in both images. The control points are matched, and this match is used to deduce equations that interpolate all points in the new image to corresponding points in the reference image.15,16
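To make the final step concrete, here is a minimal sketch that assumes the interpolating equations form a single global affine transform (one of several possible choices), fitted by least squares to control points that have already been matched. The helper names `solve3`, `fit_affine`, and `apply_affine` are hypothetical, and pure Python is used to keep the example self-contained.

```python
def solve3(m, rhs):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    aug = [row[:] + [b] for row, b in zip(m, rhs)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate control points (e.g., all collinear)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, 4):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (aug[r][3] - sum(aug[r][k] * x[k] for k in range(r + 1, 3))) / aug[r][r]
    return x


def fit_affine(observed, reference):
    """Least-squares affine map from matched observed control points to reference ones.

    Returns (a, b, c, d, e, f) such that x' = a*x + b*y + c and y' = d*x + e*y + f.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # A^T A, where each row of A is [x, y, 1]
    atx = [0.0] * 3                      # A^T x'
    aty = [0.0] * 3                      # A^T y'
    for (x, y), (xr, yr) in zip(observed, reference):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * xr
            aty[i] += row[i] * yr
    a, b, c = solve3(ata, atx)
    d, e, f = solve3(ata, aty)
    return a, b, c, d, e, f


def apply_affine(params, point):
    """Map an observed-image point into reference-image coordinates."""
    a, b, c, d, e, f = params
    x, y = point
    return a * x + b * y + c, d * x + e * y + f
```

With more than three matched pairs, the least-squares fit averages out small localization errors in individual control points; control points that all lie on one line leave the system singular, which the sketch reports as an error.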

Several algorithms exist for each phase of this process. Control points must be unique and easily identified in both images. Control points have been explicitly placed in the image by the experimenter,11 or defined as edges found from intensity changes,17,18 specific points peculiar to a given image,8,19 line intersections, centers of gravity of closed regions, or points of high curvature.15 The type of control point that should be used depends primarily on the application and the contents of the image. For example, in medical image processing, the contents of the image and approximate poses are generally known a priori.

TABLE 6.1

Sensor Registration Methods

When registering images from different sensing modes, features that are invariant to sensor differences are sought.18 Registering data that are not represented in the same data space, such as sound and imagery, is complicated, and a common “spatial” representation must be constructed.20–22 Petrushin et al. have used “events” as “control points” in registration.20 In their system, 32 web cameras, a higher-quality video camera, and a fingerprint reader are the sensors used for indoor surveillance. In a subapplication of person tracking, the event is associated with the movement of a person from one “location” to another, where a location is a predefined spatial area. This is what is registered between the sensors for the purpose of identification and tracking. Han and Bhanu25 have also used movement cues to determine “control points” for matching. In particular, “human silhouettes” representing moving humans are extracted from synchronous color and infrared image sequences. Gradient maxima have been used to find small sets of pixels to use as candidate control points.23,24 The two-dimensional (2D) to three-dimensional (3D) multimodal registration problem26,27 is fairly common in radiology, but it is addressed using radiometry values instead of control points.

Similarly, many methods have been proposed for matching control points in the observed image to those in the reference image. The obvious method is to correlate a template from the observed image with the reference image.28,29 Another widely used approach is to calculate the transformation matrix that describes the mapping with the least square error.12,19,30 Other standard computational methods, such as relaxation and hill climbing, have also been used.14,31,32 Pinz et al. have used a hill climbing algorithm to match images and note the difficulty posed by local minima in the search space; to overcome this, they run a number of attempts in parallel with different initial conditions.33 Inglada and Giros have compared many similarity measures for readings from different sensing modes.34 Some measures consider the variance of radiometry values at each sample point. Others are based on entropy and mutual information content. Different metrics perform better for different combinations of sensors.
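As an illustration of one similarity measure of the kind Inglada and Giros compared, the sketch below computes the mutual information of co-located pixel values. It assumes both images are already resampled onto the same grid and quantized to a small number of gray levels (both assumptions for illustration); the function name is hypothetical.

```python
import math
from collections import Counter


def mutual_information(img1, img2):
    """Mutual information (in bits) between co-located pixel values of two images.

    img1 and img2 are equally sized 2D lists of discrete (quantized) gray levels.
    """
    pairs = [(a, b) for row1, row2 in zip(img1, img2) for a, b in zip(row1, row2)]
    n = len(pairs)
    joint = Counter(pairs)               # joint histogram
    p1 = Counter(a for a, _ in pairs)    # marginal histogram of image 1
    p2 = Counter(b for _, b in pairs)    # marginal histogram of image 2
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((p1[a] / n) * (p2[b] / n)))
    return mi
```

Because mutual information depends only on how consistently one image's values predict the other's, it is unchanged when one sensor's gray levels are relabeled one-to-one, which is why it suits readings from different sensing modes.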

Some interesting methods have been implemented that consider all possible transformations. Stockman et al. have constructed vectors between all pairs of control points in an image.13 For each pairing of a vector from the observed image with a vector from the reference image, an affine transformation matrix is computed that converts the former into the latter. These transformations are then plotted, and the region containing the largest number of correspondences is assumed to contain the correct transformation.13 This method is computationally expensive because it considers every pairing of control-point vectors across the two images. Wong and Hall have matched scenes by extracting edges or intensity differences and constructing a tree of all possible matches that fall below a given error threshold.17 They have reduced the amount of computation needed by abandoning a potential match as soon as its error exceeds the threshold; however, this method remains computationally intensive. Dai and Khorram have extracted affine-transform-invariant features based on the central moments of regions found in remote sensing images.35 Regions are defined by zero-crossing points. Similarly, Yang and Cohen have described a moments-based method for registering images using affine transformations given sets of control points.36
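A minimal sketch of this transformation-voting idea follows. It simplifies the approach of Stockman et al. by assuming a rigid transform (rotation plus translation), which a single pair of vectors determines, rather than a general affine one, and by replacing the plot with a histogram of quantized parameters. The bin sizes and function names are hypothetical.

```python
import math
from collections import Counter
from itertools import permutations


def rigid_from_vectors(p, q, r, s):
    """Rotation and translation mapping observed vector p->q onto reference vector r->s."""
    theta = math.atan2(s[1] - r[1], s[0] - r[0]) - math.atan2(q[1] - p[1], q[0] - p[0])
    c, sn = math.cos(theta), math.sin(theta)
    tx = r[0] - (c * p[0] - sn * p[1])  # translation that carries rotated p onto r
    ty = r[1] - (sn * p[0] + c * p[1])
    return theta % (2 * math.pi), tx, ty


def cluster_transforms(observed, reference, angle_bin=0.05, shift_bin=1.0):
    """Vote over every pairing of vectors; return the centre of the most popular bin."""
    votes = Counter()
    for p, q in permutations(observed, 2):
        for r, s in permutations(reference, 2):
            theta, tx, ty = rigid_from_vectors(p, q, r, s)
            key = (round(theta / angle_bin), round(tx / shift_bin), round(ty / shift_bin))
            votes[key] += 1
    (kt, kx, ky), _ = votes.most_common(1)[0]
    return kt * angle_bin, kx * shift_bin, ky * shift_bin
```

Correct correspondences all vote for the same bin, while mismatched pairs scatter their votes. The nested loops over ordered pairs in both images make the cost grow with the fourth power of the number of control points, which is the computational expense noted above.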

Registration of multisensor data to a 3D scene, given knowledge of the contents of the scene, is discussed by Chellappa et al.37 The use of an extended Kalman filter (EKF) to register moving sensors in a sensor fusion problem is discussed by Zhou et al.38 Mandara and Fitzpatrick have implemented a very interesting approach10 using simulated annealing and genetic algorithm heuristics to find good matches between two images. They have found a rubber sheet transformation that fits two images by using linear interpolation around four control points, assuming that the images approximately match at the outset. A similar approach has been espoused by Matsopoulos et al.39 Silva et al. have used a modified genetic algorithm in which the size of the gene pool changes over time.40 During the first few generations, most candidate mappings are poor, so considering only a few samples at that stage saves a lot of computation. During the final stages, the gene pool size is increased to allow a finer-grained search for the optimal solution. Yao and Goh have registered images from different sensing modes by using a genetic algorithm constrained to search only in subspaces of the problem space that could contain the solution.24

A number of researchers have used multiresolution methods to prune the search space considered by their algorithms. Mandara and Fitzpatrick10 have used a multiresolution approach to reduce the size of their initial search space for registering medical images using simulated annealing and genetic algorithms. This work has influenced Oghabian and Todd-Pokropek, who have similarly reduced their search space when registering brain images with small displacements.9 Pinz et al. have adjusted both multiresolution scale space and step size to reduce the computational complexity of a hill climbing registration method.33 A hierarchical genetic algorithm has been used by Han and Bhanu to register color and infrared image sequences.25 These researchers reason that by starting with low-resolution images or feature spaces, they can reject large numbers of possible matches and then find the correct match by progressively increasing the resolution. Zokai and Wolberg have used Levenberg–Marquardt optimization (similar to Newton’s method) to find optimal correspondences in the state space.41 Note that in images with a strong periodic component, a number of low-resolution matches may be feasible. In such cases, the multiresolution approach cannot prune the search space and instead increases the computational load. Another problem with a common multiresolution approach, the wavelet transform, is its sensitivity to translation.42 Kybic and Unser have used splines as basis functions instead of wavelets.27
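The coarse-to-fine idea can be sketched for the simple case of a pure translation. This example assumes 2D gray scale images stored as lists of rows, builds a pyramid by 2×2 averaging, searches exhaustively only at the coarsest level, and then refines by ±1 pixel per level. The function names, search radius, and number of levels are illustrative choices, not taken from the cited works.

```python
def downsample(img):
    """Halve resolution by averaging 2x2 blocks (a trailing odd row/column is dropped)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * i][2 * j] + img[2 * i][2 * j + 1]
              + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4.0
             for j in range(w)] for i in range(h)]


def mean_sq_diff(ref, obs, dy, dx):
    """Mean squared difference over the overlap, assuming ref[y][x] ~ obs[y - dy][x - dx]."""
    total, count = 0.0, 0
    for y in range(len(ref)):
        for x in range(len(ref[0])):
            oy, ox = y - dy, x - dx
            if 0 <= oy < len(obs) and 0 <= ox < len(obs[0]):
                total += (ref[y][x] - obs[oy][ox]) ** 2
                count += 1
    return total / count if count else float("inf")


def coarse_to_fine_shift(ref, obs, levels=2, radius=4):
    """Exhaustive shift search at the coarsest level, then +/-1 refinement per finer level."""
    pyramid = [(ref, obs)]
    for _ in range(levels):
        r, o = pyramid[-1]
        pyramid.append((downsample(r), downsample(o)))
    r, o = pyramid[-1]
    candidates = [(dy, dx) for dy in range(-radius, radius + 1)
                  for dx in range(-radius, radius + 1)]
    best = min(candidates, key=lambda sh: mean_sq_diff(r, o, *sh))
    for r, o in reversed(pyramid[:-1]):
        # A shift of k pixels at one level is about 2k pixels at the next finer level.
        cy, cx = 2 * best[0], 2 * best[1]
        candidates = [(cy + a, cx + b) for a in (-1, 0, 1) for b in (-1, 0, 1)]
        best = min(candidates, key=lambda sh: mean_sq_diff(r, o, *sh))
    return best
```

Averaging the squared difference over the overlap lets very small overlaps score well by chance, so a practical version would also enforce a minimum overlap. For a strongly periodic image, several coarse shifts can score almost equally well, and the single winner kept here may be the wrong one, which is the failure mode described above.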

A number of methods have been proposed for fitting the entire image around the control points once an appropriate match has been found. Simple linear interpolation is computationally straightforward.10 Goshtasby has explored using a weighted least-squares approach,30 constructing piecewise linear interpolation functions within triangles defined by the control points,15 and developing piecewise cubic interpolation functions.43 These methods create nonaffine rubber sheet transformation functions that attempt to reduce the image distortion caused by either errors in control point matching or differences between the sensors that produced the images. Similarly, Wang et al. have presented a system for merging 2D medical images.44 In a two-stage approach, the intensity values are first used to determine the best global affine transformation between the two images. This first step yields good results because much of the imaged material, such as bone, can be matched between two images using an affine transform. However, because there are also small, nonrigid local tissue deformations, this initial registration is then refined locally using the thin-plate spline interpolation method, with landmark control points selected as a function of the image properties.44 This problem is discussed further in Section 6.7.
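The piecewise linear scheme can be sketched with barycentric coordinates: a point's weights relative to a triangle of control points in the observed image are reused on the matching triangle in the reference image. This is a minimal sketch that assumes the triangulation (for example, a Delaunay triangulation of the control points) and the choice of containing triangle are already available; the function names are hypothetical.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates (l1, l2, l3) of point p with respect to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("degenerate triangle")
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def map_point(p, tri_obs, tri_ref):
    """Piecewise linear map: reuse p's weights in the observed triangle on the reference one."""
    weights = barycentric(p, *tri_obs)
    x = sum(w * v[0] for w, v in zip(weights, tri_ref))
    y = sum(w * v[1] for w, v in zip(weights, tri_ref))
    return x, y
```

Within each triangle the map is affine, and neighboring triangles agree along their shared edge, so the overall rubber sheet is continuous but not smooth across edges.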

Several algorithms exist for image registration, and those described above share some common drawbacks. The matching algorithms assume that (a) a small number of distinct features can be matched,13,19,45 (b) specific shapes are to be matched,46 (c) no rotation exists, or (d) the relative displacement is small.9,10,14,19,28,32 Refer to Table 6.1 for a summary of many of these points.

Choosing a small number of control points is not a trivial problem and has a number of inherent drawbacks. For example, the control point found may be a product of measurement noise. When two readings have more than a trivial relative displacement, control points in one image may not exist in the other image. This requires considering the power set of the control points. When an image contains periodic components, control points may not define a unique mapping of the observed image to the reference image. Additional problems exist. The use of multiresolution cannot always trim the search space and, if the image is dominated by periodic elements, it would only increase the computational complexity of an algorithm.9,10,33

Many algorithms attempt to minimize the square error over the image; however, this does not consider the influence of noise in the image.9,10 Most of the existing methods are sensitive to noise.8,19,28 Section 6.4 discusses meta-heuristics-based methods, which try to overcome these drawbacks. Section 6.5 discusses a multiresolution approach.

Although much of this chapter concentrates on spatial registration, temporal registration is important for some applications. Michel and Stanford21 have developed a system that uses off-the-shelf video cameras and microphones for a smart-meeting application. Video and sound frames from multiple sources must be synchronized, and this is done in two stages. Because the low-cost sensor components can exhibit substantial temporal drift, and some sensors do not possess internal clocks, an external master network clock is used and each sensor/capture node is synchronized to it. This brings capture nodes within a few milliseconds of each other. Next, a multimodal event is generated for a more refined synchronization between the captured video and audio streams: a “clap” sound is combined with a strobe flash to create an event that can be found in each video and audio stream. The various streams are then correlated temporally using this multimodal event trigger.
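The refinement step amounts to finding the time offset at which a shared event lines up in two streams. The sketch below assumes each stream has already been reduced to a hypothetical per-sample event-strength signal (for example, audio energy and frame brightness) at a common sampling rate, and it picks the lag that maximizes their cross-correlation.

```python
def best_lag(a, b, max_lag):
    """Lag (in samples) that best aligns stream b with stream a by cross-correlation.

    A positive result means the event appears `lag` samples later in b than in a.
    """
    def score(lag):
        # Correlation of a with b shifted by `lag`, over the samples both streams cover.
        return sum(a[i] * b[i + lag] for i in range(len(a)) if 0 <= i + lag < len(b))

    return max(range(-max_lag, max_lag + 1), key=score)
```

Dividing the returned lag by the common sampling rate gives the offset in seconds.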

Petrushin et al.20 have provided another example of temporal registration/synchronization, in which a number of video sensors run at different sampling rates. To handle this, they have defined the concept of a “tick,” a unit of time large enough to contain at least one frame from the slowest video capture but, ideally, small enough to detect the changes their application needs, including those used for people tracking. Using ticks, a kind of “loose” synchronization is achieved. Petrushin et al.20 have used self-organizing maps to cluster tick data for the detection of events such as people moving in a scene.
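Tick-based loose synchronization can be illustrated by bucketing frame timestamps into fixed-length intervals. This sketch assumes every frame carries a timestamp on a shared clock, and the function and camera names are hypothetical. Choosing the tick length equal to the slowest camera's frame period means each tick receives at least one frame from every camera, provided frames arrive regularly and none are dropped.

```python
from collections import defaultdict


def group_into_ticks(streams, tick):
    """Bucket timestamped frames from several cameras into fixed-length ticks.

    streams maps a camera id to a list of frame timestamps (seconds).
    Returns {tick_index: {camera_id: [timestamps, ...]}} ordered by tick index.
    """
    ticks = defaultdict(lambda: defaultdict(list))
    for cam, stamps in streams.items():
        for t in stamps:
            ticks[int(t // tick)][cam].append(t)
    return {k: dict(v) for k, v in sorted(ticks.items())}
```

Downstream processing, such as the clustering of tick data mentioned above, can then treat all frames in one tick as simultaneous.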

6.4 Registration Using Meta-Heuristics

This section discusses research on automatically finding a gruence (i.e., translation and rotation) registering two overlapping images. Results from this research have previously been presented in a number of sources.3,47,48,50,66

This approach attempts to correctly calibrate two 2D sensor readings with identical geometries. These assumptions about the sensors can be made without loss of generality for three reasons:

1. A method that works for two readings can be extended to register any number of readings sequentially.

2. The majority of sensors work in one or two dimensions. Extensions of calibration methods to more dimensions are desirable, but not imperative.

3. Calibration of two sensors presupposes known sensor geometry. If the geometries are known and a registration is given, a function can be derived that maps the readings as if the geometries were identical.

This approach finds gruences because these functions best represent the most common class of problems. The approach can be directly extended to the class of all affine transformations by adding scaling transformations.49 It does not consider “rubber sheet” transformations that warp the contents of the image, because these transformations mainly correct local effects after an affine transformation has correctly matched the images.16 It assumes either that any rubber sheet deformations of the sensor image are known and corrected before the mapping function is applied, or that their effects over the image intersections are negligible.

The computational examples used pertain to two sensors returning 2D gray scale data from the same environment. The amount of noise and the relative positions of the two sensors are not known. Sensor 2 is translated and rotated by an unknown amount with relation to sensor 1.

If the size or content of the overlapping areas is known, a correlation using the contents of the overlap on the two images could find the point where they overlap directly. Use of central moments could also find relative rotation of the readings. However, when the size or content of the areas is unavailable, this approach is impossible.

In this work, the two sensors have identical geometric characteristics. They return readings covering a circular region, and these readings overlap. Both sensors’ readings contain noise, and their relative positions are unknown.

The best way to solve this problem depends on the nature of the terrain being observed. If unique landmarks can be identified in both the images, those points can be used as control points. Depending on the number of landmarks available, minor adjustments may be needed to fit the readings exactly. Goshtasby’s methods could be used at that point.15,30,43

Hence, the problem to be solved is, given noisy gray scale readings from sensor 1 and sensor 2, to find the optimal set of parameters (x-displacement, y-displacement, and angle of rotation) that defines the center of the sensor 2 image relative to the center of the sensor 1 image. These parameters provide the optimal mapping of sensor 2 readings onto the readings from sensor 1. This can be done using meta-heuristics for optimization. Brooks and Iyengar have described implementations of genetic algorithms, simulated annealing, and tabu search for this problem.3 Chen et al. have applied TRUST, a subenergy tunneling approach from Oak Ridge National Laboratory.48

To measure optimality, a fitness function can be used. The fitness function provides a numerical measure of the goodness of a proposed answer to the registration problem. Brooks and Iyengar have derived a fitness function for sensor readings corrupted with Gaussian noise:3

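$$
\mathrm{fitness}(w) = \frac{\sum_{(x,y) \in K(w)} \left(\mathrm{read}_1(x,y) - \mathrm{read}_2(x',y')\right)^2}{\lVert K(w) \rVert^2}
= \frac{\sum_{(x,y) \in K(w)} \left(\mathrm{gray}_1(x,y) + \mathrm{noise}_1(x,y) - \mathrm{gray}_2(x',y') - \mathrm{noise}_2(x',y')\right)^2}{\lVert K(w) \rVert^2}
$$

where w is a point in the search space; K(w) is the overlap for w, and ‖K(w)‖ is the number of pixels in it; (x′, y′) is the point in the sensor 2 reading corresponding to (x, y); read1(x, y) and read2(x′, y′) are the pixel values returned by sensors 1 and 2 at (x, y) and (x′, y′); gray1(x, y) and gray2(x′, y′) are the corresponding noiseless values; and noise1(x, y) and noise2(x′, y′) are the noise in the sensor 1 and sensor 2 readings at those points.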

The equation is derived by separating the sensor reading into information and additive noise components. This means the fitness function is made up of two components: (a) lack of fit and (b) stochastic noise. The lack of fit component has a unique minimum when the two images have the same gray scale values in the overlap (i.e., when they are correctly registered). The noise component follows a χ² distribution, whose expected value is proportional to the number of pixels in the region where the two sensor readings intersect. Dividing the difference squared by the cardinality of the overlap makes the expected value of the noise factor constant. Dividing by the cardinality squared favors large intersections. For a more detailed explanation of this derivation, see Ref. 3.
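The Gaussian-noise fitness can be sketched directly from its verbal definition: the squared differences between corresponding pixels are summed over the overlap and divided by the square of the overlap size. The coordinate convention (sensor 2 rotated by theta and translated by (dx, dy) relative to sensor 1), nearest-pixel lookup, and rectangular grids (rather than the circular fields of view used in this work) are assumptions made for the sketch; the function name is hypothetical.

```python
import math


def gaussian_fitness(read1, read2, dx, dy, theta):
    """Squared differences summed over the overlap K(w), divided by |K(w)| squared.

    read1 and read2 are 2D lists of gray values indexed [row][column].
    Sensor-1 pixel (x, y) corresponds to sensor-2 pixel
    (x', y') = R(-theta) * ((x, y) - (dx, dy)), rounded to the nearest pixel.
    Lower values indicate a better registration.
    """
    c, s = math.cos(theta), math.sin(theta)
    h2, w2 = len(read2), len(read2[0])
    total, overlap = 0.0, 0
    for y, row in enumerate(read1):
        for x, value in enumerate(row):
            u, v = x - dx, y - dy
            xp = round(c * u + s * v)   # rotate by -theta
            yp = round(-s * u + c * v)
            if 0 <= xp < w2 and 0 <= yp < h2:  # pixel lies in the overlap K(w)
                total += (value - read2[yp][xp]) ** 2
                overlap += 1
    # Dividing by |K(w)|**2 rather than |K(w)| favors large intersections.
    return total / overlap ** 2 if overlap else float("inf")
```

A meta-heuristic such as a genetic algorithm or simulated annealing would evaluate this function on many candidate (dx, dy, theta) triples and keep the lowest values.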

Other noise models simply modify the fitness function. Another common noise model addresses salt-and-pepper noise, typically caused either by malfunctioning pixels in electronic cameras or by dust in optical systems. In this model, the correct gray scale value in a picture is replaced by a value of 0 with an unknown probability p, or by a value of 255 with an unknown probability q. An appropriate fitness function for this type of noise is

FIGURE 6.3

Fitness function results with variance 1.

A similar function can be derived for uniform noise by using the expected value E[(U1 − U2)²] of the squared difference of two uniform variables U1 and U2. An appropriate fitness function is then given by

Figure 6.3 shows the best fitness function value found by simulated annealing, elitist genetic algorithms, classic genetic algorithms, and tabu search versus the number of iterations performed. In the study by Brooks and Iyengar, elitist genetic algorithms outperformed the other methods attempted. Further work by Chen et al. indicates that TRUST is more efficient than elitist genetic algorithms.48 These studies show that optimization techniques can work well on this problem, even in the presence of large amounts of noise. This is surprising because the fitness functions take the difference of noise-corrupted data, which is essentially a derivative, and derivatives are sensitive to noise. Further inspection of the fitness functions explains this result: summing over the area of intersection is equivalent to integrating over it, and this implicit integration counteracts the derivative’s magnification of noise.
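For readers unfamiliar with the meta-heuristics compared here, the following is a minimal, generic simulated annealing loop over a parameter tuple such as (dx, dy, theta). The Gaussian proposal distribution, geometric cooling schedule, and all default values are illustrative assumptions, not the settings used by Brooks and Iyengar; the objective passed in would be a registration fitness function such as the Gaussian-noise measure described above.

```python
import math
import random


def simulated_annealing(fitness, start, step, iterations=5000, t0=1.0, cooling=0.999, seed=0):
    """Minimize fitness(params) by simulated annealing; return (best params, best value).

    start and step are equal-length tuples, e.g. (dx, dy, theta) and the
    standard deviations used to perturb each parameter.
    """
    rng = random.Random(seed)
    current = best = tuple(start)
    f_cur = f_best = fitness(current)
    temp = t0
    for _ in range(iterations):
        candidate = tuple(p + rng.gauss(0.0, s) for p, s in zip(current, step))
        f_cand = fitness(candidate)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if f_cand <= f_cur or rng.random() < math.exp((f_cur - f_cand) / temp):
            current, f_cur = candidate, f_cand
            if f_cur < f_best:
                best, f_best = current, f_cur
        temp *= cooling  # geometric cooling schedule
    return best, f_best
```

Accepting some uphill moves while the temperature is high is what lets annealing escape the local minima that trouble pure hill climbing.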

Matsopoulos et al. have used affine, bilinear, and projective transformations to register medical images of the retina.39 The techniques tested include genetic algorithms, simulated annealing, and the downhill simplex method. They use image correlation as a fitness function. Their application requires extensive preprocessing, which removes sensor noise. Their results indicate the superiority of genetic algorithms for automated image registration, which is consistent with Brooks’ results.3,50

6.5 Wavelet-Based Registration of Range Images

This section uses range sensor readings. More details have been provided by Grewe and Brooks.51 Range images consist of pixels with values corresponding to range or depth rather than photometric information. The range image represents a perspective of a 3D world. The registration approach described herein can be trivially applied to other kinds of images, including 1D readings. If desired, the approaches described by Brooks3,50 and Chen et al.48 can be directly extended to include the class of all affine transformations by adding scaling transformations. This section discusses an approach for finding these transformations.

The approach uses a multiresolution technique, the wavelet transform, to extract features used to register images. Other researchers have also applied wavelets to this problem, including using local maxima of the wavelet coefficients of two images as features.52 The centroids of these features are used to compute the translation offset between the two images. A principal components analysis is then performed, and the eigenvectors of the covariance matrix provide an orthogonal reference system for computing the rotation between the two images. (This use of a simple centroid difference is subject to difficulties when the scenes only partially overlap and, hence, contain many other features.)
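The centroid-and-principal-axis scheme described above (Ref. 52) can be sketched for 2D feature locations as follows. This is a minimal illustration that assumes both feature sets describe the same scene content; the function names are hypothetical.

```python
import math


def centroid(points):
    """Mean (x, y) of a list of 2D points."""
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def principal_angle(points):
    """Orientation (radians) of the major eigenvector of the points' covariance matrix."""
    cx, cy = centroid(points)
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    return 0.5 * math.atan2(2 * sxy, sxx - syy)


def centroid_pca_registration(feats1, feats2):
    """Estimate (theta, tx, ty) mapping feats1 onto feats2 from centroids and principal axes."""
    theta = principal_angle(feats2) - principal_angle(feats1)
    c, s = math.cos(theta), math.sin(theta)
    (x1, y1), (x2, y2) = centroid(feats1), centroid(feats2)
    # Translation that carries the rotated centroid of feats1 onto that of feats2.
    return theta, x2 - (c * x1 - s * y1), y2 - (s * x1 + c * y1)
```

The principal axis is defined only up to a half turn, so this sketch cannot distinguish a rotation from the same rotation plus 180°, and, as noted in the text, features outside the common overlap bias both the centroid and the axis.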

In another example, the wavelet transform is used to obtain a complexity index for two images.53 The complexity measure is used to determine the amount of compression appropriate for each image. Compression is then performed, yielding a small number of control points. The resulting control-point representations of the images are then tested under candidate rotations to determine the best fit.

The system described by Grewe and Brooks51 is similar to some of the previous works discussed. Like DeVore et al.,53 Grewe and Brooks have used wavelets to compress the amount of data used in registration. Unlike the previous wavelet-based systems described by Sharman et al.52 and DeVore et al.,53 Grewe and Brooks51 have capitalized on the hierarchical nature of the wavelet domain to further reduce the amount of data used in registration. Options exist to perform a hierarchical search or simply to perform registration inside one wavelet decomposition level. Other system options include specifying an initial registration estimate, if known, and choosing the wavelet decomposition level in which to perform or start registration. At higher decomposition levels, the amount of data is significantly reduced, but the resulting registration is only approximate. At lower decomposition levels, the amount of data is reduced to a lesser extent, but the resulting registration is more exact. This allows the user to trade accuracy against speed as necessary.

Figure 6.4 shows a block diagram of the system. It consists of a number of phases, beginning with the transformation of the range image data to the wavelet domain. Registration can be performed on only one decomposition level of this space to reduce registration complexity. Alternatively, a hierarchical registration can be performed across multiple levels. Features are extracted from a wavelet decomposition level as a function of a number of user-selected parameters that determine the amount of compression desired in that level. Matching features from the two range images are used to hypothesize transformations between the two images, which are then evaluated, and the “best” transformations are retained. This process is explained in the following paragraphs.

FIGURE 6.4

Block diagram of WaveReg system.

FIGURE 6.5

Features detected, approximate location indicated by white squares: (a) for wavelet level 2 and (b) for wavelet level 1.

First, a Daubechies-4 wavelet transform is applied to each range image. The wavelet data is compressed by thresholding the data to eliminate low magnitude wavelet coefficients. The wavelet transform produces a series of 3D edge maps at different resolutions. A maximal wavelet value indicates a relatively sharp change in depth.
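The transform-and-threshold step can be sketched with a single level of the 2D Haar wavelet, used here as a simpler stand-in for the Daubechies-4 wavelet named above. The sub-band names, the averaging normalization, and the threshold value are assumptions for illustration.

```python
def haar2d(img):
    """One level of an (averaging, unnormalized) 2D Haar transform.

    Returns (approx, horiz, vert, diag) sub-bands, each half the input size.
    """
    h, w = len(img) // 2, len(img[0]) // 2
    approx = [[0.0] * w for _ in range(h)]
    horiz = [[0.0] * w for _ in range(h)]
    vert = [[0.0] * w for _ in range(h)]
    diag = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            approx[i][j] = (a + b + c + d) / 4
            horiz[i][j] = (a + b - c - d) / 4  # top row vs bottom row: horizontal edges
            vert[i][j] = (a - b + c - d) / 4   # left column vs right column: vertical edges
            diag[i][j] = (a - b - c + d) / 4
    return approx, horiz, vert, diag


def threshold(band, t):
    """Zero out coefficients whose magnitude is below t (the compression step)."""
    return [[v if abs(v) >= t else 0.0 for v in row] for row in band]
```

For instance, a 4×4 range image whose depth jumps from 10 to 50 between columns 2 and 3 (counting from 0) yields vertical-detail coefficients of −20 in the second column of blocks. If the same jump fell between columns 1 and 2, every detail coefficient at this level would be zero, a small instance of the wavelet transform's sensitivity to translation.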

Features, special points of interest in the wavelet domain, are simply points of maximum value in the current wavelet decomposition level under examination. These points are selected so that no two points are close to each other. The minimum distance is scaled with the changing wavelet level under examination. Figure 6.5 shows features detected for different range scenes at different wavelet levels. Notice how these correspond to points of sharp change in depth.
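Feature selection of this kind can be sketched as greedy non-maximum suppression: coefficients are visited from largest to smallest magnitude, and one is accepted only if it lies at least a minimum distance from every feature already chosen. Ranking by absolute value (wavelet coefficients can be negative), the requested feature count, and passing in a distance already scaled for the current level are assumptions of this sketch; the function name is hypothetical.

```python
def select_features(band, count, min_dist):
    """Pick up to `count` largest-magnitude coefficients, no two closer than min_dist.

    Returns a list of (row, column, coefficient) triples.
    """
    ranked = sorted(
        ((abs(v), r, c) for r, row in enumerate(band) for c, v in enumerate(row) if v != 0),
        reverse=True,
    )
    chosen = []
    for _, r, c in ranked:
        # Accept only if far enough from every feature selected so far.
        if all((r - r2) ** 2 + (c - c2) ** 2 >= min_dist ** 2 for r2, c2, _ in chosen):
            chosen.append((r, c, band[r][c]))
            if len(chosen) == count:
                break
    return chosen
```

A caller could, for example, halve min_dist at each finer level so that the spacing of features tracks the resolution of the band being examined.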

Using a small number of feature points allows this approach to overcome the wavelet transform’s sensitivity to translation. Stone et al.42 have proposed another method for overcoming the sensitivity to translation. They have noted that the low-pass portions of the wavelet transform are less sensitive to translation and that coarse-to-fine registration of images using the wavelet transform should be robust.

The next stage involves hypothesizing correspondences between features extracted from the two unregistered range images. Each hypothesis represents a possible registration and is subsequently evaluated for its goodness. Registrations are compared and the best retained.

Hypothesis formation begins at a default (or user-selected) wavelet decomposition level, L. Registrations retained at this level are further “refined” at the next lower level, L − 1. This process continues until the lowest level in the wavelet space is reached.

For each hypothesis, the corresponding geometric transformation relating the matched features is calculated, and the remaining features from one range image are transformed into the other’s space. This greatly reduces the computation involved in hypothesis evaluation in comparison to those systems that perform nonfeature-based registration. Next, features that are not part of the hypothesis are compared. Two features match if they are close in value and location. Hypotheses are ranked by the number of features matched and how closely the features match. Examples are given in Figure 6.6.
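Hypothesis formation and evaluation can be sketched as follows, assuming a rigid transform hypothesized from two matched feature pairs and features stored as (x, y, value) triples. The tolerances and function names are hypothetical; the returned pair (number matched, summed distance) supports ranking by both the number of matches and how closely they match.

```python
import math


def hypothesize(p1, p2, q1, q2):
    """Rigid transform (theta, tx, ty) mapping image-1 features p1, p2 onto q1, q2."""
    theta = (math.atan2(q2[1] - q1[1], q2[0] - q1[0])
             - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    c, s = math.cos(theta), math.sin(theta)
    return theta, q1[0] - (c * p1[0] - s * p1[1]), q1[1] - (s * p1[0] + c * p1[1])


def evaluate(hyp, feats1, feats2, dist_tol=1.5, value_tol=0.2):
    """Return (number of image-1 features matched, summed match distance) for a hypothesis.

    A transformed feature matches if an image-2 feature lies within dist_tol and its
    value differs by at most value_tol times the larger of the two magnitudes.
    """
    theta, tx, ty = hyp
    c, s = math.cos(theta), math.sin(theta)
    matched, total = 0, 0.0
    for x, y, v in feats1:
        xt, yt = c * x - s * y + tx, s * x + c * y + ty
        dists = [math.hypot(xt - x2, yt - y2) for x2, y2, v2 in feats2
                 if abs(v - v2) <= value_tol * max(abs(v), abs(v2))]
        if dists and min(dists) <= dist_tol:
            matched += 1
            total += min(dists)
    return matched, total
```

Sorting hypotheses with a key such as (-matched, total) puts those with the most matches first and breaks ties by closeness.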

FIGURE 6.6

(a) Features extracted level 1, image 1, (b) features extracted level 1, image 2, (c) merged via averaging registered images, and (d) merged via subtraction of registered images.

6.6 Registration Assistance/Preprocessing

All the registration techniques discussed herein operate on the basic premise that the data sets being compared have identical content. The difficulty is that the content is often the same semantically but not numerically. For example, sensor readings taken at different times of the day can have lighting changes that significantly alter the underlying data values. Weather changes can also lead to significant differences between data sets. Registration of these kinds of data sets can be improved by first preprocessing the data. Figure 6.7 shows some preliminary work by Grewe54 on altering one image to appear more like another image in terms of photometric values. Such preprocessing may improve future registration systems.
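Grewe's preprocessing method is not detailed here, so the sketch below swaps in a much simpler global technique: a linear gain and offset that give image 1 the same mean and standard deviation as image 2. It assumes the photometric change is roughly uniform across the scene, and the function name is hypothetical.

```python
import statistics


def match_mean_std(img1, img2):
    """Linearly rescale img1 so its mean and standard deviation match those of img2."""
    v1 = [v for row in img1 for v in row]
    v2 = [v for row in img2 for v in row]
    m1, s1 = statistics.fmean(v1), statistics.pstdev(v1)
    m2, s2 = statistics.fmean(v2), statistics.pstdev(v2)
    gain = s2 / s1 if s1 else 1.0  # leave contrast unchanged for a constant image
    return [[(v - m1) * gain + m2 for v in row] for row in img1]
```

Localized changes such as shadows or weather effects would need a spatially varying correction rather than one gain and offset for the whole image.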

In some systems, we are dealing with very different kinds of data, and registration can require operator assistance. Grewe et al.22 have developed a system, named DiRecT, for aiding decisions and communication in disaster relief scenarios. DiRecT takes different kinds of data as input. Some inputs, such as images and maps, follow the spatial grid pattern of images. However, DiRecT also takes in intelligence data from the field: reports of victims, emergency situations, and facilities found by field workers. For these items, the operator must obtain from the reporter the placement of each item in the field, which is itself represented by a grid. Figure 6.8 shows how this can be done relative to a grid backdrop, which may be visually aided by the display of a corresponding map if one is available. The operator not only fixes the hypothesized location using a mouse but also gives a measure of certainty about that location. This uncertainty in the registration process is visually represented in different controllable ways using uncertainty visualization techniques, as shown in Figure 6.9.

FIGURE 6.7

(a) Image 1, (b) image 2, and (c) new image 1 corrected to appear more like image 2 in photometric content.

FIGURE 6.8

DiRecT GUI, which directs the operator to select the location in the current grid of an item (in this case, a victim or biotarget), along with a measure of certainty in this information. Different kinds of disaster items have different interfaces for registration in the scene. Victims are placed by location only; unlike other kinds of items, their orientation is not considered.

FIGURE 6.9

An image scene that can be altered in the DiRecT interface.

6.7 Registration Using Elastic Transformations

Many registration problems assume that the images undergo only rigid body transformations. Unfortunately, this assumption is rarely true. Most imaging technologies either rely on lenses that warp the data or have local imperfections that complicate the mapping process. This is why elastic transformations are often needed to register images accurately. The approach of Bentoutou et al., which we discuss in Section 6.8, is a good example of how elastic transforms can be integrated into a general registration approach.23 The rest of this section surveys how warping transforms are used to register images.

Kybic and Unser27 and Xia and Liu55 have used B-splines as basis functions for registering images. In both cases, gradient-based optimization (gradient descent or a variant of Newton’s algorithm) is used to optimize the mapping of the observed image to the reference image. The approach of Xia and Liu55 differs from that of Kybic and Unser27 in that the B-splines find a “super-curve,” which maps two affine transforms.
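The transformation model shared by these methods can be illustrated with a tensor-product cubic B-spline displacement field. The sketch below is an assumption-laden illustration, not the model of Kybic and Unser or of Xia and Liu. Displacement coefficients `cx` and `cy` are placed on a uniform knot grid with hypothetical spacing `h`. Only evaluation of the warp is shown; fitting the coefficients by gradient descent or a Newton-type method is omitted.

```python
def bspline3(t):
    """Centered cubic B-spline basis function, nonzero on (-2, 2)."""
    a = abs(t)
    if a < 1.0:
        return 2.0 / 3.0 - a * a + 0.5 * a ** 3
    if a < 2.0:
        return (2.0 - a) ** 3 / 6.0
    return 0.0


def warp_point(x, y, cx, cy, h):
    """Map (x, y) through a B-spline displacement field with knot spacing h.

    cx[k][l] and cy[k][l] are the x and y displacement coefficients of the
    knot at (k*h, l*h).
    """
    dx = dy = 0.0
    for k in range(len(cx)):
        bx = bspline3(x / h - k)
        if bx == 0.0:
            continue  # knot too far away to influence this point
        for l in range(len(cx[0])):
            w = bx * bspline3(y / h - l)
            dx += cx[k][l] * w
            dy += cy[k][l] * w
    return x + dx, y + dy
```

Because shifted cubic B-splines sum to one away from the grid border, setting every coefficient to the same value reproduces a pure translation. Varying the coefficients bends the mapping locally, since each basis function spans only four knot intervals.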

Caner et al. have registered images containing small displacements with warping transforms.56 To derive the elastic mapping, they have converted the 2D problem into a 1D problem by using a space-filling Hilbert curve to scan the image. Adaptive filtering techniques compensate for smooth changes in the image. This approach has been verified both by recovering a watermark from an intentionally distorted image and by compensating for lens distortions in photographs.
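The Hilbert-curve scan that turns the 2D problem into a 1D one can be sketched with the well-known iterative index-to-coordinate conversion. This illustration rests on several assumptions. The image is assumed to be square with a power-of-two side and stored as a list of rows. The adaptive filtering that Caner et al. apply to the resulting 1D sequences is not shown, and the function names are hypothetical.

```python
def _rotate(s, x, y, rx, ry):
    """Rotate/flip a quadrant of side s so the sub-curves join correctly."""
    if ry == 0:
        if rx == 1:
            x, y = s - 1 - x, s - 1 - y
        x, y = y, x
    return x, y


def hilbert_d2xy(n, d):
    """Map distance d along a Hilbert curve to (x, y) on an n-by-n grid (n a power of 2)."""
    x = y = 0
    s, t = 1, d
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        x, y = _rotate(s, x, y, rx, ry)
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan(image):
    """Flatten a square image (list of rows, side a power of 2) along a Hilbert curve."""
    n = len(image)
    return [image[y][x] for x, y in (hilbert_d2xy(n, d) for d in range(n * n))]
```

Consecutive samples along a Hilbert curve are always neighboring pixels. As a result, small, smooth 2D displacements tend to show up as small, smooth changes in the 1D sequence, which makes 1D adaptive filtering a reasonable tool here.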

Probably the most useful results in this domain can be found in Ref. 57, which compares nonrigid registration techniques on problems characterized by the number of control points and the variation in spacing between them. Thin-plate spline and multiquadric approaches work well when there are few control points and the spacing varies little. When the spacing varies greatly, piecewise linear interpolation works better. When the number of control points is large and their correspondences are inaccurate, a weighted mean approach is preferable.
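To make the weighted mean idea concrete, the sketch below maps a point by averaging the displacements of all control points, weighting each by a Gaussian of its distance to the point. The Gaussian weight, its width `sigma`, and the function name are assumptions chosen for illustration. Zagorchev and Goshtasby57 evaluate their own weight functions, which may differ.

```python
import math


def weighted_mean_transform(point, src_pts, dst_pts, sigma):
    """Map `point` by a Gaussian-weighted mean of control-point displacements.

    src_pts[i] in the observed image corresponds to dst_pts[i] in the
    reference image.
    """
    px, py = point
    wsum = dx = dy = 0.0
    for (sx, sy), (tx, ty) in zip(src_pts, dst_pts):
        w = math.exp(-((px - sx) ** 2 + (py - sy) ** 2) / (2.0 * sigma ** 2))
        wsum += w
        dx += w * (tx - sx)
        dy += w * (ty - sy)
    if wsum == 0.0:  # far from every control point: leave the point unmoved
        return px, py
    return px + dx / wsum, py + dy / wsum
```

When control points are dense, each mapped point averages many nearby displacements. This averaging is what lets the approach tolerate errors in individual correspondences.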

6.8 Multimodal Image Registration

Multimodal image registration is important for many applications. Real-time military and intelligence systems rely on a number of complementary sensing modes from a number of platforms. The range of medical imaging technologies keeps growing, which leads to a growing need for registration. Similarly, more and more satellite-based imaging services are available, using an increasing number of technologies and data frequencies. We have conducted a survey of recent radiology research literature and found over 100 publications a year on image registration; hence, we limit our discussion here to the most important and representative ideas. This problem is challenging because pixel values in the images being registered may have radically different meanings.

For registration of images from different kinds of sensors and different modalities, careful attention must be paid to finding features or spaces that are invariant to differences between the modalities.18,58 Matching criteria that measure invariant similarities are sought.18,59 Pluim et al.59 have discussed maximizing mutual information, a measure of the statistical dependence between two images, as a registration criterion. Their work uses mutual information, which can work well for images from different modalities. It also adds a spatial component to matching by including image gradient information, which in general is more invariant to differences in modalities than raw data are.

Keller and Averbuch58 have discussed some of the problems of using mutual information, including the problem of finding local, rather than global, maxima. They have suggested that as images become lower in resolution, contain limited information, or overlap less, they also become less statistically dependent. Hence, the maximization of mutual information becomes ill-posed. To reduce some of these effects, Keller and Averbuch58 have developed a matching criterion that does not use the intensities of both images at the same time. First, they examine both images (I1, I2) and, using metrics related to sharpness, determine which of the two is of better quality (in focus, not blurred); call it I2. The pixels at the locations of higher gradient values in the poorer-quality image, I1, are recorded. These pixels are then iteratively aligned to the better-quality image, I2, using only the pixel values of I2. Specifically, the method iteratively seeks to maximize the magnitude of the intensity gradient of the matched pixels in I2. Thus, the intensity values of I1 and I2 are never compared directly, which makes the criterion invariant to multimodal differences.
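Mutual information can be estimated from the joint histogram of corresponding pixel values as I(A;B) = H(A) + H(B) - H(A,B). The sketch below assumes two equally sized images given as flat lists of discrete gray levels. It omits the gradient term of Pluim et al. and the implicit similarity of Keller and Averbuch, and the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def mutual_information(a, b):
    """I(A;B) = H(A) + H(B) - H(A,B), from paired pixel values of two images."""
    if len(a) != len(b):
        raise ValueError("images must contain the same number of pixels")
    joint = list(zip(a, b))  # joint histogram is built from co-occurring pairs
    return entropy(a) + entropy(b) - entropy(joint)
```

The estimate depends only on which gray levels co-occur, not on the values themselves. Any one-to-one remapping of intensities in one image therefore leaves it unchanged, which is the property that makes mutual information attractive across modalities.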

One particularly important problem is mapping 2D images of varying modalities to 3D computed tomography data sets. Penney et al.26 have matched (2D) fluoroscopy images to (3D) computed tomography images using translation, rotation, 3D perspective, and position on film. They have considered the following similarity measures:

Normalized cross-correlation

Entropy of the difference image

Mutual information

Gradient correlation

Pattern intensity

Gradient difference

They have compared their results using synthetic images derived from existing images. Ground truth has been derived by inserting fiducial artifacts in the images. The noise factors they have considered include the addition of soft tissue and stents. Their search algorithm kept the optimal perspective known from the ground truth, introduced small errors in the other parameters, and then used a greedy search that adjusted one parameter at a time. Their results found mutual information to be the least accurate measure. Correlation measures were sensitive to thin line structures. Entropy measures were not sensitive to thin lines but failed when slowly varying changes were present. Pattern intensity and gradient difference were found to be the most applicable to medical registration problems.
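Two of the simpler measures compared by Penney et al.26 can be sketched as follows. The images are assumed to be flat lists of equal length. The difference image is assumed to be formed as `a - s*b` with a hypothetical intensity scale `s`, and its entropy is estimated from a fixed number of histogram bins (`bins`, also an assumption). Gradient correlation, pattern intensity, and gradient difference are not shown.

```python
import math
from collections import Counter


def normalized_cross_correlation(a, b):
    """Pearson correlation of two equally sized images (1 = perfect linear match)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    if den == 0.0:
        raise ValueError("constant image: correlation undefined")
    return num / den


def difference_entropy(a, b, s=1.0, bins=16):
    """Entropy (bits) of the histogram of the difference image a - s*b."""
    diff = [x - s * y for x, y in zip(a, b)]
    lo, hi = min(diff), max(diff)
    if hi == lo:
        return 0.0  # constant difference image: a single histogram bin
    width = (hi - lo) / bins
    counts = Counter(min(int((d - lo) / width), bins - 1) for d in diff)
    n = len(diff)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

Normalized cross-correlation is maximized at correct registration, whereas the entropy of the difference image is minimized, because a well-aligned difference image tends to contain fewer distinct values.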

Kybic and Unser27 have used B-spline basis functions to deform 2D images so as to best match a 3D reference image as determined by a sum-square of differences metric. In their work, splines have been shown to be computationally more efficient than wavelet basis functions. Because warping could make almost any image fit another one, they assume some operator intervention. The optimization algorithms they use include gradient descent and a variation of Newton’s algorithm. This approach was tested on a number of real and synthetic data sets.
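The basic idea of minimizing a sum-of-squared-differences criterion by gradient descent can be shown in one dimension. The sketch below is not Kybic and Unser's method. It estimates a single translation parameter between two 1D signals, uses linear interpolation instead of B-splines, and approximates the gradient by central finite differences. The step size, iteration count, and function names are assumptions.

```python
def sample(signal, x):
    """Linearly interpolate a 1D signal at real position x, clamping at the ends."""
    x = min(max(x, 0.0), len(signal) - 1.0)
    i = min(int(x), len(signal) - 2)
    t = x - i
    return (1.0 - t) * signal[i] + t * signal[i + 1]


def ssd(reference, observed, shift):
    """Sum of squared differences between reference[i] and observed at i + shift."""
    return sum((sample(observed, i + shift) - r) ** 2 for i, r in enumerate(reference))


def register_shift(reference, observed, shift=0.0, step=0.05, iters=1000, eps=1e-3):
    """Estimate a 1D translation by gradient descent on the SSD criterion."""
    for _ in range(iters):
        # Central finite-difference approximation of dSSD/dshift.
        grad = (ssd(reference, observed, shift + eps)
                - ssd(reference, observed, shift - eps)) / (2.0 * eps)
        shift -= step * grad
    return shift
```

Like any gradient method, this converges only when the starting shift lies within the basin of the correct minimum. Multiresolution schemes are commonly used to widen that basin.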

Yao and Goh24 address another medical imaging problem: mapping between sensing modes of the same dimensionality, such as ultrasound and magnetic resonance. There is no clear correspondence between the intensity values of the two sensing modes, and it is unlikely that all the control points in one mode exist in the other. To counter this, a “pixel migration” approach is used. The control points chosen are gradient maxima, and the fitness function is the sum of the squared gradient magnitude (SSG). Unfortunately, the SSG maximum is not necessarily the best solution. To address this, they partition the search space into feasible and infeasible regions and restrict the search to the feasible regions. A genetic algorithm is then used to find the optimal match within the feasible regions. Electrooptical, infrared, and synthetic aperture radar (SAR) images are matched in their examples.

Other multimodal registration problems have been addressed for remote sensing applications. Inglada and Giros34 have performed subpixel-accurate registration of images from different sensing modalities using rigid body transformations. This is difficult because the different sensing modes have radically different radiometry values, so correlation metrics are not applicable. The authors34 have presented a number of similarity measures that are very useful for this problem, including the following:

Normalized standard deviation—the variance of the differences between pixel values of two images will be minimized when the two are registered correctly

Correlation ratio—which is similar conceptually to normalized standard deviation but provides worse results

A normalized version of the χ2-test—measures the statistical dependence between two images (should be maximal when registration is correct)

The Kolmogorov distance of the relative entropy between the two images

The mutual information content of the two images

Many additional entropy-related metrics

A cluster rewards algorithm that measures the dispersion of the marginal histogram of the registered image

They have tested these methods by registering optical and SAR remote sensing images.
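As an example of one of these measures, the correlation ratio of B given A measures how much of the variance of one image's pixel values is explained by the value of the corresponding pixel in the other image. The sketch below uses the textbook definition on flat lists of pixel values. It is an illustration only; the normalization used by Inglada and Giros34 may differ, and the function name is hypothetical.

```python
from collections import defaultdict


def correlation_ratio(a, b):
    """eta^2(B|A): share of the variance of B explained by the value of A, in [0, 1]."""
    n = len(b)
    mean_b = sum(b) / n
    total = sum((y - mean_b) ** 2 for y in b)
    if total == 0:
        raise ValueError("B is constant: correlation ratio undefined")
    groups = defaultdict(list)  # pixel values of B grouped by the value of A
    for x, y in zip(a, b):
        groups[x].append(y)
    within = 0.0
    for ys in groups.values():
        m = sum(ys) / len(ys)
        within += sum((y - m) ** 2 for y in ys)
    return 1.0 - within / total
```

A value near 1 means B is close to a function of A, even a nonmonotonic one. This is why measures of this kind can succeed where linear correlation fails on optical and SAR image pairs.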

In the study by Bentoutou et al.,23 SAR and SPOT (Système Pour l’Observation de la Terre, a French remote sensing satellite) optical remote sensing images have been registered. They have considered translation, rotation, and scaling (i.e., rigid body) transformations. Their algorithm proceeds as follows:

Control points are found by edge detection, retaining points with large gradients. To reduce the number of control points, a strength measure is computed by finding local maxima of a function of the determinant of the autocorrelation function.

Central moments of the set of control functions are used to derive image templates that are made up of invariant features. Matches are found from the minimum distance between control points in the invariant space.

An inverse mapping is found for the least-squares affine transform that best fits the two pairs of strongest control points.

An interpolation algorithm finally warps the image to perform subpixel registration.
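The transform-estimation step can be illustrated with a general least-squares affine fit. This sketch is an assumption rather than the exact procedure of Bentoutou et al. It fits all six affine parameters to N ≥ 3 matched, non-collinear control points by solving the normal equations, and the function names are hypothetical.

```python
def _solve3(m, r):
    """Solve the 3x3 system m @ v = r by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rv] for row, rv in zip(m, r)]  # augmented copy; m is not modified
    for col in range(3):
        piv = max(range(col, 3), key=lambda i: abs(a[i][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("degenerate control points (e.g., collinear)")
        a[col], a[piv] = a[piv], a[col]
        for i in range(col + 1, 3):
            f = a[i][col] / a[col][col]
            for j in range(col, 4):
                a[i][j] -= f * a[col][j]
    v = [0.0] * 3
    for i in (2, 1, 0):  # back substitution
        v[i] = (a[i][3] - sum(a[i][j] * v[j] for j in range(i + 1, 3))) / a[i][i]
    return v


def fit_affine(src, dst):
    """Least-squares (a, b, tx, c, d, ty) with x' = a*x + b*y + tx, y' = c*x + d*y + ty."""
    m = [[0.0] * 3 for _ in range(3)]
    rx, ry = [0.0] * 3, [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        p = (x, y, 1.0)
        for i in range(3):
            rx[i] += p[i] * u
            ry[i] += p[i] * v
            for j in range(3):
                m[i][j] += p[i] * p[j]
    a, b, tx = _solve3(m, rx)
    c, d, ty = _solve3(m, ry)
    return a, b, tx, c, d, ty
```

Calling `fit_affine(dst, src)` with the arguments swapped estimates the inverse mapping, from reference coordinates back to the sensed image. That is the direction an interpolation step typically needs when resampling.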

The approaches presented are typical of the state of this field, and provide insights that can be used in many related applications.

Related to registration preprocessing is the topic of sensor calibration. The meaning of calibration changes with the sensor and even with its use in an application. In some ways, calibration is similar to registration, but its goal is to relate a sensor to some known ground truth rather than to other sensors. Whitehouse and Culler60 have discussed the problems of calibrating multiple sensors. They have specifically addressed the application of target localization but have also discussed its use in other multisensor network applications. The calibration problem is framed as a general parameter estimation problem. However, rather than calibrating each sensor independently, they choose for each sensor the parameters that optimize the response of the entire sensor network. Although this is not necessarily optimal for each individual sensor, it eliminates the work of observing each sensor, provided a network response can be formulated and observed. It also reduces the task of collecting ground truth for each sensor to that of collecting ground truth for the network response. Interestingly, in this work,60 and specifically for their application of simple localization, they have reduced individual sensor calibration and multisensor registration to a single problem of “network calibration.”

6.9 Theoretical Bounds

In practice, the images being registered are always less than ideal. Data are lost in the sampling process, and the presence of noise further obscures the object being observed. Because noise follows statistical laws, it is natural to phrase the image registration problem as a statistical decision problem. For example, the registration fitness functions given in Section 6.4 (see Equations 6.1, 6.2 and 6.3) include random variables and are suited to statistical analysis. Recent research has established theoretical bounds on image registration using both confidence intervals (CIs) and the Cramér–Rao lower bound (CRLB).

Two recent approaches use CIs to determine the theoretical bounds of their registration approach. Simonson et al.61 have used statistical hypothesis testing for determining when to accept or reject candidate registrations. They try to find translations that match pairs of “chip” regions and calculate joint confidence regions for these correspondences. If the statistics do not adequately support the hypothesis, the registration is rejected. The statistical foundation of their approach helps make it noise tolerant. Wang et al. have used the residual sum of squares statistic to determine CIs for their registration results.62 This information is valuable because it provides the application with not only an answer but also a metric for how accurate the result is likely to be.

A larger body of research has found the CRLB for a number of registration approaches. A CI is constructed so that it contains the true answer with a given probability over repeated data samples. The CRLB, in contrast, is a lower bound on the variance of any unbiased estimator, and thus expresses the best performance an unbiased method can achieve for a specific problem. This information is useful to researchers because it tells them objectively how close their approach is to the best possible performance.

Li and Leung63 have registered images using a fitness function containing both control points and intensity values. They have searched for the best affine match between the observed and the reference images by varying one parameter at a time while keeping all the others fixed. This process iterates until a good solution is found. The CRLB they have derived for this process shows that the combination of control points and intensity values can perform better than either set of features alone.
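The strategy of varying one parameter at a time while holding the others fixed can be sketched as a simple coordinate search. This is a generic illustration under assumptions, not the maximum likelihood estimator of Li and Leung. Here `cost` stands for any hypothetical registration cost to be minimized (for example, a negative log-likelihood). The initial step sizes, shrink factor, and stopping rule are arbitrary choices.

```python
def coordinate_search(cost, params, steps, min_step=1e-3, shrink=0.5, max_rounds=1000):
    """Minimize cost(params) by adjusting one parameter at a time, others held fixed."""
    params, steps = list(params), list(steps)
    best = cost(params)
    for _ in range(max_rounds):
        improved = False
        for k in range(len(params)):
            for direction in (1.0, -1.0):
                trial = params[:]
                trial[k] += direction * steps[k]
                c = cost(trial)
                if c < best:  # keep the move and go on to the next parameter
                    params, best, improved = trial, c, True
                    break
        if not improved:
            steps = [s * shrink for s in steps]  # refine once no single move helps
            if max(steps) < min_step:
                break
    return params, best
```

The method needs no derivatives. Like any local search, however, it can stall in a local minimum, so its result depends on the starting point.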

Robinson and Milanfar64 have taken a similar approach to finding the theoretical bounds of registration. They look at translations of images corrupted with Gaussian white noise. The CRLB of this process provides important insights. For periodic signals, registration quality is independent of the translation distance. It depends only on the image contents. However, translation registration accuracy depends on the angle of translation. Unfortunately, unbiased estimators do not generally exist for image registration. The CRLB for gradient-based translation algorithms is given in Ref. 64.
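To show how translation accuracy depends on image content and direction, the sketch below computes a CRLB for 2D translation from image gradients. It assumes a simplified model that may differ from Robinson and Milanfar's. The reference image is assumed known exactly, and the observed image is a shifted copy corrupted by i.i.d. Gaussian noise with standard deviation `sigma`. Gradients are approximated by central differences. Under that model the Fisher information is J = (1/σ²) Σ ∇f ∇fᵀ, and the bound is J⁻¹. The function names are hypothetical.

```python
import math


def translation_crlb(image, sigma):
    """CRLB (2x2 covariance bound) for an unbiased estimate of a 2D shift.

    image: list of rows, known exactly; the observation is assumed to be a
    shifted copy plus i.i.d. Gaussian noise of standard deviation sigma.
    """
    sxx = sxy = syy = 0.0
    h, w = len(image), len(image[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (image[y][x + 1] - image[y][x - 1]) / 2.0  # central differences
            gy = (image[y + 1][x] - image[y - 1][x]) / 2.0
            sxx += gx * gx
            sxy += gx * gy
            syy += gy * gy
    # J = (1 / sigma^2) * [[sxx, sxy], [sxy, syy]]; return its inverse.
    det = sxx * syy - sxy * sxy
    if det <= 0.0:
        raise ValueError("singular Fisher information: shift not identifiable")
    k = sigma ** 2 / det
    return [[k * syy, -k * sxy], [-k * sxy, k * sxx]]


def directional_bound(crlb, angle):
    """Variance bound for the shift component along direction `angle` (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return c * c * crlb[0][0] + 2.0 * c * s * crlb[0][1] + s * s * crlb[1][1]
```

An image whose gradients are mostly horizontal pins down horizontal shifts better than vertical ones, which is the angular dependence noted above. In this simplified model, the shift itself does not appear in the bound.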

Yetik and Nehorai65 have carried this idea further by deriving the CRLB for 2D affine transforms, 3D affine transforms, and warping transformations using control points. For translations, accuracy depends only on the number of points used, not on their placement or on the amount of translation. These results are consistent with those of Robinson and Milanfar.64 For rotations, it is best to use points far away from the rotation axis. Affine registration accuracy depends on the noise and on the control points but is independent of the transformation parameters. For warping transformations, accuracy depends on the warping parameters, and the bounds grow as the amount of warping increases.
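The translation and rotation results can be checked with a short derivation. This uses a simplified model assumed here for illustration, which may differ from Yetik and Nehorai's: N known reference control points x_i, observed points y_i, and i.i.d. isotropic Gaussian noise of variance σ² on the observed points only.

```latex
% Assumed model: known reference points x_i, observed points y_i, noise on y_i only.
\mathbf{y}_i = \mathbf{x}_i + \mathbf{t} + \mathbf{n}_i,\qquad
\mathbf{n}_i \sim \mathcal{N}(\mathbf{0},\,\sigma^2\mathbf{I}_2),\qquad i = 1,\dots,N

\ln p(\mathbf{y}_1,\dots,\mathbf{y}_N;\mathbf{t})
  = \text{const} - \frac{1}{2\sigma^2}\sum_{i=1}^{N}\lVert\mathbf{y}_i - \mathbf{x}_i - \mathbf{t}\rVert^2

\mathbf{J}(\mathbf{t})
  = -\mathbb{E}\!\left[\frac{\partial^2 \ln p}{\partial\mathbf{t}\,\partial\mathbf{t}^{\mathsf{T}}}\right]
  = \frac{N}{\sigma^2}\,\mathbf{I}_2
\quad\Longrightarrow\quad
\operatorname{Cov}(\hat{\mathbf{t}}) \succeq \frac{\sigma^2}{N}\,\mathbf{I}_2

% Pure rotation about the origin, y_i = R(\theta) x_i + n_i:
% \lVert \partial (R(\theta)\mathbf{x}_i) / \partial\theta \rVert = \lVert\mathbf{x}_i\rVert, so
J(\theta) = \frac{1}{\sigma^2}\sum_{i=1}^{N}\lVert\mathbf{x}_i\rVert^2
\quad\Longrightarrow\quad
\operatorname{Var}(\hat{\theta}) \ge \frac{\sigma^2}{\sum_{i=1}^{N}\lVert\mathbf{x}_i\rVert^2}
```

The translation bound contains neither the point positions nor t, so under this model only N and σ matter. The rotation bound shrinks as the control points move farther from the center of rotation.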

6.10 Conclusion

Addressing the data registration problem is an essential preprocessing step in multisensor fusion. Data from multiple sensors must be transformed onto a common coordinate system. This chapter has provided a survey of existing methods, including methods for finding registrations and applying registrations to data after they have been found. In addition, example approaches have been described in detail.

Brooks and Iyengar3 and Chen et al.48 have detailed meta-heuristic-based optimization methods that can be applied to raw data. Of these methods, TRUST, a new meta-heuristic from Oak Ridge National Laboratory, is the most promising. Fitness functions have been given for readings corrupted with Gaussian, uniform, and salt-and-pepper noise. Because these methods use raw data, they are computationally intensive.

Grewe and Brooks51 have presented a wavelet-based approach to registering range data. Features are extracted from the wavelet domain. A feedback approach is then applied to search for good registrations. Use of the wavelet domain compresses the amount of data that must be considered, providing increased computational efficiency. Drawbacks to using feature-based methods have also been discussed in this chapter.

Acknowledgments

The study has been sponsored by the Defense Advanced Research Projects Agency (DARPA) and Air Force Research Laboratory, Air Force Materiel Command, USAF, under agreement number F30602-99-2-0520 (Reactive Sensor Network). The U.S. Government is authorized to reproduce and distribute reprints for governmental purposes notwithstanding any copyright annotation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the DARPA, the Air Force Research Laboratory, or the U.S. Government.

References

1. J. P. W. Pluim and J. M. Fitzpatrick, “Image registration” introduction to special issue, IEEE Transactions on Medical Imaging, 22(11), 1341–1342, 2003.

2. D. C. Montgomery, Design and Analysis of Experiments, 4th Edition, Wiley, New York, NY, 1997.

3. R. R. Brooks and S. S. Iyengar, Multi-Sensor Fusion: Fundamentals and Applications with Software, Prentice Hall PTR, Upper Saddle River, NJ, 1998.

4. B. V. Dasarathy, Decision Fusion, IEEE Computer Society Press, Los Alamitos, CA, 1994.

5. D. L. Hall, Mathematical Techniques in Multi-sensor Fusion, Artech House, Norwood, MA, 1992.

6. R. Blum and Z. Liu, Multi-Sensor Image Fusion and Its Applications, CRC Press, Boca Raton, FL, 2006.

7. R. C. Gonzalez and R. E. Woods, Digital Image Processing, Addison-Wesley, Reading, MA, 302, 1993.

8. L. G. Brown, A survey of image registration techniques, ACM Computing Surveys, 24(4), 325–376, 1992.

9. M. A. Oghabian and A. Todd-Pokropek, Registration of brain images by a multiresolution sequential method, in Information Processing in Medical Imaging, Springer, Berlin, pp. 165–174, 1991.

10. V. R. Mandava and J. M. Fitzpatrick, Adaptive search space scaling in digital image registration, IEEE Transactions on Medical Imaging, 8(3), 251–262, 1989.

11. C. A. Pelizzari, K. K. Tan, and D. N. Levin, Interactive 3D patient-image registration, Information Processing in Medical Imaging, Springer, Berlin, pp. 132–141, 1991.

12. P. Van Wie and M. Stein, A LANDSAT digital image rectification system, IEEE Transactions on Geoscience Electronics, GE-15, 130–137, 1977.

13. G. Stockman, S. Kopstein, and S. Benett, Matching images to models for registration and object detection via clustering, IEEE Transactions on Pattern Analysis and Machine Intelligence, 4(3), 229–241, 1982.

14. J. R. Jain and A. K. Jain, Displacement measurement and its application in interframe image coding, IEEE Transactions on Communications, COM-29(12), 1799–1808, 1981.

15. A. Goshtasby, Piecewise linear mapping functions for image registration, Pattern Recognition, 19(6), 459–466, 1986.

16. G. Wolberg, Digital Image Warping, IEEE Computer Society Press, Los Alamitos, CA, 1990.

17. R. Y. Wong and E. L. Hall, Performance comparison of scene matching techniques, IEEE Transactions on Pattern Analysis and Machine Intelligence, PAMI-1(3), 325–330, 1979.

18. M. Irani and P. Anandan, Robust multisensor image alignment, in IEEE Proceedings of International Conference on Computer Vision, 959–966, 1998.

19. A. Mitiche and J. K. Aggarwal, Contour registration by shape specific points for shape matching comparison, Computer Vision, Graphics, and Image Processing, 22, 396–408, 1983.

20. V. Petrushin, G. Wei, R. Ghani, and A. Gershman, Multiple sensor integration for indoor surveillance, in Proceedings of the 6th International Workshop on Multimedia Data Mining: Mining Integrated Media and Complex Data MDM’05, 53–60, 2005.

21. M. Michel and V. Stanford, Synchronizing multimodal data streams acquired using commodity hardware, in Proceedings of the 4th ACM International Workshop on Video Surveillance and Sensor Networks VSSN’06, 3–8, 2006.

22. L. Grewe, S. Krishnagiri, and J. Cristobal, DiRecT: A disaster recovery system, Signal Processing, Sensor Fusion, and Target Recognition XIII, Proceedings of SPIE, Vol. 5429, 480–489, 2004.

23. Y. Bentoutou, N. Taleb, K. Kpalma, and J. Ronsin, An automatic image registration for applications in remote sensing, IEEE Transactions on Geoscience and Remote Sensing, 43(9), 2127–2137, 2005.

24. J. Yao and K. L. Goh, A refined algorithm for multisensor image registration based on pixel migration, IEEE Transactions on Image Processing, 15(7), 1839–1847, 2006.

25. J. Han and B. Bhanu, Hierarchical multi-sensor image registration using evolutionary computation, Proceedings of the 2005 Conference on Genetic and Evolutionary Computation, 2045–2052, 2005.

26. G. P. Penney, J. Weese, J. A. Little, P. Desmedt, D. L. G. Hill, and D. J. Hawkes, A comparison of similarity measures for use in 2D–3D medical image registration, IEEE Transactions on Medical Imaging, 17(4), 586–595, 1998.

27. J. Kybic and M. Unser, Fast parametric elastic image registration, IEEE Transactions on Image Processing, 12(11), 1427–1442, 2003.

28. J. Andrus and C. Campbell, Digital image registration using boundary maps, IEEE Transactions on Computers, 19, 935–940, 1975.

29. D. Barnea and H. Silverman, A class of algorithms for fast digital image registration, IEEE Transactions on Computers, C-21(2), 179–186, 1972.

30. A. Goshtasby, Image registration by local approximation methods, Image and Vision Computing, 6(4), 255–261, 1988.

31. B. K. P. Horn and B. L. Bachman, Using synthetic images to register real images with surface models, Communications of the ACM, 21, 914–924, 1978.

32. H. G. Barrow, J. M. Tenenbaum, R. C. Bolles, and H. C. Wolf, Parametric correspondence and chamfer matching: Two new techniques for image matching, in Proceedings of International Joint Conference on Artificial Intelligence, 659–663, 1977.

33. A. Pinz, M. Prontl, and H. Ganster, Affine matching of intermediate symbolic presentations, in CAIP’95 Proceedings LNCS 970, Hlavac and Sara (Eds.), Springer-Verlag, New York, NY, pp. 359–367, 1995.

34. J. Inglada and A. Giros, On the possibility of automatic multisensor image registration, IEEE Transactions on Geoscience and Remote Sensing, 42(10), 2104–2120, 2004.

35. X. Dai and S. Khorram, A feature-based image registration algorithm using improved chain-code representation combined with invariant moments, IEEE Transactions on Geoscience and Remote Sensing, 37(5), 2351–2362, 1999.

36. Z. Yang and F. S. Cohen, Cross-weighted moments and affine invariants for image registration and matching, IEEE Transactions on Pattern Analysis and Machine Intelligence, 21(8), 804–814, 1999.

37. R. Chellappa, Q. Zheng, P. Burlina, C. Shekhar, and K. B. Eom, On the positioning of multisensory imagery for exploitation and target recognition, Proceedings of IEEE, 85(1), 120–138, 1997.

38. Y. Zhou, H. Leung, and E. Bosse, Registration of mobile sensors using the parallelized extended Kalman filter, Optical Engineering, 36(3), 780–788, 1997.

39. G. K. Matsopoulos, N. A. Mouravliansky, K. K. Delibasis, and K. S. Nikita, Automatic retinal image registration scheme using global optimization techniques, IEEE Transactions on Information Technology in Biomedicine, 3(1), 47–68, 1999.

40. L. Silva, O. R. P. Bellon, and K. L. Boyer, Precision range image registration using a robust surface interpenetration measure and enhanced genetic algorithms, IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(5), 762–776, 2005.

41. S. Zokai and G. Wolberg, Image registration using log-polar mappings for recovery of large-scale similarity and projective transformations, IEEE Transactions on Image Processing, 14(10), 1422–1434, 2005.

42. H. S. Stone, J. Lemoigne, and M. McGuire, The translation sensitivity of wavelet-based registration, IEEE Transactions on Pattern Analysis and Machine Intelligence, 21(10), 1074–1081, 1999.

43. A. Goshtasby, Piecewise cubic mapping functions for image registration, Pattern Recognition, 20(5), 525–535, 1987.

44. X. Wang, D. Feng, and H. Hong, Novel elastic registration for 2D medical and gel protein images, in Proceedings of the First Asia-Pacific Bioinformatics Conference on Bioinformatics 2003, Vol. 19, 223–22, 2003.

45. H. S. Baird, Model-Based Image Matching Using Location, MIT Press, Cambridge, MA, 1985.

46. L. S. Davis, Shape matching using relaxation techniques, IEEE Transactions on Pattern Analysis and Machine Intelligence, 1(1), 60–72, 1979.

47. R. R. Brooks and S. S. Iyengar, Self-calibration of a noisy multiple sensor system with genetic algorithms, self-calibrated intelligent optical sensors and systems, SPIE, Bellingham, WA, in Proceedings of SPIE International Symposium Intelligent Systems and Advanced Manufacturing, 1995.

48. Y. Chen, R. R. Brooks, S. S. Iyengar, N. S. V. Rao, and J. Barhen, Efficient global optimization for image registration, IEEE Transactions on Knowledge and Data Engineering, 14(1), 79–92, 2002.

49. F. S. Hill, Computer Graphics, Prentice Hall, Englewood Cliffs, NJ, 1990.

50. R. R. Brooks, Robust Sensor Fusion Algorithms: Calibration and Cost Minimization, PhD dissertation in Computer Science, Louisiana State University, Baton Rouge, LA, 1996.

51. L. Grewe and R. R. Brooks, Efficient registration in the compressed domain, in Wavelet Applications VI, SPIE Proceedings, H. Szu (Ed.), Vol. 3723, AeroSense 1999.

52. R. Sharman, J. M. Tyler, and O. S. Pianykh, Wavelet-based registration and compression of sets of images, SPIE Proceedings, Vol. 3078, 497–505, 1997.

53. R. A. DeVore, W. Shao, J. F. Pierce, E. Kaymaz, B. T. Lerner, and W. J. Campbell, Using nonlinear wavelet compression to enhance image registration, SPIE Proceedings, Vol. 3078, 539–551, 1997.

54. L. Grewe, Image Correction, Technical Report, CSUMB, 2000.

55. M. Xia and B. Liu, Image Registration by “Super-Curves”, IEEE Transactions on Image Processing, 13(5), 720–732, 2004.

56. G. Caner, A. M. Tekalp, G. Sharma, and W. Heinzelman, Local image registration by adaptive filtering, IEEE Transactions on Image Processing, 15(10), 3053–3065, 2006.

57. L. Zagorchev and A. Goshtasby, A comparative study of transformation functions for nonrigid image registration, IEEE Transactions on Image Processing, 15(3), 529–538, 2006.

58. Y. Keller and A. Averbuch, Multisensor image registration via implicit similarity, IEEE Transactions on Pattern Analysis and Machine Intelligence, 28, 794–801, 2006.

59. J. Pluim, J. Maintz, and M. Viergever, Image registration by maximization of combined mutual information and gradient information, IEEE Transactions on Medical Imaging, 19(8), 809–814, 2000.

60. K. Whitehouse and D. Culler, Macro-calibration in sensor/actuator networks, Mobile Networks and Applications, 8, 463–472, 2003.

61. K. M. Simonson, S. M. Drescher Jr., and F. R. Tanner, A statistics-based approach to binary image registration with uncertainty analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(1), 112–125, 2007.

62. H. S. Wang, D. Feng, E. Yeh, and S. C. Huang, Objective assessment of image registration results using statistical confidence intervals, IEEE Transactions on Nuclear Science, 48(1), 106–110, 2001.

63. W. Li and H. Leung, A maximum likelihood approach for image registration using control point and intensity, IEEE Transactions on Image Processing, 13(8), 1115–1127, 2004.

64. D. Robinson and P. Milanfar, Fundamental performance limits in image registration, IEEE Transactions on Image Processing, 13(9), 1185–1199, 2004.

65. I. S. Yetik and A. Nehorai, Performance bounds on image registration, IEEE Transactions on Signal Processing, 54(5), 1737–1749, 2006.

66. R. R. Brooks, S. S. Iyengar, and J. Chen, Automatic correlation and calibration of noisy sensor readings using elite genetic algorithms, Artificial Intelligence, 84(1), 339–354, 1996.

67. X. Dai and S. Khorram, A feature-based image registration algorithm using improved chain-code representation combined with invariant moments, IEEE Transactions on Geoscience and Remote Sensing, 37(5), 2351–2362, 1999.