19

Introduction to Level 5 Fusion: The Role of the User

Erik Blasch

CONTENTS

19.2 User Refinement in Information Fusion Design

19.2.2 Prioritization of Needs

19.3 Data Fusion Information Group Model

19.3.2 User Interaction with Design

19.4.3 User Interaction with Estimation

19.5.1 User Models in Situational Awareness

19.5.4 Cognitive Processing in Dynamic Decision Making

19.5.5 Cognitive Work Analysis/Task Analysis

19.5.6 Display/Interface Design

19.6 Example: Assisted Target Identification through User-Algorithm Fusion

19.1 Introduction

Before automated information fusion (IF) can be qualified as a technical solution to a problem, we must consider a defined need, function, and capability. The need typically involves data gathering and exploitation to assist with a current workload, such as a physician desiring assistance in detecting a tumor, an economist looking for inflationary trends, or a military commander trying to find, locate, and track targets. “Information needs” can be designated only by the users who will employ the resulting information fusion system (IFS).1 Once the needs have been defined, decomposing them into actionable functions requires data availability and observability. Finally, transforming the data into information that satisfies a need must become a realizable capability. All too often, IF engineers gather user information needs and start building a system without developing the interaction, the guidance, or the planned role of the users.

Level 5 fusion, preliminarily labeled “user refinement,”2 emphasizes developing the IF process model by utilizing the cognitive fusion capability of the user (1) to assist in the design and analysis of emerging concepts; (2) to play a dedicated role in control and management; and (3) to understand the supporting activities needed to plan, organize, and coordinate sensors, platforms, and computing resources for the IFS. We will explore three cases: the prototype user, the operational user, and the business user. “Prototype users” must be involved throughout the entire design and development process to ensure that the system meets specifications. “Operational users” focus on behavioral aspects, including neglect, consult, rely, and interact.3 Operational users often lack trust in the fusion system, and doubt its credibility, because they do not understand the reasoning behind it. To facilitate design acceptance, the user must be actively involved in the control and management of the data fusion system (DFS). “Business users” focus on refinement functions for planning, organizing, coordinating, directing, and controlling resources, approval processes, and deployment requirements.

This chapter emphasizes the roles that users play in IF design. To design systems that will be accepted, the prototype, operational, and business users must work hand-in-hand with the engineers to fully understand what IFSs can do (i.e., augment the user’s task functions) and cannot do (i.e., make decisions without supporting data).4 Together, engineers and users can design effective and efficient systems that have a defined function. For an example of a poorly defined system, one need only look at marketing brochures claiming to provide full-spectrum situational awareness of a city. Engineers read the statement and fully understand that achieving full situational awareness (SA) (such as tracking and identifying all objects in the city) requires that the entire city be observable at all times, that salient features be defined for distinguishing all target types, and that redundant sensors and platforms be available and working reliably. However, users reading the brochure expect that any city location will be available at their fingertips, that information will always be timely, and that the system will require no maintenance (e.g., no updating of a priori values). To narrow this gap between system capability and user expectation, and thereby facilitate acceptance of fusion systems, level 5 fusion focuses specifically on process refinement opportunities for users.

This chapter is organized as follows. Section 19.2 focuses on issues surrounding the complexity of IF designs and the opportunities for users to aid in providing constraints and controls to minimize the complexity. Section 19.3 overviews the data fusion information group (DFIG) model, an extension to the Joint Directors of Laboratories (JDL) model. Section 19.4 details user refinement (UR) whereas Section 19.5 discusses supporting topics. Section 19.6 presents an example that develops a system prototype where operational users are involved in a design. Section 19.7 concludes with a summary.

19.2 User Refinement in Information Fusion Design

19.2.1 User Roles

IFSs are initiated, designed, and fielded based on a need, such as data compression,5 spatial coverage, or task assistance. Behind every fusion system design is a user. Users naturally understand the benefits of fusion, such as multisensor communication with their eyes and ears,6 multisensor transportation control of vehicles that augment movement,7 and multisensor economic management of daily living through computers, transactions, and the supply of goods and services.8 Although opportunities for successful fusion abound, the underlying assumptions are often not well understood. For efficient communication, a point-to-point connection is needed. For transportation control, societal protocols such as roadways, vehicle maintenance, and approved operators are understood. For economic management, organizational and business operations are contractually agreed on. By analogy, IFS designs also require individual, mechanical, and economic support that depends on operational, prototype, and business users.

Fusion designs can be separated into two modes: micro and macro levels. The micro level involves using a set of defined sensors within a constrained setting. Examples include a physician requiring a fused PET–MRI image, a driver operating a car, or a store owner reviewing a financial report. In each of these cases, the settings are well defined, the role of the fusion system is constrained, and the decisions are immediate. The more difficult case is the macro level, which deals with military decision support systems,9 political representatives managing a society, or a corporate board managing a transcontinental business. Macro-level complexity generally involves many users and many sensors operating over a variety of data and information sources with broad availability. An aggregation of micro- and macro-level fusion concepts can be seen in the multilevel display in Figure 19.1. As shown, individual users sought to consolidate the information spatially by placing the displays together. Although this is not the ideal “information-fusion” display, it does demonstrate that “business users” afforded the resources, “operational users” trusted the system, and “prototype users” were able to provide the technology. For IF to be a useful tool for managing large, complex, macro-level systems, the appropriate constraints, assumptions, and user roles must be defined up front. One goal is to reduce the complexity of fusion at the macro level by creating a common operational picture10 across sensors, targets, and environments.

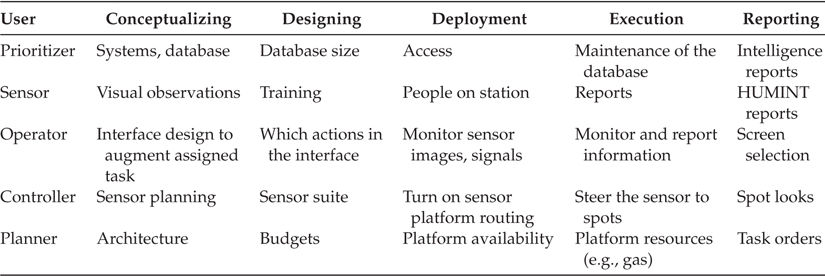

The user (level 5) can take on many roles, such as prioritizer, sensor, operator, controller, and planner, through which users design, plan, deploy, and execute an IF design. Although many roles could be identified, one taxonomy is presented in Table 19.1. This table is based on fielding a typical IFS, such as a target tracker and identifier. The first role is that of a prioritizer, who determines which objects are of interest, where to look, and what supporting database information is needed. Most fusion algorithms require some initializing information, such as a priori probabilities, objective functions, processing weights, and performance parameters. The second role is that of a sensor, which includes reporting contextual information and messages, making decisions on data queries, and drafting information requests. The third role is that of an operator, which includes data verification (such as highlighting images and filtering text reports) and facilitating communication and reporting. Operators are often trained for a specific task and have a defined role, which distinguishes this role from that of a sensor. A controller is a “business” operator who routes sensors and platforms, determines the mission of the day, and utilizes the IF output for larger goals. Finally, the planner looks not at today’s operations but at tomorrow’s (e.g., how data gathering can be better facilitated to meet as yet undefined future threats and situations).

FIGURE 19.1

User information fusion of colocated displays.

TABLE 19.1

Users and their Refinements in the Stage of an Information Fusion Design

The most overlooked opportunity for successful fusion design lies in the conceptual phase. Engineers exploit the inherent benefits of data fusion within the initial design process by developing, for instance, Bayesian rules that assume exemplar data, a priori probabilities, and sensors will be available. Without adequate “user refinement” of the problem through constraints, operational planning, and user-augmented algorithms, the engineering design has little chance of being deployed. By working with a team of users, as in Table 19.1, we can work within operational limits in terms of available databases, observation exploitation capabilities, task allocations, platforms, and system-level opportunities. The interplay between the engineers and the users can build trust in, and efficacy of, the system design.

In the design phase, users can help characterize fusion techniques that are realizable. Two examples come to mind: object detection and sensor planning. Because no automatic target recognition (ATR) fusion algorithm can reduce the false alarm rate (FAR) to zero while simultaneously providing a near-perfect probability of detection, individual users must determine acceptable thresholds. Because operators are burdened with a stream of image data, the fusion algorithm can reprioritize the images so that those most important to the user are evaluated first (much like a prescreener). In sensor planning, IF requires the right data to integrate; thus, having operators and controllers present in the design phase can aid in developing concept-of-operations constraints based on estimated data collection possibilities.
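The threshold trade-off and the prescreening idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any fielded ATR: each image is assumed to carry a detector confidence score, a labeled validation set is assumed to exist, and the names `ImageScore`, `pd_far_at_threshold`, and `prescreen` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ImageScore:
    image_id: str
    score: float      # detector confidence in [0, 1] (hypothetical)
    has_target: bool  # ground truth, available only on a validation set


def pd_far_at_threshold(images: List[ImageScore], threshold: float) -> Tuple[float, float]:
    """Return (probability of detection, false-alarm fraction) at one threshold.

    The false-alarm fraction here is per target-free image, a simplification
    of FAR, which is often reported per unit area or time.
    """
    targets = [im for im in images if im.has_target]
    clutter = [im for im in images if not im.has_target]
    pd = sum(im.score >= threshold for im in targets) / len(targets) if targets else 0.0
    far = sum(im.score >= threshold for im in clutter) / len(clutter) if clutter else 0.0
    return pd, far


def prescreen(images: List[ImageScore], threshold: float) -> List[str]:
    """Queue images at or above the user's threshold, highest score first."""
    kept = [im for im in images if im.score >= threshold]
    kept.sort(key=lambda im: im.score, reverse=True)
    return [im.image_id for im in kept]


validation = [
    ImageScore("a", 0.95, True), ImageScore("b", 0.80, False),
    ImageScore("c", 0.60, True), ImageScore("d", 0.40, False),
    ImageScore("e", 0.30, True), ImageScore("f", 0.10, False),
]
for t in (0.2, 0.5, 0.9):
    pd, far = pd_far_at_threshold(validation, t)
    print(f"threshold={t:.1f}  Pd={pd:.2f}  false alarms={far:.2f}")
print("operator queue:", prescreen(validation, 0.5))
```

On this toy data, raising the threshold from 0.2 to 0.9 removes every false alarm but also drops detections from all three targets to one; where to sit on that curve is the user’s call, not the algorithm’s.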

For macro-level systems, deployment, execution, and reporting within IF designs are still largely notional because complexity limits success. One example is training,5 where prototype and operational users work with the system designers to improve the interface. Lessons from systems engineering can aid IF designs. Systems engineering addresses large, complex systems with multiple levels of uncertainty, and many of its concepts, such as optimization, human factors, and logistics, focus on system maintenance and sustainability.

Figure 19.2 highlights the concept that systems engineering costs escalate if users at all levels are not engaged up front in the IF design. At the information problem definition stage, the research team has to determine what types of information are observable and available, and which information can be correlated with sensor design, data collection, and algorithmic data exploitation. Thus, information needs and user tasks drive the concept design. During the development phase, which includes elaboration and construction, design studies, trade-offs, and prototypes need to be tested and refined (including the expected user roles). Llinas11* indicates that many of the design developments are prototypes; however, one of the main concerns in the development phase is to ensure that the user is part of the fusion process to set priorities and constraints. One emerging issue is that IF designs depend on software developers. To control the costs of deployment and maintenance, software developers need to work with users to include operator controls such as data filtering, algorithm reinitialization, and process initiation.

FIGURE 19.2

Design cycle—maybe test and evaluation.

19.2.2 Prioritization of Needs

Reducing information to a dimensionally minimal set requires the user to develop a hierarchy of needs that supports active reasoning over collected and synthesized data. Some needs that can be included in such a hierarchy are:

Things. Objects, number and types; threat, whether harmful, passive, or helpful; location, close or far; basic primitives, features, edges, and peaks; existence known or unknown, that is, new objects; dynamics, moving or stationary.

Processes. Measurement system reliability (uncertainty); ability to collect more data; delays in the measurement process; number of users and interactions.

The user must prioritize information needs for designers. Information priority is tied to the information desired. The user must be able to select the objects of interest and the processes by which the raw data are converted into fused information. One issue in processing fused information is the ability to understand the information’s origin, or pedigree. To determine an area of interest, for instance, a user must seek answers to basic questions:

Who—is controlling the objects of interest (i.e., threat information)

What—are the objects and activities of interest (i.e., diagnosis, products, and targets)

Where—are the objects located (i.e., regions of interest)

When—should we measure the information (i.e., time of day)

How—should we collect the information (i.e., sensor measurements)

Which—sensors to use and the algorithms to exploit the information
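One way to make these questions concrete for designers is to record each need as a structured, prioritized request. The sketch below is hypothetical: the `InformationNeed` fields simply mirror the who/what/where/when/how/which questions above, and a heap hands over the most urgent need first (priority 1 being the highest, an assumed convention).

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(order=True)
class InformationNeed:
    """One prioritized user information need (hypothetical schema)."""
    priority: int                                            # 1 = most urgent
    what: str = field(compare=False)                         # objects/activities of interest
    who: Optional[str] = field(default=None, compare=False)  # controlling party, if known
    where: Optional[Tuple[float, float, float, float]] = field(default=None, compare=False)  # (xmin, ymin, xmax, ymax)
    when: Optional[str] = field(default=None, compare=False)  # collection window, e.g., time of day
    how: Optional[str] = field(default=None, compare=False)   # collection modality
    which: List[str] = field(default_factory=list, compare=False)  # sensors/algorithms to use


class NeedQueue:
    """Hands the highest-priority need (lowest number) to designers first."""

    def __init__(self) -> None:
        self._heap: List[InformationNeed] = []

    def add(self, need: InformationNeed) -> None:
        heapq.heappush(self._heap, need)

    def next_need(self) -> InformationNeed:
        return heapq.heappop(self._heap)

    def __len__(self) -> int:
        return len(self._heap)


queue = NeedQueue()
queue.add(InformationNeed(3, what="supply trucks", when="night"))
queue.add(InformationNeed(1, what="air-defense sites", where=(0.0, 0.0, 10.0, 10.0), which=["SAR"]))
queue.add(InformationNeed(2, what="bridge crossings", how="EO imagery"))
print(queue.next_need().what)
```

Only `priority` takes part in ordering; the remaining fields ride along so that whoever pops a need also receives its context.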

To reason actively over a complex prioritized collection, a usable set of information must be available, along with the collection uncertainties on which the user can act.12 Ontology, the seeking of knowledge,13 indicates that goals, such as reducing uncertainty, must be defined. The user has to deal with many aspects of uncertainty, such as sensor bias, communication delays, and noise. The Heisenberg uncertainty principle exemplifies the challenge of observation and accuracy.14 Uncertainty is a measure of doubt,15 which can prevent a user from making a decision. The user can supplement a machine-fusion system to account for its deficiencies and uncertainties. Jousselme et al. presented a useful representation of the different types of uncertainty.16 A state of ignorance can result in an error, that is, a discrepancy between expected and measured results caused by distorted data (e.g., misregistration) or incomplete data (e.g., insufficient coverage). With insufficient sensing capabilities, a lack of context for situational assessment, and undefined priorities, IFSs will be plagued by uncertainty. Some of the categories of uncertainty are:

Vague—not having a precise meaning or clear sensing17

Probability—belief in an element of the truth

Ambiguity—cannot decide between two results
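The difference between a probabilistic call and an ambiguous one can be made operational with a simple rule, sketched here under assumptions: the fusion system holds a posterior over class hypotheses, and `margin` is a hypothetical, user-tunable parameter setting how far apart the top two hypotheses must be before the system commits. When they are too close, the function returns `None`, signaling that the decision should go to the user. Vagueness is not modeled here; it would typically call for fuzzy or possibilistic representations.

```python
from typing import Dict, Optional


def decide(posterior: Dict[str, float], margin: float = 0.1) -> Optional[str]:
    """Return the most probable hypothesis, or None when the call is ambiguous.

    `posterior` maps hypothesis names to probabilities (assumed to sum to 1).
    If the two best hypotheses differ by less than `margin`, the system cannot
    decide between them and should defer to the user rather than force a label.
    """
    if not posterior:
        return None
    ranked = sorted(posterior.items(), key=lambda kv: kv[1], reverse=True)
    if len(ranked) == 1:
        return ranked[0][0]
    (best, p1), (_, p2) = ranked[0], ranked[1]
    return best if p1 - p2 >= margin else None


print(decide({"tank": 0.70, "truck": 0.30}))  # clear probabilistic call
print(decide({"tank": 0.52, "truck": 0.48}))  # ambiguous: returns None
```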

The priority of information needs and of uncertainty reduction depends on context, which requires the user to augment IF designs with changing priorities, informal data gathering, and real-time mission planning.

19.2.3 Contextual Information

Contextual information, as supplied by a user, can augment object, situational, and threat understanding. There are certain object features the user desires to observe; by selecting objects of interest, the user can call up target models to assess feature matches.

Modeled target features can be used to predict target profiles and movement characteristics of the targets for any set of aspect angles. Object context includes a pose match between kinematic and feature relationships. Targeting includes selecting priority weights for targets of interest, and information modifications based on location (i.e., avenues of approach and mobility corridors). By properly exploiting the sensor, algorithm, and target, the user can select the ideal perspective from which to identify a target. Examples of contextual information aids include tracking,18 robotics,19 and medicine.

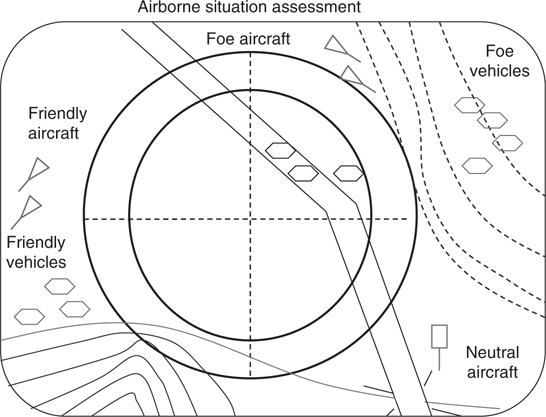

SA usually means that the user’s team has some a priori world information. Aspects of context in situational assessment could include the sociopolitical environment of interest, a priori historical data, topology, and mission goals. Contextual information such as road networks, geographical information, and weather is useful.20 In a military context, targets can be linked by observing selected areas, segmented by boundaries as shown in Figure 19.3. If there is a natural boundary of control, then the targets are more likely to be affiliated with the group defending that area. Types of situation contexts include

Sociopolitical information

Battlefield topography and weather

Adjacent hostile and friendly force structures

Tactical or strategic operational doctrine

Tactical or strategic force deployments

Supporting intelligence data

FIGURE 19.3

Situation assessment display.

From Figure 19.3, we see that if targets are grouped near other targets of the same allegiance, we could expect a target’s classification to be the same as that of other spatially adjacent targets. Likewise, we could assume that trucks travel on roads and that only tanks can operate off-road. Thus, contextual information plays a role in classifying objects, developing situation understanding, and assessing threat priorities. The method by which a user integrates contextual information to manage the fusion process is cognitive.
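The road and allegiance examples can be expressed as Bayesian updates. The sketch assumes a uniform class prior, conditional independence of the sensor evidence and the road context given the class, and made-up context probabilities in `ROAD_CONTEXT`; none of these numbers come from the text. The neighbor function turns the allegiance of spatially adjacent targets into a smoothed prior.

```python
from typing import Dict, Iterable

# Hypothetical context model P(location | class). The rule that trucks stay on
# roads and only tanks go off-road is softened to nonzero values, so context
# reweights the sensor evidence instead of vetoing it.
ROAD_CONTEXT: Dict[str, Dict[str, float]] = {
    "truck": {"on_road": 0.95, "off_road": 0.05},
    "tank": {"on_road": 0.50, "off_road": 0.50},
}


def normalize(weights: Dict[str, float]) -> Dict[str, float]:
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}


def contextual_posterior(sensor_likelihood: Dict[str, float], location: str) -> Dict[str, float]:
    """Bayes' rule with a uniform class prior: P(c | z, ctx) is proportional to P(z | c) * P(ctx | c).

    Assumes the sensor evidence z and the road context are independent given the class.
    """
    return normalize({c: lik * ROAD_CONTEXT[c][location] for c, lik in sensor_likelihood.items()})


def neighbor_allegiance_prior(neighbor_labels: Iterable[str], pseudo_count: float = 1.0) -> Dict[str, float]:
    """Allegiance prior from spatially adjacent targets (Laplace-smoothed counts)."""
    counts = {"friendly": pseudo_count, "hostile": pseudo_count}
    for label in neighbor_labels:
        counts[label] += 1
    return normalize(counts)


ambiguous_sensor = {"truck": 0.6, "tank": 0.4}
print(contextual_posterior(ambiguous_sensor, "off_road"))
print(neighbor_allegiance_prior(["hostile", "hostile", "hostile"]))
```

With a sensor that mildly favors “truck” (0.6 vs. 0.4), an off-road sighting swings the posterior to roughly 87% “tank,” which is how context can overturn a weak machine call.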

19.2.4 Cognitive Fusion

Formally, IF integrates the machine and cognitive fusion functions:

IF is the set of formal mathematics, techniques, and processes used to combine, integrate, and align data originating from different sources through association and correlation for decision making. The construct entails obtaining greater quality, decreased dimensionality, and reduced uncertainty for the user’s application of interest.

We can subdivide the field of fusion technology into a number of categories:

Data fusion. Combining and organizing numerical entities for analysis

Sensor fusion. Combining devices responding to a stimulus

IF. Combining data to create knowledge

Display fusion. Presenting data simultaneously to support human operations

Cognitive fusion. Integrating information in user’s mental model.

Display fusion is an example of integrating cognitive and machine fusion: users must bring together the displays of machine-correlated data from individual systems. Figure 19.1 shows a team of people coordinating decisions derived from displays. Since we desire to utilize IF where appropriate, we assume that combining different sets of data from multiple sources yields a performance gain (i.e., increased quality and reduced uncertainty). Uncertainty about world information can be reduced through systematic and appropriate data combinations, but how to display uncertainty is not well understood. Because the world is complex (involving many information sources, users, and machines), we desire timely responses and extended dimensionality over different operating conditions.21 The computer or fusion system is adept at numerical computation, whereas the human is adept at reasoning over displayed information, so what is needed is an effective fusion of physical, process, and cognitive models. The strategy of “how to fuse” requires the fusion system to display a pragmatic set of query options that effectively and efficiently decompose user information needs into signal, feature, and decision fusion tasks.12 The decomposition requires that we prioritize selected data combinations to ensure a knowledge gain, and it requires fusion models and architectures developed from user information needs. Information needs are based on user requirements that include object information and expected fusion performance. To improve fusion performance, management techniques are necessary to direct machine or database resources based on observations, assessments, and mission constraints, which can be accepted, adapted, or changed by the user. Today, with a variety of users and many ways to fuse information, standards are evolving to provide effective and efficient fusion display techniques that can address team requirements for distributed cognitive fusion.22
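The assumption that combining sources reduces uncertainty can be checked in its simplest setting: two independent, unbiased Gaussian estimates of the same scalar quantity (an assumption; correlated or biased sources need more care). Inverse-variance weighting gives the fused mean and variance below; the function name `fuse_gaussian` is illustrative.

```python
from typing import Tuple


def fuse_gaussian(m1: float, v1: float, m2: float, v2: float) -> Tuple[float, float]:
    """Fuse two independent, unbiased Gaussian estimates of the same scalar.

    Each estimate is weighted by its inverse variance. The fused variance,
    1 / (1/v1 + 1/v2), is never larger than the smaller input variance.
    """
    if v1 <= 0 or v2 <= 0:
        raise ValueError("variances must be positive")
    w1, w2 = 1.0 / v1, 1.0 / v2
    var = 1.0 / (w1 + w2)
    mean = var * (w1 * m1 + w2 * m2)
    return mean, var


# Two sensors report a range of 10.0 and 12.0 units, each with variance 4.0.
print(fuse_gaussian(10.0, 4.0, 12.0, 4.0))  # (11.0, 2.0)
```

Two equally good sensors halve the variance; a much worse second sensor never increases it, although the gain becomes small.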

Users are important in the design, deployment, and execution of IFSs. They play active roles at various levels of command, as individuals or as teams. UR can include a wide range of roles, such as (1) a prioritizer with database support, (2) a sensor, that is, a person facilitating communication and reporting, (3) an operator who sits at a human–computer interface (HCI) terminal monitoring fusion outputs, (4) a controller who routes sensors and platforms, and (5) a planner who looks at system-level operations. Recognizing the importance of these user roles, the DFIG sought to update the JDL model.

19.3 Data Fusion Information Group Model

Applications for multisensor IF require insightful analysis of how these systems will be deployed and utilized. Increasingly complex, dynamically changing scenarios arise, requiring more intelligent and efficient reasoning strategies. Decision making (DM) is integral to information reasoning, which requires a pragmatic knowledge representation for user interaction.23 Many IF strategies are embedded within systems; however, the user-IFS must be rigorously evaluated by a standardized method that can operate over a variety of locations, including changing targets, differing sensor modalities, IF algorithms, and users.24 A useful model is one that represents critical aspects of the real world.

The IF community has rallied behind the JDL process model with its revisions and developments.25–27 Steinberg indicates that “the JDL model was designed to be a functional model—a set of definitions of the functions that could comprise any data fusion system.” The JDL model, like Boyd’s user-derived observe, orient, decide, and act (OODA) loop and the MSTAR predict, extract, match, and search (PEMS) loop, has been interpreted as a process model, that is, a control-flow diagram specifying interactions among functions. It has also been categorized as a formal model involving a set of axioms and rules for manipulating entities, such as probabilistic, possibilistic, and evidential reasoning.

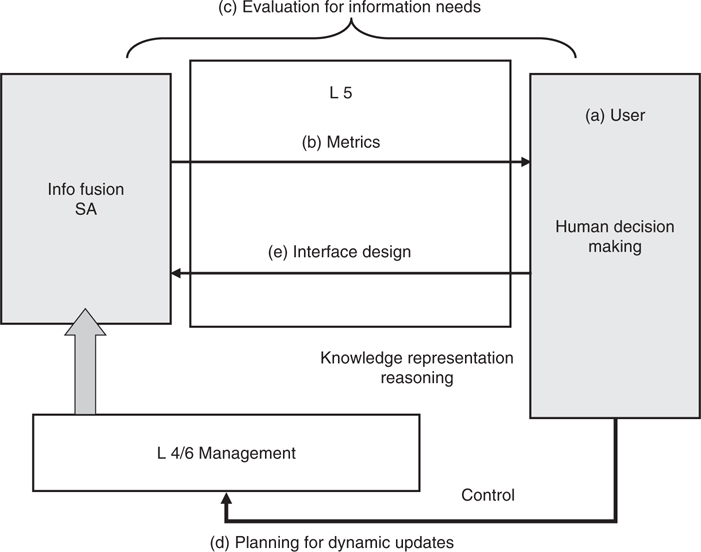

Figure 19.4 shows a user-fusion model, building on a user model,28 that emphasizes user interaction within a functional, process, and formal model framework. For example, SA includes the user’s mental model.29 The mental model provides a representation of the world as aggregated through data gathering, the IF design, and the user’s perception of the social, political, and military situations. The interplay among the fusion results (formal), protocol (functional), control (process), and perceptual (mental) models forms the basis of UR.

FIGURE 19.4

User-fusion model.

The current team* (now called the data fusion information group) assessed this model and, as shown in Figure 19.5, advocated placing the user as part of management (control) rather than estimation (fusion). In the DFIG model,† the goal was to separate the IF and management functions. Management functions are divided into sensor control, platform placement, and user selection to meet mission objectives. Level 2 (SA) includes tacit functions that are inferred from level 1 explicit representations of object assessment. Because algorithms cannot process data that were not observed, data fusion must evolve to include user knowledge and reasoning to fill these gaps. The current definitions, based on the DFIG recommendations, include

Level 0: Data assessment. Estimation and prediction of signal/object observable states on the basis of pixel/signal level data association (e.g., information systems collections)

Level 1: Object assessment. Estimation and prediction of entity states on the basis of data association, continuous state estimation, and discrete state estimation (e.g., data processing)

Level 2: Situation assessment. Estimation and prediction of relations among entities, to include force structure and force relations, communications, etc. (e.g., information processing)

Level 3: Impact assessment. Estimation and prediction of effects on situations of planned or estimated actions by the participants, to include interactions between action plans of multiple players (e.g., assessing threat actions to planned actions and mission requirements, performance evaluation)

FIGURE 19.5

DFIG 2004 model.

Level 4: Process refinement (an element of resource management). Adaptive data acquisition and processing to support sensing objectives (e.g., sensor management and information systems dissemination, command/control)

Level 5: UR (an element of knowledge management). Adaptive determination of who queries information and who has access to information (e.g., information operations), adaptive data retrieved and displayed to support cognitive DM and actions (e.g., HCI)

Level 6: Mission management (an element of platform management). Adaptive determination of spatial-temporal control of assets (e.g., airspace operations) and route planning and goal determination to support team DM and actions (e.g., theater operations) over social, economic, and political constraints.

19.3.1 Sensor Management

The DFIG model updates include acknowledging the resource management of (1) sensors, (2) platforms that may carry one or multiple sensors, and (3) user control; each with differing user needs based on levels of command. The user not only plans the platform location but can also reason over situations and threats to alter sensing needs. For a time-line assessment, (1) the user plans days in advance for sensing needs, (2) platforms are routed hours in advance, and (3) sensors are slewed in seconds, to capture the relevant information.

Level 4, process refinement, includes sensor management and control of sensors and information. To utilize sensors effectively, the IFS must explore service priorities and search methods (breadth or depth) and determine the scheduling and monitoring of tasks. Scheduling is a control function that relies on the aggregated state-position, state-identity, and uncertainty management for knowledge reasoning. Typical methods used are (1) an objective cost function for optimization, (2) dynamic programming (such as neural-network [NN] methods and goal-based reinforcement learning), (3) greedy information-theoretic approaches for optimal task assignment,30 or (4) Bayesian networks that aggregate probabilities to accommodate rule constraints. Whichever method is used, the main idea is to reduce uncertainty.
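As a concrete instance of option (3), the following is a hedged sketch of greedy information-theoretic tasking. Its assumptions are not from the text: each target has a binary hostile/not-hostile belief, each sensor returns a binary report that is correct with a known accuracy for either class, and each sensor and each target can be paired at most once. Greedy selection is a heuristic and is not guaranteed to find the optimal assignment in general.

```python
import math
from typing import Dict, List, Tuple


def binary_entropy(p: float) -> float:
    """Entropy (bits) of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def info_gain(p_hostile: float, accuracy: float) -> float:
    """Expected entropy reduction I(X; Z) = H(Z) - H(Z | X).

    X is the binary target class with P(hostile) = p_hostile; Z is a binary sensor
    report that is correct with probability `accuracy` for either class.
    """
    p_report_hostile = p_hostile * accuracy + (1 - p_hostile) * (1 - accuracy)
    return binary_entropy(p_report_hostile) - binary_entropy(accuracy)


def greedy_assign(beliefs: Dict[str, float], accuracies: Dict[str, float]) -> List[Tuple[str, str, float]]:
    """Pair each sensor with at most one target, taking the largest expected gain first."""
    candidates = sorted(
        ((info_gain(beliefs[t], accuracies[s]), s, t) for s in accuracies for t in beliefs),
        reverse=True,
    )
    used_sensors, used_targets, plan = set(), set(), []
    for gain, sensor, target in candidates:
        if sensor not in used_sensors and target not in used_targets:
            plan.append((sensor, target, gain))
            used_sensors.add(sensor)
            used_targets.add(target)
    return plan


beliefs = {"T1": 0.5, "T2": 0.9}        # P(hostile) per target
accuracies = {"radar": 0.9, "eo": 0.7}  # hypothetical sensor accuracies
for sensor, target, gain in greedy_assign(beliefs, accuracies):
    print(f"{sensor} -> {target}: {gain:.3f} bits")
```

Here the accurate radar goes to T1, whose class is most uncertain (P = 0.5), and the weaker EO sensor goes to T2, where little remains to be learned.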

Level 4 control should entail hierarchical control functions as well. The system is designed to accommodate five classes of sensing (state, event, trigger, trend, and drift), providing a rich source of information about the user’s information needs. The most primitive sensor class, state, allows the current value of an object to be obtained, whereas the event class provides notification that a change of value has occurred. A higher-level sensor class, trigger, enables measurement of the current value of one sensor when another sensor changes its value, that is, a temporal combination of state and event sensing. The design also supports logging sensor data over time and producing simple statistics, known as trend sensing. A fifth sensor class, drift, detects when trend statistics cross a threshold; this can indicate that a situation is trending toward an undesirable condition, perhaps a threat. There is also a need to check the integrity, reliability, and quality of the sensing system.
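The five sensing classes map naturally onto an observer pattern. The sketch below is hypothetical: the names `Sensor`, `trigger`, and `drift`, the moving-average trend statistic, and the window size are assumptions rather than part of the design described above, and the integrity and quality checks are not modeled.

```python
from statistics import mean
from typing import Callable, List


class Sensor:
    """State sensing plus hooks for event, trigger, trend, and drift sensing."""

    def __init__(self, name: str, value: float = 0.0) -> None:
        self.name = name
        self.value = value
        self.history: List[float] = [value]  # logged values for trend sensing
        self._listeners: List[Callable[["Sensor"], None]] = []

    def read(self) -> float:
        """State: return the current value."""
        return self.value

    def on_change(self, callback: Callable[["Sensor"], None]) -> None:
        """Event: register a callback fired whenever the value changes."""
        self._listeners.append(callback)

    def update(self, new_value: float) -> None:
        changed = new_value != self.value
        self.value = new_value
        self.history.append(new_value)
        if changed:
            for callback in self._listeners:
                callback(self)

    def trend(self, window: int = 5) -> float:
        """Trend: a simple statistic (moving average) over the logged values."""
        return mean(self.history[-window:])


def trigger(source: Sensor, target: Sensor, log: List[float]) -> None:
    """Trigger: read `target` whenever `source` changes value."""
    source.on_change(lambda _src: log.append(target.read()))


def drift(sensor: Sensor, threshold: float, alerts: List[str], window: int = 5) -> None:
    """Drift: alert once when the trend statistic crosses above `threshold`."""
    state = {"above": sensor.trend(window) > threshold}

    def check(s: Sensor) -> None:
        now_above = s.trend(window) > threshold
        if now_above and not state["above"]:
            alerts.append(f"{s.name} trending above {threshold}")
        state["above"] = now_above

    sensor.on_change(check)


temperature = Sensor("temperature", 20.0)
camera = Sensor("camera", 1.0)
snapshots: List[float] = []
alerts: List[str] = []
trigger(temperature, camera, snapshots)
drift(temperature, 25.0, alerts, window=3)
for reading in (24.0, 30.0, 31.0):
    temperature.update(reading)
print(snapshots, alerts)
```

In the example, each temperature change triggers a camera read, and the drift alert fires once, on the third update, when the three-sample average first exceeds 25.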

Sensor management must also address absolute and relative assessment, as well as local and global referencing. Many IF strategies are embedded within systems; however, the user-IFS must be rigorously evaluated by a standardized approach that assesses a wide range of locations, changing targets, differing sensor modalities, and IF algorithms.

19.3.2 User Interaction with Design

The IF community has seen numerous papers that assumed a data-level, bottom-up, level 1 object refinement design, including target tracking and identification (ID). Some efforts explored level 1 aggregation for SA.31,32 However, with a top-down fusion design approach, the community would benefit from starting IF designs by asking the customer what they need. The customer is the IFS user, whether a business person, analyst, or commander. If we ask customers what they want, they would most likely request something that affords reasoning and DM, given their perceptual limitations.33 Different situations will drive different needs; however, the situation is never fully known a priori. General constructs form an initial set of situation hypotheses, but in reality not all operating conditions will be known. To reduce the difficulty of assessing all known and unknown a priori situational conditions, the user helps develop the a priori conditions that define the most relevant models. In the end, IFS designs would be based on the user, not strictly on the machine or the situation.

Management includes business, social, and economic effects on the fusion design. Business includes managing people, processes, and products; likewise, IF is concerned with managing people, sensors, and data. Designing an IFS with regard to physical, social, economic, military, and political environments would entail user reasoning about the data to infer information. User control should be prioritized as user, mission, and sensor management. For example, if sensors are on platforms, the highest-ranking official coordinating a mission determines who is in charge of the assets (which are not under automatic control). Only once treaties, airspace, insurance policies, and other documentation are in place would we want to turn control over to an automatic controller (e.g., sensor).

UR (level 5 fusion) could form the basis to (1) advocate standard fusion metrics, (2) recommend a rigorous fusion evaluation and training methodology, (3) incorporate dynamic decision planning, and (4) detail knowledge presentation for fusion interface standards. These issues are shown in Figure 19.6.

FIGURE 19.6

User refinement of fusion system needs.

19.4 User Refinement

The level 5 UR functions2 include (1) selecting models, techniques, and data; (2) determining the metrics for DM; and (3) performing higher-level reasoning over the information based on the user’s needs, as shown in Figure 19.7. The user’s goal is to perform a task or a mission. The user has preconceived expectations and utilizes the level 0–4 capabilities of a machine to aggregate data for DM. To perform a mission, a user employs engineers to design a machine to augment his/her workload. Each engineer, as a vicarious user, imparts decision assumptions into the algorithm design. However, because the machine is designed by engineers, its controls might end up operating the fusion system in ways different from what the user needs. For example, the user plans ahead (forward reasoning), whereas the machine reasons over collected data (backward reasoning). If a delay exists in the IFS, a user might deem it useless for planning (e.g., sensor management, that is, where to place sensors for future observations).

A user (or IF designer) is forced to address situational constraints. We define UR operations as a function of responsibilities. Once an IF design is ready, the user can act in a variety of ways: monitoring a situation in an active or passive role, or planning, either by reacting to new data or by providing proactive control over future courses of action (COAs). When users interact with an IFS, it is important that they supply knowledge reasoning. The user has the ability to quickly grasp where key target activity is occurring and to use this information to reduce the search space for the fusion algorithm and, hence, guide the fusion process.
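The user’s ability to narrow the search space can be illustrated by the simplest possible mechanism: gating detections against regions the user marks as active. This is a minimal sketch with hypothetical `Detection` and `Region` types and axis-aligned boxes; an operational system would more likely gate tracks in geographic coordinates.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Detection:
    x: float
    y: float
    score: float


@dataclass(frozen=True)
class Region:
    """Axis-aligned region of interest drawn by the user (hypothetical)."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def contains(self, d: Detection) -> bool:
        return self.xmin <= d.x <= self.xmax and self.ymin <= d.y <= self.ymax


def gate_by_user_regions(detections: Iterable[Detection], regions: List[Region]) -> List[Detection]:
    """Pass only detections inside at least one user-designated region.

    With no regions, nothing is filtered (the user has not constrained the search).
    """
    dets = list(detections)
    if not regions:
        return dets
    return [d for d in dets if any(r.contains(d) for r in regions)]


detections = [Detection(1.0, 1.0, 0.9), Detection(50.0, 50.0, 0.8), Detection(5.0, 5.0, 0.4)]
user_regions = [Region(0.0, 0.0, 10.0, 10.0)]
print(gate_by_user_regions(detections, user_regions))
```

Only the two detections inside the user’s box reach the fusion algorithm; with no box drawn, nothing is filtered, so the user can constrain the search without being required to.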

FIGURE 19.7

Level 5: User-refinement functions.

19.4.1 User Action

User actions can take many forms, shaped by SA and assessment needs. One of the key issues of SA (defined in Section 19.5) is that the IF output must map to the user’s perceptual needs (spatial awareness,34 neurophysiological,35 perceptual,36 and psychological37) and that those needs combine perceptually and cognitively.6,38,39 If the display or delivery of information is not consistent with user expectations, the benefit of fusion is lost. The machine cannot reason, as Brooks argued in “Elephants Don’t Play Chess,”40 and it cannot deal with unexpected situations.41 For this reason, there are numerous implications for (1) incorporating the user in the design process, (2) gathering user needs, and (3) providing the user with available control actions.

Users are not Gaussian processors, yet they do perform hypothesis confirmation. In hypothesis testing, we can never prove the null hypothesis; we can only reject it, or fail to reject it, on the basis of the evidence. Level 5 is intended to address cognitive SA, including knowledge representation and reasoning methods. The user defines a fusion system, for without a user there is no need to fuse multisensory data. The user has a defined role with objectives and missions. Typically, the IF community has designed systems that did not require user participation. However, years of continuing debate show that the user plays a key role in the design process by

Level 0: determining what and how much data value to collect

Level 1: determining the target priority and where to look

Level 2: understanding scenario context and user role

Level 3: defining what is a threat and adversarial intent

Level 4: determining which sensors to deploy and activate by assessing the utility of information

Level 5: designing user interface controls (Figure 19.1)

Kokar and coworkers42 stress ontological and linguistic43 questions concerning user interaction with a fusion system, such as semantics, syntax, efficacy, and spatiotemporal queries. Developing a framework for UR requires semantics, or interface actions, that allow the system to coordinate with the user. One example is a query system in which the user poses questions and the system translates these requests into actionable items. An operational system must satisfy the users’ functional needs and extend their sensory capabilities. A user fuses data and information over time and space and acts through a perceived world reference (mental) model, whether in the head or on graphical displays, tools, and techniques.44 The IFS is an extension of the user’s sensing capabilities. Thus, effective and efficient interaction between the fusion system and the user should make the combined system greater than the sum of its parts.

Users can outperform machines because they are able to reason about the situation, assess the likely routes a target can take, and bring in contextual information to reason over the uncertainty.16 However, as the number of targets increases and the time available for processing decreases, a user will become overloaded. To assist the user, we can let the computer perform routine calculations and data processing and relegate higher-level reasoning to the user.

Process refinement of the machine controls data flow. UR guides information collection, region of coverage, situation and context assessment as well as process refinement of sensor selections. Although each higher level builds on information from lower fusion levels, the refinement of fused information is a function of the user. Two important issues are control of the information and the planning of actions. There are many social issues related to centralized and distributed control such as team management and individual survivability. For example, the display shown in Figure 19.1 might be globally distributed, but centralized for a single commander for local operations. Thus, the information displayed on an HCI should not only reflect the information received from lower levels, but also afford analysis and distribution of commander actions, directives, and missions. Once actions are taken, new observable data should be presented as operational feedback. Likewise, the analysis should be based on local and global operational changes and the confidence of new information. Finally, execution should include updates including orders, plans, and actions for people carrying out mission directives. Thus, process refinement is not the fusing of signature, image, track, and situational data, but that of decision information fusion (DEC-IF) for functionality.

DEC-IF is a refined assessment of fused observational information, globally and locally; it is a result on which a user can plan and act. Additionally, data should be gathered by humans to aid in deciding what information to collect, analyze, and distribute to others. One way to facilitate the receipt, analysis, and distribution of actions is the OODA loop, which requires an interactive display for functional cognitive DM. In this case, the display of information should orient a user to assess a situation based on the observed information (i.e., SA). These actions should be assessed in terms of the user’s ability to deploy assets against the resources, within constraints, and to provide opportunities that capitalize on the environment.

Extended information gathering can be labeled as action information fusion (ACT-IF) because an assessment of possible/plausible actions can be considered. The goal of any fusion system is to provide the user with a functionally actionable set of refined information. Taken together, the user-fusion system is actually a functional sensor, whereas the traditional fusion model just represents sensor observations. The UR process not only determines who wants the data and whether they can refine the information, but also how they process the information for knowledge. Bottom-up processing of information can take the form of physically sensed quantities that support higher-level functions. To include machine automation as well as IF, it is important to afford an interface that allows the user to interact with the system (i.e., sensors). Specifically, the top-down user can query necessary information to plan and focus on specific situations while the automated system can work in the background to update on the changing environment. Thus, the functional fusion system is concerned with processing the entire situation while a user can typically focus on key activities. The computer must process an exhaustive set of combinations to arrive at a decision, but the human is adept at cognitive reasoning and is sensitive to the changes in situational context.

The desired result of data processing is to identify entities or locally critical information spatially and temporally. The difficulty in developing a complete understanding is the sheer amount of data available. For example, a person monitoring many regions of interest (ROIs) waits for some event or threat to enter the sensor domain. With continuously collecting sensors, most processed data are of no use to the decision maker. Useful data arise when a sensed event interrupts the monitoring system and initiates a threat update. Decision makers need only a subset of the data to perform successful SA and impact assessment and to respond to a threat; specifically, the user needs a time-event update on an object of interest. Thus, data reduction must be performed. A fusion strategy can reduce these data over time as well as space by creating entities. Entity data can be fused into a single common operating picture for enhanced cognitive fusion for action; however, user control options need to be defined.
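The data-reduction step described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the chapter’s algorithm: each sample is a hypothetical `(time, x, y, value)` tuple, an “event” is any sample whose value meets a threshold, and each event is merged into the first existing entity within a gating distance (with a running-mean position) or starts a new entity.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    """One fused entity standing in for many raw detections."""
    entity_id: int
    x: float
    y: float
    count: int
    last_time: float


def reduce_to_entities(samples, threshold, gate):
    """Discard routine samples and merge events into entities.

    samples: iterable of (time, x, y, value) tuples (hypothetical format).
    threshold: minimum sensed value treated as an event (assumed scalar test).
    gate: maximum distance for associating an event with an existing entity.
    """
    entities = []
    for t, x, y, value in samples:
        if value < threshold:
            continue  # background data: never shown to the user
        for e in entities:
            if (e.x - x) ** 2 + (e.y - y) ** 2 <= gate ** 2:
                # Associate with this entity and update its running-mean position.
                e.count += 1
                e.x += (x - e.x) / e.count
                e.y += (y - e.y) / e.count
                e.last_time = t
                break
        else:
            # No entity within the gate: start a new one.
            entities.append(Entity(len(entities), x, y, 1, t))
    return entities


samples = [  # hypothetical sensor stream
    (0, 0.0, 0.0, 0.1),     # background
    (1, 5.0, 5.0, 0.9),     # event
    (2, 5.2, 5.0, 0.8),     # same object, nearby
    (3, 40.0, 40.0, 0.95),  # different object
    (4, 1.0, 1.0, 0.2),     # background
]
entities = reduce_to_entities(samples, threshold=0.5, gate=2.0)
print(f"{len(samples)} samples -> {len(entities)} entities")
```

The first-match association keeps the sketch short; an operational system would use a proper data-association method before presenting entities to the user.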

19.4.2 User Control

Table 19.2 shows different user management modes that range from passive to active control.45 In the table, we see that the best alternative for fusion systems design is “management by interaction.” Management by interaction can include both compensatory and noncompensatory strategies. The compensatory strategies include linear additive (weighting and summing inputs), additive difference (comparing alternatives dimension by dimension and summing the differences), and ideal point (comparing each alternative against a desired one) methods. The noncompensatory selection strategies include dominance (one alternative is at least as good as another on every dimension and better on at least one), conjunctive (passes a threshold on all dimensions), lexicographic (best alternative on the most important dimension, with ties broken on the next dimension), disjunctive (passes a threshold on at least one dimension), single feature difference (best alternative on the dimension of greatest difference), elimination by aspects (aspects are selected probabilistically in proportion to their importance, and alternatives lacking the selected aspect are eliminated until one remains), and compatibility test (alternatives compared against weighted threshold dimensions) strategies. In all of these strategies, both the user and the DFS require rules for DM. Because the computer can only perform computations, the user must select the best set of rules to employ.
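A few of these rules can be sketched in Python to show how compensatory and noncompensatory strategies can pick different answers from the same data. The sketch assumes each alternative is scored on the same dimensions with higher values better; the alternatives, dimensions, weights, and thresholds are hypothetical, and the definitions follow standard decision-research usage rather than anything specific to Table 19.2.

```python
def linear_additive(alts, weights):
    """Compensatory: a weighted sum lets strength on one dimension offset weakness on another."""
    return max(alts, key=lambda n: sum(w * s for w, s in zip(weights, alts[n])))


def conjunctive(alts, thresholds):
    """Noncompensatory: keep alternatives passing the threshold on every dimension."""
    return [n for n, s in alts.items() if all(v >= t for v, t in zip(s, thresholds))]


def disjunctive(alts, thresholds):
    """Noncompensatory: keep alternatives passing the threshold on at least one dimension."""
    return [n for n, s in alts.items() if any(v >= t for v, t in zip(s, thresholds))]


def lexicographic(alts, order):
    """Best on the most important dimension; ties are broken by the next one in `order`."""
    return max(alts, key=lambda n: tuple(alts[n][i] for i in order))


def dominates(a, b):
    """a dominates b if it is at least as good everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def nondominated(alts):
    """Alternatives not dominated by any other alternative."""
    return [n for n, s in alts.items()
            if not any(dominates(o, s) for m, o in alts.items() if m != n)]


# Hypothetical candidate decisions scored on (confidence, timeliness).
alts = {"track_A": (0.9, 0.4), "track_B": (0.6, 0.8), "track_C": (0.5, 0.3)}

print(linear_additive(alts, weights=(0.5, 0.5)))   # track_B
print(conjunctive(alts, thresholds=(0.5, 0.5)))    # ['track_B']
print(disjunctive(alts, thresholds=(0.85, 0.85)))  # ['track_A']
print(lexicographic(alts, order=(0, 1)))           # track_A
print(nondominated(alts))                          # ['track_A', 'track_B']
```

Here the compensatory rule favors `track_B`, while the lexicographic rule, which ranks confidence first, favors `track_A`: the choice of rule, which the text assigns to the user, changes the decision.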

One method to assess the performance of a user or fusion process for target identification (ID), for instance, is the receiver operating characteristic (ROC) curve. An ROC plots the hit rate (HR) versus the false alarm rate (FAR). The ROC could represent any modality, such as hearing, imaging, or fusion outputs. As an example, we can assess the performance of a user-fusion system by comparing the individual ROCs with that of the integrated user-fusion system. Management by interaction includes supervisory functions such as monitoring, data reduction and mining, and control. The user may act in three ways: (1) management by ignorance: turn off the fusion display and manipulate the data manually (cognitive-based); (2) management by acceptance: agree with the computer (i.e., automatic); or (3) management by interaction: adapt to the output through reasoning (i.e., associative). What is needed is a reduction in the data that the user must process.

For instance, the user might prefer acceptance, as when the HR is high regardless of the FAR; or the user might prefer ignorance, as when the FAR must be low but track length high. However, an appropriate combination of the two approaches leads to interaction: adjusting decisions (hit [H]/false alarm [FA]) based on fusion ROC performance for unknown environments, weak fusion results, or high levels of uncertainty. Figure 19.8 shows that fusion gain (moving the curve toward the upper left) is associated with being able to process more information. One obvious benefit of the user to the fusion system is that the user can quickly rule out obvious false alarms (thereby moving the curve left). Likewise, the fusion system can process more data, thereby cueing more detections (moving the curve up). Operating in the upper left of the 2D curve allows the user to increase confidence in DM at an acceptable FAR.
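The comparison of individual and integrated ROCs can be sketched as below. This is an illustrative Python sketch, not the chapter’s evaluation procedure: it assumes each decision source (user, fusion system, or their combination) yields a score per trial, declares a target when the score meets a threshold, and summarizes each curve by its trapezoidal area under the curve (AUC). The scores, and the simple averaging used to combine user and fusion, are hypothetical.

```python
def roc_points(scores, labels, thresholds):
    """(FAR, HR) pairs, declaring 'target' whenever score >= threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for thr in thresholds:
        hits = sum(1 for s, y in zip(scores, labels) if s >= thr and y)
        false_alarms = sum(1 for s, y in zip(scores, labels) if s >= thr and not y)
        points.append((false_alarms / n_neg, hits / n_pos))
    return points


def auc(points):
    """Trapezoidal area under the ROC, anchored at (0, 0) and (1, 1)."""
    pts = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


labels = [1, 1, 1, 1, 0, 0, 0, 0]                      # 1 = true target
fusion = [0.9, 0.7, 0.6, 0.3, 0.65, 0.4, 0.2, 0.1]     # hypothetical fusion scores
user = [0.8, 0.4, 0.9, 0.7, 0.2, 0.3, 0.6, 0.1]        # hypothetical user confidence
combined = [(f + u) / 2 for f, u in zip(fusion, user)]  # assumed combination rule

thresholds = [i / 20 for i in range(21)]
for name, scores in [("user", user), ("fusion", fusion), ("user+fusion", combined)]:
    print(f"{name:12s} AUC = {auc(roc_points(scores, labels, thresholds)):.3f}")
```

Moving the curve toward the upper left, as described for Figure 19.8, shows up as a larger AUC, although AUC alone hides where on the curve the gain occurs.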

TABLE 19.2

Continuum of System Control and Management for Operators

We have outlined user control actions; now let us return to estimation.

19.4.3 User Interaction with Estimation

It is important to understand which activities are best suited for user engagement with a DFS. We need to understand the impact of the user on fusion operations, as well as that of communication delays and target density. For example, UR includes monitoring to ensure that the computer is operating properly. Many UR functions are similar to business management, where the user is not operating independently with a single machine but working with many people to gather facts and estimate states. Typically, one key metric is reaction time,44 which determines which user strategy can be carried out efficiently.

FIGURE 19.8

2D ROC analysis combining user actions and fusion system performance gain.

UR can affect the near- and far-term analyses over which the human must make decisions. For short-term analysis, the human monitors the system (active vs. passive), either engaging with it or just watching the displays. For far-term analysis, the human acts as a planner46 (reactive vs. proactive), estimating the long-term effects and consequences of information. The timing of the information presented includes not only the time span associated with collecting the data, but also the delay needed to display the data (latent information) and the time from presentation on the screen to the user’s perception and reaction. User reaction time includes physical responses, working associations, and cognitive reasoning. User focus and attention for action engagement can be partitioned into immediate and future information needs. Engagement actions can be defined as
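The delay components listed above add up to the time between an event and the user’s response. A minimal Python sketch of that budget follows; the component values and the deadlines are hypothetical, and the sketch assumes the components are sequential and simply additive.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Sequential delays between an event and the user's reaction (seconds)."""
    collection: float  # time span to collect the data
    display: float     # delay to process and display the data (latent information)
    perception: float  # from presentation on the screen to user perception
    reaction: float    # physical response, working associations, cognitive reasoning

    def total(self) -> float:
        return self.collection + self.display + self.perception + self.reaction

    def slack(self, deadline: float) -> float:
        """Positive slack: the user can act in time; negative: the information arrives too late."""
        return deadline - self.total()


# Hypothetical values for a reactive engagement.
budget = LatencyBudget(collection=0.5, display=1.0, perception=0.3, reaction=0.7)
print(f"total = {budget.total():.1f} s")
print(f"slack for a 3 s reactive deadline = {budget.slack(3.0):+.1f} s")
print(f"slack for a 2 s reactive deadline = {budget.slack(2.0):+.1f} s")
```

A negative slack indicates that, for that engagement, the display pipeline rather than the user is the limiting factor.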

Predictive actions. Projective, analytical approach to declare instructions in advance

Reactive actions. Immediate, unthinking response to a stimulus

Proactive actions. Active approach requiring an evaluation of the impact of the data on future situations

Passive actions. Waiting, nonthought condition allowing current state to proceed without interaction

The user’s role is determined by both the type of DFS action and the user’s goals. Table 19.3 maps each user action to its timing, the thought it requires, and its need, showing that the action the user chooses determines the time horizon for analysis.

Other user data metrics needed in the analysis and presentation of data and fusion information include integrity (formal correctness, i.e., uncertainty analysis); consistency (enhancing redundancy while reducing ambiguity and double counting, i.e., measurement origination); and traceability (the backward inference chain, i.e., inferences assessed as data are translated into information).
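Traceability and the double-counting aspect of consistency can both be checked by walking the backward inference chain. The Python sketch below is illustrative only: it assumes each fused item records the items it was inferred from (a hypothetical `parents` list), and the item names are invented for the example.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Item:
    """A piece of data or fused information with its provenance."""
    name: str
    parents: list = field(default_factory=list)  # items this one was inferred from


def source_paths(item):
    """Count how many inference paths lead back to each raw source (an item with no parents)."""
    if not item.parents:
        return Counter({item.name: 1})
    total = Counter()
    for parent in item.parents:
        total += source_paths(parent)
    return total


def trace(item):
    """Traceability: the raw sources behind a fused item."""
    return sorted(source_paths(item))


def double_counted(item):
    """Consistency: raw sources that reach the item by more than one path."""
    return sorted(s for s, n in source_paths(item).items() if n > 1)


# Hypothetical provenance graph.
radar = Item("radar_hit_1")
eo = Item("eo_chip_1")
track = Item("track_7", [radar, eo])
relay = Item("relay_report_3", [radar])  # a relayed report of the same radar hit
assessment = Item("threat_assessment", [track, relay])

print(trace(assessment))           # ['eo_chip_1', 'radar_hit_1']
print(double_counted(assessment))  # ['radar_hit_1']
```

Flagging `radar_hit_1` lets the display warn the user that two apparently independent inputs share one origin.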

Integrity, consistency, and traceability map into the trust, confidence, and assurance that the fused data display is designed to meet user objectives. To relate user needs to the appropriate design action, we use the automatic, associative, and cognitive levels of IF. To perform the actions of planning, prediction, and proactive control for fusion assessment, the user must be able to trust the current information and be assured that the fusion system is adaptable and flexible enough to respond to different requests and queries. Typical HCI results discussed in the literature offer a number of opportunities for the fusion community (user models, metrics, evaluation, decision analysis, work domain assessment, and display design), as detailed in Section 19.5.

TABLE 19.3

User Actions Mapped to Metrics of Performance

Actions | Time | Thought | Need | Future
Predictive | Projective | Some | Within | Future
Reactive | Immediate | None | Within | Present
Proactive | Anticipatory | Much (active) | Across | Future +
Passive | Latent | Delayed/none | Across | Present

19.5 User-Refinement Issues

Designing complex and often-distributed decision support systems—which process data into information, information into decisions, decisions into plans, and plans into actions—requires an understanding of both fusion and DM processes. Important fusion issues include timeliness, mitigation of uncertainty, and output quality. DM contexts, requirements, and constraints add to the overall system constraints. Standardized metrics for evaluating the success of deployed and proposed systems must map to these constraints. For example, issues of trust, workload, and attention47 of the user can be assessed as an added quality for an IFS to balance control management with perceptual needs.

19.5.1 User Models in Situational Awareness

Situation awareness is an important concept describing how people become aware of things happening in their environment. SA can be defined as keeping track of prioritized, significant events and conditions in one’s environment. Level 2 fusion (situation assessment) is the estimation and prediction of relations among entities, to include force structure and relations, communications, etc., which requires adequate user inputs to define these entities. The human-in-the-loop (HIL) of a semiautomated system must be given adequate SA. According to Endsley, SA is the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future.48–50 This now-classic model, shown in Figure 19.9, translates into three levels:

Level 1 SA: Perception of environment elements

Level 2 SA: Comprehension of the current situation

Level 3 SA: Projection of future states

Operators of dynamic systems use their SA to determine actions. To optimize DM, the SA provided by an IFS should be as precise as possible with respect to the objects in the environment (level 1 SA). An SA approach should present a fused representation of the data (level 2 SA) and support the operator’s projection needs (level 3 SA) to facilitate the operator’s goals. In the SA model of Figure 19.9, workload51 is a key component that affects not only SA but also user decisions and reaction time.
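The mapping from the three SA levels to what an IFS can provide can be sketched in Python. This is a toy illustration under assumptions not in the text: detections are hypothetical dictionaries with position, velocity, and confidence; “comprehension” is reduced to a single relation (distance to a protected asset); and “projection” uses a constant-velocity model.

```python
import math


def perceive(detections, min_conf):
    """Level 1 SA support: present only elements detected with adequate confidence."""
    return [d for d in detections if d["conf"] >= min_conf]


def comprehend(objects, asset, radius):
    """Level 2 SA support: relate each object to a protected asset (inside `radius`?)."""
    return {o["id"]: math.dist((o["x"], o["y"]), asset) <= radius for o in objects}


def project(objects, horizon):
    """Level 3 SA support: assumed constant-velocity position `horizon` seconds ahead."""
    return {o["id"]: (o["x"] + o["vx"] * horizon, o["y"] + o["vy"] * horizon)
            for o in objects}


detections = [  # hypothetical IFS output
    {"id": "t1", "x": 0, "y": 0, "vx": 1, "vy": 0, "conf": 0.9},
    {"id": "t2", "x": 10, "y": 0, "vx": 0, "vy": 0, "conf": 0.3},  # too uncertain to show
]
objects = perceive(detections, min_conf=0.5)
print(comprehend(objects, asset=(3, 0), radius=2))  # {'t1': False}: not yet near the asset
print(project(objects, horizon=2))                  # {'t1': (2, 0)}: moving toward the asset
```

The point of the example is that level 3 support changes the picture: an object that is not yet a threat at level 2 is projected to approach the asset.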

To understand how the human uses the situation context to refine SA, we use the recognition-primed decision-making (RPD) model,52 shown in Figure 19.10. The RPD model develops user DM capability based on the current situation and past experience, and it shows the goals of the user and the cues that are important. Within the IFS, the user and the IFS algorithm can cue each other to determine data needs. The user can cue the IFS either by selecting the data of interest or by choosing the sensor collection information.

FIGURE 19.9

Endsley’s situation awareness model.

FIGURE 19.10

Recognition primed decision-making model for situational awareness.

As another example, the fusion SA model components53,54 developed by Kettani and Roy show the various information needs to provide the user with an appropriate SA. To develop the SA model further, we note that the user must be primed for situations to be able to operate faster and more effectively and work against a known set of metrics for SA.

19.5.2 Metrics

Standardized performance and quality of service (QoS) metrics are a sine qua non for evaluating every stage of data processing and every subsystem handoff of data and state information. Without metrics, no proper scientific evaluation can take into account the diversity of proposed systems and their complexity and operability. A chief concern is adequate SA: the operator is not automatically guaranteed SA when new fusion systems are deployed. Even though these systems promise increases in capacity, data acuity (sharpened resolution, separating more information from noise backgrounds), and timeliness among the QoS metrics, the human cognitive process can be a bottleneck in the overall operation. This occurs most frequently at the perception level of SA, with level 1 accounting for 77% of SA-related errors,51 and indirectly affects comprehension and projection DM acuity at levels 2 and 3.

Dynamic DM requires: (1) SA/image analysts (IA), (2) dynamic responsiveness to changing conditions, and (3) continual evaluation to meet throughput and latency requirements. These factors usually impact the IFS directly, require an interactive display to allow the user to make decisions, and must include metrics for replanning and sensor management.55 To afford interactions between future IF designs and users’ information needs, metrics are required. The metrics chosen include timeliness, accuracy, throughput, confidence, and cost. These metrics are similar to the standard QoS metrics in communication theory and human factors literature, as shown in Table 19.4.
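The five metrics named above can be computed from a log of delivered reports, as in the following Python sketch. The report fields (`t_event`, `t_delivered`, `correct`, `confidence`, `cost`) and the specific definitions (mean latency for timeliness, fraction correct for accuracy, reports per second for throughput, mean reported confidence, total cost) are assumptions chosen for illustration; Table 19.4 may define them differently.

```python
from statistics import mean


def qos_metrics(reports, window_s):
    """Summarize fused reports delivered during an observation window of `window_s` seconds."""
    return {
        "timeliness_s": mean(r["t_delivered"] - r["t_event"] for r in reports),
        "accuracy": sum(r["correct"] for r in reports) / len(reports),
        "throughput_per_s": len(reports) / window_s,
        "confidence": mean(r["confidence"] for r in reports),
        "cost": sum(r["cost"] for r in reports),
    }


reports = [  # hypothetical log; field names are illustrative
    {"t_event": 0, "t_delivered": 2, "correct": True, "confidence": 0.9, "cost": 1},
    {"t_event": 1, "t_delivered": 5, "correct": False, "confidence": 0.5, "cost": 1},
    {"t_event": 3, "t_delivered": 6, "correct": True, "confidence": 0.7, "cost": 2},
    {"t_event": 4, "t_delivered": 7, "correct": True, "confidence": 0.8, "cost": 1},
]
for name, value in qos_metrics(reports, window_s=10).items():
    print(f"{name:16s} {value}")
```

Keeping the five metrics side by side makes trade-offs visible, for example a gain in accuracy bought with worse timeliness.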

To plan a mission, an evaluation of fusion requires an off-line and on-line assessment of individual sensors and integrated sensor exploitation (i.e., performance models). To achieve desired system management goals, the user must be able to develop a set of metrics based on system optimization requirements and performance evaluation criteria. Together, these requirements and criteria define the quality needed to provide effective mission management and to ensure an accurate and effective capability to put the right platforms and sensors on station at the correct time, prepare operators for the results, and determine pending actions based on the estimated data.

19.5.3 User Evaluation

IF does not depend only on individuals making decisions, or single subsystems generating analyses. Rather, team communication and team-based decisions have always been integral to complex operations, whether civilian or military, tactical or strategic, municipal, federal, or intergovernmental. Team communication takes many forms across operational environments and changes dynamically with new situations. Communication and DM can be joint, allocated, shared, etc., and this requires that the maintenance of any team-generated SA be rigorously evaluated.

TABLE 19.4

Metrics for Various Disciplines

As computer processing loads increase, there are both correct and incorrect ways to design IFSs. The incorrect way is to fully automate the system, providing no role for the user. The correct way is to build the role of the operator into the design at inception and to test the effectiveness of the operator in meeting system requirements. Fusionists are aware of many approaches, such as level 1 target ID and tracking methods, that support the user but do not replace the user. In the case of target ID, a user typically does better with a small number of images. However, as the number of collected images and the throughput increase, a user does not have the time to process every image. One key issue for fusion is therefore the timeliness of information.

Because every stage of IF DM adds to overall delays (in receiving sensor information, in presenting fused information to the user, and in the user’s information-processing capacity), overall system operation must be evaluated in its entirety. The bottom-level component in the human-system operation involves data from the sensors. Traditionally, the term data fusion has referred to bottom-level, data-driven fusion processing, whereas the term information fusion refers to the conversion of already-fused data into meaningful and preferably relevant information useful to higher cognitive systems, which may or may not include humans.

Cognitive processes, whether human or machine-equivalent “smart” algorithms, require nontrivial processing time to reach a decision at both individual and team levels. This applies, analogously, to individual components and to intercomponent/subsystem levels of machine-side DM. DM durations shorter than the interarrival time of sensor data lead to starvation within the DM process; DM durations longer than the interval between new data arrivals lead to dropped data. Both cases are prone to error, whether the decision maker is human or machine. In the former case, the human’s cognitive flow is starved, causing wandering attention and the loss of key pieces of information held in short-term memory that could have been relevant to later-arriving data. In the latter case, data arrive while earlier information is still being processed or the operator is occupied, so these data often pass out of the system without being used. This occurs when systems are not designed to store excess inputs to an already overburdened system: the excess inputs are dropped if there is no buffer in which to queue the data, or if the buffer is so small that data are overwritten. The operator and team must have the means to access quality information and to process the data sufficiently, from different perspectives, in a timely manner.

In addition to the metrics that establish core quality (reliability/integrity) for information, there are issues surrounding information security. The quality of performance measures may be reflected by content validity (whether the measure adequately covers the various facets of the target construct), construct validity (how well the measured construct relates to other well-established measures of the same underlying construct), or criterion-related validity (whether the measure correlates with performance on some task). The validity of these IF performance measures needs to be verified in an operational setting where operators are making cognitive decisions.
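The starvation and dropped-data cases can be reproduced with a small simulation. The Python sketch below is a simplification under assumptions not taken from the text: data arrive at a fixed interval, every decision takes a fixed time, the decision maker (human or algorithm) serves a first-in, first-out buffer of fixed size, and idle time is counted only between arrivals.

```python
from collections import deque


def simulate(interarrival, decision_time, n_arrivals, buffer_size):
    """Return (idle_time, dropped) for one decision maker fed at a fixed rate.

    idle_time: time spent waiting for new data (starvation).
    dropped: arrivals discarded because the decision maker was busy and the buffer was full.
    """
    in_system = deque()  # completion times of accepted items, oldest first
    busy_until = 0.0     # when the last accepted item will be finished
    idle = 0.0
    dropped = 0
    for i in range(n_arrivals):
        t = i * interarrival
        while in_system and in_system[0] <= t:
            in_system.popleft()  # these items were finished before this arrival
        # The oldest remaining item is in service; the rest are waiting in the buffer.
        if in_system and len(in_system) - 1 >= buffer_size:
            dropped += 1  # nowhere to queue it: the data pass out unused
            continue
        if t > busy_until:
            idle += t - busy_until  # the decision maker was starved for data
        busy_until = max(t, busy_until) + decision_time
        in_system.append(busy_until)
    return idle, dropped


# Decisions faster than arrivals: starvation, no loss.
print(simulate(interarrival=10, decision_time=4, n_arrivals=5, buffer_size=2))
# Decisions slower than arrivals with a one-slot buffer: data are dropped.
print(simulate(interarrival=2, decision_time=5, n_arrivals=6, buffer_size=1))
```

The first run reports idle time and no losses; the second reports no idle time but dropped arrivals. Enlarging `buffer_size` removes the drops only by delaying decisions, which is itself a timeliness cost.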

19.5.4 Cognitive Processing in Dynamic Decision Making

There are many ways to address the users’ roles (reactive, proactive, and preventive) in system design. Likewise, it is also important to develop metrics for the interactions to enhance the users’ abilities in a defined role or task. One way to understand how a user operates is to address the automatic actions performed by the user (which includes eye–arm movements). Additionally, the user associates past experiences to current expectations, such as identifying targets in an image. Intelligent actions include the cognitive functions of reasoning and understanding. Rasmussen37 developed these ideas by mapping behaviors into decision actions including skills, rules, and knowledge through a means-end decomposition.

When tasked with an SA analysis, a user can respond in one of three broad modes: reactive, proactive, or preventive, as shown in Figure 19.11. In the example that follows, we use a target tracking and ID exemplar to detail the user’s cognitive actions. In the reactive mode, the user makes a rapid detection to minimize damage or prevent a repeat offense. An IFS would gather detections of in situ threats from a sensor grid and be ready to act. In this model, the system interprets and alerts users to immediate threats. The individual user selects the immediate appropriate response (in seconds) with the aid of sensor warnings of nonlethal or lethal threats.

In the proactive mode, the user utilizes sensor data to anticipate, detect, and capture needed information before an event. In this case, a sensor grid provides surveillance based on prior intelligence and predicted target movements. A multi-INT sensor system could detect and interpret anomalous behavior and alert an operator to anticipated threats in minutes. Additionally, the directed sensor mesh tracks individuals back to dwellings and meeting places, where troops respond quickly and capture the insurgents, weapons, or useful intelligence.

The mode that captures the entire force over a longer period (e.g., an hour) is the preventive mode. To prevent potential threats or actions, we would (1) increase insurgent risk (e.g., of being arrested after detection), (2) increase effort (e.g., make it difficult to act), and (3) lower the payoff of action (e.g., reduce the explosive damage). The preventive mode includes an intelligence database to track events before they reach deployment.

The transition and integration of decision modes to perceptual views is shown in Figure 19.12. The differing displays for the foot soldier or command center would correlate with the DM mode of interest. For the reactive mode, the user would want an actual location of the immediate threats on a physical map. For the anticipated threats, the user would want the predicted locations of the adversary and the range of possible actions. Finally, for the potential threats, the user could utilize behavior analysis displays that piece together aggregated information of group affiliations, equipment stores, and previous events to predict actions over time. These domain representations were postulated by Waltz39 as cognitive, symbolic, and physical views that capture differing perceptual needs for intelligent DM.
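The pairing of decision modes with displays can be expressed as a simple lookup keyed on the time available to act. In this Python sketch the mode names and display descriptions come from the text, but the cutoffs (under a minute for reactive, under an hour for proactive) are assumptions loosely based on the “seconds,” “minutes,” and “an hour” time scales mentioned above.

```python
# (mode, exclusive upper bound on time available in seconds, display the user would want)
# The 60 s and 3600 s cutoffs are illustrative assumptions, not values from the text.
MODES = [
    ("reactive", 60, "actual locations of immediate threats on a physical map"),
    ("proactive", 3600, "predicted adversary locations and range of possible actions"),
    ("preventive", float("inf"),
     "behavior analysis of group affiliations, equipment stores, and previous events"),
]


def select_mode(time_available_s):
    """Return (mode, display) for the first mode whose time scale covers the situation."""
    for mode, upper_bound, display in MODES:
        if time_available_s < upper_bound:
            return mode, display
    raise ValueError("time available must be a finite number")


for t in (5, 600, 7200):
    mode, display = select_mode(t)
    print(f"{t:>5} s -> {mode}: {display}")
```

In practice the boundaries would come from the mission and the CWA rather than fixed constants.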

FIGURE 19.11

Reasoning in proactive strategies.

FIGURE 19.12

Categories of analytic views. (Extracted from Waltz, E., Data Fusion in Offensive and Defensive Information Operations, NSSDF, June 2000.)

Intelligent DM employs many knowledge-based information fusion (KBIF) strategies, such as neural networks, fuzzy logic, Bayesian networks, evolutionary computing, and expert systems.56 Each KBIF strategy has a different processing duration, and each differs in the extent to which it is constrained by the facility and by its use within the user-fusion system. The OODA loop helps to model a DM user’s planned, estimated, or predicted actions. Assessing susceptibilities and vulnerabilities to detect, estimate, or predict threat actions in the context of planned actions requires a concurrent timeliness assessment. Such assessment is required for adequate DM, yet is not easily attained.

This is similarly posed in the Endsley model of SA: the “projection” level 3 of SA maps to this assessment processing activity. DM is most successful in the presence of high levels of projection SA, such as high accuracy of vulnerability or adversarial action assessments. DM is enhanced by correctly anticipating effects and state changes that will result from potential actions. The nested nature of effect-upon-effect creates difficulty in making estimations within an OODA cycle. For instance, effects of own-force intended COA are affected by the DM cycle time of the threat instigator and the ability to detect and recognize them. Three COA processes57 to reduce the IFS search space are as follows: (1) manage similar COA, (2) plan related COA, and (3) select novel COA, shown in Figure 19.13.

Given that proactive and preventive actions have a similar foundational set of requirements for communicating actions, employing new sensor technologies, and managing interactions in complex environments, any performance evaluation must start with the construct of generally applicable metrics. Adding to the general framework, we must also create an evaluation analysis that measures responsiveness to unanticipated states, since these are often the first signals of relevant SA threats. A comparative performance analysis for future applications requires intelligent reasoning, adequate IF knowledge representation, and process control within realistic environments. The human factors literature appropriately discusses the effects of realistic environments as a basis for cognitive work analysis (CWA).

FIGURE 19.13

Course of action model.

19.5.5 Cognitive Work Analysis/Task Analysis

The key to supporting user reasoning is designing a display that supports the user’s tasks and work roles. A task analysis (TA) models user actions in the context of achieving goals. In general, actions are analyzed as moves from a current state to a goal state. Different approaches based on actions include58

Sequential TA. It includes organizing activities and describing them in terms of the perceptual, cognitive, and manual user behavior to show the man-machine activities.

Timeline TA. It is an approach that assesses the temporal demands of tasks or sequences of actions and compares them with the time available for task execution.

Hierarchical TA. It represents the relationships between the tasks and subtasks that need to be done to meet operating goals.

Cognitive Task Analysis (CTA). It describes the cognitive skills and abilities needed to perform a task proficiently, rather than the specific physical activities carried out in performing the task. CTA is used to analyze and understand task performance in complex real-world situations, especially those involving change, uncertainty, and time pressure.
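A hierarchical task decomposition combined with a timeline check can be sketched as follows. The Python below assumes that subtasks are performed sequentially, so a task’s time required is the sum of its subtasks, and it reports the ratio of time required to time available that timeline TA compares; the task names and durations are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    duration_s: float = 0.0                       # used only for leaf tasks
    subtasks: list = field(default_factory=list)  # hierarchical TA decomposition

    def time_required(self) -> float:
        """Assumes sequential subtasks: a goal takes the sum of its subtasks' times."""
        if not self.subtasks:
            return self.duration_s
        return sum(t.time_required() for t in self.subtasks)


def timeline_ratio(task, time_available_s):
    """Timeline TA: time required / time available (above 1.0 the task cannot finish in time)."""
    return task.time_required() / time_available_s


# Hypothetical target-ID task hierarchy.
identify = Task("identify target", subtasks=[
    Task("read fusion display", 3),
    Task("compare with prior imagery", 8),
    Task("declare ID", subtasks=[Task("select label", 2), Task("confirm", 1)]),
])
for available in (20, 10):
    print(f"{available:>3} s available: ratio = {timeline_ratio(identify, available):.2f}")
```

Parallel or overlapping subtasks would need a different aggregation (for example, the maximum over concurrent branches), which this sketch does not model.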

Level 5 issues require an understanding of workload, time, and information availability. Workload and time can be addressed through a TA; however, user performance is a function of the task within the mission. A CWA includes (1) defining what task the user is doing, (2) structuring the order of operations, (3) ensuring that the interface supports SA, (4) defining the decomposition of required tasks, and (5) characterizing what the human actually perceives.59 The CWA examines the tasks the human is performing and how those tasks correlate with the information needed for intelligent actions. The five stages of a CWA are (1) work domain, (2) control task, (3) strategy, (4) social-organizational, and (5) worker competency analysis. Vicente59 shows that a CWA requires an understanding of the environment and of user capabilities. A CWA evaluation starts from the work domain and progresses through the fusion interface to the cognitive domain. Starting from the work domain, the user defines control tasks and strategies (i.e., how to use the fusion information) to conduct the action. The user strategies, in the context of the situation, require an understanding of the social and cognitive capabilities of the user. Social issues would include no-fly zones for target ID and tracking tasks, whereas cognitive abilities include the user’s reaction time to a new situation. Elements of the CWA process include reading the fusion display, interpreting errors, and assessing sensory information and mental workload. We are interested in the typical work-analysis concepts of fusion/sensor operators in the detection of events and state changes, and in pragmatic ways to display the information.

19.5.6 Display/Interface Design

A display interface is key to allowing the user to control data collection and fusion processing.60,61 Without a display that matches the user's cognitive perception, it is difficult for the user to reason over a fused result. Although many papers address interface issues (e.g., multimodal interfaces), the fusion community must also address cognitive issues such as ontological relations and associations.62,63 Fusion models are based on processing information for DM. A user-fusion model would highlight the fact that fusion systems are designed for human consumption. In effect, the top-down approach would explore an information need by pulling it through queries to the IFS (cognitive fusion), whereas a bottom-up approach would push data combinations from the fusion system to the user (display fusion). The main issues for user-fusion interaction include ontology development for initiating queries, constructing metrics for conveying successful fusion opportunities, and developing techniques to reduce uncertainty1 and dimensionality. In Section 19.6, we highlight the main mathematical techniques for fusion based on the user-fusion model for DM.
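The distinction between top-down pull (cognitive fusion) and bottom-up push (display fusion) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a described system: the `FusedReport` record, its fields, and the confidence threshold used for pushing are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class FusedReport:
    # Hypothetical fused output record; the fields are illustrative only.
    entity_id: str
    label: str
    confidence: float


def pull(reports: Iterable[FusedReport],
         query: Callable[[FusedReport], bool]) -> List[FusedReport]:
    """Top-down (cognitive fusion): the user's query decides what is returned."""
    return [r for r in reports if query(r)]


def push(reports: Iterable[FusedReport], threshold: float) -> List[FusedReport]:
    """Bottom-up (display fusion): the system decides what to surface,
    most confident first, using an assumed confidence threshold."""
    selected = [r for r in reports if r.confidence >= threshold]
    return sorted(selected, key=lambda r: r.confidence, reverse=True)
```

In the pull case the user's information need (here, a predicate such as `lambda r: r.label == "tank"`) drives retrieval; in the push case the fusion system's own ranking determines what reaches the display, which is why metrics for conveying fusion opportunities matter in that direction.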

An inherent difficulty arises when a fusion system processes data from only two sensors: if one sensor perceives the correct entity and the other does not, there is a conflict. Sensor conflicts increase the cognitive workload and delay action. However, if time, space, and spectral events from different sensors are fused, conflicts can be resolved through an emergent process that allows for better decisions over time. The strategy is to employ multiple sensors from multiple perspectives with UR. Additionally, the HCI can be used to give the user a global and local SA/IA to guide attention, reduce workload, increase trust, and afford action.
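One common way to quantify a two-sensor conflict is the conflict mass K of Dempster's rule of combination, which measures how much belief the two sources assign to disjoint hypotheses. Dempster-Shafer combination is used here only as an illustrative, well-known technique; the chapter does not prescribe it, and the hypothesis labels below ("tank", "truck") are hypothetical.

```python
from itertools import product
from typing import Dict, FrozenSet, Tuple

# A mass function maps sets of hypotheses (focal elements) to belief mass.
Mass = Dict[FrozenSet[str], float]


def combine(m1: Mass, m2: Mass) -> Tuple[Mass, float]:
    """Dempster's rule: return the normalized combined mass and the conflict K."""
    combined: Mass = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            # The sensors support incompatible hypotheses: accumulate conflict.
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("total conflict: sources cannot be combined")
    # Renormalize the surviving mass by 1 - K.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}, conflict
```

For example, if sensor 1 assigns 0.8 to {tank} and 0.2 to {tank, truck}, while sensor 2 assigns 0.7 to {truck} and 0.3 to {tank, truck}, the conflict is K = 0.8 × 0.7 = 0.56 and the normalized mass on {tank} is 0.24/0.44 ≈ 0.545. A large K is one possible signal for routing a decision to the user rather than reporting a single fused label.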

Usability evaluation is a user-centered, user-driven method that defines the system as the connection between the application software and hardware. It focuses on whether the system delivers the right information in the appropriate way for users to complete their tasks. Once user requirements have been identified, good interface designs transform these requirements into display elements and organize them in a way that is compatible with how users perceive and utilize information and that supports the work context and conditions of use.33 The following items are useful principles for interface design:

Consistency. Interfaces should be consistent with users' experiences, conform to work conventions, and facilitate reasoning so as to minimize user errors and the effort of information retrieval and action execution.

Visually pleasing composition. Interface organization includes balance, symmetry, regularity, predictability, economy, unity, proportion, simplicity, and groupings.

Grouping. Gestalt principles provide six general ways to group screen elements spatially: principles of proximity, similarity, common region, connectedness, continuity, and closure.

Amount of information. Too much information can cause confusion. Miller's principle holds that people cannot retain more than 7±2 items in short-term memory; chunking data according to this heuristic allows more relevant information to be added to the screen as needed.

Meaningful ordering. The ordering of elements and their organization should have meaning with respect to the task and information processing activities.

Distinctiveness. Objects should be distinguishable from other objects and the background.

Focus and emphasis. The salience of each object should reflect its relative importance.
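The "amount of information" principle can be made concrete by grouping display items into chunks no larger than a working-memory limit. This is a minimal sketch that assumes a default chunk size of 7, the midpoint of Miller's 7±2; the function name is hypothetical, and a real display would also group items by meaning (see "Grouping" and "Meaningful ordering") rather than by position alone.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def chunk(items: Sequence[T], size: int = 7) -> List[List[T]]:
    """Split items into consecutive groups of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be positive")
    return [list(items[i:i + size]) for i in range(0, len(items), size)]
```

With the default size, 16 track labels would be shown as groups of 7, 7, and 2; a designer could lower `size` toward 5 for high-workload settings.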

Typically, nine usability areas are considered when evaluating interface designs with users:

Terminology. Labels, acronyms, and terms used

Workflow. Sequence of tasks in using the application

Navigation. Methods used to find application parts

Symbols. Icons used to convey information and status

Access. Availability and ease of access of information

Content. Style and quality of information available

Format. Style of information conveyed to the user

Functionality. Application capabilities

Organization. Layout of the application screens

The usability criteria typically used for evaluation of these usability areas are noted below:33

Visual clarity. Displayed information should be clear, well organized, unambiguous, and easy to read so that users can find required information, have their attention drawn to important information, and see quickly and easily where information should be entered.

Consistency. This dimension conveys that the way the system looks and works should be consistent at all times. Consistency reinforces user expectations by maintaining predictability across the interface.

Compatibility. This dimension corresponds to whether the interface conforms to existing user conventions and expectations. If the interface is familiar to users, it will be easier for them to navigate, understand, and interpret what they are looking at and what the system is doing.

Informative feedback. Users should be given clear, informative feedback on where they are, what actions were taken and whether they succeeded, and what actions should be taken next.

Explicitness. The way the system works and is structured should be clear to the user.

TABLE 19.5

Stage | Interface Design Activities | Work Domain Activities
Info requirements | Cognitive work analysis, SA analysis, contextual inquiry | Task analysis, scenario-based design, participatory design
Interface design | User interface design principles | Participatory design
Evaluation | Situation awareness analysis, usability evaluation | Scenario-based design, participatory design, usability evaluation

Appropriate functionality. The system should meet the user requirements and needs when carrying out tasks.

Flexibility and control. The interface should be sufficiently flexible, both in how information is presented and in what the user can do, to suit the needs and requirements of all users and to allow them to feel in control.

Error prevention and correction. A system should be designed to minimize the possibility of user error, with built-in functions to detect when these errors occur. Users should be able to check their inputs and to correct potential errors before the inputs are processed.

User guidance, usability, and support. Informative, easy-to-use, and relevant guidance should be provided to help the users understand and use the system.
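When users rate an interface against the criteria above, the ratings can be summarized to show which criteria need redesign first. The sketch below assumes a hypothetical 1 to 5 rating scale and a hypothetical flag threshold of 3.0; it is an illustration of simple aggregation, not an evaluation procedure taken from the cited work.

```python
from statistics import mean
from typing import Dict, List, Tuple


def summarize(ratings: Dict[str, List[int]],
              flag_below: float = 3.0) -> Tuple[Dict[str, float], List[str]]:
    """Average 1-5 user ratings per usability criterion and list the criteria
    whose mean falls below `flag_below`, weakest first."""
    means = {c: float(mean(r)) for c, r in ratings.items() if r}
    flagged = sorted((c for c, m in means.items() if m < flag_below),
                     key=lambda c: means[c])
    return means, flagged
```

For instance, ratings of [4, 5, 4] for "Visual clarity", [2, 3, 2] for "Informative feedback", and [3, 2, 3] for "Explicitness" would flag "Informative feedback" first and "Explicitness" second. Averages hide disagreement between users, so in practice the spread of ratings and users' comments would be reviewed as well.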

Work domain issues should be considered within the environment in which processes will take place, such as the natural setting of a command post. Ecological interface design is the methodology concerned with evaluating user designs in relation to stress, vigilance, and physical conditions such as user controls (Table 19.5).

19.6 Example: Assisted Target Identification through User-Algorithm Fusion

Complex fusion designs draw on a wide range of experience. A large set of business, operational, and prototype users are involved in deploying a fusion system. One such example is the automated fusion technology (AFT) system of the assisted target recognition for time-critical targeting program, which seeks to enhance the operational capability of the digital command ground station.64 The AFT system consists of the data fusion processor, change detection scheduler, image prioritizer, automatic target cuer (shown in Figure 19.14), and a reporting system. Some of the user needs requested by the IA include the following:

Reduce noninterference background processing (areas without targets and opportunity cost)

Cue them only to high-probability images with high confidence (accuracy)

Reduce required workload and minimize additional workload (minimize error)

Reduce time to exploit targets (timeliness)

Alert IA to possible high-value targets in images extracted from a larger set of images on the image deck (throughput)

FIGURE 19.14

AFT system.

There are many reports on the BAE real-time moving and stationary automatic target recognition (RT-MSTAR) and Raytheon detected imagery change extractor (DICE) algorithms. Developments for the RT-MSTAR came from enhancements of the MSTAR DARPA program.65 Additionally, the ATR algorithms from MSTAR have undergone rigorous testing and evaluation over various operating conditions.66 The key for AFT is to integrate these techniques into the operationally viable VITec™ display. Figure 19.15 shows a sample image of the AFT system.

Although extensive research has been conducted on object-level fusion, change detection, and platform scheduling, complete automation of the system is limited by the exhaustive amount of information involved. Users have been actively involved in design reconfiguration, user control, testing,67 and deployment. In the first phase of deployment, the automatic fusion capabilities were used to reorder the “image deck” so that user verification proceeded pragmatically. The key lesson learned was that complete IF solutions were still not reliable enough, and tactful UR controls are necessary for acceptance. For the AFT system, the valuable elements of object-level fusion include support for sensor observations, cognitive assistance in detecting targets, and an effective means of target reporting. These capabilities combine to increase fusion system efficacy through time savings and extended spatial coverage, as detailed in Figure 19.16.
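The image-deck reordering described above can be sketched as a priority sort in which cued images move to the front and no image is dropped, so the analyst still verifies the whole deck. This is an assumption-laden illustration, not the AFT implementation: the `DeckImage` record, the `cue_score` field, and the cue threshold are hypothetical stand-ins for outputs of cuers such as RT-MSTAR or DICE.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeckImage:
    # Hypothetical record: an image ID plus an assumed cue score in [0, 1]
    # summarizing automatic target cueing / change detection output.
    image_id: str
    cue_score: float


def reorder_deck(deck: List[DeckImage], cue_threshold: float) -> List[DeckImage]:
    """Move cued images to the front (highest score first) and keep the
    remaining images, in their original order, after them."""
    cued = sorted((im for im in deck if im.cue_score >= cue_threshold),
                  key=lambda im: im.cue_score, reverse=True)
    rest = [im for im in deck if im.cue_score < cue_threshold]
    return cued + rest
```

Keeping the uncued images, rather than filtering them out, reflects the lesson that fully automated solutions were not yet reliable enough: the automation changes the order of user verification, while the user retains the final decision.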

19.7 Summary

If a machine presents results that conflict with the user’s expectations, the user will experience cognitive dissonance. The user needs reliable information that is accurate, timely, and confident. Figure 19.17 shows that, for DM, there are technological and operational conditions that affect the user’s ability to make informed decisions.68 The IFS must increase fused output quality to support effective and efficient DM. An IF design should be robust to object, data, and environment model variations.69

FIGURE 19.15

Output embedded in VITec.

FIGURE 19.16

AFT “image deck” reprioritization with “bonus” images.

FIGURE 19.17

Machine-user interaction performance.

This chapter highlights important IF issues for UR (sensor, user, and mission [SUM] management), complementing those raised by Hall,4 including (1) designing for users, (2) establishing a standard set of metrics for cost function optimization, (3) advocating rigorous fusion evaluation criteria to support effective DM, (4) planning for dynamic DM and requiring decentralized updates for mission planning, and (5) designing interface guidelines to support users’ control actions and the efficacy of users of IFSs.

References

1. Hall, D. Knowledge-Based Approaches, in Mathematical Techniques in Multisensor Data Fusion, Chapter 7, pp. 239, Artech House, Boston, MA, 1992.

2. Blasch, E. and P. Hanselman, Information Fusion for Information Superiority, NAECON (National Aerospace and Electronics Conference), pp. 290–297, October 2, 2000.

3. Blasch, E. and S. Plano, Level 5: User Refinement to Aid the Fusion Process, Proceedings of SPIE, Vol. 5099, 2003.

4. Hall, D. Dirty Little Secrets of Data Fusion, NATO Conference, 2000.

5. Hall, J.M., S.A. Hall and T. Tate, Removing the HCI Bottleneck: How the Human–Computer Interface (HCI) Affects the Performance of Data Fusion Systems, in D. Hall and J. Llinas (Eds.), Handbook of Multisensor Data Fusion, Chapter 19, pp. 1–12, CRC Press, Boca Raton, FL, 2001.

6. Cantoni, V., V. Di Gesu and A. Setti (Eds.), Human and Machine Perception: Information Fusion, Plenum Press/Kluwer, New York, 1997.

7. Abidi, M. and R. Gonzalez (Eds.), Data Fusion in Robotics and Machine Intelligence, pp. 560, Academic Press, Orlando, FL, 1992.

8. Blasch, E. Decision Making for Multi-Fiscal and Multi-Monetary Policy Measurements, Fusion98, Las Vegas, NV, pp. 285–292, July 6–9, 1998.

9. Waltz, E. and J. Llinas, Data Fusion and Decision Support for Command and Control, Multisensor Data Fusion, Chapter 23, Artech House, Boston, MA, 1990.

10. Looney, C.G. Exploring Fusion Architecture for a Common Operational Picture, in Information Fusion, Vol. 2, pp. 251–260, December 2001.

11. Llinas, J. Assessing the Performance of Multisensor Fusion Processes, in D. Hall and J. Llinas (Eds.), Handbook of Multisensor Data Fusion, Chapter 20, CRC Press, Boca Raton, FL, 2001.

12. Fabian, W. Jr., and E. Blasch, Information Architecture for Actionable Information Production, (for Bridging Fusion and ISR Management), NSSDF 02, August 2002.

13. Blasch, E. Ontological Issues in Higher Levels of Information Fusion: User Refinement of the Fusion Process, Fusion03, July 2003.

14. Blasch, E. and J. Schmitz, Uncertainty Issues in Data Fusion, NSSDF 02, August 2002.

15. Tversky, A. and D. Kahneman, Utility, Probability & Decision Making, in D. Wendt and C.A.J. Vlek (Eds.), Judgment Under Uncertainty, D. Reidel Publishing Co., Boston, MA, 1975.