As we saw when writing our deviceQuery program in Python, a GPU has its own memory, separate from the host computer's memory; this is known as device memory. (Sometimes this is called, more specifically, global device memory, to distinguish it from the cache memory, shared memory, and register memory that are also on the GPU.) For the most part, we treat (global) device memory on the GPU as we do dynamically allocated heap memory in C (with the malloc and free functions) or C++ (with the new and delete operators). In CUDA C, this is complicated further by the additional task of transferring data back and forth between the CPU and the GPU (with cudaMemcpy and direction flags such as cudaMemcpyHostToDevice and cudaMemcpyDeviceToHost), all while keeping track of multiple pointers in both the CPU and GPU memory spaces and performing proper memory allocations (cudaMalloc) and deallocations (cudaFree).
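To make this bookkeeping concrete, the sketch below walks through the same allocate, copy, and free steps by hand. It is written in Python with PyCUDA's low-level pycuda.driver wrappers (mem_alloc, memcpy_htod, memcpy_dtoh) rather than in CUDA C, on the assumption that these mirror the CUDA C calls closely enough to illustrate the workflow. The function name roundtrip_manually is hypothetical, and calling it requires a CUDA-capable GPU with PyCUDA installed.

```python
import numpy as np


def roundtrip_manually(host_in):
    """Copy a NumPy array to the GPU and back, managing device memory by hand."""
    # Imported inside the function so that defining it does not require a GPU;
    # importing pycuda.autoinit creates a CUDA context on the default device.
    import pycuda.autoinit  # noqa: F401
    import pycuda.driver as cuda

    host_in = np.ascontiguousarray(host_in)
    host_out = np.empty_like(host_in)

    # Allocate raw device memory (the role cudaMalloc plays in CUDA C).
    device_ptr = cuda.mem_alloc(host_in.nbytes)
    try:
        # Host -> device copy (cudaMemcpy with cudaMemcpyHostToDevice).
        cuda.memcpy_htod(device_ptr, host_in)
        # Device -> host copy (cudaMemcpy with cudaMemcpyDeviceToHost).
        cuda.memcpy_dtoh(host_out, device_ptr)
    finally:
        # Release the allocation explicitly (the role cudaFree plays in CUDA C).
        device_ptr.free()

    return host_out
```

Even in this small case we have to track a host input buffer, a host output buffer, and a device pointer, and we have to remember to free the device allocation ourselves.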

Fortunately, PyCUDA handles all of the overhead of memory allocation, deallocation, and data transfers with the gpuarray class. As stated, this class acts similarly to NumPy arrays, using vector/matrix/tensor shape information for the data. gpuarray objects even perform automatic cleanup based on their lifetime, so we do not have to worry about freeing any GPU memory stored in a gpuarray object when we are done with it.

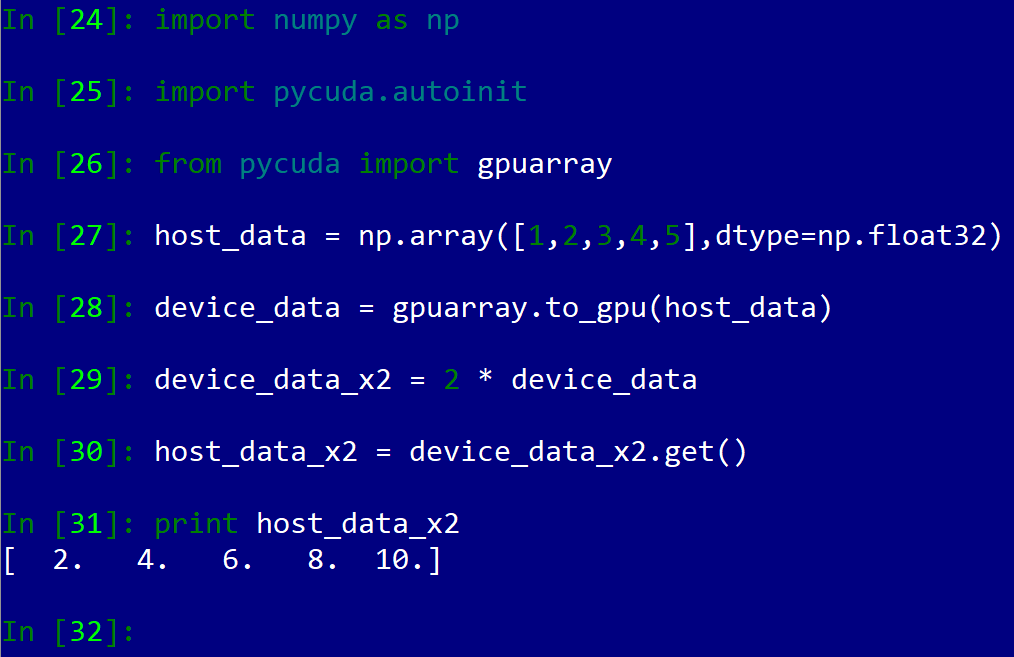

How exactly do we use this to transfer data from the host to the GPU? First, we must store our host data in a NumPy array (let's call it host_data); we then use the gpuarray.to_gpu(host_data) function to transfer it to the GPU and create a new GPU array.

Let's now perform a simple computation on the GPU (pointwise multiplication by a constant), and then retrieve the GPU data into a new NumPy array with the gpuarray's get function. Let's load up IPython and see how this works; note that here we initialize PyCUDA with import pycuda.autoinit.
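The steps just described can be sketched as follows. This is a minimal illustration under stated assumptions: it needs PyCUDA and a CUDA-capable GPU to run, and the function name double_on_gpu and the sample values are hypothetical, chosen only for this example.

```python
import numpy as np


def double_on_gpu(host_data):
    """Copy host_data to the GPU, double every element there, and copy it back."""
    # Imported inside the function so that defining it does not require a GPU;
    # importing pycuda.autoinit initializes PyCUDA and creates a CUDA context.
    import pycuda.autoinit  # noqa: F401
    from pycuda import gpuarray

    device_data = gpuarray.to_gpu(host_data)  # host -> device transfer
    device_data_x2 = 2 * device_data          # pointwise multiplication on the GPU
    return device_data_x2.get()               # device -> host, returns a NumPy array


# Host array with an explicit float32 dtype, matching the C/C++ float type.
host_data = np.array([1, 2, 3, 4, 5], dtype=np.float32)
```

On a machine with a working CUDA setup, calling double_on_gpu(host_data) from IPython should return a NumPy array holding 2, 4, 6, 8, and 10. No explicit free is needed here, since the gpuarray objects release their device memory when they go out of scope.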

One thing to note is that we explicitly set the type of the host array to NumPy's float32 with the dtype option when we created it; this corresponds directly to the float type in C/C++. Generally speaking, it's a good idea to set data types explicitly with NumPy when we are sending data to the GPU. The reason for this is twofold. First, since we are using a GPU to increase the performance of our application, we don't want the overhead of an unnecessarily large type that may take up more computation time or memory. Second, since we will soon be writing portions of code in inline CUDA C, we will have to be very specific with types or our code won't work correctly, keeping in mind that C is a statically typed language.
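The memory cost of leaving the type unspecified is easy to check on the host with NumPy alone. The sketch below compares an array built from Python floats without a dtype, which NumPy stores as float64 (the counterpart of a C double), with the same values stored as float32; the variable names are illustrative only.

```python
import numpy as np

values = [1.0, 2.0, 3.0, 4.0, 5.0]

# Without a dtype, NumPy stores Python floats as 64-bit floats.
default_data = np.array(values)

# With an explicit dtype, each element takes 32 bits, like a C float.
explicit_data = np.array(values, dtype=np.float32)

print(default_data.dtype, default_data.nbytes)    # float64 40
print(explicit_data.dtype, explicit_data.nbytes)  # float32 20
```

The float64 array needs twice as many bytes, so it would occupy twice the device memory and take twice as much data to transfer to the GPU.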