We'll now be using our new knowledge of CUDA kernels to implement the parallel prefix algorithm, also known as the scan design pattern. We have already seen simple examples of this in the form of PyCUDA's InclusiveScanKernel and ReductionKernel functions in the previous chapter. We'll now look into this idea in a little more detail.

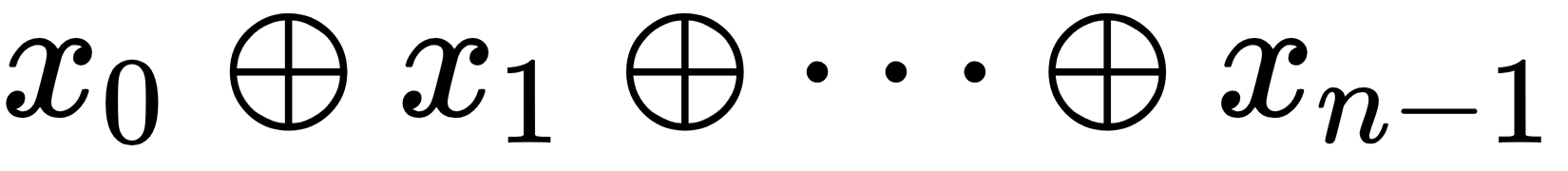

The central motivation of this design pattern is that we have a binary operator  , that is to say a function that acts on two input values and gives one output value (such as—+,

, that is to say a function that acts on two input values and gives one output value (such as—+,  ,

,  (maximum),

(maximum),  (minimum)), and collection of elements,

(minimum)), and collection of elements,  , and from these we wish to compute

, and from these we wish to compute  efficiently. Furthermore, we make the assumption that our binary operator

efficiently. Furthermore, we make the assumption that our binary operator  is associative—this means that, for any three elements, x, y, and z, we always have:

is associative—this means that, for any three elements, x, y, and z, we always have: .

.

We wish to retain the partial results, that is the n - 1 sub-computations— . The aim of the parallel prefix algorithm is to produce this collection of n sums efficiently. It normally takes O(n) time to produce these n sums in a serial operation, and we wish to reduce the time complexity.

. The aim of the parallel prefix algorithm is to produce this collection of n sums efficiently. It normally takes O(n) time to produce these n sums in a serial operation, and we wish to reduce the time complexity.

. (This is the case with PyCUDA.)

. (This is the case with PyCUDA.)There are actually several variations of the parallel prefix algorithm, and we'll first start with the simplest (and oldest) version first, which is called the naive parallel prefix algorithm.