Let's first briefly review how to create a single host thread in Python that can return a value to the host with a simple example. (This example can also be seen in the single_thread_example.py file under 5 in the repository.) We will do this by using the Thread class in the threading module to create a subclass of Thread, as follows:

import threading

class PointlessExampleThread(threading.Thread):

We now set up our constructor. We call the parent class's constructor and set up an empty variable within the object that will be the return value from the thread:

def __init__(self):

threading.Thread.__init__(self)

self.return_value = None

We now set up the run function within our thread class, which is what will be executed when the thread is launched. We'll just have it print a line and set the return value:

def run(self):

print 'Hello from the thread you just spawned!'

self.return_value = 123

We finally have to set up the join function. This will allow us to receive a return value from the thread:

def join(self):

threading.Thread.join(self)

return self.return_value

Now we are done setting up our thread class. Let's start an instance of this class as the NewThread object, spawn the new thread by calling the start method, and then block execution and get the output from the host thread by calling join:

NewThread = PointlessExampleThread()

NewThread.start()

thread_output = NewThread.join()

print 'The thread completed and returned this value: %s' % thread_output

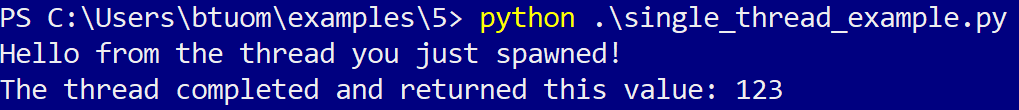

Now let's run this:

Now, we can expand this idea among multiple concurrent threads on the host to launch concurrent CUDA operations by way of multiple contexts and threading. We will now look at one last example. Let's re-use the pointless multiply/divide kernel from the beginning of this chapter and launch it within each thread that we spawn.

First, let's look at the imports. Since we are making explicit contexts, remember to remove pycuda.autoinit and add an import threading at the end:

import pycuda

import pycuda.driver as drv

from pycuda import gpuarray

from pycuda.compiler import SourceModule

import numpy as np

from time import time

import threading

We will use the same array size as before, but this time we will have a direct correspondence between the number of the threads and the number of the arrays. Generally, we don't want to spawn more than 20 or so threads on the host, so we will only go for 10 arrays. So, consider now the following code:

num_arrays = 10

array_len = 1024**2

Now, we will store our old kernel as a string object; since this can only be compiled within a context, we will have to compile this in each thread individually:

kernel_code = """

__global__ void mult_ker(float * array, int array_len)

{

int thd = blockIdx.x*blockDim.x + threadIdx.x;

int num_iters = array_len / blockDim.x;

for(int j=0; j < num_iters; j++)

{

int i = j * blockDim.x + thd;

for(int k = 0; k < 50; k++)

{

array[i] *= 2.0;

array[i] /= 2.0;

}

}

}

"""

Now we can begin setting up our class. We will make another subclass of threading.Thread as before, and set up the constructor to take one parameter as the input array. We will initialize an output variable with None, as we did before:

class KernelLauncherThread(threading.Thread):

def __init__(self, input_array):

threading.Thread.__init__(self)

self.input_array = input_array

self.output_array = None

We can now write the run function. We choose our device, create a context on that device, compile our kernel, and extract the kernel function reference. Notice the use of the self object:

def run(self):

self.dev = drv.Device(0)

self.context = self.dev.make_context()

self.ker = SourceModule(kernel_code)

self.mult_ker = self.ker.get_function('mult_ker')

We now copy the array to the GPU, launch the kernel, and copy the output back to the host. We then destroy the context:

self.array_gpu = gpuarray.to_gpu(self.input_array)

self.mult_ker(self.array_gpu, np.int32(array_len), block=(64,1,1), grid=(1,1,1))

self.output_array = self.array_gpu.get()

self.context.pop()

Finally, we set up the join function. This will return output_array to the host:

def join(self):

threading.Thread.join(self)

return self.output_array

We are now done with our subclass. We will set up some empty lists to hold our random test data, thread objects, and thread output values, similar to before. We will then generate some random arrays to process and set up a list of kernel launcher threads that will operate on each corresponding array:

data = []

gpu_out = []

threads = []

for _ in range(num_arrays):

data.append(np.random.randn(array_len).astype('float32'))

for k in range(num_arrays):

threads.append(KernelLauncherThread(data[k]))

We will now launch each thread object, and extract its output into the gpu_out list by using join:

for k in range(num_arrays):

threads[k].start()

for k in range(num_arrays):

gpu_out.append(threads[k].join())

Finally, we just do a simple assert on the output arrays to ensure they are the same as the input:

for k in range(num_arrays):

assert (np.allclose(gpu_out[k], data[k]))

This example can be seen in the multi-kernel_multi-thread.py file in the repository.