Machine translation is a subfield of computational linguistics concerned with translating text or speech from one language to another. Traditional machine translation systems typically rely on sophisticated feature engineering based on the statistical properties of text. More recently, deep learning has been applied to this problem in an approach known as Neural Machine Translation (NMT). An NMT system typically consists of two modules: an encoder and a decoder.

An NMT system first reads the source sentence using the encoder to build a thought vector: a sequence of numbers that represents the sentence's meaning. The decoder then processes this vector to emit a translation in the target language. This is called an encoder-decoder architecture. The encoder and decoder are typically forms of RNN. The following diagram shows an encoder-decoder architecture using stacked LSTMs. Here, the first layer is an embedding layer that represents the words of the source language as dense real-valued vectors. The vocabulary is predefined for both the source language and the target language. Words not in the vocabulary are replaced by a fixed token, `<unknown>`, and are represented by a fixed embedding vector. The input to this network is first the source sentence, then a boundary marker, `<s>`, which marks the end of the source sentence and the transition from encoding to decoding mode, and then the target sentence.
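To make the vocabulary handling concrete, here is a minimal sketch in plain Python of how words might be mapped to integer ids before the embedding lookup. The helper names (`build_vocab`, `encode`), the toy word lists, and the choice of id 0 for `<unknown>` are assumptions made for illustration; they are not taken from any particular NMT implementation.

```python
# Hypothetical toy setup: real systems build vocabularies from training data.
UNK = "<unknown>"
SOS = "<s>"
EOS = "</s>"

def build_vocab(words):
    """Map each word to an integer id, reserving id 0 for the unknown token."""
    vocab = {UNK: 0}
    for word in words:
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def encode(sentence, vocab):
    """Convert a whitespace-tokenized sentence to ids.

    Any word outside the vocabulary is mapped to the id of <unknown>,
    so it will share a single fixed embedding vector.
    """
    return [vocab.get(token, vocab[UNK]) for token in sentence.split()]

source_vocab = build_vocab(["i", "am", "a", "student"])
target_vocab = build_vocab([SOS, EOS, "je", "suis", "ici"])

# "teacher" is not in the source vocabulary, so it becomes id 0.
source_ids = encode("i am a teacher", source_vocab)
# In training mode, the target sentence is fed in after the <s> marker.
target_input_ids = encode("<s> je suis ici", target_vocab)
```

The integer ids produced here are what an embedding layer would turn into dense vectors; every out-of-vocabulary word ends up with the same id and therefore the same embedding.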

The input embedding layer is followed by two stacked LSTM layers. Then, the projection layer turns the top hidden states into logit vectors of dimension V (the vocabulary size of the target language). Cross-entropy loss is used to train the network with backpropagation. In training mode, both the source and target sentences are input to the network. In inference mode, only the source sentence is available. In that case, decoding can be done by several methods, such as greedy decoding, attention mechanisms combined with greedy decoding, and beam search decoding. We will cover the first two methods here.
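As a rough illustration of the projection layer and the loss for a single decoder time step, consider the following sketch in plain Python. The weight values, the hidden size of 2, and the vocabulary size V = 3 are made-up numbers, and the function names are hypothetical; a real system would use a deep learning framework and batch these operations.

```python
import math

def project(hidden, weights, bias):
    """Linear projection: produce one logit per target-vocabulary word."""
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(weights, bias)]

def cross_entropy(logits, target_id):
    """Softmax cross-entropy for one time step.

    Uses the log-sum-exp trick (subtracting the max logit) for
    numerical stability.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target_id]

# Assumed toy values: top hidden state of size 2, target vocabulary of size 3.
hidden = [0.5, -1.0]
W = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
b = [0.0, 0.0, 0.0]

logits = project(hidden, W, b)             # one logit per vocabulary word
loss = cross_entropy(logits, target_id=0)  # loss if word 0 is the correct one
```

During training, this per-step loss would be summed or averaged over all target positions and minimized with backpropagation.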

In the greedy decoding scheme (see the left-hand diagram of the two preceding diagrams), we choose the most likely word, that is, the one with the maximum logit value, as the emitted word and then feed it back to the decoder as the next input. This decoding process continues until the end-of-sentence marker, `</s>`, is produced as an output symbol.
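The greedy loop described above can be sketched as follows. This is a minimal illustration, not the decoder from any specific library: `step_fn` stands in for one step of a trained decoder, `toy_step` is a scripted placeholder that ignores its input, and `max_len` is an assumed safety limit in case the end-of-sentence marker is never produced.

```python
def greedy_decode(step_fn, initial_state, sos_id, eos_id, max_len=20):
    """Emit the arg-max word at each step and feed it back as the next input.

    step_fn(prev_id, state) must return (logits, new_state), where logits
    is a list with one score per target-vocabulary word.
    """
    tokens = []
    state = initial_state
    prev_id = sos_id
    for _ in range(max_len):
        logits, state = step_fn(prev_id, state)
        # Greedy choice: index of the maximum logit.
        prev_id = max(range(len(logits)), key=logits.__getitem__)
        if prev_id == eos_id:
            break  # stop once the end-of-sentence marker is produced
        tokens.append(prev_id)
    return tokens

def toy_step(prev_id, state):
    """Hypothetical stand-in for a trained decoder: emits a scripted sequence."""
    script = [3, 4, 2, 1]      # ids to favor at steps 0..3; id 1 plays </s>
    logits = [0.0] * 5
    logits[script[state]] = 1.0
    return logits, state + 1   # the state here is just a step counter

decoded = greedy_decode(toy_step, initial_state=0, sos_id=0, eos_id=1)
```

With the scripted decoder, the loop emits three word ids and stops when the fourth step produces the end-of-sentence id. Beam search differs in that it keeps several candidate prefixes at each step instead of only the single best word.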

The context vector generated by the source sentence's end-of-sentence marker must encode everything we need to know about the source sentence; it must fully capture its meaning. For long sentences, this means the network needs very long-term memory. Researchers have found that reversing the source sequence or feeding the source sequence twice helps the network remember things better. For languages such as French and German, which are quite similar to English, reversing the input makes sense. For Japanese, however, the last word of a sentence may be highly predictive of the first word in an English translation, so reversing can degrade translation quality. An alternative solution is to use an attention mechanism (shown in the right-hand diagram of the preceding two diagrams).

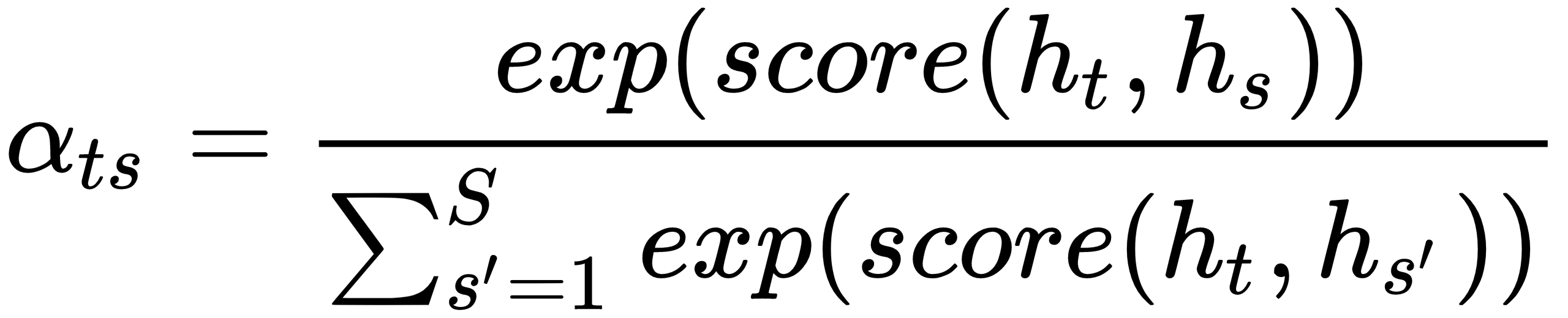

Now, instead of trying to encode the full source sentence into a fixed-length vector, the decoder is allowed to attend to different parts of the source sentence at each step of the output generation. Hence, we represent the attention-based context vector, $c_t$, for the $t$th target language word as a weighted sum of all the previous source hidden states: $c_t = \sum_s \alpha_{ts} h_s$. The attention weights are $\alpha_{ts} = \frac{\exp(\mathrm{score}(h_t, h_s))}{\sum_{s'} \exp(\mathrm{score}(h_t, h_{s'}))}$, and the score is calculated as follows: $\mathrm{score}(h_t, h_s) = h_t^\top W h_s$.

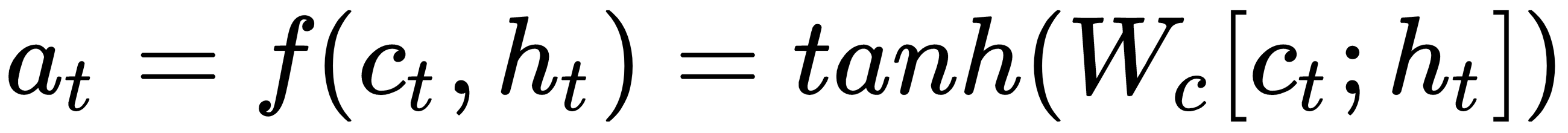

Here, $W$ is a weight matrix that is learned jointly with the RNN weights. This score function is called Luong's multiplicative style score. There are a few other variants of this score as well. Finally, the attention vector, $a_t$, is calculated by combining the context vector with the current target hidden state as follows: $a_t = \tanh(W_c [c_t; h_t])$, where $[c_t; h_t]$ denotes the concatenation of the two vectors and $W_c$ is another learned weight matrix.
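The attention computation can be sketched in plain Python as follows. The sketch assumes the Luong-style formulation: a multiplicative score $h_t^\top W h_s$, softmax-normalized attention weights, a context vector formed as the weighted sum of source hidden states, and an attention vector $\tanh(W_c [c_t; h_t])$. The function names, the matrix `W_c`, and all numeric values are illustrative assumptions, not learned parameters.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(matrix, vector):
    return [dot(row, vector) for row in matrix]

def softmax(xs):
    m = max(xs)                        # subtract the max for stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def luong_attention(h_t, source_states, W, W_c):
    """Return (attention weights, context vector, attention vector)."""
    # Multiplicative score: score(h_t, h_s) = h_t^T W h_s
    scores = [dot(h_t, matvec(W, h_s)) for h_s in source_states]
    weights = softmax(scores)
    # Context vector: weighted sum of the source hidden states.
    dim = len(source_states[0])
    context = [sum(a * h_s[i] for a, h_s in zip(weights, source_states))
               for i in range(dim)]
    # Attention vector: tanh(W_c [c_t; h_t]), with [;] as concatenation.
    attention_vec = [math.tanh(x) for x in matvec(W_c, context + h_t)]
    return weights, context, attention_vec

# Assumed toy values: three source hidden states of size 2.
h_t = [1.0, 0.0]
source_states = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
W = [[1.0, 0.0],
     [0.0, 1.0]]                       # identity, so the score is a dot product
W_c = [[1.0, 0.0, 0.0, 0.0],
       [0.0, 1.0, 0.0, 0.0]]           # maps the size-4 concatenation to size 2

weights, context, attention_vec = luong_attention(h_t, source_states, W, W_c)
```

In this toy example, the first source state is most similar to the target hidden state, so it receives the largest attention weight and dominates the context vector.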

The attention mechanism acts like a read-only memory: all the source hidden states are stored and then read at decoding time. The source code for NMT in TensorFlow is available here: https://github.com/tensorflow/nmt/.