Workload management

Workload management is the process of defining performance goals for the items of work in a system (such as in a CICSplex or across the network). Using a workload manager technology, the workload can be allocated to meet those performance goals. Workload management consists of two main features:

•Load balancing

•Affinity management

Load balancing (workload balancing) refers to the distribution of requests across multiple, available target regions. This allows you to increase the overall throughput of the system. There are different methods of workload balancing:

•The first approach is to use a predefined round-robin or randomized technique.

•The second approach is to use an advisor that checks the availability of the target servers before selecting the server to route the request to.

•The third approach is to have performance monitors in each server providing statistics that can be used to select a server based on its overall load.

Affinity management provides a way to direct requests to a specific server instance, such as a CICS region or Liberty server. Using affinities, you can route subsequent requests in a logical conversation to the same server that serviced the first request. An affinity is a characteristic of a CICS application that constrains transactions to run in a specific region or server because of shared state, such as temporary storage. For web applications, affinities can be an issue when load balancing HTTP requests because of the use of HTTP session data. For more information, see 3.2.3, “HTTP session management” on page 52.

Within CICS, the ability to distribute the workload across multiple resources is important because it can prevent a single CICS region from becoming constrained and allows multiple CICS regions to be better utilized. There are different methods that can be used to provide high system availability and workload management for CICS web applications running in a Liberty JVM. In this section, we discuss workload balancing as it relates to the following technologies:

•IP load balancing

•Web server plug-in

•CICSPlex SM workload management

3.1 IP load balancing

To avoid being dependent on a single CICS router region, consider using more than one Liberty owning region to share the incoming HTTP connections from the network. You can use several techniques to balance the connections between your Liberty owning regions. The z/OS Communications Server integrates TCP/IP and IBM Workload Manager (WLM) on z/OS to provide two main technologies that can be used:

•Port sharing

•Sysplex Distributor

3.1.1 Port sharing

TCP/IP port sharing is a simple way to balance HTTP connections across a group of cloned Liberty JVM servers, providing failover and workload balancing across multiple Liberty owning regions within a single logical partition (LPAR). This load balancing is based primarily on the number of established sockets to each port.

Each Liberty server is configured to listen on the same TCP/IP port number, with either the SHAREPORT or SHAREPORTWLM option specified for that port in the TCP/IP stack profile. This enables a group of cloned regions to listen on the same port. As incoming client connections arrive for this port, TCP/IP distributes them across the available CICS regions listening on the shared port. With SHAREPORT, TCP/IP uses a weighted round-robin approach that favors the CICS region with the fewest connections at the time of the incoming client connection request. With SHAREPORTWLM, the selection is instead based on WLM server-specific recommendations. Figure 3-1 illustrates port sharing across cloned CICS regions. This configuration allows multiple Liberty servers to listen on the same port on the same IP stack. In the example that is shown in Figure 3-1, CICSA and CICSB have Liberty servers that are listening on port 1234. All requests addressing this IP stack’s port 1234 are distributed between these two CICS regions.

Figure 3-1 TCP/IP SHAREPORT configuration

Port sharing is ideal for failover scenarios because it can rapidly detect failing regions. It also works in tandem with the second option provided by TCP/IP, Sysplex Distributor, to provide a combined high-availability approach across CICS regions in the same LPAR and across different LPARs in a sysplex.

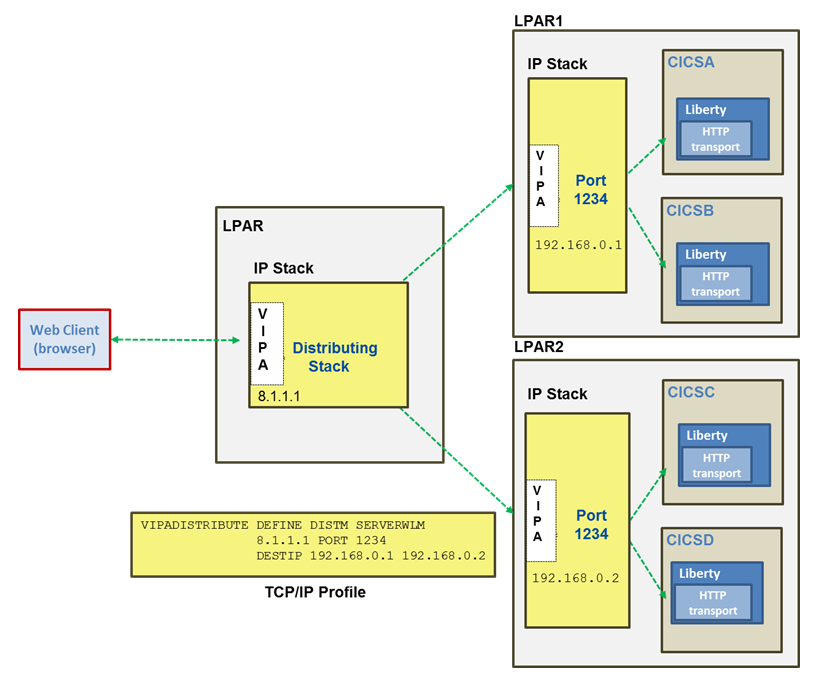
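The selection behavior described in this section can be illustrated with a minimal Python sketch. It is a simplified model under stated assumptions, not the algorithm that the TCP/IP stack implements: the `Listener` class, the `accept` function, and the rule that ties go to the first listener are all hypothetical and exist only to show how choosing the region with the fewest established connections spreads work across CICSA and CICSB.

```python
from dataclasses import dataclass


@dataclass
class Listener:
    """Hypothetical stand-in for one CICS region listening on the shared port."""
    name: str
    connections: int = 0  # number of currently established connections


def select_listener(listeners):
    """Return the listener with the fewest established connections.

    Assumption: ties go to the listener that appears first in the list.
    """
    return min(listeners, key=lambda listener: listener.connections)


def accept(listeners):
    """Simulate one incoming client connection and return the chosen region name."""
    chosen = select_listener(listeners)
    chosen.connections += 1
    return chosen.name


if __name__ == "__main__":
    regions = [Listener("CICSA"), Listener("CICSB")]
    for _ in range(4):
        print(accept(regions))
```

Because the model only counts connections, a region that stops accepting work would need to be removed from the list by the caller. In the real configuration, the stack itself tracks which listeners are available.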

3.1.2 Sysplex Distributor

The second option is to set up an Internet Protocol network with Sysplex Distributor, which is designed to address the requirement of one single network-visible IP address for the whole of the sysplex.

Sysplex Distributor uses dynamic virtual IP addresses (DVIPAs) to increase availability and help distribute the workload by allowing connections to be distributed across listeners on multiple IP stacks within a sysplex. This option must be used if the CICS regions reside on separate LPARs or connect to separate IP stacks within the same LPAR.

With this function, you can implement a DVIPA as a single network-visible IP address that is used for a set of servers belonging to the same sysplex. A client on the IP network sees the sysplex as one IP address, regardless of the number of servers in the backend. With Sysplex Distributor, clients receive the benefits of workload distribution and a failover mechanism that is positioned at the entry to the sysplex.

There are several advantages to using Sysplex Distributor over other load balancing implementations:

•Cross-system coupling facility (XCF) links can be used between the distributing stack and target servers.

•Sysplex Distributor provides a total z/OS solution for TCP/IP workload distribution.

•Sysplex Distributor provides real-time load balancing for TCP/IP applications, even if clients cache the IP address of the server.

•Sysplex Distributor provides for takeover of the virtual IP address (VIPA) by a backup system if the distributing stack fails.

•Sysplex Distributor enables nondisruptive takeback of the VIPA by the original owner to get the workload back to where it belongs. The distributing function can be backed up and taken over.

HTTP listeners for multiple Liberty servers can be configured to listen on the same distributed DVIPA and port, and each VIPA is defined with the VIPADISTRIBUTE option. The TCP/IP stack then balances connection requests across the listeners.

Figure 3-2 Sysplex Distributor with TCP port sharing

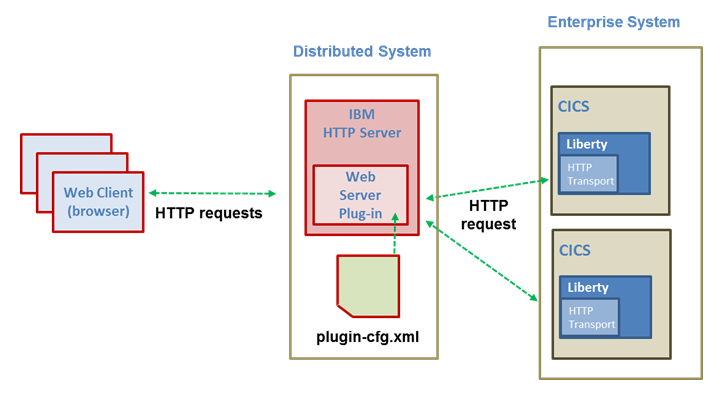

3.2 Web server plug-in

The web server plug-in is provided with WebSphere Application Server or WebSphere Application Server Liberty Profile and is licensed for usage with CICS TS. The plug-in acts as an HTTP client that forwards the HTTP requests from the web server to the application server, which in this case is the Liberty server in a CICS region. It is implemented as a library that is installed into the web server and loaded at startup. It uses HTTP to forward requests from the web server to the Liberty server in CICS.

The web server can run either on the same z/OS system as the application server, as shown in Figure 3-4, or on a different system than the application server, as shown in Figure 3-3.

Figure 3-3 Remote web server plug-in scenario

Figure 3-4 Local web server plug-in scenario

There are several reasons why you might want to use a web server and associated plug-in with a web application deployed to a Liberty JVM server:

•The plug-in provides workload management and failover capabilities by distributing requests evenly to multiple Liberty servers and routing requests away from a failed Liberty server.

•The web server provides an additional hardware layer between the web browser and the application server, which can be incorporated into a secure DMZ architecture. Existing HTTP-based security procedures, such as SSL client authentication, can be used in the DMZ layer to protect the enterprise systems and then valid requests forwarded onto the enterprise system by the plug-in.

•Encrypted SSL connections can be terminated at the web server, thus removing the requirement for SSL processing in the enterprise system.

•The plug-in provides integration with a web server for the serving of static content without doing a full round-trip to the application server and back. For more information about optimizing the serving of static content, see Chapter 7, “Configuring the web server plug-in” on page 133.

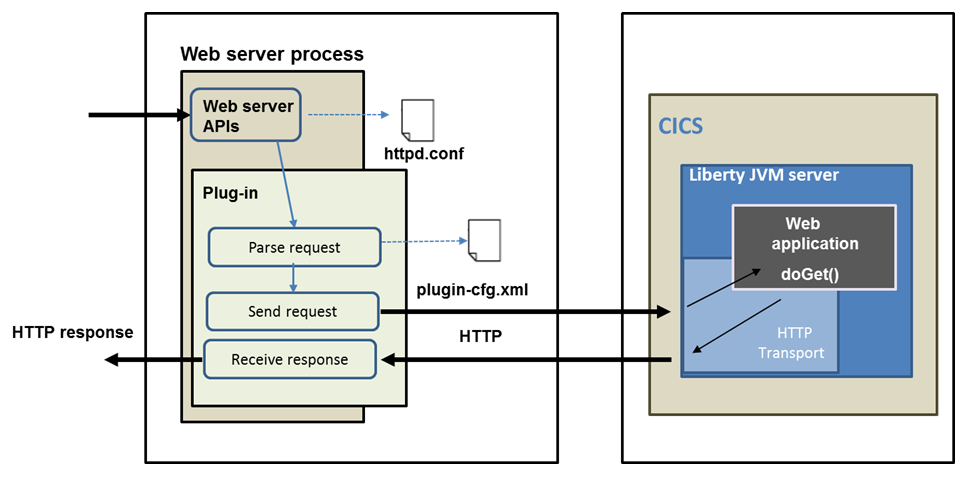

Figure 3-5 Web server plug-in processing

The web server plug-in consists of the following components (Figure 3-5):

•Web server process: This receives the request first

•The httpd.conf file: Contains the reference to the plug-in.so/dll file and reference to the plug-in configuration file

•The web server plug-in: This is loaded by the web server during startup and runs inside the same process as the web server

•The plugin-cfg.xml file: The plug-in configuration file, which contains information about which URLs are served by the application servers

•The web container: In the CICS scenario, this is the Liberty JVM server that handles the request

The web server gives the plug-in an opportunity to handle every request first. The plug-in takes a request and checks it against the configuration data. If the configuration data maps the URL for the HTTP request to the host name of an application server, the plug-in uses this information to forward the request on to the application server. If the plug-in is not configured to forward the request (URL), the request goes back to the web server for servicing.
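The decision that the plug-in makes for each request can be sketched in a few lines of Python. This is an illustration only, not the plug-in's matching logic: the `resolve_target` function, the `routes` list, and the convention that a pattern ending in `/*` matches everything under a prefix are assumptions made for the example.

```python
def resolve_target(url_path, routes):
    """Return the application server for url_path, or None.

    A return value of None models the case where the plug-in is not
    configured to forward the URL, so the web server services it.

    routes: list of (pattern, server) pairs. A pattern that ends in "/*"
    matches the prefix itself and any path under it. Any other pattern
    must match the path exactly. (Hypothetical matching rules.)
    """
    for pattern, server in routes:
        if pattern.endswith("/*"):
            prefix = pattern[:-2]
            if url_path == prefix or url_path.startswith(prefix + "/"):
                return server
        elif url_path == pattern:
            return server
    return None


if __name__ == "__main__":
    # Hypothetical URL patterns and Liberty server names.
    routes = [("/shop/*", "liberty1"), ("/status", "liberty2")]
    for path in ("/shop/cart", "/status", "/images/logo.png"):
        target = resolve_target(path, routes)
        print(path, "->", target if target else "handled by the web server")
```

Returning `None` rather than raising an error mirrors the behavior described above, where an unmapped request is handed back to the web server.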

The plug-in configuration file needs to be regenerated and propagated to the web servers when there are changes to your Liberty server configuration that affect how requests are routed from the web server to the application server. These changes include:

•Installing an application

•Creating or changing a virtual host

•Creating a new Liberty server

•Modifying HTTP transport settings

3.2.1 Load balancing

To do load balancing using plug-in files, the web server maintains information about the back-end web containers and applications. Based on these configured settings, the web server forwards requests to its corresponding back-end web container. The plug-in configuration file contains all of the information about the target servers, such as the application URLs, session affinities, and weightings.

The plug-in can be configured to route requests using one of two load balancing methods:

•Round-robin load balancing is the default algorithm where the workload is distributed across the group of servers based on the configured LoadBalanceWeights. The first request that arrives is routed to a randomly selected server. After that, the plug-in uses the next server in the list, and so forth. Each time that a server is chosen, its load balance weight is decremented by one. Any server with a weight below 0 is no longer considered for new route requests, although affinity requests still go through to that server. When all servers have a weight of less than 0, the plug-in resets the weights to the starting values.

•Random load balancing is where the requests are forwarded among the group of servers on a random basis. This approach does not use the load balance weight.
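The round-robin method described above can be modeled with a short Python sketch. It follows the wording of this section literally (decrement on each selection, skip servers whose weight is below 0, reset when all weights are below 0) and is not taken from the plug-in source, so details of the real implementation might differ. The class name, the `start_index` parameter, and the server names are hypothetical. The sketch assumes non-negative starting weights and ignores session affinity.

```python
import random


class WeightedRoundRobin:
    """Simplified model of weighted round-robin selection (no session affinity)."""

    def __init__(self, weights, start_index=None):
        # weights: dict mapping server name -> configured LoadBalanceWeight (>= 0)
        self.start = dict(weights)
        self.current = dict(weights)
        self.names = list(weights)
        # The first request goes to a randomly selected server unless the
        # caller fixes the starting position (useful for repeatable tests).
        if start_index is None:
            start_index = random.randrange(len(self.names))
        self.index = start_index

    def next_server(self):
        """Return the server that receives the next new (non-affinity) request."""
        # Reset the weights once every server has dropped below zero.
        if all(weight < 0 for weight in self.current.values()):
            self.current = dict(self.start)
        # Walk the list from the current position, skipping exhausted servers.
        for _ in range(len(self.names)):
            name = self.names[self.index]
            self.index = (self.index + 1) % len(self.names)
            if self.current[name] >= 0:
                self.current[name] -= 1
                return name
        raise RuntimeError("no eligible server; starting weights must be >= 0")


if __name__ == "__main__":
    balancer = WeightedRoundRobin({"NodeA_server1": 2, "NodeB_server1": 0})
    print([balancer.next_server() for _ in range(8)])
```

Running the sketch with different weights shows that a server with a higher weight stays eligible for more selections in each cycle before the weights are reset.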

Using the web server plug-in, you can distribute the load between a single web server and multiple Liberty JVM servers running in CICS on z/OS. However, the load balancing options are impacted by the session affinity. After a session is created at the first request, all the subsequent requests have to be served by the same Liberty server. The plug-in retrieves the Liberty server that serviced the previous request by analyzing the session identifier and tries to route to this same server.

3.2.2 Failover

When the plug-in can no longer connect to or receive a response from a specific Liberty server, the session affinity is broken and the Liberty server is marked as down. This is considered a plug-in failover. The web server plug-in no longer attempts to route requests to that server. There are three plug-in properties that affect failover directly.

ServerIOTimeout is the number of seconds the plug-in waits for a response from the Liberty server before it times out the request. The recommended value is 120 seconds. Failover typically does not occur the first time that this time limit is exceeded for either a request or a response. Instead, the web server plug-in tries to resend the request to the same Liberty server, using a new stream. If the specified time is exceeded a second time, the web server plug-in marks the server as unavailable, and initiates the failover process.

ConnectTimeout is the number of seconds the plug-in should wait for a response when trying to open a socket connection to the Liberty server. The default is 0, which means to never time out. It is recommended to keep this value small. If a request connection exceeds this limit, or the Liberty server returns an HTTP 5xx response, the web server plug-in marks the server as down, and attempts to connect to the next application server in the list. If the plug-in successfully connects to another Liberty server, all requests that were pending for the down Liberty server are sent to this other server.

RetryInterval is the number of seconds the plug-in waits before attempting to route requests to a Liberty server that was previously marked as down. The recommended value is 60 seconds as most servers are recoverable.
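The interaction between marking a server as down and RetryInterval can be sketched as follows. This is a deliberately simplified model and not the plug-in implementation: it marks a server as down on the first connection failure or timeout, and it does not model the single resend that the plug-in attempts before a ServerIOTimeout failover. The `ServerStatus` class, the `route` function, and the caller-supplied `send` callback are hypothetical names.

```python
import time


class ServerStatus:
    """Tracks whether one Liberty server is currently considered down."""

    def __init__(self, name, retry_interval=60.0, clock=time.monotonic):
        self.name = name
        self.retry_interval = retry_interval  # seconds, mirrors RetryInterval
        self._clock = clock                   # injectable for testing
        self._down_since = None               # None means the server is up

    def mark_down(self):
        self._down_since = self._clock()

    def mark_up(self):
        self._down_since = None

    def is_eligible(self):
        """A server is eligible if it is up or its retry interval has elapsed."""
        if self._down_since is None:
            return True
        return self._clock() - self._down_since >= self.retry_interval


def route(servers, send):
    """Try each eligible server in list order and return the first response.

    send: function that takes a server name and either returns a response
    or raises ConnectionError or TimeoutError.
    """
    for server in servers:
        if not server.is_eligible():
            continue
        try:
            response = send(server.name)
        except (ConnectionError, TimeoutError):
            server.mark_down()  # fail over to the next server in the list
            continue
        server.mark_up()
        return response
    raise RuntimeError("no Liberty server available")
```

Passing the clock in as a parameter keeps the sketch testable without waiting for a real retry interval to elapse.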

If a Liberty server responds to an HTTP request with an application failure error, such as the HTTP Not Found error 404, the plug-in does not view this as a permanent failure. This response code will be returned by a Liberty JVM server for unknown URLs during the JVM server startup process as Liberty applications are being started.

3.2.3 HTTP session management

In some web applications, application state data is dynamically created as users navigate through the website. Where the user goes next, and what the application displays as the user’s next page, or next choice, depends on what the user has chosen previously from the site. For this to occur, a web application needs a mechanism to hold the user’s state information over a period of time. However, HTTP does not recognize or maintain a user’s state. It treats each user request as a discrete, independent interaction.

The Java EE specification provides a mechanism known as a session for web applications to maintain a user’s state information. All requests in a session need to be serviced by the same JVM server. Otherwise, a JVM that sees only some of the requests does not have the session state it needs to process them. This is known as a session affinity. The web server plug-in ensures that the session affinity is maintained in the following way: Each server Clone ID is appended to the session ID. When an HTTP session is created, its ID is passed back to the browser as part of a cookie or URL encoding. When the browser makes further requests, the cookie or URL encoding is sent back to the web server. The web server plug-in examines the HTTP session ID in the cookie or URL encoding, extracts the unique ID of the cluster member handling the session, and forwards the request to that member.
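The affinity lookup can be illustrated with a small Python sketch. It assumes that the Clone ID follows the session ID after a colon separator. The cookie value `0000abc123`, the function names, and the server mapping are hypothetical, so treat this as a model of the idea rather than a description of the exact cookie format.

```python
def extract_clone_id(session_cookie, separator=":"):
    """Return the trailing Clone ID from a session cookie value, or None.

    Assumption: the Clone ID is the text after the last separator.
    """
    _, found, clone_id = session_cookie.rpartition(separator)
    return clone_id if found and clone_id else None


def pick_server(session_cookie, servers_by_clone_id):
    """Return the server that owns the session, or None if there is no affinity.

    A result of None means the request can be load balanced normally.
    """
    if not session_cookie:
        return None
    return servers_by_clone_id.get(extract_clone_id(session_cookie))


if __name__ == "__main__":
    servers = {"vuel491u": "NodeA_server1"}
    print(pick_server("0000abc123:vuel491u", servers))
    print(pick_server(None, servers))
```

A request without a session cookie, or with a Clone ID that no longer maps to a known server, falls through to the normal load balancing method.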

The application server ID can be seen in the web server plug-in configuration file, as shown in Example 3-1.

Example 3-1 Server ID from the plugin-cfg.xml file

<?xml version="1.0" encoding="ISO-8859-1"?>
<!--HTTP server plug-in config file for the cell ITSOCell generated on 2004.10.15 at 07:21:03 PM BST-->
<Config>
......
   <ServerCluster Name="MyCluster">
      <Server CloneID="vuel491u" LoadBalanceWeight="2" Name="NodeA_server1">
         <Transport Hostname="wan" Port="9080" Protocol="http"/>
         <Transport Hostname="wan" Port="9443" Protocol="https">
......
</Config>

Liberty keeps information about the user’s session on the server. It passes the user a session ID, which correlates an incoming user request with a session object maintained on the server. By default, Liberty stores session objects in memory. When session data must be maintained across a server restart or an unexpected failure, you can configure the Liberty server to persist the session data to a database using the sessionDatabase-1.0 feature. This configuration allows multiple servers to share the same session data, and session data can be recovered if there is a failover.

For more information about how to set up and configure the web server plug-in for usage with CICS Liberty, see Chapter 7, “Configuring the web server plug-in” on page 133.

3.3 CICSPlex SM workload management

Workload management in CICS can be achieved by dynamically routing work between several CICS regions, which means that you have several CICS address spaces to manage. You can choose to run these regions as separately connected systems in a multiregion operation (MRO) complex, or you can define them as CICSPlex System Manager (CICSPlex SM)-managed regions. Multiple CICS regions can communicate with each other and cooperate to handle inbound work requests. This specialized type of cluster is called a CICSplex.

Typically, a CICSplex consists of one or more terminal-owning regions (TORs) connected to a group of application-owning regions (AORs). The workload originates in the TORs and is then routed to one of the available AORs. For web applications, the work is not initiated from a terminal, but from a web browser that sends an HTTP request to the HTTP listener in a Liberty JVM server. We refer to each CICS region that hosts a Liberty JVM server as a Liberty-owning region (LOR). Each LOR can then use dynamic routing of distributed program link (DPL) requests to the business logic in a set of remote AORs.

Figure 3-6 CICSPlex SM dynamic routing with Liberty

Using dynamic routing can provide high availability as well as manage the workload within a CICS sub-system. CICSPlex SM Workload Management (WLM) can be used to distribute the workload between multiple target regions. It augments its workload management routing decisions by using the current status information posted from the CICS regions. To optimize the workload routing in a sysplex, you must configure and monitor a region status (RS) server as part of a coupling facility data table.

CICSPlex SM Workload Management facilities create a list of suitable target candidate CICS regions based on:

•The transaction

•The terminal ID, LU-name, user ID, or process type

The list of candidate targets is based on the workload to which the requesting or routing region belongs.

CICSPlex SM does not do the routing. CICS still performs the routing based on information received from CICSPlex SM.

CICSPlex SM Workload Management is based on workloads. A workload determines the routing regions to which it applies and, through routing rules, the behavior of the work entering those routers. When you establish a workload, you associate the work itself with the CICS regions to form a single, dynamic entity. Within this entity, you can route the work in two ways:

•Using workload balancing: You can route the task to a target region that is selected based on the availability and activity level of the regions.

•Using workload separation: You can route the task to a subset of the target regions based on specific criteria.

3.3.1 Workload balancing

Workload balancing is the process that decides which target region is considered the most suitable to route the task to, assuming that it is possible to send work there, and it does not have an affinity in place. It is the most basic configuration because it allows you to balance the dynamic transactions and program links across all of the available target CICS regions that are associated with the workload. It does not distribute the work evenly or consistently. It simply provides CICS with the best target region at the moment of the request from all of the possible candidates. Workload balancing uses a combination of health factors to determine which region is the best fit and requires that three items are in place:

•A workload specification (WLMSPEC) to identify the default target scope for the workload. This is associated with each CICS region that specifies EYU9XLOP as the dynamic routing program or distributed routing program in the system initialization table (SIT).

•A CICS system definition (CSYSDEF) for each CICS system that specifies the Workload Manager status of the system.

•A connection from the CICS system to a CICSPlex SM address space (CMAS), which allows the workload management facility to initialize for transaction routing.

Figure 3-7 Monitoring of health factors for workload balancing

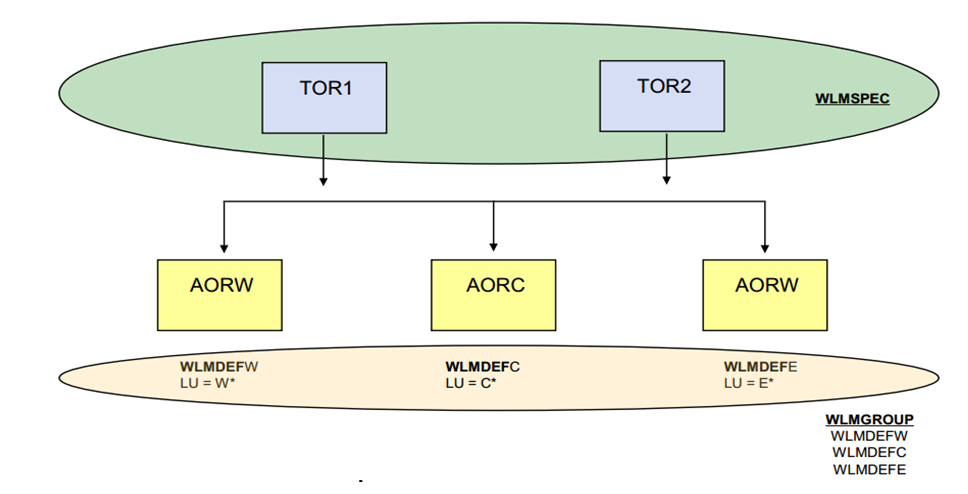

3.3.2 Workload separation

Workload separation allows you to separate tasks and program occurrences and direct them to a subset of target regions. The workload can be balanced across this subset of target regions. The target scope for a separated workload can vary from a single AOR to a large AOR group consisting of multiple CICS regions. If an AOR group is the target, the routing algorithm is applied to select the most suitable region from those defined to it.

The criteria that you use to separate transactions or programs can be based on the following items:

•The terminal ID and user ID that are associated with a transaction or program occurrence

•The process type that is associated with the CICS business transaction services (BTS) activity

•The transaction ID

•A selected target region based on its affinity relationship and lifetime
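The relationship between workload separation and workload balancing can be sketched in Python. This is a conceptual model only and not the CICSPlex SM routing algorithm, which weighs several health factors. Here a single hypothetical load number per region stands in for those factors, and the function name, rule format, transaction IDs, and region names are assumptions made for the example.

```python
def choose_target(request, separation_rules, default_scope, load):
    """Pick a target region for a request.

    request: dict with optional keys such as "tranid" and "userid".
    separation_rules: list of (criteria, scope) pairs. criteria is a dict
        whose items must all match the request. scope is the list of
        candidate regions for matching work (workload separation).
    default_scope: regions used when no rule matches (workload balancing).
    load: dict mapping region name -> current load (lower is better).
    """
    scope = default_scope
    for criteria, rule_scope in separation_rules:
        if all(request.get(key) == value for key, value in criteria.items()):
            scope = rule_scope
            break
    # Balance within the selected scope by choosing the least loaded region.
    return min(scope, key=lambda region: load[region])


if __name__ == "__main__":
    load = {"AOR1": 5, "AOR2": 2, "AOR3": 9, "AOR4": 1}
    all_regions = ["AOR1", "AOR2", "AOR3", "AOR4"]
    rules = [({"tranid": "PAY1"}, ["AOR1", "AOR3"])]
    print(choose_target({"tranid": "PAY1"}, rules, all_regions, load))
    print(choose_target({"tranid": "INQ1"}, rules, all_regions, load))
```

The sketch shows why the two techniques are complementary: separation narrows the set of candidate regions, and balancing still selects the most suitable region within that set.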

To perform workload separation, you also need a few more resources:

•A transaction group (TRANGRP) to identify the characteristics of a set of dynamic transactions.

•The WLM definition (WLMDEF) to identify the target scope used to route the transaction in the TRANGRP.

•A WLM group (WLMGROUP) that contains one or more WLMDEFs that are to be installed together. The WLMGROUP can be associated with a WLMSPEC, in which case all of the child WLMDEFs are installed at workload initialization.

Figure 3-8 Resources needed for workload separation

Workload balancing and workload separation can be active concurrently in the same or different workloads associated with a CICSplex. Workload balancing can be used in addition to, or in place of, workload separation.
