Hints, tips, and preferred practices

This chapter provides you with hints, tips, and preferred practices for IBM Spectrum Archive Enterprise Edition (IBM Spectrum Archive EE). It covers various aspects of IBM Spectrum Scale, including reuse of tape cartridges, scheduling, and disaster recovery (DR). Some aspects might overlap with functions that are described in Chapter 6, “Managing daily operations of IBM Spectrum Archive Enterprise Edition” on page 129, and Chapter 9, “Troubleshooting IBM Spectrum Archive Enterprise Edition” on page 293. However, it is important to list them here in the context of hints, tips, and preferred practices.

This chapter includes the following topics:

•7.26, “Backing up files within file systems that are managed by IBM Spectrum Archive EE” on page 264

|

Important: All of the command examples in this chapter use the command without the full file path name because we added the IBM Spectrum Archive EE directory (/opt/ibm/ltfsee/bin) to the PATH variable of the operating system.

|

7.1 Preventing migration of the .SPACEMAN and metadata directories

This section describes an IBM Spectrum Scale policy rule that you should have in place to help ensure the correct operation of your IBM Spectrum Archive EE system.

You can prevent migration of the .SPACEMAN directory and the IBM Spectrum Archive EE metadata directory of an IBM General Parallel File System (GPFS) file system by excluding these directories with an IBM Spectrum Scale policy rule. Example 7-1 shows how an exclude statement can look in an IBM Spectrum Scale migration policy file where the metadata directory starts with the text “/ibm/glues/.ltfsee”.

Example 7-1 IBM Spectrum Scale sample directory exclude statement in the migration policy file

define(

user_exclude_list,

(

PATH_NAME LIKE '/ibm/glues/.ltfsee/%'

OR PATH_NAME LIKE '%/.SpaceMan/%'

OR PATH_NAME LIKE '%/.snapshots/%'

)

)

For more information and detailed examples, see 6.11.2, “Threshold-based migration” on page 168 and 6.11.3, “Manual migration” on page 173.

7.2 Maximizing migration performance with redundant copies

To minimize drive mounts/unmounts and to maximize performance with multiple copies, set the mount limit per tape cartridge pool to equal the number of tape drives in the node group divided by the number of copies. The mount limit attribute of a tape cartridge pool specifies the maximum allocated number of drives that are used for migration for the tape cartridge pool. A value of 0 means no limit and is also the default value.

For example, if there are four drives and two copies initially, set the mount limit to 2 for the primary tape cartridge pool and 2 for the copy tape cartridge pool. These settings maximize the migration performance because the primary and copy jobs run in parallel, with each tape cartridge pool using two tape drives. This action also avoids unnecessary mounts/unmounts of tape cartridges.
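The rule of thumb above can be expressed as a small shell helper. This is a minimal sketch, not part of the product: the function name mount_limit is hypothetical, and the clamp to a minimum of 1 is an assumption that is added here because a value of 0 means no limit. The pool and library names are the ones that are used in Example 7-2, and the command is only printed, not run.

```shell
# Hypothetical helper: mount limit = drives in the node group / number of copies.
mount_limit() {
    local drives=$1
    local copies=$2
    if [ "$copies" -le 0 ]; then
        echo "copies must be at least 1" >&2
        return 1
    fi
    # Integer division; drives that do not divide evenly stay unassigned.
    local limit=$(( drives / copies ))
    # Assumption: never emit 0, because 0 means "no limit" for a pool.
    if [ "$limit" -lt 1 ]; then
        limit=1
    fi
    echo "$limit"
}

# Print (do not run) the command for four drives and two copies.
echo "eeadm pool set pool1 -l lib_saitama -a mountlimit -v $(mount_limit 4 2)"
```

Repeat the printed command for each tape cartridge pool that receives a copy, for example the primary pool and the copy pool.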

To show the current mount limit setting for a tape cartridge pool, run the following command:

eeadm pool show <poolname> [-l <libraryname>] [OPTIONS]

To set the mount limit setting for a tape cartridge pool, run the following command:

eeadm pool set <poolname> [-l <libraryname>] -a <attribute> -v <value>

To set the mount limit attribute to 2, run the eeadm pool show and eeadm pool set commands, as shown in Example 7-2.

Example 7-2 Set the mount limit attribute to 2

[root@saitama1 ~]# eeadm pool show pool1 -l lib_saitama

Attribute Value

poolname pool1

poolid 813ee595-2191-4e32-ae0e-74714715bb43

devtype 3592

mediarestriction none

format E08 (0x55)

worm no (0)

nodegroup G0

fillpolicy Default

owner System

mountlimit 0

lowspacewarningenable yes

lowspacewarningthreshold 0

nospacewarningenable yes

mode normal

[root@saitama1 ~]# eeadm pool set pool1 -l lib_saitama -a mountlimit -v 2

[root@saitama1 ~]# eeadm pool show pool1 -l lib_saitama

Attribute Value

poolname pool1

poolid 813ee595-2191-4e32-ae0e-74714715bb43

devtype 3592

mediarestriction none

format E08 (0x55)

worm no (0)

nodegroup G0

fillpolicy Default

owner System

mountlimit 2

lowspacewarningenable yes

lowspacewarningthreshold 0

nospacewarningenable yes

mode normal

7.3 Changing the SSH daemon settings

The default values for MaxSessions and MaxStartups are too low and must be increased to allow for successful operations with IBM Spectrum Archive EE. MaxSessions specifies the maximum number of open sessions that is permitted per network connection. The default is 10.

MaxStartups specifies the maximum number of concurrent unauthenticated connections to the SSH daemon. Additional connections are dropped until authentication succeeds or the LoginGraceTime expires for a connection. The default is 10:30:100, which indicates:

•10 (start): Threshold of unauthenticated connections. The daemon starts to drop connections from this point on

•30 (rate): Percentage chance of dropping once the start value is reached (increases linearly beyond start value)

•100 (full): Maximum number of connections. All connection attempts will be dropped beyond this point
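To make the start:rate:full behavior more concrete, the following sketch computes the approximate drop percentage for a given number of unauthenticated connections. It only mirrors the linear increase that is described in the list above. The function name drop_percent is hypothetical, and the exact integer arithmetic inside a particular OpenSSH release is an assumption.

```shell
# Hypothetical helper: drop percentage for n unauthenticated connections,
# given the start, rate, and full values of MaxStartups.
drop_percent() {
    local start=$1
    local rate=$2
    local full=$3
    local n=$4
    if [ "$n" -lt "$start" ]; then
        echo 0        # below the threshold: nothing is dropped
    elif [ "$n" -ge "$full" ]; then
        echo 100      # at or beyond full: everything is dropped
    else
        # Linear interpolation from rate (at start) to 100 (at full).
        echo $(( (100 - rate) * (n - start) / (full - start) + rate ))
    fi
}

# Default setting 10:30:100 with 55 pending connections.
drop_percent 10 30 100 55
```

With the default values, roughly two out of three new connections would be refused at 55 pending connections, which illustrates why the defaults are too low for a system that opens many SSH connections at once.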

To change MaxSessions to 60 and MaxStartups to 1024, complete the following steps:

1. Edit the /etc/ssh/sshd_config file to set the MaxSessions and MaxStartups values:

MaxSessions = 60

MaxStartups = 1024

2. Restart the sshd service by running the following command:

systemctl restart sshd.service

|

Note: Slow SSH connections can have several causes. Often, disabling GSSAPI authentication and reverse DNS lookups resolves the problem and speeds up SSH. To do so, set the following lines in the sshd_config file:

GSSAPIAuthentication no

UseDNS no

|

7.4 Setting mmapplypolicy options for increased performance

The default values of the mmapplypolicy command options must be changed when running with IBM Spectrum Archive EE. Review the following options and set or increase their values as described for enhanced performance:

•-B MaxFiles

Specifies how many files are passed for each invocation of the EXEC script. The default value is 100. If the number of files exceeds the value that is specified for MaxFiles, mmapplypolicy starts the external program multiple times.

The preferred value for IBM Spectrum Archive EE is 10000.

•-m ThreadLevel

The number of threads that are created and dispatched within each mmapplypolicy process during the policy execution phase. The default value is 24.

The preferred value for IBM Spectrum Archive EE is 2x the number of drives.

•--single-instance

Ensures that, for the specified file system, only one instance of mmapplypolicy that is started with the --single-instance option can run at one time. If another instance of mmapplypolicy is started with the --single-instance option, this invocation does nothing and terminates.

As a preferred practice, set the --single-instance option when running with IBM Spectrum Archive EE.

•-s LocalWorkDirectory

Specifies the directory to be used for temporary storage during mmapplypolicy command processing. The default directory is /tmp. The mmapplypolicy command stores lists of candidate and chosen files in temporary files within this directory.

When you run mmapplypolicy, it creates several temporary files and file lists. If the specified file system or directories contain many files, this process can require a significant amount of temporary storage. The required storage is proportional to the number of files (NF) being acted on and the average length of the path name to each file (AVPL).

To make a rough estimate of the space required, estimate NF and assume an AVPL of 80 bytes. With an AVPL of 80, the space required is roughly 300 X NF bytes of temporary space.

•-N {all | mount | Node[,Node...] | NodeFile | NodeClass}

Specifies a set of nodes to run parallel instances of policy code for better performance. The nodes must be in the same cluster as the node from which the mmapplypolicy command is issued. All node classes are supported.

If the -N option is not specified, then the command runs parallel instances of the policy code on the nodes that are specified by the defaultHelperNodes attribute of the mmchconfig command. If the defaultHelperNodes attribute is not set, then the list of helper nodes depends on the file system format version of the target file system. If the target file system is at file system format version 5.0.1 or later (file system format number 19.01 or later), then the helper nodes are the members of the node class managerNodes. Otherwise, the command runs only on the node where the mmapplypolicy command is issued.

|

Note: When using the -N option, specify only the node class that is defined for the IBM Spectrum Archive EE nodes. This restriction does not apply when the -I defer or -I prepare option is used.

|

•-g GlobalWorkDirectory

Specifies a global work directory in which one or more nodes can store temporary files during mmapplypolicy command processing. For more information about specifying more than one node to process the command, see the description of the -N option. For more information about temporary files, see the description of the -s option.

The global directory can be in the file system that mmapplypolicy is processing or in another file system. The file system must be a shared file system, and it must be mounted and available for reading and writing by every node that will participate in the mmapplypolicy command processing.

If the -g option is not specified, then the global work directory is the directory that is specified by the sharedTmpDir attribute of the mmchconfig command. If the sharedTmpDir attribute is not set to a value, then the global work directory depends on the file system format version of the target file system:

– If the target file system is at file system format version 5.0.1 or later (file system format number 19.01 or later), the global work directory is the .mmSharedTmpDir directory at the root level of the target file system.

– If the target file system is at a file system format version that is earlier than 5.0.1 then the command does not use a global work directory.

If the global work directory that is specified by -g option or by the sharedTmpDir attribute begins with a forward slash (/) then it is treated as an absolute path. Otherwise it is treated as a path that is relative to the mount point of the file system or the location of the directory to be processed.

If both the -g option and the -s option are specified, then temporary files can be stored in both the specified directories. In general, the local work directory contains temporary files that are written and read by a single node. The global work directory contains temporary files that are written and read by more than one node.

If both the -g option and the -N option are specified, then mmapplypolicy uses high-performance, fault-tolerant protocols during execution.

|

Note: It is always a preferred practice to set the temporary directory to something other than /tmp in case the temporary files get large. This can be the case in large file systems, and the use of a directory in the IBM Spectrum Scale file system is suggested.

|
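The preferred values for -B and -m and the temporary-space estimate for the -s option can be combined in a short helper script. The following is a sketch under stated assumptions: the function names, the policy file /root/migrate_policy.txt, and the work directory /ibm/gpfs/tmp are hypothetical, and the command line is only printed so that it can be reviewed before it is run.

```shell
# Rough estimate from the -s description: about 300 bytes of temporary
# space per file when the average path length is 80 bytes.
policy_tmp_bytes() {
    echo $(( 300 * $1 ))
}

# Preferred -m value: twice the number of tape drives.
policy_threads() {
    echo $(( 2 * $1 ))
}

# Arguments: device, policy file, number of drives, local work directory.
build_policy_cmd() {
    echo "mmapplypolicy $1 -P $2 -B 10000 -m $(policy_threads "$3") --single-instance -s $4"
}

# Print the command for a file system named gpfs with four tape drives.
build_policy_cmd gpfs /root/migrate_policy.txt 4 /ibm/gpfs/tmp
echo "Temporary space for 10000000 files: $(policy_tmp_bytes 10000000) bytes"
```

Add the -N and -g options to the printed command as described above if more than one node takes part in the policy run.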

7.5 Preferred inode size for IBM Spectrum Scale file systems

When you create the GPFS file systems, the mmcrfs command provides the -i InodeSize option, which specifies the byte size of inodes. By default, the inode size is 4 KB and it consists of a fixed 128 byte header, plus data, such as disk addresses pointing to data, or indirect blocks, or extended attributes.

The supported inode sizes are 512, 1024, and 4096 bytes. Regardless of the file sizes, the preferred inode size is 4096 for all IBM Spectrum Scale file systems for IBM Spectrum Archive EE. This recommendation applies to both the user data file systems and the IBM Spectrum Archive EE metadata file system.

7.6 Determining the file states for all files within the GPFS file system

Typically, to determine the state of a file and to which tape cartridges the file is migrated, you run the eeadm file state command. However, it is not practical to run this command for every file on the GPFS file system.

In Example 7-3, the file is in the premigrated state and is only on the tape cartridge JD0321JD.

Example 7-3 Example of the eeadm file state command

[root@saitama1 prod]# eeadm file state LTFS_EE_FILE_2dEPRHhh_M.bin

Name: /ibm/gpfs/prod/LTFS_EE_FILE_2dEPRHhh_M.bin

State: premigrated

ID: 11151648183451819981-3451383879228984073-1435527450-974349-0

Replicas: 1

Tape 1: JD0321JD@test4@lib_saitama (tape state=appendable)

Instead, use list rules in an IBM Spectrum Scale policy. Example 7-4 is a sample set of list rules to display files and file system objects. For those files that are in the migrated or premigrated state, the output line contains the tape cartridges on which the file is stored.

Example 7-4 Sample set of list rules to display the file states

define(

user_exclude_list,

(

PATH_NAME LIKE '/ibm/glues/.ltfsee/%'

OR PATH_NAME LIKE '%/.SpaceMan/%'

OR PATH_NAME LIKE '%/lost+found/%'

OR NAME = 'dsmerror.log'

)

)

define(

is_premigrated,

(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%')

)

define(

is_migrated,

(MISC_ATTRIBUTES LIKE '%V%')

)

define(

is_resident,

(NOT MISC_ATTRIBUTES LIKE '%M%')

)

define(

is_symlink,

(MISC_ATTRIBUTES LIKE '%L%')

)

define(

is_dir,

(MISC_ATTRIBUTES LIKE '%D%')

)

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL LIST 'file_states'

EXEC '/root/file_states.sh'

RULE 'EXCLUDE_LISTS' LIST 'file_states' EXCLUDE

WHERE user_exclude_list

RULE 'MIGRATED' LIST 'file_states'

FROM POOL 'system'

SHOW('migrated ' || xattr('dmapi.IBMTPS'))

WHERE is_migrated

RULE 'PREMIGRATED' LIST 'file_states'

FROM POOL 'system'

SHOW('premigrated ' || xattr('dmapi.IBMTPS'))

WHERE is_premigrated

RULE 'RESIDENT' LIST 'file_states'

FROM POOL 'system'

SHOW('resident ')

WHERE is_resident

AND (FILE_SIZE > 0)

RULE 'SYMLINKS' LIST 'file_states'

DIRECTORIES_PLUS

FROM POOL 'system'

SHOW('symlink ')

WHERE is_symlink

RULE 'DIRS' LIST 'file_states'

DIRECTORIES_PLUS

FROM POOL 'system'

SHOW('dir ')

WHERE is_dir

AND NOT user_exclude_list

RULE 'EMPTY_FILES' LIST 'file_states'

FROM POOL 'system'

SHOW('empty_file ')

WHERE (FILE_SIZE = 0)

The policy runs a script that is named file_states.sh, which is shown in Example 7-5. If the policy is run daily, this script can be modified to keep several versions to be used for history purposes.

Example 7-5 Example of file_states.sh

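#!/bin/bash

# $1 is the operation that mmapplypolicy requests; $2 is the path of the file list.

if [[ $1 == 'TEST' ]]; then

# TEST is a readiness check: start with an empty output file.

rm -f /root/file_states.txt

elif [[ $1 == 'LIST' ]]; then

# LIST passes a file list: append it to the output file.

cat "$2" >> /root/file_states.txt

fi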

To run the IBM Spectrum Scale policy, run the mmapplypolicy command with the -P option and the file states policy. This action produces a file that is called /root/file_states.txt, as shown in Example 7-6.

Example 7-6 Sample output of the /root/file_states.txt file

355150 165146835 0 dir -- /ibm/gpfs/prod

974348 2015134857 0 premigrated 1 JD0321JD@1d85a188-be4e-4ab6-a300-e5c99061cec4@ebc1b34a-1bd8-4c86-b4fb-bee7b60c24c7 -- /ibm/gpfs/prod/LTFS_EE_FILE_9_rzu.bin

974349 1435527450 0 premigrated 1 JD0321JD@1d85a188-be4e-4ab6-a300-e5c99061cec4@ebc1b34a-1bd8-4c86-b4fb-bee7b60c24c7 -- /ibm/gpfs/prod/LTFS_EE_FILE_2dEPRHhh_M.bin

974350 599546382 0 premigrated 1 JD0321JD@1d85a188-be4e-4ab6-a300-e5c99061cec4@ebc1b34a-1bd8-4c86-b4fb-bee7b60c24c7 -- /ibm/gpfs/prod/LTFS_EE_FILE_XH7Qwj5y9j2wqV4615rCxPMir039xLlt68sSZn_eoCjO.bin

In the /root/file_states.txt file, the file states and file system objects can be easily identified for all IBM Spectrum Scale files, including the tape cartridges where the files or file system objects are stored.
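Because each line of /root/file_states.txt carries the label from the SHOW clause in its fourth field (after the inode number, generation number, and snapshot ID, as in Example 7-6), a per-state summary can be produced with awk. This is a minimal sketch; the function name count_states is hypothetical, and the field position is an assumption that is based on the sample output above.

```shell
# Hypothetical helper: count the entries per state label in a list file.
# Assumes that field 4 holds the label that the SHOW clause emits.
count_states() {
    awk '{ count[$4]++ } END { for (s in count) print s, count[s] }' "$1" | sort
}

# Usage (after the policy run has produced the file):
#   count_states /root/file_states.txt
```

For the four lines in Example 7-6, this prints one line for dir with a count of 1 and one line for premigrated with a count of 3.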

7.7 Memory considerations on the GPFS file system for increased performance

To make IBM Spectrum Scale more resistant to out of memory scenarios, adjust the vm.min_free_kbytes kernel tunable. This tunable controls the amount of free memory that the Linux kernel keeps available (that is, not used in any kernel caches).

When vm.min_free_kbytes is set to its default value, some configurations might encounter memory exhaustion symptoms when free memory should in fact be available. Setting vm.min_free_kbytes to a higher value of 5-6% of the total amount of physical memory, up to a max of 2 GB, helps to avoid such a situation.

To modify vm.min_free_kbytes, complete the following steps:

1. Check the total memory of the system by running the following command:

# free -k

2. Calculate 5-6% of the total memory in KB with a max of 2000000.

3. Add vm.min_free_kbytes = <value from step 2> to the /etc/sysctl.conf file.

4. Run sysctl -p /etc/sysctl.conf to permanently set the value.
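The calculation in step 2 can be scripted. The following sketch uses 5% of the total memory and caps the result at 2000000 KB, as described above. The function name min_free_kbytes is hypothetical, and the example uses an explicit memory size instead of reading it from the system.

```shell
# Hypothetical helper: 5% of total memory in KB, capped at 2000000 KB.
min_free_kbytes() {
    local total_kb=$1
    local value=$(( total_kb * 5 / 100 ))
    if [ "$value" -gt 2000000 ]; then
        value=2000000
    fi
    echo "$value"
}

# Example with an explicit value: a node with 16 GiB (16777216 KB) of memory.
echo "vm.min_free_kbytes = $(min_free_kbytes 16777216)"

# On a Linux node, the total can be read from /proc/meminfo instead:
#   min_free_kbytes "$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)"
```

The printed line has the form that is expected in /etc/sysctl.conf, so it can be reviewed and then added in step 3.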

7.8 Increasing the default maximum number of inodes in IBM Spectrum Scale

The IBM Spectrum Scale default maximum number of inodes is fine for most configurations. However, for large systems that might have millions of files or more, you might need to set a higher maximum number of inodes at file system creation time or increase it afterward. The maximum number of inodes must be larger than the expected sum of files and file system objects being managed by IBM Spectrum Archive EE (including the IBM Spectrum Archive EE metadata files if there is only one GPFS file system).

Inodes are allocated when they are used. When a file is deleted, the inode is reused, but inodes are never deallocated. When setting the maximum number of inodes in a file system, there is an option to preallocate inodes. However, in most cases there is no need to preallocate inodes because by default inodes are allocated in sets as needed.

If you do decide to preallocate inodes, be careful not to preallocate more inodes than will be used. Otherwise, the allocated inodes unnecessarily consume metadata space that cannot be reclaimed.

Consider the following points when managing inodes:

•For file systems that are supporting parallel file creates, as the total number of free inodes drops below 5% of the total number of inodes, there is the potential for slowdown in the file system access. Take this situation into consideration when creating or changing your file system.

•Excessively increasing the value for the maximum number of inodes might cause the allocation of too much disk space for control structures.

To view the current number of used inodes, number of free inodes, and maximum number of inodes, run the following command:

mmdf Device

To set the maximum inode limit for the file system, run the following command:

mmchfs Device --inode-limit MaxNumInodes[:NumInodesToPreallocate]
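The 5% guideline for free inodes can be checked with simple integer arithmetic once the used and maximum inode counts are known, for example from the mmdf output. This is a sketch only: the function names are hypothetical, and extracting the two numbers from the mmdf output is left out because the output layout is not shown here.

```shell
# Hypothetical helper: percentage of free inodes (rounded down).
# Arguments: number of used inodes, maximum number of inodes.
free_inode_pct() {
    local used=$1
    local max=$2
    echo $(( (max - used) * 100 / max ))
}

# Returns success when fewer than 5% of the inodes are free.
inodes_low() {
    [ "$(free_inode_pct "$1" "$2")" -lt 5 ]
}

if inodes_low 9700000 10000000; then
    echo "Fewer than 5% of inodes are free; consider raising --inode-limit"
fi
```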

7.9 Configuring IBM Spectrum Scale settings for performance improvement

The performance results in 3.7.2, “Planning for LTO-9 Media Initialization/Optimization” on page 63 were obtained by modifying the following IBM Spectrum Scale configuration attributes to optimize IBM Spectrum Scale I/O. In most environments, only a few of the configuration attributes need to be changed. The following values were found to be optimal in our lab environment and are suitable for most environments:

•pagepool = 50-60% of the physical memory of the server

•workerThreads = 1024

•numaMemoryInterleave = yes

•maxFilesToCache = 128k

For example, with a disk subsystem of eight data disks plus one parity disk and a stripe size of 256 KB, the file system block size should be 2 MB (8 x 256 KB).
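The block size in this example is the size of one full stripe across the data disks. A minimal sketch of that arithmetic follows; the function name full_stripe_kb is hypothetical, and the parity disk is deliberately not counted.

```shell
# Hypothetical helper: full-stripe size in KB = data disks x stripe size in KB.
full_stripe_kb() {
    echo $(( $1 * $2 ))
}

# Eight data disks with a 256 KB stripe size.
kb=$(full_stripe_kb 8 256)
echo "Full stripe: ${kb} KB ($(( kb / 1024 )) MB)"
```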

Refer to IBM Spectrum Scale Documentation for more details on cache related parameters such as maxFilesToCache and maxStatCache.

7.10 Use cases for mmapplypolicy

Typically, customers who use IBM Spectrum Archive with IBM Spectrum Scale manage one of two types of archive systems. The first is a traditional archive configuration where files are rarely accessed or updated. This configuration is intended for users who plan on keeping all their data on tape only. The second type is an active archive configuration, which is intended for users who continuously access their files. Each use case requires the creation of different IBM Spectrum Scale policies.

7.10.1 Creating a traditional archive system policy

A traditional archive system uses a single policy that scans the IBM Spectrum Archive name space for any files over 5 MB and migrates them to tape. This process immediately frees disk space for new files. See “Using a cron job” on page 176 for information about how to automate the execution of this policy periodically.

|

Note: In the following policies, some optional attributes, such as the SIZE attribute, are added to provide efficient (pre)migration. This attribute limits the total size of the files that are passed to the EXEC script at a time. The preferred setting, which is used in the following examples, is 20 GiB.

|
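The numeric constants in the policies of this section follow from simple unit conversions. The sketch below assumes that the SIZE value is expressed in KiB, which is what the pairing of 20 GiB with SIZE(20971520) suggests, and that FILE_SIZE is compared in bytes. The function names are hypothetical.

```shell
# Hypothetical helpers for the constants that are used in the policies.
gib_to_kib() {
    echo $(( $1 * 1024 * 1024 ))
}

mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# 20 GiB per EXEC invocation, and a 5 MB minimum file size.
echo "SIZE($(gib_to_kib 20))"
echo "WHERE FILE_SIZE > $(mib_to_bytes 5)"
```

To use a different batch size or a different minimum file size, recompute both constants in the same way and replace them in the policy file.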

Example 7-7 shows a simple migration policy that chooses files greater than 5 MB to be candidate migration files and stubs them to tape. This is a good base policy that you can modify to your specific needs. For example, if you need to have files on three storage pools, modify the OPTS parameter to include a third <pool>@<library>.

Example 7-7 Simple migration file

define(user_exclude_list,(PATH_NAME LIKE '/ibm/gpfs/.ltfsee/%' OR PATH_NAME LIKE '/ibm/gpfs/.SpaceMan/%'))

define(is_premigrated,(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%'))

define(is_migrated,(MISC_ATTRIBUTES LIKE '%V%'))

define(is_resident,(NOT MISC_ATTRIBUTES LIKE '%M%'))

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL POOL 'LTFSEE_FILES'

EXEC '/opt/ibm/ltfsee/bin/eeadm'

OPTS '-p primary@lib_ltfseevm copy@lib_ltfseevm'

SIZE(20971520)

RULE 'LTFSEE_FILES_RULE' MIGRATE FROM POOL 'system'

TO POOL 'LTFSEE_FILES'

WHERE FILE_SIZE > 5242880

AND (CURRENT_TIMESTAMP - MODIFICATION_TIME > INTERVAL '5' MINUTES)

AND (is_resident OR is_premigrated)

AND NOT user_exclude_list

7.10.2 Creating active archive system policies

An active archive system requires two policies to maintain the system. The first is a premigration policy that selects all files over 5 MB to premigrate to tape, allowing users to still quickly obtain their files from disk. To see how to place this premigration policy into a cron job to run every 6 hours, see “Using a cron job” on page 176.

Example 7-8 shows a simple premigration policy for files greater than 5 MB.

Example 7-8 Simple premigration policy for files greater than 5 MB

define(user_exclude_list,(PATH_NAME LIKE '/ibm/gpfs/.ltfsee/%' OR PATH_NAME LIKE '/ibm/gpfs/.SpaceMan/%'))

define(is_premigrated,(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%'))

define(is_migrated,(MISC_ATTRIBUTES LIKE '%V%'))

define(is_resident,(NOT MISC_ATTRIBUTES LIKE '%M%'))

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL POOL 'LTFSEE_FILES'

EXEC '/opt/ibm/ltfsee/bin/eeadm'

OPTS '-p primary@lib_ltfseevm copy@lib_ltfseevm'

SIZE(20971520)

RULE 'LTFSEE_FILES_RULE' MIGRATE FROM POOL 'system'

THRESHOLD(0,100,0)

TO POOL 'LTFSEE_FILES'

WHERE FILE_SIZE > 5242880

AND (CURRENT_TIMESTAMP - MODIFICATION_TIME > INTERVAL '5' MINUTES)

AND is_resident

The second policy is a fail-safe policy that needs to be set so when a low disk space event is triggered, the fail-safe policy can be called. Adding the WEIGHT attribute to the policy enables the user to choose whether they want to start stubbing large files first or least recently used files. When the fail-safe policy starts running, it frees up the disk space to a set percentage.

The following commands are used for setting a fail-safe policy and calling mmaddcallback:

•mmchpolicy gpfs failsafe_policy.txt

•mmaddcallback MIGRATION --command /usr/lpp/mmfs/bin/mmapplypolicy --event lowDiskSpace --parms "%fsName -B 20000 -m <2x the number of drives> --single-instance"

After setting the policy with the mmchpolicy command, run mmaddcallback with the fail-safe policy. This policy runs periodically to check whether the disk space has reached the threshold where stubbing is required to free up space.

Example 7-9 shows a simple failsafe_policy.txt, which gets triggered when the IBM Spectrum Scale disk space reaches 80% full, and stubs least recently used files until the disk space has 50% occupancy.

Example 7-9 failsafe_policy.txt

define(user_exclude_list,(PATH_NAME LIKE '/ibm/gpfs/.ltfsee/%' OR PATH_NAME LIKE '/ibm/gpfs/.SpaceMan/%'))

define(is_premigrated,(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%'))

define(is_migrated,(MISC_ATTRIBUTES LIKE '%V%'))

define(is_resident,(NOT MISC_ATTRIBUTES LIKE '%M%'))

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL POOL 'LTFSEE_FILES'

EXEC '/opt/ibm/ltfsee/bin/eeadm'

OPTS '-p primary@lib_ltfsee copy@lib_ltfsee copy2@lib_ltfsee'

SIZE(20971520)

RULE 'LTFSEE_FILES_RULE' MIGRATE FROM POOL 'system'

THRESHOLD(80,50)

WEIGHT(CURRENT_TIMESTAMP - ACCESS_TIME)

TO POOL 'LTFSEE_FILES'

WHERE FILE_SIZE > 5242880

AND is_premigrated

AND NOT user_exclude_list

7.10.3 IBM Spectrum Archive EE migration policy with AFM

For customers using IBM Spectrum Archive EE with IBM Spectrum Scale AFM, the migration policy would need to change to accommodate the extra exclude directories wherever migrations are occurring. Example 7-10 uses the same migration policy that is shown in Example 7-7 on page 244 with the addition of extra exclude and check parameters.

Example 7-10 Updated migration policy to include AFM

define(user_exclude_list,(PATH_NAME LIKE '/ibm/gpfs/.ltfsee/%' OR PATH_NAME LIKE '/ibm/gpfs/.SpaceMan/%' OR PATH_NAME LIKE '%/.snapshots/%' OR PATH_NAME LIKE '/ibm/gpfs/fset1/.afm/%' OR PATH_NAME LIKE '/ibm/gpfs/fset1/.ptrash/%'))

define(is_premigrated,(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%'))

define(is_migrated,(MISC_ATTRIBUTES LIKE '%V%'))

define(is_resident,(NOT MISC_ATTRIBUTES LIKE '%M%'))

define(is_cached,(MISC_ATTRIBUTES LIKE '%u%'))

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL POOL 'LTFSEE_FILES'

EXEC '/opt/ibm/ltfsee/bin/eeadm'

OPTS '-p primary@lib_ltfseevm copy@lib_ltfseevm'

SIZE(20971520)

RULE 'LTFSEE_FILES_RULE' MIGRATE FROM POOL 'system'

THRESHOLD(0,100,0)

TO POOL 'LTFSEE_FILES'

WHERE FILE_SIZE > 5242880

AND (CURRENT_TIMESTAMP - MODIFICATION_TIME > INTERVAL '5' MINUTES)

AND is_resident

AND is_cached

AND NOT user_exclude_list

7.11 Capturing a core file on Red Hat Enterprise Linux with the Automatic Bug Reporting Tool

The Automatic Bug Reporting Tool (ABRT) consists of the abrtd daemon and a number of system services and utilities to process, analyze, and report detected problems. The daemon runs silently in the background most of the time, and springs into action when an application crashes or a kernel fault is detected. The daemon then collects the relevant problem data, such as a core file if there is one, the crashing application’s command-line parameters, and other data of forensic utility.

For abrtd to work with IBM Spectrum Archive EE, two configuration directives must be modified in the /etc/abrt/abrt-action-save-package-data.conf file:

•OpenGPGCheck = yes/no

Setting the OpenGPGCheck directive to yes, which is the default setting, tells ABRT to analyze and handle only crashes in applications that are provided by packages that are signed by the GPG keys, which are listed in the /etc/abrt/gpg_keys file. Setting OpenGPGCheck to no tells ABRT to detect crashes in all programs.

•ProcessUnpackaged = yes/no

This directive tells ABRT whether to process crashes in executable files that do not belong to any package. The default setting is no.

Here are the preferred settings:

OpenGPGCheck = no

ProcessUnpackaged = yes
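The two directives can be set with a small helper instead of editing the file by hand. This is a sketch under stated assumptions: the function name set_abrt_directive is hypothetical, the file is assumed to use the "key = value" form that is shown above, and a directive that is missing or commented out is appended at the end of the file.

```shell
# Hypothetical helper: set "key = value" in a configuration file.
# Arguments: file, directive name, new value.
set_abrt_directive() {
    local file=$1
    local key=$2
    local value=$3
    local work
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
        # Rewrite the existing line through a temporary copy so that the
        # original file keeps its ownership and permissions.
        work=$(mktemp)
        sed -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$work"
        cat "$work" > "$file"
        rm -f "$work"
    else
        # The directive is not active in the file: append it.
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Usage (as root, after making a backup copy of the file):
#   conf=/etc/abrt/abrt-action-save-package-data.conf
#   set_abrt_directive "$conf" OpenGPGCheck no
#   set_abrt_directive "$conf" ProcessUnpackaged yes
```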

7.12 Anti-virus considerations

Although in-depth testing occurs with IBM Spectrum Archive EE and many industry-leading antivirus software programs, there are a few considerations to review periodically:

•Configure any antivirus software to exclude IBM Spectrum Archive EE and Hierarchical Storage Management (HSM) work directories:

– The library mount point (the /ltfs directory)

– All IBM Spectrum Archive EE space-managed GPFS file systems (which includes the .SPACEMAN directory)

– The IBM Spectrum Archive EE metadata directory (the GPFS file system that is reserved for IBM Spectrum Archive EE internal usage)

•Use antivirus software that supports sparse or offline files. Be sure that it has a setting that allows it to skip offline or sparse files to avoid unnecessary recall of migrated files.

7.13 Automatic email notification with rsyslog

Rsyslog and its mail output module (ommail) can be used to send syslog messages from IBM Spectrum Archive EE through email. Each syslog message is sent through its own email. Apply enough filtering to prevent a flood of emails. The ommail plug-in is primarily meant for alerting users of certain conditions and should be used in a limited number of cases. For more information, see the rsyslog documentation for the ommail module.

Here is an example of how rsyslog ommail can be used with IBM Spectrum Archive EE by modifying the /etc/rsyslog.conf file:

If users want to send an email on all IBM Spectrum Archive EE registered error messages, the regular expression is “GLES[A-Z][0-9]*E”, as shown in Example 7-11.

Example 7-11 Email for all IBM Spectrum Archive EE registered error messages

$ModLoad ommail

$ActionMailSMTPServer us.ibm.com

$ActionMailFrom ltfsee@ltfsee_host1.tuc.stglabs.ibm.com

$ActionMailTo [email protected]

$template mailSubject,"LTFS EE Alert on %hostname%"

$template mailBody,"%msg%"

$ActionMailSubject mailSubject

:msg, regex, "GLES[A-Z][0-9]*E" :ommail:;mailBody
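Before the filter is deployed, the regular expression can be tried against sample messages with grep, which helps to confirm that only error messages (IDs that end in E) would trigger an email. This is a minimal sketch; the function name is_ee_error is hypothetical, and grep is assumed to interpret this particular pattern in the same way as the rsyslog regex filter.

```shell
# Hypothetical helper: succeeds when a message carries an
# IBM Spectrum Archive EE error ID such as GLESL840E.
is_ee_error() {
    printf '%s\n' "$1" | grep -q 'GLES[A-Z][0-9]*E'
}

for msg in \
    "GLESL840E: Failed to process the requested 4 file(s)" \
    "GLESL700I: Task migrate was created successfully"
do
    if is_ee_error "$msg"; then
        echo "would send email: $msg"
    else
        echo "ignored: $msg"
    fi
done
```

The sample messages are taken from the migration output in Example 7-14. Only the first one contains an ID that ends in E, so only that message would result in an email.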

7.14 Overlapping IBM Spectrum Scale policy rules

This section describes how you can avoid migration failures during your IBM Spectrum Archive EE system operations by having only non-overlapping IBM Spectrum Scale policy rules in place.

After a file is migrated to a tape cartridge pool and is in the migrated state, it cannot be migrated to other tape cartridge pools (unless it is recalled back from physical tape to file system space).

Do not use overlapping IBM Spectrum Scale policy rules within different IBM Spectrum Scale policy files that can select the same files for migration to different tape cartridge pools. If a file is already in the migrated state, any later attempt to migrate it to a different tape cartridge pool fails.

In Example 7-12, an attempt is made to migrate four files to tape cartridge pool pool2. Before the migration attempt, Tape ID JCB610JC is already in tape cartridge pool pool1, and Tape ID JD0321JD in pool2 has one migrated and one pre-migrated file. The state of the files on these tape cartridges before the migration attempt is shown by the eeadm file state command in Example 7-12.

Example 7-12 Display the state of files by using the eeadm file state command

[root@saitama1 prod]# eeadm file state *.ppt

Name: /ibm/gpfs/prod/fileA.ppt

State: migrated

ID: 11151648183451819981-3451383879228984073-1435527450-974349-0

Replicas: 1

Tape 1: JCB610JC@pool1@lib_saitama (tape state=appendable)

Name: /ibm/gpfs/prod/fileB.ppt

State: migrated

ID: 11151648183451819981-3451383879228984073-2015134857-974348-0

Replicas: 1

Tape 1: JCB610JC@pool1@lib_saitama (tape state=appendable)

Name: /ibm/gpfs/prod/fileC.ppt

State: migrated

ID: 11151648183451819981-3451383879228984073-599546382-974350-0

Replicas: 1

Tape 1: JD0321JD@pool2@lib_saitama (tape state=appendable)

Name: /ibm/gpfs/prod/fileD.ppt

State: premigrated

ID: 11151648183451819981-3451383879228984073-2104982795-3068894-0

Replicas: 1

Tape 1: JD0321JD@pool2@lib_saitama (tape state=appendable)

The mig scan list file that is used in this example contains these entries, as shown in Example 7-13.

Example 7-13 Sample content of a scan list file

-- /ibm/gpfs/prod/fileA.ppt

-- /ibm/gpfs/prod/fileB.ppt

-- /ibm/gpfs/prod/fileC.ppt

-- /ibm/gpfs/prod/fileD.ppt

The attempt to migrate the files produces the results that are shown in Example 7-14.

Example 7-14 Migration of files by running the eeadm migrate command

[root@saitama1 prod]# eeadm migrate mig -p pool2@lib_saitama

2021-12-21 01:19:12 GLESL700I: Task migrate was created successfully, task ID is 1074.

2021-12-21 01:19:13 GLESM896I: Starting the stage 1 of 3 for migration task 1074 (qualifying the state of migration candidate files).

2021-12-21 01:19:13 GLESM897I: Starting the stage 2 of 3 for migration task 1074 (copying the files to 1 pools).

2021-12-21 01:19:13 GLESM898I: Starting the stage 3 of 3 for migration task 1074 (changing the state of files on disk).

2021-12-21 01:19:13 GLESL840E: Failed to process the requested 4 file(s), with 2 succeeding and 2 failing.

2021-12-21 01:19:13 GLESL841I: Succeeded: 1 migrated, 1 already_migrated.

2021-12-21 01:19:13 GLESL843E: Failed: 0 duplicate, 2 wrong_pool, 0 not_found, 0 too_small, 0 too_early, 0 other_failure

The files on Tape ID JCB610JC (fileA.ppt and fileB.ppt) already are in tape cartridge pool pool1. Therefore, the attempt to migrate them to tape cartridge pool pool2 fails with the result wrong_pool.

For the files on Tape ID JD0321JD, the attempt to migrate the fileC.ppt file produces the result already_migrated (or, in some cases with multiple library environments, a failed result) because the file is already migrated. Only the attempt to migrate the pre-migrated fileD.ppt file moves a file to the migrated state. Therefore, one file was migrated, and the other three operations ended with wrong_pool or already_migrated.
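The wrong_pool results can be anticipated before the migration is started by checking the pool of each migrated file. The following is a minimal sketch, assuming the "Name:", "State:", and "Tape N: TAPE@POOL@LIBRARY" line layout of Example 7-12 and one replica per file. The function name wrong_pool_files is hypothetical.

```shell
# Read `eeadm file state` output on stdin and print the names of files that
# are already migrated to a pool other than the target pool ($1).
# Assumes the line layout of Example 7-12 and a single replica per file.
wrong_pool_files() {
    awk -v target="$1" '
        /^Name:/  { name = $0; sub(/^Name:[ \t]*/, "", name) }
        /^State:/ { state = $2 }
        /^Tape [0-9]+:/ {
            split($3, part, "@")            # part[2] is the pool name
            if (state == "migrated" && part[2] != target) print name
        }
    '
}

# Usage:
#   eeadm file state *.ppt | wrong_pool_files pool2
```

Files that this helper prints can be removed from the scan list, or recalled first if they really must move to the other pool.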

7.15 Storage pool assignment

This section describes how you can facilitate your IBM Spectrum Archive EE system export activities by using different storage pools for logically different parts of an IBM Spectrum Scale namespace.

If you put different logical parts of an IBM Spectrum Scale namespace (such as the project directory) into different LTFS tape cartridge pools, you can Normal Export tape cartridges that contain only the files from that specific part of the IBM Spectrum Scale namespace (such as project abc). Otherwise, you must first recall all the files from the namespace of interest (such as the project directory of all projects), migrate the recalled files to an empty tape cartridge pool, and then Normal Export that tape cartridge pool.

The concept of different tape cartridge pools for different logical parts of an IBM Spectrum Scale namespace can be further isolated by using IBM Spectrum Archive node groups. A node group consists of one or more nodes that are connected to the same tape library. When tape cartridge pools are created, they can be assigned to a specific node group. For migration purposes, it allows certain tape cartridge pools to be used with only drives within the owning node group.

7.16 Tape cartridge removal

This section describes the information that must be reviewed before you physically remove a tape cartridge from the library of your IBM Spectrum Archive EE environment.

For more information, see 6.8.2, “Moving tape cartridges” on page 156, and “The eeadm <resource type> --help command” on page 316.

7.16.1 Reclaiming tape cartridges before you remove or export them

To avoid failed recall operations, reclaim a tape cartridge before you remove or export it.

When a cartridge that can be appended is planned for removal, use one of the following methods to perform the reclaim before the removal:

•Run the eeadm tape reclaim command before you remove the cartridge from the LTFS file system (by running the eeadm tape unassign command).

•Export the cartridge from the LTFS library by running the eeadm tape export command. This command runs the reclaim internally as part of its task.

If tape cartridges are in a need_replace or a require_replace state, use the eeadm tape replace command. This command will internally run the reclaim command during its procedure.

The eeadm tape unassign --safe-remove command can be used when the replace command fails. Note that the --safe-remove option recalls all the active files on the tape back to an IBM Spectrum Scale file system, which must have adequate free space, and those files must be manually migrated again to a good tape.
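The choice of command described in this section can be summarized as a lookup by tape state. The following is a minimal sketch, assuming that the state names are the ones reported in the State column of the eeadm tape list command. The function name removal_steps is hypothetical, and only subcommand names are printed because the required arguments depend on your environment.

```shell
# Map a tape state to the eeadm tape subcommands that this section names
# for it. Arguments are deliberately omitted; removal_steps is hypothetical.
removal_steps() {
    case "$1" in
        appendable)
            echo "reclaim, then unassign (or export, which reclaims internally)" ;;
        need_replace|require_replace)
            echo "replace (fall back to unassign --safe-remove if replace fails)" ;;
        *)
            echo "not covered here; check the tape state first" ;;
    esac
}
```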

7.16.2 Exporting tape cartridges before physically removing them from the library

A preferred practice is always to export a tape cartridge before it is physically removed from the library. If a removed tape cartridge is modified and then reinserted in the library, unpredictable behavior can occur.

7.17 Reusing LTFS formatted tape cartridges

In some scenarios, you might want to reuse tape cartridges for your IBM Spectrum Archive EE setup that were previously used as LTFS formatted media in another LTFS setup.

These tape cartridges still might contain data from the previous usage. Because LTFS is a self-describing format, IBM Spectrum Archive EE recognizes the old content.

Before such tape cartridges can be reused within your IBM Spectrum Archive EE environment, the data must be moved off the cartridge or deleted from the file system, and then the cartridges must be reformatted before they are added to an IBM Spectrum Archive EE tape cartridge pool. This task can be done by running the eeadm tape reclaim or eeadm tape unassign -E commands. Note that tapes that are removed with the -E option need the -f option when they are reassigned with the eeadm tape assign command.

7.17.1 Reformatting LTFS tape cartridges through eeadm commands

If a tape cartridge was used as an LTFS tape, you can check its contents after it is added to the IBM Spectrum Archive EE system and loaded to a drive. You can run the ls -la command to display content of the tape cartridge, as shown in Example 7-15.

Example 7-15 Display content of a used LTFS tape cartridge (non-IBM Spectrum Archive EE)

[root@ltfs97 ~]# ls -la /ltfs/153AGWL5

total 41452613

drwxrwxrwx 2 root root 0 Jul 12 2012 .

drwxrwxrwx 12 root root 0 Jan 1 1970 ..

-rwxrwxrwx 1 root root 18601 Jul 12 2012 api_test.log

-rwxrwxrwx 1 root root 50963 Jul 11 2012 config.log

-rwxrwxrwx 1 root root 1048576 Jul 12 2012 dummy.000

-rwxrwxrwx 1 root root 21474836480 Jul 12 2012 perf_fcheck.000

-rwxrwxrwx 1 root root 20971520000 Jul 12 2012 perf_migrec

lrwxrwxrwx 1 root root 25 Jul 12 2012 symfile -> /Users/piste/mnt/testfile

You can also discover whether it was previously an IBM Spectrum Archive EE tape cartridge or just a standard LTFS tape cartridge that was used by an IBM Spectrum Archive LE or IBM Spectrum Archive SDE release. Review the hidden directory .LTFSEE_DATA, as shown in Example 7-16. The presence of this directory indicates that the cartridge was previously used as an IBM Spectrum Archive EE tape cartridge.

Example 7-16 Display content of a used LTFS tape cartridge (IBM Spectrum Archive EE)

[root@ltfs97 ltfs]# ls -lsa /ltfs/JD0321JD

total 0

0 drwxrwxrwx 4 root root 0 Jan 9 14:33 .

0 drwxrwxrwx 7 root root 0 Dec 31 1969 ..

0 drwxrwxrwx 3 root root 0 Jan 9 14:33 ibm

0 drwxrwxrwx 2 root root 0 Jan 10 16:01 .LTFSEE_DATA

The procedure for reuse and reformatting of a previously used LTFS tape cartridge depends on whether it was used before as an IBM Spectrum Archive LE or IBM Spectrum Archive SDE tape cartridge or as an IBM Spectrum Archive EE tape cartridge.

Before you start with the reformat procedures and examples, confirm the following starting point: the tape cartridges that you want to reuse are listed in the unassigned state when you run the eeadm tape list command, as shown in Example 7-17.

Example 7-17 Output of the eeadm tape list command

[root@saitama2 ltfs]# eeadm tape list -l lib_saitama

Tape ID Status State Usable(GiB) Used(GiB) Available(GiB) Reclaimable% Pool Library Location Task ID

JCA561JC ok offline 0 0 0 0% pool2 lib_saitama homeslot -

JCA224JC ok appendable 6292 0 6292 0% pool1 lib_saitama homeslot -

JCC093JC ok appendable 6292 496 5796 0% pool1 lib_saitama homeslot -

JCB141JC ok unassigned 0 0 0 0% - lib_saitama homeslot -
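On a library with many cartridges, the unassigned tapes can be filtered out of this listing. The following is a minimal sketch, assuming the column order of Example 7-17 (Tape ID, Status, State, and so on). The function name unassigned_tapes is hypothetical.

```shell
# Print the IDs of tapes whose State column is "unassigned".
# Reads `eeadm tape list` output on stdin. The header row is skipped
# naturally because its third word is "Status", not a state value.
unassigned_tapes() {
    awk '$3 == "unassigned" { print $1 }'
}

# Usage:
#   eeadm tape list -l lib_saitama | unassigned_tapes
```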

Reformatting and reusing an LTFS SDE/LE tape cartridge

In this case, you run the eeadm tape assign command to add this tape cartridge to an IBM Spectrum Archive EE tape cartridge pool and format it at the same time, as shown in the following example and in Example 7-18:

eeadm tape assign <list_of_tapes> -p <pool> [OPTIONS]

If the format fails, data is most likely already written to the tape, and a forced format is required. To force the format, append the -f option to the eeadm tape assign command.

Example 7-18 Reformat a used LTFS SDE/LE tape cartridge

[root@saitama2 ~]# eeadm tape assign JCB141JC -p pool1 -l lib_saitama -f

2019-01-15 08:35:21 GLESL700I: Task tape_assign was created successfully, task id is 7201.

2019-01-15 08:38:09 GLESL087I: Tape JCB141JC successfully formatted.

2019-01-15 08:38:09 GLESL360I: Assigned tape JCB141JC to pool pool1 successfully.

Reformatting and reusing an IBM Spectrum Archive EE tape cartridge

If you want to reuse an IBM Spectrum Archive EE tape cartridge, you can reclaim the tape so that its data is preserved on another cartridge within the same pool. Alternatively, if the data on the cartridge is no longer needed, delete all files on disk that were premigrated or migrated to the cartridge, and then run the eeadm tape unassign command with the -E option. After the tape is removed from the pool by using the eeadm tape unassign -E command, add the tape to the new pool by using the eeadm tape assign command with the -f option to force a format.
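The unassign and assign sequence can be previewed before it is run. The following is a minimal sketch that only prints the two commands. The assign form follows Example 7-18; the argument order of the unassign command is an assumption that is modeled on it and should be checked against the command help. The function name reuse_plan is hypothetical.

```shell
# Print (do not run) the two commands for moving an emptied EE tape to a
# new pool. The unassign argument order is an assumption modeled on the
# assign form of Example 7-18; reuse_plan is a hypothetical helper.
reuse_plan() {
    tape=$1 old_pool=$2 new_pool=$3 library=$4
    echo "eeadm tape unassign $tape -p $old_pool -l $library -E"
    echo "eeadm tape assign $tape -p $new_pool -l $library -f"
}

# Usage:
#   reuse_plan JCB141JC pool1 pool2 lib_saitama
```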

7.18 Reusing non-LTFS tape cartridges

For your IBM Spectrum Archive EE setup, in some scenarios, you might want to reuse tape cartridges that were used before as non-LTFS formatted media in another server setup behind your tape library (such as backup tape cartridges from an IBM Spectrum Protect environment).

Although these tape cartridges still might contain data from the previous usage, they can be used within IBM Spectrum Archive EE the same way as new, unused tape cartridges. For more information about how to add new tape cartridge media to an IBM Spectrum Archive EE tape cartridge pool, see 6.8.1, “Adding tape cartridges” on page 154.

7.19 Moving tape cartridges between pools

This section describes preferred practices to consider when you want to move a tape cartridge between tape cartridge pools. This information also relates to the function that is described in 6.8.2, “Moving tape cartridges” on page 156.

7.19.1 Avoiding changing assignments for tape cartridges that contain files

If a tape cartridge contains any files, a preferred practice is to not move the tape cartridge from one tape cartridge pool to another tape cartridge pool. If you remove the tape cartridge from one tape cartridge pool and then add it to another tape cartridge pool, the tape cartridge includes files that are targeted for multiple pools. This is not internally allowed in IBM Spectrum Archive EE.

Before you export files you want from that tape cartridge, you must recall any files that are not supposed to be exported in such a scenario.

For more information, see 6.9, “Tape storage pool management” on page 162.

7.19.2 Reclaiming a tape cartridge and changing its assignment

Before you remove a tape cartridge from one tape cartridge pool and add it to another tape cartridge pool, a preferred practice is to reclaim the tape cartridge so that no files remain on the tape cartridge when it is removed. This action prevents the scenario that is described in 7.19.1, “Avoiding changing assignments for tape cartridges that contain files” on page 254.

For more information, see 6.9, “Tape storage pool management” on page 162 and 6.17, “Reclamation” on page 200.

7.20 Offline tape cartridges

This section describes how you can help maintain the file integrity of offline tape cartridges by not modifying the files of offline exported tape cartridges. Also, a reference to information about solving import problems that are caused by modified offline tape cartridges is provided.

7.20.1 Do not modify the files of offline tape cartridges

When a tape cartridge is offline and outside the library, do not modify its IBM Spectrum Scale offline files on disk and do not modify its files on the tape cartridge. Otherwise, some files that exist on the tape cartridge might become unavailable to IBM Spectrum Scale.

7.20.2 Solving problems

For more information about solving problems that are caused by trying to import a tape cartridge in offline state that was modified while it was outside the library, see “Importing offline tape cartridges” on page 203.

7.21 Scheduling reconciliation and reclamation

This section provides information about scheduling regular reconciliation and reclamation activities.

The reconciliation process resolves any inconsistencies that develop between files in IBM Spectrum Scale and their equivalents in LTFS. The reclamation function frees up tape cartridge space that is occupied by non-referenced files and non-referenced content on the tape cartridge. In other words, this content is inactive data that still occupies space on the physical tape.

It is preferable to schedule periodic reconciliation and reclamation, ideally during off-peak hours and at a frequency that is most effective. A schedule helps ensure consistency between files and efficient use of the tape cartridges in your IBM Spectrum Archive EE environment.

For more information, see 6.15, “Recalling files to their resident state” on page 196 and 6.17, “Reclamation” on page 200.
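A scheduled maintenance script can guard itself so that it does nothing outside the off-peak window. The following is a minimal sketch of such a guard; the window hours are site-specific assumptions, and the function name in_off_peak_window is hypothetical.

```shell
# Succeed if hour $1 (00-23, as printed by `date +%H`) falls inside the
# off-peak window that starts at hour $2 and ends before hour $3.
# Windows that wrap past midnight (for example 22 to 6) are supported.
in_off_peak_window() {
    hour=${1#0};  hour=${hour:-0}      # normalize a leading zero ("08" -> "8")
    start=${2#0}; start=${start:-0}
    end=${3#0};   end=${end:-0}
    if [ "$start" -le "$end" ]; then
        [ "$hour" -ge "$start" ] && [ "$hour" -lt "$end" ]
    else
        [ "$hour" -ge "$start" ] || [ "$hour" -lt "$end" ]
    fi
}

# Usage at the top of a cron-driven script:
#   in_off_peak_window "$(date +%H)" 22 6 || exit 0
```

The reconciliation and reclamation commands themselves follow the guard and are described in the referenced sections.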

7.22 License Expiration Handling

License validation is done by the IBM Spectrum Archive EE program. If the license covers only a certain period (as is the case for the IBM Spectrum Archive EE Trial Version, which is available for three months), it expires when that period ends. After the license expires, the behavior of IBM Spectrum Archive EE changes in the following ways:

•The state of the nodes changes to the following defined value:

NODE_STATUS_LICENSE_EXPIRED

Once in this state, some commands return errors with messages that indicate license expiration.

•When the license is expired, IBM Spectrum Archive EE can still read data, but it cannot write or migrate data. In such a case, not all IBM Spectrum Archive EE commands are usable.

When the scheduler of the main IBM Spectrum Archive EE management component (MMM) detects that the license is expired, IBM Spectrum Archive EE shuts down. This shutdown is necessary for a proper clean-up if some jobs are still running or unscheduled. It also makes the user aware that IBM Spectrum Archive EE does not function because of the license expiration.

To give a user the possibility to access files that were previously migrated to tape, it is possible for IBM Spectrum Archive EE to restart, but it operates with limited functions. All functions that write to tape cartridges are not available. During the start of IBM Spectrum Archive EE (through MMM), it is detected that some nodes have the status of NODE_STATUS_LICENSE_EXPIRED.

IBM Spectrum Archive EE fails the following commands immediately:

•migrate

•save

These commands are designed to write to a tape cartridge in certain cases. Therefore, they fail with an error message. The transparent access of a migrated file is not affected. The deletion of the link and the data file on a tape cartridge because of a write or truncate recall is omitted. Other tasks that inherit such behavior also fail because any command that writes to tape is invalid in this state.

In summary, the following steps occur after expiration:

1. The status of the nodes changes to the state NODE_STATUS_LICENSE_EXPIRED.

2. IBM Spectrum Archive EE shuts down to allow a proper clean-up.

3. IBM Spectrum Archive EE can be started again with limited functions.

7.23 Disaster recovery

This section describes the preparation of an IBM Spectrum Archive EE DR setup and the steps that you must perform before and after a disaster to recover your IBM Spectrum Archive EE environment.

7.23.1 Tiers of disaster recovery

Understanding DR strategies and solutions can be complex. To help categorize the various solutions and their characteristics (for example, costs, recovery time capabilities, and recovery point capabilities), the various levels and their required components can be defined. The idea behind such a classification is to help those concerned with DR to determine the following issues:

•What solution they have

•What solution they require

•What it requires to meet greater DR objectives

In 1992, the SHARE user group in the United States, along with IBM, defined a set of DR tier levels. This action was done to address the need to describe and quantify the various methodologies for successful DR implementations of mission-critical computer systems. Within the IT Business Continuance industry, the tier concept continues to be used, and is useful for describing today’s DR capabilities.

The tiers’ definitions are designed so that emerging DR technologies can also be applied, as listed in Table 7-1.

Table 7-1 Summary of disaster recovery tiers (SHARE)

| Tier | Description |
|------|-------------|
| 6 | Zero data loss |
| 5 | Two-site two-phase commit |
| 4 | Electronic vaulting to hotsite (active secondary site) |
| 3 | Electronic vaulting |
| 2 | Offsite vaulting with a hotsite (PTAM + hot site) |
| 1 | Offsite vaulting (Pickup Truck Access Method (PTAM)) |
| 0 | No off-site data |

In the context of the IBM Spectrum Archive EE product, this section focuses only on the tier 1 strategy because this is the only supported solution that you can achieve with a product that handles physical tape media (off-site vaulting).

For more information about the other DR tiers and general strategies, see Disaster Recovery Strategies with Tivoli Storage Management, SG24-6844.

Tier 1: Offsite vaulting

A tier 1 installation is defined as having a disaster recovery plan (DRP) that backs up and stores its data at an off-site storage facility, and determines some recovery requirements. As shown in Figure 7-1 on page 257, backups are taken that are stored at an off-site storage facility.

This environment can also establish a backup platform, although it does not have a site at which to restore its data, nor the necessary hardware on which to restore the data, such as compatible tape devices.

Figure 7-1 Tier 1 - offsite vaulting (PTAM)

Because vaulting and retrieval of data is typically handled by couriers, this tier is described as the PTAM. PTAM is a method that is used by many sites because this is a relatively inexpensive option. However, it can be difficult to manage because it is difficult to know exactly where the data is at any point.

Probably only selected data is saved. Certain requirements were determined and documented in a contingency plan, and optional backup hardware and a backup facility are available. Recovery depends on when hardware can be supplied, or possibly when a building for the new infrastructure can be located and prepared.

Although some customers are on this tier and seemingly can recover if there is a disaster, one factor that is sometimes overlooked is the recovery time objective (RTO). For example, although it is possible to recover data eventually, it might take several days or weeks. An outage of business data for this long can affect business operations for several months or even years (if not permanently).

|

Important: With IBM Spectrum Archive EE, the recovery time can be improved because after the import of the vaulting tape cartridges into a recovered production environment, the user data is immediately accessible without the need to copy back content from the tape cartridges into a disk or file system.

|

7.23.2 Preparing IBM Spectrum Archive EE for a tier 1 disaster recovery strategy (offsite vaulting)

IBM Spectrum Archive EE has all the tools and functions that you need to prepare a tier 1 DR strategy for offsite vaulting of tape media.

The fundamental concept is based on the IBM Spectrum Archive EE function to create replicas and redundant copies of your file system data to tape media during migration (see 6.11.4, “Replicas and redundant copies” on page 177). IBM Spectrum Archive EE enables the creation of a replica plus two more redundant replicas (copies) of each IBM Spectrum Scale file during the migration process.

The first replica is the primary copy, and other replicas are called redundant copies. Redundant copies must be created in tape cartridge pools that are different from the tape cartridge pool of the primary copy and different from the tape cartridge pools of other redundant copies.

Up to two redundant copies can be created, which means that a specific file from the GPFS file system can be stored on three different physical tape cartridges in three different IBM Spectrum Archive EE tape cartridge pools.
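The two constraints above (at most three pools, and no pool used twice) can be checked before a pool list is passed to a migration. The following is a minimal sketch, assuming a comma-separated list of pool@library entries such as the one in the -p option. The function name valid_replica_pools is hypothetical.

```shell
# Succeed if $1 is a comma-separated list of one to three distinct,
# non-empty pool entries (for example primary@lib1,copy@lib1,DR@lib1).
valid_replica_pools() {
    printf '%s\n' "$1" | awk -F, '
        {
            if (NF < 1 || NF > 3) exit 1          # primary plus up to two copies
            for (i = 1; i <= NF; i++) {
                if ($i == "" || seen[$i]++) exit 1  # empty or repeated pool
            }
        }'
}

# Usage:
#   valid_replica_pools "primary@lib1,copy@lib1,DR@lib1" || echo "bad pool list"
```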

The tape cartridge where the primary copy is stored and the tapes that contain the redundant copies are referenced in the IBM Spectrum Scale inode with an IBM Spectrum Archive EE DMAPI attribute. The primary copy is always listed first.

Redundant copies are written to their corresponding tape cartridges in the IBM Spectrum Archive EE format. These tape cartridges can be reconciled, exported, reclaimed, or imported by using the same commands and procedures that are used for standard migration without replica creation.

Because each redundant copy requires its own tape cartridge pool, create a DR pool named DRPool that exclusively contains the media that you plan to Offline Export for offline vaulting. You must also plan for the following issues:

•Which file system data is migrated (as another replica) to the DR pool?

•How often do you plan to export and remove physical tapes for offline vaulting?

•How do you handle media lifecycle management with the tape cartridges for offline vaulting?

•What are the DR steps and procedure?

If the IBM Spectrum Archive EE server and the IBM Spectrum Scale file system that hold the primary copy are lost in a disaster, the redundant copy that was created and stored at an external site (offline vaulting) is used for the disaster recovery.

Section 7.23.3, “IBM Spectrum Archive EE tier 1 DR procedure” on page 259 describes the steps that are used to perform these actions:

•Recover (import) the offline vaulting tape cartridges to a newly installed IBM Spectrum Archive EE environment

•Re-create the GPFS file system information

•Regain access to your IBM Spectrum Archive EE data

|

Important: The migration of a pre-migrated file does not create new replicas.

|

Example 7-19 shows you a sample migration policy to migrate all files to three pools. To have this policy run periodically, see “Using a cron job” on page 176.

Example 7-19 Sample of a migration policy

define(user_exclude_list,(PATH_NAME LIKE '/ibm/gpfs/.ltfsee/%' OR PATH_NAME LIKE '/ibm/gpfs/.SpaceMan/%'))

define(is_premigrated,(MISC_ATTRIBUTES LIKE '%M%' AND MISC_ATTRIBUTES NOT LIKE '%V%'))

define(is_migrated,(MISC_ATTRIBUTES LIKE '%V%'))

define(is_resident,(NOT MISC_ATTRIBUTES LIKE '%M%'))

RULE 'SYSTEM_POOL_PLACEMENT_RULE' SET POOL 'system'

RULE EXTERNAL POOL 'LTFSEE_FILES'

EXEC '/opt/ibm/ltfsee/bin/eeadm'

OPTS '-p primary@lib_ltfseevm,copy@lib_ltfseevm,DR@lib_ltfseevm'

SIZE(20971520)

RULE 'LTFSEE_FILES_RULE' MIGRATE FROM POOL 'system' TO POOL 'LTFSEE_FILES' WHERE

(

is_premigrated

AND NOT user_exclude_list

)

After you create redundant copies of your file system data on different IBM Spectrum Archive EE tape cartridge pools for offline vaulting, you can Normal Export the tape cartridges by running the IBM Spectrum Archive EE export command. For more information, see 6.19.2, “Exporting tape cartridges” on page 204.

|

Important: The IBM Spectrum Archive EE export command does not eject the tape cartridge to the physical I/O station of the attached tape library. To eject the DR tape cartridges from the library to take them out for offline vaulting, you can run the eeadm tape move command with the option -L ieslot. For more information, see 6.8, “Tape library management” on page 154 and 10.1, “Command-line reference” on page 316.

|

7.23.3 IBM Spectrum Archive EE tier 1 DR procedure

To perform a DR to restore an IBM Spectrum Archive EE server and IBM Spectrum Scale with tape cartridges from offline vaulting, complete the following steps:

1. Before you start a DR, a set of exported tapes that was exported from IBM Spectrum Archive EE must be stored in an offline vault. In addition, a “new” Linux server and an IBM Spectrum Archive EE cluster environment, including IBM Spectrum Scale, must be set up.

2. Confirm that the newly installed IBM Spectrum Archive EE cluster is running and ready for the import operation by running the following commands:

– # eeadm node list

– # eeadm tape list

– # eeadm pool list

3. Insert the tape cartridges for DR into the tape library I/O station.

4. Use your tape library management GUI to assign the DR tape cartridges to the IBM Spectrum Archive EE logical tape library partition of your new IBM Spectrum Archive EE server.

5. From the IBM Spectrum Archive EE program, retrieve the updated inventory information from the logical tape library by running the following command:

# eeadm library rescan

6. Move the inserted tapes to homeslot with the following command:

# eeadm tape move <tape id> -L homeslot

7. Import the DR tape cartridges into the IBM Spectrum Archive EE environment by running the eeadm tape import command. The eeadm tape import command features various options that you can specify. Therefore, it is important to become familiar with these options, especially when you are performing DR. For more information, see Chapter 10, “Reference” on page 315.

When you rebuild from one or more tape cartridges, the eeadm tape import command adds the specified tape cartridge to the IBM Spectrum Archive EE library and imports the files on that tape cartridge into the IBM Spectrum Scale namespace.

This process puts the stub file back in to the IBM Spectrum Scale namespace, but the imported files stay in a migrated state, which means that the data remains on tape. The data portion of the file is not copied to disk during the import.
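Steps 5 to 7 can be previewed for a given set of DR tapes before anything is run. The following is a minimal sketch that only prints the commands. The rescan and move forms are the ones shown in the steps above, and the import form with the -p and -P options is the one used in the recovery procedure later in this section. A multiple-library setup might need further options. The function name dr_import_plan is hypothetical.

```shell
# Print (do not run) the commands for steps 5 to 7 of the DR procedure.
# Usage: dr_import_plan <pool> <restore_path> <tape_id>...
# dr_import_plan is a hypothetical helper, not part of the product.
dr_import_plan() {
    pool=$1 restore_path=$2
    shift 2
    echo "eeadm library rescan"
    for tape in "$@"; do
        echo "eeadm tape move $tape -L homeslot"
    done
    echo "eeadm tape import $* -p $pool -P $restore_path"
}

# Usage:
#   dr_import_plan PrimPool /gpfs/ltfsee/rebuild LTFS01L6 LTFS02L6 LTFS03L6
```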

Restoring file system objects and files from tape

If a GPFS file system fails, the migrated files and the saved file system objects (empty regular files, symbolic links, and empty directories) that are located in an exported tape can be restored from tapes by running the eeadm tape import command.

The eeadm tape import command reinstantiates the stub files in IBM Spectrum Scale for migrated files. The state of those files changes to the migrated state. Also, the eeadm tape import command re-creates the file system objects in IBM Spectrum Scale for saved file system objects.

|

Note: When a symbolic link is saved to tape and then restored by the eeadm tape import command, the target of the symbolic link is kept. However, this process might cause the link to break. Therefore, after a symbolic link is restored, it might need to be moved manually to its original location on IBM Spectrum Scale.

|

Recovery procedure by using the eeadm tape import command

Here is a typical user scenario for recovering migrated files and saved file system objects from tape by running the eeadm tape import command:

1. Re-create the GPFS file system or create a GPFS file system.

2. Restore the migrated files and saved file system objects from tape by running the eeadm tape import command:

eeadm tape import LTFS01L6 LTFS02L6 LTFS03L6 -p PrimPool -P /gpfs/ltfsee/rebuild

/gpfs/ltfsee/rebuild is a directory in IBM Spectrum Scale to be restored to, PrimPool is the storage pool to import the tapes into, and LTFS01L6, LTFS02L6, and LTFS03L6 are tapes that contain migrated files or saved file system objects.

Import processing for unexported tapes that are not reconciled

The eeadm tape import command might encounter tapes that are not reconciled when the command is applied to tapes that are not exported from IBM Spectrum Archive EE. In this case, the following situations can occur with the processing to restore files and file system objects, and should be handled as described for each case:

•The tapes might have multiple generations of a file or a file system object. If so, the eeadm tape import command restores an object from the latest one that is on the tapes that are specified by the command.

•The tapes might not reflect the latest file information from IBM Spectrum Scale. If so, the eeadm tape import command restores files or file system objects that were removed from IBM Spectrum Scale.

Rebuild and restore considerations

While the eeadm tape import command is running, do not modify or access the files or file system objects to be restored. During the rebuild process, an old generation of the file can appear on IBM Spectrum Scale.

For more information about the eeadm tape import command, see “Importing” on page 202.

7.24 IBM Spectrum Archive EE problem determination

If you discover an error message or a problem while you are running and operating the IBM Spectrum Archive EE program, you can check the IBM Spectrum Archive EE log file as a starting point for problem determination.

The IBM Spectrum Archive EE log file can be found at the following path:

/var/log/ltfsee.log

In Example 7-20, we attempted to migrate two files (document10.txt and document20.txt) to a pool (myfirstpool) that contained two newly formatted and added physical tapes (055AGWL5 and 055AGWL5). The command reported that only one file was migrated successfully. We checked the ltfsee.log file to determine why the other file was not migrated.

Example 7-20 Check the ltfsee.log file

[root@mikasa1 gpfs]# eeadm migrate mig -p myfirstpool@lib_saitama

2019-01-21 08:37:54 GLESL700I: Task migrate was created successfully, task id is 7217.

2019-01-21 08:37:55 GLESM896I: Starting the stage 1 of 3 for migration task 7217 (qualifying the state of migration candidate files).

2019-01-21 08:37:55 GLESM897I: Starting the stage 2 of 3 for migration task 7217 (copying the files to 1 pools).

2019-01-21 08:38:36 GLESM898I: Starting the stage 3 of 3 for migration task 7217 (changing the state of files on disk).

2019-01-21 08:38:36 GLESL159E: Not all migration has been successful.

2019-01-21 08:38:36 GLESL038I: Migration result: 1succeeded, 1 failed, 0 duplicate, 0 duplicate wrong pool, 0 not found, 0 too small to qualify for migration, 0 too early for migration.

[root@ltfs97 gpfs]#

[root@ltfs97 gpfs]# vi /var/log/ltfsee.log

2019-01-21T08:37:55.399423-07:00 saitama2 mmm[22236]: GLESM148E(00704): File /ibm/gpfs/document20.txt is already migrated and will be skipped.

2019-01-21T08:38:36.694378-07:00 saitama2 mmm[22236]: GLESL159E(00142): Not all migration has been successful.

In Example 7-20, you can see from the message in the IBM Spectrum Archive EE log file that one file we tried to migrate was already in a migrated state and was therefore skipped, as shown in the following example:

2019-01-21T08:37:55.399423-07:00 saitama2 mmm[22236]: GLESM148E(00704): File /ibm/gpfs/document20.txt is already migrated and will be skipped.

For more information about problem determination, see Chapter 9, “Troubleshooting IBM Spectrum Archive Enterprise Edition” on page 293.
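Instead of paging through the log in an editor, the error-level messages can be filtered out directly. The following is a minimal sketch, assuming that message IDs have the form GLES, a letter, digits, and a severity letter, where a trailing E marks an error and I marks information. This convention is inferred from the messages in Example 7-20. The function name ltfsee_errors is hypothetical.

```shell
# Print only error-level messages from an ltfsee.log-style stream on stdin.
# Matches IDs such as GLESM148E(00704): and GLESL159E: but not GLESL700I:.
ltfsee_errors() {
    grep -E 'GLES[A-Z][0-9]+E[(:]'
}

# Usage:
#   ltfsee_errors < /var/log/ltfsee.log
```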

7.24.1 Rsyslog log suppression by rate-limiting

IBM Spectrum Archive uses rsyslogd and journald of the Red Hat Enterprise Linux system for logging. By default, rate-limiting of the log messages is enabled. With rate-limiting, messages can be suppressed when many are logged in a short time, which hinders problem analysis because complete logs are needed.

It is highly recommended to disable the rate-limiting so that no logs are suppressed, as follows:

1. Open /etc/systemd/journald.conf and add the following lines:

RateLimitInterval=0

RateLimitBurst=0

2. Open /etc/rsyslog.conf, and in the Global Directives section, add the following lines:

$imjournalRatelimitInterval 0

$imjournalRatelimitBurst 0

3. Restart the services:

systemctl restart systemd-journald

systemctl restart rsyslog.service
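After you edit both files, you might want to confirm that the settings are in place before you restart the services. The following is a minimal sketch of such a check. The function names has_zero_setting and check_rate_limits are hypothetical, and the functions only read the files.

```shell
#!/bin/bash
# Hypothetical helper: succeed if FILE sets KEY to 0, written either as
# "KEY=0" (journald.conf) or "KEY 0" (rsyslog legacy directive).
# KEY is used as a regular expression, so a literal "$" must be escaped.
has_zero_setting() {
    local file="$1" key="$2"
    grep -Eq "^[[:space:]]*${key}[[:space:]=]+0[[:space:]]*\$" "$file"
}

# Report every expected setting that is missing; return non-zero if any is.
check_rate_limits() {
    local journald="${1:-/etc/systemd/journald.conf}"
    local rsyslog="${2:-/etc/rsyslog.conf}"
    local rc=0 key
    for key in 'RateLimitInterval' 'RateLimitBurst'; do
        has_zero_setting "$journald" "$key" || { echo "missing in $journald: $key"; rc=1; }
    done
    for key in '\$imjournalRatelimitInterval' '\$imjournalRatelimitBurst'; do
        # Strip the regex escape before printing the directive name.
        has_zero_setting "$rsyslog" "$key" || { echo "missing in $rsyslog: ${key#\\}"; rc=1; }
    done
    return "$rc"
}
```

Running check_rate_limits without arguments checks the two default file locations that are named in the steps above. Commented-out lines are not counted as valid settings.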

7.25 Collecting IBM Spectrum Archive EE logs for support

If you discover a problem with your IBM Spectrum Archive EE program and open a ticket at the IBM Support Center, you might be asked to provide a package of IBM Spectrum Archive EE log files.

A Linux script is available with IBM Spectrum Archive EE that collects all of the needed files and logs and compresses them into a single package that you can provide to IBM Support.

To generate the compressed .tar file and provide it on request to IBM Support, run the following command:

ltfsee_log_collection

Example 7-21 shows the output of the ltfsee_log_collection command. During the log collection run, you are asked what information you want to collect. If you are unsure, enter y to collect all the information. At the end of the output, you can find the name of the log package file and where it was stored.

Example 7-21 The ltfsee_log_collection command

[root@kyoto ~]# ltfsee_log_collection

IBM Spectrum Archive Enterprise Edition - log collection program

This program collects the following information from your IBM Spectrum Scale (GPFS) cluster.

(1) Log files that are generated by IBM Spectrum Scale (GPFS), and IBM Spectrum Archive EE

(2) Configuration information that is configured to use IBM Spectrum Scale (GPFS)

and IBM Spectrum Archive EE.

(3) System information including

OS distribution and kernel information,

hardware information (CPU and memory) and

process information (list of running processes).

(4) Task information files under the following subdirectory

<GPFS mount point>/.ltfsee/statesave

If you agree to collect all the information, input 'y'.

If you agree to collect only (1) and (2), input 'p' (partial).

If you agree to collect only (4) task information files, input 't'.

If you don't agree to collect any information, input 'n'.

The following files are collected only if they were modified within the last 90 days.

- /var/log/messages*

- /var/log/ltfsee.log*

- /var/log/ltfsee_trc.log*

- /var/log/ltfsee_mon.log*

- /var/log/ltfs.log*

- /var/log/ltfsee_install.log

- /var/log/ltfsee_stat_driveperf.log*

- /var/log/httpd/error_log*

- /var/log/httpd/rest_log*

- /var/log/ltfsee_rest/rest_app.log*

- /var/log/logstash/*

You can collect all of the above files, including files modified within the last 90 days, with an argument of 'all'.

#./ltfsee_log_collection all

If you want to collect the above files that were modified within the last 30 days.

#./ltfsee_log_collection 30

The collected data will be zipped in the ltfsee_log_files_<date>_<time>.tar.gz file.

You can check the contents of the file before submitting it to IBM.

Input > y

Creating a temporary directory '/root/ltfsee_log_files'...

The collection of local log files is in progress.

Removing collected files...

Information has been collected and archived into the following file.

ltfsee_log_files_20190121_084618.tar.gz
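The program output notes that you can check the contents of the package before you submit it to IBM. The following is a minimal sketch of one way to do that with the standard tar command, without extracting anything. The function name list_log_package is hypothetical.

```shell
#!/bin/bash
# List the files inside a collected log package without extracting it.
# list_log_package is a hypothetical helper name; tar -tzf is standard tar usage.
list_log_package() {
    tar -tzf "$1"
}
```

For the run in Example 7-21, the call would be list_log_package ltfsee_log_files_20190121_084618.tar.gz.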

7.26 Backing up files within file systems that are managed by IBM Spectrum Archive EE

The IBM Spectrum Protect Backup/Archive client and the IBM Spectrum Protect HSM client from the IBM Spectrum Protect family are components of IBM Spectrum Archive EE and are installed as part of the IBM Spectrum Archive EE installation process. Therefore, it is possible to use them to back up files within the GPFS or IBM Spectrum Scale file systems. The mmbackup command can be used to back up some or all of the files of a GPFS or IBM Spectrum Scale file system to IBM Spectrum Protect servers using the IBM Spectrum Protect Backup-Archive client. After files have been backed up, you can restore them using the interfaces provided by IBM Spectrum Protect.

The mmbackup command uses the scalable, parallel processing capabilities of the mmapplypolicy command to scan the file system, evaluate the metadata of all the objects in the file system, and determine which files need to be sent to backup in IBM Spectrum Protect, as well as which deleted files should be expired from IBM Spectrum Protect. Both backup and expiration take place when running mmbackup in the incremental backup mode.

The mmbackup command can interoperate with regular IBM Spectrum Protect commands for backup and expire operations. However, if any IBM Spectrum Protect incremental or selective backup or expire commands are used after mmbackup, mmbackup must be informed of these activities. Use either the -q option or the --rebuild option in the next mmbackup command invocation to enable mmbackup to rebuild its shadow databases.

These databases shadow the inventory of objects in IBM Spectrum Protect so that only new changes are backed up in the next incremental mmbackup. If the shadow databases are not rebuilt, some files are needlessly backed up again. The shadow database can also become out of date if mmbackup fails because of certain IBM Spectrum Protect server problems that prevent mmbackup from properly updating its shadow database after a backup. In these cases, you must also issue the next mmbackup command with either the -q option or the --rebuild option.
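The choice between a plain incremental run and one that first refreshes the shadow database can be sketched as follows. This is a minimal sketch that only prints the command line so that you can review it before you run it. The function name mmbackup_cmd and the /ibm/gpfs path are examples only, and the sketch uses the -q option (the --rebuild option is the alternative that is named in the text).

```shell
#!/bin/bash
# Print (do not run) an incremental mmbackup command line.
# Pass "refresh" as the second argument when other IBM Spectrum Protect
# backup or expire commands were used since the last mmbackup run, or when
# the last mmbackup run failed to update its shadow database.
# mmbackup_cmd is a hypothetical helper name.
mmbackup_cmd() {
    local fs="$1" mode="${2:-normal}"
    local cmd="mmbackup $fs -t incremental"
    if [ "$mode" = "refresh" ]; then
        cmd="$cmd -q"
    fi
    echo "$cmd"
}
```

For example, mmbackup_cmd /ibm/gpfs refresh prints the incremental command with the -q option appended.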

The mmbackup command provides the following benefits:

•A full backup of all files in the specified scope.

•An incremental backup of only those files that have changed or been deleted since the last backup. Files that have changed since the last backup are updated and files that have been deleted since the last backup are expired from the IBM Spectrum Protect server.

•Utilization of a fast scan technology for improved performance.

•The ability to perform the backup operation on a number of nodes in parallel.

•Multiple tuning parameters to allow more control over each backup.

•The ability to back up the read/write version of the file system or specific global snapshots.

•Storage of the files in the backup server under their GPFS root directory path independent of whether backing up from a global snapshot or the live file system.

•Handling of unlinked filesets to avoid inadvertent expiration of files.

For more information, see IBM Documentation.

7.26.1 Considerations

Consider the following points when you are using the IBM Spectrum Protect Backup/Archive client in the IBM Spectrum Archive EE environment:

•IBM Spectrum Protect requirements for backup

•Update the dsm.sys and dsm.opt files to support both IBM Spectrum Protect and IBM Spectrum Archive EE operations:

|

Note: In the dsm.sys file, when multiple server stanzas are defined for IBM Spectrum Archive and IBM Spectrum Protect, the following lines must be placed at the beginning of the file. Otherwise, IBM Spectrum Archive cannot migrate or recall files.

|

HSMBACKENDMODE TSMFREE

ERRORLOGNAME /opt/tivoli/tsm/client/hsm/bin/dsmerror.log

MAXRECALLDAEMONS 64

MINRECALLDAEMONS 64

HSMMIGZEROBLOCKFILES YES

errorlogretention 180 S

•Ensure that the files are backed up with IBM Spectrum Protect first and archived with IBM Spectrum Archive EE afterward to avoid recall storms.
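The placement rule for the dsm.sys file in the note above can be checked with a short script. The following is a minimal sketch, assuming that each server stanza starts with a SErvername line (matched without regard to case) and that finding HSMBACKENDMODE before the first stanza is a sufficient check. The function name hsm_options_first is hypothetical.

```shell
#!/bin/bash
# Succeed if HSMBACKENDMODE appears before the first server stanza in the
# given dsm.sys file; fail if a stanza comes first or the option is missing.
# Assumption: a server stanza starts with a "SErvername" line.
# hsm_options_first is a hypothetical helper name.
hsm_options_first() {
    awk '
        {
            key = toupper($1)
            if (key == "HSMBACKENDMODE") { found = 1; exit }
            if (key == "SERVERNAME")     { exit }
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}
```

Pass the path of your dsm.sys file as the only argument and test the return code, for example with hsm_options_first /path/to/dsm.sys && echo OK.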

7.26.2 Backing up a GPFS or IBM Spectrum Scale environment

The best practice is to always back up the files by using IBM Spectrum Protect first and then archive the files by using IBM Spectrum Archive EE. The primary reason is that attempting to back up the stub of a file that was migrated to IBM Spectrum Archive EE causes it to be automatically recalled from LTFS (tape) to the IBM Spectrum Scale file system. This is not an efficient way to perform backups, especially when you are dealing with large numbers of files.

The mmbackup command is used to back up the files of a GPFS or IBM Spectrum Scale file system to IBM Spectrum Protect servers by using the IBM Spectrum Protect Backup/Archive Client of the IBM Spectrum Protect family. In addition, the mmbackup command can operate with regular IBM Spectrum Protect backup commands for backup. After a file system is backed up, you can restore files by using the interfaces that are provided by the IBM Spectrum Protect family.

Starting with IBM Spectrum Archive EE v1.3.0.0 and IBM Spectrum Scale v5.0.2.2, the eeadm migrate command has a new option called --mmbackup. When the --mmbackup option is supplied, IBM Spectrum Archive EE first verifies that current backup versions of the files exist within IBM Spectrum Protect before it archives them to IBM Spectrum Archive EE. Files that are not backed up are filtered out and are not archived to tape. This ensures that backing up files does not cause a recall storm.

The --mmbackup option of the eeadm migrate command takes one argument, which is the location of the mmbackup shadow database. This location is normally the same as the device or directory argument of the mmbackup command.
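The recommended order of operations (back up first, then migrate with the --mmbackup option) can be sketched as follows. This is a minimal sketch that only prints the two commands for review. It assumes the eeadm migrate form with a file list and a -p pool argument, and the function name, file list name, and pool name are hypothetical.

```shell
#!/bin/bash
# Print (do not run) the two commands in the recommended order:
# 1. back up the file system with mmbackup
# 2. migrate with --mmbackup pointing at the same location, so that files
#    without a current backup version are filtered out
# backup_then_migrate_cmds is a hypothetical helper name.
backup_then_migrate_cmds() {
    local fs="$1" filelist="$2" pool="$3"
    echo "mmbackup $fs -t incremental"
    echo "eeadm migrate $filelist -p $pool --mmbackup $fs"
}
```

For example, backup_then_migrate_cmds /ibm/gpfs migrate_list.txt pool1 prints the mmbackup command followed by the eeadm migrate command that uses /ibm/gpfs as the shadow database location.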

7.27 IBM TS4500 Automated Media Verification with IBM Spectrum Archive EE

In some use cases where IBM Spectrum Archive EE is deployed, you might be required to periodically ensure that the files and data that are migrated from the IBM Spectrum Scale file system to physical tape are still readable and can be recalled from tape to the file system without any error. Especially in a long-term archival environment, a function that checks the physical media on a user-defined schedule is valuable.

Starting with the release of the IBM TS4500 Tape Library R2, a new, fully transparent function is introduced within the TS4500 operations that is named policy-based automatic media verification. This new function is hidden from any ISV software, similar to the automatic cleaning.

No ISV certification is required. It can be enabled or disabled through the logical library, with additional settings to define the verification period (for example, every 6 months) and the first verification date.

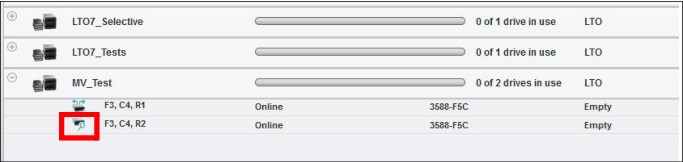

One or more designated media verification drives (MVDs) must be assigned to a logical library in order for the verification to take place. A preferred practice is to have two MVDs assigned at a time to ensure that no false positives occur because of a faulty tape drive. Figure 7-2 shows an example of such a setup.

Figure 7-2 TS4500 with one logical library showing two MVDs configured

|

Note: MVDs that are defined within a logical library are not accessible to the host or application that uses the drives of that logical library for production.

|

Verification results are a simple pass or fail, but verification failures are retried on a second physical drive, if available, before being reported. A failure is reported through all normal notification options (email, syslog, and SNMP). MVDs are not reported as mount points (SCSI DTEs) to the ISV application, so MVDs do not need to be connected to the SAN.

During this process, whenever access from the application or host is required to the physical media under media verification, the tape library stops the current verification process. It then dismounts the needed tape from the MVD, and mounts it to a regular tape drive within the same logical library for access by the host application to satisfy the requested mount. At a later point, the media verification process continues.

The library GUI Cartridges page adds columns for last verification date/time, verification result, and next verification date/time (if automatic media verification is enabled). If a cartridge being verified is requested for ejection or mounting by the ISV software (which thinks the cartridge is in a storage slot), the verify task is automatically canceled, a checkpoint occurs, and the task resumes later (if/when the cartridge is available).