15.1 Introduction to Machine Learning

In this chapter and the next, we’ll present machine learning—one of the most exciting and promising subfields of artificial intelligence. You’ll see how to quickly solve challenging and intriguing problems that novices and most experienced programmers probably would not have attempted just a few years ago. Machine learning is a big, complex topic that raises lots of subtle issues. Our goal here is to give you a friendly, hands-on introduction to a few of the simpler machine-learning techniques.

What Is Machine Learning?

Can we really make our machines (that is, our computers) learn? In this and the next chapter, we’ll show exactly how that magic happens. What’s the “secret sauce” of this new application-development style? It’s data and lots of it. Rather than programming expertise into our applications, we program them to learn from data.

We’ll present many Python-based code examples that build working machine-learning models then use them to make remarkably accurate predictions. The chapter is loaded with exercises and projects that will give you the opportunity to broaden and deepen your machine-learning expertise.

Prediction

Wouldn’t it be fantastic if you could improve weather forecasting to save lives, minimize injuries and property damage? What if we could improve cancer diagnoses and treatment regimens to save lives, or improve business forecasts to maximize profits and secure people’s jobs? What about detecting fraudulent credit-card purchases and insurance claims? How about predicting customer “churn,” what prices houses are likely to sell for, ticket sales of new movies, and anticipated revenue of new products and services? How about predicting the best strategies for coaches and players to use to win more games and championships? All of these kinds of predictions are happening today with machine learning.

Machine Learning Applications

Here’s a table of some popular machine-learning applications:

| Anomaly detection Chatbots Classifying emails as spam or not spam Classifying news articles as sports, financial, politics, etc. Computer vision and image classification Credit-card fraud detection Customer churn prediction Data compression Data exploration Data mining social media (like Facebook, Twitter, LinkedIn) |

Detecting objects in scenes Detecting patterns in data Diagnostic medicine Facial recognition Insurance fraud detection Intrusion detection in computer networks Handwriting recognition Marketing: Divide customers into clusters Natural language translation (English to Spanish, French to Japanese, etc.) Predict mortgage loan defaults |

Recommender systems (“people who bought this product also bought…”) Self-Driving cars (more generally, autonomous vehicles) Sentiment analysis (like classifying movie reviews as positive, negative or neutral) Spam filtering Time series predictions like stock-price forecasting and weather forecasting Voice recognition |

15.1.1 Scikit-Learn

We’ll use the popular scikit-learn machine learning library. Scikit-learn, also called sklearn, conveniently packages the most effective machine-learning algorithms as estimators. Each is encapsulated, so you don’t see the intricate details and heavy mathematics of how these algorithms work. You should feel comfortable with this—you drive your car without knowing the intricate details of how engines, transmissions, braking systems and steering systems work. Think about this the next time you step into an elevator and select your destination floor, or turn on your television and select the program you’d like to watch. Do you really understand the internal workings of your smart phone’s hardware and software?

With scikit-learn and a small amount of Python code, you’ll create powerful models quickly for analyzing data, extracting insights from the data and most importantly making predictions. You’ll use scikit-learn to train each model on a subset of your data, then test each model on the rest to see how well your model works. Once your models are trained, you’ll put them to work making predictions based on data they have not seen. You’ll often be amazed at the results. All of a sudden your computer that you’ve used mostly on rote chores will take on characteristics of intelligence.

Scikit-learn has tools that automate training and testing your models. Although you can specify parameters to customize the models and possibly improve their performance, in this chapter, we’ll typically use the models’ default parameters, yet still obtain impressive results. It gets even better. In the exercises, you’ll investigate auto-sklearn which automates many of the tasks you perform with scikit-learn.

Which Scikit-Learn Estimator Should You Choose for Your Project

It’s difficult to know in advance which model(s) will perform best on your data, so you typically try many models and pick the one that performs best. As you’ll see, scikit-learn makes this convenient for you. A popular approach is to run many models and pick the best one(s). How do we evaluate which model performed best?

You’ll want to experiment with lots of different models on different kinds of datasets. You’ll rarely get to know the details of the complex mathematical algorithms in the sklearn estimators, but with experience, you’ll become familiar with which algorithms may be best for particular types of datasets and problems. Even with that experience, it’s unlikely that you’ll be able to intuit the best model for each new dataset. So scikit-learn makes it easy for you to “try ’em all.” It takes at most a few lines of code for you to create and use each model. The models report their performance so you can compare the results and pick the model(s) with the best performance.

15.1.2 Types of Machine Learning

We’ll study the two main types of machine learning—supervised machine learning, which works with labeled data, and unsupervised machine learning, which works with unlabeled data.

If, for example, you’re developing a computer vision application to recognize dogs and cats, you’ll train your model on lots of dog photos labeled “dog” and cat photos labeled “cat.” If your model is effective, when you put it to work processing unlabeled photos it will recognize dogs and cats it has never seen before. The more photos you train with, the greater the chance that your model will accurately predict which new photos are dogs and which are cats. In this era of big data and massive, economical computer power, you should be able to build some pretty accurate models with the techniques you’re about to learn.

How can looking at unlabeled data be useful? Online booksellers sell lots of books. They record enormous amounts of (unlabeled) book purchase transaction data. They noticed early on that people who bought certain books were likely to purchase other books on the same or similar topics. That led to their recommendation systems. Now, when you browse a bookseller site for a particular book, you’re likely to see recommendations like, “people who bought this book also bought these other books.” Recommendation systems are big business today, helping to maximize product sales of all kinds.

Supervised Machine Learning

Supervised machine learning falls into two categories—classification and regression. You train machine-learning models on datasets that consist of rows and columns. Each row represents a data sample. Each column represents a feature of that sample. In supervised machine learning, each sample has an associated label called a target (like “dog” or “cat”). This is the value you’re trying to predict for new data that you present to your models.

Datasets

You’ll work with some “toy” datasets, each with a small number of samples with a limited number of features. You’ll also work with several richly featured real-world datasets, one containing a few thousand samples and one containing tens of thousands of samples. In the world of big data, datasets commonly have, millions and billions of samples, or even more.

There’s an enormous number of free and open datasets available for data science studies. Libraries like scikit-learn package up popular datasets for you to experiment with and provide mechanisms for loading datasets from various repositories (such as openml.org). Governments, businesses and other organizations worldwide offer datasets on a vast range of subjects. Between the text examples and the exercises and projects, you’ll work with many popular free datasets, using a variety of machine learning techniques.

Classification

We’ll use one of the simplest classification algorithms, k-nearest neighbors, to analyze the Digits dataset bundled with scikit-learn. Classification algorithms predict the discrete classes (categories) to which samples belong. Binary classification uses two classes, such as “spam” or “not spam” in an email classification application. Multi-classification uses more than two classes, such as the 10 classes, 0 through 9, in the Digits dataset. A classification scheme looking at movie descriptions might try to classify them as “action,” “adventure,” “fantasy,” “romance,” “history” and the like.

Regression

Regression models predict a continuous output, such as the predicted temperature output in the weather time series analysis from Chapter 10’s Intro to Data Science section. In this chapter, we’ll revisit that simple linear regression example, this time implementing it using scikit-learn’s LinearRegression estimator. Next, we use a LinearRegression estimator to perform multiple linear regression with the California Housing dataset that’s bundled with scikit-learn. We’ll predict the median house value of a U. S. census block of homes, considering eight features per block, such as the average number of rooms, median house age, average number of bedrooms and median income. The LinearRegression estimator, by default, uses all the numerical features in a dataset to make more sophisticated predictions than you can with a single-feature simple linear regression.

Unsupervised Machine Learning

Next, we’ll introduce unsupervised machine learning with clustering algorithms. We’ll use dimensionality reduction (with scikit-learn’s TSNE estimator) to compress the Digits dataset’s 64 features down to two for visualization purposes. This will enable us to see how nicely the Digits data “cluster up.” This dataset contains handwritten digits like those the post office’s computers must recognize to route each letter to its designated zip code. This is a challenging computer-vision problem, given that each person’s handwriting is unique. Yet, we’ll build this clustering model with just a few lines of code and achieve impressive results. And we’ll do this without having to understand the inner workings of the clustering algorithm. This is the beauty of object-based programming. We’ll see this kind of convenient object-based programming again in the next chapter, where we’ll build powerful deep learning models using the open source Keras library.

K-Means Clustering and the Iris Dataset

We’ll present the simplest unsupervised machine-learning algorithm, k-means clustering, and use it on the Iris dataset that’s also bundled with scikit-learn. We’ll use dimensionality reduction (with scikit-learn’s PCA estimator) to compress the Iris dataset’s four features to two for visualization purposes. We’ll show the clustering of the three Iris species in the dataset and graph each cluster’s centroid, which is the cluster’s center point. Finally, we’ll run multiple clustering estimators to compare their ability to divide the Iris dataset’s samples effectively into three clusters.

You normally specify the desired number of clusters, k. K-means works through the data trying to divide it into that many clusters. As with many machine learning algorithms, k-means is iterative and gradually zeros in on the clusters to match the number you specify.

K-means clustering can find similarities in unlabeled data. This can ultimately help with assigning labels to that data so that supervised learning estimators can then process it. Given that it’s tedious and error-prone for humans to have to assign labels to unlabeled data, and given that the vast majority of the world’s data is unlabeled, unsupervised machine learning is an important tool.

Big Data and Big Computer Processing Power

The amount of data that’s available today is already enormous and continues to grow exponentially. The data produced in the world in the last few years equals the amount produced up to that point since the dawn of civilization. We commonly talk about big data, but “big” may not be a strong enough term to describe truly how huge data is getting.

People used to say “I’m drowning in data and I don’t know what to do with it.” With machine learning, we now say, “Flood me with big data so I can use machine-learning technology to extract insights and make predictions from it.”

This is occurring at a time when computing power is exploding and computer memory and secondary storage are exploding in capacity while costs dramatically decline. All of this enables us to think differently about the solution approaches. We now can program computers to learn from data, and lots of it. It’s now all about predicting from data.

15.1.3 Datasets Bundled with Scikit-Learn

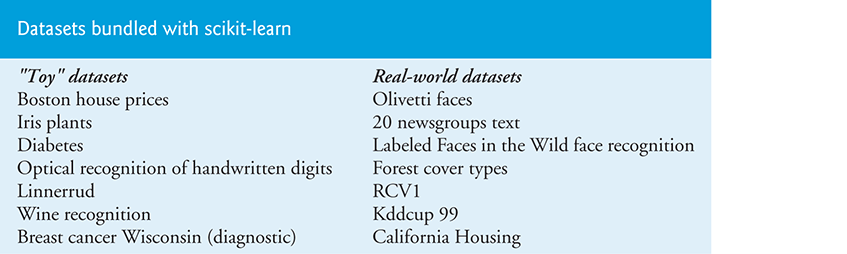

The following table lists scikit-learn’s bundled datasets.1 It also provides capabilities for loading datasets from other sources, such as the 20,000+ datasets available at openml.org.

15.1.4 Steps in a Typical Data Science Study

We’ll perform the steps of a typical machine-learning case study, including:

loading the dataset

exploring the data with pandas and visualizations

transforming your data (converting non-numeric data to numeric data because scikit-learn requires numeric data; in the chapter, we use datasets that are “ready to go,” but we’ll discuss the issue again in the “Deep Learning” chapter)

splitting the data for training and testing

creating the model

training and testing the model

tuning the model and evaluating its accuracy

making predictions on live data that the model hasn’t seen before.

In the “Array-Oriented Programming with NumPy” and “Strings: A Deeper Look” chapters’ Intro to Data Science sections, we discussed using pandas to deal with missing and erroneous values. These are important steps in cleaning your data before using it for machine learning.

Self Check

Self Check

(Fill-In) Machine learning falls into two main categories— ___________ machine learning, which works with labeled data and _________ machine learning, which works with unlabeled data

Answer: supervised, unsupervised.

(True/False) With machine learning, rather than programming expertise into our applications, we program them to learn from data.

Answer: True.