User: How does my schedule look today?

Software: You have a meeting with your leadership today at 9 AM.

User: Thank you, let me leave by 8:30 AM then.

Software: There seems to be heavy traffic downtown. How about leaving by 8 AM instead?

User: Should I go by metro then?

Software: Absolutely. If you take the 8:20 AM metro, you should be in the office by 8:45 AM.

User: Thanks. Please also mark my calendar as booked; I have a tea meeting with a prospective client.

Software: I have already done it tentatively, based on your email exchange. Don’t forget to leave work early today. Your favorite team, Barcelona, is playing Real Madrid in the final today.

User: Oh! I can’t miss it! Please send me a reminder by 4 PM.

Software: Sure, will do.

Cognitive systems and types

Why the Microsoft Cognitive API

Various Microsoft Cognitive API groups and their APIs

What Are Cognitive Systems?

Various categories of Cognitive APIs

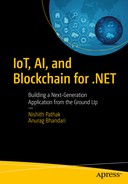

Cognition is the process of representing information and using that representation to reason. Observation enables machines to mimic human behavior, for example, by interacting through speech, text, or vision the way humans do. Active learning is the process of improving automatically over time. A classic example of active learning is Microsoft Language Understanding Intelligent Service (LUIS), which we cover later in this chapter. Physical action uses a combination of these three, together with devices, to interact intelligently.

Cognitive systems aim to understand the way humans do. We are now in an era in which AI-led machines defeat human champions at their own games. Libratus, designed by a team at Carnegie Mellon University, has defeated several top professional poker players. Poker is a game of imperfect information: some information stays hidden from players until late in the game, which makes it very difficult to model.

Cognitive systems have a unique ability to understand ideas and concepts, form a proposition, disambiguate, infer, and generate insights, based on which they can reason and act. For example, you can create a cognitive web application that can recognize human beings by looking at their images and then conversing with them in slang.

Unlike traditional computer programs, cognitive systems keep learning from new data. In fact, a cognitive system gets smarter day by day as it learns new information. Over time, its proficiency moves from novice to expert.

Why the Microsoft Cognitive API?

Now that we understand the essence of cognitive systems, you have probably realized that creating a cognitive system is not easy. It involves a great amount of research, understanding of internals, understanding of fuzzy algorithms, and much more. As a .NET developer, you may have wondered how you can make your software as smart as Microsoft’s Cortana, Apple’s Siri, or Google Assistant. You probably did not know where to start.

Over the years, we have spent a good amount of time with software developers and architects at top IT companies. A common perception we found among all of them is that adding individual AI elements, such as natural language understanding, speech recognition, or machine learning, to their software would require a deep understanding of neural networks, fuzzy logic, and mind-bending computer science theories. The good news is that this is no longer the case. Companies like Microsoft, Google, IBM, and others realized this and agreed that AI is not just about inventing new algorithms and doing research. Each of these companies has exposed its years of research in the form of SDKs and, mostly, RESTful APIs that help developers create smarter applications with very few lines of code. Together, these APIs are called cognitive APIs.

Built by Experts and Supported by Community

The Microsoft Cognitive API is the result of years of research at Microsoft by experts from various fields and teams, such as Microsoft Research, Azure Machine Learning, and Bing, to name a few. Microsoft has done a great job of abstracting away the nuances of deep neural networks, which are complex algorithms, and exposing them as easy-to-use REST APIs. This means that whenever you consume the Microsoft Cognitive API, you get this functionality in a RESTful manner. All the APIs have been thoroughly tested. We have been working with the Cognitive API since its inception and have seen each of these APIs improve over time. What makes it more appealing is that the entire functionality is backed by thorough documentation, sample code, and a strong community on GitHub, UserVoice, MSDN, and elsewhere. In fact, the entire Microsoft Cognitive Services documentation is hosted on GitHub, and individual developers can contribute to making it more effective.

Ease of Use

The Microsoft Cognitive API is easy to consume. You just need to get the subscription key for the API you want to use and pass that key with each request; the functionality is then available in a few lines of code. We go into more detail about subscription keys later. For now, just understand that before you can start using Cognitive Services, you need a subscription key for each service you want to use in your application. Almost all Cognitive APIs have a free and a paid tier, and you can get free API keys with a Microsoft account.
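To make "passing the subscription key" concrete, here is a minimal Python sketch that builds, but does not send, a POST request carrying the key in the `Ocp-Apim-Subscription-Key` header, the header Cognitive Services uses for key-based authentication. The endpoint URL, the JSON payload, and the `build_request` helper are hypothetical placeholders; take the real endpoint and request format from the documentation for your resource.

```python
import json
import urllib.request

# Hypothetical placeholder endpoint; replace it with the one for your resource.
ENDPOINT = "https://example.invalid/vision/analyze"


def build_request(subscription_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that carries the subscription key."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            # Header used by Cognitive Services for key-based authentication.
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("<your-subscription-key>", {"url": "https://example.invalid/photo.jpg"})
# urllib.request.urlopen(req) would send it; omitted because it needs a real key.
```

Keeping request construction separate from sending makes it easy to inspect or log the headers before any network call is made.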

Language and Platform Independent

Microsoft has started supporting open source in a better and more seamless way. Previously, Microsoft functionality was largely limited to Microsoft-specific tools and languages like VB.NET and C#. As discussed, the Microsoft Cognitive API is exposed as RESTful services, and what makes it more appealing is its flexibility: you can integrate and consume it from any language or platform. It doesn’t matter whether you are a Windows, Android, or Mac developer, or whether your preferred language is Python, C#, or Node.js; you can consume Microsoft Cognitive APIs with just a few lines of code. Exciting!

Note

The Microsoft cognitive space has been expanding at a steady pace. What started with four Cognitive APIs last year had grown to 29 at the time of writing. Microsoft offers suites of products, including a suite of cloud-based capabilities. While consuming these services, you may wonder why Microsoft created these services rather than specific solutions and products. The approach has been to emphasize platforms rather than individual systems. These platforms and services will eventually be used by other companies and developers to solve domain-specific problems. The intent is for the platform to scale up, create more and more offerings in the cognitive space, and give end users an immersive experience of consuming them. Don’t be surprised if the list of cognitive APIs grows to 50+ in the next year or so.

Microsoft’s Cognitive Services

Cognitive Services is a set of software-as-a-service (SaaS) commercial offerings from Microsoft related to artificial intelligence. Cognitive Services is the product of Microsoft’s years of research into cognitive computing and artificial intelligence, and many of these services are being used by some of Microsoft’s own popular products, such as Bing (search, maps), Translator, Bot Framework, etc.

The main categories of Cognitive APIs

The rest of the chapter gives an overview of each API group and its sub-APIs. Let's take a closer look at each of them, one by one.

Note

Understanding the various Microsoft Cognitive APIs in more detail would require a separate book. If you are interested in a deeper understanding of Cognitive APIs, we recommend the Apress book Artificial Intelligence for .NET.

Vision

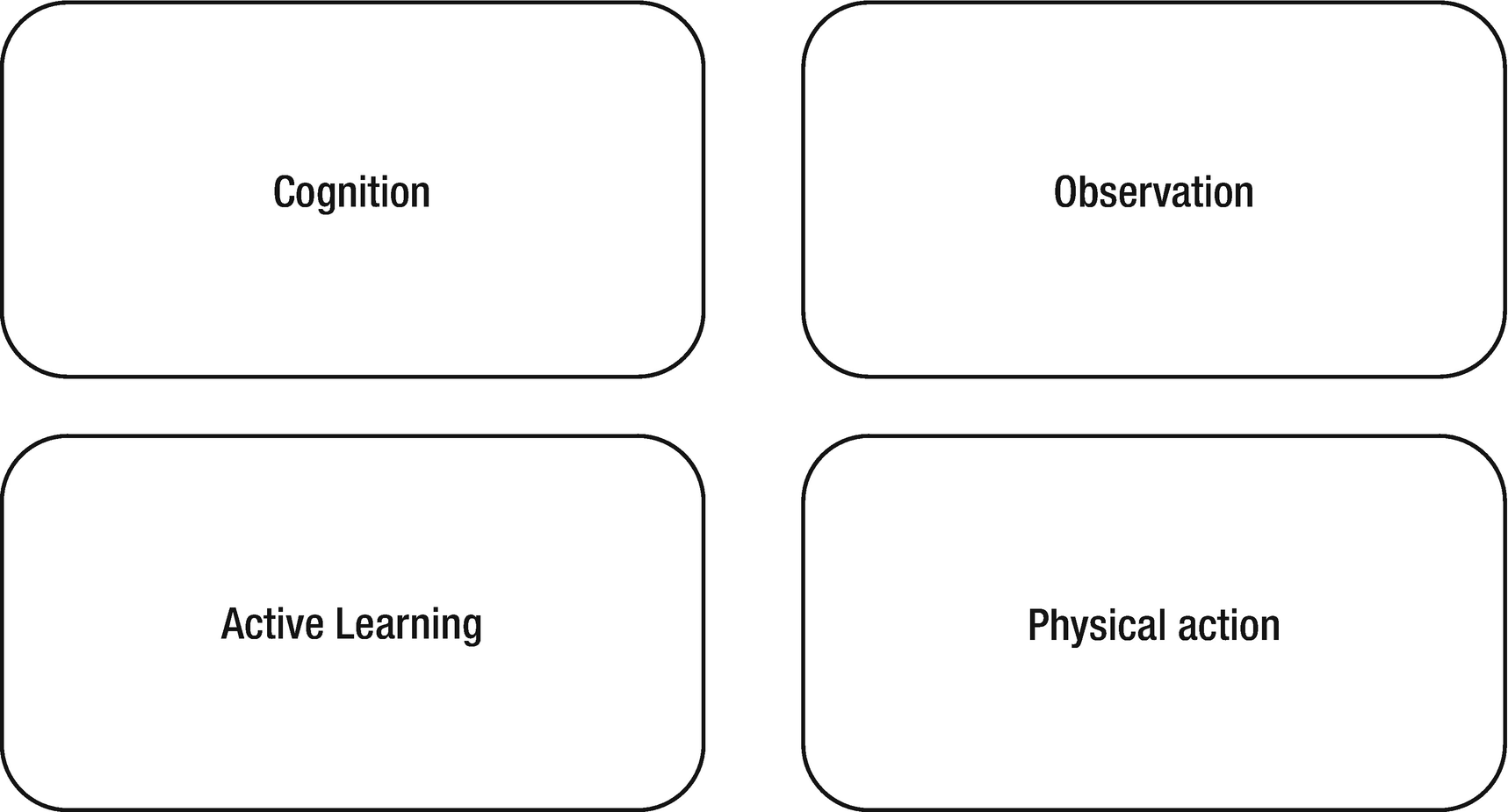

A group of riders on a dirt road

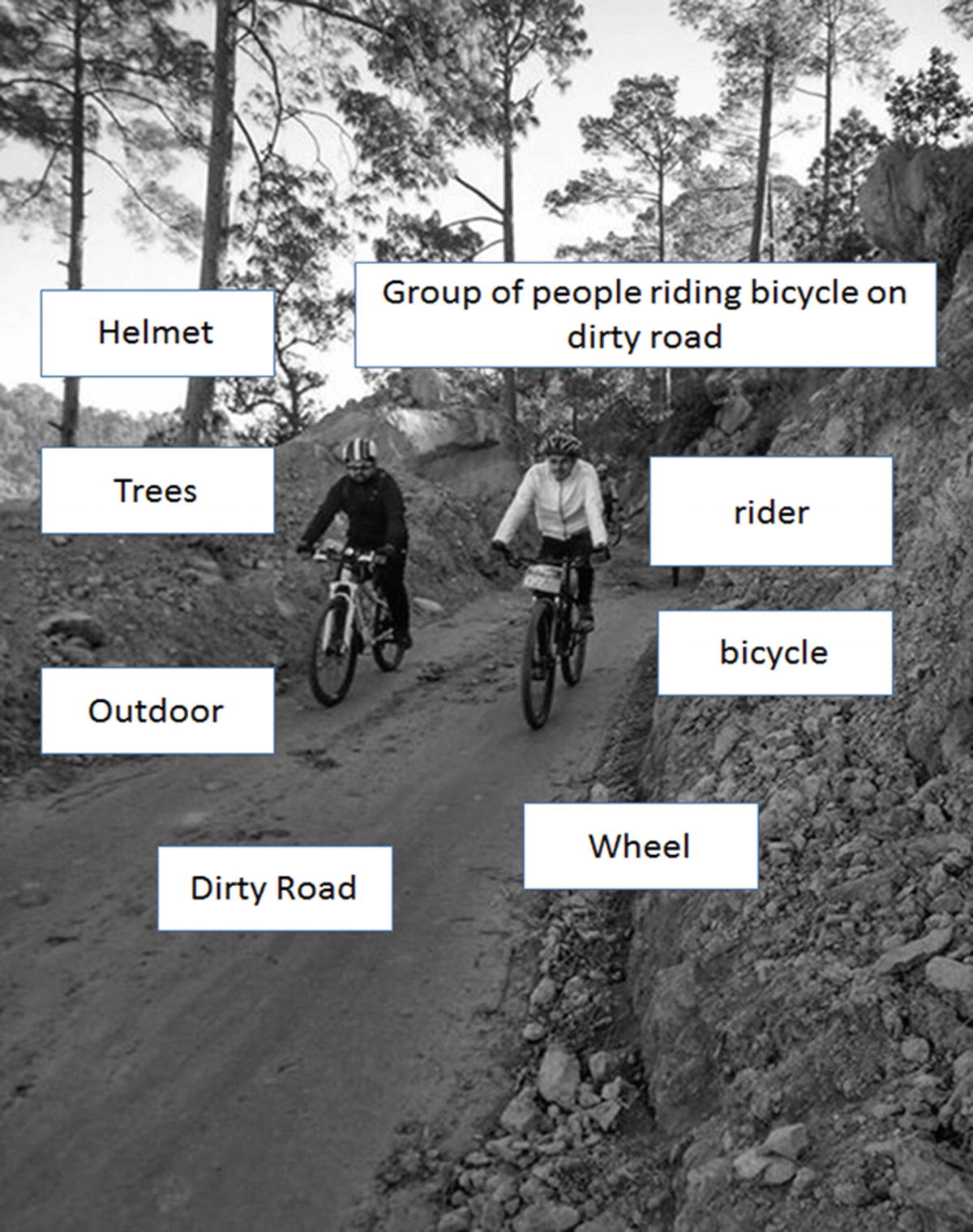

Subcategories of the Microsoft Computer Vision API

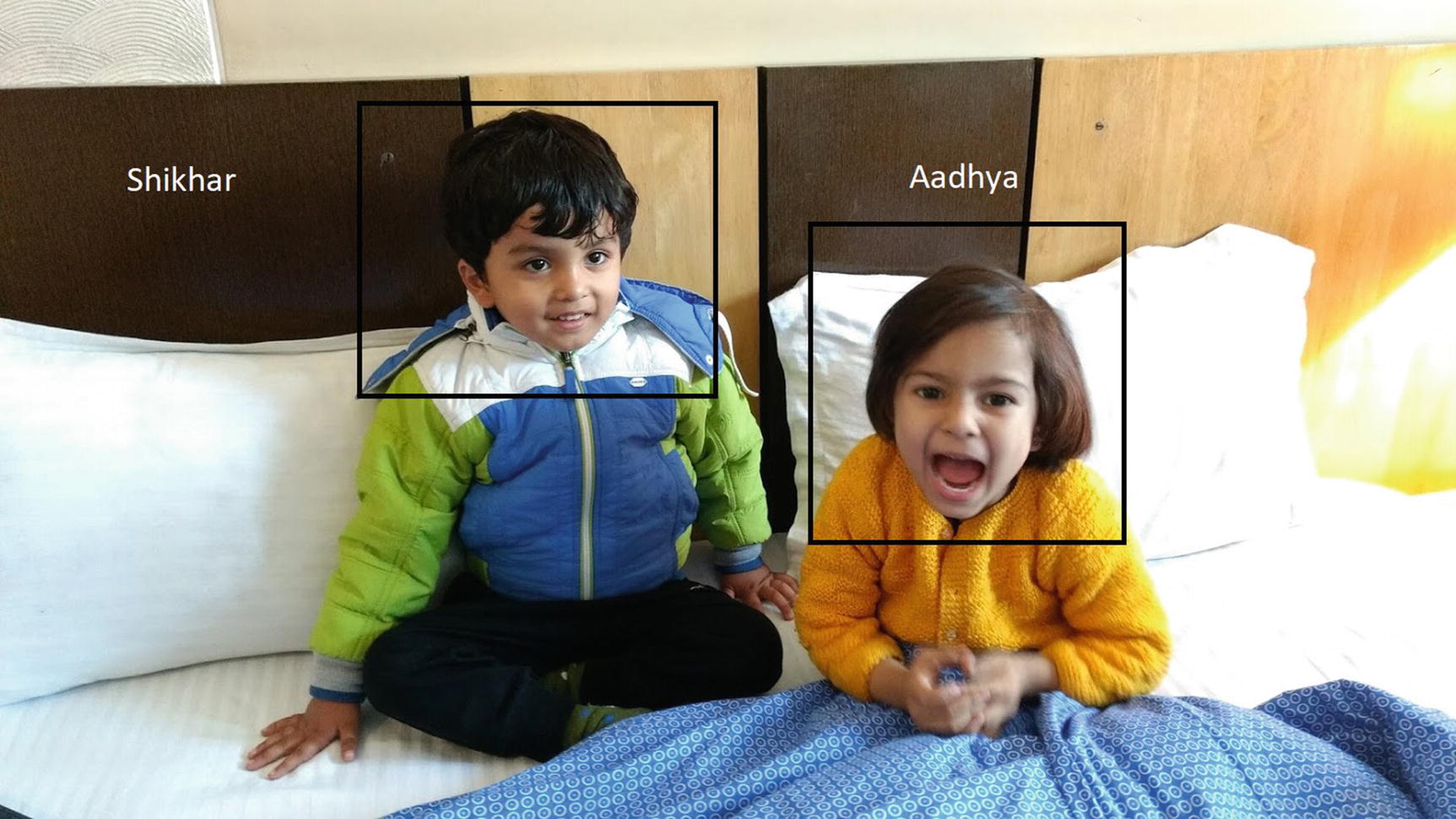

Face API

Faces being identified in an image

Emotions API

The Face API helps in detecting and recognizing faces. The Emotions API takes it to the next level by analyzing faces to detect a range of eight feelings: anger, happiness, sadness, fear, surprise, contempt, disgust, and neutrality. The Emotions API returns these emotions in decreasing order of confidence for each face. It can also recognize emotions in video, which makes it useful for getting instant feedback. Think of a use case in which the Emotions API is applied to retail customers to evaluate their feedback instantaneously.
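Ordering emotions by decreasing confidence is a simple sort over per-face scores. In the Python sketch below, the `faces` list, its field names, and the score values are hypothetical illustrations, not the exact Emotions API response format.

```python
from typing import Dict, List, Tuple

# Hypothetical per-face scores; field names are assumptions for illustration.
faces = [
    {"faceId": "face-1", "scores": {
        "anger": 0.01, "contempt": 0.0, "disgust": 0.0, "fear": 0.0,
        "happiness": 0.92, "neutral": 0.05, "sadness": 0.0, "surprise": 0.02}},
    {"faceId": "face-2", "scores": {
        "anger": 0.05, "contempt": 0.02, "disgust": 0.01, "fear": 0.02,
        "happiness": 0.0, "neutral": 0.2, "sadness": 0.7, "surprise": 0.0}},
]


def ranked_emotions(scores: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (emotion, confidence) pairs in decreasing order of confidence."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for face in faces:
    emotion, confidence = ranked_emotions(face["scores"])[0]
    print(f"{face['faceId']}: {emotion} ({confidence:.2f})")
```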

Computer Vision API

The Computer Vision API performs many tasks. It extracts rich information about an image’s contents: an intelligent textual description of the image, detected faces (with age and gender), the dominant colors, and whether the image has adult content. It also provides Optical Character Recognition (OCR). OCR is a method of capturing text in images and converting it into a text stream that can be used for further processing. The captured image can be of handwritten notes, whiteboard content, or even various objects. Computer Vision also recognizes faces using its celebrity recognition model, which covers around 200,000 celebrities from fields including entertainment, sports, and politics. It also helps in analyzing videos, providing information about them, and generating thumbnails for images. All in all, Computer Vision is the mother API of all the vision APIs. You will call this API to get information in most scenarios, unless you need more detailed information (such as the specific emotion of a person). Chapters 5 and 6 cover computer vision in more detail.
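OCR results are typically nested by region, line, and word, so turning them into plain text is mostly a matter of flattening. The sketch below assumes a hypothetical response modeled on the regions, lines, and words layout of the Computer Vision OCR operation, with bounding-box fields omitted; check the current API reference for the exact shape.

```python
from typing import List

# Hypothetical OCR response; bounding boxes omitted for brevity.
ocr_response = {
    "language": "en",
    "regions": [
        {"lines": [
            {"words": [{"text": "Meeting"}, {"text": "at"}, {"text": "9"}, {"text": "AM"}]},
            {"words": [{"text": "Room"}, {"text": "42"}]},
        ]},
    ],
}


def extract_lines(response: dict) -> List[str]:
    """Flatten the OCR result into one string per recognized line of text."""
    lines = []
    for region in response.get("regions", []):
        for line in region.get("lines", []):
            lines.append(" ".join(word["text"] for word in line.get("words", [])))
    return lines


print("\n".join(extract_lines(ocr_response)))
```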

Content Moderation

Data, as discussed, is the new currency in any organization. Data and content are generated from various sources; be it a social, messaging, or even peer-to-peer platform, content is generated exponentially. There is always a need to moderate that content so that it does not harm the platform, people, ethics, core values, and of course the business. The Content Moderation API helps with exactly that. Whether the content is text, images, or video, it supports various moderation scenarios through three methods: manual, automated, and hybrid.
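The hybrid method is easiest to see as a routing rule: automate the confident decisions and escalate the uncertain ones to human reviewers. This Python sketch assumes each item already has a risk score between 0 and 1 from some classifier; the `Item` type and both thresholds are hypothetical and would need tuning for a real platform.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them for your platform and content policy.
AUTO_APPROVE_BELOW = 0.2
AUTO_REJECT_ABOVE = 0.8


@dataclass
class Item:
    item_id: str
    risk_score: float  # e.g., a classifier's probability that content is offensive


def route(item: Item) -> str:
    """Hybrid moderation: automate confident decisions, escalate the rest."""
    if item.risk_score < AUTO_APPROVE_BELOW:
        return "approve"
    if item.risk_score > AUTO_REJECT_ABOVE:
        return "reject"
    return "human_review"


items = [Item("post-1", 0.05), Item("post-2", 0.55), Item("post-3", 0.93)]
decisions = {item.item_id: route(item) for item in items}
print(decisions)
```

Widening the band between the two thresholds sends more items to humans (closer to manual moderation); narrowing it moves toward fully automated moderation.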

Video API

The Video API allows you to use intelligent video processing for face detection, motion detection (useful in CCTV security systems), thumbnail generation, and near real-time video analysis (a textual description for each frame). It starts producing insights about a video in almost real time, which helps make your videos more engaging to end users and makes more content discoverable.

Custom Vision Service

So far, we have seen the Microsoft Cognitive API perform image recognition on things like scenes, faces, and emotions. Sometimes, however, you need a custom image classifier suited specifically to a domain. Consider a banking scenario in which you need a classifier that understands various types of currency and their denominations. This is where Microsoft Custom Vision Service comes in. You train the system with domain-specific images and then consume its REST API from any platform. To use Custom Vision Service, go to https://www.customvision.ai/, upload your domain-specific images, label them, train the classifier, and then expose it as a REST API. You can also evaluate the trained classifier; with proper training, it works like a charm. Like LUIS, Custom Vision Service is based on active machine learning, which means your custom image classifier keeps improving over time. More information about Custom Vision is found in later chapters.

Video Indexer

The Video Indexer API extracts insights from a video, such as face recognition (names of people), speech sentiment analysis (positive, negative, neutral), and keywords.

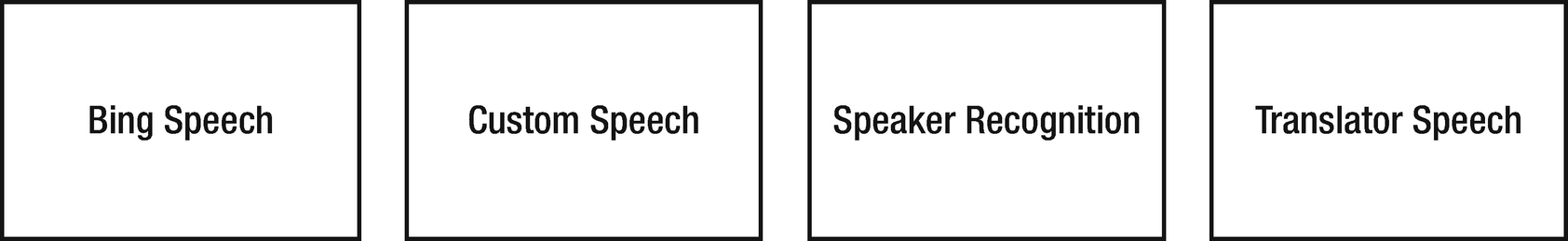

Speech

The subcategories of the Speech API

Bing Speech API

The Bing Speech API converts speech to text, optionally understands its intent, and converts text back to speech. It has two main components: Speech Recognition and Speech Synthesis. Speech Recognition, also referred to as Speech to Text (STT), uses a recognition engine to transcribe the words your users speak into text in your application. This text transcription can be used for a variety of purposes, including hands-free applications. The spoken words can come directly from a microphone, a speaker, or even an audio file. All this is possible without typing a single character.

In contrast to STT, Speech Synthesis, also known as Text to Speech (TTS), allows you to speak words or phrases back to users through a speech synthesis engine. Every Windows machine has a built-in speech synthesizer that converts text to speech. This built-in synthesizer is especially beneficial for people who have difficulty reading, or cannot read, text on the screen, and it is good for simple scenarios. For enterprise scenarios, where performance, accuracy, and speed are important, a speech model should meet the following prerequisites: it should be easy to use, improve with time, be upgraded continuously, handle complex computations, and be available on all platforms. The Bing Text to Speech API provides these features through an easy-to-consume REST API. It also supports Speech Synthesis Markup Language (SSML), a W3C specification that offers a uniform way of creating speech-based markup text. Check the official specification for the complete SSML syntax at https://www.w3.org/TR/speech-synthesis.
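As a minimal sketch of SSML markup, the Python snippet below wraps text in a `speak` root element using the SSML 1.0 namespace and adds a `prosody` element to slow the speaking rate, then parses the result to confirm it is well-formed XML. The `build_ssml` helper is hypothetical, and service-specific details such as which `voice` name to use are deliberately left out; check the service documentation for what it requires.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_ssml(text: str, lang: str = "en-US", rate: str = "medium") -> str:
    """Wrap plain text in a minimal SSML 1.0 document with a prosody rate."""
    return (
        f'<speak version="1.0" xmlns="{SSML_NS}" xml:lang="{lang}">'
        f'<prosody rate="{rate}">{escape(text)}</prosody>'  # escape &, <, >
        "</speak>"
    )


ssml = build_ssml("Your meeting starts at 9 AM & traffic is heavy.", rate="slow")
root = ET.fromstring(ssml)  # parsing confirms the markup is well-formed
print(ssml)
```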

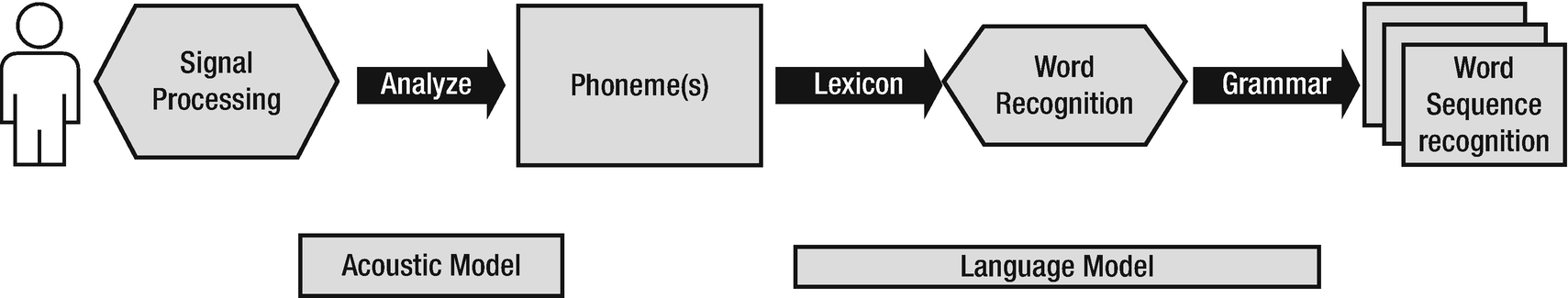

Custom Speech Services

The entire flow of speech recognition

As you can see in Figure 4-7, audio signals are converted into individual sounds, known as phonemes. For example, the word Surabhi is made up of “Su”, “ra”, and “bhi”. These sounds are then mapped to the language model, which is a combination of a lexicon and a grammar. The catalog of a language’s words is called the lexicon, and the system of rules for combining these words is called the grammar. Applying the grammar is important because some words and phrases sound the same but have different meanings (consider “stuffy nose” and “stuff he knows”).
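The role of the language model in resolving sound-alike phrases can be shown with a toy bigram model. In this Python sketch, the training corpus is hypothetical and tiny, and the acoustic score is assumed equal for both candidates, so add-one smoothed bigram probabilities alone pick the more plausible transcription. Real recognizers combine acoustic and language model scores.

```python
import math
from collections import Counter

# Tiny hypothetical training corpus for the language model.
corpus = [
    "i have a stuffy nose and a cough",
    "my child has a stuffy nose",
    "he knows the stuff he knows",
    "a stuffy nose can keep you awake",
]

sentences = [["<s>"] + line.split() + ["</s>"] for line in corpus]
unigrams = Counter(w for sent in sentences for w in sent)
bigrams = Counter((a, b) for sent in sentences for a, b in zip(sent, sent[1:]))
vocab_size = len(unigrams)


def bigram_logprob(prev: str, word: str) -> float:
    """Add-one smoothed log P(word | prev)."""
    return math.log((bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size))


def sentence_score(sentence: str) -> float:
    """Sum of bigram log-probabilities over the whole sentence."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    return sum(bigram_logprob(a, b) for a, b in zip(words, words[1:]))


# Both candidates sound alike; the language model breaks the tie.
candidates = ["i have a stuffy nose", "i have a stuff he knows"]
best = max(candidates, key=sentence_score)
print(best)
```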

Training is the key to successful speech recognition. The Microsoft Speech to Text engine has been trained on an enormous amount of speech data, making it one of the best in class for generic scenarios. Sometimes, however, you need speech recognition in a closed domain or a specific environment, for example, where the recognition engine has to deal with background noise, specific jargon, or uncommon accents. Such scenarios require customizing both the acoustic model and the language model for that environment. The Custom Speech Service enables you to customize your speech recognition system by creating custom language and acoustic models that are specific to your domain, environment, and accents. Once your custom acoustic and language models are created, it also allows you to create your own custom speech-to-text endpoint that suits your specific requirements.

Speaker Recognition

The terms voice recognition and speaker recognition refer to identifying the speaker rather than what they are saying. Recognizing the speaker can simplify the task of translating speech in systems that have been trained on a specific person’s voice. A voice has distinctive features that can be used to help recognize an individual; in fact, each voice has an inimitable mixture of acoustic characteristics that makes it unique. The Speaker Recognition API helps identify the speaker in recorded or live speech audio. To recognize a speaker, the system requires enrollment before verification or identification. Speaker recognition systems have traditionally been built on Gaussian Mixture Models (GMMs). Microsoft’s speaker recognition uses a more recent and advanced approach: an i-vector system based on factor analysis. Speaker recognition can be reliably used as an additional authentication mechanism in security scenarios.

Note

There is a clear distinction between speech recognition and speaker recognition. Speech recognition is the “what” part of the speech, whereas speaker recognition is the “who” part of the speech. In simple terms, speech recognition identifies what has been said. Speaker recognition recognizes who is speaking.

Speaker verification

Speaker identification

Speaker verification, also known as speaker authentication, verifies a claimed identity against a single pattern or record in the repository. The input voice and phrase are matched against the enrollment voice and phrase to determine whether they belong to the same individual. You can think of speaker verification as a 1:1 mapping. Speaker identification, on the other hand, compares the input against all the possible records in the repository and is primarily used for identifying an unknown person from a group of individuals. Speaker identification works on a 1:N mapping. Before the Speaker Recognition API can be used for verification or identification, each speaker goes through a process called enrollment.
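The 1:1 versus 1:N distinction can be sketched with voiceprint vectors and cosine similarity. In this Python example, the enrolled vectors, the sample, and the 0.9 threshold are all hypothetical numbers; a real system would extract vectors such as i-vectors from enrollment audio and calibrate its own threshold.

```python
import math
from typing import Dict, List, Optional

# Hypothetical enrolled voiceprints, one per speaker (made-up numbers).
enrolled: Dict[str, List[float]] = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
    "carol": [0.4, 0.4, 0.9],
}
THRESHOLD = 0.9  # hypothetical acceptance threshold


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def verify(claimed_speaker: str, sample: List[float]) -> bool:
    """Speaker verification (1:1): compare against the claimed profile only."""
    return cosine(enrolled[claimed_speaker], sample) >= THRESHOLD


def identify(sample: List[float]) -> Optional[str]:
    """Speaker identification (1:N): search every enrolled profile."""
    best = max(enrolled, key=lambda name: cosine(enrolled[name], sample))
    return best if cosine(enrolled[best], sample) >= THRESHOLD else None


sample = [0.85, 0.15, 0.35]
print(verify("alice", sample), verify("bob", sample), identify(sample))
```

Returning `None` when no profile clears the threshold lets identification reject unknown speakers instead of always naming the closest match.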

Translator Speech API

The Microsoft Translator Speech API translates speech from one language to another in real time, and the real-time component is the key. Imagine a scenario in which a Chinese-speaking person wants to communicate with a Spanish-speaking person, and neither of them knows a common language. To make it worse and a little more real, assume they are far apart geographically. It would be hard, if not impossible, for them to communicate. The Microsoft Translator Speech API helps break down this language barrier in real time. At the time of writing, it supports 60 languages. It provides both partial and final transcriptions, which can be used to handle various unique scenarios. The Translator Speech API internally uses the Microsoft Translator API, which is also used across various Microsoft products, including Skype, Bing, and the Translator apps, to name a few.

Language

The subcategories of the Language API

Bing Spell Check API

Momento

The Pursuit of Happiness

Kick Ass

A search that relies only on matching the literal text would return a “No movies found” error for each of these queries. These are classic examples of spelling issues. “Momento” is a common misspelling of “Memento”. In the second case, although the words were spelled correctly, the actual movie title is “The Pursuit of Happyness”. In the last case, the movie title is hyphenated as “Kick-Ass” rather than written as two separate words. A system should be able to handle such spelling mistakes; otherwise, you could end up losing some of your loyal customers. Popular search engines such as Bing and Google handle such spell checks with ease.

Bing Spell Check is an online API that not only helps correct spelling errors but also takes into account word breaks, slang, people, places, and even brand names. For each detected error, it provides suggestions in decreasing order of confidence. Bing Spell Check was built on years of research and data, using machine learning models to optimize it. In fact, many applications, including Bing.com, use the Bing Spell Check API to resolve spelling mistakes.
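To illustrate ranked suggestions for the movie queries above, here is a sketch that uses Python's standard-library `difflib` string similarity against a hypothetical movie catalog. This is a swapped-in, much simpler technique than Bing Spell Check's models; it only shows the idea of returning candidates best match first.

```python
import difflib
from typing import List

# Hypothetical catalog of correctly spelled movie titles.
catalog = ["Memento", "The Pursuit of Happyness", "Kick-Ass", "Inception", "Interstellar"]


def suggest(query: str, n: int = 3) -> List[str]:
    """Return catalog titles similar to the query, best match first."""
    return difflib.get_close_matches(query, catalog, n=n, cutoff=0.6)


for query in ["Momento", "The Pursuit of Happiness", "Kick Ass"]:
    print(query, "->", suggest(query))
```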

Language Understanding Intelligent Service (LUIS)

“Hi”

“Howdy”

“Hello”

“Hey”

NLU analyzes each sentence for two things—intent and entity.

As Figure 4-9 shows, NLU analyzes each sentence for two things: intent (the meaning or intended action) and entities. In the example, finding the nearest hospital is the detected intent and heart is the entity. A user may ask the same question in a hundred different ways, and LUIS is designed to extract the correct intent and entities from each variation. The software can then use this extracted information to query an online API and show users the information they requested.

LUIS provides prebuilt entities. If you want to create a new one, you can easily create a simple, composite, or even hierarchical entity.

To create a LUIS application, visit https://luis.ai, create an application, add the intents and entities, train it, and then publish the app to get a RESTful API that various applications can use. As with any machine learning based application, training is the key for LUIS. In fact, LUIS is based on active machine learning, which means it can continue to be trained. We discuss LUIS more in coming chapters.
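Once the app is published, your code mainly reads the top-scoring intent and the entities from the JSON that comes back. The Python sketch below parses a hypothetical response modeled on the LUIS v2 prediction format (`topScoringIntent` plus `entities`); the intent name, entity type, and the 0.5 confidence cutoff are made up for illustration, so verify the response shape against the current documentation.

```python
import json
from typing import Dict, Tuple

# Hypothetical response modeled on the LUIS v2 prediction format.
raw = """
{
  "query": "where is the nearest hospital for heart problems",
  "topScoringIntent": {"intent": "FindNearestHospital", "score": 0.94},
  "entities": [
    {"entity": "heart", "type": "Specialty", "startIndex": 34, "endIndex": 38, "score": 0.88}
  ]
}
"""

MIN_INTENT_SCORE = 0.5  # hypothetical confidence cutoff


def interpret(response_text: str) -> Tuple[str, Dict[str, str]]:
    """Return the top intent and a {type: entity text} map, or 'None' if unsure."""
    result = json.loads(response_text)
    top = result["topScoringIntent"]
    if top["score"] < MIN_INTENT_SCORE:
        return "None", {}
    entities = {e["type"]: e["entity"] for e in result.get("entities", [])}
    return top["intent"], entities


intent, entities = interpret(raw)
print(intent, entities)
```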

Linguistic Analysis

The Linguistic Analysis API helps in parsing text for granular linguistic analysis, such as sentence separation and tokenization (breaking the text into sentences and tokens) and part-of-speech tagging. This eventually helps in understanding the meaning and the context of the text. Linguistic analysis has been used as a preliminary step in many NLP tasks, including NLU, TTS, and machine translation, to name a few.
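Sentence separation and tokenization can be approximated with regular expressions, as in the Python sketch below. This is a naive stand-in, not how the Linguistic Analysis API works: it would wrongly split after abbreviations such as "Mr.", which is exactly the kind of case a trained analyzer handles better.

```python
import re
from typing import List

# Naive rules: a sentence ends at ., ! or ? followed by whitespace;
# a token is a word (optionally with an apostrophe part) or one punctuation mark.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")
TOKEN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def split_sentences(text: str) -> List[str]:
    return [s for s in SENTENCE_END.split(text.strip()) if s]


def tokenize(sentence: str) -> List[str]:
    return TOKEN.findall(sentence)


text = "I can't miss the final! Send me a reminder by 4 PM."
sentences = split_sentences(text)
for sentence in sentences:
    print(tokenize(sentence))
```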

Text Analytics

Machine learning uses historical data to identify patterns; the more data there is, the easier it is to find those patterns. The rise of IoT and new devices fuels this evolution. By one widely cited estimate, 90% of all data was generated in the last two years, and around 80% of it is unstructured. Text Analytics helps make sense of unstructured text by detecting sentiment (positive or negative), key phrases, topics, and the language of your text. Consider an online retail bot. As a global bot, it can expect users from various geographies and queries in many languages. Language detection is one of the classic problems the Microsoft Text Analytics API solves. Other areas where the Text Analytics API can be used include sentiment analysis, automatic generation of meeting minutes, call record analysis, etc.
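To show what language detection involves at its simplest, the Python sketch below counts matches against small stopword lists and picks the language with the most hits. The stopword lists are hypothetical and tiny; production detectors such as the one in Text Analytics use far richer statistical models and handle short or mixed-language text much better.

```python
import re

# Hypothetical, tiny stopword lists per language.
STOPWORDS = {
    "en": {"the", "and", "is", "of", "to", "in", "my", "where"},
    "es": {"el", "la", "y", "es", "de", "en", "mi", "dónde"},
    "fr": {"le", "la", "et", "est", "de", "en", "mon", "où"},
}


def detect_language(text: str) -> str:
    """Pick the language whose stopwords occur most often in the text."""
    words = re.findall(r"\w+", text.lower())
    scores = {lang: sum(w in stop for w in words) for lang, stop in STOPWORDS.items()}
    best = max(scores, key=lambda lang: scores[lang])
    return best if scores[best] > 0 else "unknown"


for query in ["Where is my order and the invoice?", "¿Dónde está mi pedido y la factura?"]:
    print(query, "->", detect_language(query))
```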

Translator Text API

The Microsoft Translator API detects the language of a given text and translates it from one language to another. Internally, it uses Microsoft’s machine learning based translation engine, which has been trained on tens of thousands of sentences. It learns patterns and the rules of each language and uses them to translate text. Microsoft uses the Translator Text API internally in its applications wherever multilanguage support is required. You can also build your own customized translation system on top of the existing one, suited to specific domains and environments.

Web Language Model API

The success of a language model depends heavily on the data used for training. The Web contains an enormous amount of textual data. Although this data has several anomalies, such as grammatical errors, slang, profanity, and lack of punctuation, it can help in understanding patterns, predicting words, or even breaking a run of text without spaces into words. The Web Language Model (WebLM) is a language model created from this web data.

WebLM supports a variety of natural language processing tasks not covered by the other Language APIs: word breaking (inserting spaces into a string of words lacking spaces), joint probabilities (calculating how often a particular sequence of words appears together), conditional probabilities (calculating how often a particular word tends to follow another), and next-word completions (getting the list of words most likely to follow).
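Three of these tasks can be sketched with n-gram counts. In the Python example below, a tiny hypothetical corpus stands in for web-scale text: word breaking uses dynamic programming to pick the most probable segmentation under unigram probabilities, conditional probability is estimated from bigram and unigram counts, and next-word completion ranks followers by bigram count. The penalty for unseen strings is an arbitrary assumption, and none of this is WebLM's actual implementation.

```python
import math
from collections import Counter
from functools import lru_cache
from typing import List, Tuple

# Tiny hypothetical corpus standing in for web-scale text.
corpus = ("the web is big the web is noisy "
          "the web language model learns from the web").split()
unigrams = Counter(corpus)
bigrams = Counter(zip(corpus, corpus[1:]))
total = sum(unigrams.values())


def word_logprob(word: str) -> float:
    """Log unigram probability; unseen strings get a length-penalized floor."""
    if word in unigrams:
        return math.log(unigrams[word] / total)
    return math.log(1.0 / (total * 10 ** len(word)))


def break_words(text: str) -> List[str]:
    """Insert spaces by choosing the most probable segmentation."""

    @lru_cache(maxsize=None)
    def best(i: int) -> Tuple[float, Tuple[str, ...]]:
        # Best (log-probability, words) for the suffix text[i:].
        if i == len(text):
            return 0.0, ()
        options = []
        for j in range(i + 1, len(text) + 1):
            rest_score, rest_words = best(j)
            options.append((word_logprob(text[i:j]) + rest_score, (text[i:j],) + rest_words))
        return max(options)

    return list(best(0)[1])


def conditional_prob(prev: str, word: str) -> float:
    """Estimate P(word | prev) from bigram and unigram counts."""
    return bigrams[(prev, word)] / unigrams[prev] if unigrams[prev] else 0.0


def next_words(prev: str, k: int = 3) -> List[str]:
    """Words most likely to follow `prev`, ranked by bigram count."""
    followers = Counter({b: c for (a, b), c in bigrams.items() if a == prev})
    return [w for w, _ in followers.most_common(k)]


print(break_words("thewebisbig"))
print(conditional_prob("web", "is"))
print(next_words("web"))
```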

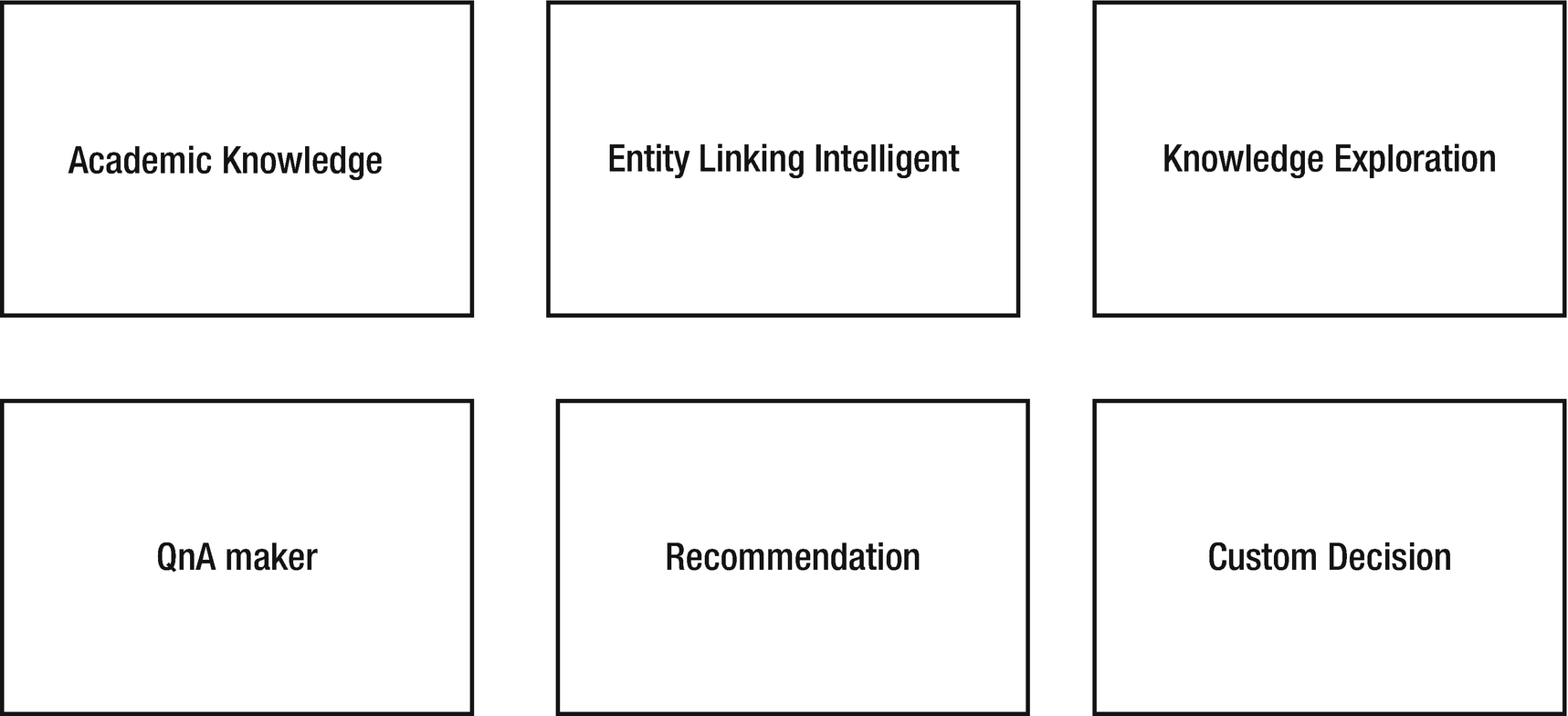

Knowledge

The subcategories of the Knowledge service

Academic Knowledge API

As the name implies, the Academic Knowledge API allows you to retrieve information from Microsoft Academic Graph, a proprietary knowledgebase of scientific/scholarly research papers and their entities. Using this API, you can easily find papers by authors, institutes, events, etc. It is also possible to find similar papers, check for plagiarism, and retrieve citation stats. It allows you to interpret and evaluate knowledge entity results. It also provides an option to calculate the histogram of the distribution of academic entities, thereby giving you a rich semantic experience by understanding the intent and context of the query being searched.

QnA Maker

QnA Maker creates FAQ-style questions and answers from the data you provide, which can include files or links. QnA Maker combines a website and an API. Use the website to create a knowledgebase from your existing FAQ website, PDF, DOC, or text file. QnA Maker automatically extracts questions and answers from your documents and trains itself to answer natural language user queries based on your data. You can think of it as an automated version of LUIS: you do not have to train the system, but you do get an option to retrain it. The QnA Maker API is the endpoint that accepts user queries and returns answers from your knowledgebase. Optionally, QnA Maker can be combined with Microsoft’s Bot Framework to create out-of-the-box bots for Facebook, Skype, Slack, and more.
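The core job of a FAQ bot, matching a user query to the closest stored question, can be sketched with word-set overlap (Jaccard similarity). This Python example uses a hypothetical three-entry FAQ and a hypothetical 0.2 cutoff; it is a deliberately simple stand-in for QnA Maker's ranking, which also copes with paraphrases that share few words.

```python
import re
from typing import Optional, Set

# Hypothetical FAQ knowledgebase.
faq = {
    "How do I reset my password?": "Use the 'Forgot password' link on the sign-in page.",
    "What are your opening hours?": "We are open 9 AM to 6 PM, Monday to Friday.",
    "How can I track my order?": "Open 'My orders' and select the order to see its status.",
}


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def answer(query: str, min_overlap: float = 0.2) -> Optional[str]:
    """Return the answer whose question best overlaps the query, if any."""
    q = tokens(query)

    def jaccard(question: str) -> float:
        t = tokens(question)
        return len(q & t) / len(q | t)

    best = max(faq, key=jaccard)
    return faq[best] if jaccard(best) >= min_overlap else None


print(answer("I forgot how to reset my password"))
print(answer("When do you open?"))  # word overlap misses "open" vs "opening"
```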

Entity Linking Intelligence Service

The use of the word “crane” in three different contexts

In these sentences, “crane” is being used to denote a bird, a large lift, or even the movement of a body. It requires considering the context of the sentence to identify the real meaning of the words being used. The Entity Linking Intelligence service allows you to determine keywords (named entities, events, locations, etc.) in the text based on its context.
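A classic way to use context for disambiguation is the simplified Lesk algorithm: pick the sense whose description shares the most words with the sentence. The Python sketch below applies it to "crane" with hypothetical sense glosses and a small hand-picked stopword list; it illustrates the idea of context-based linking, not how the Entity Linking Intelligence Service is implemented.

```python
import re
from typing import Set

# Hypothetical sense descriptions (glosses) for "crane".
SENSES = {
    "bird": "a large long-necked wading bird with long legs that lives near water and wetlands",
    "machine": "a tall machine used on construction sites to lift and move heavy loads",
    "movement": "to stretch out your neck or body in order to see something better",
}
STOP = {"a", "an", "the", "to", "and", "of", "on", "in", "with", "that",
        "its", "your", "i", "my", "had", "can", "or", "out", "for"}


def content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP}


def disambiguate(sentence: str, target: str = "crane") -> str:
    """Simplified Lesk: choose the sense with the largest word overlap."""
    context = content_words(sentence) - {target}
    return max(SENSES, key=lambda sense: len(context & content_words(SENSES[sense])))


for s in ["The crane stood in the water on its long legs.",
          "The crane on the construction site can lift heavy steel beams.",
          "I had to crane my neck to see the stage."]:
    print(disambiguate(s), "<-", s)
```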

Knowledge Exploration Service

The Knowledge Exploration Service (KES) adds support for natural language queries, auto-completion, search suggestions, and more over your own data. You can make your search more interactive with fast and effective autosuggestion.

Recommendation API

YouTube uses a recommender system to recommend videos

Netflix uses a recommender system to recommend interesting videos/stories

Amazon is not just using recommendations for showing product results but also for recommending products to sell to their end users

Google is not just using recommendation systems to rank web links but is also suggesting web and news links for you

News sites like The New York Times are providing recommendations for news you should read

Many successful companies continue to invest in, research, and reinvent their recommendation systems to provide more personalized experiences to their end users. Creating a recommendation system is not easy: it requires deep expertise in data, strong research and analytics teams, and of course lots of money.

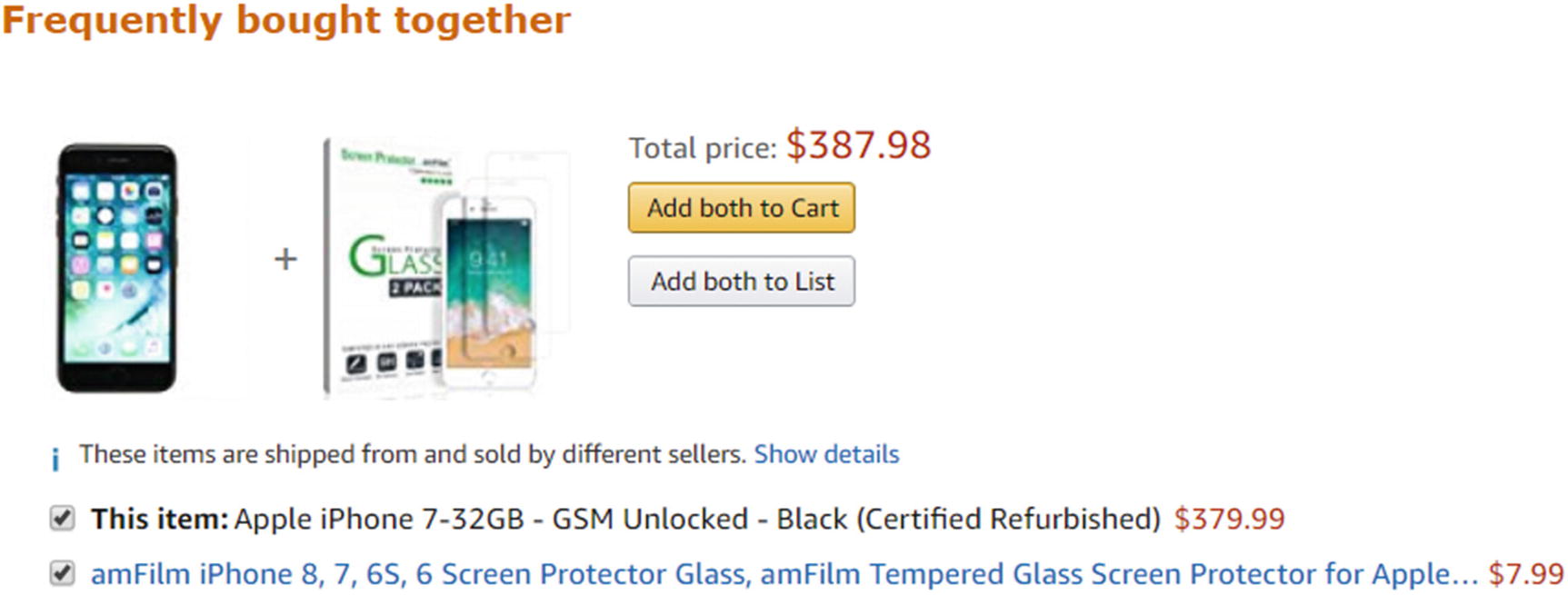

There are various types of recommendation systems available in the market. The Microsoft Recommendations API deals with transaction-based recommendations. This is particularly useful for retail stores, both online and offline, helping them increase sales by offering customers recommendations such as items that are frequently bought together or personalized item recommendations based on their transaction history. As with QnA Maker, you use the Recommendations UI website to upload your existing product catalog and usage data.

Frequently bought together (FBT)

Item to item recommendations

Recommendations based on past user activity

The FBT use case while buying an iPhone
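The frequently bought together idea can be sketched by counting how often pairs of items appear in the same basket. The transaction history in this Python example is hypothetical, and raw co-occurrence counts are a simplification; a production recommender would typically normalize for item popularity.

```python
from collections import Counter
from itertools import combinations
from typing import List

# Hypothetical transaction history: each set is one purchase.
transactions = [
    {"iPhone", "case", "screen protector"},
    {"iPhone", "case", "charger"},
    {"iPhone", "screen protector"},
    {"laptop", "mouse"},
    {"iPhone", "case"},
]

# Count every unordered pair of items bought in the same basket.
pair_counts: Counter = Counter()
for basket in transactions:
    for a, b in combinations(sorted(basket), 2):
        pair_counts[(a, b)] += 1


def frequently_bought_together(item: str, k: int = 2) -> List[str]:
    """Items that most often co-occur with `item`, most frequent first."""
    scores: Counter = Counter()
    for (a, b), count in pair_counts.items():
        if a == item:
            scores[b] += count
        elif b == item:
            scores[a] += count
    return [other for other, _ in scores.most_common(k)]


print(frequently_bought_together("iPhone"))
```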

Custom Decision Service

Flying drones that do not require a human to operate them use reinforcement learning to learn from a dynamic and challenging environment and improve their skills over time

You can use Custom Decision Service in pool or application-specific mode. Pool mode uses a single model for all applications and is suited for low traffic applications. Applications that have heavy traffic require application-specific mode.
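To give a feel for learning decisions from feedback, here is an epsilon-greedy multi-armed bandit in Python, one of the simplest reinforcement-learning-style strategies. It is a swapped-in illustration rather than the algorithm behind Custom Decision Service, and the article categories and click-through rates are hypothetical. The agent mostly shows the category with the best observed reward but occasionally explores the others.

```python
import random
from typing import Dict, Iterable


class EpsilonGreedy:
    """Epsilon-greedy bandit: explore with probability epsilon, else exploit."""

    def __init__(self, actions: Iterable[str], epsilon: float = 0.1, seed: int = 0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {a: 0 for a in self.actions}
        self.values: Dict[str, float] = {a: 0.0 for a in self.actions}  # mean reward

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore a random action
        return max(self.actions, key=lambda a: self.values[a])  # exploit

    def update(self, action: str, reward: float) -> None:
        # Incremental running mean of observed rewards for this action.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Hypothetical click-through rates per category, unknown to the learner.
true_ctr = {"sports": 0.2, "politics": 0.05, "tech": 0.4}
environment = random.Random(42)
agent = EpsilonGreedy(true_ctr, epsilon=0.1, seed=1)

for _ in range(5000):
    article = agent.choose()
    clicked = environment.random() < true_ctr[article]
    agent.update(article, 1.0 if clicked else 0.0)

best = max(agent.values, key=lambda a: agent.values[a])
print(best, {a: round(v, 2) for a, v in agent.values.items()})
```

By analogy only, pool mode resembles sharing one such learner across several low-traffic applications, while application-specific mode gives each busy application its own learner and its own feedback.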

Search

Search is ubiquitous: on the desktop, on mobile, on the web, and even in the routine activities of our daily lives. According to one recent report, 50 billion connected devices are expected by the end of 2020, and each of them requires connected search. Microsoft has made great progress in search by bringing Bing to the platform. Microsoft search, which is based on Bing, provides an alternative to Google search; one of the missions of the Bing Search API was to challenge Google’s dominance and offer an alternative. Bing is currently the second-largest search engine after Google. The Bing Search APIs allow you to bring the capabilities of Microsoft Bing into your enterprise application with a few lines of code.

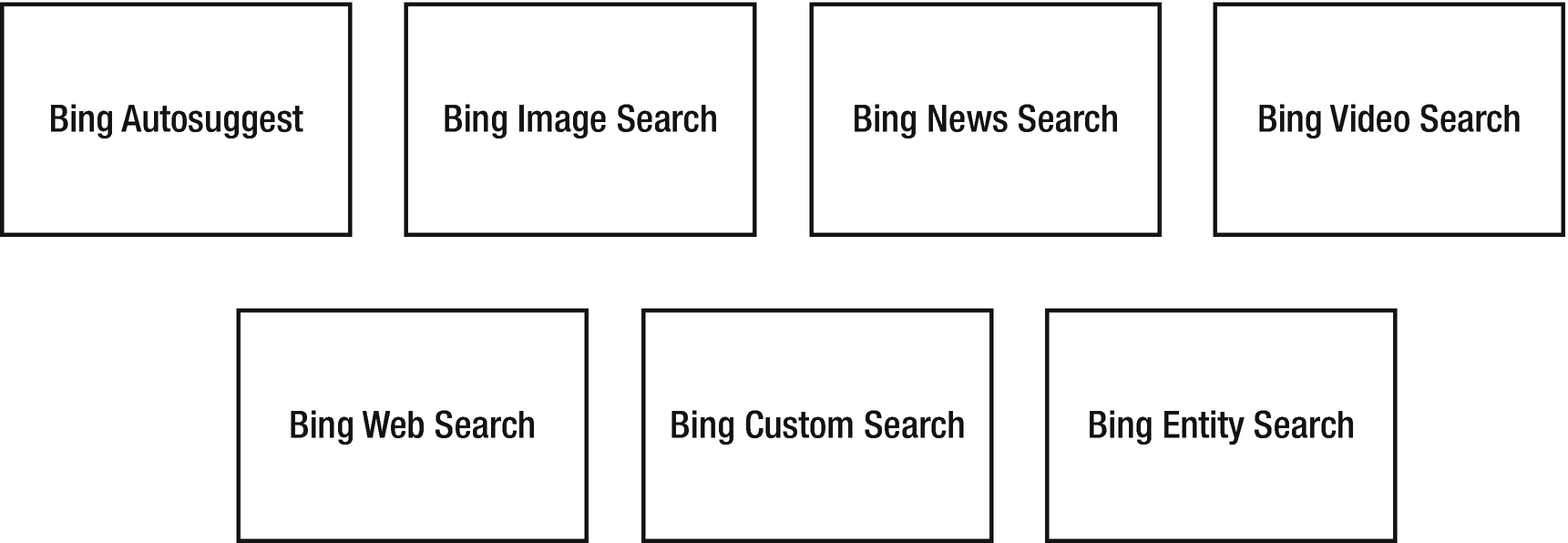

The subcategories of Search

Bing Autosuggest API

As the name suggests, the Bing Autosuggest API provides intelligent type-ahead and search suggestions, directly from Bing search, while a user is typing in a search box. As the user types, your application calls the API to show a list of options for the user to select. With each character entered, the Bing Autosuggest API returns relevant suggestions. Internally, this is achieved by passing partial search queries to Bing, and the suggestions combine the context of the query with what other users have searched for in the past. All Bing APIs, including Bing Autosuggest, let you customize search results using query parameters and headers passed when consuming the APIs.
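The "what other users have searched for" part of autosuggest can be sketched as prefix matching over a log of past queries, ranked by popularity. In this Python example, the query log is hypothetical and the sorted list plus `bisect` lookup is just one simple way to find prefix matches; Bing's actual suggestion pipeline is far more sophisticated.

```python
import bisect
from collections import Counter
from typing import List

# Hypothetical log of past queries from other users.
query_log = ["microsoft teams", "microsoft store", "microsoft teams", "microwave oven",
             "microsoft search", "microsoft teams download", "mickey mouse"]
popularity = Counter(query_log)
sorted_queries = sorted(popularity)  # unique queries in alphabetical order


def autosuggest(prefix: str, k: int = 3) -> List[str]:
    """Return up to k past queries starting with prefix, most popular first."""
    prefix = prefix.lower()
    start = bisect.bisect_left(sorted_queries, prefix)  # first candidate >= prefix
    matches = []
    for query in sorted_queries[start:]:
        if not query.startswith(prefix):
            break  # sorted order means no later query can match
        matches.append(query)
    return sorted(matches, key=lambda q: (-popularity[q], q))[:k]


for typed in ["mic", "micro", "microsoft t"]:
    print(typed, "->", autosuggest(typed))
```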

Bing Image Search API

Images are more expressive than textual content. The Bing Image Search API gives developers an experience analogous to Bing.com/images in their applications. Note that you should use the Bing Image Search API only when you want results that are just images. It lets you return images based on filters such as keywords, color, country, size, license, etc. As an added bonus, the Bing Image Search API breaks the query into various segments and provides suggestions to narrow down the search. For example, if you search for “Microsoft search,” Bing smartly suggests options like “Microsoft Desktop search,” “Microsoft Windows Search,” and “Microsoft Search 4.0”. You can choose one of these query expansions and get its search results as well. Overall, the Bing Image Search API provides an extensive set of properties and query parameters for customizing image search results.

Bing News Search API

The Bing News Search API acts as a news aggregator that aggregates, consolidates, and categorizes news from thousands of newspapers and news sources across the world. You can narrow down the news results based on filters such as keywords, freshness, country, etc. This is the same capability available through Bing.com/news. At a high level, the Bing News Search API lets you get top news articles and headlines for specific categories, return news articles based on the user’s search, or return news topics that are trending on social media.

Bing Video Search API

The Bing Video Search API lets you get videos based on various filters, such as keywords, resolution, video length, country, etc., get more insights about a particular video, or return videos that are trending on social media. It provides immersive video searching capabilities and is the easiest way to create a video-based portal experience with just a few API calls. Like Bing Image Search, the Bing Video Search API has free and paid tiers.

Bing Web Search API

By now, you have a fair idea of the Bing API services. Each of these APIs returns a specific kind of information: for example, you use the Bing Image Search API if you only need image results and the News Search API if you need news results. In many scenarios, however, you need a combination of results, such as images and videos together in one result set. This is similar to Bing.com/search, where you get results that include images, videos, and more. The Bing Web Search API addresses these scenarios.

Bing Custom Search

Bing Custom Search lets you search based on custom intents and topics. Instead of searching the entire web, Bing searches websites relevant to your topics. It can also be used to implement site-specific search on a single website or a specified set of websites. Internally, custom search first identifies on-topic sites, applies Bing ranking, and returns the results. You can also adjust the results by boosting, pinning, demoting, or even blocking sites. To customize the view and use custom search, visit https://www.customsearch.ai/. Once you have created the instance, adjusted it as needed, and are satisfied with the results, use the Custom Search API to consume it from any platform.
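The effect of the four adjustments on a ranked result list can be sketched locally. In this Python example, `adjust_ranking` and the site names are hypothetical, and the rules are a simplification (blocked sites are removed, boosted sites move ahead of unchanged ones, demoted sites move behind them, and one pinned site goes first); the real service applies these adjustments within Bing's own ranking.

```python
from typing import Iterable, List, Optional


def adjust_ranking(
    results: List[str],
    pin: Optional[str] = None,
    boost: Iterable[str] = (),
    demote: Iterable[str] = (),
    block: Iterable[str] = (),
) -> List[str]:
    """Apply block, boost, demote, and pin adjustments to a ranked site list."""
    boosted, demoted, blocked = set(boost), set(demote), set(block)
    kept = [site for site in results if site not in blocked]

    def bucket(site: str) -> int:
        # 0 = boosted, 1 = unchanged, 2 = demoted; sorted() is stable,
        # so the original order is preserved within each bucket.
        if site in boosted:
            return 0
        if site in demoted:
            return 2
        return 1

    ranked = sorted(kept, key=bucket)
    if pin is not None and pin in ranked:
        ranked.remove(pin)
        ranked.insert(0, pin)  # a pinned site always appears first
    return ranked


original = ["a.example", "b.example", "c.example", "d.example", "e.example"]
print(adjust_ranking(original, pin="e.example", boost={"c.example"},
                     demote={"a.example"}, block={"d.example"}))
```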

Bing Entity Search API

The Bing Entity Search API returns search results that include entities (people and things) and places (movie theaters, hotels, local businesses, etc.). This provides an immersive experience for the end user by surfacing primary details about the entity being searched. For example, if you search for a famous person, you typically get a wiki link and a brief description of the person.

Most Cognitive Services APIs are available in free and pay-as-you-go pricing tiers, and you can choose a tier based on your application’s usage volume. Although we would love to cover each of these in great detail, we are limited by the scope of this book. We will cover enough services from the speech, language, and search categories to launch you into building smart applications in little time.

You can learn more about these services (and possibly more that may have been added recently) by visiting www.microsoft.com/cognitive-services/en-us/apis .

Recap

This chapter served as an introduction to Cognitive Services. As you understand by now, these APIs give a tremendous boost to solving complex problems that were previously out of reach. Another benefit of this API-driven model is that it abstracts away the thousands of processors and VMs running behind the API. With the rise of technologies like IoT and sensors, there are unbelievable opportunities to automate and analyze data. You also learned what cognitive systems are and how they differ from the traditional programming model. Finally, the chapter gave a quick overview of Microsoft’s commercial AI offerings in the form of its Cognitive Services REST APIs.

In the next chapter, you learn to install all the prerequisites for building AI-enabled software and build your first cognitive application using Visual Studio.