Use of Artefacts 103

CONSEQUENCES OF TECHNOLOGY CHANGE

Since technology is not value neutral, it is necessary to consider in further

detail what the consequences of changes may be and how they can be

anticipated and taken into account. The introduction of new or improved

artefacts means that new tasks will appear and that old tasks will change or

even disappear. Indeed, the purpose of design is partly to accommodate such

changes by shaping the interface, by instructions and training, etc. In

some cases the changes are palpable and immediate. In many other cases the

changes are less obvious and only occur after a while. This is because it takes

time for people to adapt to the peculiarities of a new artefact and a changed

system, such as in task tailoring. From a cybernetic perspective, humans and

machines gradually develop an equilibrium where demands and resources

match reasonably well. This equilibrium is partly the result of system

tailoring, partly of task tailoring (Cook & Woods, 1996). Technically

speaking an equilibrium is said to exist when a state and a transformation are

so related that the transformation does not cause the state to change (Ashby,

1956, pp. 73-74). From this point of view technology is value neutral only if

the new artefact does not disturb the established equilibrium.
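
Ashby's definition can be illustrated with a minimal sketch. The states "a" to "d" and both transformations below are hypothetical and chosen only for illustration; they are not taken from Ashby or from any real system. A state is an equilibrium when the transformation maps it onto itself, and a change to the system alters the transformation and may thereby move the equilibrium.

```python
def equilibria(transformation):
    """Return the states that the transformation maps onto themselves."""
    return sorted(s for s, nxt in transformation.items() if nxt == s)


def settle(transformation, state, max_steps=100):
    """Apply the transformation repeatedly until the state stops changing.

    Returns the equilibrium reached, or None if none is reached within
    max_steps (for instance because the states form a cycle).
    """
    for _ in range(max_steps):
        nxt = transformation[state]
        if nxt == state:
            return state
        state = nxt
    return None


# Hypothetical transformation for the established system (assumption).
old_system = {"a": "b", "b": "c", "c": "c", "d": "a"}

# Hypothetical transformation after a new artefact is introduced (assumption).
new_system = {"a": "b", "b": "d", "c": "b", "d": "d"}

print(equilibria(old_system))   # equilibrium states before the change
print(equilibria(new_system))   # equilibrium states after the change
print(settle(new_system, "c"))  # where the former equilibrium state ends up
```

In this toy case the former equilibrium state is no longer stable under the new transformation, and the system settles at a different state.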

One effect of the adjustment and tailoring that makes a system work is

that some aspects come to be seen as canonical while others are seen as

exceptional. This means that users come to expect that the system behaves in

a specific manner and works in a specific way, i.e., that a range of behaviours

are normal. Similarly, there will be a range of infrequent (but actual)

behaviours that are seen as abnormal or exceptional, but which people

nevertheless come to recognise, and which they therefore are able to handle

in some way.

When a system is changed, the canonical and exceptional performances

also change. The canonical performance comprises the intended functions

and uses, which by and large can be anticipated – indeed that is what design

is all about. But the exceptional performance is, by definition, not

anticipated, hence will be new to the user. Representative samples of

exceptional performance may furthermore take a long time to appear, since by

definition they are infrequent and unusual. After a given time interval – say

six months – people will have developed a new understanding of what is

canonical and what is exceptional. The understanding of canonical

performance will by and large be correct because this has occurred frequently

enough. There is, however, no guarantee that the understanding of

exceptional performance will also be correct.

The introduction of a new artefact can be seen as a disruption of an

equilibrium that has developed over a period of time, provided that the

system has been left to itself, i.e., that no changes have been made. How long

this time is depends on a number of things such as the complexity of the

104 Joint Cognitive Systems

system, the frequency of use, the ease of use, etc. After the disruption from a

change, the system will eventually reach a new equilibrium, which however

may differ somewhat from the former. It is important to take this transition

into account, and to be able to anticipate it. Specifically, if only one part of

the system is changed (e.g., the direct functions associated with the artefact) but

not others, such as the procedures, rules or the conditions for exceptions, then

the transition will create problems since the system in a sense is not ready for

the changed conditions.

Specifically, the manifestations of failures – or failure modes – will

change. The failure modes are the systematic ways in which incorrect

functions or events manifest themselves, such as the time delays in a

response. New failure modes may also occur, although new failure types are

rare. An example is when air traffic management changes from the use of

paper flight strips to electronic flight strips. With paper flight strips one strip

may get lost, or the order (sequence) of several may inadvertently be

changed. With electronic strips, i.e., the flight strips represented on a

computer screen rather than on paper, either failure mode becomes

impossible. On the other hand, all the strips may become lost at the same

time due to a software glitch, or it may be more difficult to keep track of

them.

Finally, since the structure of the system changes, so will the failure

pathways, i.e., the ways in which unwanted combinations of influences can

occur. Failures are, according to the contemporary view, not the direct

consequence of causes as assumed by the sequential accident model, but

rather the outcome of coincidences that result from the natural variability of

human and system performance (Hollnagel, 2004). If failures are seen as the

consequence of haphazard combinations of conditions and events, it follows

that any change to a system will lead to possible new coincidences.

Traffic Safety

An excellent example of how the substitution principle fails can be found in

the domain of traffic safety. Simply put, the assumption is that traffic safety

can be improved by providing cars with better brakes. The argument is

probably that if the braking capability of a vehicle is improved, and if the

drivers continue to drive as before, then there will be fewer collisions, hence

increased safety. The false assumption is, of course, that the drivers will

continue as before. The fact of the matter is that drivers will notice the

change and that this will affect their way of driving, specifically that they will

feel safer and therefore drive faster or with less separation from the car in front.

This issue has been debated in relation to the introduction of ABS

systems (anti-lock brakes), and has been explained or expounded in

terms of risk homeostasis (e.g., Wilde, 1982). It is, however, interesting to

note that a similar discussion took place as early as 1938, long before notions

of homeostasis had become common. At that time the discussion was

couched in terms of field theory (Lewin, 1936; 1951) and was presented in a

seminal paper by Gibson & Crook (1938).

Gibson & Crook proposed that driving could be characterised by means

of two different fields. The first, called the field of safe travel, consisted at

any given moment of “the field of possible paths which the car may take

unimpeded” (p. 454). In the language of Kurt Lewin’s Field Theory, the field

of safe travel had a positive valence, while objects or features with a negative

valence determined the boundaries. The field of safe travel was spatial and

ever changing relative to the movements of the car, rather than fixed in space.

Steering was accordingly defined as “a perceptually governed series of

reactions by the driver of such a sort as to keep the car headed into the middle

of the field of safe travel” (p. 456).

Gibson & Crook further assumed that within the field of safe travel there

was another field, called the minimum stopping zone, defined by the

minimum braking distance needed to bring the car to a halt. The minimum

stopping zone would therefore be determined by the speed of the car, as well

as other conditions such as road surface, weather, etc.

It was further assumed that drivers habitually would tend to maintain a

fixed relation between the field of safe travel and the minimum stopping

zone, referred to as the field-zone ratio. If the field of safe travel were

reduced in some way, for instance by increasing traffic density, then the

minimum stopping zone would also be reduced by a deceleration of the car.

The opposite would happen if the field of safe travel was enlarged, for

instance if the car came onto an empty highway. The field-zone ratio was

also assumed to be affected by the driver’s state or priorities, e.g., it would

decrease when the driver was in a hurry.

Returning to the above example of improving the brakes of the car,

Gibson & Crook made the following cogent observation:

Except for emergencies, more efficient brakes on an automobile will

not in themselves make driving the automobile any safer (sic!). Better

brakes will reduce the absolute size of the minimum stopping zone, it

is true, but the driver soon learns this new zone and, since it is his

field-zone ratio which remains constant, he allows only the same

relative margin between field and zone as before. (Gibson & Crook,

1938, p. 458)

The net effect of better brakes is therefore that the driver increases the

speed of the car until the familiar field-zone ratio is re-established. In other

words, the effect of making a technological change is that the driver’s

behaviour changes accordingly. In modern language, the field-zone ratio

corresponds to the notion of risk homeostasis, since it expresses a constant

risk. This is thus a nice illustration of the general consequences of

technological change, although a very early one.
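
The argument can be made concrete with a small worked sketch. It rests on assumptions that are not in Gibson & Crook (1938): the field of safe travel is reduced to a single length in metres, the minimum stopping zone is taken as the braking distance under constant deceleration (v squared over 2a, ignoring reaction time), and all numerical values are invented for illustration.

```python
import math


def stopping_zone(speed, deceleration):
    """Minimum braking distance in metres, assuming constant deceleration
    and no reaction time (a simplifying assumption)."""
    return speed ** 2 / (2.0 * deceleration)


def field_zone_ratio(field_length, speed, deceleration):
    """Ratio between the field of safe travel and the minimum stopping zone."""
    return field_length / stopping_zone(speed, deceleration)


def speed_for_ratio(field_length, ratio, deceleration):
    """Speed at which a given field-zone ratio is obtained."""
    return math.sqrt(2.0 * deceleration * field_length / ratio)


# Illustrative values only (assumptions, not data from the source).
field = 100.0      # length of the field of safe travel, m
old_brakes = 6.0   # achievable deceleration with the old brakes, m/s^2
new_brakes = 8.0   # achievable deceleration with the better brakes, m/s^2
speed = 20.0       # habitual speed with the old brakes, m/s

# The ratio the driver is used to maintaining.
habitual = field_zone_ratio(field, speed, old_brakes)

# With better brakes, the driver speeds up until the same ratio returns.
adapted_speed = speed_for_ratio(field, habitual, new_brakes)

print(f"habitual field-zone ratio: {habitual:.2f}")
print(f"speed with old brakes: {speed:.1f} m/s")
print(f"speed with new brakes: {adapted_speed:.1f} m/s")
```

Under these assumptions the adapted speed is the old speed multiplied by the square root of the ratio of the two decelerations, so the relative margin is unchanged even though the brakes are better.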

Typical User Responses to New Artefacts

As part of designing an artefact it is important to anticipate how people

will respond to it. By this we do not mean how they will try to use it, in

accordance with the interface design or the instructions, i.e., the explicit and

intentional relations, but rather the implicit relations, those that are generic

for most kinds of artefacts rather than specific to individual artefacts. The

generic reactions are important because they often remain hidden or obscure.

These reactions are rarely considered in the design of the system, although

they ought to be.

Prime among the generic reactions is the tailoring that takes place (Cook

& Woods, 1996). One type is the system tailoring, by means of which users

accommodate new functions and structure interfaces. This can also be seen as

a reconfiguration or clarification of the interface in order to make it obvious

what should be done, to clearly identify measures and movements, and to put

controls in the right place. In many sophisticated products the possibility of

system tailoring is anticipated and allowance is made for considerable

changes. One trivial example is commercial office software, which allows

changes to menus and icons, and a general reconfiguration or restructuring of

the interface (using the desk top metaphor). Another example is the modern

car, which allows for minor adjustments of seats, mirrors, steering wheel, and

in some cases even pedals. In more advanced versions this adjustment is done

automatically, i.e., the system carries out the tailoring or rather adapts to the

user. This can be done in the case of cars because the frame of reference is

the anthropometrical characteristics of the driver. In the case of software it

would be more difficult to do since the frame of reference is the subjective

preferences or cognitive characteristics, something that is far harder to

measure.

In many other cases the system tailoring is unplanned, and therefore not

done without effort. (Note that even in the case of planned tailoring, it is only

possible to do that within the limits of the built-in flexibility of the system.

Thus a driver cannot change from driving on the right side to driving on the

left side of the car – although Buckminster Fuller’s Dymaxion car from 1933

allowed for that.)

The reason for unplanned tailoring is often that the artefact is incomplete

or inadequate in some ways; i.e., that it has not been designed as well as it

should have. System tailoring can compensate for minor malfunctions, bugs,

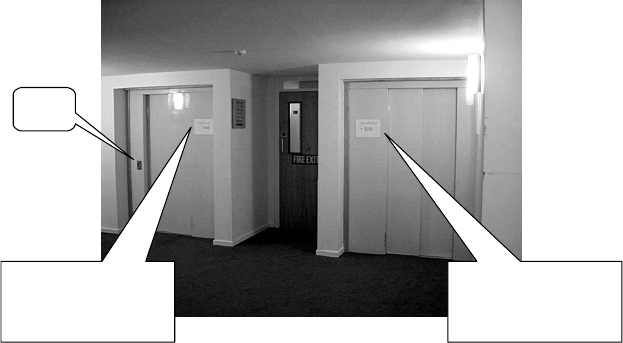

and oversights. A good example is how people label – or re-label – things. A

concrete example is the lifts found in a university building in Manchester,

UK (Figure 5.4). It is common to provide a call button by each lift, but for

some reason that was not the case here. Consequently, a sign at the right-

hand lift instructed people that the call-button was by the left-hand lift, while

a sign at the left-hand lift instructed people that a bell-sound indicated that

the right-hand lift had arrived.

Another type of tailoring is task tailoring. This happens in the case where

it is not sufficient to tailor the system, or where system tailoring is

impossible. The alternative is then to tailor the tasks, i.e., to change the way

in which the system is used so that it becomes usable despite quirks of the

interface and functionality. Task tailoring amounts to adjusting the ways

things are done to make them feasible, even if it requires going beyond the

given instructions. Task tailoring does not go as far as violations, which are

not mandated by an inadequate interface but by other things, such as overly

complex rules or (from the operator’s point of view) unnecessary demands for,

e.g., safety. Task tailoring means that people change the way they go about

things so that they can achieve their goals. Task tailoring may, for instance,

involve neglecting certain error messages, which always appear and seem to

have no meaning but which cannot easily be got rid of. The solution is to invoke

a common heuristic or an efficiency-thoroughness trade-off (ETTO) rule,

such as “there is no need to pay attention to that, it does not mean anything”

(Hollnagel, 2004). The risks in doing this should be obvious. Task tailoring

may also involve the development of new ‘error sensitive’ strategies, for

instance being more cautious when the system is likely to malfunction or

break down.

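Figure 5.4: Unusual call-button placement. [Sign at the left-hand lift: “IF YOU HEAR THE BELL PLEASE USE THE RIGHT-HAND LIFT”. Sign at the right-hand lift: “BOTH LIFTS ARE OPERATED BY THE BUTTON ON THE LEFT”. The single call button is by the left-hand lift.]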
