Chapter Fifteen. Business Process Management

Information on its own doesn’t create business value; it is the systematic application of the information to the business issue that creates value.

Sinan Aral and Peter Weill¹

1. Sinan Aral and Peter Weill, “IT Savvy Pays Off: How Top Performers Match IT Portfolios and Organizational Practices,” MIT Sloan Center for Information Science Working Paper No. 353, May 2005.

Business process management (BPM) is a broad topic. We do not cover the full spectrum of BPM in this chapter; an entire book could be written about it. Nonetheless, a high-level discussion is essential in the context of Lean Integration. Data must be understood within the context of the many different business processes that exist across silos of complex organizations. Data outside this context runs the risk of being misinterpreted and misused, causing potential waste to the business and the ICC. With a clear understanding of how data fits into its various business process contexts, reuse, agility, and loose coupling become achievable.

A BPM COE is a special kind of competency center that provides a broad spectrum of services related to the full life cycle of BPM activities, including process modeling, simulation, execution, monitoring, and optimization. From an ICC perspective, of primary interest are the integration aspects of BPM across the enterprise. To be more specific, this chapter focuses on

• Defining the most common models used to describe data in motion to different audiences, from low-level physical data exchanges to high-level business processes

• Describing five categories of BPM activities: design, modeling, execution, monitoring, and optimization

• Outlining architectural considerations in support of BPM

The chapter closes with a case from Wells Fargo and how that company applied the BEST architecture and Lean principles to achieve a highly efficient and continuously improving business process.

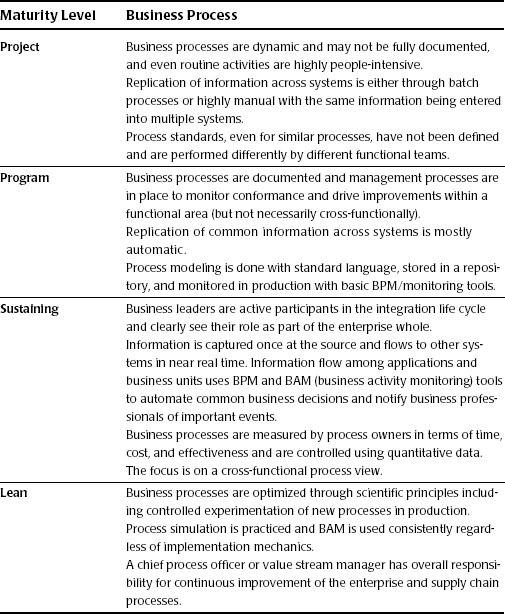

The business process maturity model shown in Table 15.1 is primarily related to the disciplines within the business areas and how consistently and formally the business process hierarchy is defined and managed across the enterprise. This is a critical factor in ensuring alignment between the IT organization and the business groups. A secondary factor of business process maturity is the degree of manual effort needed to keep the data in systems synchronized.

Table 15.1 Business Process Management Maturity

The challenges that the BPM competency and related Lean practices are intended to address include the following:

1. Organizational agility: Business processes are constantly changing as a result of competitive pressures, mergers and acquisitions, regulatory requirements, and new technology advancements. A methodology is needed from an integration perspective to enable rapid changes to business processes.

2. Loose coupling: When one step in a process changes for some reason, it is desirable to have an architecture and infrastructure that minimize the need to change downstream or upstream process steps.

3. Common business definitions: Multifunctional flow-through business processes have the challenge of maintaining consistent definitions of data. To make it even more challenging, the definitions of data can change depending on the process context. A common vocabulary is required for data and business processes in order to enable “one version of the truth.” The key to process integration is data standardization. As standardized data is made available, business owners can effectively integrate their processes.

4. Channel integration: Most organizations offer their customers more than one interaction channel. For example, retail organizations may have five or more (e.g., stores, call centers, Internet, mobile devices, in-home service, and third-party resellers). Business processes must therefore transcend multiple channels as seamlessly as possible. When this is done well, based on an integrated view of customer data and common processes, the systems can improve customer satisfaction and sales.

5. Operational monitoring and support: A major challenge in large, complex distributed computing environments is clearly understanding the implications of low-level technical events for higher-level business processes. Traceability among business processes and technical implementation is therefore critical.

Data-in-Motion Models

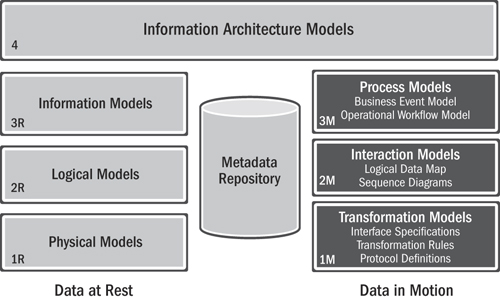

The prior chapter defined the models on the left side of the IA framework, the data-at-rest layers, so now we will take a closer look at the data-in-motion layers as highlighted in Figure 15.1. Like the data-at-rest layers, the data-in-motion layers also specify a series of models at different levels of abstraction with the information that is relevant to various roles. Collectively, these models describe how data moves between applications inside and outside the organization (such as supplier systems, customer systems, or cloud applications).

Figure 15.1 Data-in-motion models

Process Models (3M)

There are at least two process models on the business view (layer 3) of the IA framework:

• Business event model: This is a common (canonical) description of business events including business process triggers, target subscribers, and payload descriptions (from elements in the business glossary). This model also fits into the classification of semantic models but in this case for data in motion rather than data at rest.

• Operational workflow model: This model is a depiction of a sequence of operations, declared as the work of a person, a group, or a system. Workflow may be seen as any abstraction of real work at various levels of detail, thus serving as a virtual representation of actual work. The flow being described often refers to a document or information that is being transferred from one step to another or between a system and a person.
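To make the business event model more concrete, the following is a minimal sketch of how one canonical event description might be recorded. The class name `BusinessEvent`, its fields, and the `OrderPlaced` example are hypothetical illustrations, not part of the IA framework itself.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class BusinessEvent:
    """Canonical description of one business event (hypothetical structure)."""
    name: str                  # business glossary name of the event
    trigger: str               # business process step that raises the event
    subscribers: List[str]     # target systems or roles to be notified
    payload_fields: List[str]  # glossary elements the payload must carry

    def missing_fields(self, payload: Dict[str, Any]) -> List[str]:
        """Return the glossary elements absent from a concrete payload."""
        return [f for f in self.payload_fields if f not in payload]


# Illustrative event definition; every value here is an assumption.
ORDER_PLACED = BusinessEvent(
    name="OrderPlaced",
    trigger="Customer confirms checkout",
    subscribers=["billing", "fulfillment"],
    payload_fields=["order_id", "customer_id", "order_total"],
)

missing = ORDER_PLACED.missing_fields({"order_id": "A-1", "customer_id": "C-9"})
print(missing)
```

Keeping the payload description as a list of glossary element names is one way to tie the event model back to the business glossary, so that a payload can be checked against the shared vocabulary before it is published.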

Interaction Models (2M)

There are two primary interaction models on the solution view (layer 2) of the IA framework:

• Logical data map: This describes the relationship between the extreme starting points and extreme ending points of information exchanges between applications. The logical data map also contains business rules concerning data filtering, cleansing, and transformations.

• Sequence diagram: This alternate representation of operational scenarios uses UML techniques to show how process steps interact with one another and in what order. Individual operational process steps may have their own model representations, thereby creating a hierarchy of sequence diagrams at different levels of abstraction. Sequence diagrams are sometimes called “event-trace diagrams,” “event scenarios,” or “timing diagrams.”

Transformation Models (1M)

There are three primary transformation models on the technology view (layer 1) of the IA framework:

• Interface specifications: An interface generally refers to an abstraction that a system provides of itself to enable access to data or process steps; it separates the methods of external communication from internal operation. The specifications are the documented description of the data, operations, and protocol provided by or supported by the interface. Mature Lean Integration teams maintain integration specifications in structured metadata repositories.

• Transformation rules: These are the technical transformation rules and sequence of steps that implement the business rules defined in the logical data map.

• Protocol definitions: This is a set of rules used to communicate between application systems across a network. A protocol is a convention or standard that controls or enables the connection, communication, and data transfer between computing endpoints. In its simplest form, a protocol can be defined as “the rules governing the syntax, semantics, and synchronization of communication.”
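The relationship between a logical data map (layer 2) and the transformation rules that implement it (layer 1) can be sketched as follows. This is only an illustration under stated assumptions: the field names, the filtering rule, and the helper functions are all hypothetical.

```python
from typing import Any, Callable, Dict, List, NamedTuple


class MapEntry(NamedTuple):
    """One row of a logical data map: source field, target field, rule."""
    source_field: str
    target_field: str
    transform: Callable[[Any], Any]


def clean_name(value: str) -> str:
    """Cleansing rule: collapse extra whitespace and normalize capitalization."""
    return " ".join(value.split()).title()


def cents_to_dollars(value: int) -> float:
    """Transformation rule: convert integer cents to a dollar amount."""
    return value / 100


# Hypothetical map between a source order system and a target system.
DATA_MAP: List[MapEntry] = [
    MapEntry("cust_nm", "customer_name", clean_name),
    MapEntry("amt_cents", "order_total", cents_to_dollars),
]


def keep(record: Dict[str, Any]) -> bool:
    """Filtering rule (assumed): cancelled orders are not exchanged."""
    return record.get("status") != "CANCELLED"


def apply_map(records: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Apply filter, then every mapped transformation, in sequence."""
    return [
        {e.target_field: e.transform(r[e.source_field]) for e in DATA_MAP}
        for r in records
        if keep(r)
    ]


source = [
    {"cust_nm": "  ada   lovelace ", "amt_cents": 1250, "status": "OPEN"},
    {"cust_nm": "grace hopper", "amt_cents": 900, "status": "CANCELLED"},
]
result = apply_map(source)
print(result)
```

Holding the map as data rather than burying it in procedural code is one way to keep the business rules visible and to store them alongside other integration metadata.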

Activities

The activities that constitute BPM can be grouped into five categories: design, modeling, execution, monitoring, and optimization.

Business Process Design

Process design encompasses both identifying existing processes and designing the “to be” process. Areas of focus include representation of the process flow, the actors within it, alerts and notifications, escalations, standard operating procedures, SLAs, and task handover mechanisms.

Good design reduces the occurrence of problems over the lifetime of the process. Whether or not existing processes are considered, the aim of this step is to ensure that a correct and efficient theoretical design is prepared.

The proposed improvement can be in human-to-human, human-to-system, or system-to-system workflows and may target regulatory, market, or competitive challenges faced by the business.

Several common techniques and notations are available for business process mapping (or business process modeling), including Integration Definition (IDEF), BPWin, event-driven process chains, and BPMN.

Business Process Modeling

Modeling takes the theoretical design and introduces combinations of variables, for instance, changes in the cost of materials or increased rent, that determine how the process might operate under different circumstances.

It also involves running “what-if” analysis on the processes: “What if I have 75 percent of the resources to perform the same task?” “What if I want to do the same job for 80 percent of the current cost?”

A real-world analogy can be a wind-tunnel test of an airplane or test flights to determine how much fuel will be consumed and how many passengers can be carried.
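A “what-if” question of this kind can be explored with even a very small model. The sketch below assumes a deliberately simple relationship (elapsed days equal total work hours divided by daily staff capacity) and made-up numbers; real process simulations would account for queues, variability, and handoffs.

```python
import math


def days_to_complete(work_hours: float, staff: float,
                     hours_per_day: float = 8.0) -> int:
    """Whole working days needed under a simple capacity model (assumed)."""
    return math.ceil(work_hours / (staff * hours_per_day))


WORK_HOURS = 480      # hypothetical workload for the process
BASELINE_STAFF = 10   # hypothetical current staffing level

baseline_days = days_to_complete(WORK_HOURS, BASELINE_STAFF)
reduced_days = days_to_complete(WORK_HOURS, BASELINE_STAFF * 0.75)

print(f"baseline: {baseline_days} days, with 75% of staff: {reduced_days} days")
```

Under these assumptions, the model answers the first question directly: the same workload takes longer with fewer resources, and the model quantifies by how much.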

Business Process Execution

One way to automate processes is to develop or purchase an application that executes the required steps of the process; however, in practice, these applications rarely execute all the steps of the process accurately or completely. Another approach is to use a combination of software and human intervention; however, this approach is more complex, making documenting the process difficult.

As a response to these problems, software has been developed that enables the full business process (as developed in the process design activity) to be defined in a computer language that can be directly executed by the computer. The system either uses services in connected applications to perform business operations (e.g., calculating a repayment plan for a loan) or, when a step is too complex to automate, sends a message to a human requesting input. Compared to either of the previous approaches, directly executing a process definition can be more straightforward and therefore easier to improve. However, automating a process definition requires flexible and comprehensive infrastructure, which typically rules out implementing these systems in a legacy IT environment.
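The idea of a directly executable process definition can be illustrated with a toy interpreter. This is a sketch, not how any particular BPM product works: the step names, the interest-free repayment calculation, and the `run` function are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, List, Tuple

# The process definition is plain data: ordered (step name, step kind) pairs.
LOAN_PROCESS: List[Tuple[str, str]] = [
    ("calculate_repayment_plan", "service"),
    ("approve_exception", "human"),
    ("notify_customer", "service"),
]


def calculate_repayment_plan(ctx: Dict[str, Any]) -> None:
    # Simplified, interest-free plan; a real service would live in another system.
    ctx["monthly_payment"] = round(ctx["principal"] / ctx["months"], 2)


def notify_customer(ctx: Dict[str, Any]) -> None:
    ctx["notified"] = True


SERVICES: Dict[str, Callable[[Dict[str, Any]], None]] = {
    "calculate_repayment_plan": calculate_repayment_plan,
    "notify_customer": notify_customer,
}


def run(process: List[Tuple[str, str]], ctx: Dict[str, Any], start: int = 0) -> int:
    """Execute steps from `start`; return the index where execution stopped.

    Service steps call a connected application. A human step pauses the
    process until its input is present in ctx["human_input"].
    """
    for index in range(start, len(process)):
        name, kind = process[index]
        if kind == "service":
            SERVICES[name](ctx)
        elif name not in ctx.get("human_input", {}):
            return index  # paused, waiting for a person
    return len(process)  # finished


ctx: Dict[str, Any] = {"principal": 1200.0, "months": 12}
paused_at = run(LOAN_PROCESS, ctx)                 # stops at the human step
ctx["human_input"] = {"approve_exception": True}   # a person responds
finished_at = run(LOAN_PROCESS, ctx, start=paused_at)
print(paused_at, finished_at, ctx["monthly_payment"], ctx["notified"])
```

Because the process is expressed as data, changing the order of steps or inserting a new one means editing the definition rather than rewriting application code, which is the property that makes this approach easier to improve.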

The commercial BPM software market has focused on graphical process model development, rather than text-language-based process models, as a means to reduce the complexity of model development. Visual programming using graphical metaphors has increased productivity in a number of areas of computing and is well accepted by users.

Business rules have been used by systems to provide definitions for governing behavior, and a business rule engine can be used to drive process execution and resolution.
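As a rough illustration of rules driving process execution, the sketch below evaluates an ordered list of rules and routes an order to the step named by the first rule that matches. The rule names, thresholds, and step names are hypothetical, and commercial rule engines offer far richer capabilities.

```python
from typing import Any, Callable, Dict, List, Tuple

Rule = Tuple[str, Callable[[Dict[str, Any]], bool], str]

# Each rule: (rule name, condition, next process step). Values are assumed.
RULES: List[Rule] = [
    ("high_value", lambda order: order["total"] >= 10_000, "manual_review"),
    ("new_customer", lambda order: order["prior_orders"] == 0, "credit_check"),
]
DEFAULT_STEP = "auto_approve"


def next_step(order: Dict[str, Any]) -> str:
    """Return the step chosen by the first matching rule, else the default."""
    for _name, condition, step in RULES:
        if condition(order):
            return step
    return DEFAULT_STEP


print(next_step({"total": 25_000, "prior_orders": 3}))
print(next_step({"total": 50, "prior_orders": 0}))
print(next_step({"total": 50, "prior_orders": 7}))
```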

Business Process Monitoring

Monitoring encompasses tracking individual processes so that information on their state can be easily seen and statistics generated about their performance. An example of tracking is being able to determine the state of a customer order (e.g., order arrived, awaiting delivery, invoice paid) so that problems in the operation can be identified and corrected.

In addition, this information can be used to work with customers and suppliers to improve their connected processes. Examples of these statistics are the generation of measures on how quickly a customer order is processed or how many orders were processed in the last month. These measures tend to fit into three categories: cycle time, defect rate, and productivity.
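The three categories of measures can be computed from basic order tracking records. The sketch below uses invented sample data and one plausible definition of each measure (average hours from receipt to completion, share of orders with a defect, and orders completed per handler); an organization would substitute its own definitions.

```python
from datetime import datetime
from statistics import mean

# Hypothetical tracking records for four customer orders.
ORDERS = [
    {"id": 1, "received": datetime(2024, 1, 2, 9), "completed": datetime(2024, 1, 2, 13),
     "defect": False, "handler": "ann"},
    {"id": 2, "received": datetime(2024, 1, 2, 10), "completed": datetime(2024, 1, 2, 12),
     "defect": True, "handler": "ann"},
    {"id": 3, "received": datetime(2024, 1, 2, 11), "completed": datetime(2024, 1, 2, 17),
     "defect": False, "handler": "bob"},
    {"id": 4, "received": datetime(2024, 1, 3, 9), "completed": datetime(2024, 1, 3, 13),
     "defect": False, "handler": "bob"},
]

# Cycle time: average elapsed hours from receipt to completion.
cycle_time_hours = mean(
    (o["completed"] - o["received"]).total_seconds() / 3600 for o in ORDERS
)

# Defect rate: fraction of orders flagged with a defect.
defect_rate = sum(o["defect"] for o in ORDERS) / len(ORDERS)

# Productivity: orders completed per handler.
productivity = len(ORDERS) / len({o["handler"] for o in ORDERS})

print(cycle_time_hours, defect_rate, productivity)
```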

The degree of monitoring depends on what information the business wants to evaluate and analyze and on how the business wants it to be monitored, whether in real time or ad hoc. Here, business activity monitoring (BAM) extends and expands the monitoring tools generally provided by a business process management system (BPMS).

Process mining is a collection of methods and tools related to process monitoring. The aim of process mining is to analyze event logs extracted through process monitoring and to compare them with an a priori process model. Process mining allows process analysts to detect discrepancies between the actual process execution and the a priori model, as well as to analyze bottlenecks.
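A very small conformance check shows the basic idea of comparing an event log with an a priori model. In this sketch the model is simply a set of allowed transitions between activities, and the event log is invented; real process mining tools use much richer models and algorithms.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# A priori model (assumed): the transitions the designed process allows.
MODEL: Set[Tuple[str, str]] = {
    ("ordered", "paid"),
    ("paid", "shipped"),
    ("shipped", "delivered"),
}

# Hypothetical event log in time order: (case id, activity).
EVENT_LOG: List[Tuple[str, str]] = [
    ("c1", "ordered"), ("c2", "ordered"), ("c1", "paid"), ("c2", "shipped"),
    ("c1", "shipped"), ("c1", "delivered"), ("c2", "paid"),
]


def traces(log: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group the log into one ordered activity trace per case."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for case_id, activity in log:
        grouped[case_id].append(activity)
    return dict(grouped)


def deviations(log: List[Tuple[str, str]],
               model: Set[Tuple[str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Return, per case, the observed transitions that the model does not allow."""
    found: Dict[str, List[Tuple[str, str]]] = {}
    for case_id, trace in traces(log).items():
        bad = [pair for pair in zip(trace, trace[1:]) if pair not in model]
        if bad:
            found[case_id] = bad
    return found


print(deviations(EVENT_LOG, MODEL))
```

Here case `c2` was shipped before it was paid, which the a priori model does not permit, so it is reported as a discrepancy for an analyst to investigate.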

Business Process Optimization

Process optimization includes retrieving process performance information from modeling or monitoring, identifying potential or actual bottlenecks and opportunities for cost savings or other improvements, and then applying those enhancements to the design of the process, thus continuing the value cycle of BPM.

Architecture

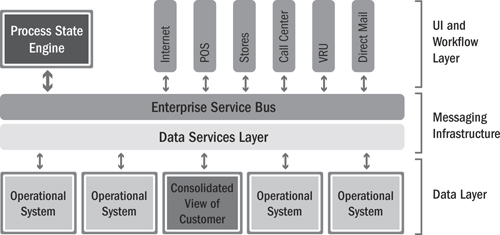

Figure 15.2 shows a typical high-level architecture that supports business process execution, monitoring, and optimization.

Figure 15.2 BPM architecture for channel integration or straight-through processing

The bottom layer of the graphic shows the business systems and integration systems that contain the data that is created, accessed, or updated as part of the business process. For simplicity, we refer to this as the data layer.

The messaging layer may be an enterprise service bus, or some combination of technologies that support real-time information exchanges between systems, event notifications, and publish/subscribe integration patterns.
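The publish/subscribe pattern mentioned here can be sketched in a few lines. This in-memory `MessageBus` class is a hypothetical stand-in used only to show the decoupling; an actual enterprise service bus adds routing, persistence, transformation, and delivery guarantees.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class MessageBus:
    """Minimal in-memory publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> int:
        """Deliver the message to every subscriber; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
billing_inbox: List[Dict[str, Any]] = []
fulfillment_inbox: List[Dict[str, Any]] = []

# Two systems subscribe to the same (hypothetical) event topic.
bus.subscribe("OrderPlaced", billing_inbox.append)
bus.subscribe("OrderPlaced", fulfillment_inbox.append)

delivered = bus.publish("OrderPlaced", {"order_id": "A-1"})
print(delivered, billing_inbox, fulfillment_inbox)
```

The publisher does not know which systems receive the event, so subscribers can be added or removed without changing the publishing system.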

The top layer of the architecture shows two key elements. The first is a process state engine that contains the business rules that define the process execution steps, the operational functions to monitor and control the processes, and the metrics and metadata that support process optimization. The process state engine may be a purchased application or an internally developed one, but in either case its primary purpose is to maintain information about the state of processes in execution independently of the underlying systems. In other words, the process state engine is an integration system that persists process state for cross-functional or long-running processes, whereas the other operational systems persist the business data. Long-running processes may be measured in minutes, hours, weeks, or even months. While it is possible for a process state engine to also control short-run processes (measured in seconds), these sorts of orchestrations are often handled in the service bus layer.

The second element on the top layer is the UI or user interface layer. This is a common architecture pattern for a multichannel integration scenario. The basic concept is to enable access to the data of record in the source system in real time from multiple channels such as the Internet, point of sale, or call center. Simple transactions are enabled by the enterprise service bus in a request/reply pattern. Longer-running processes may require the involvement of the process state engine in order to allow a process, such as opening an account, to be started in one channel and finished in another channel without the user having to rekey all the data.
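The account-opening scenario can be sketched with a toy process state engine that persists process state separately from the business data. The class, its method names, and the sample data are assumptions for illustration; the in-memory dictionary stands in for whatever durable store a real engine would use.

```python
import json
from typing import Any, Dict, List


class ProcessStateEngine:
    """Persists the state of in-flight processes, independent of any channel."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}  # process id -> serialized state

    def start(self, process_id: str, channel: str, data: Dict[str, Any]) -> None:
        state = {"status": "in_progress", "channels": [channel], "data": dict(data)}
        self._store[process_id] = json.dumps(state)

    def resume(self, process_id: str, channel: str,
               data: Dict[str, Any]) -> Dict[str, Any]:
        """Continue a process from another channel, keeping data already entered."""
        state = json.loads(self._store[process_id])
        state["channels"].append(channel)
        state["data"].update(data)
        self._store[process_id] = json.dumps(state)
        return state

    def complete(self, process_id: str, required: List[str]) -> bool:
        """Mark the process complete if every required field has been captured."""
        state = json.loads(self._store[process_id])
        if any(field not in state["data"] for field in required):
            return False
        state["status"] = "complete"
        self._store[process_id] = json.dumps(state)
        return True


engine = ProcessStateEngine()
# The customer starts opening an account on the web...
engine.start("acct-001", "web", {"name": "Ada Lovelace", "email": "ada@example.com"})
# ...and finishes in the call center without rekeying name or email.
state = engine.resume("acct-001", "call_center", {"id_verified": True})
done = engine.complete("acct-001", required=["name", "email", "id_verified"])
print(state["channels"], done)
```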

The net effect of this architecture is an extremely efficient (Lean) infrastructure that minimizes waste by providing a set of operational systems that are mutually exclusive and independent, yet provide users with a comprehensive and consistent view of the data regardless of which UI device they use to access it.

The following Post Closing case study is an exceptional example of a layered BPM architecture. This is the same case that was introduced earlier. Chapter 9 described the Lean principles that were used, and the following section completes the story with the integration architecture that enabled the impressive business outcomes.