18

The Future of Kubernetes

In this chapter, we will look at the future of Kubernetes from multiple angles. We’ll start with the momentum of Kubernetes since its inception across dimensions such as community, ecosystem, and mindshare. Spoiler alert – Kubernetes won the container orchestration wars by a landslide. As Kubernetes grows and matures, the battle lines shift from beating competitors to fighting against its own complexity. Usability, tooling, and education will play a major role as container orchestration is still new, fast-moving, and not a well-understood domain. Then we will take a look at some very interesting patterns and trends, and finally, we will review my predictions from the second edition, and I will make some new predictions.

The covered topics are as follows:

- The Kubernetes momentum

- The importance of CNCF

- Kubernetes extensibility

- Service mesh integration

- Serverless computing on Kubernetes

- Kubernetes and VMs

- Cluster autoscaling

- Ubiquitous operators

- Kubernetes for AI

- Kubernetes challenges

The Kubernetes momentum

Kubernetes is undeniably a juggernaut. Not only did Kubernetes beat all the other container orchestrators, but it is also the de facto solution on public clouds, utilized in many private clouds, and even VMware – the virtual machine company – is focused on Kubernetes solutions and integrating its products with Kubernetes.

Kubernetes works very well in multi-cloud and hybrid-cloud scenarios due to its extensible design.

Kubernetes is also making inroads at the edge, with custom distributions that broaden its applicability even further.

The Kubernetes project keeps shipping new minor releases on a predictable schedule: three per year (roughly every four months) since 2021. The community just keeps growing.

The Kubernetes GitHub repository has almost 100,000 stars. One of the major drivers of this phenomenal growth is the CNCF (Cloud Native Computing Foundation).

Figure 18.1: Star History chart

The importance of the CNCF

The CNCF has become a very important organization in the cloud computing scene. While it is not Kubernetes-specific, the dominance of Kubernetes is undeniable. Kubernetes was the first project to graduate, and most of the other projects lean heavily toward Kubernetes. In particular, the CNCF's flagship certification and training programs focus on Kubernetes. Among its other roles, the CNCF helps ensure that cloud technologies will not suffer from vendor lock-in. Check out this crazy diagram of the entire CNCF landscape: https://landscape.cncf.io.

Project curation

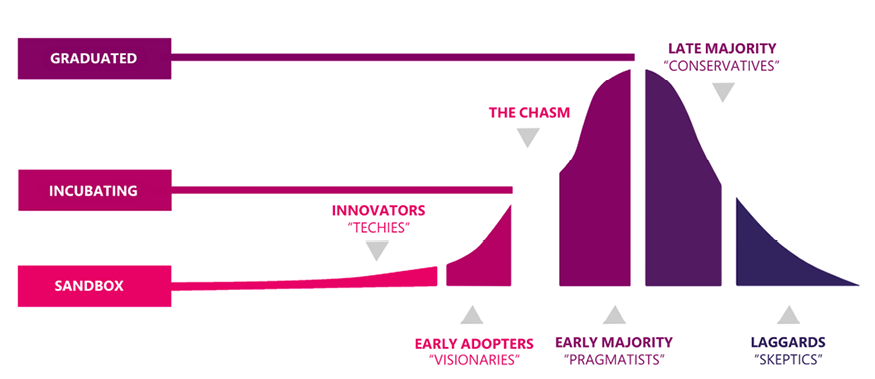

The CNCF assigns maturity levels to projects: graduated, incubating, and sandbox:

Figure 18.2: CNCF maturity levels

Projects start at a certain level (sandbox or incubating) and, over time, can graduate. That doesn't mean that only graduated projects can be safely used. Many incubating and even sandbox projects are used heavily in production. For example, etcd, the persistent state store of Kubernetes itself, was a highly trusted production component for years while it was still an incubating project; it only graduated in 2020. Virtual Kubelet, a sandbox project, has providers for AWS Fargate and Microsoft Azure Container Instances (ACI). This is clearly enterprise-grade software.

The main benefit of the CNCF curation of projects is to help navigate the incredible ecosystem that grew around Kubernetes. When you look to extend your Kubernetes solution with additional technologies and tools, the CNCF projects are a good place to start.

Certification

When technologies start to offer certification programs, you can tell they are here to stay. The CNCF offers several types of certifications:

- Certified Kubernetes for conforming Kubernetes distributions and installers (about 90).

- Kubernetes Certified Service Provider (KCSP) for vetted service providers with deep Kubernetes experience (134 providers).

- Certified Kubernetes Administrator (CKA) for administrators.

- Certified Kubernetes Application Developer (CKAD) for developers.

- Certified Kubernetes Security Specialist (CKS) for security experts.

Training

The CNCF offers training too. There is a free introduction to Kubernetes course and several paid courses that align with the CKA and CKAD certification exams. In addition, the CNCF maintains a list of Kubernetes training partners (https://landscape.cncf.io/card-mode?category=kubernetes-training-partner&grouping=category).

If you’re looking for free Kubernetes training, here are a couple of options:

- VMware Kubernetes academy

- Google Kubernetes Engine on Coursera

Community and education

The CNCF also organizes conferences like KubeCon + CloudNativeCon, supports meetups, and maintains several communication avenues like Slack channels and mailing lists. It also publishes surveys and reports.

The number of attendees and participants keeps growing year after year.

Tooling

The number of tools to manage containers and clusters, as well as the number of add-ons, extensions, and plugins, keeps growing. Here is a subset of the tools, projects, and companies that participate in the Kubernetes ecosystem:

Figure 18.3: Kubernetes tooling

The rise of managed Kubernetes platforms

Pretty much every cloud provider has a solid managed Kubernetes offering these days. Sometimes there are multiple flavors and ways of running Kubernetes on a given cloud provider.

Public cloud Kubernetes platforms

Here are some of the prominent managed platforms:

- Google GKE

- Microsoft AKS

- Amazon EKS

- DigitalOcean

- Oracle Cloud

- IBM Cloud Kubernetes service

- Alibaba ACK

- Tencent TKE

Of course, you can always roll your own and use the public cloud providers just as infrastructure providers. This is a very common use case with Kubernetes.

Bare metal, private clouds, and Kubernetes on the edge

Here, you can find Kubernetes distributions that are designed or configured to run in special environments, often in your own data centers as a private cloud or in more restricted environments like edge computing on small devices:

- Google Anthos for GKE

- OpenStack

- Rancher K3s

- Kubernetes on Raspberry Pi

- KubeEdge

Kubernetes PaaS (Platform as a Service)

This category of offerings aims to abstract some of the complexity of Kubernetes and put a simpler facade in front of it. There are many varieties here. Some of them cater to the multi-cloud and hybrid-cloud scenarios, some expose a function-as-a-service interface, and some just focus on a better installation and support experience:

- Google Cloud Run

- VMware PKS

- Platform9 PMK

- Giant Swarm

- OpenShift

- Rancher RKE

Upcoming trends

Let’s talk about some of the technological trends in the Kubernetes world that will be important in the near future.

Security

Security is, of course, a paramount concern for large-scale systems. Kubernetes is primarily a platform for managing containerized workloads, and those workloads often run in a multi-tenant environment, where the isolation between tenants is critical. Containers are lightweight and efficient because they share the host OS kernel and maintain their isolation through various mechanisms like namespace isolation, filesystem isolation, and cgroup resource isolation. In theory, this should be enough. In practice, the shared kernel presents a large attack surface, and there have been multiple container breakouts.

To address this risk, several lightweight VM technologies were designed to add a hypervisor (machine-level virtualization) as an additional isolation layer between the container and the host OS kernel. The big cloud providers already support these technologies, and the Kubernetes CRI (Container Runtime Interface) provides a streamlined way to take advantage of these more secure runtimes.

For example, Firecracker is integrated with containerd via firecracker-containerd. Google gVisor is another sandbox technology. It is a userspace kernel that implements most of the Linux system calls and provides a buffer between the application and the host OS. It is also available through containerd via gvisor-containerd-shim.
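In Kubernetes, the usual way to opt a workload into one of these sandboxed runtimes is a RuntimeClass object plus the Pod's runtimeClassName field. The following sketch builds both manifests as plain Python dictionaries and prints them as JSON, which kubectl apply -f accepts. It assumes the nodes' containerd is already configured with a gVisor runtime handler named runsc; the handler name, the gvisor class name, and the Pod name and image are illustrative assumptions, not requirements.

```python
import json


def runtime_class(name: str, handler: str) -> dict:
    # A RuntimeClass maps a cluster-level name to a CRI runtime handler
    # that must already be configured on the nodes (assumed: gVisor's "runsc").
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def sandboxed_pod(name: str, image: str, runtime_class_name: str) -> dict:
    # The only difference from a regular Pod is spec.runtimeClassName.
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    rc = runtime_class("gvisor", "runsc")  # hypothetical names
    pod = sandboxed_pod("untrusted-app", "nginx", rc["metadata"]["name"])
    # Wrap both objects in a List so a single kubectl apply can create them.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [rc, pod]}, indent=2))
```

The same Pod without runtimeClassName would run under the node's default runtime, which is what makes this opt-in per workload.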

Networking

Networking is another area of ongoing innovation. The Kubernetes CNI (Container Network Interface) allows any number of innovative networking solutions to plug in behind a simple interface. A major theme is the incorporation of eBPF, a relatively new Linux kernel technology, into Kubernetes.

eBPF stands for extended Berkeley Packet Filter. The core of eBPF is a mini-VM in the Linux kernel that executes special programs attached to kernel objects when certain events occur, such as a packet being transmitted or received. Originally, only sockets were supported, and the technology was called just BPF. Later, additional attachment points were added to the mix, and that's when the e for extended came along. eBPF's claim to fame is its performance: it runs highly optimized, compiled BPF programs directly in the kernel and doesn't require extending the kernel with kernel modules.

There are many applications for eBPF:

- Dynamic network control: iptables-based approaches don’t scale very well in a dynamic environment like a Kubernetes cluster where you have a constantly changing set of pods and services. Replacing iptables with BPF programs is both more performant and more manageable. Cilium is focused on routing and filtering traffic using eBPF.

- Monitoring connections: Creating an up-to-date map of TCP connections between containers is possible by attaching BPF programs to kprobes that track socket-level events. Weave Scope utilizes this capability by running an agent on each node that collects this information and sends it to a server that provides a visual representation through a slick UI.

- Restricting syscalls: The Linux kernel provides more than 300 system calls. In a security-sensitive container environment, it is highly desirable to restrict the set of system calls a container can make. The original seccomp facility was pretty rudimentary. In Linux 3.5, seccomp was extended to support BPF for advanced custom filters.

- Raw performance: eBPF provides significant performance benefits, and projects like Calico took advantage of it to implement a faster data plane that uses fewer resources.
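The scaling argument behind dynamic network control comes down to data structures. In kube-proxy's iptables mode, service rules are matched roughly one after another for each new connection, so the work grows with the number of services, whereas an eBPF data plane can key a hash map on the service address. The following toy model is not how kube-proxy or Cilium are actually implemented; it only contrasts the two lookup strategies in plain Python, and the addresses and the 5,000-service count are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceRule:
    service_ip: str
    port: int
    backend: str


def chain_lookup(rules: list[ServiceRule], ip: str, port: int) -> tuple[Optional[str], int]:
    """Evaluate rules in order, like a chain of iptables rules.

    Returns the matched backend (or None) and how many rules were examined.
    """
    for examined, rule in enumerate(rules, start=1):
        if rule.service_ip == ip and rule.port == port:
            return rule.backend, examined
    return None, len(rules)


def build_service_map(rules: list[ServiceRule]) -> dict[tuple[str, int], str]:
    """Index the same rules by (ip, port), the way an eBPF hash map is keyed."""
    return {(r.service_ip, r.port): r.backend for r in rules}


def make_rules(n: int) -> list[ServiceRule]:
    # Synthetic services 10.96.x.y:80, each pointing at one made-up pod IP.
    return [
        ServiceRule(f"10.96.{i // 256}.{i % 256}", 80, f"10.244.{i // 256}.{i % 256}")
        for i in range(n)
    ]


if __name__ == "__main__":
    rules = make_rules(5000)
    target = rules[-1]
    backend, examined = chain_lookup(rules, target.service_ip, target.port)
    print(f"chain: {backend} after examining {examined} rules")
    service_map = build_service_map(rules)
    print(f"map:   {service_map[(target.service_ip, target.port)]} after one hash lookup")
```

Worst-case chain lookups grow linearly with the number of services, while the map lookup stays constant on average; real systems add further costs (such as rewriting large rule sets on every change) that this sketch ignores.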

Custom hardware and devices

Kubernetes manages nodes, networking, and storage at a relatively high level. But there are many benefits to integrating specific hardware at a fine-grained level, such as GPUs, high-performance network cards, FPGAs, InfiniBand adapters, and other compute, networking, and storage resources. This is where the device plugin framework comes in (https://kubernetes.io/docs/concepts/extend-kubernetes/compute-storage-net/device-plugins). It has been GA since Kubernetes 1.26, and there is ongoing innovation in this area. For example, monitoring device plugin resources has been in beta since Kubernetes 1.15. It will be very interesting to see which devices get harnessed to Kubernetes. The framework itself follows modern Kubernetes extensibility practices by utilizing gRPC.
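From the workload's point of view, a device exposed by a device plugin shows up as an extended resource that containers request by name. The following sketch builds a Pod manifest that asks for GPUs via nvidia.com/gpu, the resource name advertised by NVIDIA's device plugin. The Pod name and image are hypothetical, and a node running that plugin is assumed.

```python
GPU_RESOURCE = "nvidia.com/gpu"  # resource name advertised by NVIDIA's device plugin


def gpu_pod(name: str, image: str, gpus: int) -> dict:
    # Extended resources are requested in whole units and cannot be
    # overcommitted. Setting only limits is enough: Kubernetes defaults
    # requests to the same value when they are omitted.
    if isinstance(gpus, bool) or not isinstance(gpus, int) or gpus < 1:
        raise ValueError("GPU count must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                "resources": {"limits": {GPU_RESOURCE: gpus}},
            }],
        },
    }


if __name__ == "__main__":
    import json
    # Hypothetical training image; the scheduler will only place this Pod
    # on a node whose device plugin advertises at least two free GPUs.
    print(json.dumps(gpu_pod("trainer", "example/trainer:1.0", 2), indent=2))
```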

Service mesh

The service mesh is arguably the most important trend in networking over the last couple of years. We covered service meshes in depth in Chapter 14, Utilizing Service Meshes. The adoption is impressive, and I predict that most Kubernetes distributions will provide a default service mesh and allow easy integration with other service meshes. The benefits that service meshes provide are just too valuable. It makes sense to provide a default platform that includes Kubernetes with an integrated service mesh. That said, Kubernetes itself will not absorb any particular service mesh and expose it through its API, as that would go against the grain of keeping the core of Kubernetes small.

Google Anthos is a good example where Kubernetes + Knative + Istio are combined to provide a unified platform that provides an opinionated best-practices bundle that would take an organization a lot of time and resources to build on top of vanilla Kubernetes.

Another push in this direction is the sidecar container KEP; information about it can be found here: https://github.com/kubernetes/enhancements/blob/master/keps/sig-node/753-sidecar-containers/README.md.

The sidecar container pattern has been a staple of Kubernetes from the get-go. After all, pods can contain multiple containers. But there was no notion of a main container or a sidecar container; all containers in a pod had equal standing. Most service meshes use sidecar containers to intercept traffic and perform their jobs. Formalizing sidecar containers will help those efforts and push service meshes even further.
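The KEP has since landed: Kubernetes 1.28 added native sidecar containers as an alpha feature (behind the SidecarContainers feature gate), and 1.29 promoted it to beta, enabled by default. A native sidecar is declared as an init container with restartPolicy: Always, so it starts before the main containers, keeps running alongside them, and does not keep a Job's Pod from completing. The following sketch builds such a Pod; the Pod and container names and both images are hypothetical.

```python
import json


def pod_with_native_sidecar(app_image: str, proxy_image: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app-with-proxy"},
        "spec": {
            "initContainers": [{
                "name": "proxy",
                "image": proxy_image,
                # restartPolicy: Always on an init container marks it as a
                # sidecar: it starts first and keeps running with the Pod.
                "restartPolicy": "Always",
            }],
            "containers": [{"name": "app", "image": app_image}],
        },
    }


def sidecar_names(pod: dict) -> list[str]:
    # Native sidecars are exactly the init containers whose restartPolicy is Always.
    return [
        c["name"]
        for c in pod["spec"].get("initContainers", [])
        if c.get("restartPolicy") == "Always"
    ]


if __name__ == "__main__":
    pod = pod_with_native_sidecar("example/app:1.0", "example/proxy:1.0")
    print(json.dumps(pod, indent=2))
    print("sidecars:", sidecar_names(pod))
```

Reusing initContainers rather than adding a new Pod field is what lets a service mesh inject its proxy with a guaranteed start order.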

It’s not clear at this stage if Kubernetes and the service mesh will be hidden behind a simpler abstraction on most platforms or if they will be front and center.

Serverless computing

Serverless computing is another trend that is here to stay. We discussed it at length in Chapter 12, Serverless Computing on Kubernetes. Kubernetes and serverless can be combined on multiple levels. Kubernetes can utilize serverless cloud solutions like AWS Fargate and Azure Container Instances (ACI) to save the cluster administrator from managing nodes. This approach also caters to integrating lightweight VMs transparently with Kubernetes, since the cloud platforms don't use naked Linux containers for their container-as-a-service platforms.

Another avenue is to reverse the roles and expose containers as a service powered by Kubernetes under the covers. This is exactly what Google Cloud Run is doing. The lines blur here as there are multiple products from Google to manage containers and/or Kubernetes ranging from just GKE, through Anthos GKE (bring your own cluster to the GKE environment for your private data center), Anthos (managed Kubernetes + service mesh), and Anthos Cloud Run.

Finally, there are functions-as-a-service and scale-to-zero projects running inside your Kubernetes cluster. Knative may become the leader here, as it is already used by many frameworks and is deployed heavily through various Google products.
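With Knative, scale-to-zero and concurrency-based autoscaling are configured per revision through annotations on the Service's template. The following sketch builds a Knative Service manifest as a Python dictionary; the service name, the image, the concurrency target of 10, and the max-scale of 5 are illustrative assumptions, and Knative Serving is assumed to be installed in the cluster.

```python
import json


def knative_service(name: str, image: str, target_concurrency: int, max_scale: int) -> dict:
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "metadata": {
                    "annotations": {
                        # Annotation values must be strings.
                        "autoscaling.knative.dev/min-scale": "0",  # allow scaling to zero
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                        # Target number of concurrent requests per pod.
                        "autoscaling.knative.dev/target": str(target_concurrency),
                    }
                },
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(knative_service("hello", "example/hello:1.0", 10, 5), indent=2))
```

Piping the printed JSON to kubectl apply -f - would create the service; with no traffic, Knative can then remove all of its pods.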

Kubernetes on the edge

Kubernetes is the poster boy of cloud-native computing, but with the Internet of Things (IoT) revolution, there is a growing need to perform computation at the edge of the network. Sending all data to the backend for processing suffers from several drawbacks:

- Latency

- Need for enough bandwidth

- Cost

With edge locations collecting a lot of data via sensors, video cameras, etc., the amount of edge data grows, and it makes more sense to perform more and more sophisticated processing at the edge. Kubernetes grew out of Google’s Borg, which was definitely not designed to run at the edge of the network. But Kubernetes’ design proved to be flexible enough to accommodate it. I expect that we will see more and more Kubernetes deployments at the edge of the network, which will lead to very interesting systems that are composed of many Kubernetes clusters that will need to be managed centrally.

KubeEdge is an open-source framework that is built on top of Kubernetes and Mosquitto (an open-source MQTT message broker) to provide a foundation for networking, application deployment, and metadata synchronization between the cloud and the edge.

Native CI/CD

For developers, one of the most important questions is the construction of a CI/CD pipeline. There are many options and choosing between them can be difficult. The CD Foundation is an open source foundation that was formed to standardize concepts like pipelines and workflows, and define industry specifications that will allow different tools and communities to interoperate better.

The current projects are:

- Jenkins

- Tekton

- Spinnaker

- Jenkins X

- Screwdriver.cd

- Ortelius

- CDEvents

- Pyrsia

- Shipwright

Note that only Jenkins and Tekton are considered graduated projects. The rest are incubating projects (even Spinnaker).

One of my favorite native CD projects, Argo CD, is not part of the CD Foundation. I actually opened a GitHub issue asking to submit Argo CD to the CDF, but the Argo team has decided that CNCF is a better fit for their project.

Another project to watch is CNB (Cloud Native Buildpacks). The project takes source code and creates OCI (think Docker) images. It is important for FaaS frameworks and native in-cluster CI. It is a CNCF incubating project.

Operators

The Operator pattern emerged in 2016 from CoreOS (acquired by Red Hat, which was in turn acquired by IBM) and gained a lot of traction in the community. An Operator is a combination of custom resources and a controller used to manage an application. At my current job, I write operators to manage various aspects of infrastructure, and it is a joy. Operators are already the established way to distribute non-trivial applications to Kubernetes clusters. Check out https://operatorhub.io/ for a huge list of existing operators. I expect this trend to continue and intensify.
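At its heart, an operator's controller runs a reconcile loop: read the desired state from a custom resource, observe the actual state, and act to close the gap. The following sketch shows only the planning half of that loop in plain Python, for a hypothetical DatabaseCluster resource; a real operator would watch the API server and create, update, or delete objects through a client library such as controller-runtime (Go) or kopf (Python).

```python
from dataclasses import dataclass, field


@dataclass
class DatabaseClusterSpec:
    # Hypothetical custom resource spec: what the user asked for.
    replicas: int
    version: str


@dataclass
class ObservedState:
    # What the controller currently sees in the cluster: pod name -> version.
    pods: dict[str, str] = field(default_factory=dict)


def reconcile(name: str, spec: DatabaseClusterSpec, observed: ObservedState) -> list[str]:
    """Compare desired and observed state and return the actions needed to converge.

    This sketch only plans; it does not talk to the Kubernetes API.
    """
    actions = []
    desired = {f"{name}-{i}" for i in range(spec.replicas)}
    for pod in sorted(observed.pods):
        if pod not in desired:
            actions.append(f"delete {pod}")
        elif observed.pods[pod] != spec.version:
            actions.append(f"upgrade {pod} to {spec.version}")
    for pod in sorted(desired - set(observed.pods)):
        actions.append(f"create {pod} at {spec.version}")
    return actions


if __name__ == "__main__":
    spec = DatabaseClusterSpec(replicas=3, version="15.4")
    observed = ObservedState({"db-0": "15.4", "db-1": "15.3", "db-5": "15.4"})
    for action in reconcile("db", spec, observed):
        print(action)
```

Because the plan is computed purely from desired versus observed state, running it again after the actions have been applied yields an empty plan, which is what makes it safe for a controller to reconcile repeatedly.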

Kubernetes and AI

AI is the hottest trend right now. Large language models (LLMs) built on Generative Pre-trained Transformers (GPTs) surprised most professionals with their capabilities. OpenAI's release of ChatGPT, based on GPT-3.5, was a watershed moment. AI suddenly excels in areas that were considered strongholds of human intelligence, such as creative writing, painting, understanding and answering nuanced questions, and, of course, coding. My perspective is that advanced AI is the solution to the big data problem. We learned to collect a lot of data, but analyzing and extracting insights from the data is a difficult and labor-intensive process. AI seems like the right technology to digest all that data and automatically understand, summarize, and organize it into a useful form to be used by humans and other systems (most likely AI-based systems).

Let’s see why Kubernetes is such a great fit for AI workloads.

Kubernetes and AI synergy

Modern AI is all about deep learning networks and huge models with billions of parameters trained on massive datasets, often using dedicated hardware. Kubernetes is a perfect fit for such workloads as it quickly adapts to the workload’s needs, takes advantage of new and improved hardware, and provides strong observability.

The best evidence comes from the field. Kubernetes is at the core of the OpenAI pipeline, and other companies are developing and deploying massive AI applications on Kubernetes too. Check out this article that shows how OpenAI pushes the envelope with Kubernetes and runs huge clusters with 7,500 nodes: https://openai.com/research/scaling-kubernetes-to-7500-nodes.

Let’s consider training AI models on Kubernetes.

Training AI models on Kubernetes

Training large AI models can be slow and very expensive. Organizations that train AI models on Kubernetes benefit from many of its properties:

- Scalability: Kubernetes provides a highly scalable infrastructure for deploying and managing AI workloads. With Kubernetes, it is possible to quickly scale resources up or down based on demand, enabling organizations to train AI models quickly and efficiently.

- Resource utilization: Kubernetes allows for efficient resource utilization, enabling organizations to train AI models using the most cost-effective infrastructure. With Kubernetes, it is possible to automatically allocate and manage resources, ensuring that the right resources are available for the workload.

- Flexibility: Kubernetes provides a high degree of flexibility in terms of the infrastructure used for training AI models. Kubernetes supports a wide range of hardware, including GPUs, FPGAs, and TPUs, making it possible to use the most appropriate hardware for the workload.

- Portability: Kubernetes provides a highly portable infrastructure for deploying and managing AI workloads. Kubernetes supports a wide range of cloud providers and on-premises infrastructure, making it possible to train AI models in any environment.

- Ecosystem: Kubernetes has a vibrant ecosystem of open-source tools and frameworks that can be used for training AI models. For example, Kubeflow is a popular open-source framework for building and deploying machine learning workflows on Kubernetes.

Running AI-based systems on Kubernetes

Once you have trained your models and built your application on top of them, you need to deploy and run it. Kubernetes is, of course, a great platform for deploying workloads in general. AI-based workloads often need to respond reliably and quickly, at superhuman speed. The high availability that Kubernetes offers and its ability to quickly scale up and down based on demand satisfy these requirements.
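Demand-based scaling for such services is typically handled by the Horizontal Pod Autoscaler, whose core rule is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The following sketch implements just that rule plus clamping to minimum and maximum replica counts; the stabilization windows, missing-metric handling, and pod-readiness logic of the real controller are left out, and the default bounds are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Core HPA scaling rule (simplified sketch)."""
    ratio = current_metric / target_metric
    # Skip scaling when usage is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 50% target: scale out.
    print(desired_replicas(4, 90, 50))
    # 4 pods averaging 52% CPU: within tolerance, no change.
    print(desired_replicas(4, 52, 50))
```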

In addition, if the system is designed to keep learning (as opposed to fixed pre-trained systems like GPTs), then Kubernetes offers strong security and control that support safe operation.

Let’s look at the emerging field of AIOps.

Kubernetes and AIOps

AIOps is a paradigm that leverages AI and machine learning to automate and optimize the management of infrastructure. AIOps can help organizations improve the reliability, performance, and security of their IT infrastructure while reducing the burden on human engineers.

Kubernetes is a perfect target for practicing AIOps. It is fully accessible programmatically, and it is often deployed with deep observability. Together, those two properties enable AI to scrutinize the state of the system and take action when necessary.
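Production AIOps systems use far more sophisticated models, but the basic loop (read metrics programmatically, flag what looks wrong, then decide on an action) can be sketched with a deliberately simple stand-in: a rolling z-score detector. The CPU samples below are made-up values (in a real setup they might come from a metrics system such as Prometheus), and the window size and threshold are arbitrary assumptions.

```python
import statistics


def find_anomalies(samples: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the mean of the preceding
    `window` samples by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: any change at all counts as anomalous.
            if samples[i] != mean:
                anomalies.append(i)
            continue
        if abs(samples[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    cpu = [0.40, 0.42, 0.41, 0.39, 0.40, 0.41, 0.42, 0.40, 0.39, 0.41, 0.95, 0.40]
    for i in find_anomalies(cpu):
        # An AIOps system would decide on an action here, e.g. scale out or
        # open an incident; this sketch only reports the finding.
        print(f"sample {i} looks anomalous: {cpu[i]:.2f}")
```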

The future of Kubernetes seems bright, but it has some challenges too.

Kubernetes challenges

Is Kubernetes the answer to everything to do with infrastructure? Not at all. Let’s look at some challenges, such as Kubernetes’ complexity, and some alternative solutions for the problem of developing, deploying, and managing large-scale systems.

Kubernetes complexity

Kubernetes is a large, powerful, and extensible platform. It is mostly un-opinionated and very flexible. It has a huge surface area with a lot of resources and APIs. In addition, Kubernetes has a huge ecosystem. That translates to a system that is extremely difficult to learn and master. What does it say about Kubernetes’ future? One likely scenario is that most developers will not interact with Kubernetes directly. Simplified solutions built on top of Kubernetes will be the primary access point for most developers.

If Kubernetes is fully abstracted away, that may threaten its future, since the solution provider might replace Kubernetes as the underlying implementation. The end users may not need to make any changes at all to their code or configuration.

Another scenario is that more and more organizations conclude that the costs of building on top of Kubernetes outweigh the benefits compared to lighter-weight container orchestration platforms such as Nomad. This could lead to an exodus from Kubernetes.

Let’s look at some technologies that may compete with Kubernetes in different areas.

Serverless function platforms

Serverless function platforms offer organizations and developers similar benefits to Kubernetes using a simpler (if less powerful) paradigm. Instead of modeling your system as a set of long-running applications and services, you just implement a set of functions that can be triggered on demand. You don't need to manage clusters, node pools, and servers. Some solutions offer long-running services, too, either pre-packaged as containers or directly from source. We covered it thoroughly in Chapter 12, Serverless Computing on Kubernetes. As the serverless platforms get better and Kubernetes becomes more complicated, more organizations may prefer to at least start with serverless solutions and possibly migrate to Kubernetes later.

First and foremost, all the major cloud providers offer various serverless solutions. The pure cloud function offerings are:

- AWS Lambda

- Google Cloud Functions

- Azure Functions
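To make the function model concrete, here is roughly what a Python function for AWS Lambda looks like: a handler that receives an event and a context object and returns a response. The sketch assumes the function sits behind Amazon API Gateway with proxy integration, which is why it reads queryStringParameters and returns statusCode and body; other triggers deliver differently shaped events, and the greeting logic is purely illustrative.

```python
import json


def lambda_handler(event: dict, context) -> dict:
    # Assumes an API Gateway proxy-style event; queryStringParameters is
    # None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

There is no server, cluster, or node pool in sight: the platform invokes the handler on demand and scales the number of concurrent invocations for you.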

There are also multiple strong and easy-to-use solutions out there that are not associated with the big cloud providers:

- Cloudflare Workers

- Fly.io

- Render

- Vercel

That concludes our coverage of Kubernetes challenges. Let’s summarize the chapter.

Summary

In this chapter, we looked at the future of Kubernetes, and it looks great! The technical foundation, the community, the broad support, and the momentum are all very impressive. Kubernetes is still young, but the pace of innovation and stabilization is very encouraging. The modularization and extensibility principles of Kubernetes let it become the universal foundation for modern cloud-native applications. That said, there are some challenges to Kubernetes and it might not dominate each and every scenario. This is a good thing. Diversity, competition, and inspiration from other solutions will just make Kubernetes better.

At this point, you should have a clear idea of where Kubernetes is right now and where it’s going from here. You should be confident that Kubernetes is not just here to stay, but that it will be the leading container orchestration platform for many years to come and integrate with any major offering and environment you can imagine, from planet-scale public cloud platforms, private clouds, data centers, edge locations and all the way down to your development laptop and Raspberry Pi.

That’s it! This is the end of the book.

Now it’s up to you to use what you’ve learned and build amazing things with Kubernetes!
